Abstract

A variety of planning research is being actively conducted in multiple research fields. The focus of these studies is to flexibly utilize both immediate and deliberative planning in response to the environment and to adaptively prioritize multiple goals and actions in a human-like manner. To achieve this, a method that applies activity propagation to multi-agent planning (the agent activation spreading network) has been proposed and is being utilized in various research fields. Furthermore, with the recent development of large-scale artificial intelligence models, we should soon be able to incorporate tacit human knowledge into this architecture. However, there is not yet a method for adjusting the parameters in this architecture, which creates a barrier to future extension. In response, we have developed a method for automatically adjusting the parameters using evolutionary computation. Our experimental results showed that (1) the proposed method enables a higher degree of adaptation by taking the agent’s semantics into account, and (2) it is possible to obtain parameters that are appropriate to the environment even when the experimental environment is changed.


1 Introduction

A variety of planning research is being actively conducted in multiple research fields. These studies focus on achieving both immediate planning and deliberative planning in real-world environments and on prioritizing multiple goals and actions in a human-like manner.

Deep learning-based planning methods have attracted significant attention with the recent development of deep learning technology [1, 2], in which models are constructed as multi-layer neural networks that mimic the human brain. By learning the parameters of the neural network from data using an evaluation function, these models can take appropriate actions for complex tasks. Deep learning-based planning methods have attracted attention in recent years for various reasons, including the development of computer processing capabilities, the ability to extract features from large amounts of observed data automatically, and the ability of deep learning to handle time series data processing. However, because they learn from observed data, these models are not well suited to dynamic environments. In particular, they struggle with cases in which it is necessary to change goals in the middle of planning or to change the priority of sub-goals in an environment with multiple goals. This is because learning priorities by considering the immediacy and deliberateness of multiple goals in every situation is not practical, as the learning load would be too high. Adapting to dynamically changing environments such as those found in the real world is thus quite challenging. The current state of the art is limited to architectures that can perform optimized actions only for specific tasks that have already been learned.

In this paper, we focus on the Subsumption Architecture (SA) [3] and the Agent Network Architecture (ANA) proposed by Maes [4], which are classical planning methods that nonetheless consider both immediacy and deliberateness. We refer to the architecture that extends ANA as the agent activation spreading network (AASN).

SA is an architecture in which many simple actions are symbolically divided into agents. A hierarchical structure is given to these agents so that higher-level ones can control the lower-level ones. Lower-level agents are typically utilized to achieve an important goal (e.g., reaching a destination), which requires deliberate planning, while the goal of higher-level agents is to avoid an urgent situation (e.g., there is a hole in front of them), which requires immediate planning. SA uses this hierarchical structure to dynamically distinguish between immediate and deliberative planning in accordance with the environment. The methodology based on SA has been adapted to Roomba, the well-known cleaning robot [5]. However, because this mechanism requires a hierarchy of agents, it is challenging to decide how to distribute agents to each layer as the number of goals increases, and selecting goals at each layer becomes increasingly difficult.

Similar to SA, AASN is a multi-agent planning method with many simple symbolic agents. It differs from SA in that it does not have a hierarchy but rather controls agents by activity propagation. If the state of the environment matches the conditions for the agent to function, activity propagation is used to activate the agent. Each goal also propagates activity to an agent that can achieve it, and that agent in turn propagates activity to the agents that can activate it. The activity propagation from the state of the environment is the activity propagation for immediate planning, while that from the goal is for deliberative planning. This mechanism enables dynamic planning in response to the environment. With the recent development of deep learning, there has been much research on acquiring tacit knowledge from large-scale artificial intelligence (AI) models [6, 7]. Further development is expected by incorporating knowledge acquired from these large-scale AI models as agents. By combining them with AASN, we can expect to achieve both immediate and deliberative planning, which is necessary for coping with dynamic environments and is currently a significant weakness of deep learning-based systems.

However, there is no established method for adjusting the parameters in AASN, so each agent’s parameters have to be adjusted manually. This causes problems because many agents are required for applying AASN to the real world, and it is not easy to adjust all of the parameters manually. If they could be adjusted automatically, AASN would be able to operate not only in simple environments but also in more complex ones.

Therefore, our aim in this paper is to automatically adjust the parameters of agents in AASN. To this end, we investigate (1) which methods are suitable for adjusting the parameters of agents in AASN and (2) whether the parameters adjusted by these methods are sufficiently versatile.

The architecture we used is a combination of ANA and Multi-agent Real-time Reactive (MRR)-planning [8]. Evolutionary computation methods were applied to adjust the parameters of the agents in this architecture automatically. Since some of the evolutionary computation methods adjust agents on the basis of their semantics, we changed the names of the agents in our experimental tile world [9] environment from abstract ones (e.g., “Planning Enemy” or “Planning Tile”) to concrete ones (e.g., “Avoid Enemy” or “Go to Tile”). This operation ensures that agents with similar functions have similar parameters when new agents are added.

We used the tile world simulation as the experimental environment for comparing parameter tuning methods. Our findings showed that evolutionary methods with mutation operations are the most suitable, and that adjusting parameters on the basis of the agent’s meaning yields more versatile parameters.

Sections 2 and 3 of this paper describe previous work on planning and parameter tuning methods, respectively. Sect. 4 gives an overview of the proposed architecture. In Sect. 5, we describe the experimental environment used for testing our method, and in Sect. 6, we report the results. We conclude in Sect. 7 with a brief summary and mention future work.

2 Planning

We discuss ANA, an example of AASN proposed by P. Maes, in Sect. 2.1, and discuss MRR-planning in Sect. 2.2. We then summarize the current study on AASN in Sect. 2.3.

2.1 ANA

ANA is a multi-agent planning method that combines immediacy and deliberateness. Each agent has a condition list, an add list, and a delete list. The add/delete list symbolizes the states added/deleted to/from the environment in which the agent functions. Each agent is connected to the network through these states. In addition, as shown in Fig. 1, ANA contains successor/predecessor/conflictor links. A successor link connects an agent to the following agent that can be executed if the agent’s function is successful. A predecessor link is a link from one agent to another agent that can satisfy the preconditions for executing the agent (link by condition list). A conflictor link is a link from one agent to another agent that cannot be executed if the agent is executed (link by delete list).

ANA differs from SA in that its architecture controls agents by activity propagation rather than a hierarchy. If the state of the environment matches the conditions for the agent to function, activity propagation is used to activate it. Each goal also propagates activity to the agent that can achieve it, and that agent in turn propagates activity to the agents that can activate it. The successor link (from the state of the environment) is the activity propagation for immediate planning, while the predecessor link (from the goal) is the activity propagation for deliberative planning. When agents have competing preconditions, the one that accumulates the least amount of activity propagation (conflictor link) is used to attenuate the activity propagation. This mechanism enables dynamic planning in response to the environment. With the recent development of deep learning, there has been much research on acquiring tacit knowledge from large-scale AI models, and further development is expected by incorporating the knowledge acquired from these large-scale AI models as agents. For example, the design of agents, which is currently done manually, can be made more scalable while maintaining consistency by utilizing knowledge acquired from large-scale AI models. Furthermore, by combining a large-scale AI model with AASN, we can expect to achieve a better balance between the immediate planning and the deliberative planning required to deal with dynamic environments.

2.2 MRR planning

ANA can only receive discrete binary values of the state of the environment. However, humans deal with continuous values, such as how hungry or sleepy they are. Therefore, MRR planning incorporates continuous values of the environment and its internal state into the agent so that it can dynamically switch between immediate planning and deliberative planning in response to the environment from a more meta-perspective (Fig. 2). MRR planning consists of a planning agent (P agent), a behavior agent (B agent), and a behavior selection agent (BS agent). The P agent encompasses a group of agents that decide which goal to plan for and propagate the activity to the decided action (B agent) to be taken for the object. The BS agent monitors the activity propagation accumulated in the B agents and decides which B agent to execute. The value of the activity propagation set by the P agent is determined using two functions.

The first is a function that multiplies the environment information “env_info” by the agent’s intensity I as in Eq. 1, where a specific value is sent as an active propagation when the condition is met (e.g., the Player has a Tile). Note that the “env_info” is derived from the environment or from the agent itself, such as hunger or distance from others. Eq. 1 is applied to P agents that need to be processed in deliberative planning. For example, a P agent that acquires a Tile or acquires a point by placing a Tile in a Hole uses Eq. 1 to perform deliberative planning when there is no urgency. MRR planning allows for meta-planning, whether “env_info” is discrete or continuous, while still achieving the larger goal.

The second is a function that multiplies the inverse of “env_info” by the agent’s intensity as in Eq. 2. Using Eq. 2, the larger the value obtained from the environment or the agent itself, the smaller the value of the active propagation. Conversely, the smaller the obtained value becomes, the more rapidly the active propagation increases. Therefore, Eq. 2 should be applied to P agents that need to respond immediately. For example, a P agent avoiding an enemy or a P agent re-supplying its energy utilizes Eq. 2 so that it can respond immediately in the case of urgency.
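The two functions can be written out compactly. The following is a reconstruction from the prose description only, not the published typesetting of Eqs. 1 and 2; the symbols \(a\) (the propagated activity) and \(e\) (shorthand for “env_info”) are notation assumed here for illustration.

```latex
% Reconstructed from the text; a and e are assumed notation.
\begin{align*}
a_{\text{deliberative}} &= I \cdot e            && \text{(form described for Eq. 1)} \\
a_{\text{immediate}}    &= I \cdot \frac{1}{e}, \quad e > 0 && \text{(form described for Eq. 2)}
\end{align*}
```

Under this reading, an enemy at distance \(e = 1\) produces ten times the activity of an enemy at distance \(e = 10\) for the same intensity, which is why the inverse form suits agents that must react to urgency.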

2.3 Applied research in AASN

Although AASN is a relatively old method, it is still attracting fresh research interest today.

One example is its application to a conversation system with a human user [10, 11], where it is utilized in the decision part with the user to make appropriate conversation choices. Users having a conversation with this system exhibited more trust compared to when a conventional method was used.

There are also hybrid systems that combine AASN with other methods [12, 13]. In these systems, the goal selection is performed by Stanford Research Institute Problem Solver (STRIPS) [14]-like classical planning methods, which reduces the number of active propagations and enables complex multiple goals to be achieved.

The architecture parameters described thus far are global parameters, meaning that all agents have the same parameters for planning. All of these parameters need to be designed manually.

In [15], the hybrid approach combining AASN with symbolic planning is described as the concept with the most potential for achieving a method that is simultaneously adaptive and goal-driven. It is particularly promising in that it enables integration of self-adaptation and self-organizing functions. However, the risk is that AASN might fall into unnecessary cycles by tailoring the network parameters to the specific application. In other words, this architecture is helpful if the parameters are well adjusted, but if they are not, the usefulness will diminish.

Previous work has addressed this problem by implementing learning in AASN [16, 17]. Romero [16] uses an evolutionary behavioral model for decision making, where the structure and global parameters of AASN are evolved using gene expression programming (GEP). Although the network’s topology structure and global parameters can be automatically adjusted, the evolution becomes more difficult when the number of agents and states increases. Hrabia et al. [17] also added reinforcement learning to the activity propagation mechanism. The study is critical of learning the threshold and intensity but does not experiment with ways of adjusting them. Moreover, the performance is not necessarily much better than other methods.

Therefore, this paper focuses on automatic parameter adjustment. We set each agent to have a different parameter set instead of using a standard global parameter set for all agents. The parameters are then automatically adjusted using evolutionary computation methods. This approach enables us to distinguish between agents that need to act immediately and those that need to be more deliberate, which leads to more flexible planning in a dynamic environment.

3 Learning

In this section, we explain how evolutionary computation can be utilized as a parameter tuning method in Sect. 3.1 and then discuss four methods of evolutionary computation we used in Sects. 3.2–3.5.

3.1 Evolutionary computation

Evolutionary computation [18] mimics the evolution of living organisms and is an optimization method that searches for approximate solutions by retaining individuals that are better adapted to the environment.

The main advantages of evolutionary computation are as follows:

- Because it is a non-gradient algorithm, it can be applied even if the objective function is discrete. In other words, it is possible to find a solution without depending on the solution method of a specific problem.

- Even if it is not optimal, a solution can be obtained within a practical time range.

- It can be applied without changing the form of the parameters to be optimized.

The reward function is non-gradient because the reward of an agent in AASN is derived from the changes in the environment and the agent’s state as a result of planning. Therefore, gradient-based optimization cannot be used to adjust parameters if both immediate and deliberative actions are to be performed. In the case of reinforcement learning [19], the parameters need to be replaced by Q values and then updated, resulting in information loss. Therefore, to meet the requirements of our paper, we opted to use evolutionary computation to adjust the parameters.

We utilized four types of evolutionary computation: genetic algorithm (GA) [20], particle swarm optimization (PSO) [21], differential evolution (DE) [22], and NeuroEvolution of Augmenting Topologies (NEAT) [23].

3.2 GA

GA is an algorithm that produces the next generation of individuals through selection, crossover, mutation, and elite strategies. In selection and crossover, the genes of two individuals are combined to search for a better combination of gene sequences. In our method, roulette selection is applied to select two individuals as parents, and a uniform crossover is used to create offspring. The probability of each individual being selected by roulette selection in generation t is denoted by Eq. 3, where \(p_{i}\) is the probability of the ith individual being selected. For simplicity, let N be the number of individuals, \(fitness_{i}\) be the fitness of individual i, and t be the number of generations.
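For reference, roulette (fitness-proportionate) selection is conventionally written as below. This is the standard textbook form, stated with the symbols defined above; it is offered as a plausible reading of Eq. 3 rather than a verbatim copy of it.

```latex
% Standard fitness-proportionate selection; assumed to match Eq. 3.
\[
p_{i}(t) = \frac{fitness_{i}(t)}{\sum_{k=1}^{N} fitness_{k}(t)}
\]
```

For example, with three individuals of fitness 1, 3, and 6, the selection probabilities would be 0.1, 0.3, and 0.6.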

Mutation enables the exploration of hitherto unexplored parameter values by randomly changing them with a certain probability. Mutated values are kept within each parameter’s defined range. The elite strategy also enables a more stable parameter search by saving some good individuals of the current generation for the next generation.
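The four GA operators described in this section can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: individuals are assumed to be flat lists of floats, mutation is assumed to redraw a gene uniformly within its range, and all function names and arguments are hypothetical.

```python
import random


def roulette_select(population, fitnesses, rng):
    """Pick one individual with probability fitness_i / sum(fitnesses)."""
    total = sum(fitnesses)
    if total <= 0:
        # Assumption: fall back to a uniform choice when no fitness is positive.
        return rng.choice(population)
    pick = rng.uniform(0, total)
    running = 0.0
    for individual, fit in zip(population, fitnesses):
        running += fit
        if pick < running:
            return individual
    return population[-1]


def uniform_crossover(parent_a, parent_b, rng):
    """Take each gene from either parent with equal probability."""
    return [a if rng.random() < 0.5 else b for a, b in zip(parent_a, parent_b)]


def mutate(individual, rate, low, high, rng):
    """With probability `rate`, redraw a gene uniformly inside [low, high]."""
    return [rng.uniform(low, high) if rng.random() < rate else g
            for g in individual]


def next_generation(population, fitnesses, n_elite, rate, low, high, rng):
    """Keep the n_elite best individuals, fill the rest with offspring."""
    ranked = sorted(zip(fitnesses, population), key=lambda p: p[0], reverse=True)
    elites = [list(ind) for _, ind in ranked[:n_elite]]
    children = []
    while len(elites) + len(children) < len(population):
        a = roulette_select(population, fitnesses, rng)
        b = roulette_select(population, fitnesses, rng)
        child = uniform_crossover(a, b, rng)
        children.append(mutate(child, rate, low, high, rng))
    return elites + children
```

Passing an explicit `random.Random` instance keeps runs reproducible, which is convenient when comparing tuning methods across repeated trials.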

3.3 PSO

PSO is a method of optimizing the degree of adaptation by each particle’s position \(X_{i}\). Six additional variables are handled in this calculation: the particle’s velocity \(V_{i}\), the personal best position \(X^{{\textrm{pbest}}}_{i}\), the global best position \(X^{{\textrm{gbest}}}\), the coefficient w (referring to how much the velocity at the previous time is considered), the coefficient \(c_{1}\) (how much the individual optimal position is considered), and the coefficient \(c_{2}\) (how much the global optimal position is considered). The position of the particle at time \(t+1\) is shown in Eq. 4 and its velocity in Eq. 5.
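The update described above can be sketched in code. The sketch assumes the standard PSO formulation, in which the velocity is updated first and then added to the position, with uniform random factors \(r_{1}\) and \(r_{2}\) scaling the two attraction terms; these random factors and the clamping of positions to the parameter range are assumptions not stated in the text, and the function name is hypothetical.

```python
import random


def pso_step(position, velocity, pbest, gbest, w, c1, c2, rng,
             low=0.0, high=100.0):
    """One standard PSO update for a single particle.

    v(t+1) = w*v(t) + c1*r1*(pbest - x(t)) + c2*r2*(gbest - x(t))
    x(t+1) = x(t) + v(t+1)
    """
    new_position, new_velocity = [], []
    for x, v, pb, gb in zip(position, velocity, pbest, gbest):
        r1, r2 = rng.random(), rng.random()  # assumed random factors in [0, 1)
        v_next = w * v + c1 * r1 * (pb - x) + c2 * r2 * (gb - x)
        # Assumption: keep the parameter inside its allowed range.
        x_next = min(high, max(low, x + v_next))
        new_velocity.append(v_next)
        new_position.append(x_next)
    return new_position, new_velocity
```

A particle that already sits at both its personal best and the global best with zero velocity does not move, which illustrates why PSO without an explicit mutation step can stall once the swarm has converged.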

3.4 DE

DE is an algorithm that mutates the parameters of an individual of interest using the differences of the surrounding populations. The parameters are randomly arranged in the early stages of the search, so the differences are significant, and a global search is performed. In contrast, individuals with close parameters are more likely to be selected at the end of the search, so the differences are minor, and a local search can be performed.

We use DE/rand/1/bin in this paper. The following is a description of the DE/rand/1/bin algorithm. First, a set of parameters of N initial search points is randomly generated. Next, for each search point \(X_{i}(t)\), three search points \(X_{r1}(t)\), \(X_{r2}(t)\), and \(X_{r3}(t)\) other than itself are randomly selected. We generate the mutation point \(V_{i}(t)\) using Eq. 6, where \(F\in [0,1]\) is a constant that indicates how much the difference between the search points \(X_{r2}(t)\) and \(X_{r3}(t)\) is taken into account.

Then, from the search and mutation points, we use Eq. 7 and the crossover rate \(cr\in [0,1]\) to determine, for each parameter, which of the two values becomes the parameter of the candidate point. In Eq. 7, j is the index of each parameter and \(rand_{j}\in (0,1)\) denotes a sample from a uniform distribution over 0–1.

\(U_{i}(t)\) represents a group of parameters of one individual, and \(U_{ij}(t)\) represents each parameter present in one individual. The fitness of the obtained candidate point \(U_{i}(t)\) is compared with that of the original search point \(X_{i}(t)\), and the individual with the higher fitness is designated as the next-generation individual.
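The three steps of DE/rand/1/bin (mutation, binomial crossover, and selection) can be sketched as below. The mutation and crossover follow the standard definitions, \(V_{i} = X_{r1} + F\,(X_{r2} - X_{r3})\) and \(U_{ij} = V_{ij}\) when \(rand_{j} \le cr\). Two details are assumptions beyond the text: the forced index `j_rand`, which the usual formulation adds so that at least one parameter comes from the mutation point, and the clamping of values to the parameter range. The function name and arguments are hypothetical.

```python
import random


def de_rand_1_bin_step(population, fitness_fn, F, cr, rng,
                       low=0.0, high=100.0):
    """One generation of DE/rand/1/bin over a list of float vectors."""
    n = len(population)        # requires n >= 4
    dim = len(population[0])
    next_population = []
    for i, x in enumerate(population):
        # Three distinct search points other than the current one.
        r1, r2, r3 = rng.sample([k for k in range(n) if k != i], 3)
        mutant = [population[r1][j] + F * (population[r2][j] - population[r3][j])
                  for j in range(dim)]
        mutant = [min(high, max(low, m)) for m in mutant]  # assumed clamping
        # Binomial crossover; j_rand guarantees one gene from the mutant.
        j_rand = rng.randrange(dim)
        trial = [mutant[j] if (rng.random() <= cr or j == j_rand) else x[j]
                 for j in range(dim)]
        # Selection: the candidate replaces the original only if it is fitter.
        if fitness_fn(trial) > fitness_fn(x):
            next_population.append(trial)
        else:
            next_population.append(list(x))
    return next_population
```

Because selection is greedy per individual, the fitness of each slot in the population never decreases from one generation to the next.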

3.5 NEAT

NEAT is a GA-based method for optimizing the structure and weights of a neural network. For this purpose, information on nodes and connections between nodes is represented by gene arrays. The genotypes encode the nodes and the connections between them, and the corresponding phenotypes are the network structures. As the network structure evolves, the weights are adjusted by applying the selection, crossover, mutation, and elite strategies of GA to these genotypes.

In our method, we adjust the parameters by evolving a network with the agent’s name as input and its parameters as output. Therefore, NEAT does not evolve the parameters and structure of AASN itself but rather the network’s structure for outputting the agent’s parameters and the parameters within that network. In other words, NEAT can be used to infer the parameters of an agent from the agent’s semantics, and it can handle an increase in the number of agents. Similar agents can be set to similar parameters.

4 Proposed architecture

In this section, we describe AASN used as the proposed system in Sect. 4.1, and explain how to apply evolutionary computation to the AASN in Sect. 4.2.

4.1 AASN

The architecture we used combines ANA and MRR-planning methods introduced in Sects. 2.1 and 2.2. Specifically, ANA incorporates the continuous values used in MRR planning. In ANA, the parameters of the agents are controlled as global parameters, while in MRR planning, each agent has its own parameters.

Each agent has three parameters that determine whether it behaves as an immediate or a deliberative agent, so this does not have to be designed in advance: the threshold \(T_{i}\), the intensity \(I_{i}\), and the function selection ratio \(r_{i}\), which is the ratio at which Eqs. 1 and 2 are used for active propagation. Agents exchange activity propagation with other agents and the environment, thereby accumulating an activation level for each. When the activation level of \(agent_{i}\) exceeds its threshold \(T_{i}\), the agent performs its function and resets its activation level to 0. The value of the activity propagation from \(agent_{i}\) to other agents is determined by the intensity \(I_{i}\) and the function selection ratio \(r_{i}\), which determines whether the agent is a responsive agent or a contemplative agent by setting the proportion in which Eqs. 1 and 2 are used. When \(r_{i}\) is small, the proportion using Eq. 2 is large, and the agent is responsive to the environment. Conversely, when \(r_{i}\) is large, the agent uses Eq. 1 a large percentage of the time, always sending the same stimuli, which makes it a deliberative agent.
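A minimal sketch of one such agent is given below. How \(r_{i}\) combines the two functions is not spelled out in the text, so the sketch assumes one plausible reading: \(r_{i}\) (on a 0 to 100 scale) is the percentage chance of using the Eq. 1 form on a given propagation. The class and method names are hypothetical, and the small constant guarding against division by zero is also an assumption.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    threshold: float        # T_i
    intensity: float        # I_i
    ratio: float            # r_i on a 0-100 scale
    activation: float = 0.0

    def outgoing_activity(self, env_info, rng):
        """Activity sent to other agents for the current env_info."""
        # Assumption: r_i is the percent chance of choosing the Eq. 1 form.
        if rng.random() * 100.0 < self.ratio:
            return self.intensity * env_info          # deliberative (Eq. 1 form)
        return self.intensity / max(env_info, 1e-6)   # immediate (Eq. 2 form)

    def receive(self, amount):
        """Accumulate activity; fire and reset once the threshold is exceeded."""
        self.activation += amount
        if self.activation > self.threshold:
            self.activation = 0.0
            return True
        return False
```

Under this reading, an agent with `ratio=0` always reacts through the inverse form and so responds sharply to small `env_info` values, while an agent with `ratio=100` always uses the proportional form.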

By automatically adjusting these parameters, we should be able to create agents without having to consider whether they are responsive or contemplative during the agent design phase. In this paper, we utilize NEAT, which adjusts the parameters by considering the agent’s name, so we changed the names from abstract ones (e.g., “Planning Enemy”) to concrete ones (e.g., “Avoid Enemy”). “Planning X” only considers the target X, but by separating this into “Go to X” and “Avoid X”, it is possible to adjust parameters considering both the action (go to, avoid) and the target (X).

The actual architecture we use in tile world is shown in Fig. 3. Sensor agents are agents that receive the appropriate “env_info”. “Sensor Enemy” receives the distance from the Enemy as “env_info”. When the Enemy is close, it is desirable to take the action of running away, and when it is far away, it is not necessary to plan an evasive action for it. “Sensor Tile” and “Sensor Hole” receive a binary value as “env_info” indicating whether the Player has a Tile or not. If the situation is not urgent, the agent can think about its route contemplatively in accordance with whether it has a Tile or not and whether it should head for a Tile or a Hole to gain points. “Sensor Base” receives the Player’s energy as “env_info”. When the amount of energy is low, the Player needs to move toward the Base to obtain energy.

Sensor agents perform activity propagation to the corresponding group of planning agents in accordance with the value of “env_info”. The arrows in Fig. 3 represent the flow of activity propagation. “Sensor Enemy” receives the distance to the Enemy at every step and sends the activity propagation to “Avoid Enemy” according to this value. When the activity accumulated in “Avoid Enemy” exceeds its threshold, it propagates activity to the action agent for the direction that leads away from the Enemy. (If the Enemy approaches from above, it propagates its activity to the “Down” action agent.) Similarly, when the action agent is activated, it is instructed to execute the action. The same planning is performed for the Tiles, Holes, and Base. By using “env_info”, a value from the environment and the Player’s own state, to propagate activity, the planning can be done flexibly in accordance with the dynamic environment.

4.2 Application of evolutionary computation

This section describes how we apply evolutionary computation to AASN.

The first phase is the generation of initial individuals. We focus here on three parameters of AASN: the threshold value (threshold), the stimulus strength (intensity), and the ratio at which Eqs. 1 and 2 are used (function selection ratio). The number of agents is N, the threshold of the i-th agent is \(T_{i}\), the intensity is \(I_{i}\), and the function selection ratio is \(r_{i}\). In GA, PSO, and DE, each is given a random value in the range of \(0 \le T_{i} \le 100\), \(0 \le I_{i} \le 100\), and \(0 \le r_{i} \le 100\). In NEAT, the network input is a vector representation (one-hot encoding) of the words in each agent’s name, and the output is the parameter set for each agent, \(T_{i}\), \(I_{i}\), and \(r_{i}\). The network structure of the initial individual used in NEAT is shown in Fig. 4.
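The two kinds of initial individual can be sketched as follows. The exact encoding is not given in the text, so this sketch assumes that agent names are split on whitespace and that each word sets one position of the input vector; the vocabulary construction and all function names are hypothetical.

```python
import random


def build_vocabulary(agent_names):
    """Map every distinct word appearing in the agent names to an index."""
    words = sorted({w for name in agent_names for w in name.split()})
    return {w: i for i, w in enumerate(words)}


def encode_name(name, vocab):
    """Word-level one-hot vector for one agent name (assumed NEAT input)."""
    vec = [0] * len(vocab)
    for w in name.split():
        vec[vocab[w]] = 1
    return vec


def random_individual(n_agents, rng):
    """Random T_i, I_i, r_i in [0, 100] per agent (GA, PSO, and DE case)."""
    return [{"T": rng.uniform(0, 100),
             "I": rng.uniform(0, 100),
             "r": rng.uniform(0, 100)}
            for _ in range(n_agents)]
```

With this encoding, “Go to Tile” and “Go to Hole” share the positions for “Go” and “to”, so a network that maps the vector to parameters receives similar inputs for agents with similar names, which is the property the name-based approach relies on.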

Next, we calculate the fitness of the individuals generated in the initial individual generation phase, as expressed in Eq. 8.

Then, the next generation of individuals is generated by applying the update rule of the evolutionary computation method in use (GA, PSO, DE, or NEAT).

This iterative process of calculating the degree of fitness and updating the individuals is continued until the termination condition is satisfied. We define the termination condition as either “the predetermined average individual fitness is exceeded” or “the predetermined number of epochs is reached”. When the termination condition is met, the algorithm outputs the individual with the highest fitness and finishes learning the parameters.
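The loop described above can be sketched generically. The callables `evaluate` (runs one individual and returns its fitness) and `update` (produces the next generation with GA, PSO, DE, or NEAT) are hypothetical placeholders, as are the function and argument names.

```python
def evolve(population, evaluate, update, target_mean_fitness, max_epochs):
    """Evaluate and update until either termination condition is met.

    Returns the best individual, its fitness, and the number of epochs run.
    """
    fitnesses = [evaluate(ind) for ind in population]
    epoch = 0
    while (epoch < max_epochs
           and sum(fitnesses) / len(fitnesses) <= target_mean_fitness):
        population = update(population, fitnesses)
        fitnesses = [evaluate(ind) for ind in population]
        epoch += 1
    best = max(range(len(population)), key=lambda i: fitnesses[i])
    return population[best], fitnesses[best], epoch
```

Keeping the update rule behind a single callable means the same loop serves all four methods, so differences in the results come from the update rule rather than from the surrounding bookkeeping.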

The flow of the proposed algorithm is schematically shown in Fig. 5.

The hyperparameters of the evolutionary computation used in this paper are listed in Table 1. The hyperparameters for NEAT are taken from the Neat-Python software.

5 Experiments

In this section, we describe the experimental environment in Sect. 5.1, and then explain the details of each of the four experiments we conducted in Sects. 5.2–5.5.

5.1 Experimental environment

We utilized an extended version of tile world [9] for the experimental environments, as done in a variety of previous planning studies [26, 27]. The initial state of tile world is shown in Fig. 6, where the Player, Tile, Hole, Enemy, Base, and Obstacle are depicted. The Player has several goals: to efficiently collect the Tiles and Holes, to avoid the Enemy and Obstacles, and to replenish its energy at the Base. The details of each object are listed in Table 2. In addition, since we have created AASN in line with this environment, we show the functions of the agents in Table 3.

In our experiments, “env_info” is a value that a sensor agent in the architecture can determine from its environment and the player’s state. This value is different for each sensor agent. “Sensor Enemy” receives the distance from the Enemy as “env_info”. “Sensor Tile” and “Sensor Hole” receive a binary value as “env_info” indicating whether the player has a Tile or not. “Sensor Base” receives the player’s energy as “env_info”.

The degree of fitness of the player was determined using Eq. 9 in accordance with the number of Tiles and Holes acquired.

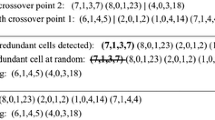

5.2 Experiment 1: Comparison of learning method and learning target

In this experiment, we compared the learning method (“Method”) and learning target (“Target”) shown in Fig. 6. The fitness for learning was determined in accordance with the number of Tiles and Holes acquired by the agent (maximum fitness: 12). We define “Target” as \(2^{3}-1=7\) patterns of learning or fixing the threshold, intensity, and function selection ratio of the parameters of the network (when fixed, the threshold is 50, the intensity is 20, and the function selection ratio is 50). In total, 28 patterns were trained, and we compared the changes in learning fitness for each. The point of this experiment was to determine which “Method” and “Target” are suitable for learning the parameters of AASN.
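The 28 patterns can be enumerated mechanically: each of the three parameters is either learned or fixed, which gives \(2^{3}=8\) combinations, and removing the one where nothing is learned leaves 7 targets for each of the 4 methods. The sketch below labels each target with three letters in the order threshold, intensity, function selection ratio (T for learned, F for fixed); the function and constant names are hypothetical.

```python
from itertools import product

METHODS = ["GA", "PSO", "DE", "NEAT"]
# Values used when a parameter is fixed rather than learned.
FIXED_VALUES = {"threshold": 50, "intensity": 20, "function_selection_ratio": 50}


def target_patterns():
    """All learn/fix combinations except the one where nothing is learned."""
    patterns = []
    for flags in product([True, False], repeat=3):
        if any(flags):
            patterns.append("".join("T" if f else "F" for f in flags))
    return patterns


def experiment_grid():
    """Every (method, target) pair trained in Experiment 1."""
    return [(method, target)
            for method in METHODS
            for target in target_patterns()]
```

For instance, the pattern "TFT" denotes learning the threshold and function selection ratio while keeping the intensity fixed at 20.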

5.3 Experiment 2: Comparison of learning by changing the parameters of tile world

In this experiment, we used the best combination of “Target” and “Method” obtained in Experiment 1 to compare how learning occurs when the parameters in the tile world are changed. Specifically, we compared the results when the amount of energy that the Enemy can take from the player (“Enemy_gets_energy”) is changed to (10, 30, 50) and when the amount of energy that the player can acquire from the Base (“energy_in_Base”) is changed to (10, 20, 30). (Note that in Experiment 1, “Enemy_gets_energy” was set to 30, and “energy_in_Base” was set to 20.) The point of this experiment was to examine how parameter learning adapts to the environment.

5.4 Experiment 3: Comparison of changes in behavior generation by adding a new object to tile world

In this experiment, we placed an additional object named Money in the tile world environment (Fig. 7). The point of this experiment was to determine whether, once an appropriate agent for the new object is designed in AASN, a player can acquire the Money by adjusting its parameters appropriately in NEAT using the agent’s meaning. With the addition of Money, the agents “Sensor Money”, “Go to Money”, and “Get Money” were added to AASN. The parameters of these agents were given random values for GA, DE, and PSO, while the values for NEAT were given using the trained network.

5.5 Experiment 4: Comparison of learning by changing object placement in tile world

In this experiment, we used the best learning “Method” obtained in Experiment 1 and changed the environment of tile world, as shown in Fig. 8. The point of this experiment was to determine if the learning proceeds in the same way as in Experiment 1, even when the environment is significantly changed.

6 Results and discussion

6.1 Experiment 1

Tables 4 and 5 list the average of the maximum fitness and the number of epochs taken to obtain the maximum fitness by each “Method” and “Target”, respectively. In the tables, from left to right, values such as TTF indicate whether the threshold, intensity, and function selection ratio were used to learn the parameters or were fixed, e.g., TTF \(\longrightarrow \) threshold: True, intensity: True, function selection ratio: False.

First, we consider “Method”. We can see in Table 4 that the maximum fitness is larger in the order of NEAT > GA > DE > PSO, and the number of epochs required to obtain the maximum fitness is smaller in the order of NEAT < GA < PSO < DE. These comparisons also show that there is a large difference in the degree of fitness between NEAT/GA and DE/PSO. This may be due to the fact that there is a mutation operation in NEAT and GA, but not in DE and PSO. Mutation allows for a wider range of searches and is presumed to prevent falling into a local solution. The difference in the degree of adaptation between NEAT and GA can be attributed to whether the parameters are adjusted in consideration of the meaning of the agents. NEAT converts the names of agents into a vector and uses this information to adjust the parameters, whereas GA does not and instead assigns parameters randomly. Therefore, the parameters that can be obtained differ depending on the algorithm used. In this experiment, we found that NEAT, a method that does not fall into local solutions and that considers the meaning of agents, is most suitable for adjusting the parameters of AASN.

As for “Target”, Table 5 shows that the case in which the threshold and function selection ratio are learned and the intensity is fixed (TFT) gives the best values for both the maximum fitness and the number of epochs taken to reach it. There are two possible reasons for this: first, adjusting the threshold controls how easily each agent is executed, and second, adjusting the function selection ratio distinguishes between agents that require immediate action and those that require deliberative action. These two parameters are presumably what enable the achievement of multiple goals and the generation of actions that match the dynamically changing environment. In AASN, the stimulus is determined by selecting between two functions, so also adjusting the intensity would have made the adjustment more complex. This suggests that the intensity is better left fixed.

Therefore, in Experiment 2, we trained with the combination of the best “Method” (NEAT) and the best “Target” (threshold and function selection ratio). In Experiment 3, we used the parameters obtained in Experiment 1 by each “Method” with this “Target” (threshold and function selection ratio). In Experiment 4, we trained this “Target” (threshold and function selection ratio) with every “Method”.

6.2 Experiment 2

Tables 6 and 7 show the average function selection ratio when “Enemy_gets_energy” and “energy_in_Base” are changed, respectively. The function selection ratio of “Enemy Sensor” is significantly affected by these changes, whereas that of “Tile Sensor” is not. As mentioned earlier, a small function selection ratio indicates an immediate agent (Eq. 2), while a large one indicates a deliberative agent (Eq. 1). When “Enemy_gets_energy” increases, the function selection ratio of “Enemy Sensor” decreases. This may be because the threat of the Enemy becomes more immediate in this case. Conversely, when “energy_in_Base” increases, the function selection ratio of “Enemy Sensor” increases. This is presumably because, when the energy obtained from the Base is large, the Player can afford to lose some of its energy, and so its response to the Enemy becomes less immediate. The fact that the function selection ratio of “Tile Sensor” remains unchanged indicates that the Player acted deliberately when it had time, regardless of the situation. These results confirm that evolutionary computation enables appropriate parameter learning in response to changes in the environment.

6.3 Experiment 3

The number of Tiles, Holes, and Money that changed due to the addition of a new object is shown in Table 8. As we can see, NEAT acquired the largest number of all three compared to the other “Method”. In other words, the network trained by NEAT can adjust the parameters for new agents more appropriately. NEAT performs parameter tuning based on the meaning of the agent, which is presumably why a more generalized network representation can be acquired.

6.4 Experiment 4

Figure 9 shows the fitness curves for each “Method”, where we can see that the learning progresses well in the order of NEAT> GA> DE> PSO. This is consistent with the results and discussion presented in Experiment 1, and confirms that the parameter learning results change depending on the nature of “Method”. In addition, as shown in Experiment 1, we found that the best way to train AASN is to use NEAT while learning the threshold and function selection ratio.

7 Conclusion

In this paper, we applied evolutionary computation to the parameter learning of AASN and experimentally investigated the properties of the evolutionary computation methods and of the parameter learning. Our findings showed that learning the threshold and function selection ratio with NEAT is the most efficient way to learn the parameters. There are two main requirements for learning in a setting with a large number of parameters and many local solutions: first, the method should be able to search widely enough to avoid being trapped in a local solution (e.g., through mutation), and second, it should be able to adjust the parameters by considering the meaning of each module. We also found that NEAT could effectively acquire parameters corresponding to changes in the environment.

To utilize the agent activation spreading network with agents in the real world, additional research on automatic module generation will be necessary. In the case of the small environment (tile world) examined in this paper, modules can be created manually, but in the real world, the number of modules is enormous. Therefore, our future work will involve improving the method so that it can automatically generate modules and adjust their parameters.

Data availability

No data was used for the research described in the article.

References

Hafner D, Lillicrap T, Norouzi M, Ba J (2020) Mastering Atari with discrete world models. https://doi.org/10.48550/arXiv.2010.02193

Chen L, Lu K, Rajeswaran A, Lee K, Grover A, Laskin M, Abbeel P, Srinivas A, Mordatch I (2021) Decision transformer: reinforcement learning via sequence modeling. Adv Neural Inf Process Syst 34:15084–15097

Brooks R (1986) A robust layered control system for a mobile robot. IEEE J Robot Autom 2(1):14–23

Maes P (1991) The agent network architecture (ANA). ACM SIGART Bull 2(4):115–120

Jones JL (2006) Robots at the tipping point: the road to iRobot Roomba. IEEE Robot Autom Mag 13(1):76–78

Brown T, Mann B, Ryder N, Subbiah M, Kaplan JD, Dhariwal P, Neelakantan A, Shyam P, Sastry G, Askell A (2020) Language models are few-shot learners. Adv Neural Inf Process Syst 33:1877–1901

West P, Bhagavatula C, Hessel J, Hwang JD, Jiang L, Bras R, Lu X, Welleck S, Choi Y (2021) Symbolic knowledge distillation: from general language models to commonsense models. https://doi.org/10.48550/arXiv.2110.07178

Kurihara S, Aoyagi S, Onai R, Sugawara T (1995) Adaptive selection of reactive/deliberate planning for the dynamic environment. Robot Auton Syst 24(3–4):183–195

Pollack ME, Ringuette M (1990) Introducing the tile world: experimentally evaluating agent architectures. AAAI 90:183–189

Romero OJ, Zhao R, Cassell J (2017) Cognitive-inspired conversational-strategy reasoner for socially-aware agents. In: International joint conferences on artificial intelligence, pp 3807–3813

Pecune F, Chen J, Matsuyama Y, Cassell J (2018) Field trial analysis of socially aware robot assistant. In: Proceedings of the 17th international conference on autonomous agents and multiagent systems, pp 1241–1249

Yang KM, Cho SB (2013) STRIPS planning with modular behavior selection networks for smart home agents. In: 2013 IEEE 10th international conference on ubiquitous intelligence and computing and 2013 IEEE 10th international conference on autonomic and trusted computing, pp 301–307

Hrabia CE, Wypler S, Albayrak S (2017) Towards goal-driven behaviour control of multi-robot systems. In: 2017 3rd international conference on control, automation and robotics, pp 166–173

Fikes RE, Nilsson NJ (1971) STRIPS: a new approach to the application of theorem proving to problem solving. Artif Intell 2(3–4):189–208

Hrabia CE, Lützenberger M, Albayrak S (2018) Towards adaptive multi-robot systems: self-organization and self-adaptation. Knowl Eng Rev 33:1

Romero OJ (2011) An evolutionary behavioral model for decision making. Adapt Behav 19(6):451–475

Hrabia CE, Lehmann PM, Albayrak S (2019) Increasing self-adaptation in a hybrid decision-making and planning system with reinforcement learning. In: 2019 IEEE 43rd annual computer software and applications conference, vol 1, pp 469–478

Slowik A, Kwasnicka H (2020) Evolutionary algorithms and their applications to engineering problems. Neural Comput Appl 32:12363–12379

Sutton RS, Barto AG (1998) Reinforcement learning: an introduction. MIT Press, Cambridge

Holland JH (1992) Adaptation in natural and artificial systems: an introductory analysis with applications to biology, control, and artificial intelligence. MIT Press, London

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: ICNN’95 international conference on neural networks, vol 4, pp 1942–1948

Storn R, Price K (1997) Differential evolution: a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11(4):341–359

Stanley KO, Miikkulainen R (2002) Evolving neural networks through augmenting topologies. Evol Comput 10(2):99–127

Slowik A, Kwasnicka H (2020) Evolutionary algorithms and their applications to engineering problems. Neural Comput Appl 32(16):12363–12379

Galván E, Mooney P (2021) Neuroevolution in deep neural networks: current trends and future challenges. IEEE Trans Artif Intell 2(6):467–493

Li X, Yang M, Wu S (2018) Niching genetic network programming with rule accumulation for decision making: an evolutionary rule-based approach. Expert Syst Appl 114:374–387

Cicirelli F, Giordano A, Nigro L (2015) Efficient environment management for distributed simulation of large-scale situated multi-agent systems. Concurr Comput Pract Exp 27(3):610–632

Acknowledgements

This work was supported by a grant through the NEDO/Technology Development Project on Next-Generation Artificial Intelligence Evolving Together With Humans “Constructing An Interactive Story-Type Contents Generation System”.

Additional information

This work was presented in part at the joint symposium of the 27th International Symposium on Artificial Life and Robotics, the 7th International Symposium on BioComplexity, and the 5th International Symposium on Swarm Behavior and Bio-Inspired Robotics (Online, January 25–27, 2022).

About this article

Cite this article

Shimokawa, D., Yoshida, N., Koyama, S. et al. Automatic parameter learning method for agent activation spreading network by evolutionary computation. Artif Life Robotics 28, 571–582 (2023). https://doi.org/10.1007/s10015-023-00873-z
