Abstract

Objectives

Diagnosing oral potentially malignant disorders (OPMD) is critical to prevent oral cancer. This study aims to automatically detect and classify the most common pre-malignant oral lesions, such as leukoplakia and oral lichen planus (OLP), and distinguish them from oral squamous cell carcinomas (OSCC) and healthy oral mucosa on clinical photographs using vision transformers.

Methods

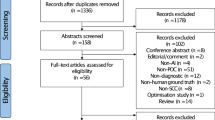

4,161 photographs of healthy mucosa, leukoplakia, OLP, and OSCC were included. Findings were annotated pixel-wise and reviewed by three clinicians. The photographs were divided into 3,337 for training and validation and 824 for testing. The training and validation images were further divided into five folds with stratification. A Mask R-CNN with a Swin Transformer was trained five times with cross-validation, and the held-out test split was used to evaluate the model performance. The precision, F1-score, sensitivity, specificity, and accuracy were calculated. The area under the receiver operating characteristics curve (AUC) and the confusion matrix of the most effective model were presented.

Results

The detection of OSCC with the employed model yielded an F1 of 0.852 and AUC of 0.974. The detection of OLP had an F1 of 0.825 and AUC of 0.948. For leukoplakia the F1 was 0.796 and the AUC was 0.938.

Conclusions

OSCC were effectively detected with the employed model, whereas the detection of OLP and leukoplakia was moderately effective.

Clinical relevance

Oral cancer is often detected in advanced stages. The demonstrated technology may support the detection and observation of OPMD to lower the disease burden and identify malignant oral cavity lesions earlier.

Introduction

Diagnosing oral potentially malignant disorders (OPMD) is crucial in dental examinations. It plays a significant role in providing adequate treatment, educating patients about associated risks, and preventing oral cancer. OPMD refers to a group of mucosal lesions with an increased risk of developing into oral squamous cell carcinoma (OSCC). Therefore, accurate diagnosis, careful observation, or timely resection of OPMD are essential to prevent the transformation into oral cancer [1].

Common OPMD conditions include leukoplakia, proliferative verrucous leukoplakia, erythroplakia, oral submucous fibrosis, and oral lichen planus [1, 2]. Leukoplakia is among the most frequently encountered OPMD, with a global prevalence of 4.11% [3]. It is diagnosed based on clinical presentation and can be defined as a non-wipeable “predominantly white plaque of questionable risk, having excluded other known diseases or disorders that do not carry an increased risk for cancer” [2].

Oral lichen planus (OLP) is an autoimmune chronic inflammatory disease affecting the skin, oral, esophageal, and vaginal mucosa. In its oral form, OLP typically manifests with a distinctive white reticular pattern and can present in erosive, atrophic, bullous, or plaque-like forms [4]. Differential diagnoses for OLP include frictional keratosis, candidiasis, leukoplakia, lupus erythematosus, pemphigus vulgaris, mucous membrane pemphigoid, and chronic ulcerative stomatitis [5]. The prevalence of OLP is approximately 1.27%, with slightly higher rates in women than men [6, 7].

The transformation rate of OPMD varies significantly, ranging from 1.4 to 49.5%. Predicting the risk of malignant transformation remains challenging due to the lack of accurate diagnostic methods [8]. On average, the transformation rate for OLP is 1.4%, while erythroplakia has an average transformation rate of 21% [9,10,11]. Notably, patients with proliferative verrucous leukoplakia have a transformation rate of up to 74% [12, 13].

Various methods are employed to diagnose OPMD, including visual inspection supported by vital staining [14], autofluorescence, and reflectance spectroscopy [15]. Invasive approaches such as brush biopsy with cytologic testing and incision biopsy are also utilized [16, 17]. Cytologic testing of suspicious lesions has demonstrated a sensitivity of 0.92 and a specificity of 0.94 for OPMD and OSCC [1]. However, the diagnostic accuracy of autofluorescence, vital staining, and reflectance spectroscopy in identifying OPMD and OSCC is limited [1]. Autofluorescence shows higher sensitivity (0.90) and specificity (0.72) for suspicious lesions compared to innocuous lesions (sensitivity 0.50, specificity 0.39) [1, 18]. Similarly, vital staining and reflectance spectroscopy exhibit relatively low diagnostic accuracies for suspicious lesions [1].

Deep learning models have gained significant traction in medical image analysis. Specifically, convolutional neural networks (CNN) and vision transformer (ViT) models have emerged as powerful tools for detecting pathologies in photographic images. Previous studies assessed the accuracy of CNN models on photographs of OSCC and of other oral mucosal lesions, including OPMD [5, 19,20,21,22,23,24,25,26,27].

Recognizing the need for alternatives to biopsies performed on subjectively selected areas, the present study investigates the performance of deep learning models in detecting OPMD, specifically leukoplakia and OLP, and OSCC using photographic images of the oral cavity. The study hypothesized that a Mask R-CNN architecture with a Swin Transformer backbone would obtain high accuracy in the multi-class detection of OLP, leukoplakia, and OSCC. By leveraging the capabilities of machine learning models and harnessing the potential of vision transformers, this research aims to advance non-invasive diagnostic methods for OPMD and oral cancer.

Materials and methods

Data description

A total of 4,161 clinical photographs were retrospectively collected from the Department of Oral and Maxillofacial Surgery at Charité – Universitätsmedizin Berlin, Germany. These images comprehensively covered various regions of the oral cavity. The dataset included images featuring OPMD, specifically OLP and leukoplakia, and pictures of OSCC covering TNM stages I to IV. The image resolution was consistently maintained at a minimum of 74 pixels per inch (ppi). All image data were anonymized before analysis. This study was conducted according to the Declaration of Helsinki. The Institutional Review Board (EA2/089/22) approved this study on the 19th of May 2022.

Data annotation

Disorders in the photographs were pixel-wise segmented and labeled as OLP, leukoplakia, or OSCC. Pictures with a different disorder were excluded from this study. Each photo was annotated by different clinicians independently using the DARWIN Version 2.0 software (V7, London, UK). Three clinicians subsequently reviewed and revised all segmented and labeled photographs (TF, DT, TX). The three reviewers have at least ten years of clinical experience and have completed their specialty training. Each clinician was instructed and calibrated in the annotation task using a standardized protocol before the annotation and reviewing process.

Data pre-processing

The annotated pixels were clustered into objects as connected pixels of the same label. Objects close together were aggregated by merging their annotated pixels (Fig. 1, middle row). More specifically, given a label, all objects of that label were morphologically dilated, and groups of overlapping dilated objects were determined. The original pixels from all objects in a group were merged to form a new object, and the original smaller objects were removed. This was repeated for the three labels (Lichen, Leukoplakia, and OSCC).
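The aggregation step described above can be illustrated with a minimal, pure-Python sketch for a single label. It is not the study's code: the function names, the 8-connectivity, the square structuring element, and the default `radius` are assumptions made for illustration, since the text does not state these details.

```python
from itertools import product


def connected_objects(mask):
    """Split a binary mask (list of rows of 0/1) into 8-connected pixel sets."""
    height, width = len(mask), len(mask[0])
    seen, objects = set(), []
    for start in product(range(height), range(width)):
        if not mask[start[0]][start[1]] or start in seen:
            continue
        seen.add(start)
        stack, pixels = [start], set()
        while stack:
            r, c = stack.pop()
            pixels.add((r, c))
            for dr, dc in product((-1, 0, 1), repeat=2):
                nr, nc = r + dr, c + dc
                if (0 <= nr < height and 0 <= nc < width
                        and mask[nr][nc] and (nr, nc) not in seen):
                    seen.add((nr, nc))
                    stack.append((nr, nc))
        objects.append(pixels)
    return objects


def dilate(pixels, radius):
    """Dilate a pixel set with a square element of width 2 * radius + 1."""
    offsets = range(-radius, radius + 1)
    return {(r + dr, c + dc) for r, c in pixels for dr in offsets for dc in offsets}


def merge_nearby_objects(mask, radius=1):
    """Merge objects of one label whose dilated versions overlap."""
    objects = connected_objects(mask)
    dilated = [dilate(obj, radius) for obj in objects]
    parent = list(range(len(objects)))  # union-find over object indices

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(objects)):
        for j in range(i + 1, len(objects)):
            if dilated[i] & dilated[j]:
                parent[find(i)] = find(j)

    # The merged object keeps only the ORIGINAL pixels, not the dilated ones.
    groups = {}
    for i, obj in enumerate(objects):
        groups.setdefault(find(i), set()).update(obj)
    return list(groups.values())


# Hypothetical toy mask: two objects close together, one far away.
toy_mask = [
    [1, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 1],
]
merged = merge_nearby_objects(toy_mask, radius=1)
```

In this toy mask, the two pixels in the first row end up in one object while the distant pixel stays separate. In the setting described above, the same procedure would be run once per label.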

Model architecture

The study employed a Mask R-CNN with a Swin Transformer. Mask R-CNN enhances object detection and instance segmentation, augmenting Faster R-CNN with a mask prediction branch. Swin Transformer’s key attribute is its distinctive window shifting among self-attention layers. This novel technique, connecting windows across layers, efficiently enhances modeling of long-range dependencies. The approach significantly boosts the architecture’s ability to capture intricate patterns and improves the integration of information from various locations within an image.

Model training

The annotated photographs were divided into 3,337 images for training and validation and 412 images for testing, with stratification on the type of disorder. The test split was supplemented by a random selection of 412 pictures without an oral disorder, giving 824 test images in total. The training and validation images were further divided into five folds with stratification. All images of a patient were strictly grouped either within the test split or within one fold. The Mask R-CNN model was trained five times with cross-validation. The held-out test split was used to evaluate the model performance after training.
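One way to obtain folds that are both stratified and grouped by patient is sketched below. This is a simple greedy heuristic written for illustration, not the procedure used in the study; the function name `assign_patient_folds` and the cost rule are assumptions. scikit-learn's `StratifiedGroupKFold` offers comparable functionality.

```python
from collections import Counter, defaultdict


def assign_patient_folds(image_patients, image_labels, n_folds=5):
    """Return {patient_id: fold_index}; all images of a patient share a fold."""
    per_patient = defaultdict(Counter)
    for patient, label in zip(image_patients, image_labels):
        per_patient[patient][label] += 1

    fold_counts = [Counter() for _ in range(n_folds)]
    assignment = {}
    # Place patients with many images first, since they are hardest to balance.
    ordered = sorted(per_patient,
                     key=lambda p: (-sum(per_patient[p].values()), str(p)))
    for patient in ordered:
        counts = per_patient[patient]

        def cost(fold):
            # Prefer folds that hold few images of this patient's labels,
            # then folds that are small overall, then the lowest index.
            same_label = sum(fold_counts[fold][label] * n
                             for label, n in counts.items())
            return (same_label, sum(fold_counts[fold].values()), fold)

        best = min(range(n_folds), key=cost)
        fold_counts[best].update(counts)
        assignment[patient] = best
    return assignment


# Hypothetical example: ten patients with two images each.
patients = [f"p{i}" for i in range(10) for _ in range(2)]
labels = ["OLP" if int(p[1:]) < 5 else "OSCC" for p in patients]
folds = assign_patient_folds(patients, labels)
```

Because the mapping is from patient to fold rather than from image to fold, no patient can be split across folds, which is the property that prevents leakage between training and validation images.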

The Mask R-CNN model was pre-trained on the COCO dataset and used the small variant of the Swin Transformer as backbone. The model was optimized with the AdamW optimizer and a weight decay of 0.05. The training was performed for a maximum of 24 epochs with an initial learning rate of 5e-5, which was divided by ten after epochs 16 and 22. The Mask R-CNN architecture used the categorical cross-entropy loss function to optimize the classification branch and the L1 loss function to optimize the bounding box regression branch. Images with a rare combination of disorders were oversampled to address class imbalance, and a mini-batch size of 4 was used. The model was implemented in MMDetection based on PyTorch and trained on a single NVIDIA® RTX A6000 48G.
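The step schedule for the learning rate can be written out as a small function. This is only a sketch of the arithmetic, not the MMDetection configuration used in the study; it assumes that epochs are counted from 1 and that "after epoch 16" means from epoch 17 onwards.

```python
def learning_rate(epoch, base_lr=5e-5, milestones=(16, 22), factor=0.1):
    """Learning rate during a 1-indexed epoch under a step schedule."""
    # Count how many milestones have already been passed.
    drops = sum(1 for milestone in milestones if epoch > milestone)
    return base_lr * factor ** drops


# Learning rate for each of the 24 epochs.
schedule = [learning_rate(epoch) for epoch in range(1, 25)]
```

Under these assumptions, epochs 1 to 16 use 5e-5, epochs 17 to 22 use 5e-6, and epochs 23 and 24 use 5e-7.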

Model inference

The disorder within a photograph was predicted twice by providing the model with a normal and flipped picture version (test-time augmentation). The image was finally labelled by the disorder segmentation with the highest confidence. If this confidence was below 0.75, the image was labelled as having no disorder.
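The image-level decision rule can be sketched as follows. The function name and the `(label, confidence)` data layout are hypothetical. Pooling the detections of the original and flipped views into one candidate list is also an assumption, because the text does not specify how the two views were combined.

```python
def label_image(predictions_original, predictions_flipped, threshold=0.75):
    """Pick one image-level label from per-object (label, confidence) pairs."""
    # Assumption: detections from both views are pooled into one list.
    candidates = list(predictions_original) + list(predictions_flipped)
    if not candidates:
        return "no disorder", 0.0
    label, confidence = max(candidates, key=lambda pred: pred[1])
    if confidence < threshold:
        return "no disorder", confidence
    return label, confidence


# Hypothetical detections for one photograph and its flipped version.
result = label_image([("OLP", 0.60)], [("OSCC", 0.90)])
```

With these made-up detections, the image would be labelled OSCC because 0.90 is the highest confidence and lies above the 0.75 threshold.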

Statistical analysis

All models’ predictions on the test photographs were aggregated and compared to the reference annotations using scikit-learn (version 1.3.0). Image-level classification scores were determined for each label by taking the maximum confidence of the model for any predicted object with that label. Classification metrics were reported as follows: \(\text{precision} = \frac{TP}{TP+FP}\), \(\text{F1 score} = \frac{2TP}{2TP+FP+FN}\) (also known as the Dice coefficient), \(\text{recall} = \frac{TP}{TP+FN}\) (also known as sensitivity), \(\text{specificity} = \frac{TN}{TN+FP}\), and \(\text{accuracy} = \frac{TP+TN}{TP+TN+FP+FN}\), where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively. Furthermore, the area under the receiver operating characteristics curve (AUC) of all models and the confusion matrix of the most effective model were presented.
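The metric definitions and the image-level score can be made concrete with a short sketch. The function names are hypothetical and the counts in the example are invented for illustration; they are not results of this study. Returning a score of 0.0 when no object with the label was predicted is an assumption.

```python
def image_level_score(predicted_objects, label):
    """Maximum confidence over predicted objects with the given label."""
    scores = [conf for obj_label, conf in predicted_objects if obj_label == label]
    # Assumption: an image without any such object gets a score of 0.0.
    return max(scores, default=0.0)


def classification_metrics(tp, tn, fp, fn):
    """Metrics from confusion counts; denominators are assumed to be non-zero."""
    return {
        "precision": tp / (tp + fp),
        "f1": 2 * tp / (2 * tp + fp + fn),
        "recall": tp / (tp + fn),  # sensitivity
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }


# Invented counts, for illustration only.
metrics = classification_metrics(tp=8, tn=6, fp=2, fn=4)
```

For these invented counts, precision is 8/10, recall is 8/12, specificity is 6/8, accuracy is 14/20, and the F1 score is 16/22.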

Results

The Mask R-CNN model with Swin Transformer backbone effectively detected OSCC (F1 = 0.852, AUC = 0.974). Detection of OLP and leukoplakia disorders was moderately effective (OLP: F1 = 0.825, AUC = 0.948; leukoplakia: F1 = 0.796, AUC = 0.938) (Table 1; Fig. 2). Figures 1 and 3 show that the model could not consistently reproduce the reference segmentations. The image-level predictions often agreed with the reference, as the ROC curves in Fig. 4 depict.

Fig. 2 Confusion matrix of the results of multi-class image-level disorder detection. The results of the most effective cross-validation model on the test images are shown. Predicted disorders with a maximum score ≥ 0.75 are matched to the reference disorder. The colors are normalized by the number of predicted labels.

Fig. 3 Examples of incorrect predictions. The left column is the input image, the middle is the reference annotation, and the right is the prediction. The scores in the right column are the confidences of the model. The first row illustrates a false-positive prediction. The second row shows a false-negative prediction. The last row shows a misclassification by the model.

Fig. 4 Receiver operating characteristic (ROC) curves of image-level classification. On the left side, the ROC curve illustrates the binary classification (pathology versus no disorder). On the right side, the ROC curves show the binary classifications for the respective pathologies (OSCC versus no disorder; leukoplakia versus no disorder; OLP versus no disorder). Each center line represents the mean, and the peripheral lines represent the mean plus and minus the standard deviation across cross-validation models.

Discussion

OPMD should be accurately diagnosed and treated to minimize patient morbidity and prevent oral cancer. The early detection and treatment of oral cancer are associated with a good prognosis [28]. Nevertheless, the majority of oral cancer cases are detected in an advanced stage, where patients experience symptoms and mucosal changes for several months [29].

The diagnostic accuracy of various non-invasive methods, such as chemiluminescence, autofluorescence imaging, toluidine blue staining, as well as narrow band imaging, ranges from 50.0 to 93.9% for sensitivity and from 12.5 to 94.6% for specificity. However, these examinations are often affected by significant operator-related variability [30]. Consequently, it is concluded that autofluorescence detection and toluidine blue staining are sensitive for detecting oral cancer and malignant lesions but lack specificity [31]. Therefore, employing autofluorescence, tissue reflectance, or vital staining is not recommended to evaluate clinically evident, innocuous, or suspicious oral mucosa lesions. Cytologic adjuncts exhibit slightly higher diagnostic accuracy compared to autofluorescence, tissue reflectance, and vital staining, making them more suitable as screening tools for patients who initially have declined biopsy.

Automatic image analysis has the potential to replace the previous diagnostic aids for OPMD. In recent years, different studies have assessed the potential of AI-based solutions for the early detection of oral cancer and OPMDs [19, 21,22,23,24,25,26,27, 32]. In these studies, the datasets were either curated before training to include a specific pathology or healthy mucosa [5, 19], or the multi-class detection did not differentiate between the different OPMDs [20].

The predominant approach in these studies involves using a binary classification model to distinguish between normal mucosa and pathological mucosa. Notably, these studies consistently reported high accuracies with F1-scores above 95% [19, 21,22,23,24,25,26,27, 32]. Despite these high metrics, binary classification models should be considered cautiously, as they have potential limitations. First, when trained on a limited dataset, these models can exhibit a disparity between training and testing accuracy, which indicates overfitting and a lack of generalizability. Furthermore, the accuracy of binary classifiers is compromised when images are captured from varying angles and against varying backgrounds. Not only the orientation of images but also changes in lighting conditions influence the diagnostic accuracy. Binary classification models tend to perform suboptimally when images are taken in more diverse lighting scenarios than those in the training dataset [33, 34].

To address these limitations, we employed an instance segmentation model. Instance segmentation provides detailed information about object boundaries and localization of individual lesions within an image. Therefore, it allows for counting and differentiation of overlapping lesions. Additionally, instance segmentation models augment the interpretability of the decision-making processes through the use of segmentation masks. In the medical field, generating clear and understandable explanatory structures is crucial to offer clinicians a transparent and explainable system.

The current study utilized a Mask R-CNN model with a Swin Transformer backbone for oral disorder detection. The model detected OSCC effectively, with an F1 score of 0.852 and an AUC of 0.974, whereas the detection of OLP and leukoplakia was moderately effective, with F1 scores of 0.825 and 0.796, respectively. Despite challenges in reproducing reference segmentations, image-level predictions often agreed with the references, as indicated by the ROC curves (Fig. 4). Interestingly, our model encounters challenges similar to those faced by clinicians. The most frequent misclassification for OSCC was leukoplakia (n = 9, Fig. 2), potentially attributable to the inclusion of leukoplakia with dysplasia and of early-stage OSCC. Clinicians may find it challenging to differentiate these findings, a difficulty also exhibited by the model [22]. Additionally, the misclassification of leukoplakia as OLP (n = 7) reflects a diagnostic difficulty previously noted by McParland and Warnakulasuriya: in a cohort of 51 patients later diagnosed with proliferative verrucous leukoplakia, the initial clinical examination diagnosed OLP in 30 patients [35].

Future research should include multi-center image data representing diverse populations and clinical presentations of OPMD. Furthermore, annotations from diverse clinicians will help train more robust algorithms. Applying the models in clinical settings is the next step in investigating the impact of early detection of OPMD and oral cancer.

Conclusions

OSCC were effectively detected with the employed deep learning model, whereas the detection of OLP and leukoplakia was moderately effective. The automatic detection of OPMD and OSCC in clinical photographs may facilitate precise diagnosis to initiate appropriate treatment.

Data availability

No datasets were generated or analysed during the current study.

References

Lingen MW, Abt E, Agrawal N et al (2017) Evidence-based clinical practice guideline for the evaluation of potentially malignant disorders in the oral cavity a report of the American Dental Association. J Am Dent Assoc 148:712–727e10. https://doi.org/10.1016/j.adaj.2017.07.032

Warnakulasuriya S, Johnson NW, Waal IVD (2007) Nomenclature and classification of potentially malignant disorders of the oral mucosa. J Oral Pathol Med 36:575–580. https://doi.org/10.1111/j.1600-0714.2007.00582.x

Mello FW, Miguel AFP, Dutra KL et al (2018) Prevalence of oral potentially malignant disorders: a systematic review and meta-analysis. J Oral Pathol Med 47:633–640. https://doi.org/10.1111/jop.12726

Meij EHVD, Waal IVD (2003) Lack of clinicopathologic correlation in the diagnosis of oral lichen planus based on the presently available diagnostic criteria and suggestions for modifications. J Oral Pathol Med 32:507–512. https://doi.org/10.1034/j.1600-0714.2003.00125.x

Achararit P, Manaspon C, Jongwannasiri C et al (2023) Artificial Intelligence-based diagnosis of oral Lichen Planus using deep convolutional neural networks. Eur J Dent. https://doi.org/10.1055/s-0042-1760300

Carrozzo M (2008) How common is oral lichen planus? Évid-Based Dent 9:112–113. https://doi.org/10.1038/sj.ebd.6400614

Schruf E, Biermann MH, Jacob J et al (2022) Lichen Planus in Germany – epidemiology, treatment, and comorbidity. A retrospective claims data analysis. Jddg J Der Deutschen Dermatologischen Gesellschaft. https://doi.org/10.1111/ddg.14808

Iocca O, Sollecito TP, Alawi F et al (2020) Potentially malignant disorders of the oral cavity and oral dysplasia: a systematic review and meta-analysis of malignant transformation rate by subtype. Head Neck 42:539–555. https://doi.org/10.1002/hed.26006

Holmstrup P, Vedtofte P, Reibel J, Stoltze K (2006) Long-term treatment outcome of oral premalignant lesions. Oral Oncol 42:461–474. https://doi.org/10.1016/j.oraloncology.2005.08.011

Chuang S-L, Wang C-P, Chen M-K et al (2018) Malignant transformation to oral cancer by subtype of oral potentially malignant disorder: a prospective cohort study of Taiwanese nationwide oral cancer screening program. Oral Oncol 87:58–63. https://doi.org/10.1016/j.oraloncology.2018.10.021

Feng J, Xu Z, Shi L et al (2013) Expression of cancer stem cell markers ALDH1 and Bmi1 in oral erythroplakia and the risk of oral cancer. J Oral Pathol Med 42:148–153. https://doi.org/10.1111/j.1600-0714.2012.01191.x

Giuliani M, Troiano G, Cordaro M et al (2019) Rate of malignant transformation of oral lichen planus: a systematic review. Oral Dis 25:693–709. https://doi.org/10.1111/odi.12885

Cabay RJ, Morton TH, Epstein JB (2007) Proliferative verrucous leukoplakia and its progression to oral carcinoma: a review of the literature. J Oral Pathol Med 36:255–261. https://doi.org/10.1111/j.1600-0714.2007.00506.x

Parakh MK, Ulaganambi S, Ashifa N et al (2019) Oral potentially malignant disorders: clinical diagnosis and current screening aids: a narrative review. Eur J cancer Prev: off J Eur Cancer Prev Organ (ECP) 29:65–72. https://doi.org/10.1097/cej.0000000000000510

Ramesh S, Nazeer SS, Thomas S et al (2021) Optical diagnosis of oral lichen planus: a clinical study on the use of autofluorescence spectroscopy combined with multivariate analysis. Spectrochim Acta Part A: Mol Biomol Spectrosc 248:119240. https://doi.org/10.1016/j.saa.2020.119240

Neumann FW, Neumann H, Spieth S, Remmerbach TW (2022) Retrospective evaluation of the oral brush biopsy in daily dental routine — an effective way of early cancer detection. Clin Oral Invest 1–7. https://doi.org/10.1007/s00784-022-04620-9

Rao RS, Chatura KR, SV S et al (2020) Procedures and pitfalls in incisional biopsies of oral squamous cell carcinoma with respect to histopathological diagnosis. Dis-a-Mon 66:101035. https://doi.org/10.1016/j.disamonth.2020.101035

Mehrotra R, Singh M, Thomas S et al (2010) A cross-sectional study evaluating chemiluminescence and autofluorescence in the detection of clinically innocuous precancerous and cancerous oral lesions. J Am Dent Assoc 141:151–156. https://doi.org/10.14219/jada.archive.2010.0132

Keser G, Bayrakdar İŞ, Pekiner FN et al (2023) A deep learning algorithm for classification of oral lichen planus lesions from photographic images: a retrospective study. J Stomatology Oral Maxillofac Surg 124:101264. https://doi.org/10.1016/j.jormas.2022.08.007

Warin K, Limprasert W, Suebnukarn S et al (2022) AI-based analysis of oral lesions using novel deep convolutional neural networks for early detection of oral cancer. PLoS ONE 17:e0273508. https://doi.org/10.1371/journal.pone.0273508

Welikala RA, Remagnino P, Lim JH et al (2020) Automated detection and classification of oral lesions using deep learning for early detection of oral Cancer. Ieee Access 8:132677–132693. https://doi.org/10.1109/access.2020.3010180

Shamim MZM, Syed S, Shiblee M et al (2020) Automated detection of oral pre-cancerous tongue lesions using deep learning for early diagnosis of oral Cavity Cancer. Comput J 65:91–104. https://doi.org/10.1093/comjnl/bxaa136

Song B, Sunny S, Uthoff RD et al (2018) Automatic classification of dual-modalilty, smartphone-based oral dysplasia and malignancy images using deep learning. Biomed Opt Express 9:5318. https://doi.org/10.1364/boe.9.005318

Jubair F, Al-karadsheh O, Malamos D et al (2022) A novel lightweight deep convolutional neural network for early detection of oral cancer. Oral Dis 28:1123–1130. https://doi.org/10.1111/odi.13825

A RNB GK, S CH, et al (2021) An Ensemble Deep Neural Network Approach for oral Cancer screening. Int J Online Biomed Eng (iJOE) 17:121–134. https://doi.org/10.3991/ijoe.v17i02.19207

Talwar V, Singh P, Mukhia N et al (2023) AI-Assisted screening of oral potentially malignant disorders using smartphone-based photographic images. Cancers 15:4120. https://doi.org/10.3390/cancers15164120

Flügge T, Gaudin R, Sabatakakis A et al (2023) Detection of oral squamous cell carcinoma in clinical photographs using a vision transformer. Sci Rep-uk 13:2296. https://doi.org/10.1038/s41598-023-29204-9

Seoane J, Takkouche B, Varela-Centelles P et al (2012) Impact of delay in diagnosis on survival to head and neck carcinomas: a systematic review with meta‐analysis. Clin Otolaryngol 37:99–106. https://doi.org/10.1111/j.1749-4486.2012.02464.x

van Harten MC, de Ridder M, Hamming-Vrieze O et al (2014) The association of treatment delay and prognosis in head and neck squamous cell carcinoma (HNSCC) patients in a Dutch comprehensive cancer center. Oral Oncol 50:282–290. https://doi.org/10.1016/j.oraloncology.2013.12.018

Mazur M, Ndokaj A, Venugopal DC et al (2021) In vivo imaging-based techniques for early diagnosis of oral potentially malignant disorders—systematic review and Meta-analysis. Int J Environ Res Pu 18:11775. https://doi.org/10.3390/ijerph182211775

Petruzzi M, Lucchese A, Nardi GM et al (2014) Evaluation of autofluorescence and toluidine blue in the differentiation of oral dysplastic and neoplastic lesions from non dysplastic and neoplastic lesions: a cross-sectional study. J Biomed Opt 19:076003–076003. https://doi.org/10.1117/1.jbo.19.7.076003

Warin K, Limprasert W, Suebnukarn S et al (2021) Performance of deep convolutional neural network for classification and detection of oral potentially malignant disorders in photographic images. Int J Oral Max Surg. https://doi.org/10.1016/j.ijom.2021.09.001

Santos VCA, Cardoso L, Alves R (2023) The quest for the reliability of machine learning models in binary classification on tabular data. Sci Rep 13:18464. https://doi.org/10.1038/s41598-023-45876-9

Kim K, Lee B, Kim JW (2017) Feasibility of Deep Learning algorithms for Binary classification problems. J Intell Inf Syst 23:95–108. https://doi.org/10.13088/jiis.2017.23.1.095

McParland H, Warnakulasuriya S (2021) Lichenoid morphology could be an early feature of oral proliferative verrucous leukoplakia. J Oral Pathol Med 50:229–235. https://doi.org/10.1111/jop.13129

Acknowledgements

None.

Funding

None.

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

SV: Conceptualization, Method, Investigation, Formal Analysis, Software, Writing – original draft. TF: Investigation, Validation, Supervision, Project administration, Writing – original draft. NvN: Validation, Visualization, Data curation, Writing – review & editing. TX: Investigation, Validation, Data curation, Supervision, Writing – review & editing. TV: Investigation, Validation, Supervision, Writing – review & editing. DT: Investigation, Validation, Writing – review & editing. MH: Investigation, Validation, Supervision, Writing – review & editing. RR: Investigation, Validation, Data curation, Supervision, Writing – review & editing. AT: Investigation, Data curation, Validation, Supervision, Writing – review & editing. MK: Investigation, Validation, Supervision, Writing – review & editing. SB: Investigation, Validation, Supervision, Writing – review & editing.

Ethics declarations

Ethics approval and consent to participate

This study was conducted following the code of ethics of the World Medical Association (Declaration of Helsinki) and the ICH-GCP. The xxx approved this study, and informed consent was not required as all image data were anonymized before analysis (EA2/089/22).

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vinayahalingam, S., van Nistelrooij, N., Rothweiler, R. et al. Advancements in diagnosing oral potentially malignant disorders: leveraging Vision transformers for multi-class detection. Clin Oral Invest 28, 364 (2024). https://doi.org/10.1007/s00784-024-05762-8

DOI: https://doi.org/10.1007/s00784-024-05762-8