Abstract

Objective

The objective of this clinical trial was to compare facial expressions (magnitude, shape change, time, and symmetry) before (T0) and after (T1) orthognathic surgery by implementing a novel method of four-dimensional (4D) motion capture analysis, known as videostereophotogrammetry, in orthodontics.

Methods

This prospective, single-centre, single-arm trial included a total of 26 adult patients (mean age 28.4 years; skeletal class II: n = 13, skeletal class III: n = 13) with an indication for orthodontic-surgical treatment. Two reproducible facial expressions (maximum smile, lip purse) were captured at T0 and T1 as 4D face scans by videostereophotogrammetry. The magnitude, shape change, symmetry, and time of the facial movements were analysed, as were their changes in relation to skeletal class and surgical movements.

Results

4D motion capture analysis was feasible in all cases. The magnitude of the expression maximum smile increased from 15.24 to 17.27 mm (p = 0.002), while that of the expression lip purse decreased from 9.34 to 8.31 mm (p = 0.01). Shape change, symmetry, and time of the facial movements did not differ significantly between the pre- and postsurgical recordings. The changes in facial movements following orthodontic-surgical treatment were independent of skeletal class and surgical movements.

Conclusions

Orthodontic-surgical treatment affects not only the static soft tissue but also soft tissue dynamics during smiling and lip pursing.

Clinical relevance

Integrating facial dynamics into diagnostics by means of videostereophotogrammetry is a promising approach towards comprehensive orthodontic treatment plans.

Trial registration number

DRKS00017206.

Introduction

As a form of nonverbal communication, facial expressions play an essential role in daily conversation. When emotions are assessed, facial expressions carry more weight than the spoken message [1, 2]. As early as 1872, Charles Darwin postulated the universality of emotional facial expressions across cultural boundaries in his work “The expression of the emotions in man and animals” [3]. However, the extent of facial movements can vary considerably between individuals depending on facial morphology [4,5,6]. This is also evident in patients with dentofacial deformities, whose movement patterns during certain facial expressions deviate from those of individuals with a neutral jaw relationship [7]. Orthodontic-surgical treatment appears to normalise facial expressions [7, 8]. Despite these findings, facial movement is not yet a routinely assessed parameter in orthodontics. As a result, asymmetries and abnormalities that manifest during facial expressions may remain undetected [9, 10].

Since orthodontic treatment, and in particular orthodontic-surgical treatment, always affects the appearance of the facial soft tissue, three-dimensional (3D) imaging using stereophotogrammetry has become increasingly important in recent years. In orthodontic-surgical treatment, this helps to predict postsurgical soft tissue changes and to improve the surgical plan [11]. However, the surgical effects in the areas of the lower face and the lips, which are very dynamic structures in daily communication, can only be predicted to a limited extent [12,13,14].

Non-invasive four-dimensional (4D) videostereophotogrammetry offers a modern and promising approach for the objective recording and evaluation of facial movements [15,16,17], but it has hardly been used in orthodontics to date. This technology records sequences of 3D images at 60 frames per second, capturing stepwise changes in facial expression with a coverage of 180 to 360° depending on the number and orientation of the cameras [18]. After the implementation of 3D imaging in orthodontic-surgical treatment, the integration of 4D motion capture is therefore the logical next step.

The aim of the present study was to compare facial expressions before (T0) and after (T1) orthognathic surgery by implementing the novel method of 4D videostereophotogrammetry in orthodontics. We hypothesised that the magnitude, shape change, symmetry, and time of facial expressions change from T0 to T1.

Subjects and methods

Trial design

This study was a prospective, single-centre, single-arm trial and investigated facial expressions before (T0) and 4 months after orthognathic surgery (T1). It was approved by the institutional ethics committee (application number 13/2/19) in accordance with the Declaration of Helsinki. All participants gave written informed consent to take part in the study. The trial was registered before recruitment started (DRKS00017206). No changes to the trial protocol were made after trial commencement.

Participants, eligibility criteria, and settings

Patients seeking orthodontic-surgical treatment with an orthodontics-first approach at the Department of Orthodontics, University Medical Center Goettingen, Germany, were enrolled consecutively. The eligibility criteria were adults over 18 years of age, orthodontic therapy with a fixed appliance, pronounced malocclusion (skeletal class II: Wits appraisal > 2 mm; skeletal class III: Wits appraisal < −2 mm), and an indication for combined orthodontic-surgical treatment (grade 4 or higher on the index of orthognathic functional treatment need [19]). Patients with cleft lip and/or palate, craniofacial syndromes, impaired facial motion, previous orthognathic surgery, Menton deviation > 4 mm, or a beard were excluded.
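The inclusion and exclusion criteria above amount to a simple screening rule. The following Python sketch encodes them for illustration only: the `Candidate` record, its field names, and the helper functions are hypothetical, the clinical assessments behind each field (IOFTN grading, Menton deviation, facial motion) are assumed to be available as inputs, and treating an age of exactly 18 years as eligible is an assumption about the boundary.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    """Hypothetical screening record; field names are illustrative only."""
    age_years: float
    fixed_appliance: bool
    wits_mm: float                 # Wits appraisal in mm
    ioftn_grade: float             # IOFTN grade, e.g. 4.3 belongs to grade 4
    cleft_or_syndrome: bool        # cleft lip and/or palate, craniofacial syndrome
    impaired_facial_motion: bool
    previous_orthognathic_surgery: bool
    menton_deviation_mm: float
    beard: bool


def skeletal_class(wits_mm: float) -> Optional[str]:
    """Class assignment using the Wits thresholds from the criteria."""
    if wits_mm > 2:
        return "II"
    if wits_mm < -2:
        return "III"
    return None  # not a pronounced malocclusion under these criteria


def is_eligible(c: Candidate) -> bool:
    included = (
        c.age_years >= 18  # boundary at exactly 18 is an assumption
        and c.fixed_appliance
        and skeletal_class(c.wits_mm) is not None
        and c.ioftn_grade >= 4
    )
    excluded = (
        c.cleft_or_syndrome
        or c.impaired_facial_motion
        or c.previous_orthognathic_surgery
        or c.menton_deviation_mm > 4
        or c.beard
    )
    return included and not excluded


# A hypothetical class III candidate without exclusion criteria:
print(is_eligible(Candidate(25, True, -4.5, 5.3, False, False, False, 1.5, False)))  # True
```

Keeping the Wits thresholds in a single function makes the class assignment reusable for the later subgroup analyses by skeletal class.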

Sample size

As this is an early clinical trial aiming to implement the novel method of 4D videostereophotogrammetry, the sample size was not calculated from an a priori hypothesis test. Based on previous caseloads, a total of 45 patients (1 skeletal class I, 21 skeletal class II, 23 skeletal class III) had undergone surgery in an interval comparable to the planned recruitment phase of this study; a feasible sample size of 20 patients was therefore planned for recruitment. The final sample comprised 26 patients (13 skeletal class II, 13 skeletal class III), and a complete case analysis was performed.

Intervention

All patients underwent virtually planned, splint-based orthognathic surgery performed by an interdisciplinary team of orthodontists and maxillofacial surgeons as described previously [20]. The surgical procedure preserved a presurgically defined condylar position by means of a centric splint [21,22,23]. In bimaxillary surgery, Le Fort I osteotomy was performed first, followed by bilateral sagittal split osteotomy according to well-established protocols [24,25,26,27]. All patients were invited to have their facial expressions during smiling and lip purse recorded at baseline (T0, after orthodontic decompensation, 1 to 6 weeks before surgery) and 4 months after surgery (T1).

Blinding

Blinding was not applicable.

Outcome—facial expressions

Facial expressions were recorded as 3D videos using non-invasive 4D stereophotogrammetry with nine cameras (six greyscale and three colour) at 60 frames per second (see Fig. 1; DI4D PRO System, Dimensional Imaging Ltd., Glasgow, UK). Before each capture, the system was calibrated according to a standardised protocol. Each patient was asked to sit upright approximately 95 cm in front of the cameras, to keep the eyes open, to hold the head in natural head position, and to move the head as little as possible. The patients then performed two reproducible facial expressions: (1) maximum smile and (2) lip purse. Both were demonstrated to the patients and practised several times prior to video acquisition.

Fig. 1 Motion capture system recording facial expressions as 3D videos using non-invasive 4D stereophotogrammetry with nine cameras (six greyscale and three colour) from three directions (DI4D PRO System, Dimensional Imaging Ltd., Glasgow, UK). (1) and (3) video lighting system; (2) blue screen; (4), (5), and (6) camera pods

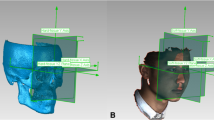

All video captures and post-processing were performed by the same trained investigator (J.H.). The 3D videos were trimmed so that each analysed movement ran from the last frame in rest position (= start frame) to the first frame showing the facial expression at its maximum extent (= end frame) (DI4D Setup, Dimensional Imaging Ltd., Glasgow, UK). To track the facial movement, a dummy mesh was fitted to the individual patient's morphology in the first frame of each video (see Fig. 2). This patient-specific mesh was automatically tracked throughout the sequence of 3D images and manually corrected where necessary (see Fig. 3; DI4D track, Dimensional Imaging Ltd., Glasgow, UK). For each mesh point, the Euclidean distance d between its position s = (x_s, y_s, z_s) in the start frame and its position e = (x_e, y_e, z_e) in the end frame was calculated in 3D space as d = √((x_e − x_s)² + (y_e − y_s)² + (z_e − z_s)²), using Python and custom SciPy-based code [28].
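The per-point distance is a plain 3D Euclidean norm. The authors' SciPy-based code is not published, so the following is a minimal standard-library sketch, assuming the tracked mesh provides the same ordered list of points in every frame. The `movement_time` helper is a hypothetical reading of the "time" parameter (frames between start and end frame divided by the 60 Hz capture rate); its exact definition is given in Table 1.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
FPS = 60  # capture rate reported for the recordings


def point_displacements(start: Sequence[Point], end: Sequence[Point]) -> List[float]:
    """Euclidean distance d between each mesh point in the start frame s and
    the same point in the end frame e. Assumes both frames come from one
    tracked mesh, so index i denotes the same anatomical point in both."""
    if len(start) != len(end):
        raise ValueError("start and end frame must have the same number of points")
    return [math.dist(s, e) for s, e in zip(start, end)]


def movement_time(start_frame: int, end_frame: int, fps: int = FPS) -> float:
    """Hypothetical 'time' parameter: seconds from the last rest frame to the
    first frame at maximum extent."""
    if end_frame < start_frame:
        raise ValueError("end frame must not precede start frame")
    return (end_frame - start_frame) / fps


# Usage with made-up coordinates (mm) for two mesh points:
rest = [(0.0, 0.0, 0.0), (10.0, 5.0, 2.0)]
peak = [(3.0, 4.0, 0.0), (10.0, 5.0, 2.0)]
print(point_displacements(rest, peak))  # [5.0, 0.0]
print(movement_time(12, 54))            # 0.7
```

With NumPy available, the same per-point distances could be computed in one vectorised call over (N, 3) arrays; the loop above trades speed for having no dependencies.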

Two regions of interest were determined (see Fig. 4):

- the lower face: the area of the lower face below a plane through the landmark subnasale parallel to the Frankfort horizontal, containing the same 559 automatically selected points for each participant, and
- the landmarks undergoing the largest change during facial expression: cheilion left and right for maximum smile, and labrale superius and inferius for lip purse.
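The lower-face region can be expressed as a half-space test. The sketch below assumes that the Frankfort horizontal is constructed from left and right porion and one orbitale (the text does not state which landmarks were used), that all tracked meshes share one vertex ordering, and that a caudal reference point such as menton is available to decide which side of the plane counts as "lower"; all function names are hypothetical. Because every patient's mesh is fitted from the same dummy mesh, the selected vertex indices can be stored once and reused, which is consistent with the same 559 points being used for each participant.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def lower_face_indices(vertices: Sequence[Vec], porion_l: Vec, porion_r: Vec,
                       orbitale: Vec, subnasale: Vec, caudal_ref: Vec) -> List[int]:
    """Indices of vertices below a plane through subnasale that is parallel
    to the Frankfort horizontal (here: porion-porion-orbitale plane).

    caudal_ref is any point known to lie below that plane (e.g. menton);
    it only fixes which side of the plane counts as 'lower'."""
    normal = cross(sub(porion_r, porion_l), sub(orbitale, porion_l))
    side = dot(sub(caudal_ref, subnasale), normal)
    if side == 0:
        raise ValueError("caudal reference lies on the cutting plane")
    if side < 0:  # flip the normal so it points towards the lower face
        normal = (-normal[0], -normal[1], -normal[2])
    return [i for i, v in enumerate(vertices)
            if dot(sub(v, subnasale), normal) > 0]


# Toy example: the FH plane is y = 0, and "down" is -y.
idx = lower_face_indices(
    vertices=[(0.0, -1.0, 1.0), (0.0, -5.0, 1.0), (0.5, -6.0, 1.5)],
    porion_l=(-1.0, 0.0, 0.0), porion_r=(1.0, 0.0, 0.0), orbitale=(0.0, 0.0, 1.0),
    subnasale=(0.0, -3.0, 1.0), caudal_ref=(0.0, -8.0, 1.0))
print(idx)  # [1, 2]
```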

Fig. 4 Data analysis in Python. Left: selection of the region of interest “lower face”, bounded by a plane through subnasale (green points). The region of interest was defined in the first patient analysed and automatically transferred to each subsequent patient. Right: the landmarks undergoing the largest change during facial expression (green points) were determined individually for each capture

For each facial expression, four parameters were calculated to objectively evaluate the movement (see Table 1 and Fig. 5).

Fig. 5 Exemplary illustration of the measurement “magnitude” for the facial expression maximum smile at the landmark cheilion right. The image at rest position (yellow) and the image at the maximum extent of the movement (transparent) are superimposed, and the Euclidean distance between the landmark cheilion right at rest position and at maximum smile is measured (orange arrow)

Method error analysis

To assess the reliability of the 4D tracking of facial expressions, the same investigator repeated the fitting of the dummy mesh to the individual patients’ morphology in ten cases, and a second examiner performed the same task once. Agreement was excellent for the magnitude of facial expression (ICC = 0.982; 95% CI [0.933, 0.995]) and good to excellent for the shape change in the region of interest lower face (ICC = 0.947; 95% CI [0.814, 0.986]).
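The text reports ICCs for inter-rater agreement and intra-rater consistency but not the exact ICC form or software call. As a hedged illustration, the sketch below computes the two single-measure forms defined by McGraw and Wong (absolute agreement, ICC(A,1), and consistency, ICC(C,1)) from a two-way ANOVA decomposition in plain Python; it is not the authors' R code, and it omits confidence intervals.

```python
from statistics import mean
from typing import Dict, Sequence


def icc_single(ratings: Sequence[Sequence[float]]) -> Dict[str, float]:
    """Single-measure ICCs from a two-way ANOVA decomposition.

    ratings[i][j] is the value for subject i from rating j (a rater or a
    repeated measurement). Returns ICC(A,1) and ICC(C,1)."""
    n, k = len(ratings), len(ratings[0])
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(ratings[i][j] for i in range(n)) for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols                   # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    agreement = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)
    consistency = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    return {"ICC(A,1)": agreement, "ICC(C,1)": consistency}


# Hypothetical magnitudes (mm): rater 2 reads systematically 0.5 mm higher.
print(icc_single([[1.0, 1.5], [2.0, 2.5], [3.0, 3.5], [4.0, 4.5]]))
# consistency = 1.0, agreement ≈ 0.93
```

A systematic offset between raters leaves consistency untouched but lowers absolute agreement, which is why the two forms answer different questions.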

Statistical analysis

The analysis was performed using linear mixed-effects models with the factors time, skeletal class, and their interaction. A paired Wilcoxon signed-rank test was performed as a sensitivity analysis. Estimated marginal means are reported with 95% confidence intervals and were compared between time points and skeletal classes [29]. The pre- to postoperative differences in the 4D tracking variables were related to the surgical variables and skeletal class in multivariate linear models. Intraclass correlation coefficients (ICC) were calculated to assess agreement between raters and consistency within raters. Baseline data are described using means and standard deviations. Statistical analysis was performed in the statistical programming language R 4.1.3 [30].
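The sensitivity analysis used a paired Wilcoxon signed-rank test in R. To illustrate what that test computes, here is a pure-Python sketch of the large-sample (normal-approximation) version with a tie-corrected variance and a 0.5 continuity correction. This is an assumption-laden stand-in, not the authors' code: for small samples without ties, R's `wilcox.test` reports an exact p-value instead, so results can differ.

```python
import math
from typing import List, Sequence, Tuple


def wilcoxon_signed_rank(before: Sequence[float],
                         after: Sequence[float]) -> Tuple[float, float]:
    """Paired Wilcoxon signed-rank test, normal approximation.

    Returns (W+, two-sided p). Zero differences are dropped, tied absolute
    differences get average ranks, the variance is tie-corrected, and a
    continuity correction of 0.5 is applied."""
    if len(before) != len(after):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks: List[float] = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i  # extend j over a run of tied absolute differences
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for m in range(i, j + 1):
            ranks[order[m]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mu = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    dev = w_plus - mu
    correction = 0.5 if dev > 0 else (-0.5 if dev < 0 else 0.0)
    z = (dev - correction) / math.sqrt(var)
    p = min(1.0, math.erfc(abs(z) / math.sqrt(2)))  # two-sided normal tail
    return w_plus, p


# Ten hypothetical patients who all increased by 1 to 10 mm:
w, p = wilcoxon_signed_rank([0.0] * 10, [float(i) for i in range(1, 11)])
print(w, p)  # W+ = 55.0, p ≈ 0.006 (normal approximation)
```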

Results

Participant flow and recruitment

Recruitment lasted from September 2020 until August 2021, and the last follow-up was completed in December 2021. Of 39 consecutive patients assessed for eligibility, 31 fulfilled the inclusion criteria (see CONSORT flow diagram in Fig. 6). During the observation period, 1 patient declined surgery, 1 patient missed his follow-up appointment, 2 patients had surgical complications, and 1 patient still showed pronounced swelling at T1. The final sample thus consisted of 26 participants. Table 2 shows their baseline demographic data and clinical characteristics.

4D motion capture

4D motion capture was feasible using stereophotogrammetry and resulted in 3D videos with a rate of 60 frames per second. Colour and texture were recorded as well as surface geometry (see Supplementary Information 1 for a video example). All movements could be tracked semi-automatically from the start frame (rest position) to the end frame (maximum movement).

Facial expressions

For maximum smile, the mean magnitude of movement increased significantly by 2 mm from T0 to T1. At T1, smiling became more asymmetric. No differences in shape change or time were observed. The mean magnitude of lip purse decreased significantly by 1 mm from T0 to T1. Shape change, symmetry, and time for this facial expression did not differ significantly between T0 and T1 (Table 3).
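Using the group means reported in the Abstract (maximum smile 15.24 to 17.27 mm; lip purse 9.34 to 8.31 mm), the rounded absolute changes of 2 mm and 1 mm, and the relative changes of approximately 13% and 11% quoted in the Discussion, can be checked with a few lines of arithmetic. Note that this is the relative change of the group means, which need not equal the mean of each patient's individual relative change.

```python
from typing import Tuple


def change(before_mm: float, after_mm: float) -> Tuple[float, float]:
    """Absolute (mm) and relative (%) change between two group means."""
    delta = after_mm - before_mm
    return round(delta, 2), round(100 * delta / before_mm, 1)


print(change(15.24, 17.27))  # maximum smile: (2.03, 13.3)
print(change(9.34, 8.31))    # lip purse: (-1.03, -11.0)
```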

The postsurgical increase in the magnitude of maximum smile and the decrease in the magnitude of lip purse were observed in 21 and 20 of the 26 patients, respectively (i.e. in all but 5 and 6 patients).

Subgroup analysis showed that these results applied to patients with both skeletal class II and skeletal class III (Table 4).

The multivariate linear model with the difference in the magnitude of maximum smile as the dependent variable showed that the pre- to postsurgical differences in smile magnitude could not be explained by the surgical movements, the type of surgical intervention, or the skeletal class (Table 5). The multivariate linear model for the magnitude of lip purse indicated the same: the postsurgical change in lip movement showed no association with the surgical intervention or the skeletal class (Table 6).

Harms

No harms were observed.

Discussion

Main findings in context of the existing evidence—method

The main strength of this study was the implementation of modern videostereophotogrammetry in orthodontics and its use to capture facial movements in 4D. In contrast to previous approaches analysing facial expressions using markers on the patients’ face [4, 6, 7], static recordings of the movement at its maximal extent [31, 32], two-dimensional videography [33, 34], or the Facial Action Coding System [8, 35], 4D stereophotogrammetry offers an objective and reliable method of measuring facial movements. Static recordings at rest position and maximum extent bear the risk of an unnatural freezing of the facial expression. Compared with 3D measurements, 2D analyses of videos underestimate the amplitude of facial motion by as much as 43% [36]. This discrepancy is particularly pronounced in the lower face, which is usually the focus of orthodontics. Marker-based tracking systems are criticised because marker placement on the patient’s face varies considerably and the markers may affect natural facial expressions [37]. Videostereophotogrammetry has proved to be a feasible, objective tool for assessing the impact of surgical interventions on facial movements [15] and showed good to excellent reliability in the present sample.
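One geometric reason why 2D video underestimates facial motion is that a frontal camera records only the projection of each displacement onto the image plane, so any anteroposterior component is lost. The sketch below illustrates this under an idealised orthographic-camera assumption with hypothetical displacement vectors; it does not reproduce the 43% figure reported in [36], which comes from measured data.

```python
import math
from typing import Tuple


def frontal_underestimation(displacement: Tuple[float, float, float]) -> float:
    """Fraction of a 3D displacement (x transverse, y vertical, z
    anteroposterior) that is lost when only its projection onto a frontal
    image plane (x-y) is measured. Assumes an idealised orthographic camera."""
    dx, dy, dz = displacement
    full = math.sqrt(dx * dx + dy * dy + dz * dz)
    if full == 0:
        return 0.0
    frontal = math.hypot(dx, dy)
    return 1 - frontal / full


# Hypothetical landmark displacements in mm:
print(frontal_underestimation((3.0, 4.0, 0.0)))            # purely in-plane: 0.0
print(round(frontal_underestimation((1.0, 2.0, 6.0)), 2))  # mostly forward: 0.65
```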

A current disadvantage of videostereophotogrammetry is that the necessary cameras, lighting systems, computers, and software are expensive and bulky. However, portable low-cost 4D cameras already exist and are making their way into clinical application [38, 39]. Large companies such as Microsoft are working on these technologies to facilitate automatic landmark tracking, which could not only reduce the cost of videostereophotogrammetry but also increase the impact of 4D measurements in the near future [40].

Main findings in context of the existing evidence—results

The main finding was that orthodontic-surgical treatment increased the magnitude of the facial expression maximum smile by approximately 13% (= 2 mm), while it decreased that of the facial expression lip purse by approximately 11% (= 1 mm). Shape change, time, and symmetry of the movements showed no relevant changes from T0 to T1. The novelty of videostereophotogrammetry makes it difficult to compare these results with existing evidence. Initial attempts have been made to integrate the dynamics of facial motion into orthodontic-surgical research [6, 8, 17, 33]. However, the interpretation of these data is limited by small study samples, methodological limitations, and divergent results. In 13 patients with maxillary hypoplasia treated by Le Fort I osteotomy, Al-Hiyali and co-workers reported a reduction in the magnitude of facial expressions of approximately 23% [17]. Since the authors pooled the data for three facial expressions (maximum smile, lip purse, and cheek puff), it remains unclear whether the change in the magnitude of movement differed between maximum smile and lip purse, as observed in the current sample. Furthermore, in accordance with our observations, they found no clinically relevant difference in the asymmetry score for patients without facial asymmetry. For three patients with skeletal class III, Nooreyazdan and co-workers reported greater upward movement of cheilion left and right during smiling and reduced lip movement during lip purse [6], which agrees with the present results. Johns and co-workers, who also observed an increase in facial movement during smiling in patients with maxillary advancement, postulated that anterior movement of the maxilla lengthens the facial muscles, resulting in increased mobility [33]. This may only partly explain the increased magnitude of maximum smile, as we found no association between the surgical movements and the parameters of facial expressions.
The explanation for the increased smile may simply be that patients are more willing to smile and display their teeth after surgical correction of the dentofacial deformity than before. Nevertheless, it can be speculated that the surgical change in the underlying skeletal support affects the mobility of the overlying soft tissues. How well this adaptation succeeds, and whether the dynamics of the facial soft tissues influence skeletal relapse, remain to be elucidated in future studies using the novel method of 4D motion capture. However, no significant impact of surgical movements, type of surgical intervention, or skeletal morphology on soft tissue dynamics was observed in this preliminary study. This may be due to the small sample size and the correspondingly low statistical power, but it may also indicate that postsurgical adaptation is highly individual and that postsurgical facial movements cannot currently be predicted. In contrast, Nooreyazdan and co-workers found presurgical differences between patients with skeletal class II, open bite, and class III for the movement lip purse, but not for smile, mouth opening, cheek puff, eye opening, eye closure, or grimace, despite an even smaller sample than in the present study [6].

Furthermore, the question arises whether changes of 1 to 2 mm (11–13%) in the magnitude of facial expressions, as found in the present study, are clinically relevant. With regard to social interaction, the interpretation becomes even more complex, as culture and gender shape our expectations about the intensity of facial expressions of emotion [41, 42]. In contrast to orthopaedics, where gait measures are becoming established as potential disease biomarkers [43, 44], no reference values for an ideal facial movement exist in orthodontics. Therefore, movement data should be collected in class I subjects, and the integration of facial dynamics into diagnostics should be driven forward to achieve patient-centred, comprehensive treatment plans. Until then, differences of 1–2 mm should be regarded as relevant, because studies assessing other craniofacial muscles (e.g. the dimensions of the masticatory muscles) or jaw movement often report pre-/postsurgical differences of 1–2 mm [45, 46].

Limitations

Not all participants were operated on by the same surgeon, but all surgeries were performed at a single centre following the same stringent protocol. Since a multicentre study showed that different surgeons at different centres achieve similar accuracy when following a virtual treatment plan [47], it can be assumed that the surgical results of this study were reproducible and representative of orthodontic-surgical interventions.

The patients’ compliance, the intensity of care provided by the orthodontic-surgical treatment team, and consequently the speed of postsurgical recovery may have been influenced by the patients' participation in a clinical trial. Owing to such a Hawthorne effect, the participants in the present study might have shown increased facial expressiveness.

The observed changes in maximum smile and lip purse were rather small. Owing to the novelty of the method, there are no reference values indicating what amount of change should be considered clinically relevant or what extent of change is detected by orthodontists, surgeons, laypersons, or the patients themselves. Future studies are needed to generate normative values.

Generalisability

The results of this trial were obtained at a single specialist clinic in Germany, which might limit their applicability to other clinical settings. However, the eligibility criteria cover most patients undergoing orthodontic-surgical treatment, and the surgical protocol followed is widely used and well established.

This study was not designed to investigate gender differences in orthodontic-surgical treatment, and the gender distribution within the study sample was unequal. This reflects the real clinical situation, since women are more likely to accept surgical intervention than men [48,49,50]. The results may be generalised to similar populations with an indication for orthodontic-surgical treatment and a similar gender distribution.

Further research

The focus of this study was the integration of videostereophotogrammetry into orthodontic-surgical treatment. To fully understand the effect of orthodontic-surgical treatment on facial expressions, long-term observations and extended study periods are needed. Baseline assessment was performed after orthodontic decompensation with fixed appliances in situ, which might have affected facial movements. Future studies should consider recruiting patients with severe malocclusions prior to orthodontic treatment. The follow-up of 4 months postsurgically was chosen because swelling has usually subsided by that time [51, 52] and the fixed appliance is still in place. This allowed the pre- and postsurgical data to be compared without having to account for an influence of the orthodontic appliance. Since we expected an average postsurgical orthodontic treatment time of 5 to 6 months [53, 54], T1 was set at 4 months after surgery to reduce dropouts. However, studies on changes in masticatory performance following orthodontic-surgical treatment indicate that an observation period of up to 5 years is necessary to capture all effects of the intervention, since the muscles of mastication need time to regain full strength [55, 56]. Whether this also applies to the facial muscles has not yet been investigated, and further studies with an extended follow-up period are required.

Conclusions

The present study implemented the novel approach of 4D motion capture using videostereophotogrammetry in orthodontic research. Within the limitations of this clinical trial, the following conclusions can be drawn:

1. Implementation of videostereophotogrammetry in orthodontic-surgical treatment is feasible and allows the evaluation of facial movements.
2. The magnitude of the expression maximum smile increases, while that of lip purse decreases.
3. This change in facial movements following orthodontic-surgical treatment was observed independently of skeletal class, surgical movements, and type of surgical intervention in this preliminary study.

Data Availability

The data that support the findings of this study are not openly available due to reasons of sensitivity and are available from the corresponding author upon reasonable request.

References

Ekman P (1993) Facial expression and emotion. Am Psychol 48:384–392

Mehrabian A, Ferris SR (1967) Inference of attitudes from nonverbal communication in two channels. J Consult Psychol 31:248–252

Ekman P (2009) Darwin’s contributions to our understanding of emotional expressions. Philos Trans R Soc Lond B Biol Sci 364:3449–3451. https://doi.org/10.1098/rstb.2009.0189

Weeden JC, Trotman CA, Faraway JJ (2001) Three dimensional analysis of facial movement in normal adults: influence of sex and facial shape. Angle Orthod 71:132–140. https://doi.org/10.1043/0003-3219(2001)071%3c0132:TDAOFM%3e2.0.CO;2

Holberg C, Maier C, Steinhauser S et al (2006) Inter-individual variability of the facial morphology during conscious smiling. J Orofac Orthop 67:234–243. https://doi.org/10.1007/s00056-006-0518-8

Nooreyazdan M, Trotman C-A, Faraway JJ (2004) Modeling facial movement: II. A dynamic analysis of differences caused by orthognathic surgery. J Oral Maxillofac Surg 62:1380–1386

Trotman C-A, Faraway JJ (2004) Modeling facial movement: I. A dynamic analysis of differences based on skeletal characteristics. J Oral Maxillofac Surg 62:1372–1379

Nafziger YJ (1994) A study of patient facial expressivity in relation to orthodontic/surgical treatment. Am J Orthod Dentofacial Orthop 106:227–237. https://doi.org/10.1016/S0889-5406(94)70041-9

Hallac RR, Feng J, Kane AA et al (2017) Dynamic facial asymmetry in patients with repaired cleft lip using 4D imaging (video stereophotogrammetry). J Craniomaxillofac Surg 45:8–12. https://doi.org/10.1016/j.jcms.2016.11.005

Zhao C, Hallac RR, Seaward JR (2021) Analysis of facial movement in repaired unilateral cleft lip using three-dimensional motion capture. J Craniofac Surg 32:2074–2077. https://doi.org/10.1097/SCS.0000000000007636

Ho C-T, Lin H-H, Liou EJW et al (2017) Three-dimensional surgical simulation improves the planning for correction of facial prognathism and asymmetry: a qualitative and quantitative study. Sci Rep 7:512. https://doi.org/10.1038/srep40423

Elshebiny T, Morcos S, Mohammad A et al (2018) Accuracy of three-dimensional soft tissue prediction in orthognathic cases using dolphin three-dimensional software. J Craniofac Surg. https://doi.org/10.1097/SCS.0000000000005037

Resnick CM, Dang RR, Glick SJ et al (2017) Accuracy of three-dimensional soft tissue prediction for Le Fort I osteotomy using Dolphin 3D software: a pilot study. Int J Oral Maxillofac Surg 46:289–295. https://doi.org/10.1016/j.ijom.2016.10.016

Modabber A, Baron T, Peters F et al (2022) Comparison of soft tissue simulations between two planning software programs for orthognathic surgery. Sci Rep 12:2014. https://doi.org/10.1038/s41598-022-08991-7

Shujaat S, Khambay BS, Ju X et al (2014) The clinical application of three-dimensional motion capture (4D): a novel approach to quantify the dynamics of facial animations. Int J Oral Maxillofac Surg 43:907–916. https://doi.org/10.1016/j.ijom.2014.01.010

Ju X, O’Leary E, Peng M et al (2016) Evaluation of the reproducibility of nonverbal facial expressions using a 3D motion capture system. Cleft Palate Craniofac J 53:22–29. https://doi.org/10.1597/14-090r

Al-Hiyali A, Ayoub A, Ju X et al (2015) The impact of orthognathic surgery on facial expressions. J Oral Maxillofac Surg 73:2380–2390. https://doi.org/10.1016/j.joms.2015.05.008

Gattani S, Ju X, Gillgrass T et al (2020) An innovative assessment of the dynamics of facial movements in surgically managed unilateral cleft lip and palate using 4D imaging. Cleft Palate Craniofac J 57:1125–1133. https://doi.org/10.1177/1055665620924871

Ireland AJ, Cunningham SJ, Petrie A et al (2014) An index of orthognathic functional treatment need (IOFTN). J Orthod 41:77–83. https://doi.org/10.1179/1465313314Y.0000000100

Quast A, Santander P, Kahlmeier T et al (2021) Predictability of maxillary positioning: a 3D comparison of virtual and conventional orthognathic surgery planning. Head Face Med 17:27. https://doi.org/10.1186/s13005-021-00279-x

Luhr HG (1989) The significance of condylar position using rigid fixation in orthognathic surgery. Clin Plast Surg 16:147–156

Schwestka R, Engelke D, Kubein-Meesenburg D (1990) Condylar position control during maxillary surgery: the condylar positioning appliance and three-dimensional double splint method. Int J Adult Orthodon Orthognath Surg 5:161–165

Quast A, Santander P, Trautmann J et al (2020) A new approach in three dimensions to define pre- and intraoperative condyle–fossa relationships in orthognathic surgery – is there an effect of general anaesthesia on condylar position? Int J Oral Maxillofac Surg 49:1303–1310. https://doi.org/10.1016/j.ijom.2020.02.011

Bell WH (1975) Le Forte I osteotomy for correction of maxillary deformities. J Oral Surg 33:412–426

Epker BN, Wylie GA (1986) Control of the condylar-proximal mandibular segments after sagittal split osteotomies to advance the mandible. Oral Surg Oral Med Oral Pathol 62:613–617. https://doi.org/10.1016/0030-4220(86)90251-3

Hunsuck EE (1968) A modified intraoral sagittal splitting technic for correction of mandibular prognathism. J Oral Surg 26:250–253

Trauner R, Obwegeser H (1957) The surgical correction of mandibular prognathism and retrognathia with consideration of genioplasty. Oral Surg Oral Med Oral Pathol 10:677–689. https://doi.org/10.1016/S0030-4220(57)80063-2

Virtanen P, Gommers R, Oliphant TE et al (2020) SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat Methods 17:261–272. https://doi.org/10.1038/s41592-019-0686-2

Lenth R (2022) emmeans: estimated marginal means, aka least-squares means. R package version 1.7.3. https://CRAN.R-project.org/package=emmeans. Accessed 20 Mar 2023

R Core Team R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.r-project.org/. Accessed 20 Mar 2023

Johnston P, Mayes A, Hughes M et al (2013) Brain networks subserving the evaluation of static and dynamic facial expressions. Cortex 49:2462–2472. https://doi.org/10.1016/j.cortex.2013.01.002

Verze L, Bianchi FA, Dell’Acqua A et al (2011) Facial mobility after bimaxillary surgery in class III patients: a three-dimensional study. J Craniofac Surg 22:2304–2307. https://doi.org/10.1097/SCS.0b013e318232a7f0

Johns FR, Johnson PC, Buckley MJ et al (1997) Changes in facial movement after maxillary osteotomies. J Oral Maxillofac Surg 55:1044–1048 (discussion 1048–1049)

Paletz JL, Manktelow RT, Chaban R (1994) The shape of a normal smile: implications for facial paralysis reconstruction. Plast Reconstr Surg 93:784–789 (discussion 790–791)

Ekman P (1978) Facial action coding system. Consulting Psychologists Press Inc, Palo Alto, Calif

Gross MM, Trotman CA, Moffatt KS (1996) A comparison of three-dimensional and two-dimensional analyses of facial motion. Angle Orthod 66:189–194. https://doi.org/10.1043/0003-3219(1996)066%3c0189:ACOTDA%3e2.3.CO;2

Popat H, Richmond S, Benedikt L et al (2009) Quantitative analysis of facial movement—a review of three-dimensional imaging techniques. Comput Med Imaging Graph 33:377–383. https://doi.org/10.1016/j.compmedimag.2009.03.003

Siena FL, Byrom B, Watts P et al (2018) Utilising the Intel RealSense camera for measuring health outcomes in clinical research. J Med Syst 42:53. https://doi.org/10.1007/s10916-018-0905-x

ten Harkel TC, Speksnijder CM, van der Heijden F et al (2017) Depth accuracy of the RealSense F200: low-cost 4D facial imaging. Sci Rep 7:16263. https://doi.org/10.1038/s41598-017-16608-7

Avidan S, Brostow G, Cissé M et al (eds) (2022) Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XVI. Lecture Notes in Computer Science, vol 13676

Jack RE, Garrod OGB, Yu H et al (2012) Facial expressions of emotion are not culturally universal. Proc Natl Acad Sci U S A 109:7241–7244. https://doi.org/10.1073/pnas.1200155109

Hess U, Blairy S, Kleck RE (1997) The intensity of emotional facial expressions and decoding accuracy. J Nonverbal Behav 21:241–257. https://doi.org/10.1023/A:1024952730333

Desai R, Blacutt M, Youdan G et al (2022) Postural control and gait measures derived from wearable inertial measurement unit devices in Huntington’s disease: recommendations for clinical outcomes. Clin Biomech 96:105658. https://doi.org/10.1016/j.clinbiomech.2022.105658

Bois A, Tervil B, Moreau A et al (2022) A topological data analysis-based method for gait signals with an application to the study of multiple sclerosis. PLoS One 17:e0268475. https://doi.org/10.1371/journal.pone.0268475

Muftuoglu O, Akturk ES, Eren H et al (2023) Long-term evaluation of masseter muscle activity, dimensions, and elasticity after orthognathic surgery in skeletal class III patients. Clin Oral Investig. https://doi.org/10.1007/s00784-023-05004-3

Lee D-H, Yu H-S (2012) Masseter muscle changes following orthognathic surgery: a long-term three-dimensional computed tomography follow-up. Angle Orthod 82:792–798. https://doi.org/10.2319/111911-717.1

Hsu SS-P, Gateno J, Bell RB et al (2013) Accuracy of a computer-aided surgical simulation protocol for orthognathic surgery: a prospective multicenter study. J Oral Maxillofac Surg 71:128–142. https://doi.org/10.1016/j.joms.2012.03.027

Proffit WR, Phillips C, Dann C (1990) Who seeks surgical-orthodontic treatment? Int J Adult Orthodon Orthognath Surg 5:153–160

Proffit WR, White RP (1990) Who needs surgical-orthodontic treatment? Int J Adult Orthodon Orthognath Surg 5:81–89

Espeland L, Hogevold HE, Stenvik A (2007) A 3-year patient-centred follow-up of 516 consecutively treated orthognathic surgery patients. Eur J Orthod 30:24–30. https://doi.org/10.1093/ejo/cjm081

Yamamoto S, Miyachi H, Fujii H et al (2016) Intuitive facial imaging method for evaluation of postoperative swelling: a combination of 3-dimensional computed tomography and laser surface scanning in orthognathic surgery. J Oral Maxillofac Surg 74:2506.e1–2506.e10. https://doi.org/10.1016/j.joms.2016.08.039

Semper-Hogg W, Fuessinger MA, Dirlewanger TW et al (2017) The influence of dexamethasone on postoperative swelling and neurosensory disturbances after orthognathic surgery: a randomized controlled clinical trial. Head Face Med 13:19. https://doi.org/10.1186/s13005-017-0153-1

Lee RJH, Perera A, Naoum S et al (2021) Treatment time for surgical-orthodontic cases: a private versus public setting comparison. Australas Orthod J 37:31–36. https://doi.org/10.21307/aoj-2021-003

Dowling PA, Espeland L, Krogstad O et al (1999) Duration of orthodontic treatment involving orthognathic surgery. Int J Adult Orthodon Orthognath Surg 14:146–152

van den Braber W, van der Bilt A, van der Glas H et al (2006) The influence of mandibular advancement surgery on oral function in retrognathic patients: a 5-year follow-up study. J Oral Maxillofac Surg 64:1237–1240. https://doi.org/10.1016/j.joms.2006.04.019

Magalhães IB, Pereira LJ, Marques LS et al (2010) The influence of malocclusion on masticatory performance. Angle Orthod 80:981–987. https://doi.org/10.2319/011910-33.1

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was supported by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)—427616114—and by the Deutsche Gesellschaft für Kieferorthopädie (DGKFO, German Orthodontic Society).

Author information

Contributions

A.Q., M.S., H.S. and P.M.M. contributed to the study conception and design. A.Q., J.H. and P.K. recruited participants and collected the data. J.H. performed the 4D tracking. J.M. wrote the code for data analysis. A.Q., T.A. and C.D. analysed and interpreted the data. A.Q. and P.M.M. drafted the manuscript. All authors critically revised the manuscript and gave final approval.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This study was approved by the institutional ethics committee (application number 13/2/19) and conducted in accordance with the Declaration of Helsinki.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The electronic supplementary material for this article comprises one video file.

Supplementary file 1 (MP4, 39,959 KB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Quast, A., Sadlonova, M., Asendorf, T. et al. The impact of orthodontic-surgical treatment on facial expressions—a four-dimensional clinical trial. Clin Oral Invest 27, 5841–5851 (2023). https://doi.org/10.1007/s00784-023-05195-9

DOI: https://doi.org/10.1007/s00784-023-05195-9