Abstract

We introduce a simulation scheme for Brownian semistationary processes, which is based on discretizing the stochastic integral representation of the process in the time domain. We assume that the kernel function of the process is regularly varying at zero. The novel feature of the scheme is to approximate the kernel function by a power function near zero and by a step function elsewhere. The resulting approximation of the process is a combination of Wiener integrals of the power function and a Riemann sum, which is why we call this method a hybrid scheme. Our main theoretical result describes the asymptotics of the mean square error of the hybrid scheme, and we observe that the scheme leads to a substantial improvement of accuracy compared to the ordinary forward Riemann-sum scheme, while having the same computational complexity. We exemplify the use of the hybrid scheme by two numerical experiments, where we examine the finite-sample properties of an estimator of the roughness parameter of a Brownian semistationary process and study Monte Carlo option pricing in the rough Bergomi model of Bayer et al. (Quant. Finance 16:887–904, 2016), respectively.


1 Introduction

We study simulation methods for Brownian semistationary (\(\mathcal {BSS}\)) processes, first introduced by Barndorff-Nielsen and Schmiegel [8, 9], which form a flexible class of stochastic processes that are able to capture some common features of empirical time series, such as stochastic volatility (intermittency), roughness, stationarity, and strong dependence. By now these processes have been applied in various contexts, most notably in the study of turbulence in physics [7, 16] and in finance as models of energy prices [4, 11]. A \(\mathcal {BSS}\) process \(X\) is defined via the integral representation

$$ X_{t} = \int_{-\infty}^{t} g(t-s) \sigma_{s} \,\mathrm {d}W_{s}, \quad t \in \mathbb {R}, \tag{1.1} $$

where \(W\) is a two-sided Brownian motion providing the fundamental noise innovations, the amplitude of which is modulated by a stochastic volatility (intermittency) process \(\sigma\) that may depend on \(W\). This driving noise is then convolved with a deterministic kernel function \(g\) that specifies the dependence structure of \(X\). The process \(X\) can also be viewed as a moving average of volatility-modulated Brownian noise, and setting \(\sigma_{s} = 1\), we see that stationary Brownian moving averages are nested in this class of processes.

In the applications mentioned above, the case where \(X\) is not a semimartingale is particularly relevant. This situation arises when the kernel function \(g\) behaves like a power-law near zero; more specifically, when for some \(\alpha\in( -\frac{1}{2},\frac {1}{2}) \setminus\{0\}\),

$$ g(x) \propto x^{\alpha}\quad \mbox{for small } x > 0. \tag{1.2} $$

Here we write “∝” to indicate proportionality in an informal sense, anticipating a rigorous formulation of this relationship given in Sect. 2.2 using the theory of regular variation [15], which plays a significant role in our subsequent arguments. The case \(\alpha= -\frac{1}{6}\) in (1.2) is important in statistical modeling of turbulence [16] as it gives rise to processes that are compatible with Kolmogorov’s scaling law for ideal turbulence. Moreover, processes of similar type with \(\alpha\approx- 0.4\) have been recently used in the context of option pricing as models of rough volatility [1, 10, 18, 19]; see Sects. 2.5 and 3.3 below. The case \(\alpha=0\) would (roughly speaking) lead to a process that is a semimartingale, which is thus excluded.

Under (1.2), the trajectories of \(X\) behave locally like the trajectories of a fractional Brownian motion with Hurst index \(H = \alpha+ \frac{1}{2} \in(0,1) \setminus\{ \frac{1}{2} \}\). While the local behavior and roughness of \(X\), measured in terms of Hölder regularity, are determined by the parameter \(\alpha\), the global behavior of \(X\) (e.g., whether the process has long or short memory) depends on the behavior of \(g(x)\) as \(x \rightarrow\infty \), which can be specified independently of \(\alpha\). This should be contrasted with fractional Brownian motion and related self-similar models, which necessarily must conform to a restrictive affine relationship between their Hölder regularity (local behavior and roughness) and Hurst index (global behavior), as elucidated by Gneiting and Schlather [20]. Indeed, in the realm of \(\mathcal {BSS}\) processes, local and global behavior are conveniently decoupled, which underlines the flexibility of these processes as a modeling framework.

In connection with practical applications, it is important to be able to simulate the process \(X\). If the volatility process \(\sigma\) is deterministic and constant in time, then \(X\) will be strictly stationary and Gaussian. This makes \(X\) amenable to exact simulation using the Cholesky factorization or circulant embeddings; see e.g. [2, Chapter XI]. However, it seems difficult, if not impossible, to develop an exact method that is applicable with a stochastic \(\sigma\), as the process \(X\) is then neither Markovian nor Gaussian. Thus in the general case, one must resort to approximative methods. To this end, Benth et al. [13] have recently proposed a Fourier-based method of simulating \(\mathcal{BSS}\) processes, and more general Lévy semistationary (\(\mathcal{LSS}\)) processes, which relies on approximating the kernel function \(g\) in the frequency domain.

In this paper, we introduce a new discretization scheme for \(\mathcal{BSS}\) processes based on approximating the kernel function \(g\) in the time domain. Our starting point is the Riemann-sum discretization of (1.1). The Riemann-sum scheme builds on an approximation of \(g\) using step functions, which has the pitfall of failing to capture appropriately the steepness of \(g\) near zero. In particular, this becomes a serious defect under (1.2) when \(\alpha \in( -\frac{1}{2},0)\). In our new scheme, we mitigate this problem by approximating \(g\) using an appropriate power function near zero and a step function elsewhere. The resulting discretization scheme can be realized as a linear combination of Wiener integrals with respect to the driving Brownian motion \(W\) and a Riemann sum, which is why we call it a hybrid scheme. The hybrid scheme is only slightly more demanding to implement than the Riemann-sum scheme, and the schemes have the same computational complexity as the number of discretization cells tends to infinity.

Our main theoretical result describes the exact asymptotic behavior of the mean square error (MSE) of the hybrid scheme and, as a special case, that of the Riemann-sum scheme. We observe that switching from the Riemann-sum scheme to the hybrid scheme reduces the asymptotic root mean square error (RMSE) substantially. Using merely the simplest variant of the hybrid scheme, where a power function is used in a single discretization cell, the reduction is at least \(50\%\) for all \(\alpha\in( 0,\frac{1}{2})\) and at least \(80\%\) for all \(\alpha\in( -\frac{1}{2},0)\). The reduction in RMSE is close to \(100\%\) as \(\alpha\) approaches \(-\frac{1}{2}\), which indicates that the hybrid scheme indeed resolves the problem of poor precision that affects the Riemann-sum scheme.

To assess the accuracy of the hybrid scheme in practice, we perform two numerical experiments. Firstly, we examine the finite-sample performance of an estimator of the roughness index \(\alpha\), introduced by Barndorff-Nielsen et al. [6] and Corcuera et al. [16]. This experiment enables us to assess how faithfully the hybrid scheme approximates the fine properties of the \(\mathcal {BSS}\) process \(X\). Secondly, we study Monte Carlo option pricing in the rough Bergomi stochastic volatility model of Bayer et al. [10]. We use the hybrid scheme to simulate the volatility process in this model, and we find that the resulting implied volatility smiles are indistinguishable from those obtained using a method that involves exact simulation of the volatility process. Thus we are able to propose a solution to the problem of finding an efficient simulation scheme for the rough Bergomi model, left open in the paper [10].

The rest of this paper is organized as follows. In Sect. 2, we recall the rigorous definition of a \(\mathcal {BSS}\) process and introduce our assumptions. We also introduce the hybrid scheme, state our main theoretical result concerning the asymptotics of the mean square error and discuss an extension of the scheme to a class of truncated \(\mathcal{BSS}\) processes. Section 3 briefly discusses the implementation of the discretization scheme and presents the numerical experiments mentioned above. Finally, Sect. 4 contains the proofs of the theoretical and technical results given in the paper.

2 The model and theoretical results

2.1 Brownian semistationary process

Let \((\varOmega,\mathcal{F},(\mathcal{F}_{t})_{t \in \mathbb {R}}, \mathbb{P})\) be a filtered probability space, satisfying the usual conditions, supporting a (two-sided) standard Brownian motion \(W=(W_{t})_{t \in \mathbb {R}}\). We consider a Brownian semistationary process

$$ X_{t} = \int_{-\infty}^{t} g(t-s) \sigma_{s} \,\mathrm {d}W_{s}, \quad t \in \mathbb {R}, \tag{2.1} $$

where \(\sigma= (\sigma_{t})_{t\in \mathbb {R}}\) is an \((\mathcal{F}_{t})_{t \in \mathbb {R}}\)-predictable process with locally bounded trajectories, which captures the stochastic volatility (intermittency) of \(X\), and where \(g: (0,\infty) \to[0,\infty)\) is a Borel-measurable kernel function.

To ensure that the integral (2.1) is well defined, we assume that the kernel function \(g\) is square-integrable, that is, \(\int _{0}^{\infty}g(x)^{2} \mathrm {d}x < \infty\). In fact, we shortly introduce some more specific assumptions on \(g\) that imply its square-integrability. Throughout the paper, we also assume that the process \(\sigma\) has finite second moments, \(\mathbb {E}[\sigma_{t}^{2}] < \infty\) for all \(t \in \mathbb {R}\), and that the process is covariance-stationary, namely,

$$ \mathbb {E}[\sigma_{s}] = \mathbb {E}[\sigma_{t}], \quad \mathrm{Cov}(\sigma_{s},\sigma_{t}) = \mathrm{Cov}(\sigma_{0},\sigma_{|s-t|}), \quad s, t \in \mathbb {R}. $$

These assumptions imply that also \(X\) is covariance-stationary, that is,

$$ \mathbb {E}[X_{t}] = 0, \quad \mathrm{Cov}(X_{s},X_{t}) = \mathbb {E}[\sigma_{0}^{2}] \int_{0}^{\infty}g(x) g(x+|s-t|) \,\mathrm {d}x, \quad s, t \in \mathbb {R}. $$

However, the process \(X\) need not be strictly stationary as the dependence between the volatility process \(\sigma\) and the driving Brownian motion \(W\) may be time-varying.

2.2 Kernel function

As mentioned above, we consider a kernel function that satisfies \(g(x) \propto x^{\alpha}\) for some \(\alpha\in(-\frac{1}{2},\frac{1}{2})\setminus\{0 \}\) when \(x>0\) is near zero. To make this idea rigorous and to allow additional flexibility, we formulate our assumptions on \(g\) using the theory of regular variation [15] and, more specifically, slowly varying functions.

To this end, recall that a measurable function \(L : (0,1] \rightarrow [0,\infty)\) is slowly varying at 0 if for any \(t>0\),

$$ \lim_{x \rightarrow0} \frac{L(tx)}{L(x)} = 1. $$

Moreover, a function \(f(x) = x^{\beta}L(x)\), \(x \in(0,1]\), where \(\beta \in \mathbb {R}\) and \(L\) is slowly varying at 0, is said to be regularly varying at 0, with \(\beta\) being the index of regular variation.

Remark 2.1

Conventionally, slow and regular variation are defined at \(\infty\) [15, Sects. 1.2.1 and 1.4.1]. However, \(L\) is slowly varying (resp. regularly varying) at 0 if and only if \(x \mapsto L(1/x)\) is slowly varying (resp. regularly varying) at \(\infty\).

A key feature of slowly varying functions, which will be very important in the sequel, is that they can be sandwiched between polynomial functions as follows. If \(\delta>0\) and \(L\) is slowly varying at 0 and bounded away from 0 and \(\infty\) on every interval \((u,1]\), \(u \in(0,1)\), then there exist constants \(\overline{C}_{\delta} \geq \underline{C}_{\delta} >0\) such that

$$ \underline{C}_{\delta} x^{\delta} \leq L(x) \leq\overline{C}_{\delta} x^{-\delta}, \quad x \in(0,1]. \tag{2.2} $$

The inequalities above are an immediate consequence of the so-called Potter bounds for slowly varying functions; see [15, Theorem 1.5.6(ii)] and (4.1) below. Making \(\delta\) very small, we see that slowly varying functions are asymptotically negligible in comparison with polynomially growing/decaying functions. Thus, by multiplying power functions and slowly varying functions, regular variation provides a flexible framework to construct functions that behave asymptotically like power functions.
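
As a quick numerical illustration of slow variation, the following Python sketch uses \(L(x) = 1 - \log x\), which diverges at 0 but is nevertheless slowly varying there. The helper names and the particular values of \(t\), \(\delta\) and \(x\) are illustrative assumptions, not part of the paper.

```python
import math


def L(x):
    """Example of a slowly varying function at 0: L(x) = 1 - log(x), x in (0, 1]."""
    return 1.0 - math.log(x)


def variation_ratio(func, t, x):
    """Return func(t * x) / func(x); for slowly varying func this tends to 1 as x -> 0."""
    return func(t * x) / func(x)


if __name__ == "__main__":
    delta = 0.1  # any delta > 0 works; smaller delta needs smaller x to see the decay
    for x in (1e-2, 1e-8, 1e-32, 1e-128):
        # The ratio creeps towards 1, while x^delta * L(x) is eventually forced to 0,
        # i.e. L grows more slowly than any negative power of x.
        print(x, variation_ratio(L, 2.0, x), x**delta * L(x))
```

The slow convergence of the ratio is typical for logarithmic factors, which is one reason the analysis works with the polynomial sandwich rather than with the limit itself.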

Our assumptions concerning the kernel function \(g\) are as follows:

- (A1) For some \(\alpha\in(-\frac{1}{2},\frac{1}{2}) \setminus \{0\}\),

  $$\begin{aligned} g(x) = x^{\alpha}L_{g}(x), \quad x \in(0,1], \end{aligned}$$

  where \(L_{g} : (0,1] \to[0,\infty)\) is continuously differentiable, slowly varying at 0 and bounded away from 0. Moreover, there exists a constant \(C>0\) such that the derivative \(L'_{g}\) of \(L_{g}\) satisfies

  $$\begin{aligned} |L_{g}'(x)| \leq C(1+x^{-1}), \quad x\in(0,1]. \end{aligned}$$

- (A2) The function \(g\) is continuously differentiable on \((0,\infty)\), with derivative \(g'\) that is ultimately monotonic and also satisfies \(\int_{1}^{\infty}g'(x)^{2} \mathrm {d}x < \infty\).

- (A3) For some \(\beta\in(-\infty,-\frac{1}{2})\),

  $$ g(x) = \mathcal{O}(x^{\beta}), \quad x \rightarrow\infty. $$

(Here, and in the sequel, we use the notation \(f(x) = \mathcal {O}(h(x))\), \(x \rightarrow a\), to indicate that \(\limsup_{x \rightarrow a} |\frac{f(x)}{h(x)}| < \infty\). Additionally, analogous notation is later used for sequences and computational complexity.) In view of the bound (2.2), these assumptions ensure that \(g\) is square-integrable. It is worth pointing out that (A1) accommodates functions \(L_{g}\) with \(\lim_{x \rightarrow 0} L_{g}(x) = \infty\), e.g. \(L_{g}(x) = 1 - \log x\).

The assumption (A1) influences the short-term behavior and roughness of the process \(X\). A simple way to assess the roughness of \(X\) is to study the behavior of its variogram (also called second-order structure function in the turbulence literature)

$$ V_{X}(h) := \mathbb {E}[|X_{h} - X_{0}|^{2}], \quad h \geq0, $$

as \(h \rightarrow0\). Note that by covariance-stationarity,

$$ V_{X}(|s-t|) = \mathbb {E}[|X_{s} - X_{t}|^{2}], \quad s, t \in \mathbb {R}. $$

Under our assumptions, we have the following characterization of the behavior of \(V_{X}\) near zero, which generalizes a result of Barndorff-Nielsen [3, p. 9] and implies that \(X\) has a locally Hölder-continuous modification. Therein, and in what follows, we write \(a(x) \sim b(x)\), \(x \rightarrow y\), to indicate that \(\lim_{x \rightarrow y} \frac{a(x)}{b(x)} = 1\). The proof of this result is carried out in Sect. 4.1.

Proposition 2.2

Suppose that (A1)–(A3) hold.

- (i) The variogram of \(X\) satisfies

  $$ V_{X}(h) \sim \mathbb {E}[\sigma_{0}^{2}] \bigg(\frac{1}{2\alpha+ 1} + \int_{0}^{\infty}\big( (y+1)^{\alpha}- y^{\alpha}\big)^{2} \mathrm {d}y\bigg) h^{2\alpha+1} L_{g}(h)^{2}, \quad h \rightarrow0, $$

  which implies that \(V_{X}\) is regularly varying at zero with index \(2\alpha+1\).

- (ii) The process \(X\) has a modification with locally \(\phi\)-Hölder-continuous trajectories for any \(\phi\in(0,\alpha+ \frac{1}{2})\).

Motivated by Proposition 2.2, we call \(\alpha\) the roughness index of the process \(X\). Ignoring the slowly varying factor \(L_{g}(h)^{2}\) in Proposition 2.2(i), we see that the variogram \(V_{X}(h)\) behaves like \(h^{2\alpha+1}\) for small values of \(h\), which is reminiscent of the scaling property of the increments of a fractional Brownian motion (fBm) with Hurst index \(H = \alpha+ \frac{1}{2}\). Thus, the process \(X\) behaves locally like such an fBm, at least when it comes to second-order structure and roughness. (Moreover, the factor \(\frac{1}{2\alpha + 1} + \int_{0}^{\infty}( (y+ 1)^{\alpha}- y^{\alpha})^{2} \mathrm {d}y\) coincides with the normalization coefficient that appears in the Mandelbrot–Van Ness representation [23, Theorem 1.3.1] of an fBm with \(H = \alpha+ \frac{1}{2}\).)

Let us now look at two examples of a kernel function \(g\) that satisfies our assumptions.

Example 2.3

The so-called gamma kernel

$$ g(x) = x^{\alpha}e^{-\lambda x}, \quad x \in(0,\infty), $$

with parameters \(\alpha\in(-\frac{1}{2},\frac{1}{2}) \setminus\{ 0 \} \) and \(\lambda> 0\), has been used extensively in the literature on \(\mathcal{BSS}\) processes. It is particularly important in connection with statistical modeling of turbulence, see Corcuera et al. [16], but it also provides a way to construct generalizations of Ornstein–Uhlenbeck (OU) processes with a roughness that differs from the usual semimartingale case \(\alpha= 0\), while mimicking the long-term behavior of an OU process. Moreover, \(\mathcal {BSS}\) and \(\mathcal{LSS}\) processes defined using the gamma kernel have interesting probabilistic properties; see [24]. An in-depth study of the gamma kernel can be found in [3]. Setting \(L_{g} (x) := e^{-\lambda x}\), which is slowly varying at 0 since \(\lim_{x \rightarrow0} L_{g}(x) = 1\), it is evident that (A1) holds. Since \(g(x)\) decays exponentially fast to 0 as \(x \rightarrow\infty\), it is clear that also (A3) holds. To verify (A2), note that \(g\) satisfies

$$ g'(x) = \bigg(\frac{\alpha}{x} - \lambda\bigg) g(x), \qquad g''(x) = \bigg( \Big(\frac{\alpha}{x}-\lambda\Big)^{2} - \frac{\alpha}{x^{2}} \bigg) g(x), \quad x \in(0,\infty), $$

where \(\lim_{x \rightarrow\infty}((\frac{\alpha}{x}-\lambda)^{2}- \frac {\alpha}{x^{2}})= \lambda^{2}>0\), so \(g'\) is ultimately increasing with

$$ g'(x)^{2} = \bigg(\frac{\alpha}{x} - \lambda\bigg)^{2} g(x)^{2} \leq(|\alpha| + \lambda)^{2} g(x)^{2}, \quad x \in[1,\infty). $$

Thus, \(\int_{1}^{\infty}g'(x)^{2} \mathrm {d}x < \infty\) since \(g\) is square-integrable.

Example 2.4

Consider the power-law kernel function

$$ g(x) = x^{\alpha}(1+x)^{\beta-\alpha}, \quad x \in(0,\infty), $$

with parameters \(\alpha\in(-\frac{1}{2},\frac{1}{2})\setminus\{0\}\) and \(\beta\in(-\infty,-\frac{1}{2})\). The behavior of this kernel function near zero is similar to that of the gamma kernel, but \(g(x)\) decays to zero polynomially as \(x \rightarrow\infty\), so it can be used to model long memory. In fact, it can be shown that if \(\beta\in (-1,-\frac{1}{2})\), then the autocorrelation function of \(X\) is not integrable. Clearly, (A1) holds with \(L_{g}(x) := (1+x)^{\beta -\alpha}\), which is slowly varying at 0 since \(\lim_{x \rightarrow0} L_{g}(x) = 1\). Moreover, note that we can write

$$ g(x) = x^{\beta}K_{g}(x), \quad x \in(0,\infty), $$

where \(K_{g}(x):=(1+x^{-1})^{\beta-\alpha}\) satisfies \(\lim_{x \rightarrow\infty}K_{g}(x)=1\). Thus, also (A3) holds. We can check (A2) by computing

$$ g'(x) = \frac{\alpha+ \beta x}{x(1+x)} g(x), \qquad g''(x) = \frac{(\alpha+\beta x)^{2} -\alpha-2\alpha x- \beta x^{2}}{x^{2}(1+x)^{2}} g(x), \quad x \in(0,\infty), $$

where \(-\alpha-2\alpha x- \beta x^{2} \rightarrow\infty\) when \(x \rightarrow\infty\) (as \(\beta< -\frac{1}{2}\)), so \(g'\) is ultimately increasing. Additionally, we note that

$$ g'(x)^{2} = \bigg(\frac{\alpha+ \beta x}{x(1+x)}\bigg)^{2} g(x)^{2} \leq(|\alpha| + |\beta|)^{2} g(x)^{2}, \quad x \in[1,\infty), $$

implying \(\int_{1}^{\infty}g'(x)^{2} \mathrm {d}x < \infty\) since \(g\) is square-integrable.
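
The two example kernels can be written down directly. The Python sketch below assumes the forms \(g(x) = x^{\alpha}e^{-\lambda x}\) and \(g(x) = x^{\alpha}(1+x)^{\beta-\alpha}\), which follow from the slowly varying factors \(L_{g}\) named in Examples 2.3 and 2.4. The function names and parameter values are hypothetical choices made for illustration.

```python
import math


def gamma_kernel(x, alpha, lam):
    """Gamma kernel g(x) = x^alpha * exp(-lam * x), x > 0."""
    return x**alpha * math.exp(-lam * x)


def power_law_kernel(x, alpha, beta):
    """Power-law kernel g(x) = x^alpha * (1 + x)^(beta - alpha), x > 0."""
    return x**alpha * (1.0 + x) ** (beta - alpha)


def slowly_varying_part(g, x, alpha):
    """L_g(x) = g(x) / x^alpha; under (A1) this factor is slowly varying at 0."""
    return g(x) / x**alpha


if __name__ == "__main__":
    alpha, lam, beta = -0.4, 1.0, -0.75  # illustrative parameter values
    g1 = lambda x: gamma_kernel(x, alpha, lam)
    g2 = lambda x: power_law_kernel(x, alpha, beta)
    # Near zero both kernels look like x^alpha: L_g(x) -> 1 as x -> 0.
    print(slowly_varying_part(g1, 1e-6, alpha), slowly_varying_part(g2, 1e-6, alpha))
    # Far from zero the power-law kernel looks like x^beta: K_g(x) = g(x) / x^beta -> 1.
    print(g2(1e6) / (1e6) ** beta)
```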

2.3 Hybrid scheme

Let \(t \in \mathbb {R}\) and consider discretizing \(X_{t}\) based on its integral representation (2.1) on the grid \(\mathcal{G}^{n}_{t} := \{t,t-\frac {1}{n}, t-\frac{2}{n},\ldots\}\) for \(n \in \mathbb {N}\). To derive our discretization scheme, let us first note that if the volatility process \(\sigma\) does not vary too much, then it is reasonable to use the approximation

$$ X_{t} = \sum_{k=1}^{\infty}\int_{t-\frac{k}{n}}^{t-\frac{k}{n}+\frac{1}{n}} g(t-s) \sigma_{s} \,\mathrm {d}W_{s} \approx\sum_{k=1}^{\infty}\sigma_{t-\frac{k}{n}} \int_{t-\frac{k}{n}}^{t-\frac{k}{n}+\frac{1}{n}} g(t-s) \,\mathrm {d}W_{s}, \tag{2.3} $$

that is, we keep \(\sigma\) constant in each discretization cell. (Here, and in the sequel, “≈” stands for an informal approximation used for purely heuristic purposes.) If \(k\) is “small”, then due to (A1), we may approximate

$$ g(t-s) \approx(t-s)^{\alpha}L_{g}\bigg(\frac{k}{n}\bigg), \quad t-s \in\bigg[\frac{k-1}{n},\frac{k}{n}\bigg] \setminus\{0\}, \tag{2.4} $$

as the slowly varying function \(L_{g}\) varies “less” than the power function \(y \mapsto y^{\alpha}\) near zero; cf. (2.2). If \(k\) is “large”, or at least \(k \geq2\), then choosing \(b_{k} \in [k-1,k]\) provides an adequate approximation

$$ g(t-s) \approx g\bigg(\frac{b_{k}}{n}\bigg), \quad t-s \in\bigg[\frac{k-1}{n},\frac{k}{n}\bigg], \tag{2.5} $$

by (A2). Applying (2.4) to the first \(\kappa\) terms, where \(\kappa= 1,2,\ldots{}\), and (2.5) to the remaining terms in the approximating series in (2.3) yields

$$ \sum_{k=1}^{\infty}\sigma_{t-\frac{k}{n}} \int_{t-\frac{k}{n}}^{t-\frac{k}{n}+\frac{1}{n}} g(t-s) \,\mathrm {d}W_{s} \approx\sum_{k=1}^{\kappa} L_{g}\bigg(\frac{k}{n}\bigg) \sigma_{t-\frac{k}{n}} \int_{t-\frac{k}{n}}^{t-\frac{k}{n}+\frac{1}{n}} (t-s)^{\alpha}\,\mathrm {d}W_{s} + \sum_{k=\kappa+1}^{\infty}g\bigg(\frac{b_{k}}{n}\bigg) \sigma_{t-\frac{k}{n}} \big(W_{t-\frac{k}{n}+\frac{1}{n}} - W_{t-\frac{k}{n}}\big). \tag{2.6} $$

For completeness, we also allow \(\kappa= 0\), in which case we require that \(b_{1} \in(0,1]\) and interpret the first sum on the right-hand side of (2.6) as zero. To make numerical implementation feasible, we truncate the second sum on the right-hand side of (2.6) so that both sums have \(N_{n} \geq\kappa+1\) terms in total. Thus, we arrive at a discretization scheme for \(X_{t}\), which we call a hybrid scheme, given by

$$ X^{n}_{t} := \check{X}^{n}_{t} + \hat{X}^{n}_{t}, $$

where

$$ \check{X}^{n}_{t} := \sum_{k=1}^{\kappa} L_{g}\bigg(\frac{k}{n}\bigg) \sigma_{t-\frac{k}{n}} \int_{t-\frac{k}{n}}^{t-\frac{k}{n}+\frac{1}{n}} (t-s)^{\alpha}\,\mathrm {d}W_{s}, \tag{2.7} $$

$$ \hat{X}^{n}_{t} := \sum_{k=\kappa+1}^{N_{n}} g\bigg(\frac{b_{k}}{n}\bigg) \sigma_{t-\frac{k}{n}} \big(W_{t-\frac{k}{n}+\frac{1}{n}} - W_{t-\frac{k}{n}}\big), \tag{2.8} $$

and \(\mathbf{b}:=(b_{k})_{k=\kappa+1}^{\infty}\) is a sequence of real numbers, evaluation points, that must satisfy \(b_{k} \in[k-1,k]\setminus \{0\}\) for each \(k\geq\kappa+1\), but otherwise can be chosen freely.

As it stands, the discretization grid \(\mathcal{G}^{n}_{t}\) depends on the time \(t\), which may seem cumbersome with regard to sampling \(X^{n}_{t}\) simultaneously for different times \(t\). However, note that whenever times \(t\) and \(t'\) are separated by a multiple of \(\frac{1}{n}\), the corresponding grids \(\mathcal{G}^{n}_{t}\) and \(\mathcal{G}^{n}_{t'}\) will intersect. In fact, the hybrid scheme defined by (2.7) and (2.8) can be implemented efficiently, as we shall see in Sect. 3.1, below. Since

the degenerate case \(\kappa=0\) with \(b_{k} = k\) for all \(k \geq1\) corresponds to the usual Riemann-sum discretization scheme of \(X_{t}\) with (Itô type) forward sums from (2.8). Henceforth, we denote the associated sequence \(( k)_{k=\kappa+1}^{\infty}\) by \(\mathbf {b}_{\mathrm{FWD}}\), where the subscript “\(\mathrm{FWD}\)” alludes to forward sums. However, including terms involving Wiener integrals of a power function given by (2.7), that is, having \(\kappa\geq1\), improves the accuracy of the discretization considerably, as we shall see. Having the leeway to select \(b_{k}\) within the interval \([k-1,k]\setminus\{ 0 \}\), so that the function \(g(t-\cdot)\) is evaluated at a point that does not necessarily belong to \(\mathcal {G}^{n}_{t}\), leads additionally to a moderate improvement.

The truncation in the sum (2.8) entails that the stochastic integral (2.1) defining \(X_{t}\) is truncated at \(t - \frac{N_{n}}{n}\). In practice, the value of the parameter \(N_{n}\) should be large enough to mitigate the effect of truncation. To ensure that the truncation point \(t - \frac{N_{n}}{n}\) tends to \(-\infty\) as \(n \rightarrow\infty\) in our asymptotic results, we introduce the following assumption:

- (A4) For some \(\gamma>0\),

  $$\begin{aligned} N_{n} \sim n^{\gamma+ 1},\quad n \rightarrow\infty. \end{aligned}$$

2.4 Asymptotic behavior of mean square error

We are now ready to state our main theoretical result, which gives a sharp description of the asymptotic behavior of the mean square error (MSE) of the hybrid scheme as \(n \rightarrow\infty\). We defer the proof of this result to Sect. 4.2.

Theorem 2.5

Suppose that (A1)–(A4) hold, so that

$$ \gamma> -\frac{2\alpha+1}{2\beta+1}, \tag{2.9} $$

and that for some \(\delta>0\),

$$ \mathbb {E}[|\sigma_{s} - \sigma_{t}|^{2}] = \mathcal{O}\big(|s-t|^{2\alpha+1+\delta}\big), \quad s, t \in \mathbb {R}. \tag{2.10} $$

Then for all \(t\in\mathbb{R}\),

$$ \mathbb {E}[|X_{t} - X^{n}_{t}|^{2}] \sim J(\alpha,\kappa,\mathbf{b}) \mathbb {E}[\sigma_{0}^{2}] n^{-(2\alpha+1)} L_{g}(1/n)^{2}, \quad n \rightarrow\infty, \tag{2.11} $$

where

$$ J(\alpha,\kappa,\mathbf{b}) := \sum_{k=\kappa+1}^{\infty}\int_{k-1}^{k} (y^{\alpha}- b_{k}^{\alpha})^{2} \,\mathrm {d}y < \infty. \tag{2.12} $$

Remark 2.6

Note that if \(\alpha\in(-\frac{1}{2},0)\), then having

$$ \mathbb {E}[|\sigma_{s} - \sigma_{t}|^{2}] = \mathcal{O}\big(|s-t|^{\theta}\big), \quad s, t \in \mathbb {R}, $$

for all \(\theta\in(0,1)\), ensures that (2.10) holds. (Take, say, \(\delta:= \frac{1}{2}(1 - (2\alpha+1))>0\) and \(\theta:= 2\alpha+1 + \delta= \alpha+ 1 \in(0,1)\).)

When the hybrid scheme is used to simulate the \(\mathcal{BSS}\) process \(X\) on an equidistant grid \(\{0,\frac{1}{n},\frac{2}{n},\ldots,\frac {\lfloor nT \rfloor}{n} \}\) for some \(T>0\) (see Sect. 3.1 on the details of the implementation), the following consequence of Theorem 2.5 ensures that the covariance structure of the simulated process approximates that of the actual process \(X\).

Corollary 2.7

Suppose that the assumptions of Theorem 2.5 hold. Then for any \(s\), \(t\in \mathbb {R}\) and \(\varepsilon>0\),

$$ |\mathbb {E}[X^{n}_{s} X^{n}_{t}] - \mathbb {E}[X_{s} X_{t}]| = \mathcal{O}\big(n^{-(\alpha+\frac{1}{2})+\varepsilon}\big), \quad n \rightarrow\infty. $$

Proof

Let \(s\), \(t\in \mathbb {R}\). Applying the Cauchy–Schwarz inequality, we get

$$ |\mathbb {E}[X^{n}_{s} X^{n}_{t}] - \mathbb {E}[X_{s} X_{t}]| \leq \mathbb {E}[(X^{n}_{t})^{2}]^{1/2} \mathbb {E}[|X_{s}-X^{n}_{s}|^{2}]^{1/2} + \mathbb {E}[X_{s}^{2}]^{1/2} \mathbb {E}[|X_{t}-X^{n}_{t}|^{2}]^{1/2}. $$

We have \(\sup_{n \in \mathbb {N}} \mathbb {E}[(X^{n}_{t})^{2}]^{1/2} <\infty\) since \(\mathbb {E}[(X^{n}_{t})^{2}] \rightarrow \mathbb {E}[X_{t}^{2}]<\infty\) as \(n \rightarrow\infty\), by Theorem 2.5. Moreover, Theorem 2.5 and the bound (2.2) imply that we also have \(\mathbb {E}[|X_{s}-X^{n}_{s}|^{2}]^{1/2}=\mathcal{O}(n^{-(\alpha+\frac{1}{2})+\varepsilon})\) and \(\mathbb {E}[|X_{t}-X^{n}_{t}|^{2}]^{1/2}=\mathcal{O}(n^{-(\alpha+\frac {1}{2})+\varepsilon})\) for any \(\varepsilon>0\). □

In Theorem 2.5, the asymptotics of the MSE (2.11) are determined by the behavior of the kernel function \(g\) near zero, as specified in (A1). The condition (2.9) ensures that the error from approximating \(g\) near zero is asymptotically larger than the error induced by the truncation of the stochastic integral (2.1) at \(t - \frac{N_{n}}{n}\). In fact, a different kind of asymptotics of the MSE, where truncation error becomes dominant, could be derived when (2.9) does not hold, under some additional assumptions, but we do not pursue this direction in the present paper.

While the rate of convergence in (2.11) is fully determined by the roughness index \(\alpha\), which may seem discouraging at first, it turns out that the quantity \(J(\alpha,\kappa,\mathbf{b})\), which we call the asymptotic MSE, can vary a lot, depending on how we choose \(\kappa\) and \(\mathbf{b}\), and can have a substantial impact on the precision of the approximation of \(X\). It is immediate from (2.12) that increasing \(\kappa\) will decrease \(J(\alpha,\kappa ,\mathbf{b})\). Moreover, for given \(\alpha\) and \(\kappa\), it is straightforward to choose \(\mathbf{b}\) so that \(J(\alpha,\kappa,\mathbf {b})\) is minimized, as shown in the following result.

Proposition 2.8

Let \(\alpha\in(-\frac{1}{2},\frac{1}{2})\setminus\{ 0\}\) and \(\kappa \geq0\). Among all sequences \(\mathbf{b}=(b_{k})_{k=\kappa+1}^{\infty}\) with \(b_{k} \in[k-1,k]\setminus\{0 \}\) for \(k \geq\kappa+1\), the function \(J(\alpha,\kappa,\mathbf{b})\), and consequently the asymptotic MSE induced by the discretization, is minimized by the sequence \(\mathbf {b}^{*}\) given by

$$ b^{*}_{k} = \bigg(\frac{k^{\alpha+1} - (k-1)^{\alpha+1}}{\alpha+1}\bigg)^{1/\alpha}, \quad k \geq\kappa+1. $$

Proof

Clearly, a sequence \(\mathbf{b}=(b_{k})_{k=\kappa+1}^{\infty}\) minimizes the function \(J(\alpha,\kappa,\mathbf{b})\) if and only if \(b_{k}\) minimizes \(\int_{k-1}^{k} (y^{\alpha}-b_{k}^{\alpha})^{2} \mathrm {d}y\) for any \(k \geq \kappa+1\). By standard \(L^{2}\)-space theory, \(c \in \mathbb {R}\) minimizes the integral \(\int_{k-1}^{k} (y^{\alpha}-c)^{2} \mathrm {d}y\) if and only if the function \(y \mapsto y^{\alpha}- c\) is orthogonal in \(L^{2}\) to all constant functions. This is tantamount to

$$ \int_{k-1}^{k} (y^{\alpha}- c) \,\mathrm {d}y = 0, $$

and computing the integral and solving for \(c\) yields

$$ c = \frac{k^{\alpha+1} - (k-1)^{\alpha+1}}{\alpha+1}. $$

Setting \(b^{*}_{k} := c^{1/\alpha} \in(k-1,k)\) completes the proof. □
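
The optimal evaluation points are cheap to compute. The following Python sketch evaluates \(b^{*}_{k} = c^{1/\alpha}\) with \(c\) the average of \(y^{\alpha}\) over \([k-1,k]\), as in the proof above, and compares the resulting cell error with that of other evaluation points using the closed form of \(\int_{k-1}^{k} (y^{\alpha}-b^{\alpha})^{2} \mathrm {d}y\). The function names `optimal_b` and `cell_error` are hypothetical.

```python
def optimal_b(k, alpha):
    """Optimal evaluation point b*_k for cell [k-1, k], with k >= 1 and alpha != 0."""
    # c is the average of y^alpha over [k-1, k] (the L^2 projection onto constants).
    c = (k ** (alpha + 1) - (k - 1) ** (alpha + 1)) / (alpha + 1)
    return c ** (1.0 / alpha)


def cell_error(k, alpha, b):
    """Closed form of the integral of (y^alpha - b^alpha)^2 over [k-1, k]."""
    c = b**alpha
    m2 = (k ** (2 * alpha + 1) - (k - 1) ** (2 * alpha + 1)) / (2 * alpha + 1)
    m1 = (k ** (alpha + 1) - (k - 1) ** (alpha + 1)) / (alpha + 1)
    return m2 - 2.0 * c * m1 + c * c


if __name__ == "__main__":
    alpha = -0.3  # illustrative roughness index
    for k in (1, 2, 3, 10):
        b_star = optimal_b(k, alpha)
        # Compare the optimal point with the forward choice b_k = k.
        print(k, b_star, cell_error(k, alpha, b_star), cell_error(k, alpha, float(k)))
```

For large \(k\) the optimal point is close to the midpoint \(k-\frac{1}{2}\) of the cell, whereas in the first cell it sits much closer to the singularity when \(\alpha<0\).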

To understand how much increasing \(\kappa\) and using the optimal sequence \(\mathbf{b}^{*}\) from Proposition 2.8 improves the approximation, we study numerically the asymptotic root mean square error (RMSE) \(\sqrt{J(\alpha,\kappa,\mathbf{b})}\). In particular, we assess how much the asymptotic RMSE decreases relative to the RMSE of the forward Riemann-sum scheme (\(\kappa=0\) and \(\mathbf {b} = \mathbf{b}_{\mathrm{FWD}}\)) by using the quantity

$$ \mbox{reduction in asymptotic RMSE} = \frac{\sqrt{J(\alpha,0,\mathbf{b}_{\mathrm{FWD}})} - \sqrt{J(\alpha,\kappa,\mathbf{b})}}{\sqrt{J(\alpha,0,\mathbf{b}_{\mathrm{FWD}})}} \cdot100\%. \tag{2.13} $$

The results are presented in Fig. 1. We find that employing the hybrid scheme with \(\kappa\geq1\) leads to a substantial reduction in the asymptotic RMSE relative to the forward Riemann-sum scheme when \(\alpha\in(-\frac{1}{2},0)\). Indeed, when \(\kappa\geq 1\), the asymptotic RMSE, as a function of \(\alpha\), does not blow up as \(\alpha\rightarrow-\frac{1}{2}\), while with \(\kappa= 0\) it does. This explains why the reduction in the asymptotic RMSE approaches \(100\% \) as \(\alpha\rightarrow-\frac{1}{2}\). When \(\alpha\in(0,\frac {1}{2})\), the improvement achieved using the hybrid scheme is more modest, but still considerable. Figure 1 also highlights the importance of using the optimal sequence \(\mathbf{b}^{*}\), instead of \(\mathbf{b}_{\mathrm{FWD}}\), as evaluation points in the scheme, in particular when \(\alpha\in(0,\frac{1}{2})\). Finally, we observe that increasing \(\kappa\) beyond 2 does not appear to lead to a significant further reduction. Indeed, in our numerical experiments, reported in Sects. 3.2 and 3.3 below, we observe that using \(\kappa= 1,2\) already leads to good results.

Fig. 1 (Left) The asymptotic RMSE given by \(\sqrt{J(\alpha,\kappa,\mathbf{b})}\) as a function of \(\alpha\in(-\frac{1}{2},\frac {1}{2})\setminus\{0\}\) for \(\kappa= 0,1,2,3\) using \(\mathbf{b} = \mathbf{b}^{*}\) of Proposition 2.8 (solid lines) and \(\mathbf{b}=\mathbf{b}_{\mathrm{FWD}}\) (dashed lines). (Right) Reduction in the asymptotic RMSE relative to the forward Riemann-sum scheme (\(\kappa=0\) and \(\mathbf{b}=\mathbf{b}_{\mathrm {FWD}}\)) given by the formula (2.13), plotted as a function of \(\alpha\in(-\frac{1}{2},\frac{1}{2})\setminus\{0\}\) for \(\kappa= 0,1,2,3\) using \(\mathbf{b} = \mathbf{b}^{*}\) (solid lines) and for \(\kappa= 1,2,3\) using \(\mathbf{b}=\mathbf{b}_{\mathrm{FWD}}\) (dashed lines). In all computations, we have used the approximations outlined in Remark 2.9 with \(N=\mbox{1000000}\).

Remark 2.9

It is non-trivial to evaluate the quantity \(J(\alpha,\kappa,\mathbf{b})\) numerically. Computing the integral in (2.12) explicitly, we can approximate \(J(\alpha,\kappa,\mathbf{b})\) by

$$ J_{N}(\alpha,\kappa,\mathbf{b}) := \sum_{k=\kappa+1}^{N} \int_{k-1}^{k} (y^{\alpha}- b_{k}^{\alpha})^{2} \,\mathrm {d}y = \sum_{k=\kappa+1}^{N} \bigg( \frac{k^{2\alpha+1} - (k-1)^{2\alpha+1}}{2\alpha+1} - \frac{2 b_{k}^{\alpha}\big(k^{\alpha+1} - (k-1)^{\alpha+1}\big)}{\alpha+1} + b_{k}^{2\alpha} \bigg) $$

with some large \(N\in \mathbb {N}\). This approximation is adequate when \(\alpha \in(-\frac{1}{2},0)\), but its accuracy deteriorates when \(\alpha \rightarrow\frac{1}{2}\). In particular, the singularity of the function \(\alpha\mapsto J(\alpha,\kappa,\mathbf{b})\) at \(\frac{1}{2}\) is difficult to capture using \(J_{N}(\alpha,\kappa,\mathbf{b})\) with numerically feasible values of \(N\). To overcome this numerical problem, we introduce a correction term in the case \(\alpha\in(0,\frac {1}{2})\). The correction term can be derived informally as follows. By the mean value theorem, and since \(b^{*}_{k} \approx k-\frac{1}{2}\) for large \(k\), we have

where \(\xi= \xi(y,b_{k}) \in[k-1,k]\), for large \(k\). Thus, for large \(N\), we obtain

where \(\zeta(x,s) := \sum_{k=0}^{\infty}\frac{1}{(k+s)^{x}}\), \(x>1\), \(s > 0\), is the Hurwitz zeta function, which can be evaluated using accurate numerical algorithms.
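
A truncated version of the asymptotic MSE can be computed in a few lines. The Python sketch below sums the closed-form cell errors \(\int_{k-1}^{k} (y^{\alpha}-b_{k}^{\alpha})^{2} \mathrm {d}y\) for \(k = \kappa+1,\ldots,N\) and reports the relative reduction in asymptotic RMSE against the forward Riemann-sum scheme, here taken to be \(1 - \sqrt{J/J_{\mathrm{FWD}}}\). It is only a rough sketch: the function names are hypothetical, the zeta-function correction described in this remark is omitted, and a small \(N\) is used, so the values for \(\alpha\) close to \(\frac{1}{2}\) should not be trusted.

```python
import math


def cell_error(k, alpha, b):
    """Closed form of the integral of (y^alpha - b^alpha)^2 over [k-1, k]."""
    c = b**alpha
    m2 = (k ** (2 * alpha + 1) - (k - 1) ** (2 * alpha + 1)) / (2 * alpha + 1)
    m1 = (k ** (alpha + 1) - (k - 1) ** (alpha + 1)) / (alpha + 1)
    return m2 - 2.0 * c * m1 + c * c


def optimal_b(k, alpha):
    """Optimal evaluation point b*_k of Proposition 2.8."""
    return ((k ** (alpha + 1) - (k - 1) ** (alpha + 1)) / (alpha + 1)) ** (1.0 / alpha)


def j_truncated(alpha, kappa, n_terms, optimal=True):
    """Sum of cell errors for k = kappa+1, ..., n_terms (no tail correction)."""
    total = 0.0
    for k in range(kappa + 1, n_terms + 1):
        b = optimal_b(k, alpha) if optimal else float(k)  # b* or forward points
        total += cell_error(k, alpha, b)
    return total


def rmse_reduction(alpha, kappa, n_terms, optimal=True):
    """Relative reduction of asymptotic RMSE versus kappa = 0 with forward points."""
    j_fwd = j_truncated(alpha, 0, n_terms, optimal=False)
    j = j_truncated(alpha, kappa, n_terms, optimal=optimal)
    return 1.0 - math.sqrt(j / j_fwd)


if __name__ == "__main__":
    for alpha in (-0.45, -0.4, -0.2, 0.1, 0.25):
        print(alpha, rmse_reduction(alpha, 1, 2000), rmse_reduction(alpha, 2, 2000))
```

Note that the closed form subtracts nearly equal quantities when \(k\) is large, so for very large \(N\) a more careful summation would be needed.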

Remark 2.10

Unlike the Fourier-based method of Benth et al. [13], the hybrid scheme does not require truncating the singularity of the kernel function \(g\) when \(\alpha\in(-\frac {1}{2},0)\), which is beneficial to maintaining the accuracy of the scheme when \(\alpha\) is near \(-\frac{1}{2}\). Let us briefly analyze the effect on the approximation error of truncating the singularity of \(g\); cf. [13, pp. 75–76]. Consider for any \(\varepsilon>0\) the modified \(\mathcal{BSS}\) process

$$ X^{\varepsilon}_{t} := \int_{-\infty}^{t} g_{\varepsilon}(t-s) \sigma_{s} \,\mathrm {d}W_{s}, \quad t \in \mathbb {R}, $$

defined using the truncated kernel function

$$ g_{\varepsilon}(x) := \begin{cases} g(\varepsilon), & x \in(0,\varepsilon], \\ g(x), & x \in(\varepsilon,\infty). \end{cases} $$

Adapting the proof of Theorem 2.5 in a straightforward manner, it is possible to show that under (A1) and (A3),

$$ \mathbb {E}[|X_{t} - X^{\varepsilon}_{t}|^{2}] \sim\tilde{J}(\alpha) \mathbb {E}[\sigma_{0}^{2}] \varepsilon^{2\alpha+1} L_{g}(\varepsilon)^{2}, \quad\varepsilon\downarrow0, \qquad\tilde{J}(\alpha) := \int_{0}^{1} (y^{\alpha}- 1)^{2} \,\mathrm {d}y = \frac{1}{2\alpha+1} - \frac{2}{\alpha+1} + 1, $$

for any \(t \in \mathbb {R}\). While the rate of convergence, as \(\varepsilon \downarrow0\), of the MSE that arises from replacing \(g\) with \(g_{\varepsilon}\) is analogous to the rate of convergence of the hybrid scheme, it is important to note that the factor \(\tilde{J}(\alpha)\) blows up as \(\alpha\downarrow-\frac{1}{2}\). In fact, \(\tilde{J}(\alpha )\) is equal to the first term in the series that defines \(J(\alpha,0,\mathbf{b}_{\mathrm{FWD}})\) and

$$ \frac{\tilde{J}(\alpha)}{J(\alpha,0,\mathbf{b}_{\mathrm{FWD}})} \rightarrow1, \quad\alpha\downarrow-\frac{1}{2}, $$

which indicates that the effect of truncating the singularity, in terms of MSE, is similar to the effect of using the forward Riemann-sum scheme to discretize the process when \(\alpha\) is near \(-\frac{1}{2}\). In particular, the truncation threshold \(\varepsilon\) would then have to be very small in order to keep the truncation error in check.

2.5 Extension to truncated Brownian semistationary processes

It is useful to extend the hybrid scheme to a class of non-stationary processes that are closely related to \(\mathcal{BSS}\) processes. This extension is important in connection with an application to the so-called rough Bergomi model which we discuss in Sect. 3.3 below. More precisely, we consider processes of the form

$$ Y_{t} = \int_{0}^{t} g(t-s) \sigma_{s} \,\mathrm {d}W_{s}, \quad t \geq0, \tag{2.14} $$

where the kernel function \(g\), volatility process \(\sigma\) and driving Brownian motion \(W\) are as before. We call \(Y\) a truncated Brownian semistationary (\(\mathcal{TBSS}\)) process, as \(Y\) is obtained from the \(\mathcal{BSS}\) process \(X\) by truncating the stochastic integral in (2.1) at 0. Of the preceding assumptions, only (A1) and (A2) are needed to ensure that the stochastic integral in (2.14) exists—in fact, of (A2), only the requirement that \(g\) is differentiable on \((0,\infty)\) comes into play.

The \(\mathcal{TBSS}\) process \(Y\) does not have covariance-stationary increments, so we define its (time-dependent) variogram as

$$ V_{Y}(h,t) := \mathbb {E}[|Y_{t+h} - Y_{t}|^{2}], \quad h, t \geq0. $$

Extending Proposition 2.2, we can describe the behavior of \(h \mapsto V_{Y}(h,t)\) near zero as follows. The existence of a locally Hölder-continuous modification is then a straightforward consequence. We omit the proof of this result, as it would be a straightforward adaptation of the proof of Proposition 2.2.

Proposition 2.11

Suppose that (A1) and (A2) hold.

(i) The variogram of \(Y\) satisfies for any \(t \geq0\) that as \(h \rightarrow0\),

$$ V_{Y}(h,t) \sim \mathbb {E}[\sigma_{0}^{2}] \bigg(\frac{1}{2\alpha+ 1} + \mathbf{1}_{(0,\infty)}(t)\int_{0}^{\infty}\big( (y+1)^{\alpha}- y^{\alpha}\big)^{2} \mathrm {d}y\bigg) h^{2\alpha+1} L_{g}(h)^{2}, $$

which implies that \(h \mapsto V_{Y}(h,t)\) is regularly varying at zero with index \(2\alpha+1\).

(ii) The process \(Y\) has a modification with locally \(\phi\)-Hölder-continuous trajectories for any \(\phi\in(0,\alpha+ \frac{1}{2})\).

Note that while the increments of \(Y\) are not covariance-stationary, the asymptotic behavior of \(V_{Y}(h,t)\) is the same as that of \(V_{X}(h)\) as \(h \rightarrow0\) (cf. Proposition 2.2) for any \(t>0\). Thus, the increments of \(Y\) (apart from increments starting at time 0) are locally like the increments of \(X\).
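As a quick sanity check of Proposition 2.11(i), consider the power-law kernel \(g(x) = x^{\alpha}\) (so that \(L_{g} \equiv1\)) with \(\sigma\equiv1\). Assuming, as the text indicates, that \(Y_{t} = \int_{0}^{t} g(t-s)\,\mathrm {d}W_{s}\) and \(V_{Y}(h,t) = \mathbb {E}[|Y_{t+h}-Y_{t}|^{2}]\), the Itô isometry and the substitution \(t-s = hy\) give the variogram in closed form:

$$ V_{Y}(h,t) = \int_{t}^{t+h} (t+h-s)^{2\alpha}\,\mathrm {d}s + \int_{0}^{t} \big( (t+h-s)^{\alpha}- (t-s)^{\alpha}\big)^{2} \mathrm {d}s = h^{2\alpha+1} \bigg( \frac{1}{2\alpha+1} + \int_{0}^{t/h} \big( (y+1)^{\alpha}- y^{\alpha}\big)^{2} \mathrm {d}y \bigg). $$

For \(t=0\) the second integral vanishes, whereas for \(t>0\) its upper limit \(t/h\) tends to infinity as \(h \rightarrow0\). This reproduces the two cases distinguished by the indicator \(\mathbf{1}_{(0,\infty)}(t)\) in (i). The limiting integral is finite because the integrand behaves like \(y^{2\alpha}\) near zero and like \(\alpha^{2} y^{2\alpha-2}\) at infinity, with \(\alpha\in(-\frac{1}{2},\frac{1}{2})\).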

We define the hybrid scheme to discretize \(Y_{t}\), for any \(t \geq0\), as

where

In effect, we simply drop the summands in (2.7) and (2.8) that correspond to integrals and increments on the negative real line. We make remarks on the implementation of this scheme in Sect. 3.1 below.

The MSE of the hybrid scheme for the \(\mathcal{TBSS}\) process \(Y\) has the following asymptotic behavior as \(n \rightarrow\infty\), which is in fact identical to the asymptotic behavior of the MSE of the hybrid scheme for \(\mathcal{BSS}\) processes. We omit the proof of this result, which would be a simple modification of the proof of Theorem 2.5.

Theorem 2.12

Suppose that (A1) and (A2) hold, and that for some \(\delta>0\),

Then for all \(t>0\),

where \(J(\alpha,\kappa,\mathbf{b})\) is as in Theorem 2.5.

Remark 2.13

Under the assumptions of Theorem 2.12, the conclusion of Corollary 2.7 holds mutatis mutandis. In particular, the covariance structure of the discretized \(\mathcal{TBSS}\) process approaches that of \(Y\) when \(n \rightarrow\infty\).

3 Implementation and numerical experiments

3.1 Practical implementation

Simulating the \(\mathcal{BSS}\) process \(X\) on the equidistant grid \(\{0,\frac{1}{n},\frac{2}{n},\ldots,\frac{\lfloor nT \rfloor}{n} \}\) for some \(T>0\) using the hybrid scheme entails generating

Provided that we can simulate the random variables

we can compute (3.1) via the formula

In order to simulate (3.2) and (3.3), it is instrumental to note that the \((\kappa+1)\)-dimensional random vectors

are i.i.d. according to a multivariate Gaussian distribution with mean zero and covariance matrix \(\varSigma\) given by

for \(j = 2,\ldots,\kappa+1\), and

for \(j\), \(k = 2,\ldots,\kappa+1\) such that \(j < k\), where \({}_{2} F_{1}\) stands for the Gauss hypergeometric function; see e.g. [17, Sect. 2.1.1] for the definition. (When \(k < j\), set \(\varSigma _{j,k} = \varSigma_{k,j}\).) For the convenience of the reader, we provide a proof of (3.5) in Sect. 4.3.
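To make the covariance structure concrete, the following minimal sketch treats the simplest case \(\kappa= 1\). It assumes (the defining displays are not reproduced here) that the first component of \(\mathbf{W}^{n}_{i}\) is the Brownian increment over \([\frac{i}{n},\frac{i+1}{n}]\) and the second is the Wiener integral of \(s \mapsto(\frac{i+1}{n}-s)^{\alpha}\) over the same interval, so that the entries of \(\varSigma\) follow from the Itô isometry. The function names are hypothetical, and only the Python standard library is used.

```python
import math
import random


def sigma_kappa1(alpha, n):
    """2x2 covariance matrix of (Brownian increment, Wiener integral of the
    power function) over one grid cell of length 1/n, under the assumed
    definitions stated in the lead-in (kappa = 1)."""
    var_incr = 1.0 / n                                   # int_0^{1/n} 1 du
    cov = 1.0 / ((alpha + 1.0) * n ** (alpha + 1.0))     # int_0^{1/n} u^alpha du
    var_int = 1.0 / ((2.0 * alpha + 1.0) * n ** (2.0 * alpha + 1.0))  # int u^(2 alpha) du
    return [[var_incr, cov], [cov, var_int]]


def cholesky_2x2(s):
    """Lower-triangular Cholesky factor (l11, l21, l22) of a 2x2 matrix."""
    l11 = math.sqrt(s[0][0])
    l21 = s[1][0] / l11
    l22 = math.sqrt(s[1][1] - l21 * l21)
    return l11, l21, l22


def draw_vectors(alpha, n, count, rng):
    """Draw `count` i.i.d. N(0, Sigma) vectors for kappa = 1."""
    l11, l21, l22 = cholesky_2x2(sigma_kappa1(alpha, n))
    out = []
    for _ in range(count):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        out.append((l11 * z1, l21 * z1 + l22 * z2))
    return out
```

The matrix is positive definite whenever \(\alpha\neq0\), since its determinant is proportional to \(\frac{1}{2\alpha+1} - \frac{1}{(\alpha+1)^{2}} = \frac{\alpha^{2}}{(2\alpha+1)(\alpha+1)^{2}}\). For \(\kappa\geq2\) the off-diagonal entries involve \({}_{2} F_{1}\) and are not covered by this sketch.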

Thus, \((\mathbf{W}^{n}_{i})_{i=-N_{n}}^{\lfloor nT \rfloor-1}\) can be generated by taking independent draws from the multivariate Gaussian distribution \(N_{\kappa+1}(\mathbf{0},\varSigma)\). If the volatility process \(\sigma\) is independent of \(W\), then \((\sigma ^{n}_{i})_{i=-N_{n}}^{\lfloor nT \rfloor-1}\) can be generated separately, possibly using exact methods. (Exact methods are available e.g. for Gaussian processes, as mentioned in the introduction, and diffusions; see [14].) In the case where \(\sigma\) depends on \(W\), simulating \((\mathbf{W}^{n}_{i})_{i=-N_{n}}^{\lfloor nT \rfloor-1}\) and \((\sigma^{n}_{i})_{i=-N_{n}}^{\lfloor nT \rfloor-1}\) is less straightforward. That said, if \(\sigma\) is driven by a standard Brownian motion \(Z\), correlated with \(W\), say, one could rely on a factor decomposition

$$ Z_{t} := \rho W_{t} + \sqrt{1-\rho^{2}}\, W^{\perp}_{t}, \tag{3.6} $$

where \(\rho\in[-1,1]\) is the correlation parameter and \((W^{\perp}_{t})_{t \in[0,T]}\) is a standard Brownian motion independent of \(W\). Then one would first generate \((\mathbf{W}^{n}_{i})_{i=-N_{n}}^{\lfloor nT \rfloor-1}\), use (3.6) to generate \(( Z_{\frac {i+1}{n}}-Z_{\frac{i}{n}} )_{i=-N_{n}}^{\lfloor nT \rfloor-1}\), and employ some appropriate approximate method to produce \((\sigma ^{n}_{i})_{i=-N_{n}}^{\lfloor nT \rfloor-1}\) thereafter. This approach, however, introduces an additional approximation error that is not quantified in Theorem 2.5.
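The factor decomposition can be sketched in a few lines. The example below assumes that the increments of \(W\) on a grid with step \(\frac{1}{n}\) are already available (for instance as the first components of the vectors \(\mathbf{W}^{n}_{i}\)) and combines them with fresh independent Gaussian increments standing in for \(W^{\perp}\). The function name `correlated_increments` is hypothetical.

```python
import math
import random


def correlated_increments(dw, rho, n, rng):
    """Given increments `dw` of W over cells of length 1/n, return increments of
    Z = rho * W + sqrt(1 - rho^2) * W_perp, where W_perp is simulated here as an
    independent Brownian motion."""
    scale = math.sqrt(1.0 - rho * rho)
    step_sd = math.sqrt(1.0 / n)  # standard deviation of one Brownian increment
    return [rho * d + scale * rng.gauss(0.0, step_sd) for d in dw]
```

By construction each increment of \(Z\) has variance \(\frac{1}{n}\) and correlation \(\rho\) with the matching increment of \(W\). The sketch does not address how \(\sigma\) itself is then discretized.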

Remark 3.1

In the case of the \(\mathcal{TBSS}\) process \(Y\) introduced in Sect. 2.5, the observations \(Y^{n}_{\frac {i}{n}}\), \(i = 0,1,\ldots,\lfloor nT \rfloor\), given by the hybrid scheme (2.15) can be computed via

using the random vectors \(\mathbf{W}^{n}_{i}\), \(i=0,1,\ldots,\lfloor nT \rfloor-1\), and random variables \(\sigma^{n}_{i}\), \(i= 0,1,\ldots,\lfloor nT \rfloor-1\).

In the hybrid scheme, it typically suffices to take \(\kappa\) to be at most 3. Thus, in (3.4), the first sum \(\check{X}^{n}_{\frac{i}{n}}\) requires only a negligible computational effort. By contrast, the number of terms in the second sum \(\hat{X}^{n}_{\frac {i}{n}}\) increases as \(n \rightarrow\infty\). It is then useful to note that

where

and \(\varGamma\star\varXi\) stands for the discrete convolution of the sequences \(\varGamma\) and \(\varXi\). It is well known that the discrete convolution can be evaluated efficiently using a fast Fourier transform (FFT). The computational complexity of simultaneously evaluating \((\varGamma\star\varXi)_{i}\) for all \(i = 0,1,\ldots,\lfloor nT \rfloor\) using an FFT is \(\mathcal{O}(N_{n} \log N_{n})\), see [22, Sect. 3.3.4], which under (A4) translates to \(\mathcal{O}(n^{\gamma+1} \log n)\). The computational complexity of the entire hybrid scheme is then \(\mathcal {O}(n^{\gamma+1} \log n)\), provided that \((\sigma ^{n}_{i})_{i=-N_{n}}^{\lfloor nT \rfloor-1}\) is generated using a scheme with complexity not exceeding \(\mathcal{O}(n^{\gamma+1} \log n)\). As a comparison, we mention that the complexity of an exact simulation of a stationary Gaussian process using circulant embeddings is \(\mathcal {O}(n \log n)\) [2, Chapter XI, Sect. 3], whereas the complexity of the Cholesky factorization is \(\mathcal{O}(n^{3})\) [2, Chapter XI, Sect. 2].
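The FFT-based evaluation of a discrete convolution can be illustrated with a self-contained sketch. It uses a textbook recursive radix-2 FFT written with the standard library only; in practice one would call an optimized FFT routine instead. The sequences `gamma` and `xi` play the roles of \(\varGamma\) and \(\varXi\), indexed from zero for simplicity (an assumption, since the indexing of the original sequences is not reproduced here), and all function names are hypothetical.

```python
import cmath


def fft(a, invert=False):
    """Recursive radix-2 FFT (unnormalized); len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1.0 if invert else -1.0
    out = [0j] * n
    for k in range(n // 2):
        tw = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + tw
        out[k + n // 2] = even[k] - tw
    return out


def convolve_fft(gamma, xi):
    """Linear convolution (gamma * xi)_i = sum_k gamma[k] * xi[i - k] via FFT."""
    m = len(gamma) + len(xi) - 1
    size = 1
    while size < m:          # zero-pad to a power of two to avoid wrap-around
        size *= 2
    fa = fft([complex(x) for x in gamma] + [0j] * (size - len(gamma)))
    fb = fft([complex(x) for x in xi] + [0j] * (size - len(xi)))
    res = fft([x * y for x, y in zip(fa, fb)], invert=True)
    return [v.real / size for v in res[:m]]


def convolve_direct(gamma, xi):
    """Quadratic-time reference implementation of the same convolution."""
    out = [0.0] * (len(gamma) + len(xi) - 1)
    for i, g in enumerate(gamma):
        for j, x in enumerate(xi):
            out[i + j] += g * x
    return out
```

The direct double loop costs on the order of the product of the two lengths, whereas the FFT route costs on the order of the padded length times its logarithm. This is the source of the logarithmic factor in the complexity quoted above.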

Remark 3.2

With \(\mathcal{TBSS}\) processes, the computational complexity of the hybrid scheme via (3.7) is \(\mathcal {O}(n \log n)\).

Figure 2 presents examples of trajectories of the \(\mathcal{BSS}\) process \(X\) using the hybrid scheme with \(\kappa= 1, 2\) and \(\mathbf{b} = \mathbf{b}^{*}\). We choose the kernel function \(g\) to be the gamma kernel (Example 2.3) with \(\lambda= 1\). We also discretize \(X\) using the Riemann-sum scheme, \(\kappa= 0\) with \(\mathbf{b} \in\{ \mathbf{b}_{\mathrm{FWD}},\mathbf{b}^{*}\}\) (that is, the forward Riemann-sum scheme and its counterpart with optimally chosen evaluation points). We can make two observations. Firstly, we see how the roughness parameter \(\alpha\) controls the regularity properties of the trajectories of \(X\)—as we decrease \(\alpha\), the trajectories of \(X\) become increasingly rough. Secondly, and more importantly, we see how the simulated trajectories coming from the Riemann-sum and hybrid schemes can be rather different, even though we use the same innovations for the driving Brownian motion. In fact, the two variants of the hybrid scheme (\(\kappa= 1, 2\)) yield almost identical trajectories, while the Riemann-sum scheme (\(\kappa= 0\)) produces trajectories that are comparatively smoother, this difference becoming more apparent as \(\alpha\) approaches \(-\frac{1}{2}\). Indeed, in the extreme case with \(\alpha= -0.499\), both variants of the Riemann-sum scheme break down and yield anomalous trajectories with very little variation, while the hybrid scheme continues to produce accurate results. The fact that the hybrid scheme is able to reproduce the fine properties of rough \(\mathcal {BSS}\) processes, even for values of \(\alpha\) very close to \(-\frac{1}{2}\), is backed up by a further experiment reported in the following section.

Fig. 2 Discretized trajectories of a \(\mathcal{BSS}\) process, where \(g\) is the gamma kernel (Example 2.3), \(\lambda= 1\) and \(\sigma_{t} = 1\) for all \(t\in \mathbb {R}\). Trajectories consisting of \(n=50\) observations on \([0,1]\) were generated with the hybrid scheme (\(\kappa = 1, 2\) and \(\mathbf{b} = \mathbf{b}^{*}\)) and Riemann-sum scheme (\(\kappa =0\) and \(\mathbf{b} = \mathbf{b}^{*}\) (solid lines), \(\mathbf{b}=\mathbf{b}_{\mathrm{FWD}}\) (dashed lines)), using the same innovations for the driving Brownian motion in all cases and \(N_{n} =\lfloor50^{1.5}\rfloor = 353\). The simulated processes were normalized to have unit (stationary) variance.
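One way to see why the Riemann-sum scheme struggles when \(\alpha\) is near \(-\frac{1}{2}\) is to compare how much variance each scheme captures in a simple special case. The sketch below takes the truncated process with power kernel \(g(x) = x^{\alpha}\) and \(\sigma\equiv1\), for which the exact variance at time 1 is \(\frac{1}{2\alpha+1}\). It rests on several assumptions not restated in this section: that the hybrid scheme integrates the power function exactly on the first \(\kappa\) cells and uses step values \(g(\frac{b_{k}}{n})\) elsewhere, that \(\mathbf{b}_{\mathrm{FWD}} = (1,2,\ldots)\), and that the optimal points are \(b^{*}_{k} = \big(\frac{k^{\alpha+1}-(k-1)^{\alpha+1}}{\alpha+1}\big)^{1/\alpha}\). All function names are hypothetical.

```python
def optimal_points(alpha, n_cells):
    """Assumed optimal evaluation points b*_k, k = 1..n_cells (requires alpha != 0)."""
    a1 = alpha + 1.0
    return [((k ** a1 - (k - 1) ** a1) / a1) ** (1.0 / alpha)
            for k in range(1, n_cells + 1)]


def forward_points(n_cells):
    """Assumed forward Riemann-sum evaluation points b_k = k."""
    return [float(k) for k in range(1, n_cells + 1)]


def variance_ratio(alpha, n, kappa, b):
    """Variance of the discretized value at time 1 divided by the exact variance,
    for the kernel g(x) = x^alpha with unit volatility. Everything is expressed
    in units of n^-(2 alpha + 1), which cancels in the ratio."""
    e = 2.0 * alpha + 1.0
    total = 0.0
    for k in range(1, n + 1):
        if k <= kappa:
            # cell treated by an exact Wiener integral of the power function
            total += (k ** e - (k - 1) ** e) / e
        else:
            # cell treated by a step-function (Riemann-sum) approximation
            total += b[k - 1] ** (2.0 * alpha)
    exact = n ** e / e
    return total / exact


if __name__ == "__main__":
    alpha, n = -0.43, 500
    print("hybrid, kappa=1:", variance_ratio(alpha, n, 1, optimal_points(alpha, n)))
    print("Riemann, b*:    ", variance_ratio(alpha, n, 0, optimal_points(alpha, n)))
    print("Riemann, FWD:   ", variance_ratio(alpha, n, 0, forward_points(n)))
```

Under these assumptions, the step-function approximation on the first cell misses a large share of the kernel's square-integrable mass near zero, which is consistent with the overly smooth Riemann-sum trajectories described above.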

3.2 Estimation of the roughness parameter

Suppose that we have observations \(X_{\frac{i}{m}}\), \(i = 0,1,\ldots ,m\), of the \(\mathcal {BSS}\) process \(X\) given by (2.1), for some \(m \in \mathbb {N}\). Barndorff-Nielsen et al. [6] and Corcuera et al. [16] discuss how the roughness index \(\alpha\) can be estimated consistently as \(m \rightarrow\infty\). The method is based on the change-of-frequency (COF) statistics

which compare the realized quadratic variations of \(X\), using second-order increments, with two different lag lengths. Corcuera et al. [16] have shown that under some assumptions on the process \(X\), which are similar to (A1)–(A3) albeit slightly more restrictive, it holds that

An in-depth study of the finite sample performance of this COF estimator can be found in [12].
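The change-of-frequency idea can be sketched as follows. Since second-order increments at lag \(h\) have variance of order \(h^{2\alpha+1}\), the ratio of realized quadratic variations at lags 2 and 1 is close to \(2^{2\alpha+1}\), which can be solved for \(\alpha\). The exact summation ranges of the original statistic are not reproduced here, so the index conventions below are an assumption, and the function names are hypothetical.

```python
import math
import random


def cof_statistic(x):
    """Ratio of realized quadratic variations of second-order increments of the
    observations x[0..m], taken at lag 2 (numerator) and lag 1 (denominator)."""
    m = len(x) - 1
    num = sum((x[k] - 2.0 * x[k - 2] + x[k - 4]) ** 2 for k in range(4, m + 1))
    den = sum((x[k] - 2.0 * x[k - 1] + x[k - 2]) ** 2 for k in range(2, m + 1))
    return num / den


def roughness_estimate(x):
    """Estimate of alpha obtained by solving COF = 2^(2 alpha + 1)."""
    return math.log(cof_statistic(x)) / (2.0 * math.log(2.0)) - 0.5


def brownian_path(m, rng):
    """Standard Brownian motion observed at i/m, i = 0..m (the case alpha = 0)."""
    path = [0.0]
    for _ in range(m):
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(1.0 / m)))
    return path
```

Applied to a simulated Brownian path, for which \(\alpha= 0\), the estimate should be close to zero for large \(m\).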

To examine how well the hybrid scheme reproduces the fine properties of the \(\mathcal {BSS}\) process in terms of regularity/roughness, we apply the COF estimator to discretized trajectories of \(X\), where the kernel function \(g\) is again the gamma kernel (Example 2.3) with \(\lambda= 1\), generated using the hybrid scheme with \(\kappa= 1,2,3\) and \(\mathbf{b} = \mathbf{b}^{*}\). We consider the case where the volatility process satisfies \(\sigma_{t} = 1\), that is, the process \(X\) is Gaussian. This allows us to quantify and control the intrinsic bias and noisiness, measured in terms of standard deviation, of the estimation method itself, by initially applying the estimator to trajectories that have been simulated using an exact method based on the Cholesky factorization. We then study the behavior of the estimator when applied to a discretized trajectory, while decreasing the step size of the discretization scheme. More precisely, we simulate \(\hat{\alpha}(X^{n},m)\), where \(m=500\) and \(X^{n}\) is the hybrid scheme for \(X\) with \(n = ms\) and \(s \in\{1,2,5\}\). This means that we compute \(\hat{\alpha}(X^{n},m)\) using \(m\) observations obtained by subsampling every \(s\)th observation in the sequence \(X^{n}_{\frac{i}{n}}\), \(i = 0,1,\ldots,n\). As a comparison, we repeat these simulations substituting the hybrid scheme with the Riemann-sum scheme, using \(\kappa= 0\) with \(\mathbf {b}\in\{\mathbf{b}_{\mathrm{FWD}},\mathbf{b}^{*}\}\).

The results are presented in Fig. 3. We observe that the intrinsic bias of the estimator with \(m = 500\) observations is negligible, and hence the bias of the estimates computed from discretized trajectories is then attributable to approximation error arising from the respective discretization scheme, where positive (resp. negative) bias indicates that the simulated trajectories are smoother (resp. rougher) than those of the process \(X\). Concentrating first on the baseline case \(s = 1\), we note that the hybrid scheme produces essentially unbiased results when \(\alpha\in(-\frac {1}{2},0)\), while there is moderate bias when \(\alpha\in(0,\frac {1}{2})\), which disappears when passing from \(\kappa=1\) to \(\kappa=3\), even for values of \(\alpha\) very close to \(\frac{1}{2}\). (The largest value of \(\alpha\) considered in our simulations is \(\alpha= 0.49\); one would expect the performance to weaken as \(\alpha\) approaches \(\frac {1}{2}\), cf. Fig. 1, but this range of parameter values seems to be of limited practical interest.) The standard deviations exhibit a similar pattern. The corresponding results for the Riemann-sum scheme are clearly inferior, exhibiting significant bias, while using optimal evaluation points (\(\mathbf{b} = \mathbf{b}^{*}\)) improves the situation slightly. In particular, the bias in the case \(\alpha\in(-\frac{1}{2},0)\) is positive, indicating too smooth discretized trajectories, which is connected with the failure of the Riemann-sum scheme with \(\alpha\) near \(-\frac{1}{2}\), illustrated in Fig. 2. With \(s=2\) and \(s=5\), the results improve with both schemes. Notably, in the case \(s=5\), the performance of the hybrid scheme even with \(\kappa= 1\) is on a par with the exact method. However, the improvements with the Riemann-sum scheme are more meager, as considerable bias persists when \(\alpha\) is near \(-\frac{1}{2}\).

Fig. 3 Bias and standard deviation of the COF estimator (3.8) of the roughness index \(\alpha\), when applied to discretized trajectories of a \(\mathcal {BSS}\) process with the gamma kernel (Example 2.3), \(\lambda= 1\) and \(\sigma_{t} = 1\) for all \(t\in \mathbb {R}\). Trajectories were generated using an exact method based on the Cholesky factorization, the hybrid scheme (\(\kappa= 1, 2, 3\) and \(\mathbf{b} = \mathbf{b}^{*}\)) and the Riemann-sum scheme (\(\kappa=0\) and \(\mathbf{b} = \mathbf{b}^{*}\) (solid lines), \(\mathbf{b}=\mathbf {b}_{\mathrm{FWD}}\) (dashed lines)). In the experiment, \(n = ms\) observations were generated on \([0,1]\), where \(m=500\) and \(s \in\{1, 2, 5\}\), using \(N_{n}= \lfloor n^{1.5} \rfloor\). Every \(s\)th observation was then subsampled, resulting in \(m=500\) observations that were used to compute the estimate \(\hat{\alpha }(X^{n},m)\) of the roughness index \(\alpha\). The number of Monte Carlo replications is 10000.

3.3 Option pricing under rough volatility

As another experiment, we study Monte Carlo option pricing in the rough Bergomi (rBergomi) model of Bayer et al. [10]. In the rBergomi model, the logarithmic spot variance of the price of the underlying is modeled by a rough Gaussian process, which is a special case of (2.14). By virtue of the rough volatility process, the model fits well to observed implied volatility smiles [10, Sect. 5].

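More precisely, the price of the underlying in the rBergomi model with time horizon \(T>0\) is defined, under an equivalent martingale measure identified with ℙ, as

$$ S_{t} := S_{0} \exp \bigg( \int_{0}^{t} \sqrt{v_{s}} \,\mathrm {d}Z_{s} - \frac{1}{2} \int_{0}^{t} v_{s} \,\mathrm {d}s \bigg), \quad t \in[0,T], $$

using the spot variance process

$$ v_{t} := \xi^{0}_{t} \exp \bigg( \eta\sqrt{2\alpha+1} \int_{0}^{t} (t-s)^{\alpha}\,\mathrm {d}W_{s} - \frac{\eta^{2}}{2} t^{2\alpha+1} \bigg), \quad t \in[0,T]. $$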

Above, \(S_{0}>0\), \(\eta>0\) and \(\alpha\in(-\frac{1}{2},0)\) are deterministic parameters, and \(Z\) is a standard Brownian motion given by

$$ Z_{t} := \rho W_{t} + \sqrt{1-\rho^{2}}\, W^{\perp}_{t}, \quad t \in[0,T], \tag{3.9} $$

where \(\rho\in(-1,1)\) is the correlation parameter and \((W^{\perp}_{t})_{t \in[0,T]}\) is a standard Brownian motion independent of \(W\). The process \((\xi^{0}_{t})_{t \in[0,T]}\) is the so-called forward variance curve [10, Sect. 3], which we assume here to be flat, \(\xi^{0}_{t} = \xi>0\) for all \(t \in[0,T]\).

We aim to compute, using Monte Carlo simulation, the price of a European call option struck at \(K>0\) with maturity \(T\), which is given by

$$ \mathrm{C}(S_{0},K,T) := \mathbb {E}\big[(S_{T}-K)^{+}\big]. \tag{3.10} $$

The approach suggested by Bayer et al. [10] involves sampling the Gaussian processes \(Z\) and \(Y\) on a discrete time-grid using exact simulation and then approximating \(S\) and \(v\) using Euler discretization. We modify this approach by using the hybrid scheme to simulate \(Y\), instead of the computationally more costly exact simulation. As the hybrid scheme involves simulating increments of the Brownian motion \(W\) driving \(Y\), we can conveniently simulate the increments of \(Z\), needed for the Euler discretization of \(S\), by using the representation (3.9).

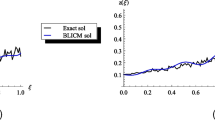

We map the option price \(\mathrm{C}(S_{0},K,T)\) to the corresponding Black–Scholes implied volatility \(\mathrm{IV}(S_{0},K,T)\). Reparametrizing the implied volatility using the log-strike \(k := \log (K/S_{0})\) allows us to drop the dependence on the initial price, so we abuse notation slightly and write \(\mathrm{IV}(k,T)\) for the corresponding implied volatility. Figure 4 displays implied volatility smiles obtained from the rBergomi model using the hybrid and Riemann-sum schemes to simulate \(Y\), as discussed above, and compares these to the smiles obtained using an exact simulation of \(Y\) via Cholesky factorization. The parameter values are given in Table 1. They have been adopted from Bayer et al. [10], who demonstrate that they result in realistic volatility smiles. We consider two different maturities: “short”, \(T = 0.041\), and “long”, \(T = 1\).
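The mapping from a call price to its Black–Scholes implied volatility can be sketched with a simple bisection. The example assumes zero interest rates (in line with pricing under a martingale measure for the undiscounted price) and a generic search bracket. It is not the root-finding routine used for the experiments, and the function names are hypothetical.

```python
import math


def norm_cdf(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call(s0, k, t, vol):
    """Black-Scholes call price with zero interest rate (assumption)."""
    if vol <= 0.0:
        return max(s0 - k, 0.0)
    sd = vol * math.sqrt(t)
    d1 = (math.log(s0 / k) + 0.5 * sd * sd) / sd
    return s0 * norm_cdf(d1) - k * norm_cdf(d1 - sd)


def implied_vol(price, s0, k, t, lo=0.0, hi=5.0, tol=1e-10):
    """Implied volatility by bisection on [lo, hi]. Returns 0.0 when the price
    does not exceed the zero-volatility (intrinsic) value."""
    if price <= bs_call(s0, k, t, lo):
        return 0.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if bs_call(s0, k, t, mid) < price:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)
```

With the log-strike parametrization one would call `implied_vol(price, 1.0, math.exp(k), T)`. A Monte Carlo price that is numerically zero for a far out-of-the-money strike makes this routine return zero.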

Fig. 4 Implied volatility smiles corresponding to the option price (3.10), computed using Monte Carlo simulation (500 time steps, 1000000 replications), with two maturities: \(T = 0.041\) (left) and \(T = 1\) (right). The spot variance process \(v\) was simulated using an exact method, the hybrid scheme (\(\kappa=1,2\) and \(\mathbf{b} = \mathbf{b}^{*}\)) and the Riemann-sum scheme (\(\kappa=0\) and \(\mathbf{b} = \mathbf{b}^{*}\) (solid lines), \(\mathbf{b}=\mathbf{b}_{\mathrm{FWD}}\) (dashed lines)). The parameter values used in the rBergomi model are given in Table 1.

We observe that the Riemann-sum scheme (\(\kappa=0\), \(\mathbf{b} \in\{\mathbf{b}_{\mathrm{FWD}},\mathbf{b}^{*}\}\)) is able to capture the shape of the implied volatility smile, but not its level. Moreover, the method breaks down at more extreme log-strikes, where the prices are so low that the root-finding algorithm used to compute the implied volatility returns zero. In contrast, the hybrid scheme with \(\kappa= 1,2\) and \(\mathbf{b}=\mathbf{b}^{*}\) yields implied volatility smiles that are indistinguishable from the benchmark smiles obtained using exact simulation. Further, there is no discernible difference between the smiles obtained using \(\kappa=1\) and \(\kappa=2\). As in the previous section, we observe that the hybrid scheme is indeed capable of producing very accurate trajectories of \(\mathcal{TBSS}\) processes, in particular in the case \(\alpha\in(-\frac {1}{2},0)\), even when \(\kappa= 1\).

4 Proofs

Throughout the proofs below, we rely on two useful inequalities. The first one is the Potter bound for slow variation at 0, which follows immediately from the corresponding result for slow variation at \(\infty \) [15, Theorem 1.5.6]. Namely, if \(L : (0,1] \rightarrow(0,\infty )\) is slowly varying at 0 and bounded away from 0 and \(\infty\) on every interval \((u,1]\), \(u \in(0,1)\), then for any \(\delta>0\), there exists a constant \(C_{\delta}>0\) such that

$$ \frac{L(x)}{L(y)} \leq C_{\delta}\max \bigg\{ \Big(\frac{x}{y}\Big)^{\delta}, \Big(\frac{x}{y}\Big)^{-\delta} \bigg\} , \quad x, y \in(0,1]. \tag{4.1} $$

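The second one is the elementary inequality

$$ |x^{\alpha}- y^{\alpha}| \leq|\alpha| (\min\{x,y\})^{\alpha-1} |x-y|, \quad x, y \in(0,\infty), \ \alpha\in(-\infty,1), \tag{4.2} $$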

which can be easily shown by using the mean value theorem. Additionally, we use the following variant of Karamata’s theorem for regular variation at 0. Its proof is similar to the one of the usual Karamata theorem for regular variation at \(\infty\) [15, Proposition 1.5.10].

Lemma 4.1

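If \(\alpha\in(-1,\infty)\) and \(L : (0,1] \rightarrow[0,\infty)\) is slowly varying at 0, then

$$ \int_{0}^{y} x^{\alpha}L(x) \,\mathrm {d}x \sim\frac{1}{\alpha+1} y^{\alpha+1} L(y), \quad y \rightarrow0. $$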

4.1 Proof of Proposition 2.2

(i) By the covariance-stationarity of the volatility process \(\sigma\), we may express the variogram \(V(h)\) for any \(h \geq0\) as

Invoking (A1) and Lemma 4.1, we find that

We may clearly assume that \(h<1\), which allows us to work with the decomposition

where

According to (A2), there exists \(M>1\) such that \(x \mapsto |g'(x)|\) is nonincreasing on \([M,\infty)\). Thus, using the mean value theorem, we deduce that

where \(\xi= \xi(x,h) \in[x,x+h]\). It follows then that

which in turn implies that

Making the substitution \(y=\frac{x}{h}\), we next obtain

where

By the definition of slow variation at 0,

We show below that the functions \(G_{h}\), \(h \in(0,1)\), have an integrable majorant. Thus, by the dominated convergence theorem,

Since \(\alpha< \frac{1}{2}\), we have \(\lim_{h \rightarrow0} \frac {A'_{h}}{h^{2\alpha+ 1 } L_{g}(h)^{2}}=0\) by (2.2) and (4.5), so we get from (4.4) and (4.6) that

which together with (4.3) implies the assertion.

It remains to justify the use of the dominated convergence theorem to deduce (4.6). For any \(y \in(0,1]\), we have by the Potter bound (4.1) and the elementary inequality \((u+v)^{2} \leq 2u^{2}+2v^{2}\) that

where we choose \(\delta_{1} \in(0,\alpha+\frac{1}{2})\) to ensure that \(2(\alpha-\delta_{1}) > -1\). Consider then \(y \in[1,\infty)\). By adding and subtracting the term \((y+1)^{\alpha}\frac{L_{g}(hy)}{L_{g}(h)}\) and using again the inequality \((u+v)^{2} \leq2u^{2}+2v^{2}\), we get

We recall that \(\underline{L}_{g} := \inf_{x \in(0,1]} L_{g}(x) >0\) by (A1), so

Using the mean value theorem and the bound for the derivative of \(L_{g}\) from (A1), we observe that

where \(\xi= \xi(y,h) \in[hy,h(y+1)]\). Noting that the constraint \(y < \frac{1}{h}-1\) is equivalent to \(h < \frac{1}{y+1}\), we obtain further

as \(y\geq1\), which we then use to deduce that

Additionally, we observe that by (4.1) and (4.2),

where we choose \(\delta_{2} \in(0,\frac{1}{2}-\alpha)\), ensuring that \(2(\alpha-1+\delta_{2}) < -1\). We may finally define the function

which satisfies \(0 \leq G_{h}(y) \leq G(y)\) for any \(y \in(0,\infty)\) and \(h \in(0,1)\), and is integrable on \((0,\infty)\) with the aforementioned choices of \(\delta_{1}\) and \(\delta_{2}\).

(ii) To show existence of the modification, we need a localization procedure that involves the ancillary process

We first check that \(F\) is locally bounded under (A1) and (A2), which is essential for localization. To this end, let \(T \in(0,\infty)\) and write, for any \(t \in[-T,T]\),

where

and \(M>1\) is such that \(x \mapsto|g'(x)|\) is nonincreasing on \([M,\infty)\), as in the proof of (i). Since \(g'\) is continuous on \((0,\infty)\) and \(\sigma\) is locally bounded, we have for any \(t \in[-T,T]\) that

Further, when \(t \in[-T,T]\),

where \(g'(t-u)^{2} \leq g'(-T-u)^{2}\) since the arguments satisfy

Thus,

for any \(t \in[-T,T]\) almost surely, as we have

where we change the order of expectation and integration relying on Tonelli’s theorem and where the final equality follows from the covariance-stationarity of \(\sigma\). So we can conclude that \(F\) is indeed locally bounded.

Let now \(m \in \mathbb {N}\) and, for localization, define the sequence of stopping times

that satisfies \(\tau_{m,n} \uparrow\infty\) almost surely as \(n \rightarrow\infty\) since both \(F\) and \(\sigma\) are locally bounded. (We follow the usual convention that \(\inf\varnothing= \infty\).) Consider now the modified \(\mathcal {BSS}\) process

that coincides with \(X\) on the stochastic interval \([\![-m,\tau _{m,n} ]\!]\). The process \(X^{m,n}\) satisfies the assumptions of [5, Lemma 1]; so for any \(p>0\), there exists a constant \(\hat{C}_{p}>0\) such that

Using the upper bound in (2.2), we can deduce from (i) that for any \(\delta> 0\), there are constants \(\tilde {C}_{\delta}>0\) and \(\underline{h}_{\delta}> 0\) such that

Applying (4.8) to (4.7), we get for \(s,t \in[-m,\infty)\), \(|s-t| < \underline{h}_{\delta}\) that

We may note that \(p(\alpha+ \frac{1}{2}-\frac{\delta}{2}-\frac {1}{p})>0\) for small enough \(\delta\) and large enough \(p\), and in particular,

as \(\delta\downarrow0\) and \(p \uparrow\infty\). Thus it follows from the Kolmogorov–Chentsov theorem [21, Theorem 3.22] that \(X^{m,n}\) has a modification with locally \(\phi\)-Hölder-continuous trajectories for any \(\phi\in(0,\alpha+ \frac{1}{2})\). Moreover, a modification of \(X\) on ℝ, having locally \(\phi\)-Hölder-continuous trajectories for any \(\phi\in(0,\alpha+ \frac{1}{2})\), can then be constructed from these modifications of \(X^{m,n}\), \(m \in \mathbb {N}\), \(n \in \mathbb {N}\), by letting first \(n \rightarrow\infty\) and then \(m \rightarrow \infty\). □

4.2 Proof of Theorem 2.5

As a preparation, we first establish an auxiliary result that deals with the asymptotic behavior of certain integrals of regularly varying functions.

Lemma 4.2

Suppose that \(L : (0,1] \rightarrow[0,\infty)\) is bounded away from 0 and \(\infty\) on any set of the form \((u,1]\), \(u\in(0,1)\), and slowly varying at 0. Moreover, let \(\alpha\in(-\frac{1}{2},\infty) \) and \(k \geq1\). If \(b \in[k-1,k] \setminus\{0\}\), then

(i) \({\displaystyle\lim_{n \rightarrow\infty}\int _{k-1}^{k} \bigg( x^{\alpha}\frac{L(x/n)}{L(1/n)} - b^{\alpha}\frac {L(b/n)}{L(1/n)} \bigg)^{2} \mathrm {d}x = \int_{k-1}^{k} (x^{\alpha} - b^{\alpha })^{2} \mathrm {d}x<\infty}\).

(ii) \({\displaystyle\lim_{n \rightarrow\infty}\int _{k-1}^{k} x^{2\alpha}\bigg( \frac{L(x/n)}{L(1/n)} - \frac {L(b/n)}{L(1/n)} \bigg)^{2} \mathrm {d}x = 0}\).

Proof

We only prove (i) as (ii) can be shown similarly. By the definition of slow variation at 0, the function

satisfies \(\lim_{n \rightarrow\infty} f_{n}(x) = (x^{\alpha} - b^{\alpha })^{2}\) for any \(x \in[k-1,k] \setminus\{0 \}\). In view of the dominated convergence theorem, it suffices to find an integrable majorant for the functions \(f_{n}\), \(n \in \mathbb {N}\). The construction of the majorant is quite similar to the one seen in the proof of Proposition 2.2, but we provide the details for the convenience of the reader.

Using the Potter bound (4.1) and the inequality \((u+v)^{2} \leq2u^{2}+2v^{2}\), we find that for any \(x \in[k-1,k] \setminus \{0 \}\),

where we choose \(\delta\in(0,\alpha+\frac{1}{2})\). When \(k \geq2\), we have \(x\geq1\) and \(b \geq1\), so

is a bounded function of \(x\) on \([k-1,k]\). When \(k=1\), we have \(x\leq 1\) and \(b \leq1\), implying that

where \(2(\alpha-\delta) > - 1\) with our choice of \(\delta\), so \(f\) is an integrable function on \((0,1]\). □

Proof of Theorem 2.5

Let \(t \in \mathbb {R}\) be fixed. It will be convenient to write \(X^{n}_{t}\) as

Moreover, we introduce an ancillary approximation of \(X_{t}\), namely

By Minkowski’s inequality, we have

which together, after taking squares, imply that

where

Using the Itô isometry and recalling that \(\sigma\) is covariance-stationary, we obtain

and

where

(We may assume without loss of generality that \(N_{n} > n >\kappa\), as this will be the case for large enough \(n\).) In what follows, we study the asymptotic behavior of the terms \(D_{n}\), \(D'_{n}\), \(D''_{n}\) and \(D'''_{n}\) separately, showing that \(D_{n}\), \(D''_{n}\) and \(D'''_{n}\) are negligible in comparison with \(D'_{n}\), and that \(D'_{n}\) gives rise to the convergence rate given in Theorem 2.5.

Let us analyze the terms \(D'''_{n}\), \(D''_{n}\) and \(D_{n}\) first. By (A3) and (A4), we have

Regarding the term \(D_{n}''\), recall that by (A2), there is \(M>1\) such that \(x \mapsto|g'(x)|\) is nonincreasing on \([M,\infty)\). So we have by the mean value theorem that

where \(\xi= \xi(\frac{b_{k}}{n},s) \in[\frac{k-1}{n},\frac{k}{n}]\). Thus,

which implies

To analyze the behavior of \(D_{n}\), we substitute \(y=ns\) and invoke (A1), yielding

where by Lemma 4.2(ii), we have

for any \(k = 1,\ldots,\kappa\). Thus we find that

The asymptotic behavior of the term \(D'_{n}\) is more delicate to analyze. By (A1) and substituting \(y = ns\), we can write

where

Let us study the asymptotic behavior of the sum \(\sum_{k=\kappa+1}^{n} A_{n,k}\) as \(n \rightarrow\infty\). By Lemma 4.2, we have for any \(k \in \mathbb {N}\) that

To be able to then deduce, using the dominated convergence theorem, that

we seek a sequence \((A_{k})_{k=\kappa+1}^{\infty} \subseteq[0,\infty)\) such that

and such that \(\sum_{k=\kappa+1}^{\infty}A_{k} < \infty\). Let us assume, without loss of generality, that \(\kappa= 0\). Clearly, we may set \(A_{1} := \sup_{n \in \mathbb {N}} A_{n,1} < \infty\). Consider now \(k\geq2\). The construction of \(A_{k}\) in this case parallels some arguments seen in the proof of Proposition 2.2, but we provide the details for the sake of clarity. By adding and subtracting \(b^{\alpha}_{k} \frac {L_{g}(y/n)}{L_{g}(1/n)}\) and using the inequality \((u+v)^{2} \leq2u^{2} + 2v^{2}\), we get

Recall that \(\underline{L}_{g} := \inf_{x \in(0,1]} L_{g}(x)>0\); so by the estimates

valid when \(\alpha< \frac{1}{2}\), we obtain

Note that thanks to (A1) and the mean value theorem,

where \(\xi= \xi(y/n,b_{k}/n) \in[\frac{k-1}{n},\frac{k}{n}]\) and where the final inequality follows since \(k- 1 < n\). Thus,

Moreover, the Potter bound (4.1) and inequality (4.2) imply

where we choose \(\delta\in(0,\frac{1}{2}-\alpha)\). Applying the bounds (4.15) and (4.16) to (4.14) shows that

where \(2(\alpha-1)<-1\) and \(2(\alpha-1+\delta) < -1\) with our choice of \(\delta\), so that we get \(\sum_{k=1}^{\infty}A_{k} < \infty\). Thus we have shown (4.13), which in turn implies that

We now use the obtained asymptotic relations, (4.10)–(4.12) and (4.17), to complete the proof. To this end, it is convenient to introduce the relation \(x_{n} \gg y_{n}\) for any sequences \((x_{n})_{n=1}^{\infty}\) and \((y_{n})_{n=1}^{\infty}\) of positive real numbers satisfying \(\lim_{n \rightarrow\infty}\frac {x_{n}}{y_{n}} = \infty\). By (4.12), we have \(D'_{n} \gg D_{n}\). Since \(2\alpha +1 < 2\), we find that also \(D'_{n} \gg D''_{n}\), in view of (4.11). The assumption \(\gamma> -\frac{2\alpha+1}{2\beta+ 1}\) is equivalent to \(-(2\alpha+1) > \gamma(2\beta+1)\); so by the estimate (2.2) for slowly varying functions, we have \(D'_{n} \gg D'''_{n}\). It then follows that \(E_{n} \sim \mathbb {E}[\sigma_{0}^{2}] D'_{n}\) as \(n \rightarrow \infty\). Further, the condition (2.10) implies that \(E_{n} \gg E'_{n}\). In view of (4.9), we finally find that \(\mathbb{E}[| X^{n}_{t} - X_{t} |^{2}] \sim \mathbb {E}[\sigma_{0}^{2}] D'_{n}\) as \(n \rightarrow\infty\), which completes the proof. □

4.3 Proof of equation (3.5)

In order to prove (3.5), we rely on the following integral identity for the Gauss hypergeometric function \({}_{2} F_{1}\).

Lemma 4.3

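For all \(\alpha \in(-1,\infty)\) and \(0 \leq a < b\),

$$ \int_{0}^{a} (a-x)^{\alpha}(b-x)^{\alpha}\,\mathrm {d}x = \frac{1}{\alpha+1} a^{\alpha+1} b^{\alpha}\, {}_{2} F_{1} \bigg( {-\alpha},1;\alpha+2;\frac{a}{b} \bigg). $$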

Proof

In the case \(a=0\), the asserted identity becomes trivial, so we may assume that \(a>0\). Substituting \(y = \frac{x}{a}\), we get

Using Euler’s formula [17, Sect. 2.1.3, Eq. (10)], we find that

where \(\varGamma\) denotes the gamma function; note that this is valid since \(\alpha+ 2 > 1 > 0\) and \(0 < \frac{a}{b} < 1\) under our assumptions. By the connection between the gamma function \(\varGamma\) and the beta function \(\mathrm{B}\) (see e.g. [17, Sect. 1.5, Eq. (5)]), we obtain further

concluding the proof. □
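The identity behind Lemma 4.3 can be checked numerically. The sketch below assumes that the lemma reads \(\int_{0}^{a} (a-x)^{\alpha}(b-x)^{\alpha}\,\mathrm {d}x = \frac{1}{\alpha+1} a^{\alpha+1} b^{\alpha}\, {}_{2} F_{1}(-\alpha,1;\alpha+2;\frac{a}{b})\), which is the form suggested by the substitution and by Euler's formula used in the proof. The hypergeometric function is evaluated by its power series (valid for \(|z|<1\)), and the integral by a midpoint rule after a substitution that removes the endpoint singularity. All function names are hypothetical.

```python
def hyp2f1_series(a, b, c, z, terms=500):
    """Gauss hypergeometric function via its power series; requires |z| < 1."""
    total, term = 1.0, 1.0
    for n in range(terms):
        term *= (a + n) * (b + n) / ((c + n) * (n + 1)) * z
        total += term
    return total


def lhs_integral(alpha, a, b, steps=20000):
    """int_0^a (a-x)^alpha (b-x)^alpha dx, computed with the substitution
    u = (a-x)^(alpha+1) / (alpha+1), which turns the integrand into
    (b - a + ((alpha+1) u)^(1/(alpha+1)))^alpha on [0, a^(alpha+1)/(alpha+1)]."""
    a1 = alpha + 1.0
    upper = a ** a1 / a1
    h = upper / steps
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * h  # midpoint of the i-th subinterval
        total += (b - a + (a1 * u) ** (1.0 / a1)) ** alpha
    return total * h


def rhs_closed_form(alpha, a, b):
    """Right-hand side of the assumed identity."""
    f = hyp2f1_series(-alpha, 1.0, alpha + 2.0, a / b)
    return a ** (alpha + 1.0) * b ** alpha * f / (alpha + 1.0)


if __name__ == "__main__":
    for alpha in (-0.43, 0.3):
        print(alpha, lhs_integral(alpha, 1.0, 2.0), rhs_closed_form(alpha, 1.0, 2.0))
```

The choice \(a = 1\), \(b = 2\) corresponds to \(j = 2\), \(k = 3\) in the covariance entry \(\varSigma_{j,k}\), up to the scaling by a power of \(n\).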

Proof of equation (3.5)

Let \(\alpha\in(-\frac{1}{2},\frac{1}{2}) \setminus\{0 \}\) and let \(j\), \(k = 2,\ldots,\kappa+1\) be such that \(j < k\). By the Itô isometry, we have

where we have substituted \(x = ns\). The second integral above can now be expressed as

where the second equality follows from Lemma 4.3, which is applicable to both integrals on the second line as \(0 < j-1< k-1\) and \(0 \leq j-2 < k-2\). □

References

1. Alòs, E., León, J.A., Vives, J.: On the short-time behavior of the implied volatility for jump-diffusion models with stochastic volatility. Finance Stoch. 11, 571–589 (2007)

2. Asmussen, S., Glynn, P.W.: Stochastic Simulation: Algorithms and Analysis. Springer, New York (2007)

3. Barndorff-Nielsen, O.E.: Notes on the gamma kernel (2012). Thiele Centre Research Report, No. 03, May 2012. Available online at http://data.math.au.dk/publications/thiele/2012/math-thiele-2012-03.pdf

4. Barndorff-Nielsen, O.E., Benth, F.E., Veraart, A.E.D.: Modelling energy spot prices by volatility modulated Lévy-driven Volterra processes. Bernoulli 19, 803–845 (2013)

5. Barndorff-Nielsen, O.E., Corcuera, J.M., Podolskij, M.: Multipower variation for Brownian semistationary processes. Bernoulli 17, 1159–1194 (2011)

6. Barndorff-Nielsen, O.E., Corcuera, J.M., Podolskij, M.: Limit theorems for functionals of higher order differences of Brownian semistationary processes. In: Shiryaev, A.N., et al. (eds.) Prokhorov and Contemporary Probability Theory, pp. 69–96. Springer, Berlin (2013)

7. Barndorff-Nielsen, O.E., Pakkanen, M.S., Schmiegel, J.: Assessing relative volatility/intermittency/energy dissipation. Electron. J. Stat. 8, 1996–2021 (2014)

8. Barndorff-Nielsen, O.E., Schmiegel, J.: Ambit processes: with applications to turbulence and tumour growth. In: Benth, F.E., et al. (eds.) Stochastic Analysis and Applications, Abel Symp., vol. 2, pp. 93–124. Springer, Berlin (2007)

9. Barndorff-Nielsen, O.E., Schmiegel, J.: Brownian semistationary processes and volatility/intermittency. In: Albrecher, H., et al. (eds.) Advanced Financial Modelling. Radon Ser. Comput. Appl. Math., vol. 8, pp. 1–25. Walter de Gruyter, Berlin (2009)

10. Bayer, C., Friz, P.K., Gatheral, J.: Pricing under rough volatility. Quant. Finance 16, 887–904 (2016)

11. Bennedsen, M.: A rough multi-factor model of electricity spot prices. Energy Econ. 63, 301–313 (2017)

12. Bennedsen, M., Lunde, A., Pakkanen, M.S.: Discretization of Lévy semistationary processes with application to estimation (2014). Working paper. Available online at http://arxiv.org/abs/1407.2754

13. Benth, F.E., Eyjolfsson, H., Veraart, A.E.D.: Approximating Lévy semistationary processes via Fourier methods in the context of power markets. SIAM J. Financ. Math. 5, 71–98 (2014)

14. Beskos, A., Roberts, G.O.: Exact simulation of diffusions. Ann. Appl. Probab. 15, 2422–2444 (2005)

15. Bingham, N.H., Goldie, C.M., Teugels, J.L.: Regular Variation. Cambridge University Press, Cambridge (1989)

16. Corcuera, J.M., Hedevang, E., Pakkanen, M.S., Podolskij, M.: Asymptotic theory for Brownian semistationary processes with application to turbulence. Stoch. Process. Appl. 123, 2552–2574 (2013)

17. Erdélyi, A., Magnus, W., Oberhettinger, F., Tricomi, F.G.: Higher Transcendental Functions, vol. I. McGraw-Hill, New York (1953)

18. Fukasawa, M.: Short-time at-the-money skew and rough fractional volatility. Quant. Finance 17, 189–198 (2017)

19. Gatheral, J., Jaisson, T., Rosenbaum, M.: Volatility is rough (2014). Working paper. Available online at http://arxiv.org/abs/1410.3394

20. Gneiting, T., Schlather, M.: Stochastic models that separate fractal dimension and the Hurst effect. SIAM Rev. 46, 269–282 (2004)

21. Kallenberg, O.: Foundations of Modern Probability, 2nd edn. Springer, New York (2002)

22. Mallat, S.: A Wavelet Tour of Signal Processing, 3rd edn. Elsevier, Amsterdam (2009)

23. Mishura, Y.S.: Stochastic Calculus for Fractional Brownian Motion and Related Processes. Springer, Berlin (2008)

24. Pedersen, J., Sauri, O.: On Lévy semistationary processes with a gamma kernel. In: Mena, R.H., et al. (eds.) XI Symposium on Probability and Stochastic Processes, pp. 217–239. Birkhäuser, Basel (2015)

Acknowledgements

We should like to thank Heidar Eyjolfsson and Emil Hedevang for useful discussions regarding the simulation of \(\mathcal{BSS}\) processes and Ulises Márquez for assistance with symbolic computation. Our research has been supported by CREATES (DNRF78), funded by the Danish National Research Foundation, by Aarhus University Research Foundation (project “Stochastic and Econometric Analysis of Commodity Markets”) and by the Academy of Finland (project 258042).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bennedsen, M., Lunde, A. & Pakkanen, M.S. Hybrid scheme for Brownian semistationary processes. Finance Stoch 21, 931–965 (2017). https://doi.org/10.1007/s00780-017-0335-5

DOI: https://doi.org/10.1007/s00780-017-0335-5

Keywords

- Stochastic simulation

- Discretization

- Brownian semistationary process

- Stochastic volatility

- Regular variation

- Estimation

- Option pricing

- Rough volatility

- Volatility smile