Abstract

In this paper, which is a continuation of the discrete-time paper (Björk and Murgoci in Finance Stoch. 18:545–592, 2014), we study a class of continuous-time stochastic control problems which, in various ways, are time-inconsistent in the sense that they do not admit a Bellman optimality principle. We study these problems within a game-theoretic framework, and we look for subgame perfect Nash equilibrium points. For a general controlled continuous-time Markov process and a fairly general objective functional, we derive an extension of the standard Hamilton–Jacobi–Bellman equation, in the form of a system of nonlinear equations, for the determination of the equilibrium strategy as well as the equilibrium value function. The main theoretical result is a verification theorem. As an application of the general theory, we study a time-inconsistent linear-quadratic regulator. We also present a study of time-inconsistency within the framework of a general equilibrium production economy of Cox–Ingersoll–Ross type (Cox et al. in Econometrica 53:363–384, 1985).



1 Introduction

The purpose of this paper is to study a class of stochastic control problems in continuous time which have the property of being time-inconsistent in the sense that they do not allow a Bellman optimality principle. As a consequence, the very concept of optimality becomes problematic, since a strategy which is optimal given a specific starting point in time and space may be non-optimal when viewed from a later date and a different state. In this paper, we attack a fairly general class of time-inconsistent problems by using a game-theoretic approach; so instead of searching for optimal strategies, we search for subgame perfect Nash equilibrium strategies. The paper presents a continuous-time version of the discrete-time theory developed in our previous paper [5]. Since we build heavily on the discrete-time paper, the reader is referred to that for motivating examples and more detailed discussions on conceptual issues.

1.1 Previous literature

For a detailed discussion of the game-theoretic approach to time-inconsistency using Nash equilibrium points as above, the reader is referred to [5]. A list of some of the most important papers on the subject is given by [2, 6, 8–14, 16, 18–25].

All the papers above deal with particular model choices, and different authors use different methods to solve the problems. To our knowledge, the present paper, which is the continuous-time part of the working paper [4], is the first attempt to study a reasonably general (albeit Markovian) class of time-inconsistent control problems in continuous time. We would, however, like to stress that the present paper has been greatly inspired by [2, 9, 11].

1.2 Structure of the paper

The structure of the paper is roughly as follows.

- In Sect. 2, we present the basic setup, and in Sect. 3, we discuss the concept of equilibrium. In our setting, this replaces the optimality concept of a standard stochastic control problem, and in Definition 3.4, we give a precise definition of the equilibrium control and the equilibrium value function.

- Since the equilibrium concept in continuous time is quite delicate, we build the continuous-time theory on the discrete-time theory previously developed in [5]. In Sect. 4, we study the continuous-time problem by passing to the limit in a discretized problem and using the results from [5]. This leads to an extension of the standard HJB equation to a system of equations with an embedded static optimization problem. The limiting procedure is informal and largely heuristic, so it remains to clarify precisely how the derived extended HJB system is related to the precisely defined equilibrium problem under consideration.

- This clarification is delivered in Sect. 5. In Theorem 5.2, which is the main theoretical result of the paper, we give a precise statement and proof of a verification theorem. This theorem says that a solution to the extended HJB system does indeed deliver the equilibrium control and equilibrium value function of our original problem.

- In Sect. 6, the results of Sect. 5 are extended to a more general reward functional.

- Section 7 treats the infinite-horizon case.

- In Sect. 8, we study a time-inconsistent version of the linear-quadratic regulator to illustrate how the theory works in a concrete case.

- Section 9 is devoted to a rather detailed study of a general equilibrium model for a production economy with time-inconsistent preferences.

- In Sect. 10, we review some remaining open problems.

For extensions of the theory as well as worked out examples such as point process models, non-exponential discounting, mean-variance control, and state-dependent risk, see the working paper overview [3].

2 The model

We now turn to the formal continuous-time theory. In order to present this, we need some input data.

Definition 2.1

The following objects are given exogenously:

1. A drift mapping \({\mu}:{\mathbb{R}_{+} \times\mathbb{R}^{n} \times {{\mathbb{R}^{k}}}}\rightarrow{\mathbb{R}^{n}}\).

2. A diffusion mapping \({\sigma}:{\mathbb{R}_{+} \times\mathbb{R}^{n} \times{{\mathbb{R}^{k}}}}\rightarrow{M(n,d)}\), where \(M(n,d)\) denotes the set of all \(n \times d\) matrices.

3. A control constraint mapping \({U}:{\mathbb{R}_{+} \times \mathbb{R} ^{n} }\rightarrow{2^{\mathbb{R}^{k}}}\).

4. A mapping \({F}:{\mathbb{R}^{n} \times\mathbb {R}^{n}}\rightarrow {\mathbb{R}}\).

5. A mapping \({G}:{\mathbb{R}^{n} \times\mathbb {R}^{n}}\rightarrow {\mathbb{R}}\).

We now consider, on the time interval \([0,T]\), a controlled SDE of the form

$$dX_{t}=\mu(t,X_{t},u_{t})\,dt+\sigma(t,X_{t},u_{t})\,dW_{t},$$(2.1)

where the state process \(X\) is \(n\)-dimensional, the Wiener process \(W\) is \(d\)-dimensional, and the control process \(u\) is \(k\)-dimensional, with the constraint \(u_{t} \in U(t,X_{t})\).

Loosely speaking, our objective is to maximize, for every initial point \((t,x)\), a reward functional of the form

$$E_{t,x}[ F(x,X_{T}) ] + G\big( x, E_{t,x}[ X_{T} ] \big).$$

This functional is not of a form suitable for dynamic programming; we discuss this in detail below, but first we need to specify our class of controls. In this paper, we restrict the controls to admissible feedback control laws.

Definition 2.2

An admissible control law is a map \({{\mathbf{u}}}:{[0,T] \times\mathbb{R}^{n}}\rightarrow{\mathbb{R}^{k}}\) satisfying the following conditions:

1. For each \((t,x)\in[0,T] \times\mathbb{R}^{n}\), we have \({\mathbf{u}}(t,x) \in U(t,x)\).

2. For each initial point \((s,y)\in[0,T] \times\mathbb {R}^{n}\), the SDE

$$dX_{t}=\mu\big(t,X_{t},{\mathbf{u}}(t,X_{t})\big)dt + \sigma\big(t,X _{t},{\mathbf{u}}(t,X_{t})\big)dW_{t},\quad X_{s}=y $$

has a unique strong solution denoted by \(X^{{\mathbf{u}}}\).

The class of admissible control laws is denoted by \({\mathbf{U}}\). We sometimes use the notation \({\mathbf{u}}_{t}(x)\) instead of \({\mathbf{u}}(t,x)\).
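For readers who want to experiment numerically, the following is a minimal sketch, not part of the theory, of how the SDE in Definition 2.2 can be simulated under a given feedback law. It assumes a scalar state, Wiener process and control (\(n=d=k=1\)) and uses a plain Euler–Maruyama scheme; the function name `simulate_terminal` and its arguments are our own illustrative choices.

```python
import math
import random
from typing import Callable

# Scalar case n = d = k = 1 (an assumption made for readability).
Drift = Callable[[float, float, float], float]      # mu(t, x, u)
Diffusion = Callable[[float, float, float], float]  # sigma(t, x, u)
Law = Callable[[float, float], float]               # feedback law u(t, x)


def simulate_terminal(mu: Drift, sigma: Diffusion, law: Law,
                      s: float, y: float, T: float, n_steps: int,
                      rng: random.Random) -> float:
    """Euler-Maruyama approximation of X_T for
    dX_t = mu(t, X_t, u(t, X_t)) dt + sigma(t, X_t, u(t, X_t)) dW_t, X_s = y."""
    dt = (T - s) / n_steps
    t, x = s, y
    for _ in range(n_steps):
        u = law(t, x)                        # feedback: control depends on (t, X_t)
        dw = rng.gauss(0.0, math.sqrt(dt))   # Brownian increment over [t, t + dt]
        x = x + mu(t, x, u) * dt + sigma(t, x, u) * dw
        t += dt
    return x
```

For example, with \(\sigma \equiv 0\), \(\mu(t,x,u)=ax+bu\) and the linear law \({\mathbf{u}}(t,x)=-kx\), the output should be close to \(y\,e^{(a-bk)(T-s)}\).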

We now go on to define the controlled infinitesimal generator of the SDE above. In the present paper, we use the (somewhat non-standard) convention that the infinitesimal operator acts on the time variable as well as on the space variable; so it includes the term \(\frac{\partial }{\partial t}\).

Definition 2.3

Consider the SDE (2.1), and let ′ denote matrix transpose.

- For any fixed \(u \in{{\mathbb{R}^{k}}}\), the functions \(\mu^{u}\), \(\sigma^{u}\) and \(C^{u}\) are defined by

$$\begin{aligned} \mu^{u} (t,x) =&\mu(t,x,u),\quad\sigma^{u} (t,x)=\sigma(t,x,u), \\ C^{u}(t,x) =&\sigma(t,x,u)\sigma(t,x,u)'. \end{aligned}$$

- For any admissible control law \({\mathbf{u}}\), the functions \(\mu^{\mathbf{u}}\), \(\sigma^{\mathbf{u}}\), \(C^{\mathbf{u}}(t,x)\) are defined by

$$\begin{aligned} \mu^{\mathbf{u}} (t,x) =&\mu\big(t,x,{\mathbf{u}}(t,x)\big),\quad \sigma^{\mathbf{u}} (t,x)=\sigma\big(t,x,{\mathbf{u}}(t,x)\big), \\ C^{\mathbf{u}}(t,x) =&\sigma\big(t,x,{\mathbf{u}}(t,x)\big)\sigma \big(t,x,{\mathbf{u}}(t,x)\big)'. \end{aligned}$$

- For any fixed \(u \in{{\mathbb{R}^{k}}}\), the operator \({{\mathbf{A}}} ^{u}\) is defined by

$${{\mathbf{A}}}^{u}=\frac{\partial}{\partial t} +\sum_{i=1}^{n}\mu _{i}^{u}(t,x)\frac{\partial}{\partial x_{i}}+ \frac{1}{2}\sum_{i,j=1} ^{n}C_{ij}^{u}(t,x)\frac{{\partial}^{2} }{\partial{x_{i}}\partial {x_{j}}}. $$

- For any admissible control law \({\mathbf{u}}\), the operator \({{\mathbf{A}}}^{\mathbf{u}}\) is defined by

$${{\mathbf{A}}}^{\mathbf{u}}=\frac{\partial}{\partial t} + \sum_{i=1} ^{n}\mu_{i}^{\mathbf{u}}(t,x)\frac{\partial}{\partial x_{i}}+ \frac{1}{2}\sum_{i,j=1}^{n}C_{ij}^{\mathbf{u}}(t,x)\frac{{\partial} ^{2} }{\partial{x_{i}}\partial{x_{j}}}. $$
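As a sanity check of the conventions in Definition 2.3 (in particular that \({\mathbf{A}}^{u}\) includes \(\partial/\partial t\)), the scalar-case operator can be approximated by central differences. This is an illustrative sketch; `generator` is a hypothetical helper that takes the already evaluated coefficients \(\mu^{u}(t,x)\) and \(C^{u}(t,x)\) for a fixed control value \(u\).

```python
from typing import Callable

Fn = Callable[[float, float], float]  # a test function k(t, x)


def generator(k: Fn, mu: float, C: float, t: float, x: float,
              eps: float = 1e-4) -> float:
    """Central-difference approximation of (A^u k)(t, x) for n = 1, where
    mu = mu^u(t, x) and C = C^u(t, x) = sigma^u(t, x)**2."""
    k_t = (k(t + eps, x) - k(t - eps, x)) / (2 * eps)
    k_x = (k(t, x + eps) - k(t, x - eps)) / (2 * eps)
    k_xx = (k(t, x + eps) - 2 * k(t, x) + k(t, x - eps)) / eps ** 2
    # The time derivative is part of A^u, following the convention of Sect. 2.
    return k_t + mu * k_x + 0.5 * C * k_xx
```

For \(k(t,x)=tx^{2}\), the exact value is \(x^{2}+2tx\,\mu^{u}(t,x)+tC^{u}(t,x)\), which the central differences reproduce up to rounding error because \(k\) is quadratic in \(x\) and linear in \(t\).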

3 Problem formulation

In order to formulate our problem, we need an objective functional. We thus consider the two functions \(F\) and \(G\) from Definition 2.1.

Definition 3.1

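For a fixed \((t,x) \in[0,T] \times\mathbb{R}^{n}\) and a fixed admissible control law \({\mathbf{u}}\), the corresponding reward functional \(J\) is defined by

$$J(t,x,{\mathbf{u}})=E_{t,x}[ F(x,X_{T}^{{\mathbf{u}}}) ] + G\big( x, E_{t,x}[ X_{T}^{{\mathbf{u}}} ] \big).$$(3.1)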

Remark 3.2

In Sect. 6, we consider a more general reward functional. The restriction to the functional (3.1) above is done in order to minimize the notational complexity of the derivations below, which otherwise would be somewhat messy.

In order to have a nondegenerate problem, we need a formal integrability assumption.

Assumption 3.3

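We assume that for each initial point \((t,x)\in[0,T] \times \mathbb{R}^{n}\) and each admissible control law \({\mathbf{u}}\), we have

$$E_{t,x}\big[ | F(y,X_{T}^{{\mathbf{u}}}) | \big] < \infty \quad\text{for all } y \in\mathbb{R}^{n}, \qquad E_{t,x}\big[ \| X_{T}^{{\mathbf{u}}} \| \big] < \infty,$$

and hence the reward \(J(t,x,{\mathbf{u}})\) in (3.1) is well defined and finite.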

Our objective is loosely that of maximizing \(J(t, x,{\mathbf{u}})\) for each \((t,x)\), but conceptually this turns out to be far from trivial, so instead of optimal controls we will study equilibrium controls. The equilibrium concept is made precise in Definition 3.4 below, but in order to motivate that definition, we need a brief discussion concerning the reward functional above.

We immediately note that, in contrast to a standard optimal control problem, the family of reward functionals above is not connected by a Bellman optimality principle. The reasons for this are as follows:

- The present state \(x\) appears in the function \(F\).

- In the second term, we have (even apart from the appearance of the present state \(x\)) a nonlinear function \(G\) operating on the expected value \(E_{t,x}[ X_{T}^{{\mathbf{u}}} ]\).
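The second point is where the nonlinearity enters: \(G\) acts on an expectation rather than inside one, so the iterated-expectations argument behind the Bellman principle breaks down. A minimal sketch of a plug-in Monte Carlo estimator of the reward, assuming a scalar state and a list of simulated terminal values (the function `reward_estimate` is hypothetical), makes the distinction explicit.

```python
from statistics import fmean
from typing import Callable, Sequence


def reward_estimate(x: float, terminal: Sequence[float],
                    F: Callable[[float, float], float],
                    G: Callable[[float, float], float]) -> float:
    """Plug-in estimate of E[F(x, X_T)] + G(x, E[X_T]) from samples of X_T.

    F is averaged over the samples, whereas G is applied once to the sample
    mean; averaging G over the samples would estimate a different quantity.
    """
    first = fmean(F(x, xT) for xT in terminal)
    second = G(x, fmean(terminal))
    return first + second
```

With \(G(x,y)=(y-x)^{2}\) and terminal samples \(\{0,2\}\) from \(x=1\), the \(G\)-term is \(G(1,1)=0\), whereas averaging \(G(1,X_{T})\) over the samples would give 1; the difference is exactly the sample variance of \(X_{T}\).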

Since we do not have a Bellman optimality principle, it is in fact unclear what we should mean by the term “optimal”, since the optimality concept would differ at different initial times \(t\) and for different initial states \(x\).

The approach of this paper is to adopt a game-theoretic perspective and look for subgame perfect Nash equilibrium points. Loosely speaking, we view the game as follows:

- Consider a non-cooperative game where we have one player for each point in time \(t\). We refer to this player as “Player \(t\)”.

- For each fixed \(t\), Player \(t\) can only control the process \(X\) exactly at time \(t\). He/she does that by choosing a control function \({\mathbf{u}}(t, \cdot)\); so the action taken at time \(t\) with state \(X_{t}\) is given by \({\mathbf{u}}(t, X_{t})\).

- Gluing together the control functions for all players, we thus have a feedback control law \({{\mathbf{u}}}:{[0,T] \times\mathbb{R}^{n}} \rightarrow{\mathbb{R}^{k}}\).

- Given the feedback law \({\mathbf{u}}\), the reward to Player \(t\) is given by the reward functional

$$J(t, x,{\mathbf{u}})=E_{t,x}[ F(x,X_{T}^{{\mathbf{u}}}) ] + G( {x, E _{t,x}[ X_{T}^{{\mathbf{u}}} ]} ). $$

A slightly naive definition of an equilibrium for this game would be to say that a feedback control law \(\hat{{\mathbf{u}}}\) is a subgame perfect Nash equilibrium if for each \(t\), it has the following property:

- If for each \(s > t\), Player \(s\) chooses the control \(\hat{{\mathbf{u}}}(s, \cdot)\), then it is optimal for Player \(t\) to choose \(\hat{{\mathbf{u}}}(t, \cdot)\).

A definition like this works well in discrete time; but in continuous time, this is not a bona fide definition. Since Player \(t\) can only choose the control \(\mathbf{u}(t,\cdot)\) exactly at time \(t\), he only influences the control on a time set of Lebesgue measure zero; so for a controlled SDE of the form (2.1), the control chosen by an individual player will have no effect on the dynamics of the process. We thus need another definition of the equilibrium concept, and we in fact follow an approach first taken by [9] and [11]. The formal definition of equilibrium is as follows.

Definition 3.4

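Consider an admissible control law \(\hat{{\mathbf{u}}}\) (informally viewed as a candidate equilibrium law). Choose an arbitrary admissible control law \({\mathbf{u}} \in{\mathbf{U}}\) and a fixed real number \(h >0\). Also fix an arbitrarily chosen initial point \((t,x)\). Define the control law \({\mathbf{u}}_{h}\) by

$${\mathbf{u}}_{h}(s,y)= \left\{ \textstyle\begin{array}{ccl} {\mathbf{u}}(s,y)&& \mbox{for} \ t \leq s < t+h, y \in\mathbb{R}^{n}, \\ \hat{{\mathbf{u}}}(s,y)&& \mbox{for} \ t+h \leq s \leq T, y \in \mathbb{R}^{n}. \end{array}\displaystyle \right. $$

If

$$\liminf_{h \rightarrow 0} \frac{J(t,x,\hat{{\mathbf{u}}})-J(t,x,{\mathbf{u}}_{h})}{h} \geq 0$$

for all \({\mathbf{u}} \in{\mathbf{U}}\), we say that \(\hat{{\mathbf {u}}}\) is an equilibrium control law. Corresponding to the equilibrium law \(\hat{{\mathbf{u}}}\), we define the equilibrium value function \(V\) by

$$V(t,x)=J(t,x,\hat{{\mathbf{u}}}).$$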

We sometimes refer to this as an intrapersonal equilibrium, since it can be viewed as a game between different future manifestations of your own preferences.
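The perturbed law \({\mathbf{u}}_{h}\) is simply a splice in time of two feedback laws. A short sketch with hypothetical names and scalar arguments follows. Times before \(t\) play no role in \(J(t,x,\cdot)\), so the sketch returns \(\hat{{\mathbf{u}}}\) there as well.

```python
from typing import Callable

Law = Callable[[float, float], float]  # a feedback law (s, y) -> control value


def spliced_law(u: Law, u_hat: Law, t: float, h: float) -> Law:
    """The law u_h: follow u on [t, t + h) and u_hat from t + h onwards.

    Times before t do not enter J(t, x, .), so u_hat is returned there too.
    """
    def u_h(s: float, y: float) -> float:
        return u(s, y) if t <= s < t + h else u_hat(s, y)
    return u_h
```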

Remark 3.5

This is our continuous-time formalization of the corresponding discrete-time equilibrium concept.

Note the necessity of dividing by \(h\), since for most models we trivially should have

$$\lim_{h \rightarrow 0} J(t,x,{\mathbf{u}}_{h}) = J(t,x,\hat{{\mathbf{u}}}).$$

We also note that we do not get a perfect correspondence with the discrete-time equilibrium concept since if the limit above equals zero for all \({\mathbf{u}} \in{\mathbf{U}}\), it is not clear whether this corresponds to a maximum or just to a stationary point.

4 An informal derivation of the extended HJB equation

We now assume that there exists an equilibrium control law \(\hat{{\mathbf{u}}}\) (not necessarily unique), and we go on to derive an extension of the standard Hamilton–Jacobi–Bellman (henceforth HJB) equation for the determination of the corresponding value function \(V\). To clarify the logical structure of the derivation, we outline our strategy as follows:

- We discretize (to some extent) the continuous-time problem. We then use our results from discrete-time theory to obtain a discretized recursion for \(\hat{{\mathbf{u}}}\), and we then let the time step tend to zero.

- In the limit, we obtain our continuous-time extension of the HJB equation. Not surprisingly, it will in fact be a system of equations.

- In the discretizing and limiting procedure, we mainly rely on informal heuristic reasoning. In particular, we do not claim that the derivation is rigorous. The derivation is, from a logical point of view, only of motivational value.

- In Sect. 5, we then go on to show that our (informally derived) extended HJB equation is in fact the “correct” one, by proving a rigorous verification theorem.

4.1 Deriving the equation

In this section, we derive, in an informal and heuristic way, a continuous-time extension of the HJB equation. Note again that we have no claims to rigor in the derivation, which is only motivational. We assume that there exists an equilibrium law \(\hat{{\mathbf{u}}}\) and argue as follows:

- Choose an arbitrary initial point \((t,x)\). Also choose a “small” time increment \(h >0\) and an arbitrary admissible control \({\mathbf{u}}\).

- Define the control law \({\mathbf{u}}_{h}\) on the time interval \([t,T]\) by

$${\mathbf{u}}_{h}(s,y)= \left\{ \textstyle\begin{array}{ccl} {\mathbf{u}}(s,y)&& \mbox{for} \ t \leq s < t+h, y \in\mathbb{R}^{n}, \\ \hat{{\mathbf{u}}}(s,y)&& \mbox{for} \ t+h \leq s \leq T, y \in \mathbb{R}^{n}. \end{array}\displaystyle \right. $$

- If now \(h\) is “small enough”, we expect to have

$$J(t,x,{\mathbf{u}}_{h}) \leq J(t,x,\hat{{\mathbf{u}}}), $$

and in the limit as \(h \rightarrow0\), we should have equality if \({\mathbf{u}}(t,x)=\hat{{\mathbf{u}}}(t,x)\).

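We now refer to the discrete-time results, as well as the notation, from Theorem 3.13 of [5], with \(n\) and \(n+1\) replaced by \(t\) and \(t+h\). We then obtain the inequality

$$( {{\mathbf{A}}_{h}^{{\mathbf{u}}}V} )(t,x) - ( {{\mathbf{A}}_{h}^{{\mathbf{u}}}f} )(t,x,x)+( {{\mathbf{A}}_{h}^{{\mathbf{u}}}f^{x}} )(t,x) - {\mathbf{A}}_{h}^{{\mathbf{u}}} ( {G \diamond g} )(t,x) + ( {{\mathbf{H}}_{h}^{{\mathbf{u}}}g} )(t,x) \leq 0,$$

with equality for \({\mathbf{u}}=\hat{{\mathbf{u}}}\).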

Here we have used the following notation from [5]:

- For any fixed \(y \in\mathbb{R}^{n}\), the mapping \({f^{y}}:{[0,T] \times\mathbb{R}^{n}}\rightarrow{\mathbb{R}}\) is defined by

$$f^{y}(t,x)=E_{t,x}[ F( {y,X_{T}^{\hat{{\mathbf{u}}}}} ) ]. $$

- The function \({f}:{[0,T] \times\mathbb{R}^{n} \times\mathbb{R}^{n}} \rightarrow{\mathbb{R}}\) is defined by

$$f(t,x,y)=f^{y}(t,x). $$

We sometimes also, with a slight abuse of notation, denote the entire family of functions \(\left\{ {f^{y}: y\in\mathbb{R}^{n}} \right\} \) by \(f\).

- For any function \(k(t,x)\), the operator \({\mathbf{A}}_{h}^{{\mathbf{u}}}\) is defined by

$$( {{\mathbf{A}}_{h}^{{\mathbf{u}}}k} )(t,x)= E_{t,x}[ k(t+h, X_{t+h} ^{\mathbf{u}}) ]-k(t,x). $$

- The function \({g}:{[0,T] \times\mathbb{R}^{n}}\rightarrow{\mathbb{R} ^{n}}\) is defined by

$$g(t,x)=E_{t,x}[ X_{T}^{\hat{{\mathbf{u}}}} ]. $$

- The function \(G \diamond g\) is defined by

$$\left( {G \diamond g} \right) (t,x)=G\big( {x,g(t,x)} \big). $$

- The term \({\mathbf{H}}_{h}^{{\mathbf{u}}}g\) is defined by

$$( {{\mathbf{H}}_{h}^{{\mathbf{u}}}g} )(t,x)=G\big(x,E_{t,x}[ g(t+h,X _{t+h}^{{\mathbf{u}}}) ]\big)- G\big(x,g(t,x)\big). $$

We now divide the above inequality by \(h\) and let \(h\) tend to zero. Then the term coming from the operator \({\mathbf{A}}_{h}^{{\mathbf{u}}}\) converges to the infinitesimal operator \({\mathbf{A}}^{u}\), where \(u= {\mathbf{u}}(t,x)\), but the limit of \(h^{-1}( {{\mathbf{H}}_{h}^{ {\mathbf{u}}}g} )(t,x) \) requires closer investigation.

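From the definition of the infinitesimal operator, we have the approximation

$$E_{t,x}[ g(t+h,X_{t+h}^{{\mathbf{u}}}) ] = g(t,x) + ( {\mathbf{A}}^{u}g )(t,x)\,h + o(h),$$

and using a standard Taylor approximation, we obtain

$$G\big(x,E_{t,x}[ g(t+h,X_{t+h}^{{\mathbf{u}}}) ]\big) = G\big(x,g(t,x)\big) + G_{y}\big(x,g(t,x)\big)( {\mathbf{A}}^{u}g )(t,x)\,h + o(h),$$

where

$$G_{y}(x,y)=\frac{\partial G}{\partial y}(x,y).$$

We thus obtain

$$\lim_{h \rightarrow 0}\frac{1}{h}( {\mathbf{H}}_{h}^{{\mathbf{u}}}g )(t,x) = G_{y}\big(x,g(t,x)\big)( {\mathbf{A}}^{u}g )(t,x).$$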
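The limit of \(h^{-1}({\mathbf{H}}_{h}^{{\mathbf{u}}}g)(t,x)\) can be checked numerically in a simple setting. The sketch below assumes scalar dynamics with drift \(\mu\) and volatility \(\sigma\) held constant over \([t,t+h]\), replaces \(X_{t+h}\) by a single Euler step, and evaluates the Gaussian expectation with a three-point Gauss–Hermite rule (exact for polynomials of degree at most five). The helper names are ours.

```python
import math
from typing import Callable

Fn = Callable[[float, float], float]

# Three-point Gauss-Hermite rule for a standard normal Z: E[p(Z)] is computed
# exactly whenever p is a polynomial of degree at most 5.
_NODES = (-math.sqrt(3.0), 0.0, math.sqrt(3.0))
_WEIGHTS = (1.0 / 6.0, 2.0 / 3.0, 1.0 / 6.0)


def one_step_expectation(k: Fn, mu: float, sigma: float,
                         t: float, x: float, h: float) -> float:
    """E_{t,x}[k(t + h, X_{t+h})] with X_{t+h} = x + mu*h + sigma*sqrt(h)*Z
    (one Euler step, exact when the coefficients are constant)."""
    return sum(w * k(t + h, x + mu * h + sigma * math.sqrt(h) * z)
               for z, w in zip(_NODES, _WEIGHTS))


def H_h(G: Fn, g: Fn, mu: float, sigma: float,
        t: float, x: float, h: float) -> float:
    """(H_h^u g)(t, x) = G(x, E[g(t + h, X_{t+h})]) - G(x, g(t, x))."""
    return G(x, one_step_expectation(g, mu, sigma, t, x, h)) - G(x, g(t, x))
```

For \(G(x,y)=y^{2}\) and \(g(t,x)=x^{2}\), so that \(({\mathbf{A}}^{u}g)(t,x)=2x\mu+\sigma^{2}\), the quotient \(h^{-1}({\mathbf{H}}_{h}^{{\mathbf{u}}}g)(t,x)\) should approach \(G_{y}(x,g(t,x))({\mathbf{A}}^{u}g)(t,x)=2x^{2}(2x\mu+\sigma^{2})\) as \(h\) decreases.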

Collecting all results, we arrive at our proposed extension of the HJB equation. To stress the fact that the arguments above are largely informal, we state the equation as a definition rather than as a proposition.

Definition 4.1

The extended HJB system of equations for \(V\), \(f\) and \(g\) is defined as follows:

1. The function \(V\) is determined by

$$\begin{aligned} &\sup_{u \in U(t,x)} \big( ( {\mathbf{A}}^{u}V )(t,x) - ( {\mathbf{A}}^{u}f )(t,x,x)+( {\mathbf{A}}^{u} f^{x})(t,x) \\ &\qquad - {\mathbf{A}}^{u} ( G \diamond g )(t,x) + ( {\mathbf{H}}^{u}g )(t,x) \big) = 0,\quad 0 \leq t \leq T, \\ &V(T,x) = F(x,x)+G(x,x). \end{aligned}$$(4.1)

2. For every fixed \(y \in\mathbb{R}^{n}\), the function \((t,x) \mapsto f ^{y}(t,x)\) is defined by

$$\begin{aligned} {\mathbf{A}}^{\hat{{\mathbf{u}}}}f^{y}(t,x)&=0,\quad0 \leq t \leq T, \\ f^{y}(T,x)&=F(y,x). \end{aligned}$$(4.2)

3. The function \(g\) is defined by

$$\begin{aligned} {\mathbf{A}}^{\hat{{\mathbf{u}}}}g(t,x)&=0, \quad0 \leq t \leq T, \\ g(T,x)&=x. \end{aligned}$$(4.3)

We now have some comments on the extended HJB system:

- The first point to notice is that we have a system of equations (4.1)–(4.3) for the simultaneous determination of \(V\), \(f\) and \(g\).

- In the expressions above, \(\hat{{\mathbf{u}}}\) always denotes the control law which realizes the supremum in the first equation.

- The equations (4.2) and (4.3) are the Kolmogorov backward equations for the expectations

$$\begin{aligned} f^{y}(t,x) =&E_{t,x}[ F( {y,X_{T}^{\hat{{\mathbf{u}}}}} ) ], \\ g(t,x) =&E_{t,x}[ X_{T}^{\hat{{\mathbf{u}}}} ]. \end{aligned}$$

- In order to solve the \(V\)-equation, we need to know \(f\) and \(g\), but these are determined by the equilibrium control law \(\hat{{\mathbf{u}}}\), which in turn is determined by the sup-part of the \(V\)-equation.

- We have used the notation

$$\begin{aligned} f(t,x,y) =&f^{y}(t,x),\quad\left( {G \diamond g} \right) (t,x)=G \big(x,g(t,x)\big), \\ {\mathbf{H}}^{u}g(t,x) =&G_{y}\big(x,g(t,x)\big) {\mathbf{A}}^{u}g(t,x), \quad G_{y}(x,y)=\frac{\partial G}{\partial y}(x,y). \end{aligned}$$

- The operator \({\mathbf{A}}^{u}\) only operates on variables within parentheses. So for instance, the expression \(\left( {{\mathbf{A}} ^{u}f} \right) (t,x,x)\) is interpreted as \(\left( {{\mathbf{A}}^{u}h} \right) (t,x)\) with \(h\) defined by \(h(t,x)=f(t,x,x)\). In the expression \(\left( {{\mathbf{A}}^{u}f^{y}} \right) (t,x)\), the operator does not act on the superscript \(y\), which is viewed as a fixed parameter. Similarly, in the expression \(\left( {{\mathbf{A}}^{u}f ^{x}} \right) (t,x)\), the operator only acts on the variables \(t,x\) within the parentheses and does not act on the superscript \(x\).

- If \(F(x,y)\) does not depend on \(x\) and there is no \(G\)-term, the problem trivializes to a standard time-consistent problem. Since \(f^{y}\) then does not depend on \(y\), the terms \(-\left( {{\mathbf{A}}^{u}f} \right) (t,x,x)+\left( {{\mathbf{A}}^{u}f ^{x}} \right) (t,x)\) in the \(V\)-equation cancel, and the system reduces to the standard Bellman equation

$$\sup_{u \in U(t,x)} ( {{\mathbf{A}}^{u}V} )(t,x)=0,\quad V(T,x)=F(x). $$

- We note that the \(g\) function above appears, in a more restricted framework, already in [2, 9, 11].
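To see the Kolmogorov backward equations (4.2)–(4.3) at work, consider a case chosen purely for illustration: an equilibrium state process with linear dynamics \(dX_{t}=aX_{t}\,dt+\sigma\,dW_{t}\) (\(a\neq0\)) and \(F(y,x)=(x-y)^{2}\). Then \(g(t,x)=xe^{a(T-t)}\) and \(f^{y}(t,x)=\big(xe^{a(T-t)}-y\big)^{2}+\sigma^{2}\big(e^{2a(T-t)}-1\big)/(2a)\). The sketch below checks by finite differences that both satisfy \({\mathbf{A}}^{\hat{{\mathbf{u}}}}k=0\); the model and helper names are our own.

```python
import math


def g_closed(t, x, a, T):
    """g(t, x) = E_{t,x}[X_T] for dX = a X dt + sigma dW."""
    return x * math.exp(a * (T - t))


def f_closed(t, x, y, a, sigma, T):
    """f^y(t, x) = E_{t,x}[(X_T - y)^2]: squared bias plus variance."""
    tau = T - t
    mean = x * math.exp(a * tau)
    var = sigma ** 2 * (math.exp(2 * a * tau) - 1) / (2 * a)
    return (mean - y) ** 2 + var


def backward_residual(k, t, x, a, sigma, eps=1e-3):
    """Finite-difference value of k_t + a x k_x + 0.5 sigma^2 k_xx,
    i.e. the generator of the equilibrium dynamics applied to k."""
    k_t = (k(t + eps, x) - k(t - eps, x)) / (2 * eps)
    k_x = (k(t, x + eps) - k(t, x - eps)) / (2 * eps)
    k_xx = (k(t, x + eps) - 2 * k(t, x) + k(t, x - eps)) / eps ** 2
    return k_t + a * x * k_x + 0.5 * sigma ** 2 * k_xx
```

Both residuals should vanish up to discretization error, and \(f^{y}(T,x)=(x-y)^{2}=F(y,x)\) holds exactly.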

4.2 Existence and uniqueness

The task of proving existence and/or uniqueness of solutions to the extended HJB system seems (at least to us) to be technically extremely difficult. We have no idea how to proceed, so we leave it for future research; it is thus very much an open problem. See Sect. 10 for more open problems.

5 A verification theorem

As we have noted above, the derivation of the continuous-time extension of the HJB equation in the previous section was very informal. Nevertheless, it seems reasonable to expect that the system in Definition 4.1 will indeed determine the equilibrium value function \(V\). The following two conjectures are natural:

1. Assume that there exists an equilibrium law \(\hat{{\mathbf {u}}}\) and that \(V\) is the corresponding value function. Assume furthermore that \(V\) is in \(C^{1,2}\). Define \(f^{y}\) and \(g\) by

$$f^{y}(t,x) =E_{t,x}[ F(y,X_{T}^{\hat{{\mathbf{u}}}}) ],$$(5.1)

$$g(t,x) =E_{t,x}[ X_{T}^{\hat{{\mathbf{u}}}} ].$$(5.2)

We then conjecture that \(V\) satisfies the extended HJB system and that \(\hat{{\mathbf{u}}}\) realizes the supremum in the equation.

2. Assume that \(V\), \(f\) and \(g\) solve the extended HJB system and that the supremum in the \(V\)-equation is attained for every \((t,x)\). We then conjecture that there exists an equilibrium law \(\hat{{\mathbf{u}}}\), and that it is given by the maximizing \(u\) in the \(V\)-equation. Furthermore, we conjecture that \(V\) is the corresponding equilibrium value function, and \(f\) and \(g\) allow the interpretations (5.1) and (5.2).

In this paper, we do not attempt to prove the first conjecture. Even for a standard time-consistent control problem within an SDE framework, it is well known that this is technically quite complicated, and it typically requires the theory of viscosity solutions. It is thus left as an open problem. We shall, however, prove the second conjecture. This obviously has the form of a verification result, and from standard theory, we should expect that it can be proved with a minimum of technical complexity. We now give the precise formulation and proof of the verification theorem, but first we need to define a function space.

Definition 5.1

Consider an arbitrary admissible control \({\mathbf{u}} \in{\mathbf {U}}\). We say that a function \({h}:{\mathbb{R}_{+} \times\mathbb {R}^{n}}\rightarrow {\mathbb{R}}\) belongs to the space \(L_{T}^{2}(X^{{\mathbf{u}}})\) if it satisfies the condition

$$E_{t,x}\bigg[ \int_{t}^{T} \big\| h_{x}(s,X_{s}^{{\mathbf{u}}})\,\sigma^{{\mathbf{u}}}(s,X_{s}^{{\mathbf{u}}}) \big\|^{2}\,ds \bigg] < \infty$$

for every \((t,x)\). In this expression, \(h_{x}\) denotes the gradient of \(h\) in the \(x\)-variable.

We can now state and prove the main result of the present paper.

Theorem 5.2

(Verification theorem)

Assume that (for all \(y\)) the functions \(V(t,x)\), \(f^{y}(t,x)\), \(g(t,x)\) and \(\hat{{\mathbf{u}}}(t,x)\) have the following properties:

1. \(V\), \(f^{y}\) and \(g\) solve the extended HJB system in Definition 4.1.

2. \(V(t,x)\) and \(g(t,x)\) are smooth in the sense that they are in \(C^{1,2}\), and \(f(t,x,y)\) is in \(C^{1,2,2}\).

3. The function \(\hat{{\mathbf{u}}}\) realizes the supremum in the \(V\)-equation, and \(\hat{{\mathbf{u}}}\) is an admissible control law.

4. \(V\), \(f^{y}\), \(g\) and \(G \diamond g\) as well as the function \((t,x) \mapsto f(t,x,x)\) all belong to the space \(L_{T}^{2}(X^{ \hat{{\mathbf{u}}}})\).

Then \(\hat{{\mathbf{u}}}\) is an equilibrium law, and \(V\) is the corresponding equilibrium value function. Furthermore, \(f\) and \(g\) can be interpreted according to (5.1) and (5.2).

Proof

The proof consists of two steps:

- We start by showing that \(f\) and \(g\) have the interpretations (5.1) and (5.2) and that \(V\) is the value function corresponding to \(\hat{{\mathbf{u}}}\), i.e., that \(V(t,x)=J(t,x, \hat{{\mathbf{u}}})\).

- In the second step, we then prove that \(\hat{{\mathbf{u}}}\) is indeed an equilibrium control law.

To show that \(f\) and \(g\) have the interpretations (5.1) and (5.2), we apply the Itô formula to the processes \(f^{y}(s,X _{s}^{\hat{{\mathbf{u}}}})\) and \(g(s,X_{s}^{\hat{{\mathbf{u}}}})\). Using (4.2) and (4.3) and the assumed integrability conditions for \(f^{y}\) and \(g\), it follows that the processes \(f^{y}(s,X_{s}^{ \hat{{\mathbf{u}}}})\) and \(g(s,X_{s}^{\hat{{\mathbf{u}}}})\) are martingales; so from the boundary conditions for \(f^{y}\) and \(g\), we obtain our desired representations of \(f^{y}\) and \(g\) as

$$f^{y}(t,x)=E_{t,x}[ F(y,X_{T}^{\hat{{\mathbf{u}}}}) ],$$(5.3)

$$g(t,x)=E_{t,x}[ X_{T}^{\hat{{\mathbf{u}}}} ].$$(5.4)

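To show that \(V(t,x)=J(t,x,\hat{{\mathbf{u}}})\), we use the \(V\)-equation (4.1) to obtain

$$( {\mathbf{A}}^{\hat{{\mathbf{u}}}}V )(t,x) - ( {\mathbf{A}}^{\hat{{\mathbf{u}}}}f )(t,x,x)+( {\mathbf{A}}^{\hat{{\mathbf{u}}}} f^{x})(t,x) - {\mathbf{A}}^{\hat{{\mathbf{u}}}} ( G \diamond g )(t,x) + ( {\mathbf{H}}^{\hat{{\mathbf{u}}}}g )(t,x) = 0,$$(5.5)

where

$$( {\mathbf{H}}^{\hat{{\mathbf{u}}}}g )(t,x)=G_{y}\big(x,g(t,x)\big)( {\mathbf{A}}^{\hat{{\mathbf{u}}}}g )(t,x).$$

Since \(f\) and \(g\) satisfy (4.2) and (4.3), we have

$$( {\mathbf{A}}^{\hat{{\mathbf{u}}}} f^{x})(t,x)=0,\qquad ( {\mathbf{H}}^{\hat{{\mathbf{u}}}}g )(t,x)=0,$$

so that (5.5) takes the form

$$( {\mathbf{A}}^{\hat{{\mathbf{u}}}}V )(t,x) = ( {\mathbf{A}}^{\hat{{\mathbf{u}}}}f )(t,x,x) + {\mathbf{A}}^{\hat{{\mathbf{u}}}} ( G \diamond g )(t,x)$$(5.6)

for all \(t\) and \(x\).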

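We now apply the Itô formula to the process \(V(s,X_{s}^{ \hat{{\mathbf{u}}}})\). Integrating and taking expectations gives

$$E_{t,x}[ V(T,X_{T}^{\hat{{\mathbf{u}}}}) ] = V(t,x) + E_{t,x}\bigg[ \int_{t}^{T} ( {\mathbf{A}}^{\hat{{\mathbf{u}}}}V )(s,X_{s}^{\hat{{\mathbf{u}}}})\,ds \bigg],$$

where the stochastic integral part has vanished because of the integrability condition \(V \in L_{T}^{2}(X^{\hat{{\mathbf{u}}}})\). Using (5.6), we thus obtain

$$E_{t,x}[ V(T,X_{T}^{\hat{{\mathbf{u}}}}) ] = V(t,x) + E_{t,x}\bigg[ \int_{t}^{T} ( {\mathbf{A}}^{\hat{{\mathbf{u}}}}f )(s,X_{s}^{\hat{{\mathbf{u}}}},X_{s}^{\hat{{\mathbf{u}}}})\,ds \bigg] + E_{t,x}\bigg[ \int_{t}^{T} {\mathbf{A}}^{\hat{{\mathbf{u}}}} ( G \diamond g )(s,X_{s}^{\hat{{\mathbf{u}}}})\,ds \bigg].$$

In the same way, we obtain

$$\begin{aligned} E_{t,x}[ f(T,X_{T}^{\hat{{\mathbf{u}}}},X_{T}^{\hat{{\mathbf{u}}}}) ] &= f(t,x,x) + E_{t,x}\bigg[ \int_{t}^{T} ( {\mathbf{A}}^{\hat{{\mathbf{u}}}}f )(s,X_{s}^{\hat{{\mathbf{u}}}},X_{s}^{\hat{{\mathbf{u}}}})\,ds \bigg], \\ E_{t,x}\big[ G\big(X_{T}^{\hat{{\mathbf{u}}}},g(T,X_{T}^{\hat{{\mathbf{u}}}})\big) \big] &= G\big(x,g(t,x)\big) + E_{t,x}\bigg[ \int_{t}^{T} {\mathbf{A}}^{\hat{{\mathbf{u}}}} ( G \diamond g )(s,X_{s}^{\hat{{\mathbf{u}}}})\,ds \bigg]. \end{aligned}$$

Using this and the boundary conditions for \(V\), \(f\) and \(g\), we get

$$V(t,x) = E_{t,x}\big[ F(X_{T}^{\hat{{\mathbf{u}}}},X_{T}^{\hat{{\mathbf{u}}}}) + G(X_{T}^{\hat{{\mathbf{u}}}},X_{T}^{\hat{{\mathbf{u}}}}) \big] - E_{t,x}\big[ F(X_{T}^{\hat{{\mathbf{u}}}},X_{T}^{\hat{{\mathbf{u}}}}) \big] + f(t,x,x) - E_{t,x}\big[ G(X_{T}^{\hat{{\mathbf{u}}}},X_{T}^{\hat{{\mathbf{u}}}}) \big] + G\big(x,g(t,x)\big),$$

i.e.,

$$V(t,x)=f(t,x,x)+G\big(x,g(t,x)\big).$$(5.7)

Plugging (5.3) and (5.4) into (5.7), we get

$$V(t,x)=E_{t,x}[ F(x,X_{T}^{\hat{{\mathbf{u}}}}) ] + G\big(x,E_{t,x}[ X_{T}^{\hat{{\mathbf{u}}}} ]\big),$$

and so we obtain the desired result

$$V(t,x)=J(t,x,\hat{{\mathbf{u}}}).$$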

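We now go on to show that \(\hat{{\mathbf{u}}}\) is indeed an equilibrium law, but first we need a small temporary definition. For any admissible control law \({\mathbf{u}}\), we define \(f^{{\mathbf{u}}}\) and \(g^{{\mathbf{u}}}\) by

$$f^{{\mathbf{u}}}(t,x,y)=E_{t,x}[ F(y,X_{T}^{{\mathbf{u}}}) ],\qquad g^{{\mathbf{u}}}(t,x)=E_{t,x}[ X_{T}^{{\mathbf{u}}} ],$$

so that in particular, we have \(f=f^{\hat{{\mathbf{u}}}}\) and \(g=g^{\hat{{\mathbf{u}}}}\). For any \(h >0\) and any admissible control law \({\mathbf{u}} \in{\mathbf{U}}\), we now construct the control law \({\mathbf{u}}_{h}\) as in Definition 3.4. From Lemmas 3.3 and 8.8 in [5], applied to the points \(t\) and \(t+h\), we obtain

$$\begin{aligned} J(t,x,{\mathbf{u}}_{h}) ={}& E_{t,x}[ J(t+h,X_{t+h}^{{\mathbf{u}}_{h}},{\mathbf{u}}_{h}) ] - \big( E_{t,x}[ f^{{\mathbf{u}}_{h}}(t+h,X_{t+h}^{{\mathbf{u}}_{h}},X_{t+h}^{{\mathbf{u}}_{h}}) ] - E_{t,x}[ f^{{\mathbf{u}}_{h}}(t+h,X_{t+h}^{{\mathbf{u}}_{h}},x) ] \big) \\ & - \Big( E_{t,x}\big[ G\big(X_{t+h}^{{\mathbf{u}}_{h}},g^{{\mathbf{u}}_{h}}(t+h,X_{t+h}^{{\mathbf{u}}_{h}})\big) \big] - G\big(x,E_{t,x}[ g^{{\mathbf{u}}_{h}}(t+h,X_{t+h}^{{\mathbf{u}}_{h}}) ]\big) \Big). \end{aligned}$$

Since \({\mathbf{u}}_{h}={\mathbf{u}}\) on \([t,t+h)\), we have by continuity that \(X_{t+h}^{{\mathbf{u}}_{h}}=X_{t+h}^{{\mathbf{u}}}\), and since \({\mathbf{u}}_{h}=\hat{{\mathbf{u}}}\) on \([t+h,T]\), we have

$$J(t+h,X_{t+h}^{{\mathbf{u}}},{\mathbf{u}}_{h})=V(t+h,X_{t+h}^{{\mathbf{u}}}),\quad f^{{\mathbf{u}}_{h}}(t+h,X_{t+h}^{{\mathbf{u}}},y)=f(t+h,X_{t+h}^{{\mathbf{u}}},y),\quad g^{{\mathbf{u}}_{h}}(t+h,X_{t+h}^{{\mathbf{u}}})=g(t+h,X_{t+h}^{{\mathbf{u}}}).$$

Therefore we obtain

$$\begin{aligned} J(t,x,{\mathbf{u}}_{h}) ={}& E_{t,x}[ V(t+h,X_{t+h}^{{\mathbf{u}}}) ] - \big( E_{t,x}[ f(t+h,X_{t+h}^{{\mathbf{u}}},X_{t+h}^{{\mathbf{u}}}) ] - E_{t,x}[ f^{x}(t+h,X_{t+h}^{{\mathbf{u}}}) ] \big) \\ & - \Big( E_{t,x}\big[ G\big(X_{t+h}^{{\mathbf{u}}},g(t+h,X_{t+h}^{{\mathbf{u}}})\big) \big] - G\big(x,E_{t,x}[ g(t+h,X_{t+h}^{{\mathbf{u}}}) ]\big) \Big). \end{aligned}$$

Furthermore, from the \(V\)-equation (4.1), we have

$$( {\mathbf{A}}^{u}V )(t,x) - ( {\mathbf{A}}^{u}f )(t,x,x)+( {\mathbf{A}}^{u} f^{x})(t,x) - {\mathbf{A}}^{u} ( G \diamond g )(t,x) + ( {\mathbf{H}}^{u}g )(t,x) \leq 0,$$

where we have used the notation \(u={\mathbf{u}}(t,x)\). This gives

$$\begin{aligned} & E_{t,x}[ V(t+h,X_{t+h}^{{\mathbf{u}}}) ] - V(t,x) - \big( E_{t,x}[ f(t+h,X_{t+h}^{{\mathbf{u}}},X_{t+h}^{{\mathbf{u}}}) ] - f(t,x,x) \big) + E_{t,x}[ f^{x}(t+h,X_{t+h}^{{\mathbf{u}}}) ] - f^{x}(t,x) \\ & \quad - \Big( E_{t,x}\big[ G\big(X_{t+h}^{{\mathbf{u}}},g(t+h,X_{t+h}^{{\mathbf{u}}})\big) \big] - G\big(x,g(t,x)\big) \Big) + G\big(x,E_{t,x}[ g(t+h,X_{t+h}^{{\mathbf{u}}}) ]\big) - G\big(x,g(t,x)\big) \leq o(h), \end{aligned}$$

or, after simplification (using \(f^{x}(t,x)=f(t,x,x)\)),

$$\begin{aligned} & E_{t,x}[ V(t+h,X_{t+h}^{{\mathbf{u}}}) ] - E_{t,x}[ f(t+h,X_{t+h}^{{\mathbf{u}}},X_{t+h}^{{\mathbf{u}}}) ] + E_{t,x}[ f^{x}(t+h,X_{t+h}^{{\mathbf{u}}}) ] \\ & \quad - E_{t,x}\big[ G\big(X_{t+h}^{{\mathbf{u}}},g(t+h,X_{t+h}^{{\mathbf{u}}})\big) \big] + G\big(x,E_{t,x}[ g(t+h,X_{t+h}^{{\mathbf{u}}}) ]\big) \leq V(t,x) + o(h). \end{aligned}$$

Combining this with the expression for \(J(t,x,{\mathbf{u}}_{h})\) above, and with the fact that (as we have proved) \(V(t,x)=J(t,x, \hat{{\mathbf{u}}})\), we obtain

$$J(t,x,{\mathbf{u}}_{h}) \leq J(t,x,\hat{{\mathbf{u}}}) + o(h),$$

so

$$\liminf_{h \rightarrow 0}\frac{J(t,x,\hat{{\mathbf{u}}})-J(t,x,{\mathbf{u}}_{h})}{h} \geq 0,$$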

and we are done. □
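The decomposition of \(J(t,x,{\mathbf{u}}_{h})\) obtained above from Lemmas 3.3 and 8.8 in [5] is an exact identity that only uses the tower property, so it can be checked on a toy model. The sketch below uses a hypothetical two-period coin-flip model on the times \(t<t+h<T\) with arbitrary choices of \(F\), \(G\) and \(\hat{{\mathbf{u}}}\). It computes \(J(t,x,{\mathbf{u}}_{h})\) directly and through the decomposition, and the two should agree up to rounding.

```python
from itertools import product

# Hypothetical two-period model on the times t < t + h < T:
#   X_{t+h} = x + d + e1,   X_T = X_{t+h} + u_hat(X_{t+h}) + e2,
# where d is the drift chosen by the deviating law u on [t, t + h) and
# e1, e2 are independent fair +/-1 coin flips.
SHOCKS = (-1.0, 1.0)


def F(x, y):
    return -(y - x) ** 2


def G(x, y):
    return x * y ** 2


def u_hat(z):
    return -0.5 * z


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def f(z, y):
    """f(t + h, z, y) = E[F(y, X_T) | X_{t+h} = z] under u_hat."""
    return mean(F(y, z + u_hat(z) + e) for e in SHOCKS)


def g(z):
    """g(t + h, z) = E[X_T | X_{t+h} = z] under u_hat."""
    return mean(z + u_hat(z) + e for e in SHOCKS)


def J_direct(x, d):
    """J(t, x, u_h) = E[F(x, X_T)] + G(x, E[X_T]) from the law of X_T."""
    terminal = [x + d + e1 + u_hat(x + d + e1) + e2
                for e1, e2 in product(SHOCKS, SHOCKS)]
    return mean(F(x, xT) for xT in terminal) + G(x, mean(terminal))


def J_decomposed(x, d):
    """The same quantity via the one-step decomposition in the proof."""
    mid = [x + d + e1 for e1 in SHOCKS]  # the two values of X_{t+h}
    continuation = mean(f(z, z) + G(z, g(z)) for z in mid)  # E[J(t+h, X_{t+h}, u_hat)]
    f_term = mean(f(z, z) for z in mid) - mean(f(z, x) for z in mid)
    g_term = mean(G(z, g(z)) for z in mid) - G(x, mean(g(z) for z in mid))
    return continuation - f_term - g_term
```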

6 The general case

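We now turn to the most general case of the present paper, where the functional \(J\) is given by

$$J(t,x,{\mathbf{u}})=E_{t,x}\bigg[ \int_{t}^{T}H\big(t,x,s,X_{s}^{{\mathbf{u}}},{\mathbf{u}}_{s}(X_{s}^{{\mathbf{u}}})\big)\,ds + F(t,x,X_{T}^{{\mathbf{u}}}) \bigg] + G\big(t,x,E_{t,x}[ X_{T}^{{\mathbf{u}}} ]\big).$$(6.1)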

To study this reward functional, we need a slightly modified integrability assumption.

Assumption 6.1

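We assume that for each initial point \((t,x)\in[0,T] \times \mathbb{R}^{n}\) and each admissible control law \({\mathbf{u}}\), we have

$$E_{t,x}\bigg[ \int_{t}^{T}\big|H\big(s,y,\tau,X_{\tau}^{{\mathbf{u}}},{\mathbf{u}}_{\tau}(X_{\tau}^{{\mathbf{u}}})\big)\big|\,d\tau + \big|F(s,y,X_{T}^{{\mathbf{u}}})\big| \bigg] < \infty \quad\text{for all } (s,y), \qquad E_{t,x}\big[ \| X_{T}^{{\mathbf{u}}} \| \big] < \infty.$$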

The treatment of this case is very similar to the previous one; so we directly give the final result, which is the relevant extended HJB system.

Definition 6.2

Given the objective functional (6.1), the extended HJB system for \(V\) is given by (6.2)–(6.7) below:

1. The function \(V\) is determined by

$$\begin{aligned} & \sup_{u \in{{\mathbb{R}^{k}}}} \big( ( {{\mathbf{A}}^{u}V} )(t,x) + H(t,x,t,x,u) -({\mathbf{A}}^{u}f)(t,x,t,x)+ ({\mathbf{A}}^{u}f^{tx})(t,x) \\ & \qquad - {\mathbf{A}}^{u} \left( {{G \diamond g}} \right) (t,x) + ( {{\mathbf{H}}^{u}g} )(t,x) \big) = 0, \end{aligned}$$(6.2)

with boundary condition

$$ V(T,x)=F(T,x,x)+G(T,x,x). $$(6.3)

2. For each fixed \(s\) and \(y\), the function \(f^{sy}(t,x)\) is defined by

$${\mathbf{A}}^{\hat{{\mathbf{u}}}}f^{sy}(t,x)+H\big(s,y,t,x, \hat{{\mathbf{u}}}_{t}(x)\big) =0, \quad0 \leq t \leq T ,$$(6.4)

$$f^{sy}(T,x) =F(s,y,x).$$(6.5)

3. The function \(g(t,x)\) is defined by

$${\mathbf{A}}^{\hat{{\mathbf{u}}}}g(t,x) =0,\quad0 \leq t \leq T ,$$(6.6)

$$g(T,x) =x.$$(6.7)

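In the definition above, \(\hat{{\mathbf{u}}}\) always denotes the control law which realizes the supremum in the \(V\)-equation, and we have used the notation

$$f(t,x,s,y)=f^{sy}(t,x),\quad \left( G \diamond g \right)(t,x)=G\big(t,x,g(t,x)\big),\quad {\mathbf{H}}^{u}g(t,x)=G_{y}\big(t,x,g(t,x)\big)\,{\mathbf{A}}^{u}g(t,x),$$

where, as in Sect. 4, the operator in \(({\mathbf{A}}^{u}f^{tx})(t,x)\) does not act on the superscripts \(t,x\).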

Also for this case, we have a verification theorem. The proof is almost identical to that of Theorem 5.2, so we omit it.

Theorem 6.3

(Verification theorem)

Assume that for all \((s,y)\), the functions \(V(t,x)\), \(f^{sy}(t,x)\), \(g(t,x)\) and \(\hat{{\mathbf{u}}}(t,x)\) have the following properties:

1. \(V\), \(f^{sy}\) and \(g\) are a solution to the extended HJB system in Definition 6.2.

2. \(V\), \(f^{sy}\) and \(g\) are smooth in the sense that they are in \(C^{1,2}\).

3. The function \(\hat{{\mathbf{u}}}\) realizes the supremum in the \(V\)-equation, and \(\hat{{\mathbf{u}}}\) is an admissible control law.

4. \(V\), \(f^{sy}\), \(g\) and \(G \diamond g\) as well as the function \((t,x) \mapsto f(t,x,t, x)\) all belong to the space \(L_{T}^{2}(X^{ \hat{{\mathbf{u}}}})\).

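Then \(\hat{{\mathbf{u}}}\) is an equilibrium law, and \(V\) is the corresponding equilibrium value function. Furthermore, \(f\) and \(g\) have the probabilistic representations

$$\begin{aligned} f^{sy}(t,x) &=E_{t,x}\bigg[ \int_{t}^{T}H\big(s,y,\tau,X_{\tau}^{\hat{{\mathbf{u}}}},\hat{{\mathbf{u}}}_{\tau}(X_{\tau}^{\hat{{\mathbf{u}}}})\big)\,d\tau + F(s,y,X_{T}^{\hat{{\mathbf{u}}}}) \bigg], \\ g(t,x) &=E_{t,x}[ X_{T}^{\hat{{\mathbf{u}}}} ]. \end{aligned}$$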

7 Infinite horizon

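The results above can easily be extended to the case with infinite horizon, i.e., when \(T=+ \infty\). The natural reward functional then has the form

$$J(t,x,{\mathbf{u}})=E_{t,x}\bigg[ \int_{t}^{\infty}H\big(t,x,s,X_{s}^{{\mathbf{u}}},{\mathbf{u}}_{s}(X_{s}^{{\mathbf{u}}})\big)\,ds \bigg],$$

so that the functions \(F\) and \(G\) are not present. In this case, \(V(t,x)=f(t,x,t,x)\) and hence \(\left( {{\mathbf{A}}^{u}V} \right) (t,x)=( {\mathbf{A}}^{u}f)(t,x,t,x)\), so these two terms cancel in (6.2). The extended HJB system is thus reduced to the system

$$\begin{aligned} \sup_{u \in{{\mathbb{R}^{k}}}} \big( H(t,x,t,x,u) + ({\mathbf{A}}^{u}f^{tx})(t,x) \big) &= 0, \\ {\mathbf{A}}^{\hat{{\mathbf{u}}}}f^{sy}(t,x)+H\big(s,y,t,x,\hat{{\mathbf{u}}}_{t}(x)\big) &= 0. \end{aligned}$$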

We also have an obvious verification theorem, where the relevant integrability condition is that for each \((s,y)\), the function \(f^{sy}(t,x)\) must belong to \(L_{T}^{2}(X^{\hat{{\mathbf{u}}}})\) for all finite \(T\). The proof is almost identical to the earlier case.

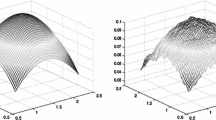

8 Example: the time-inconsistent linear-quadratic regulator

To illustrate how the theory works in a simple case, we consider a variation of the classical linear-quadratic regulator. Other “quadratic” control problems are considered in [2, 6, 8], which study mean-variance problems within the present game-theoretic framework. In the papers [19] and [20], the authors study the mean-variance criterion where you are continuously rolling over instantaneously updated pre-committed strategies.

The model we consider is specified as follows:

- The value functional for Player \(t\) is given by

$$E_{t,x}\left[ \frac{1}{2}\int_{t}^{T}u_{s}^{2}ds \right] + \frac{ \gamma}{2}E_{t,x}[ \left( {X_{T}-x} \right) ^{2} ], $$

where \(\gamma\) is a positive constant.

- The state process \(X\) is scalar with dynamics

$$dX_{t}=(aX_{t} + bu_{t})dt+\sigma dW_{t}, $$

where \(a\), \(b\) and \(\sigma\) are given constants.

- The control \(u\) is scalar with no constraints.

This is a time-inconsistent version of the classical linear-quadratic regulator. The time-inconsistency stems from the fact that the target point \(x=X_{t}\) is changing as time goes by. In discrete time, this problem is studied in [5]. For this problem, we have

and as usual we introduce the functions \(f^{y}(t,x)\) and \(f(t,x,y)\) by

In the present case, we have \(V(t,x)=f(t,x,x)\) and it is easy to see that the extended HJB system of Sect. 6 takes the form

From the \(X\)-dynamics, we see that

Denoting partial derivatives by subscripts for brevity (so, for example, \(f_{x}=\frac{\partial f}{\partial x}\)), we thus obtain the extended HJB equation

The coupled system for \(f^{y}\) is given by

The first order condition in the HJB equation gives \(\hat{{\mathbf{u}}}(t,x)={-b f_{x}(t,x,x)} \), and inspired by the standard regulator problem, we now make the ansatz

where all coefficients are deterministic functions of time. We now insert the ansatz into the HJB system, and perform a number of extremely boring calculations. As a result, it turns out that the variables separate in the expected way and we have the following result.

Proposition 8.1

For the time-inconsistent regulator, the function \(f\) is given by (8.1), and the equilibrium control is given by

where the coefficient functions solve the following system of ODEs:

with boundary conditions

Proof

It remains to check that the technical conditions of the verification theorem are satisfied. First, we need to check that the candidate equilibrium control in (8.2) is admissible. Since the equilibrium control is linear, the equilibrium state dynamics are linear; so admissibility is clear. Second, we need to check that the functions \(V(t,x)\), \(f(t,x,x)\) and \(f^{y}(t,x)\) are in \(L_{T}^{2}( {X^{\hat{{\mathbf{u}}}}} )\). Since the state dynamics are linear with constant diffusion term, the condition for a function \(h(t,x)\) to be in \(L_{T}^{2}( {X^{\hat{{\mathbf{u}}}}} )\) is simply that

In our case, \(V(t,x)=f(t,x,x)\) which is quadratic in \(x\). This implies that \(V_{x}^{2}(t,x)\) is quadratic in \(x\), and since the dynamics for \(X^{\hat{{\mathbf{u}}}}\) are linear, we have square-integrability; so the integrability condition above is satisfied. The same argument applies to \(f^{y}(t,x)\) for every fixed \(y\). □
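The square-integrability argument can be made concrete with moment equations. Assume, purely for illustration, a linear feedback \(u=kx+m\) with a constant hypothetical gain \(k\) and offset \(m\) (the equilibrium control is linear, but its coefficients are those of Proposition 8.1 and are not computed here). By Itô's formula, \(d(X^{2})=2X\,dX+\sigma^{2}dt\), so the first two moments satisfy \(m_{1}'=(a+bk)m_{1}+bm\) and \(m_{2}'=2(a+bk)m_{2}+2bm\,m_{1}+\sigma^{2}\). These linear ODEs have finite solutions on every bounded interval, and when \(h\) is quadratic in \(x\), \(E[h_{x}^{2}]\) is a combination of these moments. The Python sketch below integrates the ODEs with the classical Runge–Kutta scheme; the function name and parameters are assumptions.

```python
def linear_feedback_moments(x0, T, a, b, sigma, gain, offset, n_steps=1000):
    """E[X_T] and E[X_T^2] for dX = (aX + bu) dt + sigma dW, X_0 = x0, under the
    hypothetical linear feedback u = gain * X + offset, via classical RK4 on
        m1' = (a + b*gain) m1 + b*offset,
        m2' = 2 (a + b*gain) m2 + 2 b*offset m1 + sigma^2."""
    drift = a + b * gain

    def rhs(m1, m2):
        return (drift * m1 + b * offset,
                2.0 * drift * m2 + 2.0 * b * offset * m1 + sigma ** 2)

    h = T / n_steps
    m1, m2 = x0, x0 * x0
    for _ in range(n_steps):
        k1 = rhs(m1, m2)
        k2 = rhs(m1 + 0.5 * h * k1[0], m2 + 0.5 * h * k1[1])
        k3 = rhs(m1 + 0.5 * h * k2[0], m2 + 0.5 * h * k2[1])
        k4 = rhs(m1 + h * k3[0], m2 + h * k3[1])
        m1 += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6.0
        m2 += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6.0
    return m1, m2
```

When \(a+bk=0\) and \(m=0\), the state is a driftless Brownian motion and the sketch returns \(E[X_{T}^{2}]=x_{0}^{2}+\sigma^{2}T\), as expected.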

9 Example: a Cox–Ingersoll–Ross production economy with time-inconsistent preferences

In this section, we apply the previously developed theory to a rather detailed study of a general equilibrium model for a production economy with time-inconsistent preferences. The model under consideration is a time-inconsistent analogue of the classic Cox–Ingersoll–Ross model in [7]. Our main objective is to investigate the structure of the equilibrium short rate, the equilibrium Girsanov kernel, and the equilibrium stochastic discount factor.

There are a few earlier papers on equilibrium with time-inconsistent preferences. In [1] and [17], the authors study continuous-time equilibrium models of a particular type of time-inconsistency, namely non-exponential discounting. While [1] considers a deterministic neoclassical model of economic growth, [17] analyzes general equilibrium in a stochastic endowment economy.

Our present study is much inspired by the earlier paper [15], which studies in great detail equilibrium in a very general endowment economy with dynamically inconsistent preferences that are not limited to the particular case of non-exponential discounting.

Unlike the papers mentioned above, which study either deterministic growth models or endowment economies, we study a stochastic production economy of Cox–Ingersoll–Ross type.

9.1 The model

We start with some formal assumptions concerning the production technology.

Assumption 9.1

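We assume that there exists a constant-returns-to-scale physical production technology process \(S\) with dynamics

$$dS_{t}=\alpha S_{t}\,dt+S_{t}\sigma\, dW_{t},$$

where \(\alpha\) and \(\sigma\) are known constants.
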
The economic agents can invest unlimited positive amounts in this technology, but since it is a matter of physical investment, short positions are not allowed.

From a purely formal point of view, investment in the technology \(S\) is equivalent to investing in a risky asset with price process \(S\), with the constraint that short selling is not allowed.

We also need a risk-free asset, and this is provided by the next assumption.

Assumption 9.2

We assume that there exists a risk-free asset in zero net supply with dynamics

$$dB_{t}=r_{t}B_{t}\,dt,$$

where \(r\) is the short rate process, which will be determined endogenously. The risk-free rate \(r\) is assumed to be of the form

$$r_{t}=r(t,X_{t}),$$

where \(X\) denotes the portfolio value of the representative investor (to be defined below).

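Interpreting the production technology \(S\) as above, the wealth dynamics are given by

$$dX_{t}=u_{t}(\alpha-r_{t})X_{t}\,dt+(r_{t}X_{t}-c_{t})\,dt+u_{t}X_{t}\sigma\, dW_{t},$$

where \(u\) is the portfolio weight on production, so \(1-u\) is the weight on the risk-free asset, and \(c\) is the consumption rate. Finally, we need an economic agent.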

Assumption 9.3

We assume that there exists a representative agent who at every point \((t,x)\) wishes to maximize the reward functional

9.2 Equilibrium definitions

We now go on to study equilibrium in our model. We shall in fact have two equilibrium concepts:

- intrapersonal equilibrium;
- market equilibrium.

The intrapersonal equilibrium is related to the lack of time-consistency in preferences, whereas the market equilibrium is related to market clearing. We now discuss these concepts in more detail.

9.2.1 Intrapersonal equilibrium

Consider, for a given short rate function \(r(t,x)\), the control problem with reward functional

and wealth dynamics

where \(r_{t}\) is shorthand for \(r(t,X_{t})\). If the agent wants to maximize the reward functional for every initial point \((t,x)\), then, because of the appearance of \((t,x)\) in the utility function \(U\), this is a time-inconsistent control problem. In order to handle this situation, we use the game-theoretic setup and results developed in Sects. 1–6 above. This subgame perfect Nash equilibrium concept is henceforth referred to as the intrapersonal equilibrium.
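As an illustration of the wealth dynamics, here is a minimal Euler–Maruyama sketch in Python. It assumes wealth evolves as \(dX_{t}=u_{t}(\alpha-r_{t})X_{t}\,dt+(r_{t}X_{t}-c_{t})\,dt+u_{t}X_{t}\sigma\,dW_{t}\), the form consistent with the generator in Proposition 9.6. The short-rate function `r`, the investment rule `u` and the consumption rule `c` are hypothetical inputs supplied as callables; nothing here computes the intrapersonal equilibrium.

```python
import math
import random


def simulate_wealth(x0, T, alpha, sigma, r, u, c, n_steps=1000, seed=0):
    """One Euler-Maruyama path of
        dX = u (alpha - r) X dt + (r X - c) dt + u X sigma dW,  X_0 = x0,
    where r, u, c are hypothetical callables (t, x) -> float."""
    rng = random.Random(seed)
    dt = T / n_steps
    t, X = 0.0, x0
    for _ in range(n_steps):
        rt, ut, ct = r(t, X), u(t, X), c(t, X)
        dW = rng.gauss(0.0, math.sqrt(dt))
        X += (ut * (alpha - rt) * X + rt * X - ct) * dt + ut * X * sigma * dW
        t += dt
    return X
```

With `u` identically equal to 1, the terms involving \(r\) cancel, so the simulated path no longer depends on the short-rate function. This is the market-clearing case \(\hat{u}\equiv1\) of Definition 9.4, in which wealth follows \(dX_{t}=(\alpha X_{t}-\hat{c}_{t})dt+X_{t}\sigma\,dW_{t}\).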

9.2.2 Market equilibrium

By a market equilibrium, we mean a situation where the agent follows an intrapersonal equilibrium strategy and the market clears for the risk-free asset. The formal definition is as follows.

Definition 9.4

A market equilibrium of the model is a triple of real-valued functions \(\hat{c}(t,x),\hat{u}(t,x),r(t,x)\) such that the following hold:

1. Given the risk-free rate of the functional form \(r(t,x)\), the intrapersonal equilibrium consumption and investment are given by \(\hat{c}\) and \(\hat{u}\), respectively.

2. The market clears for the risk-free asset, i.e.,

   $$\hat{u}(t,x)\equiv1. $$

9.3 Main goals of the study

As will be seen below, there is a unique equilibrium martingale measure \(Q\) with corresponding likelihood process \(L_{t} = \frac{dQ}{dP} \vert_{\mathcal{F}_{t}}\), where \(L\) has dynamics

$$dL_{t}=L_{t}{\varphi}_{t}\,dW_{t}.$$

The process \({\varphi}\) is referred to as the equilibrium Girsanov kernel. There is also an equilibrium short rate process \(r\), which is related to \({\varphi}\) by the standard no-arbitrage relation

$$r(t,x)=\alpha+\sigma{\varphi}(t,x), \tag{9.1}$$

which says that \(S/B\) is a \(Q\)-martingale. There is also a unique equilibrium stochastic discount factor \(M\) defined by

$$M_{t}=B_{t}^{-1}L_{t}.$$

For ease of notation, however, we only identify the stochastic discount factor \(M\) up to a multiplicative constant; so for any arbitrage-free (non-dividend) price process \((p_{t})\), we have the pricing equation

$$p_{t}=\frac{1}{M_{t}}E^{P}\left[ M_{T}p_{T} \,\vert\, \mathcal{F}_{t} \right], \quad t\le T.$$

Our goal is to obtain expressions for \({\varphi}\), \(r\) and \(M\).
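The pricing relation can be checked numerically in the simplest setting. The Python sketch below assumes, hypothetically, a constant Girsanov kernel \({\varphi}\), a constant short rate fixed by the no-arbitrage relation \(r=\alpha+\sigma{\varphi}\), technology dynamics \(dS_{t}=\alpha S_{t}\,dt+\sigma S_{t}\,dW_{t}\), and \(M_{t}=B_{t}^{-1}L_{t}\) normalised so that \(M_{0}=1\). It samples \(W_{T}\) exactly and estimates \(E^{P}[M_{T}S_{T}]\), which should be close to \(S_{0}\), and \(E^{P}[M_{T}]\), which should be close to the bond price \(e^{-rT}\). The function name and parameter values are assumptions.

```python
import math
import random


def sdf_price_check(alpha, sigma, phi, T, s0=1.0, n_paths=100_000, seed=0):
    """Monte Carlo estimates of E[M_T S_T] and E[M_T] for M_t = L_t / B_t, M_0 = 1,
    with constant phi and r chosen by the no-arbitrage relation r = alpha + sigma * phi.
    Returns (E[M_T S_T], E[M_T], r)."""
    r = alpha + sigma * phi
    rng = random.Random(seed)
    sum_ms = sum_m = 0.0
    for _ in range(n_paths):
        w = rng.gauss(0.0, math.sqrt(T))                                # W_T under P
        m = math.exp(-r * T + phi * w - 0.5 * phi ** 2 * T)             # L_T / B_T
        s = s0 * math.exp((alpha - 0.5 * sigma ** 2) * T + sigma * w)   # S_T
        sum_ms += m * s
        sum_m += m
    return sum_ms / n_paths, sum_m / n_paths, r
```

A short calculation shows that for a general constant \(r\) the first expectation equals \(S_{0}e^{(\alpha+\sigma{\varphi}-r)T}\), so it matches \(S_{0}\) exactly when \(r=\alpha+\sigma{\varphi}\), which is the sense in which the relation makes \(S/B\) a \(Q\)-martingale.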

9.4 The extended HJB equation

In order to determine the intrapersonal equilibrium, we use the results from Sect. 6. At this level of generality, we are not able to provide a priori conditions on the model which guarantee that the conditions of the verification theorem are satisfied. For the general theory below (but of course not for the concrete example presented later), we are thus forced to make the following ad hoc assumption.

Assumption 9.5

We assume that the model under study is such that the verification theorem is in force.

This assumption of course has to be checked for every concrete application. In Sect. 9.8.2, we consider the example of power utility, and for this case we can in fact prove (Sect. 9.8.3) that the assumption is satisfied.

In the present case, we have \(V(t,x)=f(t,x,t,x)\) and it is easy to see that we can write the extended HJB equation as

and \(f^{sy}\) is determined by

with the probabilistic representation

The term \({\mathbf{A}}^{u,c}f^{tx}(t,x)\) is given by

where \(f\) and the derivatives are evaluated at \((t,x,t,x)\) and we use the notation

The first order conditions for an interior optimum are

9.5 Determining market equilibrium

In order to determine the market equilibrium, we use the equilibrium condition \(\hat{u}=1\). Plugging this into (9.2), we immediately obtain our first result.

Proposition 9.6

With the assumptions as above, the following hold:

- The equilibrium short rate is given by

  $$ r(t,x)=\alpha+ \sigma^{2} \frac{xf_{xx}(t,x,t,x)}{f_{x}(t,x,t,x)}. \tag{9.3}$$

- The equilibrium Girsanov kernel \({\varphi}\) is given by

  $$ {\varphi}(t,x)=\sigma\frac{xf_{xx}(t,x,t,x)}{f_{x}(t,x,t,x)}. \tag{9.4}$$

- The extended equilibrium HJB system has the form

  $$ \begin{array}{rcl} \displaystyle U(t,x,t,\hat{c}) +f_{t}+ ( \alpha x -\hat{c} )f_{x}+\frac{1}{2}x^{2} \sigma^{2} f_{xx}&=&0, \\ {\mathbf{A}}^{\hat{{\mathbf{c}}}}f^{sy}(t,x)+U\left( s,y,t,\hat{c}(t,x) \right) &=&0. \end{array} \tag{9.5}$$

- The equilibrium consumption \(\hat{c}\) is determined by the first order condition

  $$U_{c}(t,x,t,\hat{c})=f_{x}(t,x,t,x). $$

- The term \({\mathbf{A}}^{\hat{{\mathbf{c}}}}f^{tx}(t,x)\) is given by

  $${\mathbf{A}}^{\hat{{\mathbf{c}}}}f^{tx}(t,x)=f_{t}+ x\left( \alpha-r \right) f_{x}+ (rx-\hat{c})f_{x}+\frac{1}{2}x^{2} \sigma^{2} f_{xx}. $$

- The equilibrium dynamics for \(X\) are given by

  $$dX_{t}=( \alpha X_{t} -\hat{c}_{t} )\,dt + X_{t}\sigma\, dW_{t}. $$

Proof

The formula (9.4) follows from (9.3) and (9.1). The other results are obvious. □
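The step from \(\hat{u}=1\) to (9.3) and (9.4) can be spelled out as follows. This worked equation assumes that the \(u\)-dependent part of \({\mathbf{A}}^{u,c}f^{tx}(t,x)\) is \(xu(\alpha-r)f_{x}+\frac{1}{2}u^{2}x^{2}\sigma^{2}f_{xx}\) (the form that reduces to the generator displayed in Proposition 9.6 when \(u=1\)), uses the no-arbitrage relation \(r=\alpha+\sigma{\varphi}\), and evaluates all derivatives of \(f\) at \((t,x,t,x)\):

$$
\begin{aligned}
0 &= \frac{\partial}{\partial u}\Big[ xu(\alpha-r)f_{x}+\tfrac{1}{2}u^{2}x^{2}\sigma^{2}f_{xx} \Big]_{u=1}
   = x(\alpha-r)f_{x}+x^{2}\sigma^{2}f_{xx}
   \;\Longrightarrow\; r=\alpha+\sigma^{2}\frac{xf_{xx}}{f_{x}},\\
{\varphi} &= \frac{r-\alpha}{\sigma}=\sigma\frac{xf_{xx}}{f_{x}}.
\end{aligned}
$$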

9.6 Recap of standard results

We can compare the above results with the standard case where the utility functional for the agent is of the time-consistent form

In this case, we have a standard HJB equation of the form

and the equilibrium quantities are given by the well-known expressions

We note the strong structural similarities between the old and the new formulas, but we also note important differences. Let us take the formulas for the equilibrium short rate \(r\) as an example. We recall the standard and the time-inconsistent formulas

For the time-inconsistent case, we have the relation \(V^{e}(t,x)=f(t,x,t,x)\) (where temporarily, and for the sake of clarity, \(V^{e}\) denotes the equilibrium value function); so it is tempting to think that we should be able to write (9.9) as

which would be structurally identical to (9.8). This, however, turns out to be incorrect: Since \(V^{e}(t,x)=f(t,x,t,x)\), we have \(V_{x}^{e}(t,x)=f_{x}(t,x,t,x) + f_{y}(t,x,t,x)\), where \(f_{y}\) is the partial derivative \(\frac{\partial f}{\partial y}(t,x,s,y)\), and a similar argument holds for the term \(V_{xx}^{e}\). We thus see that formally replacing \(V\) by \(V^{e}\) in (9.8) does not give (9.9).

9.7 The stochastic discount factor

We now go on to investigate our main object of interest, namely the equilibrium stochastic discount factor \(M\). We recall from general arbitrage theory that

$$M_{t}=B_{t}^{-1}L_{t},$$

where \(L\) is the likelihood process \(L_{t}=\frac{dQ}{dP} \vert_{\mathcal{F}_{t}}\), with \(dL_{t}=L_{t}{\varphi}_{t}dW_{t}\). This immediately gives the dynamics of \(M\) as

$$dM_{t}=-r_{t}M_{t}\,dt+M_{t}{\varphi}_{t}\,dW_{t}, \tag{9.10}$$

so we can identify the short rate \(r\) and the Girsanov kernel \({\varphi}\) from the dynamics of \(M\).
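To see the drift \(-r\) and the volatility \({\varphi}\) at work, the following minimal Python sketch assumes constant, hypothetical values of \(r\) and \({\varphi}\), discretises \(dM_{t}=-rM_{t}\,dt+M_{t}{\varphi}\,dW_{t}\) with the Euler–Maruyama scheme, and compares the result with the closed form \(M_{T}=B_{T}^{-1}L_{T}=\exp(-rT+{\varphi}W_{T}-\frac{1}{2}{\varphi}^{2}T)\) on the same Brownian path. The function name and parameters are assumptions.

```python
import math
import random


def sdf_paths(r, phi, T, n_steps=10_000, seed=1):
    """Euler-Maruyama approximation and exact value of M_T on one Brownian path, for
    dM = -r M dt + phi M dW, M_0 = 1, with constant r and phi."""
    rng = random.Random(seed)
    dt = T / n_steps
    m_euler, w = 1.0, 0.0
    for _ in range(n_steps):
        dW = rng.gauss(0.0, math.sqrt(dt))
        m_euler += -r * m_euler * dt + phi * m_euler * dW
        w += dW                                                    # accumulate W_T
    m_exact = math.exp(-r * T + phi * w - 0.5 * phi ** 2 * T)      # B_T^{-1} L_T
    return m_euler, m_exact
```

Refining the time step shrinks the gap between the two values, so the pair \((r,{\varphi})\) read off from the dynamics of \(M\) indeed determines \(M\).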

From Proposition 9.6, we know \(r\) and \({\varphi}\), so in principle we have in fact already determined \(M\); but we now want to investigate the relation between \(M\), the direct utility function \(U\), and the indirect utility function \(f\) in the extended HJB equation.

We recall from standard theory that for the usual time-consistent case, the (non-normalized) stochastic discount factor \(M\) is given by

or equivalently by

along the equilibrium path. In our present setting, we have

so a conjecture could perhaps be that the stochastic discount factor for the time-inconsistent case is given by one of the formulas

along the equilibrium path. In order to check if any of these formulas are correct, we only have to compute the corresponding differential \(dM_{t}\) and check whether it satisfies (9.10). It is then easily seen that none of the above formulas for \(M\) is correct. The situation is thus more complicated, and we now go on to derive the correct representation of the stochastic discount factor.

9.7.1 A representation formula for \(M\)

We now go back to the time-inconsistent case with utility of the form

We present below a representation for the stochastic discount factor \(M\) in the production economy, but first we need to introduce some new notation.

Definition 9.7

Let \(X\) be a (possibly vector-valued) semimartingale and \(Y\) an optional process. For a \(C^{2}\) function \(f(x,y)\) we introduce the “partial stochastic differential” \(\partial_{x}\) by the formula

The intuitive interpretation of this is that

and we have the following proposition, which generalizes the standard result for the time-consistent theory.

Theorem 9.8

The stochastic discount factor \(M\) is determined by

where the partial differential \(\partial_{t,x}\) only operates on the variables \((t,x)\) in \(f_{x}(t,x,s,y)\). Alternatively, we can write

where \(Z\) is determined by

Remark 9.9

Note again that the operator \(\partial_{t,x}\) in (9.13) only acts on the first occurrence of \(t\) and \(X_{t}\) in \(f_{x} \left( {t,X_{t},t, X_{t}} \right) \), whereas the operator \(d\) acts on the entire process \(t \mapsto f_{x}\left( {t,X_{t},t, X_{t}} \right) \).

Proof of Theorem 9.8

Formulas (9.12) and (9.13) follow from (9.11) and the first order condition \(U_{c}( {t,X_{t},t, \hat{c}_{t}} ) =f_{x} ( {t,X_{t},t, X_{t}} )\). It thus remains to prove (9.11).

From (9.10), it follows that we need to show that

where \(r\) and \({\varphi}\) are given by (9.3) and (9.4). Applying Itô’s formula and the definition of \(\partial_{t,x}\), we obtain

where

From (9.4), we see that indeed \(B(t,x)={\varphi}(t,x)\); so, using (9.3), it remains to show that

To show this, we differentiate the equilibrium HJB equation (9.5), use the first order condition \(U_{c}=f_{x}\), and obtain

where \(f_{tx}=f_{tx}(t,x,t,x)\) and similarly for other derivatives, \(\hat{c}=\hat{c}(t,x)\) and \(U_{x}=U_{x}( {t,x,t,\hat{c}(t,x)} )\). From the extended HJB system, we also recall the PDE for \(f^{sy}\) as

Differentiating this equation with respect to the variable \(y\) and evaluating at \((t,x,t,x)\) and \(\hat{c}(t,x)\), we obtain

We can now plug this into (9.16) to obtain

Plugging this into (9.14), we can write \(A\) as

which is exactly (9.15). □

9.8 Production economy with non-exponential discounting

A case of particular interest occurs when the utility function is of the form

so the utility functional has the form

9.8.1 Generalities

In the case of non-exponential discounting, it is natural to consider the case with infinite horizon. We thus assume that \(T= \infty\) so that we have the functional

The function \(f(t,x,s,y)\) will now be of the form \(f(t,x,s)\), and because of the time-invariance, it is natural to look for time-invariant equilibria where

Observing that \(f_{x}(t,x,t)=g_{x}(0,x)=V_{x}(x)\) and similarly for second order derivatives, we may now restate Proposition 9.6.

Proposition 9.10

With the assumptions as above, the following hold:

- The equilibrium short rate is given by

  $$r(x)=\alpha+ \sigma^{2} \frac{xV_{xx}(x)}{V_{x}(x)}. $$

- The equilibrium Girsanov kernel \({\varphi}\) is given by

  $${\varphi}(x)=\sigma\frac{xV_{xx}(x)}{V_{x}(x)}. $$

- The extended equilibrium HJB system has the form

  $$\begin{aligned} U(\hat{c}) +g_{t}(0,x)+ ( \alpha x -\hat{c} )g_{x}(0,x)+\frac{1}{2}x^{2} \sigma^{2} g_{xx}(0,x) &=0, \\ {\mathbf{A}}^{\hat{{\mathbf{c}}}}g(t,x)+\beta(t)U\big( \hat{c}(x) \big) &=0. \end{aligned}$$

- The function \(g\) has the representation

  $$g(t,x)=E_{0,x}\left[ \int_{0}^{\infty}\beta(t+ s)U( \hat{c}_{s} )\,ds \right] . $$

- The equilibrium consumption \(\hat{c}\) is determined by the first order condition

  $$ U_{c}(\hat{c})=g_{x}(0,x). \tag{9.17}$$

- The term \({\mathbf{A}}^{\hat{{\mathbf{c}}}}g(t,x)\) is given by

  $$ {\mathbf{A}}^{\hat{{\mathbf{c}}}}g(t,x)=g_{t}(t,x)+ \left( \alpha x - \hat{c}(x) \right) g_{x}(t,x)+ \frac{1}{2}x^{2} \sigma^{2} g_{xx}(t,x). $$

- The equilibrium dynamics of \(X\) are given by

  $$ dX_{t}=( \alpha X_{t} -\hat{c}_{t} )\,dt + X_{t}\sigma\, dW_{t}. \tag{9.18}$$
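The representation of \(g\) can be evaluated in closed form in a special case, which the Python sketch below uses to cross-check a direct numerical integration. All of the following are hypothetical assumptions: power utility \(U(c)=c^{1-\gamma}/(1-\gamma)\); consumption proportional to wealth, \(\hat{c}(x)=\lambda x\), with \(\lambda\) a given constant rather than the equilibrium value; and the discount function \(\beta(\tau)=we^{-\rho_{1}\tau}+(1-w)e^{-\rho_{2}\tau}\), which is non-exponential when \(\rho_{1}\neq\rho_{2}\) and \(0<w<1\). Under (9.18), \(X\) is then a geometric Brownian motion, \(E_{0,x}[U(\lambda X_{s})]=U(\lambda x)e^{\kappa s}\) with \(\kappa=(1-\gamma)(\alpha-\lambda-\frac{1}{2}\gamma\sigma^{2})\), and \(g(t,x)=U(\lambda x)\big[we^{-\rho_{1}t}/(\rho_{1}-\kappa)+(1-w)e^{-\rho_{2}t}/(\rho_{2}-\kappa)\big]\) whenever \(\rho_{1},\rho_{2}>\kappa\).

```python
import math


def crra(c, gamma):
    """Power utility U(c) = c^(1-gamma) / (1-gamma), gamma > 0, gamma != 1."""
    return c ** (1.0 - gamma) / (1.0 - gamma)


def growth_rate(alpha, sigma, gamma, lam):
    """kappa with E[U(lam X_s)] = U(lam x) exp(kappa s) when dX = (alpha - lam) X dt + sigma X dW."""
    return (1.0 - gamma) * (alpha - lam - 0.5 * gamma * sigma ** 2)


def beta(tau, w, rho1, rho2):
    """Hypothetical non-exponential discount function: a mixture of two exponentials."""
    return w * math.exp(-rho1 * tau) + (1.0 - w) * math.exp(-rho2 * tau)


def g_closed_form(t, x, alpha, sigma, gamma, lam, w, rho1, rho2):
    """g(t, x) = E_{0,x}[ int_0^inf beta(t + s) U(lam X_s) ds ] in closed form."""
    kappa = growth_rate(alpha, sigma, gamma, lam)
    if min(rho1, rho2) <= kappa:
        raise ValueError("integral diverges: need rho1, rho2 > kappa")
    weight = (w * math.exp(-rho1 * t) / (rho1 - kappa)
              + (1.0 - w) * math.exp(-rho2 * t) / (rho2 - kappa))
    return crra(lam * x, gamma) * weight


def g_quadrature(t, x, alpha, sigma, gamma, lam, w, rho1, rho2, horizon=500.0, n=100_000):
    """Trapezoidal approximation of the same integral, truncated at `horizon`."""
    kappa = growth_rate(alpha, sigma, gamma, lam)
    h = horizon / n

    def integrand(s):
        return beta(t + s, w, rho1, rho2) * math.exp(kappa * s)

    total = 0.5 * (integrand(0.0) + integrand(horizon))
    total += sum(integrand(i * h) for i in range(1, n))
    return crra(lam * x, gamma) * total * h
```

With \(w=1\), the discounting is exponential and \(g(t,x)=e^{-\rho_{1}t}g(0,x)\); for \(0<w<1\), the ratio \(g(t,x)/g(0,x)\) is a mixture of two exponentials in \(t\) rather than a single one, reflecting the non-exponential discounting.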

We see that the short rate \(r\) and the Girsanov kernel \({\varphi}\) have exactly the same structural form as the standard case formulas (9.6) and (9.7). We now move to the stochastic discount factor and after some calculations, we have the following version of Theorem 9.8.

Proposition 9.11

The stochastic discount factor \(M\) is determined by

where \(g_{x}\) is evaluated at \((0,X_{t})\). Alternatively, we can write \(M\) as

9.8.2 Power utility

We now specialize to the case of a constant relative risk aversion (CRRA) utility of the form

with \(\gamma> 0\), \(\gamma\neq1\). We make the obvious ansatz

where \(a\) and \(b\) are deterministic functions of time. The natural boundary conditions are

From the first order condition (9.17) for \(c\), we have the equilibrium consumption given by

where

From Proposition 9.10, the short rate and the Girsanov kernel are

The function \(a\) is determined by the equilibrium HJB equation for \(g\), which leads us to the linear ODE

with

9.8.3 Checking the verification theorem conditions for power utility

We now need to check that the conditions in the verification theorem of Sect. 7 are satisfied, i.e., we have to check that for each \(s\), the function \(f^{s}(t,x)\) belongs to \(L_{T}^{2}( {X^{ \hat{{\mathbf{c}}}}} )\) for all positive finite \(T\). From the equilibrium dynamics (9.18), we see that a function \(h(t,x)\) belongs to \(L_{T}^{2}( {X^{\hat{{\mathbf{c}}}}} )\) if and only if it satisfies the condition

where \(\hat{X}\) denotes the equilibrium state process. In our case, \(f^{s}(t,x)=g(t-s,x)\); so using (9.19), we only need to check the condition

Using the equilibrium dynamics of \(X\), it is easy to see that

where

So the integrability condition is indeed satisfied.

From this example with non-exponential discounting, we see that the risk-free rate and the Girsanov kernel depend only on the production opportunities in the economy and on the risk aversion of the agent. These objects are unaffected by the time-inconsistency stemming from non-exponential discounting. The equilibrium consumption, however, is determined by the discounting function of the representative agent.
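This observation can be illustrated with a few lines of Python. The sketch assumes, as a hypothetical stand-in for the power-utility solution, a marginal value of the form \(V_{x}(x)=ax^{-\gamma}\) with a constant \(a>0\) into which the discount function is absorbed. It evaluates the formulas of Proposition 9.10 with a central difference for \(V_{xx}\): the ratio \(xV_{xx}/V_{x}\) equals \(-\gamma\) for every \(a\), so \(r=\alpha-\gamma\sigma^{2}\) and \({\varphi}=-\gamma\sigma\) do not change when \(a\) changes. The function names and the constant \(a\) are assumptions.

```python
def equilibrium_r_phi(V_x, x, alpha, sigma, h=1e-5):
    """r(x) = alpha + sigma^2 x V_xx / V_x and phi(x) = sigma x V_xx / V_x
    (Proposition 9.10), with V_xx approximated by a central difference of V_x."""
    v_xx = (V_x(x + h) - V_x(x - h)) / (2.0 * h)
    ratio = x * v_xx / V_x(x)
    return alpha + sigma ** 2 * ratio, sigma * ratio


def power_marginal_value(a, gamma):
    """Hypothetical marginal value V_x(x) = a * x^(-gamma); a > 0 absorbs the discounting."""
    return lambda x: a * x ** (-gamma)


alpha, sigma, gamma = 0.08, 0.2, 3.0
print(equilibrium_r_phi(power_marginal_value(1.0, gamma), 1.3, alpha, sigma))
print(equilibrium_r_phi(power_marginal_value(7.3, gamma), 1.3, alpha, sigma))
```

Both calls return the same pair up to rounding, since the multiplicative constant cancels in \(xV_{xx}/V_{x}\).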

10 Conclusion and open problems

In this paper, we have presented a fairly general class of time-inconsistent stochastic control problems. Using a game-theoretic perspective, we have derived an extended HJB system of PDEs for the determination of the equilibrium control as well as for the equilibrium value function. We have proved a verification theorem, and we have studied a couple of concrete examples. For more examples and extensions, see the working paper [3]. Some obvious open research problems are the following:

- A theorem proving convergence of the discrete-time theory to the continuous-time limit. For the quadratic case, this is done in [8], but the general problem is open.
- An open and difficult problem is to provide conditions on primitives which guarantee that the functions \(V\) and \(f\) are regular enough to satisfy the extended HJB system.
- A related (hard) open problem is to prove existence and/or uniqueness for solutions of the extended HJB system.
- Another related problem is to give conditions on primitives which guarantee that the assumptions of the verification theorem are satisfied.
- The present theory depends critically on the Markovian structure. It would be interesting to see what can be done without this assumption.

References

[1] Barro, R.: Ramsey meets Laibson in the neoclassical growth model. Q. J. Econ. 114, 1125–1152 (1999)

[2] Basak, S., Chabakauri, G.: Dynamic mean-variance asset allocation. Rev. Financ. Stud. 23, 2970–3016 (2010)

[3] Björk, T., Khapko, M., Murgoci, A.: Time inconsistent stochastic control in continuous time: Theory and examples. Working paper (2016). Available online at http://arxiv.org/abs/1612.03650

[4] Björk, T., Murgoci, A.: A general theory of Markovian time inconsistent stochastic control problems. Working paper (2010). Available online at https://ssrn.com/abstract=1694759

[5] Björk, T., Murgoci, A.: A theory of Markovian time-inconsistent stochastic control in discrete time. Finance Stoch. 18, 545–592 (2014)

[6] Björk, T., Murgoci, A., Zhou, X.Y.: Mean-variance portfolio optimization with state dependent risk aversion. Math. Finance 24, 1–24 (2014)

[7] Cox, J., Ingersoll, J., Ross, S.: An intertemporal general equilibrium model of asset prices. Econometrica 53, 363–384 (1985)

[8] Czichowsky, C.: Time-consistent mean-variance portfolio selection in discrete and continuous time. Finance Stoch. 17, 227–271 (2013)

[9] Ekeland, I., Lazrak, A.: The golden rule when preferences are time inconsistent. Math. Financ. Econ. 4, 29–55 (2010)

[10] Ekeland, I., Mbodji, O., Pirvu, T.A.: Time-consistent portfolio management. SIAM J. Financ. Math. 3, 1–32 (2012)

[11] Ekeland, I., Pirvu, T.A.: Investment and consumption without commitment. Math. Financ. Econ. 2, 57–86 (2008)

[12] Goldman, S.: Consistent plans. Rev. Econ. Stud. 47, 533–537 (1980)

[13] Harris, C., Laibson, D.: Dynamic choices of hyperbolic consumers. Econometrica 69, 935–957 (2001)

[14] Harris, C., Laibson, D.: Instantaneous gratification. Q. J. Econ. 128, 205–248 (2013)

[15] Khapko, M.: Asset pricing with dynamically inconsistent agents. Working paper (2015). Available online at https://ssrn.com/abstract=2854526

[16] Krusell, P., Smith, A.: Consumption and savings decisions with quasi-geometric discounting. Econometrica 71, 366–375 (2003)

[17] Luttmer, E., Mariotti, T.: Subjective discounting in an exchange economy. J. Polit. Econ. 111, 959–989 (2003)

[18] Marín Solano, J., Navas, J.: Consumption and portfolio rules for time-inconsistent investors. Eur. J. Oper. Res. 201, 860–872 (2010)

[19] Pedersen, J.L., Peskir, G.: Optimal mean-variance portfolio selection. Math. Financ. Econ. 11, 137–160 (2017)

[20] Pedersen, J.L., Peskir, G.: Optimal mean-variance selling strategies. Math. Financ. Econ. 10, 203–220 (2016)

[21] Peleg, B., Yaari, M.E.: On the existence of a consistent course of action when tastes are changing. Rev. Econ. Stud. 40, 391–401 (1973)

[22] Pirvu, T.A., Zhang, H.: Investment-consumption with regime-switching discount rates. Math. Soc. Sci. 71, 142–150 (2014)

[23] Pollak, R.: Consistent planning. Rev. Econ. Stud. 35, 185–199 (1968)

[24] Strotz, R.: Myopia and inconsistency in dynamic utility maximization. Rev. Econ. Stud. 23, 165–180 (1955)

[25] Vieille, N., Weibull, J.: Multiple solutions under quasi-exponential discounting. Econ. Theory 39, 513–526 (2009)

Acknowledgements

The authors are greatly indebted to the Associate Editor, two anonymous referees, Ivar Ekeland, Ali Lazrak, Martin Schweizer, Traian Pirvu, Suleyman Basak, Mogens Steffensen, and Eric Böse-Wolf for very helpful comments.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Björk, T., Khapko, M. & Murgoci, A. On time-inconsistent stochastic control in continuous time. Finance Stoch 21, 331–360 (2017). https://doi.org/10.1007/s00780-017-0327-5

DOI: https://doi.org/10.1007/s00780-017-0327-5

Keywords

- Time-consistency

- Time-inconsistency

- Time-inconsistent control

- Dynamic programming

- Stochastic control

- Bellman equation

- Hyperbolic discounting

- Mean-variance

- Equilibrium