Abstract

Driving an automated vehicle requires a clear understanding of its automation capabilities and of the resulting duties on the driver’s side. This is true across all levels of automation but especially so on SAE Levels 3 and below, where the driver has an active role in performing and/or monitoring the driving task. If the automation capabilities and a driver’s understanding of them do not match, misuse can occur, resulting in decreased safety. In this paper, we present the results from a simulator study that investigated driving mode awareness support via ambient lights across automation levels 0, 2, and 3. We found lights in the steering wheel to be useful for momentary indication and lights below the windshield for permanent indication of automation-relevant information, whereas lights in the footwell appeared to have little to no positive effect on driving mode awareness.

1 Introduction

With higher degrees of automation, vehicles can perform an increasing number of driving tasks in an ever wider range of driving environments. As long as full automation (Level 5 on the SAE scale [21]) is not achieved, both the range of tasks that can be performed by the vehicle on its own and the range of contexts the automated vehicle can be operated in (also referred to as the Operational Design Domain (ODD)) are limited. Properly interacting with any vehicle requires that the driver knows about its capabilities and limitations. This is especially the case when the vehicle is equipped with automation functions that can be manually activated or deactivated. Different degrees of automation require different levels of involvement, and making these known to the driver (thereby ensuring mode awareness [7]) is part of appropriate interaction design.

As very recent incidents have shown [15], this is unfortunately not always clear to drivers of modern vehicles. With vehicle automation technology being still relatively novel and rapidly advancing at the same time, it can be expected that properly calibrating the public’s and drivers’ perception of vehicle automation will remain a challenge for quite some time [1, 38]. As one of the forerunners of widespread vehicle automation on public roads, Tesla uses the hands-on-steering wheel visual metaphor (already before but especially after the incident in 2016 [12]) to communicate the active driver and observer role on SAE Level 2, with others using similar designs [2, 48].

The spectrum of vehicle automation and driver involvement is greater, however, than what can be summed up via the dichotomy of hands-on versus hands-off the steering wheel. Higher levels of automation also allow the driver to stop monitoring the driving environment and engage in tasks that are not related to the driving for longer periods of time and/or with higher levels of cognitive engagement. Such tasks are referred to as Non-Driving-Related Activities (NDRAs). NDRAs encompass anything that is not directly related to operating the vehicle, including simple and short tasks such as adjusting the radio volume or more involved ones such as reading a newspaper. Thus, NDRAs are relevant and a potential safety hazard from SAE Level 0 onward.

Designing for the driver space is a balancing act between providing information and causing distraction, as the driver role is complex and requires attention divided between the driving environment, the vehicle controls, and the in-vehicle indicators already present. As such, additional indicators need to be added sparingly and with care. Ambient lights have been proposed for calibrating drivers’ attention in automated vehicles, in particular for transitions on SAE Level 3 [17, 32]. We adapted this idea for mode awareness and NDRA support, intending to use (ambient) lights as secondary indicators that communicate the current degree of automation (and, by extension, whether or which NDRAs can be performed) to the driver without capturing their attention to the same degree as traditional visual indicators would. In this paper, we present the results from a simulator study in which we investigated a setup consisting of ambient lights on the steering wheel, below the windshield, and in the footwell.

The study focused on investigating the suitability of ambient lights to assist the driver of an automated vehicle. More specifically, the aim was to support communicating the current degree of automation and whether NDRAs can be performed, as well as to assist with transitions between manual and automated driving modes. To this end, the study was guided by the following research questions:

- RQ1: Is an ambient light interface installed in the steering wheel, below the windshield, and in the footwell an effective transition support for the driver between manual and automated driving modes in terms of (a) being aware of the current mode of vehicle automation and (b) understanding the currently required driver engagement (driving tasks, NDRAs)?
- RQ2: Does the cognitive workload increase/decrease when an ambient light interface is active?
- RQ3: Does the proposed light interface influence the driver’s trust in the vehicle?
- RQ4: Is there an advantage when using animated vs. static lights in terms of RQs 1–3?

2 Related work

This section gives an overview of current interfaces to support mode awareness, ambient light displays and non-driving-related activities (NDRAs) in automated driving.

2.1 Mode awareness

Mode awareness can be described as the driver’s awareness of the current level of automation, the capabilities of the system, and their responsibilities in the current mode [39]. Since the current level of automation is part of the driving situation, mode awareness can be considered a part of Situation Awareness [4] in the narrower sense, limited to systems and contexts with automated functions that can be activated or deactivated.

Mode confusion is a common problem in every type of automation-human interaction, and avoiding it is crucial for building trust and safety in automated driving. Different fields have worked on developing interfaces, tools, and methods to support drivers’ mode awareness; however, current solutions either cause an overload of information or lack the depth of information needed to improve mode awareness [8]. Mode information is most commonly displayed as written information, with or without abbreviations, on the central information cluster or on a different type of HMI (for example, a HUD) [50]. In general, this written information tells the driver whether an automation mode (automation assistant, autopilot) is active or not [10, 11, 40, 41].

In some cases, this information is represented with graphical elements such as icons, symbols, and flashing elements that draw attention to the screen displaying the information [19, 27, 42]. To prevent excessive use of visual displays, several multi-modal interfaces have been developed. Among these, auditory feedback is often used to notify the driver about certain actions while avoiding further visual distraction [26, 42, 47]. Haptic or vibro-tactile interfaces on the steering wheel or the seat have also been designed and tested [22, 23, 28, 43, 45]. Beyond these, olfactory, thermal, and pitch motion feedback have been explored as complementary solutions to screens inside the car [5, 35, 51]. However, a majority of studies were conducted with ambient light cues in the peripheral vision of the driver.

2.2 Ambient light displays

To date, ambient light displays in peripheral vision have been used to support drivers in many different conditions. These displays are often mounted at the bottom of the windshield, on the sides, along the A-pillar, and/or on the steering wheel [32]. Several studies address specific tasks such as lane change decisions [31, 33], speed regulation [36], and reverse parking [18]. In the context of automated driving, ambient lights have been developed to provide warning signals and, in most cases, cues for the driver to act, e.g. aiming to reduce takeover times without increasing the workload [3, 28] or to increase trust [24, 30]. During highly automated driving, peripheral cues could be beneficial for overcoming motion sickness [13]. Only a few studies have used ambient light interfaces to support mode awareness [10, 11], in most cases communicating only binary (on/off) information to the driver [8, 37].

2.3 Non-driving-related activities (NDRAs)

The main advantage of automated driving for drivers is the opportunity to engage in non-driving-related activities (NDRAs). However, distraction and high engagement caused by NDRAs could become one of the major challenges for the future of automated driving. Studies investigating drivers’ needs and preferences for NDRAs during automated driving showed that the most desired activities centre on using mobile and multimedia applications in the car [6, 16, 44]. Furthermore, in one of these studies, participants ranked touch screens and integrated keyboards highest as preferred input technologies [44]. In simulation studies, NDRAs are used as a distraction to cause cognitive overload for the driver, to investigate the level of engagement, to measure trust in automation [6], and to measure the time required for takeovers in automated driving [9, 25, 34, 52,53,54].

3 The ambient light interface

Our ambient light interface is intended as a supportive display that can communicate baseline information on a high level of abstraction. Additionally, it shall be able to direct the driver’s attention towards relevant parts of the cabin for important observation or interaction tasks, based on the current degree of automation.

3.1 Design principles and automated driving levels

The interface was not intended to be ambient in the sense of illuminating the entire cabin. Rather, the overall idea behind the light interface was to provide visual background support in the areas of the cabin that are relevant to the main tasks when driving: lateral control, longitudinal control, and monitoring the driving environment. Thus, we used lights in the steering wheel (lateral), the footwell (longitudinal), and below the windshield (monitoring).

One of the intended main purposes of the light interface was to visualise different degrees of automation with distinct lighting. The SAE J3016 standard defines six levels of automation from Level 0 to Level 5 [21]. The range consists of no automation (0), driver assistance (1), partial automation (2), conditional automation (3), high automation (4), and full automation (5). From a human-centred perspective, Levels 2 and 3 are considered particularly challenging [37], as both still require considerable involvement on the part of the human in parallel to the automation.

The SAE Levels of Automation [21] are defined on a system level, which means that a vehicle can be equipped with systems of a certain level (depending on how much of the driving task the system is able to perform). These systems can be active or inactive, requiring more or less driver attention or input as a result, but it is, by definition, not possible to transition from one SAE Level to another, nor is it technically correct to speak of a “level X automated vehicle” rather than an automated system according to the standard.

Therefore, in order to be able to investigate different levels of driver engagement during the same drive, we defined Automated Driving Levels (ADLs) based on the SAE Levels of Automation [21]. An ADL applies to the vehicle as a whole rather than to an individual system and is to be understood as a driving mode. This also means that the vehicle can switch between ADLs while driving. E.g., if an SAE Level 3 system is active, the vehicle is driving in ADL3. When that system is inactive, the vehicle reverts to ADL0 (manual mode), or to another ADL if another automation system is activated as the Level 3 system is deactivated (e.g. ADL1 if cruise control is activated). Thus, as far as the automation on the functional level is concerned, the ADLs correspond to the SAE Levels. The main difference relevant to the purpose of this paper is that, by translating the SAE Levels into ADLs, system-level automation can be reflected on the same scale while driving. While this might seem a technicality, it is important to remain consistent with the technical standards and to acknowledge deviations from them.
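The ADL definition above can be read as a simple rule. The minimal Python sketch below assumes, as an illustration rather than the authors' implementation, that the vehicle-level ADL is the highest SAE level among the currently active systems, falling back to ADL0 when no system is active; the function name `current_adl` is hypothetical.

```python
# Hypothetical sketch: the vehicle-level ADL is assumed to equal the highest
# SAE level among the automation systems that are currently active.

VALID_SAE_LEVELS = range(0, 6)  # SAE J3016 defines Levels 0 to 5


def current_adl(active_system_levels):
    """Return the ADL implied by the SAE levels of all currently active systems."""
    levels = set(active_system_levels)
    for level in levels:
        if level not in VALID_SAE_LEVELS:
            raise ValueError(f"not an SAE level: {level}")
    # No active automation system means manual driving (ADL0).
    return max(levels, default=0)


# Examples mirroring the text:
print(current_adl({3}))    # Level 3 system active -> 3
print(current_adl({1}))    # Level 3 system off, cruise control on -> 1
print(current_adl(set()))  # nothing active -> 0 (manual)
```

Taking the maximum keeps the rule stateless: the ADL follows from whichever systems are active at any moment, which is what allows the vehicle to switch between ADLs while driving.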

For this study, we focused on ADLs 0 (manual), 2, and 3 (corresponding to SAE Levels 0, 2, and 3, respectively). ADL0 simply because it is the standard mode of driving when no automation is active, ADL2 because it is the highest mode of automation that still requires full driver attention, and ADL3 as the lowest automation mode that enables extended NDRAs.

3.2 Automated driving level indicator colours

We used three colours to communicate the different ADLs: white, blue, and cyan. White indicates manual driving (ADL0), when the driver’s full control and attention are required. Blue indicates ADL2, in which the driver’s attention is still required but control is handed over to the vehicle. Cyan indicates ADL3. Only on ADL3 is the driver allowed to perform NDRAs while taking their attention off the road.
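The colour scheme and the associated driver duties can be summarised as a small lookup table. The following sketch is illustrative only; the class `AdlIndication`, its field names, and the helper `may_engage_in_ndra` are hypothetical, and the duties are paraphrased from the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdlIndication:
    colour: str               # indicator colour used by the interface
    driver_controls: bool     # driver performs lateral/longitudinal control
    attention_required: bool  # driver must monitor the driving environment
    ndras_allowed: bool       # driver may take their attention off the road


# Only the ADLs investigated in the study (0, 2, 3) are listed.
ADL_SCHEME = {
    0: AdlIndication("white", driver_controls=True, attention_required=True, ndras_allowed=False),
    2: AdlIndication("blue", driver_controls=False, attention_required=True, ndras_allowed=False),
    3: AdlIndication("cyan", driver_controls=False, attention_required=False, ndras_allowed=True),
}


def may_engage_in_ndra(adl: int) -> bool:
    """True only in modes where taking attention off the road is permitted."""
    return ADL_SCHEME[adl].ndras_allowed


print(may_engage_in_ndra(2))  # False: ADL2 still requires the driver's attention
```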

These colours were chosen based on the results of an online preparatory survey (N=68). In it, we asked participants to rate eight colours (white, green, blue, cyan, magenta, red, orange, yellow) regarding the perceived degree of vehicle automation, driver engagement, and urgency. The survey consisted of two parts: an individual colour rating and a cross-colour ranking. In the rating part, the participants were presented with an image of a vehicle cockpit overlaid with one of the investigated colours (see Fig. 1) and a context description (a vehicle equipped with automation functions that can also be driven fully manually).

They were then asked to rate the perceived degree of vehicle automation, driver engagement, and urgency associated with that colour in the situation on a scale from 0 to 100 via three separate sliders. This was repeated for every colour (eight in total). At the end, the participants were shown all colours at once (only the base colour as a coloured square, without the cabin overlay) and asked to rank them from highest to lowest according to their perceived association with vehicle automation, driver engagement, and urgency. This was done to control for possible effects of the cabin overlay, as the base colour could look different (brighter or darker) in the cockpit depending on the surface.

As can be seen in Figs. 2, 3, and 4, ratings and rankings yielded similar results. Overall, cyan was the highest rated colour for vehicle automation, with blue the second highest. The inverse was true for driver engagement. White was rated high regarding driver engagement, lower for vehicle automation, and had the lowest association with urgency of all investigated colours, and was thus chosen as the colour for manual driving. Urgency ratings yielded the expected results, with red rated highest, followed by orange and then yellow. While magenta received slightly higher urgency ratings than yellow, it was also rated moderately high for driver engagement and vehicle automation, making its association less clear, so we decided not to use it.

3.3 LEDs and centre stack ADL icon display

The colours were implemented via light displays in the different parts of the interface as follows (see Fig. 5; modes are shown from left to right in the order manual, ADL2, ADL3): The footwell was illuminated in the current ADL colour and only changed once a switch to another ADL was completed. The steering wheel behaved like the footwell, except that at ADL2 only two segments on the upper part of the rim were illuminated instead of the entire wheel, to suggest that the hands should be on the wheel (only for ADL2, since in manual mode the driver had no choice but to steer manually). The light below the windshield showed the current ADL in the centre. When a transition was available or requested by the vehicle, the available ADL was additionally shown on the left and right edges, next to the permanent ADL display in the centre. If a transition request was not responded to, the warning colours extended across the frontal light, with the colour changing and the length of the illuminated segment increasing over three levels of escalation: yellow, orange, red; short, medium, maximum length (see bottom row of Fig. 5).
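The per-element behaviour described above can be captured as a function from interface state to light output. This is a hypothetical sketch: `render`, its parameters, and the string descriptions are invented for illustration, and it does not model timing or the exact LED segment positions of the study hardware.

```python
ADL_COLOURS = {0: "white", 2: "blue", 3: "cyan"}
# Escalation levels for an unanswered transition request: (colour, segment length).
ESCALATION = {1: ("yellow", "short"), 2: ("orange", "medium"), 3: ("red", "maximum")}


def render(current_adl, offered_adl=None, escalation_level=0):
    """Describe what each light element shows for a given interface state."""
    if escalation_level not in (0, 1, 2, 3):
        raise ValueError("escalation_level must be 0 (none) to 3")
    colour = ADL_COLOURS[current_adl]
    return {
        # Footwell: current ADL colour, changes only after a completed switch.
        "footwell": colour,
        # Wheel: same colour; at ADL2 only two upper rim segments are lit.
        "wheel": (colour, "two upper segments" if current_adl == 2 else "full rim"),
        # Windshield centre: permanent indication of the current ADL.
        "windshield_centre": colour,
        # Windshield edges: the ADL that is currently available or requested.
        "windshield_edges": ADL_COLOURS[offered_adl] if offered_adl is not None else None,
        # Warning segment across the frontal light, or None if no escalation.
        "windshield_warning": ESCALATION.get(escalation_level),
    }


# ADL2 active, ADL3 offered by the vehicle:
print(render(2, offered_adl=3))
```

Keeping the output a pure function of the state makes it easy to check that, for example, the footwell never shows a pending ADL before the switch is completed.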

As the light interface is intended to be a supportive interface, we wanted to make sure that the LEDs were not the sole carriers of information and that all the information communicated via the lights would be accessible via a standard visual display, as in any regular vehicle. For this reason, we applied the colour scheme to a standard icon language that used a hands-on-wheel and eye metaphor to communicate vehicle automation and resulting driver engagement for each ADL. Figure 6 shows the icons for each ADL and the escalation colours as they were implemented in the icon display. Again the icons in the top row show each driving mode from left to right in the order of manual (white), ADL2 (blue), and ADL3 (cyan). The icons in the bottom row show the icon changes in order of escalation when a transition request is not responded to (yellow-orange-red). The icons were shown in the centre stack above a touchscreen intended for NDRAs (see Fig. 8). This way, the icon display would always be within the cone of vision of the driver and we could exclude effects due to a badly visible baseline interface.

3.4 Technical implementation

The windshield, wheel, and footwell elements of the interface were based on individually addressable WS2812B LED strips with a density of 144 LEDs/m. The windshield and footwell strips were placed in non-diffusing silicone tubing and adhered to the frame of the simulator. The steering wheel was a 3D-printed, modified version of the Logitech G29 steering wheel. The original model file was altered to accommodate two buttons (for switching between manual and automated driving modes) as well as a tube in which the lights were installed. The tube cover was printed from natural PLA, whereas the rest of the wheel was printed from black PLA. The spokes of the wheel were reinforced with aluminium plates attached with two-part epoxy glue. As the icon display, we used a 4.7-inch iPhone 6 attached in the middle console. The phone was paired with a Bluetooth speaker attached to the driver’s headrest to play short audio cues whenever the ADL changed.

To ensure simultaneous operation, each of the light elements was controlled by a dedicated Arduino Uno microcontroller, and the Adafruit_NeoPixel library was used to control the LEDs. Each microcontroller’s RX pin (receiving TTL serial data) was connected to the TX pin (transmitting TTL serial data) of an HC-05 Bluetooth module. The Bluetooth modules received ASCII commands from the Bluetooth Terminal HC-05 Android application installed on an Android tablet. Each command was programmed to trigger a specific behaviour of one of the interface elements, enabling a Wizard-of-Oz control setup.
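The Wizard-of-Oz control path amounts to mapping single ASCII characters received over the serial link to interface behaviours. The study does not list the actual command characters, so the table below is entirely hypothetical, and the sketch is written in Python to show the parsing logic rather than the Arduino firmware.

```python
# Hypothetical command table: the study does not document the actual ASCII codes.
COMMANDS = {
    "w": ("set_adl", 0),      # manual: white
    "b": ("set_adl", 2),      # ADL2: blue
    "c": ("set_adl", 3),      # ADL3: cyan
    "0": ("offer_adl", 0),    # show the offered ADL on the windshield edges
    "2": ("offer_adl", 2),
    "3": ("offer_adl", 3),
    "e": ("escalate", None),  # advance yellow -> orange -> red
    "x": ("clear", None),     # remove offers and warnings
}


def parse_commands(received: bytes):
    """Translate the raw bytes received over serial into interface actions."""
    actions = []
    for byte in received:
        char = chr(byte)
        if char in "\r\n":
            # Terminal apps commonly append line endings; skip them.
            continue
        # Unknown characters are reported rather than silently dropped.
        actions.append(COMMANDS.get(char, ("unknown", char)))
    return actions


print(parse_commands(b"c\r\n"))  # [('set_adl', 3)]
```

On the Uno itself, the equivalent loop would poll `Serial.available()`, read one byte at a time with `Serial.read()`, and update the strip via the Adafruit_NeoPixel `setPixelColor()` and `show()` methods. Single-character commands keep the wizard's input fast and avoid any need for message framing.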

The colours displayed are identical across the elements of the interface. The colours were gamma-corrected to account for how they appear when displayed by the LEDs. The colours used in the study were the following (decimal R, G, B values): White (255, 255, 255), Cyan (0, 255, 255), Blue (0, 0, 255), Yellow (255, 255, 0), Orange (255, 125, 0), Red (255, 0, 0). The brightness of each element was adjusted individually based on its position in the driver’s line of sight, the diffusion of the light, and its exposure to ambient light within the simulator room, whether from the ceiling lights or the projector. The ceiling lights in the simulator room were dimmed to approximately 30% in order to achieve, together with the light from the projector, lighting close to regular daylight conditions (see Fig. 8).
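Gamma correction compensates for the non-linear way LED output is perceived. The sketch below applies a simple power-law curve per channel, followed by a per-element brightness factor. The exponent 2.6 and the assumption that the listed RGB values are the inputs to the correction are ours; the Adafruit_NeoPixel library also provides its own lookup-based correction via `gamma8()` and `gamma32()`.

```python
# Assumed gamma exponent; the study does not state the value used.
GAMMA = 2.6

# Colours as listed in the text (decimal R, G, B), assumed to be the
# values before correction.
COLOURS = {
    "white": (255, 255, 255),
    "cyan": (0, 255, 255),
    "blue": (0, 0, 255),
    "yellow": (255, 255, 0),
    "orange": (255, 125, 0),
    "red": (255, 0, 0),
}


def gamma_correct(rgb, gamma=GAMMA, brightness=1.0):
    """Apply a power-law gamma curve per channel, then a per-element brightness factor."""
    if not 0.0 <= brightness <= 1.0:
        raise ValueError("brightness must be between 0 and 1")
    return tuple(round(255 * (channel / 255) ** gamma * brightness) for channel in rgb)


print(gamma_correct(COLOURS["orange"]))  # green channel drops well below 125
```

Fully saturated channels (0 or 255) are unchanged by the curve, so only mixed colours such as orange shift noticeably; the brightness factor is where per-element adjustments for position and ambient light would enter.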

4 Study design

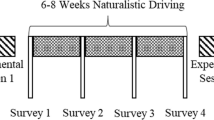

The study was set up in a driving simulator environment. The driving scenario was realised with the SCANeR™ software by AVSimulation [46]. The ride starts in a suburban area with low traffic density and then continues into the city with medium to heavy traffic density, involving elements such as a roundabout, a construction zone, and a pedestrian unexpectedly crossing the street. Driving the predefined route once took about 15 min and allowed for several transitions between ADL2, ADL3, and manual driving (ADL0). Transitions between ADLs were requested by the vehicle and could be performed by pressing a button on the steering wheel. For an overview of the route and where the transitions between driving levels were scripted to happen, see Fig. 7. In total, participants drove the scenario three times under three different trial conditions (Control Condition (CC), Light Condition Static (LCS), and Light Condition Animated (LCA)), which are described below:

- Control Condition (CC): only the icons depicting the different driving levels (manual, ADL2, ADL3) were visible on the centre display, accompanied by a sound cue when an ADL was available or confirmed. The icons would blink and alternate in colour when a transition was requested. Whenever a transition from a higher to a lower ADL was requested (meaning that higher driver engagement was required), the blinking was reinforced by an escalating emergency colour code progressing from yellow to orange to red at intervals of 5 s each.
- Light Condition Static (LCS): in addition to the icons, static LEDs switching between the ADL colours (white, blue, and cyan) communicated the transitions from one driving level to another.
- Light Condition Animated (LCA): LCA was similar to LCS, except that state changes (ADL available/confirmed) were initially accompanied by short blinking (three times) to capture the driver’s attention.
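The two timing behaviours in the conditions above can be sketched as follows. The 5 s stage interval comes from the CC description; that yellow starts at the moment of the request, that red is held once reached, and the 250 ms blink period for LCA are assumptions, and both function names are hypothetical.

```python
ESCALATION_COLOURS = ("yellow", "orange", "red")
STAGE_SECONDS = 5.0  # interval per escalation stage, as described for CC


def escalation_colour(seconds_since_request: float) -> str:
    """Colour of an unanswered downward transition request after a given time.

    Assumes escalation starts with yellow at the moment of the request and
    that red is held once reached.
    """
    if seconds_since_request < 0:
        raise ValueError("time must be non-negative")
    stage = int(seconds_since_request // STAGE_SECONDS)
    return ESCALATION_COLOURS[min(stage, len(ESCALATION_COLOURS) - 1)]


def state_change_frames(colour: str, animated: bool, blinks: int = 3, period_ms: int = 250):
    """Frames (colour, duration in ms) for a state change; None means hold until the next change.

    LCS switches straight to the new colour; LCA first blinks it `blinks` times.
    The 250 ms period is a hypothetical value.
    """
    frames = []
    if animated:
        for _ in range(blinks):
            frames.append((colour, period_ms))
            frames.append(("off", period_ms))
    frames.append((colour, None))
    return frames


print(escalation_colour(7.5))                       # orange
print(state_change_frames("cyan", animated=False))  # [('cyan', None)]
```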

The order of the conditions was randomised. In order to simulate NDRAs, a second tablet with a messenger application was mounted in the centre stack below the icon display. Here, the participants would receive (and had to respond to) riddle questions (example: “Steven tells his friends: ‘It’s funny, the day before yesterday I was 25 years old and next year I’ll already be 28.’ Do you think Steven is telling the truth?”). The participants could respond via touch input or a wireless keyboard placed on the middle console. The NDRAs were set up so that they would appear when it was not appropriate to respond, i.e. during manual driving or ADL2, and the participants had to decide (based on the information provided by the interface) whether and when it was appropriate to respond. The automation modes were implemented in the simulator and activated as soon as the participant pressed the button on the steering wheel. The lights were controlled manually by a human wizard who observed the interaction. See Fig. 8 for an overview of the study setup in the simulator.

4.1 Methods and procedure

In order to assess the interface, we used a mix of quantitative and qualitative methods. We used the NASA Task Load Index (TLX) [14] to measure the impact on workload, the User Experience Questionnaire (UEQ) [29] (subscales efficiency, perspicuity, usefulness, and visual aesthetics) as the primary quantitative assessment of usability and user experience, and finally the Situational Trust Scale for Automated Driving (STS-AD) [20] to assess trust effects. Post-interviews were utilised to gain qualitative insights on suitability, appropriateness, awareness, and understanding of the interfaces. The following questions were asked:

- Which indicators did you notice in the cabin while you were driving?
- During the study, were there any specific situations in which you felt confused? Was anything unclear for you?
- Please describe situations, in which it was clear or unclear to you, which automation level you were in or what you were supposed or allowed to do.
- Which interface was most helpful to identify the current automation level and what you were supposed or allowed to do? (Options: lights, animations, others).
- Did you notice any difference between static lights and animation (bars, wheel)?
- How realistic did you find the setup? (Did the driving scenario feel realistic to you?) Why / Why not?

In addition, we collected demographic data (age, gender, driving experience) and asked participants to rank the conditions in order of preference. In an observation protocol, we recorded in which mode participants performed the NDRAs and whether any irregularities occurred. Each session was also fully audio- and video-recorded.

The study proceeded in four phases: In phase 1, participants were introduced to the study goals and agenda, gave their informed consent, and filled in the demographics questionnaire. In phase 2, participants could familiarise themselves with the simulator by driving one or several laps of the track. We also asked them to fill in the NASA-TLX after the trial run for the purpose of weighting. In phase 3, participants drove three full rounds on the track, one for each condition (CC, LCS, LCA), each followed by the NASA-TLX, UEQ, and STS-AD. Finally, in phase 4, participants responded to the interview questions and ranked the conditions. A single session with one participant took about 100 to 120 min in total. Due to COVID-19 regulations, participants were recruited by convenience sampling among people affiliated with the University; therefore, most of the participants were (former) students. None of them were familiar with the study or had participated in previous studies within the project. Each participant received a compensation of 20 Euros.
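The weighting step mentioned above follows the standard NASA-TLX procedure, assuming the study used the usual 15 pairwise comparisons between the six subscales: each subscale's weight is the number of times it was chosen (0 to 5), and the overall score is the weighted sum of the 0 to 100 ratings divided by 15. The sketch below is illustrative; the function names are hypothetical and the inputs are not study data.

```python
from itertools import combinations

SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def tlx_weights(pairwise_choices):
    """Tally how often each subscale was chosen in the 15 pairwise comparisons."""
    if len(pairwise_choices) != 15:
        raise ValueError("NASA-TLX weighting uses exactly 15 pairwise comparisons")
    weights = dict.fromkeys(SUBSCALES, 0)
    for choice in pairwise_choices:
        if choice not in weights:
            raise ValueError(f"unknown subscale: {choice}")
        weights[choice] += 1
    return weights


def weighted_tlx(ratings, weights):
    """Overall workload (0-100) and each subscale's contribution to it."""
    contributions = {s: ratings[s] * weights[s] / 15 for s in SUBSCALES}
    return sum(contributions.values()), contributions


# Illustrative input: in every pair the first-listed subscale is chosen,
# giving weights 5, 4, 3, 2, 1, 0 (not study data).
choices = [a for a, _ in combinations(SUBSCALES, 2)]
weights = tlx_weights(choices)
overall, parts = weighted_tlx(dict.fromkeys(SUBSCALES, 30), weights)
print(overall)  # 30.0: equal ratings give the same overall score regardless of weights
```

The per-subscale contributions are what allow statements such as which subscale contributed most to the overall workload, as opposed to which subscale was weighted most heavily.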

5 Results

We report results starting with the sample description, then the quantitative, and finally the qualitative results.

5.1 Sample description

Overall, 32 participants took part in the study: 40.6% were male (N=13) and 59.4% were female (N=19). The youngest participant was 18 years old, the oldest 65. With a mean age of 25 years (SD=8.4) and a median age of 23 years, the sample was rather young. No participant reported a perception disorder or epileptic seizures, which would have been grounds for exclusion from the study. Only one participant reported being prone to motion sickness and could not complete the third round in the simulator because of it. All participants (N=32) had held a valid driving license for at least one year, with a mean of 7.5 years and a median of 5 years. 21.9% (N=7) had been actively driving for less than 3 years, 28.1% (N=9) for 3 to 5 years, 34.4% (N=11) for 6 to 10 years, and 15.6% (N=5) for more than 10 years. More than half of the sample reported driving every day or at least several days a week (N=17, 53.1%), 15.6% (N=5) once a week, 21.9% (N=7) a few times a month, and 9.4% (N=3) only a few times a year. Slightly less than half (N=15, 46.9%) used advanced driver assistance systems (ADAS), while 53.1% (N=17) did not. The ADAS that participants reported using included lane keeping assistants, parking assistants, and cruise control systems.
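The reported shares follow directly from the counts and N = 32. This small Python helper (hypothetical name `percentages`) recomputes them and checks that the category counts add up to the sample size.

```python
def percentages(counts, n):
    """Share of the sample per category, in percent rounded to one decimal."""
    if sum(counts.values()) != n:
        raise ValueError("category counts must add up to the sample size")
    return {category: round(100 * count / n, 1) for category, count in counts.items()}


driving_frequency = {
    "daily or several days a week": 17,
    "once a week": 5,
    "a few times a month": 7,
    "a few times a year": 3,
}
print(percentages(driving_frequency, 32))
```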

5.2 Questionnaires

In the following, the different interfaces are always referred to as Control Condition (CC), Light Condition Static (LCS), and Light Condition Animated (LCA) (see Fig. 4). The number of participants whose answers were considered in the analysis is always indicated in the figures. As one participant had to drop out early due to motion sickness symptoms, sometimes only the data of the remaining 31 participants could be used for that condition (LCS).

On the NASA-TLX, all conditions reached a rather low overall workload score: 31 for the CC interface, 31 for the LCS interface, and 32 for the LCA interface (see Fig. 9). All three interfaces were experienced very similarly. Although mental demand was indicated as the largest contributor to overall workload, in the actual driving situation the performance subscale contributed the most to the workload, followed by the mental demand and effort subscales. A Friedman test found no significant differences in the workload ratings of the three interfaces (Mental Demand (\(\chi ^2(2) = 1.500\); p = 0.472), Physical Demand (\(\chi ^2(2) = 0.037\); p = 0.982), Temporal Demand (\(\chi ^2(2) = 4.095\); p = 0.129), Performance (\(\chi ^2(2) = 0.543\); p = 0.762), Effort (\(\chi ^2(2) = 0.022\); p = 0.989), Frustration (\(\chi ^2(2) = 1.976\); p = 0.372)).
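For readers who want to reproduce such tests, the following sketch computes the Friedman statistic for a participants-by-conditions table, with average ranks for ties and the usual tie correction. With k = 3 conditions the statistic has k − 1 = 2 degrees of freedom, for which the chi-square upper-tail probability reduces to exp(−χ²/2); this reproduces the reported p-values, e.g. χ²(2) = 1.500 gives p ≈ 0.472. The function names are hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks within one participant's ratings; ties get their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def friedman_statistic(rows):
    """Tie-corrected Friedman chi-square; rows = participants, columns = conditions."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    tie_term = 0
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
        for value in set(row):
            t = row.count(value)
            tie_term += t ** 3 - t
    q = 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)
    correction = 1.0 - tie_term / (n * k * (k * k - 1))
    if correction == 0:
        raise ValueError("all ratings are tied; the statistic is undefined")
    return q / correction


def p_value_df2(chi2):
    """Upper-tail p-value of a chi-square statistic with 2 degrees of freedom."""
    return math.exp(-chi2 / 2.0)


print(round(p_value_df2(1.500), 3))  # 0.472, as reported for Mental Demand
```

In practice, `scipy.stats.friedmanchisquare` computes the same tie-corrected statistic, and `scipy.stats.chi2.sf` handles other degrees of freedom.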

The UEQ yielded medium to high ratings (> 0 on a range from -2 to +2) on all scales (efficiency, perspicuity, usefulness) for all conditions, with only visual aesthetics being rated lower overall. LCS was consistently rated highest except for visual aesthetics, where it came second to LCA. In general, all ratings were very close. A Friedman test showed that none of the differences were statistically significant (efficiency (\(\chi ^2(2) = 1.436\), p = 0.488), perspicuity (\(\chi ^2(2) = 1.921\), p = 0.383), usefulness (\(\chi ^2(2) = 4.637\), p = 0.098), visual aesthetics (\(\chi ^2(2) = 5.105\), p = 0.078)).

On the STS-AD scale, the ratings for the items “I trust the automation in this situation” (Trust), “In this situation, the automated vehicle performs well enough for me to engage in other activities (such as reading)” (NDRT), and “The automated vehicle reacted appropriately to the environment” (Reaction) were high (not below 5.4 on the 7-point Likert scale). This indicates a generally high level of trust in the interfaces. On the other hand, the items “I would have performed better than the automated vehicle in this situation” (Performance), “The situation was risky” (Risk), and “The automated vehicle made an unsafe judgement in this situation” (Judgement) were rated rather low, the highest rating being 3.3 for the CC interface on the Performance item, which underlines the high level of trust in all interfaces. In general, again, all ratings per item were close, and a Friedman test found no significant differences (Trust (\(\chi ^2(2) = 2.800\); p = 0.247), Performance (\(\chi ^2(2) = 4.630\); p = 0.099), NDRT (\(\chi ^2(2) = 0.758\); p = 0.685), Risk (\(\chi ^2(2) = 2.098\); p = 0.350), Judgement (\(\chi ^2(2) = 0.725\); p = 0.696), Reaction (\(\chi ^2(2) = 1.420\); p = 0.492)) (Fig. 10).

The ranking used a simple points system, with 2, 1, and 0 points awarded for the first, second, and third position, respectively. The points were summed across all rankings (N=32), so that each interface could reach a score between 0 and 64 and the three scores together total 96 (see Fig. 11). Overall, LCS was the preferred interface with a score of 47. The other two were rated clearly lower and very close to each other, with LCA (23) ranked even lower than CC (26).
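The points system can be written as a short tally, assuming 2, 1, and 0 points for first, second, and third place. Under that assumption each of the 32 rankings distributes 3 points, so the three interface scores always sum to 96 (matching 47 + 26 + 23), and a single interface can score at most 2 × 32 = 64. The function name and the example rankings below are illustrative, not study data.

```python
def ranking_scores(rankings, points=(2, 1, 0)):
    """Sum the points each condition receives across all preference rankings.

    Each ranking lists the conditions from most to least preferred.
    """
    scores = {}
    for ranking in rankings:
        if len(ranking) != len(points) or len(set(ranking)) != len(ranking):
            raise ValueError(f"invalid ranking: {ranking}")
        for condition, pts in zip(ranking, points):
            scores[condition] = scores.get(condition, 0) + pts
    return scores


# Two made-up rankings for illustration (not study data).
example = [("LCS", "CC", "LCA"), ("LCS", "LCA", "CC")]
print(ranking_scores(example))  # {'LCS': 4, 'CC': 1, 'LCA': 1}
```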

Participants received two text messages (NDRAs) per round: one during ADL2 and one during manual driving. Across all three conditions, this amounts to 96 instances of the first message and the same number of the second. The observation protocol showed that participants read the messages 32 times in ADL2: 11 times in CC, 10 times in LCA, and 7 times in LCS.

The messages were answered 22 times during ADL2: 10 times in CC, 7 times in LCA and 5 times in LCS. During manual driving, reading of the text message was observed 12 times: 5 times in LCS, 4 times in LCA and 2 times in CC. There were only two instances of answering during manual driving, both by the same participant.

5.3 Post-interview

In the following, we summarise the results from the post-interaction interviews clustered by noticeability of HMI elements, helpfulness and mode confusion, as well as animations vs. static lights.

Noticeability The icon control interface was explicitly mentioned as noticed by most participants (N=26), indicating that it served its purpose as the primary information interface. The lights on the steering wheel were noticed by 23 participants and the strip below the windshield by 21. The lights in the footwell were mentioned by only four participants, implying particularly low noticeability. The auditory cues were explicitly mentioned by one third (N=10) of all participants. It should be noted that participants were first asked to list any HMI elements that came to mind, and these mentions were counted as a measure of noticeability. The interviewer then asked about each HMI element specifically whether it had been noticed at all. Only one participant reported not having noticed any lights in the cabin, due to being too focused on either the road or the centre stack.

Helpfulness and mode confusion Most participants (N=23) stated that they had no uncertainty regarding the active ADL, and almost as many (N=22) none regarding what they were supposed or allowed to do. The latter result was at odds with the protocols (see previous sections), in which participants frequently performed the NDRA during ADL2 or even during manual driving. Similar results were reported by Feldhütter et al. [10], who found that in partial automation, drivers develop an overreliance on the automated vehicle arising from their experience with a very reliable partially automated system. When asked about this, participants responded that they had been aware but chose to perform the NDRA regardless. Their reasoning was that they felt confident enough and considered the NDRA simple enough to perform while driving or monitoring. Self-reported confusion was generally low, with most instances occurring at the beginning, either during the trial run or early in the first round, where some participants (N=5) mentioned needing more time to get accustomed to all visual indicators and/or the buttons for switching between automated and manual modes. The pedestrian suddenly crossing the street caused confusion regarding ADL2 for four participants, who were not sure whether they should have intervened. Seven participants stated that the colours for ADLs 2 and 3 (blue and cyan, respectively) were not sufficiently clear.

In terms of overall helpfulness, the icon control display was considered most helpful (N=18), followed by the windshield strip (N=11) and then the steering wheel (N=9). The wheel was often mentioned as the most visible element but also as the one with the highest annoyance potential. The LED strip below the windshield struck more of a middle ground, with good visibility and lower annoyance. Five participants found the lights in general to be the most helpful HMI element, without differentiating between wheel, windshield, or footwell. No participant mentioned the footwell lights in this regard, which is consistent with their low noticeability.

Animation vs. static lights About one third (N=10) of all participants stated that they had noticed a difference between the LCS and LCA conditions, whereas somewhat more than a third (N=13) explicitly stated that they had not noticed any difference. The rest were unsure or not confident enough to make a decisive statement either way. Of the 10 participants who had noticed a difference, two did not find the animation any more useful than the static lights, three found the animation more useful, and five found the difference not impactful enough to judge either way.

6 Discussion

We found that the proposed light interface, in particular LCS, did show improvements regarding both mode awareness and NDRA-support (RQ1). We also note, however, that the improvements over the baseline were not major and that results were generally close between the light and control conditions, the only exception being subjective preference. Since the light interface is intended as a set of supporting indicators and not as a standalone interface, we consider this minor improvement still appropriate to its intended purpose. Nonetheless, we assume that there is room for better performance and potentially larger effects with an improved light interface, especially given the distraction caused by the steering wheel light and the low visibility of the lights in the footwell.

Our results support the findings of Kunze et al. [28], who showed advantages of peripheral awareness displays over a visualisation in the instrument cluster in terms of takeover performance. We further found that lights in the footwell were not effective and that a reduced setup with lights only in the steering wheel and below the windshield would serve the same purpose. The light interface was found to have no impact on cognitive workload (RQ2). Trust-related factors were generally positive across all conditions, with none having a significant impact on driver trust in the vehicle (RQ3). We found animations, even brief ones intended only to capture attention, to be more of a hindrance than a help (RQ4), suggesting that static displays are more suitable than animated ones. We now discuss these findings in more detail.

6.1 On appropriate NDRA-support

One of the elementary aspects of mode awareness (RQ1) we were interested in was whether and how the interface could foster understanding of when NDRAs could be performed. Drivers actively performed an NDRA (writing) during ADL2 in only 22 of 96 instances (23%), and in only two instances during manual mode, so the number of NDRA-related misconducts was low overall. While the difference was not significant quantitatively, misconducts occurred twice as often in the control condition (CC) as in the best-performing light condition (LCS), with LCA in between. Together with the findings from the interviews, we surmise that lights can provide effective support for understanding when NDRAs can and cannot be performed, though the gain is limited insofar as the participants' behaviour was already rather safe in the baseline (CC).
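The proportions above follow directly from the observed answering counts (10 in CC, 7 in LCA, 5 in LCS). As a quick arithmetic check, the sketch below recomputes them, assuming, as the 96 instances imply, that each of the 32 participants received one ADL2 message in each of the three conditions. The variable names are illustrative only, and the sketch computes descriptive rates, not a significance test.

```python
# Messages answered during ADL2 per condition, as reported in the results.
answered_adl2 = {"CC": 10, "LCA": 7, "LCS": 5}
PARTICIPANTS = 32  # assumed: one ADL2 message per participant and condition

total = sum(answered_adl2.values())
opportunities = PARTICIPANTS * len(answered_adl2)
print(f"overall: {total}/{opportunities} = {total / opportunities:.0%}")
for condition, count in answered_adl2.items():
    print(f"{condition}: {count}/{PARTICIPANTS} = {count / PARTICIPANTS:.0%}")
print("CC vs. LCS:", answered_adl2["CC"] / answered_adl2["LCS"])
```

This yields 22/96 (about 23%) overall, roughly 31% in CC, 22% in LCA, and 16% in LCS, and a CC-to-LCS ratio of exactly 2.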

Due to the low number of instances, we do not think that NDRA-performance during manual driving can safely be interpreted quantitatively. However, we do want to highlight the fact that in most cases of NDRA-misconduct, including the one participant who chose to do so during manual driving, the participants were fully aware of what they were supposed to (not) do and made the conscious decision to perform the NDRA regardless. Thus, there are two aspects to providing appropriate NDRA-support: properly cueing the driver when an NDRA can be performed, and fostering understanding of why NDRAs are or are not supported within a specific context. We found that the supportive light interface can assist with the former but not with the latter.

6.2 Static vs. dynamic or why animated cues can be counterproductive

Regarding the performance of animated cues (RQ4), apart from causing the fewest NDRA-related misconducts, the static light condition (LCS) was also ranked highest in the preference ranking and scored highest overall in the UEQ. Regarding RQ3, it is interesting to note that LCS actually had the lowest rating on the NDRT-subscale of the STS-AD (but the highest on the Trust-subscale), although all conditions performed very closely and none of the STS-AD differences was significant. Together with the rankings, NDRA observations, and interview statements, we conclude that LCS was the best-performing condition overall.

The motivation behind LCA was to communicate the same content while adding a brief animated cue at the beginning to better capture the drivers' attention. As it turned out, this was neither necessary nor beneficial, as no performance advantages of LCA could be identified in any regard. Rather, the animated cue seemed only to distract, even though it was kept short and shown only at the beginning (afterwards, LCA was identical to LCS). These results are in line with the findings of Borojeni et al. [3], who also found that presentation patterns had no effect on takeover performance. We surmise that, contrary to our assumption, a static display is sufficiently visible, and that adding animations addresses a problem that does not actually exist, thus worsening the interaction. It should be noted that the workload measured via the NASA-TLX was similar for all three conditions, so while LCA was not ideal, its conservative animation approach seemed to cause more subjective annoyance but had no measurable impact on workload. Borojeni et al. [3] reported that moving patterns were perceived as less demanding than static ones, but could not find a significant difference between the two options.

6.3 Colours and positions

While validating the colour model was not a specific goal of this study, it was still important that the model matched the participants' expectations regarding high vs. low automation and the related degree of driver engagement, and it provides an interesting supplement to the findings on understanding the automation modes (RQ1). Given how pervasive cyan is in media coverage of automated vehicles, it was no surprise that it ended up being the colour most associated with vehicle automation. In isolation, the white-blue-cyan colour model for increasing degrees of automation made sense, but its implementation via a light interface revealed a disadvantage that made it less clear-cut than we had hoped, causing some drivers to report confusion and point out room for improvement in the interviews: perceived brightness was necessarily uneven, since the colours transitioned from bright (white) to darker (blue) to bright again (cyan), which does not appropriately represent the increasing degree of automation. One way to address this could be to remove blue entirely and represent the spectrum between fully manual and the highest degree of automation via a transition between white and cyan. This, however, might make the intermediate degrees less easy to discern, so a solution that offers clear discernibility while conforming to the popular colour perception of vehicle automation still needs to be found.
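The brightness argument can be made concrete with the relative luminance of sRGB colours as defined in WCAG 2.x. The sketch below assumes pure sRGB primaries for white, blue, and cyan (the actual LED colours used in the study are not specified here), and relative luminance is only a rough proxy for the perceived brightness of an LED strip in a cabin. It shows the non-monotonic white-blue-cyan sequence and, for the proposed alternative, a white-to-cyan ramp obtained by dimming only the red channel.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance of an 8-bit sRGB colour (WCAG 2.x definition)."""
    def linear(channel: int) -> float:
        c = channel / 255
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
    r, g, b = (linear(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


# Assumed pure sRGB primaries, not the study's measured LED colours.
WHITE, BLUE, CYAN = (255, 255, 255), (0, 0, 255), (0, 255, 255)

for name, colour in [("white (ADL0)", WHITE), ("blue (ADL2)", BLUE), ("cyan (ADL3)", CYAN)]:
    print(f"{name}: {relative_luminance(colour):.3f}")

# Alternative: white-to-cyan ramp in six steps, dimming only the red channel.
ramp = [relative_luminance((255 - step * 51, 255, 255)) for step in range(6)]
print([round(y, 3) for y in ramp])
```

Under these assumptions the sequence is roughly 1.000, 0.072, and 0.787, i.e. bright, dark, bright. The white-to-cyan ramp is monotonic but spans only about 1.0 down to 0.79, which illustrates why intermediate levels could become harder to tell apart.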

The positions in the cabin were motivated by existing or proposed solutions (see [32, 49, 50]) and by the aim of highlighting the relevant areas of attention and intervention (lateral control, longitudinal control, road monitoring). Due to the physical attributes of the vehicle cabin and general gaze behaviour, we found the three positions to be far from equal: despite its relevance to executing longitudinal control, lighting in the footwell was ineffective. Lights in the steering wheel and below the windshield were both found to be effective and clearly visible, which is not surprising considering that both are in direct frontal view, as also supported by Löcken et al. [32]. Even so, drivers did not perceive the two positions equally: the interviews in particular showed that the indicators were seen as more distracting or irritating on the steering wheel than below the windshield, as the steering wheel occupied the most central position in the drivers' field of view. Thus, it might be better to show persistent automation indicators in the strip below the windshield, while the steering wheel should only be used for momentary information (e.g. when a transition is requested or available).
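The recommended allocation can be expressed as a simple mapping from automation state to light outputs. This is a hypothetical illustration, not the study's implementation: the names `Level`, `LightState`, and `light_state` are invented here, the colours follow the white-blue-cyan model discussed above, the footwell is omitted because it proved ineffective, and lighting the steering wheel in the colour of the pending target level is an assumption made only for this sketch.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Level(Enum):
    ADL0 = 0  # manual driving
    ADL2 = 2  # partial automation
    ADL3 = 3  # conditional automation


# White-blue-cyan colour model for increasing degrees of automation.
COLOUR = {Level.ADL0: "white", Level.ADL2: "blue", Level.ADL3: "cyan"}


@dataclass
class LightState:
    windshield: str                # persistent: always shows the active level
    steering_wheel: Optional[str]  # momentary: lit only while a transition is pending


def light_state(active: Level, pending: Optional[Level] = None) -> LightState:
    """Hypothetical allocation: persistent info below the windshield, transitions on the wheel."""
    wheel = COLOUR[pending] if pending is not None else None
    return LightState(windshield=COLOUR[active], steering_wheel=wheel)


print(light_state(Level.ADL3))
print(light_state(Level.ADL3, pending=Level.ADL0))
```

Keeping the persistent and momentary channels separate in this way means the most central (and most distracting) position is lit only when the driver may need to act.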

6.4 Limitations

As with any lab study, ecological validity is naturally limited to a certain degree. In our simulator setup, the non-panoramic view (frontal projection only) and the absence of integral car interfaces such as indicators and a clutch contributed to a more artificial setting. A larger number of participants might have yielded more conclusive quantitative results. However, due to the ongoing Covid-19 pandemic and the resulting restrictions, as well as the rather lengthy (up to two hours) and demanding study design, recruiting was time-consuming and challenging, with many slots having to be rescheduled and a high number of dropouts. Additionally, we deliberately kept the focus of the study narrow in order to obtain meaningful results. Future studies in other setups and study environments may shed light on further possibilities regarding colours, brightness, or further in-car application areas of ambient light interfaces in automated vehicles.

7 Conclusion

In this paper, we reported on a study in which we investigated two ambient light designs (one static, one with animations) to support mode awareness and appropriate NDRA-engagement. We found that ambient light interfaces can improve mode awareness and NDRA-support. Ambient lights in the footwell were not as effective as lights on the steering wheel or below the windshield. Ambient lights had no impact on cognitive workload. The ambient light interfaces were trusted but did not affect drivers' overall trust in the vehicle itself. Animations were more of a hindrance than a help for control transitions.

Thus, we conclude that an ambient light interface with indicators in the steering wheel and below the windshield is appropriate as a supportive in-vehicle display. Future work should focus on extending its functionality, especially in terms of fostering understanding of NDRA availability, and on refining how the different degrees of automation and the resulting driver engagement are communicated via light (colours, brightness, and gradients).

Data availability

The datasets generated during and/or analysed during the current study are not publicly available due to data management and protection provisions this work is subject to requiring a specific cause for sharing of individual data sets. They can be made available from the corresponding author on reasonable request.

References

Andersson J, Habibovic A, Rizgary D (2021) First encounter effects in testing of highly automated vehicles during two experimental occasions–the need for recurrent testing. it-Inform Technol 63(2):99–110

BMW (2019) How to change lanes automatically - bmw how-to - youtube. https://www.youtube.com/watch?v=BLnkBVORn4Y

Borojeni SS, Chuang L, Heuten W, Boll S (2016) Assisting drivers with ambient take-over requests in highly automated driving. In: Proceedings of the 8th international conference on automotive user interfaces and interactive vehicular applications. pp 237–244

Capallera M, Angelini L, Meteier Q, Khaled OA, Mugellini E (2022) Human-vehicle interaction to support driver’s situation awareness in automated vehicles: A systematic review. IEEE Transactions on intelligent vehicles pp 1–19. https://doi.org/10.1109/TIV.2022.3200826

Cramer S, Kaup I, Siedersberger KH (2018) Comprehensibility and perceptibility of vehicle pitch motions as feedback for the driver during partially automated driving. IEEE Transactions on intelligent vehicles 4(1):3–13

Detjen H, Pfleging B, Schneegass S (2020) A wizard of oz field study to understand non-driving-related activities, trust, and acceptance of automated vehicles. In: 12th International conference on automotive user interfaces and interactive vehicular applications. pp 19–29

Dönmez Özkan Y, Mirnig AG, Meschtscherjakov A, Demir C, Tscheligi M (2021) Mode awareness interfaces in automated vehicles, robotics, and aviation: A literature review. In: 13th International conference on automotive user interfaces and interactive vehicular applications. AutomotiveUI ’21, Association for Computing Machinery, New York, NY, USA, pp 147–158. https://doi.org/10.1145/3409118.3475125

Dönmez Özkan Y, Mirnig AG, Meschtscherjakov A, Demir C, Tscheligi M (2021) Mode awareness interfaces in automated vehicles, robotics, and aviation: A literature review. In: 13th International conference on automotive user interfaces and interactive vehicular applications. pp 147–158

Feierle A, Danner S, Steininger S, Bengler K (2020) Information needs and visual attention during urban, highly automated driving-an investigation of potential influencing factors. Information 11(2):62

Feldhütter A, Härtwig N, Kurpiers C, Hernandez JM, Bengler K (2018) Effect on mode awareness when changing from conditionally to partially automated driving. In: Congress of the international ergonomics association. Springer, pp 314–324

Feldhütter A, Segler C, Bengler K (2017) Does shifting between conditionally and partially automated driving lead to a loss of mode awareness? In: International conference on applied human factors and ergonomics. Springer, pp 730–741

The Guardian (2016) Tesla driver dies in first fatal crash while using autopilot mode. https://www.theguardian.com/technology/2016/jun/30/tesla-autopilot-death-self-driving-car-elon-musk

Hainich R, Drewitz U, Ihme K, Lauermann J, Niedling M, Oehl M (2021) Evaluation of a human-machine interface for motion sickness mitigation utilizing anticipatory ambient light cues in a realistic automated driving setting. Information 12(4):176

Hart SG (2006) Nasa-task load index (nasa-tlx); 20 years later. In: Proceedings of the human factors and ergonomics society annual meeting. Sage Publications, Los Angeles, CA, 50:904–908

Hawkins AJ (2022) A tesla vehicle using ‘smart summon’ appears to crash into a $3.5 million private jet. https://www.theverge.com/2022/4/22/23037654/tesla-crash-private-jet-reddit-video-smart-summon

Hecht T, Feldhütter A, Draeger K, Bengler K (2019) What do you do? an analysis of non-driving related activities during a 60 minutes conditionally automated highway drive. In: International conference on human interaction and emerging technologies. Springer, pp 28–34

Hecht T, Weng S, Kick LF, Bengler K (2022) How users of automated vehicles benefit from predictive ambient light displays. Appl Ergon 103:103762

Hipp M, Löcken A, Heuten W, Boll S (2016) Ambient park assist: supporting reverse parking maneuvers with ambient light. In: Adjunct proceedings of the 8th international conference on automotive user interfaces and interactive vehicular applications. pp 45–50

Hock P, Babel F, Kraus J, Rukzio E, Baumann M (2019) Towards opt-out permission policies to maximize the use of automated driving. In: Proceedings of the 11th international conference on automotive user interfaces and interactive vehicular applications. pp 101–112

Holthausen BE, Wintersberger P, Walker BN, Riener A (2020) Situational trust scale for automated driving (sts-ad): Development and initial validation. In: 12th International conference on automotive user interfaces and interactive vehicular applications. pp 40–47

SAE International (2021) Taxonomy and definitions for terms related to driving automation systems for on-road motor vehicles. Standard J3016_202104

Jochum S, Saupp L, Bavendiek J, Brockmeier C, Eckstein L (2021) Investigating kinematic parameters of a turning seat as a haptic and kinesthetic hmi to support the take-over request in automated driving. In: International conference on applied human factors and ergonomics. Springer, pp 301–307

Johns M, Mok B, Sirkin D, Gowda N, Smith C, Talamonti W, Ju W (2016) Exploring shared control in automated driving. In: 2016 11th ACM/IEEE International conference on human-robot interaction (HRI). IEEE, pp 91–98

el Jouhri A, el Sharkawy A, Paksoy H, Youssif O, He X, Kim S, Happee R (2022) The influence of a colour themed hmi on trust and take-over performance in automated vehicles. https://doi.org/10.13140/RG.2.2.15003.13607

Ko SM, Ji YG (2018) How we can measure the non-driving-task engagement in automated driving: comparing flow experience and workload. Appl Ergon 67:237–245

Koo J, Shin D, Steinert M, Leifer L (2016) Understanding driver responses to voice alerts of autonomous car operations. Int J Veh Des 70(4):377–392

Kraft AK, Naujoks F, Wörle J, Neukum A (2018) The impact of an in-vehicle display on glance distribution in partially automated driving in an on-road experiment. Transp Res Part F: Traffic Psychol Behav 52:40–50

Kunze A, Summerskill SJ, Marshall R, Filtness AJ (2019) Conveying uncertainties using peripheral awareness displays in the context of automated driving. In: Proceedings of the 11th international conference on automotive user interfaces and interactive vehicular applications. pp 329–341

Laugwitz B, Held T, Schrepp M (2008) Construction and evaluation of a user experience questionnaire. In: Symposium of the Austrian HCI and usability engineering group. Springer, pp 63–76

Löcken A, Frison AK, Fahn V, Kreppold D, Götz M, Riener A (2020) Increasing user experience and trust in automated vehicles via an ambient light display. In: 22nd International conference on human-computer interaction with mobile devices and services. pp 1–10

Löcken A, Heuten W, Boll S (2015) Supporting lane change decisions with ambient light. In: Proceedings of the 7th international conference on automotive user interfaces and interactive vehicular applications. pp 204–211

Löcken A, Heuten W, Boll S (2016) Autoambicar: using ambient light to inform drivers about intentions of their automated cars. In: Adjunct proceedings of the 8th international conference on automotive user interfaces and interactive vehicular applications. pp 57–62

Löcken A, Yan F, Heuten W, Boll S (2019) Investigating driver gaze behavior during lane changes using two visual cues: ambient light and focal icons. J Multimodal User Interfaces 13(2):119–136

Louw T, Kuo J, Romano R, Radhakrishnan V, Lenné MG, Merat N (2019) Engaging in ndrts affects drivers’ responses and glance patterns after silent automation failures. Transp Res Part F: Traffic Psychol behav 62:870–882

Meng X, Han J, Chernyshov G, Ragozin K, Kunze K (2022) Thermaldrive-towards situation awareness over thermal feedback in automated driving scenarios. In: 27th International conference on intelligent user interfaces. pp 101–104

Meschtscherjakov A, Döttlinger C, Rödel C, Tscheligi M (2015) Chaselight: ambient led stripes to control driving speed. In: Proceedings of the 7th international conference on automotive user interfaces and interactive vehicular applications. pp 212–219

Mirnig AG, Gärtner M, Laminger A, Meschtscherjakov A, Trösterer S, Tscheligi M, McCall R, McGee F (2017) Control transition interfaces in semiautonomous vehicles: A categorization framework and literature analysis. In: Proceedings of the 9th international conference on automotive user interfaces and interactive vehicular applications. pp 209–220

Mirnig AG, Gärtner M, Meschtscherjakov A, Tscheligi M (2020) Blinded by novelty: a reflection on participant curiosity and novelty in automated vehicle studies based on experiences from the field. In: Proceedings of the conference on mensch und computer. pp 373–381

Monk A (1986) Mode errors: A user-centred analysis and some preventative measures using keying-contingent sound. Int J Man-mach Stud 24(4):313–327

Naujoks F, Forster Y, Wiedemann K, Neukum A (2017) A human-machine interface for cooperative highly automated driving. In: Advances in human aspects of transportation. Springer, pp 585–595

Nguyen-Phuoc DQ, De Gruyter C, Oviedo-Trespalacios O, Ngoc SD, Tran ATP (2020) Turn signal use among motorcyclists and car drivers: The role of environmental characteristics, perceived risk, beliefs and lifestyle behaviours. Accid Anal & Prev 144:105611

Novakazi F, Johansson M, Erhardsson G, Lidander L (2021) Who’s in charge? it-Inform Technol 63(2):77–85

Petermeijer SM, De Winter JC, Bengler KJ (2015) Vibrotactile displays: A survey with a view on highly automated driving. IEEE Transactions on intelligent transportation systems 17(4):897–907

Pfleging B, Rang M, Broy N (2016) Investigating user needs for non-driving-related activities during automated driving. In: Proceedings of the 15th international conference on mobile and ubiquitous multimedia. pp 91–99

Revell KM, Brown JW, Richardson J, Kim J, Stanton NA (2021) How was it for you? comparing how different levels of multimodal situation awareness feedback are experienced by human agents during transfer of control of the driving task in a semi-autonomous vehicle. In: Designing interaction and interfaces for automated vehicles. CRC Press, pp 101–113

Scaner catalog (2021). https://www.avsimulation.com/scaner-catalog/

Schartmüller C, Weigl K, Löcken A, Wintersberger P, Steinhauser M, Riener A (2021) Displays for productive non-driving related tasks: Visual behavior and its impact in conditionally automated driving. Multimodal Technol Interact 5(4):21

The Verge (2017) Mercedes will give tesla’s autopilot its first real competition this year. https://www.theverge.com/ces/2017/1/6/14177872/mercedes-benz-drive-pilot-self-driving-tesla-autopilot-ces-2017

Volkswagen (2022) Hello id. light! - how the new id. models communicate with the vehicle occupants via a light strip. https://www.volkswagen-newsroom.com/en/stories/hello-id-light-how-the-new-id-models-communicate-with-the-vehicle-occupants-via-a-light-strip-6963

Wang J, Wang W, Hansen P, Li Y, You F (2020) The situation awareness and usability research of different hud hmi design in driving while using adaptive cruise control. In: International conference on human-computer interaction. Springer, pp 236–248

Wintersberger P, Dmitrenko D, Schartmüller C, Frison AK, Maggioni E, Obrist M, Riener A (2019) S (c) entinel: monitoring automated vehicles with olfactory reliability displays. In: Proceedings of the 24th international conference on intelligent user interfaces, pp 538–546

Wörle J, Metz B, Othersen I, Baumann M (2020) Sleep in highly automated driving: Takeover performance after waking up. Accid Anal & Prev 144:105617

Yoon SH, Lee SC, Ji YG (2021) Modeling takeover time based on non-driving-related task attributes in highly automated driving. Appl Ergon 92:103343

Zhang B, de Winter J, Varotto S, Happee R, Martens M (2019) Determinants of take-over time from automated driving: A meta-analysis of 129 studies. Transp Res Part F: Traffic Psychol Behav 64:285–307

Acknowledgements

The authors thank Virtual Vehicle Research GmbH (ViF) for providing the initial icon designs that were adapted for the study.

Funding

Open access funding provided by Paris Lodron University of Salzburg. This research was conducted within the project HADRIAN, which has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 875597. The Innovation and Networks Executive Agency (INEA) is not responsible for any use that may be made of the information this document contains.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mirnig, A.G., Gärtner, M., Wallner, V. et al. Enlightening mode awareness. Pers Ubiquit Comput 27, 2307–2320 (2023). https://doi.org/10.1007/s00779-023-01781-6

DOI: https://doi.org/10.1007/s00779-023-01781-6