Abstract

In this paper, we describe a proof-of-concept implementation of a wearable auditory biofeedback system based on a sensor-instrumented insole. The system aims to assist everyday users with static and dynamic exercises in gait rehabilitation by providing auditory feedback based on plantar pressure distribution and on the automated classification of functional gait disorders. Because ground reaction force (GRF) data are frequently used in clinical practice to quantitatively describe human motion and have been successfully used to classify gait patterns into clinically relevant classes, a feed-forward neural network was implemented in the firmware of the insoles to estimate GRFs from pressure and acceleration data. The estimated GRFs closely approximated the GRF measurements obtained from force plates. To distinguish between physiological gait and gait disorders, we trained and evaluated a support vector machine with labeled data from a publicly accessible dataset. The automated gait classification was then sonified for auditory feedback. Finally, the potential of the implemented auditory feedback for preventive and supportive applications in physical therapy was assessed with both expert and non-expert participants. A focus group revealed experts’ expectations of the proposed system, while a usability study assessed how clear the auditory feedback is to everyday users. The evaluation shows promising results regarding the usefulness of our system in this application area.

1 Introduction

Methods for detecting and assessing gait impairments range from traditional visual assessment by medical experts to sophisticated technical procedures that use motion capture systems and force plates to analyze kinematic and kinetic gait characteristics. Although the latter are the gold standard for human gait and motion analysis, they are expensive and involve time-consuming procedures, which significantly limits their widespread use [1]. In addition, such systems can only be operated in laboratory environments and are therefore not suitable for recording a person’s actual walking behavior in their everyday environment [2]. If the gait patterns recorded in the gait laboratory deviate beyond a certain tolerance from a person’s movement behavior in everyday life, this can lead to incorrect diagnoses and inadequate treatment. Early detection of deviations from physiological (i.e., “normal”) gait patterns, together with adequate preventive and therapeutic measures for gait training, is therefore essential for potentially affected people. Motor learning and gait re-education usually require feedback, which is often provided either by a therapist or by dedicated measurement systems using visual, auditory, haptic, or multimodal feedback strategies [3]. Under laboratory conditions, however, both the frequency with which feedback can be provided and the ways in which it can be applied are limited. Simple mobile systems are needed to provide feedback in everyday situations beyond therapy settings and laboratory equipment [4]. Such systems also need to be tailored to user needs, so that they provide easy-to-use feedback and can be deployed independently by users in tele-rehabilitation settings [5].

In individuals with normal knee alignment, the load is evenly distributed over the base of support during standing. In contrast, varus or valgus malalignment causes the load to be concentrated more on the lateral or medial side of the foot, respectively. This shift can lead to problems during walking; therefore, various static and dynamic exercises are performed in therapy to counteract the effects of these malalignments.

Several interventions have been shown to improve not only gait ability but also balance, and to reduce the rate of falls [6]. These interventions include static and dynamic exercises such as standing on one leg, tandem standing, altering the base of support, and line walking. During such exercises, therapists pay special attention to the patient’s knee alignment. Knee malalignment has been shown to increase the risk of lesions and cartilage loss and to affect load distribution [7]. A neutral joint alignment of the lower extremity, as opposed to varus or valgus malalignment, helps to distribute the load more evenly over the base of support, which is then reflected in the ground reaction forces (GRFs) (see Fig. 1). This paper is an extended version of our previous work [8], in which we introduced a proof-of-concept approach for implementing a mobile auditory biofeedback system that supports everyday users in both static and dynamic exercise interventions by providing auditory feedback based on plantar pressure distribution and on the automated classification of functional gait disorders (GD) using a sensor-instrumented insole (equipped with pressure sensors and accelerometers)Footnote 1.

Our study aims (1) to investigate the feasibility of the solution and (2) to assess its potential for preventive and supportive applications in physical therapy, such as supervised therapy settings and tele-rehabilitation. The study was conducted by an interdisciplinary team of researchers, developers, and physical therapists, who were consulted throughout the entire process. We begin our proof-of-concept approach by simultaneously recording ground reaction forces and data from mobile sensor-instrumented insoles worn by 48 healthy volunteers while walking (Section 3.2). Second, we evaluate different machine learning-based approaches to estimate GRFs from the pressure and acceleration data of the insole device (Section 3.3). Third, to distinguish between “normal” physiological gait (NG) and GD, we train and evaluate a support vector machine (SVM) with labeled data from a publicly accessible database [9] (Section 3.4). We then develop a sonification model that provides auditory feedback according to the automated gait classification (Section 4). Finally, to assess the impact and potential of the auditory feedback for physical therapy, we evaluate the proposed system through expert reviews (Section 5) and a usability study with non-expert participants (Section 6). Section 7 discusses the results of the experiments and evaluations, as well as their limitations and possible future applications.

2 Related work

Biofeedback is a frequently used tool in clinical settings to assist motor learning and re-education. On the one hand, it creates awareness of deviations, such as departures from physiological gait patterns or unfavorable loading patterns; on the other hand, it supports the rehabilitation process. Such feedback follows a stimulus–response approach. It is ideally provided by professionals such as physical therapists and is usually communicated verbally, visually, or tactilely [10]. However, lasting changes in movement behavior often require regular practice over time and continuous feedback to internalize them. It is therefore crucial that patients with GD practice independently beyond the therapy setting (therapy sessions usually take place at weekly intervals) [4] and receive immediate feedback on their walking behavior. One particular method is the acoustic representation of motion sequences through real-time sonification [11,12,13,14], as used in gait analysis, especially in combination with sensor-equipped insoles [2, 15]. The integrated sensor technology allows cost-effective, wireless data transmission to stationary or web-based servers and mobile devices [16]. As a result, numerous products, ranging from prototypes to market-ready systems, have been developed in recent years that are mobile and sufficiently powerful to be used as auditory feedback systems in everyday life as well as in clinical rehabilitation [2, 17, 18].

In sports [11, 12, 19] as well as in rehabilitation [4, 20, 21], the effectiveness of sonification for the control and (re-)learning of motor functions has already been demonstrated. The complexity of the implementations varies widely. In the field of gait rehabilitation, they range from systems that support heel strike with a simple synthetic click to sonic representations of the swing phase during walking [2, 15, 17]. To some extent, these systems are already capable of making users more aware of their gait behavior through auditory feedback, thereby supporting motor learning processes [2]. To use such systems as a comprehensive diagnostic tool in gait analysis, mobile monitoring of GRFs for feedback could be of great value. GRFs are considered a well-established standard in gait analysis [17] and are familiar indicators for clinical experts. The GRF vector is composed of the vertical (\(GRF_{V}\)), anteroposterior (\(GRF_{AP}\)), and mediolateral (\(GRF_{ML}\)) force components, which are generally determined using force plates. Several approaches for calculating GRF data with sensor-equipped soles are presented below.

Mobile measurement of GRFs that is methodologically comparable to force plates is only possible with “outsoles”, which are attached as a second sole underneath the actual shoe sole and can thus record the ground reaction forces directly [22]. Such systems are vulnerable to intruding dirt and too expensive for everyday use [23]. Unlike outsoles, soles placed inside shoes (insoles) have no direct contact with the ground. Besides optical [24], capacitive [25], or foam sensors [26], pressure sensors are used in most cases to determine the pressure distribution during standing or walking [17, 23]. Various authors have approximated effective GRFs from insole pressure data in combination with additional visual motion analysis [27] or adaptive pattern recognition algorithms [28]. Approaches that algorithmically categorize gait patterns based on insole data have also shown promising results [29, 30].

The use of insoles with and without auditory feedback has been investigated in several research approaches [17, 22, 31,32,33,34,35,36,37]. To the best of our knowledge, however, there has been no approach that provides auditory feedback based on a medically approved insole to support both dynamic and static intervention exercises in an automated way.

3 Gait measurement and classification using integrated insole devices

In this section, we present an overview of the insole used, including its technical configuration and functionality, followed by a detailed description of the GRF estimation and the automated gait classification.

3.1 Design of the sensor-instrumented insole

The insole was developed by one of the project partners and was originally designed as a multi-sensor insole for everyday use in combination with a cloud-based application for mobile devices. To make the device accessible to a large customer base, a retail price of approximately 300 euros per pair of insoles was targeted. The insole has since been certified as a class I medical device under the Medical Device Directive (MDD, 93/42/EEC).

The version of the insole used in the presented approach is instrumented with twelve textile pressure sensors per insole and additional inertial measurement unit (IMU) sensors, of which only the three-axis (3DOF) accelerometer of each insole is used for measurements. Sensor data can be recorded to an internal flash memory at sample rates of 50 to 100 samples per second. In addition, data are transmitted via Bluetooth Low Energy (BLE 4.2) to PC software at 100 samples per second. Besides raw sensor data, the data stream includes the estimated GRFs, the center of pressure (COP), and the class estimates, which were developed and implemented within our approach (Sections 3.3 and 3.4). The PC software visualizes parameters such as pressure distribution and COP (see Fig. 2) and forwards all incoming data to an Open Sound Control (OSC) stream for further use, e.g., to generate the auditory feedback.

3.2 Gait recordings on insoles and force plates

As a reference for the estimation of the GRFs obtained from the insole, a set of 828 steps from 18 male and 30 female healthy participants was recorded. Participants were on average 38.8 years old (\(SD = 10.3\)), with mean weight of 73.6 kg (\(SD = 13.2\)), mean height of 172.8 cm (\(SD = 8.8\)), and mean shoe size of 41.2 EU (\(SD = 2.3\)). The test sample consisted of students and faculty members recruited at the St. Pölten University of Applied Sciences. They were asked to report any mobility issues before the data collection.

Steps were recorded following a protocol similar to that of Dumphart et al. [38] on a ten-meter walkway using a force plate (type 9286B, Kistler GmbH) sampled at 300 Hz, while the insole described above sampled at 100 Hz (see Fig. 3). The force plate was flush with the ground and covered with the same surface material as the walkway. To avoid targeted steps onto the force plate, participants were only instructed to walk across the walkway, without their attention being drawn to the embedded force plate. Participants walked unassisted and without a walking aid at a self-selected speed in standardized sneakers (Nike SB Check solar) equipped with the insole. Five to eight valid force plate hits of the dominant leg were recorded using Vicon Nexus (v. 2.9, Vicon Motion Systems Ltd, UK). The moderator accepted a recording as valid if the participant walked naturally and the foot struck the force plate cleanly. The raw data from the force plate (GRFs) and the insoles were preprocessed (filtering and normalization) in MATLAB (R2019b, The MathWorks, Inc.).

3.3 Neural network for GRF estimation

To estimate GRFs from the insole data, we tested and compared different neural network (NN) architectures: a feed-forward neural network (FFNN) [39], a wavelet neural network (WNN) [37], and a long short-term memory network (LSTM) [40].Footnote 2

Single steps from the force plate recordings (see Section 3.2) served as ground truth for training and evaluating these NNs. The recorded insole steps were synchronized with the force plate GRFs using timestamps. To reduce complexity and computation time, the signals of the seven pressure sensors at the forefoot and of the five pressure sensors at the mid/rear foot were each combined into a weighted sum. These two aggregated signals, together with the 3DOF accelerometer data and the step length, served as input to the NNs. To compensate for varying step durations, the data of each step were time-normalized to 101 data points (0–100% stance).
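The input preparation described above can be sketched as follows. This is a minimal illustration, assuming linear interpolation for the time normalization; the assignment of sensor indices to forefoot and mid/rear foot, the weights, and the order in which signals are concatenated are hypothetical, since the text does not specify them.

```python
def aggregate_pressure(frame, forefoot_idx, rearfoot_idx, weights):
    """Collapse one sample of 12 pressure readings into two weighted sums.

    The sensor-to-region assignment and the weights are hypothetical.
    """
    forefoot = sum(weights[i] * frame[i] for i in forefoot_idx)
    rearfoot = sum(weights[i] * frame[i] for i in rearfoot_idx)
    return forefoot, rearfoot


def time_normalize(signal, n_points=101):
    """Linearly resample one stance phase to n_points samples (0-100% stance)."""
    if len(signal) < 2:
        raise ValueError("need at least two samples per step")
    last = len(signal) - 1
    out = []
    for k in range(n_points):
        pos = k * last / (n_points - 1)  # fractional index into the raw step
        i = min(int(pos), last - 1)
        frac = pos - i
        out.append(signal[i] * (1.0 - frac) + signal[i + 1] * frac)
    return out


def build_input(forefoot, rearfoot, acc_axes, step_length, n_points=101):
    """Hypothetical NN input layout: both pressure sums and the three
    acceleration axes, each time-normalized, followed by the step length."""
    features = time_normalize(forefoot, n_points) + time_normalize(rearfoot, n_points)
    for axis in acc_axes:
        features += time_normalize(axis, n_points)
    return features + [step_length]
```

With this layout, each step yields 5 × 101 + 1 = 506 input values regardless of how many raw samples the stance phase contained.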

The output layer of each NN was set to a fixed number of data points (101) per GRF component. A second-order low-pass Butterworth filter with cutoff frequencies of 40 Hz and 20 Hz was used to smooth the estimated signals [41]. Figure 4 compares the estimated GRF components, with and without this post-processing step, to the original GRF components obtained from the force plate.
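A second-order Butterworth low-pass can be written as a single biquad section. The sketch below derives its coefficients with the bilinear transform and applies it forward and backward for zero phase lag. The sampling rate of 100 Hz (the insole's transmission rate), the forward-backward application, and the absence of edge padding are assumptions; the text does not state which cutoff applies to which component.

```python
import math


def butter2_lowpass(cutoff_hz, fs_hz):
    """2nd-order Butterworth low-pass via the bilinear transform with
    frequency pre-warping. Returns (b0, b1, b2), (a1, a2), with a0 = 1."""
    if not 0.0 < cutoff_hz < fs_hz / 2.0:
        raise ValueError("cutoff must lie between 0 and the Nyquist frequency")
    k = math.tan(math.pi * cutoff_hz / fs_hz)
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    a1 = 2.0 * (k * k - 1.0) * norm
    a2 = (1.0 - math.sqrt(2.0) * k + k * k) * norm
    return (b0, 2.0 * b0, b0), (a1, a2)


def biquad(b, a, x):
    """Apply y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2],
    starting from a zero state."""
    b0, b1, b2 = b
    a1, a2 = a
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for xn in x:
        yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        y.append(yn)
        x2, x1 = x1, xn
        y2, y1 = y1, yn
    return y


def zero_phase_lowpass(x, cutoff_hz, fs_hz=100.0):
    """Filter forward, then backward, so the output has no phase lag
    (no edge padding, so samples near both ends carry start-up transients)."""
    b, a = butter2_lowpass(cutoff_hz, fs_hz)
    forward = biquad(b, a, x)
    return biquad(b, a, forward[::-1])[::-1]
```

Filtering twice squares the magnitude response, so the attenuation at the cutoff becomes about 6 dB instead of 3 dB; a one-pass (causal) filter avoids this but introduces phase lag.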

For the experiments, the dataset was split into a training set (90%) and a test set (10%) such that data from the same participant could not appear in both sets. The training set was used to train the NNs, whereas the test set was used to evaluate the generalization ability of the trained models and to compare the NN architectures. We followed a repeated cross-validation approach in which the data were partitioned several times to obtain a robust estimate of the normalized root mean square error (NRMSE).
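The participant-wise splitting and the error metric can be sketched as follows. The text does not state how the NRMSE is normalized; dividing the RMSE by the range of the measured signal is one common choice and is an assumption here, as are the number of repetitions and the random seeds.

```python
import math
import random


def subject_wise_split(subject_ids, test_fraction=0.1, seed=0):
    """Split step indices so that no participant appears in both sets."""
    subjects = sorted(set(subject_ids))
    random.Random(seed).shuffle(subjects)
    n_test = max(1, round(test_fraction * len(subjects)))
    test_subjects = set(subjects[:n_test])
    train = [i for i, s in enumerate(subject_ids) if s not in test_subjects]
    test = [i for i, s in enumerate(subject_ids) if s in test_subjects]
    return train, test


def nrmse(measured, estimated):
    """RMSE normalized by the range of the measured signal (assumed choice)."""
    n = len(measured)
    rmse = math.sqrt(sum((m - e) ** 2 for m, e in zip(measured, estimated)) / n)
    return rmse / (max(measured) - min(measured))


def repeated_holdout(subject_ids, evaluate, repeats=10):
    """Average evaluate(train_idx, test_idx) over several random subject-wise splits."""
    scores = [evaluate(*subject_wise_split(subject_ids, seed=r)) for r in range(repeats)]
    return sum(scores) / len(scores)
```

With 48 participants, a 10% test fraction places the steps of five participants in each test set.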

The results show that, for all examined architectures, \(GRF_{V}\) and \(GRF_{AP}\) are estimated relatively well, while \(GRF_{ML}\) proves to be the most difficult component to model (Table 1). The simpler models, i.e., FFNN and WNN, performed better on all components, presumably because they cope better with small datasets. The small amount of data does not seem to have been sufficient to train robust LSTMs.

In addition to performance, the resource constraints of the insole are an important consideration when selecting an NN architecture. We therefore decided to implement FFNN models in the firmware of the insole, as they have the smallest number of parameters and thus require the least memory. One FFNN was implemented for each of the three GRF components. These models provide estimates of the individual components after each step.
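The memory argument can be made concrete with a small forward pass and a parameter count. This Python sketch only illustrates the arithmetic, since the firmware itself is written in C. The single hidden layer, the tanh activation, the hidden size of 20, and the 506-value input (two aggregated pressure signals and three acceleration axes at 101 points each, plus step length) are assumptions; the text states only that each FFNN outputs 101 points per GRF component.

```python
import math


def dense(x, weights, biases, activation=None):
    """One fully connected layer: y_j = act(sum_i W[j][i] * x[i] + b[j])."""
    out = []
    for w_row, b in zip(weights, biases):
        z = sum(w * xi for w, xi in zip(w_row, x)) + b
        out.append(activation(z) if activation else z)
    return out


def ffnn_forward(x, layers):
    """layers: list of (weights, biases); tanh on hidden layers, linear output."""
    for idx, (w, b) in enumerate(layers):
        is_output = idx == len(layers) - 1
        x = dense(x, w, b, None if is_output else math.tanh)
    return x


def count_parameters(layer_sizes):
    """Number of weights plus biases of a fully connected network."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
```

For the hypothetical sizes above, `count_parameters([506, 20, 101])` gives 12,261 parameters, roughly 49 KB per model as 32-bit floats; a recurrent model such as an LSTM would add gate weights per hidden unit on top of this.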

The first phase, i.e., the evaluation of the different models, was performed in Matlab 2019b (MathWorks, USA), while the subsequent implementation in the firmware of the insole was carried out in the programming language C.

3.4 Automatic classification of physiological and atypical gait patterns

To classify the estimated GRF data from the pressure insole into physiological and pathological gait patterns, another machine learning model was trained based on the publicly accessible GaitRec GRF dataset [42]. It comprises GRF measurements from 2084 patients with various musculoskeletal impairments and data from 211 healthy control subjects. This dataset is the largest and, in terms of pathology, the most diverse dataset available to date.

A balanced subset (on the subject, trial, and pathology levels) was randomly drawn from the overall dataset. This subset is partitioned into a physiological and a pathological class, each containing data from 180 individuals. For each person, six trials were selected randomly, resulting in a total of 2160 trials. Using the predefined training/test split of the GaitRec dataset, the balanced training and test sets used in this study comprise 1584 and 576 trials, respectively.
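The sampling scheme can be sketched as follows: 2 classes × 180 subjects × 6 trials gives 2160 trials, and the 1584 training and 576 test trials correspond to 264 and 96 subjects at six trials each. This is a simplified, hypothetical illustration that balances only the subject and trial levels; the pathology-level balancing within the GD class and GaitRec's predefined split are not reproduced.

```python
import random


def balanced_subset(trials_by_subject, labels, n_per_class=180, n_trials=6, seed=0):
    """Draw n_per_class subjects per class and n_trials trials per subject.

    trials_by_subject: dict subject_id -> list of trial ids
    labels: dict subject_id -> class label (e.g. "NG" or "GD")
    Returns a list of (subject_id, trial_id, label) tuples.
    """
    rng = random.Random(seed)
    subset = []
    for cls in sorted(set(labels.values())):
        # Only subjects with enough trials are eligible.
        eligible = sorted(s for s, c in labels.items()
                          if c == cls and len(trials_by_subject[s]) >= n_trials)
        for s in rng.sample(eligible, n_per_class):
            for t in rng.sample(trials_by_subject[s], n_trials):
                subset.append((s, t, cls))
    return subset
```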

Motivated by promising results of approximately 91% classification accuracy on a similar dataset [9], we implemented an SVM with a linear kernel for this classification task using the LIBSVM library [43]. First, the hyperparameters were tuned with five-fold cross-validation on the training data. The linear SVM with the best hyperparameters was then trained on the whole training set and evaluated on the unseen test data. Using all three GRF components as input, the SVM classified the GaitRec test data with an accuracy of 91%. As an additional evaluation step, all physiological GRFs estimated by the FFNN models (see Section 3.3) were classified with the same SVM model, achieving an accuracy of 97%.
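LIBSVM itself is not reproduced here. To illustrate the tuning procedure (five-fold cross-validation over a grid of regularization values C, then retraining on all training data), the sketch swaps in a Pegasos-style stochastic subgradient solver for the linear soft-margin SVM. The C grid, the number of epochs, and absorbing the bias into a constant extra feature (which also regularizes it) are assumptions of this sketch.

```python
import random


def train_linear_svm(X, y, C=1.0, epochs=50, seed=0):
    """Pegasos-style subgradient training of a linear soft-margin SVM.

    Stand-in for LIBSVM; y must be in {-1, +1}. The bias is the last weight.
    """
    n = len(X)
    lam = 1.0 / (n * C)
    Xa = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xa[0])
    rng = random.Random(seed)
    t = 0
    for _ in range(epochs):
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, Xa[i]))
            w = [(1.0 - eta * lam) * wj for wj in w]  # regularization shrink
            if margin < 1.0:  # hinge-loss subgradient step
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xa[i])]
    return w


def predict(w, x):
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


def accuracy(w, X, y):
    return sum(predict(w, x) == yi for x, yi in zip(X, y)) / len(y)


def kfold_select_C(X, y, C_grid, k=5, seed=0):
    """Return the C with the best mean validation accuracy over k folds."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[f::k] for f in range(k)]
    best_C, best_acc = None, -1.0
    for C in C_grid:
        accs = []
        for fold in folds:
            val = set(fold)
            tr = [i for i in idx if i not in val]
            w = train_linear_svm([X[i] for i in tr], [y[i] for i in tr], C=C)
            accs.append(accuracy(w, [X[i] for i in fold], [y[i] for i in fold]))
        mean_acc = sum(accs) / k
        if mean_acc > best_acc:
            best_C, best_acc = C, mean_acc
    return best_C, best_acc
```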

In a final step, this pre-trained SVM was transferred to the firmware of the insoles by implementing the detection function as described in [44].
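One reason a linear kernel suits firmware is that the kernel expansion collapses into a single weight vector. A trained model with support vectors \(x_i\), coefficients \(\alpha_i y_i\), and offset \(\rho\) has the decision value \(\sum_i \alpha_i y_i \langle x_i, x\rangle - \rho = \langle w, x\rangle + b\) with \(w = \sum_i \alpha_i y_i x_i\) and \(b = -\rho\). The sketch below performs this conversion; it is not the implementation from [44]. The argument names mirror LIBSVM's stored model fields, and as we understand LIBSVM's convention, a positive decision value corresponds to the first label stored in the model.

```python
def collapse_linear_svm(support_vectors, sv_coef, rho):
    """Turn a linear-kernel SVM (support vectors, alpha_i*y_i, rho) into (w, b)
    so that w.x + b equals sum_i sv_coef_i * <sv_i, x> - rho."""
    dim = len(support_vectors[0])
    w = [0.0] * dim
    for sv, coef in zip(support_vectors, sv_coef):
        for j in range(dim):
            w[j] += coef * sv[j]
    return w, -rho


def decision_value(w, b, x):
    """What the firmware would evaluate: one dot product plus an offset."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def kernel_decision_value(support_vectors, sv_coef, rho, x):
    """Reference: the kernel expansion evaluated directly (linear kernel)."""
    return sum(c * sum(s * xi for s, xi in zip(sv, x))
               for sv, c in zip(support_vectors, sv_coef)) - rho
```

The device then stores only `dim + 1` numbers instead of every support vector, and each prediction costs one dot product.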

4 Auditory feedback

In a previous study on auditory feedback of plantar pressure distributions provided by sensor-instrumented insoles [2], we compared sonification models representing ankle-foot rollovers based on several synthesis algorithms and showed their impact on the gait behavior of the test participants. For auditory feedback that represents classification estimates, i.e., static states, simpler sonification models seem suitable.

Following the work of Biesmans and Markopoulos [31], we chose a design that encourages users to rely on their own proprioception by providing a minimum of auditory information. This was achieved through an event-based sonification that responds only when the classification estimate crosses the decision threshold, i.e., changes from NG to GD or vice versa.
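The event-based principle amounts to edge detection on the stream of class labels. In the system described here this logic lives in the sonification patch; the Python sketch below only illustrates it. The label strings `"NG"`/`"GD"`, the event names, and the choice to stay silent for the very first label are assumptions.

```python
def state_change_events(labels):
    """Yield (index, event) only when the class estimate switches.

    'positive' marks a GD -> NG change (ascending sequence), 'negative' an
    NG -> GD change (descending sequence). Repeated labels produce no event.
    """
    previous = None
    for i, label in enumerate(labels):
        if previous is not None and label != previous:
            yield i, ("positive" if label == "NG" else "negative")
        previous = label
```

For example, the label stream `NG, NG, GD, GD, NG, NG` triggers exactly two sounds: a negative event at index 2 and a positive one at index 4.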

For the sonification application, we used the MAX8 programming environmentFootnote 3, which, due to its dataflow-oriented programming paradigm, is particularly suitable for prototype implementations in the field of auditory display. The incoming data streams from the left and right insoles were transmitted via OSC (see Section 3.1) and included raw sensor data as well as the estimated classification labels (distinguishing between NG and GD). The auditory feedback was designed to be easy to understand, pleasant, and adjustable (pitch, sequence, duration, loudness) to the individual needs of different target users, such as users with gait impairments who use the insoles for daily exercises. Two acoustic events are needed for the feedback. The first triggers an ascending three-tone sequence (see Table 2), synthesized by a guitar-like Karplus-Strong implementation (positive feedback), when the decision of the SVM changes from GD to NG. The second is triggered when the decision changes back to GD; in this case, a descending three-tone sequence (negative feedback) is played. Note that only changes in class (i.e., state) cause an acoustic event. Additional reverb is used to smooth the signals.
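For readers unfamiliar with Karplus-Strong synthesis, the sketch below renders plucked tones offline: a delay line filled with noise is read out repeatedly while each sample is replaced by a damped two-point average, which produces a decaying, string-like tone. The pitches used in the usage note (C4, E4, G4) are placeholders for the sequence in Table 2, and the decay factor, tone length, and sample rate are assumptions; reverb is omitted.

```python
import random


def karplus_strong(freq_hz, duration_s, fs=44100, decay=0.996, seed=0):
    """Basic Karplus-Strong plucked string: a noise-filled delay line whose
    output is fed back through a damped two-point average."""
    rng = random.Random(seed)
    period = max(2, int(round(fs / freq_hz)))  # delay-line length sets the pitch
    line = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    out = []
    for n in range(int(round(duration_s * fs))):
        i = n % period
        sample = line[i]
        out.append(sample)
        line[i] = decay * 0.5 * (sample + line[(i + 1) % period])
    return out


def three_tone_sequence(freqs_hz, tone_s=0.2, fs=44100):
    """Concatenate plucked tones, e.g. ascending for positive feedback."""
    seq = []
    for f in freqs_hz:
        seq.extend(karplus_strong(f, tone_s, fs))
    return seq
```

An ascending placeholder sequence could be rendered with `three_tone_sequence([261.63, 329.63, 392.00])`, and the descending variant by reversing the frequency list.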

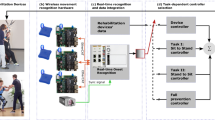

4.1 User-centered training

To achieve the second aim of our scoping studyFootnote 4, we pursued an alternative, user-centered approach to obtain meaningful expert feedback. We used the raw sensor data from the insole and integrated a linear SVM [45,46,47] as a classifier into the sonification application (see Fig. 5). This model can be trained individually for a specific user to classify certain gait patterns, e.g., as correct or incorrect. The input data consist of 15 features (12 pressure sensors and three accelerometer axes) per sample, recorded at 25 samples per second. Through a graphical user interface, the operator records the insole data and immediately trains the model with these data and the associated ground-truth label. After the model has been trained with the user’s data, different sonification models can be assigned to the different labels for online feedback.

The model was also successfully trained and tested with static postures in simple exercises such as a lunge or squat, where it was able to detect weight shifts from the lateral (“outer”) to the medial (“inner”) side of the foot and vice versa. During these exercises, a physical therapist is especially interested in helping the patient distribute more weight to the lateral side of the foot, thereby maintaining a neutral frontal alignment of the knee. If the patient puts more weight on the medial side of the foot, there is a higher risk of unfavorable loads on the knee. To provide adequate auditory feedback for lateral and medial weight shifts, the sonification model described in the previous section was extended by two variants of continuous feedback: a wind metaphor (concrete) and a trumpet metaphor (abstract). The wind sound, based on the model by Farnell [48], is implemented in a howling and a whistling version, which are clearly distinguishable. The alternative trumpet tones (low/high) are created through FM synthesis. After the attack and decay phases, each tone reaches a soft sustain phase that is slightly modulated (see Table 3).
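A trumpet-like FM tone can be sketched with a single carrier and modulator at a 1:1 frequency ratio, a Chowning-style brass approximation in which the modulation index follows the amplitude envelope so the tone is brightest during the attack. All parameter values below (envelope times, sustain level, maximum index, and a slight amplitude modulation during the sustain) are assumptions standing in for the settings in Table 3.

```python
import math


def fm_brass_tone(freq_hz, duration_s, fs=44100, attack_s=0.05, decay_s=0.1,
                  sustain_level=0.6, max_index=5.0, tremolo_hz=5.0,
                  tremolo_depth=0.02):
    """FM tone with a 1:1 carrier/modulator ratio; the modulation index
    tracks the attack-decay-sustain envelope, and the sustain is slightly
    amplitude-modulated."""
    out = []
    for n in range(int(round(duration_s * fs))):
        t = n / fs
        if t < attack_s:
            env = t / attack_s
        elif t < attack_s + decay_s:
            env = 1.0 - (1.0 - sustain_level) * (t - attack_s) / decay_s
        else:
            env = sustain_level * (1.0 + tremolo_depth *
                                   math.sin(2.0 * math.pi * tremolo_hz * t))
        index = max_index * env  # brighter spectrum when louder
        phase = (2.0 * math.pi * freq_hz * t
                 + index * math.sin(2.0 * math.pi * freq_hz * t))
        out.append(env * math.sin(phase))
    return out
```

The low and high variants would differ only in `freq_hz`; since the tone is continuous feedback, a real implementation would also need a release phase when the deviation ends.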

With the implemented continuous feedback, deviations from the normal state or NG in two directions (e.g., medial and lateral weight shifts) can now be represented independently. This is important because people may respond to auditory feedback on a deviation (howling wind) by overcompensating, i.e., deviating in the opposite direction. In this case, a second sound (whistling wind) indicates the overcompensation. Apart from the choice between wind and trumpet, which is exclusive, all components can be activated independently.
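The following minimal sketch shows how per-sample classifier output could drive the two-directional continuous feedback. The 15-feature layout follows the text (12 pressure values plus three acceleration axes per sample). The state names, the assignment of the medial shift to the howling or low variant and the lateral shift to the whistling or high variant, and the string identifiers for sound layers are all hypothetical.

```python
def feature_vector(pressures, acceleration):
    """One classifier sample: 12 pressure readings + 3 accelerometer axes."""
    if len(pressures) != 12 or len(acceleration) != 3:
        raise ValueError("expected 12 pressure values and 3 acceleration axes")
    return list(pressures) + list(acceleration)


# Hypothetical assignment of deviation direction to sound variant.
SOUND_VARIANTS = {
    "wind": {"medial": "howling", "lateral": "whistling"},
    "trumpet": {"medial": "low", "lateral": "high"},
}


def continuous_feedback(state, metaphor="wind"):
    """Return the sound layer for a predicted posture state, or None
    (silence) for the neutral state."""
    if state == "neutral":
        return None
    return f"{metaphor}:{SOUND_VARIANTS[metaphor][state]}"
```

Because the labels arrive 25 times per second, a practical patch may also want to smooth the label stream before switching sounds; that step is omitted here.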

User study under static positions. On the left, participants wearing the smart insoles are asked to keep their load evenly distributed on the base of support while holding two positions: a one-leg stand and a lunge. On the right, the different mediolateral load shift and imbalance scenarios are displayed, which trigger auditory feedback to guide the participant.

5 Assessment by physical therapists

To evaluate our first prototypes of automated auditory feedback, we conducted a focus group session with two physical therapists (one female and one male, with 7 and 28 years of experience) from our institution. Neither therapist was associated with the project. The session lasted 2 h and comprised:

(a) an introduction to the approach, its functionality, and the intended target group;

(b) a demonstration session including two scenarios: (1) recording and training of two walking states (physiological and lateral load) by one of the team members, including testing based on real-time changes of gait behavior, and (2) recording and training of three posture states (normal standing, and standing with weight distributed to the medial or lateral aspect of the foot), including testing on the basis of real-time changes of load shifting, and

(c) an overall discussion and evaluation of the potential of the approach for application as a preventive and supportive tool for physical therapy and tele-rehabilitation.

The experts were addressed in turn, and their feedback was transcribed on paper during the session by the moderator and an observer. At the end of the session, a summary of the notes was presented to both experts for validation. Both experts agreed that they could clearly distinguish between all the auditory feedback implementations and attribute them to the observed gait behavior. The wind noise associated with lateral (external) loading was clearly recognizable, and the experts could attribute it to the gait behavior. In their view, the method offers considerable potential and is particularly useful at the beginning of treatment. The trumpet sounds were also clearly attributable to the gait behavior. They were initially perceived as more pleasant than the wind sounds; according to the experts, however, the trumpet sounds can be expected to become more intrusive than the wind sounds during longer tests. One expert did not fully appreciate the wind sounds in general and suggested offering several sounds for users to choose from. Sounds should always be perceived as pleasant (and not as a punishment) for therapy to be successful.

Both physical therapists found the continuous auditory feedback easier to comprehend than the event-based sonification they had heard previously. The ascending and descending three-tone sequences of the event-based sonification were perceived as rather complex, particularly with respect to the cognitive workload imposed on potential elderly clients. In general, the experts recommended pre-testing the perceptual abilities of prospective patients.

During the observation, the experts noted that auditory feedback could trigger compensatory movements: the absence of an auditory signalFootnote 5 does not necessarily mean that the user’s response resulted in a physiological gait pattern. The subsequent demonstration, in which three static postures were trained, offered a first approach to making such compensatory movements audible.

In conclusion, the two experts suggested that future implementations in physical therapy should begin with an initial placement test and then gradually increase the difficulty of the static positions, since the therapy setup focuses more on static positions, which are usually faster and easier to interpret.

6 Usability of auditory feedback

An additional user study was designed to assess the clarity of the proposed auditory signals for non-expert users. Clarity and ease of use are fundamental for users’ autonomy when performing the intervention exercises prescribed by their therapists at home. If the auditory signal is clear, participants should be able to use the feedback to judge their load distribution, i.e., whether or not it is centered on their base of support. For this purpose, the physical therapists in our team proposed two static exercises: one-leg stand and lunge hold. In both exercises, the person holding the position must keep their weight evenly distributed over the base of support, which the smart insoles can track directly in real time. In this setup, whenever our machine learning models detect a major load shift, the insole user is warned by an auditory cue (see Fig. 6).

6.1 Test design and setup

This user study aimed to assess the usability of the system and the experience of non-expert users with auditory guidance. Three auditory cues were selected for evaluation: an event signal and two continuous signals (wind and trumpet sounds). The event-based signal, Event Signal, was previously used in our study to signal atypical gait patterns (see Section 4). It is a guitar-like sound with variations indicating negative feedback (triggered when the system detects a load shift to the medial (inner) or lateral (outer) side of the base of support) and positive feedback (triggered when the load is evenly distributed). The continuous sounds, Continuous (wind) and Continuous (trumpet), were used in our expert review with low and high frequencies to indicate different states (see Section 4). In this user study, only the high-frequency variants were used, to indicate that the user’s load distribution was incorrect. Under these conditions, continuous auditory feedback was played whenever the load was not evenly distributed.

The effects of the three auditory conditions on ease of use, workload, user preference, and user performance were assessed in a within-subject design. Each participant was asked to wear the insoles and hold both static positions for a given time on each leg (right and left). For the One-Leg Stand, participants were asked to stand on the requested leg and raise the other leg towards the back for 40 s before switching legs. For the Lunge Hold, participants were asked to perform a forward lunge and hold it at a \(90^\circ\) knee angle for 20 s before switching legs. The tasks were performed in front of two loudspeakers. Participants stood 150 cm away from the speakers, which were placed 80 cm apart and 80 cm above the floor. After performing both positions with both legs under an auditory condition, participants answered standard questionnaires related to that task. The procedure was repeated for each of the three auditory conditions, whose order was counterbalanced with a \(3 \times 3\) Latin square. At the end of the session, participants answered final questions about the system and their experience. Figure 7 shows the overall test protocol, including an initial training phase in which our SVM classifier was trained for each participant.
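The text does not say which Latin square was used; a cyclic square is the simplest construction and is assumed in the sketch below. Each row is one condition order, and every condition appears exactly once per row and once per presentation position. With ten participants and three orders, the groups cannot be equal in size (here 4, 3, and 3). A single cyclic square balances serial position but not every first-order carryover; for an odd number of conditions, full carryover balance would require two squares (a Williams design).

```python
def cyclic_latin_square(conditions):
    """Row r is the condition list rotated by r positions."""
    n = len(conditions)
    return [[conditions[(r + c) % n] for c in range(n)] for r in range(n)]


def order_for_participant(participant_index, conditions):
    """Assign participants to rows of the square in turn."""
    square = cyclic_latin_square(conditions)
    return square[participant_index % len(conditions)]
```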

Data for the dependent variables were collected through log files and questionnaires. After-task questionnaires included the Single Ease Question (SEQ) [49] with a single 7-point Likert scale to assess ease of use, the (non-weighted) NASA Task Load Index [50] with six 7-point Likert scales to assess workload, and two additional 4-point Likert scales for ease of each position. After-study questionnaires included two additional questions, each with three 5-point Likert scales, to assess user satisfaction and perceived performance under each auditory condition. Finally, the System Usability Scale (SUS) [51] was used to assess the perceived usability of our system. The SUS was designed to assess the appropriateness of a tool or system in a specified context and has therefore also been used to evaluate auditory and multisensory interfaces [52,53,54]. The scale has been shown to reliably measure the ability of users to complete tasks with the assessed system, the level of resources consumed in performing the tasks, and the users’ subjective reactions to using the system [55].
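For reference, the standard SUS scoring rule converts ten 1–5 responses into a 0–100 score: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5. The sketch below implements this rule; the input format (a list ordered from item 1 to item 10) is an assumption.

```python
def sus_score(responses):
    """Standard SUS score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5
```

A participant answering "3" to every item scores 50, well below the commonly cited average of 68.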

6.2 Participants

We recruited 10 participants via email, class announcements, and direct contact. The sample comprised 3 male and 7 female participants, with ages ranging from 21 to 57 years (\(M = 32\), \(SD = 11.5\)). Six of them were students, two were faculty members, and two were not enrolled at the university. All participants read and signed an informed consent form before the experiment.

Two participants reported academic or professional experience with physical therapy. Four participants reported a mobility problem: two cases of torn right ankle ligaments, one twisted right ankle, and one case of knee pain. We found correlations between the participants' reported mobility issues and their ratings during the experiment; these are presented and discussed in the next section.

6.3 Results

Figure 8 shows the main ease-of-use results for the auditory conditions and positions. The task was rated as equally easy under all three auditory conditions, with no effect of auditory condition. However, there was a clear difference between the two static positions: a non-parametric Friedman test showed that the Lunge Hold was perceived as significantly more difficult than the One-Leg Stand (\(\chi^2(1) = 4.5\), \(p = .03\)).

Overall, the tasks were not only rated as easy under all auditory conditions but also yielded low workload scores (see Fig. 9). Workload did not differ significantly between Event Signal (\(M = 20\), \(SD = 11.2\)), Continuous (wind) (\(M = 20\), \(SD = 8.9\)), and Continuous (trumpet) (\(M = 22.5\), \(SD = 11.3\)). In addition, the mean SUS score was 74 (\(SD = 17.2\)), which corresponds to “Good” usability relative to published benchmarks [56, 57], despite the system's prototype status. Six of the ten participants rated the system above the commonly cited SUS average of 68.

Moreover, in the after-study questionnaire, users rated their satisfaction with Continuous (trumpet) (\(M = 2\), \(SD = 1.2\)) lower than with both Event Signal (\(M = 3.7\), \(SD = 1.1\)) and Continuous (wind) (\(M = 3.5\), \(SD = 1.2\)). The same pattern held for users' ratings of their own performance: Continuous (trumpet) (\(M = 3.3\), \(SD = 1.2\)) yielded lower scores than both Event Signal (\(M = 4.2\), \(SD = 0.4\)) and Continuous (wind) (\(M = 4.1\), \(SD = 0.7\)). A non-parametric Friedman test confirmed the effect of auditory condition on both satisfaction (\(\chi^2(2) = 7.4\), \(p = .02\)) and perceived performance ratings (\(\chi^2(2) = 8\), \(p = .01\)) (see Fig. 10).
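The Friedman statistics reported in this section are computed from a participants × conditions table of ratings: each participant's ratings are ranked across the k conditions (tied ratings share their average rank), the rank sums \(R_j\) are combined as \(\chi^2_F = \frac{12}{nk(k+1)}\sum_j R_j^2 - 3n(k+1)\), and the result is divided by the tie correction \(1 - \sum (t^3 - t) / (n(k^3 - k))\), where t is the size of each group of tied ratings within a participant. The sketch below is a stdlib-only illustration, not the authors' analysis script, and the ratings in it are hypothetical. For df = k − 1 = 2 the chi-square upper tail has the closed form \(e^{-\chi^2/2}\), which the helper uses; that shortcut does not hold for other degrees of freedom.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values 1..n in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of ranks start+1 .. end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def friedman_chi2(table):
    """Tie-corrected Friedman statistic; rows = participants, columns = conditions."""
    n, k = len(table), len(table[0])
    rank_sums = [0.0] * k
    tie_term = 0
    for row in table:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
        # Each group of t tied ratings within a row contributes t^3 - t.
        tie_term += sum(t ** 3 - t for t in Counter(row).values())
    chi2 = 12 * sum(r * r for r in rank_sums) / (n * k * (k + 1)) - 3 * n * (k + 1)
    correction = 1 - tie_term / (n * (k ** 3 - k))
    if correction == 0:
        raise ValueError("every participant gave identical ratings in all conditions")
    return chi2 / correction


def p_value_df2(chi2):
    """Upper-tail chi-square probability; exp(-x/2) is exact only for df = 2."""
    return math.exp(-chi2 / 2)


if __name__ == "__main__":
    # Hypothetical 1-5 satisfaction ratings; columns are
    # Event Signal, Continuous (wind), Continuous (trumpet).
    ratings = [
        [4, 4, 2],
        [3, 4, 1],
        [5, 3, 2],
        [4, 3, 3],
        [3, 4, 2],
    ]
    stat = friedman_chi2(ratings)
    print(f"chi2(2) = {stat:.2f}, p = {p_value_df2(stat):.3f}")
```

The same `friedman_chi2` function also handles the two-position comparison (k = 2, df = 1), whereas SciPy's `scipy.stats.friedmanchisquare` requires at least three groups; for df = 1 the p-value needs a general chi-square distribution rather than `p_value_df2`.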

Finally, the users' after-study answers showed a strong correlation between reported mobility problems and the rated ease of the different positions. A Spearman correlation test showed a significant negative association between having a mobility problem and satisfaction with the Continuous (wind) condition (\(r(28) = -.37\), \(p = .04\)). A significant negative association was also found between having a mobility problem and perceived performance with the Continuous (trumpet) condition (\(r(28) = -.40\), \(p = .02\)). Reported mobility problems had no effect on NASA-TLX scores. Overall, for the continuous feedback, participants described the wind sound as more “soothing” than the trumpets, as the latter “sounded like a train” moving towards the user [User #5]. However, one user who also reported mobility issues stated that “the bell sound made it easier to make corrections because of the different versions of sounds: one for straying away from the right position and one for being right again” [User #6].
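Spearman's correlation is Pearson's correlation computed on ranks, with tied values given average ranks; ties are unavoidable here because one variable is a yes/no mobility-problem indicator and the other a Likert rating. The sketch below is an illustrative stdlib-only version with hypothetical names and data. It also computes the usual test statistic \(t = r\sqrt{(n-2)/(1-r^2)}\) with \(n-2\) degrees of freedom, which is the convention behind the \(r(28)\) notation (30 paired observations). The p-value requires a Student t distribution (e.g., from SciPy) and is omitted.

```python
import math


def average_ranks(values):
    """Rank values 1..n in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of ranks start+1 .. end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equally long sequences with at least 3 items")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant input")
    return cov / (sx * sy)


def t_statistic(rho, n):
    """t statistic with n - 2 degrees of freedom for testing rho = 0."""
    if abs(rho) >= 1:
        return math.copysign(math.inf, rho)
    return rho * math.sqrt((n - 2) / (1 - rho ** 2))


if __name__ == "__main__":
    # Hypothetical: 1 = reported mobility problem, ratings on a 1-5 scale.
    mobility = [0, 0, 1, 0, 1, 0, 1, 0, 0, 1]
    rating = [4, 5, 2, 4, 3, 4, 2, 5, 3, 3]
    rho = spearman_rho(mobility, rating)
    print(f"rho = {rho:.2f}, t({len(rating) - 2}) = {t_statistic(rho, len(rating)):.2f}")
```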

7 Discussion and conclusions

In this paper, we present a proof-of-concept study in which we implement and assess different components of a mobile system based on sensor-instrumented insoles. Our system is designed to automatically classify deviations from functional movement in order to assist everyday users with static and dynamic exercises in gait rehabilitation interventions by providing clear auditory guidance. To this end, we first trained multiple models for estimating GRFs from insole data. We then designed and assessed auditory models for guiding users and experts through both dynamic and static exercises.

To deploy such a system in supervised therapy and tele-rehabilitation settings, it is fundamental that the system provides easy-to-use feedback. We therefore extend an initial report of our study [8] with an assessment of the user experience of non-expert individuals using our proposed system with different auditory guiding signals. The results show that, although the different auditory cues supported users with comparable performance and ease of use, subject-dependent factors affect user preferences. For example, when continuous feedback is used to indicate load imbalances, an ambient sound (wind) may be preferred over a more salient one (trumpet). In addition, mobility issues can affect user preferences.

7.1 Limitations

Due to the scope of the project, an evaluation including patients with gait disorders was not possible; we therefore evaluated our approach only with healthy gait patterns. We also observed correlations between user experience scores and age, gender, and knowledge of physical therapy. However, given the small and unbalanced sample (e.g., 3 male vs. 7 female participants), we are unable to assess the effects of these variables on usability and user experience. Further studies should assess these aspects with a larger sample that includes participants with gait impairments.

Although focus groups can elicit a broad range of viewpoints and insights, they have known limitations related to group dynamics, which may have influenced our expert evaluation [58]. Especially with our small sample, differences such as the invited experts' years of experience could have affected their responses. Further expert evaluation should also include interviews and observation of experts using the insoles with their clients.

7.2 Potential for future applications and treatments of pathologies

Gait-related pathologies can affect all age groups, and affected patients range from traumatic and neurological to geriatric cases. A variety of available tools enables the best possible support for patients during rehabilitation and helps them achieve the specified therapy goal. In shared decision-making, therapy options and therapeutic appliances that are feasible and fit the client's expectations must be discussed with the client [59]. Strategies are also needed to support clients in rural communities with a shortage of health care professionals [60] or under special circumstances such as pandemic-induced contact restrictions [61].

One focus of this project was to combine a mobile gait classification system with auditory feedback. A future application may be for patients with traumatic or orthopedic pathologies who require partial weight-bearing during their care. The auditory feedback could warn the client when the load on the injured leg is too high, helping to prevent damage during post-operative care. Because the client's perception of the actual load is essential, readily available concurrent auditory feedback while walking or climbing stairs in a clinic could be decisive at this stage of rehabilitation.

This auditory biofeedback application could also help the client at home while performing activities of daily living without the physical presence of a health professional. Moreover, a tool that provides feedback while the user practices independently can be crucial for actively involving the client in the rehabilitation process. According to self-determination theory, this may support the client's autonomy and thus foster motivation and adherence, and consequently improve the therapy outcome [62].

Elderly clients in particular have a higher risk of falls, which can impair their independence and in severe cases lead to death [61]. To assess a client's fall risk, clinics and hospitals use various assessments that capture risk factors such as a history of falls, muscle weakness, and poor balance [63]. A future application in this context might be a tool that monitors the client's gait behavior [5]. If the application detects deviations from the client's normal gait behavior or load distribution, it could provide auditory feedback to alert the user, who can then consciously check the situation. Recordings of these situations could help the client discuss ways to reduce the risk of falls with health professionals. To prevent future falls, an exercise program for strengthening muscles and improving balance could be delivered with the introduced tool, including auditory biofeedback for personalized support at home and in rural communities.

In conclusion, we demonstrated the feasibility of an auditory feedback system based on automated classification of functional gait disorders using an instrumented insole. An updated version of the insoles has since been classified as a medical device, which opens a broad range of potential applications that should be addressed in future research projects. Our concluding empirical studies highlighted the potential of the implemented auditory feedback for preventive and supportive applications in physical therapy, such as supervised therapy and tele-rehabilitation. Whether users' joint alignment and gait patterns improve after using the proposed auditory biofeedback system will be investigated in future studies.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Notes

The NNs have been tested with multiple configurations of hidden layers and numbers of nodes. For the WNN, we used one wavelet layer with the size of 25, and for the LSTM one layer with 25 hidden units. In the end, an FFNN with one hidden layer and 25 nodes was implemented.

Assessing the potential of auditory feedback based on automated gait classification for preventive and supportive applications in physical therapy.

In a setup with continuous feedback on one-directional deviations.

References

Tucker CA (2014) Measuring walking: a handbook of clinical gait analysis. LWW

Horsak B, Dlapka R, Iber M, Gorgas A-M, Kiselka A, Gradl C, Siragy T, Doppler J (2016) Sonigait: a wireless instrumented insole device for real-time sonification of gait. J Multimodal User Interfaces 10(3):195–206

Sigrist R, Rauter G, Riener R, Wolf P (2013) Augmented visual, auditory, haptic, and multimodal feedback in motor learning: a review. Psychon Bull Rev 20(1):21–53

Pietschmann J, Flores FG, Jöllenbeck T (2019) Gait training in orthopedic rehabilitation after joint replacement-back to normal gait with sonification? Int J Comput Sci Sport 18(2):34–48

Brodie MA, Okubo Y, Annegarn J, Wieching R, Lord SR, Delbaere K (2016) Disentangling the health benefits of walking from increased exposure to falls in older people using remote gait monitoring and multi-dimensional analysis. Physiol Meas 38(1):45

Cadore EL, Rodríguez-Mañas L, Sinclair A, Izquierdo M (2013) Effects of different exercise interventions on risk of falls, gait ability, and balance in physically frail older adults: a systematic review. Rejuvenation Res 16(2):105–114

Van Rossom S, Wesseling M, Smith CR, Thelen DG, Vanwanseele B, Jonkers I et al (2019) The influence of knee joint geometry and alignment on the tibiofemoral load distribution: a computational study. Knee 26(4):813–823

Iber M, Dumphart B, de Jesus Oliveira V-A, Ferstl S, M. Reis J, Slijepčević D, Heller M, Raberger A-M, Horsak B (2021) Mind the steps: towards auditory feedback in tele-rehabilitation based on automated gait classification. In: Audio Mostly 2021. pp 139–146

Slijepcevic D, Zeppelzauer M, Gorgas A-M, Schwab C, Schüller M, Baca A, Breiteneder C, Horsak B (2017) Automatic classification of functional gait disorders. IEEE J Biomed Health Inform 22(5):1653–1661

Magill R, Anderson D (2013) Motor learning and control: concepts and applications. McGraw-Hill, New York

Boyd J, Godbout A (2010) Corrective sonic feedback for speed skating: a case study. Georgia Institute of Technology

Schaffert N, Mattes K (2012) Acoustic feedback training in adaptive rowing. Georgia Institute of Technology

Turchet L (2014) Custom made wireless systems for interactive footstep sounds synthesis. Appl Acoust 83:22–31

Turchet L (2016) Footstep sounds synthesis: design, implementation, and evaluation of foot-floor interactions, surface materials, shoe types, and Walkers’ features. Appl Acoust 107:46–68

Baram Y, Miller A (2007) Auditory feedback control for improvement of gait in patients with multiple sclerosis. J Neurol Sci 254(1–2):90–94

Turchet L, Fazekas G, Lagrange M, Ghadikolaei HS, Fischione C (2020) The internet of audio things: state of the art, vision, and challenges. IEEE Internet Things J 7(10):10233–10249

Howell AM, Kobayashi T, Hayes HA, Foreman KB, Bamberg SJM (2013) Kinetic gait analysis using a low-cost insole. IEEE Trans Biomed Eng 60(12):3284–3290

Rodger MWM, Young WR, Craig CM (2014) Synthesis of walking sounds for alleviating gait disturbances in Parkinson’s disease. IEEE Trans Neural Syst Rehabil Eng 22(3):543–548. https://doi.org/10.1109/TNSRE.2013.2285410

van Rheden V, Grah T, Meschtscherjakov A (2020) Sonification approaches in sports in the past decade: a literature review. In: Proceedings of the 15th International Conference on Audio Mostly. pp 199–205

Gorgas A-M, Schön L, Dlapka R, Doppler J, Iber M, Gradl C, Kiselka A, Siragy T, Horsak B (2017) Short-term effects of real-time auditory display (sonification) on gait parameters in people with Parkinsons’ disease-a pilot study. In: Converging Clinical and Engineering Research on Neurorehabilitation II. Springer, pp 855–859

Guerra J, Smith L, Vicinanza D, Stubbs B, Veronese N, Williams G (2020) The use of sonification for physiotherapy in human movement tasks: a scoping review. Sci Sports 35(3):119–129. https://doi.org/10.1016/j.scispo.2019.12.004

Park J, Na Y, Gu G, Kim J (2016) Flexible insole ground reaction force measurement shoes for jumping and running. In: 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob). IEEE, pp 1062–1067

Dyer PS, Bamberg SJM (2011) Instrumented insole vs. force plate: a comparison of center of plantar pressure. In: 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE, pp 6805–6809

De Rossi S, Donati M, Vitiello N, Lenzi T, Giovacchini F, Carrozza M (2012) A wireless pressure-sensitive insole for gait analysis. Congr Naz di Bioingegneria 1–2

Putti A, Arnold G, Cochrane L, Abboud R (2007) The pedar® in-shoe system: repeatability and normal pressure values. Gait Posture 25(3):401–405

Rosquist PG (2017) Modeling three dimensional ground reaction force using nanocomposite Piezoresponsive foam sensors. Brigham Young University

Cordero AF, Koopman H, Van Der Helm F (2004) Use of pressure insoles to calculate the complete ground reaction forces. J Biomech 37(9):1427–1432

Rouhani H, Favre J, Crevoisier X, Aminian K (2010) Ambulatory assessment of 3D ground reaction force using plantar pressure distribution. Gait Posture 32(3):311–316

He J, Lippmann K, Shakoor N, Ferrigno C, Wimmer MA (2019) Unsupervised gait retraining using a wireless pressure-detecting shoe insole. Gait Posture 70:408–413

Turner A, Hayes S (2019) The classification of minor gait alterations using wearable sensors and deep learning. IEEE Trans Biomed Eng 66(11):3136–3145

Biesmans S, Markopoulos P (2020) Design and evaluation of sonis, a wearable biofeedback system for gait retraining. Multimodal Technol Interact 4(3):60

González I, Fontecha J, Hervás R, Bravo J (2015) An ambulatory system for gait monitoring based on wireless sensorized insoles. Sensors 15(7):16589–16613

Hohagen J, Wöllner C (2018) Bewegungssonifikation: Psychologische Grundlagen und Auswirkungen der Verklanglichung menschlicher Handlungen in der Rehabilitation, im Sport und bei Musikaufführungen. Jahrbuch Musikpsychologie 28:1–36

Hu X, Zhao J, Peng D, Sun Z, Qu X (2018) Estimation of foot plantar center of pressure trajectories with low-cost instrumented insoles using an individual-specific nonlinear model. Sensors 18(2):421

Jagos H (2016) Mobile gait analysis via instrumented shoe insoles-eshoe: detection of movement patterns and features in healthy subjects and hip fracture patients. PhD thesis, TU Wien

Martínez-Martí F, Martínez-García MS, García-Díaz SG, García-Jiménez J, Palma AJ, Carvajal MA (2014) Embedded sensor insole for wireless measurement of gait parameters. Australas Phys Eng Sci Med 37(1):25–35

Sim T, Kwon H, Oh SE, Joo S-B, Choi A, Heo HM, Kim K, Mun JH (2015) Predicting complete ground reaction forces and moments during gait with insole plantar pressure information using a wavelet neural network. J Biomech Eng 137(9):091001

Dumphart B, Schimakno M, Nöstlinger S, Iber M, Horsak B, Heller M (2021) Validity and reliability of a mobile insole to measure vertical ground reaction force during walking. Virtual Meeting

Sivakumar S, Gopalai A, Gouwanda D, Hann LK (2016) ANN for gait estimations: a review on current trends and future applications. In: 2016 IEEE EMBS Conference on Biomedical Engineering and Sciences (IECBES). IEEE, pp 311–316

Zhen T, Yan L, Yuan P (2019) Walking gait phase detection based on acceleration signals using LSTM-DNN algorithm. Algorithms 12(12):253

Yu B, Gabriel D, Noble L, An K-N (1999) Estimate of the optimum cutoff frequency for the butterworth low-pass digital filter. J Appl Biomech 15(3):318–329

Horsak B, Slijepcevic D, Raberger A-M, Schwab C, Worisch M, Zeppelzauer M (2020) Gaitrec, a large-scale ground reaction force dataset of healthy and impaired gait. Sci Data 7(1):1–8

Chang C-C, Lin C-J (2001) LIBSVM: a library for support vector machines. http://www.csie.ntu.edu.tw/~cjlin/libsvm/

Burges CJ (1998) A tutorial on support vector machines for pattern recognition. Data Min Knowl Disc 2(2):121–167

Bullock J, Momeni A (2015) ml.lib: robust, cross-platform, open-source machine learning for Max and Pure Data. In: NIME. pp 265–270

Chang C-C, Lin C-J (2001) LIBSVM: a library for support vector machines. http://www.csie.ntu.edu.tw/~cjlin/libsvm/

Gillian N, Paradiso JA (2014) The gesture recognition toolkit. J Mach Learn Res 15(1):3483–3487

Farnell A (2010) Designing sound. MIT Press

Sauro J, Dumas JS (2009) Comparison of three one-question, post-task usability questionnaires. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM

Hart SG (2006) NASA-task load index (NASA-TLX): 20 years later. In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting, vol 50. Sage Publications, pp 904–908

Bangor A, Kortum PT, Miller JT (2008) An empirical evaluation of the system usability scale. Int J Hum Comput Interact 24(6):574–594

Garzo A, Silva PA, Garay-Vitoria N, Hernandez E, Cullen S, Cochen De Cock V, Ihalainen P, Villing R (2018) Design and development of a gait training system for Parkinson’s disease. PLoS One 13(11):e0207136

Hall S, Wild F et al (2019) Real-time auditory biofeedback system for learning a novel arm trajectory: a usability study. In: Perspectives on Wearable Enhanced Learning (WELL). Springer, pp 385–409

Gao Y, Zhai Y, Hao M, Wang L, Hao A (2021) Research on the usability of hand motor function training based on VR system. In: 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct). IEEE, pp 354–358

Brooke J (1996) SUS: a quick and dirty usability scale. In: Usability Evaluation in Industry. Taylor & Francis, London, pp 189–194

Brooke J (2013) SUS: a retrospective. J Usability Stud 8(2):29–40

Bangor A, Kortum P, Miller J (2009) Determining what individual SUS scores mean: Adding an adjective rating scale. J Usability Stud 4(3):114–123

Lazar J, Feng JH, Hochheiser H (2017) Research methods in human-computer interaction. Morgan Kaufmann

Barry MJ, Edgman-Levitan S (2012) Shared decision making: the pinnacle of patient-centered care

World Health Organization, Ageing and Life Course Unit (2008) WHO global report on falls prevention in older age. World Health Organization

Monaghesh E, Hajizadeh A (2020) The role of telehealth during COVID-19 outbreak: a systematic review based on current evidence. BMC Public Health 20(1):1–9

Lewthwaite R, Chiviacowsky S, Drews R, Wulf G (2015) Choose to move: The motivational impact of autonomy support on motor learning. Psychon Bull Rev 22(5):1383–1388

Park S-H (2018) Tools for assessing fall risk in the elderly: a systematic review and meta-analysis. Aging Clin Exp Res 30(1):1–16

Acknowledgements

We further wish to thank our colleagues Kerstin Prock, Andreas Stübler, and Susanne Mayer for contributing their expertise through an assessment of our approach.

Funding

Open access funding provided by FH St. Pölten - University of Applied Sciences. Our research is funded by the Austrian Ministry of Digital and Economic Affairs within the FFG IKT der Zukunft - BENEFIT project SONIGait II (868220). Brian Horsak was partly supported by the Gesellschaft für Forschungsförderung NÖ (Research Promotion Agency of Lower Austria) within the Endowed Professorship for Applied Biomechanics and Rehabilitation Research (SP19-004).

Ethics declarations

Conflict of interest

The co-authors Stefan Ferstl and Joschua Reis were employed by the company partner stAPPtronics for the duration of this study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Jesus Oliveira, V.A., Slijepčević, D., Dumphart, B. et al. Auditory feedback in tele-rehabilitation based on automated gait classification. Pers Ubiquit Comput 27, 1873–1886 (2023). https://doi.org/10.1007/s00779-023-01723-2

DOI: https://doi.org/10.1007/s00779-023-01723-2