Abstract

Transactional stream processing (TSP) strives to create a cohesive model that merges the advantages of both transactional and stream-oriented guarantees. Over the past decade, numerous endeavors have contributed to the evolution of TSP solutions, uncovering similarities and distinctions among them. Despite these advances, a universally accepted standard approach for integrating transactional functionality with stream processing remains to be established. Existing TSP solutions predominantly concentrate on specific application characteristics and involve complex design trade-offs. This survey intends to introduce TSP and present our perspective on its future progression. Our primary goals are twofold: to provide insights into the diverse TSP requirements and methodologies, and to inspire the design and development of groundbreaking TSP systems.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Stream processing, originating in the late 1990s and early 2000s with publish–subscribe systems and data stream management systems (DSMS) [10], has become vital for real-time data handling. Various frameworks like Apache Storm, Apache Flink, and Apache Kafka Streams, each with unique features, have been developed. The integration of relational queries with continuous stream processing has been explored since the first-generation stream processing engine (SPE) [37]. However, modern SPEs often lack the capability to maintain or query relational tables consistently during processing [2, 9, 49]. This leads to two key limitations: the absence of transactional guarantees and inconsistency across distributed systems [53].

Transactional stream processing (TSP) systems have emerged as a solution, blending real-time data stream management with ACID (atomicity, consistency, isolation, durability) guarantees [4, 7, 16, 30, 33, 39, 52, 76]. Unlike traditional databases, in TSP systems, transactions initiate via streaming events and can be triggered individually or in batches. To qualify as a TSP system, two core criteria must be met: (1) real-time data processing through immediate handling of discrete data tuples, thus negating the need for batch processing or extensive data storage; and (2) robust transactional integrity assured by ACID properties. Transactions in this context may alter the system’s internal state—including data aggregates, intermediate results, or configurations—and potentially initiate external notifications or other side effects. Moreover, TSP systems provide interfaces that accommodate both continuous and relational queries, enabling versatile client interactions.

Integrating streaming and transactional capabilities introduces a unique set of challenges, resulting in varied design and implementation approaches [3, 16, 27, 36, 40, 59, 88]. These variations typically stem from application-specific requirements. TSP systems use streaming mechanisms to maintain up-to-date system states and offer real-time shared table views. Transactional features, on the other hand, ensure the consistent maintenance of these states and views.

The absence of standardized transaction models or query languages complicates the design of TSP interfaces and APIs. This lack of uniformity introduces a range of approaches, making it challenging to establish consistent terminology and feature sets across the field. Our survey aims to illuminate these variations and identify common threads. In TSP systems, query languages must support both continuous streams and transactional operations. The system’s internal state not only is influenced by incoming streams, but can also be altered via explicit insert/update queries. This dual approach to state management adds complexity yet offers flexibility, allowing diverse transactional properties based on interaction modalities with the system.

Leaderboard Maintenance (LM) [53]

Streaming Ledger (SL) [33]

1.1 Example use cases

We present two scenarios illustrating the value and necessity of transactional stream processing (TSP), with further application scenarios detailed in Sect. 5.

Leaderboard maintenance. Described by Meehan et al. [53], this use case pertains to real-time leaderboard updates during a TV voting show like American Idol (Fig. 1). Viewers vote for contestants, and the system must instantly and accurately rank them. The challenge includes maintaining different leaderboards (top-3, bottom-3, top-3 trending) and continuously updating them. Key requirements include ACID guarantees for validating and recording votes in a shared table, Votes, and ordering guarantees to tally votes sequentially.

Streaming ledger. Proposed by dataArtisans [33], the Streaming Ledger (SL) (Fig. 2) involves two types of requests accessing shared mutable states for fund transfers and deposits. The aim is to process a stream of these requests and output the results. To handle a large volume of concurrent requests, ACID guarantees and ordering guarantees are necessary. For instance, transfers may be rolled back if they violate data integrity, such as causing a negative account balance. Additionally, the system may need to process requests in temporal order by timestamp.

Modern SPEs and databases are inadequate for the described use cases. SPEs’ restriction to disjoint state subsets [21] breaches transactional consistency for multi-key input tuples. Conversely, databases are ill-equipped for high-velocity input streams. TSP uniquely unifies ACID guarantees and stream processing.

1.2 Scope

This survey offers a comprehensive examination of TSP, encompassing the key challenges, design trade-offs, current trends, and potential future directions in the field. Our focus is on stateful stream processing, which involves on-the-fly processing of dynamic or streaming data, and is not confined to specific design and implementation choices such as dataflow engines and distributed systems. We emphasize six aspects of TSP:

-

Background. The history, motivation, and various transaction models over data streams.

-

Properties. Key properties like transactions, delivery guarantees, and state management.

-

Design aspects. Examination of design factors including languages, APIs, and architectures.

-

Technologies. Analysis of the technologies and alternatives used in TSP implementation.

-

Systems. A review of representative TSP systems, highlighting features, strengths, and weaknesses.

-

Applications. An overview of real-world applications and scenarios where TSP offers valuable insights and supports improved decision-making.

1.3 Outline of the survey

The remainder of this survey is organized as follows: Section 2 introduces TSP’s background, conceptual framework, and transaction models over data streams. Section 3 discusses the taxonomy of TSP systems, including properties, design aspects, and implementation technologies. Section 4 surveys and compares early and recent TSP systems based on the taxonomy from Sect. 3. Section 5 shows applications and use cases such as stream processing optimization and concurrent stateful processing. Section 6 highlights open challenges and future directions including novel applications and hardware platforms. Section 7 concludes with a summary of key findings and insights.

2 Background

In this section, we provide an overview of key terms and concepts central to understanding transactional stream processing systems (TSP). We begin with fundamental definitions, present a conceptual framework of TSP, and explore various transaction models over data streams, referencing sources such as Babcock et al. [10].

2.1 Terms and definitions

A data stream represents a continuous flow of data reflecting underlying signals, such as network traffic streams. An event e is a 2-tuple \(e = <t, v>\), comprising a timestamp and a payload, signifying the occurrence time and the relevant data, respectively. Stream queries consist of operators, fundamental computational units, that process events continuously. They can be traditional, like join and aggregation, or user-defined in modern stream processing engines (SPEs). Windows are subsets of streams, allowing the system to handle infinite streams, and they can be categorized into types like tumbling, sliding, and session windows. The state enables the juxtaposition of current data with historical data, vital for analyzing streams.

A dataflow model serves as a powerful abstraction in stream processing. A data flow breaks tasks into smaller units and coordinates their potentially parallel execution across nodes, typically represented as directed acyclic graphs (DAGs), where nodes are operators and edges denote data that is being transferred between the operators. Dataflow models are commonly used in stream processing. However, there are also other paradigms (e.g., relational model [39]), which we consider in this survey.

2.2 Conceptual framework of TSP

This section provides a comprehensive conceptual framework for transactional stream processing (TSP) systems, embracing various paradigms and models beyond the conventional dataflow approach. The expanded scope caters to a broader class of systems such as STREAM [39], recognizing the diversity and complexity in TSP systems.

2.2.1 Key components

A TSP system is comprised of five components: transactions, transaction models, operators, scheduler, and storage.

Transactions are critical components of TSP systems, ensuring that data is processed and maintained consistently and reliably. Transactions combine the real-time nature of stream processing with the reliability and consistency guarantees of traditional transactional systems. Transaction models describe the granularity and scope of transactions within a TSP system. In TSP, a transaction is considered committed when it has successfully completed an operation (e.g., insertion, update, deletion) and the system has confirmed that transactional changes are consistent with the desired transactional guarantees (e.g., consistency, isolation, durability). Upon committing a transaction, the system ensures that its effects are persistent and can be recovered in the event of a failure. This concept of “commit” is essential in TSP systems to maintain the integrity and consistency of the data during processing.

Operators are responsible for processing incoming and outgoing data, and performing operations, such as filtering, aggregation, transformation, or joins. Operators may also have mutable state that needs to be managed in a transactional manner. Scheduler manages the execution of operators and ensures that transactions are executed in the correct order, according to the chosen consistency and isolation models. Schedulers may need to handle out-of-order events, coordinate distributed execution, and manage resource allocation.

The storage of a TSP system handles both transient and permanent states. Due to performance reasons, TSPs will often employ two different memory subsystems for storing these different types of state: (1) a in-memory storage that holds the mutable state and intermediate results, allowing fast access but vulnerable to system failure; and (2) a persistent storage that ensures durability through databases or logs, which enable state recovery in failure scenarios.

2.2.2 Key design aspects

The conceptual framework of a TSP system covers the key aspects to consider when designing and implementing a TSP system, taking into account various classes and models, and including but not limited to dataflow models. This multi-dimensional view of the TSP system offers a rich and flexible understanding that accommodates different perspectives in the field and sets the boundaries for our analysis. The framework is inclusive and reflective of the dynamic and multifaceted nature of TSP systems.

Language. The language aspect of a TSP system defines the syntax and semantics for expressing streaming and on-demand queries, as well as the transactional properties (e.g., consistency, isolation, durability). This aspect is related to the transaction models and operators, as it provides the means for developers to define and manipulate transactions and the operations they perform.

Programming model. The programming model refers to the way developers interact with the TSP system to define and manage stateful operations and transactional guarantees. This aspect is related to transactions, transaction models, and operators, as the programming model provides the framework for working with these components in a structured and organized manner.

Execution model. The execution model focuses on how the TSP system processes both streaming and on-demand queries while providing transactional guarantees. This aspect is related to the scheduler and operators, as the execution model determines how the scheduler manages the execution of operators and ensures that transactions are executed in the correct order, according to their consistency and isolation models.

Architecture. The architecture aspect of a TSP system encompasses the overall design and structural organization of the system. This includes the arrangement and interaction of the key components such as transactions, transaction models, operators, scheduler, and storage, and how they work together to meet the requirements of stream processing and data management. Specifically, the architecture: (1) defines the physical layout of the system, including the distribution and placement of processing nodes, network topology, and data storage locations; (2) dictates the system’s behavior, including the strategies for parallel processing, fault tolerance, scalability, and resource management; and (3) shapes the system’s extensibility, including how new features, components, or optimizations can be added or modified to meet evolving needs.

2.3 Transaction models over data streams

Next, we discuss some notable transaction models over data streams, along with their implementation approaches, to provide an understanding of transactional guarantees in stream processing.

2.3.1 Abstract models

Various transaction models have been explored for stream processing applications to address consistency guarantees. These models serve as processing paradigms in TSP systems, defining the boundaries of a transaction, i.e., the set of related state changes that are committed as a single unit. The state changes within a transaction can include both internal state modifications and external side effects, such as sending a message to a sink. Below, we describe five common transaction models in TSP systems:

Per-tuple transactions. In this model, each tuple in the data stream triggers a set of related state changes, and these changes are grouped and treated as a single transaction, adhering to ACID properties. Essentially, every tuple results in a transaction that encapsulates all state changes caused by that tuple. This approach is suitable for scenarios requiring atomic and isolated processing of individual events. However, it may introduce significant overhead due to frequent coordination between processing nodes.

Micro-batch transactions. Here, data streams are divided into small, bounded micro-batches, and transactions are executed over these batches. Unlike per-tuple transactions where each tuple defines a transaction, micro-batch transactions treat a whole batch as a single transaction boundary, grouping the changes caused by all tuples in the batch. This reduces the overhead associated with per-tuple transactions and allows for parallelism and optimization opportunities, but may introduce additional latency.

Window-based transactions. These transactions are executed over windows defined by criteria such as a unit of time or the number of events. The windows aggregate related events, and transactions are executed over these windows, encompassing all state changes within the window boundary. This approach provides stronger consistency guarantees, but can be challenging to manage, especially when dealing with out-of-order events or evolving window types.

Group-based transactions. Unlike window-based transactions that are defined by temporal or numerical boundaries, group-based transactions are executed over groups of related events defined by specific criteria, such as thematic relationships or business rules. This model provides fine-grained control over transaction boundaries, offering stronger consistency guarantees for complex processing tasks, but can be complex to manage.

Adaptive transactions. This model enables flexibility in defining transaction boundaries within TSP systems, allowing adjustments based on workload, system state, or application-specific needs. Rather than being an engine implementation detail, this adaptiveness is a fundamental part of the transaction model, providing a responsive framework tailored to the dynamic nature of stream processing. Implementing adaptive transactions can be challenging, as it requires real-time monitoring and the adaptation of transaction boundaries.

2.3.2 Implementation approaches

Implementation approaches for the aforementioned transaction models can be classified into three categories: unified transactions, embedded transactions, and state transactions. Depending on an application’s specific requirements, an appropriate combination of implementation approach and particular transaction model should be chosen to achieve the desired performance, scalability, and fault tolerance capability.

Unified transactions. This approach embeds stream processing operations into a transaction, providing a single framework for handling both stream processing and transactions. Unified transactions can potentially support various transaction models, as it allows flexibility in defining the scope and granularity of transactions. However, it might be more suitable for fine-grained transaction models, such as per-tuple or micro-batch transactions.

Embedded transactions. This approach embeds transaction processing into stream processing, allowing for transactional semantics without the need for separate transaction management. Embedded transactions can be more efficient for certain transaction models, particularly when a lightweight transaction mechanism is required. It might be better suited for per-tuple, micro-batch, or adaptive transactions, where the overhead of separate transaction management can be minimized.

State transactions. This approach separates transaction processing and stream processing, focusing on managing shared mutable state through transactions. State transactions can also support different transaction models, but are more suitable for scenarios where state management is a primary concern, such as window-based, group-based, or adaptive transactions.

3 Taxonomy of TSP

In this section, we examine a taxonomy of transactional stream processing (TSP) as illustrated in Fig. 3. The taxonomy is structured into three key categories: Properties of TSP: Here we examine the characteristics and requirements of TSP systems, including ordering, ACID properties, state management, and reliability. Analyzing these properties enables us to better understand the fundamental issues and challenges prevalent in TSP systems that seek to ensure accurate and reliable data processing. Design Aspects of TSP: Here we explore the design considerations in TSP systems, spanning transaction implementation, boundaries, execution, delivery guarantees, and state management. Investigating these design aspects aids us in evaluating the suitability of TSP systems for specific applications and provides insights into various design choices and trade-offs. Implementation Details: Here we address the practical aspects of TSP system implementation, such as programming languages, APIs, system architectures, and component integration. This section also discusses performance metrics and evaluation criteria for TSP systems. By examining these implementation details, we gain a deeper understanding of the practical challenges and proposed solutions in the development of TSP systems. Ultimately, this enables us to be more informed about the choices that must be made when designing or selecting which TSP system to employ for a given use case.

3.1 Properties of TSP

A TSP model is required to meet both the ordering properties of streaming operators and events, and the ACID properties of transactions, while also addressing state management, reliability, fault tolerance, and durability. These elements along with the CAP theorem’s implications are crucial to the design and functionality of TSP systems. Hence, we will delve into these properties in the subsequent sections, starting with ordering properties followed by ACID properties.

3.1.1 Ordering properties

In TSP systems, there are two critical ordering properties: event ordering and operation ordering.

Event ordering. Event ordering requires that transactions be processed according to the order of their triggering events, typically based on timestamps or some other logical ordering mechanism. This property is crucial to ensure transactions are executed in the correct sequence, so as to avoid inconsistencies. It also helps prevent race conditions or out-of-order processing, which can occur in distributed TSP systems with high levels of parallelism. It is worth noting that the ordering schedule is determined explicitly by the input event rather than the transaction execution order.

To maintain event order TSP systems may employ strategies such as locking, versioning, or optimistic concurrency control. Golab et al. [36] propose two stronger serialization properties with ordering guarantees. The first is called window-serializable, which requires a read-only transaction to perform a read either strictly before a window is updated or after all subwindows of the window are updated. The second is called latest window-serializable, which only allows a read on the latest version of the window, i.e., after the window has been completely updated. Instead of imposing an event ordering, FlowDB [3] enables developers to optionally ensure that the effects of transactions are the same as if they were executed sequentially (i.e., in the same order in which they started).

Operation ordering. In many TSP systems, applications can be represented using dataflow models, such as directed acyclic graphs (DAGs), where operators are connected by data streams [88]. Operation ordering refers to the sequence in which operators are executed in a TSP system, which impacts the correctness and efficiency of the system. Via operation ordering, the data that flows through the pipeline will be processed correctly, thereby contributing to the consistency and correctness prevalent in a TSP system. When an application is represented using a DAG, the operation ordering is determined by the directed edges between the operators. Although the operation ordering may be expressed differently for alternative representations, the ordering property guarantees the outcome will be the same. Consider a scenario where a TSP system adopts a dataflow model for stream processing—the ordering property is inherently upheld in such a setup. Nevertheless, if a database system incorporates TSP, an added prerequisite for operation ordering becomes necessary to guarantee consistency and accuracy, as detailed in the work of S-Store [53].

3.1.2 ACID properties

TSP systems manage the flow of data tuples, where transactions can involve state changes within the system and potential side effects, such as notifications to external components. The state in TSP refers to the information held within the system at any point, including data aggregates, intermediate results, or configurations. The application of a transaction in this context means executing a series of operations that may update this state, following certain rules or models. TSP can offer traditional ACID guarantees [12] similar to those in relational databases, with some necessary adaptations. These are briefly described below:

Atomicity. Atomicity ensures that transactions are either fully completed or aborted. In TSP systems, all operations within a transaction are either successfully processed together or not processed at all, thereby preventing partial updates that could lead to an inconsistent state. Atomicity in TSP varies depending on the transaction model. Traditional commit protocols, such as two-phase commit (2PC), ensure atomicity by coordinating commit or abort decisions among distributed participants. In contrast, sagas [35] allow the exposure of intermediate (uncommitted) state and require developers to define compensating actions for each operation, thereby offering a more flexible way to handle atomicity at the cost of strong isolation guarantees. Some TSP systems, like the one proposed by Wang et al. [84], relax atomicity in certain contexts, which enables developers to choose the desired consistency level. In such cases, alternative atomicity models, like sagas, can be adopted to balance the trade-offs between consistency, performance, and availability. Understanding these differences is crucial for designing TSP systems with appropriate atomicity guarantees.

Consistency. Consistency in the context of ACID refers to the requirement that every transaction moves the system from one consistent state to another. This involves the preservation of integrity constraints, which are rules defining the valid states of the data within the stream processing system, such as relationships between entities or domain-specific rules. In TSP systems, consistency also encompasses the processing and updating of data according to a specified consistency model, such as strong or causal consistency. This aspect is crucial in stream processing, where real-time data interactions guide the interactions between continuous transactions. The choice of consistency level may have implications for complexity and performance, as stronger consistency guarantees typically require more stringent enforcement of integrity constraints and other rules [3, 88].

Isolation. Isolation prevents concurrent transactions from interfering with one another [83]. In TSP systems, isolation is essential for ensuring that the output data remains consistent despite the concurrent execution of transactions triggered by the processing of concurrent input events. Different isolation levels can be provided by TSP systems, such as serializability, snapshot isolation, or read committed [3]. Some TSP systems may offer configurable isolation levels, allowing developers to adjust the isolation guarantees according to the application’s specific needs.

Durability.Durability in TSP systems guarantees that once a transaction is committed, its changes are permanently stored, typically ensured through replication, logging, or checkpointing [83]. Unlike classic transactional systems, TSP’s recovery mechanism might replay input streams to rebuild the state, a process that may not always restore an identical state due to factors such as concurrent processing and timing differences. The consequences of not reaching an identical state can lead to deviations in processing results, differentiating TSP from traditional transactional systems. Therefore, TSP systems need to satisfy properties like input preservation, state maintenance, and output persistence, often achieved through strategies like replication, logging, or checkpointing. These strategies must balance trade-offs between performance, availability, and the specific requirements of the application. This complex yet essential aspect of durability in TSP systems underscores the need for careful design to build resilient TSP systems that meet the expectations and needs of the applications and users.

3.1.3 State management properties

Effective state management is essential in TSP systems to ensure data consistency, support the stateful processing of operations, and failure recovery. Among the primary state management properties are state types, access scope, state recovery, and the management of large states.

State types. States in TSP systems can be characterized by the interaction between different components or actors that may read or write the state: (a) Read-only state: In some scenarios, the state of the system is considered read-only from the perspective of external clients, as they can only observe or query the content of the state. Internally, the system may update its state upon receiving new events from the input streams, but these updates are not accessible to the clients. (b) Read–write state: In other scenarios, the state within the TSP system itself is modifiable as stream events are processed. This type of state allows both reads and writes during the execution, requiring careful management of concurrency control, especially when multiple entities (e.g., threads or processing units) may concurrently modify the same state. This categorization highlights the complexity of state management in TSP systems, reflecting the different interactions and permissions regarding state access from various perspectives within and outside the system.

Access scope. Access scope in TSP systems defines the visibility and accessibility of state information and can influence both the implementation and the programming interface. From an implementation perspective, the access scope dictates whether the state is shared globally, partitioned across parallel processing instances, or limited to specific operators or groups of operators. Shared states may include structures like the index of an input stream or other user-defined data structures shared among threads of the same operator, operators, and queries. From the programming interface perspective, the access scope also dictates the types of queries that can be made on the state. Some systems may support on-demand queries of the state, with varying levels of expressivity. Queries may be restricted to single operators or tables, single partitions, or may even integrate data from multiple operators. The flexibility and restrictions in querying significantly impacts the programming model and influences aspects like performance, consistency, and fault tolerance. By addressing both these perspectives, access scope forms a critical aspect of state management in TSP systems.

State recovery. State recovery in TSP systems is integral to maintaining the integrity and continuity of transaction processing over data streams. In the event of a system failure, state recovery ensures that processing can resume without loss of consistency or reliability. This involves preserving ACID even during failures. The state recovery property in TSP systems must prioritize a balance between recovery speed and assurance that the restored state aligns with the pre-failure state. This reflects the unique challenge in TSP systems of managing continuous, real-time transactions where both prompt recovery and adherence to transactional principles are paramount.

Management of large states.Managing large states is a fundamental challenge in TSP systems, particularly with growing data volumes and transaction numbers. Several traditional techniques might be adapted to TSP, though they require further investigation and innovation: (1) Data sharding and state partitioning: These methods [32] could provide scalability, but need careful exploration within TSP; (2) State compaction: A promising strategy [61, 77] for reducing storage needs, yet its practical implementation in TSP is still unexplored; (3) State checkpointing: Introducing state checkpointing [11] to TSP is currently being researched, and it could enhance recovery and durability; (4) State replication, eviction, and expiration strategies: While widely applied, these techniques [45] remain largely unexplored in TSP, but may offer benefits for resource management and fault tolerance.

3.1.4 Reliability and delivery guarantees

Fault tolerance, durability, and deliverability are essential properties of TSP systems. These properties ensure that TSP systems can accurately process data and maintain their state, even in the face of failures, duplicate messages, and out-of-order events.

Delivery guarantees. Delivery guarantees in TSP systems define how input events are processed especially when failures, duplicate messages, or out-of-order events occur. Common delivery guarantees include at-most-once, at-least-once, and exactly-once processing. Exactly-once processing is often the most desirable for TSP systems, as it ensures that each event is processed precisely once, irrespective of issues during processing.

Fault tolerance. A fault-tolerant TSP system continues processing events and adhering to delivery guarantees despite failures. Recovery mechanisms, such as state replication, checkpointing, and log-based recovery, enable a system to minimize data loss and quickly recover. However, the ability to guarantee exactly-once processing requires that recovery leads to a consistent state that reflects all committed transactions.

Durability. Durability involves persistent data storage to safeguard its availability for retrieval. This property is essential for upholding delivery guarantees. However, as noted in Sect. 3.1.2, recovery mechanisms may not always lead to the same state. The commitment to exactly-once processing implies that the system must ensure a consistent recovery that honors all processed and committed input events, rather than strictly restoring the exact previous state.

CAP theorem. The CAP theorem applies to distributed systems, stating that it is impossible to simultaneously achieve consistency (across replicas), availability, and partition tolerance. In the context of TSP systems, these principles must be balanced according to specific needs. Emphasizing consistency and partition tolerance may slow response times, whereas prioritizing availability can lead to faster responses but potentially stale data. It is vital to differentiate consistency in the CAP theorem from transactional consistency in ACID transactions, as they relate to distinct aspects of TSP systems.

Remark 1

(Beyond exactly-once guarantee) TSP systems may face unique challenges requiring more stringent delivery guarantees than the exactly-once guarantee typically found in many SPEs. Specifically, TSP systems must replay failed tuples in the exact timestamp sequence of their triggering input events and prevent duplicate message processing. This is crucial because results depend on the local state of an operator and the time ordering of input streams.

One approach to achieving this advanced level of delivery guarantee involves checkpointing or archiving each input event before processing and sequentially replaying them in case of failure [23]. While this method provides the desired guarantee, it incurs significant overhead, making it unsuitable for many TSP systems. Consequently, further research is required to identify more efficient mechanisms that can satisfy the delivery guarantee needs of TSP systems while minimizing performance overhead.

3.2 Design aspects of TSP

The design aspects of TSP systems focus on the critical components required to create a functional and efficient TSP system. These components include the implementation of transactions, determining transaction boundaries, executing transactions, delivering guarantees, and managing the state. Understanding these design aspects is crucial for evaluating the suitability of different TSP systems for specific application requirements and providing insights into various design choices and trade-offs.

3.2.1 Implementing transactions

To actualize a transaction model over data streams, three primary approaches have been proposed: embedding stream processing operations into transactions (i.e., unified transactions), embedding transaction processing into stream processing (i.e., embedded transactions), or combining transaction processing and stream processing (i.e., state transactions). The choice among these approaches depends on the specific requirements and constraints of the system, and they may interact with each other in complex ways. Below, we discuss each of these in turn:

Approach 1: Unified transactions. Unified transactions integrate stream processing and transaction processing within systems adopting a relational query model, treating data streams and relational data uniformly [7, 39, 55]. This approach leverages existing relational data management techniques for transactional consistency, enabling a unified framework that accommodates both stream processing and transactional aspects. Several studies have explored the implementation of continuous queries as sequences of onetime queries, which are executed as a result of data source modifications or periodic execution [16, 53, 57, 58]. These studies illustrate methods to unify stream processing and transaction processing, so as to enable the use of a single execution engine for both types of operations.

Definition 1

(Unified transaction) Let \( DS = \{S_i, S_o, \ldots \} \) be a set of data sources and sinks, and \( DI \) be the data items in \( DS \). A unified transaction \( T \) is represented by the pair \( T = (O_i, \le _T) \), where:

-

\( O_i \): A set of read (r) and write (w) operations on \( DS \), each operation \( o \in O_i \) is a function \( o: DI \rightarrow DI \) that modifies or retrieves a specific data item \( d \in DI \). This set represents the operations on the data items within the given data sources and sinks.

-

\( \le _T \): A partial order satisfying:

-

New streaming events and continuous query executions are represented as write (w) and read (r) operations, respectively.

-

For all \( op, oq \in O_i \) accessing the same data item, with at least one write (w), either \( op \le _T oq \) or \( oq \le _T op \).

-

Constraints: The operations in \( O_i \) must adhere to certain constraints depending on the specific requirements of the TSP system, such as maintaining consistency or availability, as defined by the system’s design and the nature of the data items.

Design aspects. The design space for unified transactions consists of several aspects, including data modeling, query execution, and transaction management. (1) Data modeling: Data streams are represented as time-varying relations, with both input and output streams treated as continuous relations that are updated with new events, each marked with a timestamp. This representation, where each stream corresponds to a single relation, enables the use of relational algebra and SQL-like queries, and simplifies integration with existing database systems. (2) Query execution: Queries over data streams are often continuous: They are evaluated over an unbounded sequence of input data. Unified transactions represent continuous queries as a sequence of onetime queries triggered by data source modifications or periodic execution. This approach allows for the reuse of existing query processing techniques from relational databases while ensuring the continuous nature of stream processing is maintained. (3) Transaction management: To ensure transactional consistency and correctness, the unified transactions approach relies on relational data management techniques, in particular, concurrency control and recovery mechanisms, such as two-phase locking and logging, to provide isolation, atomicity, and durability guarantees.

Pros and cons: The unified transactions approach simplifies system architecture and leverages well-established relational data management techniques.

However, this approach may introduce additional overhead due to the transformation of data streams into relational data, a process that can be computationally expensive for several reasons. First, the transformation requires mapping continuous, unbounded data streams into discrete, time-varying relations, involving time windowing, data discretization, and possible aggregation. These computations require additional processing resources and may induce latency. Second, unifying the data model between streams and relational data may necessitate complex schema matching and data type conversions, further contributing to computational overhead. Finally, maintaining consistency and transactional guarantees within this unified model may require additional locking, logging, or other concurrency control mechanisms, which can also increase the system’s complexity and resource requirements. Thus, this approach may not be suitable for all TSP systems, particularly those with strict latency requirements or complex stream processing needs.

Approach 2: Embedded transactions. Embedded transactions integrate transaction processing into the stream processing pipeline [69], allowing real-time processing while maintaining consistency and reliability. Several studies, including those exploring incremental continuous query processing with isolation guarantees [69], have explored the embedded transactions approach. For instance, Shaikh et al [69] propose a model that treats each incoming data item as a part of the processing pipeline, allowing real-time processing while maintaining consistency and reliability guarantees.

Definition 2

(Embedded transaction) Let \( S \!=\! \{s_1, s_2, \ldots , s_n\} \) be a data stream consisting of data items and \( O = \{o_1, o_2, \ldots , o_m\} \) be processing operations that act on the data items, including join operations with relations. An embedded transaction treats incoming data as a continuous flow, where:

-

Each data item \( s_i \in S \) initiates a subset of operations \( O_i \subseteq O \), where each operation \( o \in O_i \) is a function \( o: S \rightarrow S \) that modifies or retrieves a specific data item.

-

The processing follows properties such as isolation, atomicity, and consistency, with constraints to ensure that the operations meet the specific requirements of the system.

-

Mechanisms like snapshot isolation and optimistic concurrency control may be used to maintain consistency in the presence of concurrent updates.

Design aspects. Key aspects of embedded transactions involve processing events as they arrive, managing state information between processing steps, and incorporating data partitioning, replication, and checkpointing to achieve transactional guarantees. Next, we examine each of these aspects in turn. (1) Event-driven processing: The embedded transaction approach employs event-driven processing, where each event in the data stream triggers one or more stream processing operations. This allows for low-latency processing and maintains the temporal order of data streams. (2) State management: State management is crucial in the embedded approach since it enables the retention and manipulation of information between processing steps. State management varies across systems, with some using distributed storage systems or in-memory data structures for efficient state management. (3) Transactional guarantees: The embedded transaction approach provides transactional guarantees, such as consistency, isolation, and durability within the stream processing pipeline. To achieve these guarantees, they employ techniques, such as data partitioning, replication, and checkpointing.

Pros and cons: The embedded transaction approach offers benefits, such as low-latency processing and the native handling of complex stream processing tasks. Nevertheless, implementing transactional guarantees within the stream processing pipeline could necessitate substantial effort. Furthermore, this approach might not be optimal for applications necessitating integration with established relational databases or traditional transaction processing systems. Developers should carefully assess the requirements and constraints of their specific application to determine whether this approach is appropriate for their TSP system.

Approach 3: State transactions. The state transaction approach merges transaction processing and stream processing within a single system and handles state access operations as transactions. This enables TSP systems to provide transactional guarantees while processing unbounded data streams and ensure correctness via transactional semantics and the modeling of state accesses as state transactions.

Definition 3

(State transaction) Let \( S = \{s_1, s_2, \ldots , s_n\} \) be a data stream of incoming events, \( R = \{r_1, r_2, \ldots , r_p\} \) be shared mutable states that represent different aspects of the system’s state, and \( O = \{o_1, o_2, \ldots , o_m\} \) be processing operations that may read from \( S \), modify \( R \), or both. A state transaction \( T_i \) is represented by the triplet \( (S_i, R_i, O_i) \), where:

-

\( S_i \subseteq S \) is a subset of the data stream that the transaction operates on.

-

\( R_i \subseteq R \) is a subset of the shared mutable states that may be affected by the transaction.

-

\( O_i \subseteq O \) is a subset of operations that are applied to the elements of \( S_i \) and may modify the states in \( R_i \).

-

All operations within the transaction share a timestamp \( ts \), ensuring coordinated execution and consistency across the state.

Design aspects. State transactions focus on managing shared mutable states through transactions. Key aspects include managing state information between processing steps and decoupling transaction processing from stream processing. Design considerations include dataflow models, state access operations, coordination mechanisms, and fault tolerance and recovery. Next, we examine each of these design considerations in turn. (1) Dataflow models: The state transaction approach typically uses dataflow models to process data streams consisting of interconnected stateful operators. These operators process events, update state, and produce output events, which is amenable for parallel and distributed processing. (2) State access operations: State transactions treat state access operations as transactions, where each operation is associated with a unique timestamp. This enables complex processing tasks and transactional guarantees, like consistency, isolation, and durability. (3) Coordination mechanisms: The state transaction approach coordinates state transactions across the dataflow pipeline using mechanisms, such as two-phase commit protocols, timestamp-based ordering, or conflict resolution strategies, to maintain consistency and isolation. (4) Fault tolerance and recovery: The state transaction approach provides fault tolerance and recovery mechanisms, such as replication, checkpointing, and logging, to ensure durability and resilience against failures.

Pros and cons: The state transaction approach integrates transaction processing into the stream processing pipeline, handles complex processing tasks, and provides transactional guarantees. However, this approach may introduce additional complexity due to the use of coordination and fault tolerance mechanisms. Developers should carefully assess their application’s requirements and weigh the benefits against the potential complexities introduced by this approach.

Remark 2

(Comparing among approaches) We illustrate the differences among the three approaches using a common example scenario. Consider a system that monitors traffic in a smart city. It continuously receives data from sensors at intersections to control traffic lights, update maps, and alert drivers.

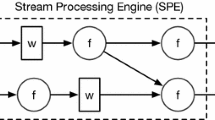

Unified transactions implementation. This approach integrates continuous stream processing with transaction processing:

where \( w \) and \( r \) denote write and read operations, respectively. The sequence includes writing sensor data, reading traffic maps and light states, and writing updates.

Embedded transactions implementation. Each incoming sensor data piece is treated as part of a continuous flow:

Operations include analyzing data, updating the traffic map, controlling lights, and sending alerts.

State transactions implementation. This approach handles both sensor data and mutable states:

The process includes reading data, managing shared states (traffic map and lights), and performing operations like analysis, updates, and alerting.

Summary: Unified Transactions integrate streaming with transactional operations, representing a complex sequence of reads and writes. Embedded Transactions treat the continuous flow of sensor data, translating each reading into operations. State Transactions manage shared mutable states (traffic map and lights), encapsulating the entire process in a state transaction. Each approach offers unique advantages and trade-offs: Unified transactions are suited for complex queries; embedded transactions for real-time continuous processing; and state transactions for robust handling of shared states within a transactional framework.

3.2.2 Determining transaction boundaries

Determining transaction boundaries is an essential aspect of TSP system design, as it defines which operations are grouped into transactions. It turns out that establishing transaction boundaries over streams can be quite flexible, particularly for state transaction implementation. First, various conditions can initiate a transaction (i.e., triggering unit), such as per input event or per batch of events with a common timestamp. Second, different entities can generate a transaction (i.e., generating unit), such as per operator and per query. Furthermore, transactions themselves can spawn additional transactions. Let us examine each of these settings in turn.

Setting 1: Triggering units. TSP systems rely on incoming streaming events to initiate transactions. The granularity of transaction boundaries is defined by various types of triggering units, including time-based, batch event-based, single-event-based, and user-defined triggers. Next, we describe each of these in turn.

Time-based triggers. Time-based triggers refer to transactional models in which the transaction boundaries are determined by time intervals. These intervals can be either fixed or dynamically adjusted based on the application requirements or the characteristics of the data streams. It often assumes that events with a common timestamp are executed atomically. This approach is employed in both academic projects, such as STREAM [7, 39] and commercial products, like Coral8 [31]. Time-based triggers are suitable for the concurrent aggregation of sliding windows, the association of transaction boundaries with window boundaries, and the management of long-running queries with specified re-execution frequencies [30, 36, 59].

Batch event-based triggers. These transactions are triggered when a batch of events with a shared characteristic (e.g., common timestamp, originating from the same stream) are processed. Batch event-based triggers are used in DataCell [47, 48], S-Store [53], and Chen et al.’s cycle-based transaction model [27, 28].They can handle large volumes of data and provide consistent processing across multiple streams.

Single-event-based triggers. In this type, a transaction is triggered for each incoming event. Single-event-based triggers ensure fine-grained control and consistency on an event-by-event basis. They have been implemented in various systems, such as Aurora and Borealis [1, 2], ACEP [84], SPASS [64], and TStream [88]. This type of transaction is suitable for applications requiring strict consistency guarantees and low-latency processing. The reason for this suitability lies in the individual handling of each event as a separate transaction. This ensures that every event is processed in isolation, maintaining strong consistency. Furthermore, by initiating a transaction for every individual event, the system can quickly react to incoming data, thereby facilitating low-latency processing. This can be critical for real-time applications where swift response to each input is required.

User-defined triggers. In this type, users can define custom triggering conditions based on their specific application requirements, which offers flexibility when establishing transaction boundaries and declaring processing guarantees. Botan et al. [16] and Chen et al. [26] demonstrate the use of user-defined transactions in their respective systems.

Setting 2: Generating units. While triggering units determine “when” a transaction is created, generating units focus on “who” generates a transaction. Transactions can be generated by user clients directly or through continuous queries on a per-query or per-operator basis. Next, we describe each unit type in turn.

Query-based generator. These transactions group operations involved in the onetime execution of an entire query [7, 39]. Early stream processing engines (SPEs) employed query-based triggers for the interactive processing of both relational and streaming data, such as the STREAM project [7, 39]. This type simplifies the transactional model, making it easier to manage and understand. However, it may lack flexibility in some cases, where individual operators within a query need to have separate transactional boundaries or different isolation levels.

Operator-based triggers. In this type, each operator in a query generates its own transactions, such as S-Store [53] and TStream [88]. Operator-based triggers provide a finer level of granularity and flexibility compared to query-based triggers, which enables more precise control over shared states in streaming dataflow graphs. This can lead to better performance and resource utilization in certain scenarios. However, this increased flexibility may also result in potential conflicts or dilemmas, such as deadlocks and contention, which may require additional mechanisms to resolve [53, 88].

User-defined triggers. Some applications may require ad hoc transactional queries or user-driven transactions during stream processing [3, 4, 26]. User-defined transactions allow users to specify where consistency needs to be enforced and which consistency constraints are required, as demonstrated in the work of Affetti et al. [3, 4]. This type grants more control to the user, thereby allowing them to tailor transactional semantics to their specific needs. However, this flexibility can make system-level optimizations more challenging, as the transaction types are not known in advance. Additionally, users bear the responsibility of ensuring that the system is free of any dilemmas or conflicts [3, 4].

Setting 3: Transaction spawning. Transaction spawning, i.e., the ability of transactions to trigger and generate other transactions, is another way to determine transaction boundaries in TSP systems. This concept is particularly relevant in systems that require complex interactions and dependencies between different transactions. For example, in a service-oriented architecture, where functionalities are treated as services, requiring atomic execution of those transactions results in composite transactions. This approach allows for a more flexible and dynamic processing flow, accommodating continuous service executions and interactions. Transaction spawning consists of a non-empty set of services, some of which have continuous executions, and others may spawn new transactions. In the context of TSP, this provides the means to represent intricate processing logic and dependencies, aligning with specific user or application requirements [80,81,82].

3.2.3 Executing transactions

Executing transactions involves processing the operations within a transaction according to the defined transaction boundaries and ensuring that the system maintains the required ACID and streaming properties. Table 1 summarizes the execution mechanisms adopted by relevant systems. These can be classified into five approach types: single-version lock-based, multi-version lock-based, static partition-based, dynamic partition-based, and optimistic.

Lock-based approaches. Lock-based approaches ensure the correct execution of transactions by controlling access to shared resources. Using locks to protect shared states, these methods prevent concurrent access and maintain consistency. Lock-based approaches can be classified into two main types:

a) Single-version lock-based: These approaches utilize a single version of the shared state and apply locks to ensure proper transaction execution. The challenge lies in balancing synchronization and performance without causing excessive contention or delays in transaction processing. We discuss three notable examples of single-version lock-based approaches as follows.

In STREAM [39], synopses enable different operators to share common states. To guarantee that operators view the correct version of a state, the system must track the progress of each stub and present the appropriate view (i.e., a subset of tuples) to each stub. This is achieved through a local timestamp-based execution model with a global schedule that coordinates the successive execution of individual operators via time slot assignments. Batches of tuples with the same timestamp are executed atomically to ensure progress correctness, with a simple lock-based transactional processing mechanism implicitly involved.

An earlier study by Wang et al. [84] describes a strict two-phase locking (S2PL)-based algorithm that allows multiple state transactions to run concurrently while maintaining both ACID and streaming properties. Unlike the original S2PL [12] algorithm, Wang et al. [84] lock each transaction ahead of all query and rule processing. In this process, each transaction’s timestamp is compared against a monotonically increasing counter to ensure that the transaction with the smallest timestamp always obtains a lock first, thereby guaranteeing access to the proper state sequence. Once lock acquisition is complete, the system increases the counter and allows the next transaction to proceed, regardless of whether the transaction was fully processed. To fulfill event ordering constraints, read or write locks are strictly invoked in their triggering event order. However, the locking mechanism must synchronize the execution for every single input event, which may negatively impact system performance.

Oyamada et al. [57] propose three pessimistic transaction execution algorithms: synchronous transaction sequence invocation (STSI), asynchronous transaction sequence invocation (ATSI), and order-preserving asynchronous transaction sequence invocation (OPATSI). STSI processes transactions triggered by event streams one at a time, in event-arrival order. ATSI removes the blocking behavior of STSI by asynchronously spawning new threads that wait for the transaction to complete. OPATSI extends ATSI through a priority queue to further guarantee the order of the results.

b) Multi-version lock-based: These approaches employ multiple versions of shared states and use locks to control access to different state versions. The main challenge is ensuring that the correct state version is accessed while avoiding outdated writes.

A notable example is Wang et al. [84], who propose an algorithm called LWM (Low-Water-Mark), which relies on the multi-versioning of shared states. LWM leverages a global synchronization primitive to guard the transaction processing sequence: Write operations must be performed monotonically in event order, but read operations are allowed to execute as long as they can read the correct version of the data (i.e., its timestamp is earlier than the LWM). The key differences between LWM and the traditional multi-version concurrency control (MVCC) scheme are twofold. First, MVCC aborts and then restarts a transaction when an outdated write occurs, while LWM ensures that writes are permitted strictly in their timestamp sequence, preventing outdated writes. Second, MVCC assumes that the timestamp of a transaction is system-generated upon receipt, whereas LWM sets the timestamp of a transaction to the triggering event. This distinction enables LWM to maintain a more event-driven approach to transaction management, better aligning with the streaming nature of TSP systems.

In summary, lock-based approaches to transaction execution in TSP systems offer various methods for managing access to shared resources and maintaining consistency. While single-version lock-based approaches focus on balancing synchronization and performance within a single shared state, multi-version lock-based approaches provide greater flexibility by managing multiple versions of shared states. Both types of approaches present their own challenges and trade-offs.

Partition-based approaches. Partition-based approaches to transaction execution in TSP systems involve dividing the internal states or transactions into smaller units, which can then be executed in parallel or with reduced contention. These methods aim to improve performance while maintaining consistency and adhering to event order constraints. There are two primary types of partition-based approaches:

a) Static partition-based: These approaches divide the internal states of streaming applications into disjoint partitions and use partition-level locks to synchronize access. This approach is suitable for transactions that can be perfectly partitioned into disjoint groups.

For example, S-Store [53] splits the streaming application’s internal states into multiple disjoint partitions. The computation on each subpartition is performed by a single thread. To guarantee state consistency, S-Store uses partition-level locks to synchronize access. However, state partitioning only performs well on transactions that can be perfectly partitioned into disjoint groups, given that acquiring partition-level locks on cross-partition states significantly impacts performance due to the overhead.

b) Dynamic partition-based: These approaches involve decomposing transactions into smaller steps and executing them in parallel to improve performance while ensuring serializability and meeting event order constraints (e.g., TStream [88] and MorphStream [50]).

The sagas model [35] allows a transaction to be split into several smaller steps, each of which executes as a transaction with an associated compensating transaction. Either all steps are executed or in a partial execution compensating transactions are executed for steps that are completed. Thus, isolation is relaxed in the original transaction and delegated to the individual steps. It exposes an intermediate (uncommitted) state and requires developers to define compensating actions. A similar idea of splitting transactions has been adopted in TSP systems such as TStream [88] and MorphStream [50], but does not expose uncommitted states and hence does not require compensating actions.

In particular, TStream [88] is a recently proposed TSP system that adopts transaction decomposition to improve stream transaction processing performance on modern multi-core processors. Despite the relaxed isolation properties, TStream ensures serializability, as all conflicting operations (being decomposed from the original transactions) are executed sequentially as determined by the event sequence. The successor of TStream [88], MorphStream, pushes the idea further and proposes cost-model-guided dynamic transaction decomposition and scheduling to further improve the system performance.

Optimistic approach. Optimistic approaches avoid locking resources by employing timestamps and conflict detection mechanisms to maintain transaction consistency at the desired isolation level, aborting and rescheduling transactions when necessary. These approaches handle transactions by predicting the order of events or by speculative execution to improve system performance. The challenge is to ensure that speculation is accurate and efficiently manages rollback or recovery when needed.

Golab et al. [36] present a scheduler targeting window-serializable properties, which optimistically executes window movements and utilizes serialization graph testing (SGT) to abort any read-only transactions causing read–write conflicts. A conflict-serializable schedule is achieved if the precedence graph remains acyclic. They also suggest reordering read operations within transactions to minimize the number of aborted transactions. FlowDB/TSpoon [3, 4] proposes an optimistic timestamp-based protocol that refrains from locking resources and instead uses timestamps to ensure transactions consistently read or update versions aligned with the desired isolation level. If this is not feasible, transactions are aborted and rescheduled for execution. This approach aims to minimize contention and improve performance by avoiding lock-based mechanisms while still maintaining the necessary consistency and isolation requirements.

Snapshot isolation approach. These approaches employ snapshot isolation to split a stream into a sequence of bounded chunks and apply database transaction semantics to each chunk. Processing a sequence of data chunks generates a sequence of state snapshots. By storing multiple versions of values as commit and delete timestamps, readers can access the latest version of a state, ensuring consistency and isolation among concurrent transactions.

A number of TSP systems employ snapshot isolation [1, 16, 27, 29, 38], aiming to split a stream into a sequence of bounded chunks and apply the semantics of a database transaction to each chunk. By putting the operation on a data chunk within a transaction boundary, a state snapshot is produced. In this way, processing a sequence of data chunks generates a sequence of state snapshots. For example, Götze and Sattler [38] present a snapshot isolation approach for TSP. Each state has multiple versions of values, each stored as a commit timestamp, delete timestamp, and value. Consequently, readers can access the latest version of a state using the commit and delete timestamps. This approach provides consistency and isolation among concurrent transactions while avoiding the need for locking mechanisms, which can improve system performance.

3.2.4 Ensuring delivery guarantees

In this subsection, we explore various design aspects of TSP systems that help ensure reliability and delivery guarantees. We discuss strategies for achieving ACID properties and streaming properties under failures and their implications on TSP system design. For a comprehensive survey on fault tolerance mechanisms in SPEs, refer to [78]. While modern SPEs usually offer fault tolerance mechanisms while ensuring various delivery guarantees, they may not always fulfill the requirements of TSP due to the combined need to satisfy ACID and streaming properties.

Achieving ACID properties. In the event of a failure, TSP systems generally need to recover all states, including input/output streams, operator states, and shared mutable states. This ensures committed transactions remain stable, while uncommitted transactions do not impact this state. Transactions that have started, but have not yet been committed should be undone upon failure and reinvoked with the correct input parameters once the system is stable again. This necessitates an upstream backup and an undo/redo mechanism akin to an ACID-compliant database.

For instance, TSP systems must guarantee atomicity when updating shared states, even under failures. An atomic transaction ensures a commit either fully completes the entire operation or, in cases of failure (e.g., system failures or transaction aborts), rolls back the database (or shared states in TSP) to its pre-commit state. Journaling or logging in database systems mainly accomplish atomicity, while distributed database systems require additional atomic commit protocols to ensure atomicity. Regrettably, most prior works on TSP either do not explicitly mention their mechanisms to ensure atomicity under failure [3, 88] or rely on mechanisms provided by their storage systems (e.g., traditional database systems [53]). Making this more transparent could help users better understand which properties are not guaranteed when employing a TSP system in practice.

Achieving streaming properties. To satisfy streaming properties further, the recovered states in TSP systems should be equivalent to the one under construction when no failure occurred. Achieving this requires an order-aware recovery mechanism [72]. However, the commonly adopted recovery operation in modern SPEs, particularly the parallel recovery operation, might result in different transactional states due to the absence of guarantees on the event processing sequence during recovery. To the best of our knowledge, there is still no in-depth study on designing efficient fault tolerance mechanisms for TSP systems.

3.2.5 Implementing state management

State management is a crucial aspect of transactional stream processing (TSP) systems, as it enables the coordination of concurrent transactions, maintains consistency, and provides fault tolerance [6, 88]. The design space for state management in TSP systems can be characterized by several dimensions, such as access scope, storage model, data manipulation statements, and state management strategies. Next, we delve into these dimensions and explore their implications for system design and optimization.

Access scope. The access scope of state management ranges from intra-operator to inter-systems. Depending on the application’s requirements, TSP systems may need to manage state locally within a processing node or share state across multiple nodes or even external systems [21, 38]. It is worth noting that when OLTP workloads are implemented in a TSP system, the access scope of a shared state is within a transaction, which can be attributed to a single operator or multiple operators. a) Intra-operator state management focuses on maintaining state among instances of a single operator, making it suitable for applications with localized data access patterns and minimal coordination requirements [36]. b) Inter-operator state management involves sharing state across multiple operators within the same query/system [3, 88]. This approach is particularly relevant for applications that require coordination among different operators. c) Inter-system/global state management extends the scope of state sharing even further, enabling TSP systems to exchange state information with external systems, such as other stream processors, databases, or distributed file systems [52]. This approach allows TSP systems to leverage the capabilities of external systems, such as query processing or storage, and can facilitate seamless integration with existing data processing pipelines. However, managing state across system boundaries can introduce additional complexity, latency, and potential consistency issues.

Storage models. There are two primary storage models for implementing state management in TSP systems: relations and key–value pairs. Each has its trade-offs and implications for system design and optimization.

a) Relations: In this model, states are represented as time-varying relations that map a time domain to a finite but unbounded bag of tuples adhering to a relational schema [39, 53]. This approach leverages well-developed techniques from relational databases, such as persistence and recovery mechanisms. Storing states as relations can help minimize system complexity, especially when a foreign key constraint is required in TSP [52]. However, incorporating time into the relational model can add complexity to query processing and optimization [39].

Representative examples include STREAM, S-Store, and TStream. STREAM [39] represents the state as a time-varying relation, mapping a time domain to a finite, but unbounded bag of tuples adhering to the relational schema. To treat relational and streaming data uniformly, STREAM introduces two operations: To_Table to convert streaming data to relational data and To_Stream to convert relational data to streaming data. S-Store [53] does not implement its own state management component, but instead relies on H-Store [74] to ensure the transactional properties of shared states represented as relations. TStream [88] uses the Cavalia relational database [86] to support the storage of shared states.

b) Key–Value Pairs: In this model, states are represented as key–value pairs, which simplifies the design of TSP systems [6, 21]. This approach is suitable for scenarios that mainly require select and update statements for manipulating shared states during stream processing [4, 50]. However, it may not be the best choice for applications that require more complex data manipulation operations or constraints, such as foreign key constraints [52].

Representative examples include MillWheel [6], Flink with RocksDB, AIM (Analytics in Motion) [17], FlowDBMS/TSpoon [3, 4], and TStream/MorphStream [50, 88]. MillWheel [6] maintains state as an opaque byte string on a per-key basis, with users implementing serialization and deserialization methods. The persistent state is backed by a replicated and highly available data store, such as Bigtable [25] or Spanner [32], ensuring data integrity and transparency for the end user. Flink [21] relies on an LSM-based key–value store engine called RocksDB [65] to support shared queryable state. Götze and Sattler [38] also adopt a key–value store for transactional state representation, using multi-version concurrency control, where each state (i.e., key) has multiple commit timestamps, delete timestamps, or values.

AIM [17] represents state in a distributed in-memory key–value store, where nodes store system state as horizontally partitioned data in a ColumnMap layout. The Analytics Matrix system state provides a materialized view of numerous aggregates for each individual customer (subscriber). When an event arrives in an SPE, the corresponding record in the Analytics Matrix is updated atomically. In FlowDBMS/TSpoon [3, 4], a key–value store is employed, with the state maintained by a special type of stateful stream operator called the state operator. All state access requests must be routed to and subsequently handled by state operators defined in the application.

Data manipulation statements. TSP systems need to define and support different data manipulation statements employed in applications that constrain both system design and potential optimizations. These statements may include operations such as insert, update, delete, and query, which must be executed efficiently and consistently in the context of transactional stream processing.

Storing shared states as relations could be a reasonable choice of system design when applications require insert (I) or delete (D) statements and need to maintain foreign key constraints, such as in streaming ETL [52]. However, when applications only need select (S) and update (U) statements for manipulating shared states during stream processing, storing shared states as vanilla key–value pairs is sufficient and simplifies the design of TSP systems. Specific optimizations should be adopted by the TSP systems according to application needs.

State management strategies. The choice of state management strategy can significantly impact system performance, fault tolerance, and scalability [6, 21, 74]. There are three main strategies for managing state in TSP systems: a) In-memory state: This strategy maintains state within the processing nodes’ memory, enabling low-latency access. However, it can be limited by available memory and may require replication and distributed transactions for fault tolerance and consistency guarantees [17, 88]. b) External state stores: In this strategy, state is stored in external systems, such as transactional databases or distributed key–value stores with transactional support [38, 65]. This approach allows for improved fault tolerance, consistency guarantees, and scalability, but may introduce additional latency [25, 32]. c) Hybrid state management: This approach combines the advantages of in-memory state and external state stores, using in-memory caching to minimize latency and external transactional storage for fault tolerance, consistency guarantees, and scalability [3, 4].

Remark 3

(Failure of concurrency control protocols) Conventional concurrency control (CC) protocols, widely used in OLTP database systems, fails to guarantee the properties of TSP Systems. To illustrate why, we use conventional timestamp-ordering concurrency control (T/O CC) as an example [13], with discussions also found in prior work [84]. Let \(txn_1=write(k1,v1)\) and \(txn_2=read(k1)\) be two distinct transactions. For simplicity, assume that only these two transactions are in the system, and that events are parallel processed. Suppose that \(txn_2\) is generated and processed by the system earlier.

If \(txn_2.ts > txn_1.ts\), then both transactions will be successfully committed. However, since \(txn_2\) is processed earlier, it will read the old state value of k1, violating the event order constraint, leading to a serial order of \(txn_2 \rightarrow txn_1\). On the other hand, if \(txn_2.ts < txn_1.ts\), then \(txn_2\) will be successfully committed, but \(txn_1\) will be aborted since the writes come too late, making the undo of an externally visible output or action unacceptable in TSP applications.

Similarly, other conventional CC protocols may result in either the wrong serial order or the need to abort one of the transactions, leading to incorrect serial order upon restart. In other words, conventional CC protocols are not yet ready for such event-driven transaction execution.

Remark 4

(Timestamp assignment dilemma) In TSP systems, aligning transaction timestamps with the triggering events is a reflection of external consistency or linearizability in distributed systems. This alignment ensures proper ordering, but can lead to dilemmas. The dilemma, illustrated in Fig. 4, arises when transactions are generated by both external and internal events, such as operator outputs. Consider a scenario involving operators A, B, and C processing events and generating new transactions. Events are parallel processed, and \(txn_{e_a}\) and \(txn_{e_a}'\) can infinitely wait for each other to be committed, leading to a deadlock due to conflicting ordering constraints. Two potential solutions to this dilemma are: (1) enforcing additional ordering constraints between operators or (2) diversifying timestamps for generated events. However, these solutions present challenges, as implementing strong consistency like linearizability may affect latency, and a generalized solution to this dilemma remains unresolved.

3.3 Technologies employed in TSP implementation