Abstract

Bipartite graphs are of great importance in many real-world applications. The butterfly, which is a complete \(2 \times 2\) biclique, plays a key role in bipartite graphs. In this paper, we investigate the problem of efficiently counting the number of butterflies. The most advanced techniques are based on enumerating wedges, which is the dominant cost of counting butterflies. Nevertheless, the existing algorithms cannot efficiently handle large-scale bipartite graphs, and this becomes a bottleneck in large-scale applications. Instead of the existing layer-priority-based techniques, we propose a vertex-priority-based paradigm \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) to enumerate far fewer wedges; this leads to a significant improvement of the time complexity of the state-of-the-art algorithms. In addition, we present cache-aware strategies to further improve the time efficiency while theoretically retaining the time complexity of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\). We also show that our proposed techniques can work efficiently in external and parallel contexts. Moreover, we study the butterfly counting problem on batch-dynamic graphs. Specifically, given a bipartite graph G and a batch-update of edges B, we aim to maintain the number of butterflies in G. To tackle this problem, fast vertex-priority-based algorithms are proposed with optimizations for reducing the computation of existing wedges in G. Our extensive empirical studies demonstrate that the proposed techniques significantly outperform the baseline solutions on real datasets.


1 Introduction

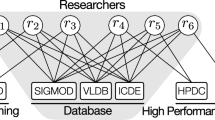

When modeling relationships between two different types of entities, the bipartite graph arises naturally as a data model in many real-world applications [14, 39]. For example, in online shopping services (e.g., Amazon and Alibaba), the purchase relationships between users and products can be modeled as a bipartite graph, where users form one layer, products form the other layer, and the links between users and products represent purchase records as shown in Fig. 1. Other examples include author-paper relationships, actor-movie networks, etc.

Since network motifs (i.e., repeated sub-graphs) are regarded as basic building blocks of complex networks [45], finding and counting motifs of networks/graphs is a key to network analysis. In unipartite graphs, there are extensive studies on counting and listing triangles in the literature [5, 16, 19, 31, 38, 57, 58, 60,61,62]. In bipartite graphs, the butterfly (i.e., 2 \(\times \) 2 biclique) is the simplest bi-clique configuration with equal numbers of vertices of each layer (apart from the trivial single edge configuration) and has drawn reasonable attention recently [4, 30, 53, 54, 56, 64, 73]; for instance, in Fig. 1, the records that Adam and Mark both purchased Balm and Doll form a butterfly. In this sense, the butterfly can be viewed as an analogue of the triangle in a unipartite graph. Moreover, without butterflies, a bipartite graph will not have any community structure [4].

In this paper, we study the butterfly counting problem. Specifically, we aim to compute the total number of butterflies in a bipartite graph G, which is denoted by \(\bowtie_G\). The importance of butterfly counting has been demonstrated in the literature of network analysis and graph theory. Below are some examples.

Network measurement. The bipartite clustering coefficient [4, 41, 47, 53] is a cohesiveness measurement of bipartite graphs. Given a bipartite graph G, its bipartite clustering coefficient is determined by the ratio of the number of butterflies \(\bowtie_G\) to the total number of caterpillars (i.e., three-paths) in G. For example, (\(u_0\), \(v_0\), \(u_1\), \(v_1\)) in Fig. 1 is a three-path. A high bipartite clustering coefficient indicates localized closeness and redundancy in bipartite graphs [4, 53]; for instance, in user-product networks, bipartite clustering coefficients can be used to analyze the sale status for products in different categories. These statistics can also be used in the Twitter network for internet advertising, where the Twitter network is a bipartite graph consisting of Twitter users and the URLs they mentioned in their postings. Since the number of caterpillars can be easily computed in O(m) time, where m denotes the total number of edges in G [4], computing \(\bowtie_G\) becomes the bottleneck in computing the clustering coefficient.
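To make the O(m) claim for caterpillars concrete, the following is a minimal sketch and not code from the paper. It assumes a simple bipartite graph given as a list of (upper, lower) edge pairs, and the function name `count_caterpillars` is hypothetical. It relies on the fact that a three-path with middle edge (u, v) is fixed by choosing one other neighbor of u and one other neighbor of v.

```python
from collections import defaultdict


def count_caterpillars(edges):
    """Count three-paths in a simple bipartite graph in O(m) time.

    `edges` is a list of (u, v) pairs, with u an upper-layer id and
    v a lower-layer id (illustrative input format, duplicates not allowed).
    """
    deg_upper = defaultdict(int)
    deg_lower = defaultdict(int)
    for u, v in edges:
        deg_upper[u] += 1
        deg_lower[v] += 1
    # For a middle edge (u, v): pick another neighbor of u and another
    # neighbor of v. The four vertices are distinct because G is bipartite.
    return sum((deg_upper[u] - 1) * (deg_lower[v] - 1) for u, v in edges)


if __name__ == "__main__":
    # A single butterfly (a 4-cycle) contains four three-paths.
    print(count_caterpillars([(0, 0), (0, 1), (1, 0), (1, 1)]))
```

The degree pass and the summation each touch every edge once, which is where the linear bound comes from.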

Summarizing inter-corporate relations. In a director-board network, two directors on the same two boards can be modeled as a butterfly. These butterflies can reflect inter-corporate relations [48,49,50]. The number of butterflies indicates the extent to which directors re-meet one another on two or more boards. A large butterfly count indicates a large number of inter-corporate relations and formal alliances between companies [53].

Computing k-wing in bipartite graphs. Counting the number of butterflies for each edge also has applications. For example, it is the first step in computing a k-wing [56] (or k-bitruss [67, 68, 73]) for a given k, where a k-wing is the maximal subgraph of a bipartite graph with each edge in at least k butterflies. Discovering such dense subgraphs has proved useful in many applications, e.g., community detection [25, 26, 69, 72], word-document clustering [21], and viral marketing [24, 42, 65, 71]. Given a bipartite graph G, the proposed algorithms [56, 67, 68, 73] for k-wing computation first count the number of butterflies on each edge in G. After that, the edge with the lowest number of butterflies is iteratively removed from G until all the remaining edges appear in at least k butterflies.

Note that in real applications, butterfly counting may happen more than once in a graph. We may need to conduct such a computation against an arbitrarily specified subgraph. Indeed, there can exist a high demand for butterfly counting in large networks. However, the existing solutions cannot efficiently handle large-scale bipartite graphs. As shown in [54], on the Tracker network with \(10^8\) edges, their algorithm needs about 9000 s to compute the number of butterflies. Therefore, the study of efficient butterfly counting is imperative to support online large-scale data analysis. Moreover, some applications demand exact butterfly counting in bipartite graphs. For example, in k-wing computation, approximate counting does not make sense since the k-wing decomposition algorithm in [56] needs to iteratively remove the edges with the lowest number of butterflies; the number has to be exact.

Notably, dynamic graphs have attracted significant interest in recent research studies [11, 15, 28, 44, 46, 61] since there can exist a large number of constant updates on graphs in real applications. To enable computational sharing and increase system throughput (i.e., reduce the average processing time per update) in practice, many existing studies consider processing the updates in a batch-dynamic way, which handles updates as a set of batches [1, 8, 23, 27, 43]. However, there is no systematic study of the butterfly counting problem on batch-dynamic graphs in the literature. To fill this research gap, we investigate how to design efficient parallel butterfly counting algorithms for batch-dynamic settings in this paper. Specifically, given a bipartite graph G and a batch-update of edges B, we aim to maintain the number of butterflies in G.

State-of-the-art. Since there can be \(O(m^2)\) butterflies in the worst case, Wang et al. [64] propose an algorithm that avoids enumerating all the butterflies. It has two steps. In the first step, a layer is randomly selected. Then, the algorithm iteratively starts from every vertex u in the selected layer, computes the 2-hop reachable vertices from u, and for each 2-hop reachable vertex w, counts the number \(n_{uw}\) of times w is reached from u. In the second step, for each 2-hop reachable vertex w from u, the number of butterflies containing both u and w is counted as \(n_{uw} (n_{uw}-1)/2\). For example, regarding Fig. 1, if the lower layer is selected, starting from the vertex \(v_0\), vertices \(v_1\), \(v_2\), and \(v_3\) are 2-hop reached 3 times, 1 time, and 1 time, respectively. Thus, there are \(C_3^2\) (\(=3\)) butterflies containing \(v_0\) and \(v_1\) and no butterfly containing \(v_0\) and \(v_2\) (or \(v_0\) and \(v_3\)). Iteratively, the algorithm first uses \(v_0\) as the start-vertex, then \(v_1\), and so on. Finally, all the counts are added together; the sum divided by two is the total number of butterflies.

Observe that the time complexity of the algorithm in [64] is \(O(\sum _{u \in U(G)}deg_G(u)^2)\) if the lower layer L(G) is chosen to provide the start-vertices, where U(G) is the upper layer. Sanei et al. [54] choose a layer S such that \(\sum _{v \in S}deg_G(v)^2\) is minimized among the two layers.

Observation. In the existing algorithms [54, 64], the dominant cost is Step 1, which enumerates wedges to compute 2-hop reachable vertices and their hits. For example, regarding Fig. 1, we have to traverse 3 wedges, \((v_0, u_0, v_1)\), \((v_0, u_1, v_1)\), and \((v_0, u_2, v_1)\), to get all the hits from \(v_0\) to \(v_1\). Here, in the wedge \((v_0, u_0, v_1)\), we refer to \(v_0\) as the start-vertex, \(u_0\) as the middle-vertex, and \(v_1\) as the end-vertex. Continuing with the example in Fig. 1, using \(u_2\) as the middle-vertex and starting from \(v_0\), \(v_1\), and \(v_2\), respectively, we need to traverse 6 wedges in total.

We observe that the choice of middle-vertices of wedges (i.e., the choice of start-vertices) is key to improving the efficiency of counting butterflies. For example, consider the graph G with 2002 vertices and 3000 edges in Fig. 2a. In G, \(u_0\) is connected with 1000 vertices (\(v_0\) to \(v_{999}\)), \(v_{1000}\) is also connected with 1000 vertices (\(u_1\) to \(u_{1000}\)), and for \(0 \le i \le 999\), \(v_i\) is connected with \(u_{i+1}\). The existing algorithms need to go through \(u_0\) (or \(v_{1000}\)) as the middle-vertex if choosing L(G) (or U(G)) to start. Therefore, regardless of whether the upper or the lower layer is selected to start, the existing algorithms [54, 64] have to traverse a total of \(C_{1000}^2\) (\(=499{,}500\)) plus 1000 different wedges.

Challenges. The main challenges of efficient butterfly counting are as follows.

1. Using high-degree vertices as middle-vertices may generate numerous wedges to be scanned. The existing techniques [54, 64], including the layer-priority-based techniques [54], cannot avoid unnecessarily using high-degree vertices as middle-vertices, as illustrated earlier. Therefore, it is a challenge to effectively handle high-degree vertices.

2. Effectively utilizing the CPU cache can often reduce the computation time dramatically. Therefore, it is also a challenge to utilize the CPU cache to speed up the counting of butterflies.

3. On batch-dynamic graphs, we need to handle a batch of updates on the original graph, which can be very large. Thus, it is a challenge to explore possible sharing opportunities and reduce the computation that does not lead to any new butterflies.

Our approaches. To address Challenge 1, instead of the existing layer-priority-based algorithm, we propose a vertex-priority-based algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) that can effectively handle hub vertices (i.e., high-degree vertices). To avoid over-counting or under-counting, for each edge (u, v) the new algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) uses the vertex with the higher degree as the start-vertex, so that the vertex with the lower degree is used as the middle-vertex. Specifically, the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm chooses one endpoint of an edge (u, v) as the start-vertex, say u, according to its priority. Note that the vertex priority is a total ordering of vertices, and we use degree-based priority in this paper. A higher degree indicates a higher priority, and ties are broken by the IDs of vertices. For example, regarding Fig. 2a, the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm will choose \(u_0\) and \(v_{1000}\) as start-vertices; consequently, only 2000 wedges in total will be scanned by our algorithm, compared with the \(500{,}500\) different wedges generated by the existing algorithms as illustrated earlier. Once all edges from the start-vertex u are exhausted, \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) moves to another edge. This is the main idea of our \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm.

As a result, the time complexity of our \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm is \(O(\sum _{(u, v) \in E(G)}\min \{deg_G(u), deg_G(v)\})\), which is in general significantly lower than the time complexity of the state-of-the-art algorithm in [54] (i.e., \(O(\min \{ \sum _{v \in U(G)}deg_G(v)^2, \sum _{v \in L(G)}deg_G(v)^2\})\)). Note that the time complexity of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) is also bounded by \(O(\alpha \cdot m)\), where \(\alpha \) is the arboricity of G [17].
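The wedge counts quoted for the Fig. 2a example can be checked with a short script. This is an illustrative sketch rather than the authors' implementation: it rebuilds the graph from its textual description, and the helper names (`build_example_graph`, `wedge_counts`) and the tuple encoding of priorities are assumptions made here.

```python
from collections import defaultdict
from math import comb


def build_example_graph(k=1000):
    """Edges (upper index, lower index) of the graph described for Fig. 2a."""
    edges = [(0, i) for i in range(k)]           # u_0 -- v_0 .. v_{k-1}
    edges += [(i, k) for i in range(1, k + 1)]   # v_k -- u_1 .. u_k
    edges += [(i + 1, i) for i in range(k)]      # v_i -- u_{i+1}
    return edges


def wedge_counts(edges):
    adj = defaultdict(list)
    for u, v in edges:
        adj[("U", u)].append(("L", v))
        adj[("L", v)].append(("U", u))

    # Layer-based: every unordered pair of neighbors of a middle-vertex
    # is one wedge. layer["U"] uses upper vertices as middle-vertices.
    layer = {side: sum(comb(len(adj[x]), 2) for x in adj if x[0] == side)
             for side in "UL"}

    # Degree priority: higher degree wins, ties broken by ID, with all
    # upper-layer IDs assumed higher than lower-layer IDs.
    def prio(x):
        return (len(adj[x]), x[0] == "U", x[1])

    # Vertex-priority-based: keep a wedge only if the start-vertex outranks
    # both the middle-vertex and the end-vertex.
    vp = 0
    for start in adj:
        for mid in adj[start]:
            if prio(mid) < prio(start):
                vp += sum(1 for end in adj[mid] if prio(end) < prio(start))
    return layer, vp


if __name__ == "__main__":
    print(wedge_counts(build_example_graph()))
```

Under these assumptions the layer-based count is the same whichever layer supplies the middle-vertices, while the priority rule leaves only the wedges that start at the two hub vertices.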

In the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm, there are O(n) accesses of start-vertices because every vertex is explored as a start-vertex only once, O(m) accesses of middle-vertices, and \(O(\sum _{(u, v) \in E(G)}\min \{deg_G(u), deg_G(v)\})\) accesses of end-vertices in the processed wedges. Thus, the number of accesses to end-vertices is dominant. Given that the cache miss latency accounts for a large part of the memory access time [3], improving the CPU cache performance when accessing the end-vertices becomes a key issue. Our second algorithm, the cache-aware algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\), aims to improve the CPU cache performance of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) by having high-degree vertices as end-vertices to enhance locality while retaining the total number of accesses of end-vertices (and thus the time complexity of the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm). Consequently, \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) prioritizes the end-vertices in the same way as the start-vertices are prioritized in the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm.

For example, considering the graph in Fig. 2b, we have \(p(v_0)> p(v_3)> p(u_0)> p(v_2) > p(v_1)\) according to their degrees, where p(v) denotes the priority of a vertex v. In this example, starting from \(v_0\) and \(v_3\) and going through \(u_0\), \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) needs to process 5 wedges using \(u_0\) as the middle-vertex (i.e., \((v_0, u_0, v_1)\), \((v_0, u_0, v_2)\), \((v_0, u_0, v_3)\), \((v_3, u_0, v_1)\) and \((v_3, u_0, v_2)\)), and there are 3 vertices, \(v_1\), \(v_2\) and \(v_3\), that serve as end-vertices. Note that these are the only 5 wedges using \(u_0\) as the middle-vertex since \(p(u_0)> p(v_2) > p(v_1)\). Regarding the same example, \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) also needs to process exactly 5 wedges with \(u_0\) as the middle-vertex, \((v_1, u_0, v_0)\), \((v_1, u_0, v_3)\), \((v_2, u_0, v_0)\), \((v_2, u_0, v_3)\) and \((v_3, u_0, v_0)\); however, only 2 vertices, \(v_0\) and \(v_3\), serve as end-vertices.

We also propose a cache-aware reordering strategy that improves the cache performance by storing high-priority (more frequently accessed) end-vertices together to reduce cache misses [70]. Considering the example in Fig. 2b, \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) will store \(v_0\) and \(v_3\) together after reordering.

To handle batch-dynamic graphs, we propose efficient algorithms by identifying the affected scope (subgraph) of the batch-update. Then, we can also utilize our vertex-priority-based techniques to accelerate the computation process. In addition, we categorize the new butterflies into different cases and propose effective pruning techniques to further reduce the computation (of old wedges on the original graph) that does not lead to any new butterflies.

Contribution. We summarize the principal contributions of this paper as follows.

- We propose a novel vertex-priority-based algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) to count butterflies, which reduces the time complexity of the state-of-the-art algorithms significantly in both theory and practice.

- We propose a novel cache-aware butterfly counting algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) by applying cache-aware strategies to \({\mathsf {BFC}}\)-\({\mathsf {VP}}\). Compared with \({\mathsf {BFC}}\)-\({\mathsf {VP}}\), the \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) algorithm achieves better CPU cache performance.

- We can replace the exact counting algorithm in the approximate algorithm [54] by our exact counting algorithm for a speedup.

- We also present an external-memory algorithm and a parallel algorithm for butterfly counting.

- This is also the first work to study butterfly counting on batch-dynamic graphs. We propose a work-efficient parallel algorithm to solve the problem.

- We conduct comprehensive experimental evaluations on real datasets. The results show that our proposed algorithms \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) and \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) outperform the existing algorithms by up to two orders of magnitude. For instance, on the Tracker dataset, the \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) algorithm can count \(10^{12}\) butterflies in 50 s, whereas the state-of-the-art butterfly counting algorithm [54] takes about 9000 s. In addition, our advanced algorithm \({{\mathsf {BFCB}}}\text {-}{{\mathsf {IG^+}}} \) for batch-dynamic butterfly counting is up to two orders of magnitude faster than the baseline algorithm.

Organization. We organize the rest of the paper as follows. In Sect. 2, we show the preliminaries. Section 3 discusses the existing algorithms \({\mathsf {BFC}}\)-\({\mathsf {BS}}\) and \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\). We introduce the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm in Sect. 4. Section 5 explores cache-awareness. Section 6 extends our algorithms to count butterflies against each edge, the parallel execution of our proposed algorithms, and the external memory solution. Section 7 presents our algorithms for batch-dynamic butterfly counting. Section 8 reports experimental results. Section 9 reviews the related works. Section 10 presents the conclusion.

2 Preliminaries

We define our problem over a bipartite graph \(G(V=(U, L), E)\). Here U(G) contains all the upper layer vertices, L(G) contains all the lower layer vertices, and E(G) is the edge set. Note that \(U(G) \cap L(G) = \emptyset \). An edge connecting two vertices u and v is represented as (u, v) (or (v, u)). \(N_G(u)\) is the neighbor set of a vertex u in G, and u’s degree is represented as \(deg_G(u) = |N_G(u)|\). The 2-hop neighbor set of u (i.e., the set of vertices which are exactly two edges away from u) is denoted as \(2hop_G(u)\). Note that we assume each vertex in G has an ID, and the IDs of the vertices in U(G) are always higher than those of the vertices in L(G). We use m and n to represent the number of edges and vertices in G, respectively, and |G| to denote the size of G, where \(|G| = m + n\).

Definition 1

(Wedge) Consider a bipartite graph G, and three vertices u, v, \(w \in V(G)\). A wedge (u, v, w) is a path starting from u, going through v and ending at w. u, v, and w are called the start-, the middle-, and the end-vertex in the wedge (u, v, w), respectively.

Definition 2

(Butterfly) Consider a bipartite graph G and four vertices \(u, w \in U(G)\) and \(v, x \in L(G)\). A butterfly [u, v, w, x] is a complete bipartite subgraph (i.e., \(2 \times 2\)-biclique) induced by u, v, w, x.

The total numbers of butterflies containing a vertex u and an edge e are denoted as \(\bowtie_u\) and \(\bowtie_e\), respectively. In addition, the count of butterflies in G is denoted as \(\bowtie_G\).

Problem statement. Given a bipartite graph G, the butterfly counting problem is to compute \(\bowtie_G\).

3 Existing algorithms

Here, we discuss the two existing algorithms, the baseline butterfly counting algorithm \({\mathsf {BFC}}\)-\({\mathsf {BS}}\) [64] and the improved baseline butterfly counting algorithm \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) [54]. As discussed earlier, both algorithms are based on enumerating wedges. Lemma 1 [64] is a key to the two algorithms.

Lemma 1

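In a bipartite graph G, with \(\bowtie_u\) and \(\bowtie_G\) denoting the numbers of butterflies containing a vertex u and contained in G, respectively, the following equations hold:

\[\bowtie_u = \sum _{w \in 2hop_G(u)} C_{|N_G(u) \cap N_G(w)|}^{2} \qquad (1)\]

\[\bowtie_G = \frac{1}{2} \sum _{u \in U(G)} \bowtie_u = \frac{1}{2} \sum _{v \in L(G)} \bowtie_v \qquad (2)\]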

In fact, \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) has the same framework as \({\mathsf {BFC}}\)-\({\mathsf {BS}}\) and improves \({\mathsf {BFC}}\)-\({\mathsf {BS}}\) in two aspects: (1) pre-choosing the layer of start-vertices to achieve a lower time complexity; (2) using a hash map to speed up the implementation. We show the details of \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) in Algorithm 1.

Note that to avoid counting a butterfly twice, for each middle-vertex \(v \in N_G(u)\) and the corresponding end-vertex \(w \in N_G(v)\), \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) processes the wedge (u, v, w) only if \(w.id > u.id\); consequently, in Algorithm 1 we do not need to use the factor \(\frac{1}{2}\) in Equation 2 of Lemma 1.

Note that the \({\mathsf {BFC}}\)-\({\mathsf {BS}}\) algorithm has the time complexity of \(O(\sum _{v \in L(G)}deg_G(v)^2)\) if starting from the layer U(G), while the time complexity of \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) is \(O(min\{\sum _{u \in U(G)}deg_G(u)^2, \sum _{v \in L(G)}deg_G(v)^2\})\).
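The layer-based scheme described above can be sketched as follows. This is a simplified illustration written for this text, not a transcription of Algorithm 1: the function name `count_butterflies_layer`, the edge-list input, and the use of integer IDs that are comparable within a layer are all assumptions.

```python
from collections import defaultdict


def count_butterflies_layer(edges):
    """Layer-based butterfly counting over (upper id, lower id) edge pairs."""
    upper = defaultdict(list)   # upper vertex -> lower neighbors
    lower = defaultdict(list)   # lower vertex -> upper neighbors
    for u, v in edges:
        upper[u].append(v)
        lower[v].append(u)

    # Starting from one layer costs the sum of squared degrees of the
    # other (middle) layer, so start where that sum is smaller.
    cost_if_start_upper = sum(len(nbrs) ** 2 for nbrs in lower.values())
    cost_if_start_lower = sum(len(nbrs) ** 2 for nbrs in upper.values())
    if cost_if_start_upper <= cost_if_start_lower:
        start, middle = upper, lower
    else:
        start, middle = lower, upper

    total = 0
    for u, mids in start.items():
        wedge_cnt = defaultdict(int)    # hash map: end-vertex -> #wedges
        for v in mids:
            for w in middle[v]:
                if w > u:               # process each (u, w) pair only once
                    wedge_cnt[w] += 1
        total += sum(c * (c - 1) // 2 for c in wedge_cnt.values())
    return total


if __name__ == "__main__":
    k33 = [(u, v) for u in range(3) for v in range(3)]
    print(count_butterflies_layer(k33))
```

Because of the `w > u` filter, each pair of same-layer vertices contributes once, so no halving of the final sum is needed in this sketch.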

4 Algorithm by vertex priority

In \({\mathsf {BFC}}\)-\({\mathsf {BS}}\) and \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\), the time complexity is related to the total number of 2-hop neighbors visited (i.e., the total number of wedges processed). When starting from one vertex layer, the number of processed wedges is decided by the sum of degree squares of middle-vertices of the other layer. If all the vertices with low degrees are distributed in one vertex layer as middle-vertices, \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) can just start from the vertices in the other layer and obtain a much lower computation cost. However, when vertices with high degrees (i.e., hub vertices) exist in both layers, which is not uncommon in real datasets (e.g., the Tracker dataset), choosing which layer to start from cannot achieve a better performance. For example, consider the graph G with 2002 vertices and 4000 edges in Fig. 3, where \(u_0\) and \(u_1\) are connected with 1000 vertices (\(v_0\) to \(v_{999}\)), and \(v_{1000}\) and \(v_{1001}\) are also connected with 1000 vertices (\(u_2\) to \(u_{1001}\)). In this example, choosing either of the two layers still needs to go through hub vertices, \(u_0, u_1 \in U(G)\) or \(v_{1000}, v_{1001} \in L(G)\).

Optimization strategy. Clearly, \([u_0, v_0, u_1, v_1]\) in Fig. 3 can be constructed in the following two ways: (1) by the wedges \((u_0, v_0, u_1)\) and \((u_0, v_1, u_1)\), or (2) by the wedges \((v_0, u_0, v_1)\) and \((v_0, u_1, v_1)\). Consequently, a hub vertex (e.g., \(u_0\) in Fig. 3) need not always be a middle-vertex in a wedge for the construction of a butterfly. Thus, it is possible to design an algorithm that avoids using hub vertices unnecessarily as middle-vertices. To achieve this objective, we introduce the vertex-priority-based butterfly counting algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP}}\), which operates at the vertex level (i.e., choosing which vertex to process as the start-vertex) rather than at the layer level (i.e., choosing which vertex layer to process as the start-layer).

Given a graph G, \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) first assigns a priority to each vertex \(u \in V(G)\).

Definition 3

(Priority) Consider a bipartite graph G. The vertex priority is a total ordering of the vertices in G. Specifically, the priority p(u) of a vertex \(u \in V(G)\) is an integer where \(p(u) \in [1, n]\). Given \(u, v \in V(G)\), \(p(u) \ne p(v)\) if \(u \ne v\).

According to the concept of priority, we can always construct a butterfly from two wedges (u, v, w) and (u, x, w) where the start-vertex u has a higher priority than the middle-vertices v and x. This is because we can always find a vertex that has the highest priority and connects to two vertices with lower priorities in a butterfly.

Based on the above observation, the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm can get all the butterflies by only processing the wedges where the priorities of start-vertices are higher than the priorities of middle-vertices. In this way, the algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) will avoid processing the wedges where middle-vertices have higher priorities than start-vertices (e.g., \((v_0, u_0, v_1)\) in Fig. 3 if we consider that a higher vertex degree indicates a higher priority). In addition, in order to avoid duplicate counting, another constraint should also be satisfied in \({\mathsf {BFC}}\)-\({\mathsf {VP}}\): \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) only processes the wedges where start-vertices have higher priorities than end-vertices. To avoid processing unnecessary wedges in the implementation, we sort the neighbors of vertices in ascending order of their priorities. Then we can early terminate the processing once we meet an end-vertex that has higher priority than the start-vertex (or meet a middle-vertex that has higher priority than the start-vertex). We show the details of the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm in Algorithm 2.

Firstly, \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) assigns a priority to each vertex \(u \in V(G)\) according to Definition 3 and sorts the neighbors of u. After that, \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) processes wedges from each start-vertex u (Line 4). For each middle-vertex \(v \in N_G(u)\), it processes v if it satisfies \(p(v) < p(u)\). Then, it only processes \(w \in N_G(v)\) which satisfies \(p(w) < p(u)\) to avoid duplicate counting. After that, the value \(|N_G(u) \cap N_G(w)|\) can be obtained for u and w, which is equal to \(wedge\_cnt(w)\). Then, according to Lemma 1, \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) computes the butterfly count \(\bowtie_G\). Finally, we return \(\bowtie_G\).
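The procedure just described can be sketched in Python as below. This is a hedged illustration, not the paper's Algorithm 2: the function name `count_butterflies_vp` and the `("U", id)` / `("L", id)` vertex labels are assumptions, the priority is the degree-based one used in this paper, and a comparison sort stands in for the linear-time sorting discussed in the complexity analysis.

```python
from collections import defaultdict


def count_butterflies_vp(edges):
    """Vertex-priority-based butterfly counting over (upper, lower) pairs."""
    adj = defaultdict(list)
    for u, v in edges:
        adj[("U", u)].append(("L", v))
        adj[("L", v)].append(("U", u))

    # Degree priority in [1, n]: higher degree wins, ties broken by label.
    # ("L", i) < ("U", j) as tuples, so upper-layer IDs rank higher.
    order = sorted(adj, key=lambda x: (len(adj[x]), x))
    p = {x: rank for rank, x in enumerate(order, start=1)}

    # Neighbors in ascending priority enable early termination below.
    for x in adj:
        adj[x].sort(key=p.__getitem__)

    total = 0
    for u in adj:                        # u is the start-vertex
        wedge_cnt = defaultdict(int)
        for v in adj[u]:                 # v is the middle-vertex
            if p[v] >= p[u]:
                break
            for w in adj[v]:             # w is the end-vertex
                if p[w] >= p[u]:
                    break
                wedge_cnt[w] += 1
        # wedge_cnt[w] = |N(u) ∩ N(w)| restricted to processed wedges
        total += sum(c * (c - 1) // 2 for c in wedge_cnt.values())
    return total


if __name__ == "__main__":
    k23 = [(u, v) for u in range(2) for v in range(3)]
    print(count_butterflies_vp(k23))
```

The two `break` statements rely on the sorted neighbor lists: once a neighbor outranks the start-vertex, all later neighbors do too.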

Correctness and complexity analysis of the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm. Below we present a theoretical analysis of the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm.

Theorem 1

The \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm correctly solves the butterfly counting problem.

Proof

We prove that \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) correctly computes the butterfly count \(\bowtie_G\) for a bipartite graph G. A butterfly can always be constructed from two different wedges with the same start-vertex and the same end-vertex. Thus, we only need to prove that each butterfly in G will be counted exactly once by \({\mathsf {BFC}}\)-\({\mathsf {VP}}\). Given a butterfly [x, u, v, w], we assume x has the highest priority. The vertex priority distribution must be one of the three situations shown in Fig. 4 (the other situations can be transformed into these by symmetry), where \(p_i\) is the corresponding vertex priority. In each of the cases in Fig. 4a, b, and c, \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) counts the butterfly [x, u, v, w] only once, from the wedges (x, u, v) and (x, w, v). Thus, \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) correctly solves the butterfly counting problem. \(\square \)

Theorem 2

The time complexity of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) is \(O(\sum _{(u, v) \in E(G), p(u) > p(v)} deg_G(v))\).

Proof

Algorithm 2 has two phases: initializing in the first phase and computing the butterfly count \(\bowtie_G\) in the second phase. The time complexity of the first phase is \(O(n + m)\). Firstly, we need O(m) time to get the degrees of vertices and O(n) time to get the priorities by sorting the vertices using bin sort [37]. Secondly, we need O(m) time to sort the neighbors of vertices in ascending order of their priorities. To achieve this, we generate a new empty neighbor list T(u) for each vertex u. Then, we process the vertices in ascending order of priority, and for each vertex u and its neighbor v, we put u into T(v). Finally, the neighbors of vertices are ordered in T.

The time cost of the second phase is bounded by the number of wedge counting operations executed in Algorithm 2 Line 8 (i.e., the number of wedges traversed in \({\mathsf {BFC}}\)-\({\mathsf {VP}}\)). According to the processing rule of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\), the wedge counting operations consume O(deg(v)) time for each edge \((u, v) \in E(G)\) with \(p(u) > p(v)\). This is because only the wedges where the priorities of middle-vertices are lower than the priorities of start-vertices are processed in \({\mathsf {BFC}}\)-\({\mathsf {VP}}\). Hence, \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) needs \(O(\sum _{(u, v) \in E(G), p(u) > p(v)} deg_G(v))\) time in total, and this theorem holds. \(\square \)
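The first phase from the proof above can be sketched as follows, assuming the degree-based priority and, purely for illustration, vertices numbered 0..n-1 with adjacency given as a list of lists. The function name `prioritize_and_sort` is hypothetical.

```python
def prioritize_and_sort(adj_lists):
    """Assign degree priorities and sort neighbor lists in O(n + m) time.

    adj_lists[x] holds the neighbor IDs of vertex x (IDs are 0..n-1).
    Returns (priority, sorted_adj), where priority[x] is in [1, n] and
    sorted_adj[x] lists x's neighbors in ascending priority.
    """
    n = len(adj_lists)

    # Bin sort by degree; within a bin, vertices appear in increasing ID,
    # so ties are broken by ID. A degree never exceeds n - 1.
    bins = [[] for _ in range(n)]
    for x in range(n):
        bins[len(adj_lists[x])].append(x)
    order = [x for b in bins for x in b]        # ascending priority

    priority = [0] * n
    for rank, x in enumerate(order, start=1):
        priority[x] = rank

    # Visit vertices from lowest to highest priority and append each vertex
    # to its neighbors' new lists T; every T then ends up sorted.
    sorted_adj = [[] for _ in range(n)]
    for u in order:
        for v in adj_lists[u]:
            sorted_adj[v].append(u)
    return priority, sorted_adj


if __name__ == "__main__":
    # Edges: 3-0, 3-1, 3-2, 4-0 (vertices 0..2 lower layer, 3..4 upper).
    adj = [[3, 4], [3], [3], [0, 1, 2], [0]]
    print(prioritize_and_sort(adj))
```

No comparison sort is involved: the bins give the vertex order, and the second pass distributes each vertex to its neighbors in that order.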

According to the above analysis, how to order the vertices can affect the complexity of the algorithm. In this paper, we propose using the degree-based priority to achieve both good practical and theoretical results.

Definition 4

(Degree priority) Consider a bipartite graph G. The degree priority \(p_d(u)\) of a vertex \(u \in V(G)\) is an integer where \(p_d(u) \in [1, n]\). Given \(u, v \in V(G)\), \(p_d(u) > p_d(v)\) if

- \(deg_G(u) > deg_G(v)\), or
- \(deg_G(u) = deg_G(v)\) and \(u.id > v.id\).
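A minimal Python sketch of Definition 4, assuming vertices carry integer ids 0..n-1 and the graph is given as an adjacency list `adj` (both are illustrative choices, as is the function name `degree_priority`). It buckets vertices by degree, in the spirit of the bin sort used in the initialization phase, so all priorities are assigned in O(n + m) time:

```python
def degree_priority(adj):
    """Return a list p with p[v] in [1, n]; p[u] > p[v] iff u has the larger
    degree, or the same degree and the larger id (Definition 4)."""
    n = len(adj)
    max_deg = max((len(nbrs) for nbrs in adj), default=0)
    buckets = [[] for _ in range(max_deg + 1)]
    for v in range(n):                 # ascending id keeps ties in id order
        buckets[len(adj[v])].append(v)
    p = [0] * n
    rank = 1
    for bucket in buckets:             # ascending degree
        for v in bucket:
            p[v] = rank
            rank += 1
    return p
```

For the hypothetical adjacency list `[[2, 3], [3], [0], [0, 1]]`, vertices 0 and 3 have degree 2 and vertices 1 and 2 have degree 1, so vertex 3 receives the highest priority and vertex 1 the lowest.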

Based on Definition 4, we can have the following theorem.

Theorem 3

The time complexity of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) is \(O(\sum _{(u, v) \in E(G)}min\{deg_G(u), deg_G(v)\}) = O(\alpha \cdot m)\), if the degree priority is applied.

Proof

Since the degree priority is applied, the time complexity of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) is \(O(\sum _{(u, v) \in E(G), p_d(u) > p_d(v)} deg_G(v))\) \(= O(\sum _{(u, v) \in E(G)}min\{deg_G(u), deg_G(v)\})\). According to [17], the time complexity of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) can be simplified to \(O(\alpha \cdot m)\), where \(\alpha \) is the arboricity of G. \(\square \)

In the following parts, we simply refer to the degree priority as the priority and use p(u) to denote the degree priority of u when the context is clear.
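The processing rule analyzed above can be sketched in Python as follows. This is an illustrative reading of the vertex-priority paradigm rather than a transcription of Algorithm 2: the function name `bfc_vp`, the edge-list input, and the use of `(degree, id)` tuples as priorities are assumptions, and the neighbor lists are ordered with a comparison sort instead of the linear-time procedure described in the complexity proof.

```python
from collections import defaultdict


def bfc_vp(edges):
    """Count butterflies; `edges` holds (u, v) pairs whose endpoint ids are
    distinct across the two layers."""
    adj = defaultdict(list)
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    adj = dict(adj)
    # Degree priority represented as a comparable (degree, id) tuple.
    p = {x: (len(adj[x]), x) for x in adj}
    for x in adj:
        adj[x].sort(key=p.get)         # ascending priority
    total = 0
    for u in adj:                      # start-vertex
        wedge_cnt = defaultdict(int)
        for v in adj[u]:               # middle-vertex with p(v) < p(u)
            if p[v] >= p[u]:
                break
            for w in adj[v]:           # end-vertex with p(w) < p(u)
                if p[w] >= p[u]:
                    break
                wedge_cnt[w] += 1
        # c wedges between u and w yield c-choose-2 butterflies.
        total += sum(c * (c - 1) // 2 for c in wedge_cnt.values())
    return total
```

Because both inner loops stop at the first neighbor whose priority is not below p(u), the work per edge (u, v) with p(u) > p(v) is bounded by the degree of v, which is the quantity summed in the analysis.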

Theorem 4

The space complexity of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) is O(m).

Proof

In Algorithm 2, we need O(m) space to store the graph structure and O(n) space to store the arrays for the priority of vertices and counting the number of wedges. Thus, the space cost of the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm is bounded by O(m). \(\square \)

Lemma 2

In a bipartite graph G, the following inequality holds:

\[\sum _{(u, v) \in E(G)} min\{deg_G(u), deg_G(v)\} \le min\Big \{\sum _{u \in U(G)} deg_G(u)^2, \sum _{v \in L(G)} deg_G(v)^2\Big \} \qquad (3)\]

Equality holds if and only if one of the following two conditions is satisfied: (1) for every edge \((u, v) \in E(G)\) with \(u \in U(G)\), \(deg_G(u) \le deg_G(v)\); (2) for every edge \((u, v) \in E(G)\) with \(u \in U(G)\), \(deg_G(v) \le deg_G(u)\).

Proof

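Given a bipartite graph G, since there are \(deg_G(u)\) edges attached to a vertex u, we can get that:

\[\sum _{(u, v) \in E(G)} min\{deg_G(u), deg_G(v)\} \le \sum _{(u, v) \in E(G)} deg_G(u) = \sum _{u \in U(G)} deg_G(u)^2\]

Similarly,

\[\sum _{(u, v) \in E(G)} min\{deg_G(u), deg_G(v)\} \le \sum _{(u, v) \in E(G)} deg_G(v) = \sum _{v \in L(G)} deg_G(v)^2\]

Thus, Eq. (3) holds. The equality condition can be easily proved by contradiction, and the proof is omitted here. \(\square \)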
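The quantities compared in Lemma 2 can be checked numerically on small graphs. The sketch below assumes, as the proof suggests, that Eq. (3) bounds the sum of \(min\{deg_G(u), deg_G(v)\}\) over all edges by the smaller of the two per-layer sums of squared degrees; the function name `lemma2_terms` and the edge-list input of (upper, lower) pairs are illustrative.

```python
from collections import Counter


def lemma2_terms(edges):
    """Return (sum of min-degrees over edges, sum of squared upper-layer
    degrees, sum of squared lower-layer degrees) for (upper, lower) pairs."""
    deg_u, deg_v = Counter(), Counter()
    for u, v in edges:
        deg_u[u] += 1
        deg_v[v] += 1
    lhs = sum(min(deg_u[u], deg_v[v]) for u, v in edges)
    upper_sq = sum(d * d for d in deg_u.values())
    lower_sq = sum(d * d for d in deg_v.values())
    return lhs, upper_sq, lower_sq
```

On a hypothetical star with one upper-layer hub and five lower-layer leaves, the three values are 5, 25 and 5, so equality holds (condition (2) is satisfied). On the hypothetical edge list `[(0, 0), (0, 1), (1, 1), (2, 1)]` neither condition holds and the values are 5, 6 and 10, so the inequality is strict.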

From Lemma 2, we can get that \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) improves the time complexity of \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\). Now we illustrate how \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) efficiently handles the hub-vertices compared with \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) using the following example.

Example 1

Consider the example in Fig. 3.

\({\mathsf {BFC}}\)-\({\mathsf {VP}}\) first assigns a priority to each vertex in G where \(p(u_1)> p(u_0)> p(v_{1001})> p(v_{1000})> p(u_{1001})> p(u_{1000})>\cdots> p(v_1) > p(v_0)\). Starting from \(u_1\), \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) needs to process 1000 wedges ending at \(u_0\). Similarly, starting from \(v_{1001}\), \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) needs to process 1000 wedges ending at \(v_{1000}\). No other wedges need to be processed by \({\mathsf {BFC}}\)-\({\mathsf {VP}}\). In total, \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) needs to process 2000 wedges.

\({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) processes each vertex \(u \in U(G)\) as start-vertex. Starting from \(u_0\), \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) needs to process 1000 wedges ending at \(u_1\). Starting from \(u_1\), no wedges need to be processed. In addition, starting from the vertices in \(\{u_2, u_3,\ldots , u_{1001}\}\), \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) needs to process 999,000 wedges. In total, \({\mathsf {BFC}}\)-\({\mathsf {IBS}}\) needs to process 1,000,000 wedges.

5 Cache-aware techniques

As discussed in Sect. 1, below is the breakdown of memory accesses to vertices required when processing the wedges in the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm: O(n) accesses of start-vertices, O(m) accesses of middle-vertices, and \(O(\sum _{(u, v) \in E(G)}min\{deg_G(u), deg_G(v)\})\) accesses of end-vertices. Thus, the total access of end-vertices is dominant. For example, by running the \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) algorithm on Tracker dataset, there are about \(6 \times 10^ 9\) accesses of end-vertices, while the accesses of start-vertices and middle-vertices are only \(4 \times 10^ 7\) and \(2 \times 10^ 8\), respectively. Since the cache miss latency takes a big part of the memory access time [3], we try to improve the CPU cache performance when accessing the end-vertices.

Because the CPU cache is hard to control in algorithms, a general approach to improve the CPU cache performance is storing frequently accessed vertices together. Suppose there is a buffer BF that is partitioned into a low-frequency area LFA and a high-frequency area HFA as shown in Fig. 5. The vertices are stored in BF and only a limited number of vertices are stored in HFA. For an access of the end-vertex w, we compute miss(w) by the following equation:

\[miss(w) = {\left\{ \begin{array}{ll} 0, &{} \text {if } w \in HFA, \\ 1, &{} \text {otherwise.} \end{array}\right. }\]

We want to minimize F which is computed by:

\[F = \sum _{(u, v, w) \in W} miss(w)\]

Here, W is the set of processed wedges of an algorithm.
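To make the objective concrete, the sketch below evaluates F for a given set of processed wedges, under the assumption that miss(w) is 0 when the end-vertex w resides in HFA and 1 otherwise. The names `miss` and `total_misses` and the string vertex labels are illustrative, and the choice of which vertices sit in HFA is hypothetical.

```python
def miss(w, hfa):
    """0 if end-vertex w is stored in the high-frequency area, else 1."""
    return 0 if w in hfa else 1


def total_misses(wedges, hfa):
    """F: the sum of miss(w) over all processed wedges (u, v, w)."""
    return sum(miss(w, hfa) for (_, _, w) in wedges)


# Two wedge sets over the same four vertices, differing in their end-vertices.
low_end = [("v0", "u0", "v1"), ("v0", "u0", "v2"), ("v0", "u0", "v3"),
           ("v3", "u0", "v1"), ("v3", "u0", "v2")]
high_end = [("v1", "u0", "v0"), ("v1", "u0", "v3"), ("v2", "u0", "v0"),
            ("v2", "u0", "v3"), ("v3", "u0", "v0")]
hfa = {"v0", "v3"}  # hypothetical: only the two high-priority vertices fit
```

With this HFA, the first wedge set incurs four misses and the second none, although both contain five wedges.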

Since F can only be derived after finishing the algorithm, the minimum value of F cannot be pre-computed. We present two strategies that aim to decrease F:

- Cache-aware wedge processing, which uses more high-priority vertices as end-vertices while retaining the total number of accesses of end-vertices (and thus the time complexity of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\)). Doing this enhances the access locality.
- Cache-aware graph reordering, which stores high-priority vertices together in HFA.

5.1 Cache-aware wedge processing

Issues in wedge processing of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\). In \({\mathsf {BFC}}\)-\({\mathsf {VP}}\), the processing rule requires the priorities of end-vertices to be lower than the priorities of start-vertices in the processed wedges. Because of that, the accesses of end-vertices exhibit poor locality (i.e., they are not clustered in memory). For example, counting the accesses of end-vertices on the Tracker dataset, as shown in Fig. 6a, \(79\%\) of the total accesses are accesses of low-degree vertices (i.e., degree \(< 500\)), while high-degree vertices (i.e., degree \(> 2000\)) account for only \(9\%\) of the accesses in \({\mathsf {BFC}}\)-\({\mathsf {VP}}\). Since the locality of accesses is a key aspect of improving the CPU cache performance, we explore whether the locality of end-vertex accesses can be improved. With the total number of end-vertex accesses unchanged, we want the algorithm to access more high-degree vertices as end-vertices. In that manner, the algorithm has more chances to request the same memory location repeatedly, and the accesses of HFA are more likely to increase (i.e., F is more likely to decrease).

New wedge processing strategy. Based on the above observation, we present a new wedge processing strategy: processing the wedges where the priorities of end-vertices are higher than the priorities of middle-vertices and start-vertices. We name the algorithm using this new strategy \({\mathsf {BFC}}\)-\({\mathsf {VP^{+}}}\). \({\mathsf {BFC}}\)-\({\mathsf {VP^{+}}}\) uses more high-priority vertices as end-vertices than \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) because of the restriction on the priorities of end-vertices. For example, considering the graph in Fig. 2b, we have \(p(v_0)> p(v_3)> p(u_0)> p(v_2) > p(v_1)\) according to their degrees. We analyze the processed wedges that start from a vertex in \(\{v_0, \ldots , v_3\}\) and go through \(u_0\). \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) needs to process 5 wedges (i.e., \((v_0, u_0, v_1)\), \((v_0, u_0, v_2)\), \((v_0, u_0, v_3)\), \((v_3, u_0, v_1)\) and \((v_3, u_0, v_2)\)) and 3 vertices (i.e., \(v_1\), \(v_2\) and \(v_3\)) serve as end-vertices. Utilizing the new wedge processing strategy, as shown in Fig. 2b, the number of processed wedges of \({\mathsf {BFC}}\)-\({\mathsf {VP^{+}}}\) is still 5 (i.e., \((v_1, u_0, v_0)\), \((v_1, u_0, v_3)\), \((v_2, u_0, v_0)\), \((v_2, u_0, v_3)\) and \((v_3, u_0, v_0)\)) but only 2 high-priority vertices (i.e., \(v_0\) and \(v_3\)) serve as end-vertices. Thus, the number of distinct end-vertices accessed decreases from 3 to 2 (i.e., the accesses exhibit better locality). Also, as shown in Fig. 6b, after applying the new wedge processing strategy, the percentage of accesses of high-degree vertices (i.e., degree \(> 2000\)) increases from \(9\%\) to \(81\%\) on the Tracker dataset.

Analyzing the new wedge processing strategy. Although the new wedge processing strategy can improve the CPU cache performance of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\), there are two questions that arise naturally: (1) whether the number of processed wedges is still the same as \({\mathsf {BFC}}\)-\({\mathsf {VP}}\); (2) whether the time complexity is still the same as \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) after utilizing the new wedge processing strategy. We denote the set of processed wedges of \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) as \(W_{vp}\) and the set of processed wedges of \({\mathsf {BFC}}\)-\({\mathsf {VP^{+}}}\) as \(W_{vp^+}\), and the following lemma holds.

Lemma 3

\(|W_{vp}| = |W_{vp^+}|\).

Proof

For a wedge \((u, v, w) \in W_{vp}\), it always satisfies \(p(u) > p(v)\) and \(p(u) > p(w)\) according to Algorithm 2. For a wedge \((u, v, w) \in W_{vp^+}\), it always satisfies \(p(w) > p(v)\) and \(p(w) > p(u)\) according to the new wedge processing strategy. In addition, p(u) is unique for each vertex u and the new wedge processing strategy does not change p(u) of u. Thus, for each wedge \((u, v, w) \in W_{vp}\), we can always find a wedge \((w, v, u) \in W_{vp^+}\). Similarly, for each wedge \((u, v, w) \in W_{vp^+}\), we can always find a wedge \((w, v, u) \in W_{vp}\). Therefore, we prove that \(|W_{vp}| = |W_{vp^+}|\). \(\square \)

Since no duplicate wedges are processed, based on the above lemma, \({\mathsf {BFC}}\)-\({\mathsf {VP^{+}}}\) processes the same number of wedges as \({\mathsf {BFC}}\)-\({\mathsf {VP}}\). However, if only this strategy is applied, when going through a middle-vertex, we need to check all its neighbors to find the end-vertices which have higher priorities than the middle-vertex and the start-vertex. The time complexity will increase to \(O(\sum _{u \in V(G), v \in N_G(u)}deg_G(u)deg_G(v))\) because each middle-vertex v has \(deg_G(v)\) neighbors. In order to reduce the time complexity, for each vertex, we need to sort the neighbors in descending order of their priorities. After that, when dealing with a middle-vertex, we can terminate the priority checking early once we meet a neighbor which has a lower priority than the middle-vertex or the start-vertex.
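The following Python sketch contrasts the two processing rules and reports, for each, the butterfly count and the number of processed wedges, so that Lemma 3 can be observed on small inputs. It is an illustration under assumptions, not the authors' implementation: the names `run_vp` and `run_vp_plus`, the edge-list input, and the `(degree, id)` priority tuples are all hypothetical choices.

```python
from collections import defaultdict
from math import comb


def _prepare(edges, descending):
    adj = defaultdict(list)
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    adj = dict(adj)
    p = {x: (len(adj[x]), x) for x in adj}      # degree priority
    for x in adj:
        adj[x].sort(key=p.get, reverse=descending)
    return adj, p


def run_vp(edges):
    """Wedges whose start-vertex outranks both the middle- and end-vertex."""
    adj, p = _prepare(edges, descending=False)
    butterflies = wedges = 0
    for u in adj:
        cnt = defaultdict(int)
        for v in adj[u]:
            if p[v] >= p[u]:
                break
            for w in adj[v]:
                if p[w] >= p[u]:
                    break
                cnt[w] += 1
                wedges += 1
        butterflies += sum(comb(c, 2) for c in cnt.values())
    return butterflies, wedges


def run_vp_plus(edges):
    """Wedges whose end-vertex outranks both the middle- and start-vertex."""
    adj, p = _prepare(edges, descending=True)
    butterflies = wedges = 0
    for u in adj:
        cnt = defaultdict(int)
        for v in adj[u]:
            for w in adj[v]:
                # Neighbors are in descending priority, so the first
                # failure ends the scan of this middle-vertex.
                if p[w] <= p[u] or p[w] <= p[v]:
                    break
                cnt[w] += 1
                wedges += 1
        butterflies += sum(comb(c, 2) for c in cnt.values())
    return butterflies, wedges
```

Both functions return the same pair on any input, since each wedge processed by one rule corresponds to the reversed wedge under the other.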

5.2 Cache-aware graph reordering

Motivation. After utilizing the cache-aware wedge processing strategy, end-vertices are mainly high-priority vertices. Generally, vertices are sorted by their ids when stored in the buffer. Figure 7 shows the accesses of the buffer when processing end-vertices (i.e., \(v_0\) and \(v_3\)) starting from \(v_0\) to \(v_3\) and going through \(u_0\) in Fig. 2b by \({\mathsf {BFC}}\)-\({\mathsf {VP}}\). We can see that although end-vertices are mostly high-priority vertices, the distance between two end-vertices (e.g., \(v_0\) and \(v_3\)) can be very long. This is because many low-priority vertices are stored between high-priority vertices. In addition, real graphs usually follow power-law distributions, so they contain relatively few vertices with high priorities (degrees). For example, in the Tracker dataset with about 40,000,000 vertices, there are only 10,338 vertices with degree \(\ge 1000\), and only \(1\%\) of the vertices (400,000) have degree \(\ge 37\). Motivated by the above observations, we propose the graph reordering strategy which can further improve the cache performance.

Graph reordering strategy. The main idea of the graph reordering strategy is to reorder the given bipartite graph G into a reordering graph \(G^*\) using a bijective function f. The reordering graph \(G^*\) is defined as follows.

Definition 5

(Reordering graph) For a bipartite graph G, the reordering graph \(G^*\) is defined as: \(G^* \leftarrow reordering(G, f)\), where f is a bijection from E(G) to \(E(G^*)\). For each \(e = (u, v) \in E(G)\), \(e^* = (u^*, v^*)= f(e)\) where \(u^* \in U(G^*)\), \(v^* \in L(G^*)\), and \(u^*.id = rankU(u) + l\), \(v^*.id = rankL(v)\). \(rankU(u)\in [0, r-1]\) (\(rankL(v)\in [0, l-1]\)) denotes the rank of the priority of \(u \in U(G)\) (the rank of the priority of \(v \in L(G)\)).

Note that our linear graph reordering uses a bijective function to relabel the vertex-IDs, which does not change the graph structure. Thus, the numbers of vertices and edges are both unchanged after reordering. After reordering the original graph G into the reordering graph \(G^*\), the vertices with high priorities will be stored together. In this manner, we can store more high-priority vertices consecutively in HFA. Figure 7 illustrates the idea of graph reordering using the example in Fig. 2b. After obtaining the reordering graph \(G^*\), we can see that the distance between two high-priority end-vertices becomes much shorter, e.g., the distance between \(v^*_1\) and \(v^*_2\) is 1 while the distance between \(v_0\) and \(v_3\) before reordering is 3. In the experiments, we show that the algorithms that apply the graph reordering strategy achieve a much lower cache miss ratio than \({\mathsf {BFC}}\)-\({\mathsf {VP}}\).
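A small Python sketch of the relabeling in Definition 5 is given below. It assumes that rank 0 denotes the highest-priority vertex of a layer (the text does not fix the direction of the ranking), that vertex labels are distinct across the two layers, and that edges are given as (upper, lower) pairs; the function name `reorder` is illustrative.

```python
from collections import Counter


def reorder(upper, lower, edges):
    """Relabel vertices so that lower-layer ids are rankL(v) in [0, l-1] and
    upper-layer ids are rankU(u) + l. Returns (new_id, new_edges)."""
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1

    def prio(x):                       # degree priority, ties broken by id
        return (deg[x], x)

    # Assumption: rank 0 is the highest-priority vertex of its layer.
    rank_l = {v: i for i, v in enumerate(sorted(lower, key=prio, reverse=True))}
    rank_u = {u: i for i, u in enumerate(sorted(upper, key=prio, reverse=True))}
    l = len(lower)
    new_id = dict(rank_l)
    new_id.update({u: r + l for u, r in rank_u.items()})
    new_edges = [(new_id[u], new_id[v]) for u, v in edges]
    return new_id, new_edges
```

Since the mapping is a bijection on vertex labels, the edge count and every vertex degree are preserved; only the storage order changes, placing high-priority vertices of each layer at adjacent ids.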

5.3 Putting cache-aware strategies together

The \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) algorithm. Putting the above strategies together, we show the details of the algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) in Algorithm 3. \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) first generates a reordering graph \(G^{*}\) according to Definition 5 and sorts the neighbors of each vertex \(u^* \in V(G^{*})\). Then, \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) finds \(N_{G^*}(u^*)\) for each vertex \(u^* \in V(G^{*})\). For each vertex \(v^* \in N_{G^*}(u^*)\), we find \(w^* \in N_{G^*}(v^*)\) with \(p(w^*) > p(u^*)\) and \(p(w^*) > p(v^*)\) (Lines 5–12). After Lines 6–12, we have \(|N_G(u^*) \cap N_G(w^*)|\) for the start-vertex \(u^*\) and the end-vertex \(w^* \in 2hop_G(u^*)\). Finally, we compute the butterfly count \(\bowtie _G\) (Lines 13–14).

Theorem 5

\({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) solves the butterfly counting problem correctly.

Proof

We prove that \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) correctly computes the butterfly count \(\bowtie _G\) for a bipartite graph G. Since the graph reordering strategy just renumbers the vertices, it does not affect the structure of G. Given a butterfly [x, u, v, w], we assume x has the highest priority. We only need to prove that \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) counts each butterfly in Fig. 4 exactly once. Regarding the case in Fig. 4a, b, or c, \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) only counts the butterfly [x, u, v, w] once from the wedges (v, u, x) and (v, w, x). Thus, the \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) algorithm correctly solves the butterfly counting problem. \(\square \)

Theorem 6

The time complexity of \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) is \(O(\alpha \cdot m)\).

Proof

Algorithm 3 has two phases: the initialization phase and the \(\bowtie _G\) computation phase. In the first phase, similar to \({\mathsf {BFC}}\)-\({\mathsf {VP}}\), the algorithm needs \(O(n + m)\) time to compute the priority number, sort the neighbors of vertices and compute the reordering graph. Secondly, we analyze how many wedge counting operations are executed by \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) in Algorithm 3 Line 10 (i.e., the number of wedges processed) as follows. In \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\), we only need to process the wedges where the degree of the end-vertex is higher than or equal to that of the middle-vertex. Thus, the wedge counting operations consume \(O(deg_G(v))\) time to process each edge \((u, v) \in E(G)\) connecting an end-vertex u and a middle-vertex v with \(deg_G(u) \ge deg_G(v)\). Hence, \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) needs \(O(\sum _{(u, v) \in E(G)}min\{deg_G(u), deg_G(v)\}) = O(\alpha \cdot m)\) time in total, and this theorem holds. \(\square \)

Theorem 7

The space cost of \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) is O(m).

Proof

In Algorithm 3, we need O(m) space to store the graph structure and O(n) space to store the arrays for the priority of vertices and counting the number of wedges. The graph reordering process also needs O(m) space for the reordering graph. Thus, the space cost of the \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) algorithm is bounded by O(m). \(\square \)

Remark

Note that our algorithms (i.e., \({\mathsf {BFC}}\)-\({\mathsf {VP}}\) and \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\)) are able to output all the butterflies in a compact format in \(O(\alpha \cdot m)\) time. For instance, we can use \([u_i, u_j, \{v_x, v_y, v_z\}]\) to represent three butterflies that contain \(u_i\) and \(u_j\) with the other two vertices chosen from \(\{v_x, v_y, v_z\}\). If we want to enumerate all the butterflies one by one, it needs an additional \(O(\bowtie _G)\) time based on this compact data structure. Here, \(\bowtie _G\) denotes the total number of butterflies in G, which can reach \(O(m^2)\) in the worst case.
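As an illustration of the compact format in the remark, the sketch below expands one compact record into its individual butterflies. The tuple layout and the function name `expand` are assumptions made for this example only.

```python
from itertools import combinations


def expand(compact):
    """Expand (u_i, u_j, common) into butterflies (u_i, u_j, a, b), where
    {a, b} ranges over all 2-subsets of the common neighbor set."""
    u_i, u_j, common = compact
    return [(u_i, u_j, a, b) for a, b in combinations(sorted(common), 2)]
```

A record whose common-neighbor set has k vertices expands into k(k-1)/2 butterflies, so the expansion cost is proportional to the number of butterflies produced.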

6 Handling other cases

In this section, firstly, we extend our algorithms to compute the butterfly count \(\bowtie _e\) for each edge e in G. Secondly, we extend our algorithms to parallel algorithms. Thirdly, we introduce the external memory butterfly counting algorithm to handle large graphs with limited memory size.

6.1 Counting the butterflies for each edge

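For an edge \(e = (u, v)\) in G, the following equation holds [64]:

\[\bowtie _e = \sum _{w \in N_G(v) \backslash \{u\}} (|N_G(u) \cap N_G(w)| - 1) \qquad (8)\]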

Based on the above equation, our \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) algorithm can be extended to compute the butterfly count for each edge. In Algorithm 3, for a start-vertex \(u^*\) and a valid end-vertex \(w^* \in 2hop_G(u^*)\), the value \(|N_G(u^*) \cap N_G(w^*)|\) is already computed and can be used directly to compute the per-edge butterfly count \(\bowtie _e\).

Here, we present the \({\mathsf {BFC}}\)-\({\mathsf {EVP^{++}}}\) algorithm to compute the per-edge butterfly count \(\bowtie _e\). The details of \({\mathsf {BFC}}\)-\({\mathsf {EVP^{++}}}\) are shown in Algorithm 4. In the initialization process, we initialize \(\bowtie _e\) for each edge e in G. After that, for each start-vertex \(u^*\), we run Algorithm 3 Lines 6–12 to compute \(|N_G(u^*) \cap N_G(w^*)|\). Then, we run another round of wedge processing and update the butterfly counts of the two edges of each processed wedge according to Eq. (8) (Lines 5–14). Finally, we return the result.

In Algorithm 4, we only need an extra array to store the butterfly count \(\bowtie _e\) for each edge e. In addition, because it just runs the wedge processing procedure twice, the time complexity of the \({\mathsf {BFC}}\)-\({\mathsf {EVP^{++}}}\) algorithm is the same as that of \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\).
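For reference, per-edge counts can also be obtained by evaluating the per-edge relation directly. The sketch below assumes that Eq. (8) sums \(|N_G(u) \cap N_G(w)| - 1\) over the neighbors w of v other than u, which matches how the equation is applied in Sect. 7.1. It is a direct evaluation for checking small inputs, not the amortized \({\mathsf {BFC}}\)-\({\mathsf {EVP^{++}}}\) procedure, and the function name is illustrative.

```python
from collections import defaultdict


def butterflies_per_edge(edges):
    """Map each edge (u, v) to the number of butterflies containing it."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    result = {}
    for u, v in edges:
        # Each w != u adjacent to v closes one butterfly with every common
        # neighbor of u and w other than v itself.
        result[(u, v)] = sum(len(adj[u] & adj[w]) - 1
                             for w in adj[v] if w != u)
    return result
```

Because every butterfly contains exactly four edges, the per-edge counts sum to four times the total number of butterflies, which gives a convenient sanity check.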

6.2 Parallelization

Shared-memory parallelization. In Algorithm 3, only read operations occur on the graph structure. This motivates us to consider shared-memory parallelization. Assume we have multiple threads and these threads can handle different start-vertices simultaneously. No conflict occurs when these threads read the graph structure simultaneously. However, conflicts may occur when they update \(wedge\_cnt\) and the butterfly count \(\bowtie _G\) simultaneously in Algorithm 3. Thus, we divide the data space into the global data space and the local data space. In the global data space, the threads can access the graph structure simultaneously. In the local data space, we use \(local\_wedge\_cnt\) and a local butterfly count for each thread to avoid conflicts. Thus, we can use \(O(n * t + m)\) space to extend \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) into a parallel version, where t denotes the number of threads.

The algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) in parallel. We show the details of the algorithm \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) in parallel in Algorithm 5. Note that we use the priority-based dynamic scheduling strategy to balance the workload [66]. Similar to \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\), we first generate a reordering graph \(G^{*}\). Then, the algorithm sequentially processes the start-vertices in non-ascending order of their priorities. Each vertex \(u^* \in V(G^{*})\) is dynamically allocated to an idle thread i. Note that, for each thread i, we generate an independent space for \(local\_wedge\_cnt[i]\) and a thread-local butterfly count. After all the threads finish their computation, we compute the butterfly count \(\bowtie _G\) on the master thread.
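The separation between shared read-only data and private counters can be sketched with Python threads as follows. This is an illustration under assumptions rather than Algorithm 5 itself: the function name `parallel_count` is hypothetical, counters are private to each task instead of each thread, and the executor's task queue stands in for the priority-based dynamic scheduling. In CPython the global interpreter lock limits the actual speed-up, so the sketch shows the data layout rather than the performance.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from math import comb


def parallel_count(edges, threads=4):
    adj = defaultdict(list)
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    adj = dict(adj)                    # shared, read-only from here on
    p = {x: (len(adj[x]), x) for x in adj}
    for x in adj:
        adj[x].sort(key=p.get, reverse=True)
    # Start-vertices are handed out in non-ascending priority order.
    order = sorted(adj, key=p.get, reverse=True)

    def work(u):
        local_wedge_cnt = defaultdict(int)     # private to this task
        for v in adj[u]:
            for w in adj[v]:
                if p[w] <= p[u] or p[w] <= p[v]:
                    break
                local_wedge_cnt[w] += 1
        return sum(comb(c, 2) for c in local_wedge_cnt.values())

    with ThreadPoolExecutor(max_workers=threads) as pool:
        # The calling thread plays the master role and adds up local counts.
        return sum(pool.map(work, order))
```

No locks are needed because workers only read `adj` and `p` and write to their own counters; the single reduction happens after all tasks have returned.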

Note that the work-span model is popularly used to analyze the parallel algorithms. The work of an algorithm measures the total number of operations, and the span of an algorithm is the longest dependency path [20]. Based on this model, Algorithm 5 takes \(O(\alpha \cdot m)\) work and O(log(m)) span with high probability, which can be analyzed similarly as done in [59].

6.3 External memory butterfly counting

In order to handle large graphs with limited memory size, we introduce the external memory algorithm \({\mathsf {BFC}}\)-\({\mathsf {EM}}\) in Algorithm 6, which is also based on the vertex priority. We first run an external sort on the edges to group the edges with the same vertex-IDs together. Then, we compute the priorities of vertices by sequentially scanning these edges once. Then, for each vertex \(v \in V(G)\), we sequentially scan its neighbors from the disk and generate the wedges (u, v, w) with \(p(w) > p(v)\) and \(p(w) > p(u)\) where \(w \in N_G(v)\) and \(u \in N_G(v)\) (Lines 4–6). For each wedge (u, v, w), we only store the vertex-pair (u, w) on disk. After that, we arrange the vertex-pairs on disk such that all (u, w) pairs with the same u and w values are stored contiguously (Line 7). This can be simply achieved by running an external sort on these (u, w) pairs. Then, we sequentially scan these vertex-pairs, and for each vertex-pair (u, w), we count its occurrences and compute the butterfly count \(\bowtie _G\) based on Lemma 1 (Lines 8–10).

I/O complexity analysis. We use the standard notations in [2] to analyze the I/O complexity of \({\mathsf {BFC}}\)-\({\mathsf {EM}}\): M is the main memory size and B is the disk block size. The I/O complexity to scan N elements is \(scan(N) = \varTheta (\frac{N}{B})\), and the I/O complexity to sort N elements is \(sort(N) = O(\frac{N}{B} \log _{\frac{M}{B}}\frac{N}{B})\). In \({\mathsf {BFC}}\)-\({\mathsf {EM}}\), the dominant cost is to scan and sort the vertex-pairs. Since there are \(O(\alpha \cdot m)\) vertex-pairs generated by the \({\mathsf {BFC}}\)-\({\mathsf {EM}}\) algorithm, the I/O complexity of \({\mathsf {BFC}}\)-\({\mathsf {EM}}\) is \(O(scan(\alpha \cdot m)+sort(\alpha \cdot m))\).
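The pair-generation and sort-then-scan structure of the external memory algorithm can be mimicked in memory as below. In this sketch an in-memory list and `list.sort` stand in for the on-disk vertex-pair file and the external sort, so it illustrates the data flow only and says nothing about I/O cost; the function name `bfc_em_sketch` and the `(degree, id)` priority tuples are assumptions.

```python
from collections import defaultdict
from itertools import groupby
from math import comb


def bfc_em_sketch(edges):
    adj = defaultdict(list)
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    p = {x: (len(adj[x]), x) for x in adj}      # degree priority
    pairs = []                                  # stands in for the disk file
    for v in p:                                 # middle-vertex
        for u in adj[v]:
            for w in adj[v]:
                if p[w] > p[v] and p[w] > p[u]:
                    pairs.append((u, w))        # keep only the (u, w) pair
    pairs.sort()                                # stands in for external sort
    # Equal pairs are now adjacent: c occurrences give c-choose-2 butterflies.
    return sum(comb(len(list(run)), 2) for _, run in groupby(pairs))
```

After sorting, a single sequential pass suffices because all copies of a vertex-pair form one contiguous run.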

7 Batch-dynamic butterfly counting

In this section, we discuss the problem of batch-dynamic butterfly counting. Given a bipartite graph G and a batch-update B, we aim to compute the number of butterflies resulting from the batch-update B (denoted as \(\bowtie _B\)). Here, a batch-update B is a batch of edge insertion and edge deletion operations. In other words, supposing we already know the number of butterflies in G, we want to obtain the number of butterflies after applying B to G. Since B contains edge insertion and deletion operations, we use \(B^+\) to represent the set of inserted edges and \(B^-\) to represent the set of deleted edges. We suppose \(B^+\) and \(B^-\) are disjoint (i.e., \(B^+ \cap B^- = \emptyset \)) since we can safely remove all the common edges in these two sets without affecting the final butterfly counts. Then, we can first count the number of affected butterflies of \(B^-\) and then count the number of affected butterflies of \(B^+\). Note that the counting procedures of the deletion batch and the insertion batch are inherently the same since we can consider deleting \(B^-\) from G as inserting \(B^-\) into \(G \backslash B^-\). All we need to know is the number of affected butterflies resulting from a batch of updates. It is worth noting that to transform a deletion case into an insertion case, we need to adjust the initial data structures. Specifically, we remove \(B^-\) from G and consider \(B^-\) as \(B^+\). As evaluated in our experiments, such overhead is small. For ease of presentation, we suppose all the updates are edge insertions in the following parts (i.e., \(B = B^+\)).

7.1 Computing \(\bowtie _B\) from each new edge

It is apparent that each new butterfly contains at least one new edge from B. As a result, a straightforward algorithm \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\) can be designed by sequentially processing each edge \((u, v) \in B\). For a new edge \((u, v) \in B\), we insert it into G and compute the number of new butterflies resulting from it according to Eq. (8). The details of \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\) are shown in Algorithm 7.

The \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\) Algorithm. For each new edge (u, v) in B, we first insert it into G and get the neighbor set \(N_G(v)\) of v. Then, for each \(w \in N_G(v)\), we compute the number of common neighbors between u and w (i.e., \(|N_G(u) \cap N_G(w)|\)) and add \(|N_G(u) \cap N_G(w)| - 1\) to the running count \(\bowtie _B\) according to Eq. (8). When computing the common neighbors of u and w, we can construct a hash map H using the neighbor set of u (Line 4). Then, we can just look up each vertex \(x \in N_G(w)\) to check whether it is in H (Lines 4–8). The time cost of the above procedure for processing each edge (u, v) is \(O(\sum _{w \in N(v)}deg_G(w))\). To reduce the time cost, when processing the edge (u, v), we first compute the values of \(\sum _{x \in N_G(u)}deg_G(x)\) and \(\sum _{w \in N_G(v)}deg_G(w)\). If \(\sum _{x \in N_G(u)}deg_G(x) < \sum _{w \in N_G(v)}deg_G(w)\), we exchange u and v (i.e., process the neighbor set of u instead of that of v). In this way, we can reduce the number of wedges processed in the algorithm. One may also consider pre-computing the hash map of the neighbor set for each vertex in G. Then, when computing \(|N_G(u) \cap N_G(w)|\), we can choose to scan the smaller set between \(N_G(u)\) and \(N_G(w)\) in \(O(min\{deg_G(u), deg_G(w)\})\) time. However, computing the hash map for each vertex in G incurs additional time cost, which can be a very large overhead in the batch-dynamic context.
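The baseline just described can be sketched in Python as follows. The sketch assumes the graph is held as a dictionary of neighbor sets that is updated in place, that the batch contains only insertions of edges not yet in the graph, and that Python sets play the role of the hash map H; the function name `bfcb_bs` is illustrative.

```python
def bfcb_bs(adj, batch):
    """Insert the edges of `batch` one by one into `adj` (dict: vertex -> set
    of neighbors) and return the number of new butterflies."""
    new_butterflies = 0
    for u, v in batch:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
        cost_u = sum(len(adj[x]) for x in adj[u])
        cost_v = sum(len(adj[w]) for w in adj[v])
        if cost_u < cost_v:
            u, v = v, u                # scan the cheaper neighbor set
        h = adj[u]                     # hash-based lookup of N(u)
        for w in adj[v]:
            if w == u:
                continue
            common = sum(1 for x in adj[w] if x in h)
            new_butterflies += common - 1      # v itself is always common
    return new_butterflies
```

Each new butterfly is counted exactly once, at the moment its last missing edge is inserted, which is why the edges must be processed sequentially.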

Example 2

Consider the original graph G and the batch-update \(B = \{(u_3, v_1), (u_3, v_2)\}\) in Fig. 8a. We first insert \((u_3, v_1)\) into G. Since we have \(\sum _{x \in N_G(u_3)}deg_G(x) < \sum _{w \in N_G(v_1)}deg_G(w)\), we process the neighbor set of \(u_3\). For each \(x \in N_G(u_3)\backslash v_1\) (i.e., \(v_0\)), we compute \(|N_G(v_1) \cap N_G(v_0)| = 3\) (i.e., \(u_0\), \(u_1\), and \(u_3\)). Then, we can get the number of new butterflies containing \((u_3, v_1)\) is \(3 - 1 = 2\). After that, we insert \((u_3, v_2)\) into G, and get the number of new butterflies containing \((u_3, v_2)\) is 5. In total, we can get the number of new butterflies resulting from B is 7.

Theorem 8

The time complexity of \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\) is \(O(\sum _{(u,v) \in B} min\{\sum _{x \in N(u)}deg_G(x), \sum _{w \in N(v)}deg_G(w)\})\) in the worst case.

Proof

Algorithm 7 processes the edges in B one by one. For each inserted edge \(e=(u,v)\), the new butterflies can be enumerated by exploring the two-hop neighbors of u and checking whether they are connected to v, which takes \(O(\sum _{x \in N(u)}deg_G(x))\) time. An alternative approach is to explore the two-hop neighbors of v and check whether they are connected to u, which takes \(O(\sum _{w \in N(v)}deg_G(w))\) time. Since the costs of these two approaches are pre-computed (Line 4) and the cheaper one is chosen, the time complexity of enumerating new butterflies for each edge (u, v) is the smaller of the two. Summing this cost over all the inserted edges completes the proof. \(\square \)

In addition, \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\) has a space complexity of \(O(|G|+|B|)\), since we need \(O(|G|+|B|)\) space to store the graph structure, and the hash map H used in Algorithm 7 needs an additional \(O(|V(G \cup B)|)\) space.

Analysis of \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\). Reviewing Algorithm 7, the drawbacks of \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\) are apparent. (1) \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\) needs to enumerate each butterfly containing a new edge in B, which is inefficient. The underlying reason is that if we want to count the number of butterflies containing exactly one new edge, we cannot use vertex-priority-based techniques to amortize the time cost as in Algorithm 4. (2) It is not easy to parallelize \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\), since it needs to process each edge \(e \in B\) sequentially and insert the edge e into G after processing it. Specifically, if we directly parallelize Line 2 in Algorithm 7, the parallel algorithm will likely overlook new butterflies that contain more than one new edge when these edges are processed by different threads simultaneously. There is no simple parallel implementation of \({\mathsf {BFCB}}\)-\({\mathsf {BS}}\) that addresses this issue efficiently.

7.2 Computing the number of new butterflies from the affected subgraph

To address the above-mentioned issues, we propose an affected-subgraph-based algorithm \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\). The main intuition behind \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\) is that, before computing the new butterflies resulting from B, we first apply B to G and identify the affected scope (or subgraph) of B. In this way, we can not only use the vertex-priority-based algorithms (in a subgraph of limited size) but also easily derive a parallel implementation. We define the affected subgraph as follows.

Definition 6

(Affected subgraph) Given a bipartite graph G and a batch-update B, the affected subgraph \(G_A\) due to B is the subgraph induced by all the vertices in \(V^1_B \cup V^2_B\). Here, \(V^1_B\) is the set of vertices that are incident to at least one new edge in B, and \(V^2_B\) is the set of vertices that are neighbors of at least one vertex in \(V^1_B\). For instance, the affected subgraph \(G_A\) of G is shown in Fig. 8.
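
As an illustration of Definition 6, the following Python sketch builds the affected subgraph from an adjacency dictionary. The function name `affected_subgraph`, the dictionary-of-sets representation, and the small edge list are assumptions made for this example. The sketch assumes that the edges of B have already been inserted into the graph.

```python
from collections import defaultdict


def affected_subgraph(adj, batch):
    """Return G_A as an adjacency dict, following Definition 6.

    `adj` maps each vertex to its neighbor set and is assumed to
    already contain the edges of `batch`.
    """
    v1 = {x for edge in batch for x in edge}   # endpoints of new edges
    v2 = set().union(*(adj[x] for x in v1))    # neighbors of those endpoints
    keep = v1 | v2
    # Induced subgraph: keep only edges with both endpoints in `keep`.
    return {x: adj[x] & keep for x in keep}


# Hypothetical path-like graph; ("u0", "v1") is the only new edge.
edges = [("u0", "v0"), ("u0", "v1"), ("u1", "v1"), ("u1", "v2"), ("u2", "v2")]
batch = [("u0", "v1")]

adj = defaultdict(set)
for a, b in edges:
    adj[a].add(b)
    adj[b].add(a)

g_a = affected_subgraph(adj, batch)
print(sorted(g_a))
```

Here \(v_2\) and \(u_2\) are dropped because neither is incident to a new edge nor adjacent to an endpoint of one, so the edge between \(u_1\) and \(v_2\) does not appear in the result.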

Lemma 4

Consider a bipartite graph G and a batch-update B. All the new butterflies resulting from B are contained in the affected subgraph \(G_A\).

Proof

Each new butterfly must contain at least one new edge \(e = (u, v)\). Since \(V^1_B\) includes all the vertices incident to the new edges, u and v must be in \(V^1_B\). Due to the butterfly structure, each of the other two vertices of the new butterfly is adjacent to u or v and is therefore contained in \(V^2_B\) (Definition 6). Therefore, all the new butterflies resulting from B are contained in the affected subgraph \(G_A\). \(\square \)

Based on the above lemma, all the new butterflies are guaranteed to be contained in \(G_A\). Since \(G_A\) may also contain many butterflies that originally exist in G (i.e., old butterflies), we need to subtract the count of these old butterflies when applying a butterfly counting algorithm (e.g., \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\)) on \(G_A\). Reviewing the \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) algorithm (i.e., Algorithm 3), we can see that it starts from each vertex and processes valid wedges to count the number of butterflies. In the batch-dynamic context, we call a wedge a new wedge if it contains at least one new edge from B; otherwise, we call it an old wedge. For example, \((v_0, u_3, v_1)\) is a new wedge. It is easy to observe that any new butterfly in \(G_A\) is composed of (1) one new wedge and one old wedge; or (2) two new wedges. Thus, we have the following lemma.

Lemma 5

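Given an affected subgraph \(G_A\) and a vertex \(u \in G_A\), the number of new butterflies containing u can be computed as follows:

\(\sum _{w} \left[ \left( {\begin{array}{c}|N_{G_A}(u) \cap N_{G_A}(w)|\\ 2\end{array}}\right) - \left( {\begin{array}{c}C_\mathrm{old}(w)\\ 2\end{array}}\right) \right] \)

Here, the sum ranges over all 2-hop neighbors w of u in \(G_A\), and \(C_\mathrm{old}(w)\) is the number of old wedges containing u and w in \(G_A\).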

Proof

For any two vertices \(x_1, x_2\) in \(N_{G_A}(u) \cap N_{G_A}(w)\), \([u, x_1, w, x_2]\) is a butterfly in \(G_A\), so the first term in Lemma 5 counts the number of butterflies containing u and w. To compute the number of new butterflies in \(G_A\), we need to subtract the number of old butterflies. Note that an old butterfly can only be formed by two old wedges. Thus, the number of old butterflies containing u and w can be computed as \(\left( {\begin{array}{c}C_\mathrm{old}(w)\\ 2\end{array}}\right) \). The number of new butterflies containing u is computed by taking the difference of these numbers and summing over all 2-hop neighbors of u, which completes the proof. \(\square \)

The \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\) Algorithm. Based on the above lemma, we design the algorithm \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\) to count the number of new butterflies in \(G_A\) as shown in Algorithm 8. We first insert each edge in B into G. Then, we construct the affected subgraph \(G_A\) according to Definition 6. After that, we reorganize \(G_A\) using the cache-aware graph reordering technique and compute the vertex priority on \(G_A\). Then, starting from each vertex in \(V(G_A)\), we use the same strategy as \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\) to process the wedges. Unlike \({\mathsf {BFC}}\)-\({\mathsf {VP^{++}}}\), \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\) needs two hash maps \(C_\mathrm{total}\) and \(C_\mathrm{old}\) to record the number of wedges and the number of old wedges containing an end-vertex w, respectively. These two counts are what Lemma 5 requires to obtain the number of new butterflies (Line 17).

Example 3

Consider the example in Fig. 8a and the batch-update \(B = \{(u_3, v_1), (u_3, v_2)\}\). We first construct \(G_A\) as shown in Fig. 8b. In \(G_A\), we have \(p(v_2)> p(v_1)> p(u_3)> p(u_1)> p(u_0)> p(v_0)> p(u_2) > p(v_3)\). Then, we start from each vertex in \(G_A\) to count the number of new butterflies by traversing valid wedges. From \(v_0\), we get the wedges \((v_0, u_0, v_1)\), \((v_0, u_1, v_1)\), \((v_0, u_3, v_1)\), \((v_0, u_0, v_2)\), \((v_0, u_1, v_2)\), and \((v_0, u_3, v_2)\). Since only \((v_0, u_3, v_1)\) and \((v_0, u_3, v_2)\) are new wedges, we have \(C_\mathrm{total}(v_1) = 3\), \(C_\mathrm{old}(v_1) = 2\), \(C_\mathrm{total}(v_2) = 3\), \(C_\mathrm{old}(v_2) = 2\). Thus, the number of new butterflies obtained is \(\left( {\begin{array}{c}3\\ 2\end{array}}\right) - \left( {\begin{array}{c}2\\ 2\end{array}}\right) + \left( {\begin{array}{c}3\\ 2\end{array}}\right) - \left( {\begin{array}{c}2\\ 2\end{array}}\right) = 4\). Then, when starting from \(v_2\), we get 3 new butterflies similarly. In total, we have 7 new butterflies.
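
The counting step based on \(C_\mathrm{total}\) and \(C_\mathrm{old}\) can be sketched in Python as follows. This is a simplified illustration rather than Algorithm 8: it omits the cache-aware reordering, and it uses the pair (degree, label) as a stand-in vertex priority, which is an assumption (any strict total order on the vertices keeps the count correct). The function name `count_new_butterflies_ig` and the edge list are also assumptions; the edge list is a hypothetical graph chosen to be consistent with the counts in Example 3, not a copy of Fig. 8. Vertex labels are assumed to be unique and mutually comparable.

```python
from collections import defaultdict
from math import comb


def count_new_butterflies_ig(edges, batch):
    """Count new butterflies in a graph whose edge list `edges`
    already includes the new edges listed in `batch`."""
    adj = defaultdict(set)
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    new_edges = {frozenset(e) for e in batch}

    # Stand-in priority: higher degree first, ties broken by label.
    prio = {x: (len(nbrs), x) for x, nbrs in adj.items()}

    new_butterflies = 0
    for u in adj:
        c_total = defaultdict(int)  # wedges (u, v, w) per end-vertex w
        c_old = defaultdict(int)    # wedges whose two edges are both old
        for v in adj[u]:
            first_is_old = frozenset((u, v)) not in new_edges
            for w in adj[v]:
                # Keep only wedges whose end-vertex w has the highest priority.
                if prio[w] <= prio[u] or prio[w] <= prio[v]:
                    continue
                c_total[w] += 1
                if first_is_old and frozenset((v, w)) not in new_edges:
                    c_old[w] += 1
        # Lemma 5: all butterflies minus old butterflies, per end-vertex.
        for w, total in c_total.items():
            new_butterflies += comb(total, 2) - comb(c_old[w], 2)
    return new_butterflies


# Hypothetical graph (an assumption, see the note above).
old_edges = [
    ("u0", "v0"), ("u1", "v0"), ("u3", "v0"),
    ("u0", "v1"), ("u1", "v1"), ("u2", "v1"),
    ("u0", "v2"), ("u1", "v2"), ("u2", "v2"),
]
batch = [("u3", "v1"), ("u3", "v2")]
print(count_new_butterflies_ig(old_edges + batch, batch))
```

On this edge list the function returns 7: starting from \(v_0\) contributes 4 and the pair \(v_1\), \(v_2\) contributes \(\left( {\begin{array}{c}4\\ 2\end{array}}\right) - \left( {\begin{array}{c}3\\ 2\end{array}}\right) = 3\). Because the outer loop iterations only read the graph, they are independent of each other, which is what makes this formulation easier to parallelize than the edge-by-edge baseline.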

Theorem 9

The \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\) algorithm correctly solves the batch-dynamic butterfly counting problem.

Proof

By Lemma 4, the new butterflies due to the batch-update are all contained in the affected subgraph \(G_A\). Algorithm 8 visits the vertices in \(G_A\) and counts the number of new butterflies. Specifically, for each visited vertex \(u \in V(G_A)\), the algorithm explores the two-hop neighbors of u and counts how many new wedges and old wedges are formed according to the vertex priority. For each two-hop neighbor w of u, \(C_\mathrm{total}(w)\) counts the total number of wedges containing u and w, and \(C_\mathrm{old}(w)\) counts the old wedges (Lines 6, 7, 11, and 13). Note that only the wedges in which w has the highest priority are visited (Lines 9–10), which avoids counting duplicate butterflies. By Lemma 5, the number of new butterflies containing u and w is computed by subtracting the number of old butterflies containing u and w from the total count (Line 17). Since the counter of new butterflies keeps an accumulating sum of the number of new butterflies containing the visited vertices, it equals the number of new butterflies when the for-loop terminates in Line 18, which completes the proof. \(\square \)

Theorem 10

The worst-case time complexity of \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\) is \(O(TC_{G_A} + \sum _{(u,v)\in E(G_A)} min\{deg_{G_A}(u), deg_{G_A}(v)\})\). Here, \(TC_{G_A}\) is the worst-case time complexity of constructing \(G_A\), bounded by \(O(|B| + \sum _{u \in V(G_A)} deg_G(u))\).

Proof

Algorithm 8 constructs the affected subgraph \(G_A\) from B first and then counts the new butterflies on \(G_A\). When constructing \(G_A\), the algorithm inserts the edges in B into G, which takes O(|B|) time. Then, it needs to visit the vertices in \(V(G_A)\) and scan through each vertex’s neighbors in G to construct the affected subgraph. Therefore, the affected subgraph construction takes \(TC_{G_A} = O(|B| + \sum _{u \in V(G_A)} deg_G(u))\) time. Also, Algorithm 8 needs to enumerate all the valid wedges similar to Algorithm 3. By Theorem 3, this process takes \(O(\sum _{(u, v) \in E(G_A)}min\{deg_{G_A}(u), deg_{G_A}(v)\})\) time. Adding it to the time complexity of \(G_A\) construction completes the proof. \(\square \)

In addition, \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\) has a space complexity of \(O(|G|+|B|)\), since apart from the graph structure, the data structures used in \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\) (e.g., \(C_\mathrm{total}\) and \(C_\mathrm{old}\)) are all bounded by \(O(|G|+|B|)\).

7.3 Reducing the computation of old wedges

Motivation. Although the algorithm \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\) can effectively utilize the vertex-priority-based techniques, it can be further sped up by reducing the computation of old wedges. In \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\), the number of new butterflies is obtained based on Lemma 5, which requires computing both the total number of butterflies and the number of old butterflies on \(G_A\). In this manner, we need to traverse many old wedges, and some of them do not lead to a new butterfly. For instance, in the affected subgraph \(G_A\) in Fig. 10, we need to traverse many wedges that do not form any new butterfly, such as \((u_1, v_1, u_2)\) and \((u_1, v_2, u_2)\). To explore how to reduce the computation of old wedges (and old butterflies), we first present all the cases of new butterflies as shown in Fig. 9. These cases are based on the fact that each new butterfly must contain at least one new edge.

- The new butterfly contains one new edge: Case 1 and Case 2. The number of new edges that w is incident to: Case 1: 0; Case 2: 1.

- The new butterfly contains two new edges: Case 3–Case 6. The number of new edges that w is incident to: Case 3: 1 (the two new edges are incident to each other); Case 4: 0; Case 5: 1 (the two new edges are not incident to each other); Case 6: 2.

- The new butterfly contains three new edges: Case 7 and Case 8. The number of old edges that w is incident to: Case 7: 0; Case 8: 1.

- The new butterfly contains four new edges: Case 9.

Handling new butterflies of different cases. Recall that in the vertex-priority-based algorithm \({\mathsf {BFCB}}\)-\({\mathsf {IG}}\), a butterfly is counted by composing two wedges that share a vertex w with the highest priority as the end-vertex. We show all the cases of new butterflies in Fig. 22. We can see that the butterflies in Cases 4–9 are all composed of two new wedges (i.e., wedges containing at least one new edge). Thus, to avoid touching old wedges when identifying these butterflies, we can split \(N_{G_A}(u)\) into \(N_\mathrm{old}(u)\) and \(N_{new}(u)\) for each \(u \in V(G_A)\), which contain the old neighbors and the newly inserted neighbors of u, respectively. Then, when starting from a vertex u, we can choose a vertex v from \(N_\mathrm{old}(u)\) (or \(N_{new}(u)\)) and choose a vertex w from \(N_{new}(v)\) (or \(N_{G_A}(v)\)) to traverse only new wedges.