Abstract

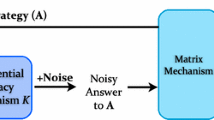

Private selection algorithms, such as the exponential mechanism, noisy max and sparse vector, are used to select items (such as queries with large answers) from a set of candidates, while controlling privacy leakage in the underlying data. Such algorithms serve as building blocks for more complex differentially private algorithms. In this paper we show that these algorithms can release additional information related to the gaps between the selected items and the other candidates for free (i.e., at no additional privacy cost). This free gap information can improve the accuracy of certain follow-up counting queries by up to 66%. We obtain these results from a careful privacy analysis of these algorithms. Based on this analysis, we further propose novel hybrid algorithms that can dynamically save additional privacy budget.


Notes

This was a surprising result given the number of incorrect attempts at improving SVT based on flawed manual proofs [33] and shows the power of automated program verification techniques.

That is, for each input D, there might be some random vectors H for which M does not terminate, but the total probability of these vectors is 0, so we can ignore them.

In our algorithm, we set \(\sigma \) to be the standard deviation of the noise distribution.

In the case of monotonic queries, if \(\forall i: q_i \ge q^\prime _i\), then the alignment changes slightly: We set \(\eta ^\prime =\eta \) (the random variable added to the threshold) and set the adjustment to noise in the winning “if” branches to \(q_i-q^\prime _i\) instead of \(1+q_i-q^\prime _i\). (Hence cost terms become \(|q_i-q^\prime _i|\) instead of \(|1+q_i-q^\prime _i|\).) If \(\forall i: q_i \le q^\prime _i\) then we keep the original alignment but in the cost calculation we note that \(|1+q_i-q^\prime _i|\le 1\) (due to the monotonicity and sensitivity).

Selecting thresholds for SVT in experiments is difficult, but we feel this may be fairer than averaging the answers to the \(k\)th- and \((k+1)\)th-ranked queries, as was done in prior work [33].

References

[1] Abadi, M., Chu, A., Goodfellow, I., McMahan, H.B., Mironov, I., Talwar, K., Zhang, L.: Deep learning with differential privacy. In: CCS (2016)

[2] Abowd, J.M.: The U.S. Census Bureau adopts differential privacy. In: KDD (2018)

[3] Albarghouthi, A., Hsu, J.: Synthesizing coupling proofs of differential privacy. In: POPL (2017)

[4] Barthe, G., Gaboardi, M., Grégoire, B., Hsu, J., Strub, P.Y.: Proving differential privacy via probabilistic couplings. In: LICS (2016)

[5] Beimel, A., Nissim, K., Stemmer, U.: Private learning and sanitization: pure vs. approximate differential privacy. Theory Comput. 12(1), 1–61 (2016)

[6] Bhaskar, R., Laxman, S., Smith, A., Thakurta, A.: Discovering frequent patterns in sensitive data. In: KDD (2010)

[7] Bittau, A., Erlingsson, Ú., Maniatis, P., Mironov, I., Raghunathan, A., Lie, D., Rudominer, M., Kode, U., Tinnes, J., Seefeld, B.: Prochlo: strong privacy for analytics in the crowd. In: SOSP (2017)

[8] Bun, M., Steinke, T.: Concentrated differential privacy: simplifications, extensions, and lower bounds. In: TCC (2016)

[9] U.S. Census Bureau: OnTheMap: Longitudinal Employer-Household Dynamics. https://lehd.ces.census.gov/applications/help/onthemap.html#!confidentiality_protection

[10] Chaudhuri, K., Hsu, D., Song, S.: The large margin mechanism for differentially private maximization. In: NIPS (2014)

[11] Chaudhuri, K., Monteleoni, C., Sarwate, A.D.: Differentially private empirical risk minimization. J. Mach. Learn. Res. 12(Mar), 1069–1109 (2011)

[12] Chen, Y., Machanavajjhala, A., Reiter, J.P., Barrientos, A.F.: Differentially private regression diagnostics. In: ICDM (2016)

[13] Ding, B., Kulkarni, J., Yekhanin, S.: Collecting telemetry data privately. In: NIPS (2017)

[14] Ding, Z., Wang, Y., Zhang, D., Kifer, D.: Free gap information from the differentially private sparse vector and noisy max mechanisms. In: PVLDB (2019)

[15] Dwork, C.: Differential privacy. In: ICALP (2006)

[16] Dwork, C., Kenthapadi, K., McSherry, F., Mironov, I., Naor, M.: Our data, ourselves: privacy via distributed noise generation. In: Annual International Conference on the Theory and Applications of Cryptographic Techniques, pp. 486–503. Springer (2006)

[17] Dwork, C., Lei, J.: Differential privacy and robust statistics. In: STOC (2009)

[18] Dwork, C., McSherry, F., Nissim, K., Smith, A.: Calibrating noise to sensitivity in private data analysis. In: Theory of Cryptography Conference. Springer (2006)

[19] Dwork, C., Roth, A.: The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci. 9(3–4), 211–407 (2014)

[20] Erlingsson, Ú., Feldman, V., Mironov, I., Raghunathan, A., Talwar, K., Thakurta, A.: Amplification by shuffling: from local to central differential privacy via anonymity. In: SODA (2019)

[21] Erlingsson, Ú., Pihur, V., Korolova, A.: RAPPOR: randomized aggregatable privacy-preserving ordinal response. In: CCS (2014)

[22] Fanaeepour, M., Rubinstein, B.I.P.: Histogramming privately ever after: differentially-private data-dependent error bound optimisation. In: ICDE (2018)

[23] Geng, Q., Viswanath, P.: The optimal mechanism in differential privacy. In: ISIT (2014)

[24] Ghosh, A., Roughgarden, T., Sundararajan, M.: Universally utility-maximizing privacy mechanisms. In: STOC, pp. 351–360 (2009)

[25] Gumbel, E.: Statistical Theory of Extreme Values and Some Practical Applications: A Series of Lectures. Applied Mathematics Series, U.S. Government Printing Office, Washington (1954)

[26] Haney, S., Machanavajjhala, A., Abowd, J.M., Graham, M., Kutzbach, M., Vilhuber, L.: Utility cost of formal privacy for releasing national employer–employee statistics. In: SIGMOD (2017)

[27] Hardt, M., Ligett, K., McSherry, F.: A simple and practical algorithm for differentially private data release. In: NIPS (2012)

[28] Johnson, N., Near, J.P., Song, D.: Towards practical differential privacy for SQL queries. In: PVLDB (2018)

[29] Kotsogiannis, I., Machanavajjhala, A., Hay, M., Miklau, G.: Pythia: data dependent differentially private algorithm selection. In: SIGMOD (2017)

[30] Lehmann, E., Casella, G.: Theory of Point Estimation. Springer, Berlin (1998)

[31] Ligett, K., Neel, S., Roth, A., Waggoner, B., Wu, S.Z.: Accuracy first: selecting a differential privacy level for accuracy constrained ERM. In: NIPS (2017)

[32] Liu, J., Talwar, K.: Private selection from private candidates. arXiv preprint arXiv:1811.07971 (2018)

[33] Lyu, M., Su, D., Li, N.: Understanding the sparse vector technique for differential privacy. In: PVLDB (2017)

[34] Machanavajjhala, A., Kifer, D., Abowd, J., Gehrke, J., Vilhuber, L.: Privacy: from theory to practice on the map. In: ICDE (2008)

[35] Maddison, C.J., Tarlow, D., Minka, T.: A\(\ast \) sampling. In: NIPS (2014)

[36] McSherry, F., Talwar, K.: Mechanism design via differential privacy. In: FOCS (2007)

[37] McSherry, F.D.: Privacy integrated queries: an extensible platform for privacy-preserving data analysis. In: SIGMOD (2009)

[38] Mironov, I.: Rényi differential privacy. In: 30th IEEE Computer Security Foundations Symposium (CSF) (2017)

[39] Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer, New York (2006)

[40] Papernot, N., Song, S., Mironov, I., Raghunathan, A., Talwar, K., Erlingsson, Ú.: Scalable private learning with PATE. In: ICLR (2018)

[41] Raskhodnikova, S., Smith, A.D.: Lipschitz extensions for node-private graph statistics and the generalized exponential mechanism. In: FOCS (2016)

[42] Tang, J., Korolova, A., Bai, X., Wang, X., Wang, X.: Privacy loss in Apple’s implementation of differential privacy. In: 3rd Workshop on the Theory and Practice of Differential Privacy at CCS (2017)

[43] Apple Differential Privacy Team: Learning with privacy at scale. Appl. Mach. Learn. J. 1(8), 1–25 (2017)

[44] Thakurta, A.G., Smith, A.: Differentially private feature selection via stability arguments, and the robustness of the lasso. In: COLT (2013)

[45] Wang, Y., Ding, Z., Wang, G., Kifer, D., Zhang, D.: Proving differential privacy with shadow execution. In: PLDI (2019)

[46] Zhang, D., Kifer, D.: LightDP: towards automating differential privacy proofs. In: POPL (2017)

[47] Zhang, D., McKenna, R., Kotsogiannis, I., Hay, M., Machanavajjhala, A., Miklau, G.: EKTELO: a framework for defining differentially-private computations. In: SIGMOD (2018)

Acknowledgements

This work was supported by NSF Awards CNS-1702760 and CNS-1931686.


A Proofs


A.1 Proof of Theorem 4 (BLUE)

Proof

Let \(q_1, \ldots , q_k\) be the true answers to the k queries selected by the Noisy Top-K with Gap algorithm. Let \(\alpha _i\) be the estimate of \(q_i\) obtained with the Laplace mechanism, and let \(g_i\) be the estimate of the gap between \(q_i\) and \(q_{i+1}\) released by Noisy Top-K with Gap.

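Recall that \(\alpha _i = q_i + \xi _i\) and \(g_i = q_i + \eta _i - q_{i+1} - \eta _{i+1}\), where \(\xi _i\) and \(\eta _i\) are independent Laplace random variables. Assume without loss of generality that \({{\,\mathrm{Var}\,}}(\xi _i)=\sigma ^2\) and \({{\,\mathrm{Var}\,}}(\eta _i) = \lambda \sigma ^2\). Write in vector notation

\[ {\varvec{\alpha }} = (\alpha _1,\ldots ,\alpha _k)^T,\quad {\varvec{q}} = (q_1,\ldots ,q_k)^T,\quad {\varvec{\xi }} = (\xi _1,\ldots ,\xi _k)^T,\quad {\varvec{\eta }} = (\eta _1,\ldots ,\eta _k)^T,\quad {\varvec{g}} = (g_1,\ldots ,g_{k-1})^T; \]

then \({\varvec{\alpha }}= {\varvec{q}}+ {\varvec{\xi }}\) and \({\varvec{g}}= N({\varvec{q}}+{\varvec{\eta }})\), where N is the \((k-1)\times k\) difference matrix

\[ N = \begin{pmatrix} 1 & -1 & 0 & \cdots & 0\\ 0 & 1 & -1 & \cdots & 0\\ \vdots & & \ddots & \ddots & \vdots \\ 0 & \cdots & 0 & 1 & -1 \end{pmatrix}, \]

i.e., \(N_{i,i} = 1\), \(N_{i,i+1} = -1\), and all other entries are 0.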

Our goal is then to find the best linear unbiased estimate (BLUE) \({\varvec{\beta }}\) of \({\varvec{q}}\) in terms of \({\varvec{\alpha }}\) and \({\varvec{g}}\). In other words, we need to find a \(k\times k\) matrix X and a \(k\times (k-1)\) matrix Y such that

\[ {\varvec{\beta }} = X{\varvec{\alpha }} + Y{\varvec{g}} \tag{6} \]

with \(E(\left\Vert {\varvec{\beta }}- {\varvec{q}}\right\Vert ^2) \) as small as possible. Unbiasedness implies that \(\forall {\varvec{q}}, E({\varvec{\beta }}) = X{\varvec{q}}+ YN{\varvec{q}}= {\varvec{q}}\). Therefore \(X+YN = I_k\) and thus

\[ X = I_k - YN. \]

Plugging this into (6), we have \({\varvec{\beta }}= (I_k - YN){\varvec{\alpha }}+ Y{\varvec{g}}= {\varvec{\alpha }}-Y(N{\varvec{\alpha }}- {\varvec{g}})\). Since \({\varvec{\alpha }}= {\varvec{q}}+ {\varvec{\xi }}\) and \({\varvec{g}}= N({\varvec{q}}+{\varvec{\eta }})\), we have \(N{\varvec{\alpha }}-{\varvec{g}}= N({\varvec{q}}+ {\varvec{\xi }}- {\varvec{q}}- {\varvec{\eta }}) = N({\varvec{\xi }}- {\varvec{\eta }})\). Thus

\[ {\varvec{\beta }} = {\varvec{\alpha }} - YN({\varvec{\xi }} - {\varvec{\eta }}). \]

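Write \({\varvec{\theta }}= N({\varvec{\xi }}- {\varvec{\eta }})\); then \( {\varvec{\beta }}- {\varvec{q}}= {\varvec{\alpha }}- {\varvec{q}}- Y{\varvec{\theta }}= {\varvec{\xi }}- Y{\varvec{\theta }}\). Therefore, finding the BLUE is equivalent to solving the optimization problem \(Y = \arg \min _Y \varPhi \), where

\[ \varPhi = E\left( \left\Vert {\varvec{\xi }} - Y{\varvec{\theta }}\right\Vert ^2\right) = E\left( ({\varvec{\xi }} - Y{\varvec{\theta }})^T({\varvec{\xi }} - Y{\varvec{\theta }})\right) . \]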

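Taking the partial derivative of \(\varPhi \) with respect to Y, we have

\[ \frac{\partial \varPhi }{\partial Y} = -2E({\varvec{\xi }}{\varvec{\theta }}^T) + 2Y E({\varvec{\theta }}{\varvec{\theta }}^T). \]

Setting \(\frac{\partial \varPhi }{\partial Y} = 0\), we obtain \(YE({\varvec{\theta }}{\varvec{\theta }}^T) = E({\varvec{\xi }}{\varvec{\theta }}^T)\), and thus

\[ Y = E({\varvec{\xi }}{\varvec{\theta }}^T)\, E({\varvec{\theta }}{\varvec{\theta }}^T)^{-1}. \]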

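Since \(\theta _j = \xi _j -\xi _{j+1} -\eta _j + \eta _{j+1}\), we have \(({\varvec{\xi }}{\varvec{\theta }}^T)_{ij} = \xi _i(\xi _j -\xi _{j+1} -\eta _j + \eta _{j+1} )\). Because all the \(\xi _i\) and \(\eta _j\) are independent with mean zero,

\[ E({\varvec{\xi }}{\varvec{\theta }}^T)_{ij} = E(\xi _i\xi _j) - E(\xi _i\xi _{j+1}) = \begin{cases} \sigma ^2 & \text {if } i = j,\\ -\sigma ^2 & \text {if } i = j+1,\\ 0 & \text {otherwise.} \end{cases} \]

Hence

\[ E({\varvec{\xi }}{\varvec{\theta }}^T) = \sigma ^2 N^T. \]

Similarly, we have

\[ E({\varvec{\theta }}{\varvec{\theta }}^T)_{ij} = E(\theta _i\theta _j) = \begin{cases} 2(1+\lambda )\sigma ^2 & \text {if } i = j,\\ -(1+\lambda )\sigma ^2 & \text {if } |i-j| = 1,\\ 0 & \text {otherwise.} \end{cases} \]

Thus

\[ E({\varvec{\theta }}{\varvec{\theta }}^T) = (1+\lambda )\sigma ^2 NN^T, \]

where \(NN^T\) is the \((k-1)\times (k-1)\) tridiagonal matrix with 2 on the diagonal and \(-1\) on the sub- and superdiagonals.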

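It can be directly computed that \(E({\varvec{\theta }}{\varvec{\theta }}^T)^{-1} \) is a symmetric matrix whose lower triangular part is

\[ \frac{1}{k(1+\lambda )\sigma ^2}\begin{pmatrix} (k-1)\cdot 1 & & & \\ (k-2)\cdot 1 & (k-2)\cdot 2 & & \\ \vdots & \vdots & \ddots & \\ 1\cdot 1 & 1\cdot 2 & \cdots & 1\cdot (k-1) \end{pmatrix}, \]

i.e., \(E({\varvec{\theta }}{\varvec{\theta }}^T)^{-1}_{ij} = E({\varvec{\theta }}{\varvec{\theta }}^T)^{-1}_{ji} = \frac{1}{k(1+\lambda )\sigma ^2}\cdot (k-i)\cdot j\) for all \(1\le j\le i \le k-1\). Therefore,

\[ Y = E({\varvec{\xi }}{\varvec{\theta }}^T)\, E({\varvec{\theta }}{\varvec{\theta }}^T)^{-1} = \frac{1}{k(1+\lambda )}\begin{pmatrix} k-1 & k-2 & \cdots & 1\\ -1 & k-2 & \cdots & 1\\ \vdots & \vdots & \ddots & \vdots \\ -1 & -2 & \cdots & -(k-1) \end{pmatrix}, \]

i.e., \(Y_{ij} = \frac{k-j}{k(1+\lambda )}\) if \(i\le j\) and \(Y_{ij} = \frac{-j}{k(1+\lambda )}\) if \(i>j\). Hence

\[ X = I_k - YN = \frac{1}{k(1+\lambda )}\left( k\lambda I_k + {\varvec{1}}{\varvec{1}}^T\right) , \]

where \({\varvec{1}}\) is the all-ones vector of length k; i.e., X has diagonal entries \(\frac{1+k\lambda }{k(1+\lambda )}\) and off-diagonal entries \(\frac{1}{k(1+\lambda )}\).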

\(\square \)
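As a numerical sanity check of this derivation, the sketch below builds the difference matrix N, solves \(Y = E({\varvec{\xi }}{\varvec{\theta }}^T)E({\varvec{\theta }}{\varvec{\theta }}^T)^{-1}\) with NumPy, and compares the result with the closed forms \(X = (k\lambda I_k + {\varvec{1}}{\varvec{1}}^T)/(k(1+\lambda ))\) and \(Y_{ij} = (k-j)/(k(1+\lambda ))\) for \(i\le j\), \(Y_{ij} = -j/(k(1+\lambda ))\) for \(i>j\). It only uses the covariance structure \({{\,\mathrm{Var}\,}}(\xi _i)=\sigma ^2\), \({{\,\mathrm{Var}\,}}(\eta _i)=\lambda \sigma ^2\); the helper names are illustrative assumptions, not part of the paper.

```python
import numpy as np


def difference_matrix(k):
    """(k-1) x k matrix N with N[i, i] = 1 and N[i, i+1] = -1, so that g = N(q + eta)."""
    N = np.zeros((k - 1, k))
    for i in range(k - 1):
        N[i, i] = 1.0
        N[i, i + 1] = -1.0
    return N


def blue_matrices(k, lam, sigma2=1.0):
    """Solve Y = E(xi theta^T) E(theta theta^T)^{-1} numerically and set X = I - Y N."""
    N = difference_matrix(k)
    e_xi_theta = sigma2 * N.T                        # E(xi theta^T) = sigma^2 N^T
    e_theta_theta = (1 + lam) * sigma2 * (N @ N.T)   # E(theta theta^T) = (1+lam) sigma^2 N N^T
    Y = e_xi_theta @ np.linalg.inv(e_theta_theta)
    X = np.eye(k) - Y @ N
    return X, Y, N


def closed_form_blue(k, lam):
    """Closed-form X and Y (formulas use 1-based indices i, j)."""
    c = 1.0 / (k * (1 + lam))
    X = c * (k * lam * np.eye(k) + np.ones((k, k)))
    Y = np.empty((k, k - 1))
    for i in range(1, k + 1):
        for j in range(1, k):
            Y[i - 1, j - 1] = c * (k - j) if i <= j else -c * j
    return X, Y


def variance_ratio(k, lam):
    """Diagonal of Cov(beta - q) / sigma^2, using beta - q = X xi + Y N eta."""
    X, Y, N = blue_matrices(k, lam)
    YN = Y @ N
    cov = X @ X.T + lam * (YN @ YN.T)
    return np.diag(cov)


X, Y, _ = blue_matrices(4, 1.0)
Xc, Yc = closed_form_blue(4, 1.0)
print(np.allclose(X, Xc), np.allclose(Y, Yc))
print(variance_ratio(4, 1.0))  # each entry should equal (1 + k*lam) / (k + k*lam) = 0.625
```

Solving the normal equations numerically rather than hard-coding the inverse keeps the check independent of the closed form being verified.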

A.2 Proof of Corollary 1

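Recall that \(\alpha _i = q_i + \xi _i\) and \(g_i = q_i + \eta _i - q_{i+1} - \eta _{i+1}\), where \(\xi _i\) and \(\eta _i\) are independent Laplace random variables. Assume without loss of generality that \({{\,\mathrm{Var}\,}}(\xi _i)=\sigma ^2\) and \({{\,\mathrm{Var}\,}}(\eta _i) = \lambda \sigma ^2\) as before. From the matrices X and Y in Theorem 4 we have that \(\beta _i = \frac{x_i + y_i}{k(1+\lambda )}\), where

\[ x_i = (1+k\lambda )\alpha _i + \sum _{j\ne i}\alpha _j \]

and

\[ y_i = \sum _{j=i}^{k-1}(k-j)\,g_j - \sum _{j=1}^{i-1} j\,g_j. \]

Therefore, substituting \(\alpha _j = q_j + \xi _j\) and \({\varvec{g}} = N({\varvec{q}}+{\varvec{\eta }})\),

\[ x_i = (1+k\lambda )(q_i+\xi _i) + \sum _{j\ne i}(q_j+\xi _j), \qquad y_i = (k-1)(q_i+\eta _i) - \sum _{j\ne i}(q_j+\eta _j), \]

so \(x_i + y_i\) has mean \(k(1+\lambda )q_i\), and \(x_i\) and \(y_i\) are independent with \({{\,\mathrm{Var}\,}}(x_i) = \big ((1+k\lambda )^2 + k-1\big )\sigma ^2\) and \({{\,\mathrm{Var}\,}}(y_i) = \big ((k-1)^2 + k-1\big )\lambda \sigma ^2 = k(k-1)\lambda \sigma ^2\). Thus \({{\,\mathrm{Var}\,}}(\beta _i) = \frac{{{\,\mathrm{Var}\,}}(x_i) + {{\,\mathrm{Var}\,}}(y_i)}{k^2(1+\lambda )^2} =\frac{1 + k\lambda }{k+k\lambda }\sigma ^2\). Since \({{\,\mathrm{Var}\,}}(\alpha _i) = {{\,\mathrm{Var}\,}}(\xi _i) = \sigma ^2\), we have \(\frac{{{\,\mathrm{Var}\,}}(\beta _i)}{{{\,\mathrm{Var}\,}}(\alpha _i)} = \frac{1 + k\lambda }{k+k\lambda }.\)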

A.3 Proof of Lemma 3

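The density function of \(\eta _i - \eta \) is \(f_{\eta _i-\eta } (z) = \int _{-\infty }^\infty f_{\eta _i}(x) f_{\eta }(x-z)\,{\mathrm{d}}x =\frac{\epsilon _0\epsilon _*}{4} \int _{-\infty }^\infty e^{-\epsilon _*\left|x\right|} e^{-\epsilon _0\left|x-z\right|}\,{\mathrm{d}}x.\) First consider the case \(\epsilon _0\ne \epsilon _*\). When \(z\ge 0\), splitting the integral at 0 and z gives

\[ \begin{aligned} f_{\eta _i-\eta }(z) &= \frac{\epsilon _0\epsilon _*}{4}\left( \int _{-\infty }^0 e^{\epsilon _* x}e^{-\epsilon _0(z-x)}\,{\mathrm{d}}x + \int _0^z e^{-\epsilon _* x}e^{-\epsilon _0(z-x)}\,{\mathrm{d}}x + \int _z^\infty e^{-\epsilon _* x}e^{-\epsilon _0(x-z)}\,{\mathrm{d}}x\right) \\ &= \frac{\epsilon _0\epsilon _*}{4}\left( \frac{e^{-\epsilon _* z}+e^{-\epsilon _0 z}}{\epsilon _0+\epsilon _*} + \frac{e^{-\epsilon _* z}-e^{-\epsilon _0 z}}{\epsilon _0-\epsilon _*}\right) = \frac{\epsilon _0\epsilon _* (\epsilon _0 e^{-\epsilon _* z} - \epsilon _* e^{-\epsilon _0 z})}{2(\epsilon _0^2-\epsilon _*^2)}. \end{aligned} \]

Thus by symmetry we have that for all \(z\in \mathbb {R}\), \(f_{\eta _i-\eta } (z) = \frac{\epsilon _0\epsilon _* (\epsilon _0 e^{-\epsilon _*\left|z\right|} - \epsilon _* e^{-\epsilon _0\left|z\right|})}{2(\epsilon _0^2-\epsilon _*^2)}\), and, for \(t\ge 0\),

\[ \mathbb {P}(\eta _i - \eta \ge -t ) = 1 - \int _t^\infty f_{\eta _i-\eta }(z)\,{\mathrm{d}}z = 1 - \frac{\epsilon _0^2 e^{-\epsilon _* t} - \epsilon _*^2 e^{-\epsilon _0 t}}{2(\epsilon _0^2-\epsilon _*^2)}. \]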

Now if \(\epsilon _0 = \epsilon _*\), by similar computations we have \(f_{\eta _i-\eta } (z) = (\frac{\epsilon _0}{4} + \frac{\epsilon _0^2\left|z\right|}{4})e^{-\epsilon _0\left|z\right|}\) and \( \mathbb {P}(\eta _i - \eta \ge -t ) = 1 - (\frac{2+\epsilon _0t}{4})e^{-\epsilon _0t}. \)
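The tail probability can be checked against simulation. The sketch below is a hypothetical helper `prob_diff_ge`, assuming (as in the convolution above) that \(\eta _i\sim \mathrm{Lap}(1/\epsilon _*)\), \(\eta \sim \mathrm{Lap}(1/\epsilon _0)\) and \(t\ge 0\). For \(\epsilon _0\ne \epsilon _*\) it uses \(\mathbb {P}(\eta _i-\eta \ge -t) = 1 - \frac{\epsilon _0^2 e^{-\epsilon _* t}-\epsilon _*^2 e^{-\epsilon _0 t}}{2(\epsilon _0^2-\epsilon _*^2)}\), obtained by integrating the density over \([t,\infty )\) and using symmetry; for \(\epsilon _0=\epsilon _*\) it uses the formula stated above.

```python
import math

import numpy as np


def prob_diff_ge(t, eps0, eps_star):
    """P(eta_i - eta >= -t) for t >= 0, with eta_i ~ Lap(1/eps_star) and eta ~ Lap(1/eps0)."""
    if t < 0:
        raise ValueError("formula derived for t >= 0")
    if math.isclose(eps0, eps_star):
        return 1 - (2 + eps0 * t) / 4 * math.exp(-eps0 * t)
    tail = (eps0**2 * math.exp(-eps_star * t) - eps_star**2 * math.exp(-eps0 * t)) / (
        2 * (eps0**2 - eps_star**2)
    )
    return 1 - tail  # by symmetry, P(Z >= -t) = 1 - P(Z > t)


rng = np.random.default_rng(1)
eps0, eps_star, t, n = 1.0, 2.0, 1.0, 400_000
diff = rng.laplace(0.0, 1 / eps_star, n) - rng.laplace(0.0, 1 / eps0, n)
print(prob_diff_ge(t, eps0, eps_star), np.mean(diff >= -t))
```

As \(\epsilon _*\to \epsilon _0\) the general formula approaches the equal-parameter formula, which is a useful consistency check on both.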

A.4 Proofs in Sect. 8 (Exp. Mech. with Gap)

A well-known, but inefficient, folklore algorithm for the exponential mechanism is based on the Gumbel-Max trick [25, 35]: Given numbers \(\mu _1,\dots , \mu _n\), add independent Gumbel(0) noise to each and select the index of the largest noisy value. This is the same as sampling the ith item with probability proportional to \(e^{\mu _i}\). Let \({{\,\mathrm{Cat}\,}}(\mu _1,\dots , \mu _n)\) denote the categorical distribution that returns item \(\omega _i\) with probability \(\tfrac{\exp (\mu _i)}{\sum _{j=1}^n\exp (\mu _j)}\). The Gumbel-Max theorem provides distributions for the identity of the noisy maximum and the value of the noisy maximum:

Theorem 9

(The Gumbel-Max Trick [25, 35]) Let \(G_1\), \(\dots \), \(G_n\) be i.i.d. \({{\,\mathrm{Gumbel}\,}}(0)\) random variables and let \(\mu _1\), \(\dots \), \(\mu _n\) be real numbers. Define \(X_i = G_i+\mu _i\). Then

1. The distribution of \(\arg \max _{i} (X_1,\dots , X_n)\) is the same as \({{\,\mathrm{Cat}\,}}(\mu _1, \dots , \mu _n)\).

2. The distribution of \(\max _{i} (X_1,\dots , X_n)\) is the same as the \({{\,\mathrm{Gumbel}\,}}(\ln \sum _{i=1}^n \exp (\mu _i))\) distribution.

Using the Gumbel-Max trick, one can obtain an Exponential Mechanism with Gap by replacing the Laplace or exponential noise in Noisy Max with Gap by Gumbel noise, as shown in Algorithm 7. (Boxed items represent gap information.) We first prove the correctness of this algorithm and then show how to replace the Gumbel-Max trick with any efficient black-box algorithm for the exponential mechanism.
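Algorithm 7 itself is not reproduced here, so the following is only a minimal sketch of the idea under stated assumptions: it scales each utility to \(\mu _i = \epsilon \mu (D,\omega _i)/(2\varDelta _\mu )\) (the scaling used in Theorem 10), adds unit-scale Gumbel noise, returns the index of the largest noisy value, and reports the gap to the runner-up in those same scaled units. The function name `exp_mech_with_gap` is hypothetical, and whether Algorithm 7 rescales the reported gap is not assumed to match.

```python
import numpy as np


def exp_mech_with_gap(utilities, epsilon, sensitivity, rng):
    """Hypothetical sketch of an exponential mechanism with gap via the Gumbel-Max trick.

    Returns (s, gap): s is the selected index and gap = X_s - max_{i != s} X_i >= 0,
    measured in the scaled units mu_i = epsilon * u_i / (2 * sensitivity).
    """
    mu = epsilon * np.asarray(utilities, dtype=float) / (2 * sensitivity)
    if mu.size < 2:
        raise ValueError("need at least two candidates to define a gap")
    x = mu + rng.gumbel(loc=0.0, scale=1.0, size=mu.size)  # X_i ~ Gumbel(mu_i)
    runner_up, s = np.argsort(x)[-2:]                      # two largest noisy values
    return int(s), float(x[s] - x[runner_up])


rng = np.random.default_rng(3)
print(exp_mech_with_gap([3.0, 10.0, 4.0], epsilon=1.0, sensitivity=1.0, rng=rng))
```

Sorting all n noisy values is simple but costs \(O(n\log n)\); a linear scan for the two largest values would do the same job.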

We first need the following results.

Lemma 9

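Let \(\epsilon >0\). Let \(\mu :\mathcal {D}\times \mathcal {R}\rightarrow \mathbb {R}\) be a utility function of sensitivity \(\varDelta _{\mu }\). Define \(\nu :\mathcal {D}\rightarrow \mathbb {R}\) and its sensitivity \(\varDelta _{\nu }\) as

\[ \nu (D) = \ln \sum _{\omega \in \mathcal {R}} \exp \Big (\frac{\epsilon \,\mu (D,\omega )}{2\varDelta _\mu }\Big ), \qquad \varDelta _\nu = \max _{D, D' \text { neighbors}}\left|\nu (D)-\nu (D')\right|. \]

Then \(\varDelta _{\nu }\), the sensitivity of \(\nu \), is at most \( \frac{\epsilon }{2}\).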

Proof of Lemma 9

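From the definition of \(\nu \) we have

\[ \nu (D) - \nu (D') = \ln \frac{\sum _{\omega \in \mathcal {R}}\exp \big (\frac{\epsilon \mu (D,\omega )}{2\varDelta _\mu }\big )}{\sum _{\omega \in \mathcal {R}}\exp \big (\frac{\epsilon \mu (D',\omega )}{2\varDelta _\mu }\big )}. \]

By definition of sensitivity, \(\left|\mu (D,\omega )-\mu (D',\omega )\right|\le \varDelta _\mu \) for every \(\omega \), so

\[ e^{-\epsilon /2}\exp \Big (\frac{\epsilon \mu (D',\omega )}{2\varDelta _\mu }\Big ) \le \exp \Big (\frac{\epsilon \mu (D,\omega )}{2\varDelta _\mu }\Big ) \le e^{\epsilon /2}\exp \Big (\frac{\epsilon \mu (D',\omega )}{2\varDelta _\mu }\Big ), \]

and summing over \(\omega \in \mathcal {R}\) bounds the ratio inside the logarithm between \(e^{-\epsilon /2}\) and \(e^{\epsilon /2}\). Thus \(\left|\nu (D) - \nu (D')\right| \le \frac{\epsilon }{2}\), and hence \(\varDelta _{\nu } \le \frac{\epsilon }{2}\). \(\square \)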

Lemma 10

Let \(f(x;\theta )=\frac{e^{-(x-\theta )}}{(1+e^{-(x-\theta )})^2}\) be the density of the logistic distribution, then \(\left|\ln \frac{ f(x;\theta )}{f(x;\theta ')}\right| \le \left|\theta - \theta '\right|.\)

Proof of Lemma 10

Note that \(\left|\ln \frac{ f(x;\theta )}{f(x;\theta ')}\right|=\left|\ln \frac{ f(x;\theta ^\prime )}{f(x;\theta )}\right|\), so without loss of generality we can assume that \(\theta \ge \theta ^\prime \) (i.e., the location parameter in the numerator is \(\ge \) the parameter in the denominator). From the formula of f we have \( \tfrac{f(x;\theta )}{f(x;\theta ')} =e^{\theta -\theta '} \cdot \left( \tfrac{1+e^{-x}e^{\theta '}}{1+e^{-x}e^{\theta }}\right) ^2 \). Clearly \(e^{\theta }\ge e^{\theta '}\implies \tfrac{1+e^{-x}e^{\theta '}}{1+e^{-x}e^{\theta }} \le 1\). Also, since \(e^{-\theta '}\ge e^{-\theta }\),

\[ \frac{1+e^{-x}e^{\theta '}}{1+e^{-x}e^{\theta }} = \frac{e^{\theta '}\left( e^{-\theta '}+e^{-x}\right) }{e^{\theta }\left( e^{-\theta }+e^{-x}\right) } \ge \frac{e^{\theta '}}{e^{\theta }} = e^{\theta '-\theta }. \]

Therefore, \( e^{\theta '-\theta }=e^{\theta -\theta '} \cdot (e^{\theta '-\theta })^2\le \tfrac{f(x;\theta )}{f(x;\theta ')} \le e^{\theta -\theta '}.\) Thus \( \left|\ln \tfrac{f(x;\theta )}{f(x;\theta ')}\right| \le \left|\theta - \theta '\right|. \) \(\square \)

Theorem 10

Algorithm 7 satisfies \(\epsilon \)-differential privacy. Its output distribution is equivalent to selecting \(\omega _s\) with probability proportional to \(\exp \big (\frac{\epsilon \mu (D,\omega _s)}{2\varDelta _\mu }\big )\) and then independently sampling the gap from the logistic distribution (conditional on only sampling nonnegative values) with location parameter \(\theta = \frac{\epsilon \mu (D,\omega _s)}{2\varDelta _\mu } - \ln \sum \limits _{j\ne s}\exp (\frac{\epsilon \mu (D,\omega _j)}{2\varDelta _\mu })\).

Proof of Theorem 10

For \(\omega _i\in \mathcal {R}\), let \(\mu _i=\tfrac{\epsilon \mu (D,\omega _i)}{2\varDelta _{\mu }}\) and \(\mu '_i=\tfrac{\epsilon \mu (D',\omega _i)}{2\varDelta _{\mu }}\). Let \(X_i\sim {{\,\mathrm{Gumbel}\,}}(\mu _i)\) and \(X'_i\sim {{\,\mathrm{Gumbel}\,}}(\mu '_i)\).

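Fix s and let \(\mu ^* = \ln (\sum _{i\ne s}e^{\mu _i})\) and \(\theta = \mu _s - \mu ^*\). We consider the probability of outputting the selected \(\omega _s\) with gap at least \(\gamma \ge 0\) when D is the input database. This event occurs exactly when \(X_s - \max _{i\ne s} X_i \ge \gamma \). By part 2 of Theorem 9, \(\max _{i\ne s} X_i \sim {{\,\mathrm{Gumbel}\,}}(\mu ^*)\), and it is independent of \(X_s\sim {{\,\mathrm{Gumbel}\,}}(\mu _s)\). Since the difference of two independent unit-scale Gumbel random variables with locations \(\mu _s\) and \(\mu ^*\) is logistic with location \(\mu _s-\mu ^* = \theta \), we have

\[ \mathbb {P}(\omega _s \text { selected, gap}\ge \gamma ~|~D) = \mathbb {P}\Big (X_s - \max _{i\ne s} X_i \ge \gamma \Big ) = \frac{1}{1+e^{\gamma -\theta }}, \qquad \gamma \ge 0. \tag{10} \]

Taking the negative derivative with respect to \(\gamma \), we get the density \(f(\omega _s, \gamma ~|~D)\) of \(\omega _s\) being chosen with gap equal to \(\gamma \):

\[ f(\omega _s,\gamma ~|~D) = \frac{e^{-(\gamma -\theta )}}{(e^{-(\gamma - \theta )}+1)^2}\mathbf {1}_{[\gamma \ge 0]} = \frac{e^{\mu _s}}{e^{\mu _s} + e^{\mu ^*}}\cdot \left( \frac{e^{-(\gamma -\theta )}}{(e^{-(\gamma - \theta )}+1)^2}\mathbf {1}_{[\gamma \ge 0]}\right) \Big /\frac{1}{1 + e^{-\theta }}, \tag{11} \]

where the second equality uses \(\frac{e^{\mu _s}}{e^{\mu _s}+e^{\mu ^*}} = \frac{1}{1+e^{-\theta }}\).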

Now, in Eq. 11, the term \(\frac{e^{\mu _s}}{e^{\mu _s} + e^{\mu ^*}}=\frac{e^{\mu _s}}{e^{\mu _s} + \sum _{i\ne s}e^{\mu _i}}=\frac{e^{\mu _s}}{\sum _i e^{\mu _i}}\) is the probability of selecting \(\omega _s\).

The term \(\frac{e^{-(\gamma -\theta )}}{(e^{-(\gamma - \theta )}+1)^2}\mathbf {1}_{[\gamma \ge 0]}\) is the density of the event that a logistic random variable with location \(\theta \) has value \(\gamma \) and is nonnegative.

Finally, the term \(\frac{1}{1 + e^{-\theta }}\) is the probability that a logistic random variable with location \(\theta \) is nonnegative.

Thus \(\left( \frac{e^{-(\gamma -\theta )}}{(e^{-(\gamma - \theta )}+1)^2}\mathbf {1}_{[\gamma \ge 0]}\right) \Big /\frac{1}{1 + e^{-\theta }}\) is the density of a logistic random variable at \(\gamma \), conditioned on it being nonnegative.

Therefore Eq. 11 is the density of selecting \(\omega _s\) and independently sampling a nonnegative value \(\gamma \) from the logistic distribution with location parameter \(\theta = \mu _s-\mu ^*\), conditioned on it only returning nonnegative values.

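Now, recall that \(\mu _i=\frac{\epsilon \mu (D,\omega _i)}{2\varDelta _\mu }\) and \(\mu '_i=\frac{\epsilon \mu (D',\omega _i)}{2\varDelta _\mu }\), and write \(\mu ^{*\prime } = \ln (\sum _{i\ne s}e^{\mu '_i})\) and \(\theta ' = \mu '_s - \mu ^{*\prime }\). We apply Lemmas 10 and 9 with the help of Eq. 10 to finish the proof: for every \(\gamma \ge 0\),

\[ \left|\ln \frac{f(\omega _s,\gamma ~|~D)}{f(\omega _s,\gamma ~|~D')}\right| \le \left|\theta -\theta '\right| \le \left|\mu _s-\mu '_s\right| + \left|\mu ^*-\mu ^{*\prime }\right| \le \frac{\epsilon }{2}+\frac{\epsilon }{2} = \epsilon , \]

where the first inequality is Lemma 10 (both densities are logistic densities with locations \(\theta \) and \(\theta '\) on \(\gamma \ge 0\)), \(\left|\mu _s-\mu '_s\right|\le \frac{\epsilon }{2}\) follows from the sensitivity of \(\mu \), and \(\left|\mu ^*-\mu ^{*\prime }\right|\le \frac{\epsilon }{2}\) is Lemma 9 applied to the candidate set \(\mathcal {R}\setminus \{\omega _s\}\).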

\(\square \)

Proof of Theorem 6

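The first part follows directly from Theorem 10. Also, from the proof of Theorem 10, the gap \(g_s\) has density \(f(x;\theta ) = \left( \frac{e^{-(x-\theta )}}{(e^{-(x - \theta )}+1)^2}\mathbf {1}_{[x\ge 0]}\right) \Big /\frac{1}{1 + e^{-\theta }}\). Since, for \(x\ge 0\),

\[ \mathbb {P}(g_s\ge x) = \int _x^\infty f(u;\theta )\,{\mathrm{d}}u = \frac{1+e^{-\theta }}{1+e^{x-\theta }}, \]

we have

\[ \mathbb {E}(g_s) = \int _0^\infty \mathbb {P}(g_s\ge x)\,{\mathrm{d}}x = (1+e^{-\theta })\int _0^\infty \frac{e^{-(x-\theta )}}{1+e^{-(x-\theta )}}\,{\mathrm{d}}x = (1+e^{-\theta })\Big [-\ln \big (1+e^{-(x-\theta )}\big )\Big ]_0^\infty . \]

Hence \(\mathbb {E}(g_s) = (1+e^{-\theta })\ln (1+e^\theta )\). \(\square \)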

Proof of Theorem 7

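Assume \(H_0\) is true, i.e., there exists a \(t\ne s\) such that \(\mu (D,\omega _s) < \mu (D,\omega _{t})\). Then, with \(\mu _i\) the scaled utilities from the proof of Theorem 10,

\[ \theta = \mu _s - \ln \sum _{j\ne s} e^{\mu _j} \le \mu _s - \mu _t < 0. \]

Using the density of the gap from above, we have, for \(\gamma \ge 0\),

\[ \mathbb {P}(g_s\ge \gamma ) = \frac{1+e^{-\theta }}{1+e^{\gamma -\theta }} = \frac{e^\theta +1}{e^\theta +e^\gamma } \le \frac{e^0+1}{e^0+e^\gamma } = \frac{2}{1+e^\gamma }, \]

because \(\frac{e^\theta +1}{e^\theta +e^\gamma }\) is an increasing function of \(\theta \) (for \(\gamma \ge 0\)) and \(\theta < 0\). \(\square \)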


Cite this article

Ding, Z., Wang, Y., Xiao, Y. et al. Free gap estimates from the exponential mechanism, sparse vector, noisy max and related algorithms. The VLDB Journal 32, 23–48 (2023). https://doi.org/10.1007/s00778-022-00728-2
