Abstract

The growing complexity of software systems and the influence of software-supported decisions in our society sparked the need for software that is transparent, accountable, and trustworthy. Explainability has been identified as a means to achieve these qualities. It is recognized as an emerging non-functional requirement (NFR) that has a significant impact on system quality. Accordingly, software engineers need means to assist them in incorporating this NFR into systems. This requires an early analysis of the benefits and possible design issues that arise from interrelationships between different quality aspects. However, explainability is currently under-researched in the domain of requirements engineering, and there is a lack of artifacts that support the requirements engineering process and system design. In this work, we remedy this deficit by proposing four artifacts: a definition of explainability, a conceptual model, a knowledge catalogue, and a reference model for explainable systems. These artifacts should support software and requirements engineers in understanding the definition of explainability and how it interacts with other quality aspects. Besides that, they may be considered a starting point to provide practical value in the refinement of explainability from high-level requirements to concrete design choices, as well as on the identification of methods and metrics for the evaluation of the implemented requirements.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We are living in the algorithmic age [1]. Software decision-making has spread from simple daily decisions, such as the choice of a navigation route, to more critical ones, such as the diagnosis of cancer patients [2]. Systems have been strongly influencing various aspects of our lives with their outputs, but they can be as mysterious as black boxes to us [3].

The ubiquitous influence of such “black-box systems” has induced discussions about the transparency and ethics of modern systems [4]. Responsible collection and use of data, privacy, safety, and security are just a few among many concerns. In light of this, it is becoming increasingly crucial to understand how to incorporate these concerns into systems and, thus, how to deal with them during software engineering (SE) and requirements engineering (RE).

1.1 Explainability as the solution

In this regard, explainability is increasingly seen as the preferred solution to mitigate a system’s lack of transparency [5] and as a fruitful way to address ethical concerns about modern systems [6]. The concept of explainability has received a lot of attention recently, and it is slowly establishing itself as an important non-functional requirement (NFR)Footnote 1 for high system quality [8, 9].

Incorporating explainability in a system can mitigate software opacity, thereby helping users understand why the system produced a particular result and supporting them in making better decisions. Explainability also has an impact on the relationship of trust in and reliance on a system [10], it may avoid feelings of frustration [11], and can, thus, lead to greater acceptance by end users [12]. In general, previous studies have shown that explainability is not only a means of achieving transparency and calibrating trust, but that it is also linked to other important NFRs, such as usability, auditability, and privacy [5, 13,14,15].

However, although explainability has been identified as such an essential NFR for software-supported decisions [16] and even as one of the pillars for trustworthy artificial intelligence (AI) [17], there is still a lack of a comprehensive overview that investigates the impact of incorporating explainability in a system.Footnote 2 For example, it is often overlooked that these impacts are not only positive, but can also be negative.

To address this gap in knowledge, we investigate the concept of explainability and its interaction with other quality aspectsFootnote 3,Footnote 4 in this article. Accordingly, we want to provide clarity on what constitutes explainability as an NFR and how it can be integrated into the RE process. Just like other NFRs, however, explainability is difficult to elicit, negotiate, and validate.

1.2 Challenges in RE and how artifacts can help solve them

Due to the subjective, interactive, and relative nature of NFRs, eliciting and modeling them presents many challenges for software engineersFootnote 5 [20]. First, knowledge about NFRs is mostly tacit, disseminated, and based on experience [21, 22], which makes it difficult to grasp the existing knowledge. Here, explainability is no exception.

Furthermore, software quality is a multidimensional concept based on real-world needs that comprise different layers of abstraction [5, 23]. Therefore, another challenge for software engineers is to translate abstract notions, such as quality goals (e.g., a smart home system that improves user satisfaction and comfort) into agreed-upon tangible functionality that help achieve the quality goals (e.g., the smart home system dims the lights automatically when the user is tired). This translation process is followed by the need to evaluate how the derived functionality (also called “operationalizations”) influence software quality [20, 24]. This process from abstract to concrete often leads to trade-offs between different NFRs in a system that must be identified and resolved during requirements analysis [20, 25].

RE is not simply a process of identifying and describing requirements; it is also a process of supporting efficient communication of these requirements among various stakeholders [26]. For this reason, proper communication is another difficulty for RE. External stakeholders and internal team members may unintentionally use different words for the same concepts or the same words for different concepts due to a lack of shared understanding, which can make communication challenging and lead to problems because of misunderstandings [27]. Therefore, a shared understanding is crucial for efficient communication and for reducing the risk of stakeholder dissatisfaction and rework [19].

Software engineers can create, use, and reuse artifacts to achieve a shared understanding in software projects [19]. An artifact is “any kind of textual or graphical document with the exception of source code” [28]. Artifacts can take on a variety of shapes, including textual requirement papers, visual models, glossaries, charts, frameworks, or quality models. Artifacts that are often used to support the RE process are conceptual models [21], knowledge catalogues [20], and reference models [29]. Such artifacts may describe the taxonomy of a given kind of system or process, or compile knowledge about specific NFRs and their interactions with other quality aspects.

To illustrate the importance of both a shared understanding and artifacts in software projects, RE itself may be taken as an example: Communication problems may arise from different interpretations of what RE is and how the RE process is structured. Börger et al. [30] proposed a reference model (i.e., a type of artifact) for RE to achieve a shared understanding of it. To achieve a shared understanding about the meaning of RE, the proposed model describes the concept and divides the RE process into two main areas (requirements analysis and requirements management) and their related activities.Footnote 6

Chung et al. [21] explain the importance of models and knowledge catalogues as resources for the use and reuse of knowledge during system development. Models and catalogues can compile either abstract or concrete knowledge. At a more abstract level, such artifacts can compile knowledge about different NFRs and their interrelationships with other quality aspects (e.g., positive or negative influence of an NFR on another quality aspect). Likewise, models and catalogues can also compile more concrete knowledge, such as about methods and techniques in the field that can be used to operationalize a given NFR.

Existing works propose to build artifacts to capture and structure knowledge that is scattered among several sources [20, 21, 32, 33]. Software engineers can use such artifacts during different activities in RE such as during elicitation, interpretation and trade-off analysis, negotiations with stakeholders, as well as to support the documentation of requirements and decisions. In summary, by making knowledge available in artifacts such as definitions, models and catalogues, software engineers can (1) draw on know-how beyond their own fields and use this knowledge to meet the needs of a particular project, and (2) achieve a shared understanding that leads to better communication and to the definition of the “right” system’s requirements.

1.3 Goal and structure of this article

As for explainability, there is a scarcity of artifacts that compile structured knowledge about this quality aspect and assist software engineers in understanding the factors that should be considered during the development of explainable systems, helping to achieve a shared understanding of the topic.

Therefore, we propose four artifacts that should aid in achieving a shared understanding of explainability, supporting the creation of explainable systems: a definition, a conceptual model, a knowledge catalogue, and a reference model. Overall, our goal is to advance the knowledge towards a common terminology and semantics of explainability, facilitating the discussion and analysis of this important NFR during the RE process. To this end, we used an interdisciplinary systematic literature review (SLR) and workshops as part of a multi-method research strategy.

In particular, we distill definitions of explainability into an own suggestion that is appropriate for SE and RE. We use this definition as a starting point to create a conceptual model that represents the impacts of explainability across different quality dimensions. Subsequently, we compile a knowledge catalogue of explainability and its impacts on the various quality aspects along these dimensions. Finally, we conceive a reference model for explainability that describes key aspects to consider when developing explainable systems during requirements analysis, design, and evaluation. The goal of these artifacts is to support the identification, communication, and evaluation of key elements of explainable systems, their attributes, and relationships.

This article is an extension of a paper originally published in the 29th IEEE International Requirements Engineering Conference: [34]. In this extension, we (1) include more details about our research method, and (2) propose a reference model that may be used to identify relevant components for the RE process and the development of explainable systems.

This article is structured as follows: in the following Sect. 2, we present the background and related work. In Sect. 3, we lay the foundation for the more substantive chapters of this article by introducing our research questions (RQs) and outlining the chosen research design. This is followed by a section for each artifact we present. Accordingly, we suggest our definition of explainability in Sect. 4, introduce our conceptual model in Sect. 5, and present the explainability catalogue in Sect. 6. Building on the previous artifacts, we conceive the reference model in Sect. 7. We discuss our results in Sect. 8, and we debate threats to validity in Sect. 9. Finally, we conclude our article in Sect. 10.

2 Background and related work

Artifacts are commonly used in RE and SE to support software professionals during their tasks. For instance, software engineers typically use or reuse artifacts as guidance during SE (or RE) activities. They may also create artifacts to gather knowledge (e.g., catalogues), or they create artifacts as a form of documentation (e.g., requirements specification or story cards). This section provides background information on the types of artifacts that we propose as well as on explainability.

2.1 Definitions

Definitions are the first and most crucial step in facilitating communication for a given topic or concept. Definitions aid in defining the scope of a particular idea, for instance, by indicating its constituents. Definitions in SE and RE give a rough guidance for software engineers on the scope and elements of nearly everything. The definition of a quality aspect, for example, assists software engineers in understanding it during RE and especially quality assurance.

Definitions support a common terminology that facilitates communication. A lack of consensus may result in the specification and integration of the wrong requirements. Explicitly shared vocabulary decreases the likelihood of misunderstandings when ideas employing this terminology are not stated or are only loosely specified. Following this idea, Wixon [35] emphasizes the significance of defining usability and how crucial it is for development teams to reach consensus on this concept. For instance, usability may signify long-term efficiency to some developers while it may represent simplicity of use to others.

2.2 Models

A model is an abstraction of a system that deliberately focuses on some of its aspects while excluding others [36]. Models provide an overview of a field by partitioning it into broad categories. According to Hull et al. [36], a single model never says everything about a system. For this reason, different, possibly interrelated, models of systems are often used to cover a variety of different aspects. Models can be used for a number of different purposes. On the one hand, they can be utilized for more technical tasks like software design or configuration. On the other hand, models make it easier to describe and optimize organizational concerns including business processes and domains.

Conceptual models can be used to define and describe a concept, helping to understand the taxonomy or characteristics of a particular quality aspect during requirements analysis. Conceptual models document, for example, knowledge about a given domain, concept, or NFR. Taxonomies are well-known examples of conceptual models. The knowledge required to develop conceptual models is typically derived from literature, previous experiences, and domain expertise.

Quality models are another example of models. A quality model can be defined as “the set of characteristics, and the relationships between them that provides the basis for specifying quality requirements and evaluation” [37]. Quality models help to specify and illustrate how quality aspects translate to functional requirements.

A special category of models are the so-called reference models. A “reference model consists of a minimal set of unifying concepts, axioms and relationships within a particular problem domain, and is independent of specific standards, technologies, implementations, or other concrete details” [38]. Reference models may be used as a blueprint for software system construction and are sometimes called universal models, generic models, or model patterns [39]. A reference model can serve as a template for creating and deriving other models (e.g., quality models) or understanding the high-level structure of a process or domain. To this end, reference models can be used to help identify important factors for the analysis, operationalization, and evaluation of a given quality aspect.

To give an example, the Open Systems Interconnection model (OSI) [40] is a reference model that divides network protocols into seven abstraction layers. The layers help to separate concepts and network aspects into abstraction levels, helping to compartmentalize the development of network applications. The OSI model is widely used by network engineers to describe network architectures, even though it is informal and does not correspond perfectly to the protocol layers in widespread use.

In fact, this is precisely the reason of their wide adoption: reference models can be used as (1) abstract frameworks or templates for understanding significant relationships among the entities of some environment or domain (e.g., computer networks or, in our case, explainable systems) or (2) to standardize or describe processes [41]. This abstract nature gives them flexibility, making them easily adaptable.

Cherdantseva et al. [42] propose a reference model for the information assurance and security (IAS) domain. The model highlights the important aspects of IAS systems, and serves as a conceptual framework for researchers. The authors say that reference models foster a better understanding of IAS and, as a result, help software engineers to do their job more efficiently, serving as “a blueprint for the design of a secure information system” and providing “a basis for the elicitation of security requirements”.

To clearly distinguish the different types of models, we consider a conceptual model to be an artifact that describes a concept or captures the taxonomy of a certain concept or domain (our conceptual illustrates the impact of explainability on system quality); and we consider a reference model as a template or framework that may be used by software engineers to build other models or to guide them as they design explainable systems.

2.3 Catalogues

Catalogues document knowledge about a given topic (e.g., a specific domain or about quality aspects, in the case of software systems). They can, for example, document relationships between different quality aspects. Some researchers developed catalogues for specific domains based on the premise of the NFR framework [21]. As a result, they can help with trade-off analysis, where it is critical to understand how two or more NFRs will interact in a system and how they can coexist [43].

Serrano and Serrano [32] developed a catalogue specifically for the ubiquitous, pervasive, and mobile computing domain. Torres and Martins [44] propose the use of NFR catalogues in the construction of RFID middleware applications to alleviate the challenges of NFR elicitation in autonomous systems. They argue that the use of catalogues can reduce or even eliminate possible faults in the identification of functional and non-functional requirements. Finally, Carvalho et al. [45] propose a catalogue for invisibility requirements focused on the domain of ubiquitous computing applications. They emphasize the importance of software engineers understanding the relationships between requirements in order to select appropriate strategies to satisfy invisibility and traditional NFRs. Furthermore, they discovered that invisibility might impact other essential NFRs for the domain, such as usability, security, and reliability.

Mairiza et al. [20] conducted a literature review to identify conflicts among existing NFRs. They constructed a catalogue to synthesize the results and suggest that it can assist software developers in identifying, analyzing, and resolving conflicts between NFRs. Carvalho et al. [33] identified 102 NFR catalogues in the literature after conducting a systematic mapping study. They found that the most frequently cited NFRs were performance, security, usability, and reliability. Furthermore, they found that the catalogues are represented in different ways, such as softgoal interdependency graphs, matrices, and tables. The existence of so many catalogues illustrates their importance for RE and software design. Although these catalogues present knowledge about 86 different NFRs, none of them addresses explainability.

2.4 Explainability

Since explainability has rapidly expanded as a research field in the last years, publications about this topic have become quite numerous, and it is hard to keep track of the terms, methods, and results that came up [46]. For this reason, there have been numerous literature reviews presenting overviews concerning certain aspects (e.g., methods or definitions) of explainability research.

Many of these reviews focus on a specific community or application domain. For instance, [47] focuses on explainability of recommender systems, [48] on explainability of robots and human-robot interaction, [49] on the human-computer interaction (HCI) domain, and [50] on biomedical and malware classification. Another focus of these reviews is to demarcate different, but related terms often used in explainability research (see, e.g., [4, 46, 51]). For instance, the terms “explainablilty” and “interpretability” are sometimes used as synonyms and sometimes not [52, 53].

Our review differs from others in the following ways. To the best of our knowledge, our SLR is the first overview specifically targeting software and software engineers, to support them in dealing with explainability as a very new and complex NFR. For this reason, quality aspects are the pivotal focus of our work. As far as we are aware, only a few reviews explicitly include the interaction between explainability and quality aspects (most notably [47, 54]). In contrast to preceding reviews, however, we do not only consider positive impacts of explainability on other quality aspects, but we also take negative ones into account.

Furthermore, many other reviews do not have an interdisciplinary focus. Even if they do not focus on a specific community (e.g., HCI), reviews rarely incorporate views on explainability outside of computer science. From our point of view, however, it is crucial to include fields such as philosophy and psychology in an investigation of explainability, since these fields have much experience in explanation research. Psychology is concerned with aspects of human cognition, while philosophy is interested in the definition of concepts as well as the nature of knowledge and reality. These aspects are crucial to understanding the features, implications, and significance of explainability.

3 Research goal and design

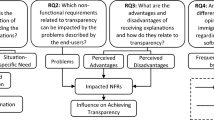

We frame our study into four RQs:

RQ1: What is an appropriate definition of explainability to achieve shared understanding in SE and RE? | |

RQ2: What are the quality aspects impacted by explainability in a system context? | |

RQ3: How does explainability impact these quality aspects? | |

RQ4: How to support software professionals in identifying important factors for the analysis, operationalization, and evaluation of requirements for explainable systems? |

Since other disciplines have a long history working on explainability, their insights should prove valuable for software engineering and enable us to refine the scope of the term explainability for this area. In particular, philosophy and psychology have a long history in making different conceptions of explanation explicit, for instance, in formalisms and operationalizations.

Accordingly, RQ1 focuses on harnessing the work of other sciences in the field of explainability to compile a definition that is appropriate and useful for the disciplines of software and requirements engineering. Useful in this context means that the definition facilitates the discussion around the topic, contributing to a shared understanding among stakeholders and engineers as well as a clear vision of what “explainable system” means in the RE and SE contexts.

RQ2 focuses on providing an overview of the quality aspects that may be impacted by explainability. Similar to the work of Leite and Capelli [55], who investigated the interaction between transparency and other qualities, our goal is to offer an overview for explainability and its impact on other quality aspects within a system.

With RQ3 we want to assess what impact explainability has on other quality aspects. More specifically, our goal is to analyze the polarity of these impacts: whether they are positive or negative. To answer RQ2 and RQ3, we built a conceptual model and a catalogue that compile knowledge about the impacts of explainability on other quality aspects.

The goal of RQ4 is to make our results more actionable. To this end, we provide a reference model for explainability that aims to build shared understanding around the factors to be considered in the development of explainable systems, assisting software engineers in identifying relevant factors for explainability in different phases of the software lifecycle: requirements analysis, design, and evaluation.

An overview of our research design is shown in Fig. 1. Our research consisted of a multi-method approach that combined two qualitative methods to achieve higher data reliability.

The first method focuses on systematic data collection and qualitative data analysis. For the data collection, we conducted an interdisciplinary SLR that resulted in a total of 229 papers. We coded the gathered data by using an open coding approach [56]. As a next step, we analyzed the resulting codes for definitions of explainability (RQ1), for relationships between explainability and other quality aspects (RQ2), and for information about the polarity of these relationships (RQ3).

To validate and complement our findings, we employed a second qualitative method: two workshops with experts. We framed the obtained knowledge in a conceptual model by structuring and grouping the quality aspects impacted by explainability along four dimensions and developed a catalogue based on it.

Finally, we used the responses to RQ1-RQ3 as a starting point and reviewed the data from our SLR to identify other principles and constituent parts of explainability to aid in the analysis of requirements, as well as in the design and evaluation of explainable systems. We combine this knowledge with works in the literature to build a reference model for explainability (RQ4), factoring in all our previous results.

3.1 Data collection and analysis

In what follows, we will describe our research design in more detail. To this end, we will start with a more detailed description of our SLR.

3.1.1 Systematic literature review

We followed guidelines from Kitchenham et al. [57], and Wohlin [58] when conducting our SLR. The search strategy for our SLR consisted of a manual search followed by a snowballing process.

3.1.1.1 Manual Search

During a manual search, an investigator usually scans all the publications in specific sources such as proceedings or journals. First, we identified relevant sources from different domains such as computer science, philosophy, and psychology by consulting experts and relying on our own expertise. As a next step, the selected sources were independently reviewed for suitability by researchers of the specific domains.

Since we conducted an interdisciplinary SLR, sources from other disciplines were also considered during the manual search. In addition to computer science, the disciplines of philosophy and psychology were chosen because they have decades of experience in explanation research. Furthermore, during the snowballing process, other research areas were also taken into account. We believe that the choice of sources for our manual search is sufficiently representative to uncover research in the area of explainability.

The manual search was performed independently by the authors of this article and resulted in 104 papers. We used Fleiss’ Kappa statistics [59] to assess the reliability of the selection process. The calculated value of \(\kappa = 0.81\) showed an almost perfect agreement [60].

3.1.1.2 Snowballing

After the manual search, we performed snowballing to complement the search results. The snowballing process includes backward and forward snowballing as described by Wohlin [58]. Our literature review process is partially based on a grounded theory (GT) approach for literature reviews proposed by Wolfswinkel et al. [61]. The goal of using this approach to reviewing the literature is to reach a detailed and relevant analysis of a topic, following some of the principles of GT.

According to [61], a literature review is never complete but at most saturated. This saturation is achieved when no new concepts or categories arise from the data (i.e., the publications that were inspected). We followed this approach to decide when to conclude our snowballing process. Therefore, we only performed one iteration, as we could not gain any new insights or concepts during a second iteration.

The snowballing was independently conducted by the authors, resulting in additional 125 papers. The calculated value of \(\kappa = 0.87\) also shows an almost perfect agreement. Overall, our SLR yielded a total of 229 papers. A comprehensive overall summary of the number of papers inspected and selected in the different phases of the SLR is shown in Fig. 2.

3.1.1.3 Inclusion & Exclusion Criteria

We included publications that met the following inclusion criteria (IC):

- \(IC_1\):

-

Provide information that is relevant to answering (partially or completely) one of our research questions

- \(IC_2\):

-

Were published between 01/1984 and 03/2020

- \(IC_3\):

-

Are peer-reviewed journal, conference, and workshop publications

and we excluded publications that met the following exclusion criteria (EC):

- \(EC_1\):

-

Are non English-language publications

- \(EC_2\):

-

Are publications exploring or proposing rough algorithmic techniques without further discussion about the theoretical background of explainability

We chose 1984 as the starting date because that was the year in which the first major work on explainability was published (namely, [62]). Furthermore, we started the SLR on 03/2020.Footnote 7

We are aware of the fact that 36 years is a long period of time. However, by choosing this time period, we wanted to get as broad an overview of the topic as possible. When it comes to explainability, it is useful to recognize that this topic was already important in the eighties and is not as new a research field as often believed [63].

To include a publication, all inclusion criteria must be met. If at least one of the exclusion criteria was met, the publication was rejected. Our selection process consisted of a two-phase selection procedure. In phase one, we have selected candidate papers based on title, abstract, and keywords. In cases where the aforementioned elements did not provide sufficient information, we have also analyzed the conclusion section. EC\(_2\) did not apply in this phase. In phase two, we have selected papers based on full text and also applied EC\(_2\).

3.1.2 Coding and analysis

We followed an open-coding approach [56] for the qualitative analysis of the papers we found during our search. This approach consists of up to three consecutive coding cycles. For our first coding cycle, we applied Initial Coding [64] to preserve the views and perspectives of the authors in the code. In the second coding cycle, we clustered the initial codes based on similarities, using Pattern Coding [65]. This allowed us to group the data from the first coding cycle into categories. Next, we discussed these categories until we reached an agreement on whether they adequately reflected the meaning behind the codes. These categories allowed us to structure the data for better analysis and to identify similarities.

For RQ2 and RQ3, we conducted a third coding cycle to further classify the categories into quality aspects. We applied Protocol Coding [66] as a procedural coding method in this cycle. For this method, we used a pre-established list of NFRs from Chung et al. [21]. If any correspondence between a category and an NFR was found, we assigned the corresponding code. In the specific cases where we could not assign a corresponding NFR from [21] to the data, we discussed together and selected a quality aspect that would adequately describe the idea presented in the text fragment.

All coding and review processes were conducted independently by the authors of this article. In terms of review processes, this means that each of the authors independently read and analyzed the literature. The coding processes were also executed independently by each author. After each review and coding session, we discussed our results before proceeding to the next phase. We had regular consensus sessions to discuss discrepancies. A list of all codes is available in our supplementary material [67].

Finally, for RQ4, we conducted an additional round of data extraction to complement our previous insights. During this round, the focus was on the existing types of explanations, possible implementation strategies for explainability (including presentation forms), and methods used to measure the quality of explanations. The coding process followed the same procedure as mentioned above. However, this time, the protocol coding was supported by the taxonomy proposed by Speith [46] for the implementation strategies and the reviews by Vilone and Longo [68] as well as Zhou [69] for measuring.

3.2 Data validation

We held two workshops to validate and augment the knowledge gathered during data collection: one exclusively with philosophers and psychologists, and one exclusively with software engineers. The structure of the workshop is depicted in Table 1.

Each of the workshops lasted for four hours. To prepare for the discussions, we gave all participants of both workshops preparatory exercises to work on individually about a week before the workshop began.

In both workshops, we discussed the categories and other relevant information that were identified during our coding. For RQ1, the categories consisted of competing definitions of explainability that we extracted from the literature. For RQ2, the categories consisted in the identified quality aspects that have a relationship with explainability. Finally, for RQ3, we identified the kind of impact that explainability can have on each of the extracted quality aspects.

3.2.1 Workshop with philosophers and psychologists

We validated the data related to RQ1 in a workshop with philosophers and psychologists (two professors, one postdoc, three doctoral candidates). All scholars excepts for one doctoral candidate do research in the field of explanation, one professor and the postdoc even as a focus. Scholars in these disciplines have a long history in researching explanations and, thus, explainability. After consulting with experts from these disciplines on the workshop design, we decided on an open discussion.

The preparatory exercise of the philosophers and psychologists was to write down a definition of explainability, taking into account their own background knowledge. The idea was to collect these definitions before the discussion to allow for comparison and to avoid bias from our preliminary results and the debate.

The workshop consisted of three activities. In the first activity, we presented the categories with respect to RQ1 found in the literature for discussion. We debated whether these categories accurately reflect the participants’ perceptions on the meaning of explainability. In the second activity, the idea was to compare the definitions found in the literature with participants’ own definition of explainability, submitted before the workshop. We compared the definitions, and also identified and discussed the differences in order to reach a consensus. During the last activity, we discussed interdependencies between explainability and other software quality aspects.

3.2.2 Workshop with software engineers

We validated the data related to RQ2 and RQ3 in a workshop with software engineers (three professors, two postdocs, one practitioner, one doctoral candidate). All three professors do research in the field of requirements engineering and two of them also in direct relation to explainability, as do the two postdoctoral researchers. The practitioner is a product owner in an international company, and the research field of the doctoral candidate is the interplay between requirements engineering and agile development. Two experts in the field of RE with experience in the topic of NFRs and software quality were consulted about the workshop design (depicted in Table 1).

For the preparatory exercise, we asked participants to list quality aspects that can be impacted by explainability. To support them in the task, we developed four hypothetical scenarios in which explainable systems should be designed and sent them a list of quality aspects resulting from our coding process (without the identified polarities, to avoid bias). We also welcomed participants’ suggestions about further quality aspects that could be connected to explainability but were not present in our list.

The scenarios consisted of short stories describing a domain and a business problem related to the need for explainability. The goal was to help participants better understand contexts where explainable systems may be needed. Based on the scenarios, we asked participants to specify desirable quality aspects for each system based on their expertise, and to analyze how explainability would interact with each of these identified aspects (positively or negatively). The four hypothetical scenarios are described in our additional material [67].

This workshop also included three activities, each lasting for approximately an hour. In the first activity, we presented the list of quality aspect without polarities to the participants and asked them to set the polarities. We established a rigorous structure for this activity, where each participant would first define the polarity, justify the decision and at the end of the round all participants could discuss each others’ choices. The idea was to provoke debate and reach consensus.

In the second activity, we compared the polarities given by the participants with the findings from our coding process. Again, we compared the results and had an open discussion to discuss differences and reach consensus. Experts agreed on all polarities that they had not mentioned before but had been discovered in the literature. In the third activity, we clustered the quality aspects collaboratively based on their relationship and discussed their impacts on the system.

3.3 Knowledge structuring

The last step of our research consisted of making sense of and structuring the knowledge collected in the previous stages.

3.3.1 Operationalizing explainability: definition

We operationalized the concept of explainability in a system context by distilling a definition of it. In the workshop with psychologists and philosophers, we integrated proposed definitions with ones that we found in the literature. The resulting definition contains several variables so as to be as flexible as possible to be adjusted to specific project contexts while at the same time providing a shared understanding of explainability among stakeholders.

3.3.2 Framing the results: conceptual model

We built a conceptual model to frame our knowledge catalogue. This model illustrates the impact of explainability on several quality dimensions (see Fig. 3; RQ2). During the workshop with software engineers, we discussed possible ways to classify the different quality aspects. Here, the participants offered useful ideas. To further supplement these ideas, we consulted the literature and found three promising ways to classify the results (more details in Sect. 5). These three ways are analogous to the suggestions made by the workshop participants and supported us in the development of our conceptual model.

3.3.3 Summarizing the results: knowledge catalogue

We summarized the results for RQ3 in a knowledge catalogue for explainability. Overall, we have extracted 57 quality aspects that might be influenced by explainability. We present these quality aspects and how they are influenced by explainability in Fig. 4. Additionally, we extracted a representative example from the literature for all positive and negative influences listed in our catalogue to show how this influence may come about. These examples also serve to illustrate our understanding of certain quality aspects.

3.3.4 Making the results actionable: reference model

We have compiled and summarized the extracted information for RQ4 to conceive a reference model for explainability. The reference model encompasses three phases: requirements analysis, design, and evaluation. Each of these phases in the model include relevant aspects that should be considered in each phase in the software lifecycle.

We used our previous findings (RQ1–RQ3) and an additional round of data extraction to shape the phases in the reference model. In particular, the categories in the “requirements analysis” phase are based on variables of the definition we set out, and on the work of Chazette and Schneider [5]. The categories on the “design” phase that make up the implementation strategy are based on the work by Speith [46], and the categories on the “evaluation” phase are based on the findings of our SLR. Furthermore, we illustrate how our template can be applied by means of a running example.

We present the results for RQ1 in Sect. 4, the results for RQ2 and RQ3 in Sects. 5, 6, and the results for RQ4 in Sect. 7.

4 A definition of explainability

The domain of software engineering does not need a mere abstract definition of explainability, but one that focuses on requirements for explainable systems. Before software engineers can elicit the need for explainability in a system, they have to understand what explainability is in a system context. For this reason, we provide a definition of what makes a system explainable to answer our first RQ.

Explainability is tied to disclosing information, which can be done by giving explanations. In this line of thought, Köhl et al. hold that what makes a system explainable is the access to explanations [9]. However, this leaves open what exactly is to be explained. In the literature, definitions of explainability vary considerably in this regard. Moreover, our review has revealed other aspects in which definitions of explainability differ. Consequently, there is not one definition of explainability, but several complementary ones.

Similarly, Köhl et al. also found that there is not just one type of explainability, but that a system may be explainable in one respect but not in another [9]. Based on their definition of explainability, the definitions we found in the literature, and results from our workshop with philosophers and psychologists, we were able to develop an abstract definition of explainability that can be adjusted according to project or field of application.

Answering RQ1 A system S is explainable with respect to an aspect X of S relative to an addressee A in context C if and only if there is an entity E (the explainer) who, by giving a corpus of information I (the explanation of X), enables A to understand X of S in C. |

The definition above summarizes the important variables of an explainable system that are relevant for requirements and software engineers. These variables provide guidance on the elements that are important in an explainable system and, therefore, need to be considered during elicitation and design. In particular, an exemplary application of how our definition can support requirements engineering in practice can be found in Sect. 7.

There were differences in the literature concerning the values of the following variables presented in the above definition: aspects of a system that should be explained, contexts in which to explain, the entity that does the explaining (the explainer), and addressees that receive the explanation. Being aware of these differences is crucial for requirements engineers to elicit the right kind of explainability for a project as well as specifying the fitting requirements on explanations.

4.1 Aspects that should be explained

Concerning the aspects that should be explained, we found the following options in the literature and validated them during the workshop with philosophers and psychologists: the system in general (e.g., global aspects of a system) [70], and, more specifically, its reasoning processes (e.g., inference processes for certain problems) [71], its inner logic (e.g., relationships between the inputs and outputs) [9], its model’s internals (e.g., parameters and data structures) [72], its intention (e.g., pursued outcome of actions) [73], its behavior (e.g., real-world actions) [74], its decision (e.g., underlying criteria) [4], its performance (e.g., predictive accuracy) [75], and its knowledge about the user or the world (e.g., user preferences) [74].

4.2 Contexts and explainers

A context is set by a situation consisting of the interaction between a person, a system, a task, and an environment [76]. Plausible influences on the context are time-pressure, the stakes involved, and the type of system [54].

Explainers refer to a system or specific parts of a system that supply its stakeholders with the needed information. Semantically speaking, our definition allows that these specific parts of the system do not necessarily have to be technical components (such as algorithms or even hardware elements) of the system itself. In this sense, an explainer could also be an intermediate instance, a kind of external mediator. This mediator acts as an interface between the system and the addressee, explaining something and helping the addressee to understand the aspect of the system [54]. Although the person applying the definition should be the one to decide where the boundaries of an explainable system should be set, in the context of our work, we focus on self-explainable systems: systems that explain themselves “directly” to an end user.

Consider the following example. A patient (addressee) is in a hospital and has been examined by a physician (context) using a medical diagnosis system. The medical findings are processed by the system (aspects) and presented directly to the patient in an electronic dashboard. However, these findings cannot be interpreted and understood by patients directly because they do not have the necessary medical domain knowledge. Therefore, the physician intervenes as a mediator and explains the results of the examination to the patient in a way that is understandable for the patient. This system could be considered explainable following the proposed definition since it communicates results to the physician, who understands them, and the physician, in turn, is able to explain the output of the system to the patient based on the received explanations.

However, because we focus on self-explainable systems, the system in the example above is only deemed explainable in our perspective if the physician is the intended addressee for explanations. If the patients are the intended addressees, the system would be considered explainable if the system explains itself to the patient directly (since the patient is the end user) and comprehensively without the need for a physician to intervene as a mediator. Thus, if necessary, no medical terminology may be used and the results of the examination must be presented in a way that is clear and understandable to laypersons. In this sense, we consider that the target audience of explanations determines whether or not a system is explainable. If a medical diagnostic system is designed for physicians (who are the end users in this situation), the system must be explainable to physicians.

4.3 Addressee’s understanding

A vast number of papers in the literature make reference to the addressee’s evoked understanding as important factor for the success of explainability (e.g., [14, 51, 70, 77, 78]). Framing explainability in terms of understanding provides the benefit of making it measurable, as there are established methods of eliciting a person’s understanding of something, such as questionnaires or usability tests [5].

The variables in our definition will become important later on, when we discuss our reference model (Sect. 7). In this template, these variables essentially constitute different aspects that need to be elicited in a project context before concrete implementation strategies are devised. Accordingly, we will return to the variables later on, using an example to help us understand them better.

5 A conceptual model of explainability

Conceptual models and catalogues compile knowledge about quality aspects and help to better visualize their possible impact on a system. Based on the data extracted from the literature and on our qualitative data analysis and validation, we were able to build a conceptual model and a knowledge catalogue for explainability.

In this section, we will first discuss our conceptual model. Overall, this model serves as a kind of classification scheme and is divided into four so-called quality dimensions. A quality dimension is a conceptual layer that groups quality aspects that make up a system. It represents a perspective from which to consider the quality of the system.

Answering RQ2 We framed the quality aspects that are impacted by explainability in a conceptual model that spans different quality dimensions of a system (see Fig. 3). |

We considered three existing concepts to shape and compose the conceptual model: stakeholder classes, elicitation dimensions, and quality spectrum. These concepts help us illustrate our vision of system quality and how it is impacted by explainability.

5.1 Stakeholder classes

Langer et al. categorize quality aspects that are influenced by explainability according to so-called stakeholder classes and distinguish the following ones: users, developers, affected parties, deployers, and regulators. According to them, these classes should serve as a reference point when it comes to implementing explainability since the interests of different stakeholder classes may conflict [54].

This first concept is related to our definition and based on the insight that understanding is pivotal for explainability. Individuals differ in their background-knowledge, values, experiences, and many further respects. For this reason, they also differ in what is required for them to understand certain aspects of a system.

Furthermore, Langer and colleagues also hold that some persons are more likely to be interested in a certain quality aspect than others [54]. For instance, a developer might be more interested in the maintainability of a system than a user.

Against this background, using stakeholder classes to organize quality aspects seems promising. Since it is a stakeholder who needs to understand a system for it to be explainable according to our definition, the stakeholder class provides a frame of reference for software engineers.

5.2 Elicitation dimensions

Chazette and Schneider identified six dimensions that affect the elicitation and analysis of explainability [5]: the users’ needs and expectations, cultural values, corporate values, laws and norms, domain aspects, and project constraints. Their results indicate that different factors distributed across these dimensions influence the identification of explainability as being a necessary quality aspect within a system, as well as the design choices towards its operationalization. In other words, these dimensions influence the (explainability) requirements of a system.Footnote 8

The users’ needs and expectations for example, is a dimension that considers the user and will thus reflect directly on the end-user requirements. Cultural values refer to the ethos of a group or society [79], and how culture influence the system design [80]. Corporate values refer to the strategic vision and values of an organization [81], and how they shape software systems. Laws and norms concern the regulatory and legal influence on the requirements and design of a system. Domain aspects consider the subject area on which the system is intended to be applied, and will dictate the logic around the application [82]. The project constraints are more practical aspects (also known as non-technical aspects [83]), such as available resources (e.g., time, money, technologies, manpower).

5.3 Quality spectrum

The external/internal quality concept based on the ISO 25010 [37] and proposed by Freeman and Pryce [84] is the final concept that we use to shape our model. In particular, we use it to categorize the quality dimensions (and thus the quality aspects within them) themselves.

The external/internal quality concept is the basis for what we call the quality spectrum. Since a spectrum is “a range of different positions between two extreme points” [85], we consider the system quality spectrum as a range between the two extreme points: internal and external quality. In our model, the quality dimensions represent “positions” or “directions” in the quality spectrum.

In this sense, an external quality dimension is more related to the users or the quality in use, and an internal quality dimension is more related to the developers or the system itself. The same applies to the quality aspects inside these dimensions.

However, as pointed out by McConnel [86], the difference between internal and external quality is not completely clear-cut, meaning that a quality aspect can belong or affect several dimensions. Therefore, we do not assign the dimensions and quality aspects of our conceptual model as clear-cut internal or external, but rather acknowledge a continuous shift from external to internal.

5.4 Compiling the concepts

Based on these three concepts and input from our workshop with software engineers, we developed a conceptual model for the impact of explainability on other quality aspects. To this end, we combined the concepts of stakeholder classes and elicitation dimensions to form four new dimensions, into which we sort the quality aspects that are impacted by explainability: user’s needs, cultural values and laws and norms, domain aspects and corporate values, and project constraints and system aspects. Furthermore, we arrange these quality dimensions in the quality spectrum.

We also identified quality aspects that are present in all dimensions. In particular, we identified quality aspects that form a foundation for the four dimensions (e.g., transparency). Without the influence of explainability on these foundational qualities, many other quality aspects would not be influenced. Furthermore, we identified superodinated qualities. These quality aspects are influenced by all other aspects, sometimes being described as the goals of explainability (e.g., trust).

More details on the individual dimensions will be given in the next section, when we discuss the catalogue, since the dimensions are closely linked to the quality aspects they frame. The dimensions and their respective quality aspects are illustrated in Fig. 3. In the figure, the quality aspects are grouped according to similarity, based on our workshops’ results. Furthermore, the listing order corresponds to that of the descriptive text in Sect. 6. Overall, our conceptual model should support software engineers in understanding how explainability can affect a system, facilitating requirements analysis.

6 A catalogue of explainability’s impacts

In this section, we present the catalogue and discuss the quality aspects in relation to our conceptual model. To this end, we analyze them, whenever possible, based on the three categorizations we have described above: the stakeholders involved, the dimensions that affect the elicitation and analysis of explainability, and the external/internal categorization.

Answering RQ3 We built a catalogue that lists all quality aspects found in our study and the kind of impact that explainability has on each one of these aspects (Fig. 4). |

6.1 Foundational qualities

Explainability can influence two quality aspects that have a crucial role: transparency and understandability. These quality aspects provide a foundation for all four dimensions, thereby having an influence on the other aspects inside these dimensions (and, in some cases, vice versa).

Receiving explanations about a system, its processes and outputs can facilitate understanding on many levels [87]. Furthermore, explanations contribute to a higher system transparency [88]. For instance, understandability and transparency are required on a more external dimension so that users understand the outputs of a system (e.g., an explanation about a route change), which may positively impact user experience. They are also important on a more internal dimension, where they can contribute to understanding aspects of the code, facilitating debugging and maintainability.

6.2 User’s needs

Most papers concerning stakeholders in Explainable Artificial Intelligence (XAI) state users as a common class of stakeholders (e.g., [51, 89, 90]). This, in turn, also coincides with the view from requirements engineering, where (end) users also count as a common class of stakeholders [91]. Among others, users take into account recommendations of software systems to make decisions [13]. Members of this stakeholder class can be physicians, loan officers, judges, or hiring managers. Usually, users are not knowledgeable about the technical details and the functioning of the systems they use [54].

When explainability is “integrated” into a system, different groups of users will certainly have different expectations, experiences, personal values, preferences, and needs. Such aspects mean that individuals can perceive quality differently. At the same time, explainability influences aspects that are extremely important from a user perspective.

The quality aspects we have associated with users are mostly external. In other words, they are not qualities that depend solely on the system. To be more precise, they depend on the expectations and the needs of the person who uses the system.

On a general level, the user experience can both profit and suffer from explainability. Explanations can foster a sense of familiarity with the system [92] and make it more engaging [93]. In this case, user experience profits from explainability. On the other side, explanations can cause emotions such as confusion, surprise [94], and distraction [78], harming the user experience. Furthermore, explainability has a positive impact on the mental-model accuracy of involved parties. By giving explanations, it is possible to make users aware of the system’s limitations [75], helping them to develop better mental models of it [94]. Explanations may also increase a user’s ability to predict a decision and calibrate expectations with respect to what a system can or cannot do [75]. This can be attributed to an improved user awareness about a situation or about the system [12]. Furthermore, explanations about data collection, use, and processing allow users to be aware of how the system handles their data. Thus, explainability may be a way to improve privacy awareness [15, 51]. Explainability can also positively impact the perceived usefulness of a system or a recommendation [95], which contributes to the perceived value of a system, increasing users’ perception of a system’s competence [96] and integrity [97] and leading to more positive attitudes towards the system [98]. Finally, all of this demonstrates that explainability can positively impact the user satisfaction with a system [94].

Explainability can also influence the usability of a system. On the positive side, explanations can increase the ease of use of a system [47], lead to more efficient use [12], and make it easier for users to find what they want [99]. On the negative side, explanations can overwhelm users with excessive information [100] and can also impair the user interface design [5]. Explanations can help to improve user performance on problem solving and other tasks [97]. Another plausible positive impact of explainability is on user effectiveness [101]. With explanations, users may experience greater accuracy in decision-making by understanding more about a recommended option or product [102]. However, user effectiveness can also suffer when explanations lead users to agree with incorrect system suggestions [10]. User efficiency is another quality aspect that can be positively and negatively influenced by explainability. Analyzing and understanding explanation takes time and effort [103], possibly reducing user efficiency. Overall, however, the time needed to make a judgment could also be reduced with complementary information [101], increasing user efficiency. Furthermore, explanations may also give users a greater sense of control, since they understand the reasons behind decisions and can decide whether they accept an output or not [14]. Explainability can also have a positive influence on human-machine cooperation [77] since explanations may provide a more effective interface for humans [104], improving interactivity and cooperation [33], which can be especially advantageous in the case of cyber-physical systems.

Explainability can have a positive influence on learnability, allowing users to learn about how a system works or how to use a system [102]. It may also provide guidance, helping users in solving problems and educating them about product knowledge [105]. As these examples illustrate, explanations can support decision-making processes for users [47]. In some cases, this goes as far as enabling scrutability of a system, that is, enabling a user to provide feedback on a system’s user model so that the system can give more valuable outputs or recommendations in the future [47]. Finally, explainability can help knowledge discovery [14]. By making the decision patterns in a system comprehensible, knowledge about the corresponding patterns in the real world can be extracted. This can provide a valuable basis for scientific insight [75].

6.3 Cultural values and laws and norms

Although [5] distinguished Cultural Values and Laws and Norms as two separate dimensions and [54] did the same for regulators and affected parties, we have combined them into one dimension because they are complementary and influence each other. The dimensions form a kind of symbiosis since, e.g., legal foundations are grounded, among others, on the basis of the cultural values of a society. We adopt the same approach for the dimensions discussed in Sects. 6.4, 6.5.

Regulators commonly envision laws for people who could be affected by certain practices. In other words, regulators stipulate legal and ethical norms for the general use, deployment, and development of systems. This class of stakeholders occupies an extraordinary role, since they have a “watchdog” function concerning the systems and their use [54]. Regulators can be ethicists, lawyers, and politicians, who must have the know-how to assess, control, and regulate the whole process of developing and using systems.

The restrictive measures by regulators are necessary, as the influence of systems is constantly growing and key decisions about people are increasingly automated – often without their knowing [54]. Affected parties are (groups of) people in the scope of a system’s impact. They are stakeholders, as for them much depends on the decision of a system. Patients, job or loan applicants, or defendants at court are typical examples of this stakeholder class [54].

In this dimension, cultural values represent the ethos of a society or group and influence the need for specific system qualities and how they should be operationalized [79, 80]. These values resonate in the conception of laws and norms, which enforce constraints that must be met and guaranteed in the design of systems. Explainability can influence key aspects on this dimension.

With regard to the internal/external distinction, a clear attribution is not possible. Rather, the quality aspects seem to occupy a hybrid position. Whether or not they are present does not only depend on the system itself, but it also does not depend on a person using them. Rather, it depends on general conventions (e.g., legal, societal) that are in place. For this reason, we take them to be more internal than the quality aspects from the last dimensions: general conventions are better implementable than individual preferences.

On the cultural side, explanations can contribute to the achievement of ethical decision-making [106] and, more specifically, ethical AI. On the one hand, explaining the agent’s choice may support ensuring that ethical decisions are made [14]. On the other hand, providing explanations can be seen as an ethical aspect itself [6]. Furthermore, explainability may also contribute to fairness, enabling the identification of harms and decision biases to ensure fair decision-making [14], or helping to mitigate decision biases [75].

On the legal side, explainability can promote a system’s compliance with regulatory and policy goals [107]. Explaining an agent’s choice can ensure that legal decisions are made [14]. A closely related aspect is accountability. We were able to identify a positive impact of explainability on this quality that occurs when explanations allow entities to be made accountable for a certain outcome [108]. In the literature, many authors refer to this as liability [108] or legal accountability [109].

In order to guarantee a system’s adherence to cultural and legal norms, regulators and affected parties need several mechanisms that allow for inspecting systems. One NFR that can help in this regard is auditability. Explainability positively impacts this NFR, since explanations can help to identify whether a system made a mistake [10], can help to understand the underlying technicalities and models [73], and allow users to inspect a system’s inner workings to judge whether it is acceptable or not [110]. In a similar manner, validation can be positively impacted, since explainability makes it possible for users to validate a system’s knowledge [102] or assess if a recommended alternative is truly adequate for them [47]. The latter aspect is essential for another quality that is helped by explainability, namely, decision justification. On the one hand, explanations are a perfect way to justify a decision [108]. On the other hand, they can also help to uncover whether a decision is actually justified [4].

6.4 Domain aspects and corporate values

People who decide where to employ certain systems (e.g., a hospital manager decides to bring a special kind of diagnosis system into use in her hospital) are deployers. Other possible stakeholders in this dimensions are specialists in a domain, known as domain experts. People have to work with the deployed systems and, consequently, new people fall inside the range of affected people [54].

This dimension is shaped by two aspects: (1) the corporate values and vision of an organization [81], and (2) the domain aspects that shape a system’s design since explanations may be more urgent in some domains than in others.

We consider this dimension as more internal to the system, since it encompasses quality aspects that are more related to the domain or the values of the corporation or the team. Generally, the integration of such aspects affects the design of a system on an architectural level. However, there are some exceptions, as the organization’s vision may aim at external factors like customer loyalty.

Explainability supports the predictability of a system by making it easier to predict a system’s performance correctly and helping to determine when a system might make a mistake [111]. Furthermore, explainability can support the reliability of a system [70]. In general, explainability supports the development of more robust systems for critical domains [112]. All of this contributes to a positive impact on safety, helping to meet safety standards [14], or helping to create safer systems [113]. On the negative side, explanations may also present safety risks by distracting users in critical situations.

Explanations are also seen as a means to bridge the gap between perceived security and actual security [71], helping users to understand the actual mechanisms in systems and adapt their behavior accordingly. However, explanations may disclose information that makes the system vulnerable to attack and gaming [3]. Explainability can also influence privacy positively, since the principle of information disclosure can help users to discover what features are correlated with sensitive information that can be removed [15, 114]. By the same principle, however, privacy can be hurt since one may need to disclose sensitive information that could jeopardize privacy [12]. Explainability can also threaten model confidentiality and trade secrets, which companies are reluctant to reveal [51].

Explainability can contribute to persuasiveness, since explanations may increase the acceptance of a system’s decisions and the probability that users adopt its recommendations [47]. Furthermore, explainability influences customer loyalty positively, since it supports the continuity of use [92] and may inspire feelings of loyalty towards the system [99].

6.5 Project constraints and system aspects

Individuals who design, build, and program systems are, among others, developers, quality engineers, and software architects. They count as stakeholders [115], as without them the systems would not exist in the first place. Generally, representatives of this group have a high expertise concerning the systems and a strong interest in creating and improving them.

This dimension is shaped by two aspects: project constraints and system aspects. The project constraints are the non-technical aspects of a system [83], while system aspects are more related to internal aspects of the system, such as performance and maintainability.

The quality aspects framed in this dimension are almost entirely internal in the classical sense, since they correspond to the most internal aspects of a system or the process through which the system is built.

Explainability can have both a positive and negative impact on maintainability. On the one hand, it can facilitate software maintenance and evolution by giving information about models and system logic. On the other hand, the ability to generate explanations requires new components in a system, hampering maintenance. A positive impact on verifiability was also identified, when explanations can work as a means to ensure the correctness of the knowledge base [102] or to help users evaluate the accuracy of a system’s prediction [116]. Testability falls in the same line, since explanations can help to evaluate or test a system or a model [14]. Explainability has a positive influence on debugging, as explanations can help developers to identify and fix bugs [4]. Specifically, in the case of machine learning (ML) applications, this could enable developers to identify and fix biases in the learned model and, thus, model optimization is positively affected [50]. Overall, all these factors can help increase the correctness of a system, by helping to correct errors in the system or in model input data [108].

The overall performance of a system can be affected both positively and negatively by explainability. On the one hand, explanations can positively influence the performance of a system by helping developers to improve the system [77]. In this regard, explainability positively influences system effectiveness. On the other hand, however, explanations can also lead to drawbacks in terms of performance [103] by requiring loading time, memory, and computational cost [5]. Thus, as the additional explainability capacities are likely to require computational resources, the efficiency of the system might decrease [4]. Another quality that is impacted by explainability is accuracy. For instance, in the ML domain, the accuracy of models can benefit from explainability through model optimization [50]. On the negative side, there exists a trade-off between the predictive accuracy of a model and explainability [4]. A system that is inherently explainable, for instance, may have to sacrifice predictive power in order to be so [72]. Explainability may have a negative impact on real-time capability since the implementation of explanations could require more computing power and additional processes, such as logging data, might be involved.

Adaptability can be negatively impacted, for example, if lending regulations in a financial software have changed and an explanation module in the software is also affected. Next, assume that a new module should be added to a system. The quality aspect involved here is extensibility, which in turn is negatively impacted by explainability. Merely adding the new module is already laborious. If the explainability is also affected by this new module, the required effort increases again. Depending on the architecture of the software, it may even be impossible to preserve the system’s explainability. Explanations affect the portability of a system as well. On the negative side, an explanation component might not be ported directly because it uses visual explanations, but the environment to which system is to be ported to has no elements that allow for visual outputs. On the positive side, explainability helps transferability [117]. Transferability is the possibility to transfer a learned model from one context to another (thus, it can be seen as a special case of portability for ML applications). Explanations may help in this regard by making it possible to identify the context from and to which the model can be transferred [117].

Overall, the inclusion of explanation modules can increase the complexity of the system and its code, influencing many of the previously seen quality aspects. In particular, as an explainability component needs additional development effort and time, it can result in higher development costs [9].

6.6 Superordinated qualities

We were able to identify some aspects that hold regardless of dimension. These aspects are commonly seen as some kind of superordinated goals of explainability. For instance, organizations and regulators have been lately focusing on defining core principles (or “pillars”) for responsible or trustworthy AI. Explainability has been often listed as one of these pillars [51]. Overall, many of the quality aspects we could find in the literature contribute to trustworthiness. For instance, explanations can help to identify whether a system is safe and whether it complies to legal or cultural norms.

Ideally, confidence and trust in a system originate solely from trustworthy systems. Although one could trust an untrustworthy system, this trust would be unjustified and inadequate [118]. For this reason, explainability can both contribute to and hurt trust or confidence in a system [12, 71]. Regardless of the system’s actual trustworthiness, bad explanations can always degrade trust [71]. Finally, all of this can influence the system’s acceptance. A system that is trustworthy can gain acceptance [74] and explainability is key to this.

7 A reference model for explainability

Building on the previous artifacts, we propose a reference model for explainability. Reference models can support the design and implementation of software systems, making it easier to understand primordial factors to the conception and design of these systems [119].

Building on this idea, we propose a reference model that provide a frame of reference of the main factors and relevant points that should be considered when defining explainability from requirements analysis (e.g., eliciting explainability requirements) to the design phase (i.e., operationalization of the elicited requirements) and evaluation (i.e., measuring if the requirements are achieved). In light of this, we answer RQ4 as follows:

Answering RQ4 We propose a reference model for explainable systems (Fig. 5) based on the findings from our SLR. This reference model includes relevant factors that should be considered for the development of explainable systems at various stages of the software lifecycle, assisting software engineers in the analysis, operationalization, and evaluation of requirements for explainable systems. |

7.1 Constituents of the reference model

Our reference model draws on ideas from three sources. The first source is a stepwise approach from abstract quality notions to concretely measurable requirements proposed by Schneider [120]. This stepwise approach considers three levels of abstraction: abstract goals, concrete characteristics, and measures or indicators [120, 121]. The abstract goalsFootnote 9 correspond to the objectives and constraints for a system; the concrete characteristics define the design decisions for the abstract goals; and the measures or indicators help evaluate whether the abstract goals have been achieved.

Based on this approach, we create the structure of a reference model that goes from the abstract to the concrete: from abstract aspects of the “real-world” that are relevant for requirements analysis and must be translated into requirements, to factors that influence concrete design decisions, to evaluation strategies. We have transformed these levels of abstraction into phases of the software lifecycle to make them more practical and to provide software engineers with guidance on what to consider in each phase. In our model, these levels correspond to requirements analysis, design, and evaluation, respectively.

During requirements analysis, software engineers, in conjunction with relevant stakeholders, elicit and define explainability requirements. Such requirements are not only the quality aspects that the system should have, but may also include an initial overview of the system, a vision of the system or a part of it, a list of key features, constraints, etc. During the design phase, the requirements should be refined into tangible system solutions or design choices, based on the factors considering during requirements analysis. Finally, during evaluation, quantitative measurements or qualitative indicators should be specified for each concrete solution as a method to subsequently analyze whether a requirement was met.

The work of Chazette et al. [123] is the second source of ideas upon which our reference model is based. Chazette et al. propose six core activities and associated practices for the development of explainable systems. These activities include (1) establishing a system vision (also with respect to explainability), (2) assessing the needs of the involved stakeholders, deciding how to implement explainability, (3) thinking about the algorithm to be explained, (4) weighing the trade-offs between explainability and other quality aspects, (5) making design decisions that favor explainability, and (6) assessing the impact of explainability on the system.