Abstract

In this review, we discuss the value of biological dosimetry and electron paramagnetic resonance (EPR) spectroscopy in the medical management support of acute radiation syndrome (ARS). Medical management of an ionizing radiation scenario requires significant information. For optimal medical aid, this information has to be rapidly (< 3 days) delivered to the health-care provider. Clinical symptoms may initially enable physicians to predict ARS and initiate respective medical treatment. However, in most cases at least further verification through knowledge on radiation exposure details is necessary. This can be assessed by retrospective dosimetry techniques, if it is not directly registered by personal dosimeters. The characteristics and potential of biological dosimetry and electron paramagnetic resonance (EPR) dosimetry using human-derived specimen are presented here. Both methods are discussed in a clinical perspective regarding ARS diagnostics. The presented techniques can be used in parallel to increase screening capacity in the case of mass casualties, as both can detect the critical dose of 2 Gy (whole body single dose), where hospitalization will be considered. Hereby, biological dosimetry based on the analysis of molecular biomarkers, especially gene expression analysis, but also in vivo EPR represent very promising screening tools for rapid triage dosimetry in early-phase diagnostics. Both methods enable high sample throughput and potential for point-of-care diagnosis. In cases of higher exposure or in small-scale radiological incidents, the techniques can be used complementarily to understand important details of the exposure. Hereby, biological dosimetry can be employed to estimate the whole body dose, while EPR dosimetry on nails, bone or teeth can be used to determine partial body doses. A comprehensive assessment will support optimization of further medical treatment. Ultimately, multipath approaches are always recommended. 
By tapping the full potential of all diagnostic and dosimetric methods, effective treatment of patients can be supported upon exposure to radiation.

1 Clinical Perspective on Radiation Exposure and Radiological Scenarios

Radiological and nuclear incidents in which people are exposed to a health-threatening radiation dose are rare. However, due to the manifold use of radionuclides and radiation sources in the military, industry and medicine [1], there is a wide variety of realistic scenarios that can lead to a radiation accident [2]. Radiological accidents mostly involve external irradiation, which leads to very heterogeneous and/or localized radiation exposure (a collection of reports on radiological and nuclear accidents is provided by the IAEA) [3]. Victims exposed to higher radiation doses in these accidents (leading to deterministic effects) will sooner or later meet health-care providers, who have to determine to what extent medical treatment is necessary. Additionally, if a large number of people are involved in a radiological incident, fast exclusion (e.g., by triage) of the so-called “worried well” will strongly relieve the clinical personnel and save valuable resources needed for exposed patients. In most cases, only a small fraction of patients will need medical treatment (e.g., 20 of 112,000 monitored people in Goiânia [4]). But this fraction will tie up a substantial amount of medical resources, e.g., antibiotics to compensate for immune suppression, cytokines to support the impaired production of new granulocytes, blood transfusions or even bone marrow transplantation. For treatment of local radiation burns, skin grafts, injection of stem cells or even amputation have to be considered [5,6,7]. Diagnosing patients fast (< 3 days) and thus providing them with optimal treatment can, e.g., nearly double the lethal dose (LD50/60) from 4 Gy to about 8 Gy [8].

For fast screening, clinical signs or symptoms of the acute radiation syndrome (ARS) can be a first method to categorize people into groups with zero, low or high exposure. Here, e.g., the METREPOL manual provides guidance for physicians to correlate symptoms or blood counts, and their time delay after the radiation exposure, with the respective potential course of hematopoietic ARS [9]. Even though this can nowadays be assisted by a number of software tools, such as WinFRAT [10] and the H-Module [11], there are several pitfalls that can cause uncertainties. (1) Scoring strongly depends on the time between exposure and symptoms. However, there are several scenarios in which the exact time of exposure is not known, e.g., because the patients cannot remember or did not notice the exposure (probable in most insidious malevolent acts). (2) Symptoms such as nausea, vomiting or erythema are vague and not specific to radiation. They can easily be induced psychologically, which is likely to happen in a mass casualty event, when many people are involved and believe they have been exposed. (3) The symptoms are connected to reactions of certain organs to an absorbed dose. Thus, certain aspects of the exposure that require treatment might not be considered, e.g., if the exposure is locally restricted and does not induce a general symptomatic response.

As a consequence, additional and preferably neutral information about the exposure is often required, at least for verification. For chronic radiation effects, the relation with whole body dose has been examined in extensive cohort studies, and the International Commission on Radiological Protection (ICRP) has established models for risk estimation of chronic health effects (e.g., leukemia, thyroid cancer) [12,13,14]. Unfortunately, such models are lacking for acute health effects in response to radiation exposure, including ARS characterized by the hematological, gastrointestinal, dermatological or neurological syndromes. In fact, recent examinations of the association of dose with ARS suggest that dose is of limited value for predicting ARS severity [15, 16]. For instance, single whole body exposures < 1 Gy roughly correspond to mild or no H-ARS (degree 0–1), not requiring hospitalization, and exposures > 5 Gy correspond to severe H-ARS (degree 3–4), urgently requiring hospitalization and intensive treatment. The dose range of 1–5 Gy leads to a variety of H-ARS severity degrees (H-ARS 1–3). This makes defined recommendations for individual treatment challenging in this dose range. Here, differences in the characteristics of the exposure (e.g., heterogeneity) as well as inter-individual differences in biological peculiarities (e.g., intrinsic radiosensitivity) represent further variables with strong impact on the clinical outcome [15] (Fig. 1).
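The dose bands quoted above can be written down as a small lookup. The following is only an illustrative sketch: the function name `hars_severity_band` is hypothetical, the band limits are taken directly from the preceding paragraph, and the wide 1–5 Gy band is exactly the reason why dose alone is a weak predictor of individual severity.

```python
def hars_severity_band(whole_body_dose_gy: float) -> tuple[int, int]:
    """Return the (lowest, highest) H-ARS degree quoted in the text for a
    single whole body dose. Illustrative helper, not a clinical tool."""
    if whole_body_dose_gy < 0:
        raise ValueError("dose must be non-negative")
    if whole_body_dose_gy < 1.0:
        return (0, 1)  # mild or no H-ARS, hospitalization not required
    if whole_body_dose_gy <= 5.0:
        return (1, 3)  # wide spread of severity degrees in this range
    return (3, 4)      # severe H-ARS, urgent hospitalization


for dose in (0.5, 2.0, 8.0):
    low, high = hars_severity_band(dose)
    print(f"{dose} Gy: H-ARS degree {low} to {high}")
```

A dose of 2 Gy, the triage threshold discussed in this review, falls into the band spanning three severity degrees, which illustrates why additional information on exposure heterogeneity and individual response is needed.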

Fig. 1 Both physical characteristics of radiation exposure and biological peculiarities of the individual determine radiation-induced acute health effects. Many factors associated with radiation exposure and biology contribute to the clinical outcome. Bioindicators of effect might integrate these different factors to provide a final assessment. However, such indicators are still under research and are not yet a validated tool ready for use in the medical management of radiation victims. ARS: acute radiation syndrome

Assessment of a suitable bioindicator, which might also be more intelligible for physicians, would allow an improved clinical prediction of the acute radiation syndrome (ARS) and its course [17,18,19]. Swartz and colleagues discussed in detail how biomarkers of organ-specific injury could be integrated into an early triage system, taking into account the current capabilities of physical dosimetry, i.e., EPR dosimetry [20]. They also addressed the argument that the patient's biological response, and not the radiation dose received, should be considered for the initial triage [21, 22].

However, such biological indicators are still under research and not fully established. Thus, a whole body single dose of 2 Gy holds promise as a threshold to identify those individuals expected to develop ARS and to need medical intervention [23,24,25]. As personal dosimeters that recorded the accidental exposure will be the exception in a radiological emergency, several methods have been established to allow such a dose estimation from the individual patient. These methods are often described as retrospective dosimetry.

Regarding the central questions and challenges for medical management, the optimal retrospective dosimetry method should, similar to a personal dosimeter, be fast, be usable for triage, cover the relevant dose ranges and provide a precise, reliable, persistent and radiation-specific indicator.

In this manuscript, we present methods for biological and specifically EPR retrospective dosimetry with regard to radiation accident response. Additionally, we discuss how these methods resemble or complement each other to match the requirements to support medical management in a clinical perspective.

2 Retrospective Dosimetry

The retrospective dosimetry techniques comprise biological (cytogenetic and molecular techniques) as well as physical dosimetry, such as electron paramagnetic resonance (EPR) spectroscopy, luminescence dosimetry (thermo- and optically stimulated luminescence), and neutron activation [26]. The ICRU report 94 [26] provides a comprehensive overview of the biological and physical dosimetry methods considering their suitability for the early-phase (hours up to 3 days) assessment of individual radiation doses after acute ionizing radiation exposure and their use in the past. The report additionally covers basic aspects of retrospective dosimetry, such as dose quantities and calibration processes including discussion of the detection limits. Depending on their characteristics, the various dosimetry methods are differently suitable for various applications, such as initial emergency response (small or large scale), dosimetry for epidemiological studies (population monitoring) or retrospective dosimetry to reconstruct a suspected dose weeks to years after exposure for a single or few individuals.

For completeness, neutron activation is briefly mentioned as the only method specific for neutron exposure, e.g., in criticality accidents such as in Tokaimura 1999 [27] and Sarov 1997 [28]. Here, the production of detectable radionuclides by nuclear reactions of neutrons in human blood, nails and hair can be used for the estimation of the neutron dose [26].

We will focus especially on biological and EPR dosimetry methods for the more common low-LET dose component of an external radiation exposure to support physicians and health-care providers in the potential treatment of an ARS.

2.1 Biological Dosimetry

The most frequently used cytogenetic biodosimetry techniques in case of a radiation accident are the dicentric chromosome analysis (DCA), the cytokinesis-blocked micronucleus (CBMN) assay and analysis of reciprocal translocations by FISH (fluorescence in situ hybridization). The PCC method (premature chromosome condensation), especially suitable in the high dose range up to 20 Gy, is established only in few laboratories and not yet validated as other cytogenetic methods [29]. DCA and CBMN assay have been established as the main biodosimetry methods for an acute ionizing radiation exposure, as they combine high (DCA) or reasonable (CBMN) specificity, a lower detection limit of 0.1 Gy (DCA full mode)/0.5 Gy (DCA triage mode) or 0.3 Gy (CBMN) and persistence of the signal for several months [30,31,32]. PCC (fusion method and ring assay) was established to overcome the 48–70 h culture time essential for DCA and CBMN [33]. The chromosomes are induced to condense prematurely before the first mitosis, which eliminates the culture time and the opportunity for mitotic delay or death to occur. The PCC method can provide dose estimates within hours up to about 20 Gy, identifies inhomogeneous exposures as otherwise only DCA, and has a lower detection limit of 0.2 Gy [29]. The FISH translocation analysis represents the method of choice after external protracted as well as chronic exposures. Cells containing reciprocal translocations (exchange of chromosomal segments between two chromosomes) are viable and allow the identification of aberrations originating from proliferating stem cells after appearing in peripheral blood lymphocytes even decades after exposure. However, FISH translocation analysis is not suitable as an emergency dosimetry method, as a triage mode is not established and the aberration scoring in full mode is too time intensive. A disadvantage of the metaphase-based cytogenetic approaches is the necessity of proliferating cells. 
Mitogenic stimulation means that lymphocytes have to be cultured for at least 48 h, which delays the reporting of dose estimates. However, especially the medical management of large-scale radiation scenarios is challenging with regard to sample processing and provision of dose estimates. In such situations, speed of delivering results and sample throughput are more important than the ultimate accuracy of dose estimates. With the aim of increasing the capacity of biological dosimetry, different strategies such as a triage-scoring mode, Web-based scoring especially for DCA [34,35,36], or automation are pursued. Another approach is the networking of national or even international laboratories. In recent years, several international networks for biological dosimetry have been established in Europe, Asia, Latin America, Canada and the USA [37]. Among the most established biological dosimetry tools, there are techniques capable of a high sample throughput owing to a high level of automation (DCA, CBMN, γ-H2AX) [38,39,40]; however, a point-of-care device has not been developed up to now. ISO standards for the biological dosimetry methods DCA, CBMN and FISH-based translocation analysis contribute to a high reliability and reproducibility of these methods [30,31,32, 41].

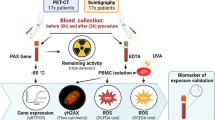

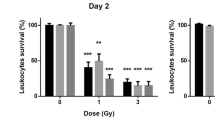

Compared to the cytogenetic methods, the molecular techniques, γ-H2AX DNA foci assay and gene expression analysis are promising triage tools, as they are independent of mitosis and are able to assess absorbed radiation doses about ten times faster than cytogenetic methods [42]. Thus, in particular, the molecular approaches have the potential to become useful triage tools in mass casualty events due to their speed combined with their high-throughput capacity, potential of automation, and capability for point-of-care diagnosis. However, they seem to lack specificity and confounders are less examined so far [16, 43, 44] suggesting a combination of different techniques for improved prediction of ARS. Additionally, these methods have to be applied shortly after a radiation exposure (24 h for γ-H2AX and days for gene expression). Nevertheless, these characteristics (early, high-throughput and point-of-care diagnostic) are urgently required for a valuable emergency dosimetry method.

Recent progress was achieved by adapting biological dosimetry methods, i.e., DCA, CBMN and γ-H2AX foci analysis, to the imaging cytometry method, combining the high throughput of flow cytometry with the sensitivity of aberration/foci scoring by microscopy [45,46,47]. Biological dosimetry methods proved to be very valuable and helpful in several past radiological accidents. The applied methods have to be chosen depending on their applicability in a certain radiation scenario (number of people at risk of exposure, time elapsed since the exposure, assumed temporal dose distribution, level and heterogeneity of dose), and further methodical properties (Table S1). The IAEA manual [29] gives a comprehensive overview of the different cytogenetic techniques and presents several examples of their application in real scenarios. Additionally, a guideline on the use of biodosimetric tools in radiation emergencies has been issued as part of the MULTIBIODOSE project funded by the European Commission [48, 49].

However, despite the ongoing research and progress made in the field of biological dosimetry, up to now, the gold standard biodosimetry method is still the DCA in peripheral blood lymphocytes.

2.2 EPR Dosimetry

A detailed overview of the application of EPR for retrospective dosimetry is given in ICRU report 94 [26] and ICRU report 68 [50], which are used as the main references for the following introduction to EPR. Electron paramagnetic resonance spectroscopy enables quantification of the concentration of stable radicals in matter. It is based on the resonant behavior of their unpaired electrons in a microwave field in combination with a static magnetic field. As radicals are also produced by ionizing radiation, this technique gives the opportunity to estimate the absorbed dose in various materials. The main requirement is the long-term stability of the radicals in the material. Typically, EPR is performed in three ranges of the microwave spectrum: L-band (≈ 1 GHz), X-band (≈ 10 GHz) and Q-band (≈ 34 GHz). For the typical EPR signals used for dosimetry, at a g-factor (or dimensionless magnetic moment) of about 2, the necessary static magnetic field increases correspondingly from about 100 mT through 300 mT to 1 T, which raises the requirements on the magnets used. In parallel, the cavity size decreases with increasing microwave frequency from several centimeters down to a few millimeters, which limits the sample size.
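The link between microwave frequency and static field follows from the standard EPR resonance condition hν = g·μB·B. As a rough sketch, assuming a free-electron-like g-factor of exactly 2 and nominal band frequencies, the resonance field can be computed as follows. The function name and the band dictionary are illustrative. The field values quoted in the text are rounded and depend on the instrument, so the idealized numbers computed here need not coincide with them.

```python
PLANCK_CONSTANT = 6.62607015e-34  # J s (exact SI value)
BOHR_MAGNETON = 9.2740100783e-24  # J/T (CODATA 2018)


def resonance_field_tesla(frequency_hz: float, g_factor: float = 2.0) -> float:
    """Static field B satisfying h * nu = g * mu_B * B."""
    if frequency_hz <= 0 or g_factor <= 0:
        raise ValueError("frequency and g-factor must be positive")
    return PLANCK_CONSTANT * frequency_hz / (g_factor * BOHR_MAGNETON)


# Nominal band frequencies as given in the text (assumed exact here).
NOMINAL_BAND_FREQUENCY_HZ = {"L-band": 1e9, "X-band": 10e9, "Q-band": 34e9}

for band, frequency in NOMINAL_BAND_FREQUENCY_HZ.items():
    field_mt = resonance_field_tesla(frequency) * 1e3
    print(f"{band}: about {field_mt:.0f} mT")
```

The linear scaling of field with frequency is what drives the increasing demands on the magnet from L-band to Q-band.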

A collection of properties for L-, X- and Q-band EPR is presented in Table 1.

The lower frequencies in the L-band transfer less heat to water and can be applied to “larger” volumes in the range of several centimeters, which is potentially good for in vivo measurements. However, the weak spectral resolution of the L-band is often not able to discriminate between radiation-induced signals (RIS), mechanically induced signals (MIS) and background signals (BGS). Here, laborious mathematical procedures often have to be used to recover the pure RIS component. The higher frequencies of the X-band were the first to be established and have become the “gold standard”, because their use results in better signal resolution and, thus, better radiosensitivity normalized to the sample mass. However, the X-band already generates discomfort when applied in vivo to patients because of the increased heat generated in water-containing tissue (resonance frequency of water molecules at ≈ 22 GHz). The heat induction could be reduced by using pulsed EPR, which has not been applied to living humans yet and still has the disadvantages of short penetration depths and a strong dependence on the water content of the tissue at higher frequencies [53]. Ex vivo, a minimum sample mass of about 50–200 mg is needed for a sufficient radiation-induced EPR signal and the sample size is limited to < 1 cm. Use of the Q-band with the highest frequency increases the signal resolution further and, thus, allows separation of almost all signals in the spectra. In parallel, it allows further reduction of the required sample mass to a few milligrams while assuring the same signal-to-noise ratio, which makes sample extraction potentially minimally invasive. However, signal reproducibility can be affected by uncertainties in sample positioning within the EPR cavity, a problem that can be addressed by adding an internal marker. Depending on the sample type, grinding or drilling is often used for sample collection or preparation (minimization of orientation influence for crystalline structures). These procedures induce MIS, which overlap with the RIS in the X- and L-band. Thus, in vivo measurements are of great interest, especially for the L-band, because sample collection and preparation are not necessary.

EPR is a non-destructive analysis method. Thus, it can be applied several times to the same specimen. This allows so-called additive dose calibration curves to be established on the same specimen by exposing it to known doses and measuring the EPR signal after each dose step. The EPR signal change per unit dose determined in this way allows the unknown dose underlying the initial EPR signal to be estimated. In this way, inter-specimen and inter-individual influences can be fully neglected. Of course, this approach is more time consuming, as the EPR signal often has to stabilize for hours or even days after each irradiation. Because of the additional irradiations, additive calibration is only applicable to ex vivo measurements.
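The additive dose procedure described above can be sketched numerically. The example below assumes a linear dose response and a negligible non-radiation background signal, neither of which is guaranteed for a real specimen. The function name and the readings are hypothetical, and the data are synthetic. A straight line is fitted to signal versus added dose, and the unknown initial dose is the fitted signal at zero added dose divided by the slope.

```python
def additive_dose_estimate(added_doses_gy, signals):
    """Estimate the initial (unknown) dose from additive irradiation steps.

    Fits signal = slope * added_dose + intercept by ordinary least squares
    and returns intercept / slope, i.e. the dose that explains the signal
    measured before any laboratory irradiation.
    """
    n = len(added_doses_gy)
    if n < 2 or n != len(signals):
        raise ValueError("need at least two matching dose/signal pairs")
    mean_dose = sum(added_doses_gy) / n
    mean_signal = sum(signals) / n
    sxx = sum((d - mean_dose) ** 2 for d in added_doses_gy)
    sxy = sum((d - mean_dose) * (s - mean_signal)
              for d, s in zip(added_doses_gy, signals))
    if sxx == 0 or sxy == 0:
        raise ValueError("signal must vary with added dose")
    slope = sxy / sxx                      # signal change per Gy
    intercept = mean_signal - slope * mean_dose
    return intercept / slope


# Synthetic readings (arbitrary units) after 0, 1, 2 and 4 Gy of added dose,
# constructed with a slope of 2 a.u./Gy and an initial signal of 3 a.u.
added = [0.0, 1.0, 2.0, 4.0]
signal = [3.0, 5.0, 7.0, 11.0]
print(f"estimated initial dose: {additive_dose_estimate(added, signal):.2f} Gy")
```

Because the calibration is performed on the very specimen being assessed, no assumption about other individuals or specimens enters the estimate, at the cost of the waiting time after each irradiation step.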

In the 1960s, the potential of EPR for individual dose assessment was recognized [54]. Since then, many materials have been found to be suitable for dose estimation, e.g., mineral glass or sugars. In this work, we focus on the most promising human-derived specimens, which are teeth (especially enamel), bone and nails. In a radiological incident scenario, these make it possible to estimate the local dose to different body regions of a patient. Thus, dose heterogeneity can be judged, which can affect the medical treatment, e.g., how strongly was the gastrointestinal region irradiated, how far does an extremity have to be amputated, or can a skin graft be successful?

However, these specimen materials have several complementary advantages and drawbacks.

2.2.1 Ex vivo EPR

2.2.1.1 Tooth

Teeth have the strong advantage that they consist of about 97% hydroxyapatite and contain nearly no soft tissue, whose water content would strongly alter the EPR signal. Radicals (especially CO₂⁻) produced in this nearly ideal solid-state detector are stable for about 10⁷ years. Thus, tooth enamel is the most radiosensitive specimen for EPR. Additionally, it is not rebuilt over time, because tooth formation is already completed before adolescence. Thus, radicals measured in teeth are a good estimate of lifetime exposure. With a minimal detection dose (MDD) of about 30–100 mGy for ex vivo X-band application, tooth enamel is the most radiosensitive human-derived sample type; it has already been used in several inter-laboratory comparisons [55, 56] and has protocols standardized in ISO 13304-1:2020 [57] and ISO 13304-2:2020 [58]. Using the Q-band should result in similar radiosensitivity with a smaller enamel mass (a few mg, which means a size of about 3 mm and less intervention than a typical cavity preparation for fillings) [52, 59, 60]. Although Q-band EPR offers improved sensitivity (20 times higher) and signal resolution compared to the X-band, the method has some practical limitations regarding equipment costs and high sensitivity to sample positioning (requiring longer training than X-band), as shown, e.g., in fossil teeth [61]. The high content of hydroxyapatite in teeth also reduces inter-individual signal variance and additionally allows the application of general calibration curves for dose estimation, which is significantly faster than the additive calibration method. This applies to high-energy photons; for low-energy photons, additive dose methods are recommended. Using teeth from different positions in the mouth makes it possible to indicate from which side the radiation came. The actual EPR measurement takes less than 15 min [52]. 
One drawback of tooth enamel is the need of dental intervention for recovery and that its isolation needs further sample preparation steps, which can induce MIS (drilling/sawing without water cooling). However, these MIS can be counteracted by, e.g., an additional acid etching step or low speed drill under cooled water conditions [51]. For epidemiological studies on A-bomb survivors [62, 63] or Chernobyl victims [64], it was accepted by patients that teeth were collected for dose determination, which had to be extracted due to dental treatment anyway. This collection took many years. However, extraction of teeth will not be appropriate for screening or triage in a large- or even small-scale scenario, in which most people will not be exposed to a health-relevant radiation dose. If intervention is smaller, e.g., a biopsy of just a few milligrams of enamel using the Q-band, acceptance could be higher for dose screenings. Additionally for heterogeneous exposure, dose to tooth enamel will only allow a limited estimate for whole body dose or dose of critical organs due to their isolated location. A possible confounder for tooth dosimetry is that the enamel suffers from background signal produced by UV exposure. Thus, molars are the preferred teeth used for EPR. Nevertheless, also dental treatment has to be considered as source of UV light, which also could lead to additional background signal as well as X-ray exposure due to medical imaging. Typically, 48 h are needed until the RIS is stabilized. For emergency cases, the time can be shortened if RIS formation is characterized well enough at these time points. For real radiological accidents, ex vivo X-band EPR was only rarely applied as, e.g., after the Nueva Aldea/Chile accident in 2005 [65] due to the invasiveness of tooth extraction from living patients. In the mentioned case, teeth were extracted due to medical reasons. Ex vivo Q-band EPR on small samples of tooth enamel, originally proposed by Romanyukha et al. 
[66], already provided important information about the circumstances of the exposure (direction of radiation, local dose and heterogeneity of body dose) in two radiological accidents, i.e., the Stamboliyski/Bulgaria accident in 2011 [67] and the Chilca/Peru accident in 2012 [68].

Apart from its use for retrospective dosimetry in acute radiation scenarios, EPR dose estimates are collected and included in a wide range of radiological studies. These studies cover the nuclear bomb detonations in Hiroshima and Nagasaki [62,63,64], nuclear power plant accidents, e.g., in Chernobyl [69] or Fukushima [70, 71], and radioactive pollution caused by the Mayak plutonium facility [72], as well as Mayak workers [73, 74]. Additionally, EPR data have been collected after incorporation of radionuclides leading to an internal dose component, which can also be detected, e.g., in tooth enamel. These studies examined the intake of 239,240Pu, 137Cs and 89,90Sr by the populations living around the Semipalatinsk test site [75,76,77] as well as the intake of 89,90Sr and 137Cs by the population living near the Techa River [78, 79]. However, EPR was shown to be most applicable when radionuclides are distributed homogeneously in the body [80].

2.2.1.2 Bone

Of course, teeth are only located in the head region. Thus, statements about dose exposures of body parts further apart are limited. Here, the analysis of bone biopsies could provide further information, because biopsies can be done at the region of interest, especially if just a few milligrams are needed. On the other hand, biopsies are rather a major intervention and appropriate if there is a strong evidence for radiation exposure and critical need for dose information regarding further medical treatment. Due to the lower content (50% by volume) and more variant crystalline structure of hydroxyapatite in bone, the RIS for EPR in bone is less sensitive (estimated minimal detection dose of 5 Gy for X-band). Additionally, bone has a larger content of organic tissue, which implies a laborious sample preparation step for its removal before application of EPR. Here, for Q-band analysis the preparation can be simplified, as signals from organic tissue can be separated from the RIS. The variance of bone composition and density leads to additional uncertainties in the recalculation from dose absorbed in bone to dose in surrounding tissue, which also changes for different radiation qualities. This has to be considered carefully. In contrast to teeth, bone is constantly rebuilt. Thus, the concentration of radicals after an exposure to ionizing radiation decreases with time. Here, two effects contribute as a consequence of living bone: radical recombination, which happens in hours and bone remodeling in a time window of months. For immediate emergency management, in which a fast dose assessment is the goal, the effect of bone reformation should be negligible, but it can be a problem if the exposure is longer. However, it has to be considered that bone reformation will be altered for high local doses for which bone EPR analysis typically has been applied [7, 67, 68, 81].

As with tooth enamel, ex vivo EPR on bone specimens has been applied mainly in small-scale accidents characterized by heterogeneous whole body and/or localized exposures. In these cases, the dose estimates have been very helpful for medical treatment planning; in one case, for example, the dose estimates confirmed that further amputation of a finger was not indicated [7, 67, 68, 81]. Although ex vivo bone EPR has been used widely and for a long time, many questions remain open concerning the behavior of bone material upon irradiation, and standardization of protocols and dose assessment for EPR bone dosimetry has not been addressed up to now.

2.2.1.3 Nail

Regarding accessibility and availability, fingernails and toenails are promising specimens for early retrospective dosimetry and triage by EPR spectroscopy, but methodological aspects (e.g., inter-individual variance or sensitivity) challenge this dosimetric approach. Due to their location, they should provide the best information about dose heterogeneity. Additionally, they are easy to access for clipping and potential in vivo measurement. Their composition is similar to soft tissue and, thus, the absorbed dose in nails is similar to the absorbed dose in the surrounding tissue. But in contrast to the great opportunity that the use of nails provides, especially for fast triage [82], the RIS generated in the keratin component is much less stable and sensitive. In particular, the water content of nails plays a critical role, because humidity during storage of nail clippings, as well as handwashing, leads to a significant decrease of the signal [83]. In addition to the normal remodeling of the nail, signal fading leads to further loss of signal; thus, measurements on nails should be done shortly after irradiation. As expected, UV exposure also generates interfering signals [84]. Although dose estimations at 1 Gy have been presented [85, 86], so far ex vivo nail clippings seem to deliver suitable dose assessments only above 10 Gy if the BGS is unknown and the time after irradiation is not limited.

Ex vivo nail EPR dosimetry has also been used in some small-scale scenarios with relatively high doses (> 10 Gy) [68, 87, 88]. In the Chilca/Peru accident [68], EPR on fingernails provided important information on the exposure scenario, such as dose distribution over the body and localized high exposure to the hands, and directed the medical treatment planning [67]. Romanyukha and colleagues compared X- and Q-band EPR on fingernail samples collected 2 months after irradiation. They obtained similar results, which were about 50% lower than the clinically based estimate, probably due to methodological reasons [88]. Moreover, ex vivo nail EPR dosimetry performed at the Naval Dosimetry Center has demonstrated the ability to assess the radiation dose using a small, portable EPR spectrometer, which significantly reduces the time needed for dose estimation to the range of minutes [88]. Thus, in the future this will be an advantage for establishing a suitable point-of-care application of ex vivo nail dosimetry to support early-phase diagnosis of radiation victims on-site. However, the unsolved methodological challenges mentioned above have to be resolved before a meaningful nail dosimetry can be established.

2.2.2 In vivo EPR

The possible direct in vivo approach of EPR spectroscopy is a unique selling point of EPR dosimetry, but is not established yet or goes along with certain performance losses regarding the minimal detection dose. However, a tradeoff is that an in vivo approach can avoid the uncertainties associated with sample collection and preparation, e.g., mechanically induced signal (MIS) or humidity effects in nail clippings. Additionally, the establishment of calibration curves is challenging, because significant doses are needed at the point of interest and thus only total body irradiated cancer patients can be used, which are rare. Phantoms, which, e.g., were used for investigation of influences on measurements in in vivo setups [89, 90], are also no option, because they are not standardized and validated yet. Nevertheless, some work on in vivo EPR measurement already exists.

2.2.2.1 Tooth

In vivo tooth EPR in the X- or L-band has been tested by only a few laboratories and shows several drawbacks, including a relatively high minimum detectable dose of about 2 Gy [91, 92]. Zdravkova and colleagues [93] compared L-band in vivo and in vitro EPR on rat teeth irradiated with 50 Gy of X-rays. They found no significant difference between the in vivo and in vitro L-band spectra, but observed a tendency toward a higher signal in the in vitro measurement [93]. The in vivo tooth EPR approach has not been used in real accidents up to now. However, in vivo L-band measurements on volunteers in the Fukushima prefecture [70] showed that, even though no case with a detectable dose was measured, a portable EPR spectrometer can be used on-site for possible triage after a radiological event.

2.2.2.2 Bone

Zdravkova and colleagues compared in vivo bone EPR dosimetry on human and baboon fingers with measurements on dry human phalanges and determined a lower detection limit of 60 Gy, with the assessment that a detection limit of 40 Gy could be achievable with further development [87]. The threshold dose for osteoradionecrosis after fractionated exposure over weeks in radiotherapy patients has been found to be about 60 Gy [94], but is lower (about 40 Gy) after an acute high radiation dose [26]. However, this approach requires further knowledge of the response of bone to irradiation and of influences on the radiation-induced EPR signal, as well as technical development, before it can serve as a reliable dosimetry tool.

2.2.2.3 Nail

The in vivo approach of nail EPR dosimetry has also evolved into a promising candidate for an initial-phase triage tool in a large-scale event. With the development of a first-generation resonator for in vivo nail dosimetry, important progress has been made toward applying the technique on-site under realistic conditions, and a multimethod concept for implementing in vivo nail EPR into medical management in the future has been discussed [20, 95]. Swartz and colleagues assume that in vivo nail dosimetry could achieve an improved dose resolution of 1 Gy in the future [20]. They describe, hypothetically, how in vivo nail EPR could be used to assess whether the dose to an exposed individual was homogeneous or heterogeneous and, in the case of heterogeneity, how the dose distribution could be assessed. They conclude that in vivo EPR has to be considered complementary to the use of biological and clinical dosimetry in the most effective response to a large-scale radiation scenario [20]. However, up to now, in vivo EPR has not been carried out in real accidents, and continuing research, validation and standardization of this approach are recommended.

2.3 Comparison of Biological and EPR Dosimetry

For better comparison, Tables 2 and 3 summarize the basic information of the described retrospective dosimetry methods. A more detailed table (especially for biological dosimetry methods) can be found in the Supplements (Table S1).

In principle, both retrospective dosimetry methods can provide dose information in the critical range of 0.1–10 Gy and thus can support categorization of patients [29, 55, 56, 91]. Hereby, accuracy is better in the lower dose range (< 5 Gy) for most biological methods, because most biological indicators saturate at higher doses. In contrast, accuracy for EPR dosimetry is better at higher doses (> 5 Gy), because the RIS becomes more dominant and is linear up to 100 Gy or even 1000 Gy [26].
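The complementary dose ranges described above can be written down as a simple lookup. The following Python sketch is purely illustrative: the 5 Gy crossover is taken from the text, whereas the function name, the returned labels and the treatment of the crossover as a hard boundary are assumptions made here and are not part of any established protocol.

```python
CROSSOVER_GY = 5.0  # crossover between the two accuracy regimes, as stated in the text


def preferred_method(expected_dose_gy: float) -> str:
    """Return the method family expected to be more accurate at a given dose.

    Assumption: the gradual transition described in the text is simplified
    to a hard boundary at CROSSOVER_GY.
    """
    if expected_dose_gy < 0:
        raise ValueError("dose must be non-negative")
    if expected_dose_gy < CROSSOVER_GY:
        # Most biological indicators have not yet saturated in this range.
        return "biological dosimetry"
    if expected_dose_gy > CROSSOVER_GY:
        # At higher doses the radiation-induced signal (RIS) dominates.
        return "EPR dosimetry"
    # Exactly at the crossover neither regime is favored by the text.
    return "either"


for dose in (0.5, 2.0, 5.0, 20.0):
    print(f"{dose:5.1f} Gy -> {preferred_method(dose)}")
```

In practice the choice would also depend on sample availability, time since exposure and whether a whole body or a local dose is needed, none of which this sketch attempts to model.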

In the early phase of a radiological accident, time is a crucial variable for medical management decision making, which strongly varies for the different techniques, because sample collection, preparation and measurement time differ. Especially, sample collection represents a great advantage of biological dosimetry, because taking blood is a highly common and standard procedure of medical checkups. Additionally, the preparation of blood is highly standardized for most of the analyzed markers (isolation of lymphocytes or RNA) as well as many assays in biological dosimetry, e.g., DCA and CBM. These standardized processes also often bear the possibility of automation or parallelization/multiplexing, meaning that many samples can be processed/analyzed at once, and thus making them suitable for high-throughput diagnosis in the early phase of an accident [39, 96]. In particular, gene expression analysis is a promising screening tool for initial triage dosimetry due to the speed, high sample throughput and capability for point-of-care diagnostics [43, 97, 98].

In the future, the establishment of standard protocols has to be one main goal for EPR dosimetry, because differences in sample preparation or collection can already lead to differences in dose estimation. For tooth enamel, this has been achieved for X-band EPR, because it has been broadly applied in epidemiological studies [63, 64, 69, 72, 73, 74]. Here, the extraordinary persistency of the RIS (10⁷ a) in enamel makes teeth nearly ideal passive dose detectors.

In vivo EPR omits sample preparation and allows a direct assessment of the absorbed dose within a measurement time of about 10 min. In combination with a portable spectrometer, which has already been applied for in vivo tooth EPR in Fukushima some years after the accident, this technique would represent a powerful triage tool for point-of-care diagnostics. However, a minimal detection dose of 2 Gy [70] would not be sufficient to identify potential ARS patients requiring early treatment and hospitalization.

Ex vivo nail EPR could also support triage capacity, because nail clipping, once standardized, should be easy and fast to perform. The low stability or persistency of the RIS may be less problematic when measurements are done directly after the accident. Of course, in vivo EPR on nails would also be an extraordinary screening tool. However, solid data on its performance (minimal detection dose) in humans and in radiological accidents still have to be shown.

Finally, EPR, independent from specimen or in vivo/ex vivo application, would need to reach a minimal detection dose of less than 0.5 Gy to be suitable for triage at 2 Gy (assuming a necessary limit of quantitation of about 2 Gy). So far, this was only achieved by ex vivo EPR on enamel.
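The triage criterion stated above can be expressed as a simple check. The following Python sketch is only an illustration: the 0.5 Gy requirement and the minimal detection doses for in vivo tooth EPR (about 2 Gy) and in vivo bone EPR (60 Gy) are the values quoted in this review, whereas the class, the function name and the third example entry are hypothetical placeholders introduced here.

```python
from dataclasses import dataclass

REQUIRED_MDD_GY = 0.5  # required minimal detection dose for triage at 2 Gy (from the text)


@dataclass(frozen=True)
class Method:
    name: str
    minimal_detection_dose_gy: float


def suitable_for_triage(method: Method, required_mdd_gy: float = REQUIRED_MDD_GY) -> bool:
    """True if the minimal detection dose is below the required value."""
    return method.minimal_detection_dose_gy < required_mdd_gy


methods = [
    Method("in vivo tooth EPR", 2.0),           # about 2 Gy, as quoted in the text
    Method("in vivo bone EPR", 60.0),           # 60 Gy detection limit, as quoted in the text
    Method("hypothetical example method", 0.3),  # placeholder value, not a measured figure
]

for m in methods:
    verdict = "suitable" if suitable_for_triage(m) else "not suitable"
    print(f"{m.name}: MDD {m.minimal_detection_dose_gy} Gy -> {verdict} for triage")
```

The comparison is strict because the text asks for a minimal detection dose of less than 0.5 Gy; a method sitting exactly at 0.5 Gy is therefore reported as not suitable in this sketch.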

2.4 Possible Contribution of Dosimetric Methods to Medical Management of Radiation Accidents

In the past, both biological and physical dosimetry have been applied in parallel or consecutively within the medical management support of the same radiation-exposed victims [99, 100]. In doing so, it is important to consider the site of the absorbed dose measured by the different methods. Whereas biological dosimetry measures the dose to circulating blood lymphocytes, and thus provides the absorbed equivalent whole body dose, EPR determines the local dose absorbed by the collected biologically derived specimen or by physical sample material located near the victim at the time of exposure. This implies that differing dose estimates have to be expected when heterogeneously exposed individuals are assessed by these methods. However, as described in the literature, such complementary dose information has been an advantage and very supportive for medical treatment planning in some past accidents.
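How a whole body estimate and a local estimate can be set against each other may be sketched as follows. This Python example is illustrative only: the function names and the factor of 2 used to flag heterogeneity are hypothetical choices made here, not a validated criterion. The example values (about 3 Gy whole body, about 25 Gy to the nails) are rounded from the Chilca/Peru case described later in this review.

```python
def local_to_whole_body_ratio(local_dose_gy: float, whole_body_dose_gy: float) -> float:
    """Ratio of a local (EPR) dose to the whole body (biological) dose."""
    if whole_body_dose_gy <= 0:
        raise ValueError("whole body dose must be positive")
    return local_dose_gy / whole_body_dose_gy


def suggests_heterogeneity(local_dose_gy: float, whole_body_dose_gy: float,
                           factor: float = 2.0) -> bool:
    """Flag a possible heterogeneous exposure.

    Assumption: 'factor' is a hypothetical cut-off chosen for illustration.
    """
    return local_to_whole_body_ratio(local_dose_gy, whole_body_dose_gy) >= factor


whole_body = 3.0   # Gy, biological dosimetry (rounded from the Chilca case)
nail_local = 25.0  # Gy, ex vivo nail EPR (rounded from the Chilca case)

ratio = local_to_whole_body_ratio(nail_local, whole_body)
print(f"local/whole body ratio: {ratio:.1f}")
print("heterogeneous exposure suspected:", suggests_heterogeneity(nail_local, whole_body))
```

A large ratio does not indicate that one of the methods is wrong; it reflects that the two methods measure the dose at different sites.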

In addition, the different challenges and requirements of large-scale and small-scale events must be taken into account. For a small number of subjects, the available dosimetry capacity would allow the individual doses to be assessed as accurately as possible by applying complementary methods, and the health-care system will be able to treat all victims. In large-scale radiological events, a critical component of the public health and medical response will be the rapid and effective screening of large populations, probably under difficult circumstances, to separate the exposed from the non-exposed. Here, the different dosimetric methodologies should be used in parallel to enhance the screening capacity. These dosimetry techniques need to provide a minimal detection limit of ≤ 0.5 Gy to support this triage decision process (assuming a necessary limit of quantitation of about 2 Gy). So far, biological dosimetry methods and ex vivo EPR on enamel fulfill this criterion.

3 Complementary Application of Biological Dosimetry and EPR in Radiation Accidents

The medical management of the Nesvizh/Belarus accident in 1991 [101] is one example showing the great importance of dose assessment for the physician in the case of whole body exposure. In a sterilization facility, a worker was accidentally exposed for 90 s to a 28.1 PBq 60Co source. Blood cell counts and EPR dosimetry (clothing material, and tooth and nail EPR post-mortem) were carried out. DCA was planned, but cell growth failed, which is consistent with the high dose first estimated from the reading of a thermoluminescent dosimeter (TLD) at the victim's assumed position (12–15 Gy) and with the dose estimated from blood cell counts (9–11 Gy). EPR on material from the victim's clothing at waist level revealed doses ranging from 11 to 18 Gy (± 20%), with the left side being more affected. Post-mortem ex vivo tooth EPR confirmed a similarly high dose of about 15 Gy in the head region. Unfortunately, in this high-dose range, no treatment would have been able to save the life of the victim. However, in a lower dose range (< 5 to 8 Gy), where intense medical treatment enables survival and DCA can be performed successfully to estimate the dose heterogeneity, medical treatment could have been started in the first 3 days after exposure.

In a second example, the Chilca/Peru accident in 2012 [68], three workers suffered from a whole body exposure combined with a higher localized exposure. They were accidentally exposed to an 192Ir source; however, the exact timeline and duration of the overexposure could not be reconstructed precisely. The most exposed worker is an impressive example of how the combination of biological and physical dosimetry together with the assessment of clinical signs had an impact on the medical treatment of a radiation victim. His OSL dosimeter revealed a high radiation dose of about 7 Gy, and an erythema evolved after 3 days on the index finger of the left hand. About 11 days later, biological dosimetry confirmed a heterogeneous exposure of 75% of the whole body to about 2.5–3.5 Gy. Ex vivo nail and bone EPR estimated a very heterogeneous high local dose to the left hand of about 25 Gy (nails) and up to 73 Gy (bone), depending on the site of the collected bone biopsy. Findings by biological dosimetry and EPR on mini-biopsies of enamel as well as experimentally on fingernails indicated a worse prognosis and led to an urgent transfer of the worker to a hospital for specialized medical treatment [67]. Moreover, the complementary dosimetry approaches helped to elucidate the circumstances of the scenario, e.g., the volume of the body exposed to a certain dose level (DCA) and the orientation of the worker within the radiation field (bone EPR on mini-biopsies). Such characteristics are of great importance if a patient is at risk of developing H-ARS. Additionally, by estimating the dose level and distribution on the phalanx of the most exposed worker, EPR could confirm that no further amputation was necessary [67].

Besides the question of amputation, the complementary use of EPR can also support the decision on bone marrow transplantation. Here, it is extremely important to estimate whether part of the bone marrow has been spared or was exposed to lower doses and exhibits residual hematopoiesis allowing hematopoietic recovery. In such cases, a bone marrow transplantation is not indicated due to the risk of graft-versus-host disease, stem cell failure, and organ damage possibly leading to the death of the patient. This lesson was learned from the Chernobyl accident and represents a central aspect of the European consensus on the medical management of ARS [102].

It is worth mentioning that the DCA represents the only highly validated biological dosimetry tool that allows assessing whether a presumed whole body exposure has been homogeneous or not [29]. However, the assessment of dose heterogeneity by EPR would probably still be helpful for further treatment.

All in all, in small-scale scenarios, a multi-parametric approach is urgently recommended to exhaust the available options for radiation injury assessment as this strategy has successfully been applied in past scenarios [68, 103]. These options are biological and physical dosimetry methods, physical/mathematical dose reconstruction and clinical evaluation (dose reconstruction and effect prediction based on clinical signs and symptoms) to gain a comprehensive overview concerning the circumstances of the overexposure. In case of only few affected individuals, a close collaboration between the retrospective dosimetry laboratories and the treating physicians is more feasible than can be assumed for mass scenarios.

The special challenge of the complex management of a radiation mass casualty scenario requires emergency planning and preparedness to appropriately triage and treat the identified exposed population. A critical component of the medical response to such an event will be the on-site mass screening of large populations to separate the exposed (requiring intensive and early clinical support) from the non-exposed (avoiding the absorption of limited clinical resources) and to communicate the dosimetric results to the physicians [97, 104]. In the case of the European dosimetry network RENEB (Running the European Network of biological dosimetry and physical retrospective dosimetry) [105], the network will be connected to the global emergency and preparedness system. The foundations for this have already been laid by integrating members of the WHO's biodosimetry network (BioDoseNet) [106] and the IAEA's RANET [107]. However, every single step of the procedures from sample collection to dose assessment to transmission of results in a large-scale event, where hundreds or thousands of people need dose assessment, still has to be set up.

4 Conclusion

Until a reliable bioindicator of effect with regard to the risk of developing ARS and for routine use is available, dose information including dose heterogeneity as well as dose distribution is crucial for medical treatment planning. Furthermore, local dose assessment might be indispensable to guide medical treatment in high local irradiation scenarios. Available retrospective dosimetry methods exhibit different suitability for different exposure scenarios and the most informative method or combination of methods with regard to a specific case and its circumstances should be selected for diagnosis. Finally, the application of several complementary methods is strongly recommended for medical treatment and decision-making support, because most scenarios are of a rather complex nature and each case has its own characteristics. Ideally, this is supported by a strong network of laboratories, which share the workload, enable fast dose reporting and provide a high-quality standard by standardization and inter-laboratory comparisons.

References

UNSCEAR, Sources and effects of ionizing radiation: United Nations scientific committee on the effects of atomic radiation 2008 report (2008)

K. Coeytaux, E. Bey, D. Christensen, E.S. Glassman, B. Murdock, C. Doucet, PLoS ONE 10, 1 (2015). https://doi.org/10.1371/journal.pone.0118709

IAEA, Collection of accident reports. https://www.iaea.org/publications/search/topics/accident-reports

IAEA, The radiological accident in Goiania (Vienna, 1988)

F. Hérodin, M. Drouet, Exp. Hematol. 33, 1071 (2005). https://doi.org/10.1016/j.exphem.2005.04.007

A. Farese, T. MacVittie, Drugs Today 51, 537 (2015). https://doi.org/10.1358/dot.2015.51.9.2386730

E. Bey, M. Prat, P. Duhamel, M. Benderitter, M. Brachet, F. Trompier, P. Battaglini, I. Ernou, L. Boutin, M. Gourven, F. Tissedre, S. Créa, C.A. Mansour, T. De Revel, H. Carsin, P. Gourmelon, J.J. Lataillade, Wound Repair Regen. 18, 50 (2010). https://doi.org/10.1111/j.1524-475X.2009.00562.x

G.H. Anno, R.W. Young, R.M. Bloom, J.R. Mercier, Health Phys. 84, 565 (2003). https://doi.org/10.1097/00004032-200305000-00001

T. Fliedner, I. Friesecke, K. Beyrer, Medical management of radiation accidents—manual on the acute radiation syndrome (The British Institute of Radiology, London, 2001)

AFRRI, WinFRAT. https://afrri.usuhs.edu/sites/default/files/2020-07/winfratbrochure.pdf

M. Majewski, M. Rozgic, P. Ostheim, M. Port, M. Abend, Health Phys. 119, 64 (2020). https://doi.org/10.1097/HP.0000000000001247

BEIRV, Health effects of exposure to low levels of ionizing radiation (National Academies Press, Washington, D.C., 1990)

UNSCEAR, Report to the General Assembly, with Scientific Annexes, vol. I (New York, United Nations, 2006)

ICRP, ICRP Publication 60 (Pergamon, Oxford, 1991)

M. Port, B. Pieper, H.D. Dörr, A. Hübsch, M. Majewski, M. Abend, Radiat. Res. 189, 449 (2018). https://doi.org/10.1667/RR14936.1

M. Port, F. Hérodin, M. Drouet, M. Valente, M. Majewski, P. Ostheim, A. Lamkowski, S. Schüle, F. Forcheron, A. Tichy, I. Sirak, A. Malkova, B.V. Becker, D.A. Veit, S. Waldeck, C. Badie, G. O’Brien, H. Christiansen, J. Wichmann, G. Beutel, M. Davidkova, S. Doucha-Senf, M. Abend, Radiat. Res. (2021). https://doi.org/10.1667/RADE-20-00217.1

M. Port, M. Majewski, M. Abend, Radiat. Prot. Dosim. (2019). https://doi.org/10.1093/rpd/ncz058

M. Abend, M. Port, Health Phys. 111, 183 (2016). https://doi.org/10.1097/HP.0000000000000454

P. M. Abend M, Blakely WF, Ostheim P, Schüle S, J. Radiol. Prot. (2021) (under review)

H.M. Swartz, A.B. Flood, V.K. Singh, S.G. Swarts, Health Phys. 119, 72 (2020). https://doi.org/10.1097/HP.0000000000001244

N. Dainiak, J. Radiat. Res. 59, ii54 (2018). https://doi.org/10.1093/jrr/rry004

V. Singh, P. Santiago, M. Simas, M. Garcia, O. Fatanmi, S. Wise, T. Seed, J. Radiat. Cancer Res. 9, 132 (2018). https://doi.org/10.4103/jrcr.jrcr_26_18

N. Dainiak, R.N. Gent, Z. Carr, R. Schneider, J. Bader, E. Buglova, N. Chao, C.N. Coleman, A. Ganser, C. Gorin, M. Hauer-Jensen, L.A. Huff, P. Lillis-Hearne, K. Maekawa, J. Nemhauser, R. Powles, H. Schünemann, A. Shapiro, L. Stenke, N. Valverde, D. Weinstock, D. White, J. Albanese, V. Meineke, Disaster Med. Public Health Prep. 5, 202 (2011). https://doi.org/10.1001/dmp.2011.68

A.L. DiCarlo, C. Maher, J.L. Hick, D. Hanfling, N. Dainiak, N. Chao, J.L. Bader, C.N. Coleman, D.M. Weinstock, Disaster Med. Public Health Prep. (2011). https://doi.org/10.1001/dmp.2011.17

M.E. Rea, R.M. Gougelet, R.J. Nicolalde, J.A. Geiling, H.M. Swartz, Health Phys. 98, 136 (2010). https://doi.org/10.1097/HP.0b013e3181b2840b

ICRU, ICRU Report 94—methods for initial-phase assessment of individual doses following acute exposure to ionizing radiation (2019)

M. Akashi, T. Hirama, S. Tanosaki, N. Kuroiwa, K. Nakagawa, H. Tsuji, H. Kato, S. Yamada, T. Kamata, T. Kinugasa, H. Ariga, K. Maekawa, G. Suzuki, H. Tsujii, J. Radiat. Res. 42(Suppl), 157 (2001). https://doi.org/10.1269/jrr.42.s157

IAEA, The criticality accident in Sarov (Vienna, 2001)

IAEA, Cytogenetic dosimetry: applications in preparedness for and response to radiation emergencies (Vienna, 2011)

ISO, ISO 19238:2014 : Radiation protection—performance criteria for service laboratories performing biological dosimetry by cytogenetics (2014)

ISO, ISO 17099:2014 : Radiological protection—performance criteria for laboratories using the cytokinesis block micronucleus (CBMN) assay in peripheral blood lymphocytes for biological dosimetry (2014)

ISO, ISO 21243:2008 : Radiation protection—performance criteria for laboratories performing cytogenetic triage for assessment of mass casualties in radiological or nuclear emergencies—general principles and application to dicentric assay (2008)

R. Kanda, I. Hayata, D.C. Lloyd, Int. J. Radiat. Biol. 75, 441 (1999). https://doi.org/10.1080/095530099140366

D.C. Lloyd, A.A. Edwards, J.E. Moquet, Y.C. Guerrero-Carbajal, Appl. Radiat. Isot. 52, 1107 (2000). https://doi.org/10.1016/S0969-8043(00)00054-3

F.N. Flegal, Y. Devantier, J.P. McNamee, R.C. Wilkins, Health Phys. 98, 276 (2010). https://doi.org/10.1097/HP.0b013e3181aba9c7

H. Romm, E. Ainsbury, A. Bajinskis, S. Barnard, J.F. Barquinero, L. Barrios, C. Beinke, R. Puig-Casanovas, M. Deperas-Kaminska, E. Gregoire, U. Oestreicher, C. Lindholm, J. Moquet, K. Rothkamm, S. Sommer, H. Thierens, A. Vral, V. Vandersickel, A. Wojcik, Radiat. Environ. Biophys. 53, 241 (2014). https://doi.org/10.1007/s00411-014-0519-8

U. Kulka, A. Wojcik, M. Di Giorgio, R. Wilkins, Y. Suto, S. Jang, L. Quing-Jie, L. Jiaxiang, E. Ainsbury, C. Woda, L. Roy, C. Li, D. Lloyd, Z. Carr, Radiat. Prot. Dosim. 182, 128 (2018). https://doi.org/10.1093/RPD/NCY137

P.M. Sharma, B. Ponnaiya, M. Taveras, I. Shuryak, H. Turner, D.J. Brenner, PLoS ONE 10, 1 (2015). https://doi.org/10.1371/journal.pone.0121083

E. Royba, M. Repin, S. Pampou, C. Karan, D.J. Brenner, G. Garty, Radiat. Res. 192, 311 (2019). https://doi.org/10.1667/RR15266.1

M. Repin, S. Pampou, D.J. Brenner, G. Garty, J. Radiat. Res. 61, 68 (2019). https://doi.org/10.1093/jrr/rrz074

ISO, ISO 20046:2019: performance criteria for laboratories using fluorescence in situ hybridization translocation assay for assessment of exposure to ionizing radiation (2019)

K. Rothkamm, C. Beinke, H. Romm, C. Badie, Y. Balagurunathan, S. Barnard, N. Bernard, H. Boulay-Greene, M. Brengues, A. De Amicis, S. De Sanctis, R. Greither, F. Herodin, A. Jones, S. Kabacik, T. Knie, U. Kulka, F. Lista, P. Martigne, A. Missel, J. Moquet, U. Oestreicher, A. Peinnequin, T. Poyot, U. Roessler, H. Scherthan, B. Terbrueggen, H. Thierens, M. Valente, A. Vral, F. Zenhausern, V. Meineke, H. Braselmann, M. Abend, Radiat. Res. 180, 111 (2013). https://doi.org/10.1667/RR3231.1

M. Abend, S.A. Amundson, C. Badie, K. Brzoska, R. Hargitai, R. Kriehuber, S. Schüle, E. Kis, S.A. Ghandhi, K. Lumniczky, S.R. Morton, G. O’Brien, D. Oskamp, P. Ostheim, C. Siebenwirth, I. Shuryak, T. Szatmári, M. Unverricht-Yeboah, E. Ainsbury, C. Bassinet, U. Kulka, U. Oestreicher, Y. Ristic, F. Trompier, A. Wojcik, L. Waldner, M. Port, Sci. Rep. (2021). https://doi.org/10.1038/s41598-021-88403-4

S.A. Amundson, Int. J. Radiat. Biol. (2021). https://doi.org/10.1080/09553002.2021.1928784

L.A. Beaton, C. Ferrarotto, B.C. Kutzner, J.P. McNamee, P.V. Bellier, R.C. Wilkins, Mutat. Res. Genet. Toxicol. Environ. Mutagen. 756, 192 (2013). https://doi.org/10.1016/j.mrgentox.2013.04.002

M.A. Rodrigues, C.E. Probst, L.A. Beaton-Green, R.C. Wilkins, Cytom. Part A 89, 653 (2016). https://doi.org/10.1002/cyto.a.22887

C.N. Parris, S. Adam Zahir, H. Al-Ali, E.C. Bourton, C. Plowman, P.N. Plowman, Cytom. Part A 87, 717 (2015). https://doi.org/10.1002/cyto.a.22697

A. Jaworska, A. Wojcik, E. Ainsbury, P. Fattibene, C. Lindholm, U. Oestreicher, Guidance for using MULTIBIODOSE tools in emergencies (2013)

A. Jaworska, E.A. Ainsbury, P. Fattibene, C. Lindholm, U. Oestreicher, K. Rothkamm, H. Romm, H. Thierens, F. Trompier, P. Voisin, A. Vral, C. Woda, A. Wojcik, Radiat. Prot. Dosim. 164, 165 (2015). https://doi.org/10.1093/rpd/ncu294

ICRU, ICRU Report 68—retrospective assessment of exposure to ionising radiation (2002)

T. Kubiak, Curr. Top. Biophys. 41, 11 (2018). https://doi.org/10.2478/ctb-2018-0002

A. Romanyukha, F. Trompier, R. Reyes, Radiat. Environ. Biophys. 53, 305 (2014). https://doi.org/10.1007/s00411-013-0511-8

H. Wolfson, R. Ahmad, Y. Twig, B. Williams, A. Blank, Health Phys. 108, 326 (2015). https://doi.org/10.1097/HP.0000000000000187

J.M. Brady, N.O. Aarestad, H.M. Swartz, Health Phys. 15, 43 (1968). https://doi.org/10.1097/00004032-196807000-00007

P. Fattibene, A. Wieser, E. Adolfsson, L.A. Benevides, M. Brai, F. Callens, V. Chumak, B. Ciesielski, S. Della Monaca, K. Emerich, H. Gustafsson, Y. Hirai, M. Hoshi, A. Israelsson, A. Ivannikov, D. Ivanov, J. Kaminska, W. Ke, E. Lund, M. Marrale, L. Martens, C. Miyazawa, N. Nakamura, W. Panzer, S. Pivovarov, R.A. Reyes, M. Rodzi, A.A. Romanyukha, A. Rukhin, S. Sholom, V. Skvortsov, V. Stepanenko, M.A. Tarpan, H. Thierens, S. Toyoda, F. Trompier, E. Verdi, K. Zhumadilov, Radiat. Meas. 46, 765 (2011). https://doi.org/10.1016/j.radmeas.2011.05.001

A. Wieser, P. Fattibene, E.A. Shishkina, D.V. Ivanov, V. De Coste, A. Güttler, S. Onori, Radiat. Meas. 43, 731 (2008). https://doi.org/10.1016/j.radmeas.2008.01.032

ISO, ISO 13304-1:2020: Radiological protection—minimum criteria for electron paramagnetic resonance (EPR) spectroscopy for retrospective dosimetry of ionizing radiation—part 1: general principles (2020)

ISO, ISO 13304-2:2020: Radiological protection—minimum criteria for electron paramagnetic resonance (EPR) spectroscopy for retrospective dosimetry of ionizing radiation—part 2: ex vivo human tooth enamel dosimetry (2020)

A.A. Romanyukha, D.A. Schauer, EPR 21st century (Elsevier, 2002), p. 603–612

T. De, A. Romanyukha, F. Trompier, B. Pass, P. Misra, Appl. Magn. Reson. 44, 375 (2013). https://doi.org/10.1007/s00723-012-0379-9

V. Guilarte, F. Trompier, M. Duval, PLoS ONE 11, 1 (2016). https://doi.org/10.1371/journal.pone.0150346

Y. Hirai, K.A. Cordova, Y. Kodama, K. Hamasaki, A.A. Awa, M. Tomonaga, M. Mine, H.M. Cullings, N. Nakamura, Int. J. Radiat. Biol. 95, 321 (2018). https://doi.org/10.1080/09553002.2019.1552807

Y. Hirai, Y. Kodama, H.M. Cullings, C. Miyazawa, N. Nakamura, J. Radiat. Res. 52, 600 (2011). https://doi.org/10.1269/jrr.10144

G. Gualtieri, S. Colacicchi, R. Sgattoni, M. Giannoni, Appl. Radiat. Isot. 55, 71 (2001). https://doi.org/10.1016/S0969-8043(00)00351-1

IAEA, The Radiological Accident in Nueva Aldea (2009)

A. Romanyukha, C.A. Mitchell, D.A. Schauer, L. Romanyukha, H.M. Swartz, Health Phys. 93, 631 (2007). https://doi.org/10.1097/01.HP.0000269507.08343.85

F. Trompier, F. Queinnec, E. Bey, T. De Revel, J.J. Lataillade, I. Clairand, M. Benderitter, J.F. Bottollier-Depois, Health Phys. 106, 798 (2014). https://doi.org/10.1097/HP.0000000000000110

IAEA, The Radiological Accident in Chilca (2018)

S. Sholom, V. Chumak, M. Desrosiers, A. Bouville, Radiat. Prot. Dosim. 120, 210 (2006). https://doi.org/10.1093/rpd/nci678

M. Miyake, Y. Nakai, I. Yamaguchi, H. Hirata, N. Kunugita, B.B. Williams, H.M. Swartz, Radiat. Prot. Dosim. 172, 248 (2016). https://doi.org/10.1093/rpd/ncw214

S. Toyoda, M. Murahashi, M. Natsuhori, S. Ito, A. Ivannikov, A. Todaka, Radiat. Prot. Dosim. 186, 48 (2019). https://doi.org/10.1093/rpd/ncz037

M.O. Degteva, L.R. Anspaugh, A.V. Akleyev, P. Jacob, D.V. Ivanov, A. Wieser, M.I. Vorobiova, E.A. Shishkina, V.A. Shved, A. Vozilova, S.N. Bayankin, B.A. Napier, Health Phys. 88, 139 (2005). https://doi.org/10.1097/01.HP.0000146612.69488.9c

A.A. Romanyukha, E.A. Ignatiev, E.K. Vasilenko, E.G. Drozhko, A. Wieser, P. Jacob, I.B. Keirim-Markus, E.D. Kleschenko, N. Nakamura, C. Miyazawa, Health Phys. 78, 15 (2000). https://doi.org/10.1097/00004032-200001000-00004

A. Wieser, E. Vasilenko, P. Fattibene, S. Bayankin, N. El-Faramawy, D. Ivanov, P. Jacob, V. Knyazev, S. Onori, M.C. Pressello, A. Romanyukha, M. Smetanin, A. Ulanovsky, Radiat. Environ. Biophys. 44, 279 (2006). https://doi.org/10.1007/s00411-005-0024-1

A. Ivannikov, K. Zhumadilov, E. Tieliewuhan, L. Jiao, D. Zharlyganova, K.N. Apsalikov, G. Berekenova, Z. Zhumadilov, S. Toyoda, C. Miyazawa, V. Skvortsov, V. Stepanenko, S. Endo, K. Tanaka, M. Hoshi, J. Radiat. Res. (2006). https://doi.org/10.1269/jrr.47.A39

S. Sholom, M. Desrosiers, A. Bouville, N. Luckyanov, V. Chumak, S.L. Simon, Radiat. Meas. 42, 1037 (2007). https://doi.org/10.1016/j.radmeas.2007.05.007

K. Zhumadilov, A. Ivannikov, V. Stepanenko, D. Zharlyganova, S. Toyoda, Z. Zhumadilov, M. Hoshi, J. Radiat. Res. 54, 775 (2013). https://doi.org/10.1093/jrr/rrt008

E.I. Tolstykh, E.A. Shishkina, M.O. Degteva, D.V. Ivanov, V.A. Shved, S.N. Bayankin, L.R. Anspaugh, B.A. Napier, A. Wieser, P. Jacob, Health Phys. 85, 409 (2003). https://doi.org/10.1097/00004032-200310000-00004

E.A. Shishkina, A.Y. Volchkova, Y.S. Timofeev, P. Fattibene, A. Wieser, D.V. Ivanov, V.A. Krivoschapov, V.I. Zalyapin, S. Della Monaca, V. De Coste, M.O. Degteva, L.R. Anspaugh, Radiat. Environ. Biophys. 55, 477 (2016). https://doi.org/10.1007/s00411-016-0666-1

A. Giussani, M.A. Lopez, H. Romm, A. Testa, E.A. Ainsbury, M. Degteva, S. Della Monaca, G. Etherington, P. Fattibene, I. Güclu, A. Jaworska, D.C. Lloyd, I. Malátová, S. McComish, D. Melo, J. Osko, A. Rojo, S. Roch-Lefevre, L. Roy, E. Shishkina, N. Sotnik, S.Y. Tolmachev, A. Wieser, C. Woda, M. Youngman, Radiat. Environ. Biophys. 59, 357 (2020). https://doi.org/10.1007/s00411-020-00845-y

I. Clairand, C. Huet, F. Trompier, J.F. Bottollier-Depois, Radiat. Meas. 43, 698 (2008). https://doi.org/10.1016/j.radmeas.2007.12.051

H.M. Swartz, A.B. Flood, B.B. Williams, V. Meineke, H. Dörr, Health Phys. 106, 755 (2014). https://doi.org/10.1097/HP.0000000000000069

F. Trompier, J. Sadlo, J. Michalik, W. Stachowicz, A. Mazal, I. Clairand, J. Rostkowska, W. Bulski, A. Kulakowski, J. Sluszniak, S. Gozdz, A. Wojcik, Radiat. Meas. 42, 1025 (2007). https://doi.org/10.1016/j.radmeas.2007.05.005

A. Marciniak, B. Ciesielski, M. Juniewicz, A. Prawdzik-Dampc, M. Sawczak, Radiat. Environ. Biophys. 58, 287 (2019). https://doi.org/10.1007/s00411-019-00777-2

S. Sholom, S.W.S. McKeever, Radiat. Meas. 88, 41 (2016). https://doi.org/10.1016/j.radmeas.2016.02.014

S. Sholom, S.W.S. McKeever, Radiat. Prot. Dosim. (2019). https://doi.org/10.1093/rpd/ncz019

M. Zdravkova, N. Crokart, F. Trompier, N. Beghein, B. Gallez, R. Debuyst, Phys. Med. Biol. 49, 2891 (2004). https://doi.org/10.1088/0031-9155/49/13/009

A. Romanyukha, F. Trompier, R. Reyes, D. Christensen, C. Iddins, S. Sugarman, Radiat. Environ. Biophys. 53, 755 (2014). https://doi.org/10.1007/s00411-014-0553-6

J. Guo, X. Luan, Y. Tian, L. Ma, X. Bi, J. Zou, G. Dong, Y. Liu, Y. Li, J. Ning, K. Wu, Sci. Rep. 11, 1 (2021). https://doi.org/10.1038/s41598-021-82462-3

M. Umakoshi, I. Yamaguchi, H. Hirata, N. Kunugita, B.B. Williams, H.M. Swartz, M. Miyake, Health Phys. 113, 262 (2017). https://doi.org/10.1097/HP.0000000000000698

H.M. Swartz, A. Iwasaki, T. Walczak, E. Demidenko, I. Salikov, P. Lesniewski, P. Starewicz, D. Schauer, A. Romanyukha, Appl. Radiat. Isot. 62, 293 (2005). https://doi.org/10.1016/j.apradiso.2004.08.016

B.B. Williams, R. Dong, A.B. Flood, O. Grinberg, M. Kmiec, P.N. Lesniewski, T.P. Matthews, R.J. Nicolalde, T. Raynolds, I.K. Salikhov, H.M. Swartz, Radiat. Meas. 46, 772 (2011). https://doi.org/10.1016/j.radmeas.2011.03.009

M. Zdravkova, B. Gallez, R. Debuyst, Radiat. Meas. 39, 143 (2005). https://doi.org/10.1016/j.radmeas.2004.05.003

L. Zehr, Mandible Osteoradionecrosis. https://www.statpearls.com/ArticleLibrary/viewarticle/26415. Accessed 13 July 2021

S.G. Swarts, J.W. Sidabras, O. Grinberg, D.S. Tipikin, M.M. Kmiec, S.V. Petryakov, W. Schreiber, V.A. Wood, B.B. Williams, A.B. Flood, H.M. Swartz, Health Phys. 115, 140 (2018). https://doi.org/10.1097/HP.0000000000000874

B.C. Shirley, J.H.M. Knoll, J. Moquet, E. Ainsbury, N.-D. Pham, F. Norton, R.C. Wilkins, P.K. Rogan, Int. J. Radiat. Biol. 96, 1492 (2020). https://doi.org/10.1080/09553002.2020.1820611

E. Macaeva, M. Mysara, W.H. De Vos, S. Baatout, R. Quintens, Int. J. Radiat. Biol. 95, 64 (2019). https://doi.org/10.1080/09553002.2018.1511926

M. Port, P. Ostheim, M. Majewski, T. Voss, J. Haupt, A. Lamkowski, M. Abend, Radiat. Res. 192, 208 (2019). https://doi.org/10.1667/RR15360.1

E.A. Ainsbury, E. Bakhanova, J.F. Barquinero, M. Brai, V. Chumak, V. Correcher, F. Darroudi, P. Fattibene, G. Gruel, I. Guclu, S. Horn, A. Jaworska, U. Kulka, C. Lindholm, D. Lloyd, A. Longo, M. Marrale, O. Monteiro Gil, U. Oestreicher, J. Pajic, B. Rakic, H. Romm, F. Trompier, I. Veronese, P. Voisin, A. Vral, C.A. Whitehouse, A. Wieser, C. Woda, A. Wojcik, K. Rothkamm, Radiat. Prot. Dosim. 147, 573 (2011). https://doi.org/10.1093/rpd/ncq499

I.K. Bailiff, S. Sholom, S.W.S. McKeever, Radiat. Meas. 94, 83 (2016). https://doi.org/10.1016/j.radmeas.2016.09.004

IAEA, The Radiological Accident at the Irradiation Facility in Nesvizh (1996)

N.C. Gorin, T.M. Fliedner, P. Gourmelon, A. Ganser, V. Meineke, B. Sirohi, R. Powles, J. Apperley, Ann. Hematol. 85, 671 (2006). https://doi.org/10.1007/s00277-006-0153-x

IAEA, The Radiological Accident in Lia, Georgia (2014)

J.M. Sullivan, P.G.S. Prasanna, M.B. Grace, L.K. Wathen, R.L. Wallace, J.F. Koerner, C.N. Coleman, Health Phys. 105, 540 (2013). https://doi.org/10.1097/HP.0b013e31829cf221

U. Kulka, M. Abend, E. Ainsbury, C. Badie, J.F. Barquinero, L. Barrios, C. Beinke, E. Bortolin, A. Cucu, A. De Amicis, I. Domínguez, P. Fattibene, A.M. Frøvig, E. Gregoire, K. Guogyte, V. Hadjidekova, A. Jaworska, R. Kriehuber, C. Lindholm, D. Lloyd, K. Lumniczky, F. Lyng, R. Meschini, S. Mörtl, S. Della Monaca, O. Monteiro Gil, A. Montoro, J. Moquet, M. Moreno, U. Oestreicher, F. Palitti, G. Pantelias, C. Patrono, L. Piqueret-Stephan, M. Port, M.J. Prieto, R. Quintens, M. Ricoul, H. Romm, L. Roy, G. Sáfrány, L. Sabatier, N. Sebastià, S. Sommer, G. Terzoudi, A. Testa, H. Thierens, I. Turai, F. Trompier, M. Valente, P. Vaz, P. Voisin, A. Vral, C. Woda, D. Zafiropoulos, A. Wojcik, Int. J. Radiat. Biol. 93, 2 (2017). https://doi.org/10.1080/09553002.2016.1230239

R.C. Wilkins, Z. Carr, D.C. Lloyd, Radiat. Prot. Dosim. 172, 47 (2016). https://doi.org/10.1093/rpd/ncw154

IAEA, Response and Assistance Network (RANET). https://www.iaea.org/services/networks/ranet. Accessed 13 July 2021

Acknowledgements

We thank the anonymous reviewers for their significant contributions to improving this paper.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Beinke, C., Siebenwirth, C., Abend, M. et al. Contribution of Biological and EPR Dosimetry to the Medical Management Support of Acute Radiation Health Effects. Appl Magn Reson 53, 265–287 (2022). https://doi.org/10.1007/s00723-021-01457-5
