Abstract

Long-term monitoring of climate data is significant for grasping the law and development trend of climate change and guaranteeing food security. However, some weather stations lack monitoring data for even decades. In this study, 62 years of historical monitoring data from 105 weather stations in Xinjiang were used for missing sequence prediction, validating proposed data reconstruction tool. First of all, study area was divided into three parts according to the climatic characteristics and geographical locations. A spatio-temporal multilayer perceptron (MLP) was established to reconstruct meteorological data with three time scales (Short term, cycle and long term) and one spatio dimension as inputing (rolling predictions, one step predicts one day), filling in long sequence blank data. By designing an end-to-end model to autonomously detect the locations of missing data and make rolling predictions,we obtained complete meteorological monitoring data of Xinjiang from 1961 to 2022. Seven kinds of parameter reconstructed include maximum temperature (Max_T), minimum temperature (Min_T), mean temperature (Ave _ T), average water vapor pressure (Ave _ WVP), relative humidity (Ave _ RH), average wind speed (10 m Ave _ WS), and sunshine duration (Sun_H). Contrasted the prediction accuracy of the model with general MLP and LSTM, results shows that, in the seven types of parameters, designed spatio-temporal MLP decreases MAE and MSE by 7.61% and 4.80% respectively. The quality of reconstructed data was evaluated by calculating correlation coefficient with the monitored sequences of nearest station,determining the applicable meteorological parameters of the model according to the results. Results show that,proposed model reached satisfied average correlation coefficient for Max_T, Min_T, Ave _ T and Ave _ WVP parameters are 0.969, 0.961, 0.971 and 0.942 respectively. The average correlation coefficient of Sun_H and Ave _ RH are 0.720 and 0.789. Although it is difficult to predict extreme values, it can still capture the period and trend; the reconstruction effect of 10 m Ave _ WS is poor, with the average similarity of 0.488. Proposed method is applicable to reconstruct Max_T, Min_T, Ave _ T and Ave _ WVP, but not recommended to reconstruct Sun_H, Ave _ RH and Ave _ WS.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Agriculture, as the most fundamental industry for human, is facing a serious threat of climate change.Meteorological disasters account for over 70% of the natural disasters globally,have caused serious economic losses (Qin et al. 2002). More severely, global climate is changing dramatically,due to amount of greenhouse gases human activities producted. Because of climate warming, the frequency and intensity of drought and flood are increasing, and the harm to agricultural production is increasingly intensified (IPCC. 2012). From 2010 to 2017, global average annual economic losses due to drought reached US $23.125 billion, with annual grain production cuts ranging from millions of tons to more than 30 million tons (Buda et al. 2018).In past 40 years, flooding events caused over a trillion dollars damage as well (UNDRR. 2020).Monitoring and analysis of meteorological data are able to reduce food and economic losses (Ziolkowska Jadwiga and Jesus 2018).

In order to effectively guide agricultural production, meteorological monitoring usually includes meteorological parameters such as temperature, humidity, water vapor pressure, wind speed and sunshine.What is more, conducting research on climate forecasting requires long-term, large-scale and comprehensive climate data (Bonnet et al. 2020). Governments and scientific communities have been committed to the construction of meteorological databases (Anderson et al. 2008), a large number of professional meteorological monitoring stations have been established around the world, including China. However, there is much missing historical data due to temporal differences of monitoring station establishment, sensor failure, and other reasons.Thus, it is crucial to reconstruct the complete meteorological monitoring data.

Usually, researchers use interpolation method combined with manual correction to reconstruct missing meteorological data.Which not only consumes a lot of manpower, but also, due to the spatial variability of geographical conditions, the data results reconstructed by the traditional method are too smooth and inaccurate (Yao et al. 2023). Machine learning is a better interpolation tool (Li et al. 2023),but which performed poorly when deal with long-sequence missing data scenarios. A simple and efficient method for data reconstruction, deep neural network, has a great potential in meteorological data reconstruction tasks. The neurons in the hidden layers of the neural network can constantly update the weights under supervision of true value, learning high-dimensional association among different data, and more accurately complement missing data (Rajaee et al. 2019). In the task of reconstructing monitoring data of turbomechanical particle flow. Deep learning method was more accurate compared with six commonly used interpolation methods (Ghasem and Nader 2022). In fact, in the field of weather forecasting, some available deep learning models have been published. Training baseed on large amounts of data, FourCastNet2 can calculate the next 24 h of climate for 100 sites in just 7 s (Jaideep et al. 2022),orders of magnitude faster than the numerical weather prediction (NWP). The Pangu model proposed by Huawei team can accurately and quickly predict the global climate by learning global meteorological monitoring data of past four decades (Bi et al. 2023).

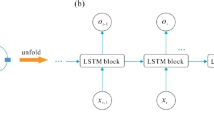

Selection and design of neural networks is a key step in reconstructing climate data. Ghose selected recursive neural network (RNN) for groundwater level prediction (Ghose et al. 2018).Vu reconstructed 50 years groundwater level data in Normandy (France) based on the long and short-term memory (LSTM) (Vu et al. 2020). Differently, meteorological parameters are greatly spatially correlated, as a typical spatiotemporal sequence.Nature Subissue Geoscience published related research, which using image restoration technology combined with HadCRUT4 global historical temperature grid dataset, reconstructed complete global monthly grid temperature, and the reconstructed data sequence has extremely high correlation with the non-reconstructed data (Kadow and Ulbrich 2020). Continuity of time and spatial correlation must be considered simultaneously in the data reconstruction.Most of the frontier studies of spatio-temporal prediction are modeling based on graph neural network (GNN) and Transformer (Zhisong & Li. 2021). But they have high computational complexity and memory overhead. Although MLP is a relatively simple deep learning model, the ability of spatiotemporal prediction is not inferior to complex models in recent studies.The MLPST model shows that, compared with RNN, GNN and Transformer, it can be very accurate even completely based on MLP (Zhang et al. 2023).Usually, different type of time series data have different characteristics, and screening some obvious characteristics can significantly improve the model performance (Tang et al. 2024).Therefore, in specific tasks, feature engineering and special model design need to be carried out to improve the prediction performance of the model.

To meet the demand of meteorological data fast reconstruction in agricultural productions, reduce the workload of meteorologic data reconstruction, we designed a spatio-temporal MLP as a reconstruction tool. A total of 143 missing data (44 weather stations) were reconstructed obtained from three divided study areas in Xinjiang, which contrasted with general MLP,testing the availability of the proposed model while obtaining the reconstruction results. The parameters reconstructed include Max_T, Min_T, Ave _ T, Ave _ WVP, Ave _ RH, 10 m Ave _ WS, and Sun_H. Inputs make up with short-term, cyclical, long-term trends and the same time data of weather stations with the highest sequence similarity, length of filled sequences ranged from one month to 38 years.The confidence of the results is measured by the correlation with the most adjacent station. Finally, datasets automatic construction module, automatic training module, missing positions automatic query module, and automatic rolling prediction module are integrated, realizing end-to-end data reconstruction and published as a micro service.

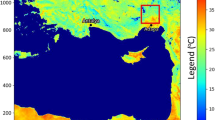

2 Study area and data

The study area is located in Xinjiang, northwest of China. Which is one of the most important cotton production bases in China and most developed drought agricultural technology region (Liu. 2022). Located in the hinterland of Eurasia, due to complex terrain and frequent weather system activity, drought is the main climatic feature of this region (Weiyi et al. 2008). The Tianshan Mountains crosses the central region, divides Xinjiang into northern Xinjiang and southern Xinjiang. The water vapor could enter northern Xinjiang but hardly reach southern Xinjiang, so the drought degree difference of drought between the north and the south is obvious (Wang. 2023). Yili River Valley located in west of the Tianshan Mountains in Xinjiang, surrounded by mountains on three sides, with abundant precipitation, forming special climate (Yan et al. 2017).

105 weather stations in this study are distributed in three areas: northern Xinjiang (A), southern Xinjiang (B) and Yili (C), having 48, 44, 13 sites respectively, recorded meteorological data for nearly 62 years (Fig. 1). Among those, 44 stations exist data missing in varying degrees, with missing parameter types and missing duration varied. Table 1 listed codes and parameters of weather stations with missing data. Of these, 16 stations exist Max_T and Min_T data missing. The number of Ave_T is 13, Ave_WVP and Ave_RH is 23, 10 m Ave_WS is 28, Sun_H is 24. In totally, we need to reconstruct a total of 143 sequences,with time spans from 1961 to 2022. Figure 2 corresponding to Table 1, shows that the specific missing period, these missing lengths are long, the missing location is different, increased the difficulty of the reconstruction.

3 Methodology and model design

Traditional meteorological data reconstruction methods are time-consuming, at the same time, professional and experienced personnel are required to complete it. Which is obviously unrealistic for software engineer or other relevant personnels. Spatio-temporal MLP proposed in this study can mine rules from existing data, which can give Non-professional people the ability to reconstruct missing meteorological data.

3.1 MLP

MLP is the most classic deep neural network, widely used to solve the classification and regression problems of nonlinearity. Compared to other deeplearning models, the network structure of MLP is very simple, and calculation speed is very fast. Whose structure (Fig. 3 lower-right) includes input layer, hidden layer and output layer, each layer contains several neurons, and neurons in the upper and lower layers are connected to each other for information exchange (Benedict. 1988). When training it, the weight parameters of the neurons are constantly updated until a good fit is achieved. Forward propagation and backpropagation are required to complete each time the weights are updated (Rumelhart et al. 1986). Forward propagation takes the outputs of the previous layer as the inputs of the next layer, calculates the outputs of the next layer according to the weight. Consider the layer1 and layer2 as examples, outputs of the layer2 is:

where σ is the activation function, which is the key for MLP to achieve nonlinear fitting. The most commonly used activation function, ReLU, is selected in this study (Glorot et al. 2011):

Prediction errors is measured by cost function LOSS. The back propagation process is based on the chain conduction law, calculating gradients each layer parameters in the network to represent the influence of the parameters on the prediction errors, and updating the weight through multiply by learning rate α, until the loss value no longer drops. Which can be considered that the MLP model fitting has reached the optimal solution. The initial learning rate selected for this study was 0.001. The backpropagation process is:

3.2 Spatio-temporal MLP

Past studies have proved that,climate shows a short-term dependence, and which is cyclical and shows a trend in the long term (Kai et al. 2020), and closely associated with the adjacent site data. According to these experiences,we designed four modules based on the MLP (Fig. 3): Spatial MLP, Short-term MLP, Periodic MLP, Trend MLP. Time series, with different time scales resampled, were entered separately Short-term MLP, Periodic MLP, Trend MLP models, extracting short-term trends, cyclical and long-term trend characteristics of historical data respectively. Monitoring values from nearby stations were fed into the Spatial MLP module to obtain spatial associations between them. Results of the four modules are combined as inputs of predictive header, two fully connected layers. Which enable spatio-temporal association in the sequence is captured.

Dataset size and input sequence length are negatively correlated, need to be balanced when designing the inputs. In our model, all of the inputs length were set to 8, ensuring input format is unified. Inputs of short-term MLP are values last 8 days. Inputs of Periodic MLP and Trend MLP are values resampled according to 90 days intervals and 365 days respectively. Inputs of Spatial MLP were the monitoring values of eight stations with highest similarity to the target sequence within the study region. Using pyramid structure, the number of neurons in each layer is half the number of neurons in the previous layer, and which is usually able to extract features at different scales more effectively (Yang et al. 2020). Rolling prediction, which mean each step to only predicts one day, and then predicted value is used as part of new input to predict the next day, gradually supplementing all data.

All meteorological data were standardized according to the following formula:

where \({x}_{i}{}^{normal}\) is normalized value,\({x}_{i}\) is actual value,\({x}_{\text{max}}\) and \({x}_{\text{min}}\) are maximum and minimum value of sequence, respectively. This normalization method standardizes the values to between 0–1, be able to eliminate the effect of dimension and negative values for model fitting.

When predicting, we restore the results,and output the dimensional results:

where \({y}_{i}{}^{pred}\) is predicted value,\({\widehat{y}}_{i}\) is output value of neural network.

3.3 Assessment methods

Meteorological similarity is measured by Euclidean distance of the sequence commonly,but there is a big difference between the different parameters. In order to standardize this index to 0–1, we define similarity based on Euclidean distance of two sequences:

SMmn represents the similarity of m sequence and n sequence, yi, y’i are the value of two sequences at the same time, respectively. n is the number of non-missing value. SM is closer to 1, the more similar, the closer to 0, the lower similarity. SM was used to select the inputs for the Spatial MLP module.

We used two common indicators, mean squared error (MSE) and mean absolute error (MAE), to evaluate the quality of the prediction:

where \({\text{y}}_{i}\) is the real measure of climate data; \({\widehat{y}}_{i}\) is the estimated value of climate data; and \({\overline{y} }_{i}\) is the mean of \({\text{y}}_{i}\). MAE and MSE were used to select the best number of hidden layers and evaluate the error of the reconstructed data.

When evaluating the credibility of the reconstructed data, in addition to MAE and MSE, we used the correlation coefficient as the evaluation index:

\({h}_{i}^{m}\) and \({h}_{i}^{n}\) are value of m sequence and n sequence,respectively, \({\overline{h} }_{i}^{m}\) and \({\overline{h} }_{i}^{n}\) is the average value of m sequence and n sequence, respectively. Correlation coefficient is closer to 1, the reconstructed data is more credible, and correlation coefficient closer to 0, the more unreliable it is. Based on reconstruction results, larger average correlation coefficient means more accuracy. Meanwhile, comparing the distribution of correlation coefficients in different tasks, the more concentrated the results, the better the robustness.

4 Application instances of reconstruction of missing climate data

4.1 Sub-task division

The reconstruction task was divided into 21 scenarios, 143 sub-tasks depending on the region and the parameters. Climate region A consists of 53 sub-tasks, among these, Max_T, Min_T and Ave_T take up 5 sub-tasks respectively; Ave_WVP and Ave_RH take up 6 sub-tasks respectively; Ave_WS and Sun_H take up 9 and 17 sub-tasks respectively.Climate region B has 75 sub-tasks, Max_T, Min_T, Ave_T, Ave_WVP, Ave_RH, Ave_WS, Sun_H take up 9, 9, 6, 15, 15, 15, 6 sub-tasks respectively. The numbers in climate region C are 2, 2, 2, 2, 2, 4, 1 respectively, total sub-tasks numbers were 15.

The SM of each sequence was calculated and derived as SM table. We show the SM of the target station and stations in the same region in Fig. 4 due to the large amount of table data. The more pronounced the yellow color, the higher the similarity, and the more pronounced blue the lower similarity, medium similarity shows green. Under all of the reconstruction scenarios, overall, the SM of these sequences ranged between 70.6% and 99.48%.Based on SM, 8 stations with the highest similarity to each target task, 143 groups in total. Following the spatiotemporal sampling method shown in Fig. 3, build model inputs.

4.2 Determine the number of MLP hidden layers

The number of layers of the MLP hidden layer is one of the most important parameters of model.A suitable number of hidden layers can enhance the ability of network to extract the data features, and then improve the recognition accuracy. While too many hidden layers can largely increasing the number of model parameters, and causing slower run speed of model. To determine the number of hidden layers, we randomly picked five datasets, testing MSE, MAE and training time of the model prediction. Numbers of hidden layers is seted from 1 to 7(training 1000 epochs). Figure 5 displays, when setting 1–4 hidden layers, MAE and MSE of the model did have a significant downward trend as the hidden layer increasing. But when number of hidden layers greater than 4, MAE and MSE showed little improvement, even rose on some datasets. This may be related to the appearance of gradient explosion when model is too deep.

Figure 6 shows, time consumption to complete training increased significantly with the increase of the number of hidden layers, on the five randomly selected datasets, which displays almost linear. Considering the results of the above trials, the number of hidden layers chosed for MLP is 4, and the longest time consumption for a single task is 519.35 s.

4.3 Train model and reconstruct missing data

Figure 7 shows our prediction process.Using pycharm2022.1 for our programming, we integrated multiple modules to implement end-to-end programs, with pre-processing data automatically, training model automatically,detecting missing data automatically,and reconstructing data automatically. The situation of missing data is complex, and the task of manually constructing datasets is large. Automatic data pre-processing model could generates datasets by reading data sheets according to task list, and completes normalization.Data were disrupted the order before entering.Automatic training model could Completes multiple tasks and saves as different parameter files.When predicting, missing data detection model could detects location of missing data.Later, according to detecting results, rolling prediction model automatically forward or backward predicting.

Tensorflow (Abadi et al. 2016) was selected to be development framework in this study.Using Adaptive Moment Estimation(Adam) optimizer to improve learning efficiency (Kingma and Ba 2014), it can adjust automatically the learning rate according to historical gradient information. At the beginning of training, the larger learning rate helps the model to converge quickly. While later, learning rate adjusts smaller to improve accuracy of model.Meanwhile, Adam normalized the weight parameters, which also alleviates overfitting. MSE was choosed to be loss function.As a skill of training, Dropout layer can effectively prevent model overfitting (Srivastava et al. 2014). In this study, the super-parameter of Dropout layers was set to 0.5.

Figure 8 shows reconstruction results of Max_T, Min_T, Ave_T, Ave_WVP, Ave_RH and Sun_H.Except Sun_H,from the figure, reconstructed sequences of other five parameters are indistinguishable from the real sequences. Sun_H usually represents time length,that the solar radiation above certain intensity. Which influenced by all kinds of meteorological factors, especially the change of the clouds. Our prediction values almost no 0 while measured exists some 0 values, can not mining to the occurrence of 0 values. But we can clearly see that, even without filtering, proposed model could dig out the cycle and trend laws. These reconstructed Sun_H data still useful. For Ave_WS (Fig. 9), model is difficult to predict it. Although Ave _ WS also has annual periodic changes in the long term, it is almost and unpredictable on a smaller time scale.

4.4 Evaluate model and quality of reconstructed data

We compared our designed model with LSTM and general MLP model in five completely random (both parameters and weather stations are random) reconstruction tasks.

As can be seen from Fig. 10, prediction error of spatio-temporal MLP is minimal, LSTM is followed, MLP has the largest error. Both MAE and MSE, design model takes lower error than general MLP in all tasks, and spatio-temporal structure improved the predictive power of the MLP model. Due to the obvious differences in values of different meteorological parameters, percentage of error reduction was used to measure precision improvement. Compared to the second-ranked LSTM, in the five tasks, MSE of per task decreased by 7.61% and MAE by 4.80%, in average.

Compared with the visual evaluation, the evaluation index can give more information about the reconstruction results. Assessing the quality of reconstructed data is very difficult due to the difficulty in tracing the real data of the past. By calculating correlation coefficient of sequences between reconstructed data and the nearest weather station data, credibility of reconstructed meteorological data was scientifically evaluated. A higher correlation coefficient indicates a higher confidence.

Correlation coefficients of all reconstructed sub-tasks are shown in Table 2. From the reconstruction effect of temperature,we very approach the results of Christopher (0.9941) (Kadow and Ulbrich 2020), even exceed theirs in 4 tasks of temperature reconstruction (45 in total). More importantly, the data we reconstructed are of daily scale, smaller than their time granularity (monthly). Our work demonstrates that MLP with special spatiotemporal design can better reconstructe climate data.

The consistency of evaluation indicators in different tasks is also one of the goals we pursue,which can help us to suggest parameter types that the model is suitable for reconstruction. Figure 11 shows the distribution of its correlation coefficient index.Which can be easily see, Max_T, Min_T, Ave_T, Ave_WVP shows excellent performance, with average correlation coefficient is over 0.9 and distribution is very concentrated; Ave_RH and Sun_H performance unstable, although average correlation coefficient is over 0.7 but dispersed; Ave_WS shows poor results, average correlation coefficient is around 0.5 and distributing is dispersed. Which shows that our model is very suitable for four parameters: Max_T, Min_T, Ave _ T, and Ave _ WVP, while Ave _ RH, Sun_H, and Ave _ WS are less suitable.

4.5 Release model

In order to provide convenient services for people in the agriculture field, the model accomplished in this study will published on the Agricultural Smart Brain platform as a tool.Which developed by Beijing Lianchuang Siyuan Measurement and control Technology Co., LTD, providing scientific research data, computing power and publishing AI micro-services for scientific researchers. Users can obtain it by purchasing access authority to the platform. The link of our microservices as follow: http: // 192. 168. 50. 201: 15000/ app/ services/ visionarytech/ test-1/ alg- 26bc86f42a137f8f

5 Conclusion

In order to reconstruct the long-term missing meteorological monitoring data in agricultural field, proposed an end-to-end rapid reconstruction method based on MLP.

Spatio-temporal datasets were built according to the similarity indicator SM, standardized data to ensure good performance of the model. Backbone was designed four MLP modules with 4-hidden layers to jointly learn short-term trends, periodicity, long-term trends, and spatial associations. Predictive head consisted with two fully connected layers. After that, the automatic preprocessing, automatic detection of missing data location, automatic model training and rolling prediction modules are coded and integrated to realize end-to-end long sequence reconstruction. Our model is able to complete a single reconstruction task within 10 min.

In contrast with MLP and LSTM, MAE and MSE obtained by our model were reduced by 7.61% and 4.80%, indicating that our design effectively improved the performance of MLP model and outperforms LSTM. Daily meteorological monitoring data of 44 meteorological stations (143 tasks) in Xinjiang from 1961 to 2022 were reconstructed using our method. The evaluation indexs show that, average correlation coefficient of Max_T, Min_T, Ave_T and Ave_WVP are 0.969,0.961,0.971, and 0.942 respectively,showing high consistency and high credibility; average correlation coefficient of Ave_RH and Sun_H are 0.720 and 0.789 respectively, showing low consistency and general credibility; average correlation coefficient of Ave_WS is 0.488, showing low consistency and low credibility.Which is recommended to use our method when reconstructing Max_T, Min_T, Ave _ T and Ave _ WVP, providing an important solution to solve the problem of missing data in agrometeorological field.

Finally, we released our model on Agricultural Smart Brain platform, provided users a tool of data reconstruction, in the form of micro service.

Code/data availability

Both the code and data are freely available by contacting the corresponding authors.

References

Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G, Isard M, Kudlur M, Levenberg J, Monga R, Moore S, Gordon Murray D, Steiner B, Tucker PA, Vasudevan V, Warden P, Wicke M, Yu Y, Zhang X (2016) TensorFlow: a system for large-scale machine learning. IEICE Trans Fundam Electron Commun Comput Sci. CoRR abs/1605.08695

Anderson SP, Bales RC, Duffy CJ (2008) Critical Zone Observatories: building a network to advance interdisciplinary study of Earth surface processes. Mineral Mag 72(1):7–10

Benedict A K (1988) Learning in the multilayer perceptron. J Phys A Math General 21(11). https://doi.org/10.1088/0305-4470/21/11/021

Bi K, Xie L, Zhang H, Chen X, Gu X, Tian Q (2023) Accurate medium-range global weather forecastingwith 3D neural networks. Nature 619:533–538. https://doi.org/10.1038/s41586-023-06185-3

Bonnet R, Bóe J, Habets F (2020) Influence of multidecadal variability on high and low flows: the case of the Seine basin. Hydrol Earth Syst Sci 24:1611–1631

Buda Su, Jinlong Huang T, Fischer Yanjun Wang, Kundzewicz Z, Zhai J, Sun Hemin, Anqian Wang X, Zeng Guojie Wang, Tao H, Gemmer M, Xiucang L, Jiang T (2018) Drought losses in China might double between the 1.5°C and 2.0°C warming. Proc Natl Acad Sci USA 115(42):10600–10605

Ghasem A, Nader M (2022) Reconstruction of particle image velocimetry data using flow-based features and validation index: a machine learning approach. Measurement Sci Technol, 33(1). https://doi.org/10.1088/1361-6501/ac2cf4

Ghose D, Das U, Roy P (2018) Modeling response of run off and evapotranspiration for predicting water table depth in arid region using dynamic recurrent neural network. Groundwater Sustainable Dev 6:263–269

Glorot X, Antoine B, Yoshua B (2011) Deep sparse rectifier neural networks. International Conference on Artificial Intelligence and Statistics. (Published in International Conference 14 June 2011 Computer Science, Biology)

IPCC (2012) Summary for policymakers∥Managing the Risks of Extreme Events and Disasters to Advance Climate Change Adaptation. A Special Report of Working Groups I and II of the Intergovernmental Panel on Climate Change, 1-19. Cambridge University Press, Cambridge

Jaideep P, Shashank S, Harrington P, Raja S, Chattopadhyay A, Morteza M, Thorsten K, Hall D, Li Z, Kamyar A, Pedram H, Karthik K, Animashree A (2022) FourCastNet: a global data-driven high-resolution weather model using adaptive Fourier neural operators. Preprint at https://arxiv.org/abs/2202.11214. Accessed Dec 2023

Kadow Christopher, Hall David Matthew, Ulbrich Uwe (2020) Artificial intelligence reconstructs missing climate information. Nat Geosci 13:408–413. https://doi.org/10.1038/s41561-020-0582-5

Kai L, Gege N, Sen Z (2020) Study on the Spatiotemporal Evolution of Temperature and Precipitation in China from 1951 to 2018. Adv Earth Sci 35(11):1113–1126. https://doi.org/10.11867/j.issn.1001-8166.2020.102

Kingma PD, Ba J (2014) Adam: a method for stochastic optimization. IEICE Trans Fundam Electron Commun Comput Sci. CoRR abs/1412.6980

Li C, Ren X, Zhao G (2023) Machine-Learning-Based Imputation Method for Filling Missing Values in Ground Meteorological Observation Data. Algorithms, 2023, 16 (9). https://doi.org/10.3390/a16090422

Liu Yi (2022) Build a national high-quality cotton production base. Xinjiang Daily, 2022–09–05 (001). https://doi.org/10.28887/n.cnki.nxjrb.2022.003680 (in chinese)

Qin DH, Ding YH, Wang SW, Wang SM, Dong GR, Lin ED, Liu CQ, She ZX, Sun HN, Wang SR, Wu GH (2002) Ecological and environmental change in West China and its response strategy. Adv Earth Sci 17(3):314–319 (in Chinese)

Rajaee T, Ebrahimi H, Nourani HV (2019) A review of the artificial intelligence methods in groundwater level modelling. J Hydrol 572:336–351

Rumelhart D, Hinton G, Williams R (1986) Learning representations by back-propagating errors. Nature 323:533–536. https://doi.org/10.1038/323533a0

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. J Machine Learn Res 15(1):1929–1958

Tang D, Zhan Y, Yang F (2024) A review of machine learning for modeling air quality: Overlooked but important issues. Atmospheric Research, 2024, 300, 107261. https://doi.org/10.1016/j.atmosres.2024.107261

UNDRR (2020) Human Cost of Disasters: an Overview of the Last 20 Years 2000–2019; United Nations for Disaster Risk Reduction (UNISDR): Geneva, Switzerland

Vu MT, Jardani A, Massei N, Fournier M (2020) Reconstruction of missing groundwater level data by using Long Short-Term Memory (LSTM) deep neural network. J Hydrol, 2020, (prepublish): 125776. https://doi.org/10.1016/j.jhydrol.2020.125776

Wang J (2023) Distribution and evolution characteristics of drought under the background of climate warming and humidification in Xinjiang. Arid Environ Monitor 37(01):15–21 (in chinese)

Weiyi M, Qinghong N, Hongzheng S (2008) Research on climate change characteristics and climate zoning methods in Xinjiang. Meteorological Calendar (10):67–73 (in chinese)

Yan J, Yan M, Cui D, Liu H, Chen C, Xia Q (2017) Analysis of temperature and precipitation trends in the Ili River Valley of Xinjiang in the past 55 years. Hydropower Energy Science 35(10):13–16+12

Yang C, Xu Y, Shi J, Dai B, Zhou B (2020) Temporal pyramid network for action recognition. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2020:588–597

Yao Z, Zhang T, Wu L, Wang X, Huang J (2023) Physics-Informed Deep Learning for Reconstruction of Spatial Missing Climate Information in the Antarctic. Atmosphere 14 (4). https://doi.org/10.3390/atmos14040658

Zhang Z, Huang Z, Hu Z, Zhao X, Wang W, Liu Z, Zhang J, Qin SJ, Zhao H (2023) MLPST: MLP is All You Need for Spatio-Temporal Prediction. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management (CIKM '23). Association for Computing Machinery, New York, NY, USA, 3381–3390. https://doi.org/10.1145/3583780.3614969

Zhisong P, Wei L (2021) Survey of Spatio-temporal Series Prediction Methods Based on Deep Learning. Data Acquisition and Processing 36 (03): 436–448. https://doi.org/10.16337/j.1004-9037.2021.03.003. (in Chinese)

Ziolkowska Jadwiga R, Jesus Z (2018) Importance of weather monitoring for agricultural decision-making - an exploratory behavioral study for Oklahoma Mesonet. J Sci Food Agric 13:4945–4954

Acknowledgements

Thanks to Beijing Lianchuang Siyuan Measurement and Control Technology Co., Ltd. and Beijing Zhiyu Chuangyi Co., Ltd. for their help in the release of micro service.

Funding

Thanks to financial support of Silk Road Economic Belt Innovation-Driven Development Pilot Zone, WuChangShi National Independent Innovation Demonstration Zone project(2022LQ04001).

Author information

Authors and Affiliations

Contributions

ZhangYan: methodology;essay writing;proofreading of dissertations.

XuTianxin: data processing;method validation;essay writing;proofreading of dissertations.

Zhangchenjia: methodology;method validation;coding;proofreading of dissertations.

MaDaokun: financial support;project management.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Conflict of interest

None.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, T., Zhang, Y., Zhang, C. et al. Deep learning tool: reconstruction of long missing climate data based on spatio-temporal multilayer perceptron. Theor Appl Climatol (2024). https://doi.org/10.1007/s00704-024-04945-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00704-024-04945-3