Abstract

We prove two inequalities for the Mittag-Leffler function, namely that the function \(\log E_\alpha (x^\alpha )\) is sub-additive for \(0<\alpha <1,\) and super-additive for \(\alpha >1.\) These assertions follow from two new binomial inequalities, one of which is a converse to the neo-classical inequality. The proofs use a generalization of the binomial theorem due to Hara and Hino (Bull London Math Soc 2010). For \(0<\alpha <2,\) we also show that \(E_\alpha (x^\alpha )\) is log-concave resp. log-convex, using analytic as well as probabilistic arguments.


1 Introduction and main results

The Mittag-Leffler function

\[E_\alpha (x)=\sum _{k=0}^\infty \frac{x^k}{\Gamma (\alpha k+1)},\quad \alpha >0,\]

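is a well-known special function with a large number of applications in pure and applied mathematics; see [9, 13] for surveys. Clearly, we have \(E_1(x)=e^x.\) Somewhat surprisingly, the “identity”

\[E_\alpha \big ((x+y)^\alpha \big )=E_\alpha (x^\alpha )\,E_\alpha (y^\alpha ),\quad x,y\ge 0, \tag{1.1}\]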

has been used in a few papers. As discussed in [6, 21], it is not correct for \(\alpha \ne 1\). In [21], a correct identity involving integrals of \(E_\alpha (x^\alpha )\) is proven, which reduces to \(e^{x+y}=e^x e^y\) as \(\alpha \rightarrow 1\). Besides this, it seems natural to ask whether the left and right hand sides of (1.1) are comparable. This is indeed the case:

Theorem 1.1

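For \(0<\alpha <1,\) we have

\[E_\alpha \big ((x+y)^\alpha \big )\le E_\alpha (x^\alpha )\,E_\alpha (y^\alpha ),\quad x,y\ge 0, \tag{1.2}\]

and for \(\alpha >1\)

\[E_\alpha \big ((x+y)^\alpha \big )\ge E_\alpha (x^\alpha )\,E_\alpha (y^\alpha ),\quad x,y\ge 0. \tag{1.3}\]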

These inequalities are strict for \(x,y>0\).
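The two inequalities of Theorem 1.1 can be probed numerically. The following is a minimal sketch, not part of the proofs: it assumes the standard power series \(\sum _k x^k/\Gamma (\alpha k+1)\) for \(E_\alpha (x)\), truncated after a fixed number of terms, and the helper names `mittag_leffler` and `gap` are hypothetical.

```python
import math

def mittag_leffler(alpha, x, terms=200):
    """Truncated series sum_{k < terms} x**k / Gamma(alpha*k + 1), for x >= 0."""
    if x == 0.0:
        return 1.0
    log_x = math.log(x)
    # Sum exp(k*log(x) - lgamma(alpha*k + 1)) to avoid overflow in x**k and Gamma.
    return sum(math.exp(k * log_x - math.lgamma(alpha * k + 1.0)) for k in range(terms))

def gap(alpha, x, y):
    """E_alpha((x+y)^alpha) - E_alpha(x^alpha) * E_alpha(y^alpha)."""
    lhs = mittag_leffler(alpha, (x + y) ** alpha)
    rhs = mittag_leffler(alpha, x ** alpha) * mittag_leffler(alpha, y ** alpha)
    return lhs - rhs

points = [(0.5, 0.5), (1.0, 2.0), (0.3, 1.7)]
sub_gaps = [gap(0.5, x, y) for x, y in points]    # 0 < alpha < 1: expected negative
super_gaps = [gap(1.5, x, y) for x, y in points]  # alpha > 1: expected positive
exp_gap = gap(1.0, 1.0, 2.0)                      # alpha = 1: e^(x+y) = e^x * e^y

print(sub_gaps, super_gaps, exp_gap)
```

The truncation at 200 terms is adequate only for moderate arguments such as those used here; large arguments would need an asymptotic expansion instead.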

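The strictness assertion strengthens the observation made at the beginning of Section 2 of [6], where it is argued that the validity of (1.1) for all \(x,y\ge 0\) implies \(\alpha =1.\) According to Theorem 1.1, this equality never holds, except in the obvious cases (\(\alpha =1,\) or \(xy=0\)). We note that (1.2) has been used in [25, Proposition 4] to show a convolution inequality for a fractional version of the moment generating function of a random variable. Although apparently not made explicit in the literature, the lower estimate

\[\alpha \, E_\alpha (x^\alpha )\,E_\alpha (y^\alpha )\le E_\alpha \big ((x+y)^\alpha \big ),\quad x,y\ge 0,\ 0<\alpha <1,\]

complementing (1.2), follows from the calculation

\[\begin{aligned} E_\alpha (x^\alpha )\,E_\alpha (y^\alpha )&=\sum _{k=0}^\infty \frac{1}{\Gamma (\alpha k+1)}\sum _{j=0}^k \left( {\begin{array}{c}\alpha k\\ \alpha j\end{array}}\right) x^{\alpha j}y^{\alpha (k-j)}\\ &\le \frac{1}{\alpha }\sum _{k=0}^\infty \frac{(x+y)^{\alpha k}}{\Gamma (\alpha k+1)}=\frac{1}{\alpha }E_\alpha \big ((x+y)^\alpha \big ). \end{aligned} \tag{1.4}\]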

In the second line, we have used the following result.

Theorem 1.2

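(Neo-classical inequality; Theorem 1.2 in [12]) For \(k\in \mathbb N\) and \(0<\alpha <1,\) we have

\[\alpha \sum _{j=0}^k \left( {\begin{array}{c}\alpha k\\ \alpha j\end{array}}\right) x^{\alpha j}y^{\alpha (k-j)}\le (x+y)^{\alpha k},\quad x,y\ge 0. \tag{1.5}\]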

With a slightly weaker factor of \(\alpha ^2\) instead of \(\alpha \), this result was proven by Lyons in 1998, who also coined the term neo-classical inequality, in a pioneering paper on rough path theory [18]. Later, it has been applied by several other authors, see e.g. [2, 5, 8, 15]. Analogously to (1.4), it is clear that Theorem 1.1 follows from the following new binomial inequalities.

Theorem 1.3

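For \(k\in \mathbb N\) and \(0<\alpha <1,\) we have the converse neo-classical inequality

\[(x+y)^{\alpha k}\le \sum _{j=0}^k \left( {\begin{array}{c}\alpha k\\ \alpha j\end{array}}\right) x^{\alpha j}y^{\alpha (k-j)},\quad x,y\ge 0, \tag{1.6}\]

and for \(\alpha >1\) we have

\[\sum _{j=0}^k \left( {\begin{array}{c}\alpha k\\ \alpha j\end{array}}\right) x^{\alpha j}y^{\alpha (k-j)}\le (x+y)^{\alpha k},\quad x,y\ge 0. \tag{1.7}\]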

These inequalities are strict for \(x,y>0\).
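As an illustration of the binomial inequalities, here is a small numerical sketch. It assumes the generalized binomial coefficient is \(\Gamma (a+1)/(\Gamma (b+1)\Gamma (a-b+1))\) and compares the sum \(\sum _{j=0}^k \binom{\alpha k}{\alpha j}x^{\alpha j}y^{\alpha (k-j)}\) with \((x+y)^{\alpha k}\); the function names are hypothetical, and a finite grid of test points is of course no substitute for the proofs.

```python
import math

def gen_binom(a, b):
    """Generalized binomial coefficient Gamma(a+1) / (Gamma(b+1) * Gamma(a-b+1))."""
    return math.exp(math.lgamma(a + 1) - math.lgamma(b + 1) - math.lgamma(a - b + 1))

def binomial_sum(alpha, k, x, y):
    """sum_{j=0}^{k} binom(alpha*k, alpha*j) * x^(alpha*j) * y^(alpha*(k-j))."""
    return sum(
        gen_binom(alpha * k, alpha * j) * x ** (alpha * j) * y ** (alpha * (k - j))
        for j in range(k + 1)
    )

points = [(1.0, 1.0), (0.2, 1.0), (0.7, 2.0)]
ks = range(1, 7)

# 0 < alpha < 1: alpha * S <= (x+y)^(alpha k) < S  (neo-classical and its converse)
two_sided_ok = all(
    alpha * binomial_sum(alpha, k, x, y) <= (x + y) ** (alpha * k) < binomial_sum(alpha, k, x, y)
    for alpha in (0.3, 0.5, 0.8) for k in ks for x, y in points
)

# alpha > 1: S < (x+y)^(alpha k)
upper_ok = all(
    binomial_sum(alpha, k, x, y) < (x + y) ** (alpha * k)
    for alpha in (1.5, 2.5, 4.2) for k in ks for x, y in points
)

print(two_sided_ok, upper_ok)
```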

The inequalities (1.5)–(1.7) look deceptively simple. For instance, it is not obvious that the proof of (1.7) (at least with the approach used here) is much harder when the integer \(\lfloor \alpha \rfloor \) is even than when it is odd. Lyons’s proof of (the weaker version of) (1.5) applies the maximum principle for sub-parabolic functions in a non-trivial way. Hara and Hino [12] use fractional calculus to derive an extension of the binomial theorem (see Theorem 3.1 below), which immediately implies Theorem 1.2. This extended binomial theorem will be our starting point when proving Theorem 1.3. We also note that in a preliminary version of [7] (available at arXiv:1104.0577v2[math.PR]), it was shown that a multinomial extension can be derived from (1.5), by induction over the number of variables. A binomial inequality that is somewhat similar to the neo-classical inequality is proven in [17].

Our first theorem, Theorem 1.1, says that the function \(\log E_\alpha (x^\alpha )\) is sub- resp. super-additive on \(\mathbb {R}^+=(0,\infty ).\) In Sect. 2, we will show stronger statements for \(0<\alpha <2\): The function \(E_\alpha (x^\alpha )\) is log-concave for \(0<\alpha <1,\) and log-convex for \(1<\alpha <2\). Sections 3–6 are devoted to proving Theorem 1.3. Some preliminaries and the plan of the proof are given in Sect. 3. In that section we also state a conjecture concerning a converse inequality to (1.7).

2 Proof of Theorem 1.1 for \(0<\alpha <2\) and related statements

For brevity, we do not discuss strictness in this section. This would be straightforward, and the strictness assertion in Theorem 1.1 will follow anyway from Theorem 1.3. The following easy fact is well known; see, e.g., [3].

Lemma 2.1

Let \(f:[0,\infty ) \rightarrow \mathbb R\) be convex with \(f(0)=0.\) Then f is superadditive, i.e.,

\[f(x+y)\ge f(x)+f(y),\quad x,y\ge 0.\]

Thus, the following theorem implies (1.2), and (1.3) for \(1<\alpha <2\).

Theorem 2.2

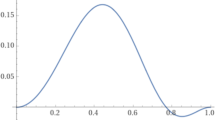

For \(0<\alpha <1,\) the function \(x\mapsto E_\alpha (x^{\alpha })\) is log-concave on \(\mathbb {R}^+\). For \(1<\alpha <2,\) it is log-convex.
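The statement of Theorem 2.2 can be illustrated with central second differences of \(\log E_\alpha (x^\alpha )\). This is only a sketch under stated assumptions: the Mittag-Leffler function is evaluated by its truncated power series, the step size and grid are arbitrary choices, and the helper names are hypothetical.

```python
import math

def mittag_leffler(alpha, x, terms=200):
    """Truncated series sum_{k < terms} x**k / Gamma(alpha*k + 1), for x >= 0."""
    if x == 0.0:
        return 1.0
    log_x = math.log(x)
    return sum(math.exp(k * log_x - math.lgamma(alpha * k + 1.0)) for k in range(terms))

def log_ml(alpha, x):
    """log E_alpha(x^alpha) for x > 0."""
    return math.log(mittag_leffler(alpha, x ** alpha))

def second_difference(alpha, x, h=0.05):
    """Central second difference; its sign approximates the sign of the second derivative."""
    return log_ml(alpha, x + h) - 2.0 * log_ml(alpha, x) + log_ml(alpha, x - h)

grid = [0.2 * i for i in range(1, 16)]                     # x = 0.2, 0.4, ..., 3.0
concave_diffs = [second_difference(0.5, x) for x in grid]  # expected negative
convex_diffs = [second_difference(1.5, x) for x in grid]   # expected positive

print(max(concave_diffs), min(convex_diffs))
```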

For \(\alpha >2,\) it seems that \(E_\alpha (x^\alpha )\) is not log-convex. For instance, \(E_4(x^4)=\tfrac{1}{2}(\cos x+\cosh x),\) and

\[\frac{d^2}{dx^2}\log E_4(x^4)=\frac{2\sin x\,\sinh x}{(\cos x+\cosh x)^2}\]

changes sign. To prove (1.3) for \(\alpha >2,\) we thus rely on the binomial inequality (1.7), which is proven later.
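The sign change for \(\alpha =4\) is easy to see numerically. The sketch below uses the closed form \(2\sin x\sinh x/(\cos x+\cosh x)^2\) for the second logarithmic derivative, which is obtained here by differentiating \(\tfrac{1}{2}(\cos x+\cosh x)\) directly, and cross-checks it against a finite difference; the function names are hypothetical.

```python
import math

def e4_series(x, terms=60):
    """Series for E_4(x^4) = sum_k x^(4k) / (4k)!, for x > 0 (log-space terms)."""
    log_x = math.log(x)
    return sum(math.exp(4 * k * log_x - math.lgamma(4 * k + 1.0)) for k in range(terms))

def e4_closed(x):
    """Closed form (cos x + cosh x) / 2."""
    return 0.5 * (math.cos(x) + math.cosh(x))

def second_log_derivative(x):
    """2 sin(x) sinh(x) / (cos x + cosh x)^2, from direct differentiation."""
    return 2.0 * math.sin(x) * math.sinh(x) / (math.cos(x) + math.cosh(x)) ** 2

def finite_difference(x, h=1e-3):
    """Central second difference quotient of log((cos x + cosh x)/2)."""
    f = lambda t: math.log(e4_closed(t))
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / h ** 2

# sin(1) > 0 but sin(4) < 0, so the sign differs between x = 1 and x = 4.
print(second_log_derivative(1.0), second_log_derivative(4.0))
```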

Proof of Theorem 2.2

We start from the representation, given in (3.4) of [24],

where

is a completely monotone function. (Recall that a smooth function f on \(\mathbb {R}^+\) is completely monotone if

\[(-1)^n f^{(n)}(x)\ge 0,\quad n\ge 0,\ x>0\text{.)}\]

By well-known closure properties of completely monotone functions (see e.g. Corollaries 1.6 and 1.7 in [23]),

is completely monotone as well and hence log-convex. Thus, \(E_\alpha (x^{\alpha })\) is log-concave.

Now suppose that \(1<\alpha <2.\) Setting \(\beta = \alpha /2\in (1/2,1),\) we have

Inserting the well-known representation

which is e.g. a consequence of the Perron-Stieltjes inversion formula applied to (3.7.7) in [9], and making some trigonometric simplifications, we get the representation

which is also given in (3.6) of [24]. This implies

with

a completely monotone function. Thus, \(E_\alpha (x^{\alpha })\) is log-convex.

Observe that Theorem 2.2 extends to the boundary case \(\alpha =2,\) where

\[E_2(x^2)=\cosh x\]

is clearly log-convex. Let us also mention an alternative probabilistic argument for (1.2) based on the \(\alpha \)-stable subordinator \(\{ Z_t^{(\alpha )}, \; t\ge 0\}\) with normalization \(\mathbb {E} \big [ e^{-\lambda Z_t^{(\alpha )}}\big ] = e^{-t\lambda ^{\alpha }}.\) It is indeed well known (see e.g. Exercise 50.7 in [22]) that

\[E_\alpha (x^\alpha )=\mathbb {E}\big [ e^{R^{(\alpha )}_x}\big ],\quad x\ge 0,\]

where \(R^{(\alpha )}_x := \inf \{t> 0 : Z^{(\alpha )}_t > x\}.\) Now if \({\tilde{R}}^{(\alpha )}_y\) is an independent copy of \(R^{(\alpha )}_y,\) the Markov property implies

where the inequality follows from the obvious fact that \(Z_{R^{(\alpha )}_x}^{(\alpha )} \ge x.\) This shows the desired inequality

\[E_\alpha \big ((x+y)^\alpha \big )\le E_\alpha (x^\alpha )\,E_\alpha (y^\alpha ).\]

To conclude this section, we give some related results for the function \(E_\alpha (x).\)

Proposition 2.3

For \(0<\alpha <1,\) the function \(x\mapsto E_\alpha (x)\) is log-convex on \(\mathbb {R}\). For \(\alpha >1,\) it is log-concave on \(\mathbb {R}^+\).

Proof

The logarithmic derivative of \(E_\alpha (x)\) is the ratio of series

and it is clear by log-convexity of the gamma function that the sequence

is increasing for \(\alpha < 1\) and decreasing for \(\alpha > 1.\) By Biernacki and Krzyż’s lemma (see [1]), this shows that

is non-decreasing on \(\mathbb {R}^+\) for \(\alpha < 1\) and non-increasing on \(\mathbb {R}^+\) for \(\alpha > 1.\) Since moment generating functions of random variables are log-convex (see Theorem 2.3 in [16]), the log-convexity of \(E_\alpha (x)\) on the whole real line for \(0< \alpha < 1\) is a consequence of the classic m.g.f. representation

\[E_\alpha (x)=\mathbb {E}\big [ e^{xR^{(\alpha )}_1}\big ],\quad x\in \mathbb {R},\]

where we have used the above notation and the easily established self-similar identity \( R^{(\alpha )}_x {\mathop {=}\limits ^{\textrm{d}}} x^\alpha R^{(\alpha )}_1.\) Note that \(R^{(\alpha )}_1\) is known as Mittag-Leffler random variable of type 2, with moments \(\Gamma (1+n)/\Gamma (1+\alpha n)\); see [14] and the references therein.
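The monotonicity of the coefficient sequence used with Biernacki and Krzyż’s lemma can be checked numerically. The sketch below assumes that the sequence in question is the ratio of the power series coefficients of \(E_\alpha '(x)\) and \(E_\alpha (x)\), namely \((n+1)\Gamma (\alpha n+1)/\Gamma (\alpha (n+1)+1)\); this form is derived here from the series definition, and the function name is hypothetical.

```python
import math

def coefficient_ratio(alpha, n):
    """(n+1) * Gamma(alpha*n + 1) / Gamma(alpha*(n+1) + 1).

    Ratio of the n-th Taylor coefficients of E_alpha'(x) and E_alpha(x).
    """
    return (n + 1) * math.exp(
        math.lgamma(alpha * n + 1.0) - math.lgamma(alpha * (n + 1) + 1.0)
    )

def is_increasing(alpha, n_max=60):
    values = [coefficient_ratio(alpha, n) for n in range(n_max)]
    return all(a < b for a, b in zip(values, values[1:]))

def is_decreasing(alpha, n_max=60):
    values = [coefficient_ratio(alpha, n) for n in range(n_max)]
    return all(a > b for a, b in zip(values, values[1:]))

increasing_ok = all(is_increasing(alpha) for alpha in (0.3, 0.5, 0.9))
decreasing_ok = all(is_decreasing(alpha) for alpha in (1.2, 2.0, 3.5))

print(increasing_ok, decreasing_ok)
```

For \(\alpha =1\) the ratio is identically 1, which matches the constant logarithmic derivative of \(e^x\).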

For \(\alpha \ge 2,\) there is actually a stronger result. It has been shown by Wiman [26] that the zeros of the Mittag-Leffler function are real and negative for \(\alpha \ge 2.\) As this function is of order \(1/\alpha <1,\) the Hadamard factorization theorem (Theorem XI.3.4 in [4]) implies that

where

are the absolute values of the zeros of \(E_\alpha (x).\) We conclude that \(1/E_\alpha (x)\) is completely monotone, and thus log-convex, on \({\mathbb R}^+.\) An interesting open question is whether \(1/E_\alpha (x)\) remains completely monotone on \({\mathbb R}^+\) for \(1< \alpha < 2.\) Unfortunately, the above argument fails because the large zeros of \(E_\alpha (x)\) have non-trivial imaginary part (see Proposition 3.13 in [9]).

Recall that for \(\alpha \in (0,1)\), the function \(1/E_\alpha (x^\alpha )\) is completely monotone on \(\mathbb {R}^+\), as seen in the proof of Theorem 2.2, and that the function \(1/E_\alpha (x)\) is log-concave by Proposition 2.3 and hence not completely monotone on \(\mathbb {R}^+\). One can also show that \(1/E_\alpha (x)\) is decreasing and convex on \(\mathbb {R}^+\) for all \(\alpha > 0\).

3 Proof of Theorem 1.3: preliminaries

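By symmetry and scaling, it is clearly sufficient to prove

\[\sum _{j=0}^k \left( {\begin{array}{c}\alpha k\\ \alpha j\end{array}}\right) \lambda ^{\alpha j}>(1+\lambda )^{\alpha k},\quad 0<\alpha <1,\ 0<\lambda \le 1,\ k\in \mathbb N, \tag{3.1}\]

and

\[\sum _{j=0}^k \left( {\begin{array}{c}\alpha k\\ \alpha j\end{array}}\right) \lambda ^{\alpha j}<(1+\lambda )^{\alpha k},\quad \alpha >1,\ 0<\lambda \le 1,\ k\in \mathbb N. \tag{3.2}\]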

As a sanity check, we verify these statements for \(\lambda >0\) sufficiently small:

and

These inequalities are obviously correct for small \(\lambda >0,\) the first one by \(\left( {\begin{array}{c}\alpha k\\ \alpha \end{array}}\right) >0.\) We now recall a remarkable generalization of the binomial theorem, due to Hara and Hino [12]. Their proof, using fractional Taylor series, builds on earlier work by Osler [20]. Following [12], for \(\alpha >0\) define

For \(t,\lambda \in (0,1]\) and \(k\in \mathbb {N},\) define

Theorem 3.1

(Theorem 3.2 in [12]) Let \(\alpha >0,\) \(\lambda \in (0,1]\) and \(k\in \mathbb {N}_0.\) Then we have

In [12], the theorem was stated for \(k\in \mathbb N\), but it is not hard to see that the proof also works for \(k=0.\) Clearly, the classical binomial theorem is recovered from (3.5) by putting \(\alpha =1.\) As noted in [12], for \(\alpha \in (0,1)\) we have \(K_\alpha =\{1\},\) and thus Theorem 1.2 is an immediate consequence of Theorem 3.1. Hara and Hino also mention that Theorem 3.1 implies

a partial converse to (1.7). It seems that this inequality does not hold for \(\alpha >2.\) We leave it to future research to find an appropriate inequality comparing the binomial sum with \((x+y)^{\alpha k}\) for \(\alpha >2.\) Different methods than in the subsequent sections will be required, as a lower estimate for \(\sum _{\omega \in K_\alpha }(1+\lambda \omega )^{\alpha k}\) is needed. The following statement might be true:

Conjecture 3.2

For \(k\in \mathbb N\) and \(\alpha >2,\) we have

The factor \(2^{\alpha -1}=\sup _{0<\lambda \le 1}(1+\lambda )^\alpha /(1+\lambda ^\alpha )\) would be sharp for \(k=1,\) \(x=\lambda \in (0,1],\) \(y=1.\) In the following sections, we will use arguments based on Theorem 3.1 to prove (3.1) and (3.2), which imply Theorem 1.3, from which Theorem 1.1 follows. The proof of (3.1) is presented in Sect. 4. It profits from the fact that

and requires only obvious properties of the function F defined in (3.4). At the beginning of Sect. 5, we show that, for \(2\le \alpha \in \mathbb N,\) the inequality (3.2) immediately follows from the classical binomial theorem. We then continue with the case where \(\alpha >1\) is not an integer, and \(\lfloor \alpha \rfloor \) is odd. Then, the set \(K_\alpha \) has \(\lfloor \alpha \rfloor \) elements, and the crude estimate

suffices to show (3.2), again using only simple properties of F. The case where \(\lfloor \alpha \rfloor \) is even is more involved, and is handled in Sect. 6. In this case, \(|K_\alpha |=\lceil \alpha \rceil \), and the obvious estimate

is too weak to lead anywhere. We first show (Lemma 6.1) that, for \(\lambda \in [\tfrac{1}{2},1],\) the right hand side of (3.6) can be strengthened to \(\alpha (1+\lambda )^{\alpha k},\) and that (3.2) easily follows from this for these values of \(\lambda .\) For smaller \(\lambda >0,\) more precise estimates for the sum \(\sum _{\omega \in K_\alpha }(1+\lambda \omega )^{\alpha k}\) and the integral in (3.5) are needed, which are developed in the remainder of Sect. 6.
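The constant \(2^{\alpha -1}\) mentioned after Conjecture 3.2 is the supremum of \((1+\lambda )^\alpha /(1+\lambda ^\alpha )\) over \(\lambda \in (0,1].\) A quick grid search illustrates that the supremum is attained at \(\lambda =1\); the grid resolution and the function names below are arbitrary, hypothetical choices.

```python
import math

def ratio(alpha, lam):
    """(1 + lam)^alpha / (1 + lam^alpha)."""
    return (1.0 + lam) ** alpha / (1.0 + lam ** alpha)

def grid_sup(alpha, n=1000):
    """Maximize the ratio over the grid lam = 1/n, 2/n, ..., 1."""
    grid = [i / n for i in range(1, n + 1)]
    best = max(grid, key=lambda lam: ratio(alpha, lam))
    return best, ratio(alpha, best)

results = {alpha: grid_sup(alpha) for alpha in (2.5, 3.0, 7.3)}
for alpha, (lam, value) in results.items():
    print(alpha, lam, value, 2.0 ** (alpha - 1.0))
```

The maximizer is \(\lambda =1\) because the logarithmic derivative of the ratio has the sign of \(1-\lambda ^{\alpha -1},\) which is non-negative on \((0,1]\) for \(\alpha >1.\)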

4 Proof of Theorem 1.3 for \(0<\alpha <1\)

As mentioned above, it suffices to prove (3.1). For \(\alpha \in (0,1),\) we have \(K_\alpha =\{1\}.\) Since \(\sin \alpha \pi >0,\) we see from (3.5) that the desired inequality (3.1) is equivalent to

where

Define

This number is positive, as it is defined by the infimum of a sequence of positive numbers converging to a positive limit. Moreover, we define

By definition, \(F(t,\lambda ,k)\) decreases w.r.t. k, and so

Applying Theorem 3.1 with \(k=0\) yields

By these two observations,

Since \(\lambda ^{\alpha k}\le 1,\) and \((1-t)^{\alpha k}\) decreases w.r.t. t, it is clear from the definition of F that

By definition of \(\delta \), we have

Now note that

where the first estimate follows from (4.2) and (4.3), and the second one from (4.4). Thus, (4.1) is established.

5 Proof of Theorem 1.3 for \(\alpha \in \mathbb N\) or \(\lfloor \alpha \rfloor \) odd

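If \(2\le \alpha \in \mathbb N\) is an integer, then the proof of (3.2) is very easy, as we are dealing with a classical binomial sum with some summands removed:

\[\sum _{j=0}^k \left( {\begin{array}{c}\alpha k\\ \alpha j\end{array}}\right) \lambda ^{\alpha j}<\sum _{i=0}^{\alpha k} \left( {\begin{array}{c}\alpha k\\ i\end{array}}\right) \lambda ^{i}=(1+\lambda )^{\alpha k}.\]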

In the remainder of this section, we prove (3.2) for \(1<\alpha \notin \mathbb N\) with \(\lfloor \alpha \rfloor \) odd. Our approach is similar to the preceding section. First, observe that

and that

Therefore, the sequence

is well-defined and positive, and

We can thus define the positive number

Since odd \(\lfloor \alpha \rfloor \) implies \(\sin \alpha \pi <0\) for \(\alpha \notin \mathbb N,\) it is clear from (3.5) that (3.2) is equivalent to

where

By the same argument that gave us the first inequality in (4.5), we find

Note that

The proof of (3.2) with \(\lfloor \alpha \rfloor \) odd will thus be finished if we can show that

But this is equivalent to

which follows from the definition of \(\hat{\delta }.\)

6 Proof of Theorem 1.3 for \(\lfloor \alpha \rfloor \) even

We now prove (3.2) in the case that

As \(\sin \alpha \pi >0,\) it follows from (3.5) that (3.2) is equivalent to

Since \(F(\cdot ,\lambda ,k)\) is positive on (0, 1), the following lemma establishes this for \(\lambda \in [\tfrac{1}{2},1].\)

Lemma 6.1

Let \(\alpha >2\) be as in (6.1). Then we have

Proof

By (3.3), we have

We will show that

which proves the lemma. To prove (6.4), observe that (6.1) implies

Using the geometric series to evaluate the cosine sum (see, e.g., p. 102 in [19]), we obtain

Here, \(\cos \big ((m+1)\pi /\alpha \big )<0,\) and the sines are both positive. By (6.5) and the elementary inequalities

the following statement is sufficient for the validity of (6.4):

This is a polynomial inequality in real variables with polynomial constraints, which can be verified by cylindrical algebraic decomposition, using a computer algebra system. For instance, using Mathematica’s Reduce command on (6.6), with the first \(\le \) replaced by >, yields False. This shows that (6.6) is correct, which finishes the proof.
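The cosine sum evaluated in the proof can be sanity-checked numerically. The sketch below assumes the standard closed form \(\sum _{j=1}^m \cos (2j\pi /\alpha )=\sin (m\pi /\alpha )\cos ((m+1)\pi /\alpha )/\sin (\pi /\alpha ),\) and it assumes that (6.1) means \(\alpha \in (2m,2m+1)\) with \(m\in \mathbb N,\) which is inferred from the surrounding text; the function names are hypothetical.

```python
import math

def cosine_sum(alpha, m):
    """Direct evaluation of sum_{j=1}^{m} cos(2*j*pi/alpha)."""
    return sum(math.cos(2.0 * j * math.pi / alpha) for j in range(1, m + 1))

def closed_form(alpha, m):
    """sin(m*pi/alpha) * cos((m+1)*pi/alpha) / sin(pi/alpha)."""
    return (
        math.sin(m * math.pi / alpha)
        * math.cos((m + 1) * math.pi / alpha)
        / math.sin(math.pi / alpha)
    )

checks = []
for alpha in (2.5, 4.3, 6.9, 10.01):
    m = int(alpha) // 2  # assumption: alpha lies in (2m, 2m + 1)
    checks.append((
        abs(cosine_sum(alpha, m) - closed_form(alpha, m)),
        math.cos((m + 1) * math.pi / alpha),  # expected negative
        math.sin(m * math.pi / alpha),        # expected positive
    ))

print(checks)
```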

The following two lemmas will be required for some estimates of the function \(F(t,\lambda ,k)\) for small \(\lambda .\)

Lemma 6.2

For \(\alpha \) as in (6.1), we have

and

Proof

By substituting \(s^\alpha =w,\)

where we have used that \(\lfloor \alpha \rfloor \) is even. The first formula now follows from 3.242 on p. 322 of [10], with \(m=1/(2\alpha ),\) \(n=\tfrac{1}{2}\) and \(t=\pi (\alpha -\lfloor \alpha \rfloor -1).\) As for (6.8),

The identity (6.8) then follows from 11a) on p. 14 of [11], with

\(\square \)

Lemma 6.3

Again, suppose that \(\alpha \) satisfies (6.1). For \(k\in \mathbb N,\) we have

Proof

Fix some \(\beta \in (\tfrac{1}{\alpha },\tfrac{1}{2}).\) We have

and

These assertions easily follow from the fact that the integrand is of order \(\textrm{O}(s^{-\alpha -1})\) resp. \(\textrm{O}(s^{-\alpha })\) at infinity. Moreover, we have the uniform expansion

Since \(0\le (1-\lambda s)^{\alpha k}\le 1,\) (6.9) implies

The statement now follows from (6.11), (6.10) and (6.9).

We now continue the proof of (6.2). From the definition of F, it is clear that

As noted above, from (3.3), we have

Since Lemma 6.1 settles the case \(\lambda \in [\tfrac{1}{2},1],\) we may assume \(\lambda \in (0,\tfrac{1}{2})\) in what follows. Using (6.12) and (6.13) in (6.2), we see that it is sufficient to show

This will be proven in the following two lemmas.

Lemma 6.4

For \(\lambda \in (0,\tfrac{1}{2}),\) the left hand side of (6.14) decreases w.r.t. \(\lambda \).

Proof

The derivative of this expression is

Note that it is easy to show that \(\cos (2j\pi /\alpha )+\lambda <0\) for \(j>\alpha /3,\) which is where we use our assumption that \(\lambda <\tfrac{1}{2}\). Thus, we are discarding only negative terms when passing from \(\sum _{j=1}^m\) to \(\sum _{1\le j\le \alpha /3}\) in (6.15). By Lemma 6.2, the last term in (6.15) satisfies

where the inequality follows from

We can thus estimate (6.15) further by

Indeed, it is easy to see that \(2\lfloor \alpha /3 \rfloor +2-\alpha \le 0\) for \(\alpha \) as in (6.1).
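Two elementary facts used in this proof lend themselves to a quick numerical check: \(2\lfloor \alpha /3 \rfloor +2-\alpha \le 0,\) and \(\cos (2j\pi /\alpha )+\lambda <0\) for \(\alpha /3<j\le m\) and \(\lambda <\tfrac{1}{2}.\) The sketch assumes that (6.1) means \(\alpha \in (2m,2m+1)\) with \(m\in \mathbb N\) (inferred from the surrounding text); the sample grid and names are hypothetical.

```python
import math

def sample_alphas(max_m=10):
    """Pairs (m, alpha) with alpha = 2m + t/10, t = 1..9, inside (2m, 2m + 1)."""
    return [(m, 2 * m + t / 10) for m in range(1, max_m + 1) for t in range(1, 10)]

# Fact 1: 2*floor(alpha/3) + 2 - alpha <= 0 on the sampled intervals.
floor_ok = all(2 * math.floor(a / 3) + 2 - a <= 0 for _, a in sample_alphas())

# Fact 2: for alpha/3 < j <= m the angle 2*j*pi/alpha lies in (2*pi/3, pi),
# so its cosine is below -1/2 and adding lam < 1/2 keeps the sum negative.
lam = 0.49
cos_ok = all(
    math.cos(2.0 * j * math.pi / a) + lam < 0
    for m, a in sample_alphas()
    for j in range(1, m + 1)
    if j > a / 3
)

print(floor_ok, cos_ok)
```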

Presumably, the preceding lemma can be extended to \(\lambda \in (0,1],\) but this would require a much better estimate for \(\sum _{\omega \in K_\alpha }(1+\lambda \omega )^{\alpha k}\) than the one we have used. The proof of (3.2) could then possibly be streamlined, because Lemma 6.1 would no longer be required.

Lemma 6.5

Let \(\alpha \) be as in (6.1), and \(k\in \mathbb N.\) Then (6.14) holds for \(\lambda >0\) sufficiently small.

Proof

Since \(m=(\lceil \alpha \rceil -1) /2,\) expanding the first terms of (6.14) gives

By Lemmas 6.2 and 6.3, this further equals

and we see that (6.14) becomes sharp as \(\lambda \downarrow 0,\) as its left hand side is \(\textrm{O}(\lambda )\). This is no surprise, since the inequality (3.2) we are proving is obviously sharp for \(\lambda \downarrow 0.\) It remains to show that the coefficient of \(\lambda \) in (6.18) is negative. Similarly to (6.15), we have the bound

Thus, we wish to show that

As the sine quotient is \(<1,\) by (6.16), this is clearly true for \(\alpha >6.\) Now consider \(\alpha \in (4,5).\) It is easy to verify that

and that

Using these estimates in (6.19) leads to a quadratic inequality, which is straightforward to check. The proof of (6.19) for \(\alpha \in (2,3)\) is similar.

Clearly, Lemmas 6.4 and 6.5 establish (6.14). As argued above (6.14), we have thus proven (6.2), hence (3.2), and the proof of Theorem 1.3 is complete.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

[1] Biernacki, M., Krzyż, J.: On the monotonicity of certain functionals in the theory of analytic functions. Ann. Univ. Mariae Curie-Skłodowska A 9, 135–147 (1955)

[2] Boedihardjo, H., Geng, X., Souris, N.P.: Path developments and tail asymptotics of signature for pure rough paths. Adv. Math. 364, 107043, 48 (2020)

[3] Bruckner, A.M.: Some relationships between locally superadditive functions and convex functions. Proc. Am. Math. Soc. 15, 61–65 (1964)

[4] Conway, J.B.: Functions of one complex variable. Graduate Texts in Mathematics, vol. 11, 2nd edn. Springer-Verlag, New York-Berlin (1978)

[5] Crisan, D., Litterer, C., Lyons, T.J.: Kusuoka–Stroock gradient bounds for the solution of the filtering equation. J. Funct. Anal. 268, 1928–1971 (2015)

[6] Elagan, S.K.: On the invalidity of semigroup property for the Mittag-Leffler function with two parameters. J. Egyptian Math. Soc. 24, 200–203 (2016)

[7] Friz, P., Riedel, S.: Integrability of (non-)linear rough differential equations and integrals. Stoch. Anal. Appl. 31, 336–358 (2013)

[8] Friz, P., Riedel, S.: Convergence rates for the full Gaussian rough paths. Ann. Inst. Henri Poincaré Probab. Stat. 50, 154–194 (2014)

[9] Gorenflo, R., Kilbas, A.A., Mainardi, F., Rogosin, S.: Mittag-Leffler Functions, Related Topics and Applications. Springer Monographs in Mathematics, 2nd edn. Springer, Berlin (2020)

[10] Gradshteyn, I.S., Ryzhik, I.M.: Table of Integrals, Series, and Products, 7th edn. Elsevier/Academic Press, Amsterdam (2007)

[11] Gröbner, W., Hofreiter, N.: Integraltafel. Zweiter Teil. Bestimmte Integrale, 5th edn. Springer-Verlag, Berlin (1973)

[12] Hara, K., Hino, M.: Fractional order Taylor’s series and the neo-classical inequality. Bull. Lond. Math. Soc. 42, 467–477 (2010)

[13] Haubold, H.J., Mathai, A.M., Saxena, R.K.: Mittag-Leffler functions and their applications. J. Appl. Math., 1–51 (2011)

[14] Huillet, T.E.: On Mittag-Leffler distributions and related stochastic processes. J. Comput. Appl. Math. 296, 181–211 (2016)

[15] Inahama, Y.: A moment estimate of the derivative process in rough path theory. Proc. Am. Math. Soc. 140, 2183–2191 (2012)

[16] Jørgensen, B.: The Theory of Dispersion Models. Monographs on Statistics and Applied Probability, vol. 76. Chapman & Hall, London (1997)

[17] Kovač, V.: On binomial sums, additive energies, and lazy random walks. Preprint, arXiv:2206.01591 (2022)

[18] Lyons, T.J.: Differential equations driven by rough signals. Rev. Mat. Iberoamericana 14, 215–310 (1998)

[19] Mitrinović, D.S.: Elementary Inequalities. P. Noordhoff Ltd., Groningen (1964)

[20] Osler, T.J.: Taylor’s series generalized for fractional derivatives and applications. SIAM J. Math. Anal. 2, 37–48 (1971)

[21] Peng, J., Li, K.: A note on property of the Mittag-Leffler function. J. Math. Anal. Appl. 370, 635–638 (2010)

[22] Sato, K.-I.: Lévy Processes and Infinitely Divisible Distributions. Cambridge Studies in Advanced Mathematics, 2nd edn. Cambridge University Press, Cambridge (2013)

[23] Schilling, R.L., Song, R., Vondraček, Z.: Bernstein Functions: Theory and Applications. De Gruyter Studies in Mathematics, vol. 37, 2nd edn. Walter de Gruyter & Co., Berlin (2012)

[24] Simon, T.: Mittag-Leffler functions and complete monotonicity. Int. Trans. Spec. Funct. 26, 36–50 (2015)

[25] Tomovski, Ž., Metzler, R., Gerhold, S.: Fractional characteristic functions, and a fractional calculus approach for moments of random variables. Fract. Calc. Appl. Anal. 25, 1307–1323 (2022)

[26] Wiman, A.: Über die Nullstellen der Funktionen \(E_a(x)\). Acta Math. 29, 217–234 (1905)

Funding

Open access funding provided by TU Wien (TUW).

Additional information

Communicated by Ilse Fischer.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gerhold, S., Simon, T. A converse to the neo-classical inequality with an application to the Mittag-Leffler function. Monatsh Math 200, 627–645 (2023). https://doi.org/10.1007/s00605-022-01817-8

DOI: https://doi.org/10.1007/s00605-022-01817-8