Abstract

We extend the notion of jittered sampling to arbitrary partitions and study the discrepancy of the related point sets. Let \({\varvec{\Omega }}=(\Omega _1,\ldots ,\Omega _N)\) be a partition of \([0,1]^d\) and let the ith point in \({{\mathcal {P}}}\) be chosen uniformly in the ith set of the partition (and stochastically independent of the other points), \(i=1,\ldots ,N\). For the study of such sets we introduce the concept of a uniformly distributed triangular array and compare this notion to related notions in the literature. We prove that the expected \({{{\mathcal {L}}}_p}\)-discrepancy, \({{\mathbb {E}}}{{{\mathcal {L}}}_p}({{\mathcal {P}}}_{\varvec{\Omega }})^p\), of a point set \({{\mathcal {P}}}_{\varvec{\Omega }}\) generated from any equivolume partition \({\varvec{\Omega }}\) is always strictly smaller than the expected \({{{\mathcal {L}}}_p}\)-discrepancy of a set of N uniform random samples for \(p>1\). For fixed N we consider classes of stratified samples based on equivolume partitions of the unit cube into convex sets or into sets with a uniform positive lower bound on their reach. It is shown that these classes contain at least one minimizer of the expected \({{{\mathcal {L}}}_p}\)-discrepancy. We illustrate our results with explicit constructions for small N. In addition, we present a family of partitions that seems to improve the expected discrepancy of Monte Carlo sampling by a factor of 2 for every N.


1 Introduction

1.1 Setting and main questions

Given a finite set \({{\mathcal {P}}}= \left\{ {\mathbf {x}}_1, \dots , {\mathbf {x}}_N\right\} \) of N points in \([0,1]^d\), one way to quantify how well spread these points are is to calculate the \({{\mathcal {L}}}_p\)-discrepancy

\[ {{\mathcal {L}}}_p({{\mathcal {P}}})=\left( \int _{[0,1]^d}\left| \frac{\#\left( {{\mathcal {P}}}\cap [0, {\mathbf {x}}[\right) }{N}-\big |[0,{\mathbf {x}}[\big |\right| ^p\,\mathrm {d}{\mathbf {x}}\right) ^{1/p} \]

of \({{\mathcal {P}}}\), in which \(1\le p < \infty \), \(\#\left( {{\mathcal {P}}}\cap [0, {\mathbf {x}}[\right) \) counts the number of indices \(1\le i \le N\) such that \({\mathbf {x}}_i \in [0, {\mathbf {x}}[\), and \(\big |[0, \mathbf {x}[\big |\) is the Lebesgue measure of \([0,\mathbf {x}[ :=\prod _{k=1}^d [0,x_k[\) with \(\mathbf {x} = (x_1, \ldots , x_d)\). In other words, \({{\mathcal {L}}}_p({{\mathcal {P}}})\) is the \({{\mathcal {L}}}_p\) norm of the so-called discrepancy function. For an infinite sequence \({{\mathcal {S}}}\) the \({{\mathcal {L}}}_p\)-discrepancy \({{\mathcal {L}}}_{p}({{\mathcal {S}}}_N)\) is the \({{\mathcal {L}}}_p\)-discrepancy of the first N elements, \({{\mathcal {S}}}_N\), of \({{\mathcal {S}}}\). Another important irregularity measure is the star-discrepancy, defined as

\[ D^*({{\mathcal {P}}})=\sup _{{\mathbf {x}}\in [0,1]^d}\left| \frac{\#\left( {{\mathcal {P}}}\cap [0, {\mathbf {x}}[\right) }{N}-\big |[0,{\mathbf {x}}[\big |\right| . \]

The \({{\mathcal {L}}}_2\)-discrepancy is a well studied and understood measure for the irregularities of point sets. We refer to the book [8] and the excellent survey [9] for further details. In particular, and in contrast to other measures such as the star-discrepancy, it is known how to construct deterministic point sets with the optimal order of magnitude of the \({{\mathcal {L}}}_2\)-discrepancy; see [2, 9, 10]. For \(d=2\) the optimal order of the \({{\mathcal {L}}}_2\)-discrepancy for finite point sets is known to be \({\mathcal {O}}(\sqrt{\log N}/N)\), which already goes back to a result of Davenport [5]. The optimality of these constructions follows from a seminal result of Roth [34] who derived a general lower bound for the \({{\mathcal {L}}}_2\)-discrepancy of arbitrary sets of N points in \([0,1]^d\); see e.g. [8, Theorem 3.20]. While deterministic point sets with small discrepancy are widely used in the context of numerical integration, simulations of different real world phenomena may require an element of randomness. The expected discrepancy of a set \({{\mathcal {P}}}_N\) of N i.i.d. uniform random points in \([0,1]^d\) is of order \({\mathcal {O}}(1/\sqrt{N})\) and as such independent of the dimension. For two-dimensional point sets of N i.i.d. uniform random points, we thus also have an expected discrepancy of order \({\mathcal {O}}(1/\sqrt{N})\) similar to the two-dimensional regular grid (whose discrepancy is known to get worse as the dimension increases).
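For \(p=2\) the integral in the definition can be evaluated in closed form. The Python sketch below is our illustration, not code from the paper, and the function name is hypothetical. It implements Warnock's classical formula \({{\mathcal {L}}}_2({{\mathcal {P}}})^2=3^{-d}-\frac{2^{1-d}}{N}\sum _{i}\prod _{k}(1-x_{ik}^2)+\frac{1}{N^2}\sum _{i,j}\prod _{k}\big (1-\max \{x_{ik},x_{jk}\}\big )\), which follows by expanding the square and integrating term by term.

```python
from math import prod


def l2_discrepancy_squared(points):
    """Squared L_2-discrepancy of a finite point set in [0,1]^d (Warnock's formula).

    `points` is a non-empty list of N tuples with d coordinates each.
    """
    n = len(points)
    d = len(points[0])
    # integral of |[0,x[|^2 over the unit cube
    volume_term = 3.0 ** (-d)
    # (2/N) * sum_i integral of 1{x_i in [0,x[} * |[0,x[| = (2/N) * sum_i prod_k (1 - x_ik^2) / 2
    cross_term = 2.0 / n * sum(prod((1.0 - c * c) / 2.0 for c in p) for p in points)
    # (1/N^2) * sum_{i,j} prod_k (1 - max(x_ik, x_jk)), the integral of the squared count
    count_term = sum(
        prod(1.0 - max(a, b) for a, b in zip(p, q)) for p in points for q in points
    ) / n**2
    return volume_term - cross_term + count_term


# Centred 1-D grid {(2i-1)/(2N)}: the exact value is 1/(12 N^2) = 1/192 for N = 4.
print(l2_discrepancy_squared([(0.125,), (0.375,), (0.625,), (0.875,)]))
```

The formula needs \({\mathcal {O}}(N^2 d)\) operations, which is convenient for the moderate N considered in this paper.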

Randomized quasi-Monte Carlo (RQMC) sampling is a popular method to randomize deterministic point sets; see [14] for an excellent introduction. Clever constructions of deterministic point sets, so-called quasi-Monte Carlo (QMC) point sets, can significantly improve the asymptotic order of integration errors compared with classical Monte Carlo sampling. RQMC takes a deterministic QMC point set as input and uses a randomisation technique (e.g. a random shift modulo 1 or a so-called digital shift) to generate a new point set, which can be shown to have better uniform distribution properties than Monte Carlo samples while retaining the advantages of being ‘random’ in the theoretical analysis; see [3, 13, 19, 30,31,32].

The starting point for our work can also be considered a basic RQMC technique, which was already discussed in a slightly more general form in [19]. Classical jittered sampling for \(N=m^d\) combines the simplicity of grids with uniform random sampling by partitioning \([0,1]^d\) into \(m^d\) axis-aligned congruent cubes and placing a random point inside each of them; see Fig. 1. Jittered sampling is sometimes referred to as ‘stratified sampling’ in the literature, but we will use the term ‘stratified sampling’ in a broader sense, as outlined below. Motivated by recent progress [28, 29], the aim of this paper is to take a systematic look at jittered sampling and its extension based on more general partitions \({\varvec{\Omega }}=(\Omega _1,\ldots ,\Omega _N)\) of \([0,1]^d\). We consider stratified sampling, where \([0,1]^d\) is partitioned into N subsets \(\Omega _1,\ldots ,\Omega _N\) and the ith point in \({{\mathcal {P}}}\) is chosen uniformly in the ith set of the partition (and stochastically independent of the other points), \(i=1,\ldots ,N\). If \(N=m^d\) and the partition consists of the above-mentioned axis-aligned congruent cubes, we obtain jittered sampling as a special case. Besides results for fixed N, we are also interested in the behavior of stratified samples derived from sequences of partitions when N becomes large.
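Both sampling schemes can be sketched in a few lines of Python. The helper names below are hypothetical and the code is only an illustration: `jittered_sample` places one uniform point in each of the \(m^d\) congruent subcubes, while `stratified_sample` draws one independent point from each set of a partition, assuming a uniform sampler is available for every set (vertical strips serve as an example).

```python
import itertools
import random


def jittered_sample(m, d, rng=random):
    """Classical jittered sampling: one uniform point in each of the m^d
    axis-aligned congruent subcubes of [0,1]^d, so N = m^d."""
    points = []
    for cell in itertools.product(range(m), repeat=d):
        # cell = (j_1, ..., j_d) indexes the subcube prod_k [j_k/m, (j_k+1)/m[
        points.append(tuple((j + rng.random()) / m for j in cell))
    return points


def stratified_sample(samplers, rng=random):
    """Generic stratified sample: samplers[i](rng) must return a point drawn
    uniformly from Omega_i; the draws are stochastically independent."""
    return [sample(rng) for sample in samplers]


def vertical_strip_samplers(n, d):
    """Hypothetical helper: uniform samplers for the N vertical strips
    [(i-1)/N, i/N[ x [0,1]^(d-1)."""
    def make(i):
        return lambda rng: ((i + rng.random()) / n,) + tuple(
            rng.random() for _ in range(d - 1)
        )
    return [make(i) for i in range(n)]


rng = random.Random(1)
print(jittered_sample(2, 2, rng))
print(stratified_sample(vertical_strip_samplers(3, 2), rng))
```

Passing a seeded `random.Random` instance as `rng` makes the samples reproducible.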

At this point, we would like to emphasize that sequences of partitions that can be used in stratified sampling are more general than those in Kakutani’s splitting procedure and its variants [24, 33, 38]. Apart from the obvious difference that these procedures restrict considerations to \(d=1\), the partitions in the present paper need not be nested. This means that the partition in step \(N+1\) is not necessarily obtained as a refinement of the partition in step N; see also the discussion in Appendix A.

It was shown in [28] that the expected star-discrepancy of a point set obtained from jittered sampling is of order \({\mathcal {O}}(N^{-\frac{1}{2}-\frac{1}{2d}})\). Compared with the order \(N^{-1/2}\) for Monte Carlo samples, taking partitions can thus significantly improve the expected discrepancy of (random) point sets in small dimensions \(d\ge 2\). We are interested in the following main questions:

(1) In which sense are sequences of stratified sample points uniformly distributed as their number N increases? What is the connection to similar notions for partitions in the literature?

(2) Does stratified sampling yield smaller or larger mean discrepancy than Monte Carlo sampling with N i.i.d. uniform random points? Are there discrepancy notions and assumptions assuring that stratified sampling is strictly better?

(3) Is there a ‘best’ partition for a given N in terms of a chosen mean discrepancy?

(4) Is there a simple family of partitions \(\{{\varvec{\Omega }}^{(N)}\}_{N\ge 1}\) that gives reasonable results for all N and not just for square numbers of points as in the case of classical jittered sampling?

(5) Can we improve classical jittered sampling with stratified sampling?

Section 2 presents our answers to the above together with open questions for future research. In Sect. 3 we prove our main theoretical results and illustrate them with examples. Section 4 introduces and explores an infinite family of partitions and contains more examples as well as numerical results.

1.2 Stratifications and the star discrepancy

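By the celebrated result of Heinrich, Novak, Wasilkowski & Wozniakowski [21], there exists a set \({{\mathcal {P}}}\) of N points in \([0,1]^d\) with

\[ D^*({{\mathcal {P}}})\le c\,\sqrt{\frac{d}{N}} \tag{1} \]

for some absolute constant \(c>0\).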

Aistleitner [1], using a result of Gnewuch [17], has shown that one can take \(c=10\). Doerr [11] has shown \({\mathbb {E}} D^*({\mathcal {P}}) \gtrsim \sqrt{d/N}\) for point sets of N i.i.d. uniformly random points, indicating that this is the correct order of magnitude. See [18] and the references therein for the most up-to-date history of improvements on the constant c; the currently smallest value is \(c=2.4968\), derived in [18, Corollary 3.6], where it is also shown that (1) with \(c=3\) holds with very high probability when \({{\mathcal {P}}}\) is a set of N i.i.d. uniformly random points.

Since the best known construction is purely probabilistic, it is natural to ask whether we can improve upon these upper bounds using stratification. Indeed, Aistleitner muses in [1] that a carefully designed partition may improve the upper bound. Our strong partition principle (Theorem 1) shows that the mean \({{\mathcal {L}}}_{p}\)-discrepancy of stratified sets is strictly smaller than the mean \({{\mathcal {L}}}_{p}\)-discrepancy of N i.i.d. uniform random points, and this could lead to a similar result for the star discrepancy using the technique from [21]; see also [27]. However, the main obstacle in this context is that, in order to see the stratification effect in large dimensions, one needs to subdivide the unit cube into exponentially many (in d) subsets; hence one faces a seemingly unavoidable difficulty if one wishes for a result for small N in large dimension. This is also underlined by the discussion of classical jittered sampling in [28, Section 6], where it is explained why jittered sampling gains in effectiveness over purely random points only around \(N \sim d^d\). We believe that stratifications are most useful in small dimensions, in which the stratification effect is significant.

Question 1

In which range of d is the stratification effect most significant?

We will illustrate the potential of stratifications for the star discrepancy with numerical experiments. For fixed (and small) d we expect that the constant c obtained for i.i.d. uniform random points can be improved with a stratified scheme, similar to the case of the \({{\mathcal {L}}}_{p}\)-discrepancy. For \(d=2,3,5\) we numerically obtain improvements for the families of partitions studied in this paper; see Table 2.
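Such experiments require the star-discrepancy of concrete point sets. For small N and d it can be computed exactly by brute force. The sketch below (hypothetical name `star_discrepancy`, not necessarily the method behind Table 2) uses the standard observation that the supremum defining \(D^*\) is attained, or approached, at boxes whose upper corner has every coordinate in the set of point coordinates together with 1; closed boxes \([0,{\mathbf {y}}]\) account for limits of \([0,{\mathbf {y}}+\varepsilon [\).

```python
import itertools


def star_discrepancy(points):
    """Exact star-discrepancy D*(P) of a small point set by brute force.

    Only corners y with y_k in {x_ik} ∪ {1} are inspected. The cost grows like
    N^(d+1) * d, so this is meant for toy sizes only.
    """
    n = len(points)
    d = len(points[0])
    grids = [sorted({p[k] for p in points} | {1.0}) for k in range(d)]
    worst = 0.0
    for corner in itertools.product(*grids):
        vol = 1.0
        for c in corner:
            vol *= c
        # points in the half-open box [0, corner[ and in the closed box [0, corner]
        n_open = sum(all(p[k] < corner[k] for k in range(d)) for p in points)
        n_closed = sum(all(p[k] <= corner[k] for k in range(d)) for p in points)
        # volume too large for the count, or count too large for the volume
        worst = max(worst, vol - n_open / n, n_closed / n - vol)
    return worst


print(star_discrepancy([(0.125,), (0.375,), (0.625,), (0.875,)]))  # 1/(2N) = 0.125
```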

2 Results

2.1 Stratified sampling and uniform distribution

Let \(d\ge 1\) be given. We consider partitions \({\varvec{\Omega }}=\{ \Omega _1, \ldots , \Omega _N\}\) of the unit cube in \({{\mathbb {R}}}^d\) into N Lebesgue-measurable sets, i.e.

\[ \bigcup _{i=1}^N\Omega _i=[0,1]^d, \tag{2} \]

and the sets do not overlap in the \(L^1\)-sense, so \(\Omega _i\cap \Omega _j\) is a Lebesgue-null set for all \(i\ne j\) in \(\{1,\ldots ,N\}\). It should be emphasized that we prefer this condition to the stronger one requiring pairwise disjoint sets, as we later want to work with closed sets. When the sets \(\Omega _i\) and \(\Omega _j\) are convex, then \(|\Omega _i\cap \Omega _j|=0\) is equivalent to saying that \(\Omega _i\) and \(\Omega _j\) do not have any interior points in common. For the moment, we pose no other geometric conditions on the partition, in particular the sets \(\Omega _i\) need not be connected.

Any partition gives rise to a stratified sample \({{\mathcal {P}}}_{{\varvec{\Omega }}}\) of N random points, obtained by picking a uniform random point (w.r.t. the normalized Lebesgue measure on \(\Omega _i\)) from each \(\Omega _i\), stochastically independently of the others. In contrast to stratified sampling in classical sampling theory (see, e.g. [37]), we sample only one point in each stratum. As a baseline for comparison, we will often consider the set of Monte Carlo samples \({{\mathcal {P}}}_N\) consisting of N i.i.d. (independent and identically distributed) uniform random points in the unit cube.

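For a set \({{\mathcal {P}}}\subset [0,1]^d\) of \(N\in {{\mathbb {N}}}\) points in the unit cube let

\[ Z_{\mathbf {x}}({{\mathcal {P}}})=\frac{\#\left( {{\mathcal {P}}}\cap [0,{\mathbf {x}}[\right) }{N} \tag{3} \]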

be the proportion of points falling in a test box \([0,{\mathbf {x}}[\) with \({\mathbf {x}}\in [0,1]^d\). For a Monte Carlo sample, \(Z_{\mathbf {x}}({{\mathcal {P}}}_N)\) is a random variable with mean \(|[0,{\mathbf {x}}[|\), but \(Z_{\mathbf {x}}({{\mathcal {P}}}_{\varvec{\Omega }})\) need not be unbiased for \(|[0,{\mathbf {x}}[|\) when \({\varvec{\Omega }}\) is a partition of the unit cube. We show in Proposition 1 below that \(Z_{\mathbf {x}}({{\mathcal {P}}}_{\varvec{\Omega }})\) has mean \(|[0,{\mathbf {x}}[|\) for all \({\mathbf {x}}\in [0,1]^d\) if and only if the partition is equivolume, that is, if \(|\Omega _1|=\cdots =|\Omega _N|\). This corresponds to the concept of self-weighting stratifications in classical sampling of finite populations: as the samples in all strata are equally large (just one point per stratum), the strata must be equal in size. The assumption of equivolume partitions is often convenient, as it allows us to interpret the mean of \({{\mathcal {L}}}_p^p({{\mathcal {P}}}_{{\varvec{\Omega }}})\) as an integrated centered pth moment; see Eqs. (7) and (9), below. Two examples of equivolume partitions for \(d=2\) are illustrated in Fig. 2.
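In dimension \(d=1\), for a partition into intervals, the mean of \(Z_x\) has a closed form that makes the role of the equivolume condition visible. The sketch below (hypothetical helper `expected_proportion`, restricted to interval partitions) returns \({{\mathbb {E}}}Z_x\) when one point is drawn uniformly from each interval; by Proposition 1 below it equals x for every x exactly when all intervals have length 1/N.

```python
def expected_proportion(breaks, x):
    """E Z_x for a stratified sample from the partition of [0,1] into the
    intervals [breaks[i], breaks[i+1]] (breaks[0] = 0, breaks[-1] = 1).

    Point X_i is uniform on its interval, so P(X_i < x) is the fraction of
    that interval lying to the left of x.
    """
    n = len(breaks) - 1
    total = 0.0
    for a, b in zip(breaks, breaks[1:]):
        total += min(max(x - a, 0.0), b - a) / (b - a)
    return total / n


# Equivolume: unbiased.  Unequal strata [0, 0.2], [0.2, 1]: E Z_0.2 = 0.5, not 0.2.
print(expected_proportion([0.0, 0.25, 0.5, 0.75, 1.0], 0.3))
print(expected_proportion([0.0, 0.2, 1.0], 0.2))
```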

Now let \(\{{\varvec{\Omega }}^{(N)}\}_{N\ge 1}\) be a sequence of finite partitions of the unit cube and let \({{\mathcal {P}}}_{{\varvec{\Omega }}^{(N)}}=\{{\mathbf {X}}_1^{(N)},\ldots ,{\mathbf {X}}_N^{(N)}\}\) be the stratified sample associated to the Nth partition. Note that we use capital letters whenever points are random. The fact that partitions for different N need not be related to one another implies that the set of all sampling points forms a triangular array, and we are thus led to define a uniform distribution property for those; see also [7, Section 3].

Definition 1

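A triangular array \({\widehat{{\mathbf {x}}}}=\big ({\mathbf {x}}_1^{(N)},\ldots ,{\mathbf {x}}_N^{(N)}\big )_{N\in {\mathbb {N}}}\) with points in \([0,1]^d\) is said to be uniformly distributed if for every axis-parallel box \([\mathbf{x}, \mathbf{y}[ \subset [0,1[^d\) we have

\[ \lim _{N\rightarrow \infty }\frac{\#\big \{ i\in \{1,\ldots ,N\}:{\mathbf {x}}_i^{(N)}\in [{\mathbf {x}},{\mathbf {y}}[\big \} }{N}=\big |[{\mathbf {x}},{\mathbf {y}}[\big |. \]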

A sequence \(({\mathbf {x}}_i)\) in the unit cube is uniformly distributed in the usual sense if and only if the triangular array \(({\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N)_{N\in {\mathbb {N}}}\) is uniformly distributed in the sense of Definition 1. Hence, this definition generalizes the usual one. As in the classical case, uniform distribution of triangular arrays is equivalent to the weak convergence of the sequence of ‘empirical distributions’, where the Nth of those distributions sits on the points \({\mathbf {x}}_1^{(N)},\ldots ,{\mathbf {x}}_N^{(N)}\) giving equal mass to each of them. In other words, \((1/N) \sum _{i=1}^N f({\mathbf {x}}_i^{(N)})\rightarrow \int _{[0,1]^d}f({\mathbf {x}})\mathrm {d}{\mathbf {x}}\), as \(N\rightarrow \infty \), for all continuous functions f on the unit cube.

Proposition 6 in Appendix A characterizes partitions leading a.s. to uniformly distributed stratified samples using the strong law of large numbers for triangular arrays. The most important implication of Proposition 6 is that stratified samples from sequences of equivolume partitions are almost surely uniformly distributed. This is one reason why our theoretical results are based on equivolume partitions. Appendix A also discusses how Definition 1 relates to similar concepts in the existing literature.

2.2 The strong partition principle for stratified sampling

Discrepancy measures can be used to compare sets of sampling points. In the case of a set \({{\mathcal {P}}}\) of random sampling points the mean \({{\mathcal {L}}}_p\)-discrepancy \({{\mathbb {E}}}{{\mathcal {L}}}_p^p({{\mathcal {P}}})\) is often employed, where \({{\mathbb {E}}}\) denotes the probabilistic expectation. Strictly speaking, \({{\mathbb {E}}}{{\mathcal {L}}}_p^p({{\mathcal {P}}})\) should be called the ‘mean pth power \({{\mathcal {L}}}_p\)-discrepancy’, but we prefer the shorter, slightly imprecise term for brevity.

Certainly, a stratified sample need not be better than a Monte Carlo sample. Consider for instance a partition \({\varvec{\Omega }}\) with N sets in \([0,1]^2\) where the \(N-1\) partitioning sets \(\Omega _1,\ldots , \Omega _{N-1}\) are all subsets of \([\delta ,1]^2\) with some \(\delta \in ]0,1[\). Then the mean \({{\mathcal {L}}}_2\)-discrepancy satisfies

for all \(\delta >(5/9)^{1/4}\approx 0.86\) and \(N\ge 2\), where the last inequality uses (15).

In contrast to this, stratified samples from equivolume partitions are never worse than Monte Carlo samples in terms of the mean \({{\mathcal {L}}}_2\)-discrepancy according to the Partition Principle in [28, Theorem 1.2]. We strengthen this result in two directions showing firstly that stratified samples from equivolume partitions are strictly better, and secondly that \({{\mathcal {L}}}_2\)-discrepancy can be replaced by \({{\mathcal {L}}}_p\)-discrepancy with arbitrary \(p>1\). The main ingredient of our proof is a result by Hoeffding [22] stating that among all Poisson-binomial distributions with given mean, the classical binomial distribution is the most spread-out.

Theorem 1

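(Strong Partition Principle) For any equivolume partition \({\varvec{\Omega }}\) of \([0,1]^d\) with \(N\ge 2\) sets we have

\[ {{\mathbb {E}}}{{{\mathcal {L}}}_p}({{\mathcal {P}}}_{\varvec{\Omega }})^p<{{\mathbb {E}}}{{{\mathcal {L}}}_p}({{\mathcal {P}}}_N)^p \tag{5} \]

for all \(p>1\).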

The proof of this theorem will be given in Sect. 3.2. One can understand (5) as a continuous analog and extension of the statement in finite population sampling theory that self-weighted stratified sampling is always better (in terms of variance) than simple random sampling, both taken with replacement.
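For \(p=2\), Theorem 1 can be probed numerically. The sketch below is our illustration (all helper names are hypothetical): it estimates \({{\mathbb {E}}}{{\mathcal {L}}}_2^2\) by averaging Warnock's formula over independent realisations, for jittered sampling with \(N=16\) points in \(d=2\) and for i.i.d. points, whose mean is known in closed form as \((2^{-d}-3^{-d})/N\). It is a Monte Carlo estimate for one case, not a proof.

```python
import itertools
import random
from math import prod


def l2_sq(points):
    """Squared L_2-discrepancy via Warnock's formula."""
    n, d = len(points), len(points[0])
    cross = 2.0 / n * sum(prod((1.0 - c * c) / 2.0 for c in p) for p in points)
    count = sum(prod(1.0 - max(a, b) for a, b in zip(p, q))
                for p in points for q in points) / n**2
    return 3.0 ** (-d) - cross + count


def iid_uniform(n, d):
    """Monte Carlo sample: n i.i.d. uniform points in [0,1]^d."""
    return lambda rng: [tuple(rng.random() for _ in range(d)) for _ in range(n)]


def jittered(m, d):
    """Classical jittered sample with N = m^d points."""
    def sample(rng):
        return [tuple((j + rng.random()) / m for j in cell)
                for cell in itertools.product(range(m), repeat=d)]
    return sample


def mean_l2_sq(sampler, reps, rng):
    """Monte Carlo estimate of E L_2(P)^2 from `reps` independent realisations."""
    return sum(l2_sq(sampler(rng)) for _ in range(reps)) / reps


m, d = 4, 2
n = m**d
rng = random.Random(2024)
est_iid = mean_l2_sq(iid_uniform(n, d), 500, rng)
est_jit = mean_l2_sq(jittered(m, d), 500, rng)
exact_iid = (2.0 ** (-d) - 3.0 ** (-d)) / n  # closed form for i.i.d. points
print(est_iid, exact_iid, est_jit)
```

Replacing `jittered` by any other sampler of an equivolume partition gives the corresponding comparison.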

For illustration, we consider the sequence \(({\varvec{\Omega }}^{(N)}_{\mathrm {vert}})\) of vertical strip partitions; see Fig. 2 (left), but generalized to the d-dimensional case. Direct calculation confirms

for all \(N\ge 2\). Both sampling schemes have the same asymptotic order 1/N, but stratified sampling has a better leading constant; see Sect. 3.2 for details.
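The direct calculation can be sketched with Proposition 2 from Sect. 3.2, assuming that the d-dimensional vertical strips are \(\Omega _i=[(i-1)/N,i/N[\times [0,1]^{d-1}\). This derivation is our own and the presentation in Sect. 3 may differ; it also uses the standard identity \({{\mathbb {E}}}{{{\mathcal {L}}}_2}({{\mathcal {P}}}_N)^2=(2^{-d}-3^{-d})/N\) for Monte Carlo samples.

```latex
% Write x = (x_1, x'), x_1 \in [(k-1)/N, k/N[, t = N x_1 - (k-1) \in [0,1[, P = x_2 \cdots x_d.
% Then q_i(x) = P for i < k, q_k(x) = tP, and q_i(x) = 0 for i > k, so
\sum_{i=1}^N q_i(\mathbf{x})\bigl(1-q_i(\mathbf{x})\bigr) = (k-1)\,P(1-P) + tP(1-tP).
% Integrating over x_1 (i.e. over k and t) and x', with \int P = 2^{1-d}, \int P^2 = 3^{1-d}:
\int_{[0,1]^d}\sum_{i=1}^N q_i(1-q_i)\,\mathrm{d}\mathbf{x}
  = \frac{N-1}{2}\bigl(2^{1-d}-3^{1-d}\bigr) + 2^{-d} - 3^{-d},
% and dividing by N^2 (Proposition 2):
\mathbb{E}\mathcal{L}_2\bigl(\mathcal{P}_{\Omega^{(N)}_{\mathrm{vert}}}\bigr)^2
  = \mathbb{E}\mathcal{L}_2(\mathcal{P}_N)^2 - \frac{(N-1)\,3^{-d}}{2N^2}.
```

For \(d=2\) this gives \((3N+2)/(36N^2)\) against \(5/(36N)\) for Monte Carlo samples, a ratio \(5N/(3N+2)\) that tends to 5/3.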

2.3 Partitions with best average discrepancy

For a given \(N\ge 2\), it is an open problem to ensure the existence of a partition whose associated stratified sample has the lowest mean discrepancy among all partitions consisting of N sets. We will show such existence results for certain equivolume partitions. These results are all based on the standard topological argument that a continuous function attains its minimum on a compact set. This requires the choice of a topology on the family \({{\mathcal {C}}}\) of compact subsets of \([0,1]^d\). We have chosen the well-established Hausdorff metric, as \({{\mathcal {C}}}\) is compact in its generated topology. However, both the extension of this compactness to partitions and the continuity of \({{\mathbb {E}}}{{{\mathcal {L}}}_p}({{\mathcal {P}}}_{{\varvec{\Omega }}})^p\) as a function of the partitioning sets require the continuity of the volume functional. We ensure this by imposing certain regularity conditions. More precisely, we assume that there is \(r>0\) such that the sets \(\Omega _1,\ldots ,\Omega _N\subset [0,1]^d\) of the partition have reach at least r, meaning that for any point \({\mathbf {x}}\) with distance less than r from \(\Omega _i\) there is a unique closest point to \({\mathbf {x}}\) in \(\Omega _i\), \(i=1,\ldots ,N\); see Fig. 3. The class of such sets is very general and contains, for instance, all compact convex sets in \([0,1]^d\) (as the reach of a closed convex set is infinite). It also contains any set whose boundary is a piecewise \(C^2\)-curve with finitely many ‘convex’ vertices, provided that \(r>0\) is chosen small enough. Let \({\mathfrak {P}}_N(r)\) be the class of all equivolume partitions consisting of sets with reach at least \(r>0\).

Also smaller classes of partitions can be treated. We name here the class \({\mathfrak {P}}_N^{\mathrm {conv}}\) of equivolume convex partitions, which might be relevant for applications, as all sets constituting a convex partition of \([0,1]^d\) are actually convex polytopes, with the total number of vertices being uniformly bounded when N is given. Hence, convex partitions can be described using finitely many parameters, and thus they are, at least in principle, computationally tractable. The following main result states the existence of equivolume partitions yielding the best mean discrepancy \({{\mathbb {E}}}\Delta \) from stratification under regularity. Note that the assumptions on the function \(\Delta \), which is some given measure of discrepancy, are very weak.

Theorem 2

Let \(r>0\) and \(N\in {\mathbb {N}}\) be given and assume that \(\Delta :([0,1]^d)^N\rightarrow {{\mathbb {R}}}\) is measurable and bounded. Then there exists (at least) one partition \({\varvec{\Omega }}^* \in {\mathfrak {P}}_N(r)\) such that the corresponding stratified sample \({{\mathcal {P}}}_{{\varvec{\Omega }}^*}\) minimizes the mean \(\Delta \)-discrepancy on \({\mathfrak {P}}_N(r)\), i.e.

\[ {{\mathbb {E}}}\Delta ({{\mathcal {P}}}_{{\varvec{\Omega }}^*})=\min _{{\varvec{\Omega }}\in {\mathfrak {P}}_N(r)}{{\mathbb {E}}}\Delta ({{\mathcal {P}}}_{\varvec{\Omega }}). \]

A corresponding statement holds true for \({\mathfrak {P}}_N^{\mathrm {conv}}\).

The standard notions of discrepancy satisfy the assumptions in the above theorem; see the end of Sect. 3.4 for details.

Corollary 1

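Let \(r>0\), \(1\le p<\infty \), and \(N\in {\mathbb {N}}\) be given. Then there are partitions \({\varvec{\Omega }}^p \in {\mathfrak {P}}_N(r)\) and \({\varvec{\Omega }}^* \in {\mathfrak {P}}_N(r)\) of \([0,1]^d\) such that

\[ {{\mathbb {E}}}{{{\mathcal {L}}}_p}({{\mathcal {P}}}_{{\varvec{\Omega }}^p})^p=\min _{{\varvec{\Omega }}\in {\mathfrak {P}}_N(r)}{{\mathbb {E}}}{{{\mathcal {L}}}_p}({{\mathcal {P}}}_{\varvec{\Omega }})^p \]

and

\[ {{\mathbb {E}}}D^*({{\mathcal {P}}}_{{\varvec{\Omega }}^*})=\min _{{\varvec{\Omega }}\in {\mathfrak {P}}_N(r)}{{\mathbb {E}}}D^*({{\mathcal {P}}}_{\varvec{\Omega }}), \]

respectively. Corresponding statements hold true for \({\mathfrak {P}}_N^{\mathrm {conv}}\).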

For illustration we will determine the optimal convex partition of \([0,1]^2\) for \(N=2\) in Sect. 3.5.

Theorem 2 and its proof in Sect. 3.4 indicate that the properties of the discrepancy are only of marginal importance, while the regularity assumptions on the partitioning sets are crucial for showing continuity and compactness. Generally speaking, we expect that these regularity conditions are not needed for such existence statements to hold, that is, we expect the existence of an equivolume partition of \([0,1]^d\) minimizing a given (rather general) measure of discrepancy. This conjecture is based on the surmise that minimizers typically consist of regular sets. To illustrate this point, consider the simple case \(d=1\), \(N=2\). Clearly, the class of equivolume partitions contains very complicated pairs of sets, such as fractals, and it is closed neither in the Hausdorff metric nor in the \({{\mathcal {L}}}_1\)-metric induced by indicator functions. But Corollary 2 in Sect. 3.2 shows that the unique equivolume partition minimizing the mean \({{\mathcal {L}}}_2\)-discrepancy in this case is simply \(\{[0,1/2],[1/2,1]\}\) (up to sets of measure zero), which consists of very regular sets.

We cannot claim that the equivolume assumption is needed, but it is crucial for our approach, as it prevents sets in sequences of partitions from shrinking to lower-dimensional sets. Existence statements without the equivolume assumption, though very interesting, would thus require substantially different techniques and are beyond the scope of the present paper.

2.4 Explicit stratification strategies for arbitrary N

Next, we suggest and motivate a general and versatile construction of partitions for arbitrary N. We define partitions of the unit square generated by parallel lines which are orthogonal to the diagonal of the square. As a special case we consider the partitions \({\varvec{\Omega }}_{*}^{(N)}\) which are equivolume; see Fig. 2 (right). In Sect. 4.5 we present numerical evidence that stratified samples based on such partitions improve the expected \({{\mathcal {L}}}_2\)-discrepancy of an N-point Monte Carlo sample roughly by a factor of two. As a comparison, we show in Example 1 in Sect. 3.3 that samples based on vertical strip partitions improve an N-point Monte Carlo sample by a factor of 5/3.
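As we read the description above, \({\varvec{\Omega }}_{*}^{(N)}\) cuts \([0,1]^2\) along lines \(x_1+x_2=t_k\), with levels \(t_k\) chosen so that every strip has area 1/N. The Python sketch below (hypothetical helper names; the parametrisation in Sect. 4 may differ) computes these levels in closed form and draws one uniform point per strip. The level \(s=x_1+x_2\) is sampled by inverting the area function; given s, the coordinate \(x_1\) is uniform on the segment \(\{x_1+x_2=s\}\cap [0,1]^2\), because the map \((x_1,x_2)\mapsto (s,x_1)\) has Jacobian 1.

```python
import math
import random


def area_below(t):
    """Area of {x in [0,1]^2 : x_1 + x_2 <= t} for t in [0, 2]."""
    if t <= 1.0:
        return t * t / 2.0
    return 1.0 - (2.0 - t) ** 2 / 2.0


def inverse_area(a):
    """Level t with area_below(t) = a, for a in [0, 1]."""
    if a <= 0.5:
        return math.sqrt(2.0 * a)
    return 2.0 - math.sqrt(2.0 * (1.0 - a))


def diagonal_cuts(n):
    """Levels 0 = t_0 < t_1 < ... < t_n = 2 such that each strip
    {t_(k-1) <= x_1 + x_2 < t_k} has area 1/n."""
    return [inverse_area(k / n) for k in range(n + 1)]


def sample_diagonal_partition(n, rng=random):
    """One uniform point in each strip of the equivolume diagonal partition."""
    points = []
    for k in range(n):
        # inverse-CDF sampling of the level s = x_1 + x_2 within strip k
        s = inverse_area((k + rng.random()) / n)
        # given s, x_1 is uniform on the part of the line x_1 + x_2 = s inside the square
        lo, hi = max(0.0, s - 1.0), min(1.0, s)
        x1 = lo + (hi - lo) * rng.random()
        points.append((x1, s - x1))
    return points


print(diagonal_cuts(4))
print(sample_diagonal_partition(4, random.Random(7)))
```

Combined with Warnock's formula, this gives a simple way to estimate the expected \({{\mathcal {L}}}_2\)-discrepancy of these samples for any N.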

Importantly, this construction enables us also to systematically study the role of the equivolume property. In a first step, in Example 3 in Sect. 4.2 we improve the minimal convex equivolume partition for \(N=2\) obtained in Example 2 in Sect. 3.5 by shifting the separating line along the diagonal. In Sect. 4.3 we extend this analysis to the case \(N=3\). We parametrise all such partitions into three sets and determine the minimal partition among them. It turns out that these partitions into three sets have a rich and interesting global structure with respect to their expected discrepancy.

Finally, we give examples of partitions within this family for small N which show that classical jittered sampling can be improved by relaxing the equivolume constraint.

2.5 Conclusions and open questions

In conclusion, if partitions are needed to generate stratified samples for arbitrary N, our results suggest using lines that are orthogonal to the diagonal of the unit square. Within this family it seems that equivolume partitions \({\varvec{\Omega }}_{*}^{(N)}\) are a reasonably good pick; see Sect. 4.5 for details. Moreover, our examples for \(N=2,3,4\) show that the expected discrepancy can be improved if we drop the equivolume property. This is in line with the results from [29] and deserves further attention. It certainly relates to the well-known general observation that the \({{\mathcal {L}}}_2\)-discrepancy exaggerates the importance of points lying close to the origin (see [26, pg 13f]).

Question 2

Are there properties of sequences of partitions, other than equivolume, that improve asymptotically the expected discrepancy of Monte Carlo sampling?

Our example for \(N=4\) supports the idea brought forward in [28] that classical jittered sampling may not give the lowest expected discrepancy for large N.

Question 3

Is there an infinite family of partitions that generates point sets with a smaller expected discrepancy than classical jittered sampling for large N?

3 Proofs and examples

3.1 Proofs for Section 2.1

We now give proofs of the results in Sect. 2.1 using the notations and notions introduced there. On several occasions we will need the following lemma, which essentially is a reformulation of the fact that a distribution (in the probabilistic sense) is uniquely determined by its cumulative distribution function, also in the multivariate case; see e.g. [25, Example 1.44]. Indeed, the proof of the following lemma is based on this fact, if the function involved is split into positive and negative part and the total integrals are normalized.

Lemma 1

An integrable function \(f:[0,1]^d\rightarrow {{\mathbb {R}}}\) is almost everywhere determined if its integrals \(\int _{[0,{\mathbf {x}}]}f({\mathbf {y}})\mathrm {d}{\mathbf {y}}\) are known for almost all \({\mathbf {x}}\in [0,1]^d\).

In other words, \(\int _{[0,{\mathbf {x}}]}f({\mathbf {y}})\mathrm {d}{\mathbf {y}}=0\) for almost all \({\mathbf {x}}\in [0,1]^d\) implies \(f({\mathbf {x}})=0\) for almost all \({\mathbf {x}}\in [0,1]^d\).

We are now in a position to show the announced characterization of equivolume partitions in terms of the unbiasedness of the proportions \(Z_{\mathbf {x}}({{\mathcal {P}}})\) in (3).

Proposition 1

For a partition \({\varvec{\Omega }}\) of \([0,1]^d\) into N Lebesgue-measurable sets \(\Omega _1,\ldots , \Omega _N\) of positive volume, the following three statements are equivalent.

-

(i)

\({\varvec{\Omega }}\) is equivolume.

-

(ii)

\({{\mathbb {E}}}Z_{\mathbf {x}}({{\mathcal {P}}})=\big |[0,{\mathbf {x}}]\big |\) for all \({\mathbf {x}}\in [0,1]^d\).

-

(iii)

\({{\mathbb {E}}}Z_{\mathbf {x}}({{\mathcal {P}}})=\big |[0,{\mathbf {x}}]\big |\) for almost all \({\mathbf {x}}\in [0,1]^d\).

Proof

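The bias is

\[ {{\mathbb {E}}}Z_{\mathbf {x}}({{\mathcal {P}}})-\big |[0,{\mathbf {x}}]\big |=\frac{1}{N}\sum _{i=1}^N\frac{|\Omega _i\cap [0,{\mathbf {x}}]|}{|\Omega _i|}-\sum _{i=1}^N|\Omega _i\cap [0,{\mathbf {x}}]|=\sum _{i=1}^N u_i\,|\Omega _i\cap [0,{\mathbf {x}}]|, \tag{6} \]

where

\[ u_i=\frac{1}{N|\Omega _i|}-1,\qquad i=1,\ldots ,N. \]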

If (i) holds, the vector \({\mathbf {u}}=(u_1,\ldots ,u_N)\) is the zero vector and the bias (6) vanishes for all \({\mathbf {x}}\in [0,1]^d\). Hence, (i) implies (ii).

Clearly (ii) implies (iii), so it remains to assume (iii) and deduce (i). Assumption (iii) implies that (6) vanishes for almost all \({\mathbf {x}}\in [0,1]^d\). This implies \(\int _{[0,{\mathbf {x}}]} f({\mathbf {y}})d{\mathbf {y}}=0\) for almost all \({\mathbf {x}}\in [0,1]^d\), where we have put \( f=\sum _{i=1}^N u_i 1_{\Omega _i}. \) Lemma 1 implies \(f=0\) almost everywhere on \([0,1]^d\), and as the sets \(\Omega _i\) have positive volume and pairwise overlap only in null sets, \({\mathbf {u}}=0\). Hence the partition is equivolume. \(\square \)

3.2 Proofs for Section 2.2

Proof of Theorem 1

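Let \(p>1\) be given. Using the variable \(Z_{\mathbf {x}}=Z_{\mathbf {x}}({{\mathcal {P}}})\) from (3) and applying Tonelli’s theorem we see that

\[ {{\mathbb {E}}}{{{\mathcal {L}}}_p}({{\mathcal {P}}})^p=\int _{[0,1]^d}{{\mathbb {E}}}\big |Z_{\mathbf {x}}-|[0,{\mathbf {x}}]|\big |^p\,\mathrm {d}{\mathbf {x}}, \]

so the equivolume assumption and Proposition 1 yield

\[ {{\mathbb {E}}}{{{\mathcal {L}}}_p}({{\mathcal {P}}}_{\varvec{\Omega }})^p=\int _{[0,1]^d}\mu _p(Z_{\mathbf {x}})\,\mathrm {d}{\mathbf {x}}. \tag{7} \]

Here,

\[ \mu _p(Y)={{\mathbb {E}}}\big |Y-{{\mathbb {E}}}Y\big |^p \]

is the pth centered moment of a random variable Y. The variable \(NZ_{\mathbf {x}}\) is the sum of N independent (but not identically distributed) Bernoulli variables with success probabilities \(q_1({\mathbf {x}}),\ldots ,\) \(q_N({\mathbf {x}})\), where

\[ q_i({\mathbf {x}})=\frac{|\Omega _i\cap [0,{\mathbf {x}}]|}{|\Omega _i|}=N|\Omega _i\cap [0,{\mathbf {x}}]|,\qquad i=1,\ldots ,N. \tag{8} \]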

The distribution of \(NZ_{\mathbf {x}}\) is usually called Poisson-binomial distribution with N trials and parameter vector \({\mathbf {q}}({\mathbf {x}})=(q_1({\mathbf {x}}),\ldots ,q_N({\mathbf {x}}))\). Its mean is \(\sum _{i=1}^N q_i({\mathbf {x}})=N|[0,{\mathbf {x}}]|\).

Setting \(U_{\mathbf {x}}=Z_{\mathbf {x}}({{\mathcal {P}}}_N)=\frac{1}{N}\sum _{i=1}^N 1_{[0,{\mathbf {x}}[}(\mathbf{X} _i)\) with uniform i.i.d. random variables \(\mathbf{X} _1,\ldots ,\mathbf{X} _N\) in \([0,1]^d\) and using similar arguments as above yields correspondingly

\[ {{\mathbb {E}}}{{{\mathcal {L}}}_p}({{\mathcal {P}}}_N)^p=\int _{[0,1]^d}\mu _p(U_{\mathbf {x}})\,\mathrm {d}{\mathbf {x}}. \tag{9} \]

The variable \(NU_{\mathbf {x}}\) has a binomial distribution with N trials and success probability \(|[0,{\mathbf {x}}]|\). Its mean therefore coincides with the mean of \(NZ_{\mathbf {x}}\).

We now use the fact that, among all Poisson-binomial distributions with a given mean, the binomial distribution is the largest in convex order. This is formalized in [22, Theorem 3] (see also the paragraph directly after the statement of this theorem) and, as \(y\mapsto |y|^p\) is strictly convex for \(p>1\), implies

\[ \mu _p(Z_{\mathbf {x}})\le \mu _p(U_{\mathbf {x}}),\qquad {\mathbf {x}}\in [0,1]^d, \tag{10} \]

with equality if and only if \(NZ_{\mathbf {x}}\) has a classical binomial distribution, that is, if and only if \(q_1({\mathbf {x}})=\cdots =q_N({\mathbf {x}})=|[0,{\mathbf {x}}]|\). Integrating (10) with respect to \({\mathbf {x}}\) now yields (5) if we can exclude the equality case.

Equality in (5) would imply equality in (10), and thus \(N|\Omega _i\cap [0,{\mathbf {x}}]|=|[0,{\mathbf {x}}]|\), \(i\in \{1,\ldots ,N\}\), for almost all \({\mathbf {x}}\in [0,1]^d\). Hence \(\int _{[0,{\mathbf {x}}]} 1_{\Omega _i}({\mathbf {y}})d{\mathbf {y}}=\int _{[0,{\mathbf {x}}]}\frac{1}{N} d{\mathbf {y}}\) for almost all \({\mathbf {x}}\in [0,1]^d\) and all \(i\in \{1,\ldots ,N\}\). Lemma 1 implies \(1_{\Omega _i}=1/N\) almost everywhere, which is impossible for an indicator function as \(N\ge 2\). \(\square \)
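For a fixed \({\mathbf {x}}\), the key inequality (10) compares the centered pth moment of a Poisson-binomial variable with that of a binomial variable with the same mean. The Python sketch below (hypothetical helper names; the probability vector is an arbitrary illustrative choice, not taken from a partition) computes both distributions exactly by dynamic programming.

```python
def poisson_binomial_pmf(probs):
    """pmf of a sum of independent Bernoulli(probs[i]) variables, built up
    one trial at a time (dynamic programming)."""
    pmf = [1.0]
    for q in probs:
        new = [0.0] * (len(pmf) + 1)
        for k, mass in enumerate(pmf):
            new[k] += mass * (1.0 - q)   # trial fails
            new[k + 1] += mass * q       # trial succeeds
        pmf = new
    return pmf


def central_moment(pmf, p):
    """E|S - E S|^p for S with the given pmf on {0, 1, ..., len(pmf) - 1}."""
    mean = sum(k * m for k, m in enumerate(pmf))
    return sum(abs(k - mean) ** p * m for k, m in enumerate(pmf))


q = [0.1, 0.5, 0.9]                                  # illustrative q_i(x)
pb = poisson_binomial_pmf(q)
bi = poisson_binomial_pmf([sum(q) / len(q)] * len(q))  # binomial, same mean
for p in (1.5, 2, 3, 4):
    print(p, central_moment(pb, p), central_moment(bi, p))
```

For this choice the variances are 0.43 and 0.75, and the Poisson-binomial moment is smaller for every listed \(p>1\), as (10) predicts.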

It is worth emphasizing the special case \(p=2\) of (7), which has been used more or less explicitly and generally in the existing literature, as it implies that the mean \({{\mathcal {L}}}_2\)-discrepancy can be described as sum of contributions from the individual sample points. Also for \(p=4\) an explicit integral representation can be stated.

Proposition 2

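Let \({\varvec{\Omega }}\) be an equivolume partition and let \(q_i({\mathbf {x}})\), \(i=1,\ldots ,N\), be defined by (8). Then

\[ {{\mathbb {E}}}{{{\mathcal {L}}}_2}({{\mathcal {P}}}_{\varvec{\Omega }})^2=\frac{1}{N^2}\int _{[0,1]^d}\sum _{i=1}^N Q_i({\mathbf {x}})\,\mathrm {d}{\mathbf {x}} \]

with \(Q_i({\mathbf {x}})=q_i({\mathbf {x}})\big (1-q_i({\mathbf {x}})\big )^2+q_i({\mathbf {x}})^2\big (1-q_i({\mathbf {x}})\big )=q_i({\mathbf {x}})\big (1-q_i({\mathbf {x}})\big )\), and

\[ {{\mathbb {E}}}{{{\mathcal {L}}}_4}({{\mathcal {P}}}_{\varvec{\Omega }})^4=\frac{1}{N^4}\int _{[0,1]^d}\Big (\sum _{i=1}^N R_i({\mathbf {x}})+3\sum _{i\ne j}Q_i({\mathbf {x}})Q_j({\mathbf {x}})\Big )\,\mathrm {d}{\mathbf {x}}, \]

where \(R_i({\mathbf {x}})=q_i({\mathbf {x}})\big (1-q_i({\mathbf {x}})\big )^4+q_i({\mathbf {x}})^4\big (1-q_i({\mathbf {x}})\big )\).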

Proof

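According to (7) with \(p=2\), we have

\[ {{\mathbb {E}}}{{{\mathcal {L}}}_2}({{\mathcal {P}}}_{\varvec{\Omega }})^2=\int _{[0,1]^d}{{\mathbb {V}}}\mathrm {ar}\big (Z_{\mathbf {x}}({{\mathcal {P}}})\big )\,\mathrm {d}{\mathbf {x}}, \tag{11} \]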

where \(Z_{\mathbf {x}}({{\mathcal {P}}})\) is given in (3). We have already seen that \(N Z_{\mathbf {x}}({{\mathcal {P}}})\) is the sum of the independent Bernoulli variables \(Y_{i}=1_{[0, \mathbf{x}[}(\mathbf{X} _i)\) with success probabilities \(q_1({\mathbf {x}}),\ldots ,\) \(q_N({\mathbf {x}})\), respectively, so \(N^2{{\mathbb {V}}}\mathrm {ar}\big (Z_{\mathbf {x}}({{\mathcal {P}}})\big ) =\sum _{i=1}^N q_i({\mathbf {x}})\big (1-q_i({\mathbf {x}})\big )\). This can be inserted into (11) to obtain the first claim.

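For the second claim, let \(W_i=Y_i-{{\mathbb {E}}}Y_i\), \(i=1,\ldots ,N\), and note that, as the \(W_i\) are independent and centred,

\[N^4\,{{\mathbb {E}}}\big (Z_{\mathbf {x}}({{\mathcal {P}}})-|[0,{\mathbf {x}}]|\big )^4={{\mathbb {E}}}\Big (\sum _{i=1}^N W_i\Big )^4=\sum _{i=1}^N{{\mathbb {E}}}W_i^4+3\sum _{i\ne j}{{\mathbb {E}}}W_i^2\,{{\mathbb {E}}}W_j^2.\tag{12}\]

As

\[W_i=\begin{cases}1-q_i({\mathbf {x}}) & \text{with probability } q_i({\mathbf {x}}),\\ -q_i({\mathbf {x}}) & \text{with probability } 1-q_i({\mathbf {x}}),\end{cases}\]

we have \( {{\mathbb {E}}}W_i^4=R_i({\mathbf {x}})\) and \({{\mathbb {E}}}W_i^2=Q_i({\mathbf {x}})\). Inserting this into (12) and applying (7) with \(p=4\) yields the second assertion. \(\square \)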
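The two moment identities behind Proposition 2, namely \({{\mathbb {E}}}(\sum _i W_i)^2=\sum _i Q_i\) and \({{\mathbb {E}}}(\sum _i W_i)^4=\sum _i R_i+3\sum _{i\ne j}Q_iQ_j\) for independent centred Bernoulli deviations \(W_i\), can be sanity-checked by brute force. The following minimal Python sketch enumerates all \(2^N\) outcomes and compares them with the closed forms; the probability vector `q` is an arbitrary illustrative choice (hypothetical), not derived from any particular partition.

```python
from itertools import product
from math import isclose, prod


def central_moments_by_enumeration(q):
    """Return (E S^2, E S^4) for S = sum_i (Y_i - q_i), where the Y_i are
    independent Bernoulli(q_i) variables, by summing over all 2^N outcomes."""
    m2 = m4 = 0.0
    for outcome in product((0, 1), repeat=len(q)):
        weight = prod(qi if y else 1.0 - qi for y, qi in zip(outcome, q))
        s = sum(y - qi for y, qi in zip(outcome, q))
        m2 += weight * s**2
        m4 += weight * s**4
    return m2, m4


def central_moments_by_formula(q):
    """Same quantities via Q_i = q_i(1-q_i) and R_i = q_i(1-q_i)^4 + q_i^4(1-q_i)."""
    Q = [qi * (1 - qi) for qi in q]
    R = [qi * (1 - qi) ** 4 + qi**4 * (1 - qi) for qi in q]
    n = len(q)
    cross = sum(Q[i] * Q[j] for i in range(n) for j in range(n) if i != j)
    return sum(Q), sum(R) + 3 * cross


q = [0.1, 0.35, 0.8, 0.5]  # hypothetical success probabilities
print(central_moments_by_enumeration(q))
print(central_moments_by_formula(q))
```

The enumeration is exponential in N and only meant as a check for small N; the closed forms are what makes Proposition 2 usable in practice.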

As an application of the previous proposition, we show that an equivolume partition minimizing the mean \({{\mathcal {L}}}_2\)-discrepancy exists without any further regularity assumptions when \(d=1\) and \(N=2\).

Corollary 2

An equivolume partition \({\varvec{\Omega }}=(\Omega _1,\Omega _2)\) of the unit interval [0, 1] minimizes the mean \({{\mathcal {L}}}_2\)-discrepancy of its corresponding stratified point set among all equivolume partitions into two sets if and only if \(\Omega _1\) coincides up to a set of measure zero with [0, 1/2] or with [1/2, 1].

Proof

Let an equivolume partition \({\varvec{\Omega }}=(\Omega _1,\Omega _2)\) of the unit interval be given. It is determined by the measurable set \(\Omega _1\subset [0,1]\) with 1-dimensional Lebesgue measure 1/2, as \(\Omega _2=[0,1]{\setminus } \Omega _1\) (at least up to a set of measure zero). The functions \(q_i(\cdot )\) in (8) are thus \(q_1(x)=2|\Omega _1\cap [0,x]|\) and \(q_2(x)=2x-q_1(x)\), and Proposition 2 implies

\[{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\varvec{\Omega }})^2=\frac{1}{4}\int _0^1\sum _{i=1}^2 q_i(x)\big (1-q_i(x)\big )\,\mathrm {d}x=\frac{1}{2}\int _0^1\Big (x(1-2x)+g_x\big (q_1(x)\big )\Big )\,\mathrm {d}x,\tag{13}\]

where \(g_x(q)=q(2x-q)\). Clearly \(q\mapsto g_x(q)\) is strictly concave for all \(x\in [0,1]\). It is easy to see that \({\underline{q}}(x)\le q_1(x)\le {\overline{q}}(x)\), where

\[{\underline{q}}(x)=\max \{0,2x-1\}\quad \text{and}\quad {\overline{q}}(x)=\min \{1,2x\}\]

for \(x\in [0,1]\). Hence, there is an \(\alpha _x\in [0,1]\) with \(q_1(x)=\alpha _x {\underline{q}}(x)+(1-\alpha _x){\overline{q}}(x)\). Now, (13), the concavity of \(g_x\) and the fact that \(g_x\big ({\underline{q}}(x)\big )=g_x\big ({\overline{q}}(x)\big )=\max \{0,2x-1\}\) yield

\[{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\varvec{\Omega }})^2\ge \frac{1}{2}\int _0^1\Big (x(1-2x)+\alpha _x g_x\big ({\underline{q}}(x)\big )+(1-\alpha _x)g_x\big ({\overline{q}}(x)\big )\Big )\,\mathrm {d}x=\frac{1}{2}\int _0^1\big (x(1-2x)+\max \{0,2x-1\}\big )\,\mathrm {d}x=\frac{1}{24},\]

with equality if and only if \(\alpha _x\in \{0,1\}\) holds for almost all \(x\in (0,1)\) due to the strict concavity. As \(q_1\) is continuous, this can only happen when \(\alpha _x=1\) for all \(x\in (0,1)\) or \(\alpha _x=0\) for all \(x\in (0,1)\). These two cases correspond to \(q_1\in \{{\underline{q}}, {\overline{q}}\}\), and thus to the two stated choices of \(\Omega _1\). \(\square \)
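Corollary 2 can also be checked numerically. The Python sketch below evaluates the mean \({{\mathcal {L}}}_2\)-discrepancy for \(d=1\), \(N=2\) via Proposition 2 with a midpoint rule, for \(\Omega _1\) given as a finite union of disjoint intervals of total length 1/2. The function names and the comparison set \(\Omega _1=[0,1/4]\cup [1/2,3/4]\) are our own illustrative choices; by hand, the two interval choices give 1/24 and the comparison set gives 7/96.

```python
def measure_up_to(intervals, x):
    """Lebesgue measure of (union of disjoint intervals) intersected with [0, x]."""
    return sum(max(0.0, min(b, x) - a) for a, b in intervals)


def mean_l2_two_sets(omega1, m=10_000):
    """Mean squared L2-discrepancy for d = 1, N = 2 via Proposition 2:
    (1/4) * int_0^1 [q1(1-q1) + q2(1-q2)] dx, approximated by a midpoint rule.
    omega1 is a list of disjoint intervals (a, b) of total length 1/2."""
    total = 0.0
    for k in range(m):
        x = (k + 0.5) / m
        q1 = 2.0 * measure_up_to(omega1, x)  # P(X_1 <= x)
        q2 = 2.0 * x - q1                    # P(X_2 <= x)
        total += q1 * (1 - q1) + q2 * (1 - q2)
    return total / (4 * m)


print(mean_l2_two_sets([(0.0, 0.5)]))              # close to 1/24
print(mean_l2_two_sets([(0.0, 0.25), (0.5, 0.75)]))  # close to 7/96
```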

3.3 Example 1: Illustration of partition principle

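For illustration, we derive the mean \({{\mathcal {L}}}_2\)-discrepancy of an N-point Monte Carlo sample \({{\mathcal {P}}}_{\mathrm {MC}}\) in \([0,1]^d\). Using (9), the i.i.d. property of the sampling points \({\mathbf {X}}_1,\ldots ,{\mathbf {X}}_N\) and

\[{{\mathbb {P}}}\big ({\mathbf {X}}_i\in [0,{\mathbf {x}}[\big )=|[0,{\mathbf {x}}]|,\qquad i=1,\ldots ,N,\]

we obtain

\[{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\mathrm {MC}})^2=\frac{1}{N}\int _{[0,1]^d}|[0,{\mathbf {x}}]|\big (1-|[0,{\mathbf {x}}]|\big )\,\mathrm {d}{\mathbf {x}}.\]

The latter integral equals \(\int _{[0,1]^d} \Big (\prod _{i=1}^d x_i-\prod _{i=1}^d x_i^2\Big )\mathrm {d}{\mathbf {x}}\) and can be evaluated explicitly. One obtains

\[{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\mathrm {MC}})^2=\frac{1}{N}\Big (\frac{1}{2^d}-\frac{1}{3^d}\Big ).\tag{14}\]

In particular, for \(d=2\), we get

\[{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\mathrm {MC}})^2=\frac{5}{36N}.\]

We now compare this with the mean \({{\mathcal {L}}}_2\)-discrepancy of a stratified sample \({{\mathcal {P}}}_{\mathrm {vert}}\) based on the vertical strip partition in Fig. 2, generalized to arbitrary \(d\ge 2\) by putting

\[\Omega _i=\Big [\frac{i-1}{N},\frac{i}{N}\Big ]\times [0,1]^{d-1}\]

for \(i=1,\ldots ,N\). The partition \({\varvec{\Omega }}=(\Omega _1,\ldots ,\Omega _N)\) is clearly equivolume. For given \({\mathbf {x}}=(x_1,\ldots ,x_d)\in ]0,1]^d\) we let \({\bar{\iota }}:=\lfloor N x_1 \rfloor \) and \(y:=x_2\cdots x_d\), and obtain for the success probabilities introduced in the Proof of Theorem 1

\[q_i({\mathbf {x}})=N|\Omega _i\cap [0,{\mathbf {x}}]|=\begin{cases} y, & i\le {\bar{\iota }},\\ (Nx_1-{\bar{\iota }})\,y, & i={\bar{\iota }}+1,\\ 0, & i>{\bar{\iota }}+1.\end{cases}\]

Due to independence, the relative number of points \(Z_{{\mathbf {x}}}({{\mathcal {P}}}_{\mathrm {vert}})\) given by (3) has variance

\[{{\mathbb {V}}}\mathrm {ar}\big (Z_{{\mathbf {x}}}({{\mathcal {P}}}_{\mathrm {vert}})\big )=\frac{1}{N^2}\Big ({\bar{\iota }}\,y(1-y)+(Nx_1-{\bar{\iota }})\,y\big (1-(Nx_1-{\bar{\iota }})\,y\big )\Big ).\]

Therefore, (7) yields

\[{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\mathrm {vert}})^2=\frac{1}{N^2}\int _{[0,1]^{d-1}}\int _0^1\Big ({\bar{\iota }}\,y(1-y)+(Nx_1-{\bar{\iota }})\,y\big (1-(Nx_1-{\bar{\iota }})\,y\big )\Big )\,\mathrm {d}x_1\,\mathrm {d}(x_2,\ldots ,x_d).\]

The one-dimensional integral on the right-hand side evaluates to

\[\frac{N-1}{2}\,y(1-y)+\frac{y}{2}-\frac{y^2}{3}.\]

Putting things together, we arrive at

\[{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\mathrm {vert}})^2=\frac{1}{N^2}\Big (\frac{N}{2^d}-\frac{3N-1}{2\cdot 3^d}\Big )={{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\mathrm {MC}})^2-\frac{N-1}{2N^2\, 3^d}<{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\mathrm {MC}})^2,\]

where (14) and \(N\ge 2\) were used. This confirms the general result that equivolume stratification is always strictly better than Monte Carlo sampling. It also shows that this stratification scheme has the same asymptotic order (namely 1/N) as Monte Carlo sampling, but a uniformly better leading constant: for instance, when \(d=2\) we get

\[{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\mathrm {vert}})^2=\frac{1}{12N}+\frac{1}{18N^2}\approx \frac{3}{5}\,{{\mathbb {E}}}{{\mathcal {L}}}_2({{\mathcal {P}}}_{\mathrm {MC}})^2\]

for large N.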
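The closed forms of Example 1 can be evaluated exactly with rational arithmetic. In the Python sketch below (function names hypothetical), the Monte Carlo value is \(\frac{1}{N}(2^{-d}-3^{-d})\), and the vertical-strip value \(\frac{1}{N^2}\big (\frac{N}{2^d}-\frac{3N-1}{2\cdot 3^d}\big )\) is our own evaluation of the integrals described in this example; for \(d=2\), \(N=2\) it reproduces the value 1/18 used for the vertical strip partition in Example 2.

```python
from fractions import Fraction


def mean_l2_monte_carlo(d, N):
    """E L_2^2 of N i.i.d. uniform points in [0,1]^d: (2^-d - 3^-d) / N."""
    return (Fraction(1, 2**d) - Fraction(1, 3**d)) / N


def mean_l2_vertical_strips(d, N):
    """E L_2^2 of the stratified sample on N vertical strips (d >= 2), obtained by
    integrating N^2 Var Z_x = floor(N x_1) y (1 - y) + t y (1 - t y),
    with y = x_2 ... x_d and t = N x_1 - floor(N x_1)."""
    return (N * Fraction(1, 2**d) - Fraction(3 * N - 1, 2 * 3**d)) / N**2


for N in (2, 4, 16):
    mc, vert = mean_l2_monte_carlo(2, N), mean_l2_vertical_strips(2, N)
    print(N, mc, vert, float(vert / mc))
```

Exact fractions make the gap \(\frac{N-1}{2N^2 3^d}\) between the two expressions directly checkable, and the ratio printed for growing N approaches 3/5.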

3.4 Proofs for Section 2.3

Fix \(A\subset {{\mathbb {R}}}^d\). We let \({{\,\mathrm{int}\,}}A\) and \({{\,\mathrm{bd}\,}}A\) be the interior and the boundary of A, respectively. For \(\varepsilon >0\) the \(\varepsilon \)-parallel set

\[A_\varepsilon =\Big \{{\mathbf {x}}\in {{\mathbb {R}}}^d:\inf _{{\mathbf {y}}\in A}\Vert {\mathbf {x}}-{\mathbf {y}}\Vert \le \varepsilon \Big \}\]

consists of all points with a distance at most \(\varepsilon \) from A. We recall that a set \(A\subset {{\mathbb {R}}}^d\) is said to have reach at least \(r>0\) if for any \(0<\varepsilon <r\) and \(x\in A_\varepsilon \) there is a point \(y\in A\) such that \(\Vert x-y\Vert <\Vert x-z\Vert \) for all \(z\in A{\setminus }\{y\}\).

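The family of non-empty compact sets will be endowed with the Hausdorff metric \(d_\mathrm {H}\) given by

\[d_\mathrm {H}(K,K')=\min \{\varepsilon \ge 0: K\subset K'_\varepsilon ,\ K'\subset K_\varepsilon \},\]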

where \(\emptyset \ne K,K'\subset {{\mathbb {R}}}^d\) are compact. Let \({{\mathcal {C}}}\) be the family of nonempty compact sets in \([0,1]^d\), and for \(r>0\) let \({{\mathcal {R}}}_r\) be the subfamily of sets with reach at least r. The latter contains \({{\mathcal {K}}}\), the family of non-empty compact convex subsets of \([0,1]^d\). Crucial for our line of arguments is the fact that all three families are compact in the Hausdorff metric. This statement for \({{\mathcal {K}}}\) is the famous Blaschke selection theorem [35, Theorem 1.8.7]; a proof for \({{\mathcal {C}}}\) and \({{\mathcal {R}}}_r\) can be found in [35, Theorem 1.8.5] and [15, Remark 4.14], respectively. As a reference of convex geometric notions used in this section, we recommend [35].

Importantly, the volume functional is not continuous on \({{\mathcal {C}}}\) (for instance, \([0,1]^d\) can be approximated by finite sets in the Hausdorff metric), but it is continuous on both \({{\mathcal {R}}}_r\) and \({{\mathcal {K}}}\). This can be seen by means of a Steiner-type result stating for \(K\in {{\mathcal {R}}}_r\) that

\[|K_\varepsilon |=\sum _{k=0}^{d}\varepsilon ^{d-k}\kappa _{d-k}V_k(K)\tag{17}\]

holds for \(0\le \varepsilon <r\); see [15]. Here, \(\kappa _{d-k}\) is the volume of the Euclidean unit ball in \({{\mathbb {R}}}^{d-k}\), and \(V_k(K)\in {{\mathbb {R}}}\) is the kth total curvature measure (also called intrinsic volume when applied to convex sets). We use repeatedly that \(K\mapsto V_k(K)\) is continuous on \({{\mathcal {R}}}_r\). These and more results on sets of positive reach can be found in [15]; see also the survey [36], where an outline of the history, newer results and additional references on the matter can be found.
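To illustrate (17) in the simplest convex case (a convex set has infinite reach), take \(K=[0,1]^2\), for which \(V_0(K)=1\), \(V_1(K)=2\) (half the perimeter) and \(V_2(K)=1\), with \(\kappa _0=1\), \(\kappa _1=2\), \(\kappa _2=\pi \); then \(|K_\varepsilon |=1+4\varepsilon +\pi \varepsilon ^2\). The Python sketch below compares this value with a midpoint-grid count of the parallel set; the grid resolution and the tolerance used for checking are arbitrary choices.

```python
import math


def dist_to_unit_square(px, py):
    """Euclidean distance from (px, py) to the closed unit square [0,1]^2."""
    dx = max(0.0, -px, px - 1.0)
    dy = max(0.0, -py, py - 1.0)
    return math.hypot(dx, dy)


def parallel_set_area_grid(eps, m=400):
    """Approximate |K_eps| for K = [0,1]^2 by counting midpoints of a grid
    (about m points per unit length) on [-eps, 1+eps]^2 within distance eps of K."""
    side = 1.0 + 2.0 * eps
    n = math.ceil(side * m)
    h = side / n
    count = 0
    for i in range(n):
        px = -eps + (i + 0.5) * h
        for j in range(n):
            py = -eps + (j + 0.5) * h
            if dist_to_unit_square(px, py) <= eps:
                count += 1
    return count * h * h


def steiner_area_unit_square(eps):
    """Steiner polynomial: eps^2*kappa_2*V_0 + eps*kappa_1*V_1 + V_2."""
    return math.pi * eps**2 + 2.0 * 2.0 * eps + 1.0


for eps in (0.05, 0.2):
    print(eps, parallel_set_area_grid(eps), steiner_area_unit_square(eps))
```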

Proposition 3

Let \(N\ge 1\) and \(r>0\) be fixed. The family \({\mathfrak {P}}_N(r)\) of all equivolume partitions of \([0,1]^d\) consisting of N sets in \({{\mathcal {R}}}_r\) is compact.

The same holds true for the family \({\mathfrak {P}}_N^{\mathrm {conv}}\) of all equivolume partitions of \([0,1]^d\) consisting of N convex sets.

Proof

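Clearly, the family of equivolume partitions of sets in \({{\mathcal {R}}}_r\) is a subset of the Cartesian product \({{\mathcal {R}}}_r^N\); more precisely,

\[{\mathfrak {P}}_N(r)=\Big \{(K_1,\ldots ,K_N)\in {{\mathcal {R}}}_r^N: \bigcup _{i=1}^N K_i=[0,1]^d,\ |K_1|=\cdots =|K_N|=\frac{1}{N}\Big \}.\tag{18}\]

In fact, assume that \((K_1,\ldots ,K_N)\) is an element of the right-hand side of (18). If there was a set \(K_j\) overlapping with \(\bigcup _{i\ne j} K_i\), that is, \(\big |K_j\cap \bigcup _{i\ne j}K_i\big |>0\), we would have

\[1=\Big |\bigcup _{i=1}^N K_i\Big |<\sum _{i=1}^N|K_i|=1,\]

a contradiction.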

From the definitions it is clear that \((K,M)\mapsto K\cup M\) is Lipschitz continuous if the product space is endowed with the maximum of the marginal metrics. Hence, as the volume is continuous on \({{\mathcal {R}}}_r\), the right-hand set in (18) is closed in \({{\mathcal {R}}}_r^N\), being defined by means of continuous functions. As \({{\mathcal {R}}}_r^N\) is compact, \({\mathfrak {P}}_N(r)\) is compact too.

Finally, \({{\mathcal {K}}}^N\) is compact, implying the compactness of \({\mathfrak {P}}_N^{\mathrm {conv}}={\mathfrak {P}}_N(r)\cap {{\mathcal {K}}}^N\). \(\square \)

We now show that the mean discrepancy of a stratified sample is continuous as a function of the partitioning sets. In the following, \(\Delta \) stands for a measure of discrepancy; it can be the \({{\mathcal {L}}}_p\)-discrepancy or any other measure that satisfies the rather weak assumptions below.

Proposition 4

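Fix \(r>0\). If \(\Delta :([0,1]^d)^N\rightarrow {{\mathbb {R}}}\) is measurable and bounded, then \(\phi _\Delta :{{\mathcal {R}}}_r^N\rightarrow {{\mathbb {R}}}\) with

\[\phi _\Delta (K_1,\ldots ,K_N)=\int _{([0,1]^d)^N}\Delta ({\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N)\prod _{i=1}^N 1_{K_i}({\mathbf {x}}_i)\,\mathrm {d}({\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N)\]

is Lipschitz continuous.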

Proof

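We let \(\Vert \cdot \Vert _\infty \) be the \(L^\infty \)-norm of bounded functions on \(([0,1]^d)^N\). We will use the bound

\[\Big |\prod _{i=1}^N t_i-\prod _{i=1}^N t_i'\Big |\le \sum _{i=1}^N|t_i-t_i'|,\tag{19}\]

which holds for all \(t_1,\ldots ,t_N,t_1',\ldots ,t_N'\in \{0,1\}\). Let \(0<\varepsilon <\min \{1,r\}\). If \(d_H(K_i,K_i')\le \varepsilon \) for all \(i=1,\ldots ,N\), the indicators \(\mathrm {1}_{K_i}\) and \(\mathrm {1}_{K_i'}\) coincide on the complement of

\[\big ((K_i)_\varepsilon {\setminus } K_i\big )\cup \big ((K_i')_\varepsilon {\setminus } K_i'\big ).\]

By Steiner’s formula (17), we have

\[\Big |\big ((K_i)_\varepsilon {\setminus } K_i\big )\cup \big ((K_i')_\varepsilon {\setminus } K_i'\big )\Big |\le \sum _{k=0}^{d-1}\varepsilon ^{d-k}\kappa _{d-k}\big (V_k(K_i)+V_k(K_i')\big ).\]

As \(V_k\) is continuous and \({{\mathcal {R}}}_r\) is compact, \(V_k(K_i)+V_k(K_i')\le 2\max _{M\in {{\mathcal {R}}}_r} V_k(M)<\infty \), so, using \(\varepsilon ^{d-k}\le \varepsilon \),

\[\int _{[0,1]^d}\big |\mathrm {1}_{K_i}({\mathbf {x}})-\mathrm {1}_{K_i'}({\mathbf {x}})\big |\,\mathrm {d}{\mathbf {x}}\le c\,\varepsilon \tag{20}\]

for all \(i=1,\ldots ,N\), with a constant c that does not depend on \((K_1,\ldots ,K_N,K_1',\ldots ,K_N')\). Thus, by (19) and (20), we have

\[\big |\phi _\Delta (K_1,\ldots ,K_N)-\phi _\Delta (K_1',\ldots ,K_N')\big |\le \Vert \Delta \Vert _\infty \sum _{i=1}^N\int _{[0,1]^d}\big |\mathrm {1}_{K_i}({\mathbf {x}})-\mathrm {1}_{K_i'}({\mathbf {x}})\big |\,\mathrm {d}{\mathbf {x}}\le Nc\,\Vert \Delta \Vert _\infty \,\varepsilon .\]

This implies the claimed Lipschitz continuity; if \(\max _i d_H(K_i,K_i')\ge \min \{1,r\}\), the trivial bound \(2\Vert \Delta \Vert _\infty \) suffices. \(\square \)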

Proposition 5

Let \(\Delta \) be as in Proposition 4 and fix \(r>0\). For any finite equivolume partition \({\varvec{\Omega }}=(\Omega _1,\ldots ,\Omega _N)\) of \([0,1]^d\) with sets in \({{\mathcal {R}}}_r\), let \({{\mathcal {P}}}_{\varvec{\Omega }}=\{{\mathbf {X}}_1,\ldots ,{\mathbf {X}}_N\}\) be the corresponding stratified sample.

Then \({{\mathbb {E}}}\Delta ({\mathbf {X}}_1,\ldots ,{\mathbf {X}}_N)\) is continuous as a function of \({\varvec{\Omega }}\in {\mathfrak {P}}_N(r)\).

Proof

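As the partitions are equivolume, we have \(|\Omega _i|=1/N\) for all i, so

\[{{\mathbb {E}}}\Delta ({\mathbf {X}}_1,\ldots ,{\mathbf {X}}_N)=N^N\int _{([0,1]^d)^N}\Delta ({\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N)\prod _{i=1}^N 1_{\Omega _i}({\mathbf {x}}_i)\,\mathrm {d}({\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N)=N^N\phi _\Delta ({\varvec{\Omega }}),\]

and the claim follows from Proposition 4. \(\square \)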

Proof of Theorem 2

Assume that \(\Delta :([0,1]^d)^N\rightarrow {{\mathbb {R}}}\) is measurable and bounded. Proposition 5 thus implies that \({{\mathbb {E}}}\Delta ({{\mathcal {P}}}_{{\varvec{\Omega }}})\) is a continuous function of \({\varvec{\Omega }}\in {\mathfrak {P}}_N(r)\), where \({{\mathcal {P}}}_{{\varvec{\Omega }}}\) is the corresponding stratified sample.

As \({\mathfrak {P}}_N(r)\) and its subset \({\mathfrak {P}}_N^{\mathrm {conv}}\) are both compact by Proposition 3, \({{\mathbb {E}}}\Delta ({{\mathcal {P}}}_{\varvec{\Omega }})\) attains minima on either set. This shows the assertion. \(\square \)

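Corollary 1 now follows directly from Theorem 2. In fact, for \(1\le p<\infty \) the function

\[\Delta ({\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N)={{\mathcal {L}}}_p(\{{\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N\})^p\]

is bounded (by 1, as the discrepancy function takes values in \([-1,1]\)). It is also continuous due to a dominated convergence argument. Even simpler, the measurability and boundedness of \(\Delta ({\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N)=D^*(\{{\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N\})\) follow directly from the definition.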

It should be remarked that the above arguments do not rely on the choice of the unit cube as reference set. The results and proofs continue to hold with minor modifications if the set \([0,1]^d\) is replaced by any fixed compact convex set \(K\subset {{\mathbb {R}}}^d\) with interior points.

3.5 Example 2: Convex equivolume partitions into two sets

Recall that \({\mathfrak {P}}_N^{\mathrm {conv}}\) is the family of all convex equivolume partitions of \([0,1]^d\) with N elements. According to Corollary 1, there exists a partition in \({\mathfrak {P}}_N^{\mathrm {conv}}\) that minimizes the mean \({{\mathcal {L}}}_2\)-discrepancy. The following result determines this partition for \(N=2\) and \(d=2\). In this simple case, \({\mathfrak {P}}_N^{\mathrm {conv}}\) can be described with the help of a one-parameter model, which is relatively easy to analyze. The optimal partition \({\varvec{\Omega }}_{*}^{(2)}\) is obtained by cutting \([0,1]^2\) into two congruent triangles by the anti-diagonal; see Fig. 4, right.

Lemma 2

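For \(d=2\) we have

\[\min _{{\varvec{\Omega }}\in {\mathfrak {P}}_2^{\mathrm {conv}}}{{\mathbb {E}}}{{\mathcal {L}}}_2^2({{\mathcal {P}}}_{\varvec{\Omega }})={{\mathbb {E}}}{{\mathcal {L}}}_2^2\big ({{\mathcal {P}}}_{{\varvec{\Omega }}_{*}^{(2)}}\big )=\frac{1}{20}.\]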

Proof

Let \(\Omega _1\) and \(\Omega _2\) be two convex sets that partition \([0,1]^2\) and have the same content. By convexity, the intersection of \(\Omega _1\) and \(\Omega _2\) is contained in a line \(\ell \). The midpoint \(p=(1/2,1/2)\) of \([0,1]^2\) must be contained in one of these sets, so let us assume \(p\in \Omega _1\). As the reflection \(\ell '\) of \(\ell \) at p is parallel to \(\ell \), the reflection \(\Omega _1'\) of \(\Omega _1\) at p must contain \(\Omega _2\). By the equivolume property we have \(|\Omega _2|=|\Omega _1|=|\Omega _1'|\), so \(\Omega _2=\Omega _1'\) up to a Lebesgue-null set. As \(\Omega _2\) and \(\Omega _1'\) are closed and convex we must even have \(\Omega _2=\Omega _1'\), so \(\Omega _2\) is the reflection of \(\Omega _1\) at p and we conclude \(p\in \ell \).

As \({\varvec{\Omega }}_{*}^{(2)}\) and \({{\mathbb {E}}}{{\mathcal {L}}}_2^2({{\mathcal {P}}}_{{\varvec{\Omega }}})\) are unaltered if all sets in the partition are reflected at the main diagonal of \([0,1]^2\), we may assume from now on that \(\ell \) hits the x-axis in a point \(A\in [0,1]\); see Fig. 4 (left). Note that \({\varvec{\Omega }}_{*}^{(2)}\) corresponds to \(A=1\). For fixed A, we further assume that \(\Omega _1\) is the partitioning set that contains the left, vertical edge of the unit square.

To calculate the expected \({{\mathcal {L}}}_2\)-discrepancy, we use the formula

from [29], in which \(f({\mathbf {x}})= | \Omega _1 \cap [0,{\mathbf {x}}] | \), and where we used for the second equality that the integrand vanishes whenever \({\mathbf {x}}\in \Omega _1\).

We distinguish the three cases \(A\in [0,1/2)\), \(A\in (1/2,1]\) and \(A=1/2\). The special case \(A=1/2\) gives the vertical strip partition for \(N=2\) with expected discrepancy \(1/18=0.055\ldots \) according to (16).

Now assume that \(A\in (1/2,1]\). In this case, the separating line \(\ell \) passes through \((A,0)\) and p, and has the equation

\[y=\frac{A-x}{2A-1}.\]

We partition \(\Omega _2\) into the two sets \(\Omega _2^+=\{(x,y)\in [0,1]^2: x\ge A\}\) and \(\Omega _2^-=\Omega _2{\setminus }\Omega _2^+\); see Fig. 4 (middle). For \((x,y)\in \Omega _2^+\) we have

so

whereas for \((x,y)\in \Omega _2^-\) we have

which results in

In total, we obtain for \(A \in (1/2,1]\) by inserting the contributions of both cases into (21) that

which attains its minimum for \(A=1\) with a value of \(1/20=0.05\); see Fig. 5. Note that as \(A\rightarrow 1/2\) this function approaches \(0.055\ldots \).

We can analyse the last case \(A\in [0,1/2)\) in a similar fashion and obtain

which approaches its infimum as \(A\rightarrow 1/2\) with a value of \(0.055\ldots \); see Fig. 5. This proves the assertion. \(\square \)

4 An infinite family and numerical results

4.1 An infinite family and special cases

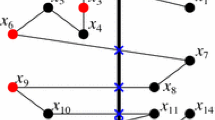

Motivated by the result of the previous section, we define an \((N-1)\)-parameter family of partitions of the unit square generated by parallel lines which are orthogonal to the diagonal of the square. For \(N\in {{\mathbb {N}}}\) and a vector \({\mathbf {v}}=(v_1,\ldots ,v_{N-1})\in [0,\sqrt{2}]^{N-1}\) with \(0<v_1<v_2<\cdots<v_{N-1}<\sqrt{2}\) we define a partition \({\varvec{\Omega }}_{\mathbf {v}}^{(N)}\) as follows. If \(\ell _i\) denotes the line with slope \(-1\) hitting the first closed quadrant and with distance \(v_i\) from the origin, then \([0,1]^2{\setminus } (\ell _{1}\cup \cdots \cup \ell _{{N-1}})\) has N connected components. Their closures are denoted by \(\Omega _1,\ldots ,\Omega _N\), where \(\Omega _i\) is positioned between \(\ell _{{i-1}}\) and \(\ell _i\) if we use the convention \(v_0=0\) and \(v_N=\sqrt{2}\). Examples are illustrated in Fig. 11. We will often use the abbreviation \({\varvec{\Omega }}_{v_1,\ldots ,v_{N-1}}^{(N)}\) for the partition \({\varvec{\Omega }}_{(v_1,\ldots ,v_{N-1})}^{(N)}\).

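An interesting special case is given by the equivolume partitions \({\varvec{\Omega }}_{*}^{(N)}\). They are defined via \({\varvec{\Omega }}_{*}^{(N)}= {\varvec{\Omega }}_{v_1,\ldots ,v_{N-1}}^{(N)}\) with

\[v_i=\sqrt{\frac{i}{N}}\]

for \(1 \le i \le \lfloor N/2 \rfloor \) and

\[v_i=\sqrt{2}-\sqrt{\frac{N-i}{N}}\]

for \(\lfloor N/2 \rfloor +1 \le i \le N-1\). Indeed, for \(0\le v\le 1/\sqrt{2}\) the part of the unit square below the line with slope \(-1\) at distance v from the origin has area \(v^2\), and by symmetry the part above the line at distance \(\sqrt{2}-v\) has the same area.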

As a side remark, we also mention the partition generated by a set of equidistant points, i.e. \(v_i = \sqrt{2} i/N\) for a given \(N>1\). This is a simple example of a family of partitions that is not equivolume for any \(N\ge 3\) (for \(N=2\) it coincides with \({\varvec{\Omega }}_{*}^{(2)}\)). However, by pairing complementary sets such that the union has volume 2/N, it is possible to obtain equivolume partitions for every even N with N/2 points into non-connected sets.
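The cut distances of \({\varvec{\Omega }}_{*}^{(N)}\) and the cell areas of the whole family can be computed by elementary inclusion-exclusion: the line with slope \(-1\) at distance v from the origin is \(x+y=\sqrt{2}v\), and the part of \([0,1]^2\) below it has area \(F(s)-2F(s-1)+F(s-2)\) with \(s=\sqrt{2}v\) and \(F(t)=\max \{0,t\}^2/2\). The short Python sketch below (helper names hypothetical) checks that \({\varvec{\Omega }}_{*}^{(N)}\) is equivolume, and that the equidistant choice is equivolume for \(N=2\) but not for \(N\ge 3\).

```python
import math


def area_below(s):
    """Area of {(x, y) in [0,1]^2 : x + y <= s}, by inclusion-exclusion."""
    F = lambda t: max(0.0, t) ** 2 / 2.0
    return F(s) - 2.0 * F(s - 1.0) + F(s - 2.0)


def cell_areas(v):
    """Areas of Omega_1, ..., Omega_N for cut distances v_1 < ... < v_{N-1};
    the line at distance v from the origin is x + y = sqrt(2) * v."""
    s = [0.0] + [math.sqrt(2.0) * vi for vi in v] + [2.0]
    return [area_below(b) - area_below(a) for a, b in zip(s, s[1:])]


def v_star(N):
    """Cut distances of the equivolume partition Omega_*^(N)."""
    return [math.sqrt(i / N) if i <= N // 2 else math.sqrt(2.0) - math.sqrt((N - i) / N)
            for i in range(1, N)]


def v_equidistant(N):
    """Equidistant cut distances v_i = sqrt(2) * i / N."""
    return [math.sqrt(2.0) * i / N for i in range(1, N)]


print(cell_areas(v_star(5)))         # five areas, each close to 0.2
print(cell_areas(v_equidistant(3)))  # close to [2/9, 5/9, 2/9]
```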

4.2 Example 3: Relaxing the volume constraint I

We have seen in Example 2 that \({\varvec{\Omega }}_{*}^{(2)}\) gives the lowest expected discrepancy among all convex equivolume partitions into two sets. We will now show that this partition can be improved if the equivolume condition is dropped by shifting the line along the diagonal, that is, by considering partitions in the class \({\varvec{\Omega }}_{v}^{(2)}\) with \(v\in [0,\sqrt{2} ]\); see Fig. 6.

Lemma 3

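We have

\[\min _{v\in [0,\sqrt{2}]}{{\mathbb {E}}}{{\mathcal {L}}}_2^2\big ({{\mathcal {P}}}_{{\varvec{\Omega }}_{v}^{(2)}}\big )={{\mathbb {E}}}{{\mathcal {L}}}_2^2\big ({{\mathcal {P}}}_{{\varvec{\Omega }}_{v^{*}}^{(2)}}\big )=0.04904\ldots \]

for \(v^{*}= 0.793398\ldots \).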

A comparison with Lemma 2 shows that \({\varvec{\Omega }}_{v^{*}}^{(2)}\) has a smaller mean \({{\mathcal {L}}}_2\)-discrepancy than any convex equivolume partition when \(N=2\).

Proof

For a given point \(v\in [0,\sqrt{2}]\) we denote the corresponding intersection of the line with the boundary of the square with (A, 1) if \(v \in [\sqrt{2}/2,\sqrt{2}]\) and with (B, 0) for \(v \in [0,\sqrt{2}/2]\). If \(v \in [\sqrt{2}/2,\sqrt{2}]\), then \(A=\sqrt{2}v-1\). The two partitioning sets have volumes \(1-\frac{(1-A)^2}{2}\) and \(\frac{(1-A)^2}{2}\) and points on the separating line satisfy \(y=-x+1+A\). In total, we obtain for \(A \in [0,1]\) and in a similar fashion as in the Proof of Lemma 2 that

This function attains its minimum for \(A=0.122034\) with value \(0.04904\ldots \); see Fig. 5.

Next, if \(v \in [0,\sqrt{2}/2]\), then \(B=\sqrt{2}v\). The two partitioning sets have volumes \(B^2/2\) and \(1-B^2/2\) and points on the separating line satisfy \(y=-x+B\). Similar to the previous considerations we split the integral into subintegrals and distinguish the different cases on which the intersections can be described with the same function. We obtain

for \(B \in [0,1]\), which attains its minimum for \(B=1\) with value 0.05; i.e. the minimum is attained for the anti-diagonal; see Fig. 5. This concludes the proof. \(\square \)

Left: One-parameter model of partitions used in Lemma 3. Right: The partition of this family with the smallest expected discrepancy

4.3 Example 4: Relaxing the volume constraint II

In this section we extend the results of Examples 2 and 3 to the case \(N=3\). The partitions can still be analysed explicitly and it turns out that there is a unique partition that minimises the expected discrepancy; see Fig. 10. However, the full analysis consists of a tedious case-by-case study and, hence, we do not fully outline the proof of this assertion in the following, but report the most interesting cases only. We leave the analysis of the remaining cases – which we carried out and which follows along the same lines as our proof – to the interested reader.

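In general, let \(0< v_1< v_2 < \sqrt{2}\) and denote the three sets of the partition by \(\Omega _1, \Omega _2\) and \(\Omega _3\), as described in Sect. 4.1. Associated to the three sets, there are three indicator functions \(\chi _1, \chi _2\) and \(\chi _3\) with

\[\chi _j(x,y)=1_{[0,x]\times [0,y]}({\mathbf {X}}_j),\qquad j=1,2,3,\]

where \(\mathbf {X}_j\) is the random point in \(\Omega _j\). Setting

\[\#(x,y)=\chi _1(x,y)+\chi _2(x,y)+\chi _3(x,y),\]

we get for the expected \({{\mathcal {L}}}_2\)-discrepancy

\[{{\mathbb {E}}}{{\mathcal {L}}}_2^2({{\mathcal {P}}}_{\varvec{\Omega }})=\int _0^1\int _0^1\Big (\frac{1}{9}{{\mathbb {E}}}\big (\#(x,y)^2\big )-\frac{2xy}{3}{{\mathbb {E}}}\big (\#(x,y)\big )+x^2y^2\Big )\,\mathrm {d}x\,\mathrm {d}y,\]

and since \(\#(x,y)\) has a Poisson-binomial distribution, we have

\[{{\mathbb {E}}}\big (\#(x,y)\big )=\sum _{j=1}^3 q_j(x,y),\qquad {{\mathbb {E}}}\big (\#(x,y)^2\big )=\sum _{j=1}^3 q_j(x,y)\big (1-q_j(x,y)\big )+\Big (\sum _{j=1}^3 q_j(x,y)\Big )^2,\]

in which, as in (8),

\[q_j(x,y)={{\mathbb {P}}}\big ({\mathbf {X}}_j\in [0,x]\times [0,y]\big )=\frac{|\Omega _j\cap ([0,x]\times [0,y])|}{|\Omega _j|}.\]

The analysis now proceeds via two levels of case distinctions. The first level concerns the actual partitions into three sets that we are considering; see Fig. 7. Within each of the four cases, we partition the unit square, depending on \(v_1\) and \(v_2\), into sets on which the probabilities \(q_j\) have the same closed form in x, y, thus providing closed formulas for \({{\mathbb {E}}}(\#(x,y))\) and \({{\mathbb {E}}}(\#(x,y)^2)\); see Fig. 8. Next, we compute the expected discrepancy, which is an integral over the unit square, as a sum of integrals over the different closed-form expressions according to this subdivision.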

In the following, we first focus on the third case in Fig. 7. In this case we have \(0< v_1< 1/\sqrt{2}< v_2 < \sqrt{2}\) with \(v_2 \in [2v_1, \sqrt{2}]\) and \(A= v_1\sqrt{2}\), \(B= v_2\sqrt{2}-1\) such that for given A we have that \(2A-1 \le B \le 1\). It turns out that we can calculate the expected discrepancy as a rational function of A and B, which has a unique minimum. For simplicity we restrict considerations to \(1/2\le A \le 1\) in Lemma 4.

Lemma 4

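Let \(1/(2 \sqrt{2}) \le v_1 < 1/\sqrt{2}\) and \(2v_1 \le v_2 \le \sqrt{2}-\frac{\sqrt{2}-2v_1}{2}\). Let \({\varvec{\Omega }}_{v_1,v_2}^{(3)}\) be the corresponding partition of the unit square into three sets. Then, with the minimum taken over all \((v_1,v_2)\) in this range,

\[\min {{\mathbb {E}}}{{\mathcal {L}}}_2^2\big ({{\mathcal {P}}}_{{\varvec{\Omega }}_{v_1,v_2}^{(3)}}\big )={{\mathbb {E}}}{{\mathcal {L}}}_2^2\big ({{\mathcal {P}}}_{{\varvec{\Omega }}_{v_1^*,v_2^*}^{(3)}}\big )=0.02678\ldots \]

for \(v_1^{*} = 0.5130\ldots \) and \(v_2^{*} = 1.1249\ldots \)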

Proof

We set \(A= v_1\sqrt{2}\), \(B= v_2\sqrt{2}-1\) such that for given \(1/2 \le A \le 1\) we have \(2A-1 \le B \le A\).

We get \(|\Omega _1|= A^2/2\), \(|\Omega _3| = (1-B)^2/2\) and \(|\Omega _2| = 1-|\Omega _1| - |\Omega _3|\). To calculate the expected discrepancy we subdivide the unit square into 6 sets, \(S_1, \ldots , S_{6}\), as illustrated in Fig. 8 and we use the symmetry along the diagonal in cases II-VI. For a point \((x,y) \in S_i\) we denote the expected discrepancy function by \(f_i(x,y)\) and we calculate the expected discrepancy for the whole partition as

For illustration, we give the calculations for the first case and refer to Appendix B for the other (equally elementary, but much more technical) cases.

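Case I Let \((x,y) \in \Omega _1=S_1\). Then \(q_2(x,y)=q_3(x,y)=0\) and \(q_1(x,y)=2xy/A^2\), so that \({{\mathbb {E}}}(\#(x,y)^2)={{\mathbb {E}}}(\#(x,y))=q_1(x,y)\). Hence, we get

\[f_1(x,y)=\frac{q_1(x,y)}{9}-\frac{2xy}{3}\,q_1(x,y)+x^2y^2=\frac{2xy}{9A^2}-\frac{4x^2y^2}{3A^2}+x^2y^2\]

and

\[\int _{S_1}f_1(x,y)\,\mathrm {d}(x,y)=\frac{A^2}{108}-\frac{A^4}{135}+\frac{A^6}{180}.\]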

Cases II - VI With similar calculations as in Case I we can obtain expressions \(f_2, \ldots , f_6\) for the expected value of the discrepancy function for points (x, y) in each of the sets, depending on the parameters A and B; see Appendix B for details. Integrating these functions over their respective domains and summing the values gives us the following rational function in A and B, which we can minimize over \(1/2\le A \le 1\) and \(2A-1 \le B \le A\) in order to obtain the two parameters A and B that generate the partition with the smallest expected discrepancy in this family:

This function can be minimised using a standard computer algebra system. The minimum of this function is 0.0267804 for the parameter values \(A= 0.725501\) and \(B= 0.590843\). These parameter values correspond to \(v_1 = 0.5130\ldots \) and \(v_2 = 1.1249\ldots \). \(\square \)

In a similar fashion we can analyse the second case in Fig. 7.

Lemma 5

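Let \(1/(2 \sqrt{2}) \le v_1 < 1/\sqrt{2}\) and \(\frac{1}{\sqrt{2}} \le v_2 \le 2 v_1\). Let \({\varvec{\Omega }}_{v_1,v_2}^{(3)}\) be the corresponding partition of the unit square into three sets. Then, with the minimum taken over all \((v_1,v_2)\) in this range,

\[\min {{\mathbb {E}}}{{\mathcal {L}}}_2^2\big ({{\mathcal {P}}}_{{\varvec{\Omega }}_{v_1,v_2}^{(3)}}\big )={{\mathbb {E}}}{{\mathcal {L}}}_2^2\big ({{\mathcal {P}}}_{{\varvec{\Omega }}_{v_1^*,v_2^*}^{(3)}}\big )=0.02680\ldots \]

for \(v_1^{*} = 0.5329\ldots \) and \(v_2^{*} = 1.06582\ldots \).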

Proof

The proof follows the same lines as in Lemma 4; the only difference is the subdivision of the unit square as well as the range of B. We set again \(A=v_1 \sqrt{2}\), \(B=v_2 \sqrt{2}-1\) such that for given \(1/2 \le A \le 1\) we have \(0 \le B \le 2A-1\).

We get \(|\Omega _1|= A^2/2\), \(|\Omega _3| = (1-B)^2/2\) and \(|\Omega _2| = 1-|\Omega _1| - |\Omega _3|\). To calculate the expected discrepancy we subdivide the unit square into 6 sets, \(S_1, \ldots , S_{6}\), as illustrated in Fig. 8 and we use again the symmetry along the diagonal in cases II-VI. For a point \((x,y) \in S_i\) we denote the expected discrepancy function by \(f_i(x,y)\) and we calculate the expected discrepancy for the whole partition as

As before, explicit expressions for \(f_i\) on \(S_i\), \(i=1,\ldots ,6\), can be obtained. They depend on the parameters A and B. Integrating these functions over their respective domains and summing the values gives us again a rational function in A and B, which we can minimize over \(1/2\le A \le 1\) and \(0 \le B \le 2A-1\) in order to obtain the two parameters A and B that generate the partition with the smallest expected discrepancy in this family. More explicitly, we have

Using again a computer algebra system we obtain that the minimum of this function is 0.0268054 for the parameter values \(A= 0.753647\) and \(B= 0.507294\). These parameter values correspond to \(v_1 = 0.5329\ldots \) and \(v_2 = 1.06582\ldots \). \(\square \)

The subdivisions for the two middle cases in Fig. 7

The two lemmas provide two interesting insights. Firstly, we can now easily analyse the equivolume partition within this family.

Corollary 3

Let \( v_1 = \sqrt{\frac{1}{3}}\) and \(v_2 = \sqrt{2} - \sqrt{\frac{1}{3}} \). Then the mean \({{\mathcal {L}}}_2\)-discrepancy of \({\varvec{\Omega }}_{*}^{(3)}= {\varvec{\Omega }}_{v_1,v_{2}}^{(3)}\) is

Proof

We have that \( A= \frac{\sqrt{2}}{\sqrt{3}} =0.816\ldots \) and \(B= 1 - \frac{\sqrt{2}}{\sqrt{3}} = 0.183\ldots \). Hence, we see that this case satisfies the assumptions of Lemma 5. Using (23) we obtain the value. \(\square \)

Secondly, combining the results of the two lemmas, we can now fix a parameter A in [1/2, 1] and analyse all partitions for this fixed A and any parameter B in [0, A]. As it turns out, if we fix A and plot the expected discrepancy as a function of the parameter B, then this function is very well behaved and has one unique minimum; see Fig. 9.

Remark 1

It is interesting to note that the minimal parameters in Lemma 4 are not at the boundary of the two cases; i.e. for \(A= 0.72550\) we have that \(2A-1 = 0.451003< 0.590843 = B < 0.72550 = A\). The expected discrepancy for the partition generated by \((A,2A-1)\) is

and is thus only slightly larger. Furthermore, it turns out that the minimal parameters in Lemma 5 are exactly at the boundary; i.e. for \(A= 0.753647\), we have that \(2A-1=B= 0.507294\), whereas the minimum for this A is obtained for \(B^*=0.516474\) and the expected discrepancy is

and is thus only slightly smaller.

Interestingly, the minimum for a given A can be obtained in either of the two cases analysed in Lemmas 4 and 5 as can be seen from the examples. As a rule of thumb, the global minimum within this family is obtained for parameters A and B in which the minimum for fixed A lies almost at the interval boundary, i.e. for which \(B_{\min } \approx 2A-1\).

This observation relates to Question 2 of Sect. 2.5. It illustrates that the equivolume property appears to have no particular significance within this simple family of partitions. It rather seems that other geometric reasons drive the minimisation.

4.4 An algorithmic approach

In order to run systematic experiments within this family of partitions, we implemented an algorithm that takes as input an arbitrary vector \({\mathbf {v}}=(v_1, \ldots , v_{N-1})\) with increasing entries in \([0,\sqrt{2}]\) as well as a point \((x,y) \in [0,1]^2\), and outputs the expected value of the squared discrepancy function at (x, y), that is, for the box \([0,x] \times [0,y]\), of the set of N points generated from the partition \({\varvec{\Omega }}_{\mathbf {v}}^{(N)}\). Integrating this quantity numerically, using standard results from the theory of quasi-Monte Carlo integration, yields an approximation of the expected value of the squared \({{\mathcal {L}}}_2\)-discrepancy of \({{\mathcal {P}}}_{{\varvec{\Omega }}_{\mathbf {v}}^{(N)}}\).

The algorithm is based on a simple geometric consideration. By symmetry, we may assume \(0 \le y\le x \le 1\). As we have seen, we need to determine the probability

\[q_i(x,y)={{\mathbb {P}}}\big ({\mathbf {X}}_i\in [0,x]\times [0,y]\big )=\frac{|\Omega _i\cap ([0,x]\times [0,y])|}{|\Omega _i|}\]

with which the point \({\mathbf {X}}_i \in \Omega _i\) lies in the box \([0,x] \times [0,y]\) for \(1\le i \le N\). The expectation is then obtained from Proposition 2 or, for partitions that are not equivolume, from the Poisson-binomial formulas of Sect. 4.3. Hence, we first need to calculate the respective areas of the sets \(\Omega _i\). To calculate their intersections with \([0,x] \times [0,y]\), we divide the set \(\{v_1,\ldots ,v_{N-1}\}\) into four subsets depending on which of the vertices of \([0,x] \times [0,y]\) lie on the left or on the right of \(\ell _1,\ldots ,\ell _{N-1}\), respectively. More precisely, the four lines with slope \(-1\) through the vertices of \([0,x] \times [0,y]\) have distances \(0=u_0\le u_1\le u_2\le u_3\le \sqrt{2}\) from the origin. The jth subset then consists of all \(v_i\)’s between \(u_{j-1}\) and \(u_j\) for \(j=1,\ldots ,4\), where we have put \(u_4=\sqrt{2}\); see Fig. 11 (left). Different formulae are used to compute the intersection in each case.

This elementary algorithm leads to two conclusions. On the one hand, it is rather straightforward to calculate this expected value for a given box \([0,x] \times [0,y]\). On the other hand, doing so by hand is very tedious. While the calculation is easily automated, there is little hope of computing the expected discrepancy analytically, since each box, determined by its corner (x, y), gives rise to a different set of success probabilities.
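The box probabilities do not have to be computed via the four-subset case distinction. The Python sketch below is an alternative we substitute for illustration (it is not the authors' implementation): it obtains \(|\{(u,w)\in [0,x]\times [0,y]: u+w\le s\}|\) by inclusion-exclusion, uses the Poisson-binomial moments to get \({{\mathbb {E}}}(\#/N-xy)^2\) (so it also covers partitions that are not equivolume), and replaces the quasi-Monte Carlo rule by a plain midpoint rule on an \(m\times m\) grid. All names and the grid size are our own choices.

```python
import math


def _F(t):
    return max(0.0, t) ** 2 / 2.0


def box_area_below(s, x, y):
    """Area of {(u, w) in [0,x] x [0,y] : u + w <= s}, by inclusion-exclusion."""
    return _F(s) - _F(s - x) - _F(s - y) + _F(s - x - y)


def success_probabilities(v, x, y):
    """q_i(x, y) = |Omega_i ∩ [0,x]x[0,y]| / |Omega_i| for the diagonal family."""
    s = [0.0] + [math.sqrt(2.0) * vi for vi in v] + [2.0]
    q = []
    for a, b in zip(s, s[1:]):
        cell = box_area_below(b, 1.0, 1.0) - box_area_below(a, 1.0, 1.0)
        inside = box_area_below(b, x, y) - box_area_below(a, x, y)
        q.append(inside / cell)
    return q


def expected_sq_discrepancy_function(v, x, y):
    """E(#/N - xy)^2, where # is the Poisson-binomial number of points in the box."""
    q = success_probabilities(v, x, y)
    N = len(q)
    mean = sum(q)
    second_moment = sum(qi * (1.0 - qi) for qi in q) + mean**2
    return second_moment / N**2 - 2.0 * x * y * mean / N + (x * y) ** 2


def expected_l2_squared(v, m=200):
    """Midpoint-rule approximation of E L_2^2 on an m x m grid."""
    h = 1.0 / m
    total = 0.0
    for i in range(m):
        for j in range(m):
            total += expected_sq_discrepancy_function(v, (i + 0.5) * h, (j + 0.5) * h)
    return total * h * h


print(expected_l2_squared([1 / math.sqrt(2)]))  # anti-diagonal, N = 2
print(expected_l2_squared([0.793398]))          # v* of Lemma 3
```

With an empty vector (a single uniform point) the sketch returns approximately 5/36, the Monte Carlo value for N = 1; for the anti-diagonal it should return approximately 1/20 as in Lemma 2, and for the \(v^*\) of Lemma 3 a slightly smaller value.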

Left: Illustration of the algorithm. The three bullet points indicate the projections of the vertices of \([0,x] \times [0,y]\) on the main diagonal giving rise to the numbers \(u_1,u_2,u_3\) and the division of \(\{v_1, \ldots , v_{N-1}\}\) into four subsets. Middle: Illustration of the equidistant partition \({\varvec{\Omega }}^{(6)}_{\mathbf {v}}\) with \(\mathbf {v}= \frac{\sqrt{2}}{6} (1,\ldots ,5)\). Right: Illustration of the equivolume partition \({\varvec{\Omega }}^{(6)}_*\)

4.5 Numerical results

In this final section, we present the results of three different sets of experiments. In the first two experiments we generate many instances of stratified point sets for a given fixed partition, calculate the \({{\mathcal {L}}}_2\)-discrepancy of each point set and approximate the expected discrepancy of the partition by the mean of the experiment. In the final experiment, we calculate and compare the star discrepancy of different point sets.

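We use Warnock’s formula [39] as presented in [8, Proposition 2.15] to calculate the \({{\mathcal {L}}}_2\)-discrepancy of a given point set. That is, for any point set \({{\mathcal {P}}}=\{\mathbf {x}_0, \ldots , \mathbf {x}_{N-1} \} \subset [0,1]^d\) we have

\[{{\mathcal {L}}}_2({{\mathcal {P}}})^2=\frac{1}{3^d}-\frac{2^{1-d}}{N}\sum _{n=0}^{N-1}\prod _{i=1}^d\big (1-x_{n,i}^2\big )+\frac{1}{N^2}\sum _{m,n=0}^{N-1}\prod _{i=1}^d\big (1-\max \{x_{m,i},x_{n,i}\}\big ),\]

in which \(x_{n,i}\) is the ith component of \(\mathbf {x}_n\). We refer to [16, 20] for quick implementations of this formula.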
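Warnock’s formula translates directly into an \(O(dN^2)\) routine. A minimal Python sketch (function name hypothetical) follows; for the single point 1/2 in dimension one it gives 1/12, and for the point (1/2, 1/2) it gives 23/288, both of which can be confirmed by integrating the squared discrepancy function by hand.

```python
import math


def l2_discrepancy_squared(points):
    """Squared L2 star discrepancy of points in [0,1]^d via Warnock's formula."""
    N, d = len(points), len(points[0])
    first = 3.0 ** (-d)
    second = 2.0 ** (1 - d) / N * sum(math.prod(1.0 - c * c for c in p) for p in points)
    third = sum(
        math.prod(1.0 - max(a, b) for a, b in zip(p, r))
        for p in points
        for r in points
    ) / N**2
    return first - second + third


print(l2_discrepancy_squared([(0.5,)]))      # approximately 1/12
print(l2_discrepancy_squared([(0.5, 0.5)]))  # approximately 23/288
```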

First, we present a numerical observation which could, in principle, be proven along the same lines as Lemma 4. However, given the number of case distinctions such an analysis – based on our elementary method – would require, we only provide numerical evidence and state the result as a conjecture.

Conjecture 1

There exists \({\mathbf {v}}=(v_1,v_2,v_3)\) with \(v_1< v_2< v_3\) in \([0,\sqrt{2}]\) such that

We obtained various instances of partitions that seem to improve the classical jittered sampling by perturbing the three values of the vector \((\sqrt{2}/4)(1,2,3)\). We obtained the best numerical results for the three points

see Fig. 10. We simulated \(10^6\) instances of stratified sets for this particular partition and calculated the discrepancy in each case with Warnock’s formula. Independently, we used our algorithm to estimate the expected discrepancy using \(10^4\) grid points. Both methods indicate that the first decimal digits of the expected discrepancy are

which would be clearly better than the mean discrepancy of jittered sampling.

Next, we use Warnock’s formula to empirically study the discrepancy of different point sets and constructions; i.e. for given N we generate 500 samples and calculate the \({\mathcal {L}}_2\)-discrepancy for each of these samples using Warnock’s formula. The empirical mean of this sample approximates the expected value of the discrepancy. We collect our numerical results in Table 1.
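The experiment just described can be sketched as follows. This is an illustrative Python reimplementation under our own assumptions, not the authors' code: it draws one uniform point in each cell of \({\varvec{\Omega }}_{*}^{(N)}\) by rejection sampling from a bounding box, evaluates Warnock’s formula for each sample, and averages over 500 samples; N = 16 and the seed are arbitrary. For comparison, the exact Monte Carlo mean \(5/(36N)\) from Example 1 is printed as well.

```python
import math
import random


def l2_sq(points):
    """Squared L2 star discrepancy via Warnock's formula."""
    N, d = len(points), len(points[0])
    t2 = 2.0 ** (1 - d) / N * sum(math.prod(1 - c * c for c in p) for p in points)
    t3 = sum(math.prod(1 - max(a, b) for a, b in zip(p, r))
             for p in points for r in points) / N**2
    return 3.0 ** (-d) - t2 + t3


def v_star(N):
    """Cut distances of the equivolume diagonal partition Omega_*^(N)."""
    return [math.sqrt(i / N) if i <= N // 2 else math.sqrt(2) - math.sqrt((N - i) / N)
            for i in range(1, N)]


def stratified_sample(v, rng):
    """One uniform point in each cell {s_{i-1} <= x + y <= s_i}, s_i = sqrt(2) v_i,
    by rejection from the bounding box [max(0, s_{i-1} - 1), min(1, s_i)]^2."""
    s = [0.0] + [math.sqrt(2) * vi for vi in v] + [2.0]
    points = []
    for a, b in zip(s, s[1:]):
        lo, hi = max(0.0, a - 1.0), min(1.0, b)
        while True:
            x, y = rng.uniform(lo, hi), rng.uniform(lo, hi)
            if a <= x + y <= b:
                points.append((x, y))
                break
    return points


def empirical_means(N, samples=500, seed=1):
    """Average L2^2 over i.i.d. uniform sets and over stratified sets."""
    rng = random.Random(seed)
    mc = sum(l2_sq([(rng.random(), rng.random()) for _ in range(N)])
             for _ in range(samples)) / samples
    st = sum(l2_sq(stratified_sample(v_star(N), rng)) for _ in range(samples)) / samples
    return mc, st


mc_mean, strat_mean = empirical_means(16)
print(f"Monte Carlo:  {mc_mean:.5f}  (exact mean {5 / (36 * 16):.5f})")
print(f"Omega_*^(16): {strat_mean:.5f}")
```

Rejection from the bounding box keeps the sampler exact (uniform on each cell) at the cost of a few rejected draws for the wide middle cells.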

Our numerical results suggest that the expected discrepancy of partitions \({\varvec{\Omega }}_{*}^{(N)}\) is about a factor 2 smaller than the expected discrepancy of a set of random points:

Conjecture 2

We conjecture that

In our final experiment, we use an implementation of the Dobkin-Eppstein-Mitchell algorithm [6] for the computation of the star discrepancy, which was provided by Magnus Wahlström; for details on the implementation we refer to [12]. This experiment relates to our comments in Sect. 1.2 and suggests that our partitions also generate point sets with a smaller expected star discrepancy than sets of N i.i.d. uniformly random points. We leave the generalisation of the partitions \({\varvec{\Omega }}_{*}^{(N)}\) to future research; it is in principle straightforward, but somewhat technical and thus beyond the scope of this final proof-of-concept numerical experiment.
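For small point sets in the unit square, the star discrepancy can also be computed exactly by brute force, which is convenient for spot checks. The Python sketch below is a simple \(O(N^3)\) stand-in, not the Dobkin-Eppstein-Mitchell algorithm used in the experiment: it evaluates the local discrepancy on the grid formed by the point coordinates together with 1, using closed boxes for an excess of points and open boxes for a deficit. It assumes that all coordinates lie in [0, 1).

```python
from itertools import product


def star_discrepancy_2d(points):
    """Exact star discrepancy of a small point set in [0,1)^2, brute force O(N^3)."""
    N = len(points)
    xs = sorted({p[0] for p in points} | {1.0})
    ys = sorted({p[1] for p in points} | {1.0})
    worst = 0.0
    for a, b in product(xs, ys):
        volume = a * b
        # excess of points: limit of boxes [0, x[ with x decreasing to (a, b)
        closed = sum(1 for x, y in points if x <= a and y <= b)
        # deficit of points: the half-open box [0, a[ x [0, b[ itself
        open_ = sum(1 for x, y in points if x < a and y < b)
        worst = max(worst, closed / N - volume, volume - open_ / N)
    return worst


print(star_discrepancy_2d([(0.5, 0.5)]))  # 0.75
```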

References

Aistleitner, C.: Covering numbers, dyadic chaining and discrepancy. J. Complexity 27(6), 531–540 (2011)

Chen, W.W.L., Skriganov, M.M.: Explicit constructions in the classical mean squares problem in irregularity of point distribution. J. Reine Angew. Math. 545, 67–95 (2002)

Cranley, R., Patterson, T.N.L.: Randomization of number theoretic methods for multiple integration. SIAM J. Numer. Anal. 13(6), 904–914 (1976)

Chersi, F., Volčič, A.: \(\lambda \)-equidistributed sequences of partitions and a theorem of the De Bruijn-Post type. Ann. Mat. Pura Appl. 162, 23–32 (1992)

Davenport, H.: Note on irregularities of distribution. Mathematika 3, 131–135 (1956)

Dobkin, D.P., Eppstein, D., Mitchell, D.P.: Computing the Discrepancy with Applications to Supersampling Patterns. ACM Trans. Graph. (TOG) 15(4), 354–376 (1996)

Diaconis, P.: The distribution of leading digits and uniform distribution mod 1. Ann. Probab. 5(1), 72–81 (1977)

Dick, J., Pillichshammer, F.: Digital Nets and Sequences. Cambridge University Press, Cambridge, England (2010)

Dick, J., Pillichshammer, F.: Explicit constructions of point sets and sequences with low discrepancy. In: Kritzer, P. et al. (eds.) Uniform Distribution and Quasi-Monte Carlo Methods: Discrepancy, Integration and Applications. Radon Series on Computational and Applied Mathematics, vol. 15, pp. 63–86 (2014)

Dick, J., Pillichshammer, F.: Optimal \({{\mathcal {L}}}_2\)-discrepancy bounds for higher order digital sequences over the finite field \({\mathbb {F}}_2\). Acta Arith. 162(1), 65–99 (2014)

Doerr, B.: A lower bound for the discrepancy of a random point set. J. Complexity 30(1), 16–20 (2014)

Doerr, C., Gnewuch, M., Wahlström, M.: Calculation of discrepancy measures and applications. In: Chen, W. et al. (eds.) A Panorama of Discrepancy Theory. Lecture Notes in Mathematics, vol. 2107, pp. 621–678. Springer (2014)

L’Ecuyer, P., Lemieux, C.: Variance reduction via lattice rules. Manage. Sci. 46(9), 1214–1235 (2000)

L’Ecuyer, P.: Randomized quasi-Monte Carlo: an introduction for practitioners. In: Owen, A.B. et al. (eds.) Monte Carlo and Quasi-Monte Carlo Methods, MCQMC 2016, Proceedings of the 12th International Conference on Monte Carlo and Quasi-Monte Carlo Methods in Scientific Computing, Stanford, CA, August 14–19, 2016. Springer Proceedings in Mathematics & Statistics, vol. 241, pp. 29–52 (2018)

Federer, H.: Curvature measures. Trans. Am. Math. Soc. 93, 418–491 (1959)

Frank, K., Heinrich, S.: Computing discrepancies of Smolyak quadrature rules. J. Complexity 12, 287–314 (1996)

Gnewuch, M.: Bracketing numbers for axis-parallel boxes and applications to geometric discrepancy. J. Complexity 24(2), 154–172 (2008)

Gnewuch, M., Pasing, H., Weiß, C.: A Generalized Faulhaber Inequality, Improved Bracketing Covers, and Applications to Discrepancy, arXiv:2010.11479

Haber, S.: A modified Monte Carlo quadrature. Math. Comput. 19, 361–368 (1966)

Heinrich, S.: Efficient algorithms for computing the L2 discrepancy. Math. Comput. 65, 1621–1633 (1996)

Heinrich, S., Novak, E., Wasilkowski, G., Woźniakowski, H.: The inverse of the star-discrepancy depends linearly on the dimension. Acta Arith. 96(3), 279–302 (2001)

Hoeffding, W.: On the distribution of the number of successes in independent trials. Ann. Math. Statist. 27, 713–721 (1956)

Hu, T.-C., Taylor, R.L.: On the strong law for arrays and for the bootstrap mean and variance. Int. J. Math. Math. Sci. 20, 375–382 (1997)

Kakutani, S.: A problem on equidistribution on the unit interval [0,1]. Measure Theory, Oberwolfach 1975, vol. 541, pp. 369–375. Springer LNM (1976)

Klenke, A.: Probability Theory - A Comprehensive Course, 2nd edn. Springer, Heidelberg (2013)

Matoušek, J.: Geometric Discrepancy. An Illustrated Guide. Algorithms and Combinatorics, vol. 18. Springer, Berlin, Germany (1999)

Niederreiter, H., Tichy, R.F., Turnwald, G.: An inequality for differences of distribution functions. Arch. Math. 54, 166–172 (1990)

Pausinger, F., Steinerberger, S.: On the discrepancy of jittered sampling. J. Complexity 33, 199–216 (2016)

Pausinger, F., Rachh, M., Steinerberger, S.: Optimal jittered sampling for two points in the unit square. Statist. Probab. Lett. 132, 55–61 (2018)

Owen, A.B.: Monte Carlo variance of scrambled equidistribution quadrature. SIAM J. Numer. Anal. 34(5), 1884–1910 (1997)

Owen, A.B.: Scrambled net variance for integrals of smooth functions. Ann. Stat. 25(4), 1541–1562 (1997)

Owen, A.B.: Variance with alternative scramblings of digital nets. ACM Trans. Model Comput. Simul. 13(4), 363–378 (2003)

Pyke, R., van Zwet, W.R.: Weak convergence results for the Kakutani interval splitting procedure. Ann. Probab. 32(1A), 380–423 (2004)

Roth, K.F.: On irregularities of distribution. Mathematika 1, 73–79 (1954)

Schneider, R.: Convex Bodies: The Brunn–Minkowski Theory, 2nd edn. Cambridge University Press, Cambridge (2004)

Thäle, Ch.: 50 years sets with positive reach – a survey. Surv. Math. Appl. 3, 123–165 (2008)

Thompson, S.K.: Sampling, 3rd edn. Wiley Series in Probability and Statistics. Wiley, New York (2012)

Volčič, A.: A generalization of Kakutani’s splitting procedure. Ann. Mat. Pura Appl. 190(1), 45–54 (2011)

Warnock, T.T.: Computational investigations of low discrepancy point sets. In: Zaremba, S.K. (ed.) Applications of Number Theory to Numerical Analysis, pp. 319–343. Academic Press, Singapore (1972)

Additional information

Communicated by Adrian Constantin.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix A – Uniform distribution and equidistribution of partitions

The well-known fact that a sequence of Monte Carlo samples \(({{\mathcal {P}}}_N)\) is almost surely uniformly distributed follows directly from the strong law of large numbers. A corresponding statement for the sequences in Definition 1 is based on the strong law of large numbers for triangular arrays. This law does not require the sampling points for different partitions \({\varvec{\Omega }}^{(N)}\) to be independent, so we do not impose this assumption, although it is typically satisfied in the applications we have in mind.

Proposition 6

Consider a sequence of partitions \(\{{\varvec{\Omega }}^{(N)}\}_{N\ge 1}\) of \([0,1]^d\), with \({\varvec{\Omega }}^{(N)}=(\Omega _1^{(N)},\ldots ,\Omega _N^{(N)})\) consisting of Lebesgue measurable sets of positive volume, and let \({\mathbf {X}}^{(N)}=({\mathbf {X}}_1^{(N)},\ldots , {\mathbf {X}}_N^{(N)})\) be the vector of stratified sampling points based on \({\varvec{\Omega }}^{(N)}\). Then the triangular array \({{\widehat{{\mathbf {X}}}}}=\big ({\mathbf {X}}^{(N)}\big )_{N\in {\mathbb {N}}}\) is almost surely uniformly distributed if and only if

\[ \lim _{N\rightarrow \infty }\frac{1}{N}\sum _{i=1}^N\frac{|\Omega _i^{(N)}\cap [\mathbf{x}, \mathbf{y}[|}{|\Omega _i^{(N)}|}=|[\mathbf{x}, \mathbf{y}[| \qquad (25)\]

for all boxes \([\mathbf{x}, \mathbf{y}[ \subset [0,1]^d\).

Proof

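For \(\mathbf{x}, \mathbf{y}\in [0,1]^d\) with each component of \({\mathbf {y}}\) at least as large as the corresponding component of \({\mathbf {x}}\), consider the random variables \(Y_{i}^{(N)}=1_{[\mathbf{x}, \mathbf{y}[}({\mathbf {X}}^{(N)}_i)\), where \({\mathbf {X}}^{(N)}_1,\ldots ,{\mathbf {X}}_N^{(N)}\) is the stratified sample based on the partition \({\varvec{\Omega }}^{(N)}\). The Y’s are row-wise independent random variables with uniformly bounded variances, and since \({\mathbf {X}}_i^{(N)}\) is uniformly distributed on \(\Omega _i^{(N)}\), we have

\[ {\mathbb {E}}\Big (\frac{1}{N}\sum _{i=1}^N Y_i^{(N)}\Big )=\frac{1}{N}\sum _{i=1}^N\frac{|\Omega _i^{(N)}\cap [\mathbf{x}, \mathbf{y}[|}{|\Omega _i^{(N)}|}=:a_N({\mathbf {x}},{\mathbf {y}}). \]

Therefore, the strong law of large numbers for triangular arrays (see [23, Theorem 2.2] with \(p=1\), \(\psi (t)=t^2\) and \(a_n=n\)) implies

\[ \frac{1}{N}\sum _{i=1}^N Y_i^{(N)}-a_N({\mathbf {x}},{\mathbf {y}})\rightarrow 0 \qquad (26)\]

almost surely as \(N\rightarrow \infty \).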

If assumption (25) holds, we have \(\lim _{N\rightarrow \infty } a_N({\mathbf {x}},{\mathbf {y}})=|[\mathbf{x}, \mathbf{y}[|\), so (26) implies (4) for almost every realization. Hence, the stratified sample points form almost surely a uniformly distributed triangular array.

If (25) is violated, there must be \(\mathbf{x}, \mathbf{y}\in [0,1]^d\) such that the limit in (25) does not exist or is different from \(|[\mathbf{x}, \mathbf{y}[|\). In any case, there is a subsequence \(\big (a_{N'}({\mathbf {x}},{\mathbf {y}})\big )\) of \(\big (a_N({\mathbf {x}},{\mathbf {y}})\big )\) such that \(a_{N'}({\mathbf {x}},{\mathbf {y}})\rightarrow a\ne |[\mathbf{x}, \mathbf{y}[|\) as \(N'\rightarrow \infty \), and (26) shows that (4) cannot hold for almost every realization.

Consequently, the triangular array is almost surely uniformly distributed if and only if (25) holds for all boxes \([\mathbf{x}, \mathbf{y}[\subset [0,1]^d\). \(\square \)

In particular, if \(\{{\varvec{\Omega }}^{(N)}\}_{N\ge 1}\) is a sequence of finite partitions of the unit cube such that all partitions are equivolume, then \(|\Omega _i^{(N)}|=1/N\), so (25) is satisfied even without taking the limit. As a consequence, sequences of equivolume partitions are uniformly distributed; this is implication (d) in Fig. 12.
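A one-dimensional sketch (\(d=1\)) illustrates Proposition 6 and the equivolume case. The unbalanced partition below is a hypothetical example chosen only for illustration: \(N-1\) short intervals fill \([0,1/2[\) and one long cell covers \([1/2,1[\), so that \(a_N(0,1/2)=(N-1)/N\rightarrow 1\ne 1/2\). The helper names are assumptions, not part of the paper.

```python
# Illustrative d = 1 sketch; partitions and helper names are hypothetical.
import random


def stratified_sample(intervals, rng):
    """One uniform point in each cell [a, b[ of a partition of [0,1[."""
    return [a + (b - a) * rng.random() for a, b in intervals]


def a_n(intervals, x, y):
    """(1/N) * sum_i |Omega_i cap [x,y[| / |Omega_i| for an interval partition."""
    total = sum(max(0.0, min(b, y) - max(a, x)) / (b - a) for a, b in intervals)
    return total / len(intervals)


def equivolume(n):
    """N intervals of length 1/N."""
    return [(i / n, (i + 1) / n) for i in range(n)]


def unbalanced(n):
    """N - 1 short intervals filling [0,1/2[ and the single long cell [1/2,1[."""
    k = n - 1
    return [(i / (2 * k), (i + 1) / (2 * k)) for i in range(k)] + [(0.5, 1.0)]


rng = random.Random(7)
n, x, y = 10_000, 0.0, 0.5  # test interval [0, 1/2[ of length 1/2
results = {}
for name, cells in (("equivolume", equivolume(n)), ("unbalanced", unbalanced(n))):
    pts = stratified_sample(cells, rng)
    frac = sum(x <= p < y for p in pts) / n  # empirical proportion in [x, y[
    results[name] = (a_n(cells, x, y), frac)
    print(name, results[name])
```

For the equivolume partition, \(a_N\) equals the length 1/2 exactly and so does the empirical proportion; for the unbalanced partition, the empirical proportion follows \(a_N\) towards 1, in line with (26), so that sample is not uniformly distributed.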

We conclude this section with a comparison of Definition 1 with the notion of equidistributed partitions from [4], which we now recall using our notation. A sequence \(\{{\varvec{\Omega }}^{(N)}\}_{N\ge 1}\) of finite partitions of the unit cube is called equidistributed if for any choice of \({\mathbf {x}}_i^{(N)}\in \Omega _i^{(N)}\), \(i=1,\ldots ,N\), the triangular array \({\widehat{{\mathbf {x}}}}=\big ({\mathbf {x}}_1^{(N)},\ldots ,{\mathbf {x}}_N^{(N)}\big )_{N\in {\mathbb {N}}}\) is uniformly distributed (Definition 1). In fact, [4] introduces and uses this notion in greater generality, with the unit cube replaced by a general separable metric space.

In Fig. 12, we outline the connections between different notions for partitions, where a sequence \(\{{\varvec{\Omega }}^{(N)}\}_{N\ge 1}\) of finite partitions of \([0,1]^d\) is said to have vanishing average diameter if the average diameter

\[ \frac{1}{N}\sum _{i=1}^N\operatorname{diam}\Omega _i^{(N)} \]

converges to zero as \(N\rightarrow \infty \). For \(B\subset [0,1]^d\) we also use the set

\[ I_N(B)=\{i\in \{1,\ldots ,N\}:\Omega _i^{(N)}\subset B\} \]

of all indices i for which \(\Omega _i^{(N)}\) is completely contained in B, and the condition

\[ \lim _{N\rightarrow \infty }\frac{\#I_N([\mathbf{x}, \mathbf{y}[)}{N}=|[\mathbf{x}, \mathbf{y}[| \quad \text{for all } [\mathbf{x}, \mathbf{y}[\subset [0,1]^d, \qquad (27)\]

stating that asymptotically the ‘correct’ proportion of sets is completely contained in \([\mathbf{x}, \mathbf{y}[\). That an equivolume sequence of partitions with vanishing maximal diameter is equidistributed was shown in [4, Lemma 1], but our implication (a) in Fig. 12 is stronger, as it allows for ‘large’ or ‘elongated’ sets, as long as the proportion of such sets among the N sets of the partition tends to zero as \(N\rightarrow \infty \).
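To make these conditions concrete, the following sketch (hypothetical helper names, \(d=2\)) computes, for a fixed box, the proportion of partition sets completely contained in it together with the average diameter, both for the equivolume \(m\times m\) grid and for equivolume vertical strips with the same number \(N=m^2\) of sets. For the grid, the proportion approaches the area 0.25 of the box while the average diameter \(\sqrt{2}/m\) tends to zero; for the strips, the proportion stays 0 and the average diameter stays close to 1.

```python
# Illustrative sketch for d = 2; partitions are given as (lower, upper) corners
# of half-open boxes, and all helper names are hypothetical.
import math


def grid_partition(m):
    """Equivolume m x m grid of half-open squares."""
    return [((i / m, j / m), ((i + 1) / m, (j + 1) / m))
            for i in range(m) for j in range(m)]


def vertical_strips(n):
    """Equivolume vertical strips [i/n,(i+1)/n[ x [0,1[."""
    return [((i / n, 0.0), ((i + 1) / n, 1.0)) for i in range(n)]


def contained_fraction(cells, x, y):
    """Proportion of cells [lo, hi[ lying completely inside the box [x, y[."""
    inside = sum(all(x[k] <= lo[k] and hi[k] <= y[k] for k in range(len(x)))
                 for lo, hi in cells)
    return inside / len(cells)


def average_diameter(cells):
    """(1/N) sum_i diam(Omega_i); the diameter of a box is its main diagonal."""
    return sum(math.dist(lo, hi) for lo, hi in cells) / len(cells)


x, y = (0.2, 0.1), (0.7, 0.6)  # box of area 0.25
results = {}
for m in (4, 16, 64):
    grid, strips = grid_partition(m), vertical_strips(m * m)
    results[m * m] = (contained_fraction(grid, x, y), average_diameter(grid),
                      contained_fraction(strips, x, y), average_diameter(strips))
    print(m * m, results[m * m])
```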

Proposition 7

The implications in Fig. 12 hold. Apart from the equivalence in (b) none of the implications can be reversed.

Proof

To show (a) fix \([\mathbf{x}, \mathbf{y}[ \subset [0,1]^d\), assume that the sets of \(\{{\varvec{\Omega }}^{(N)}\}_{N\ge 1}\) are equivolume, and observe that in this case

On the other hand, if

describes the sets of the partition hitting both \(B\subset [0,1]^d\) and its complement, we have

so it is enough to show that

as \(N\rightarrow \infty \). To show (28) let \(\varepsilon >0\) and \(\alpha >0\) be given. Markov’s inequality and the assumption of vanishing average diameter yield the existence of \(N_0\in {{\mathbb {N}}}\) such that

for all \(N\ge N_0\). Hence, using again the equivolume property,

where \(({{\,\mathrm{bd}\,}}[\mathbf{x}, \mathbf{y}[)_\alpha \) is the set of all points with distance at most \(\alpha \) from the boundary of \([\mathbf{x}, \mathbf{y}[\). Choosing \(\alpha =\varepsilon /(8d)\) implies (28).
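The choice \(\alpha =\varepsilon /(8d)\) can be traced as follows. This sketch assumes that the bound above splits into the sets of diameter larger than \(\alpha \), handled by Markov’s inequality, and the sets of diameter at most \(\alpha \) hitting both \([\mathbf{x}, \mathbf{y}[\) and its complement. Every point of such a small set lies within distance \(\alpha \) of \({{\,\mathrm{bd}\,}}[\mathbf{x}, \mathbf{y}[\), and by the equivolume property their proportion among the N sets equals their total volume. The boundary is contained in at most 2d hyperplanes, and the \(\alpha \)-neighbourhood of each of them meets \([0,1]^d\) in a slab of volume at most \(2\alpha \). Hence

\[ \frac{\#\{i:\operatorname{diam}\Omega _i^{(N)}>\alpha \}}{N}\le \frac{1}{\alpha N}\sum _{i=1}^N\operatorname{diam}\Omega _i^{(N)}\le \frac{\varepsilon }{2}\quad (N\ge N_0),\qquad \big |({{\,\mathrm{bd}\,}}[\mathbf{x}, \mathbf{y}[)_\alpha \cap [0,1]^d\big |\le 2d\cdot 2\alpha =\frac{\varepsilon }{2}, \]

and the two contributions add up to at most \(\varepsilon \).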

To show the equivalence in (b) we note that (27) implies (28). In fact, if \([\mathbf{x}, \mathbf{y}[ \subset [0,1]^d\) is fixed, the set \(B=[0,1[^d{\setminus } [\mathbf{x}, \mathbf{y}[\) can be written as a disjoint union of at most \(k=3^d-1\) half-open rectangles \(B_1,\ldots ,B_k\), so

by applying (27) to \([\mathbf{x}, \mathbf{y}[\) and to \(B_1,\ldots ,B_k\), that is, to at most \(3^d\) rectangles.
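The decomposition of \([0,1[^d{\setminus } [\mathbf{x}, \mathbf{y}[\) used here can be made explicit. In the sketch below (hypothetical function names), each coordinate interval \([0,1[\) is cut at \(x_k\) and \(y_k\), and all products of the resulting pieces except \([\mathbf{x}, \mathbf{y}[\) itself are kept; empty pieces are dropped, and the volumes add up to \(1-|[\mathbf{x}, \mathbf{y}[|\).

```python
# Illustrative sketch; boxes are lists of (lo, hi) pairs, one per axis,
# and the function names are hypothetical.
import itertools
import math


def complement_boxes(x, y):
    """Write [0,1[^d minus [x,y[ as a disjoint union of half-open boxes.

    Each axis is cut into [0,x_k[, [x_k,y_k[ and [y_k,1[; every product of
    these pieces except the central one, [x,y[ itself, is kept, which gives
    at most 3^d - 1 boxes. Empty pieces (e.g. x_k = 0 or y_k = 1) are dropped.
    """
    d = len(x)
    pieces = [((0.0, x[k]), (x[k], y[k]), (y[k], 1.0)) for k in range(d)]
    boxes = []
    for choice in itertools.product(range(3), repeat=d):
        if all(c == 1 for c in choice):
            continue  # this is the box [x, y[ itself
        box = [pieces[k][c] for k, c in enumerate(choice)]
        if all(lo < hi for lo, hi in box):  # skip empty boxes
            boxes.append(box)
    return boxes


def volume(box):
    return math.prod(hi - lo for lo, hi in box)


x, y = (0.2, 0.1, 0.3), (0.7, 0.6, 1.0)
boxes = complement_boxes(x, y)
print(len(boxes), sum(volume(b) for b in boxes), 1.0 - volume(list(zip(x, y))))
```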

If \({{\mathcal {P}}}_N=\{{\mathbf {x}}_1,\ldots ,{\mathbf {x}}_N\}\) satisfies \({\mathbf {x}}_i\in \Omega _i^{(N)}\) but is otherwise arbitrary, then

and there are sets for which either of the two inequalities becomes an equality. One can thus conclude that (27) (together with its consequence, (28)) implies equidistribution of \(\{{\varvec{\Omega }}^{(N)}\}_{N\ge 1}\). Conversely, assuming equidistribution, we may choose \({{\mathcal {P}}}_N\) such that there is equality on the left side of (29) for every N, and (27) follows.

Implication (c) is trivial as a stratified sample point lies a.s. in the partition set it is associated to. Implication (d) has already been shown and (e) is trivial.

None of the above implications can be reversed. That implication (a) cannot be reversed is clear, as one just takes a sequence satisfying (27) and changes two sets appropriately to ensure that the sequence is not equivolume. Implication (d) can be treated in a similar way. That implication (c) cannot be reversed can be seen by means of vertical strip partitions \(({\varvec{\Omega }}^{(N)}_{\mathrm {vert}})\) in Fig. 2 (left). For all N these partitions are equivolume and thus the stratified sample based on them is a.s. uniformly distributed. However, this sequence of partitions is not equidistributed, as the limit in (27) is zero for every box \([\mathbf{x}, \mathbf{y}[\) with \(y_2<1\). This example also shows that implication (e) cannot be reversed. \(\square \)
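The vertical strip counterexample can be checked numerically. In the sketch below (hypothetical helper names, strips \(\Omega _i^{(N)}=[(i-1)/N,i/N[\times [0,1[\)), the admissible choice \({\mathbf {x}}_i^{(N)}=((i-1/2)/N,\,0.99)\in \Omega _i^{(N)}\) puts no point into the lower half \([0,1[\times [0,1/2[\) of the square, although this box has area 1/2, so this choice is not uniformly distributed; a random stratified sample from the same partition places close to half of its points there.

```python
# Illustrative sketch with hypothetical helper names.
import random


def fixed_height_points(n, height):
    """One point per vertical strip [i/n,(i+1)/n[ x [0,1[, all at one height."""
    return [((i + 0.5) / n, height) for i in range(n)]


def stratified_strip_sample(n, rng):
    """Stratified sample: one uniform point in each vertical strip."""
    return [((i + rng.random()) / n, rng.random()) for i in range(n)]


def fraction_in_box(points, x, y):
    """Proportion of points lying in the half-open box [x, y[."""
    inside = sum(all(x[k] <= p[k] < y[k] for k in range(len(x))) for p in points)
    return inside / len(points)


n = 10_000
x, y = (0.0, 0.0), (1.0, 0.5)  # lower half of the square, area 1/2
adversarial = fraction_in_box(fixed_height_points(n, 0.99), x, y)
stratified = fraction_in_box(stratified_strip_sample(n, random.Random(3)), x, y)
print(adversarial, stratified)  # the first value is 0 for every n
```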

Appendix B – Proof of Lemma 4

In this part of the appendix we present the details omitted from the proof of Lemma 4 in Sect. 4.3. Lemma 5 can be shown along the same lines, with slightly different probabilities and boundaries for the six subcases; see Fig. 8.

Proof of Lemma 4

We set \(A=v_1 \sqrt{2}\), \(B=v_2 \sqrt{2}-1\) such that for given \(1/2 \le A \le 1\) we have \(2A-1 \le B \le A\).