Abstract

Let \(\varPi _n\) be the set of bivariate polynomials of degree not greater than n. A \(\varPi _n\)-correct set of nodes is a set such that the Lagrange interpolation problem with respect to these nodes has a unique solution. A maximal line of a \(\varPi _n\)-correct set is any line containing exactly \(n+1\) nodes. Syzygy matrices can be used to find linear factors of the fundamental polynomials and to detect maximal lines. We propose using matrix relations to generate syzygies, identify linear factors of fundamental polynomials and detect maximal lines. We interpret our results in the important case of GC sets, in an attempt to shed some light on the Gasca-Maeztu conjecture.


1 Introduction

The Gasca-Maeztu conjecture [13] is still an outstanding problem in polynomial interpolation in two variables. So far it has been proven up to total degree \(n=5\) [14], with proofs of increasing complexity; for three or more variables, not even its correct formulation is clear, cf. [11]. A promising approach, based on methods from algebraic geometry, was introduced in [12]; it suggests considering so-called syzygy matrices, which capture information about the structure of the underlying interpolation problem. In this paper, which is a continuation of [8, 9], we collect further information about these syzygy matrices and explicitly show their behavior in certain cases of a given defect. Although this does not yet prove the conjecture, we consider these results useful for further study of the connection between interpolation problems and the associated syzygy matrices.

We begin by recalling our setup in more detail, using terminology from [9]. We only consider the case of two variables in this paper. The bivariate Lagrange interpolation problem of degree \(n-1\) consists of finding a polynomial in \(\varPi _{n-1}\), the space of bivariate polynomials of total degree not greater than \(n-1\), whose values coincide with prescribed data on a finite set \(Y \subset \mathbb {R}^2\). If the Lagrange interpolation problem to data on Y in \(\varPi _{n-1}\) has a unique solution, we say that the set Y is \(\varPi _{n-1}\)-correct. Whether a set is \(\varPi _{n-1}\)-correct can be decided by inspecting I(Y), the ideal of all polynomials vanishing on Y: a set Y with \(\#Y=\dim \varPi _{n-1}\) is \(\varPi _{n-1}\)-correct if and only if \(I(Y) \cap \varPi _{n-1} = \{ 0 \}\). The properties of the ideal of polynomials vanishing on a set can be read off from specific bases of the ideal, called H-bases, and, if Y is \(\varPi _{n-1}\)-correct, H-bases of I(Y) can be described as bases of the vector space \(I(Y) \cap \varPi _n\). Homogeneous relations between elements of a given H-basis are called syzygies. A matrix of maximal rank with first degree polynomial entries whose rows are syzygies of an H-basis of I(Y) is called a syzygy matrix of the ideal. Many features of the interpolation problem can be interpreted in terms of syzygy matrices. Conversely, syzygy matrices can be used to derive properties of the corresponding H-basis, cf. [9, 12].

The Berzolari-Radon construction extends a \(\varPi _{n-1}\)-correct set \(Y_{n-1}\) by adding \(n+1\) nodes on a line W with \(W \cap Y_{n-1} = \emptyset \) and yields a \(\varPi _n\)-correct set \(Y_n\). The fundamental polynomials for the nodes in \(Y_n {\setminus } Y_{n-1}\), i.e., the polynomials that vanish at all other nodes and take the value 1 at a given node, provide a particular H-basis \(h=(h_0, \ldots , h_n)\) of \(I(Y_{n-1})\), suitable for explicitly constructing syzygy matrices. Maximal lines in \(Y_n\) are lines containing \(n+1\) nodes. The first degree polynomial defining any maximal line of \(Y_n\) different from W is a factor of all elements of the H-basis h except one. Such maximal lines can be detected in the syzygy matrices, as shown in [9, 12].

In order to characterize first degree factors of the fundamental polynomials in h, the syzygy matrix relation \(\varSigma h =0\) could be used. In this paper, we propose and investigate an alternative approach that deals instead with a more general relation of the form \(S h =g e\), where \(S \in \varPi _1^{(n+1)\times (n+1)}\) is a square matrix whose determinant coincides, up to a nonzero constant, with a nonzero ideal polynomial \(g \in I(Y) \cap \varPi _{n+1}\) and \(e=[1 , \ldots , 1 ]^T \in \mathbb {R}^{n+1}\). Specifically, in Proposition 5 a relation of the form \(S h =ge\) is derived with a matrix \(S=A\) whose diagonal entries vanish and whose off-diagonal elements \(a_{ij}\) are affine polynomials that can be related to linear factors of the elements of h, as shown in Theorem 2. In Theorem 1, we also identify maximal lines as the common off-diagonal element of a column of A, which recovers the fundamental property of the syzygy matrices in [9].

Recall that a GC set is a \(\varPi _n\)-correct set all of whose fundamental polynomials are products of first degree polynomials. Due to the relation between repeated elements in the syzygy matrix and maximal lines, syzygy matrices are a promising tool for attacking the aforementioned Gasca-Maeztu conjecture, which can be rephrased as the statement that any GC set can be obtained as a Berzolari-Radon extension of a GC set of smaller degree. To illustrate this connection, we end the paper by considering known GC sets and relating them to the properties derived in Theorem 1 and Theorem 2.

2 H-bases and syzygy matrices of correct sets

Let \(\varPi \) be the space of bivariate polynomials and \(\varPi _n\) be the subspace of \(\varPi \) consisting of all polynomials of total degree not greater than n.

Definition 1

A set \(Y \subset \mathbb {R}^2\) is called \(\varPi _n\)-correct if the Lagrange interpolation problem on the set of nodes Y

$$ p(y) = f(y), \qquad y \in Y, $$

has a unique solution \(p \in \varPi _n\) for any \(f: Y \rightarrow \mathbb {R}\).

From the definition, it follows that the number of elements of any \(\varPi _n\)-correct set Y is \(\#Y=\dim \varPi _n= \binom{n+2}{2}\). Let us denote by

$$ I(Y) := \{ p \in \varPi \mid p(y) = 0 \text{ for all } y \in Y \} $$

the ideal of polynomials vanishing on the set Y. We observe that a set Y with \(\#Y =\dim \varPi _n\) is \(\varPi _n\)-correct if and only if \(\varPi _n \cap I(Y) = \{ 0 \}\). Associated to each node y of a \(\varPi _n\)-correct set Y, there exists a Lagrange fundamental polynomial \(\ell _{y,Y} \in \varPi _n\) such that \(\ell _{y,Y}(y)=1\) and \(\ell _{y,Y} \in I(Y{\setminus }\{y\})\). Since the interpolation error

$$ p - \sum _{y \in Y} p(y)\, \ell _{y,Y} $$

of a polynomial p always belongs to I(Y), many interpolation formulae can be related to the representation of elements in the ideal I(Y).
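The criterion above lends itself to a direct computational check: for \(\#Y = \dim \varPi _n\), the set Y is \(\varPi _n\)-correct exactly when no nonzero \(p \in \varPi _n\) vanishes on Y, that is, when the square collocation matrix \([y^\alpha ]_{y \in Y, |\alpha | \le n}\) is nonsingular. The following Python sketch is only an illustration of this equivalence; it uses exact rational arithmetic (`fractions.Fraction`) so that degenerate configurations are detected exactly, and the function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def monomials(n):
    """Exponents (i, j) of x**i * y**j with i + j <= n, a basis of Pi_n."""
    return [(i, d - i) for d in range(n + 1) for i in range(d + 1)]


def rank(rows):
    """Rank of a rational matrix by Gauss-Jordan elimination."""
    M = [[Fraction(a) for a in row] for row in rows]
    r = 0
    for c in range(len(M[0]) if M else 0):
        piv = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if piv is None:
            continue
        M[r], M[piv] = M[piv], M[r]
        for i in range(len(M)):
            if i != r and M[i][c] != 0:
                f = M[i][c] / M[r][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
    return r


def is_correct(points, n):
    """Y is Pi_n-correct iff #Y = dim Pi_n and I(Y) ∩ Pi_n = {0}."""
    mons = monomials(n)
    if len(points) != len(mons):
        return False
    V = [[Fraction(x) ** i * Fraction(y) ** j for i, j in mons] for x, y in points]
    return rank(V) == len(mons)


print(len(monomials(3)) == comb(5, 2))                      # dim Pi_3 = 10
print(is_correct([(0, 0), (1, 0), (0, 1)], 1))              # True
print(is_correct([(0, 0), (1, 1), (2, 2)], 1))              # False: collinear nodes
lattice = [(i, j) for i in range(3) for j in range(3 - i)]  # nodes with i + j <= 2
print(is_correct(lattice, 2))                               # True
```

The three collinear nodes fail because the first degree polynomial \(x-y\) vanishes on all of them.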

Definition 2

A finite sequence \((h_0, \ldots , h_n)\) of elements in an ideal I of polynomials is called an H-basis for I if for any \(f \in I\) there exist polynomials \(g_ j \in \varPi _{\deg f- \deg h_j}\), \(j=0, \ldots , n\), such that \(f= \sum _{j=0}^n g_j h_j\).

In this paper we shall consider H-bases of I(Y) consisting of polynomials of degree n, where Y is a \(\varPi _{n-1}\)-correct set. As a consequence of Lemma 1 and Theorem 3 of [9], we can state the following result.

Proposition 1

If Y is a \(\varPi _{n-1}\)-correct set then \(\dim (I(Y) \cap \varPi _n)=n+1\). A sequence of polynomials \((h_0, \ldots , h_n)\) is an H-basis of I(Y) if and only if it is a basis of the vector space \(I(Y) \cap \varPi _n\).

Some properties of an H-basis can be related to its syzygies.

Definition 3

A syzygy of a sequence of polynomials \((h_0, \ldots , h_n) \in \varPi ^{n+1}\) is a sequence \((\sigma _0, \ldots , \sigma _n) \in \varPi ^{n+1}\) such that \(\sum _{j=0}^n \sigma _j h_j=0\).

In Theorem 4 of [9], it was shown that for any H-basis of the ideal I(Y) of a \(\varPi _{n-1}\)-correct set there exists a matrix in \(\varPi _1^{n \times (n+1)}\) whose rows are independent syzygies of degree at most one. This motivates the following definition.

Definition 4

Let Y be a \(\varPi _{n-1}\)-correct set and \((h_0, \ldots , h_n)\) be a basis of \(I(Y) \cap \varPi _n\). An affine syzygy matrix of h is any matrix \(\varSigma \in \varPi _1^{n\times (n+1)}\) of rank n (with respect to the field of rational functions) such that \(\varSigma [h_0 , \ldots , h_n]^T =0\).

In Theorem 5 of [9], it was shown that any syzygy formed by polynomials in \(\varPi _1\) is a linear combination of the rows of a particular affine syzygy matrix \(\varSigma \) and that the affine syzygy matrices are exactly the matrices of the form \(A \varSigma \), where \(A \in \mathbb {R}^{n\times n}\) is a nonsingular matrix. Since we only consider affine syzygy matrices here, we will sometimes not mention explicitly that the entries have degree at most one and simply speak of a syzygy matrix.

The linear combinations with real coefficients of the rows of a syzygy matrix \(\varSigma \) with entries \(\sigma _{jk}\), \(j=0, \ldots , n-1\), \(k=0, \ldots , n\), generate a vector subspace of \(\varPi _1^{n+1}\) of dimension n. Since \(\dim \varPi _1^{n+1} = 3(n+1) > n\), there exist polynomials \(s_{n0}, \dots , s_{nn} \in \varPi _1\) such that \((s_{n0}, \ldots , s_{nn})\) does not belong to this subspace; by Theorem 5 of [9] it is then not a syzygy, and hence \(\sum _{k=0}^n s_{nk} h_k =: g \ne 0\). Now we define \(s_{jk} = s_{nk} + \sigma _{jk}\), for \(j=0, \ldots , n-1\), \(k=0, \ldots , n\), and find that \(\sum _{k=0}^n s_{jk} h_k= g\) for all \(j=0, \ldots , n\). Then we obtain the relation

$$ S h = g e, \qquad S := [s_{jk}]_{j,k=0,\ldots ,n} \in \varPi _1^{(n+1)\times (n+1)}, \quad h := [h_0, \ldots , h_n]^T, \quad e := [1, \ldots , 1]^T. \tag{1} $$
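The construction of S can be tried out on a toy case. The following Python sketch assumes the smallest nontrivial setting, \(n = 1\) and \(Y = \{(0,0)\}\), so that \(h = (x, y)\) is a basis of \(I(Y) \cap \varPi _1\) and \(\varSigma = [y, -x]\) is an affine syzygy matrix; the extra row \((s_{10}, s_{11}) = (x, 0)\) is an arbitrary choice made for illustration. Polynomials are stored as dictionaries mapping an exponent pair (i, j) to the coefficient of \(x^i y^j\); all helper names are hypothetical.

```python
def poly(*terms):
    """Polynomial as {(i, j): coeff}; a term (c, i, j) stands for c * x**i * y**j."""
    p = {}
    for c, i, j in terms:
        p[(i, j)] = p.get((i, j), 0) + c
    return {m: c for m, c in p.items() if c != 0}


def add(*ps):
    """Sum of polynomials, dropping zero coefficients."""
    r = {}
    for p in ps:
        for m, c in p.items():
            r[m] = r.get(m, 0) + c
    return {m: c for m, c in r.items() if c != 0}


def neg(p):
    return {m: -c for m, c in p.items()}


def mul(p, q):
    """Product of two polynomials."""
    r = {}
    for (i1, j1), c1 in p.items():
        for (i2, j2), c2 in q.items():
            m = (i1 + i2, j1 + j2)
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


x, y = poly((1, 1, 0)), poly((1, 0, 1))   # coordinate polynomials x and y
h = [x, y]                                # basis of I(Y) ∩ Pi_1 for Y = {(0, 0)}
Sigma = [[y, neg(x)]]                     # affine syzygy matrix: y*x - x*y = 0
s_last = [x, {}]                          # row (s_10, s_11) = (x, 0), not a syzygy

g = add(*(mul(s, hk) for s, hk in zip(s_last, h)))          # g = x^2, nonzero
# rows s_jk = s_nk + sigma_jk (j < n), followed by the row s_n itself
S = [[add(s_last[k], row[k]) for k in range(2)] for row in Sigma] + [s_last]
Sh = [add(*(mul(S[j][k], h[k]) for k in range(2))) for j in range(2)]
Sigma_h = add(*(mul(Sigma[0][k], h[k]) for k in range(2)))

print(g, Sh == [g, g], Sigma_h == {})   # {(2, 0): 1} True True
```

Here S has the rows \((x+y, -x)\) and \((x, 0)\), and both rows of \(Sh\) equal \(g = x^2\), as in (1).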
The following result shows how to obtain syzygy matrices from a relation of the form \(Sh=ge\).

Proposition 2

Let Y be a \(\varPi _{n-1}\)-correct set, \((h_0, \ldots ,h_n)\) be a basis of \( I(Y) \cap \varPi _n\) and let \(S \in \varPi _1^{(n+1) \times (n+1)}\) be a polynomial matrix with \(\det S \ne 0\) such that \(Sh= ge\), where g is a nonzero polynomial, \(h:=[h_0 , \ldots , h_n]^T\) and \(e=[1 , \ldots , 1]^T\). Then \(\varSigma \) is a syzygy matrix of h if and only if there exists a matrix \(M \in \mathbb {R}^{n\times (n+1)}\) of rank n with \(Me=0\) such that \(\varSigma =MS\). Furthermore, there exists a constant \(c \ne 0\) such that

$$ g = c \det S, \qquad h_j = c \det S_{[j]}, \quad j = 0, \ldots , n, $$

where \(S_{[j]}\) is the matrix obtained from S by replacing the j-th column by e.

Proof

Let \(M\in \mathbb {R}^{n\times (n+1)}\) be a matrix of rank n with \(Me =0\). Multiplying the relation \(Sh=ge\) by M, we obtain \(MS [h_0 , \ldots , h_n]^T = g\, Me = 0\). Since S is nonsingular, MS is an \(n \times (n+1)\) matrix whose rank over the field of rational functions is n, and we deduce that \(\varSigma :=MS\) is a syzygy matrix. Now let \({\hat{\varSigma }}\) be another syzygy matrix. By Theorem 5 of [9], there exists a nonsingular real matrix \(A \in \mathbb {R}^{n\times n}\) such that \({\hat{\varSigma }} = A \varSigma = AM S = {\hat{M}} S\), with \({\hat{M}}:=AM\). Since \({\hat{M}}\) is a matrix of rank n such that \({\hat{M}} e=AMe=0\), the result follows.

Since \(h_0, \ldots , h_n\) are nonzero polynomials in \(I(Y) \cap \varPi _n\) and \(\det S \ne 0\), we deduce that Sh is a nonzero vector and then g is a nonzero polynomial in \(I(Y) \cap \varPi _{n+1}\). Since all elements in S are polynomials in \(\varPi _1\), we have that \(\det S \in \varPi _{n+1}\) and \(\det S_{[j]} \in \varPi _n\), \(j=0, \ldots , n\). By Cramer’s rule,

$$ h_j = \frac{g \det S_{[j]}}{\det S}, \qquad j = 0, \ldots , n. $$
Since \(h_j \ne 0\) (indeed \(\deg h_j = n\)), \(j=0,\dots ,n\), we have that

$$ \frac{\det S_{[j]}}{h_j} = \frac{\det S}{g} $$

is independent of \(j \in \{0, \ldots , n\}\). Taking into account that the \(h_j\) form a basis of \(I(Y) \cap \varPi _n\), they have no common divisor, and we deduce that g divides \(\det S\). Since \(\det S_{[j]} =h_j {\det S}/g\), we have that \(\det S_{[j]} \ne 0\) and that \(h_j\) divides \(\det S_{[j]}\), \(j =0, \ldots , n\). From the fact that \(\det S_{[j]} \in \varPi _n\) and \(\deg h_j = n\), it follows that \(h_j = c \, \det S_{[j]}\) and \(g=c \det S\) for some \(c \in \mathbb {R}{\setminus } \{ 0 \}\), \(j=0, \ldots , n\).
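For a toy case, the determinant identities of Proposition 2 can be verified directly. The sketch below assumes the data \(n=1\), \(Y = \{(0,0)\}\), \(h = (x, y)\), S with rows \((x+y, -x)\) and \((x, 0)\), and \(g = x^2\), which satisfy \(Sh = ge\); here the constant turns out to be \(c = 1\). Polynomials are dictionaries from exponent pairs to coefficients, and the determinant uses plain cofactor expansion, which is adequate only for very small matrices. All names are hypothetical.

```python
def poly(*terms):
    """Polynomial as {(i, j): coeff}; a term (c, i, j) stands for c * x**i * y**j."""
    p = {}
    for c, i, j in terms:
        p[(i, j)] = p.get((i, j), 0) + c
    return {m: c for m, c in p.items() if c != 0}


def add(*ps):
    r = {}
    for p in ps:
        for m, c in p.items():
            r[m] = r.get(m, 0) + c
    return {m: c for m, c in r.items() if c != 0}


def neg(p):
    return {m: -c for m, c in p.items()}


def mul(p, q):
    r = {}
    for (i1, j1), c1 in p.items():
        for (i2, j2), c2 in q.items():
            m = (i1 + i2, j1 + j2)
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def det(M):
    """Determinant of a small square polynomial matrix by cofactor expansion."""
    if len(M) == 1:
        return M[0][0]
    total = {}
    for k, entry in enumerate(M[0]):
        term = mul(entry, det([row[:k] + row[k + 1:] for row in M[1:]]))
        total = add(total, term if k % 2 == 0 else neg(term))
    return total


def replace_column(M, j, col):
    """The matrix M_[j]: the j-th column of M replaced by col."""
    return [row[:j] + [col[r]] + row[j + 1:] for r, row in enumerate(M)]


x, y, one = poly((1, 1, 0)), poly((1, 0, 1)), poly((1, 0, 0))
h = [x, y]
S = [[add(x, y), neg(x)],     # rows (x + y, -x) and (x, 0): S h = g e
     [x, {}]]
g = poly((1, 2, 0))           # g = x^2
e = [one, one]

det_S = det(S)
det_S_j = [det(replace_column(S, j, e)) for j in range(2)]
print(det_S == g, det_S_j == h)   # True True, so c = 1 in Proposition 2
```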

Since \(g \in I(Y) \cap \varPi _{n+1}\) is a nonzero polynomial, there are two possible cases in Proposition 2, \(\deg g = n\) or \(\deg g=n+1\).

If \(\deg g =n\), then \(g= \sum _{j=0}^n c_j h_j\) for some constants \(c_0, \ldots , c_n\) because \(h_0, \ldots , h_n\) form a basis of \(I(Y) \cap \varPi _{n}\). The relations

$$ \sum _{k=0}^n (s_{jk} - c_k)\, h_k = g - g = 0, \qquad j = 0, \ldots , n, $$

give rise to \(n+1\) syzygies consisting of first degree polynomials, namely the rows of \(S-e c^T\), where \(c := [c_0, \ldots , c_n]^T\). Since all syzygies of first degree can be generated by the n rows of a syzygy matrix, as shown in Theorem 5 of [9], we deduce that a nontrivial linear combination of the rows of \(S-e c^T\) equals 0, that is, \(b^T S= (b^Te)c^T\) for some nonzero \(b=[b_0 , \ldots , b_n]^T\). Since \(\det S \ne 0\), it follows that \(b^T S \ne 0\), and therefore \(b^T e\) is a nonzero constant. Hence \(c^T =(b^Te)^{-1} b^T S\), that is, the constant row vector \(c^T\) is a linear combination of the rows of S. Replacing a row j of S with \(b_j \ne 0\) by this constant row changes \(\det S\) only by a nonzero factor, and it follows that \(\det S \in \varPi _n\). Since g coincides with \(\det S \) up to a constant factor, we have that \(\deg \det S =n\) and thus

because \(\det S_{[j]}\) coincides with \(h_j\) up to a constant factor. Therefore,

If, on the other hand, the degrees of g and \(\det S\) are exactly \(n+1\), then

and no nontrivial linear combination of the rows of S can be a constant vector.

In the following result, we compare different relations \(S h =ge\).

Proposition 3

Let Y be a \(\varPi _{n-1}\)-correct set and \((h_0, \ldots ,h_n)\) be a basis of \(I(Y) \cap \varPi _n\). Let \(S, {\hat{S}} \in \varPi _1^{(n+1) \times (n+1)}\) be polynomial matrices with \(\det S \ne 0\) and \(\det {\hat{S}} \ne 0\) such that \(Sh= ge\) and \({\hat{S}} h ={\hat{g}} e\) with \(h=[h_0, \ldots , h_n]^T\), \(e=[1, \ldots , 1]^T\). Then there exist polynomials \(s_0, \ldots , s_n \in \varPi _1\) and a matrix \(N \in \mathbb {R}^{(n+1) \times (n+1)}\) with \(Ne= e\) such that

$$ {\hat{S}} = N S - e\, [s_0 , \ldots , s_n]. $$
Proof

By Proposition 1, \((h_0, \ldots , h_n)\) is an H-basis of I(Y). Since g and \( {\hat{g}}\) can be written as combinations of the elements of the H-basis with first degree coefficients, we have that \(g,{\hat{g}} \in \varPi _{n+1}\cap I(Y)\). By the definition of H-basis, there exist polynomials \(s_0, \ldots , s_n \in \varPi _1\) such that \(g- {\hat{g}}=\sum _{k=0}^n s_k h_k=[s_0 , \ldots , s_n] h\). Then we have

$$ \big({\hat{S}}-S+e\, [s_0 , \ldots , s_n]\big)\, h = {\hat{g}}\, e - g\, e + (g - {\hat{g}})\, e = 0. $$
So \({\hat{S}}-S+e [s_0 , \ldots , s_n]\) is a matrix whose rows are syzygies. From Proposition 2, we deduce that \({\hat{S}} - S + e [s_0 , \ldots , s_n] = M S\), where \(M \in \mathbb {R}^{(n+1) \times (n+1)}\) is a matrix such that \(Me = 0\). Taking \(N=I+M\), we have that \(Ne=e\) and \({\hat{S}} =N S - e [s_0 , \ldots , s_n]\).

3 H-bases generated by Berzolari-Radon extensions

All information about the interpolation problem is contained in the ideal I(Y), hence also in any basis of the ideal. Since the construction we are considering proceeds by total degree, it is convenient to use an H-basis. We can construct an H-basis explicitly using interpolation properties of a Berzolari-Radon extension. From the H-basis we determine, in an elementary and explicit way, a syzygy matrix. These syzygy matrices describe the geometry of the node configuration as pointed out in [12].

Recall that a line is the zero set \(Z(w):=\{ x \in \mathbb {R}^2 \mid w(x) = 0\}\) of a first degree polynomial \(w\in \varPi _1 {\setminus } \varPi _0\). In 1948, Radon [15] suggested a construction that extends a \(\varPi _{n-1}\)-correct set \(Y_{n-1}\) by adding \(n+1\) distinct nodes \(t_0, \ldots , t_n\) in Z(w) with \(Z(w) \cap Y_{n-1} = \emptyset \). The extended set \(Y_{n}:=Y_{n-1} \cup T\), \(T := \{ t_0,\ldots ,t_{n} \}\), is \(\varPi _n\)-correct. This construction is also attributed to Berzolari [1], which is why it is nowadays called the Berzolari-Radon construction.
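The Berzolari-Radon construction is easy to try out numerically. The Python sketch below (hypothetical helper names, nodes with integer coordinates assumed) checks the hypotheses of the construction, namely distinct nodes of T on Z(w) and \(Z(w) \cap Y_{n-1} = \emptyset \), and then confirms \(\varPi _n\)-correctness of the extension through the nonsingularity of the collocation matrix, computed exactly with `fractions.Fraction`. Adding three nodes on the line \(y = 0\) instead, which already contains two nodes of \(Y_1\), violates the hypothesis and indeed destroys correctness, since xy vanishes on the resulting set.

```python
from fractions import Fraction


def is_correct(points, n):
    """Pi_n-correctness: #Y = dim Pi_n and the collocation matrix is nonsingular."""
    mons = [(i, d - i) for d in range(n + 1) for i in range(d + 1)]
    if len(points) != len(mons):
        return False
    M = [[Fraction(x) ** i * Fraction(y) ** j for i, j in mons] for x, y in points]
    for c in range(len(mons)):                      # Gaussian elimination
        piv = next((r for r in range(c, len(M)) if M[r][c] != 0), None)
        if piv is None:                             # no pivot: M is singular
            return False
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, len(M)):
            f = M[r][c] / M[c][c]
            M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return True


def berzolari_radon(Y_prev, line, T):
    """Return Y_prev + T after checking the hypotheses of the construction:
    the nodes of T are distinct and lie on Z(w), w = a + b*x + c*y,
    and Z(w) contains no node of Y_prev."""
    a, b, c = line

    def on_line(p):
        return a + b * p[0] + c * p[1] == 0

    if len(set(T)) != len(T) or not all(on_line(t) for t in T):
        raise ValueError("T must consist of distinct nodes on Z(w)")
    if any(on_line(p) for p in Y_prev):
        raise ValueError("Z(w) must not contain nodes of Y_prev")
    return list(Y_prev) + list(T)


Y1 = [(0, 0), (1, 0), (0, 1)]                                       # Pi_1-correct
Y2 = berzolari_radon(Y1, (-2, 1, 1), [(2, 0), (1, 1), (0, 2)])     # w = x + y - 2
Y2b = berzolari_radon(Y1, (-2, 0, 1), [(0, 2), (1, 2), (5, 2)])    # w = y - 2
print(is_correct(Y1, 1), is_correct(Y2, 2), is_correct(Y2b, 2))     # True True True
# three extra nodes on y = 0, which already contains two nodes of Y1
print(is_correct(Y1 + [(2, 0), (3, 0), (4, 0)], 2))                 # False
```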

Conversely, if \(Y_{n}\) is a \(\varPi _{n}\)-correct set and we remove the \(n+1\) nodes lying on a line Z(w), the resulting set \(Y_{n-1}:=Y_n{\setminus } Z(w)\) is a \(\varPi _{n-1}\)-correct set. In Theorem 1 of [9], the Berzolari-Radon construction is reviewed and explicit formulae for the fundamental polynomials of the extension are provided. These fundamental polynomials can be used to describe an H-basis \((h_0, \ldots , h_n)\) of the ideal \(I(Y_{n-1})\).

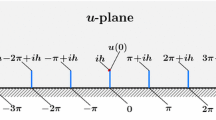

Let us revisit the construction of the syzygy matrices from [9], conveniently modified for our purposes. In the next result we construct an H-basis \((h_0, \ldots , h_n)\) of the ideal \(I(Y_{n-1})\) and find a matrix relation for defining syzygies of \((h_0, \ldots , h_n)\). To that end, we introduce some notation that we will use in the remainder of the paper.

Definition 5

Let \(Y_{n-1}\) be a \(\varPi _{n-1}\)-correct set and \(T=\{t_0, \ldots , t_n\}\) be a set of distinct nodes lying on a line Z(w) such that \(Z(w) \cap Y_{n-1}= \emptyset \), and let \(Y_n:=Y_{n-1} \cup T\). Let v be a first degree polynomial such that 1, v, w form a basis of \(\varPi _1\). We use \(v_k := v(t_k)\), \(k=0,\dots ,n\), to define the polynomials

and

These polynomials belong to \(\varPi _{n-1}\) and interpolate \(d_j/w\), \(j=0, \ldots , n+1\), and \(d_{n+1}/w^2\) on \(Y_{n-1}\), respectively. Moreover, set

\(h := \left[ h_0,\dots ,h_n \right] ^T\) and

Finally, let \(B = [b_{jk}]_{j,k=0, \ldots , n} \in \mathbb {R}^{(n+1) \times (n+1)}\) be the matrix whose entries are

and \(V:=\mathrm{diag\,}(v_0, \ldots , v_n)\). Then we set

$$ S := vI - V - wB \in \varPi _1^{(n+1)\times (n+1)}, $$

with I denoting the identity matrix, and use \(S_{[j]}\) for the matrix obtained by replacing the j-th column of S by e, \(j=0,\dots ,n\).

Proposition 4

Let the assumptions of Definition 5 be satisfied. Then

- (a)

The polynomials \(h_0,\dots ,h_n\) are a basis of \(I(Y_{n-1}) \cap \varPi _{n}\) and an H-basis of \(I(Y_{n-1})\).

- (b)

The polynomials \(g_0,\dots ,g_{n+1}\) are a basis of \(I(Y_n)\cap \varPi _{n+1}\) and an H-basis of \(I(Y_n)\).

- (c)

The following relation specializing (1) holds: \(Sh = g_{n+1} e\).

- (d)

\(g_{n+1}= \det S\) and \(h_j= \det S_{[j]}\), \(j=0, \ldots , n\).

Proof

(a) The set \(Y_n\) is \(\varPi _n\)-correct and the Lagrange fundamental polynomials for the nodes in the set \(Y_n\) can be written in the form (see Theorem 1 of [9])

By Theorem 2 of [9], the \((\ell _{t_j, Y_{n}})_{j=0, \ldots ,n}\) form a basis of the vector space \(I(Y_{n-1}) \cap \varPi _n\) and thus are an H-basis for the ideal \(I(Y_{n-1})\). Since

we deduce that \((h_0, \ldots , h_n)\) is an H-basis of \(I(Y_{n-1})\).

(b) Since the polynomials \(g_0, \ldots , g_n, g_{n+1} \in I(Y_n) \cap \varPi _{n+1}\) are linearly independent, we deduce from Corollary 1 of [9] that \((g_0, \ldots , g_n,g_{n+1})\) is a basis of \(I(Y_n) \cap \varPi _{n+1}\) and an H-basis of \(I(Y_n)\).

(c) We note that \((v -v_j) h_j \in I(Y_n) \cap \varPi _{n+1}\), \(j=0,\dots ,n\), are linearly independent polynomials since the homogeneous leading forms of \(h_0,\dots ,h_n\) are linearly independent. By (b), there exist constants \(b_{jk}\) such that

$$ (v - v_j)\, h_j = \sum _{k=0}^{n+1} b_{jk}\, g_k, \qquad j = 0, \ldots , n. \tag{8} $$

Since 1, v, w form a basis of \(\varPi _1\), we can interpret (8) as a polynomial in v and w and compare the coefficients of \(v^{n+1}\) on both sides of (8), leading to \(b_{j,n+1}=1\), \(j =0, \ldots , n\). Consequently, by (6),

$$ (v - v_j)\, h_j - w \sum _{k=0}^{n} b_{jk}\, h_k = g_{n+1}, \qquad j = 0, \ldots , n, \tag{9} $$

and thus \(Sh=g_{n+1}e\).

Let us now describe a formula for elements \(b_{jk}\) of the matrix B. Using (3) and (4) we have

Comparing this with (9), we find that

Due to (7) we have

Hence, substituting \(x=t_k\) into formula (10) and noticing that \(w (t_k)= 0\), we obtain

which is the expression given in (5). In particular \(b_{jj}=0\), \(j=0, \ldots , n\), and B has zero diagonal entries.

(d) From Proposition 2, it follows that there exists a constant c such that \(g_{n+1}=c \det S\) and \(h_j= c\det S_{[j]}\), \(j=0, \ldots , n\). Expanding \(\det S\) and ordering the expansion by powers of w, we get

By (4), the coefficient of \(v^{n+1}\) in \(g_{n+1}\) coincides with the coefficient of \(v^{n+1}\) in \(d_{n+1}= \prod _{k=0}^n (v-v_k)\) and thus also with the coefficient of \(v^{n+1}\) in \(\det S\), hence \(c=1\) and \(h_j= \det S_{[j]}\), \(j=0, \ldots , n\), and \(g_{n+1}= \det S\).
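Proposition 4 can be illustrated on a small Berzolari-Radon extension. The Python sketch below assumes \(Y_1 = \{(0,0), (1,0), (0,1)\}\), \(w = x + y - 2\), \(v = x\) and \(T = \{t_0, t_1, t_2\} = \{(2,0), (1,1), (0,2)\}\), so that \(v_k = 2, 1, 0\) and \(Y_2\) is the principal lattice of degree 2. For this configuration one can check by hand that \(h = (x^2 - x, -xy, y^2 - y)\) (normalized so that the coefficient of \(v^2\) is 1), \(g_3 = x(x-1)(x-2)\) and \(B = [[0,0,0],[-1,0,0],[-1,-1,0]]\) satisfy \(Sh = g_3 e\); these values were worked out for this example only. The code verifies (c) and (d) in exact integer arithmetic; all helper names are hypothetical.

```python
def poly(*terms):
    """Polynomial as {(i, j): coeff}; a term (c, i, j) stands for c * x**i * y**j."""
    p = {}
    for c, i, j in terms:
        p[(i, j)] = p.get((i, j), 0) + c
    return {m: c for m, c in p.items() if c != 0}


def add(*ps):
    r = {}
    for p in ps:
        for m, c in p.items():
            r[m] = r.get(m, 0) + c
    return {m: c for m, c in r.items() if c != 0}


def neg(p):
    return {m: -c for m, c in p.items()}


def mul(p, q):
    r = {}
    for (i1, j1), c1 in p.items():
        for (i2, j2), c2 in q.items():
            m = (i1 + i2, j1 + j2)
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def det(M):
    """Determinant of a small square polynomial matrix by cofactor expansion."""
    if len(M) == 1:
        return M[0][0]
    total = {}
    for k, entry in enumerate(M[0]):
        term = mul(entry, det([row[:k] + row[k + 1:] for row in M[1:]]))
        total = add(total, term if k % 2 == 0 else neg(term))
    return total


def const(c):
    return poly((c, 0, 0))


def replace_column(M, j, col):
    """The matrix M_[j]: the j-th column of M replaced by col."""
    return [row[:j] + [col[r]] + row[j + 1:] for r, row in enumerate(M)]


# Hand-worked example data (assumption of this sketch)
x, one = poly((1, 1, 0)), poly((1, 0, 0))
w = poly((1, 1, 0), (1, 0, 1), (-2, 0, 0))      # w = x + y - 2
v = [2, 1, 0]                                   # v_k = v(t_k) for v = x
h = [poly((1, 2, 0), (-1, 1, 0)),               # h_0 = x^2 - x
     poly((-1, 1, 1)),                          # h_1 = -x*y
     poly((1, 0, 2), (-1, 0, 1))]               # h_2 = y^2 - y
g3 = poly((1, 3, 0), (-3, 2, 0), (2, 1, 0))     # g_3 = x(x - 1)(x - 2)
B = [[0, 0, 0], [-1, 0, 0], [-1, -1, 0]]
m = len(v)                                      # m = n + 1

# S = vI - V - wB, i.e. s_jk = delta_jk (v - v_j) - b_jk w
S = [[add(add(x, const(-v[j])) if j == k else {}, mul(const(-B[j][k]), w))
      for k in range(m)] for j in range(m)]

Sh = [add(*(mul(S[j][k], h[k]) for k in range(m))) for j in range(m)]
print(Sh == [g3] * m)                                                # (c)
print(det(S) == g3)                                                  # (d)
print(all(det(replace_column(S, j, [one] * m)) == h[j] for j in range(m)))
```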

Proposition 4(d) can be used to obtain expansions of \(h_j\), \(j=0, \ldots , n\), and \(g_{n+1}\) into powers of w in terms of the elements of the matrix B with zero diagonal entries. The identity \(h_j=\det S_{[j]}\) holds with

Expanding the determinant of \(S_{[j]}\) into powers of w, we deduce that

where \(B_{[j]}\) is the matrix obtained from B, replacing its j-th row by e.

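We can now obtain syzygies in a similar way to Sect. 3 of [9]. Forming the difference of any two equations in (9),

$$ (v - v_j)\, h_j - (v - v_i)\, h_i - w \sum _{k=0}^n (b_{jk} - b_{ik})\, h_k = 0, \qquad i, j = 0, \ldots , n, $$

yields the syzygies \(\sigma _{ji}=[\sigma _{ji0} , \ldots , \sigma _{jin}]\) with

$$ \sigma _{jik} = (v - v_j)\, \delta _{jk} - (v - v_i)\, \delta _{ik} - w\, (b_{jk} - b_{ik}), \qquad k = 0, \ldots , n. $$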
Let \(\varSigma ^{(i)}\) be the matrix whose rows are the syzygies \(\sigma _{ji}\), \(j \in \{0, \ldots , n\} {\setminus } \{i\}\). Observe that \(\varSigma ^{(i)}\) is the matrix obtained from \(S=vI -V - w B\) by subtracting the i-th row from all the other rows and deleting the i-th row. By Proposition 2, \( \varSigma ^{(i)}\) is a syzygy matrix of \((h_0, \ldots , h_n)\), \(i=0, \ldots , n\). Let \(\varSigma _j^{(i)} \in \varPi _1^{n \times n}\) be the matrix obtained by removing the j-th column of \(\varSigma ^{(i)}\). From Proposition 4 (d), it follows that

and, using Cramer’s rule, we can deduce \(h_j= (-1)^{i-j}\det \varSigma _j^{(i)}\) (see also Theorem 4 of [9]).
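The identity \(h_j = (-1)^{i-j}\det \varSigma _j^{(i)}\) can be checked for every i and j on a small example. The Python sketch assumes the hand-worked configuration \(Y_1 = \{(0,0), (1,0), (0,1)\}\), \(w = x + y - 2\), \(v = x\), \(T = \{(2,0), (1,1), (0,2)\}\), for which \(h = (x^2 - x, -xy, y^2 - y)\) and \(B = [[0,0,0],[-1,0,0],[-1,-1,0]]\); these values are assumptions of the illustration. It builds \(\varSigma ^{(i)}\) from the rows \(S_j - S_i\), \(j \ne i\), in increasing order of j, which is the row ordering under which the sign is verified here. Helper names are hypothetical.

```python
def poly(*terms):
    """Polynomial as {(i, j): coeff}; a term (c, i, j) stands for c * x**i * y**j."""
    p = {}
    for c, i, j in terms:
        p[(i, j)] = p.get((i, j), 0) + c
    return {m: c for m, c in p.items() if c != 0}


def add(*ps):
    r = {}
    for p in ps:
        for m, c in p.items():
            r[m] = r.get(m, 0) + c
    return {m: c for m, c in r.items() if c != 0}


def neg(p):
    return {m: -c for m, c in p.items()}


def mul(p, q):
    r = {}
    for (i1, j1), c1 in p.items():
        for (i2, j2), c2 in q.items():
            m = (i1 + i2, j1 + j2)
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def det(M):
    """Determinant of a small square polynomial matrix by cofactor expansion."""
    if len(M) == 1:
        return M[0][0]
    total = {}
    for k, entry in enumerate(M[0]):
        term = mul(entry, det([row[:k] + row[k + 1:] for row in M[1:]]))
        total = add(total, term if k % 2 == 0 else neg(term))
    return total


def const(c):
    return poly((c, 0, 0))


# Hand-worked example data (assumption of this sketch)
x = poly((1, 1, 0))
w = poly((1, 1, 0), (1, 0, 1), (-2, 0, 0))      # w = x + y - 2
v = [2, 1, 0]                                   # v_k = v(t_k) for v = x
h = [poly((1, 2, 0), (-1, 1, 0)),               # h_0 = x^2 - x
     poly((-1, 1, 1)),                          # h_1 = -x*y
     poly((1, 0, 2), (-1, 0, 1))]               # h_2 = y^2 - y
B = [[0, 0, 0], [-1, 0, 0], [-1, -1, 0]]
m = len(v)                                      # m = n + 1

# S = vI - V - wB
S = [[add(add(x, const(-v[j])) if j == k else {}, mul(const(-B[j][k]), w))
      for k in range(m)] for j in range(m)]


def syzygy_matrix(S, i):
    """Sigma^(i): rows S_j - S_i for j != i, in increasing order of j."""
    return [[add(S[j][k], neg(S[i][k])) for k in range(len(S))]
            for j in range(len(S)) if j != i]


results = []
for i in range(m):
    Sig = syzygy_matrix(S, i)
    rows_ok = all(add(*(mul(r[k], h[k]) for k in range(m))) == {} for r in Sig)
    dets_ok = True
    for j in range(m):
        d = det([r[:j] + r[j + 1:] for r in Sig])      # det Sigma_j^(i)
        signed = d if (i - j) % 2 == 0 else neg(d)     # (-1)^(i-j) det Sigma_j^(i)
        dets_ok = dets_ok and signed == h[j]
    results.append((rows_ok, dets_ok))

print(results)   # [(True, True), (True, True), (True, True)]
```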

In general, we can apply Proposition 2 to obtain syzygy matrices MS multiplying any constant matrix \(M \in \mathbb {R}^{n \times (n+1)}\) of rank n with \(Me=0\) by the matrix \(S=vI-V-wB\).

Let us now consider a modification of the relation \(Sh=g_{n+1}e\) of Proposition 4 (c). For this purpose, we define the crucial matrix that we will consider for the rest of the paper and whose entries can be regarded as equations of lines related to the set \(Y_n\) of nodes.

Definition 6

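Under the hypotheses of Definition 5, we define

$$ A := (e e^T - I)(vI - V) + w B \in \varPi _1^{(n+1)\times (n+1)}, \tag{14} $$

whose entries are the polynomials

$$ a_{jk} = (1 - \delta _{jk})(v - v_k) + b_{jk}\, w, \qquad j, k = 0, \ldots , n, \tag{15} $$

that are either zero (on the diagonal, since \(b_{jj} = 0\)) or have degree 1.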

Proposition 5

Under the assumptions of Definitions 5 and 6,

- (a)

\(A [h_0 , \ldots , h_n]^T = g e\), where

$$\begin{aligned} g =\sum _{k=0}^n (v -v_k) \, h_k - g_{n+1} \in \varPi _{n+1} \cap I(Y_n), \end{aligned}$$

- (b)

\( \det A =(-1)^{n}g \ne 0\),

- (c)

we have for any \(k=0, 1, \ldots , n\), that

$$\begin{aligned} \nabla g(t_k) =d_k(t_k) \sum _{j\ne k} \nabla a_{jk}, \quad \nabla h_j(t_k) = \frac{d_k(t_k)}{v_k - v_j} \nabla a_{jk}, \qquad j \ne k. \end{aligned}$$

Proof

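(a) Using (15) and the relations (9) we find that

$$ \sum _{k=0}^n a_{jk} h_k = \sum _{k \ne j} (v - v_k)\, h_k + w \sum _{k=0}^n b_{jk} h_k = \sum _{k=0}^n (v - v_k)\, h_k - g_{n+1} = g $$

for all j, so that \(A [h_0 , \ldots , h_n]^T =ge\).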

(b) Adding the relations in (9), we get

and so we have

that is

From (16), it follows that the coefficient of \(v^{n+1}\) in the expansion of g in terms of v and w is n. From (14), the coefficient of \(v^{n+1}\) in \(\det (- A)\) is \(\det (I-ee^T) = 1 - e^Te = -n\). Applying Proposition 2 and comparing terms in \(v^{n+1}\), we deduce that \(g=-\det (-A)=(-1)^n \det A\). Moreover, setting \(w = 0\) in (16) and noting that \(d_{n+1}\) does not vanish identically on this line, we conclude that \(g \ne 0\).

(c) Differentiating (16), substituting \(x=t_k\) and recalling that \(w(t_k)= 0\), we get

Now we differentiate (3) and obtain, using (5),

Observe that the matrix relation \(-Ah =-ge\) can be obtained from \(Sh=g_{n+1} e\) using Proposition 3, with \(S=vI-V-wB\), \(N=I\) and \([s_0 , \ldots , s_n] =e^T (vI-V)\). In fact,

$$ S - e\, e^T (vI - V) = (I - e e^T)(vI - V) - wB = -A. $$
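The syzygy matrices \(\varSigma ^{(j)}\), \(j=0, \ldots , n\), are the matrices obtained from \(S=vI-V-wB\) by subtracting the j-th row from all other rows (and deleting the j-th row). Since S and \(-A\) differ by \(e\, e^T (vI - V)\), whose rows all coincide, the differences between rows of S coincide with the differences between the corresponding rows of \(-A\). Hence the syzygy matrices \(\varSigma ^{(j)}\) can be obtained either from S or from \(-A\) by subtracting the j-th row from all the other rows. For instance, choosing \(j=0\), we obtain the syzygy matrix

$$ \varSigma ^{(0)} = \begin{bmatrix} a_{00} - a_{10} & a_{01} - a_{11} & \cdots & a_{0n} - a_{1n} \\ \vdots & \vdots & & \vdots \\ a_{00} - a_{n0} & a_{01} - a_{n1} & \cdots & a_{0n} - a_{nn} \end{bmatrix}. $$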
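Proposition 5 (a), (b) and the relation \(S - e\,e^T(vI - V) = -A\) can be checked on a small example. The Python sketch assumes the hand-worked configuration \(Y_1 = \{(0,0), (1,0), (0,1)\}\), \(w = x + y - 2\), \(v = x\), \(T = \{(2,0), (1,1), (0,2)\}\), with \(h = (x^2 - x, -xy, y^2 - y)\), \(g_3 = x(x-1)(x-2)\) and \(B = [[0,0,0],[-1,0,0],[-1,-1,0]]\); the entries of A are built as \(a_{jk} = (1-\delta _{jk})(v - v_k) + b_{jk} w\), which is what the relation \(S - e\,e^T(vI-V) = -A\) gives entrywise. In this example \(g = xy(y - x)\). Helper names are hypothetical.

```python
def poly(*terms):
    """Polynomial as {(i, j): coeff}; a term (c, i, j) stands for c * x**i * y**j."""
    p = {}
    for c, i, j in terms:
        p[(i, j)] = p.get((i, j), 0) + c
    return {m: c for m, c in p.items() if c != 0}


def add(*ps):
    r = {}
    for p in ps:
        for m, c in p.items():
            r[m] = r.get(m, 0) + c
    return {m: c for m, c in r.items() if c != 0}


def neg(p):
    return {m: -c for m, c in p.items()}


def mul(p, q):
    r = {}
    for (i1, j1), c1 in p.items():
        for (i2, j2), c2 in q.items():
            m = (i1 + i2, j1 + j2)
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def det(M):
    """Determinant of a small square polynomial matrix by cofactor expansion."""
    if len(M) == 1:
        return M[0][0]
    total = {}
    for k, entry in enumerate(M[0]):
        term = mul(entry, det([row[:k] + row[k + 1:] for row in M[1:]]))
        total = add(total, term if k % 2 == 0 else neg(term))
    return total


def const(c):
    return poly((c, 0, 0))


# Hand-worked example data (assumption of this sketch)
x = poly((1, 1, 0))
w = poly((1, 1, 0), (1, 0, 1), (-2, 0, 0))      # w = x + y - 2
v = [2, 1, 0]                                   # v_k = v(t_k) for v = x
h = [poly((1, 2, 0), (-1, 1, 0)),               # h_0 = x^2 - x
     poly((-1, 1, 1)),                          # h_1 = -x*y
     poly((1, 0, 2), (-1, 0, 1))]               # h_2 = y^2 - y
g3 = poly((1, 3, 0), (-3, 2, 0), (2, 1, 0))     # g_3 = x(x - 1)(x - 2)
B = [[0, 0, 0], [-1, 0, 0], [-1, -1, 0]]
m = len(v)
n = m - 1

S = [[add(add(x, const(-v[j])) if j == k else {}, mul(const(-B[j][k]), w))
      for k in range(m)] for j in range(m)]                   # S = vI - V - wB
A = [[add({} if j == k else add(x, const(-v[k])), mul(const(B[j][k]), w))
      for k in range(m)] for j in range(m)]                   # a_jk as above
g = add(*(mul(add(x, const(-v[k])), h[k]) for k in range(m)), neg(g3))

Ah = [add(*(mul(A[j][k], h[k]) for k in range(m))) for j in range(m)]
prop5a = Ah == [g] * m                                   # A h = g e
prop5b = det(A) == (g if n % 2 == 0 else neg(g))         # det A = (-1)^n g
# subtract the row (v - v_0, ..., v - v_n) from every row of S
minus_A = [[add(S[j][k], neg(add(x, const(-v[k])))) for k in range(m)] for j in range(m)]
prop3 = minus_A == [[neg(a) for a in row] for row in A]

print(g == poly((1, 1, 2), (-1, 2, 1)), prop5a, prop5b, prop3)   # True True True True
```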
4 Maximal lines

Maximal lines, introduced by de Boor in [11], are a key tool in the description of \(\varPi _n\)-correct sets that can be obtained from the Berzolari-Radon construction. Since no line in the plane can contain more than \(n+1\) nodes of a \(\varPi _n\)-correct set, maximality has to be understood in the sense that a maximal line contains the largest number of nodes possible for any line in the plane.

Definition 7

A maximal line Z(w) of a \(\varPi _n\)-correct set Y is defined by the property that \(\# (Z(w) \cap Y) = n+1\). The set of maximal lines of a \(\varPi _n\)-correct set Y will be denoted by M(Y).
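For small examples, Definition 7 can be checked directly. The following Python sketch assumes integer node coordinates and a set Y that is already known to be \(\varPi _n\)-correct; it enumerates the lines through pairs of nodes and keeps those containing exactly \(n+1\) nodes. Lines are stored as normalized integer triples (a, b, c) for \(a x + b y + c = 0\); this representation and the function names are illustrative choices.

```python
from itertools import combinations
from math import gcd


def line_through(p, q):
    """Normalized integer triple (a, b, c) of the line a*x + b*y + c = 0 through p != q."""
    a, b = q[1] - p[1], p[0] - q[0]
    c = -(a * p[0] + b * p[1])
    g = gcd(gcd(a, b), c)
    a, b, c = a // g, b // g, c // g
    if a < 0 or (a == 0 and b < 0):      # fix the sign so that each line has one triple
        a, b, c = -a, -b, -c
    return (a, b, c)


def maximal_lines(Y, n):
    """Lines containing exactly n + 1 nodes of the (assumed Pi_n-correct) set Y."""
    lines = {line_through(p, q) for p, q in combinations(Y, 2)}
    return {L for L in lines
            if sum(L[0] * x + L[1] * y + L[2] == 0 for x, y in Y) == n + 1}


lattice = [(i, j) for i in range(3) for j in range(3 - i)]   # nodes with i + j <= 2
print(sorted(maximal_lines(lattice, 2)))   # [(0, 1, 0), (1, 0, 0), (1, 1, -2)]
```

For this set the maximal lines are \(y=0\), \(x=0\) and \(x+y=2\).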

The main structural advantage of the syzygy matrices is that they enable the detection of maximal lines, see Theorem 6 of [9]. The following result shows that maximal lines can even be directly detected in the matrix A.

Theorem 1

Let the hypotheses of Definition 5 be satisfied and let A be as in Definition 6. Then we have

- (a)

Let \(\ell = v-v_k + cw\) be such that \(Z(\ell )\) is a maximal line of \(Y_{n-1}\) passing through \(t_k\). Then \(\ell \) divides \(\det A\) and \(h_j\) for all \(j \ne k\) and \(a_{jk}= \ell \), for all \(j \ne k\).

- (b)

If all off diagonal elements of the k-th column of A coincide, then \(\ell = a_{jk}\), \(j \ne k\), defines a maximal line \(Z(\ell )\) of \(Y_{n-1}\) through \(t_k\).

- (c)

The product of all first degree polynomials defining maximal lines of \(Y_{n-1}\) passing through nodes in T divides \(\det A\).

Proof

(a) By Proposition 5 (a) and since \(a_{kk} = 0\),

$$ g = \sum _{j \ne k} a_{kj}\, h_j. $$
Since \(Z(\ell )\) contains n nodes of \(Y_{n-1}\) and the node \(t_k\), it is a maximal line of \(Y_n\), and it meets W only at \(t_k\). Since first degree polynomials defining maximal lines are factors of the Lagrange polynomials of all nodes not lying on them (see, for instance, Proposition 2.1 (v) of [3]), we have that \(\ell \) divides \(h_j\) for all \(j \ne k\). Taking into account that \(\ell \) divides all terms in \( \sum _{j\ne k} a_{kj} h_j\), it follows that \(\ell \) divides g and, by Proposition 5 (b), \(\ell \) also divides \(\det A\). For \(j \ne k\) we have

$$ g = a_{jk}\, h_k + \sum _{l \ne j,k} a_{jl}\, h_l. $$
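Since \(\ell \) divides g and all terms in \(\sum _{l\ne j,k} a_{jl} h_l\) but not \(h_k\) (because \(\ell (t_k) = 0\) while \(h_k(t_k) \ne 0\)), we deduce that \(\ell \) divides \(a_{jk}\). Since \(a_{jk}\) and \(\ell \) are both of the form \(v-v_k+c'w\) with a constant \(c'\), it follows that \(a_{jk}= \ell \) for all \(j \ne k\). So all off diagonal elements of the k-th column of A coincide with \(\ell \).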

(b) Assume that all off diagonal elements of the k-th column of A coincide, that is

$$ a_{jk} = \ell , \qquad j \ne k. $$
By Proposition 2 applied to the relation \(A[h_0, \ldots , h_n]^T = ge\), \(h_j\) coincides up to a constant with the determinant of the matrix \(A_{[j]}\) obtained from A by replacing its j-th column by e. For \(j \ne k\), the k-th column of \(A_{[j]}\) is \(\ell (e-e_k)\), so \(\ell \) divides \(h_j\) for all \(j \ne k\). Let \(L := Z(\ell )\). For any \(y \in Y_{n-1}{\setminus } L\), take a first degree polynomial \(n_y\) such that \(n_y(y)=0\) and \(n_y(t_k)=0\). Then \(n_y \ell _{y,Y_{n-1}} \in \varPi _n\cap I(Y_{n-1})\) and, since \((h_0, \ldots , h_n)\) is a basis of \(\varPi _n\cap I(Y_{n-1})\), we can write

$$ n_y\, \ell _{y,Y_{n-1}} = \sum _{j=0}^n c_j\, h_j. $$

Evaluating at \(t_k\) and taking into account (11), we conclude that \(c_k =0\). Since \(\ell \) divides \(h_j\) for all \(j \ne k\) but does not divide \(n_y\) (note that \(n_y(y)=0 \ne \ell (y)\)), we conclude that \(\ell \) divides all polynomials \(\ell _{y,Y_{n-1}}\), \(y \in Y_{n-1} {\setminus } L\). From the fact that \(\ell _{y,Y_{n-1}}\), \(y \in Y_{n-1} {\setminus } L\), are linearly independent polynomials in \(\ell \, \varPi _{n-2}:= \{ \ell \, p : p \in \varPi _{n-2}\}\), we deduce that \(\# ( Y_{n-1} {\setminus } L) \le \dim \varPi _{n-2}\) and hence \(\#(Y_{n-1} \cap L) \ge \dim \varPi _{n-1} - \dim \varPi _{n-2} = n\), which implies that L is a maximal line of \(Y_{n-1}\). Moreover, L passes through \(t_k\) because \(\ell (t_k) = a_{jk}(t_k) = 0\).

(c) By (a), any maximal line passing through some node in T is a factor of \(\det A\). So, the product of all maximal lines passing through a node in T divides \(\det A\).
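Theorem 1 (b) suggests a simple detection procedure: look for columns of A whose off-diagonal entries coincide. The Python sketch below applies it to a hand-worked example, which is an assumption of the illustration: \(Y_1 = \{(0,0),(1,0),(0,1)\}\), \(w = x + y - 2\), \(v = x\), \(T = \{(2,0),(1,1),(0,2)\}\), \(B = [[0,0,0],[-1,0,0],[-1,-1,0]]\) and \(a_{jk} = (1-\delta _{jk})(v - v_k) + b_{jk} w\). It finds the lines \(y = 0\) through \(t_0\) and \(x = 0\) through \(t_2\), both containing \(n = 2\) nodes of \(Y_1\), whereas the third maximal line \(x + y = 1\) of \(Y_1\) is parallel to W and passes through no node of T. Divisibility of \(\det A\) by a detected \(\ell \) is tested by checking that \(\det A\) vanishes at \(\deg \det A + 1\) points of \(Z(\ell )\). Helper names are hypothetical.

```python
from fractions import Fraction


def poly(*terms):
    """Polynomial as {(i, j): coeff}; a term (c, i, j) stands for c * x**i * y**j."""
    p = {}
    for c, i, j in terms:
        p[(i, j)] = p.get((i, j), 0) + c
    return {m: c for m, c in p.items() if c != 0}


def add(*ps):
    r = {}
    for p in ps:
        for m, c in p.items():
            r[m] = r.get(m, 0) + c
    return {m: c for m, c in r.items() if c != 0}


def neg(p):
    return {m: -c for m, c in p.items()}


def mul(p, q):
    r = {}
    for (i1, j1), c1 in p.items():
        for (i2, j2), c2 in q.items():
            m = (i1 + i2, j1 + j2)
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def det(M):
    """Determinant of a small square polynomial matrix by cofactor expansion."""
    if len(M) == 1:
        return M[0][0]
    total = {}
    for k, entry in enumerate(M[0]):
        term = mul(entry, det([row[:k] + row[k + 1:] for row in M[1:]]))
        total = add(total, term if k % 2 == 0 else neg(term))
    return total


def const(c):
    return poly((c, 0, 0))


def ev(p, pt):
    """Value of p at the point pt = (x, y)."""
    return sum(c * pt[0] ** i * pt[1] ** j for (i, j), c in p.items())


def vanishes_on_line(p, ell, deg):
    """p of degree <= deg vanishes on Z(ell), ell = c0 + cx*x + cy*y,
    iff it vanishes at deg + 1 distinct points of that line."""
    c0, cx, cy = (ell.get(mon, 0) for mon in [(0, 0), (1, 0), (0, 1)])
    if cy:
        pts = [(Fraction(s), Fraction(-(c0 + cx * s), cy)) for s in range(deg + 1)]
    else:
        pts = [(Fraction(-c0, cx), Fraction(s)) for s in range(deg + 1)]
    return all(ev(p, pt) == 0 for pt in pts)


# Hand-worked example data (assumption of this sketch)
x = poly((1, 1, 0))
w = poly((1, 1, 0), (1, 0, 1), (-2, 0, 0))      # w = x + y - 2
v = [2, 1, 0]                                   # v_k = v(t_k) for v = x
B = [[0, 0, 0], [-1, 0, 0], [-1, -1, 0]]
Y1 = [(0, 0), (1, 0), (0, 1)]
T = [(2, 0), (1, 1), (0, 2)]
m, n = len(v), len(v) - 1
A = [[add({} if j == k else add(x, const(-v[k])), mul(const(B[j][k]), w))
      for k in range(m)] for j in range(m)]

# a column whose off-diagonal entries coincide yields a maximal line through t_k
detected = {}
for k in range(m):
    off = [A[j][k] for j in range(m) if j != k]
    if all(a == off[0] for a in off):
        detected[k] = off[0]

through_t_k = {k: ev(ell, T[k]) == 0 for k, ell in detected.items()}
nodes_of_Y1 = {k: sum(ev(ell, p) == 0 for p in Y1) for k, ell in detected.items()}
# Theorem 1 (c): each detected line divides det A (deg det A = n + 1)
divides_detA = all(vanishes_on_line(det(A), ell, n + 1) for ell in detected.values())

print(detected)                                   # {0: {(0, 1): -1}, 2: {(1, 0): 1}}
print(through_t_k, nodes_of_Y1, divides_detA)     # {0: True, 2: True} {0: 2, 2: 2} True
```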

The zero set of each fundamental polynomial \(\ell _{t_j,Y_n}\) is an algebraic curve \(H_j:= Z(h_j)\) determined by the polynomial \(h_j\). If the gradient \(\nabla h_j(y) \ne 0\) at some point \(y \in H_j\), then \(\nabla h_j(y) (\cdot -y) =0\) is the equation of the tangent line of \(H_j\) at y. If \(\ell \) is a linear factor of \(h_j\), then the line \(Z(\ell )\) is a component of the algebraic curve \(H_j\). Therefore, if \(L = Z(\ell )\) is a component of \(H_j\) passing through a point \(y \in H_j\) with \(\nabla h_j(y) \ne 0\), then \(\ell \) coincides with \(\nabla h_j(y) (\cdot -y)\) up to a nonzero constant factor. Proposition 5 can be used to relate the elements of the matrix \(A \in \varPi _1^{(n+1)\times (n+1)}\) to the tangents of \(H_j\) and Z(g) at the nodes in T. In this way, linear factors of \(h_j\) and g can be related to the elements of the matrix A.

Let us observe that, by Theorem 1, maximal lines are linear factors of the H-basis \((h_0, \ldots , h_n)\) and of the polynomial g. The relation \(A[h_0 , \ldots , h_n]^T=ge\) can be used in order to identify linear factors of the H-basis \((h_0, \ldots , h_n)\) and of g in terms of the elements of the matrix A. In particular, if all the \(h_j\), \(j=0, \ldots , n\), are products of first degree polynomials, then all elements of the matrix A can be obtained directly from the factors of \(h_j\), \(j=0, \ldots , n\).

Theorem 2

Let the hypotheses of Definition 5 be satisfied and let A be as in Definition 6.

- (a)

If a first degree polynomial \(\ell \) is a factor of \(\det A\), then there exists \(0 \le k \le n\) such that \(\ell \) coincides, up to a constant factor, with \( \sum _{j \ne k}a_{jk}\).

- (b)

If a first degree polynomial \(\ell \) is a factor of \(h_j\), then there exists \(k \ne j\) such that \(\ell \) coincides, up to a constant factor, with \(a_{jk}\).

- (c)

If \(h_j\) can be factored into a product of first degree polynomials, then \(h_j= \prod _{k \ne j} a_{jk}\).

Proof

(a) Since \(\det A = (-1)^n g\) by Proposition 5 (b), we may assume that a first degree polynomial \(\ell \) is a factor of g, that is, \(g = \ell \, q\) with \(q \in \varPi _n\). Clearly w is not a factor of g, because w divides \(g-n\,d_{n+1}\) but not \(d_{n+1}\). The polynomial q cannot vanish at all \(n+1\) nodes of \(T=\{t_0, \ldots , t_n\}\), since otherwise q, which has degree at most n, would vanish identically on Z(w), and w would divide q and hence g. Since \(g \in \varPi _{n+1} \cap I(Y_n)\) vanishes at T, we conclude that there exists some k such that \(\ell (t_k)=0\) and \(q(t_k) \ne 0\). Differentiating, we have that \(\nabla g(t_k) = q(t_k) \nabla \ell \). From Proposition 5 (c) we conclude that \(\ell \) and \(\sum _{j\ne k} a_{jk}\) are first degree polynomials vanishing at the same point \(t_k\) with proportional gradients. So \(\ell \) coincides, up to a constant factor, with \( \sum _{j \ne k}a_{jk}\).

(b) The proof is analogous. Assume that \(h_j = \ell \, q_j\), with \(q_j \in \varPi _{n-1}\). Since \(h_j\) vanishes at \(T {\setminus } \{t_j\}\) and \(q_j\) cannot vanish at all n nodes of \(T {\setminus } \{t_j\}\) (otherwise w would divide \(q_j\) and hence \(h_j\), contradicting \(h_j(t_j) \ne 0\)), there exists \(k \ne j\) such that \(\ell (t_k)=0\) and \(q_j(t_k) \ne 0\). Differentiating and using Proposition 5 (c), we find that \(\nabla \ell \) and \(\nabla a_{jk}\) are both proportional to \(\nabla h_j(t_k) \ne 0\). Since \(\ell \) and \(a_{jk}\) both vanish at \(t_k\), \(\ell \) coincides, up to a constant factor, with \(a_{jk}\).

(c) Assume that \(h_j\) is a product of first degree polynomials. Since w cannot be a factor of \(h_j\) and \(h_j\) vanishes at \(T {\setminus }\{t_j\}\), we deduce that for each \(k \ne j\) there exists exactly one factor \(\ell _{jk}\) vanishing at \(t_k\), and we can assume without loss of generality that

$$ h_j = \prod _{k \ne j} \ell _{jk}, \qquad \ell _{jk}(t_k) = 0. $$
By (b), \(\ell _{jk}\) coincides up to a constant with \(a_{jk}\). Then we have \(h_j = c\prod _{k \ne j} a_{jk}\). Comparing the coefficients of \(v^n\), we deduce that \(c=1\).

Theorem 2 (c) can also be proven using formula (12). If \(h_j =\prod _{k\ne j} \ell _{jk}\) is a product of first degree polynomials, we can assume without loss of generality that \(\ell _{jk}(t_k)=0\) and hence there exists \(\beta _{jk}\) such that \(\ell _{jk}=v-v_k +\beta _{jk} w\). Comparing (12) with the expansion into powers of w of the product

$$ \prod _{k \ne j} \big( v - v_k + \beta _{jk}\, w \big), $$

we deduce that \(\beta _{jk} = b_{jk}\) and \( \ell _{jk}=a_{jk}\), for all \(k \ne j\). So \(h_j = \prod _{k\ne j} a_{jk}\).
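For a hand-worked example in which every \(h_j\) factors into lines, Theorem 2 (c) and the gradient identities of Proposition 5 (c) can be checked numerically. The Python sketch assumes \(Y_1 = \{(0,0),(1,0),(0,1)\}\), \(w = x + y - 2\), \(v = x\), \(T = \{(2,0),(1,1),(0,2)\}\) (so \(Y_2\) is the principal lattice of degree 2, a GC set), \(h = (x^2-x, -xy, y^2-y)\), \(g_3 = x(x-1)(x-2)\), \(B = [[0,0,0],[-1,0,0],[-1,-1,0]]\) and \(a_{jk} = (1-\delta _{jk})(v - v_k) + b_{jk} w\). It also assumes \(d_k = \prod _{l \ne k}(v - v_l)\), consistent with \(d_{n+1} = \prod _{k=0}^n (v - v_k)\), so that \(d_k(t_k) = \prod _{l\ne k}(v_k - v_l)\). The identity for \(\nabla h_j\) is checked after multiplying by \(v_k - v_j\), which avoids division. Helper names are hypothetical.

```python
from functools import reduce
from math import prod


def poly(*terms):
    """Polynomial as {(i, j): coeff}; a term (c, i, j) stands for c * x**i * y**j."""
    p = {}
    for c, i, j in terms:
        p[(i, j)] = p.get((i, j), 0) + c
    return {m: c for m, c in p.items() if c != 0}


def add(*ps):
    r = {}
    for p in ps:
        for m, c in p.items():
            r[m] = r.get(m, 0) + c
    return {m: c for m, c in r.items() if c != 0}


def neg(p):
    return {m: -c for m, c in p.items()}


def mul(p, q):
    r = {}
    for (i1, j1), c1 in p.items():
        for (i2, j2), c2 in q.items():
            m = (i1 + i2, j1 + j2)
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def const(c):
    return poly((c, 0, 0))


def ev(p, pt):
    return sum(c * pt[0] ** i * pt[1] ** j for (i, j), c in p.items())


def diff(p, var):
    """Partial derivative with respect to x (var = 0) or y (var = 1)."""
    r = {}
    for (i, j), c in p.items():
        if var == 0 and i > 0:
            r[(i - 1, j)] = c * i
        elif var == 1 and j > 0:
            r[(i, j - 1)] = c * j
    return r


def grad(p, pt):
    return (ev(diff(p, 0), pt), ev(diff(p, 1), pt))


def scaled(c, vec):
    return tuple(c * t for t in vec)


# Hand-worked example data (assumption of this sketch)
x = poly((1, 1, 0))
w = poly((1, 1, 0), (1, 0, 1), (-2, 0, 0))      # w = x + y - 2
v = [2, 1, 0]                                   # v_k = v(t_k) for v = x
T = [(2, 0), (1, 1), (0, 2)]
h = [poly((1, 2, 0), (-1, 1, 0)), poly((-1, 1, 1)), poly((1, 0, 2), (-1, 0, 1))]
g3 = poly((1, 3, 0), (-3, 2, 0), (2, 1, 0))     # g_3 = x(x - 1)(x - 2)
B = [[0, 0, 0], [-1, 0, 0], [-1, -1, 0]]
m = len(v)
A = [[add({} if j == k else add(x, const(-v[k])), mul(const(B[j][k]), w))
      for k in range(m)] for j in range(m)]
g = add(*(mul(add(x, const(-v[k])), h[k]) for k in range(m)), neg(g3))

# Theorem 2 (c): h_j = prod_{k != j} a_jk
thm2c = all(reduce(mul, (A[j][k] for k in range(m) if k != j), const(1)) == h[j]
            for j in range(m))
d = [prod(v[k] - v[l] for l in range(m) if l != k) for k in range(m)]   # d_k(t_k)
# Proposition 5 (c) times (v_k - v_j): (v_k - v_j) grad h_j(t_k) = d_k(t_k) grad a_jk
prop5c_h = all(scaled(v[k] - v[j], grad(h[j], T[k])) == scaled(d[k], grad(A[j][k], T[k]))
               for j in range(m) for k in range(m) if j != k)
# Proposition 5 (c): grad g(t_k) = d_k(t_k) * sum_{j != k} grad a_jk
prop5c_g = all(grad(g, T[k]) == scaled(d[k], grad(add(*(A[j][k] for j in range(m) if j != k)), T[k]))
               for k in range(m))
print(thm2c, prop5c_h, prop5c_g)   # True True True
```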

As a consequence of the preceding theorems, we can characterize maximal lines of a \(\varPi _{n-1}\)-correct set in terms of its ideal.

Corollary 1

Let \(Y_{n-1}\) be a \(\varPi _{n-1}\)-correct set. Then \(Y_{n-1}\) contains a maximal line if and only if there exists \(t \in \mathbb {R}^2 {\setminus } Y_{n-1}\) such that all polynomials in \(I(Y_{n-1} \cup \{t\}) \cap \varPi _n\) have parallel gradients at t, i.e. there exists a line \(L = Z(\ell )\) through t such that for each \(f \in I(Y_{n-1} \cup \{t\})\cap \varPi _n\) we have \(\nabla f(t)=\lambda \nabla \ell \) for some \(\lambda \in \mathbb {R}\).

Proof

Suppose that \(Y_{n-1}\) contains a maximal line \(L = Z(\ell )\). Let \(t \in L {\setminus } Y_{n-1}\). By the Berzolari-Radon construction, we can choose a line W containing \(t_0=t\) and no node of \(Y_{n-1}\) to construct \(Y_n = Y_{n-1} \cup \{t_0, \ldots , t_n\}\). By Theorem 1 (a), \(\ell \) divides \(h_1, \ldots , h_n\). By Proposition 4 (a), and since \(h_1, \ldots , h_n\) vanish at \(t_0 = t\) while \(h_0\) does not, these polynomials form a basis of the vector space \(I(Y_{n-1} \cup \{t\}) \cap \varPi _n\). Hence \(\ell \) divides any \(f \in I(Y_{n-1} \cup \{t\}) \cap \varPi _n\), that is, \(f =\ell q\) and \(\nabla f(t) =q(t) \nabla \ell \) because \(\ell (t) = 0\). For the converse, we again make a Berzolari-Radon completion with \(t_0=t\) and use Proposition 5 (c) and Theorem 1 (b) to conclude that L is a maximal line of \(Y_{n-1}\).

Let us derive some consequences of Theorem 2. By (13), we know that \(h_0=\det \varSigma _0^{(0)}\). Here \(\varSigma _0^{(0)}\) is the matrix obtained from \(S=vI-V-wB\) by subtracting the first row from the other rows and removing the first row and the first column. Let \(B_0^{(0)}\) denote the matrix obtained from B in the same way, that is, by subtracting the first row from the other rows and removing the first row and the first column. Then we can write \(\varSigma _0^{(0)}=vI-\mathrm{diag}(v_1,\ldots ,v_n)-wB_0^{(0)}\).

If \(h_0\) is a product of first degree polynomials, we get from Theorem 2 (c) that the determinant of \(vI- \mathrm{diag}(v_1, \ldots , v_n) - wB_0^{(0)}\) is the product of its diagonal entries for all v, w. It follows that, for each fixed w, the eigenvalues of the matrix \(\mathrm{diag}(v_1,\ldots ,v_n)+wB_0^{(0)}\) are just its diagonal entries. Hence the eigenvalues of \(\varSigma _0^{(0)}\) are also just its diagonal entries.

Reordering the elements of the H-basis, the above argument applies to any \(j \in \{0,\ldots , n\}\). If \(h_j\) is a product of first degree polynomials, then the determinant of the polynomial matrix \(\varSigma _j^{(j)}\) is the product of its diagonal entries, and its eigenvalues are just its diagonal entries.

5 GC sets and factors of the fundamental polynomials

The geometric characterization introduced in [10] identifies \(\varPi _n\)-correct sets Y in the plane such that all the Lagrange fundamental polynomials \(\ell _{y,Y} \in \varPi _n\), \(y\in Y\), can be expressed as products of first degree polynomials.

Definition 8

A \(\text {GC}_n\) set Y is a set with \(\# Y =\binom{n+2}{2}\) such that, for each \(y \in Y\), there exists a set \(\varGamma _{y,Y}\) of n lines containing all points in Y but y, that is, \(Y{\setminus } \bigcup _{K \in \varGamma _{y,Y}} K =\{y\}\).
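Definition 8 can be checked mechanically on the classical example of a \(\text {GC}_n\) set, the principal lattice \(\{(a,b)\in \mathbb {Z}^2: a,b\ge 0,\ a+b\le n\}\) (cf. [10]). The sketch below is our own illustration. For the node (a,b), it uses the n witness lines x = s (s < a), y = s (s < b) and x + y = n - s (s < n-a-b). It verifies that these lines cover every node except (a,b). The helper names are hypothetical.

```python
from math import comb


def principal_lattice(n):
    """Nodes (a, b) with a, b >= 0 and a + b <= n."""
    return [(a, b) for a in range(n + 1) for b in range(n + 1 - a)]


def gc_lines(node, n):
    """Witness set Gamma_{y,Y}: lines A*x + B*y = C stored as (A, B, C)."""
    a, b = node
    return ([(1, 0, s) for s in range(a)]                  # x = s, s < a
            + [(0, 1, s) for s in range(b)]                # y = s, s < b
            + [(1, 1, n - s) for s in range(n - a - b)])   # x + y = n - s


def on_line(p, line):
    A, B, C = line
    return A * p[0] + B * p[1] == C


def principal_lattice_is_gc(n):
    """Check #Y = C(n+2, 2) and that each node has n lines covering exactly Y minus it."""
    Y = principal_lattice(n)
    if len(Y) != comb(n + 2, 2):
        return False
    for y in Y:
        lines = gc_lines(y, n)
        covered = {p for p in Y if any(on_line(p, l) for l in lines)}
        if len(lines) != n or covered != set(Y) - {y}:
            return False
    return True


print(all(principal_lattice_is_gc(n) for n in range(6)))  # True
```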

From the definition, it follows that a \(\text {GC}_n\) set is \(\varPi _n\)-correct. If \(Y_n\) is a \(\text {GC}_n\) set and a maximal line \(W \in M(Y_n)\) is removed, the remaining set \(Y_{n-1}=Y_n {\setminus } W\) is a \(\text {GC}_{n-1}\)-set and all the Lagrange polynomials \(\ell _{t_j,Y_n}(x)\), \(t_j \in Y_n\cap W\), \(j=0, \ldots , n\), are products of first degree polynomials, and so are the H-basis polynomials \(h_0,\dots ,h_n\).
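The simplest example is the principal lattice \(Y_n=\{(a,b)\in \mathbb {Z}^2: a,b\ge 0,\ a+b\le n\}\) with the maximal line W: x + y = n. Then \(Y_{n-1}\) is the principal lattice of degree n-1 and \(t_j=(j,n-j)\). The sketch below checks that the products \(p_j=\prod _{i<j}(x-i)\prod _{k<n-j}(y-k)\) (our hypothetical notation) have the following properties:

- They vanish on \(Y_{n-1}\) and at \(t_l\), \(l\ne j\).
- They take the value \(p_j(t_j)=j!\,(n-j)!\) at \(t_j\).

So they are n+1 linearly independent elements of \(I(Y_{n-1})\cap \varPi _n\), a space of dimension n+1, and \(\ell _{t_j,Y_n}=p_j/(j!\,(n-j)!)\). We do not claim that the \(p_j\) carry the normalization used for \(h_0,\ldots ,h_n\) in the text.

```python
from math import factorial


def p(j, n, point):
    """Evaluate p_j = prod_{i<j} (x - i) * prod_{k<n-j} (y - k) at a point."""
    x, y = point
    val = 1
    for i in range(j):
        val *= x - i
    for k in range(n - j):
        val *= y - k
    return val


def check_products(n):
    """Vanishing on Y_{n-1} and at t_l (l != j); value j!(n-j)! at t_j."""
    Y_prev = [(a, b) for a in range(n) for b in range(n - a)]  # a + b <= n - 1
    T = [(j, n - j) for j in range(n + 1)]                      # nodes on x + y = n
    for j in range(n + 1):
        if any(p(j, n, q) != 0 for q in Y_prev):
            return False
        if any(p(j, n, T[l]) != 0 for l in range(n + 1) if l != j):
            return False
        if p(j, n, T[j]) != factorial(j) * factorial(n - j):
            return False
    return True


print(check_products(5))  # True
```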

In [13], Gasca and Maeztu conjectured that each \(\text {GC}_n\) set contains at least one maximal line. So far, this conjecture has only been proved for degrees \(n\le 5\) [2, 4, 14]. If the conjecture held for every degree, then each \(\text {GC}_n\) set \(Y_n\) could be regarded as a Berzolari-Radon extension of a \(\text {GC}_{n-1}\) set \(Y_{n-1}\), obtained by adding \(n+1\) nodes on a line W with \(W\cap Y_{n-1}= \emptyset \).

The concept of defect introduced in [3, 5, 6] can be used to classify \(\varPi _n\)-correct sets and consequently \(\text {GC}_n\) sets.

Definition 9

The defect of a \(\varPi _n\)-correct set Y, with \(n\ge 1\), is the number \(n+2-\# M(Y)\).

From the definition and the properties of \(\varPi _n\)-correct sets, it follows that the defect of a \(\varPi _n\)-correct set is a nonnegative integer less than or equal to \(n+2\).
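Definition 9 is easy to evaluate by brute force on small examples. The sketch below is our own illustration. It assumes exact integer or rational coordinates and a \(\varPi _n\)-correct input, which it does not check. It proceeds in three steps:

1. It groups the nodes by the lines through pairs of them.
2. It counts the lines with exactly n+1 nodes.
3. It returns \(n+2-\#M(Y)\).

The examples are the principal lattice, where it gives n-1, and a Chung-Yao natural lattice, where it gives 0. The natural lattice is built here from n+2 tangent lines of a parabola, no three of which are concurrent. All names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def line_key(p, q):
    """Normalized (a, b, c) with a*x + b*y = c for the line through p != q."""
    a, b = q[1] - p[1], p[0] - q[0]
    c = a * p[0] + b * p[1]
    s = a if a != 0 else b          # scale the first nonzero coefficient to 1
    return (Fraction(a) / s, Fraction(b) / s, Fraction(c) / s)


def maximal_lines(Y, n):
    """Lines containing exactly n + 1 nodes of Y."""
    nodes_on = {}
    for p, q in combinations(Y, 2):
        nodes_on.setdefault(line_key(p, q), set()).update((p, q))
    return [key for key, pts in nodes_on.items() if len(pts) == n + 1]


def defect(Y, n):
    """Definition 9: n + 2 - #M(Y)."""
    return n + 2 - len(maximal_lines(Y, n))


def principal_lattice(n):
    return [(a, b) for a in range(n + 1) for b in range(n + 1 - a)]


def natural_lattice(params):
    """Pairwise intersections of the lines y = s*x + s**2 (tangents to y = -x**2/4)."""
    return [(-(r + s), -r * s) for r, s in combinations(params, 2)]


print(defect(principal_lattice(4), 4))        # 3, i.e. n - 1
print(defect(natural_lattice(range(5)), 3))   # 0
```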

If \(Y_{n-1}\) is a \(\text {GC}_{n-1}\) set of defect d, then we can choose a line W with \(Y_{n-1} \cap W = \emptyset \) and a subset \(T \subset W\) of \(n+1\) points containing all intersections \(K_j \cap W\), \(j=0, \ldots , n-d\), where \(K_0, \ldots , K_{n-d}\) are the maximal lines of \(Y_{n-1}\). By Theorem 1, all off diagonal elements of the j-th column of A coincide with a first degree polynomial \(k_j\) for \(j=0, \ldots , n-d\). If the Gasca-Maeztu conjecture holds, then \(M(Y_{n-1}) \ne \emptyset \), \(d<n+1\), and all off diagonal elements in the first column of A coincide.

On the other hand, if \(Y_n\) is a \(\text {GC}_{n}\) set and W is a maximal line of \(Y_n\), then \(Y_{n-1}= Y_n {\setminus } W\) is a \(\text {GC}_{n-1}\) set. Let \(T= Y_n \cap W\). All maximal lines of \(Y_n\) except W are maximal lines of \(Y_{n-1}\) intersecting W at some point in T, leading to matrices A such that some of their columns have coincident off diagonal elements.

Let us illustrate some properties described in Theorem 1 and Theorem 2 with all known \(\text {GC}_{n}\) configurations.

Defect 0. Assume that \(Y_n\) is a \(\text {GC}_n\) set of defect 0. Let \(K_0, \ldots , K_{n}, K_{n+1}\) be the maximal lines of \(Y_n\). Choose a linear polynomial w such that \(K_{n+1}=Z(w)\) and a first degree polynomial v such that 1, v, w form a basis of \(\varPi _1\). Take \(T=Y_n \cap Z(w)\), \(t_j =K_j \cap Z(w)\), \(j=0, \ldots , n\), and \(Y_{n-1} =Y_n {\setminus } Z(w)\). Since all off diagonal elements of the j-th column of A are equal to \(k_j\), \(j=0, \ldots , n\), we have that

and

If we compute the product \(A[h_0 , \ldots , h_n]\), we see that all its components are equal to \(g =n k_0 \cdots k_n\). Hence \(k_0 \cdots k_n\) divides \(\det A\).
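The divisibility step can be spelled out with Cramer's rule. This sketch rests on two assumptions:

- \(h_j=\prod _{k\ne j}k_k\), which Theorem 2 (c) gives with \(a_{jk}=k_k\) for \(k\ne j\).
- \(k_0,\ldots ,k_n\) are pairwise non-proportional, since they vanish on the distinct lines \(K_0,\ldots ,K_n\).

Write \(A^{[j]}\) (our notation) for the matrix obtained from A by replacing its j-th column with \((1,\ldots ,1)^T\). Since every component of \(A[h_0,\ldots ,h_n]\) equals g, Cramer's rule gives

\[
h_j\det A=g\det A^{[j]},\qquad \text{that is,}\qquad \det A\prod _{k\ne j}k_k=n\,k_0\cdots k_n\det A^{[j]} .
\]

Cancelling the nonzero polynomial \(\prod _{k\ne j}k_k\) yields \(\det A=n\,k_j\det A^{[j]}\), so each \(k_j\) divides \(\det A\). As pairwise coprime irreducible polynomials, \(k_0,\ldots ,k_n\) then satisfy \(k_0\cdots k_n\mid \det A\).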

Defect 1. Assume that \(Y_n\) is a \(\text {GC}_n\) set of defect 1, \(n \ge 2\). Let \(K_0, \ldots , K_{n}\) be the maximal lines of \(Y_n\). Choose a linear polynomial w such that \(K_{n}=Z(w)\) and a first degree polynomial v such that 1, v, w form a basis of \(\varPi _1\). Take \(T=Y_n \cap Z(w)\), with \(t_j =K_j \cap Z(w)\), \(j=0, \ldots , n-1\), \(t_n\in T {\setminus } \{t_0,\ldots , t_{n-1}\}\) and \(Y_{n-1} =Y_n {\setminus } Z(w)\). Then we have that

and

The elements \(a_{jn}\) of the last column are first degree polynomials corresponding to the line joining \(t_n\) and the node in \(K_j \cap Y_{n-1} {\setminus } \bigcup _{l \ne j} K_l\). Since the defect is 1, not all elements in the last column of A coincide. Therefore, among \(a_{jn}\), \(j=0, \ldots , n-1\), there exist at least two linearly independent polynomials vanishing at \(t_n\). All components of the product \(A[h_0 , \ldots , h_n]\) are equal to \(g = k_0 \cdots k_{n-1} \sum _{j=0}^{n-1}a_{jn}\), and \(k_0 \cdots k_{n-1}\) divides \(\det A\). We remark that the linear factor \( \sum _{j=0}^{n-1}a_{jn}\), which does not correspond to a maximal line, can be obtained directly from Theorem 2 (a).

Defect 2. In this case \(Y_n\) is a \(\text {GC}_n\) set of defect 2, \(n \ge 3\), and \(K_0, \ldots , K_{n-1}\) are its maximal lines. Choose a linear polynomial w such that \(K_{n-1}=Z(w)\) and a first degree polynomial v such that 1, v, w form a basis of \(\varPi _1\). Take \(T=Y_n \cap Z(w)\) and \(t_j =K_j \cap Z(w)\), \(j=0, \ldots , n-2\). Let \(t_{n-1}\) and \(t_n\) be the two remaining nodes of T, and let \(Y_{n-1} =Y_n {\setminus } Z(w)\). Let \(z \in Y_{n-1} {\setminus } \bigcup _{l=0}^{n-1} K_l\) be the unique node in \(Y_{n-1}\) not belonging to any maximal line. Denote by \(L_{n-1}\) and \(L_{n}\) the lines joining z with \(t_{n-1}\) and \(t_n\), respectively. Then it can be deduced that the sets \(\varGamma _{t_j,Y_n}\), \(j=0,\ldots ,n-2\), from Definition 8 are of the form

where the union of the two lines \(A_{j,n-1}\cup A_{j,n}\) must contain the following points: \(t_{n-1}\), \(t_n\), the node z and the two nodes in \(K_j{\setminus } \bigcup _{i \ne j} K_i\), \(j=0, \ldots , n-2\). Neither of these two lines can contain both \(t_{n-1}\) and \(t_n\), because the line through them is Z(w), which also contains \(t_j\). So one of them passes through \(t_{n-1}\) and the other through \(t_n\). Since at least one of them must contain z, we deduce that at least one of the lines \(L_{n-1}\) or \(L_n\) belongs to \(\varGamma _{t_j,Y_n}\) for \(j=0, \ldots , n-2\). That is, one of the following three situations occurs:

- only \(A_{j,n-1}=L_{n-1}\);
- only \(A_{j,n}=L_n\);
- both \(A_{j,n-1}=L_{n-1}\) and \(A_{j,n}=L_n\).

We can reorder the nodes so that the nodes \(t_j\), \(j=0, \ldots , m-1\), use \(L_n\) but not \(L_{n-1}\), and the nodes \(t_j\), \(j=m, \ldots , n-2\), use \(L_{n-1}\). At least one node \(t_j\), \(j \in \{m, \ldots , n-2\}\), does not use \(L_n\). So we can assume without loss of generality that \(A_{m,n} \ne L_n\). Then we have

and deduce that

Defect 3. Let \(K_0, \ldots , K_{n-2}\) be the maximal lines of the \(\text {GC}_n\) set \(Y_n\), \(n \ge 5\). Let w be a linear polynomial such that \(K_{n-2}=Z(w)\) and v a first degree polynomial such that 1, v, w form a basis of \(\varPi _1\). Take \(T=Y_n \cap Z(w)\), \(t_j =K_j \cap Z(w)\), \(j=0, \ldots , n-3\), and \(Y_{n-1} =Y_n {\setminus } Z(w)\). Let \(Z = Y_{n-1} {\setminus } \bigcup _{l=0}^{n-2} K_l\). Then the set Z consists of three noncollinear nodes. Let \(L_{n-2}\), \(L_{n-1}\) and \(L_n\) denote the lines through two of these nodes. The maximal line \(K_{n-2}\) can be chosen such that \(L_j\) contains a node in \(T {\setminus } \bigcup _{l=0}^{n-3} K_l\) for each \(j \in \{n-2,n-1,n\}\). Renumbering the nodes, we can then assume that \(t_j \in L_j\), \(j=n-2,n-1,n\). Then, for each line \(L_{n-2+i}\), \(i=0,1,2\), there exists a maximal line among \(K_0, \ldots , K_{n-3}\), say \(K_i\), such that \(L_{n-2+i} \cap K_i \cap Y_n = \emptyset \), and we can deduce (see Theorem 3.2 of [7]) that

Then we have that

and

and deduce that

Defect n-1. The only known configurations with this defect are the generalized principal lattices. If \(Y_n\) is a generalized principal lattice, then there exist three families of lines \((L_{ir})_{i=0, \ldots , n}\), \(r=0,1,2\), all of them distinct, such that \(L_{i0} \cap L_{j1} \cap L_{k2}\) contains exactly one node of \(Y_n\) if and only if \(i+j+k=n\). Take w with \(L_{00}=Z(w)\) and let \(Y_{n-1}=Y_n {\setminus } Z(w)\). We can re-index the set \(T=Y_n \cap Z(w)\) such that \(t_j \in Z(w) \cap L_{j1} \cap L_{n-j,2} \). Then

is an H-basis of \(I(Y_{n-1})\) and

Then we obtain

6 Conclusion

In summary, Theorems 1 and 2 offer further matrix and algebraic tools for the analysis of GC sets. According to Theorem 2 (c), if a fundamental polynomial can be factored into a product of first degree polynomials, we can identify these factors in terms of \(A \in \left( \varPi _1 \right) ^{(n+1) \times (n+1)}\) or \(B \in \mathbb {R}^{(n+1) \times (n+1)}\). From this fact, we deduce at the end of Sect. 4 that the eigenvalues of all syzygy matrices \(\varSigma _j^{(j)} (x)\) coincide with their diagonal entries for all x. We can express this property in terms of \(B_j^{(j)}\), the scalar matrix obtained from B by subtracting the j-th row from the other rows and removing the j-th row and the j-th column. We observe that the eigenvalues of \( \varSigma _j^{(j)}(x)\) coincide with its diagonal entries whenever \(B_j^{(j)}\) is a triangular matrix, or a matrix obtained by applying the same permutation to the rows and columns of a triangular matrix. Suppose these eigenvalue relations could be used to show that all the off diagonal elements of a column of a matrix \(B_j^{(j)}\) vanish. Then all off diagonal elements in a column of A would coincide and, by Theorem 1 (a), there would exist at least one maximal line. This provides another technique that could be useful in approaching the Gasca-Maeztu conjecture.
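The sufficient condition observed above can be tested numerically. The sketch below is our own illustration. The diagonal D, the upper triangular U and the permutation are arbitrary test data, not taken from the paper. For a matrix B obtained by applying the same permutation to the rows and columns of U, it evaluates \(\det (vI-D-wB)\) exactly at sample points (v, w) and compares it with the product of the diagonal entries. A non-triangular B such as [[0, 1], [1, 0]] shows that the identity fails in general.

```python
from fractions import Fraction


def det(M):
    """Exact determinant by Gaussian elimination over the rationals."""
    M = [[Fraction(v) for v in row] for row in M]
    n, d = len(M), Fraction(1)
    for c in range(n):
        p = next((r for r in range(c, n) if M[r][c] != 0), None)
        if p is None:
            return Fraction(0)
        if p != c:
            M[c], M[p] = M[p], M[c]
            d = -d                      # a row swap flips the sign
        d *= M[c][c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return d


def pencil(v, w, D, B):
    """The matrix vI - diag(D) - wB for numeric v, w."""
    n = len(D)
    return [[(v - D[i] if i == j else 0) - w * B[i][j] for j in range(n)]
            for i in range(n)]


def permute(U, perm):
    """Same permutation on rows and columns: B[i][j] = U[perm[i]][perm[j]]."""
    return [[U[pi][pj] for pj in perm] for pi in perm]


def det_equals_diagonal_product(D, B, samples):
    """Check det(vI - D - wB) == product of its diagonal entries at each (v, w)."""
    for v, w in samples:
        M = pencil(v, w, D, B)
        prod = Fraction(1)
        for i in range(len(D)):
            prod *= M[i][i]
        if det(M) != prod:
            return False
    return True


U = [[2, 5, -1], [0, 3, 4], [0, 0, -7]]       # upper triangular test data
B = permute(U, [2, 0, 1])                      # not triangular itself
samples = [(v, w) for v in range(-2, 3) for w in range(-2, 3)]
print(det_equals_diagonal_product([1, 4, 9], B, samples))               # True
print(det_equals_diagonal_product([0, 0], [[0, 1], [1, 0]], [(1, 1)]))  # False
```

The reason the first check succeeds is that \(vI-D-wPUP^T=P(vI-P^TDP-wU)P^T\), and the middle factor is triangular.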

References

Berzolari, L.: Sulla determinazione di una curva o di una superficie algebrica e su alcune questioni di postulazione. Lomb. Ist. Rend. 47, 556–564 (1914)

Busch, J.R.: A note on Lagrange interpolation in \({\mathbb{R}}^2\). Rev. Union Matem. Argent. 36, 33–38 (1990)

Carnicer, J.M., Gasca, M.: Planar configurations with simple Lagrange formulae. In: Lyche, T., Schumaker, L.L. (eds.) CAGD. Mathematical Methods, pp. 55–62. Vanderbilt University Press, Nashville (2001)

Carnicer, J.M., Gasca, M.: A conjecture on multivariate polynomial interpolation. Rev. R. Acad. Cien. Serie A. Math. 95, 145–153 (2001)

Carnicer, J.M., Gasca, M.: Classification of bivariate GC configurations for interpolation. Adv. Comput. Math. 20, 5–16 (2004)

Carnicer, J.M., Gasca, M.: Generation of lattices of points for bivariate interpolation. Numer. Algorithm 39, 69–79 (2005)

Carnicer, J.M., Godés, C.: Geometric characterization of configurations with defect three. In: Cohen, A., Merrien, J.L., Schumaker, L.L. (eds.) Curve and Surface Fitting: Avignon, pp. 61–70. Nashboro Press, Brentwood (2007)

Carnicer, J.M., Godés, C.: Extensions of planar GC sets and syzygy matrices. Adv. Comput. Math. 45, 655–673 (2019)

Carnicer, J.M., Sauer, T.: Observations on interpolation by total degree polynomials in two variables. Constr. Approx. 47, 373–389 (2018). arXiv:1610.01850

Chung, K.C., Yao, T.H.: On lattices admitting unique Lagrange interpolation. SIAM J. Numer. Anal. 14, 735–743 (1977)

de Boor, C.: Multivariate polynomial interpolation: conjectures concerning GC-sets. Numer. Algorithm 45, 113–125 (2007)

Fieldsteel, N., Schenck, H.: Polynomial interpolation in higher dimension: From simplicial complexes to \(GC\) sets. SIAM J. Numer. Anal. 55, 131–143 (2017)

Gasca, M., Maeztu, J.I.: On Lagrange and Hermite interpolation in \({\mathbb{R}}^k\). Numer. Math. 39, 1–14 (1982)

Hakopian, H., Jetter, K., Zimmermann, G.: The Gasca-Maeztu conjecture for \(n=5\). Numer. Math. 127, 685–713 (2014)

Radon, J.: Zur mechanischen Kubatur. Monatsh. Math. 52, 286–300 (1948)

Acknowledgements

Open Access funding provided by Projekt DEAL. This work was partially supported by the Spanish Research Grant PGC2018-096321-B-I00 (MCIU/AEI), by Gobierno de Aragon (E41-17R) and FEDER 2014-2020 “Construyendo Europa desde Aragón”.


Additional information

Communicated by Adrian Constantin.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Carnicer, J.M., Godés, C. & Sauer, T. Radon’s construction and matrix relations generating syzygies. Monatsh Math 192, 311–332 (2020). https://doi.org/10.1007/s00605-020-01383-x
