Abstract

A gentle and informal introduction to the Skorokhod topologies. Focus is on motivating examples and concepts.

1 Introduction

As soon as we stray from the theory of continuous stochastic processes, we are in need of a suitable space of discontinuous functions and a topology on it. Skorokhod proposed in [11] the topology used predominantly today and which has since inherited his name. When I started to work with discontinuous stochastic processes and needed to understand the Skorokhod space, I struggled to find textbooks or lecture notes providing an easy start into the topic. The general tenor is that “constructing [the] Skorokhod topology and deriving tightness criteria are rather tedious” (see [8, Chap. VI]). That gave me the impression that the Skorokhod topology is a very technical tool which has no real motivation.

After working with it for some years, I believe that there are simple and intuitive ideas underlying this construction which might facilitate the understanding. Unfortunately, these are not the main focus in most textbooks as the proofs are already long enough as is. For the same reason, very few textbooks explore all four Skorokhod topologies and focus only on the main one, also known as \(J_{1}\)-topology. Here, I want to take the time to expose the ideas underlying the four Skorokhod topologies.

This paper is not meant to give a complete overview of the Skorokhod topologies, nor will I include any proofs. Instead, I will concentrate on pictures, examples and heuristics in the hope of building intuition. Nevertheless, I try to give a reference to every major fact I mention. Note that I will not discuss non-Skorokhod topologies such as the \(S\)-topology defined in [9].

Most of what I present is taken from the two books [1, 12] which I highly recommend for delving into the subject: the former focuses on the prevalent topology on real-valued processes, the latter takes a more general approach. Some general results are taken from [6]. For examples on how to apply these general results to measure-valued processes, I recommend the first chapter of [4].

The rest of the paper has a very simple structure: first, I try to motivate why we need a new topology and what we should expect it to look like. Next, I derive the two main \(J_{1}\)- and \(M_{1}\)-topologies for real-valued processes on a finite time interval. Finally, I conclude by presenting generalisations of these topologies.

2 Motivation

In this first section, I illustrate why we need to define a new topology and what properties we would want it to have.

2.1 Convergence of Continuous Processes

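Let us start with the most famous example of convergence of stochastic processes: Donsker’s Theorem, a.k.a. the functional central limit theorem. Consider a simple random walk \((S_{n})_{n\geq 0}\) on \(\mathbb{Z}\) starting at \(S_{0}=0\), and define the rescaled and interpolated continuous process

$$Y^{N}_{t}:=\frac{1}{\sqrt{N}}\left(S_{\lfloor Nt\rfloor}+(Nt-\lfloor Nt\rfloor)\left(S_{\lfloor Nt\rfloor+1}-S_{\lfloor Nt\rfloor}\right)\right),\qquad t\in[0,1].$$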

We consider \((Y^{N}_{\cdot})_{N\geq 1}\) as a random sequence in \(\mathcal{C}([0,1])\) endowed with the Borel \(\sigma\)-algebra of the topology of uniform convergence. Donsker’s Theorem states that the sequence \((Y^{N}_{\cdot})_{N\geq 1}\) converges in distribution to a standard Brownian motion \(B\) on the time interval \([0,1]\). To prove this, one uses that \(\mathcal{C}([0,1])\) endowed with the topology of uniform convergence is a Polish space (Footnote 1), so that we may apply Prokhorov’s Theorem.
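To get a feeling for the rescaling, here is a minimal Python sketch that simulates the walk and evaluates the interpolated path. It assumes the usual normalisation, namely linear interpolation of \(S\) at time \(Nt\) divided by \(\sqrt{N}\); the function names, the seed and the sample sizes are illustrative choices of mine and not part of any reference. If the rescaling is right, the empirical variance of \(Y^{N}_{1}\) should be close to 1, the variance of \(B_{1}\).

```python
import math
import random


def random_walk(n_steps, rng):
    """Partial sums S_0, ..., S_n of i.i.d. +1/-1 steps."""
    s = [0]
    for _ in range(n_steps):
        s.append(s[-1] + (1 if rng.random() < 0.5 else -1))
    return s


def rescaled_path(s, t):
    """Linear interpolation of S at time N*t, divided by sqrt(N) (assumed normalisation)."""
    n = len(s) - 1
    x = n * t
    k = min(int(math.floor(x)), n - 1)  # left grid point; clamp so that t = 1 works
    frac = x - k
    return (s[k] + frac * (s[k + 1] - s[k])) / math.sqrt(n)


rng = random.Random(0)  # fixed seed, only for reproducibility
N, samples = 200, 2000
endpoints = [rescaled_path(random_walk(N, rng), 1.0) for _ in range(samples)]
mean = sum(endpoints) / samples
var = sum((y - mean) ** 2 for y in endpoints) / samples
print(f"empirical mean of Y^N_1: {mean:.3f}, empirical variance: {var:.3f}")
```

This only probes a one-dimensional marginal, of course; the whole point of the section is that convergence of the paths additionally requires tightness.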

Theorem 1 (Prokhorov; see e.g. [1, Sect. 5] or [2, Theorems 8.6.2 and 8.9.4] for more complete statements)

Let \(E\) be a Polish space with its Borel \(\sigma\)-algebra. Let \(\mathcal{P}(E)\) denote the set of probability measures on this measurable space endowed with the topology of weak convergence of measures. Then the following holds true:

1. the space \(\mathcal{P}(E)\) is again Polish,

2. a set of probability measures \(K\subseteq\mathcal{P}(E)\) is relatively compact if and only if it is tight (Footnote 2).

Corollary 1

A sequence of processes \((X^{N})_{N\geq 1}\subset\mathcal{C}([0,1])\) converges in law to some \(X\in\mathcal{C}([0,1])\) if and only if the sequence \((X^{N})_{N\geq 1}\) is tight and the finite-dimensional distributions of \((X^{N})_{N\geq 1}\) converge to those of \(X\), i.e. for all \(0\leq t_{1}<\dots<t_{k}\leq 1\), one has

$$\left(X^{N}_{t_{1}},\dots,X^{N}_{t_{k}}\right)\underset{N\to\infty}{\longrightarrow}\left(X_{t_{1}},\dots,X_{t_{k}}\right)\quad\text{in distribution}$$

in \(\mathbb{R}^{k}\).

That means that one only needs to check those two things to prove Donsker’s Theorem. More precisely, we really need to worry only about tightness as the convergence of the finite-dimensional distributions is an application of the usual Central Limit Theorem. Since tightness is a statement about compact sets of the underlying space, we need a good characterisation of compact sets in \(\mathcal{C}([0,1])\). For this, we use another powerful theorem:

Theorem 2 (Arzelà–Ascoli; see e.g. [7])

A set \(K\subseteq\mathcal{C}([0,1])\) of continuous functions is relatively compact if and only if

1. the set \(K\) is uniformly bounded at 0 in the sense that

$$\sup_{f\in K}|f(0)|<+\infty,$$

2. the set \(K\) is uniformly equicontinuous, i.e. for every \(\epsilon> 0\) there exists some \(\delta> 0\) such that

$$|f(t)-f(s)|<\epsilon$$

for all \(f\in K\) whenever \(|t-s|<\delta\).

In terms of the modulus of continuity

$$\omega_{f}(\delta):=\sup_{s,t\in[0,1],\,|t-s|<\delta}|f(t)-f(s)|,$$

the second condition may be rewritten as

$$\lim_{\delta\downarrow 0}\sup_{f\in K}\omega_{f}(\delta)=0.$$

Corollary 2

A sequence of processes \((X^{N})_{N\geq 1}\subset\mathcal{C}([0,1])\) is tight if and only if

1. the sequence is bounded at 0 in the sense that

$$\lim_{C\to+\infty}\sup_{N\geq 1}\mathbb{P}(|X^{N}_{0}|> C)=0,$$

2. for every \(\epsilon> 0\), one has

$$\lim_{\delta\downarrow 0}\sup_{N\geq 1}\mathbb{P}\left[\omega_{X^{N}}(\delta)> \epsilon\right]=0.$$
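The role of the modulus of continuity is easy to probe numerically. The following sketch assumes that the modulus is the supremum of \(|f(t)-f(s)|\) over all \(s,t\in[0,1]\) with \(|t-s|\leq\delta\) and approximates it on a grid; the helper names and the grid size are my own choices. For a continuous function the values shrink with \(\delta\), whereas for a function with a jump they do not.

```python
import math


def modulus_of_continuity(f, delta, grid_size=1000):
    """Approximate sup |f(t) - f(s)| over grid points s, t in [0, 1] with |t - s| <= delta."""
    vals = [f(i / grid_size) for i in range(grid_size + 1)]
    window = max(1, round(delta * grid_size))  # number of grid steps spanning delta
    worst = 0.0
    for i in range(grid_size + 1):
        for j in range(i + 1, min(i + window, grid_size) + 1):
            worst = max(worst, abs(vals[j] - vals[i]))
    return worst


def step(t):
    """The discontinuous function 1_{[1/2, 1)}."""
    return 1.0 if 0.5 <= t < 1.0 else 0.0


for delta in (0.1, 0.01, 0.001):
    print(delta, modulus_of_continuity(math.sqrt, delta), modulus_of_continuity(step, delta))
```

For the square root the grid value is \(\sqrt{\delta}\), which vanishes as \(\delta\downarrow 0\); for the step function it stays equal to 1 no matter how small \(\delta\) is.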

With all this machinery at our disposal, it becomes straightforward to prove Donsker’s Theorem. One only needs to do two things:

1. use Prokhorov’s Theorem to prove relative compactness via tightness;

2. identify all limit points through their finite-dimensional distributions.

Don’t get me wrong: each point in itself might be difficult to prove. But at least we have a strategy on how to approach the problem. It turns out that this strategy is very general: only the first point depends on the particular topology which we put on the space of functions. For it to work, we first and foremost need Prokhorov’s Theorem. That means that we want a Polish topology. But there is a second ingredient on which we relied heavily. To prove tightness, we need a good way to characterise the compact sets of the topology we work in. In the case of the uniform topology on \(\mathcal{C}([0,1])\), this is taken care of by the Arzelà–Ascoli Theorem.

2.2 Convergence of Discontinuous Processes

We will now try to apply our insights from the previous section to the convergence of discontinuous processes. More precisely, we first need to identify what space of functions we are interested in and what topology we can endow it with.

A first idea could be to go to the next bigger space we are familiar with and which extends the topology of uniform convergence: the space of bounded measurable functions \(\mathcal{B}([0,1])\) with the topology of uniform convergence. The way I am presenting this, it becomes clear that this is not a good choice: even though the space is complete, it is not separable and therefore not Polish. And when we want to do probability theory, that is not a good sign; particularly with Prokhorov’s Theorem in mind. Despite having quite a nice characterisation of the compact sets of \(\mathcal{B}([0,1])\) similar to the Arzelà–Ascoli Theorem, it is not the right space to work in.

Now that we ruled out the obvious choice, we need to decide on how to proceed. The first step is to choose the right space of functions. In other words: what type of functions are relevant to us? Note that we are mostly interested in martingales and Markov processes, often characterised through their generator or their martingale problem. For these, we have a very nice regularity result:

Theorem 3 (see e.g. [10])

A sub- or supermartingale \((M_{t})_{t\geq 0}\) with respect to a right continuous filtration has a càdlàg (Footnote 3) modification whenever \(t\mapsto\mathbb{E}[M_{t}]\) is right continuous. In particular, a martingale w.r.t. a right continuous filtration always has a càdlàg modification.

That indicates that the space of càdlàg functions seems to be the right choice. We will denote this so-called Skorokhod space (Footnote 4) by \(\mathbb{D}_{[0,1]}(\mathbb{R})\). In general, the Skorokhod space of càdlàg functions on \([0,T]\) (resp. \([0,+\infty)\)) with values in a (hopefully Polish) space \(E\) will be denoted by \(\mathbb{D}_{[0,T]}(E)\) (resp. \(\mathbb{D}_{[0,+\infty)}(E)\)). Note that we may view \(\mathcal{C}([0,1])\) as a subspace of \(\mathbb{D}_{[0,1]}(\mathbb{R})\).

Now that we have identified the “right” space of functions, we need to identify the “right” topology. It turns out that there is not one good topology. So instead, we will identify the right properties a good topology should have. The most important part is the applicability of Prokhorov’s Theorem. In other words, we want

$$\text{the topology is Polish}\tag{1}$$

to hold. In most applications, it is enough to weaken this condition to

$$\text{tight sets of probability measures are relatively compact,}\tag{2}$$

which amounts to the “important” part of Prokhorov’s Theorem. However, it is usually preferable to have a Polish space. The second important ingredient in our strategy was the Arzelà–Ascoli Theorem that characterises the compact sets for the topology of uniform convergence. Hence, we would want

$$\text{the compact sets admit a tractable characterisation}\tag{3}$$

to hold.

If both conditions (1) and (3) are satisfied, we have a “good” topology. Nevertheless, there are other properties that one could wish for. For example, it would be great if the new topology extends the topology of uniform convergence on \(\mathcal{C}([0,1])\). More formally, this means that (Footnote 5)

$$\text{the topology restricted to }\mathcal{C}([0,1])\text{ coincides with the topology of uniform convergence.}\tag{4}$$

Even though this property seems very natural, there is an important argument against it: the space of continuous functions with the topology of uniform convergence is complete. That means that if the new topology extends it, it is impossible for a sequence of continuous functions to converge to a discontinuous function. In other words, a topology extending the topology of uniform convergence may be too strong for some applications.

There is a last “bonus” property which would be nice to have. Imagine a sequence of functions converging to some continuous function. Since the limit lies in the subspace where the topology is “stronger”, it would be great if this would automatically strengthen the mode of convergence to uniform convergence, i.e.

$$f_{n}\to f\ \text{ with }f\in\mathcal{C}([0,1])\quad\Longrightarrow\quad\|f_{n}-f\|_{\infty}\to 0.\tag{5}$$

If the new topology satisfies (5), we would not need to worry about the topology of uniform convergence any more at all. Whenever the limit is continuous, we get the uniform convergence for free!

Equipped with these constraints, we will start constructing Skorokhod topologies!

3 Skorokhod Topologies on \(\mathbb{D}_{[0,1]}(\mathbb{R})\)

In this main section, we construct the topologies on the Skorokhod space: first, we will try to understand how we might want to tweak the uniform topology; then, we will see the actual definitions of these topologies. A little word of caution: the rigorous proofs of the facts that I will state are very technical. So instead, I will use the old magician’s trick and refer to the books [1, 12] that present those proofs nicely.

3.1 What Exactly Goes Wrong with the Uniform Topology?

In Sect. 2.2, I pointed out that one major problem of the uniform topology is that it is not separable anymore. I did not give any proof of this statement, so here it is: all functions of the form \(1\!\!\!1_{[x,1)}\) are at \(\|\cdot\|_{\infty}\)-distance one from each other. Indeed, if we take \(x<y<1\), then

$$\left\|1\!\!\!1_{[x,1)}-1\!\!\!1_{[y,1)}\right\|_{\infty}=\left\|1\!\!\!1_{[x,y)}\right\|_{\infty}=1.$$

This proves that there is an uncountable family of functions which are all at distance one from each other in the uniform topology. Hence, the uniform topology is not separable on \(\mathbb{D}_{[0,1]}(\mathbb{R})\).
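For readers who like to see this on a computer, here is a small sketch that evaluates the uniform distance between a few such indicator functions on a grid. The jump locations and the grid size are arbitrary choices of mine; the grid value is only a lower bound for the true supremum, which here is already attained.

```python
def indicator(x):
    """The càdlàg function 1_{[x, 1)} on [0, 1]."""
    return lambda t: 1.0 if x <= t < 1.0 else 0.0


def sup_distance(f, g, grid_size=10_000):
    """Uniform distance of f and g evaluated on an equidistant grid of [0, 1]."""
    return max(abs(f(i / grid_size) - g(i / grid_size)) for i in range(grid_size + 1))


jump_times = [0.1, 0.25, 0.5, 0.5001, 0.9]
distances = {
    (x, y): sup_distance(indicator(x), indicator(y))
    for x in jump_times
    for y in jump_times
    if x < y
}
for pair, dist in distances.items():
    print(pair, dist)
```

Even the jump times 0.5 and 0.5001 give distance one: moving the jump by an arbitrarily small amount does not bring the functions any closer in the uniform norm.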

But let us take another point of view. Perhaps the non-separability is not the main problem here. Perhaps it is rather a consequence of an even bigger problem: shouldn’t the convergence \(1\!\!\!1_{\left[\frac{1}{2}-\frac{1}{n},1\right)}\underset{n\to\infty}{\longrightarrow}1\!\!\!1_{\left[\frac{1}{2},1\right)}\) hold from an intuitive point of view? That would immediately force these functions to get “closer” together and prevent the non-separability (Footnote 6).

This insight transforms the problem of finding a topology similar to the uniform topology but preventing non separability into the problem of tweaking the uniform convergence so that this sort of convergence is allowed. To narrow down on what exactly keeps these indicator functions apart, let us have a closer look at what \(\epsilon\)-balls look like in the uniform topology. Let us take the example of \(f=1\!\!\!1_{\left[\frac{1}{2},1\right)}\). Then, the \(\epsilon\)-ball around \(f\) contains all functions whose graphs lie in the so-called \(\epsilon\)-tube around the graph of \(f\), see Fig. 1. This tube forces functions to be ever closer to \(f\), but allows them to wiggle a little bit up and down. Note that this corresponds to a spatial wiggle. When functions are continuous, that is all perfectly fine, because a wiggle in time can be translated into a wiggle in space. However, when we have a discontinuity, this is not true anymore. As soon as we move the discontinuity a bit to the left or to the right, we necessarily leave the tube and are immediately “far away” from \(f\).

That means that we need to modify the \(\epsilon\)-tubes to allow for some temporal wiggle in addition to the spatial wiggle already accounted for. There are two options that may come to mind. The more minimalistic approach would be to extend the \(\epsilon\)-tube by a little bit at a discontinuity to get \(\epsilon\)-“gloves”, see Fig. 2a. The other, more generous approach, would be to connect the two ends of the \(\epsilon\)-tube at a discontinuity, see Fig. 2b.

In reality, Skorokhod defined in his foundational paper [11] four different topologies, a strong and a weak version for each approach. They are now commonly referred to by the rather obscure names \(J_{1}\)-, \(J_{2}\)-, \(M_{1}\)- and \(M_{2}\)-topologies. The \(J\)-topologies arise from the minimalistic approach and the \(M\)-topologies from the more generous one.

For all those intimidated by these cryptic names: in the end, only the \(J_{1}\)-topology is commonly used and therefore referred to as the Skorokhod topology. It appears that the \(M\)-topologies also have their use in various problems, whereas you will most certainly not encounter the \(J_{2}\)-topology at all. That means that this mess reduces to

1. one main (\(J_{1}\)‑)Skorokhod topology everybody should be familiar with and

2. a second type of (\(M\)‑)Skorokhod topologies one should have a general idea of.

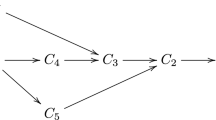

In this paper, I will only discuss the two main topologies \(J_{1}\) and \(M_{1}\). But before getting into the details, I want to illustrate how these topologies relate to each other. Keeping the above in mind, we should expect the \(J\)-topologies to be stronger than the \(M\)-topologies, as the latter allow more functions to be close. However, the situation is a bit more complicated, see Fig. 3. The good news is that the Skorokhod topology \(J_{1}\) is stronger than all the other “new” topologies. That means that whenever a convergence is shown to hold in \(J_{1}\), then it holds in all the other Skorokhod topologies. Fig. 4 illustrates which additional types of convergence the different topologies allow. These examples are taken from [12, Fig. 11.2] and can partially be found already in [11], see also [3, Limit Theorems for Stochastic Processes].

3.2 The Topologies \(J_{1}\) and \(M_{1}\)

Now comes the more difficult part of translating our intuition into real definitions. We will start with the Skorokhod topology \(J_{1}\) and finish with the \(M_{1}\)-topology.

In the minimalistic setting, we want to allow for some temporal wiggle, without connecting the two ends of the \(\epsilon\)-tube across a discontinuity. To reformulate this mathematically, we will perform a time change. By a change of time I mean that we take a strictly increasing bijection \(\lambda:[0,1]\rightarrow[0,1]\) and consider \(f\circ\lambda\) instead of \(f\). Naturally, we are only interested in parametrisations that are “close” to the unitary flow of time, corresponding to the trivial parametrisation \(id:t\mapsto t\). In other words, we need to penalise parametrisations which are “too far away” from \(id\). This leads to the following definition of distance:

$$d_{J_{1}}(f,g):=\inf_{\lambda}\left\{\|f\circ\lambda-g\|_{\infty}\vee\|\lambda-id\|_{\infty}\right\},$$

where the infimum is taken over all increasing bijections \(\lambda\) on \([0,1]\). It can be shown that \(d_{J_{1}}\) is a metric, usually referred to as the Skorokhod metric (see e.g. [1, Sect. 12]), and one defines \(J_{1}\) to be the topology induced by this metric.
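To see the time change at work, here is a hedged sketch for the two indicator functions \(1\!\!\!1_{[a,1)}\) and \(1\!\!\!1_{[1/2,1)}\) with \(a=\frac{1}{2}-\frac{1}{n}\). It assumes that the distance penalises the maximum of \(\|f\circ\lambda-g\|_{\infty}\) and \(\|\lambda-id\|_{\infty}\), and it evaluates this quantity on a grid for one particular \(\lambda\), so the output is only an upper bound for the infimum. The piecewise-linear time change through \((0,0)\), \((\frac{1}{2},a)\) and \((1,1)\) is my choice; all names are illustrative.

```python
def indicator(x):
    """The càdlàg function 1_{[x, 1)} on [0, 1]."""
    return lambda t: 1.0 if x <= t < 1.0 else 0.0


def time_change(b, a):
    """Piecewise-linear increasing bijection of [0, 1] through (0, 0), (b, a), (1, 1)."""
    def lam(t):
        if t <= b:
            return a * (t / b)
        w = (t - b) / (1.0 - b)
        return a * (1.0 - w) + w  # convex combination, equals 1 exactly at t = 1
    return lam


def j1_upper_bound(f, g, lam, grid_size=10_000):
    """max(sup |f(lam(t)) - g(t)|, sup |lam(t) - t|) on a grid, for one fixed lam."""
    ts = [i / grid_size for i in range(grid_size + 1)]
    space = max(abs(f(lam(t)) - g(t)) for t in ts)
    time = max(abs(lam(t) - t) for t in ts)
    return max(space, time)


def identity(t):
    return t


g = indicator(0.5)
for n in (4, 10, 100):
    a = 0.5 - 1.0 / n
    f = indicator(a)
    without = j1_upper_bound(f, g, identity)           # plain uniform distance
    with_change = j1_upper_bound(f, g, time_change(0.5, a))
    print(n, without, with_change)
```

Without a time change the bound is 1 for every \(n\); with the time change that maps the jump of one function onto the jump of the other, the spatial error vanishes and only the temporal penalty \(\frac{1}{n}\) remains.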

Unfortunately, it turns out that this is actually a bad metric in the sense that it is not complete. Consider the following example from [1, Example 12.2]. Take the indicator functions \(f_{n}:=1\!\!\!1_{[0,2^{-n})}\) and define the change of time \(\lambda_{n}\) as the linear interpolation of the three points \((0,0)\), \((2^{-n},2^{-(n+1)})\) and \((1,1)\), see Fig. 5. One easily checks that the change of time is such that \(f_{n}=f_{n+1}\circ\lambda_{n}\), leading to \(\|f_{n+1}\circ\lambda_{n}-f_{n}\|_{\infty}=0\). To bound the penalty \(\|\lambda_{n}-id\|_{\infty}\) on the change of time, note that they differ maximally at \(x=2^{-n}\), yielding \(\|\lambda_{n}-id\|_{\infty}=|2^{-n}-2^{-(n+1)}|=2^{-(n+1)}\). This gives

$$d_{J_{1}}(f_{n+1},f_{n})\leq\|f_{n+1}\circ\lambda_{n}-f_{n}\|_{\infty}\vee\|\lambda_{n}-id\|_{\infty}=2^{-(n+1)}.$$

In particular, this error is summable and we conclude that \((f_{n})_{n}\) is a Cauchy sequence. Since \(f_{n}(x)\) converges to 0 for all \(x\in(0,1)\), the only possible limit is the null function \(f=0\). However, whatever change of time we apply, the null function doesn’t change. Hence,

$$d_{J_{1}}(0,f_{n})=\inf_{\lambda}\left\{\|0\circ\lambda-f_{n}\|_{\infty}\vee\|\lambda-id\|_{\infty}\right\}=\|f_{n}\|_{\infty}=1\quad\text{for every }n\geq 1.$$

That means that although \((f_{n})_{n}\) is Cauchy, it does not converge.

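The problem of the Skorokhod metric is quite subtle and lies within the penalty we put onto the parametrisation \(\lambda\): we measure the absolute distance between \(\lambda\) and \(id\). However, it is better to think of parametrisations as a modified flow of time. In this sense, it would be better to measure the difference in flow speed. In other words, we want parametrisations with a nearly constant speed

$$\frac{\lambda(t)-\lambda(s)}{t-s}\approx 1\qquad\text{for all }s<t.$$

In the above example, the slope of \(\lambda_{n}\) will never converge to 1 (not even pointwise), as

$$\frac{\lambda_{n}(2^{-n})-\lambda_{n}(0)}{2^{-n}-0}=\frac{2^{-(n+1)}}{2^{-n}}=\frac{1}{2}$$

for every \(n\geq 1\). To fix this, we introduce the new penalty

$$\|\lambda\|_{\circ}:=\sup_{0\leq s<t\leq 1}\left|\log\frac{\lambda(t)-\lambda(s)}{t-s}\right|$$

leading to the modified metric

$$d^{\circ}_{J_{1}}(f,g):=\inf_{\lambda}\left\{\|f\circ\lambda-g\|_{\infty}\vee\|\lambda\|_{\circ}\right\}.$$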

It turns out that both metrics are equivalent, i.e. induce the same topology. However, this modified metric is complete! For this reason, this metric is sometimes called Skorokhod metric instead of the previous one, leaving the original metric without any special name. It can be shown that \(J_{1}\) is separable (see e.g. [1, Theorem 12.2]), meaning that \(J_{1}\) satisfies (1).
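The difference between the two penalties can be checked on the example \(f_{n}=1\!\!\!1_{[0,2^{-n})}\) with the piecewise-linear time changes \(\lambda_{n}\). The sketch below assumes that the new penalty is the supremum of \(\left|\log\frac{\lambda(t)-\lambda(s)}{t-s}\right|\) over \(s<t\); the function names and the dyadic grid are my own choices. It verifies \(f_{n}=f_{n+1}\circ\lambda_{n}\) on the grid and compares the old penalty \(\|\lambda_{n}-id\|_{\infty}\) with the new one.

```python
import math

GRID = [i / 2 ** 12 for i in range(2 ** 12 + 1)]  # dyadic grid, contains every 2^-n used below


def f(n):
    """The indicator function 1_{[0, 2^-n)}."""
    return lambda t: 1.0 if 0.0 <= t < 2.0 ** -n else 0.0


def lam(n):
    """Piecewise-linear time change through (0, 0), (2^-n, 2^-(n+1)) and (1, 1)."""
    a, b = 2.0 ** -n, 2.0 ** -(n + 1)
    return lambda t: t / 2.0 if t <= a else b + (1.0 - b) * (t - a) / (1.0 - a)


def penalties(n):
    """Return (sup |lam_n - id| on the grid, max |log slope| over the two linear pieces)."""
    a, b = 2.0 ** -n, 2.0 ** -(n + 1)
    change = lam(n)
    sup_penalty = max(abs(change(t) - t) for t in GRID)
    # For a piecewise-linear map every chord slope is an average of the piece
    # slopes, so the supremum of |log(chord slope)| is attained on one piece.
    slopes = (b / a, (1.0 - b) / (1.0 - a))
    log_penalty = max(abs(math.log(s)) for s in slopes)
    return sup_penalty, log_penalty


for n in range(1, 7):
    change = lam(n)
    same = all(f(n)(t) == f(n + 1)(change(t)) for t in GRID)
    print(n, same, penalties(n))
```

The old penalty decays like \(2^{-(n+1)}\), which is what makes the sequence Cauchy, while the slope-based penalty of these particular time changes stays at \(\log 2\) for every \(n\).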

Recalling that the uniform topology on continuous functions does not care about small temporal distortions, one easily verifies that \(J_{1}\) also satisfies (4), i.e. if a sequence of continuous functions converges in \(J_{1}\), then the convergence is uniform (and conversely).

The only thing we still need is a good description of compact sets, i.e. that \(J_{1}\) satisfies (3). Fortunately, there is indeed a result similar to the Arzelà–Ascoli Theorem! The only thing we need to adapt is the definition of the modulus of continuity so that it ignores jump discontinuities. This is achieved by allowing the function to jump at a finite number of points:

$$\omega_{\delta}^{\prime}(f):=\inf_{\substack{0=t_{0}<\dots<t_{v}=1\\ \min_{i}(t_{i}-t_{i-1})>\delta}}\ \max_{1\leq i\leq v}\ \sup_{s,t\in[t_{i-1},t_{i})}|f(t)-f(s)|,$$

where the infimum is taken over all finite partitions \(0=t_{0}<\dots<t_{v}=1\) of \([0,1]\) of finite size \(v\geq 1\). Note that the innermost supremum only ranges over the right open interval \([t_{i-1},t_{i})\), allowing for jumps at times \(t_{i}\). To distinguish it from the “real” modulus of continuity, I refer to it as modulus of continuity type function.
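Computing the infimum over all partitions is not entirely trivial, but evaluating a single partition is. The following sketch assumes that the modulus of continuity type function is the infimum, over partitions whose blocks are all longer than \(\delta\), of the largest oscillation of \(f\) on a right open block \([t_{i-1},t_{i})\). For one fixed partition it returns that largest oscillation on a grid, which is an upper bound for the infimum whenever the partition is admissible. The example function and all names are my own.

```python
def partition_oscillation(f, partition, grid_size=1000):
    """Largest oscillation of f over the right-open blocks [t_{i-1}, t_i), on a grid."""
    worst = 0.0
    for left, right in zip(partition, partition[1:]):
        vals = [f(i / grid_size) for i in range(grid_size + 1)
                if left <= i / grid_size < right]
        if vals:
            # sup over s, t in the block of |f(t) - f(s)| equals max - min
            worst = max(worst, max(vals) - min(vals))
    return worst


def min_mesh(partition):
    """Smallest block length; the partition is admissible for every delta below it."""
    return min(b - a for a, b in zip(partition, partition[1:]))


def two_jumps(t):
    """Step function with jumps at 0.3 (size 1) and 0.7 (size 2)."""
    if t < 0.3:
        return 0.0
    if t < 0.7:
        return 1.0
    return 3.0


aligned = [0.0, 0.3, 0.7, 1.0]   # partition points sit exactly on the jumps
misaligned = [0.0, 0.5, 1.0]     # jumps fall inside the blocks
print(min_mesh(aligned), partition_oscillation(two_jumps, aligned))
print(min_mesh(misaligned), partition_oscillation(two_jumps, misaligned))
```

With the aligned partition the oscillation is 0, so under the stated assumption the modulus of continuity type function of this step function vanishes for every \(\delta<0.3\), although its ordinary modulus of continuity is at least 2 for every \(\delta>0\).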

Theorem 1 (Compactness in \(J_{1}\), see e.g. [1, Theorem 12.3])

A set \(K\subseteq\mathbb{D}_{[0,1]}(\mathbb{R})\) is relatively compact in \(J_{1}\) if and only if

1. the set is uniformly bounded in the sense that

$$\sup_{f\in K}\|f\|_{\infty}<+\infty;$$

2. the modulus of continuity type function vanishes uniformly over \(K\):

$$\lim_{\delta\downarrow 0}\sup_{f\in K}\omega_{\delta}^{\prime}(f)=0.$$

Note that it is not enough to have a uniform bound of \(|f(0)|\) as before! This was possible in the case of continuous functions, because we also imposed uniform equicontinuity. Since we now allow for jumps, we need to strengthen this condition to a uniform bound on the entire interval.

That means that \(J_{1}\) satisfies all of the constraints that are really important to us. The only thing we are left to check is the property (5) of strengthened convergence whenever the limiting function is continuous. From the heuristic, it is conceivable that this holds for \(J_{1}\) as we only allow for small distortions in time. This idea can be made rigorous by noting that we may shift the change of time onto the limit: take \(f_{n}\to f\) in \(J_{1}\) with \(f\) continuous. Then there is a sequence \((\lambda_{n})_{n}\) of time changes such that

$$\|f_{n}-f\circ\lambda_{n}\|_{\infty}\vee\|\lambda_{n}\|_{\circ}\underset{n\to\infty}{\longrightarrow}0.$$

Since \(f\) is continuous on the compact interval \([0,1]\), hence uniformly continuous, and \(\|\lambda_{n}\|_{\circ}\to 0\) forces \(\|\lambda_{n}-id\|_{\infty}\to 0\), one has \(\|f\circ\lambda_{n}-f\|_{\infty}\to 0\). By the triangle inequality, this in turn implies that \(\|f-f_{n}\|_{\infty}\to 0\). An extension of this statement can be found e.g. in [6, Proposition 3.6.5] and the preceding comment.

We finish with the \(J_{1}\) topology by pointing out that there is an immediate drawback in satisfying (4): continuous functions cannot converge to discontinuous functions as \(\mathcal{C}([0,1])\) is closed in the uniform topology! This explains why we cannot expect to see convergences of the type shown in Fig. 4b.

Let us now come to the \(M_{1}\)-topology. Recall that the idea was to generously extend the \(\epsilon\)-tube across the discontinuity, see Fig. 2b. To make this more rigorous, we will work with the so-called completed graph

$$\Gamma(f):=\left\{(t,x)\in[0,1]\times\mathbb{R}:x=\alpha f(t-)+(1-\alpha)f(t)\text{ for some }\alpha\in[0,1]\right\}$$

of \(f\in\mathbb{D}_{[0,1]}(\mathbb{R})\) containing the graph of \(f\) together with the straight lines connecting the two ends of a jump discontinuity. Here, I use the notation \(f(t-)\) to denote the left limit of \(f\) at \(t\), which exists by definition of \(\mathbb{D}_{[0,1]}(\mathbb{R})\), with the convention \(f(0-):=f(0)\). For example, in Fig. 2b, the completed graph corresponds to the graph together with the dotted line.

From here on, the idea is very similar to the one used for the \(J_{1}\)-topology. To allow for some freedom, we again use parametrisations. The only difference is that we will now parametrise the completed graph, i.e. we will take non-decreasing functions \((\lambda,\rho):[0,1]\rightarrow\Gamma(f)\) which are onto. Here, \(\lambda\) is the temporal component and \(\rho\) is the spatial component. Note also that we use the intuitive order on \(\Gamma(f)\) to define monotonicity:

$$(t_{1},x_{1})\leq(t_{2},x_{2})\quad:\Longleftrightarrow\quad t_{1}<t_{2}\ \text{ or }\ \big(t_{1}=t_{2}\ \text{ and }\ |f(t_{1}-)-x_{1}|\leq|f(t_{1}-)-x_{2}|\big).$$

In words, we use the temporal ordering coming from drawing the completed graph from left to right without lifting the pencil. We then define the distance between two functions \(f\) and \(g\) through

$$d_{M_{1}}(f,g):=\inf\left\{\|\lambda_{f}-\lambda_{g}\|_{\infty}\vee\|\rho_{f}-\rho_{g}\|_{\infty}\right\}$$

as the minimal distance between any two parametric representations \((\lambda_{f},\rho_{f})\) of \(f\) and \((\lambda_{g},\rho_{g})\) of \(g\). Again, one checks that \(d_{M_{1}}\) indeed is a metric and one defines \(M_{1}\) to be the induced topology.
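Here is a hedged sketch of how parametric representations buy us the convergence of continuous ramps to a jump. It assumes that the distance is the infimum, over pairs of parametric representations, of the larger of the uniform distances between the temporal and between the spatial components. The ramps \(f_{n}\) climb linearly from 0 to 1 on \([\frac{1}{2}-\frac{1}{n},\frac{1}{2}]\), the limit is \(1\!\!\!1_{[1/2,1]}\), and the three-phase parametrisations below are one convenient choice of mine, so the result is only an upper bound.

```python
def ramp(n):
    """Continuous function: 0 up to 1/2 - 1/n, then linear, equal to 1 from 1/2 on."""
    return lambda t: min(1.0, max(0.0, n * (t - 0.5) + 1.0))


def jump(t):
    """The discontinuous limit 1_{[1/2, 1]}."""
    return 1.0 if t >= 0.5 else 0.0


def rep_jump(s):
    """Parametric representation (time, space) of the completed graph of the jump."""
    if s <= 1 / 3:
        return (1.5 * s, 0.0)                  # move along the level 0
    if s <= 2 / 3:
        return (0.5, 3 * s - 1)                # climb the vertical segment at t = 1/2
    return (0.5 + 1.5 * (s - 2 / 3), 1.0)      # move along the level 1


def rep_ramp(n):
    """Parametric representation of the graph of ramp(n), using the same three phases."""
    start = 0.5 - 1.0 / n
    def rep(s):
        if s <= 1 / 3:
            return (3 * s * start, 0.0)
        if s <= 2 / 3:
            return (start + (3 * s - 1) / n, 3 * s - 1)   # climb the ramp
        return (0.5 + 1.5 * (s - 2 / 3), 1.0)
    return rep


def m1_upper_bound(rep_f, rep_g, grid_size=3000):
    """Larger of the sup distances of the time and space components, on a grid."""
    worst = 0.0
    for i in range(grid_size + 1):
        (tf, xf), (tg, xg) = rep_f(i / grid_size), rep_g(i / grid_size)
        worst = max(worst, abs(tf - tg), abs(xf - xg))
    return worst


def uniform_distance(f, g, grid_size=10_000):
    return max(abs(f(i / grid_size) - g(i / grid_size)) for i in range(grid_size + 1))


for n in (4, 10, 100):
    print(n, uniform_distance(ramp(n), jump), m1_upper_bound(rep_ramp(n), rep_jump))
```

The uniform distance stays close to 1, whereas the parametric bound equals \(\frac{1}{n}\): while the representation of the limit climbs its vertical segment, the representation of the ramp climbs the steep slope at the same height and at most \(\frac{1}{n}\) away in time.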

The advantage of this metric is that we get the additional convergence illustrated in Fig. 4b. Symmetrically, the drawback is that \(M_{1}\) does not verify (4). Apart from this, all other constraints are satisfied: \(M_{1}\) defines a Polish topology (Footnote 7) on \(\mathbb{D}_{[0,1]}(\mathbb{R})\), see e.g. [12, Theorem 12.8.1], and also satisfies the property (5) of strengthened convergence whenever the limit is continuous. The description of compact sets is a bit more difficult, but it is still possible. Before we define the new modulus of continuity we will need here, one should keep in mind that \(M_{1}\) is weaker than \(J_{1}\). That means in particular that the above criterion for compactness is still sufficient. Then again, in most cases where the above criterion applies, one works directly with the \(J_{1}\)-topology.

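Define the new modulus of continuity type function

$$\omega_{\delta}^{\prime\prime}(f):=\sup_{\substack{0\leq t_{1}\leq t\leq t_{2}\leq 1\\ t_{2}-t_{1}\leq\delta}}\big|f(t)-[f(t_{1}),f(t_{2})]\big|$$

with

$$\big|x-[a,b]\big|:=\inf_{\alpha\in[0,1]}\big|x-(\alpha a+(1-\alpha)b)\big|.$$

Using the notation \(d(x,A)\) for the distance between \(x\) and the set \(A\), this can be written more compactly as

$$\omega_{\delta}^{\prime\prime}(f)=\sup_{\substack{0\leq t_{1}\leq t\leq t_{2}\leq 1\\ t_{2}-t_{1}\leq\delta}}d\big(f(t),[f(t_{1}),f(t_{2})]\big).$$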

A small modulus of continuity type function ensures that on small intervals, the graph is close to straight lines. This is a way to exclude oscillations, but allows for ever steeper slopes, see Fig. 4b.
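The following sketch makes this tangible. It assumes that the modulus of continuity type function is the supremum, over \(t_{1}\leq t\leq t_{2}\) with \(t_{2}-t_{1}\leq\delta\), of the distance between \(f(t)\) and the interval spanned by \(f(t_{1})\) and \(f(t_{2})\), and evaluates it by brute force on a grid. The two test functions, a steep monotone ramp and a narrow spike, as well as all names are my own choices.

```python
def dist_to_segment(x, a, b):
    """Distance from the real number x to the interval spanned by a and b."""
    lo, hi = min(a, b), max(a, b)
    return max(0.0, lo - x, x - hi)


def omega2(f, delta, grid_size=200):
    """Brute-force grid version of sup d(f(t), [f(t1), f(t2)]) over t1 <= t <= t2 <= t1 + delta."""
    vals = [f(i / grid_size) for i in range(grid_size + 1)]
    window = max(1, round(delta * grid_size))  # number of grid steps spanning delta
    worst = 0.0
    for i in range(grid_size + 1):
        for j in range(i, min(i + window, grid_size) + 1):
            for k in range(i, j + 1):
                worst = max(worst, dist_to_segment(vals[k], vals[i], vals[j]))
    return worst


def steep_ramp(t):
    """Monotone: climbs from 0 to 1 on the short interval [0.49, 0.51]."""
    return min(1.0, max(0.0, (t - 0.49) / 0.02))


def spike(t):
    """Oscillation: goes up to 1 at t = 0.5 and back down to 0 within [0.45, 0.55]."""
    return max(0.0, 1.0 - abs(t - 0.5) / 0.05)


print("steep ramp:", omega2(steep_ramp, 0.1))
print("spike:     ", omega2(spike, 0.1))
```

The monotone ramp gives 0 however steep it is, because every intermediate value lies between the two end values. The spike gives (up to rounding) its full height 1 as soon as \(\delta\) is large enough to see both of its feet.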

Theorem 2 (Compactness in \(M_{1}\), see e.g. [12, Theorem 12.12.2])

A set \(K\subseteq\mathbb{D}_{[0,1]}(\mathbb{R})\) is relatively compact in \(M_{1}\) if and only if

1. the set is uniformly bounded in the sense that

$$\sup_{f\in K}\|f\|_{\infty}<+\infty;$$

2. the oscillations vanish uniformly over \(K\):

$$\begin{cases}\lim_{\delta\downarrow 0}\sup_{f\in K}\omega_{\delta}^{\prime\prime}(f)&=0\\ \lim_{\delta\downarrow 0}\sup_{f\in K}|f(\delta)-f(0)|&=0\\ \lim_{\delta\downarrow 0}\sup_{f\in K}|f(1-)-f(1-\delta)|&=0\end{cases}.$$

The above description seems to differ from the short characterisation in Theorem 1 (Compactness in \(J_{1}\)), but a similar description for compact sets w.r.t. \(J_{1}\) can be found e.g. in [1, Theorem 12.4].

We finish this section with a small overview of which topologies have which properties. For completeness, I also include the topologies \(J_{2}\) and \(M_{2}\). These additional properties follow columnwise from [12, Theorem 11.6.6]; [3, Limit Theorems for Stochastic Processes, Sect. 2.7] and [12, Theorems 12.12.2]; a similar argument for \(J_{2}\) as in Sect. 3 and for \(M_{2}\) the fact that it is weaker than \(M_{1}\); and [12, Corollary 12.11.1] together with the fact that \(J_{2}\) is stronger than \(M_{2}\).

3.3 Convergence of Stochastic Processes in \(J_{1}\) and \(M_{1}\)

In this section, I simply restate the previous compactness results in terms of tightness of stochastic processes on \(\mathbb{D}_{[0,1]}(\mathbb{R})\). I will furthermore state the generalisation of Corollary 1 to the topologies \(J_{1}\) and \(M_{1}\). This is not immediate, because the projections \(\pi_{t}:f\mapsto f(t)\) are not continuous anymore: already in \(J_{1}\), the projection \(\pi_{t}\), \(t\in(0,1)\), is continuous in \(f\) if and only if \(f\) is continuous in \(t\). (The projections \(\pi_{0}\) and \(\pi_{1}\) are always continuous.) That means that we cannot hope to have the convergence of all finite-dimensional distributions.

Instead, we define the set of all continuity points of a stochastic process \(X\) by

$$T_{X}:=\left\{t\in[0,1]:\mathbb{P}(X_{t-}=X_{t})=1\right\}.$$

Note that 0 is always a continuity point of any stochastic process \(X\), but \(X\) may be discontinuous in \(t=1\). As such, it would be better to say that \(T_{X}\) is the set of times \(t\in[0,1]\) such that the projection \(\pi_{t}:f\mapsto f(t)\) is a.s. continuous. It turns out to be enough to check convergence on \(T_{X}\), whose complement in \([0,1]\) is at most countable, so that \(T_{X}\) is dense in \([0,1]\), see [1, Sect. 13].

Theorem 3 (see e.g. [12, Theorem 11.6.6])

A sequence of càdlàg processes \((X_{n})_{n\geq 1}\) converges in law to a càdlàg process \(X\) w.r.t. either \(J_{1}\) or \(M_{1}\) if and only if \((X_{n})_{n\geq 1}\) is tight in the respective topology and all finite dimensional distributions at times \(t_{i}\in T_{X}\) converge to those of \(X\).

Let us now turn to tightness criteria.

Theorem 4 (Tightness in \(J_{1}\), see e.g. [1, Theorem 13.2])

A sequence of càdlàg processes \((X_{n})_{n\geq 1}\) is tight w.r.t. \(J_{1}\) if and only if

1. it holds that

$$\lim_{C\to+\infty}\limsup_{n}\mathbb{P}\left[\|X_{n}\|_{\infty}\geq C\right]=0;$$

2. for every \(\epsilon> 0\), it holds that

$$\lim_{\delta\downarrow 0}\limsup_{n}\mathbb{P}\left[\omega_{\delta}^{\prime}(X_{n})\geq\epsilon\right]=0.$$

Theorem 5 (Tightness in \(M_{1}\), see e.g. [12, Theorem 12.12.3])

A sequence of càdlàg processes \((X_{n})_{n\geq 1}\) is tight w.r.t. \(M_{1}\) if and only if

1. it holds that

$$\lim_{C\to+\infty}\limsup_{n}\mathbb{P}\left[\|X_{n}\|_{\infty}\geq C\right]=0;$$

2. for every \(\epsilon> 0\), it holds that

$$\begin{cases}\lim_{\delta\downarrow 0}\limsup_{n}\mathbb{P}\left[\omega_{\delta}^{\prime\prime}(X_{n})\geq\epsilon\right]&=0\\ \lim_{\delta\downarrow 0}\limsup_{n}\mathbb{P}\left[|X_{n}(\delta)-X_{n}(0)|> \epsilon\right]&=0\\ \lim_{\delta\downarrow 0}\limsup_{n}\mathbb{P}\left[|X_{n}(1-)-X_{n}(1-\delta)|> \epsilon\right]&=0\\ \end{cases}.$$

For sufficient criteria of tightness in \(J_{1}\) in a very general setting, one may refer to [6, Chap. 3], or [4, Chap. 1] for a less general approach. Convergence criteria for \(M_{1}\) and \(M_{2}\) may be found in [12, Chap. 12].

4 Extending \(J_{1}\) and \(M_{1}\) to More General Skorokhod Spaces

There are some generalisations we are interested in. The first concerns the time interval: obviously, there is no problem with substituting \([0,1]\) with some other finite time interval \([0,T]\), but what about the entire half line \([0,+\infty)\)? Secondly, we would like to be able to consider the space \(\mathbb{D}_{[0,T]}(\mathbb{R}^{k})\) and compare its topology with the product topology \(\mathbb{D}_{[0,T]}(\mathbb{R})^{k}\). Finally, we would like to generalise the topology to a more general range space \(E\) to get Skorokhod topologies on \(\mathbb{D}_{[0,T]}(E)\).

This section is relatively short as I will simply point out possible restrictions and the necessary modifications needed to generalise the topologies.

4.1 Extending Time

As mentioned above, there is no difficulty in extending the topology to \(\mathbb{D}_{[0,T]}(\mathbb{R})\) for any finite time horizon \(T> 0\). One simply replaces the 1s in all definitions by \(T\)s.

Once we can define the topology on any finite time horizon, we would like to extend it to \(\mathbb{D}_{[0,+\infty)}(\mathbb{R})\) in the usual way: a sequence \((f_{n})\subseteq\mathbb{D}_{[0,+\infty)}(\mathbb{R})\) converges to \(f\in\mathbb{D}_{[0,+\infty)}(\mathbb{R})\) if and only if all restrictions to time intervals of the form \([0,T]\) converge to the restrictions of \(f\) to these intervals. However, this approach is doomed: the sequence given by \(f_{n}:=1\!\!\!1_{[1+1/n,+\infty)}\) does not converge to \(1\!\!\!1_{[1,+\infty)}\) in \(\mathbb{D}_{[0,1]}(\mathbb{R})\)! The problem is that the endpoint of the time interval is different from all other points. Since this problem disappears whenever it is a continuity point of the limit function, one remedy is to say that \(f_{n}\) converges to \(f\) if and only if all restrictions to the time intervals of the form \([0,T]\), with \(T\) a continuity point of \(f\), converge to the restrictions of \(f\) to these intervals. A different approach is demonstrated in [1, Sect. 16].

It is possible to define a metric which induces this topology, see e.g. [12, Sects. 3.3 and 12.9] or [1, Sect. 16]. One checks that \(\mathbb{D}_{[0,+\infty)}(\mathbb{R})\) is again a Polish space, see e.g. [1, Theorem 16.3] in the case of \(J_{1}\). By our definition, compactness can be checked by restricting to compact time intervals and using the compactness criteria discussed above.

4.2 Product Skorokhod Topologies

The next step is to generalise the topologies to processes with values in \(\mathbb{R}^{k}\). The easiest solution is to wait for the next section and take the range space \(\mathbb{R}^{k}\). However, there is a second way we can get a topology on \(\mathbb{D}_{[0,1]}(\mathbb{R}^{k})\) by using the fact that

$$\mathbb{D}_{[0,1]}(\mathbb{R}^{k})=\left(\mathbb{D}_{[0,1]}(\mathbb{R})\right)^{k}$$

in the sense of a bijection. From the point of view of topologies, this implies that we may endow \(\mathbb{D}_{[0,1]}(\mathbb{R}^{k})\) with the product topology on \(\left(\mathbb{D}_{[0,1]}(\mathbb{R})\right)^{k}\). It turns out that this topology differs from the topology we would get from the next section. More precisely, the product topology is weaker. For this reason, we speak of the strong topologies obtained by viewing \(\mathbb{R}^{k}\) as the range space and the weak topologies obtained as product topologies. Intuitively, the product topology allows for different time parametrisations in every component whereas in the strong topology, the same time change is used for all components.

A detailed discussion of the weak \(M\)-topologies can be found in [12, Chap. 12]. Unfortunately, I have not found much literature on the weak \(J\)-topologies. It might be that they are less commonly used. One use may be found e.g. in [5, Proof of Lemma 4.3], see also [12, Theorem 11.5.1].

4.3 Towards General Range Spaces

Let \(E\) be a general Polish space. When going back to the definition of \(J_{1}\) in Sect. 3.2, we only need to replace \(\|f\circ\lambda-g\|_{\infty}\) by

\[\sup_{t\in[0,1]}d_{E}\big(f(\lambda(t)),g(t)\big),\]

where \(d_{E}\) is a complete metric inducing the topology of \(E\). It turns out that everything else goes through without any problem. To generalise the compactness result, we only need to adjust the definition of the modulus of continuity in the same way, replacing each absolute difference \(|f(s)-f(t)|\) by \(d_{E}(f(s),f(t))\).

One can verify that \(J_{1}\) preserves all the good properties as long as \(E\) is Polish, see e.g. [6, Chap. 3].

One would hope that this is also possible with the \(M_{1}\)-topology. However, its definition relies on the fact that we can define the straight line between two points in \(E\). In other words, we need a linear structure to define \(M_{1}\), i.e. we can generalise \(M_{1}\) only to Banach spaces. In this setting, the interval \([f(t_{1}),f(t_{2})]\) has to be interpreted as the line segment

\[[f(t_{1}),f(t_{2})]:=\big\{(1-\alpha)f(t_{1})+\alpha f(t_{2}):\alpha\in[0,1]\big\}\]

from \(f(t_{1})\) to \(f(t_{2})\). This restriction is another reason why \(J_{1}\) has become the more prevalent topology.

Notes

A Polish space is a topological space that is separable and completely metrizable.

A family \((P_{\alpha})_{\alpha}\) of probability measures is tight if they vanish uniformly outside compact sets: for every \(\epsilon> 0\) there is a compact set \(K_{\epsilon}\) such that \(\sup_{\alpha}P_{\alpha}(K_{\epsilon}^{c})<\epsilon\).

The acronym càdlàg comes from the French “continue à droite, limite à gauche” which translates to “continuous from the right with left limits” (at any point \(t\)).

I have the impression that there are differences in the nomenclature. Sometimes, the name Skorokhod space is only used for the space endowed with the usual Skorokhod topology which we will construct later on. However, in different contexts other Skorokhod topologies are useful, so that it is necessary to give different names to the topological spaces.

The trace topology, also known as subspace topology, is obtained by restricting all open sets to the subspace.

At least the one induced by this specific example… but it turns out that this is enough.

Again, the metric we defined is not complete. An equivalent complete metric is defined in [12, Sect. 12.8].

References

[1] Billingsley, P.: Convergence of probability measures, second edn. Wiley series in probability and statistics: probability and statistics. A Wiley-Interscience Publication. John Wiley & Sons, New York (1999)

[2] Bogachev, V.I.: Measure theory, vol. 1,2. Springer, Berlin (2007). https://doi.org/10.1007/978-3-540-34514-5

[3] Dorogovtsev, A.A., Kulik, A., Pilipenko, A., Portenko, M.I., Shiryaev, A.N. (eds.): Anatolii V. Skorokhod: selected works, 1st edn. Springer, Cham (2016)

[4] Etheridge, A.M.: An introduction to superprocesses. University lecture ser. American Mathematical Society, Providence (2000). http://cds.cern.ch/record/2623111

[5] Etheridge, A.M., Kurtz, T.G.: Genealogical constructions of population models. Ann. Probab. 47(4), 1827–1910 (2019)

[6] Ethier, S.N., Kurtz, T.G.: Markov Processes: Characterization and Convergence. Wiley series in probability and mathematical statistics. Wiley, New York (1986)

[7] Green, J.W., Valentine, F.A.: On the Arzela-Ascoli Theorem. Math. Mag. 34(4), 199–202 (1961)

[8] Jacod, J., Shiryaev, A.N.: Limit theorems for stochastic processes. Grundlehren der mathematischen Wissenschaften, vol. 288. Springer, Berlin Heidelberg (2003). https://doi.org/10.1007/978-3-662-05265-5

[9] Jakubowski, A.: A Non-Skorohod Topology on the Skorohod Space. Electron. J. Probab. 2, 1–21 (1997). https://doi.org/10.1214/EJP.v2-18

[10] Lowther, G.: Cadlag modifications (2009). https://almostsuremath.com/2009/12/18/cadlag-modifications/, Accessed 14 Aug 2023

[11] Skorokhod, A.V.: Limit theorems for stochastic processes. Theory Probab. Appl. 1(3), 261–290 (1956). https://doi.org/10.1137/1101022

[12] Whitt, W.: Stochastic-process limits, 1st edn. Springer Series in Operations Research and Financial Engineering. Springer, New York (2002)

Acknowledgements

I would like to thank Andrey Pilipenko for bringing the collection [3] of selected works of Skorokhod to my attention. This research has been partially funded by Deutsche Forschungsgemeinschaft (DFG) through grant CRC 1114 Scaling Cascades in Complex Systems, Project Number 235221301, Project C02 Interface dynamics: Bridging stochastic and hydrodynamic descriptions.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kern, J. Skorokhod topologies. Math Semesterber 71, 1–18 (2024). https://doi.org/10.1007/s00591-023-00353-2

DOI: https://doi.org/10.1007/s00591-023-00353-2