Abstract

Purpose

To evaluate the responsiveness and minimal important change (MIC) of the Oswestry Disability Index (ODI), pain during activity on a numeric rating scale (NRSa) and health-related quality of life (EQ-5D), based on data from the Norwegian neck and back registry (NNRR).

Methods

A total of 1617 patients who responded to the NNRR follow-ups at both 6 and 12 months were included in this study. Responsiveness was calculated using the standardized response mean and the area under the receiver operating characteristic (ROC) curve. MIC was calculated using both anchor-based and distribution-based methods.

Results

The condition-specific ODI had the best responsiveness, while the more generic NRSa and EQ-5D had lower responsiveness. Depending on the calculation method, the MIC varied from 3.0 to 9.5 for ODI, from 0.4 to 2.5 for NRSa and from 0.05 to 0.12 for EQ-5D.

Conclusion

In a register-based back pain population, the condition-specific ODI was more responsive to change than the more generic tools NRSa and EQ-5D. The variations in responsiveness and MIC estimates also indicate that they should be regarded as indicative rather than fixed estimates.


Introduction

Back pain is a leading cause of disability globally [1]; however, pain is first and foremost an individual problem. Patient-reported outcome measures (PROMs) are used both scientifically and clinically to assess patients’ subjective symptom burden. Generic PROMs generalize across groups and conditions, while condition-specific instruments are intended to be more specific. Hence, condition-specific instruments are assumed to be more responsive and more likely to detect clinical changes [2]. Around 200 PROMs exist for back pain; however, fewer questionnaires are warranted so that results can be compared across studies and pooled in meta-analyses [3].

Responsiveness is defined as the ability of a PROM to detect change over time in the construct being measured [4]. This is important when assessing the benefits of interventions. A frequently used benchmark for interpreting change scores is the minimal important change (MIC) [5], the smallest change that patients perceive as important. MIC can therefore aid the interpretation of PROM scores and is useful for both planning and evaluating clinical trials [5]. Reported responsiveness and MIC values for the frequently used PROMs in back pain patients diverge [6]. The differences may be caused by different target populations [7,8,9] and different calculation methods (anchor-based or distribution-based [10]).

A national register, the Norwegian Neck and Back Register (NNRR), was developed to improve health care services for neck and back pain patients in specialized care [11]. The register collects data prior to all first outpatient consultations and includes 6- and 12-month follow-ups of pain and function. In addition to patients’ demographic characteristics, the register includes the following PROMs, in line with recommended core outcome measures for low back pain [6, 12]: pain during activity reported on an 11-point numeric rating scale (NRSa) [6], the Oswestry Disability Index (ODI) [12] and health-related quality of life measured with the EuroQol 5-dimension instrument (EQ-5D) [13].

The aim of this study was to calculate the responsiveness and MIC of NRSa (pain during activity), ODI and EQ-5D among patients with low back pain in a Norwegian specialist care setting, using both anchor-based and distribution-based methods.

Materials and methods

Design

The current study is a register-based cohort study based on data from the Norwegian neck and back registry (NNRR) from 2021. NNRR is a national quality register established in 2012. Outpatient clinics in all Departments of Physical Medicine and Rehabilitation (PMR) in Norway that receive neck and back pain patients are obliged to deliver data to NNRR. All patients attending these outpatient clinics are invited, yielding an unselected population that reflects normal clinical settings. The register collects data digitally prior to the PMR baseline consultation and after 6 and 12 months. For the current study, we included back pain patients who completed both follow-up forms and the patient global impression of change (PGIC) (Figs. 1 and 2).

Patient reported outcome measures

Recently, the first study based on NNRR was published, with a detailed description of the PROMs and the set-up of the register [11]. The current study includes pain intensity during activity over the past week (NRSa), reported on an 11-point numeric rating scale ranging from 0 (lowest pain) to 10 (highest pain). The Oswestry Disability Index (ODI) [12] consists of 10 items, each scored from 0 to 5. The summed score is presented as a percentage, where 0% indicates no pain-related disability and 100% maximum pain-related disability [12]. Health-related quality of life for the last 30 days was measured with EQ-5D, which comprises five domains, each with five levels ranging from no problems (1) to extreme problems (5). EQ-5D is reported as an index from − 0.59 to 1, where 1 is the best health state, and a value of ≥ 0.75 is considered normal health-related quality of life. The Norwegian Medicines Agency recommends using the UK EQ-5D-3L algorithm [14].
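The ODI percentage described above can be sketched as follows. This is a minimal illustration, not the registry's scoring code: the function name `odi_percentage` is hypothetical, and the handling of unanswered items (dividing by 5 times the number of answered items) is an assumption based on a common ODI convention that the text does not describe.

```python
def odi_percentage(items):
    """Convert Oswestry Disability Index item scores (0-5 each) to 0-100 %.

    `items` is a sequence of up to 10 item scores; None marks an unanswered
    item. Assumption (not from the source): unanswered items are dropped
    from the denominator, a common ODI scoring convention.
    """
    if len(items) > 10:
        raise ValueError("the ODI has 10 items")
    answered = [score for score in items if score is not None]
    if not answered:
        raise ValueError("no answered ODI items")
    if any(not 0 <= score <= 5 for score in answered):
        raise ValueError("each ODI item is scored from 0 to 5")
    # Sum of item scores divided by the maximum possible sum, as a percentage.
    return 100.0 * sum(answered) / (5 * len(answered))


print(odi_percentage([2, 3, 1, 2, 0, 1, 2, 3, 1, 2]))  # 34.0
```

With all 10 items answered, the maximum possible sum is 50, so each item point corresponds to 2 percentage points.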

Anchor for minimal important change

Patient Global Impression of Change (PGIC) scales are often used as an anchor to categorize patients as improved, deteriorated or without important change [15]. At the 6- and 12-month follow-ups, the patients were asked to rate the effect of the treatment received on a 7-point Likert scale, where (1) was “I am all fine”, (2) “much improved”, (3) “minimally improved”, (4) “unchanged”, (5) “minimally worse”, (6) “much worse” and (7) “worse than ever”. For the statistical analysis, the PGIC was trichotomized into three ordinal categories: 1 to 3 as “improved”, 4 as “unchanged” and 5 to 7 as “worsened”. We also trichotomized 1 and 2 as “much improved”, 3 to 5 as “unchanged” and 6 and 7 as “much worsened”.
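The two trichotomizations can be expressed as simple mappings from the 7-point PGIC. This is a minimal sketch with hypothetical function names; the first mapping assigns 5 to 7 to “worsened”, since category 4 is already “unchanged”.

```python
PGIC_RANGE = range(1, 8)  # 1 = "I am all fine" ... 7 = "worse than ever"


def _check(pgic):
    """Reject values outside the 7-point PGIC scale."""
    if pgic not in PGIC_RANGE:
        raise ValueError("PGIC must be an integer from 1 to 7")


def trichotomize_all(pgic):
    """Scheme 1: 1-3 improved, 4 unchanged, 5-7 worsened."""
    _check(pgic)
    if pgic <= 3:
        return "improved"
    if pgic == 4:
        return "unchanged"
    return "worsened"


def trichotomize_much(pgic):
    """Scheme 2: 1-2 much improved, 3-5 unchanged, 6-7 much worsened."""
    _check(pgic)
    if pgic <= 2:
        return "much improved"
    if pgic <= 5:
        return "unchanged"
    return "much worsened"


for score in PGIC_RANGE:
    print(score, trichotomize_all(score), trichotomize_much(score))
```

In the second scheme, “minimally improved” (3) and “minimally worse” (5) move into the reference group, so the contrast is between clearly changed patients and those with at most minimal change.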

Statistics

The statistical analysis was performed with IBM SPSS Statistics version 28. We present descriptive data as means (standard deviations).

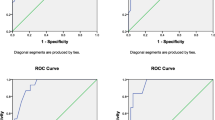

Responsiveness

We calculated responsiveness for both improvement and worsening, with the “unchanged” group as the reference group. Responsiveness was calculated using the standardized response mean (SRM) and the area under the receiver operating characteristic (ROC) curve (AUC). SRM was estimated by dividing the mean change score by the standard deviation (SD) of the change scores in the improved, unchanged and worsened groups of patients. We used SRM > 0.8 to indicate large responsiveness, 0.5 to 0.8 moderate and 0.2 to < 0.5 low. The AUC of the ROC curve (ROCAUC) represents the ability of the PROM to discriminate between patients who have improved and patients who have not. A Spearman correlation coefficient > 0.30 between the anchor and the change score of each measure was required [16]. Responsiveness was considered adequate when the AUC was at least 0.7 [4]. The AUC was estimated with 95% confidence intervals.
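The responsiveness statistics above can be sketched in plain Python. This is an illustration under stated assumptions, not the SPSS procedures used in the study: change scores are assumed to be oriented so that positive values mean improvement (for ODI and NRSa, baseline minus follow-up), the SD is the sample SD (n − 1), the AUC is computed as the Mann–Whitney probability that a randomly chosen improved patient has a larger change than a randomly chosen unchanged patient, and all function names and example values are hypothetical. Confidence intervals are omitted.

```python
from statistics import mean, stdev


def srm(changes):
    """Standardized response mean: mean change / SD of the change scores."""
    return mean(changes) / stdev(changes)


def srm_category(value):
    """Label an SRM with the thresholds used in the text (sign ignored)."""
    size = abs(value)
    if size > 0.8:
        return "large"
    if size >= 0.5:
        return "moderate"
    if size >= 0.2:
        return "low"
    return "below 0.2"


def roc_auc(improved, unchanged):
    """AUC as P(change of improved > change of unchanged); ties count 1/2."""
    wins = 0.0
    for a in improved:
        for b in unchanged:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(improved) * len(unchanged))


def _average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of the average ranks."""
    rx, ry = _average_ranks(x), _average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical ODI change scores and PGIC answers for ten patients.
improved = [12.0, 8.0, 20.0, 6.0, 14.0]
unchanged = [2.0, -4.0, 6.0, 0.0, 4.0]
pgic = [2, 3, 1, 3, 2, 4, 4, 4, 4, 4]
print(srm(improved), srm_category(srm(improved)))
print(roc_auc(improved, unchanged) >= 0.7)  # adequate responsiveness?
# PGIC is lower when better, so rho is negative; the criterion uses |rho|.
print(abs(spearman(pgic, improved + unchanged)) > 0.30)
```

The pairwise AUC loop is quadratic in group size, which is acceptable for a registry cohort of this size; a rank-sum formulation gives the same value more efficiently.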

Anchor-based minimal important change

We used the anchor-based ROC method to estimate the MIC value for each PROM [4]. ROC curves were plotted as sensitivity against 1 − specificity for each change score. Sensitivity is the proportion of importantly improved patients correctly identified by the PROM, and specificity is the proportion of patients with no important change correctly identified by the PROM. The PGIC groups “improved”, “unchanged” and “worsened” will overlap in their change scores, and the optimal cut-off point is where the sum of the proportions of misclassifications, (1 − sensitivity) + (1 − specificity), is smallest, often described as the point closest to the upper-left corner of the ROC curve [5].
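The cut-off rule above can be sketched by scanning candidate change scores. This is a minimal illustration under stated assumptions rather than the SPSS output used in the study: candidate cut-offs are the observed change scores (some software uses midpoints between adjacent values instead), a patient is classified as improved when the change is at or above the cut-off, ties in the criterion are resolved in favour of the lowest cut-off, and the function name and data are hypothetical. Minimizing (1 − sensitivity) + (1 − specificity) is the same as maximizing Youden's index; minimizing the Euclidean distance to the upper-left corner is a different criterion that can select a different cut-off.

```python
def roc_mic_cutoff(improved, unchanged):
    """Return (cutoff, sensitivity, specificity) that minimizes
    (1 - sensitivity) + (1 - specificity).

    improved:  change scores of patients with important improvement (anchor)
    unchanged: change scores of patients with no important change (anchor)
    Positive change is assumed to mean improvement.
    """
    best = None
    for cutoff in sorted(set(improved) | set(unchanged)):
        # Sensitivity: improved patients correctly classified as improved.
        sens = sum(x >= cutoff for x in improved) / len(improved)
        # Specificity: unchanged patients correctly classified as not improved.
        spec = sum(x < cutoff for x in unchanged) / len(unchanged)
        misclassified = (1 - sens) + (1 - spec)
        if best is None or misclassified < best[0]:
            best = (misclassified, cutoff, sens, spec)
    _, cutoff, sens, spec = best
    return cutoff, sens, spec


# Hypothetical change scores, oriented so that positive means improvement.
improved = [3, 4, 5, 6]
unchanged = [0, 1, 2, 3]
print(roc_mic_cutoff(improved, unchanged))  # (3, 1.0, 0.75)
```

Here cut-offs 3 and 4 give the same total misclassification (0.25), which illustrates why the overlap between groups makes the anchor-based MIC somewhat dependent on tie-breaking and cut-off conventions.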

Distribution-based minimal important change

We also calculated the MIC according to the distribution-based approach using two methods.

1. 0.5 SD: half the SD of the measured change in PROM scores [17].
2. Effect size: the SD of the baseline score multiplied by 0.2 (small effect size) [18].
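Both distribution-based estimates are simple multiples of an SD. A minimal sketch, assuming the sample SD (n − 1 denominator) is used; the function names and example values are hypothetical.

```python
from statistics import stdev


def mic_half_sd(change_scores):
    """Distribution-based MIC: half the SD of the change scores."""
    return 0.5 * stdev(change_scores)


def mic_effect_size(baseline_scores, effect_size=0.2):
    """Distribution-based MIC: a small effect size (0.2) times the baseline SD."""
    return effect_size * stdev(baseline_scores)


# Hypothetical NRSa data for five patients (0-10 scale).
baseline = [6, 7, 5, 8, 6]
follow_up = [4, 6, 5, 5, 3]
changes = [b - f for b, f in zip(baseline, follow_up)]  # positive = improvement
print(round(mic_half_sd(changes), 2), round(mic_effect_size(baseline), 2))
```

The two rules use different SDs: the 0.5 SD rule uses the spread of the change scores, while the effect-size rule uses the spread of the baseline scores, so they can differ considerably for the same instrument. Neither uses any information about what patients perceive as important.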

Ethics

The Norwegian Directorate of Health granted national status to the medical quality registry (NNRR) in 2011. The registry adheres to the General Data Protection Regulation, Article 6(1)(e) (public interest), and follows the Regulation on medical quality registries. An updated Data Protection Impact Assessment (DPIA) was performed in 2022 to ensure data security and privacy (2020/5880). Participation is based on written consent. A national expert group reviews all applications for data delivery for research purposes, and approval from the Data Protection Office is required for each specific research project. This study was approved by the Data Protection Officer (22/07399) at Oslo University Hospital, and the data were delivered and stored in accordance with the regulations of both Oslo University Hospital and NNRR.

Results

Compared with the patients who were not included, the patients responding to both follow-ups and the PGIC at 6 and 12 months had similar gender, baseline ODI, NRSa, duration of symptoms, education and employment rate (Table 1). However, the included patients were significantly older, had lower scores on the Hopkins Symptom Checklist-10 (HSCL-10) and were more often registered with Norwegian nationality. The means of the outcome measures are presented in Table 2.

Apart from EQ-5D at 6 months, the change scores from baseline to 6 and 12 months for all PROMs correlated with the anchor question above 0.3 (Table 3).

Patient global impression of change

Based on the responses to the PGIC, 42% of participants considered themselves “improved” at the 6-month follow-up and 43% at 12 months (Table 4). Correspondingly, 17% and 20% considered themselves “much improved” at 6 and 12 months, respectively. At 6 months, 15% perceived a worsening, compared with 18% at 12 months. Likewise, 5% considered themselves “much worsened” at 6 months and 8% at the 12-month follow-up.

Responsiveness

ROCAUC estimates indicated adequate responsiveness (> 0.7) to improvement for ODI at both the 6- and 12-month follow-ups. A slightly higher ROCAUC was found when only patients reporting “much improved” were used as the anchor group than when all improved patients were used (Table 5). NRSa was also responsive for both anchor groups at 6 months, but not at the 12-month follow-up (Table 5). ROCAUC for EQ-5D could only be calculated at the 12-month follow-up, as the correlation between the EQ-5D change score and the anchor at 6 months was below 0.3. For EQ-5D, adequate responsiveness was only found when the “much improved” group was used as the anchor. Low responsiveness was found for all measures regarding worsening of the condition, except for ODI in the “much worse” group. ROC curves for improved and much improved patients at the 6- and 12-month follow-ups are shown in Figs. 3 and 4.

The SRM values in the improved groups indicate large responsiveness for ODI and NRSa at both follow-ups and for both improvement groups (Table 6). For EQ-5D, the SRM indicated large responsiveness at 12 months, with moderate responsiveness for all improved patients. None of the SRM values for the worsened or much worsened groups indicated large responsiveness (Table 6).

Minimal important change

The MIC values for improvement for NRSa varied from 0.4 to 2.5 depending on the calculation method (Table 7). Correspondingly, the MIC for ODI varied from 3.0 to 12.5 (Table 7).

Discussion

To our knowledge, no previous studies have assessed the responsiveness and MIC of NRSa, ODI and EQ-5D in a register-based cohort of patients with back pain. We found that the condition-specific outcome measure, ODI, was more responsive to change than the more generic tools NRSa and EQ-5D. Additionally, the calculated MIC values varied depending on the method applied.

Responsiveness

Overall, we found higher responsiveness for patients reporting “very much” and “much improvement” than for those reporting “minimally improved”. Our results indicate adequate responsiveness of ODI for patients reporting improvement, consistent with previous research on low back pain [19]. NRSa only showed adequate responsiveness at the 6-month follow-up. Although NRS is frequently used to assess back pain, its reported responsiveness is inconsistent [20]. Lastly, EQ-5D had adequate responsiveness only at the 12-month follow-up and specifically in the much-improved group. A review of outcome measures in patients with low back pain found inconsistent responsiveness of EQ-5D [6]. Hence, our findings correspond with prior research indicating that condition-specific PROMs are more responsive than generic tools [6].

NRSa was not responsive to worsening, while ODI and EQ-5D were only responsive for patients reporting “much worse”. Patients are referred to specialized care when they have considerable pain and disability, and their symptoms are expected to decline as a function of treatment and time. Furthermore, the treatment of patients in NNRR is mostly conservative, which reduces the probability of complications compared with a surgical setting. Fewer patients are therefore expected to experience deterioration, making responsiveness to worsening less important than in a surgical context. We would thus argue that the present cohort is well suited for assessing responsiveness to improvement, but less so for detecting declining function.

MIC

In the current study, the distribution-based methods generally provided lower MIC values than the anchor-based method. In most cases, the distribution-based MIC values were lower than the measurement error of the instruments, which questions their validity [5]. Therefore, as suggested in the literature, we focus on the anchor-based MIC for the remainder of the discussion [4]. As the MIC values for “all improved” participants were in the same range, we focus on the “much improved” group.

We found that the NRSa MIC value for improvement was 2.5, which is in line with previously reported MIC values for NRSa in back pain populations [7, 10, 21]. The MIC for ODI varied from 9.5 to 12.5 at 6 and 12 months, which is in the lower range compared with other studies [7, 21]. The MIC for EQ-5D at 12 months was 0.12, which is also in the lower range of previous studies [7]. Hence, the MICs in our study are in the lower range compared with the existing literature. This might be caused by our longer follow-up period [7, 10] and by our MIC values reflecting a heterogeneous back pain population independent of spinal care. Another possible reason is the trichotomization of change (improved, unchanged and worsened). Most other studies only use a dichotomization (improved vs. non-improved), leading to an overestimation of responsiveness and MIC.

Nevertheless, MIC values should be interpreted with awareness of the measurement error of the PROM, the studied population and the context [22]. As stated, there is probably no “gold standard” for MIC. MIC changes according to population and context; hence, it should potentially be regarded as an indicative range rather than a fixed estimate [23].

Finally, the presented MICs increased from six to twelve months. To our knowledge, few studies have assessed MIC at several measuring points in spinal populations. However, a similar pattern appeared in a study of patients with plantar fasciopathy [24]. Potentially, this reflects “response shift”, with patients downscaling the importance of a change as a function of time [24]. This also illustrates a challenge of the anchor-based approach, where varying responses to the anchor may influence MIC values.

Over the years, different terms have been used for the difference patients perceive as important, e.g. MIC, minimal important difference (MID) and minimal clinically important difference (MCID) [23]. Common to all definitions is the word “important”; however, what constitutes an important improvement is open for discussion.

Strengths and limitations

A strength of the present study is the large number of participants, giving a sample size well above the general recommendations for estimating MIC [5]. The heterogeneity of the present back pain population increases the external validity. Measuring points at both six and twelve months provide MIC values applicable at different time points. Anchor-based MIC calculations were included, and all calculations are based on the recommended cut-off values for correlations between instruments [5]. A weakness of the study is the number lost to follow-up; only 45% of the patients responded to both follow-ups. Furthermore, the anchor question may represent a source of MIC variation. Neither the psychometric properties of the anchor nor the trichotomization have been examined. Because the anchor question does not address the specific neck or back condition, patients may emphasize other constructs related to their health. Nevertheless, we believe that when this follow-up is part of a neck and back registry, patients will interpret it in relation to their neck and back pain. However, as in all other studies assessing responsiveness and MIC, recall bias must be considered when evaluating an anchor question [25].

Conclusion

Our estimated MIC thresholds will be helpful when evaluating the effects of interventions offered to back pain patients in a clinical setting. In a register-based twelve-month follow-up of back pain patients, we found that the condition-specific PROM ODI was more responsive to change than the generic tools for pain and health-related quality of life, NRSa and EQ-5D. The variations in responsiveness and MIC estimates also indicate that they should be regarded as indicative rather than fixed estimates.

Data availability

The data that support the findings of this study are available from the Norwegian neck and back registry, but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Data are however available from the authors upon reasonable request and with permission of the Norwegian neck and back registry.

References

Hartvigsen J et al (2018) What low back pain is and why we need to pay attention. Lancet 391(10137):2356–2367

Black N (2013) Patient reported outcome measures could help transform healthcare. BMJ 346:f167

Ramasamy A et al (2017) Assessment of patient-reported Outcome instruments to assess chronic low back Pain. Pain Med 18(6):1098–1110

de Vet HCW, Terwee CB, Mokkink LB, Knol DL (2011) Responsiveness. In: Measurement in Medicine: a practical guide. Cambridge University Press, Cambridge

Terwee CB et al (2021) Minimal important change (MIC): a conceptual clarification and systematic review of MIC estimates of PROMIS measures. Qual Life Res 30(10):2729–2754

Chiarotto A et al (2018) Core outcome measurement instruments for clinical trials in nonspecific low back pain. Pain 159(3):481–495

Braten LCH et al (2022) Minimal important change was on the lower spectrum of previous estimates and responsiveness was sufficient for core outcomes in chronic low back pain. J Clin Epidemiol 151:75–87

Burgstaller JM et al (2020) Evaluating the minimal clinically important difference of EQ-5D-3L in patients with degenerative lumbar spinal stenosis: a Swiss prospective Multicenter Cohort Study. Spine (Phila Pa 1976) 45(18):1309–1316

Kim HJ et al (2015) Determination of the optimal cutoff values for Pain Sensitivity Questionnaire scores and the Oswestry Disability Index for Favorable Surgical outcomes in subjects with lumbar spinal stenosis. Spine (Phila Pa 1976) 40(20):E1110–E1116

Maughan EF, Lewis JS (2010) Outcome measures in chronic low back pain. Eur Spine J 19(9):1484–1494

Bjørneboe J Symptom burden and follow-up of patients with neck and back complaints in specialized outpatient care: a national register study 2023

Grotle M, Brox JI, Vollestad NK (2003) Cross-cultural adaptation of the Norwegian versions of the Roland-Morris disability questionnaire and the Oswestry Disability Index. J Rehabil Med 35(5):241–247

Garratt AM et al (2022) Norwegian population norms for the EQ-5D-5L: results from a general population survey. Qual Life Res 31(2):517–526

van Hout B et al (2012) Interim scoring for the EQ-5D-5L: mapping the EQ-5D-5L to EQ-5D-3L value sets. Value Health 15(5):708–715

Kamper SJ, Maher CG, Mackay G (2009) Global rating of change scales: a review of strengths and weaknesses and considerations for design. J Man Manip Ther 17(3):163–170

Devji T et al (2020) Evaluating the credibility of anchor based estimates of minimal important differences for patient reported outcomes: instrument development and reliability study. BMJ 369:m1714

Norman GR, Sloan JA, Wyrwich KW (2003) Interpretation of changes in health-related quality of life: the remarkable universality of half a standard deviation. Med Care 41(5):582–592

Samsa G et al (1999) Determining clinically important differences in health status measures: a general approach with illustration to the Health utilities Index Mark II. PharmacoEconomics 15(2):141–155

Clement RC et al (2015) A proposed set of metrics for standardized outcome reporting in the management of low back pain. Acta Orthop 86(5):523–533

Chiarotto A et al (2019) Measurement Properties of Visual Analogue Scale, Numeric Rating Scale, and Pain Severity Subscale of the brief Pain Inventory in patients with Low Back Pain: a systematic review. J Pain 20(3):245–263

Ostelo RW et al (2008) Interpreting change scores for pain and functional status in low back pain: towards international consensus regarding minimal important change. Spine (Phila Pa 1976) 33(1):90–94

Copay AG et al (2018) Minimum clinically important difference: current trends in the Orthopaedic Literature, Part II: lower extremity: a systematic review. JBJS Rev 6(9):e2

King MT (2011) A point of minimal important difference (MID): a critique of terminology and methods. Expert Rev PharmacoEcon Outcomes Res 11(2):171–184

Heide M et al Responsiveness of specific and generic patient-reported outcome measures in patients with plantar fasciopathy Disability and Rehabilitation: pp. 1–7

Grøvle L et al (2014) Patients’ ratings of global perceived change during 2 years were strongly influenced by the current health status. J Clin Epidemiol 67(5):508–515

Funding

No funding. The authors have no relevant financial or non-financial interests to disclose.

Open access funding provided by University of Oslo (incl Oslo University Hospital)


Ethics declarations

Ethical approval

This study was approved by the Data Protection Officer at Oslo University Hospital.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Competing interests

No.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Skatteboe, S., Røe, C., Heide, M. et al. Responsiveness and minimal important change of specific and generic patient-reported outcome measures for back patients: the Norwegian Neck and Back Register. Eur Spine J (2024). https://doi.org/10.1007/s00586-024-08394-4


DOI: https://doi.org/10.1007/s00586-024-08394-4