Abstract

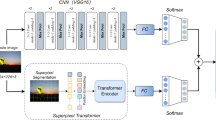

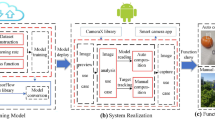

Composition detection and semantic line detection are important research topics in computer vision, and both play an important auxiliary role in image aesthetics analysis. However, few researchers have so far studied the intrinsic relationship between these two related tasks jointly. To address this gap, we propose a cross-attention-based network that detects composition classes and semantic lines synchronously. The network lets the two tasks supervise and guide each other, which improves the detection accuracy of both. First, a pre-trained composition detection model and a pre-trained semantic line detection model serve as two teacher models that provide composition and semantic line labels for the student model. Then, we train the student model with the help of these teacher models. The student model adopts a multi-task learning architecture that we propose, which combines soft and hard parameter sharing. In addition, we develop a cross-attention module so that each task receives the help and supervision it needs from the other. Experimental results show that our method can draw semantic lines while detecting composition classes, which makes composition class detection more interpretable. Our composition detection accuracy reaches 92.57%, and on the semantic line benchmark our AUC_A reaches 92.00%.
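The abstract does not describe the internals of the cross-attention module. As a rough illustration of the general idea only, the sketch below assumes standard scaled dot-product attention, softmax(QK^T / sqrt(d)) V, in which the tokens of the composition branch query the tokens of the semantic-line branch and vice versa, and each branch adds the gathered features back through a residual connection. The function names (`cross_attention`, `exchange`), the identity query/key/value projections, and the residual fusion are hypothetical simplifications, not the authors' module. Plain Python lists stand in for feature tensors so the example runs without dependencies.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (n x k) and a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Numerically stable softmax applied independently to each row."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def cross_attention(queries: Matrix, context: Matrix) -> Matrix:
    """Scaled dot-product attention: each query token attends to all context tokens.

    Hypothetical simplification: the learned projections W_Q, W_K, W_V are
    taken as identity, so Q = queries and K = V = context.
    """
    d = len(queries[0])
    scores = matmul(queries, transpose(context))          # (n_q x n_ctx)
    weights = softmax_rows([[s / math.sqrt(d) for s in row] for row in scores])
    return matmul(weights, context)                       # (n_q x d)


def exchange(comp_feats: Matrix, line_feats: Matrix) -> Tuple[Matrix, Matrix]:
    """Hypothetical bidirectional exchange between the two task branches.

    Both messages are computed from the original (not yet updated) features,
    then each branch adds what it gathered from the other (residual sum).
    """
    comp_msg = cross_attention(comp_feats, line_feats)
    line_msg = cross_attention(line_feats, comp_feats)
    comp_out = [[x + y for x, y in zip(r1, r2)] for r1, r2 in zip(comp_feats, comp_msg)]
    line_out = [[x + y for x, y in zip(r1, r2)] for r1, r2 in zip(line_feats, line_msg)]
    return comp_out, line_out


if __name__ == "__main__":
    comp = [[1.0, 0.0], [0.0, 1.0]]                 # 2 composition-branch tokens
    lines = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]    # 3 semantic-line-branch tokens
    comp_out, line_out = exchange(comp, lines)
    print(len(comp_out), len(line_out))
```

Because each attention row is a softmax, every message is a convex combination of the other branch's features, and the residual sum leaves each branch's own features in place while letting it borrow evidence from the other task. In a practical network one would expect learned projections and features taken from the shared backbone layers rather than these identity mappings.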

References

Jin, W., Sanjabi, M., Nie, S., Tan, L., Ren, X., Firooz, H.: MSD: Saliency-aware knowledge distillation for multimodal understanding. In: Findings of the Association for Computational Linguistics: EMNLP 2021, 3557–3569 (2021)

Hariharan, B., Arbelaez, P., Girshick, R., Malik, J.: Object instance segmentation and fine-grained localization using hypercolumns. IEEE Trans. Pattern Anal. Mach. Intell. (PAMI) (2016)

Mai, L., Jin, H., Liu, F.: Composition-preserving deep photo aesthetics assessment. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 497–506 (2016)

Elkins, J.: Photography Theory. Routledge (2013)

Lee, J.-T., Kim, H.-U., Lee, C., Kim, C.-S.: Photographic composition classification and dominant geometric element detection for outdoor scenes. J. Vis. Commun. Image Represent. 55, 91–105 (2018)

Wen, C.-L., Chia, T.-L.: The fuzzy approach for classification of the photo composition. In: 2012 International Conference on Machine Learning and Cybernetics (ICMLC), vol. 4, IEEE, 1447–1453 (2012)

Lee, J.-T., Kim, H.-U., Lee, C., Kim, C.-S.: Semantic line detection and its applications. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), 3229–3237 (2017)

Jin, D., Lee, J.-T., Kim, C.-S.: Semantic line detection using mirror attention and comparative ranking and matching. In: Proceedings of the European Conference on Computer Vision (ECCV), 119–135 (2020)

Jin, D., Park, W., Jeong, S.-G., Kim, C.-S.: Harmonious semantic line detection via maximal weight clique selection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 16737–16745 (2021)

Zhao, K., Han, Q., Zhang, C.-B., Xu, J., Cheng, M.-M.: Deep Hough transform for semantic line detection. IEEE Trans. Pattern Anal. Mach. Intell. (PAMI) (2021)

Hinton, G., Vinyals, O., Dean, J.: Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531 (2015)

Gou, J., Sun, L., Yu, B., Wan, S., Ou, W., Yi, Z.: Multi-level attention-based sample correlations for knowledge distillation. IEEE Trans. Ind. Inform. (2022)

Yu, Y., Li, B., Ji, Z., Han, J., Zhang, Z.: Knowledge distillation classifier generation network for zero-shot learning. IEEE Trans. Neural Netw. Learn. Syst. (2021)

Gou, J., Yu, B., Maybank, S.J., Tao, D.: Knowledge distillation: a survey. Int. J. Comput. Vis. (IJCV) 129, 1789–1819 (2021)

Li, J., Mei, X., Prokhorov, D., Tao, D.: Deep neural network for structural prediction and lane detection in traffic scene. IEEE Trans. Neural Netw. Learn. Syst. 28, 690–703 (2016)

Wang, Q., Han, T., Qin, Z., Gao, J., Li, X.: Multitask attention network for lane detection and fitting. IEEE Trans. Neural Netw. Learn. Syst. (2020)

Ji, Y., Zhang, H., Jie, Z., Ma, L., Wu, Q.J.: CASNet: A cross-attention Siamese network for video salient object detection. IEEE Trans. Neural Netw. Learn. Syst. 32, 2676–2690 (2020)

Zhang, B., Niu, L., Zhang, L.: Image composition assessment with saliency-augmented multi-pattern pooling (2021)

Hong, C., Du, S., Xian, K., Lu, H., Cao, Z., Zhong, W.: Composing photos like a photographer. In: 2021 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE (2021)

Illingworth, J., Kittler, J.: A survey of the Hough transform. Comput. Vis. Graph. Image Process. 44, 87–116 (1988)

Xue, N., Wu, T., Bai, S., Wang, F., Xia, G.-S., Zhang, L., Torr, P.H.: Holistically-attracted wireframe parsing. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2788–2797 (2020)

Girshick, R.: Fast R-CNN. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), 1440–1448 (2015)

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 580–587 (2014)

Kong, S., Shen, X., Lin, Z., Mech, R., Fowlkes, C.: Photo aesthetics ranking network with attributes and content adaptation. In: European Conference on Computer Vision (ECCV), 662–679 (2016)

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., Fei-Fei, L.: ImageNet: A large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, 248–255 (2009)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale visual recognition. arXiv preprint arXiv:1409.1556 (2014)

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., et al.: ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. (IJCV) 115, 211–252 (2015)

Woo, S., Park, J., Lee, J.-Y., Kweon, I.S.: CBAM: Convolutional block attention module. In: Proceedings of the European Conference on Computer Vision (ECCV), 3–19 (2018)

Shen, Z., Cui, C., Huang, J., Zong, J., Chen, M., Yin, Y.: Deep adaptive feature aggregation in multi-task convolutional neural networks. In: Proceedings of the 29th ACM International Conference on Information & Knowledge Management, 2213–2216 (2020)

Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Powers, D.M.: Evaluation: from precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv preprint arXiv:2010.16061 (2020)

Huang, J., Ling, C.X.: Using AUC and accuracy in evaluating learning algorithms. IEEE Trans. Knowl. Data Eng. 17, 299–310 (2005)

Caruana, R.: Multitask learning. Mach. Learn. 28, 41–75 (1997)

Misra, I., Shrivastava, A., Gupta, A., Hebert, M.: Cross-stitch networks for multi-task learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 3994–4003 (2016)

Gao, Y., Ma, J., Zhao, M., Liu, W., Yuille, A.L.: NDDR-CNN: Layerwise feature fusing in multi-task CNNs by neural discriminative dimensionality reduction. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 3205–3214 (2019)

Cui, C., Shen, Z., Huang, J., Chen, M., Xu, M., Wang, M., Yin, Y.: Adaptive feature aggregation in deep multi-task convolutional neural networks. IEEE Trans. Circ. Syst. Video Technol. 32, 2133–2144 (2022)

Park, J., Woo, S., Lee, J.-Y., Kweon, I.S.: BAM: Bottleneck attention module. arXiv preprint arXiv:1807.06514 (2018)

Selvaraju, R.R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., Batra, D.: Grad-CAM: Visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), 618–626 (2017)

Author information

Contributions

Qinggang Hou: conceptualization, methodology, software, writing—original draft. Yongzhen Ke: resources, writing—review and editing, supervision, project administration. Kai Wang: validation, data curation. Fan Qin: investigation, methodology, writing—review and editing. Yaoting Wang: formal analysis, visualization.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Communicated by S. Sarkar.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hou, Q., Ke, Y., Wang, K. et al. Synchronous composition and semantic line detection based on cross-attention. Multimedia Systems 30, 121 (2024). https://doi.org/10.1007/s00530-024-01307-x

DOI: https://doi.org/10.1007/s00530-024-01307-x