Abstract

An important set of theorems in geometric analysis consists of constant rank theorems for a wide variety of curvature problems. In this paper, for geometric curvature problems in compact and non-compact settings, we provide new proofs which are both elementary and short. Moreover, we employ our method to obtain constant rank theorems for homogeneous and non-homogeneous curvature equations in new geometric settings. One of the essential ingredients of our method is a generalization of a differential inequality, satisfied in a viscosity sense by the smallest eigenvalue of a linear map (Brendle et al., Acta Math 219:1–16, 2017), to the corresponding inequality for the subtrace. The viscosity approach provides a concise way to work around the well-known technical hurdle that eigenvalues are only Lipschitz in general. This paves the way for a simple induction argument.


1 Introduction

We introduce a viscosity approach to a broad class of constant rank theorems. Such theorems say that, under suitable conditions, a positive semi-definite bilinear form on a manifold that satisfies a uniformly elliptic PDE must have constant rank in the manifold. In this sense, constant rank theorems can be viewed as a strong maximum principle for tensors. The aim of this paper is two-fold. Firstly, we want to present a new approach to constant rank theorems. It is based on the idea that the subtraces of a linear map satisfy a linear differential inequality in a viscosity sense, which allows us to use the strong maximum principle. This avoids the use of nonlinear test functions, as in [5], as well as the need for approximation by simple eigenvalues, as in [24]. Secondly, we show that the simplicity of this method allows us to obtain previously undiscovered constant rank theorems, in particular for non-homogeneous curvature type equations. To illustrate the idea, we give a new proof of the following full rank theorem for the Christoffel-Minkowski problem, a.k.a. the \(\sigma _{k}\)-equation.

Theorem 1.1

[14, Theorem 1.2]

Let \(({\mathbb {S}}^n,g,\nabla )\) be the unit sphere with standard round metric and connection. Suppose \(n\ge 2\), \(1\le k\le n-1\) and \(0<s,\phi \in C^{\infty }({\mathbb {S}}^n)\) satisfy

where \(\sigma _{k}\) is the k-th elementary symmetric polynomial of the eigenvalues of r with respect to g and \(\Gamma _{k}\) is the k-th Garding cone. Then r is positive definite.

Proof

For convenience, we define

Then \(F=f.\) Differentiate F and use Codazzi, where a semi-colon stands for covariant derivatives and we use the summation convention:

Hence the tensor r satisfies the elliptic equation

Now we deduce an inequality for the lowest eigenvalue of r, \( \lambda _{1}\), in a viscosity sense. Let \(\xi \) be a smooth lower support at \(x_0\in \mathbb {S}^{n}\) for \(\lambda _{1}\) and let \(D_1\ge 1\) denote the multiplicity of \(\lambda _{1}(x_0)\). Denote by \(\Lambda \) the complement of the set \(\{i,j,k,l>D_1\}\) in \(\{1,\dots ,n\}^{4}\). We use a relation between the derivatives of \(\xi \) and r, and the inverse concavity of F (cf. [3, Lemma 5], [2]) to estimate in normal coordinates at \(x_{0}\):

Then the strong maximum principle for viscosity solutions (cf. [4]) implies that the set \(\{\lambda _{1}=0\}\) is open. Hence, if \(\lambda _{1}\) were zero somewhere, it would be zero everywhere. However, we know it is positive somewhere, since at a minimum of s we have \(r>0\). \(\square \)
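The last step of the proof can be made explicit by a short computation. It is a sketch under the assumption that r denotes the spherical Hessian of s, that is \(r = \nabla ^2 s + s\,g\), which is the standard convention for the Christoffel-Minkowski problem. At a point \(x_{\min }\) where s attains its minimum we have \(\nabla ^2 s(x_{\min })\ge 0\) and \(s(x_{\min })>0\), hence

$$\begin{aligned} r_{ij}(x_{\min }) = s_{;ij}(x_{\min }) + s(x_{\min })\,g_{ij} \ge s(x_{\min })\,g_{ij} > 0. \end{aligned}$$

In particular \(\lambda _{1}(x_{\min })\ge s(x_{\min })>0\), so the open and closed set \(\{\lambda _{1}=0\}\) cannot be all of \(\mathbb {S}^{n}\) and must therefore be empty.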

The proof may be summarized as follows: apply the viscosity differential inequality from [3, Lemma 5] to the minimum eigenvalue \(\lambda _1\) of the spherical Hessian r. Then the strong maximum principle shows that, since there is a point at which \(\lambda _1 > 0\), we must have \(\lambda _1 > 0\) everywhere, and hence the Hessian has constant, full rank. A similar argument was employed in [19] for obtaining curvature estimates along a curvature flow.

Our main approach here is to generalize the viscosity inequality to the subtrace \(G_m = \lambda _1 + \dots + \lambda _m\), the sum of the first m eigenvalues; see Lemma 3.2 below. Then, arguing by induction, if \(\lambda _1 \equiv \dots \equiv \lambda _{m-1} \equiv 0\), the strong maximum principle shows that either \(G_m > 0\) everywhere or \(G_m \equiv 0\), which yields constant rank theorems (in short, CRT).
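The induction step can be sketched schematically as follows. The display is only an outline, assuming the viscosity inequality has the form obtained at the end of the proof of Theorem 3.4, with \(F^{ij}\) the linearization of the curvature function and c a local constant. Suppose \(\alpha \ge 0\) and \(\lambda _1\equiv \dots \equiv \lambda _{m-1}\equiv 0\). Then

$$\begin{aligned} G_m = \lambda _m \ge 0 \quad \text{and}\quad F^{ij}\xi _{;ij} \le c\,(\xi + |\nabla \xi |)\quad \text{for every smooth lower support } \xi \text{ of } G_m. \end{aligned}$$

By the strong maximum principle the zero set \(\{G_m=0\}\) is open. It is also closed, since the eigenvalues are continuous, so on a connected manifold it is either empty or the whole manifold.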

We say a symmetric 2-tensor \(\alpha \) is Codazzi, provided \(\nabla \alpha \) is totally symmetric. Here is a prototypical CRT:

Theorem 1.2

(Homogeneous CRT) [10, Theorem 1.4] Suppose \(\alpha \) is a Codazzi, non-negative, symmetric 2-tensor on a connected Riemannian manifold \((M,g,\nabla )\) satisfying \(\Psi (\alpha ,g) = f> 0\), where \(\Psi \) is one-homogeneous, inverse concave and strictly elliptic (see Definition 1.3 and Assumption 2.1), and we have \(\nabla ^2 f^{-1} + \tau f^{-1} g \ge 0\) with \(\tau (x)\) the minimum sectional curvature at x. Then \(\alpha \) is of constant rank (see Note 1).

We state a more general version of CRT that allows the curvature function to be non-homogeneous and to explicitly depend on \(x\in M\) as well. To state the result, we need a few definitions.

Definition 1.3

Let \(\Gamma \subset \mathbb {R}^{n}\) be an open, convex cone such that

Suppose \((M^{n},g)\) is a smooth Riemannian manifold. A \(C^{\infty }\)-function

is said to be a pointwise curvature function, if for any \(x\in M\), the map \(F(\cdot ,x)\) is symmetric under permutation of the \(\lambda _{i}\). Such a map generates another map (denoted by F again) given by

where \(\mathcal {U}\) is a suitable open set and \(\lambda = (\lambda _{i})_{1\le i\le n}\) are the eigenvalues of \(\alpha \) with respect to g, or equivalently, the eigenvalues of the linear map \(\alpha ^{\sharp }\) defined by \(g(\alpha ^{\sharp }(v),w) = \alpha (v,w).\) Note that F can be considered as a map on an open set of \(\mathbb {R}^{n\times n}\) via \(F(\alpha ^{\sharp },x) = F(\alpha ,g,x)\); see [23].

With the convention \(\alpha ^{i}_{j} = g^{ik}\alpha _{kj}\), where \((g^{kl})\) is the inverse of \((g_{kl})\):

Note that \(F^{ij} = F^{i}_{k}g^{kj}\). Moreover, F is said to be

(i) Strictly elliptic, if \(F^{ij}\eta _{i}\eta _{j} >0\quad \forall 0\ne \eta \in \mathbb {R}^{n},\)

(ii) One-homogeneous, if for all \(x\in M\), \(F(\cdot ,x)\) is homogeneous of degree one, and

(iii) Inverse concave, if the map \(\tilde{F} \in C^{\infty }(\Gamma _{+}\times M)\) defined by

$$\begin{aligned} \begin{aligned} \tilde{F}(\lambda _{i},x)= - F(\lambda _{i}^{-1},x)\quad \text{ is } \text{ concave. } \end{aligned} \end{aligned}$$
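A simple example may help to fix ideas; it is an illustration chosen for this purpose and not one of the curvature functions treated later. Consider the trace \(F(\lambda ) = \lambda _{1}+\dots +\lambda _{n}\) on \(\Gamma _{+}\), with no dependence on x. Then

$$\begin{aligned} \tilde{F}(\lambda ) = -\sum _{i=1}^{n}\frac{1}{\lambda _{i}},\qquad \frac{\partial ^{2}\tilde{F}}{\partial \lambda _{i}\partial \lambda _{j}} = -\frac{2\,\delta _{ij}}{\lambda _{i}^{3}} \quad \text{on } \Gamma _{+}, \end{aligned}$$

so the Hessian of \(\tilde{F}\) is negative definite and the trace is inverse concave. It is also one-homogeneous, and \(\partial F/\partial \lambda _{i} = 1 > 0\) for every i, which corresponds to strict ellipticity.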

We use the convention for the Riemann tensor from [11]. For a Riemannian or Lorentzian manifold \((M,g,\nabla )\),

and we lower the upper index to the first slot:

The respective local coordinate expressions are \((R^{m}_{jkl})\) and \((R_{ijkl})\).

Definition 1.4

(i) A pointwise curvature function \(F\in C^{\infty }(\Gamma \times M)\) is \(\Phi \)-inverse concave for some

$$\begin{aligned} \begin{aligned} \Phi \in C^{\infty }(\Gamma \times M, T^{4,0}(M)), \end{aligned} \end{aligned}$$

provided at all \(\beta >0\) we have

$$\begin{aligned} \begin{aligned} F^{ij,kl}\eta _{ij}\eta _{kl}+2F^{ik}\tilde{\beta }^{jl}\eta _{ij}\eta _{kl}\ge \Phi ^{ij,kl}\eta _{ij}\eta _{kl}, \end{aligned} \end{aligned}$$

where \(\tilde{\beta }^{ik}\beta _{kj}=\delta ^{i}_{j}\).

(ii) For \(\alpha \in \Gamma \) we define a curvature-adjusted modulus of \(\Phi \)-inverse concavity,

$$\begin{aligned} \begin{aligned} \omega _{F}(\alpha )(\eta ,v)&=\Phi ^{ij,kl}\eta _{ij}\eta _{kl}+D_{xx}^{2}F(v,v)+2D_{x^{k}}F^{ij}\eta _{ij}v^{k}\\&+ {{\,\textrm{tr}\,}}_{g}{{\,\textrm{Rm}\,}}(\alpha ^{\sharp },v,D_{\alpha ^{\sharp }}F,v), \end{aligned} \end{aligned}$$

where D denotes the product connection on \(\mathbb {R}^{n\times n}\times M\). Here the curvature term denotes contracting the vector parts of the (1, 1) tensors \(\alpha ^{\sharp } = \alpha ^i_j, D_{\alpha ^{\sharp }} F = F^k_l\) with the Riemann tensor and tracing the resulting bilinear form with respect to the metric so that

$$\begin{aligned} \begin{aligned} {{\,\textrm{tr}\,}}_{g}{{\,\textrm{Rm}\,}}(\alpha ^{\sharp },e_m,D_{\alpha ^{\sharp }}F,e_m) = g^{jl} \alpha ^i_j F^k_l R_{imkm}. \end{aligned} \end{aligned}$$

Remark 1.5

If \((A, x) \mapsto -F(A^{-1}, x)\) is concave (i.e., F is inverse concave), then we take \(\Phi =0\) and for all \((\eta ,v)\) we have

On several occasions, where there is a homogeneity condition on F, we will be able to choose a good positive \(\Phi \) that allows us to relax assumptions on the other variables of the operator F; see Sect. 2.

We state the main result of the paper which contains Theorem 1.2 as a special case.

Theorem 1.6

(Non-homogeneous CRT) Let \((M,g,\nabla )\) be a connected Riemannian manifold and \(\Gamma \) an open, convex cone containing \(\Gamma _{+}\). Suppose \(F\in C^{\infty }(\Gamma \times M)\) is a \(\Phi \)-inverse concave, strictly elliptic pointwise curvature function. Let \(\alpha \) be a Codazzi, non-negative, symmetric 2-tensor with eigenvalues in \(\Gamma \) and

Suppose for all \(\Omega \Subset M\) there exists a positive constant \(c=c(\Omega )\), such that for all eigenvectors v of \(\alpha ^{\sharp }\) there holds

Then \(\alpha \) is of constant rank.

Remark 1.7

It might seem more natural to replace the condition on \(\omega _F\) with the condition

for every \(\eta \) and all v. Indeed, such a condition certainly leads to constant rank theorems: taking in particular \(\eta = \nabla _v \alpha \) and v an eigenvector, we may apply Theorem 1.6. However, requiring the inequality for all \(\eta , v\) is too restrictive for applications such as Theorem 1.2. See the proof in Sect. 2 below, where the required inequality is only proved to hold for \(\eta = \nabla _v \alpha \) and v an eigenvector.

An application of Theorem 1.6 to a non-homogeneous curvature problem is given in Theorem 2.4. Such a result was declared interesting in [16]. The full results are listed in Sect. 2.

CRT (also known as the microscopic convexity principle) was initially developed in [9] in two dimensions for convex solutions of semi-linear equations \(\Delta u = f(u)\), using the maximum principle and the homotopy deformation lemma. The result was extended to higher dimensions in [20]. The continuity method combined with a CRT yields existence of strictly convex solutions to important curvature problems. For example, a CRT was an important ingredient in the study of prescribed curvature problems such as the Christoffel-Minkowski problem and the prescribed Weingarten curvature problem [12, 14, 15]. Later, general theorems for fully nonlinear equations were obtained in [5, 10] under the assumption that \(A \mapsto F(A^{-1})\) is locally convex. These approaches are based on the observation that a non-negative definite matrix valued function A has constant rank if and only if there is an \(\ell \) such that the elementary symmetric functions satisfy \(\sigma _{\ell } \equiv 0\) and \(\sigma _{\ell -1} > 0\). Applying this observation requires rather delicate, long computations and the introduction of clever auxiliary functions. The difficulties are at least in part due to the non-linearity of \(\sigma _{\ell }\). An alternative approach was taken in [24, 25], using a linear combination of the lowest m eigenvalues, which provides a linearity advantage at the expense of losing regularity compared with \(\sigma _{\ell }\). The authors get around this difficulty by perturbing A so that the eigenvalues are distinct (thus restoring regularity) and then using an approximation argument. Our approach based on the viscosity inequality shows that \(G_m\) enjoys sufficient regularity to apply the strong maximum principle, and this suffices to obtain a self-contained proof of the CRT.
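The observation about elementary symmetric functions can be checked on a diagonal example, given here purely as an illustration. Let \(A = {{\,\textrm{diag}\,}}(0,a,b)\) with \(a,b>0\). Then

$$\begin{aligned} \sigma _{1}(A) = a+b>0,\qquad \sigma _{2}(A) = ab>0,\qquad \sigma _{3}(A) = 0, \end{aligned}$$

so \(\ell = 3\) and the rank of A is \(2 = \ell -1\). In general, for a non-negative definite matrix, \(\sigma _{\ell -1}>0\) means that at least \(\ell -1\) eigenvalues are positive, while \(\sigma _{\ell }=0\) means that at most \(\ell -1\) eigenvalues are positive.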

We remark here that our method is capable of reproving the results in [5, 10]. Namely, with the help of Theorem 3.4 it is possible to prove that any convex solution u to

has constant rank under the assumption that

is concave for fixed p. This result does not follow from Theorem 1.6, but by using a suitably redefined \(\omega _{F}\) in Theorem 3.4, this result follows in the same way as Theorem 1.6. Here we rather want to focus on geometric problems.

We proceed as follows: In Sect. 2 we collect and prove direct applications of Theorem 1.6. In Sect. 3 we prove the viscosity inequality satisfied by the subtrace, a result that is of interest by itself. After some further corollaries, we conclude with the proof of Theorem 1.6.

2 Applications

In this section, we collect a few applications of Theorem 1.6. We fix an assumption that we need on several occasions.

Assumption 2.1

Let \(\Gamma \) be as in Definition 1.3.

(i) \(\Psi \in C^{\infty }(\Gamma )\) is a positive, strictly elliptic, homogeneous function of degree one and normalized to \(\Psi (1,\dots ,1)=n,\)

(ii) \(\Psi \) is inverse concave.

Recall that such a function \(\Psi \) at invertible arguments \(\beta \) satisfies

for all symmetric \((\eta _{ij})\); see for example [2].

In order to facilitate notation, for covariant derivatives we use semi-colons, e.g., the components of the second derivative \(\nabla ^{2}T\) of a tensor are denoted by

First, we illustrate how Theorem 1.2 follows from Theorem 1.6.

Proof of Theorem 1.2

We define \(F = \Psi - f.\) In view of (2.1) and Definition 1.4, we have

Let \(x_0\in M\) and \((e_i)_{1\le i\le n}\) be an orthonormal basis of eigenvectors for \(\alpha ^{\sharp }(x_0)\). In the associated coordinates, we calculate

for some constant c. Hence the claim follows from Theorem 1.6. \(\square \)

For a \(C^2\) function \(\zeta \) on a space (M, g) of constant curvature \(\tau _M\),

The next theorem contains the full rank theorems from [14, 15, 17] as special cases.

Theorem 2.2

(\(L_p\)-Christoffel-Minkowski Type Equations) Suppose \((M,g,\nabla )\) is either the hyperbolic space \(\mathbb {H}^{n}\) or the sphere \(\mathbb {S}^{n}\) equipped with their standard metrics and connections. Let \(\Psi \) satisfy Assumption 2.1, \(k\ge 1,\) \(p\ne 0\) and \(0<\phi ,s\in C^{\infty }(M)\) satisfy

If either

then \(r_M[s]\) is of constant rank. In particular, if \(M=\mathbb {S}^n,\) then we have

Proof

Note that \(\alpha =r_M[s]\) is a Codazzi tensor. We define

For simplicity, we rewrite \(f=us^{q-1},\) where \(u=\phi ^{\frac{1}{k}}\) and \(q=\frac{p+k-1}{k}.\)

As in the proof of Theorem 1.2, we have

Now we calculate

Therefore, if either \(r_{\mathbb {H}^n}[u^{-\frac{1}{q}}]\ge 0,\; q<0\) or \(r_{\mathbb {S}^n}[u^{-\frac{1}{q}}]\ge 0,\; q\ge 1,\) then

for some \(c\ge 0.\) The result follows from Theorem 1.6. Since \(\mathbb {S}^n\) is compact, at some point y we must have \(r_{{\mathbb {S}}^n}[s](y)>0.\) Hence \(r_{{\mathbb {S}}^n}[s]>0\) on M. \(\square \)

Remark 2.3

Let \(M=x(\Omega )\), \(x:\Omega \hookrightarrow \mathbb {R}^{n,1}\) be a co-compact, convex, spacelike hypersurface. The support function of M, \(s:\mathbb {H}^n\rightarrow \mathbb {R}\), is defined by \(s(z)=\inf \{-\langle z,p\rangle ;\, p\in M\},\) and \(r_{\mathbb {H}^n}[s]\) is non-negative definite. Moreover, if \(r>0\), then the eigenvalues of r with respect to g are the principal radii of curvature; e.g., [1]. Therefore, the curvature problem stated in the previous theorem can be considered as an \(L_p\)-Christoffel-Minkowski type problem in the Minkowski space.

In [16] the authors asked about the validity of CRT for non-homogeneous curvature problems. In this respect we have the following theorem. First we have to recall the definition of the Garding cones:

where \(\sigma _{k}\) is the k-th elementary symmetric polynomial of the \(\lambda _{i}\). In \(\Gamma _{\ell }\), all \(\sigma _{k}\), \(1\le k\le \ell \), are strictly elliptic and the \(\sigma _{k}^{1/k}\) are inverse concave, see [18]. For a cone \(\Gamma \subset \mathbb {R}^{n}\), on a Riemannian manifold (M, g) a bilinear form \(\alpha \) is called \(\Gamma \) -admissible, if its eigenvalues with respect to g are in \(\Gamma \).
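For orientation, the following display records the usual definition of the Garding cones from the literature, together with the resulting nesting; it is stated here as an assumption about the notation.

$$\begin{aligned} \Gamma _{k} = \{\lambda \in \mathbb {R}^{n}:\ \sigma _{j}(\lambda )>0 \ \text{ for all } 1\le j\le k\},\qquad \Gamma _{+} = \Gamma _{n}\subset \Gamma _{n-1}\subset \dots \subset \Gamma _{1}. \end{aligned}$$

For \(n=2\) this gives \(\Gamma _{1} = \{\lambda _{1}+\lambda _{2}>0\}\) and \(\Gamma _{2} = \{\lambda _{1}+\lambda _{2}>0,\ \lambda _{1}\lambda _{2}>0\} = \Gamma _{+}\). Thus in dimension two a \(\Gamma _{1}\)-admissible form may have one negative eigenvalue, while a \(\Gamma _{2}\)-admissible form is positive definite.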

Theorem 2.4

(A non-homogeneous curvature problem) Let \(\phi >0\) be a smooth function on \((\mathbb {S}^{n},g,\nabla )\) with

\(\psi _{\ell }\equiv 1\) and \(0< \psi _{k}\in C^{\infty }(\mathbb {S}^{n})\) for \(1\le k\le \ell -1\) satisfy (see Note 2)

Let \(\alpha \) be a \(\Gamma _{\ell }\)-admissible, Codazzi, non-negative, symmetric 2-tensor, such that

Then \(\alpha \) is of constant rank. In particular, when \(\alpha =r_{\mathbb {S}^n}[s]\ge 0\) for some positive function \(s\in C^{\infty }(\mathbb {S}^n)\), then in fact we have \(\alpha >0.\)

Proof

The result follows quickly from Theorem 1.6. We define

Since \(\sigma _{k}^{1/k}\) is inverse concave and 1-homogeneous, F is \(\Phi \)-inverse concave with

Let \(x_0\in M\) and \((e_i)_{1\le i\le n}\) be an orthonormal basis of eigenvectors for \(\alpha ^{\sharp }(x_0).\) Now using

we deduce

Therefore, \(\omega _F(\alpha )(\nabla _{e_i}\alpha ,e_i)+c\alpha _{ii}\) is non-negative for some constant c. \(\square \)

Let \((N,{\bar{g}},\bar{D})\) be a simply connected Riemannian or Lorentzian spaceform of constant sectional curvature \(\tau _N\). That is, N is either the Euclidean space \(\mathbb {R}^{n+1}\), the sphere \(\mathbb {S}^{n+1}\), the hyperbolic space \(\mathbb {H}^{n+1}\) with respective sectional curvature \(0,1,-1\) or the \((n+1)\)-dimensional Lorentzian de Sitter space \(\mathbb {S}^{n,1}\) with sectional curvature 1.

Assume \(M=x(\Omega )\) given by \(x:\Omega \hookrightarrow N\) is a connected, spacelike, locally convex hypersurface of N and

where \(\tilde{N}\) denotes the dual manifold of N, i.e.,

Here f is extended as a zero-homogeneous function to the ambient space. We write \(\nu , h, s\) for the future directed (timelike) normal, the second fundamental form and the support function of M, respectively (cf. [7, 8]). The eigenvalues of h with respect to the induced metric on M are ordered as \(\kappa _1\le \dots \le \kappa _n\) and we write in short

The Gauss equation (cf. [11, (1.1.37)]) relates extrinsic and intrinsic curvatures,

where \(\sigma = {\bar{g}}(\nu ,\nu )\) and the second fundamental form is defined by

Theorem 2.5

Let \((N,{\bar{g}},{\bar{D}})\) be one of the spaces above and let \(\Psi \) satisfy Assumption 2.1. Let M be a connected, spacelike, locally convex and \(\Gamma \)-admissible hypersurface such that

where \(0<f\in C^{\infty }(M\times \mathbb {R}_{+}\times \tilde{N})\) and

Then the second fundamental form of M is of constant rank.

Proof

Define \(F(h,g,x) = \Psi (h^{\sharp }) - f(x,s(x),\nu (x)).\) Let \(x_0\in M\) and \((e_i)_{1\le i\le n}\) be an orthonormal basis of eigenvectors for \(h^{\sharp }(x_0).\) Now in view of Theorem 1.6, the claim follows from [8, p. 15] and a computation using the Gauss equation (2.2):

\(\square \)

The following corollary contains the CRT from [12, 13] as special cases.

Corollary 2.6

(Curvature Measures Type Equations) Suppose the curvature function \(\Psi \) satisfies Assumption 2.1, \(1\le k\le n-1\), \(p\in \mathbb {R}\) and \(0<\phi \in C^{\infty }({\mathbb {S}}^n)\). Let M be a \(\Gamma \)-admissible convex hypersurface of \(\mathbb {R}^{n+1}\) which encloses the origin in its interior and suppose

If

then M is strictly convex.

3 A viscosity approach

The following lemma served as the main motivation for us to study the constant rank theorems with a viscosity approach. It shows that the smallest eigenvalue of a bilinear form satisfies a viscosity inequality. In the context of extrinsic curvature flows a similar approach was taken to prove preservation of convex cones; see [21, 22]. There it was shown that the distance of the vector of eigenvalues to the boundary of a convex cone satisfies a viscosity inequality.

Lemma 3.1

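[3, Lemma 5] Let the eigenvalues of a symmetric 2-tensor \(\alpha \) with respect to a metric \((g,\nabla )\) at \(x_0\) be ordered via

$$\begin{aligned} \lambda _{1}=\dots =\lambda _{D_1}<\lambda _{D_1+1}\le \dots \le \lambda _{n}, \end{aligned}$$

for some \(D_1\ge 1.\) Let \(\xi \) be a lower support for \(\lambda _1\) at \(x_0.\) That is, \(\xi \) is a smooth function such that in an open neighborhood of \(x_0\),

$$\begin{aligned} \xi \le \lambda _{1}, \end{aligned}$$

and \(\xi (x_0)=\lambda _1(x_0).\) Choose an orthonormal frame for \(T_{x_0}M\) such that

$$\begin{aligned} \alpha _{ij}=\delta _{ij}\lambda _{i}. \end{aligned}$$

Then at \(x_0\) we have for \(1\le k\le n\),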

(1)

$$\begin{aligned} \begin{aligned} \alpha _{ij;k}=\delta _{ij}\xi _{;k}\quad 1\le i,j\le D_1, \end{aligned} \end{aligned}$$

(2)

$$\begin{aligned} \begin{aligned} \xi _{;kk}\le \alpha _{11;kk}-2\sum _{j>D_1}\frac{(\alpha _{1j;k})^2}{\lambda _j-\lambda _1}. \end{aligned} \end{aligned}$$

While the previous lemma is sufficient for full rank theorems (i.e., when the respective linear map is non-negative, and positive definite at least at one point), we need to generalize [3, Lemma 5] from the smallest eigenvalue to an arbitrary subtrace of a matrix to treat constant rank theorems.
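The following example shows why lower supports are a convenient notion when eigenvalues coincide. It is an illustration under the simplifying assumption that M is flat \(\mathbb {R}^{2}\) with the Euclidean metric. Let \(\alpha (x) = {{\,\textrm{diag}\,}}(1+x_{1},\,1-x_{1})\) near the origin. Then

$$\begin{aligned} \lambda _{1}(x) = 1-|x_{1}|,\qquad \lambda _{2}(x) = 1+|x_{1}|,\qquad \lambda _{1}+\lambda _{2} = 2, \end{aligned}$$

so \(\lambda _{1}\) is Lipschitz but not differentiable on \(\{x_{1}=0\}\), where \(D_1 = 2\). If \(\xi \) were a lower support for \(\lambda _{1}\) at the origin, then Lemma 3.1-(1) would force \(\alpha _{11;1} = \xi _{;1} = \alpha _{22;1}\), that is \(1=-1\). Hence no smooth lower support exists at such points and the viscosity inequality holds there vacuously, while the full trace remains smooth.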

To formulate the following lemma, we introduce some notation. For a symmetric 2-tensor \(\alpha \) on a vector space V with inner product g, let \(\alpha ^{\sharp }\) be the metric raised endomorphism defined by \(g(\alpha ^{\sharp }(X), Y) = \alpha (X, Y)\). Then \(\alpha ^{\sharp }\) is diagonalizable and we write

for the eigenvalues with distinct eigenspaces \(E_k\) of dimension \(d_k = \dim E_k\), \(1 \le k \le N\). For convenience, let \(E_0 = \{0\}\) and \(d_0 = 0\). Define

for \(0\le j \le N\) so that

Let \((e_j)_{1 \le j \le n}\) be an orthonormal basis of eigenvectors corresponding to the eigenvalues \((\lambda _j)_{1\le j \le n}\) giving \(E_k = {{\,\textrm{span}\,}}\{e_{\bar{d}_{k-1}+1}, \dots , e_{\bar{d}_k}\}\) and \(\bar{E}_k = {{\,\textrm{span}\,}}\{e_1, \dots , e_{\bar{d}_k}\}\). For each \(1 \le m \le n\), there is a unique j(m) such that

Then \(\bar{d}_{j(m)-1} < m \le \bar{d}_{j(m)}\). For convenience, we write

Note that \(D_m\) is the largest number such that

and hence

The subspace \(V_m\) is invariant under \(\alpha ^{\sharp }\) and the trace of \(\alpha ^{\sharp }\) restricted to \(V_m\) is the subtrace,

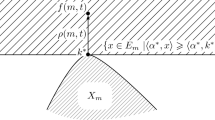

This subtrace is characterized by Ky Fan’s maximum principle (cf. [6, Theorem 6.5]), taking the infimum with respect to all traces of \(\pi _P \circ \alpha ^{\sharp }|_P\) over m-planes of the tangent spaces where \(\pi _P\) is orthogonal projection onto an m-plane P:

where \((g^{kl})\) is the inverse of \(g_{kl}=g(w_{k},w_{l})\). Now suppose \(\alpha \) is a bilinear form on a Riemannian manifold (M, g), \(x_0\in M\) and \((e_{i})_{1\le i\le n}\) is an orthonormal basis of eigenvectors at \(x_{0}\) with eigenvalues

Letting \(w_i(x)\), \(1 \le i \le m\), be any set of linearly independent local vector fields around \(x_0\) with \(w_i(x_0) = e_i\), then we have a smooth upper support function for \(G_m\) at \(x_0\):

where \(\alpha _{kl} = \alpha (w_k(x), w_l(x))\). We make use of \(\Theta \) to prove the next lemma generalizing Lemma 3.1.
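As a quick illustration of the variational characterization, consider the simplest case \(n=2\), \(m=1\) in an orthonormal eigenbasis \((e_1,e_2)\); the parametrization by an angle \(\theta \) is a choice made for this sketch. For \(w = \cos \theta \, e_1 + \sin \theta \, e_2\) we have \(g(w,w)=1\) and

$$\begin{aligned} \alpha (w,w) = \lambda _{1}\cos ^{2}\theta + \lambda _{2}\sin ^{2}\theta = \lambda _{1} + (\lambda _{2}-\lambda _{1})\sin ^{2}\theta \ge \lambda _{1} = G_{1}, \end{aligned}$$

with equality at \(\theta = 0\). In the same way, by Ky Fan's principle, the quantity \(g^{kl}\alpha (w_k,w_l)\) computed from any local frame with \(w_k(x_0)=e_k\) lies above \(G_m\) and touches it at \(x_0\).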

Lemma 3.2

Let (M, g) be a Riemannian manifold and let \(\alpha \) be a symmetric 2-tensor on TM. Suppose \(1\le m\le n\) and \(\xi \) is a (local) lower support at \(x_0\) for the subtrace \(G_m(\alpha ^{\sharp })\). Then at \(x_0\) we have

(1)

$$\begin{aligned} \begin{aligned} \xi _{;i} = {{\,\textrm{tr}\,}}_{V_m} \alpha _{;i} = \sum _{k=1}^m \alpha _{kk;i}, \end{aligned} \end{aligned}$$

(2)

$$\begin{aligned} \begin{aligned} \xi _{;ii}\le&\sum _{k=1}^{m}\alpha _{kk;ii}-2\sum _{k=1}^{m}\sum _{r>D_m}\frac{(\alpha _{kr;i})^{2}}{\lambda _{r}-\lambda _{k}}, \end{aligned} \end{aligned}$$

where \(V_m = {{\,\textrm{span}\,}}\{e_1(x_0), \dots , e_m(x_0)\}\) for any choice of m orthonormal eigenvectors \(e_k\) with corresponding eigenvalues \(\lambda _1, \dots , \lambda _m\) satisfying

Proof

For this proof we use the summation convention for indices ranging between 1 and m. Let \(\xi \) be a lower support for \(G_m\) at \(x_{0}\). Fix an index \(1\le i\le n\) and let \(\gamma (s)\) be a geodesic with \(\gamma (0)=x_{0}\) and \({\dot{\gamma }}(0)=e_{i}(x_0)\). Let \((v_k)_{1\le k \le m}\) be any basis (not necessarily orthonormal) for \(V_m\) as in the statement of the lemma. As mentioned above, for any m linearly independent vector fields \((w_k(s))_{1\le k \le m}\) along \(\gamma \) with \(w_k(0) = v_k(x_0)\), \(\alpha _{kl} = \alpha (w_{k},w_{l})\) and \((g^{kl}) = (g(w_{k},w_{l}))^{-1}\), the function

satisfies

and hence

Since \(V_m \subseteq \bar{E}_{j(m)}\), choosing \(w_k\) such that \({\dot{w}}_k(0) \perp \bar{E}_{j(m)}(x_0)\) gives

and hence also

Then we compute

giving the first part.

Now we move on to the second derivatives. For this we make the additional assumptions, \(v_k = e_k\) and \(\ddot{w}_k(0) = 0\). We first calculate

since \({\dot{g}}_{kl}(0) = 0\) and \(g^{kl}(0) = \delta ^{kl}\). Then from \(\ddot{w}_k(0) = 0\) we obtain

From the local minimum property,

From \({\dot{w}}_k(0) \perp \bar{E}_{j(m)}\), we may write \({\dot{w}}_k (0) = \sum \limits _{r>D_m} c_k^r e_r\) giving

Optimizing yields the specific choice

From this we obtain

\(\square \)
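Two limiting cases give a consistency check of Lemma 3.2; they are worked out here only as an illustration. For \(m=1\) the second inequality reduces to Lemma 3.1-(2),

$$\begin{aligned} \xi _{;ii}\le \alpha _{11;ii}-2\sum _{r>D_1}\frac{(\alpha _{1r;i})^{2}}{\lambda _{r}-\lambda _{1}}. \end{aligned}$$

For \(m=n\) we have \(D_n=n\), the sum over \(r>D_n\) is empty, and the lemma states

$$\begin{aligned} \xi _{;i} = \sum _{k=1}^{n}\alpha _{kk;i},\qquad \xi _{;ii}\le \sum _{k=1}^{n}\alpha _{kk;ii}. \end{aligned}$$

This is what one expects, since \(G_n = {{\,\textrm{tr}\,}}_{g}\alpha \) is smooth and \(G_n-\xi \) has a local minimum at \(x_0\).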

Corollary 3.3

Let \(\alpha \) be a non-negative, symmetric 2-tensor on TM. Suppose for some \(1 \le m \le n\) that \(\dim \ker \alpha ^{\sharp } \ge m-1\) or equivalently that the eigenvalues of \(\alpha ^{\sharp }\) satisfy \(\lambda _{1} \equiv \dots \equiv \lambda _{m-1} \equiv 0.\) Then for all \(x_{0}\) and any lower support \(\xi \) for \(G_{m}\) at \(x_{0}\) and all \(1\le i\le n\) we have

(1) \((\nabla _i\alpha (x_{0}))_{|\ker \alpha ^{\sharp } \times \ker \alpha ^{\sharp }} = 0,\)

(2) \((\nabla _i\alpha (x_{0}))_{|E_{j(m)} \times E_{j(m)}} = g\nabla _i \xi (x_{0}),\quad \text{ if }~\lambda _{m}(x_{0})>0.\)

Proof

We use a basis \((e_{i})\) as in Lemma 3.2. To prove (1) we may assume \(\lambda _1(x_0) = 0\), and hence the zero function is a lower support for \(\lambda _1\). By Lemma 3.1, we have \(\nabla \alpha _{kl}=0\) for all \(1\le k,l\le d_1\) proving the first equation.

Now we prove (2). For \(m=1\) the claim follows from Lemma 3.2-(1). Suppose \(m > 1\). If \(d_1 \ge m\) at \(x_0\), then \(\lambda _m(x_0) = 0\), which violates our assumption. Hence \(d_1=m-1\) and \(E_1(x_0) = {{\,\textrm{span}\,}}\{e_1, \dots , e_{m-1}\}\). Taking any unit vector \(v \in E_2(x_0)={{\,\textrm{span}\,}}\{e_m,\ldots ,e_{D_m}\}\) and applying Lemma 3.2-(1) with \(V_m={{\,\textrm{span}\,}}\{e_1,\ldots ,e_{m-1},v\}\) gives

Polarizing the quadratic form \(v \mapsto \nabla _i \alpha (v, v)\) over \(E_2(x_0)\) then shows

\(\square \)

Now we state the key outcome of the results in this section. We want to acknowledge that the following proof is inspired by the beautiful paper [24] and their sophisticated test function.

Theorem 3.4

Under the assumptions of Theorem 1.6, if \(\dim \ker \alpha ^{\sharp } \ge m-1\), for all \(\Omega \Subset M\) there exists a constant \(c=c(\Omega )\), such that for all \(x_{0}\in \Omega \) and any lower support function \(\xi \) for \(G_m(\alpha ^{\sharp })\) at \(x_0\) we have

Proof

In view of our assumption, \(\lambda _{m-1}\equiv 0\). Hence the zero function is a smooth lower support at \(x_{0}\) for every subtrace \(G_{q}\) with \(1\le q\le m-1\). Therefore by Lemma 3.2, for every \(1\le q\le m-1\) and every \(1\le i\le n\) we obtain

Due to the Ricci identity, we have the commutation formula

Taking into account Lemma 3.2 and adding the inequalities (3.1) for \(1\le q\le m-1\), we have at \(x_{0}\),

Now differentiating the equation \(F(\alpha ^{\sharp }, x)=0\) yields

Then substituting above gives

where we have used that \(\alpha \) is Codazzi and the fact that \(1 \le k \le m \le D_m\) in splitting the sum involving \(F^{ij,rs}\) into terms where at least two indices are at most \(D_{m}\) and the remaining indices \(i,j,r,s>D_m\). We have also used \(\lambda _j - \lambda _k \ge \lambda _j\), and that for some constant c,

Now for every \(1\le k\le m\) define

Then

In addition we define \(\alpha ^{\sharp }_{\varepsilon } = \alpha ^{\sharp }+\varepsilon {{\,\textrm{id}\,}}\), which has positive eigenvalues for \(\varepsilon >0\). In the sequel, a subscript \(\varepsilon \) denotes evaluation of a quantity at \(\alpha ^{\sharp }_{\varepsilon }\), e.g., we put \(F^{ij}_{\varepsilon } = F^{ij}(\alpha ^{\sharp }_{\varepsilon }).\) We have

In view of Definition 1.4, and the definition of \(\omega _F,\)

Adding and subtracting some terms gives

Next we estimate the last two lines of (3.2). We have

where for the last inequality we used Corollary 3.3. Let us define

Note that if \(\lambda _m(x_0)=0\), then \(D_q=D_m\) for all \(q\le m\) and hence \({\mathcal {R}}=0\). If \(\lambda _{m}(x_{0})>0,\) then we have \(D_q=m-1\) for all \(q\le m-1\) and

Therefore, due to uniform ellipticity, we can use

to show that \({\mathcal {R}}\le c'\xi .\) Then by the assumptions on \(\omega _F\), the right hand side of (3.2) is bounded by \(c(\xi + |\nabla \xi |)\) completing the proof. \(\square \)

Remark 3.5

Here we crucially used that F is \(\Phi \)-inverse concave, then we took the limit \(\varepsilon \rightarrow 0\) and finally swapped \(\eta _k\) with \(\nabla _{e_k} \alpha \) absorbing the extra terms. If on the other hand we tried to swap first without using \(\Phi \)-inverse concavity, the extra terms would involve \(\sum _{r=1}^{n}\frac{F^{ii}_{\varepsilon }(\nabla _{e_k} (\alpha _{\varepsilon })_{ir})^{2}}{\lambda _{r}+\varepsilon }\). Since \(\lambda _r = 0\) for \(1\le r \le m-1\) this blows up in the limit \(\varepsilon \rightarrow 0\) and cannot be absorbed.

Proof of Theorem 1.6

Let \(k:= \max _{x\in M}\dim \ker \alpha ^{\sharp }(x).\) If \(k=0\), we are done. By induction we show that for all \(1\le m\le k\) we have \(\lambda _{m}\equiv 0\). For \(m=1\), clearly we have \(\dim \ker \alpha ^{\sharp } \ge m-1\) and hence by Theorem 3.4 a lower support \(\xi \) for \(G_1 = \lambda _1\) locally satisfies

By the strong maximum principle [4], \(\lambda _1 \equiv 0\).

Now suppose the claim holds true for \(m-1\), i.e.,

Then a lower support \(\xi \) for \(G_m\) satisfies

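Since \(G_m\) vanishes at a point where \(\dim \ker \alpha ^{\sharp } = k\ge m\), the strong maximum principle gives \(G_m \equiv 0\). Hence \(G_m \equiv 0\) for all \(m\le k.\) Since k is the maximum dimension of the kernel, we must have \(\lambda _{k+1}>0\) everywhere, and the rank is identically \(n-k\). \(\square \)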

Data Availability

Not applicable.

Code Availability

Not applicable.

Notes

1. Note that in [10, Theorem 1.4] \(F:=-\Psi ^{-1}\).

2. Note this forces \(\psi _{1}\) to be constant.

References

Andrews, B., Chen, X., Fang, H., McCoy, J.: Expansion of Co-compact convex spacelike hypersurfaces in Minkowski space by their curvature. Indiana Univ. Math. J. 64(2), 635–662 (2015)

Andrews, B.: Pinching estimates and motion of hypersurfaces by curvature functions. J. für die Reine und Angewandte Math. 608, 17–33 (2007)

Brendle, S., Choi, K., Daskalopoulos, P.: Asymptotic behavior of flows by powers of the Gaussian curvature. Acta Math. 219(1), 1–16 (2017)

Bardi, M., Da Lio, F.: On the strong maximum principle for fully nonlinear degenerate elliptic equations. Arch. Math. 73(4), 276–285 (1999)

Bian, B., Guan, P.: A microscopic convexity principle for nonlinear partial differential equations. Invent. Math. 177(2), 307–335 (2009)

Bhatia, R.: Perturbation bounds for matrix eigenvalues, Classics in Applied Mathematics, vol. 53. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA (2007). Reprint of the 1987 original

Bryan, P., Ivaki, M.N., Scheuer, J.: Harnack inequalities for curvature flows in Riemannian and Lorentzian manifolds. J. für die Reine und Angewandte Math. 2020(764), 71–109 (2019)

Bryan, P., Ivaki, M.N., Scheuer, J.: Parabolic approaches to curvature equations. Nonlinear Analysis 203, 112174 (2021)

Caffarelli, L.A., Friedman, A.: Convexity of solutions of semilinear elliptic equations. Duke Math. J. 52(2), 431–456 (1985)

Caffarelli, L., Guan, P., Ma, X.-N.: A constant rank theorem for solutions of fully nonlinear elliptic equations. Commun. Pure Appl. Math. 60(12), 1769–1791 (2007)

Gerhardt, C.: Curvature problems, Series in Geometry and Topology, vol. 39. International Press of Boston Inc., Somerville (2006)

Guan, P., Lin, C., Ma, X.-N.: The Christoffel-Minkowski problem II: Weingarten curvature equations. Chin. Ann Math. Series B 27(6), 595–614 (2006)

Guan, P., Lin, C., Ma, X.-N.: The existence of convex body with prescribed curvature measures. Int. Math. Res. Not. 2009(11), 1947–1975 (2009)

Guan, P., Ma, X.-N.: The Christoffel-Minkowski problem I: Convexity of solutions of a Hessian equation. Invent. Math. 151(3), 553–577 (2003)

Guan, P., Ma, X.-N., Zhou, F.: The Christoffel-Minkowski problem III: existence and convexity of admissible solutions. Commun. Pure Appl. Math. 59(9), 1352–1376 (2006)

Guan, P., Zhang, X.: A class of curvature type equations. Pure Appl. Math. Quarterly 17(3), 865–907 (2021)

Hu, C., Ma, X.-N., Shen, C.: On the Christoffel-Minkowski problem of Firey’s p-sum. Calc. Var. Partial. Differ. Equ. 21(2), 137–155 (2004)

Huisken, G., Sinestrari, C.: Convexity estimates for mean curvature flow and singularities of mean convex surfaces. Acta Math. 183(1), 45–70 (1999)

Ivaki, M.N.: Deforming a hypersurface by principal radii of curvature and support function. Calc. Var. Partial. Differ. Equ. 58(1), 1–18 (2019)

Korevaar, N.J., Lewis, J.L.: Convex solutions of certain elliptic equations have constant rank Hessians. Arch. Rational Mech. Anal. 97(1), 19–32 (1987)

Langford, M.: Motion of hypersurfaces by curvature. Australian National University, Australia (2014)

Langford, M.: A general pinching principle for mean curvature flow and applications. Calc. Var. Partial. Differ. Equ. 56(4), 107 (2017)

Scheuer, J.: Isotropic functions revisited. Arch. Math. 110(6), 591–604 (2018)

Székelyhidi, G., Weinkove, B.: On a constant rank theorem for nonlinear elliptic PDEs. Discrete Contin. Dyn. Syst. Series A 36(11), 6523–6532 (2016)

Székelyhidi, G., Weinkove, B.: Weak Harnack inequalities for eigenvalues and constant rank theorems. Commun. Partial Differ. Equ. 46(8), 1585–1600 (2021)

Funding

Open Access funding enabled and organized by Projekt DEAL. PB was supported by the ARC within the research grant “Analysis of fully non-linear geometric problems and differential equations”, number DE180100110. MI was supported by a Jerrold E. Marsden postdoctoral fellowship from the Fields Institute. JS was supported by the “Deutsche Forschungsgemeinschaft” (DFG, German Research Foundation) within the research scholarship “Quermassintegral preserving local curvature flows”, grant number SCHE 1879/3-1.

Author information

Contributions

Not applicable

Ethics declarations

Conflict of interests

There are no conflicts of interest.

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Communicated by A. Malchiodi.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bryan, P., Ivaki, M.N. & Scheuer, J. Constant rank theorems for curvature problems via a viscosity approach. Calc. Var. 62, 98 (2023). https://doi.org/10.1007/s00526-023-02442-5
