Abstract

In this paper, we start from a very natural system of cross-diffusion equations, which can be seen as a gradient flow, for the Wasserstein distance, of a certain functional. Unfortunately, the cross-diffusion system is not well-posed, as a consequence of the fact that the underlying functional is not lower semi-continuous. We then consider the relaxation of the functional, and prove existence of a solution, in a suitable sense, for the gradient flow of the relaxed functional. This gradient flow also has a cross-diffusion structure, but the mixture of two different regimes, which are determined by the relaxation, makes this study non-trivial.

1 Introduction

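The starting point of this paper is the following very natural system of PDEs

$$\begin{aligned} {\left\{ \begin{array}{l} \partial _t\rho =\Delta \rho +\nabla \cdot (\rho \nabla \mu ),\\ \partial _t\mu =\Delta \mu +\nabla \cdot (\mu \nabla \rho ), \end{array}\right. } \end{aligned}$$(1.1)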

complemented with no-flux boundary conditions in a bounded domain \(\Omega \). This cross-diffusion system describes the motion of two populations, each subject to diffusion and trying to avoid the presence of the other, so that each density acts as a potential in the evolution equation satisfied by the other.

This model was studied in [4], where it is obtained as a continuum version of a discrete lattice model proposed in [1], to account for the territorial development of two competing populations. Similar models appear in mathematical biology to describe the evolution of interacting species that are under the influence of population pressure due to intra- and inter-specific interferences (see e.g. [10, 16]). However, in these papers, the models considered always enjoy some special structure that ensures some “convexity”, which, crucially, is not satisfied by System (1.1), as we will explain later.

The existence of solutions for (1.1) is a very challenging problem. An easy computation shows that the system is parabolic when \(\rho \mu <1\) but has an anti-parabolic behavior when \(\rho \mu >1\). Existence of solutions for short time is proven by the third author and her collaborators in [4], under the assumption that the initial data satisfy \(\rho _0\mu _0<1\).

A noticeable property of system (1.1) is the following: it can be seen as a gradient flow for a suitable functional in the Wasserstein space.

The usual notion of gradient flows applies to Hilbert spaces but in the last two decades the interest has grown for gradient flows in metric spaces and in particular in the space of probability measures endowed with the Wasserstein distance (see [3] and [15]), after the seminal work by Jordan, Kinderlehrer and Otto [12], who found a gradient flow structure for the Fokker–Planck equation. Applying the same ideas not to a single PDE but to a system of PDEs, thus looking for gradient flows in the space of pairs of probability measures, is more recent (see [7, 13]) and more delicate.

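At a formal level, given a functional F defined on probabilities on a given domain \(\Omega \), its gradient flow corresponds to the evolution PDE

$$\begin{aligned} \partial _t\rho =\nabla \cdot \left( \rho \nabla \frac{\delta F}{\delta \rho }\right) , \end{aligned}$$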

where \(\delta F/\delta \rho \) is the first variation of the functional F (formally defined through the condition \(F(\rho +\varepsilon \delta \rho )=F(\rho )+\varepsilon \int \frac{\delta F}{\delta \rho } \,\textrm{d}\delta \rho +o(\varepsilon )\)). This equation is endowed with no-flux boundary conditions on \(\partial \Omega \). Analogously, given a functional F defined on pairs \((\rho ,\mu )\) of probabilities on the domain \(\Omega \), the gradient flow of F in \(W_2(\Omega )\times W_2(\Omega )\) would be given by the system

$$\begin{aligned} {\left\{ \begin{array}{l} \partial _t\rho =\nabla \cdot \left( \rho \nabla \frac{\delta F}{\delta \rho }\right) ,\\ \partial _t\mu =\nabla \cdot \left( \mu \nabla \frac{\delta F}{\delta \mu }\right) , \end{array}\right. } \end{aligned}$$

with again no-flux on the boundary. Of course, we formally define the two partial first variations via the condition \(F(\rho +\varepsilon \delta \rho , \mu +\varepsilon \delta \mu )=F(\rho ,\mu )+\varepsilon \int \frac{\delta F}{\delta \rho }\,\textrm{d}\delta \rho +\varepsilon \int \frac{\delta F}{\delta \mu }\,\textrm{d}\delta \mu +o(\varepsilon )\).

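Here, it turns out that (1.1) is the gradient flow of the following functional

$$\begin{aligned} F_0(\rho ,\mu ):=\int _\Omega f_0(\rho (x),\mu (x))\,\textrm{d}x, \end{aligned}$$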

where the function \(f_0\) is given by \(f_0(a,b)=a \log a + b\log b +ab\), and \(\rho (x)\), \(\mu (x)\) stand for the densities of the two measures, which are supposed to be absolutely continuous and identified with their \(L^1\) densities.

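The main problem in considering the function \(f_0\) is that it is not globally convex, as we have, denoting by \(D^2f_0\) the Hessian of \(f_0\),

$$\begin{aligned} D^2f_0(a,b)=\begin{pmatrix} \frac{1}{a} &{} 1\\ 1 &{} \frac{1}{b} \end{pmatrix}, \end{aligned}$$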

and this matrix is only positive-definite if \(ab<1\). The non-convexity of \(f_0\) translates into the non-parabolic behavior of the system outside the region where \(\rho \mu <1\).
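The positivity condition can be checked by hand through Sylvester's criterion, since the determinant of the Hessian is \(\frac{1}{ab}-1\). The following minimal sketch does the same check numerically; the entries of the Hessian are hard-coded from differentiating \(f_0\) twice, and the function names are illustrative only.

```python
def hessian_f0(a, b):
    """Hessian of f0(a, b) = a*log(a) + b*log(b) + a*b, for a, b > 0."""
    return ((1.0 / a, 1.0), (1.0, 1.0 / b))


def is_positive_definite(h):
    """Sylvester's criterion for a symmetric 2x2 matrix."""
    (h11, h12), (_, h22) = h
    return h11 > 0 and h11 * h22 - h12 * h12 > 0


# Compare the sign condition a*b < 1 with positive-definiteness at a few points.
for a, b in [(0.5, 0.5), (0.5, 1.5), (2.0, 1.0), (3.0, 0.2)]:
    print(a, b, a * b < 1, is_positive_definite(hessian_f0(a, b)))
```

On each sample point the two booleans printed on a line agree, in accordance with the equivalence between \(ab<1\) and positive-definiteness.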

Yet, in terms of the functional \(F_0\), the situation is even worse. Indeed, integral functionals with a non-convex integrand are not lower semi-continuous for the weak convergence of probability measures. This is a non-negligible issue when applying variational methods. Indeed, one of the main tools to study gradient flows in a metric setting is the so-called minimizing movement method (see [2, 8]). Let us quickly explain this tool in the general case of a metric space, before specializing it to the case of interest here.

For a given functional F, acting on a metric space M endowed with a distance d, the minimizing movement scheme consists in building an approximation of the gradient flow (which is a curve x(t) in M) as follows: we fix a time step \(\tau >0\) and look for a sequence \(x^\tau _k\) such that

$$\begin{aligned} x^\tau _{k+1}\in \textrm{argmin}_{x\in M}\left\{ F(x)+\frac{d^2(x,x^\tau _k)}{2\tau }\right\} . \end{aligned}$$

In the case where the metric space is the space of probability measures on \(\Omega \) and the distance d is the Wasserstein distance, this iterated minimization scheme is known under the name of Jordan-Kinderlehrer-Otto (JKO) scheme. It produces a sequence of probability measures which, when appropriately interpolated, would approximate the gradient flow of F.
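To illustrate the iteration in the simplest possible setting, the following sketch runs the minimizing movement scheme in \(M={\mathbb {R}}\) with the Euclidean distance and the toy energy \(F(x)=x^2/2\). Both choices are illustrative assumptions and not the Wasserstein setting of this paper. Each step minimizes \(F(x)+|x-x_k|^2/(2\tau )\) by a golden-section search, and the output is compared with the exact gradient flow \(x(t)=x_0e^{-t}\).

```python
import math


def minimize_1d(phi, lo, hi, iters=200):
    """Golden-section search for the minimizer of a unimodal function on [lo, hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(iters):
        c = hi - inv_phi * (hi - lo)
        d = lo + inv_phi * (hi - lo)
        if phi(c) < phi(d):
            hi = d
        else:
            lo = c
    return 0.5 * (lo + hi)


def minimizing_movement(F, x0, tau, steps, radius=10.0):
    """Iterate x_{k+1} = argmin_x F(x) + |x - x_k|^2 / (2 tau) on the real line."""
    xs = [x0]
    for _ in range(steps):
        prev = xs[-1]
        xs.append(minimize_1d(lambda x: F(x) + (x - prev) ** 2 / (2.0 * tau),
                              prev - radius, prev + radius))
    return xs


# Toy energy (an assumption made for illustration): F(x) = x^2 / 2.
xs = minimizing_movement(lambda x: 0.5 * x * x, x0=1.0, tau=0.01, steps=100)
print(xs[-1], math.exp(-1.0))  # discrete scheme at time 1 versus exact flow
```

For this quadratic energy each step has the closed form \(x_{k+1}=x_k/(1+\tau )\), i.e. the implicit Euler scheme, which is why the discrete values approach \(e^{-t}\) as \(\tau \rightarrow 0\).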

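Here, we will consider the case of a gradient flow in \(M = W_2(\Omega )\times W_2(\Omega )\) (and the distance d is the natural product of the Wasserstein distance). The minimizing movement method then consists in solving the following family of minimization problems:

$$\begin{aligned} (\rho ^\tau _{k+1},\mu ^\tau _{k+1})\in \textrm{argmin}_{(\rho ,\mu )}\left\{ F(\rho ,\mu )+\frac{W_2^2(\rho ,\rho ^\tau _k)}{2\tau }+\frac{W_2^2(\mu ,\mu ^\tau _k)}{2\tau }\right\} . \end{aligned}$$(1.3)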

However, if F is not lower semi-continuous for the weak convergence, which is the case when \(F=F_0\) defined above, while the terms in \(W_2^2\) are continuous, the existence of a minimizer in the above iterated minimization problem is not always guaranteed. From the variational point of view, it is necessary to replace the functional \(F_0\) with its relaxation, or lower semi-continuous envelope.

We stress that the relation between the gradient flow of a functional and its lower semi-continuous envelope is not clear in terms of PDEs, but it is clearer at the level of the JKO scheme. Indeed, if we fix \(\tau >0\) and a tolerance parameter \(\delta >0\), when facing a non-lower semi-continuous functional F (whose lower semi-continuous envelope is denoted by \(\bar{F}\)), we could define a \(\delta \)-approximated JKO scheme in the following way: we denote by \(\textrm{argmin}_x^\delta G(x)\) the set of \(\delta \)-almost minimizers of G, i.e. the set \(\{x\,:\, G(x)<\inf G +\delta \}\), which is always non-empty, and we pick any sequence \(x^{\tau ,\delta }_k\) satisfying

$$\begin{aligned} x^{\tau ,\delta }_{k+1}\in \textrm{argmin}^\delta _x\left\{ F(x)+\frac{d^2(x,x^{\tau ,\delta }_k)}{2\tau }\right\} . \end{aligned}$$

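It can be proven, as an application of \(\Gamma \)-convergence, that, for fixed \(\tau \) and letting \(\delta \rightarrow 0\), any such sequence converges to a sequence satisfying

$$\begin{aligned} x^{\tau }_{k+1}\in \textrm{argmin}_x\left\{ \bar{F}(x)+\frac{d^2(x,x^{\tau }_k)}{2\tau }\right\} , \end{aligned}$$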

i.e. to the output of the JKO scheme for the lower semi-continuous relaxation \(\bar{F}\).

The present paper is then devoted to the study of the system of PDEs representing the gradient flow of a functional F obtained as the lower semi-continuous envelope of \(F_0\), and in particular to an existence result. Let us detail the structure of the paper. As a first task, we need to compute this lower semi-continuous envelope. The general theory about local functionals on measures (see [9]) provides a clear answer: one just needs to replace the non-convex function \(f_0\) with its convex envelope, that we will denote by f. We therefore compute the convex envelope f of \(f_0\) and find that it has the following form: on a certain region \(B\subset {\mathbb {R}}_+^2\) we have \(f=f_0\), and on the complement \(A={\mathbb {R}}_+^2\setminus B\) the function f only depends on the sum of the two variables, i.e. there exists \(\tilde{f}:{\mathbb {R}}\rightarrow {\mathbb {R}}\) such that \(f(a,b)=\tilde{f}(a+b)\) for \((a,b)\in A\).

The fact that f partially agrees with a function of the sum only recalls some cross-diffusion problems already studied in other papers using Wasserstein techniques, such as in [7, 13]. Cross-diffusion with a functional only depending on the sum is not difficult to study, as one can first find an equation on the sum \(S=\rho +\mu \) and then use the gradient of the solution S as a drift advecting both \(\rho \) and \(\mu \). The difficulty in most of the cross-diffusion problems involving the sum comes from the lower-order terms which differentiate the two species (reaction, or advection), since the above strategy cannot be applied and in general it is not possible to obtain compactness results on the two densities \(\rho \) and \(\mu \) separately, but only on their sum. However, when the functional involves the integral of a strictly convex function of the two densities \(\rho \) and \(\mu \), it is indeed possible to obtain separate compactness: here the challenge comes from combining the two regimes, one where f is strictly convex and one where it only depends on the sum, but without extra terms differentiating the two species.

On the other hand, a remark is in order when looking at the precise definition of the function f, since the region B where \(f=f_0\) is strictly included in the set \(\{ab<1\}\). Indeed, one could think (and it would have been nice!) that the system we obtain is an extension of the one with \(f_0\) (that is, (1.1)), in the sense that it allows one to extend the solutions even after touching the dangerous curve where \(\rho \mu =1\). Unfortunately, this interpretation is not correct since there are initial data satisfying \(\rho \mu <1\) but \((\rho ,\mu )\notin B\), for which the two systems would have well-defined different solutions, at least for short time.

As a result, this paper will only be concerned with the gradient flow of the new functional F (the lower semi-continuous envelope of \(F_0\), which is, in this case, its convexification as well), without discussing its relation with the original PDE which motivated the study. For this new gradient flow we will prove existence of solutions in a suitable sense.

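The notion of solution we consider is inspired by the notion of EDI solution introduced in [2, 3] in terms of the metric slope, but it is slightly different and more PDE-adapted. Given our functional F, our goal is to find a solution of the following system

$$\begin{aligned} {\left\{ \begin{array}{l} \partial _t\rho +\nabla \cdot (\rho v)=0,\\ \partial _t\mu +\nabla \cdot (\mu w)=0,\\ v=-\nabla \frac{\delta F}{\delta \rho },\\ w=-\nabla \frac{\delta F}{\delta \mu }, \end{array}\right. } \end{aligned}$$(1.4)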

where the two continuity equations are satisfied in a weak sense with no-flux boundary conditions on \(\partial \Omega \) and the functions \(\frac{\delta F}{\delta \rho }\) and \(\frac{\delta F}{\delta \mu }\) are differentiable in a suitable weak sense. In our particular case this means finding a pair \((\rho _t,\mu _t)\) such that the gradients of \(f_a(\rho _t,\mu _t)\) and \(f_b(\rho _t,\mu _t)\) exist in such a sense, and the above equations are satisfied.

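Formally, if the first two conditions of (1.4) are satisfied, then the last two conditions are equivalent, on the interval [0, T], to the following inequality

$$\begin{aligned} F(\rho _T,\mu _T)+\frac{1}{2}\int _0^T\int _\Omega \left( \rho \left| \nabla \frac{\delta F}{\delta \rho }\right| ^2+\mu \left| \nabla \frac{\delta F}{\delta \mu }\right| ^2\right) +\frac{1}{2}\int _0^T\int _\Omega \left( \rho |v|^2+\mu |w|^2\right) \le F(\rho _0,\mu _0). \end{aligned}$$(1.5)

Indeed, if we formally differentiate the function \(t\mapsto F(\rho _t,\mu _t)\) in time, and we use the continuity equations together with an integration by parts (there is no boundary term because of the no-flux conditions), we obtain

$$\begin{aligned} \frac{\,\textrm{d}}{\,\textrm{d}t}F(\rho _t,\mu _t)=\int _\Omega \frac{\delta F}{\delta \rho }\,\partial _t\rho +\frac{\delta F}{\delta \mu }\,\partial _t\mu =\int _\Omega \rho \, v\cdot \nabla \frac{\delta F}{\delta \rho }+\mu \, w\cdot \nabla \frac{\delta F}{\delta \mu }, \end{aligned}$$

hence

$$\begin{aligned} F(\rho _T,\mu _T)-F(\rho _0,\mu _0)=\int _0^T\int _\Omega \left( \rho \, v\cdot \nabla \frac{\delta F}{\delta \rho }+\mu \, w\cdot \nabla \frac{\delta F}{\delta \mu }\right) . \end{aligned}$$

This means that (1.5) can be re-written as

$$\begin{aligned} \frac{1}{2}\int _0^T\int _\Omega \rho \left| v+\nabla \frac{\delta F}{\delta \rho }\right| ^2+\frac{1}{2}\int _0^T\int _\Omega \mu \left| w+\nabla \frac{\delta F}{\delta \mu }\right| ^2\le 0, \end{aligned}$$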

and the only way to satisfy this condition is to satisfy the a.e. equality of the last two lines in (1.4).

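This trick to characterize the solutions comes from the Euclidean observation that \(x'(t)=-\nabla F(x(t))\) is equivalent to \(\frac{\,\textrm{d}}{\,\textrm{d}t}F(x(t))\le -\frac{1}{2} |x'(t)|^2-\frac{1}{2}|\nabla F(x(t))|^2\), and hence to the Energy Dissipation Inequality (EDI)

$$\begin{aligned} F(x(T))+\frac{1}{2}\int _0^T|x'(t)|^2\,\textrm{d}t+\frac{1}{2}\int _0^T|\nabla F(x(t))|^2\,\textrm{d}t\le F(x(0)). \end{aligned}$$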
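As a sanity check of this Euclidean observation, the sketch below evaluates the defect \(F(x(T))+\frac{1}{2}\int _0^T|x'|^2+\frac{1}{2}\int _0^T|\nabla F(x)|^2-F(x(0))\) for the toy energy \(F(x)=x^2/2\) on \({\mathbb {R}}\) (an assumption made only for illustration). The defect vanishes along the gradient flow \(x(t)=e^{-t}\) and is strictly positive along the curve \(x(t)=e^{-2t}\), which is not a gradient flow of F.

```python
import math


def edi_defect(x, dx, F, dF, T, n=20000):
    """F(x(T)) + 1/2 int |x'|^2 + 1/2 int |F'(x)|^2 - F(x(0)), by the midpoint rule."""
    h = T / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * h
        total += 0.5 * (dx(t) ** 2 + dF(x(t)) ** 2) * h
    return F(x(T)) + total - F(x(0.0))


def energy(x):
    return 0.5 * x * x


def energy_derivative(x):
    return x


# Gradient flow of the toy energy: x' = -x.
flow = edi_defect(lambda t: math.exp(-t), lambda t: -math.exp(-t),
                  energy, energy_derivative, T=1.0)
# A curve with the same endpoints behaviour but the wrong speed: x' = -2x.
fast = edi_defect(lambda t: math.exp(-2.0 * t), lambda t: -2.0 * math.exp(-2.0 * t),
                  energy, energy_derivative, T=1.0)
print(flow, fast)
```

The inequality can only hold with a non-positive defect, so the second curve violates the EDI while the first one saturates it.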

The fact that gradients and derivatives cannot be defined in metric spaces (a vector structure is needed) but their norms could be defined (using the so-called metric derivative and metric slope) instead leads to the definition of a notion of EDI gradient flow in metric spaces. This is what is done in [3] for general metric spaces (in particular for functionals F which are geodesically convex) and then particularized to the case of the Wasserstein space.

However, this is not the strategy which is followed in our paper, even though what we do is strongly inspired by the metric approach of [3]. Our precise procedure is the following:

-

We define a class \({\mathcal {H}}\) consisting of pairs \((\rho ,\mu )\) where \(\nabla f_a(\rho ,\mu )\) and \(\nabla f_b(\rho ,\mu )\) are well-defined. We do this by requiring that some functions of the densities \((\rho ,\mu )\) belong to the \(H^1\) Sobolev space: in particular, we require that \(\eta (\rho ,\mu )\) is \(H^1\) for any smooth function \(\eta \) supported in the set B where f has a strictly convex behavior, and we also require \(\sqrt{\rho +\mu }\) to be \(H^1\). The second requirement is not sharp, in the sense that other functions of the sum could be used as well. We chose to use this one for simplicity, since we guarantee this condition on the solutions which we build via extra arguments.

-

We define, on the class \({\mathcal {H}}\), the slope functional \(\textrm{Slope}F\) via

$$\begin{aligned} \textrm{Slope}F(\rho ,\mu ):=\int _\Omega \rho |\nabla f_a(\rho ,\mu )|^2+\mu |\nabla f_b(\rho ,\mu )|^2. \end{aligned}$$(1.7)

Note that we do not claim that this slope functional coincides with the metric slope which could be defined by following the theory in the first part of the book [3].

-

We say that a pair of curves \((\rho ,\mu )\) is an EDI solution if \((\rho _t,\mu _t)\in {\mathcal {H}}\) for a.e. t and we have

$$\begin{aligned} F(\rho _T,\mu _T)+\frac{1}{2}\int _0^T\textrm{Slope}F(\rho ,\mu ) +\frac{1}{2}\int _0^T\int _\Omega \rho |v|^2+\frac{1}{2} \int _0^T\int _\Omega \mu |w|^2\le F(\rho _0,\mu _0), \end{aligned}$$(1.8)

for some velocity fields v, w solving the continuity equations \(\partial _t\rho +\nabla \cdot (\rho v)=0, \, \partial _t\mu +\nabla \cdot (\mu w)=0\).

In order to prove the existence of an EDI solution we rely on the JKO scheme (1.3) and build suitable interpolations in time of the sequence of solutions, thus obtaining an approximation of (1.8). More precisely, we will have

for two different interpolations \((\hat{\rho },\hat{\mu })\) and \((\tilde{\rho },\tilde{\mu })\). We then pass to the limit as \(\tau \rightarrow 0\) (\(\tau \) is the time step for the discretization in the JKO scheme) where the weak limits of the different interpolations coincide. We prove that \(\textrm{Slope}F\) is a lower semi-continuous functional for the weak convergence, which allows us to conclude, combined with more standard semi-continuity arguments.

Organization of the paper. After this introduction, Sect. 2 is devoted to the computation of the convexification of \(f_0\), and to some properties of the function f we obtain, introducing some relevant quantities. Section 3 is devoted to the precise definition of the slope \(\textrm{Slope}F\) and to the proof of its lower semi-continuity. Section 4 introduces the notion of EDI solutions and proves their existence. In the proof, several interpolations of the sequence obtained via the JKO scheme are needed, including the De Giorgi variational interpolation. Some estimates on these solutions are required in order to prove that they belong to the class \({\mathcal {H}}\) and to obtain the desired inequalities.

Sections 5 and 6 are not required to obtain the existence of EDI solutions, but are required if one wants to come back to System (1.4). Indeed, we stated that the notions are formally equivalent thanks to an easy computation for the derivative in time of \(F(\rho _t,\mu _t)\). However, this computation is only formal if we do not face smooth solutions. This explains the choice of the notion of EDI solutions: it is a definition which coincides with solving the equation in a classical sense if functions are smooth, but the equivalence is in general not granted. In Sect. 5, we then explain an approximation procedure (by convolution) to obtain the differentiation property (i.e. the validity of (1.6)) for non-smooth solutions of the continuity equation. Yet, the nonlinearity of the terms involved in \(f_a\) and \(f_b\) requires a certain bound on the \(H^1\) norm of some functions of the regularized functions.

This raises a very natural question: suppose that a function u is such that its positive part \(u_+\) belongs to \(H^1\), and let \(u_\varepsilon \) be its convolution with a certain mollifier \(\eta _\varepsilon \): when is it true that the sequence \((u_\varepsilon )_+\) is bounded in \(H^1\) (with, possibly, uniform bounds in terms of the kernel)? This is not trivial and the answer could depend on the choice of the kernel. We provide in Sect. 6 a proof of this fact in dimension 1 for a specific choice of the kernel shape. Note that the very same result is false (even after changing the shape of the kernel) in higher dimensions (we thank Alexey Kroshnin for a refined counter-example in this direction). Nevertheless, this does not prevent the approximation or the differentiation from being true.

2 Convexification

In this section, we characterize the function f which is the convex envelope of the function \(f_0\), where \(f_0\) is defined by \(f_0(a,b)=a\log a + b\log b +ab\) on \({\mathbb {R}}_+^2\). The functional F defined through

$$\begin{aligned} F(\rho ,\mu ):=\int _\Omega f(\rho (x),\mu (x))\,\textrm{d}x \end{aligned}$$

will be the lower semi-continuous envelope of \(F_0\) (which is itself defined in a similar way replacing f with \(f_0\)) for the weak convergence of probability measures, thanks to standard relaxation results (see, for instance, [9]).

As we underlined in the introduction, \(f_0\) is not convex since \(D^2 f_0(a,b)\) is not positive semi-definite unless \(ab \le 1\).

First, we look at the shape of \(f_0\) on diagonal lines where \(a+b\) is constant. We denote \(s:=a+b\) and for a fixed s, we define a function \(g(a) := f_0(a, s-a)\) which gives the value of \(f_0\) over a segment for s fixed. Then we have

$$\begin{aligned} g'(a)=\log a-\log (s-a)+s-2a,\qquad g''(a)=\frac{1}{a}+\frac{1}{s-a}-2. \end{aligned}$$

We need to distinguish the cases where \(s\le 2\) and where \(s>2\). In the first case, g is convex, since \( \frac{1}{a} + \frac{1}{s-a}\ge \frac{4}{s}\ge 2\). In the second case, we can easily see that g has a double-well shape, with three critical points on (0, s) (see Fig. 2) and the critical points are characterized by (using \(b=s-a\)):

$$\begin{aligned} \log a+b=\log b+a. \end{aligned}$$

For \(s\le 2\) only \(a=b=s/2\) is a critical point, otherwise the same point is a local maximizer and there are two global minimizers.
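The dichotomy between \(s\le 2\) and \(s>2\) can be observed numerically. The sketch below uses the expressions of \(g'\) and \(g''\) obtained by differentiating \(g(a)=f_0(a,s-a)\), and counts the sign changes of \(g'\) on a uniform grid of (0, s). The grid size and the sample values of s are arbitrary choices made for illustration.

```python
import math


def g_prime(a, s):
    """First derivative of g(a) = f0(a, s - a)."""
    return math.log(a) - math.log(s - a) + s - 2.0 * a


def g_second(a, s):
    """Second derivative of g(a) = f0(a, s - a)."""
    return 1.0 / a + 1.0 / (s - a) - 2.0


def count_critical_points(s, n=20000):
    """Count sign changes of g' on a uniform grid of (0, s).

    n is even, so the midpoint s/2 (where g' vanishes) is not a grid point.
    """
    signs = [g_prime(s * (i + 0.5) / n, s) > 0 for i in range(n)]
    return sum(1 for u, v in zip(signs, signs[1:]) if u != v)


for s in (1.5, 3.0):
    print(s, count_critical_points(s), g_second(0.5 * s, s))
```

For \(s=1.5\) the derivative changes sign once and \(g''(s/2)=4/s-2\) is positive, while for \(s=3\) it changes sign three times and \(g''(s/2)\) is negative, which is the double-well picture.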

Due to the double-well shape of g and to the fact that \(g''\) vanishes only twice, the two minimizers of g satisfy \(g''>0\) (and not only \(g''\ge 0\)). Indeed, if this were not the case, then on the interval between the two minimizers, g would be strictly concave and would have vanishing derivative at the endpoints, which is impossible.

It will turn out that the convexification of \(f_0\) on the line \(a+b=s\) will coincide with the 1-dimensional convexification of g.

In order to construct such a function f, we first define two auxiliary functions \(\alpha ,\beta \) and two sets A, B: indeed, there exist two functions \(\alpha \ne \beta \in C^0([2,+\infty ))\) characterized by

-

\(\alpha (2)=\beta (2)=1\),

-

for every \(s>2\) we have \(\alpha (s)<s/2<\beta (s)\),

-

for every \(s>2\) we have

$$\begin{aligned} \log (\alpha (s))+ \beta (s)&= \log (\beta (s)) + \alpha (s), \nonumber \\ \alpha (s)+ \beta (s)&= s. \end{aligned}$$(2.10)

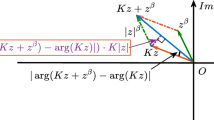

In Fig. 1, \({\mathbb {R}}_+^2\) is divided into two subsets A and B, which are shown in pink and yellow, respectively. The closed, convex set A corresponds to the set above the curve \(\{(\alpha (s),\beta (s)) : \, s \ge 2\}\cup \{(\beta (s),\alpha (s)) : \, s \ge 2\}\) and \(B = {\mathbb {R}}_+^2 \setminus A\). In Fig. 2, the function g (the 1-dimensional section of \(f_0\)) and its convex envelope \(\tilde{f}\) are drawn in orange and green, respectively.

This means that for every \(s>2\), the points \(\alpha (s)\) and \(\beta (s)\) are the two minimizers of g and these conditions are enough to determine \(\alpha ,\beta \) in a unique way. They can also be obtained using an Implicit Function Theorem, which also shows the smoothness of \(\alpha ,\beta \). On the other hand, we lose the smoothness at \(s=2\) where the IFT cannot be applied, but we have anyway continuity due to the uniqueness of the minimizer.
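Since \(\alpha (s)\) has no closed form, it can be approximated numerically. The following sketch solves \(g'(a)=0\), i.e. \(\log a+(s-a)=\log (s-a)+a\), by bisection on \((0,s/2)\). The bracketing endpoints are assumptions that are adequate for moderate values of s not too close to 2, and the function names are illustrative.

```python
import math


def g_prime(a, s):
    """g'(a) for g(a) = f0(a, s - a); its zeros satisfy log a + b = log b + a."""
    return math.log(a) - math.log(s - a) + s - 2.0 * a


def alpha_beta(s, iters=200):
    """Approximate (alpha(s), beta(s)) for s > 2 by bisection on g'."""
    if s <= 2.0:
        raise ValueError("alpha and beta are distinct only for s > 2")
    lo = s * math.exp(-2.0 * s)    # g' < 0 here (assumed bracket, fine for moderate s)
    hi = 0.5 * s * (1.0 - 1e-6)    # g' > 0 just to the left of s/2 when s > 2
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if g_prime(mid, s) < 0.0:
            lo = mid
        else:
            hi = mid
    alpha = 0.5 * (lo + hi)
    return alpha, s - alpha


a3, b3 = alpha_beta(3.0)
print(a3, b3, math.log(a3) + b3 - (math.log(b3) + a3))
```

The last printed quantity is the residual of the first condition in (2.10), while the second condition holds by construction.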

We then define two sets A and B as those sets that partition \({\mathbb {R}}_+^2\), with A being the closed, convex set above the curve \(\{(\alpha (s),\beta (s)) : \, s \ge 2\}\cup \{(\beta (s),\alpha (s)) : \, s \ge 2\} \), and \(B = {\mathbb {R}}_+^2 \setminus A\) the open set below. The two sets A and B are represented in Fig. 1.

Then, the main goal of this section is to prove the following proposition:

Proposition 2.1

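Define

$$\begin{aligned} f(a,b):={\left\{ \begin{array}{ll} f_0(a,b) &{} \text{ if } (a,b)\in B,\\ \tilde{f}(a+b) &{} \text{ if } (a,b)\in A, \end{array}\right. } \end{aligned}$$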

where \(f_0(a,b) := a \log a + b \log b + a b\) and \(\tilde{f} (s) = f_0(\alpha (s), \beta (s)).\) Then, \(\tilde{f}\in C^1 ([2,+\infty ))\) and the function f is the convex envelope of \(f_0\).

We give the proof of this proposition at the end of this section. Next, we give some technical results that will prove useful in the sequel. We define the “product” function P as the following:

where \(\pi (s): = \alpha (s) \beta (s)\in C^0([2, +\infty ))\). We gather some properties of \(\pi (s)\) in the following lemma:

Lemma 2.2

The function \(\pi \in C^0([2, +\infty ))\), satisfies the following properties:

-

i.

\(s-2\pi (s) >0\) for \(s>2\).

-

ii.

\(\pi \in C^1((2,+\infty ))\) and \(\pi '(s) =-\pi (s)\frac{(s-2)}{s-2\pi (s)}\).

-

iii.

\(\pi (s) <1\) for \(s>2\).

-

iv.

\(\pi \) is also differentiable at \(s=2\) and \(\pi '(2) = -1/2\).

Proof

Recall that \(\alpha (s)\) and \(\beta (s)\) are the minimizers of g, and that they satisfy \(g''>0\). Since we have \(g'' (a) = \frac{1}{a} + \frac{1}{s-a} -2=\frac{s}{a(s-a)}-2\), taking \(a=\alpha (s)\) and \(s-a=\beta (s)\) we obtain \(\frac{s}{\pi (s)}>2\), which proves i.

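Now we would like to compute \(\pi '(s)\) for \(s>2\) (note that \(\alpha \) and \(\beta \) are differentiable because of the implicit function theorem): we have

$$\begin{aligned} \pi '(s)=\alpha '(s)\beta (s)+\alpha (s)\beta '(s). \end{aligned}$$(2.13)

Let us now compute \(\alpha '(s)\) and \(\beta '(s)\). Differentiating (2.10), we know that at the minima of g we have

$$\begin{aligned} \frac{\alpha '(s)}{\alpha (s)}+\beta '(s)=\frac{\beta '(s)}{\beta (s)}+\alpha '(s),\qquad \alpha '(s)+\beta '(s)=1, \end{aligned}$$

which gives

$$\begin{aligned} \alpha '(s)=\frac{\alpha (s)-\pi (s)}{s-2\pi (s)},\qquad \beta '(s)=\frac{\beta (s)-\pi (s)}{s-2\pi (s)}. \end{aligned}$$

Plugging these in (2.13) we obtain

$$\begin{aligned} \pi '(s)=\frac{(\alpha (s)-\pi (s))\beta (s)+\alpha (s)(\beta (s)-\pi (s))}{s-2\pi (s)}=-\pi (s)\frac{s-2}{s-2\pi (s)}, \end{aligned}$$

which proves ii.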

Since \(\pi '(s) < 0\) for \(s>2\) (we use here \(s-2\pi (s)>0\) and \(\pi (s)>0\)), and \(\pi \in C^0([2, +\infty ))\), the function \(\pi \) is strictly decreasing on \([2,+\infty )\), hence \(\pi (s) < \pi (2) = 1\) for \(s>2\). This gives iii.

Now, we want to prove that \(\pi \) is differentiable at \(s=2\) and compute its derivative. We will consider the liminf and the limsup of the incremental ratio and bound it iteratively. We recall that we have \(0 \le \pi (s) \le 1\), \(-1 < \pi '(s) \le 0 \), \(\pi \in C^0 ([2, + \infty ))\), and

We first note that we have

We then deduce

We define two sequences \((p_n)_{n \in {\mathbb {N}}}\) and \((q_n)_{n \in {\mathbb {N}}}\) which are meant to satisfy

We take \(p_0 = -1\) and \(q_0 = 0\). Supposing that we have defined \(p_n\) and \(q_n\), we then note that for any \(\varepsilon >0\), we have, for s in a neighborhood of \(2^+\), that

This implies that we have, in the same neighborhood

Since \(\pi (s)\rightarrow 1\) as \(s\rightarrow 2\), we can define \(q_{n+1}\) via

and, analogously,

In particular, we have \(p_1=-1\) and \(q_1=-1/3\). We can see that the new values \(p_{n+1}\) and \(q_{n+1}\) also satisfy (2.14). From the definition of \(p_n\) and \(q_n\) we obtain

By induction, we can see that the sequence \(p_{2n}\) is increasing and bounded above by \(-1/2\). If we denote its limit by L, we have \(L=-\frac{1-2L}{3-2L}\), i.e. \(2L^2-L-1=0\), which implies \(L = -1/2\) since \(L\le 0\). The same holds for \(p_{2n+1}=p_{2n}\).

Similarly, \(q_{2n}\) and \(q_{2n+1}\) are decreasing and bounded from below by \(-1/2\) and they converge to \(-1/2\) as well.

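This shows that both the liminf and the limsup, as \(s\rightarrow 2^+\), of the incremental ratio \(\frac{\pi (s)-\pi (2)}{s-2}=\frac{\pi (s)-1}{s-2}\) are equal to \(-1/2\), and iv is proven. \(\square \)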
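The statements of Lemma 2.2 can be tested numerically. In the sketch below, \(\alpha (s)\) is approximated by bisection on \(g'\), the formula in ii is compared with a centered finite difference, and the incremental ratio at \(s=2\) is evaluated for s close to 2. The last function iterates the recursion \(p_{n+1}=-1/(1-2q_n)\), \(q_{n+1}=-1/(1-2p_n)\); this recursion is an assumption, chosen because it reproduces the values \(p_1=-1\) and \(q_1=-1/3\) quoted in the proof. All names and tolerances are illustrative.

```python
import math


def alpha(s, iters=200):
    """Smaller minimizer of g on the line a + b = s (s > 2), by bisection on g'."""
    lo, hi = s * math.exp(-2.0 * s), 0.5 * s * (1.0 - 1e-6)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if math.log(mid) - math.log(s - mid) + s - 2.0 * mid < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def prod(s):
    """pi(s) = alpha(s) * beta(s), with beta(s) = s - alpha(s)."""
    a = alpha(s)
    return a * (s - a)


def prod_prime_formula(s):
    """Right-hand side of item ii: -pi(s) (s - 2) / (s - 2 pi(s))."""
    p = prod(s)
    return -p * (s - 2.0) / (s - 2.0 * p)


def bounds(n):
    """Assumed recursion for the bounds (p_n, q_n), starting from (-1, 0)."""
    p, q = -1.0, 0.0
    for _ in range(n):
        p, q = -1.0 / (1.0 - 2.0 * q), -1.0 / (1.0 - 2.0 * p)
    return p, q


h = 1e-4
finite_difference = (prod(3.0 + h) - prod(3.0 - h)) / (2.0 * h)
ratio_near_2 = (prod(2.01) - 1.0) / 0.01
print(finite_difference, prod_prime_formula(3.0), ratio_near_2, bounds(40))
```

Both components of `bounds(40)` and the incremental ratio near 2 are close to \(-1/2\), in agreement with item iv.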

Lemma 2.3

The function \(\tilde{f}: [2,+\infty ) \rightarrow {\mathbb {R}}\) (defined in Proposition 2.1) is convex and \(C^1\).

Proof

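We recall that we have

$$\begin{aligned} \tilde{f}(s)=f_0(\alpha (s),\beta (s))=\alpha (s)\log \alpha (s)+\beta (s)\log \beta (s)+\alpha (s)\beta (s). \end{aligned}$$

Then we compute, for \(s>2\), using \(\alpha '+\beta '=1\) and (2.10),

$$\begin{aligned} \tilde{f}'(s)=\alpha '(s)\left( \log \alpha (s)+1+\beta (s)\right) +\beta '(s)\left( \log \beta (s)+1+\alpha (s)\right) =\log \alpha (s)+\beta (s)+1. \end{aligned}$$

This shows that \(\tilde{f}\in C^1\), since the expression for \(\tilde{f}'\) is made of continuous functions (as we do have \(\alpha ,\beta \in C^0([2,\infty ))\)). Moreover, we can go on differentiating and get

$$\begin{aligned} \tilde{f}''(s)=\frac{\alpha '(s)}{\alpha (s)}+\beta '(s)=\frac{1-\pi (s)}{s-2\pi (s)}. \end{aligned}$$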

Since we have \(\pi (s)\le 1\) and \(s-2\pi (s)\ge 0\), the second derivative of \(\tilde{f}\) is non-negative, and \(\tilde{f} \) is convex. \(\square \)

Remark 1

For future use, we denote by \(r_0\) the number given by

and we note that we have \(r_0>0\) since the function in the infimum is strictly positive, tends to 1 as \(s\rightarrow \infty \), and tends to \(2/(2-1/\pi '(2))=1/2>0\) as \(s\rightarrow 2^+\).

Corollary 2.4

The function \(f: {\mathbb {R}}_+^2 \rightarrow {\mathbb {R}}\) is convex.

Proof

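We notice that \(f_0 \) is \(C^1\) in the interior of B and that \(\tilde{f}\) is \(C^1\) on \([2,+\infty )\). Moreover we have the following formula for the gradient of f, using \(s=a+b\):

$$\begin{aligned} \nabla f(a,b)={\left\{ \begin{array}{ll} \left( \log a+1+b,\ \log b+1+a\right) &{} \text{ if } (a,b)\in B,\\ \left( \tilde{f}'(s),\ \tilde{f}'(s)\right) &{} \text{ if } (a,b)\in A. \end{array}\right. } \end{aligned}$$

Since these two expressions agree on \(\overline{B}\cap A\), the function f is globally \(C^1\) in \((0,+\infty )^2\). This allows us to prove that f is convex by considering separately its restrictions to A and B.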

Indeed, convexity for \(C^1\) functions is equivalent to the inequality \((\nabla f(x)-\nabla f(y))\cdot (x-y)\ge 0\) for every x, y. If the segment connecting x and y is completely contained either in A or in \(\overline{B}\) then the convexity of the two restrictions is enough to obtain the desired inequality. If not, we can decompose it into a finite number of segments (three at most) of the form \([x_i,x_{i+1}]\) with \(x_0=x\) and \(x_3=y\) and each \([x_i,x_{i+1}]\) fully contained either in A or in \(\overline{B}\). We then write

$$\begin{aligned} (\nabla f(x)-\nabla f(y))\cdot (x-y)=\sum _{i=0}^{2}(\nabla f(x_i)-\nabla f(x_{i+1}))\cdot (x-y), \end{aligned}$$

and the fact that \(x-y\) is a positive scalar multiple of each vector \(x_i-x_{i+1}\) shows that the convexity of each restriction is again enough for the desired result (note that we strongly use here \(f\in C^1\), i.e. that the gradients of the two restrictions agree).

The convexity of f restricted to \(\overline{B}\) comes from the positivity of the Hessian of \(f_0\) and that of f restricted to A from the convexity of \(\tilde{f}\), and the result is proven. \(\square \)

Now, with the help of the above results, we prove Proposition 2.1.

Proof of Proposition 2.1

The function f has been built so that on each segment \(\{(a,b):a+b=s\}\) it coincides with the convexification of the restriction of \(f_0\) on such a segment. So, the convexification of \(f_0\) cannot be larger than f. On the other hand, f is a convex function smaller than \(f_0\), so it is also smaller than the convexification, which proves the claim.
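The two properties used in this argument, namely \(f\le f_0\) and the convexity of f, can be probed numerically. The sketch below implements f with \(\alpha (s)\) approximated by bisection, using the description of A as the set of points with \(s=a+b>2\) and \(\alpha (s)\le a\le \beta (s)\), and then samples random points of \((0.05,4)^2\). The sampling box, the seed and the tolerances are arbitrary choices; such a test can only detect violations and does not replace the proof.

```python
import math
import random


def f0(a, b):
    return a * math.log(a) + b * math.log(b) + a * b


def alpha(s, iters=100):
    """Smaller minimizer of a -> f0(a, s - a) for s > 2, by bisection on its derivative."""
    lo, hi = s * math.exp(-2.0 * s), 0.5 * s * (1.0 - 1e-6)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if math.log(mid) - math.log(s - mid) + s - 2.0 * mid < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def f(a, b):
    """Candidate convex envelope: f0 on B, f0(alpha(s), beta(s)) on A."""
    s = a + b
    if s > 2.0:
        al = alpha(s)
        if al <= a <= s - al:          # (a, b) belongs to A
            return f0(al, s - al)
    return f0(a, b)


def worst_defects(samples=300, seed=0):
    """Largest observed values of f - f0 and of the midpoint convexity defect."""
    rng = random.Random(seed)
    above, midpoint = 0.0, 0.0
    for _ in range(samples):
        x = (rng.uniform(0.05, 4.0), rng.uniform(0.05, 4.0))
        y = (rng.uniform(0.05, 4.0), rng.uniform(0.05, 4.0))
        m = (0.5 * (x[0] + y[0]), 0.5 * (x[1] + y[1]))
        above = max(above, f(*x) - f0(*x))
        midpoint = max(midpoint, f(*m) - 0.5 * (f(*x) + f(*y)))
    return above, midpoint


print(worst_defects(), f(1.5, 1.5), f0(1.5, 1.5))
```

Both defects stay at the level of rounding errors, while at the point (1.5, 1.5), which lies in A, the value of f is strictly below that of \(f_0\).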

We conclude this section with a remark which will be useful in the sequel (see Lemma 4.10 in Sect. 4).

Remark 2

If \(\chi \) is a function which is compactly supported in the set B, then there exists a Lipschitz continuous function \(g:{\mathbb {R}}^2\rightarrow {\mathbb {R}}\) such that we have

This holds because the Jacobian of \((f_a,f_b)\) is invertible inside B.

3 Lower semi-continuity of the slope

The goal of this section is to give a precise definition of the slope functional \(\textrm{Slope}F(\rho ,\mu )\) (introduced in (1.7)) and prove that it is lower semi-continuous with respect to the weak topology on measures.

Notice that the formula (1.7) for \(\textrm{Slope}F(\rho ,\mu )\) makes use of the gradients of \(\rho \) and \(\mu \), but this expression is not well-defined for an arbitrary pair of measures \((\rho ,\mu )\). This leads us to consider the following space:

Definition 3.1

We define the class \({\mathcal {H}}\) as the set of all pairs of densities \(\rho , \mu \in L^1(\Omega )\cap {\mathcal {P}}(\Omega ) \) such that

-

i.

For every \(\eta \in W_c^{1,\infty }(B)\), we have \(\eta (\rho ,\mu ) \in H^1(\Omega )\);

-

ii.

We also have \(\sqrt{\rho +\mu } \in H^1(\Omega )\).

The sets A, B above are those defined in Sect. 2 (and we keep this notation in the whole presentation), and by \(W_c^{1,\infty }(B)\), we denote the set of Lipschitz functions whose support is compact inside B (it can touch the axes \({\mathbb {R}}\times \{0\}\) and \(\{0\}\times {\mathbb {R}}\), but not the separating curve between A and B).

For \((\rho ,\mu )\in {\mathcal {H}}\), we do not have necessarily \(\rho , \mu \in H^1(\Omega )\). However, we can define for couples \((\rho ,\mu )\in {\mathcal {H}}\) a suitable notion of “gradient” for certain functions of \((\rho ,\mu )\). The notion of gradient we want to define should satisfy at least some chain-rule in order to be useful in the sequel, that is, we would like to have, for any \((\rho ,\mu )\in {\mathcal {H}}\), that

In particular, we need this to be true for some simple functions \(\chi \), such as affine functions composed with suitable positive parts so that we have \({{\,\textrm{supp}\,}}(\chi )\subset B\).

Hence, let us define, for \((\alpha ,\beta ,c)\in {\mathbb {R}}_+^3\), the following function:

which we will sometimes refer to as a “triangle”. Owing to the convexity of the set A, for every \((a,b)\in B\) we can find \((\alpha ,\beta ,c)\in {\mathbb {R}}_+^3\) such that \(T_{\alpha ,\beta ,c}(a,b)>0\) and \(T_{\alpha ,\beta ,c}\) is compactly supported in B. Whenever \({{\,\textrm{supp}\,}}T_{\alpha ,\beta ,c}\subset B\), we have \(T_{\alpha ,\beta ,c} (\rho ,\mu )\in H^1(\Omega )\) for every \((\rho ,\mu )\in {\mathcal {H}}\).

Definition 3.2

Let us fix a countable dense set E of parameters \((\alpha ,\beta ,c)\): for simplicity we choose \(E={\mathbb {Q}}_+^3\). Given \((\rho ,\mu )\in {\mathcal {H}}\), for each \((\alpha ,\beta ,c)\in E\) fix a representative of the weak gradient of \(T_{\alpha ,\beta ,c}(\rho ,\mu )\). Take \(x\in \Omega \) such that \((\rho (x),\mu (x)) \in B\) and \((\alpha ,\beta ,c) \in E\) and \(\varepsilon >0,\varepsilon \in {\mathbb {Q}}\) such that the supports of \(T_{\alpha ,\beta ,c}, T_{\alpha -\varepsilon ,\beta ,c},T_{\alpha ,\beta -\varepsilon ,c} \) are contained in B and \((\rho (x),\mu (x)) \in {{\,\textrm{supp}\,}}T_{\alpha ,\beta ,c}\). Then we define the gradients of \(\rho (x)\) and \(\mu (x)\) as the following:

Let us observe that the above definition of the gradients does not depend on the choices of \(\alpha ,\beta ,c\), and \(\varepsilon \), except possibly on a negligible set of points x. Indeed, on the set of points x such that \((\rho (x),\mu (x)) \in {{\,\textrm{supp}\,}}T_{\alpha ,\beta ,c}\cap {{\,\textrm{supp}\,}}T_{\tilde{\alpha }, \tilde{\beta }, \tilde{c}}\), the functions \((T_{\alpha -\varepsilon ,\beta ,c}(\rho ,\mu ) - T_{\alpha ,\beta ,c}(\rho ,\mu )) / \varepsilon \) and \((T_{\tilde{\alpha } -\varepsilon ,\tilde{\beta } ,\tilde{c}}(\rho ,\mu ) - T_{\tilde{\alpha },\tilde{\beta },\tilde{c}}(\rho ,\mu )) / \varepsilon \), which are in \(H^1\), coincide, hence their gradients coincide almost everywhere on this set, see [6].

Let us also mention that, with such definition of the gradients, we have the following chain-rule: for any \(\chi \in W_c^{1,\infty }(B)\), and for any \((\rho ,\mu )\in {\mathcal {H}}\) we have

To prove this fact, let us start by considering the case where \(\chi \) is compactly supported in \({{\,\textrm{supp}\,}}T_{\alpha ,\beta ,c}\), for some \(\alpha , \beta ,c\in {\mathbb {Q}}\). Then, for \(\varepsilon \) small enough, we have, for x such that \((\rho (x),\mu (x))\in {{\,\textrm{supp}\,}}\chi \),

As both functions are in \(H^1\), their gradients coincide on the set where \((\rho ,\mu )\in {{\,\textrm{supp}\,}}\chi \), and on this set, applying the chain rule on the right-hand side yields the desired equality. Outside of this set, the chain rule is direct and everything is zero. We then conclude by observing that any compact subset of B is contained in a finite union of sets of the form \({{\,\textrm{supp}\,}}T_{\alpha ,\beta ,c}\) for \(\alpha , \beta ,c\in {\mathbb {Q}}\), and then we use a partition of unity to extend the result to a general \(\chi \in W_c^{1,\infty }(B)\).

We note that, if \(\rho ,\mu \in H^1(\Omega )\), then the gradients defined above coincide with the usual gradients.

To conclude these remarks on the gradients of \((\rho ,\mu )\), we observe that our definition of the space \({\mathcal {H}}\) does not allow us to define the gradients of \(\rho ,\mu \) properly when \((\rho ,\mu )\) lies in the set A. However, in this set, and this will be sufficient for our needs, the gradient of the sum \(\rho + \mu \) is well defined, i.e., it is measurable. Indeed, we know that \(\sqrt{\rho +\mu }\) is in \(H^1\), hence we set \(\nabla (\rho +\mu ) := 2\sqrt{\rho +\mu }\,\nabla \sqrt{\rho +\mu }\).

It follows from the above discussion that, for any Lipschitz function \(h=h(a,b)\) that depends only on \(a+b\), if \((a,b)\in A\), then \(\nabla (h(a,b))\) is well defined.
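To make this remark concrete, here is a short formal computation. The notation \(\tilde{h}\) is ours and not from the text: we write \(h(a,b)=\tilde{h}(a+b)\) for a Lipschitz function \(\tilde{h}\) of one variable, and \(S:=\rho +\mu \). Applying the chain rule with the definition of \(\nabla (\rho +\mu )\) given above, one expects, on the set where \((\rho ,\mu )\in A\),

$$\begin{aligned} \nabla \big (h(\rho ,\mu )\big ) = \tilde{h}'(S)\,\nabla S = 2\sqrt{S}\,\tilde{h}'(S)\,\nabla \sqrt{S}. \end{aligned}$$

Only \(\nabla \sqrt{S}\) appears on the right-hand side, and this is available since \(\sqrt{S}\in H^1\); the individual gradients of \(\rho \) and \(\mu \) are not needed.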

We now define the slope functional on the space \({\mathcal {H}}\).

Definition 3.3

Let \((\rho ,\mu )\in {\mathcal {H}}\). Then, we define the slope functional \(\textrm{Slope}F\) as

where \(S:=\rho +\mu \).

Note that the above formula for the slope has been obtained by expanding the expression

We are now in a position to state the main result that we prove in this section.

Theorem 3.4

Let \((\rho _n,\mu _n) \in {\mathcal {H}}\) be such that

where the above convergence is weak in the sense of measures. Then we have

3.1 Preliminary results

In this section, we provide some preliminary results that will be used in the proof of Theorem 3.4. We start by proving the following proposition:

Proposition 3.5

Assume that \((\rho _n,\mu _n)\in {\mathcal {H}}\) converges weakly, as \(n \rightarrow +\infty \), to \((\rho ,\mu )\in {\mathcal {P}}(\Omega )\), and that \(\textrm{Slope}F(\rho _n,\mu _n)\) is bounded independently of n. Then, for any Lipschitz continuous function \(\chi (a,b)\) which is constant everywhere on A, we have

The proof of Proposition 3.5 relies on two lemmas.

Lemma 3.6

Assume that \((\rho _n,\mu _n)\) and \(\chi \) satisfy the hypotheses of Proposition 3.5. Assume in addition that \(\chi \) is constant outside a compact set contained in B. Then, the sequence \((\chi (\rho _n,\mu _n))_{n\in {\mathbb {N}}}\) is bounded in \(H^1(\Omega )\). In particular, it has a subsequence that converges strongly in \(L^2\) and weakly in \(H^1\).

Proof

We start by defining, for all points x such that \((\rho _n(x),\mu _n(x))\in B\), the following vector fields:

Note that \(X_n\) is only defined on \(\{\rho _n>0\}\), i.e. \(\rho _n-\)a.e., and \(Y_n\) on \(\{\mu _n>0\}\), i.e. \(\mu _n-\)a.e. Therefore, by the definition of \(\textrm{Slope}F\), for any function \(\eta \) compactly supported in B, we have that

is bounded independently of n.

Again, for points x such that \((\rho _n(x),\mu _n(x))\in B\), we can write

Hence, remembering that \(\rho _n\mu _n < 1\) for \((\rho _n,\mu _n)\in B\), we obtain

and

Since \((\rho _n,\mu _n) \in {\mathcal {H}}\), we have that \(\chi (\rho _n,\mu _n) \in H^1(\Omega )\) (where \(\chi \) is given as in the statement of Proposition 3.5). Up to subtracting a constant, we can assume that \(\chi \) is compactly supported in B. Now, let us prove that the \(H^1\) norm of \(\chi (\rho _n,\mu _n)\) is bounded independently of n.

The first line above is obtained by using the chain rule given by (3.16) for the composition of Lipschitz functions and functions in \({\mathcal {H}}\).

The quantities \(\vert \chi _a(\rho _n,\mu _n)\vert ^2\rho _n/ (1-\rho _n\mu _n)^2\) and \(\vert \chi _b(\rho _n,\mu _n)\vert ^2\mu _n/ (1-\rho _n\mu _n)^2\) are bounded because the support of \(\chi \) is far from the set A, so that the product \(\rho _n\mu _n\) is bounded above by a constant strictly less than 1 on the set of points x such that \((\rho _n(x),\mu _n(x))\in {{\,\textrm{supp}\,}}(\chi )\). Hence the result follows. \(\square \)

Let us now improve the above lemma by showing that, for \(\chi \) as above, the \(H^1\) weak and \(L^2\) strong limit of \(\chi (\rho _n,\mu _n)\) is \(\chi (\rho ,\mu )\), i.e. we can pass to the limit inside the function \(\chi \). To do so, we start by considering the specific case where \(\chi \) is of the form \(T_{\alpha ,\beta ,c}\), defined in (3.15).

Lemma 3.7

Let \((\alpha ,\beta ,c) \in {\mathbb {R}}_+^3\) be such that \({{\,\textrm{supp}\,}}T_{\alpha ,\beta ,c}\subset B\). Then

and the convergence is strong in \(L^2\) and weak in \(H^1\).

Proof

We assume that \((\alpha ,\beta ,c)\) are chosen as in the statement of the lemma, and we omit writing them as subscripts of \(T_{\alpha ,\beta ,c}\) in the proof.

By Lemma 3.6, there exists \(u\in H^1\), \(u\ge 0\), such that

strongly in \(L^2\) and weakly in \(H^1\). Using

and the weak convergence of \(c - \alpha \rho _n-\beta \mu _n\) to \(c-\alpha \rho -\beta \mu \), we find that \(c - \alpha \rho -\beta \mu \le u\). Taking the maximum with 0, we get

This already proves the equality \( T(\rho ,\mu )=u\) on \(\{u=0\}\).

Now, let \(\delta >0\) be fixed and define the set \(\omega := \{ u>\delta \} \subset \Omega \). Let \(\varepsilon >0\) be fixed as well. Using Egoroff’s theorem, we can find \(E\subset \omega \) such that \(\vert E\vert <\varepsilon \) and such that \(T(\rho _n,\mu _n)\) converges uniformly to u on \(\omega \backslash E\). Taking n large enough, we have

The term on the left-hand side converges to \(u \mathbbm {1}_{\omega \backslash E}\) strongly in \(L^2\) and the term on the right-hand side converges to \((c-\alpha \rho - \beta \mu ) \mathbbm {1}_{\omega \backslash E}\) weakly. Then,

and this is actually an equality due to (3.18). Since the measure of E can be taken arbitrarily small, and up to taking \(\delta \rightarrow 0\), we obtain that \(u=T(\rho ,\mu )\) a.e. \(\square \)
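The key step of this proof can be sketched as follows, again assuming that \(T(a,b)=(c-\alpha a-\beta b)_+\); the displayed chain is our reading of the argument and not a quotation. On \(\omega \backslash E\) we have \(u>\delta \) and uniform convergence, so for n large enough \(T(\rho _n,\mu _n)\ge u-\delta /2>\delta /2>0\) there. A positive value of the triangle function coincides with its affine expression, and passing to the limit gives

$$\begin{aligned} T(\rho _n,\mu _n)\mathbbm {1}_{\omega \backslash E}&= (c-\alpha \rho _n-\beta \mu _n)\mathbbm {1}_{\omega \backslash E}\quad \text {for } n \text { large},\\ u\,\mathbbm {1}_{\omega \backslash E}&= (c-\alpha \rho -\beta \mu )\mathbbm {1}_{\omega \backslash E}\le T(\rho ,\mu )\mathbbm {1}_{\omega \backslash E}\le u\,\mathbbm {1}_{\omega \backslash E}. \end{aligned}$$

The last inequality is the bound \(T(\rho ,\mu )\le u\) obtained earlier in the proof, so all the inequalities in the second line are equalities on \(\omega \backslash E\).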

We can now turn to the proof of Proposition 3.5.

Proof of Proposition 3.5

If the function \(\chi \) is of the form \(T_{\alpha ,\beta ,c}\), then Lemma 3.7 tells us that the proposition is true. The strategy of the proof is to prove first that the proposition holds true for any function \(\chi \) whose support is contained in a triangle, itself contained in B, then we show that it holds true for any function \(\chi \) whose support does not touch A (but may not be contained in a single triangle), and finally we consider the general case.

Step 1. The case where the support of \(\chi \) is in a triangle. Assume that \(\chi \) is compactly supported in the support of the triangle function \(T_{\alpha +\varepsilon ,\beta +\varepsilon ,c-\varepsilon }\) and that \((\alpha ,\beta ,c)\) and \(\varepsilon >0\) are chosen so that such a support is contained in B. We now define \(T_1:=T_{\alpha +\varepsilon ,\beta ,c}\) and \(T_2:=T_{\alpha ,\beta +\varepsilon ,c}\). We want to prove that \(\chi (\rho ,\mu )\) can be expressed as a Lipschitz function of \((T_1(\rho ,\mu ),T_2(\rho ,\mu ))\). To do so, we observe that there exists an affine function \(L:{\mathbb {R}}^2\rightarrow {\mathbb {R}}^2\) such that \((a,b)=L(c-(\alpha +\varepsilon )a-\beta b,c-\alpha a-(\beta +\varepsilon )b)\). We then define a function \(g:L^{-1}({\mathbb {R}}_+^2)\cap ({\mathbb {R}}_+^2)\rightarrow {\mathbb {R}}\) via the formula

It is clear that \(\chi (\rho ,\mu )\) is equal to \(g(T_1(\rho ,\mu ),T_2(\rho ,\mu ))\) since if either \(T_1(\rho ,\mu )\) or \(T_2(\rho ,\mu )\) vanishes, then we have \(\chi (\rho ,\mu )=0\), while in the other case we can express \(\rho \) and \(\mu \) via the affine function L, and of course we only need to apply g to values which are in \({\mathbb {R}}_+^2\) (since \(T_1,T_2\ge 0\)) and in \(L^{-1}({\mathbb {R}}_+^2)\) (since \(\rho ,\mu \ge 0\)). We only need to prove that g is Lipschitz continuous, which is not evident from its definition. To do so, we will prove that we have \(g(t_1,t_2)=0\) if \(t_1<\varepsilon \) or \(t_2<\varepsilon \).

Indeed, setting \((a,b)=L(t_1,t_2)\), the condition \(g(t_1,t_2)>0\) implies that \(t_1,t_2>0\) and \((\alpha +\varepsilon )a+(\beta +\varepsilon )b+\varepsilon <c\), i.e. \(\varepsilon b+\varepsilon <t_1\), hence \(t_1>\varepsilon \) since \(b>0\). Analogously, we also have \(\varepsilon a +\varepsilon <t_2\), hence \(t_2>\varepsilon \). This shows that we could define

and both expressions are Lipschitz continuous and they agree on the open set which is the intersection of the two domains of definition.

Once we know that \(\chi (\rho ,\mu )\) can be written as \(g(T_1(\rho ,\mu ),T_2(\rho ,\mu ))\), the claim follows.
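For the reader's convenience, the affine map L used in Step 1 can be written explicitly. The following is a direct computation, not taken from the text. Setting \(t_1=c-(\alpha +\varepsilon )a-\beta b\) and \(t_2=c-\alpha a-(\beta +\varepsilon )b\), we find

$$\begin{aligned} t_1-t_2&=\varepsilon (b-a),\\ a&=\frac{c-t_1-\frac{\beta }{\varepsilon }(t_1-t_2)}{\alpha +\beta +\varepsilon },\qquad b=\frac{c-t_2+\frac{\alpha }{\varepsilon }(t_1-t_2)}{\alpha +\beta +\varepsilon },\\ c-\varepsilon -(\alpha +\varepsilon )a-(\beta +\varepsilon )b&=t_1-\varepsilon b-\varepsilon =t_2-\varepsilon a-\varepsilon . \end{aligned}$$

The last line shows why the smaller triangle \({{\,\textrm{supp}\,}}T_{\alpha +\varepsilon ,\beta +\varepsilon ,c-\varepsilon }\) is used: on the set where \(\chi \ne 0\) both \(t_1\) and \(t_2\) exceed \(\varepsilon \), so g vanishes in a neighborhood of the axes \(\{t_1=0\}\) and \(\{t_2=0\}\) and extending it by zero creates no jump.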

Step 2. The case where the support of \(\chi \) does not touch A. Assume that \(\chi \) is compactly supported in B. We use the fact, which is based on the convexity of A, that the domain B is a union of triangles of the form \({{\,\textrm{supp}\,}}T\), even if functions supported in B are not necessarily supported in a single such triangle. Hence, we can find a finite family \(((\alpha _k,\beta _k,c_k) )_{k}\) such that

Let \((\chi _k)\) be a family of functions compactly supported in \({{\,\textrm{supp}\,}}T_{\alpha _k,\beta _k,c_k}\), such that \(\chi = \sum _k \chi _k.\) Then, we can apply the first step to conclude.

Step 3. The general case. Up to subtracting a constant, we assume that \(\chi =0\) on A. Let us start with assuming that \(\chi \) is non-negative. We proceed by approximation. Let \(\varepsilon >0\) be fixed, and define

By Step 2 above, we have that

and this convergence (up to a subsequence) holds strongly in \(L^2\) and weakly in \(H^1\). Then,

Taking the limit as \(n \rightarrow +\infty \) yields the result.

To treat the case where \(\chi \) changes its sign, we can apply the argument on the positive and negative parts of \(\chi \) separately. \(\square \)

We conclude these preliminary results with the following lemma:

Lemma 3.8

Let \((\rho _n,\mu _n) \in {\mathcal {H}}\) converge weakly, as \(n \rightarrow +\infty \), to \((\rho ,\mu )\in {\mathcal {H}}\) and be such that \(\textrm{Slope}F(\rho _n,\mu _n)\) is bounded independently of n. Then, for any \(\chi \) compactly supported in B, we have

where the convergence is weak in \(L^2\).

Proof

As usual, by possibly decomposing \(\chi \) into a finite sum we can assume that the support of \(\chi \) is included in a triangle. We choose two different triangle functions \(T_1:=T_{\alpha +\varepsilon ,\beta ,c},T_2:=T_{\alpha ,\beta ,c}\) such that their supports include that of \(\chi \). We use the weak \(H^1\) convergence of \(T_2(\rho _n,\mu _n)\) to \(T_2(\rho ,\mu )\) to deduce

This implies

since we just need to multiply the above weakly converging sequence by \(\chi (\rho _n,\mu _n)\), which is dominated and converges pointwise a.e. to \(\chi (\rho ,\mu )\). If we do the same for \(T_1\) we also obtain

Subtracting the two relations and dividing by \(\varepsilon \), we obtain

We can now also deduce that \(\chi (\rho _n,\mu _n)\nabla \mu _n\rightharpoonup \chi (\rho ,\mu )\nabla \mu \) and the claim is proven. \(\square \)
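The algebra behind the last two steps can be sketched as follows, assuming as before that \(T_{\alpha ,\beta ,c}(a,b)=(c-\alpha a-\beta b)_+\) and, for the second identity, that \(\beta >0\). On the set where \((\rho _n,\mu _n)\in {{\,\textrm{supp}\,}}\chi \), both triangle functions are given by their affine expressions, so that

$$\begin{aligned} \chi (\rho _n,\mu _n)\nabla \rho _n&=\frac{1}{\varepsilon }\,\chi (\rho _n,\mu _n)\big (\nabla T_2(\rho _n,\mu _n)-\nabla T_1(\rho _n,\mu _n)\big ),\\ \beta \,\chi (\rho _n,\mu _n)\nabla \mu _n&=-\chi (\rho _n,\mu _n)\nabla T_2(\rho _n,\mu _n)-\alpha \,\chi (\rho _n,\mu _n)\nabla \rho _n. \end{aligned}$$

Each term on the right-hand sides converges weakly in \(L^2\) by the previous relations, which gives the convergence for \(\nabla \mu _n\). If \(\beta =0\), one can instead repeat the argument with a triangle function of the form \(T_{\alpha ,\beta +\varepsilon ,c}\).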

We make use of these preliminary results to prove Theorem 3.4.

3.2 Lower semi-continuity in the region B

The goal of this section is to prove the following:

Proposition 3.9

Let \((\rho _n,\mu _n) \in {\mathcal {H}}\) be such that \((\rho _n,\mu _n)\) converges weakly, as \(n \rightarrow +\infty \), to \((\rho ,\mu )\in {\mathcal {H}}\) and such that \(\textrm{Slope}F(\rho _n,\mu _n)\) is bounded independently of n. Let \(\chi \) be a Lipschitz function compactly supported in B. Then

Proof

By the definitions of f and \(\chi \), we have

The latter expression is convex in the terms \((\nabla \rho _n + \rho _n\nabla \mu _n)\chi (\rho _n,\mu _n)\), \(\rho _n\chi (\rho _n,\mu _n)\) (and same for the terms involving \(\mu _n\)). Then the standard lower semi-continuity results (see Chapter 4 in [11]) will prove the claim. We only have to note that Proposition 3.5 provides

where the convergence is strong in \(L^2\) (and the same also holds true for \(\mu _n\)) and Lemma 3.8 in turn provides

where the convergence is weak in \(L^2\). We used here many times that the functions \((a,b)\mapsto a\chi (a,b),b\chi (a,b)\) are compactly supported in B and Lipschitz continuous. This concludes the proof. \(\square \)

3.3 Lower semi-continuity in the region A

The goal of this section is to prove the following:

Proposition 3.10

Let \((\rho _n,\mu _n) \in {\mathcal {H}}\) be such that \((\rho _n,\mu _n)\) converges weakly, as \(n \rightarrow +\infty \), to \((\rho ,\mu )\in {\mathcal {H}}\) and such that \(\textrm{Slope}F(\rho _n, \mu _n)\) is bounded independently of n. Let \(\chi \) be a Lipschitz function compactly supported in B such that \(0\le \chi \le 1\). Then

Let us define the function \(S := \rho + \mu \) and consider the function \(P(\rho , \mu )\) defined in (2.12). We recall that (see Lemma 2.2), for \(s>2\), we have

Remember that, if \((\rho ,\mu )\in {\mathcal {H}}\), then the gradients of \(\sqrt{S}\) and \( P(\rho ,\mu )\) are well defined. We can then state the following lemma:

Lemma 3.11

Consider \((\rho ,\mu ) \in {\mathcal {H}}\). Then we have

Proof

We consider the cases where \((\rho ,\mu )\in A\) and \((\rho ,\mu )\in B\) separately. The inequality is actually an equality in the set A.

We start with the set B. We have, by convexity, for \((\rho ,\mu )\in B\),

Now, let us consider the set A. For \((\rho ,\mu )\in A\) we have

We also have

Owing to the relation \(\pi ^\prime = -\pi \frac{(s-2)}{s-2\pi }\), we obtain

This proves that the desired inequality is actually an equality when \((\rho ,\mu ) \in A\). \(\square \)

Lemma 3.11 implies that, if \((\rho _n,\mu _n) \in {\mathcal {H}}\) is such that \(\textrm{Slope}F(\rho _n,\mu _n)\) is bounded independently of n, then so is the \(H^1\) norm of

Proposition 3.12

Let \((\rho _n,\mu _n) \in {\mathcal {H}}\) be such that \(\textrm{Slope}F(\rho _n,\mu _n)\) is bounded, and suppose that \(\rho _n\rightharpoonup \rho \) and \(\mu _n\rightharpoonup \mu \) as \(n \rightarrow +\infty \). Let \(S_n := \rho _n+\mu _n\) and let \(P_n := P(\rho _n,\mu _n)\). Then, we have the following convergence results:

- \(S_n \rightarrow \rho +\mu \) a.e.

- \(P_n \rightarrow P(\rho ,\mu )\) a.e.

Proof

We start by giving some bounds on \(\nabla S_n\). Recalling the definitions of \(X_n,Y_n\) from (3.17), we have (on the set \(\{(\rho _n,\mu _n)\in B\}\) of points \(x\in \Omega \) where \((\rho _n(x),\mu _n(x))\in B\)),

Therefore, on \(\{(\rho _n,\mu _n)\in B\}\), we obtain

Since \(\rho _n\mu _n\le 1\) in the set B, we get

Now, using \((1-\rho _n\mu _n) \ge (1 - \frac{S_n^2}{4})\), we finally get, for \((\rho _n,\mu _n)\in B\),

For \((\rho _n,\mu _n)\in A\), we use \(f^{\prime \prime }\ge \frac{r_0}{s}\), where \(r_0\) is as in Remark 1, and we obtain

for a constant C independent of n. Therefore, taking

we find that

is bounded independently of n. The function h is positive and vanishes only at \(s=2\). If we denote by H any anti-derivative of h, characterized by \(H'=h\), we deduce that H is strictly increasing. Moreover, we obtain a uniform \(H^1\) bound on \(H(S_n)\). This implies that, up to a subsequence, \(H(S_n)\) has an a.e. limit. Composing with \(H^{-1}\), the same is true for \(S_n\). The pointwise limit of \(S_n\) can only coincide with its weak limit, i.e. S.

Now, to prove the convergence of \(P(\rho _n,\mu _n)\), it is sufficient to write

for any extension of \(\pi \) to \({\mathbb {R}}_+\) (\(\pi \) is originally only defined on \([2,+\infty )\), but we can take \(\pi =1\) on [0, 2]).

The function \(P(a,b) - \pi (a +b)\) is constant, equal to zero, on the set A. Then, we can apply Proposition 3.5 to obtain the desired convergence. As for \(\pi (\rho _n+\mu _n)\), we just need to apply what we just proved for \(S_n\). \(\square \)

We are now in a position to prove Proposition 3.10.

Proof of Proposition 3.10

Consider a sequence as in the statement of the proposition. Then, we have

Since \(\sqrt{S_n+P_n}\) is bounded in \(H^1\), it converges weakly in \(H^1\) up to extraction of a subsequence, and we know that its limit has to be \(\sqrt{S+P}\). Using the standard semi-continuity argument we obtain

\(\square \)

Therefore, Theorem 3.4, that is, the lower semi-continuity of the functional \(\textrm{Slope}F\), follows from the previous results as an easy consequence:

Proof of Theorem 3.4

Let \((\rho _n, \mu _n)\) be a sequence satisfying the hypotheses of Theorem 3.4. Let \(0\le \chi \le 1\) be a Lipschitz function compactly supported in B. Then

Applying separately the results of Propositions 3.9 and 3.10, we obtain

Since \(\chi \) is arbitrary, we can select an increasing sequence of cut-off functions \(\chi _k\) converging to \({\mathbbm {1}}_B\), and by monotone convergence we obtain

and this concludes the proof. \(\square \)

4 Existence of solutions in the EDI sense

In this section, we define the JKO scheme for the functional F and three different interpolations (De Giorgi variational, piecewise geodesic, and piecewise constant) for this scheme. We prove that these interpolations converge to the same limit curve and this limit curve is a gradient flow for the functional F in a suitable sense (see Definition 4.2 below). More precisely, we show that the Energy Dissipation Inequality (4.19) holds by using the estimates we obtain via the three interpolations we define subsequently.

First, we define the space \(L^2{\mathcal {H}}\) as follows:

Definition 4.1

The space \(L^2{\mathcal {H}}\) is composed of all the curves \(\rho ,\mu :[0,T]\rightarrow {\mathcal {P}} (\Omega )\) such that

i. Both \(\rho \) and \(\mu \) belong to \(AC_2([0,T];W_2(\Omega ))\) (see [3]);

ii. For a.e. \(t\in [0,T]\) we have \((\rho _t,\mu _t)\in {\mathcal {H}}\);

iii. For every \(\eta \in W^{1,\infty }_c(B),\) we have \(\int _0^T \Vert \eta (\rho _t,\mu _t) \Vert _{H^1(\Omega )}^2 \,\textrm{d}t<+\infty \);

iv. We also have \(\int _0^T \Vert \sqrt{\rho _t+\mu _t} \Vert _{H^1(\Omega )}^2 \,\textrm{d}t<+\infty \).

Next we give the following definition:

Definition 4.2

A pair of curves \((\rho _t, \mu _t)_{t\in [0,T]} \in L^2{\mathcal {H}}\) is a gradient flow in the EDI sense for the functional F, given by (2.9), with initial datum \((\rho _0,\mu _0) \in {\mathcal {P}}(\Omega )\times {\mathcal {P}} (\Omega )\) and \(F(\rho _0, \mu _0) < +\infty \), if and only if

- There exists a pair of velocities \((v_t, w_t)\), associated with the curves \((\rho _t, \mu _t)\), that satisfy the continuity equations \(\,\partial _t \rho _t +\nabla \cdot (\rho _tv_t ) =0\) and \(\,\partial _t\mu _t +\nabla \cdot (\mu _t w_t) =0\) with no-flux boundary conditions.

- The pairs \((\rho _t,\mu _t)\) and \((v_t, w_t)\) satisfy the Energy Dissipation Inequality

$$\begin{aligned}&F(\rho _T, \mu _T) + \frac{1}{2}\int _{0}^{T} \int _\Omega \rho _t |v_t|^2 \,\textrm{d}x \,\textrm{d}t + \frac{1}{2}\int _{0}^{T} \int _\Omega \mu _t |w_t|^2 \,\textrm{d}x \,\textrm{d}t \nonumber \\&\quad + \frac{1}{2} \int _{0}^{T} \textrm{Slope}F(\rho _t, \mu _t) \,\textrm{d}t\le F(\rho _0, \mu _0). \end{aligned}$$(4.19)
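To motivate this definition, we recall the finite-dimensional analogue. This is a heuristic sketch for a smooth function F on \({\mathbb {R}}^d\) and a smooth curve \(x_t\), not a part of the argument of this paper. For any such curve, Young's inequality gives

$$\begin{aligned} \frac{\textrm{d}}{\textrm{d}t}F(x_t) = \nabla F(x_t)\cdot x_t' \ge -\frac{1}{2}|x_t'|^2-\frac{1}{2}|\nabla F(x_t)|^2, \end{aligned}$$

with equality if and only if \(x_t'=-\nabla F(x_t)\). Integrating on [0, T], every curve satisfies \(F(x_T)+\frac{1}{2}\int _0^T|x_t'|^2\,\textrm{d}t+\frac{1}{2}\int _0^T|\nabla F(x_t)|^2\,\textrm{d}t\ge F(x_0)\), so that the reverse inequality characterizes the gradient flow. In (4.19), the kinetic terms \(\int _\Omega \rho _t|v_t|^2\) and \(\int _\Omega \mu _t|w_t|^2\) play the role of \(|x_t'|^2\), and \(\textrm{Slope}F\) plays the role of \(|\nabla F|^2\).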

The main goal of this section is to prove the following theorem:

Theorem 4.3

For any initial datum \((\rho _0,\mu _0)\) such that \(F(\rho _0, \mu _0) < +\infty \), there exists an EDI gradient flow for the functional F.

4.1 The JKO scheme and the interpolations

Definition 4.4

(JKO scheme for F) For a fixed time step \(\tau >0\) (of the form \(\tau =T/N\) for some \(N\in {\mathbb {N}}\)), we define the JKO scheme as a sequence of probability measures \((\rho _k^{\tau }, \mu _k^{\tau })_k\), with a given initial datum \((\rho _0^\tau ,\mu _0^\tau ) = (\rho _0,\mu _0)\) such that for \(k \in \{0, \cdots , N-1\}\) we have

This sequence of minimizers exists since the functional F is lower semi-continuous for the weak convergence and so is the sum we minimize. Such a sum is also strictly convex, since the measures \(\rho _k^{\tau }, \mu _k^{\tau }\) are necessarily absolutely continuous (which implies strict convexity of the Wasserstein terms, see Proposition 7.19 in [14]): the minimizer is then unique at every step.

Notice that (4.20) implies that, at each iteration, we have

An important consequence of (4.21) is the following inequality, which is obtained by summing over k and using the fact that F is bounded from below:

Our strategy to prove that Inequality (4.19) holds is to improve (4.21) with the help of suitable interpolations of the sequence \((\rho _k^{\tau }, \mu _k^{\tau })_k\). We define these interpolations next.
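The summation step mentioned above can be sketched as follows. We assume that the scheme has the standard JKO form, i.e. that \((\rho _{k+1}^\tau ,\mu _{k+1}^\tau )\) minimizes \(F(\rho ,\mu )+\frac{1}{2\tau }W_2^2(\rho ,\rho _k^\tau )+\frac{1}{2\tau }W_2^2(\mu ,\mu _k^\tau )\), and that (4.21) is the inequality obtained by comparing the minimizer with the competitor \((\rho _k^\tau ,\mu _k^\tau )\). The sum of the right-hand sides is then telescopic:

$$\begin{aligned} \sum _{k=0}^{N-1}\frac{W_2^2(\rho _{k+1}^\tau ,\rho _k^\tau )+W_2^2(\mu _{k+1}^\tau ,\mu _k^\tau )}{2\tau }&\le \sum _{k=0}^{N-1}\big (F(\rho _k^\tau ,\mu _k^\tau )-F(\rho _{k+1}^\tau ,\mu _{k+1}^\tau )\big )\\&= F(\rho _0,\mu _0)-F(\rho _N^\tau ,\mu _N^\tau )\le F(\rho _0,\mu _0)-\inf F. \end{aligned}$$

The bound on the right-hand side is independent of \(\tau \), which is what makes this estimate useful for compactness.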

Definition 4.5

(De Giorgi variational interpolation) We define the De Giorgi variational interpolation \((\hat{\rho }_t^\tau , \hat{\mu }_t^\tau )\) for the sequence \((\rho _k^{\tau }, \mu _k^{\tau })_k\) as follows: for any \(s \in (0,1]\) and any k, take \( t = (k+s) \tau \) such that

We notice that when \(s=1\), (4.23) is nothing but the JKO scheme (4.20). The main point (which is now a classical idea in the study of gradient flows, see [3]), is that we can improve (4.21) by

To obtain (4.24) we define a function \(g: [0,1] \rightarrow {\mathbb {R}}\) such that

This function is decreasing and hence differentiable a.e. At differentiability points, we necessarily have

On the other hand, for a monotone function we have an inequality by the fundamental theorem of calculus, which gives here

Combining the last two lines, we obtain (4.24). Moreover, we have, by the optimality conditions, the following equalities

where \(\varphi _{\hat{\rho }_{k+s}^{\tau }\rightarrow \rho _{k}^{\tau }}\) and \(\varphi _{\hat{\mu }_{k+s}^{\tau }\rightarrow \mu _{k}^{\tau }}\) are the Kantorovich potentials associated with the transports from \(\hat{\rho }_{k+s}^\tau \) to \(\rho _k^{\tau }\), and from \(\hat{\mu }_{k+s}^\tau \) to \(\mu _k^{\tau }\) respectively. Using (4.25) and Brenier Theorem (see [5]), we obtain

Using (4.26), Inequality (4.24) re-writes

Definition 4.6

(Piecewise constant interpolation) We define the piecewise constant interpolation as a pair of piecewise constant curves \((\bar{\rho }_t^{\tau }, \bar{\mu }_t^{\tau }) \) and a pair of velocities \((\bar{v}_t^{\tau }, \bar{w}_t^{\tau } ) \) associated with these piecewise constant curves such that for every \(t \in (k\tau , (k+1) \tau ]\) and \(k \in \{ 0, \cdots , N-1\}\), \(N \in {\mathbb {N}}\), they satisfy

where \(T_{\rho _{k+1}\rightarrow \rho _k^\tau }^\tau \) and \(T_{\mu _{k+1} \rightarrow \mu _k }^\tau \) are the optimal transport maps from \(\rho _{k+1}^\tau \) to \(\rho _k^\tau \) and from \(\mu _{k+1}^\tau \) to \(\mu _k^\tau \) respectively. We define the momentum variables by \(\bar{E}^\tau (t) :=(\bar{E}_\rho ^\tau (t), \bar{E}_\mu ^\tau (t)) = (\bar{\rho }_t^\tau \bar{v}_t^\tau , \bar{\mu }^\tau _t \bar{w}_t^\tau )\).

Definition 4.7

(Piecewise geodesic interpolation) We define the piecewise geodesic interpolation as a pair of densities \((\tilde{\rho }^\tau _t, \tilde{ \mu }^\tau _t)\) that interpolate the discrete values \((\rho _k^\tau , \mu _k^\tau )_k\) along Wasserstein geodesics: for each k we define

We also define some velocity fields \((\tilde{v}_t^\tau , \tilde{w}_t^\tau )\) as follows

In this way we can check that we have

and, for \(t\in ((k-1)\tau ,k\tau )\), we also have

We also define the momentum variables by \(\tilde{E}^\tau (t) =(\tilde{E}_\rho ^\tau (t), \tilde{E}_\mu ^\tau (t)) :=(\tilde{\rho }_t^\tau \tilde{v}_t^\tau , \tilde{\mu }_t^\tau \tilde{w}_t^\tau )\).

Now that we have defined the three interpolations above and that we have improved (4.21) into (4.27), we would like to replace the terms involving the De Giorgi variational interpolation \((\hat{\rho }^\tau _{k+s}, \hat{\mu }^\tau _{k+s})\) in (4.27) by the slope functional \(\textrm{Slope}F(\hat{\rho }^\tau _{k+s}, \hat{\mu }^\tau _{k+s})\). To be able to do that, first we prove two technical lemmas (Lemmas 4.8 and 4.9 below) and then by using them we prove that the pair of curves obtained via the De Giorgi variational interpolation belongs to the space \({\mathcal {H}}\).
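For orientation, the classical mechanism that improves the one-step estimate can be summarized as follows. This is a sketch under the assumption that the scheme has the standard JKO form; the displayed formulas are our reconstruction of the classical argument of [3] and need not coincide with the numbered equations of this section. Let g(s) denote the minimal value of \(F(\rho ,\mu )+\frac{1}{2s\tau }W_2^2(\rho ,\rho _k^\tau )+\frac{1}{2s\tau }W_2^2(\mu ,\mu _k^\tau )\), attained at \((\hat{\rho }_{k+s}^\tau ,\hat{\mu }_{k+s}^\tau )\), with \(g(0)=F(\rho _k^\tau ,\mu _k^\tau )\). Then, at differentiability points,

$$\begin{aligned} g'(s)&=-\frac{W_2^2(\hat{\rho }_{k+s}^\tau ,\rho _k^\tau )+W_2^2(\hat{\mu }_{k+s}^\tau ,\mu _k^\tau )}{2s^2\tau },\qquad g(1)-g(0)\le \int _0^1 g'(s)\,\textrm{d}s, \end{aligned}$$

and combining the two relations yields

$$\begin{aligned} F(\rho _{k+1}^\tau ,\mu _{k+1}^\tau )&+\frac{W_2^2(\rho _{k+1}^\tau ,\rho _k^\tau )+W_2^2(\mu _{k+1}^\tau ,\mu _k^\tau )}{2\tau }\\&+\int _0^1\frac{W_2^2(\hat{\rho }_{k+s}^\tau ,\rho _k^\tau )+W_2^2(\hat{\mu }_{k+s}^\tau ,\mu _k^\tau )}{2s^2\tau }\,\textrm{d}s\le F(\rho _k^\tau ,\mu _k^\tau ). \end{aligned}$$

Compared with the plain one-step inequality, the additional integral term is the one that is later related to the slope along the De Giorgi interpolation.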

Lemma 4.8

For every \(\rho , \mu \in C^\infty (\Omega )\) strictly positive densities we have

where \(S:= \rho + \mu \) and \(r_0>0\) is defined in Remark 1 in Sect. 2.

Proof

We consider the sets A and B separately. For \((\rho , \mu ) \in A\), we have

For \((\rho , \mu ) \in B\), we have

We want to show that the inequality

is always satisfied. This means we want to have

We now look at the matrix

and prove that it is positive definite. Using \(r_0\le 1\) and \(\rho ,\mu \le S\) we easily see that the terms on the diagonal are non-negative. We then compute the determinant and obtain

For fixed sum \(S=\rho +\mu \) this last expression is minimal if the product \(\rho \mu \) is maximal. If \(S>2\) we can then bound this from below by \(\frac{1-r_0}{\pi (S)}-1+\frac{2r_0}{S}\). This quantity is non-negative as a consequence of the definition of \(r_0\) since we have

If \(S\le 2\) we just use \(\rho \mu \le S^2/4\le S/2\) and bound the same quantity from below by \(2\frac{1-r_0}{S}-1+\frac{2r_0}{S} =\frac{2}{S}-1\ge 0.\) \(\square \)
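The elementary inequalities used in the case \(S\le 2\) can be checked directly:

$$\begin{aligned} \frac{S^2}{4}-\rho \mu&=\frac{(\rho -\mu )^2}{4}\ge 0,\qquad \frac{S^2}{4}=\frac{S}{2}\cdot \frac{S}{2}\le \frac{S}{2}\quad \text {if } S\le 2,\\ 2\,\frac{1-r_0}{S}-1+\frac{2r_0}{S}&=\frac{2}{S}-1\ge 0\quad \text {if } S\le 2. \end{aligned}$$

Note that the terms containing \(r_0\) cancel exactly in the second line, so in this regime the bound does not depend on the value of \(r_0\).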

Lemma 4.9

Let us consider the auxiliary energy functional \(G(\rho , \mu ) =\int _\Omega g(\rho , \mu )\) where \(g(a,b) := a\log a + b\log b\) (defined as equal to \(+\infty \) if measures are not absolutely continuous). Suppose that \(\rho _0, \mu _0\) are given absolutely continuous measures and let us call \((\rho _1, \mu _1)\) the unique solution of

Then, setting \(S_1:=\rho _1+\mu _1\), we have

where the number \(r_0>0\) is defined in Remark 1 in Section 2.

Proof

We prove this result via a flow-interchange inequality and a regularization argument. We fix \(\varepsilon >0\) and define \(F_\varepsilon (\rho , \mu ) := F (\rho , \mu )+ \varepsilon G (\rho , \mu ) \). For a given sequence of smooth measures \(\rho _{0,\varepsilon }, \mu _{0,\varepsilon } \in C^\infty \) we consider the sequence of minimizers

If we write the optimality conditions for the above minimization problem we have \(\rho _{1,\varepsilon },\mu _{1,\varepsilon }>0\) (the argument is the same as in the proof of Lemma 8.6 in [14]) and

where \(C, \tilde{C} \) are some positive constants. Since \((a,b)\mapsto (f_a(a,b)+\varepsilon \log a,f_b(a,b)+\varepsilon \log b)\) is the gradient of a strictly convex function it is a diffeomorphism and we deduce that \(\rho _{1,\varepsilon }\) and \(\mu _{1,\varepsilon }\) have the same regularity of the Kantorovich potential, and are Lipschitz continuous. They are also bounded from below because of the logarithm in the optimality conditions and Caffarelli’s theory (see Sect. 4.2.2 in [17]) implies that the Kantorovich potentials are \(C^{2,\alpha }\) (we assumed that we are in a convex domain). Iterating these regularity arguments gives that \(\rho _{1,\varepsilon }\) and \(\mu _{1,\varepsilon }\) are \(C^\infty \) functions.

We then use the geodesic convexity of the entropy to deduce that we have

where \((\rho _s, \mu _s)\) is a pair of geodesic curves in \(W_2(\Omega ) \times W_2 (\Omega )\) connecting the densities \((\rho _{1,\varepsilon }, \mu _{1,\varepsilon })\) to the densities \((\rho _{0,\varepsilon }, \mu _{0,\varepsilon })\) (note that, in a JKO scheme, this interpolation starts from the new points and goes back to the old points). We then use the continuity equations \(\,\partial _s \rho _s + \nabla \cdot (\rho _s v_s)=0\), and \(\,\partial _s \mu _s + \nabla \cdot (\mu _s w_s)=0\), together with the fact that the initial velocity fields \(v_0\) and \(w_0\) can be obtained as the opposite of the gradient of the corresponding Kantorovich potentials. Hence we have

Using the optimality conditions we obtain

Dropping the positive terms with the gradients of the logarithms and applying Lemma 4.8 we then obtain

where \(S_{1,\varepsilon }=\rho _{1,\varepsilon }+\mu _{1,\varepsilon }\). It is then enough to let \(\varepsilon \rightarrow 0\) if we choose an approximation \(\rho _{0,\varepsilon },\mu _{0,\varepsilon }\) such that \(G(\rho _{0,\varepsilon },\mu _{0,\varepsilon })\rightarrow G(\rho _0,\mu _0)\). Note that the terms in \(G(\rho _{1,\varepsilon },\mu _{1,\varepsilon })\) and \(S_{1,\varepsilon }\) are lower semi-continuous for the weak convergence (and the sequence \((\rho _{1,\varepsilon },\mu _{1,\varepsilon })\) weakly converges to \((\rho _1,\mu _1)\) by \(\Gamma -\)convergence of the minimized functionals and uniqueness of the minimizer at the limit). \(\square \)

Lemma 4.10

Let \((\hat{\rho }_t^\tau , \hat{\mu }_t^\tau )\) be the pair of curves obtained by the De Giorgi variational interpolation. Then \( (\hat{\rho }_t^\tau , \hat{\mu }_t^\tau ) \in {\mathcal {H}}\) for every t.

Proof

First, we note that the optimality conditions provide Lipschitz continuity, for fixed \(\tau \), for \(f_a (\hat{\rho }_t^\tau , \hat{\mu }_t^\tau )\) and \(f_b (\hat{\rho }_t^\tau , \hat{\mu }_t^\tau )\), and hence for \(\chi (\hat{\rho }_t^\tau , \hat{\mu }_t^\tau )\) (see Remark 2 in Sect. 2). Therefore Property i of Definition 3.1 is satisfied.

For Property ii of Definition 3.1, we apply Lemma 4.9 with \(s\tau \) instead of \(\tau \), which guarantees the \(H^1\) behavior of the square root of the sum. \(\square \)

Remark 3

Note that the very same argument implies that the curves obtained by the piecewise constant interpolation also belong to the space \({\mathcal {H}}\).

4.2 Existence of solutions

The goal of this section is to prove Theorem 4.3. We start by proving the following lemma:

Lemma 4.11

The pairs of curves \((\hat{\rho }_t^{\tau }, \hat{\mu }_t^{\tau })\), \((\bar{\rho }_t^\tau , \bar{\mu }_t^\tau )\) and \((\tilde{\rho }_t^\tau , \tilde{\mu }_t^\tau )\) given by Definitions 4.5, 4.6 and 4.7 respectively converge up to subsequences, as \(\tau \rightarrow 0\), to the same limit curve \((\rho _t, \mu _t)\) uniformly in the \(W_2\) distance. Moreover, the vector-valued measure \(\tilde{E}^\tau \) corresponding to the momentum variable of the piecewise geodesic interpolations also converges weakly-* in the sense of measures on \([0,T]\times \Omega \) to a limit vector measure E along the same subsequence.

Proof

Recall that the geodesic speed is constant on each interval \((k\tau , (k+1)\tau )\), and this implies

and similarly for \(\tilde{w}_t^\tau \). Then we obtain

Let us note that we have

where the inequality is a consequence of (4.22).

We first use this inequality to estimate the momentum variables, since we have

Analogous estimates can be obtained for \(\tilde{E} ^\tau _\mu \). This means that \(\tilde{E}^\tau \) is bounded in \(L^1 ([0,T] \times \Omega )\) and we obtain the weak-* compactness in the space of measures on space-time.

It is now classical in gradient flows, as a consequence of the estimate on the \(L^2\) norm of the velocities (4.32), to obtain Hölder bounds on the geodesic interpolations. Indeed, the pair \((\tilde{\rho }^\tau _t, \tilde{ \mu }^\tau _t)\) is uniformly \(\frac{1}{2}-\)Hölder continuous since by using the previous computations we can show that, for \(s<t\),

and an analogous estimate holds for \(\tilde{\mu }^\tau \). Since the domain of the curves \(\tilde{\rho }, \tilde{\mu } : [0,T] \rightarrow W_2(\Omega )\) is compact and so is the image domain \(W_2(\Omega )\), we can pass to the limit by using the Ascoli-Arzelà theorem. Therefore there exists a subsequence \(\tau _j \rightarrow 0\) such that

Moreover the curves \((\bar{\rho }_t^\tau , \bar{\mu }_t^\tau )\) obtained from the piecewise constant interpolation converge uniformly to the same limit curve \((\rho _t, \mu _t)\) as the ones obtained from the piecewise geodesic interpolation since we have that

where \(C, \tilde{C}\) are some positive constants. This is shown by using that \((\tilde{\rho }_t^\tau , \tilde{\mu }_t^\tau )\) and \((\bar{\rho }_t^\tau , \bar{\mu }_t^\tau )\) coincide at every \(t =k \tau \) and \((\bar{\rho }_t^\tau , \bar{\mu }_t^\tau )\) are constant in each interval \((k\tau , (k+1)\tau ]\). The details of these computations can be found in Sect. 8.3 of [14].

For the pair of curves \((\hat{\rho }_t^\tau , \hat{\mu }_t^\tau )\) defined by De Giorgi variational interpolation we have

and similarly \( W_2 (\hat{\mu }_t^\tau , \tilde{\mu }_t^\tau ) \le \tilde{C} \sqrt{\tau }\) for some positive constants \(C ,\tilde{C}\). This implies as \(\tau \rightarrow 0\),

This means that the pair \((\hat{\rho }_t^\tau , \hat{\mu }_t^\tau )\) also converges to the limit curves \((\rho _t, \mu _t)\) uniformly in \([0,T]\). Therefore we showed that the three interpolations that are defined for (4.20) converge to the same limit curves \((\rho _t, \mu _t)\). \(\square \)
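
To make the \(\frac{1}{2}\)-Hölder estimate concrete, here is a minimal numerical sketch in the simplest possible setting, which is an assumption of ours and not the setting of the paper: uniform empirical measures on the real line, where \(W_2\) is computed by matching sorted atoms and geodesics are linear interpolations of the atoms. The discrete curve `steps`, the time step `tau` and all function names are hypothetical. The quantity `energy` plays the role of the bound on the \(L^2\) norm of the velocities, and `holder_gap` measures by how much \(W_2(\tilde{\rho }_s, \tilde{\rho }_t) \le \sqrt{\texttt{energy}}\,\sqrt{t-s}\) holds.

```python
import math

def w2(xs, ys):
    """W_2 distance between two uniform empirical measures on the line.

    Both lists must be sorted and of equal length: in 1D the optimal
    coupling is monotone, so atoms are matched in sorted order.
    """
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs))

def geodesic_interpolation(steps, tau, t):
    """Piecewise geodesic interpolation of the discrete curve `steps` at time t."""
    k = min(int(t / tau), len(steps) - 2)   # index of the interval containing t
    theta = (t - k * tau) / tau             # relative position inside that interval
    return [(1 - theta) * x + theta * y for x, y in zip(steps[k], steps[k + 1])]

# Hypothetical discrete curve: five atoms spreading out at a varying rate.
# Each list is already sorted because the scaling factor is positive.
tau, n_steps = 0.1, 10
steps = [[(1 + 0.3 * (k * tau) ** 2) * (i / 4 - 0.5) for i in range(5)]
         for k in range(n_steps + 1)]

# Discrete analogue of the L^2 bound on the velocities: sum of W_2^2 / tau.
energy = sum(w2(steps[k], steps[k + 1]) ** 2 / tau for k in range(n_steps))

def holder_gap(s, t):
    """sqrt(energy * (t - s)) - W_2(rho_s, rho_t); nonnegative if the bound holds."""
    dist = w2(geodesic_interpolation(steps, tau, s),
              geodesic_interpolation(steps, tau, t))
    return math.sqrt(energy * (t - s)) - dist
```

In this toy setting the bound follows from the triangle inequality along the piecewise geodesic curve followed by the Cauchy–Schwarz inequality in time, which is the same mechanism as in the proof above.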

By Lemma 4.10, we know that the curves obtained by the De Giorgi interpolation satisfy \((\hat{\rho }_{t}^\tau , \hat{\mu }_{t}^\tau ) \in {\mathcal {H}}\) for all t. Then we have the following:

Using the above equality, (4.27) re-writes as

On the other hand since we have

we can re-write (4.34) as

and, up to a change of variable, we obtain

Next, we prove the following two results which will be helpful in the proof of Theorem 4.3:

Proposition 4.12

Suppose that a sequence of curves \((\rho _t^n, \mu _t^n) \) satisfies \((\rho _t^n,\mu _t^n)\in {{\mathcal {H}}}\) for every n and a.e. t, and

- \(\int _0^T \textrm{Slope}F(\rho _t^n, \mu _t^n) \,\textrm{d}t \le C\) for all n.
- \((\rho _t^n, \mu _t^n) \rightharpoonup (\rho _t, \mu _t)\) for each t, where \(\rho _t\) and \(\mu _t\) are curves in \(AC_2([0,T];W_2(\Omega ))\).
- \(\int _0^T \Vert \sqrt{\rho _t + \mu _t}\Vert _{H^1(\Omega )}^2 \,\textrm{d}t < +\infty \).

Then we have \((\rho _t, \mu _t) \in L^2{{\mathcal {H}}}\) and \( \textrm{Slope}F(\rho _t, \mu _t)\le \underset{n \rightarrow \infty }{\liminf }\textrm{Slope}F(\rho _t^n, \mu _t^n)\).

Proof

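First, we consider the function \(t\mapsto \liminf _n \textrm{Slope}F(\rho _t^n, \mu _t^n)\). Fatou’s lemma implies that

\[ \int _0^T \liminf _{n} \textrm{Slope}F(\rho _t^n, \mu _t^n) \,\textrm{d}t \le \liminf _{n} \int _0^T \textrm{Slope}F(\rho _t^n, \mu _t^n) \,\textrm{d}t \le C. \]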

In particular, the liminf of the slope is finite for a.e. t. If we take a function \(\chi \in W_c^{1,\infty } (B)\), then we have

Using Proposition 3.5 we have

Hence, we can pass to the limit as \(n\rightarrow + \infty \) and deduce for a.e. t that we have \(\chi (\rho _t, \mu _t) \in H^1(\Omega )\), i.e. Property i for being in \({\mathcal {H}}\). Moreover, the assumption on \(\sqrt{\rho _t+\mu _t}\) provides Property ii for a.e. t. Hence, we know \( (\rho _t, \mu _t)\in {\mathcal {H}}\) for a.e. t. We now use the lower semi-continuity of the slope on \({\mathcal {H}}\) together with Fatou’s lemma to deduce

Using again (4.36) we also obtain

which, combined with the \(L^2\) integrability of the \(H^1\) norm of the square root, provides \((\rho ,\mu )\in L^2 {\mathcal {H}}\). \(\square \)

Lemma 4.13

Let \(S_t:=\rho _t+\mu _t\) where \((\rho ,\mu )\) is the limit of the piecewise constant interpolation of the JKO scheme. We then have \(\int _{0}^{T} \Vert \sqrt{S_t}\Vert _{H^1(\Omega )}^2 \,\textrm{d}t <+\infty \).

Proof

We iterate the estimate of Lemma 4.9, which guarantees

where \(S_k^\tau :=\rho _k^\tau +\mu _k^\tau \), and where G is defined in Lemma 4.9. Summing over k, we obtain, for \(N=T/\tau \),

where \(\bar{S}^\tau := \bar{\rho }^\tau + \bar{\mu }^\tau \), and \(\bar{\rho }^\tau , \bar{\mu }^\tau \) are the curves obtained from the piecewise constant interpolation. It is then enough to pass to the limit \(\tau \rightarrow 0\) and apply the semi-continuity of the terms on the right-hand side above to obtain

which proves the claim since \(|\nabla \sqrt{S}|^2=\frac{|\nabla S|^2}{4S}\). \(\square \)

Now we have the necessary tools to prove Theorem 4.3.

Proof of Theorem 4.3

Let \((\hat{\rho }_t^{\tau }, \hat{\mu }_t^{\tau })\), \((\bar{\rho }_t^\tau , \bar{\mu }_t^\tau )\) and \((\tilde{\rho }_t^\tau , \tilde{\mu }_t^\tau )\) be the curves obtained by the De Giorgi variational interpolation, the piecewise constant interpolation and the geodesic interpolation respectively. Summing (4.35) over k we obtain

Lemma 4.11 gives that the curves obtained by the three interpolations defined above for the JKO scheme (4.20) converge to the same limit curve \((\rho _t, \mu _t)\). Lemma 4.13 uses the convergence of the piecewise constant interpolation to deduce \(\sqrt{\rho _t+\mu _t}\in L^2_tH^1_x\). Together with (4.37) (which provides the uniform bound on \(\textrm{Slope}F\)) and Proposition 4.12, this yields \((\rho ,\mu )\in L^2{\mathcal {H}}\) and \(\int _0^T \textrm{Slope}F(\rho _t, \mu _t) \,\textrm{d}t \le \liminf _\tau \int _0^T \textrm{Slope}F(\hat{\rho }_t^\tau , \hat{\mu }_t^\tau ) \,\textrm{d}t\).

Let us now look at the momentum variables \(\tilde{E}_t\). We consider the Benamou-Brenier functional

This functional, see Chapter 5 in [14], is lower semi-continuous for the weak convergence of both variables. Hence, we can write

and pass to the limit as \(\tau \rightarrow 0\), thus deducing \(E_\rho =\rho v\) with \(v\in L^2(\rho )\) and \(E_\mu =\mu w\) with \(w\in L^2(\mu )\) (all these integrabilities being meant in space-time) and

We then combine this with the lower semi-continuity of F, which is implied by the convexity of f, and we see that the limit curves \((\rho ,\mu )\), together with the velocity fields \((v, w)\), indeed form an EDI solution of our PDE. \(\square \)

An additional estimate. We conclude this section by showing an additional estimate on S, which is actually not needed for our analysis.

Lemma 4.14

Assume that the problem we consider is in dimension 1 or 2, that is, \(\Omega \subset {\mathbb {R}}^d\) with \(d=1\) or \(d=2\). Then, for \(T>0\), we have \( \int _{0}^{T} \Vert S_t\Vert _{L^2(\Omega )}^2 \,\textrm{d}t < + \infty \).

Proof

We treat the cases \(d=1\) and \(d=2\) separately.

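In dimension \(d=1\), Lemma 4.13 gives \(\sqrt{S} \in L^2_t H_x^1\), and the embedding \(H^1\subset L^\infty \) implies \(\sqrt{S} \in L^2_t L_x^{\infty }\), thus \(S \in L_t^1 L_x^{\infty }\). Moreover, since \(S=\rho +\mu \) and \(\rho \) and \(\mu \) have unit mass, we also have \(S \in L_t^\infty L^1_x\). Then, we have

\[ \int _0^T \Vert S_t\Vert _{L^2(\Omega )}^2 \,\textrm{d}t \le \int _0^T \Vert S_t\Vert _{L^\infty (\Omega )} \Vert S_t\Vert _{L^1(\Omega )} \,\textrm{d}t \le \Vert S\Vert _{L_t^\infty L^1_x} \Vert S\Vert _{L_t^1 L_x^{\infty }} < +\infty . \]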

This proves the result for \(d=1\).

Now, for \(d=2\), we consider the function \(\phi (b) = b \left( \log b -c \right) \), and notice that the Legendre transform \(\phi ^*\) of \(\phi \) is given by \(\phi ^*(a) = e^{a+c-1}\). Then we have the following inequality for every a, b, c (with \(b>0\)):

\[ ab \le b \left( \log b - c \right) + e^{a+c-1}. \tag{4.38} \]

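Let us define \(h(t) := \Vert \sqrt{S_t}\Vert ^2_{H^1(\Omega )}\), which is finite for a.e. t, and notice that \(h\in L^1([0,T])\) by Lemma 4.13. Taking \(b(t,x) = S_t(x) h(t)\) and \(a(t,x) = \frac{S_t(x)}{h(t)}\) in (4.38), together with a function \(c=c(t)\) to be chosen, we obtain

\[ S_t(x)^2 \le S_t(x) h(t) \left( \log \left( S_t(x) h(t)\right) - c(t) \right) + e^{\frac{S_t(x)}{h(t)} + c(t) - 1}. \tag{4.39} \]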

Now let us recall the Moser–Trudinger inequality in dimension \(d=2\). There exist positive constants \(\alpha \) and C such that for every \(u \in H^1 (\Omega )\) with \(\Vert u\Vert _{H^1(\Omega )} \le 1\) we have

\[ \int _\Omega e^{\alpha u^2} \,\textrm{d}x \le C. \]

The sharp value of the constant \( \alpha \) is \( 4 \pi \), but we will just use \(\alpha =1\). Taking \(c (t)= \log h(t)\) and denoting \(u (t,x)= \sqrt{\frac{S_t(x)}{h(t)}}\) in (4.39) we obtain that

The very last inequality also relies on the fact that S has uniformly bounded entropy, i.e. \(\int _\Omega S_t\log S_t \,\textrm{d}x\le C\). This is a consequence of the bound \(F(\rho _t,\mu _t)\le F(\rho _0,\mu _0)\). Using \(F\ge G\) we have a uniform bound on \(\int \rho \log \rho +\mu \log \mu \) and, by convexity, on \(\int \frac{S}{2}\log (\frac{S}{2})\), which in turn gives a bound on the entropy of S. This gives the result for \(d=2\) and finishes the proof. \(\square \)
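
For the reader's convenience, here is a sketch of how the ingredients of the case \(d=2\) fit together. The intermediate formulas are our own reconstruction from the choices of a, b, c and u made in the proof, so they should be read as a plausible outline rather than as the exact displayed computation. First, the supremum defining \(\phi ^*(a)=\sup _{b>0}\left( ab-b(\log b-c)\right) \) is attained where \(a-\log b-1+c=0\), i.e. at \(b=e^{a+c-1}\), and at this point

\[ \phi ^*(a) = ab - b(\log b - c) = b\left( a-(a+c-1)+c\right) = b = e^{a+c-1}. \]

Next, with \(b=S_th\), \(a=S_t/h\), \(c=\log h\) and \(u=\sqrt{S_t/h}\), we have \(ab=S_t^2\), \(b(\log b-c)=h\,S_t\log S_t\), \(e^{a+c-1}=\frac{h}{e}e^{u^2}\) and \(\Vert u\Vert ^2_{H^1(\Omega )}=\Vert \sqrt{S_t}\Vert ^2_{H^1(\Omega )}/h(t)=1\). Integrating the resulting inequality over \(\Omega \), then using the Moser–Trudinger inequality with \(\alpha =1\) and the entropy bound, one expects

\[ \int _\Omega S_t^2 \,\textrm{d}x \le h(t)\int _\Omega S_t\log S_t \,\textrm{d}x + \frac{h(t)}{e}\int _\Omega e^{u^2} \,\textrm{d}x \le C\,h(t), \]

and an integration in time gives \(\int _0^T \Vert S_t\Vert ^2_{L^2(\Omega )} \,\textrm{d}t \le C\int _0^T h(t) \,\textrm{d}t<+\infty \).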

5 Differentiation properties

The goal of this section is to prove a statement similar to the following one:

Let \((\rho ,\mu )\) be a curve in \(L^2{\mathcal {H}}\) (see Definition 4.1) and let v and w be two velocity fields for \(\rho \) and \(\mu \), respectively, i.e. we have \(\partial _t\rho +\nabla \cdot (\rho v)=0\) and \(\partial _t\mu +\nabla \cdot (\mu w)=0\).

If \(g:{\mathbb {R}}^2_+\rightarrow {\mathbb {R}}\) is a “nice enough” function, we have

In particular, we would like this to be true for \(g=f\) and for \((\rho ,\mu )\) the solution that we found in Sect. 4.

The main idea behind the above computation is that (5.41) holds if the densities of \(\rho \) and \(\mu \) are smooth, by just using a differentiation under the integral sign and an integration by parts. Hence, we will prove the result by regularization, relying on a suitable convolution kernel (in space only). We will suppose that \(\Omega \) is either the torus or a regular cube. In the second case, after symmetrizing, the functions \(\rho \) and \(\mu \) can be extended by periodicity and it is exactly as if \(\Omega \) were the torus.

We first choose a convolution kernel \(\eta >0\) with \(\int \eta =1\) (some assumptions on it will be made precise later) and define \(\eta _\varepsilon \) to be its rescaled version \(\eta _\varepsilon (z) :=\varepsilon ^{-d}\eta (z/\varepsilon )\). We define \(\rho _\varepsilon \) and \(v_\varepsilon \) via

\[ \rho _\varepsilon := \eta _\varepsilon * \rho , \qquad v_\varepsilon := \frac{\eta _\varepsilon * (\rho v)}{\rho _\varepsilon }. \tag{5.42} \]

Analogously, we define \(\mu _\varepsilon \) and \(w_\varepsilon \) with the same convolution kernel.

We first observe the following property.

Lemma 5.1

If \(\rho \in L^1([0,T]\times \Omega )\) and we have \(\int _0^T\int _\Omega \rho |v|^2<+\infty \), and \(\rho _\varepsilon \) and \(v_\varepsilon \) are defined as in (5.42), then we have \(\sqrt{\rho _\varepsilon }v_\varepsilon \rightarrow \sqrt{\rho }v\) in \(L^2([0,T]\times \Omega )\).

Proof

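It is well known (see Chapter 5 in [14]) that we have

\[ \int _0^T\int _\Omega \rho _\varepsilon |v_\varepsilon |^2 \le \int _0^T\int _\Omega \rho |v|^2, \]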

which proves that \(\sqrt{\rho _\varepsilon }v_\varepsilon \) is bounded in \(L^2\). Moreover, it is clear that its pointwise limit is \(\sqrt{\rho }v\) on the set \(\{\rho >0\}\) as a consequence of the standard properties of the convolution and of \(\rho _\varepsilon \rightarrow \rho \) and \(\rho _\varepsilon v_\varepsilon \rightarrow \rho v\). We then use Lemma 5.2 below to deduce the strong \(L^2\) convergence. \(\square \)
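
The fact that the kinetic energy does not increase under this kind of regularization can be checked numerically. The following is a minimal sketch on a periodic one-dimensional grid, assuming the usual form of the regularization, namely that the density and the momentum \(\rho v\) are both convolved with the same positive kernel and the regularized velocity is their ratio. The grid size, the test density, the test velocity, the five-point stencil `weights` and all function names are hypothetical choices made only for illustration.

```python
import math

def periodic_convolve(f, weights):
    """Circular convolution of the grid function f with a stencil centred at 0."""
    n, half = len(f), len(weights) // 2
    return [sum(w * f[(i + half - k) % n] for k, w in enumerate(weights))
            for i in range(n)]

def regularize(rho, v, weights):
    """Smooth the density and the momentum rho*v, then divide to get the velocity."""
    rho_eps = periodic_convolve(rho, weights)
    mom_eps = periodic_convolve([r * u for r, u in zip(rho, v)], weights)
    v_eps = [m / r for m, r in zip(mom_eps, rho_eps)]
    return rho_eps, v_eps

def kinetic_energy(rho, v, dx):
    """Riemann sum approximating the integral of rho * |v|^2."""
    return sum(r * u * u for r, u in zip(rho, v)) * dx

n = 200
dx = 1.0 / n
grid = [i * dx for i in range(n)]
rho = [1.0 + 0.8 * math.sin(2 * math.pi * x) for x in grid]   # positive test density
v = [math.cos(6 * math.pi * x) for x in grid]                 # test velocity field
weights = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]            # positive, sums to 1

rho_eps, v_eps = regularize(rho, v, weights)
energy = kinetic_energy(rho, v, dx)
energy_eps = kinetic_energy(rho_eps, v_eps, dx)
```

In this discrete setting the inequality `energy_eps <= energy` is exact (up to rounding), because \((\rho , E)\mapsto |E|^2/\rho \) is jointly convex and the convolution is a convex combination of translates; this mirrors the Jensen-type argument behind the continuous estimate.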

Lemma 5.2

Suppose that a sequence \(u_n\in L^2(X;{\mathbb {R}}^d)\) weakly converges in \(L^2\) to a function v, and that we have \(u_n(x)\rightarrow u(x)\) for a.e. \(x\in A\subset X\). Then we have \(u=v\) on A.

Suppose that a sequence \(u_n\) satisfies \(\limsup _n \int |u_n|^2 \le \int |u|^2\) and \(u_n(x)\rightarrow u(x)\) for a.e. \(x\in A\) with \(A=\{u\ne 0\}\); then we have \(u_n\rightarrow u\) in \(L^2(X)\).

Proof

Let \(\phi \in L^\infty (X)\) be a test function vanishing on \(A^c\). We have \(\int u_n \cdot \phi \rightarrow \int v\cdot \phi \) because of weak convergence. Yet, for an arbitrary \(R>0\), if we denote by \(\pi _R\) the projection onto the closed ball of radius R, we also have \(\int \pi _R(u_n)\cdot \phi \rightarrow \int \pi _R(u)\cdot \phi \) because of dominated convergence. Moreover

Using

we obtain

i.e. \(\lim _n \int _X (u_n-u)\cdot \phi =0\) since R is arbitrary. We then obtain \(\int (u-v)\cdot \phi =0\) for any \(L^\infty \) function \(\phi \) vanishing outside A, i.e. \(u=v\) a.e. on A.

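For the second part of the statement, since \(u_n\) is bounded in \(L^2\) we first extract a subsequence weakly converging to some \(v\in L^2\). From the previous part of the claim and our assumptions, we know that \(v=u\) on \(A=\{u\ne 0\}\). We then use

\[ \int _X |v|^2 \le \liminf _n \int _X |u_n|^2 \le \limsup _n \int _X |u_n|^2 \le \int _X |u|^2 = \int _A |u|^2 = \int _A |v|^2 \le \int _X |v|^2. \]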

Since all the inequalities must be equalities, we deduce \(v=0=u\) on \(A^c\) and hence \(u=v\) a.e. on X, as well as \(||u_n||_{L^2}\rightarrow ||u||_{L^2}\). Thus, \(u_n\) weakly converges to u with convergence of the norm, which implies strong convergence. The limit does not depend on the subsequence we extracted, so it holds on the full sequence. \(\square \)
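
The last implication (weak convergence together with convergence of the norms gives strong convergence) is a standard Hilbert-space fact. For completeness, it follows by expanding the square and using the weak convergence \(u_n\rightharpoonup u\) in the cross term:

\[ \Vert u_n-u\Vert ^2_{L^2} = \Vert u_n\Vert ^2_{L^2} - 2\int _X u_n\cdot u + \Vert u\Vert ^2_{L^2} \longrightarrow \Vert u\Vert ^2_{L^2} - 2\Vert u\Vert ^2_{L^2} + \Vert u\Vert ^2_{L^2} = 0. \]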

With this convergence result in mind we can first prove the following:

Proposition 5.3

Equality (5.41) holds when \(g(a,b)=\tilde{f}(a+b)\), provided that \(\tilde{f}\) (which is a priori only defined on \([2,+\infty )\)) is extended to a function bounded from below on \({\mathbb {R}}_+\) satisfying \(0\le \tilde{f}''(s)\le C/s\).

Proof

We first regularize by convolution the densities \(\rho \) and \(\mu \) into \(\rho _\varepsilon \) and \(\mu _\varepsilon \). We define

\[ S_\varepsilon := \rho _\varepsilon + \mu _\varepsilon = \eta _\varepsilon * S, \]

where \(S=\rho +\mu \). Note that \(\sqrt{S}\in L^2_tH^1_x\) implies \(\nabla (\sqrt{S_\varepsilon })\rightarrow \nabla (\sqrt{S})\) in \(L^2\) in space-time. Indeed, a simple convexity argument proves that \(||\nabla (\sqrt{S_\varepsilon })||_{L^2}\le ||\nabla (\sqrt{S})||_{L^2}\). This proves that \(\nabla (\sqrt{S_\varepsilon })\) is bounded in \(L^2\) and has, hence, a weak limit. This limit can only be \(\nabla (\sqrt{S})\) since \(\sqrt{S_\varepsilon }\rightarrow \sqrt{S}\) pointwise. Yet, this also means that the limit of the \(L^2\) norm will be smaller than the norm of the limit, which implies strong convergence (even if, actually, in this context weak convergence would have been enough).
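
The convexity argument mentioned above can be sketched as follows, in our own words and assuming that \(S_\varepsilon \) is the convolution \(\eta _\varepsilon * S\). We have \(|\nabla \sqrt{S}|^2=\frac{|\nabla S|^2}{4S}\), and the function \((s,p)\mapsto |p|^2/s\) is jointly convex on \((0,+\infty )\times {\mathbb {R}}^d\). Since \(\nabla S_\varepsilon =\eta _\varepsilon * \nabla S\), Jensen's inequality applied to the probability density \(\eta _\varepsilon (x-\cdot )\) gives, pointwise,

\[ \frac{|\nabla S_\varepsilon (x)|^2}{4S_\varepsilon (x)} = \frac{|(\eta _\varepsilon * \nabla S)(x)|^2}{4(\eta _\varepsilon * S)(x)} \le \left( \eta _\varepsilon * \frac{|\nabla S|^2}{4S}\right) (x). \]

Integrating in x and using \(\int \eta _\varepsilon =1\) then yields \(||\nabla (\sqrt{S_\varepsilon })||_{L^2}\le ||\nabla (\sqrt{S})||_{L^2}\).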

Since formula (5.41) is true for the regularized densities, we just have to pass to the limit in each term as \(\varepsilon \rightarrow 0\). The convergence

is true for any convex function g which is bounded from below: Fatou’s lemma gives the lower bound on the liminf, and Jensen’s inequality gives the upper bound (indeed, every convex functional which is invariant under translations decreases under convolution).
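
The two bounds can be written out as follows; this is a sketch under the assumptions that \(\Omega \) is the torus, that g is continuous (or at least lower semi-continuous), and that \(\rho _\varepsilon \rightarrow \rho \) and \(\mu _\varepsilon \rightarrow \mu \) a.e., possibly after extracting a subsequence. For the upper bound, Jensen's inequality with the probability density \(\eta _\varepsilon (x-\cdot )\) gives \(g(\rho _\varepsilon (x),\mu _\varepsilon (x))\le \left( \eta _\varepsilon * g(\rho ,\mu )\right) (x)\), and integrating in x gives \(\int _\Omega g(\rho _\varepsilon ,\mu _\varepsilon )\le \int _\Omega g(\rho ,\mu )\). For the lower bound, Fatou's lemma applies because g is bounded from below and \(\Omega \) has finite measure. Altogether,

\[ \int _\Omega g(\rho ,\mu ) \le \liminf _{\varepsilon \rightarrow 0}\int _\Omega g(\rho _\varepsilon ,\mu _\varepsilon ) \le \limsup _{\varepsilon \rightarrow 0}\int _\Omega g(\rho _\varepsilon ,\mu _\varepsilon ) \le \int _\Omega g(\rho ,\mu ), \]

so that all the inequalities are equalities and the integrals converge.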

We then just need to pass to the limit in the space-time integral. The part concerning \(\rho \) can be written as