Abstract

We provide a general approach to deform framed curves while preserving their clamped boundary conditions (this includes closed framed curves) as well as properties of their curvatures. We apply this to director theories, which involve a curve \(\gamma : (0, 1)\rightarrow \mathbb {R}^3\) and orthonormal directors \(d_1\), \(d_2\), \(d_3: (0,1)\rightarrow \mathbb {S}^2\) with \(d_1 = \gamma '\). We show that \(\gamma \) and the \(d_i\) can be approximated smoothly while preserving clamped boundary conditions at both ends. The approximation process also preserves conditions of the form \(d_i\cdot d_j' = 0\). Moreover, it is continuous with respect to natural functionals on framed curves. In the context of \(\Gamma \)-convergence, our approach allows one to construct recovery sequences for director theories with prescribed clamped boundary conditions. We provide one simple application of this kind. Finally, we use similar ideas to derive Euler–Lagrange equations for functionals on framed curves satisfying clamped boundary conditions.

1 Introduction

An elastic inextensible rod of length \(\ell \) can be modelled by an arclength parametrized curve \(\gamma : (0, \ell )\rightarrow \mathbb {R}^3\) describing the deformation of the center line, and a field of orthonormal directors \(d_2\), \(d_3 : (0, \ell )\rightarrow \mathbb {S}^2\) which are normal to the (unit) velocity vector \(d_1 = \gamma '\) of the curve. The triple \((d_1, d_2, d_3)\) is called a frame (or framing) adapted to the curve \(\gamma \). We assume that \((d_1, d_2, d_3)\in SO(3)\) almost everywhere. There are generalizations of this setting, e.g., to non-orthonormal frames and to models for extensible rods; we refer to [2, 7] and the references therein.

Rod-like theories also arise as asymptotic theories for narrow plates. Theories for ribbons [10, 26] are one such instance. In such instances, there can be a natural choice of directors, which is characterized by the vanishing of curvature or torsion, i.e., of expressions of the form \(d_i\cdot d_j'\). A common choice of directors gives rise to the Frénet frame, cf. [2, 8]. In [6] alternative choices of frames are discussed. One such alternative is what is called a relatively parallel adapted frame in [6]. The Frénet frame is determined by the condition \(d_1'\cdot d_3 = 0\), while the relatively parallel frame in [6] is determined by the condition \(d_2'\cdot d_3 = 0\). In the theory derived in [10], the natural choice of directors instead satisfies the constraint \(d_1\cdot d_2' = 0\).

Similar objects and constraints arise in the context of curves which lie within a surface \(\Sigma \subset \mathbb {R}^3\). A common choice of directors in this case is to take \(d_3(t)\) to equal the normal to the surface \(\Sigma \) at the point \(\gamma (t)\). The curvature constraints then correspond to special curves on \(\Sigma \) such as geodesics or lines of curvature, and the frame is called the Darboux frame.

In hyperelasticity, the behaviour of rod-like objects is governed by a suitable energy functional which assigns an elastic energy to each triple \((\gamma , d_2, d_3)\) resp. \((d_1, d_2, d_3)\).

A common mechanical situation is that of a rod (or ribbon) whose ends are clamped (or welded to some planes), cf. [2, 4]. That is, one prescribes \((\gamma , d_1, d_2, d_3)\) at both ends of the rod:

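$$\begin{aligned} \gamma = \overline{\gamma } \text{ and } d_i = \overline{d}_i \text{ on } \{0, \ell \} \text{ for } i = 1, 2, 3. \end{aligned}$$(1)

Here \(\overline{\gamma }\) and \(\overline{d}_i\) are given boundary data defined on \(\{0, \ell \}\). Observe that closed framed curves (e.g. infinitesimally narrow ribbons in the shape of a Möbius band [25, 27]) are particular cases of framed curves with clamped ends.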

In order to study variational problems in such a setting, it is useful to be able to modify a given framed curve \((\gamma , d_2, d_3)\) in a way that preserves both the boundary conditions and curvature conditions, e.g., those of the form \(d_i\cdot d_j' = 0\). The purpose of this article is to describe a general process to achieve such modifications. An important feature of this process is that the required modifications can be chosen to enjoy additional properties, such as smoothness or small support.

One class of applications arises in the derivation of asymptotic theories. In the context of \(\Gamma \)-convergence, the need arises to construct, for a given limiting framed curve \((\gamma , d_2, d_3)\) (which satisfies the boundary conditions), sequences \((\gamma ^{(n)}, d^{(n)}_2, d^{(n)}_3)\) of framed curves which are asymptotically optimal, i.e., so-called recovery sequences. Using the modification process, one can first construct these recovery sequences without taking the boundary conditions into account, and subsequently modify the resulting \((\gamma ^{(n)}, d^{(n)}_2, d^{(n)}_3)\) to become admissible for the prescribed boundary conditions. In such a context, it is essential to find well-controlled modifications even when the sequence \((\gamma ^{(n)}, d^{(n)}_2, d^{(n)}_3)\) obtained in the first step is only weakly compact in \(W^{2,2}\times W^{1,2}\).

In the present article we only give a simple application of the general results in this context. It is related to the existence of generalized optimal designs for rods with clamped ends via \(\Gamma \)-convergence, see Proposition 4.4 below. A more sophisticated application to such a question in the context of ribbons will be given elsewhere.

Another area of applications of the modification procedure is the approximation of a given framed curve by smooth ones. Such density results are useful in the context of \(\Gamma \)-convergence, as well. They allow one to construct recovery sequences \((\gamma ^{(n)}, d^{(n)}_2, d^{(n)}_3)\) starting from smooth limiting objects \((\gamma , d_2, d_3)\). This can be much easier than having to base the construction on an irregular object.

Such density results are also an important tool for establishing the convergence of discrete approximations in [4], as they make it possible to remove artificial regularity hypotheses. This is particularly useful in the case of ribbons, since the constraints are not of lower order.

We will obtain the following density result; cf. Theorem 4.1 below for its precise statement.

Let Q be continuous and define \( \widetilde{\mathcal {F}}_Q \) by

Then for all framed curves

with \(\widetilde{\mathcal {F}}_Q(\gamma , d_2, d_3) < \infty \) there exist \((\gamma ^{(n)}, d_2^{(n)}, d_3^{(n)})\), which are \(C^{\infty }\) on \([0, \ell ]\) and which satisfy the following:

- convergence: \((\gamma ^{(n)}, d_2^{(n)}, d_3^{(n)})\rightarrow (\gamma , d_2, d_3)\) strongly in \(W^{2,2}\times W^{1,2}\times W^{1,2}\) as \(n\rightarrow \infty \).

- convergence of energies: \(\widetilde{\mathcal {F}}_Q(\gamma ^{(n)}, d_2^{(n)}, d_3^{(n)}) \rightarrow \widetilde{\mathcal {F}}_Q(\gamma , d_2, d_3)\) as \(n\rightarrow \infty \).

- boundary conditions: \((\gamma ^{(n)}, d_2^{(n)}, d_3^{(n)})\) satisfy (1) for all \(n\in \mathbb {N}\).

- structure: if \(d_i\cdot d_j' = 0\) on \((0, \ell )\), then \(d^{(n)}_i\cdot (d^{(n)}_j)' = 0\) on \((0, \ell )\) for all \(n\in \mathbb {N}\).

The above theorem applies to the standard bending-torsion theory of rods, for which a similar result has been obtained recently, by a different approach, in [5]; see also [19] for a related result. However, it also applies to curves endowed with the Frénet frame, or with the relatively parallel frame in [6], or to the frame used in the context of ribbons in [10]. For more details we refer to Sect. 3.

Both Theorem 4.1 and Proposition 4.4 are applications of the following more general result; we refer to Theorem 3.2 for the precise statement and to Definition 3.1 for the notion of degeneracy:

Let \((\gamma , d_2, d_3)\) be a framed curve satisfying (1). Assume that there is a set \(J\subset I\) of positive measure on which \((\gamma , d_2, d_3)\) is nondegenerate. Let \((\gamma ^{(n)}, d_2^{(n)}, d_3^{(n)})\) be a sequence of framed curves converging weakly to \((\gamma , d_2, d_3)\) in \(W^{2,2}\times W^{1,2}\).

Then there exist \((\widehat{\gamma }^{(n)}, \widehat{d}_2^{(n)}, \widehat{d}_3^{(n)})\), still converging weakly to \((\gamma , d_2, d_3)\), which satisfy (1) and whose curvatures differ from those of \((\gamma ^{(n)}, d_2^{(n)}, d_3^{(n)})\) only by well-behaved functions supported on J.

Roughly speaking, by well-behaved functions we mean those which preserve the conditions \(d_i\cdot d_j' = 0\) on the curvatures, and which moreover can be chosen to belong to any space which is dense in \(L^2\).

Clearly, this last result is only interesting if nondegeneracy is a very weak condition. This is indeed the case, as shown in Proposition 3.3 below. In fact, the notion of degeneracy turns out to be strong enough to see why the result cannot be true if \((\gamma , d_2, d_3)\) is degenerate on \((0, \ell )\).

In Sect. 3.5 we derive the Euler–Lagrange equations for stationary points of functionals on framed curves satisfying the boundary conditions (1). In Sect. 4.3 we apply this to a ribbon model.

A more general notion of framed curves (allowing for zero velocity) is studied, e.g., in [3, 11, 15]. In [12] conditions are obtained under which a smooth curve with points of zero velocity nevertheless admits a smooth adapted framing.

In [9, 20,21,22] equilibrium states of clamped rods were studied under various kinds of boundary conditions; we also refer to [13]. A formalism to study such variational problems on framed curves was developed in [14] and applied for fixed boundary conditions e.g. in [18].

Notation. We tacitly sum over repeated indices, unless stated otherwise. All sets and functions are measurable. The letter I will denote the identity matrix, but it will also denote the open interval (0, 1). We denote by \(\mathfrak {so}(n)\) the space of skew-symmetric \(n\times n\)-matrices and by SO(n) the set of rotation matrices. The scalar product on \(\mathbb {R}^{n\times n}\) will be denoted by \(A:B = {{\,\mathrm{Tr}\,}}(A^TB)\), and we use the norm \(|A|^2 = A:A\). We use the notation \( \wedge : \mathbb {R}^n\times \mathbb {R}^n\rightarrow \mathfrak {so}(n) \) given by \(a\wedge b = a\otimes b - b\otimes a\). We denote by \((e_1, e_2, e_3)\) the canonical basis of \(\mathbb {R}^3\).
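The notation can be checked on small examples. The following sketch is only an illustration: the function names `wedge` and `frobenius` are ours, and we assume the usual convention \((a\otimes b)_{ij} = a_i b_j\).

```python
def wedge(a, b):
    """a ∧ b = a ⊗ b − b ⊗ a, assuming (a ⊗ b)_ij = a_i * b_j."""
    n = len(a)
    return [[a[i] * b[j] - b[i] * a[j] for j in range(n)] for i in range(n)]


def frobenius(A, B):
    """Scalar product A : B = Tr(A^T B), i.e. the sum of A_ij * B_ij."""
    return sum(A[i][j] * B[i][j]
               for i in range(len(A)) for j in range(len(A[0])))


# Canonical basis of R^3.
e1, e2, e3 = (1, 0, 0), (0, 1, 0), (0, 0, 1)

W = wedge(e1, e2)
print(W)                # a skew-symmetric matrix with entries +1 and -1
print(frobenius(W, W))  # |e1 ∧ e2|^2
```

With this convention the matrices \(e_1\wedge e_2\), \(e_1\wedge e_3\), \(e_2\wedge e_3\) are mutually orthogonal with respect to the scalar product \(A:B\), and each has squared norm 2.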

2 Abstract results

2.1 An implicit function theorem

In what follows, for a normed space \((H, \Vert \cdot \Vert _H)\), we will denote by \(B^{\Vert \cdot \Vert _H}_R(x)\) the open ball of radius \(R > 0\) with respect to the norm \(\Vert \cdot \Vert _H\) centered at \(x\in H\); we will omit the superscript when the norm is evident from the context. For a given linear map T we will denote its kernel by N(T). By L(E, G) we denote the space of linear bounded operators from E to G.

Theorem 2.1

Let K, \(k\in \mathbb {N}\), let \(\mathcal {M}\subset \mathbb {R}^K\) be a smooth k-dimensional submanifold, let H be a Banach space, let \(V\subset H\) be open in the strong topology of H. Let \(\mathcal {P}: V\rightarrow \mathcal {M}\) be continuously Fréchet differentiable and let \(x\in V\) be such that \( D\mathcal {P}(x) \) maps some subspace \(\widetilde{E}\subset H\) onto the tangent space to \(\mathcal {M}\) at \(\mathcal {P}(x)\). Then there exists a subspace \(E\subset \widetilde{E}\) such that

Moreover, for any subspace \(E\subset H\) satisfying (2) the following is true:

- (i) E is k-dimensional, and there exist an \(r > 0\) and a continuously Fréchet differentiable map

  $$\begin{aligned} Y : B_r^{\Vert \cdot \Vert _H}(x) \rightarrow E \end{aligned}$$

  satisfying \(Y(x) = 0\) and

  $$\begin{aligned} \mathcal {P}(z + Y(z)) = \mathcal {P}(x) \text{ for } \text{ all } z\in B_r^{\Vert \cdot \Vert _H}(x). \end{aligned}$$(3)

- (ii) If both \(\mathcal {P}\) and its Fréchet derivative \(D\mathcal {P}: V\rightarrow L\left( H, \mathbb {R}^K \right) \) are sequentially continuous with respect to weak convergence in H and if \(R > 0\) is such that

  $$\begin{aligned} B^{\Vert \cdot \Vert _H}_{2R}(x)\subset V \end{aligned}$$(4)

  and \((x_n)\subset B_R^{\Vert \cdot \Vert _H}(x)\) is such that \(x_n\rightharpoonup x\) weakly in H as \(n\rightarrow \infty \), then there exist \(y_n\in E\) converging to zero in E and satisfying \(\mathcal {P}(x_n + y_n) = \mathcal {P}(x)\) for all \(n\in \mathbb {N}\) large enough.
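A finite-dimensional toy case may help to visualise part (i). The sketch below is an illustration only: the choices \(H = \mathbb {R}^2\), \(\mathcal {M} = \mathbb {R}\), \(\mathcal {P}(z) = |z|^2\), \(x = (1, 0)\) and \(E = {{\,\mathrm{span}\,}}\{(1, 0)\}\) are ours, and we assume that (2) expresses that E is a complement of \(N(D\mathcal {P}(x))\), as used in the proof below. The correction \(Y(z)\in E\) is computed by Newton's method in the single coordinate of E.

```python
def P(z):
    """Toy constraint map P : R^2 -> R, P(z) = |z|^2 (hypothetical example)."""
    return z[0] ** 2 + z[1] ** 2


X0 = (1.0, 0.0)     # base point x
E_DIR = (1.0, 0.0)  # spans E; D P(x) = 2 x^T does not vanish on E


def correction(z, tol=1e-14, max_iter=50):
    """Coordinate s of Y(z) = s * E_DIR with P(z + Y(z)) = P(X0), by Newton's method."""
    target = P(X0)
    s = 0.0
    for _ in range(max_iter):
        w = (z[0] + s * E_DIR[0], z[1] + s * E_DIR[1])
        residual = P(w) - target
        if abs(residual) < tol:
            break
        # Derivative of s -> P(z + s * E_DIR) equals D P(w) E_DIR = 2 w . E_DIR.
        slope = 2.0 * (w[0] * E_DIR[0] + w[1] * E_DIR[1])
        s -= residual / slope
    return s


# Perturb x along the kernel direction (0, 1) of D P(x).
for t in (0.1, 0.01, 0.001):
    s = correction((1.0, t))
    print(t, s, s / t)
```

In this example the quotient `s / t` tends to zero as `t` decreases: the correction is of second order in directions belonging to the kernel of \(D\mathcal {P}(x)\).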

Remarks

- (i) Theorem 2.1 (i) is a common version of the implicit function theorem. Theorem 2.1 (ii) will be relevant for the derivation of our main result, Theorem 3.2 below.

- (ii) If \(V = H\) then there always exists an \(R > 0\) satisfying the hypotheses of the second part, since in this case condition (4) is void and the sequence \((x_n)\) is bounded in H because it converges weakly.

- (iii) In applications (such as in [4, 10, 16] and Sect. 4) one needs the freedom to choose E in order to ensure that the corrections \(y_n\) enjoy suitable properties.

- (iv) A consequence of Theorem 2.1 (i) is that

  $$\begin{aligned} N(D\mathcal {P}(x))\subset N(DY(x)). \end{aligned}$$(5)

  This follows at once by applying the chain rule to (3).
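The chain rule argument behind (5) can be spelled out in a few lines. This is a sketch rather than the authors' wording; it uses the property \(E\cap N(D\mathcal {P}(x)) = \{0\}\), which is invoked in the proof of Theorem 2.1 below, and the letter h for a direction in H is our notation.

```latex
% Differentiate (3) at z = x in a direction h \in H:
D\mathcal{P}(x)\bigl(h + DY(x)h\bigr) = 0 \quad \text{for all } h \in H.
% If h \in N(D\mathcal{P}(x)), the first term drops out, so
D\mathcal{P}(x)\,DY(x)h = 0,
\quad \text{i.e.} \quad
DY(x)h \in E \cap N(D\mathcal{P}(x)) = \{0\}.
% Hence DY(x)h = 0 for every h \in N(D\mathcal{P}(x)), which is the inclusion (5).
```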

The proof of Theorem 2.1 (ii) uses the following implicit function theorem.

Lemma 2.2

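Let X be a topological space, let E and G be Banach spaces and let \(U\subset E\) be open in the strong topology. Let

$$\begin{aligned} F : X\times U\rightarrow G \end{aligned}$$(6)

be continuous. Assume, moreover, that the partial Fréchet derivative

$$\begin{aligned} D_2 F : X\times U\rightarrow L(E, G) \end{aligned}$$(7)

of F with respect to its second argument exists and is continuous, and let \((a, b)\in X\times U\) be such that

$$\begin{aligned} D_2 F(a, b) \text{ maps } E \text{ bijectively onto } G. \end{aligned}$$(8)

Then there exists a neighbourhood \(W\subset X\) of a and a map \(Y : W\rightarrow U\) such that

$$\begin{aligned} F(x, Y(x)) = F(a, b) \text{ for all } x\in W. \end{aligned}$$

Moreover, Y is continuous in a with \(Y(a) = b\).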

Proof

The proof closely follows the usual proof of the implicit function theorem. Without loss of generality we assume that \(b = 0\), that U is an open ball centered at 0 and that \(F(a, 0) = 0\). By the closed graph theorem, the inverse of \(D_2F(a, 0)\) is bounded.

Set \(A = D_2 F(a, 0)\) and define, for every \(x\in X\) the map \(T_x : U\rightarrow E\) by setting

Then for all y, \(z\in U\):

By continuity of \(D_2 F\), there exist \(R_0 > 0\) and a neighbourhood \(W_0\) of a such that \(\overline{B}_{R_0}(0)\subset U\) and such that for all \((x, \widetilde{y})\in W_0\times \overline{B}_{R_0}(0)\) we have

Thus

Now let \(R\in (0, R_0)\) and \(y\in \overline{B}_R(0)\). Then for all \(x\in W_0\) we have by (9) that

Since \(F(a, 0) = 0\) and since F is continuous in (a, 0), there exists a neighbourhood \(W\subset W_0\) of a such that

Summarizing, for every \(R\in (0, R_0)\) there exists a neighbourhood W of a such that the map \(T_x : \overline{B}_R(0)\subset E\rightarrow \overline{B}_R(0)\subset E\) is a contraction for each \(x\in W\). Hence for each \(x\in W\) the map \(T_x\) has a unique fixed point \(Y(x)\in \overline{B}_R(0)\).

Since, moreover, \(R\in (0, R_0)\) was arbitrarily small, we conclude that Y is continuous in a with \(Y(a) = 0\). \(\square \)
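The contraction argument can be tried out numerically. The sketch below is an illustration under stated assumptions: the display defining \(T_x\) is not reproduced above, so we assume the usual choice \(T_x(y) = y - A^{-1}F(x, y)\) with \(A = D_2F(a, b)\); the function \(F(x, y) = x^2 + y^2 - 1\) and the point \((a, b) = (0, 1)\) are hypothetical examples, and b is not shifted to 0.

```python
import math


def F(x, y):
    """Hypothetical example: the zero set of F is the unit circle."""
    return x * x + y * y - 1.0


A_POINT, B_POINT = 0.0, 1.0    # the point (a, b), where F(a, b) = 0
A_INV = 1.0 / (2.0 * B_POINT)  # inverse of A = D_2 F(a, b) = 2 b


def T(x, y):
    """Assumed fixed point map T_x(y) = y - A^{-1} F(x, y)."""
    return y - A_INV * F(x, y)


def implicit_solution(x, tol=1e-14, max_iter=200):
    """Iterate T_x starting from b; the limit Y(x) satisfies F(x, Y(x)) = 0."""
    y = B_POINT
    for _ in range(max_iter):
        y_next = T(x, y)
        if abs(y_next - y) < tol:
            return y_next
        y = y_next
    return y


for x in (0.0, 0.1, 0.3):
    print(x, implicit_solution(x), math.sqrt(1.0 - x * x))
```

Near \(y = 1\) the derivative of \(T_x\) with respect to y is \(1 - y\), which is small, so the iteration contracts quickly. The frozen operator \(A^{-1}\) is never updated, in contrast to Newton's method.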

Remark 2.3

Let K, \(k\in \mathbb {N}\) and let \(\mathcal {M}\) be a smooth k-dimensional submanifold of \(\mathbb {R}^K\). Then Lemma 2.2 remains true if G is replaced by \(\mathcal {M}\) in (6), by \(\mathbb {R}^K\) in formula (7) and by \(T_{F(a, b)}\mathcal {M}\) (i.e., the tangent space to \(\mathcal {M}\) at the point F(a, b)) in (8).

Proof

Let \(\widetilde{\mathcal {M}}\subset \mathcal {M}\) be relatively open with \(F(a, b)\in \widetilde{\mathcal {M}}\) and such that there exists a chart \(\Psi : \widetilde{\mathcal {M}}\rightarrow \mathbb {R}^k\). By continuity of F there exist open neighbourhoods \(\widetilde{X}\subset X\) of a and \(\widetilde{U}\subset U\) of b such that \( F(\widetilde{X}\times \widetilde{U})\subset \widetilde{\mathcal {M}}. \) The conclusion now follows by applying Lemma 2.2 with \(\widetilde{X}\), \(\widetilde{U}\) and \(\Psi \circ F\) instead of X, U and F. \(\square \)

Since Remark 2.3 is such an immediate consequence of the lemma, we will at times refer to Lemma 2.2 even when we should, more precisely, be referring to Remark 2.3.

Proof of Theorem 2.1

The existence of E is standard. In fact, since \(D\mathcal {P}(x)\) maps \(\widetilde{E}\) onto the tangent space to \(\mathcal {M}\) at \(\mathcal {P}(x)\), there exist \(y^{(1)}\), ..., \(y^{(k)}\in \widetilde{E}\) such that

$$\begin{aligned} D\mathcal {P}(x)y^{(1)}, \ldots , D\mathcal {P}(x)y^{(k)} \end{aligned}$$

are linearly independent. We can take E to be the span of the \(y^{(j)}\).

If E is any subspace satisfying (2), then \(\dim E = k\). In fact, since \(E\cap N(D\mathcal {P}(x)) = \{0\}\), we see that the restriction of \(D\mathcal {P}(x)\) to E is injective. Hence \(\dim E = \dim D\mathcal {P}(x)(E)\le k\). On the other hand, the range of \(D\mathcal {P}(x)\) equals the range of the restriction \(D\mathcal {P}(x)|_E\). Since \(D\mathcal {P}(x)\) maps onto the k-dimensional tangent space, we conclude that \(\dim E \ge k\).

Now let \(R > 0\) satisfy (4), let \(X = B_R^{\Vert \cdot \Vert _H}(x)\) and \(U = E\cap B_{R/2}^{\Vert \cdot \Vert _H}(0)\). Then \(z + y\in V\) for all \((z, y)\in X\times U\) and therefore we can define the map \(F : X\times U\rightarrow \mathcal {M}\) by setting

$$\begin{aligned} F(z, y) = \mathcal {P}(z + y). \end{aligned}$$

For every fixed \(z\in X\) we see that \(F(z, \cdot )\) is differentiable with differential

$$\begin{aligned} D_2F(z, y) = D\mathcal {P}(z + y)|_E. \end{aligned}$$

In particular, \(D_2F(x, 0) = D\mathcal {P}(x)|_E\) is injective. Since \(\mathcal {P}\) and its Fréchet derivative \(D\mathcal {P}\) are strongly continuous, the existence of \(r > 0\) and Y follows from Lemma 2.2 and Remark 2.3 if we endow X with the relative topology inherited from the strong topology on H. The continuous Fréchet differentiability of Y is standard as well.

In order to prove Theorem 2.1 (ii), we endow X with the relative topology inherited from the weak topology on H. Then both F and \(D_2 F\) are continuous on \(X\times U\) because \(\mathcal {P}\) and \(D\mathcal {P}\) and the operation of addition are sequentially weakly continuous and the relative weak topology on X is first countable. Hence the claim follows from Lemma 2.2 and Remark 2.3 by setting \(y_n = Y(x_n)\) for large enough n. \(\square \)

Corollary 2.4

Assume that the hypotheses of Theorem 2.1 are satisfied and let \(E\subset H\) be a subspace satisfying (2). Then there exists a neighbourhood \(\widehat{E}\subset E\) of the origin such that \(\mathcal {P}\) maps \(x + \widehat{E}\) homeomorphically onto a neighbourhood of \(\mathcal {P}(x)\) in \(\mathcal {M}\).

Proof

We define a \(C^1\)-map \(\widetilde{F}\) from a neighbourhood of the origin in E into \(\mathcal {M}\) by setting \( \widetilde{F}(y) = \mathcal {P}(x + y). \) Then \( D\widetilde{F}(0) = D\mathcal {P}(x)|_E \) is a bijection from the k-dimensional space E onto the k-dimensional tangent space to \(\mathcal {M}\) at \(\mathcal {P}(x)\). Hence the claim follows from the inverse function theorem. \(\square \)

In Sect. 3.5 we will use the following Lagrange multiplier rule.

Corollary 2.5

Assume that the hypotheses of Theorem 2.1 are satisfied. Assume, moreover, that \(\mathcal {F}: V\rightarrow \mathbb {R}\) is Fréchet differentiable in x and that \( \mathcal {F}(x)\le \mathcal {F}(z) \) for all \(z\in V\) with \(\mathcal {P}(z) = \mathcal {P}(x)\). Then there exists a unique \(\Lambda \) in the tangent space to \(\mathcal {M}\) at \(\mathcal {P}(x)\) such that

Proof

Since \(D\mathcal {P}(x)\) maps H onto the tangent space to \(\mathcal {M}\) at \(\mathcal {P}(x)\), existence and uniqueness of \(\Lambda \) follow once we prove that

By Theorem 2.1 (i) there exists a finite dimensional subspace \(E\subset H\), an open ball \(B\subset V\) centered at x and a continuously Fréchet differentiable map \(Y : B\rightarrow E\) such that \( \mathcal {P}(z + Y(z)) = \mathcal {P}(x) \text{ for } \text{ all } z\in B. \) In particular, for any \(y\in N(D\mathcal {P}(x))\) there is an \(r > 0\) such that

Hence

On the other hand, (5) implies that \( \frac{1}{t}Y(x + ty) \) converges to zero in E as \(t\rightarrow 0\). Dividing (11) by t and letting \(t\rightarrow 0\) we therefore deduce (10). \(\square \)

3 Framed curves with boundary conditions

Throughout this section I denotes the open interval (0, 1); any other bounded interval would do equally well. The same letter I will also denote the identity matrix.

For given \(A\in L^2(I, \mathbb {R}^{n\times n})\) the initial value problem

$$\begin{aligned} R' = AR \text{ on } I, \quad R(0) = R_0 \end{aligned}$$

has a unique solution \(R\in W^{1,2}(I, \mathbb {R}^{n\times n})\) for any choice of initial condition \(R_0\in \mathbb {R}^{n\times n}\).

From now on, a framed curve is a pair consisting of a frame field \(r : I\rightarrow SO(3)\) and an associated curve \(\gamma : I\rightarrow \mathbb {R}^3\) satisfying \(\gamma ' = r^Te_1\) on I. For every \(A\in L^2(I, \mathfrak {so}(3))\) let us denote by \(r_A\in W^{1,2}(I, SO(3))\) the unique solution to the initial value problem

$$\begin{aligned} r' = Ar \text{ on } I, \quad r(0) = I. \end{aligned}$$(12)

In what follows we will at times write r instead of \(r_A\) and \(r_i\) instead of \(r_A^Te_i\). Notice that \(r_2 = d_2\) and \(r_3 = d_3\) are the directors mentioned in the introduction. The differential equation (12) can be spelled out as follows:
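For orientation, the component form can be derived as follows. This is a reconstruction, not the authors' display: it takes (12) to read \(r' = Ar\) with \(r(0) = I\), as suggested by the identity \(r_{A_n}' = A_n r_{A_n}\) used in the proof of Lemma 3.4, and it writes \(A = A_{12}\, e_1\wedge e_2 + A_{13}\, e_1\wedge e_3 + A_{23}\, e_2\wedge e_3\). The resulting signs agree with (20) in Proposition 3.3.

```latex
% Since A^T = -A, transposing r' = A r gives (r^T)' = r^T A^T = -r^T A. Hence
r_i' = (r^T e_i)' = -r^T A e_i = -\sum_j A_{ji}\, r^T e_j = \sum_j A_{ij}\, r_j ,
% and, written out for i = 1, 2, 3:
\begin{aligned}
r_1' &= A_{12}\, r_2 + A_{13}\, r_3, \\
r_2' &= -A_{12}\, r_1 + A_{23}\, r_3, \\
r_3' &= -A_{13}\, r_1 - A_{23}\, r_2 .
\end{aligned}
```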

If \(\gamma \) is a curve lying in a surface and \(r_3\) is the normal to the surface along \(\gamma \), then \(A_{13}\) is the normal curvature, \(A_{12}\) the geodesic curvature and \(A_{23}\) the geodesic torsion of \(\gamma \). One can also regard \(r_A\) as a curve in SO(3), in which case A is the pull-back of the Maurer-Cartan form.

The standard bending-torsion theory of rods [5] corresponds to the case when A in (12) is allowed to take arbitrary values in \(\mathfrak {so}(3)\), i.e., all entries \(A_{12}\), \(A_{13}\) and \(A_{23}\) can be nonzero in (13).

The Frénet frame \(r_A\) corresponds to the case when \(A_{13}\equiv 0\) in (13); the relatively parallel frame in [6], which is also relevant in [16], corresponds to the case when \(A_{23}\equiv 0\); whereas in [10] the case \(A_{12}\equiv 0\) is relevant.

The cases when only one of the \(A_{ij}\) may differ from zero have obvious geometric interpretations:

- If \(A_{13} = A_{23} = 0\) everywhere or \(A_{12} = A_{23} = 0\) everywhere, then one director is constant and the curve \(t\mapsto \int _0^t r_A^Te_1\) lies in a plane.

  These cases are equivalent to planar framed curves, because one component of \(r_A\) is constant. Therefore, the results obtained later imply similar results for planar framed curves.

- If \(A_{12} = A_{13} = 0\), then the curve \(t\mapsto \int _0^t r_A^Te_1\) is a straight line and the frame spins around this line.
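The two special cases in the list above can be reproduced numerically. The following is a minimal sketch, not a method taken from the text: it assumes the frame equation in the form \(r' = Ar\), \(r(0) = I\), freezes A at the midpoint of each step, advances the frame with Rodrigues' formula for the exponential of a skew-symmetric matrix, and integrates \(\gamma ' = r^Te_1\) with the trapezoidal rule. All function names are ours.

```python
import math


def skew(a12, a13, a23):
    """The matrix a12 e1^e2 + a13 e1^e3 + a23 e2^e3 in so(3)."""
    return [[0.0, a12, a13], [-a12, 0.0, a23], [-a13, -a23, 0.0]]


def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def exp_skew(W):
    """Matrix exponential of a skew-symmetric 3x3 matrix (Rodrigues' formula)."""
    theta = math.sqrt(W[0][1] ** 2 + W[0][2] ** 2 + W[1][2] ** 2)
    if theta < 1e-12:
        s, c = 1.0, 0.5
    else:
        s = math.sin(theta) / theta
        c = (1.0 - math.cos(theta)) / theta ** 2
    W2 = matmul(W, W)
    return [[(1.0 if i == j else 0.0) + s * W[i][j] + c * W2[i][j]
             for j in range(3)] for i in range(3)]


def framed_curve_endpoint(curvatures, n_steps=2000):
    """Approximate (gamma(1), r(1)) for r' = A r, r(0) = I, gamma(0) = 0.

    `curvatures(t)` returns the triple (A12, A13, A23) at time t.
    """
    h = 1.0 / n_steps
    r = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    gamma = [0.0, 0.0, 0.0]
    for k in range(n_steps):
        a12, a13, a23 = curvatures((k + 0.5) * h)
        r_next = matmul(exp_skew(skew(h * a12, h * a13, h * a23)), r)
        # r^T e_1 is the first row of r; trapezoidal rule for gamma' = r^T e_1.
        gamma = [gamma[j] + 0.5 * h * (r[0][j] + r_next[0][j]) for j in range(3)]
        r = r_next
    return gamma, r


# Only A12 is nonzero: a planar curve, here a closed circle of length 1.
circle_end, circle_frame = framed_curve_endpoint(lambda t: (2.0 * math.pi, 0.0, 0.0))
# Only A23 is nonzero: a straight line with a frame spinning around it.
line_end, line_frame = framed_curve_endpoint(lambda t: (0.0, 0.0, 3.0))
print(circle_end, line_end)
```

The pair returned by `framed_curve_endpoint` is the boundary data at \(t = 1\) that the clamped boundary conditions prescribe. For the circle it coincides with the data at \(t = 0\), so this is a closed framed curve.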

As mentioned in the introduction, the boundary conditions we address here amount to clamped ends, i.e., boundary conditions prescribing \(\gamma (0)\), \(r_A(0)\) and \(\gamma (1)\), \(r_A(1)\). Theorem 3.2 below shows that, for a given generic framed curve \((\gamma , r)\) and any sequence \((\gamma _n, r_n)\) converging to \((\gamma , r)\) weakly in \(W^{2,2}\times W^{1,2}\), it is possible to perturb \((\gamma _n, r_n)\) in a well-controlled way such that each of the modified framed curves satisfies the same clamped boundary conditions as \((\gamma , r)\). By a well-controlled perturbation we mean, firstly, that it converges to zero as \(n\rightarrow \infty \); secondly, that it is supported on a small set; thirdly, that it preserves the structure of A. By the latter we simply mean that if \(A_{ij}\) vanishes identically then the same should remain true for the modified framed curve.

3.1 Main results

Let i, j, \(k\in \{1, 2, 3\}\) and set

In this definition i, j, k are not necessarily distinct, so that \(\mathfrak {A}\) can be one, two or three dimensional; let us exclude the trivial case \(\mathfrak {A}= \{0\}\). For a given choice of \(\mathfrak {A}\) we define the following subspace \(\widetilde{\mathfrak {A}}\) of \(\mathfrak {so}(3)\):

and the following subspace \(\Gamma \) of \(\mathbb {R}^3\):

as well as the following subgroup of SO(3):

where the symbol \(\times \) denotes the cross product in \(\mathbb {R}^3\).

From now on, the symbols \(\mathfrak {A}\), \(\widetilde{\mathfrak {A}}\), \(\Gamma \) and G will always be as just defined, i.e., \(\mathfrak {A}\) will be a space of the form (14) and \(\widetilde{\mathfrak {A}}\), \(\Gamma \) and G will denote the sets determined by this choice of \(\mathfrak {A}\).

These definitions and many of the results extend to higher dimensions and to other subspaces \(\mathfrak {A}\subset \mathfrak {so}(n)\) as well. The natural definition of \(\widetilde{\mathfrak {A}}\) is that it is the smallest Lie subalgebra of \(\mathfrak {so}(3)\) containing \(\mathfrak {A}\), and \(G = \exp (\widetilde{\mathfrak {A}})\).

The following technical definition is to be viewed in conjunction with Proposition 3.3 below. That proposition shows that, in most cases, degeneracy of A in the sense of Definition 3.1 in fact implies that \(A_{12}\) and \(A_{13}\) vanish identically, which essentially means that the curve \(t\mapsto \int _0^t r_A^T e_1\) is a straight line. A partial converse to Proposition 3.3 is also true, see Proposition 3.9. Summarizing, the following degeneracy condition turns out to be very restrictive, i.e., most \(A\in L^2(I, \mathfrak {A})\) are nondegenerate.

Definition 3.1

A map \(A\in L^2(I, \widetilde{\mathfrak {A}})\) is said to be degenerate with respect to \(\mathfrak {A}\) on a set \(J\subset I\) if there exist \(\lambda \in \Gamma \) and \(M\in \widetilde{\mathfrak {A}}\), not both zero, such that

In (15) we can also take \(\int _0^t r_A^Te_1\) instead of \(-\int _t^1 r_A^Te_1\). In fact, one can readily verify that \(\lambda \wedge \int _0^1 r_A^Te_1\in \widetilde{\mathfrak {A}}\) which can therefore be absorbed into M.

The following theorem is our main result regarding the deformation of framed curves while preserving prescribed boundary conditions.

Theorem 3.2

Let \(A\in L^2(I, \widetilde{\mathfrak {A}})\) and assume that there is a measurable set \(J\subset I\) of positive measure such that A is not degenerate with respect to \(\mathfrak {A}\) on J. Then every \(L^2\)-dense subspace of

contains a finite dimensional subspace E such that, whenever \(A_n\in L^2(I, \widetilde{\mathfrak {A}})\) converge weakly in \(L^2\) to A, then there exist \(\widehat{A}_n\in E\) converging to zero in E and such that

for all n.

Remarks.

- (i) This result should be viewed in conjunction with Proposition 3.3, which provides simple sufficient criteria for nondegeneracy.

- (ii) Equations (17) and (18) only refer to the boundary conditions at 1. The boundary conditions at 0 are determined by the initial values of the framed curves and are 0 (for the curve) resp. I (for the frame \(r_A\)). This entails no loss of generality, as it is always possible to arrange these conditions at 0 by means of a rigid motion.

- (iii) Observe that \(A_n\) and A are allowed to attain values in all of \(\widetilde{\mathfrak {A}}\), while \(\widehat{A}_n\) takes only values in \(\mathfrak {A}\). Clearly, if \(A_n\) takes values in \(\mathfrak {A}\), then so does \(A_n + \widehat{A}_n\).

- (iv) In the cases when \(\dim \mathfrak {A}= 1\), we have \(\widetilde{\mathfrak {A}} = \mathfrak {A}\) and therefore the hypotheses require that \(A_n\in \mathfrak {A}\) almost everywhere. This hypothesis cannot be dropped. In fact, if \(A_n\not \in \mathfrak {A}\) on a set of positive measure, then it is impossible to find \(\widehat{A}_n : I\rightarrow \mathfrak {A}\) such that (18) is satisfied.

  For instance, consider the case \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_2\wedge e_3\}\) and let \(\widetilde{A}\in L^2(I, \mathfrak {so}(3))\). Then we have \(\widetilde{A}\in \mathfrak {A}\) almost everywhere on I if, and only if, \(\left| \int _0^1 r_{\widetilde{A}}^Te_1\right| = 1\). In fact, both assertions are equivalent to \(t\mapsto \int _0^t r_{\widetilde{A}}^Te_1\) being a straight line.

  Therefore, if A takes values in \(\mathfrak {A}\) but \(A_n\not \in \mathfrak {A}\) on a set of positive measure, then there exist no \(\widehat{A}_n : I\rightarrow \mathfrak {A}\) which lead to (18), because \(A_n + \widehat{A}_n\not \in \mathfrak {A}\) on a set of positive measure.

- (v) By adapting the proofs, similar results can be derived for other boundary conditions. If, for instance, we were only interested in preserving the boundary conditions (17), then there would exist no degenerate A at all; this follows from the assertion, in Proposition 3.3 below, that \(\lambda \ne 0\) whenever A is degenerate.

- (vi) A variant of Theorem 3.2 in the particular case \(\mathfrak {A} = \{\widehat{A}\in \mathfrak {so}(3) : \widehat{A}_{23} = 0\}\) is used in [16]. The assertion for the case \(\mathfrak {A} = \{\widehat{A}\in \mathfrak {so}(3) : \widehat{A}_{12} = 0\}\) is relevant in ribbon models [10, 26]. Both in [16] and in Sect. 4.1 we choose J to be an interval and the dense subspace to consist of smooth functions. But we will also need to apply Theorem 3.2 for J which are not intervals.

The following result provides some natural sufficient criteria for nondegeneracy.

Proposition 3.3

Let \(A\in L^2(I, \widetilde{\mathfrak {A}})\), let \(J\subset I\) have positive measure and assume that A is degenerate with respect to \(\mathfrak {A}\) on J. Let M and \(\lambda \) be as in (15). Then \(\lambda \ne 0\) and the following are true:

- (i) If \(\dim \mathfrak {A}= 1\) then \(e_2\wedge e_3\not \in \mathfrak {A}\) and \(A = 0\) almost everywhere on J.

- (ii) If \(\dim \mathfrak {A}\ge 2\) and \(e_2\wedge e_3\in \mathfrak {A}\), then \(A_{12} = A_{13} = 0\) almost everywhere on J.

- (iii) If \( \mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_2, e_1\wedge e_3\}, \) and \(J\subset I\) is an interval and \(A\in \mathfrak {A}\) almost everywhere on J, then there exists a nonzero constant multiple \(\Lambda \) of \(\lambda \) such that, with \(r_i = r_A^Te_i\), we have

  $$\begin{aligned} r_1' = \Lambda \times r_1 \text{ on } J, \end{aligned}$$(19)

  and there is an \(a\in [0, 1]\) and a constant \(c_1\) such that

  $$\begin{aligned} \frac{\Lambda }{|\Lambda |}\cdot r_1&= a \nonumber \\ - A_{13}(t)&= \Lambda \cdot r_2(t) = |\Lambda |\sqrt{1 - a^2}\cdot \cos \left( a|\Lambda |t - c_1\right) \nonumber \\ A_{12}(t)&= \Lambda \cdot r_3(t) = |\Lambda |\sqrt{1 - a^2}\cdot \sin \left( a|\Lambda |t - c_1\right) \end{aligned}$$(20)

  for almost every \(t\in J\).

Remarks.

- (i) The conclusions of Proposition 3.3 are not exhaustive. We refer to Sect. 3.4 for details.

- (ii) The assertion \(e_2\wedge e_3\not \in \mathfrak {A}\) in Proposition 3.3 (i) means that in the case \( \mathfrak {A}= {{\,\mathrm{span}\,}}\{e_2\wedge e_3\} \) every \(A\in L^2(I, \mathfrak {A})\) is nondegenerate on any \(J\subset I\) of positive measure.

- (iii) Equation (19) asserts that the so-called Darboux vector \(\Lambda \) of \(r_1\) is constant. It amounts to the existence of a constant rotation \(R_{\Lambda }\in SO(3)\) taking \(e_1\) into \(\frac{\Lambda }{|\Lambda |}\) such that

  $$\begin{aligned} R_{\Lambda }^T r_1(t) = \begin{pmatrix} a \\ \sqrt{1 - a^2}\cos (|\Lambda |t) \\ \sqrt{1 - a^2}\sin (|\Lambda |t) \end{pmatrix}. \end{aligned}$$(21)

  Hence with \(t_0 = \inf J\) and setting \(\widetilde{\gamma }(t) = \int _{t_0}^t r_1\), we have

  $$\begin{aligned} R_{\Lambda }^T \widetilde{\gamma }(t) = \begin{pmatrix} a(t - t_0) \\ \frac{\sqrt{1 - a^2}}{|\Lambda |}\left( \sin (|\Lambda |t) - \sin (|\Lambda |t_0)\right) \\ -\frac{\sqrt{1 - a^2}}{|\Lambda |}\left( \cos (|\Lambda |t) - \cos (|\Lambda |t_0)\right) \end{pmatrix}, \end{aligned}$$

  which is a circular helix. Observe that the equations for \(\Lambda \cdot r_2\) and \(\Lambda \cdot r_3\) are in fact consequences of the equations for the \(A_{ij}\), up to additive constants.

- (iv) Summarizing, for most choices of \(\mathfrak {A}\) degeneracy implies that \((r_A^Te_1)' = 0\). The case \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_2, e_1\wedge e_3\}\) is an exception. Here \(r_A(1)\) cannot be changed without modifying \(r_A^T e_1\). Intuitively, if we wish to impose torsion on a narrow strip of paper, then the ends of the strip must be moved closer to each other, cf. Remark 3.8 below.
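The helix described in Remark (iii) can be checked numerically. The sketch below is illustrative only: it works in the rotated coordinates, i.e. it assumes \(R_{\Lambda } = I\) and \(\Lambda = |\Lambda | e_1\), the parameter values are arbitrary, and the function names are ours. It compares a central difference quotient of \(r_1\) from (21) with \(\Lambda \times r_1\), and it evaluates the distance between the end points of the helix.

```python
import math


def r1(t, a, lam):
    """Tangent from (21) in rotated coordinates (R_Lambda = I, Lambda = lam * e_1)."""
    s = math.sqrt(1.0 - a * a)
    return (a, s * math.cos(lam * t), s * math.sin(lam * t))


def helix(t, t0, a, lam):
    """Closed form of the integral of r1 from t0 to t."""
    s = math.sqrt(1.0 - a * a)
    return (a * (t - t0),
            s / lam * (math.sin(lam * t) - math.sin(lam * t0)),
            -s / lam * (math.cos(lam * t) - math.cos(lam * t0)))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def darboux_residual(t, a, lam, h=1e-5):
    """Max-norm of r1'(t) - Lambda x r1(t), with r1' from a central difference."""
    plus, minus = r1(t + h, a, lam), r1(t - h, a, lam)
    deriv = [(p - m) / (2.0 * h) for p, m in zip(plus, minus)]
    rhs = cross((lam, 0.0, 0.0), r1(t, a, lam))
    return max(abs(d - q) for d, q in zip(deriv, rhs))


def chord_length(t0, t1, a, lam):
    """Distance between the end points of the helix on [t0, t1]."""
    return math.sqrt(sum(c * c for c in helix(t1, t0, a, lam)))


print(darboux_residual(0.3, 0.5, 4.0))  # small: (19) holds up to discretization error
print(chord_length(0.0, 1.0, 0.5, 4.0))  # shorter than the arclength 1
print(chord_length(0.0, 1.0, 1.0, 4.0))  # a = 1 is the straight segment
```

For \(a < 1\) the end points are closer together than the arclength of the curve, while \(a = 1\) gives a straight segment.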

In the following sections we prove Theorem 3.2 and Proposition 3.3.

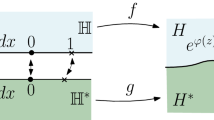

3.2 Boundary conditions as a level set

The boundary conditions (17), (18) can be regarded as a level set of a well-behaved functional \(\mathcal {G}\) on the space of connections A. In fact, introducing

$$\begin{aligned} \mathcal {G}(A) = \left( r_A(1), \int _0^1 r_A^Te_1\right) \in G\times \Gamma , \end{aligned}$$ (22)

the boundary conditions prescribe the value of \(\mathcal {G}(A)\).

In what follows, the letter \(\mathcal {G}\) will always refer to this map. The regularity of \(\mathcal {G}\) depends on how \(r_A\) depends on A. Therefore, the following results will be useful.

Lemma 3.4

Assume that \(A_n\rightharpoonup A\) weakly in \(L^2(I, \mathfrak {so}(3))\). Then

If \(A_n\rightarrow A\) strongly in \(L^2(I, \mathfrak {so}(3))\), then \(r_{A_n}\rightarrow r_A\) strongly in \(W^{1,2}(I, \mathbb {R}^{3\times 3})\).

Proof

We define the \(C^1\)-functions \(\Phi _n : I\rightarrow [0, \infty )\) by setting

Recall that

and that \(r_{A_n}(0) = r_A(0)\). Introducing

we can therefore estimate, for all \(t\in I\),

because \((A_n)\) is bounded in \(L^2(I)\). Since \(\Phi _n(0) = 0\), we deduce from Gronwall’s inequality that \( \Phi _n(1)\le C\int _0^1 \Psi _n. \) But \(\Psi _n\rightarrow 0\) pointwise on I, because \(A_n\rightharpoonup A\) weakly in \(L^2(I)\). Moreover, \(|\Psi _n|\le C\) on I because \((A_n)\) is bounded in \(L^2(I)\). Hence dominated convergence ensures that \(\Psi _n\rightarrow 0\) in \(L^1(I)\). Thus \( \Phi _n(1)\rightarrow 0 \) as \(n\rightarrow \infty \). This means that

On the other hand, we see from \(r_{A_n}' = A_n r_{A_n}\) and the fact that \(r_{A_n}\) takes values in SO(3), that the sequence \((r_{A_n}')\) is bounded in \(L^2(I)\). Combining this with (25) we deduce (23).

Finally, if \(A_n\rightarrow A\) strongly in \(L^2\), then \(A_nr_{A_n}\rightarrow Ar_A\) strongly in \(L^2\) because \(r_{A_n}\rightarrow r_A\) uniformly on I by (23). Therefore, \( r_{A_n}' \rightarrow r_A' \) strongly in \(L^2\). \(\square \)
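The following example, which is not part of the original argument, suggests that weak convergence of \((A_n)\) alone cannot yield strong convergence of \((r_{A_n})\) in \(W^{1,2}\). It assumes the normalisation \(r_A(0) = \mathrm{Id}\) and uses a fixed matrix \(K\in \mathfrak {so}(3)\setminus \{0\}\), both chosen purely for illustration. Let \(A_n(t) = \cos (2\pi n t)K\), so that \(A_n\rightharpoonup 0\) weakly in \(L^2\). Since all values of \(A_n\) commute, the solution of \(r_{A_n}' = A_nr_{A_n}\) is explicit:

$$\begin{aligned} r_{A_n}(t) = \exp \left( \theta _n(t)K\right) , \qquad \theta _n(t) = \int _0^t \cos (2\pi n s)\, ds = \frac{\sin (2\pi n t)}{2\pi n}. \end{aligned}$$

Hence \(r_{A_n}\rightarrow \mathrm{Id}\) uniformly on I, in agreement with Lemma 3.4, whereas, with \(|\cdot |\) denoting the Frobenius norm,

$$\begin{aligned} \int _0^1 |r_{A_n}'|^2 = |K|^2\int _0^1 \cos ^2(2\pi n t)\, dt = \frac{|K|^2}{2} \end{aligned}$$

does not tend to zero. So the convergence in \(W^{1,2}\) is only weak in this example.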

Lemma 3.5

Let \(t_0\in [0, 1]\). Then the map

is Fréchet differentiable with

Moreover, \(\vec {r}\) and its Fréchet derivative \(D\vec {r}\) are sequentially continuous with respect to weak convergence in \(L^2(I, \mathfrak {so}(3))\).

In particular, \(\mathcal {G}\) is Fréchet differentiable and, moreover, \(\mathcal {G}\) and \(D\mathcal {G}\) are sequentially continuous with respect to weak convergence in \(L^2\). We have

for all \(B\in L^2(I, \mathfrak {so}(3))\).

Proof

We may assume without loss of generality that \(t_0 = 1\). For A, \(B\in L^2(I, \mathfrak {so}(3))\) set

Then

is the derivative of \(\vec {r}\) at A in the direction B. In fact, let \(B\in L^2(I, \mathfrak {so}(3))\). Since

we see that

By Lemma 3.4 the second factor on the right-hand side converges to zero as \(B\rightarrow 0\) in \(L^2\). This proves the Fréchet differentiability and completes the proof of (28).

Now let \(A_n\rightharpoonup A\) weakly in \(L^2\). We claim that then \(D\vec {r}(A_n)\rightarrow D\vec {r}(A)\) in the operator norm on \(L(L^2, \mathbb {R}^{3\times 3})\). In fact, by (28),

From (27) we deduce that

Since \(|W_A(B)|\le \Vert B\Vert _{L^1(I)}\), we conclude that

So by Lemma 3.4 we have \(D\vec {r}(A_n)\rightarrow D\vec {r}(A)\) in the operator norm on \(L(L^1, \mathbb {R}^{3\times 3})\).

Finally, the second component of (26) follows by integration from the corresponding formulae for \(r_A\). \(\square \)
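In a commuting situation the derivative of \(A\mapsto r_A(1)\) can be computed by hand, which may serve as a sanity check. The scalar functions \(\alpha \), \(\beta \in L^2(I)\), the fixed matrix \(K\in \mathfrak {so}(3)\) and the normalisation \(r_A(0) = \mathrm{Id}\) are assumptions made only for this illustration. For \(A = \alpha K\) and \(B = \beta K\) we have

$$\begin{aligned} r_{A + B}(1) = \exp \left( \left( \int _0^1\alpha + \int _0^1\beta \right) K\right) = r_A(1) + \left( \int _0^1\beta \right) Kr_A(1) + O\left( \left( \int _0^1\beta \right) ^2\right) . \end{aligned}$$

Thus, within this one-parameter family, the derivative at A in the direction B is \(\left( \int _0^1\beta \right) Kr_A(1)\). It is controlled by \(\Vert B\Vert _{L^1(I)}\), and it depends on \(\alpha \) and \(\beta \) only through their integrals, which are continuous with respect to weak convergence in \(L^2(I)\).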

3.3 Surjectivity

The following observation motivates Definition 3.1. Notice that \((r_A(1)\widetilde{\mathfrak {A}})\times \Gamma \) is the tangent space of \(G\times \Gamma \) at \(\mathcal {G}(A)\).

Lemma 3.6

Let \(A\in L^2(I, \widetilde{\mathfrak {A}})\), let \(J\subset I\) have positive measure and assume that A is not degenerate with respect to \(\mathfrak {A}\) on J. Then \(D\mathcal {G}(A)\) maps any \(L^2\)-dense subspace of

onto \((r_A(1)\widetilde{\mathfrak {A}})\times \Gamma \).

Proof

As usual, we write r instead of \(r_A\). Lemma 3.5 implies that \(\mathcal {G}\) is Fréchet differentiable with derivative given by (26). If the restriction of \(D\mathcal {G}(A)\) to a dense subspace of (29) does not map onto \(r(1)\widetilde{\mathfrak {A}}\times \Gamma \), then there exist \(M\in \widetilde{\mathfrak {A}}\) and \(\lambda \in \Gamma \), not both zero, such that, setting \(\gamma (t) = -\int ^1_t r^Te_1\), we have

for all \(\widehat{A}\) in a dense subspace of (29). Thus on J the matrix-valued map \( r(M + \lambda \otimes \gamma )r^T \) takes values in the orthogonal complement of \(\mathfrak {A}\subset \mathbb {R}^{3\times 3}\). This implies (15) because \(r^T\mathfrak {A}^{\perp }r = \left( r^T\mathfrak {A}r\right) ^{\perp }\). \(\square \)
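The identity used in the last step can be checked directly. Here we assume that \(\perp \) refers to the Frobenius inner product \(X : Y = \mathrm{tr}(X^TY)\) on \(\mathbb {R}^{3\times 3}\). For \(X\in \mathfrak {A}^{\perp }\), \(Y\in \mathfrak {A}\) and \(r\in SO(3)\),

$$\begin{aligned} (r^TXr) : (r^TYr) = \mathrm{tr}\left( r^TX^Tr\, r^TYr\right) = \mathrm{tr}\left( r^TX^TYr\right) = \mathrm{tr}\left( X^TY\right) = 0, \end{aligned}$$

so \(r^T\mathfrak {A}^{\perp }r\subset \left( r^T\mathfrak {A}r\right) ^{\perp }\). Equality follows because conjugation by r is a linear isomorphism of \(\mathbb {R}^{3\times 3}\), so both spaces have the same dimension \(9 - \dim \mathfrak {A}\).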

Combining Lemma 3.6 with Theorem 2.1 we can prove Theorem 3.2:

Proof of Theorem 3.2

Let \(\widetilde{E}\) be a dense subspace of (16). According to Lemma 3.6 the hypotheses of Theorem 2.1 are satisfied with \(V = H = L^2(I, \widetilde{\mathfrak {A}})\), \(\mathcal {M}= G\times \Gamma \) and \(\mathcal {P}= \mathcal {G}\). Hence the assertions follow from Theorem 2.1, taking into account Remark (ii) following its statement. \(\square \)

Another consequence of Lemma 3.6 is the following result about the relative openness of the set \(\mathcal {G}(L^2(I, \mathfrak {A}))\).

Corollary 3.7

Let \(A\in L^2(I, \mathfrak {A})\) and let \(J\subset I\) have positive measure. Assume that A is not degenerate with respect to \(\mathfrak {A}\) on J. Then there exists a nonempty open neighbourhood \(W\subset G\times \Gamma \) of \(\mathcal {G}(A)\) such that for all \((\overline{r}, \overline{\gamma })\in W\) there is some \(\widetilde{A}\in L^2(I, \mathfrak {A})\) with \(\mathcal {G}(\widetilde{A}) = (\overline{r}, \overline{\gamma })\).

Proof

Let \(\widetilde{E}\) be an \(L^2\)-dense subspace of (29). Combining Lemma 3.6 with Corollary 2.4, we see that there exists a finite dimensional subspace \(E\subset \widetilde{E}\) and an open neighbourhood \(\widehat{E}\subset E\) of the origin such that \(\mathcal {G}\) maps \(A + \widehat{E}\) onto a relatively open neighbourhood \(W\subset G\times \Gamma \) of \(\mathcal {G}(A)\). \(\square \)

The following consequence of Corollary 3.7 asserts that the maximal distance \(\left| \int _0^1 r_A^Te_1\right| \) of the endpoints of the curve for a prescribed value of \(r_A(1)\) can be attained only when A is degenerate.

Remark 3.8

Let \(\widetilde{E}\) be an \(L^2\)-dense subspace of \(L^2(I, \mathfrak {A})\) and assume that \(A\in L^2(I, \mathfrak {A})\) maximizes the functional \(L^2(I, \mathfrak {A})\rightarrow \mathbb {R}\) given by

among all \(\widetilde{A}\in A + \widetilde{E}\) with \(r_{\widetilde{A}}(1) = r_A(1)\). Then A is degenerate with respect to \(\mathfrak {A}\) on I.

Proof

If A is not degenerate with respect to \(\mathfrak {A}\) on I, then by (the proof of) Corollary 3.7 there exists a finite dimensional subspace E of \(\widetilde{E}\) and a neighbourhood \(\widehat{E}\subset E\) of the origin such that \(\mathcal {G}(A + \widehat{E})\) contains the set \( \{r_A(1)\}\times W, \) where W is some neighbourhood of \(\int _0^1 r_A^Te_1\) in \(\Gamma \). Hence A cannot be a maximizer. \(\square \)

For most choices of \(\mathfrak {A}\) and for global maximizers, Remark 3.8 and its converse are immediate consequences of Proposition 3.3 and Proposition 3.9 below, because these show that \(r_A^Te_1\) is constant if and only if A is degenerate.
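For orientation, the global bound behind this statement is elementary. Since \(r_A^Te_1\) takes values in \(\mathbb {S}^2\),

$$\begin{aligned} \left| \int _0^1 r_A^Te_1\right| \le \int _0^1 \left| r_A^Te_1\right| = 1, \end{aligned}$$

with equality if and only if \(r_A^Te_1\) agrees almost everywhere with a constant unit vector. In other words, the endpoints are at maximal distance exactly when the center line is straight, for instance for \(A = 0\).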

3.4 Degeneracy

Proof of Proposition 3.3

We write r instead of \(r_A\) and \(r_i = r^Te_i\). We set \(\gamma (t) = -\int _t^1 r_1\).

We begin with the proof of part (ii). Let \((j, k) = (2, 3)\) or \((j, k) = (3, 2)\). From the definition of \(\mathfrak {A}\) and (15) we deduce that there exist \(M\in \mathfrak {so}(3)\) and \(\lambda \in \mathbb {R}^3\), not both zero, such that

Denote by \(f\in W^{1,2}(I)\) the coefficient of the projection of \(M + \lambda \wedge \gamma \) onto \(r_1\wedge r_j\). Then

Taking derivatives in (30) we find

We conclude that \(f A_{1k} = 0\). Hence

Therefore also \(f' = 0\) on this set. Hence

Taking the derivative we find

where, as usual, we sum over the repeated index m. Since \(\lambda \wedge r_1 = 0\) on this set, if \(\lambda \ne 0\) we conclude in particular \(A_{1k} = 0\) almost everywhere on \(J\cap \{ A_{1k}\ne 0\}\). Thus we have shown:

But if \(\lambda = 0\), then we would deduce \(M = 0\) by combining (32) with (30).

Let us prove part (iii). With f defined in analogy to part (ii), there exist \(M\in \mathfrak {so}(3)\) and \(\lambda \in \mathbb {R}^3\), not both zero, such that

If \(f = 0\) almost everywhere on J, then \(\lambda \ne 0\) (since otherwise \(M = 0\)) and \(\lambda \wedge r_1 = 0\) on J. This implies that \(A_{12} = A_{13} = 0\) almost everywhere on J, which is the conclusion with \(a = 1\).

So it remains to consider the case when f does not vanish identically. Taking the derivative in (35), we deduce

Hence \(f' = 0\) on J (hence f is a nonzero constant on J) and

on J. Since f is a nonzero constant on J, the vector \(\Lambda = \frac{\lambda }{f}\) is nonzero and constant, and (37) is satisfied with \(f = 1\) and \(\Lambda \) instead of \(\lambda \). If \(\lambda = 0\) then \(A_{12} = A_{13} = 0\) by (37) on J, which as we saw above is the conclusion.

Let us therefore assume that \(\lambda \ne 0\), so \(\Lambda \ne 0\). Returning to (36), we see that

where \(\times \) denotes the cross product in \(\mathbb {R}^3\). We conclude that \(r_1\) satisfies the equation (19) for a precession about the axis \(\Lambda \). Formula (21) follows by applying first a rotation taking \(e_1\) into \(\Lambda /|\Lambda |\) and afterwards possibly rotating about \(\Lambda \).

Equation (19) implies that

is constant on J, and after possibly reflecting \(\Lambda \) we may assume that \(a\ge 0\).

Projecting (38) onto \(r_2\) and \(r_3\) we recover (37). Using (37) we find

This implies that there are (say, positive) constants \(c_1\), \(c_2\in \mathbb {R}\) such that

Finally, notice that \(|\Lambda |^2 = \sum _i (\Lambda \cdot r_i)^2 = |\Lambda |^2a^2 + c_2^2\), so that \(c_2 = |\Lambda |\sqrt{1 - a^2}\).

Let us prove part (i). First let \((j, k) = (2, 3)\) or \((j, k) = (3, 2)\) and \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_k\}\).

Recall that in this case \(r_j\) is constant and \(\lambda \perp r_j\) and \(M\in \mathfrak {A}= {{\,\mathrm{span}\,}}\{r_1\wedge r_k\}\). For suitable \(f_1\), \(f_2\in W^{1,2}(I)\) we have

This equation shows that \(M = 0\) if \(\lambda = 0\). Hence \(\lambda \ne 0\). Taking derivatives, we find

because \(r_j\) is constant. Thus \(\lambda \cdot r_k = 0\) on J and therefore \(r_1\) is parallel to \(\lambda \) on J. Moreover, taking derivatives we find \(A_{1k}\lambda \cdot r_1 = 0\) on J. Since \(\lambda \ne 0\) we conclude that \(A_{1k} = 0\) on J.

Let us now address the case \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_2\wedge e_3\}\). Then \(r_1\) is constant and so \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{r_2\wedge r_3\}\). Since in this case degeneracy implies \(\lambda = 0\), we must have \(M\ne 0\). Since by degeneracy \(M\in \{r_2\wedge r_3\}^{\perp }\), we obtain a contradiction (to our assumption that J has positive measure). \(\square \)

From the proof we can in fact draw more conclusions than those stated in Proposition 3.3 (with notation as in its proof):

For \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_k\}\) in fact we have shown that \(r_1\parallel \lambda \) on J. Recalling that \(\gamma \cdot r_1\times r_k = 0\) we can insert this into (40) to conclude that \(M + \lambda \wedge \gamma = 0\) on J; in particular, \(\lambda \cdot r_k\) is constant and \(\gamma - \gamma (t_0)\) is parallel to \(\lambda \) on J, for any \(t_0\in J\). Similarly, in the case \(\mathfrak {A}= \mathfrak {so}(3)\) we can use (30) (which for \(\mathfrak {A}= \mathfrak {so}(3)\) holds with \(f = 0\)) to deduce that \(r_1\parallel \lambda \) on J and, from (30), that \(\gamma - \gamma (t_0)\) is parallel to \(\lambda \) on J for any \(t_0\in J\).

In the case \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_k, e_2\wedge e_3\}\) we can use \(A_{1k} = 0\) in (31) to obtain the system

almost everywhere on J. So for instance we see that \(A_{jk}\) is not arbitrary; in particular, it agrees with a continuous function on the relatively open set \(J\cap \{f\ne 0\}\).

In view of these remarks only a partial converse to Proposition 3.3 can be expected. In particular, the last observation shows that in Proposition 3.9 below we cannot allow A to take arbitrary values in \(\mathfrak {so}(3)\) in the cases when \(\dim \mathfrak {A}= 2\).

Proposition 3.9

Let \(J\subset I\) be an interval, let \(A\in L^2(I, \mathfrak {A})\) and assume that one of the following conditions is true:

(i) \(\dim \mathfrak {A}\ge 2\), \(e_2\wedge e_3\in \mathfrak {A}\) and \(A_{12} = A_{13} = 0\) almost everywhere on J.

(ii) \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_2, e_1\wedge e_3\}\) and there is a constant vector \(\Lambda \in \mathbb {R}^3\) such that (19) is satisfied almost everywhere on J.

(iii) \(k\in \{2, 3\}\) and \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_k\}\) and \(A = 0\) almost everywhere on J.

Then A is degenerate with respect to \(\mathfrak {A}\) on J.

Proof

We use the same notation as in the proof of Proposition 3.3. Set \(t_0 = \inf J\).

We begin with part (i). Since we assumed that A takes values in \(\mathfrak {A}\), we have \(A_{12} = A_{13} = 0\) on J. Since J is an interval, this implies that \(r_1\) is constant on J. We set \(\lambda = r_1(t_0)\). Then

Hence there is an \(M\in \mathfrak {so}(3)\) such that \(M + \lambda \wedge \gamma = 0\) on J.

In case (ii) notice that (19) implies that \( \Lambda \wedge r_1 = (r_2\wedge r_3)'. \) Hence there is an \(M\in \mathfrak {so}(3)\) such that \(M + \Lambda \wedge \gamma = r_2\wedge r_3\) on J; in particular, we cannot have \(M = 0\) and \(\Lambda = 0\) because the right-hand side is not zero. Thus A is degenerate.

In case (iii) notice that r is constant on J and therefore all \(r_i\wedge r_j\) are constant. Setting \(\lambda = r_1(t_0)\) we have \(\lambda \in \Gamma \). Moreover, \(\lambda \wedge r_1 = 0\) on J and therefore by integration \(\lambda \wedge (\gamma - \gamma (t_0)) = 0\) on J. As in Remark (i) to Definition 3.1 we see that \(\lambda \wedge \gamma (t_0)\in \mathfrak {A}\). \(\square \)
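The construction in part (i) can be made explicit as follows. This is a sketch using only \(\gamma ' = r_1\), which holds for \(\gamma (t) = -\int _t^1 r_1\). If \(r_1 = \lambda \) is constant on the interval J, then for \(t\in J\)

$$\begin{aligned} \gamma (t) - \gamma (t_0) = \int _{t_0}^t r_1 = (t - t_0)\lambda , \qquad \lambda \wedge \gamma (t) = \lambda \wedge \gamma (t_0) + (t - t_0)\, \lambda \wedge \lambda = \lambda \wedge \gamma (t_0). \end{aligned}$$

Hence the constant matrix \(M = -\lambda \wedge \gamma (t_0)\) gives \(M + \lambda \wedge \gamma = 0\) on J.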

3.5 Euler–Lagrange equations

In this section we derive Euler–Lagrange equations for minimizers of functionals on \(L^2(I, \mathfrak {A})\) under the boundary conditions considered in earlier sections.

In order to define a suitable notion of minimizers, let \(\mathfrak {A}\) be as in Definition 3.1. We will say that A is a minimizer of some functional \(\mathcal {F}: L^2(I, \mathfrak {A})\rightarrow (-\infty , \infty ]\) under its own boundary conditions on \((t_0, t_1)\subset I\) if \(\mathcal {F}(A)\le \mathcal {F}(\widetilde{A})\) for all \(\widetilde{A}\in L^2(I, \mathfrak {A})\) satisfying \(\widetilde{A} = A\) on \(I\setminus (t_0, t_1)\) as well as

The main result of this section is as follows.

Proposition 3.10

Let \(Q\in C^1(\mathfrak {A})\) be a nonnegative function satisfying

and define \(\mathcal {F}: L^2(I, \mathfrak {A})\rightarrow [0, \infty ]\) by

Let \(A\in L^2(I, \mathfrak {A})\) be such that \(\mathcal {F}(A) < \infty \). Assume, moreover, that there is a nonempty open interval \(J\subset I\) such that A is a nondegenerate minimizer of \(\mathcal {F}\) under its own boundary conditions on J. Then \(\nabla Q(A)\in W^{1,2}(J, \mathfrak {A})\) and there exist \(M\in \widetilde{\mathfrak {A}}\) and \(\lambda \in \Gamma \) such that

Remarks.

(i) Proposition 3.10 is a Lagrange multiplier rule. The rule fails when A is degenerate. However, we understand this situation well due to Proposition 3.3.

(ii) Let

$$\begin{aligned} \mathcal {I}_{\mathfrak {A}} = \{(i, j)\in \{1, 2, 3\}^2 : i < j \text{ and } e_i\wedge e_j\in \mathfrak {A}\} \end{aligned}$$

and let f be the function defined by

$$\begin{aligned} f(A_{12}, A_{13}, A_{23}) = Q\left( \sum _{(i, j)\in \mathcal {I}_{\mathfrak {A}}} A_{ij} e_i\wedge e_j \right) . \end{aligned}$$

We will abuse notation by writing f(A) instead of \(f(A_{12}, A_{13}, A_{23})\). Writing \(r_i = r_A^T e_i\) and \(\gamma (t) = \int _t^1 r_1\), the conclusion of Proposition 3.10 can be stated as follows:

There exist \(M\in \widetilde{\mathfrak {A}}\), \(\lambda \in \Gamma \) and \(H_{ij}\in W^{1,2}(I)\) such that on J we have

$$\begin{aligned} M + \lambda \wedge \gamma = -\sum _{(i, j)\in \mathcal {I}_{\mathfrak {A}}} \frac{\partial f}{\partial A_{ij}}(A)r_i\wedge r_j + \sum _{(i, j)\in \mathcal {I}_{\widetilde{\mathfrak {A}}}\setminus \mathcal {I}_{\mathfrak {A}}} H_{ij}r_i\wedge r_j. \end{aligned}$$ (43)

In fact, (42) implies that

$$\begin{aligned} \sum _{\mathcal {I}_{\mathfrak {A}}} \frac{\partial f}{\partial A_{ij}}(A) r_i\wedge r_j + M + \lambda \wedge \gamma \perp r_k\wedge r_{\ell } \text{ for } \text{ all } (k, \ell )\in \mathcal {I}_{\mathfrak {A}} \end{aligned}$$ (44)

on J; notice that we can absorb any nonzero constant factor into M and \(\lambda \). Since \(M + \lambda \wedge \gamma \) takes values in \(\widetilde{\mathfrak {A}}\), there exist coefficients \(H_{ij}\in W^{1,2}(I)\) such that

$$\begin{aligned} M + \lambda \wedge \gamma = \sum _{\mathcal {I}_{\widetilde{\mathfrak {A}}}} H_{ij}r_i\wedge r_j. \end{aligned}$$ (45)

Equation (44) can be restated as

$$\begin{aligned} \frac{\partial f}{\partial A_{ij}}(A) = - H_{ij} \text{ for } \text{ all } (i, j)\in \mathcal {I}_{\mathfrak {A}}. \end{aligned}$$ (46)

In particular \(\frac{\partial f}{\partial A_{ij}}(A)\in W^{1,2}(I)\), hence \(\nabla Q(A)\in W^{1,2}(I)\), and equation (43) also follows at once.
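As an illustration, consider the quadratic model case \(\mathfrak {A}= \mathfrak {so}(3)\) with \(f(A) = \frac{1}{2}\left( A_{12}^2 + A_{13}^2 + A_{23}^2\right) \). This particular Q is chosen here only as an example and is assumed to satisfy the growth condition (41). Then \(\mathcal {I}_{\mathfrak {A}}\) contains all pairs \(i < j\), the second sum in (43) is empty, and \(\frac{\partial f}{\partial A_{ij}}(A) = A_{ij}\). Equations (43) and (46) reduce to

$$\begin{aligned} M + \lambda \wedge \gamma = -\left( A_{12}\, r_1\wedge r_2 + A_{13}\, r_1\wedge r_3 + A_{23}\, r_2\wedge r_3\right) , \qquad A_{ij} = -H_{ij}. \end{aligned}$$

In particular, each \(A_{ij}\) then inherits the \(W^{1,2}\)-regularity of \(H_{ij}\).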

Proof of Proposition 3.10

We may assume without loss of generality that \(J = I\). Let \(A\in L^2(I, \mathfrak {A})\) be such that \(\mathcal {F}(A) < \infty \). For every \(\widehat{A}\in L^{\infty }(I, \mathfrak {A})\) we have \(\mathcal {F}(A + \widehat{A}) < \infty \), due to the growth condition on \(\nabla Q\). Moreover, since \(Q\in C^1\), for each \(R > 0\) the expression

converges to zero as \(\delta \downarrow 0\). For every \(\widehat{A}\in L^{\infty }(I, \mathfrak {A})\) with \(\Vert \widehat{A}\Vert _{L^{\infty }} \le \delta \le 1\) and every \(R > 0\) we have

due to (41). Choosing R large enough and then \(\delta \) small enough, the first factor on the right-hand side can be made arbitrarily small.

Hence the function \(\widetilde{\mathcal {F}} : L^{\infty }(I, \mathfrak {A})\rightarrow \mathbb {R}\) defined by \( \widetilde{\mathcal {F}}(\widehat{A}) = \mathcal {F}(A + \widehat{A}) \) is Fréchet differentiable at 0, with

Define \(\widetilde{\mathcal {G}} : L^{\infty }(I, \mathfrak {A})\rightarrow G\times \Gamma \) by setting \( \widetilde{\mathcal {G}}(\widehat{A}) = \mathcal {G}(A + \widehat{A}). \) Then \(\widetilde{\mathcal {F}}\) and \(\mathcal {P}= \widetilde{\mathcal {G}}\) satisfy the hypotheses of Corollary 2.5, with \(H = L^{\infty }(I, \mathfrak {A})\) and V some neighbourhood of \(x = 0\) in H. The surjectivity of \(D\widetilde{\mathcal {G}}(0)\) follows from Lemma 3.6.

We conclude that there exists a unique \(\Lambda \) in the tangent space to \(G\times \Gamma \) at \((r_A(1), \int _0^1r_A^Te_1)\) such that \(D\widetilde{\mathcal {F}}(0) + \Lambda \cdot D\widetilde{\mathcal {G}}(0) = 0\). By (47) and since \(D\widetilde{\mathcal {G}}(0) = D\mathcal {G}(A)\), this amounts to

The existence of M and \(\lambda \) satisfying (42) now follows from (48) as in the proof of Lemma 3.6. \(\square \)

4 Applications

4.1 Smooth approximation of framed curves with clamped ends

In this section we construct smooth approximations for framed curves which preserve clamped boundary conditions. Results of this kind can be useful in proving the convergence of numerical schemes, cf. [4]. We will prove the following result.

Theorem 4.1

Let \(\mathfrak {A}\) be as in Definition 3.1. Assume that \(Q : \mathfrak {A}\rightarrow [0, \infty )\) is continuous and define \( \mathcal {F}_Q : L^2(I, \mathfrak {A})\rightarrow [0, \infty ] \) by

Then for all \(A\in L^2(I, \mathfrak {A})\) with \(\mathcal {F}_Q(A) < \infty \) there exist \(A_n\in C^{\infty }(\overline{I}, \mathfrak {A})\) such that \(A_n\rightarrow A\) strongly in \(L^2(I, \mathbb {R}^{3\times 3})\) and \(\mathcal {F}_Q(A_n)\rightarrow \mathcal {F}_Q(A)\) and, moreover,

Remarks.

(i) According to Lemma 3.4, strong convergence of \((A_n)\) in \(L^2\) implies strong convergence of \((r_{A_n})\) in \(W^{1,2}\).

(ii) The case \(\mathfrak {A}= \mathfrak {so}(2)\) is implicit in the degenerate cases when \(\dim \mathfrak {A}= 1\).

In [10] a model for ribbons was obtained which generalises the model from [25]. Both models are governed by an energy functional of the form considered in Theorem 4.1, with \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_3, e_2\wedge e_3\}\). Theorem 4.1 therefore is a density result for ribbons with prescribed boundary conditions.

In [16] framed curves with \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_2, e_1\wedge e_3\}\) were used to describe developable surfaces and a smooth approximation result was obtained using similar ideas. However, the boundary conditions considered there were different and the length of the curves was not fixed.

Lemma 4.2

Let L be an index set and let \(\{J_i\}_{i\in L}\) be a family of open subintervals of I such that \(I = \bigcup _{i\in L}J_i\). Let \(A\in L^2(I, \mathfrak {A})\) and assume that A is degenerate with respect to \(\mathfrak {A}\) on \(J_i\), for each \(i\in L\). Then A is degenerate with respect to \(\mathfrak {A}\) on I.

Proof

As usual, we write \(r_i = r_A^Te_i\). Since the \(J_i\) cover I, the claim follows if we show that whenever \(J_1\), \(J_2\in \{J_i\}_{i\in L}\) are such that \(J = J_1\cap J_2\) is nonempty, then A is degenerate on \(J_1\cup J_2\).

Let us first consider the case when for a \(k\in \{2,3\}\) we have \(e_1\wedge e_k\), \(e_2\wedge e_3\in \mathfrak {A}\). Then Proposition 3.3 implies that \(A_{12} = A_{13} = 0\) on \(J_1\cup J_2\). Proposition 3.9 then implies that A is degenerate with respect to \(\mathfrak {A}\) on the interval \(J_1\cup J_2\).

Now let us consider the case when \(\mathfrak {A}\) is as in Proposition 3.3 (iii). By definition of \(\mathfrak {A}\) we have \(A_{23} = 0\) on \(J_1\cup J_2\). By Proposition 3.3 (iii) there exist \(\Lambda ^{(1)}\), \(\Lambda ^{(2)}\in \mathbb {R}^3\setminus \{0\}\) such that \(r_1' = \Lambda ^{(i)}\times r_1\) on \(J_i\). If \(\Lambda ^{(1)} = \Lambda ^{(2)}\) then Proposition 3.9 implies that A is degenerate with respect to \(\mathfrak {A}\) on \(J_1\cup J_2\). If \(\Lambda ^{(1)}\ne \Lambda ^{(2)}\) then \((\Lambda ^{(1)} - \Lambda ^{(2)})\times r_1 = 0\) on J implies that \(r_1\) is constant on J. Since J is a nontrivial subinterval of \(J_1\) and of \(J_2\) and since (20) applies on each of them, we conclude that \(r_1\) is constant on \(J_1\cup J_2\). Hence A is degenerate with respect to \(\mathfrak {A}\) on \(J_1\cup J_2\), by Proposition 3.9.

Finally, if \(\dim \mathfrak {A}= 1\) then Proposition 3.3 implies that \(e_2\wedge e_3\not \in \mathfrak {A}\) and \(A = 0\) on \(J_1\cup J_2\). And then Proposition 3.9 implies that A is degenerate with respect to \(\mathfrak {A}\) on \(J_1\cup J_2\). \(\square \)

Lemma 4.3

Let \(J_0\subset J_1\subset J_2\subset \cdots \) be measurable subsets of I with \(I = \bigcup _{n\in \mathbb {N}} J_n\). Let \(A\in L^2(I, \mathfrak {A})\) be degenerate with respect to \(\mathfrak {A}\) on \(J_n\), for each \(n\in \mathbb {N}\). Then A is degenerate with respect to \(\mathfrak {A}\) on I.

Proof

Set \(r = r_A\) and define \(\gamma (t) = -\int _t^1r^Te_1\). We may assume that each \(J_n\) has positive measure. As usual, Proposition 3.3 shows that \(\mathfrak {A}\ne {{\,\mathrm{span}\,}}\{e_2\wedge e_3\}\).

Since A is degenerate with respect to \(\mathfrak {A}\) on each \(J_n\), for each n there exist \(M_n\in \widetilde{\mathfrak {A}}\) and \(\lambda _n\in \Gamma \), not both zero, such that the map \(F_n : I\rightarrow \mathfrak {so}(3)\) given by \( F_n = M_n + \lambda _n\wedge \gamma \) satisfies

According to Proposition 3.3 we have \(\lambda _n\ne 0\), so after dividing \(M_n\) and \(\lambda _n\) by \(|\lambda _n|\) we may assume that \(\lambda _n\in \mathbb {S}^2\). Hence there exists \(\lambda \in \mathbb {S}^2\cap \Gamma \) such that, after passing to a subsequence (not relabelled), we have \(\lambda _n\rightarrow \lambda \) as \(n\rightarrow \infty \).

Let us first consider the case when \((M_n)\) has a bounded subsequence. After possibly taking a further subsequence we may assume that there exists \(M\in \widetilde{\mathfrak {A}}\) such that \(M_n\rightarrow M\) as \(n\rightarrow \infty \).

Define \(F = M + \lambda \wedge \gamma \). Then \(F_n\rightarrow F\) uniformly on I. Hence (49) implies that \(F\in \left( r^T\mathfrak {A}r\right) ^{\perp }\) almost everywhere on I. Since \(\lambda \in \Gamma \setminus \{0\}\) and \(M\in \widetilde{\mathfrak {A}}\), this means that A is degenerate with respect to \(\mathfrak {A}\) on all of I.

It remains to consider the case when \((M_n)\) does not admit a bounded subsequence. Then we may assume that \(M_n\ne 0\) and we define

Then \(F_n\) satisfies (49) and there exists an \(M\in \widetilde{\mathfrak {A}}\) such that, after passing to a subsequence,

Since \(\lambda _n\in \mathbb {S}^2\) and \(\gamma \) is bounded, we conclude that \(F_n \rightarrow M\) uniformly. As before, we conclude that A is degenerate with respect to \(\mathfrak {A}\) on all of I. (As \(\lambda = 0\), Proposition 3.3 leads to a contradiction, showing that in fact \((M_n)\) always admits a bounded subsequence.) \(\square \)

Proof of Theorem 4.1

Let \(A\in L^2(I, \mathfrak {A})\). We may assume without loss of generality that \(\mathfrak {A}\) is the smallest among the spaces allowed on the right-hand side of (14) with the property that \(A\in \mathfrak {A}\) almost everywhere on I.

Let us first consider the case when A is not degenerate with respect to \(\mathfrak {A}\) on all of I.

Step 1 (Truncation). Set \(J_n = \{|A|\le n\}\). Since A is not degenerate on all of I, Lemma 4.3 implies that there exists an \(N\in \mathbb {N}\) such that A is not degenerate with respect to \(\mathfrak {A}\) on

we may also assume that J has positive measure. Denote by \(\widetilde{E}_0\) the space of measurable functions \(I\rightarrow \mathfrak {A}\) supported on J and attaining only finitely many values.

For all \(m\in \mathbb {N}\) and all \(t\in I\) define

By Theorem 3.2 there is a finite dimensional subspace \(E_0\) of \(\widetilde{E}_0\) and there are \(\widehat{A}_m\in E_0\) converging to zero uniformly as \(m\uparrow \infty \) and such that \(A_m = \widetilde{A}_m + \widehat{A}_m\) satisfies \(\mathcal {G}(A_m) = \mathcal {G}(A)\) for all m. Clearly \(A_m\rightarrow A\) strongly in \(L^2(I)\). After taking a subsequence, we may also assume that \(A_m\) is not degenerate with respect to \(\mathfrak {A}\) on I for any m. Moreover, for all \(m\ge N\) we have \(\widetilde{A}_m = A\) on J and therefore

As \(m\rightarrow \infty \) the first term converges to zero because the measure of \(\{|A| > m\}\) converges to zero and \(Q(A)\in L^1(I)\) because \(\mathcal {F}_Q(A) < \infty \). The second term converges to zero because Q is uniformly continuous on the ball of radius 2N around the origin and \(\widehat{A}_m\rightarrow 0\) uniformly. Thus \(Q(A + \widehat{A}_m) \rightarrow Q(A)\) uniformly on J.

Step 2 (Mollification). In view of Step 1, we may assume from the outset and without loss of generality that \(A\in L^{\infty }(I, \mathfrak {A})\). Let \(\widetilde{A}_n\in C^{\infty }(\overline{I}, \mathfrak {A})\) be such that \(|\widetilde{A}_n|\le 2\Vert A\Vert _{L^{\infty }(I)}\) on I and such that \(\widetilde{A}_n\rightarrow A\) strongly in all \(L^p(I)\) with \(p\ge 2\).

Let \(r > 0\) be small. By Lemma 4.2 there exists an open interval \(J\subset I\) of length r such that A is not degenerate with respect to \(\mathfrak {A}\) on J. The space \(\widetilde{E}_1 = C_0^{\infty }(J, \mathfrak {A})\) is \(L^2\)-dense in (16). Hence by Theorem 3.2 there exist \(\widehat{A}_n\) belonging to a finite dimensional subspace \(E_1\) of \(\widetilde{E}_1\) such that \(\widehat{A}_n\rightarrow 0\) uniformly and such that \(A_n = \widetilde{A}_n + \widehat{A}_n\) satisfies \(\mathcal {G}(A_n) = \mathcal {G}(A)\) for all n. Clearly \(A_n\rightarrow A\) strongly in \(L^2(I)\). Since Q is locally bounded, \(Q(A_n)\) is dominated by a constant and therefore \(\int _I Q(A_n)\rightarrow \int _I Q(A)\) by dominated convergence.
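The domination used at the end of Step 2 can be spelled out as follows. The constants R and \(C_R\) are introduced here only for this purpose. By construction \(\sup _I|A_n|\le 2\Vert A\Vert _{L^{\infty }(I)} + \Vert \widehat{A}_n\Vert _{L^{\infty }(I)}\), so for n large we have on I

$$\begin{aligned} |A_n|\le R := 2\Vert A\Vert _{L^{\infty }(I)} + 1, \qquad 0\le Q(A_n)\le C_R := \max \{Q(X) : X\in \mathfrak {A},\ |X|\le R\}. \end{aligned}$$

Along any subsequence with \(A_n\rightarrow A\) almost everywhere, continuity of Q gives \(Q(A_n)\rightarrow Q(A)\) almost everywhere, and dominated convergence applies with the constant majorant \(C_R\). Since every subsequence of \((A_n)\) has a further subsequence converging almost everywhere, the whole sequence \(\int _I Q(A_n)\) converges to \(\int _I Q(A)\).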

Step 3 (Degenerate cases). Let us now consider the case when A is degenerate with respect to \(\mathfrak {A}\) on all of I. If \(\dim \mathfrak {A}\ge 2\) then

In fact, otherwise Proposition 3.3 together with the fact that \(A\in \mathfrak {A}\) almost everywhere would imply that \(A_{12} = A_{13} = 0\), contradicting the minimality of \(\mathfrak {A}\).

If A is degenerate with respect to (50), then Proposition 3.3 implies that (20) holds on all of I. If, on the other hand, \(\dim \mathfrak {A}= 1\) then Proposition 3.3 implies that \(A = 0\) almost everywhere on I. In both cases we already had \(A\in C^{\infty }(\overline{I}, \mathfrak {A})\) to begin with. \(\square \)

4.2 Shape design for rods with clamped boundary conditions

As a simple application of Theorem 3.2, in this section we consider an optimal design problem for rods. In [17] the relaxed problem was derived by considering the Euler–Lagrange equations; the proof was similar to the one given in [1] for the linear case.

Here, instead, we prove \(\Gamma \)-convergence of the functionals and, in addition, we impose clamped boundary conditions at both ends of the rod. More precisely, let \(r^{(1)}\in G\) and \(\gamma ^{(1)}\in \Gamma \) be given. For simplicity let us assume that, in the case when \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_2, e_1\wedge e_3\}\), these prescribed boundary conditions \(r^{(1)}\) and \(\gamma ^{(1)}\) are not satisfied by any helix (20) with the initial data \(r(0) = I\) and \(\gamma (0) = 0\).

Let \(0< a < b\), let \(\mathcal {L}: \mathfrak {so}(3)\rightarrow \mathfrak {so}(3)\) be linear and positive definite and for a given \(B\in L^{\infty }(I; [a, b])\) define \(\mathcal {F}^{(B)} : L^2(I, \mathfrak {A})\rightarrow [0, \infty ]\) by

The number \(B(t)\in [a, b]\) models the ‘hardness’ of the material at the point \(t\in I\). The aim is to find the material distribution B which is optimal in the sense that it minimizes some compliance functional

Here \(A_B\) is a suitable minimizer of \(\mathcal {F}^{(B)}\) among all admissible \(A\in L^2(I, \mathfrak {A})\), i.e., those satisfying \(r_A(1) = r^{(1)}\) as well as \(\int _0^1 r_A^Te_1 = \gamma ^{(1)}\).

The minimization of (51) commonly requires considering sequences \((B_n)\subset L^{\infty }(I; [a, b])\) and understanding the asymptotic behaviour of the corresponding \(A_{B_n}\). This motivates the following proposition, which addresses the asymptotic behaviour of the functionals \(\mathcal {F}^{(B_n)}\). Since it is a \(\Gamma \)-convergence result, it remains true if we add a lower order functional to \(\mathcal {F}^{(B)}\). Its proof is closely related to an argument for a similar problem without clamped boundary conditions; see e.g. [24].

Proposition 4.4

Let \(B_n : I\rightarrow [a, b]\) be measurable and assume that \(B_n^{-1}{\mathop {\rightharpoonup }\limits ^{*}}(B^*)^{-1}\) weakly-\(*\) in \(L^{\infty }(I)\). Then the \(\Gamma \)-limit of the functionals \(\mathcal {F}^{(B_n)} : L^2(I, \mathfrak {A}) \rightarrow [0, \infty ]\) with respect to weak convergence in \(L^2(I, \mathfrak {A})\) agrees with the functional \(\mathcal {F}^{(B^*)} : L^2(I, \mathfrak {A})\rightarrow [0, \infty ]\).
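A standard laminate example, not taken from the original text, indicates why the limit is governed by the weak-\(*\) limit of \(B_n^{-1}\) rather than that of \(B_n\). Let \(B_n\) alternate between the values a and b on consecutive intervals of length \(\frac{1}{2n}\). Then \(B_n^{-1}\) is a periodically oscillating function converging weakly-\(*\) to its mean, so

$$\begin{aligned} \frac{1}{B^*} = \frac{1}{2}\left( \frac{1}{a} + \frac{1}{b}\right) , \qquad B^* = \frac{2ab}{a + b} < \frac{a + b}{2}. \end{aligned}$$

That is, \(B^*\) is the harmonic mean of a and b, whereas \(B_n\) itself converges weakly-\(*\) to the arithmetic mean \(\frac{a + b}{2}\).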

Proof

As usual, we define \(\mathcal {G}\) as in (22) and write the boundary conditions as \(\mathcal {G}(A) = \mathcal {G}_0\), where \(\mathcal {G}_0 = (r^{(1)}, \gamma ^{(1)})\).

Let us begin by proving the upper bound. Let A be such that \(\mathcal {F}^{(B^*)}(A) < \infty \), so in particular \(\mathcal {G}(A) = \mathcal {G}_0\). Let \(\widehat{A}_n\in L^{\infty }(I, \mathfrak {A})\) be such that \(\widehat{A}_n\rightarrow 0\) strongly in \(L^{\infty }\), and define

Clearly \(A_n\in L^2(I, \mathfrak {A})\) and \(A_n\rightharpoonup A\) weakly in \(L^2\). Moreover,

because \(B_n\widehat{A}_n\rightarrow 0\) strongly in \(L^{\infty }\) and \(\mathcal {L}A_n\rightharpoonup \mathcal {L}A\) weakly in \(L^2\).
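
The convergence can be checked in one line. The following is only a sketch under two assumptions about the displayed formulas, which are not reproduced here: that \(\mathcal {F}^{(B)}(A) = \int _I B\, \mathcal {L}A : A\) whenever \(\mathcal {G}(A) = \mathcal {G}_0\) (with \(:\) denoting the inner product on \(\mathfrak {so}(3)\), possibly up to a constant factor), and that \(A_n = \frac{B^*}{B_n}A + \widehat{A}_n\). Under these assumptions,

\[
\int _I B_n\, \mathcal {L}A_n : A_n = \int _I \mathcal {L}A_n : \left( B^* A + B_n\widehat{A}_n\right) \rightarrow \int _I B^*\, \mathcal {L}A : A .
\]

Here the first factor converges weakly in \(L^2\), while the second converges strongly in \(L^2\) to \(B^*A\), so the integral of the product converges.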

It remains to show that the \(\widehat{A}_n\) can be chosen in such a way that \(\mathcal {G}(A_n) = \mathcal {G}_0\) for all n.

If A is not degenerate with respect to \(\mathfrak {A}\) on I, then the existence of such \(\widehat{A}_n\) follows from Theorem 3.2, because \(L^{\infty }(I, \mathfrak {A})\) is dense in \(L^2(I, \mathfrak {A})\).

If \(e_2\wedge e_3\in \mathfrak {A}\) and A is degenerate with respect to \(\mathfrak {A}\) on I, then Proposition 3.3 implies that \(A_{12} = A_{13} = 0\) on I. Hence \(A\in \mathfrak {A}_0 = {{\,\mathrm{span}\,}}\{e_2\wedge e_3\}\) almost everywhere. Proposition 3.3 shows that such A are never degenerate with respect to \(\mathfrak {A}_0\). Hence Theorem 3.2 again implies the existence of suitable \(\widehat{A}_n\) (taking values only in \(\mathfrak {A}_0\)).

If \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_k\}\) and A is degenerate with respect to \(\mathfrak {A}\) on I, then Proposition 3.3 implies that \(A = 0\) on I. Then we can simply set \(\widehat{A}_n = 0\) as well.

If \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_2, e_1\wedge e_3\}\) and A were degenerate with respect to \(\mathfrak {A}\) on I, then by Proposition 3.3 it would have to define a helix, which we have excluded by our choice of \(r^{(1)}\) and \(\gamma ^{(1)}\).

It remains to prove the lower bound, which is standard: assume that \(A_n\rightharpoonup A\) weakly in \(L^2(I, \mathfrak {A})\) and that \(\mathcal {F}^{(B_n)}(A_n) < \infty \). In particular, \(\mathcal {G}(A_n) = \mathcal {G}_0\) for all n. Hence \(\mathcal {G}(A) = \mathcal {G}_0\) because \(\mathcal {G}\) is weakly continuous. Set

Then \(q_n\rightharpoonup 0\) weakly in \(L^2\) because both terms on the right converge to A weakly in \(L^2\). Hence

The right-hand side converges to \(\mathcal {F}^{(B^*)}(A)\) because \(q_n\rightharpoonup 0\) and \(\mathcal {L}A_n\rightharpoonup \mathcal {L}A\) weakly in \(L^2\). \(\square \)
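
The lower bound can be made explicit as follows. This is a sketch under assumptions about the displayed formulas, which are not reproduced here: that \(\mathcal {F}^{(B)}(A) = \int _I B\, \mathcal {L}A : A\) for admissible A (with \(:\) denoting the inner product on \(\mathfrak {so}(3)\)), and that \(q_n = A_n - \frac{B^*}{B_n}A\). Writing \(A_n = q_n + \frac{B^*}{B_n}A\) and using the linearity of \(\mathcal {L}\),

\[
\int _I B_n\, \mathcal {L}A_n : A_n
= \int _I B_n\, \mathcal {L}q_n : q_n + \int _I B^*\, \mathcal {L}A : q_n + \int _I B^*\, \mathcal {L}A_n : A
\ge \int _I B^*\, \mathcal {L}A : q_n + \int _I B^*\, \mathcal {L}A_n : A .
\]

The inequality uses only \(B_n\ge a > 0\) and the positive definiteness of \(\mathcal {L}\). On the right-hand side, the first integral tends to zero because \(q_n\rightharpoonup 0\), and the second tends to \(\int _I B^*\, \mathcal {L}A : A\) because \(\mathcal {L}A_n\rightharpoonup \mathcal {L}A\).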

The relaxation for planar rods with clamped boundary conditions is a particular case of Proposition 4.4. In order to relate to the setting in [17], we introduce the space

and for any \(B:I\rightarrow [a,b]\), \(g^{(1)}\in B_1(0)\) and \(K^{(1)}\in \mathbb {R}\) we define the functionals

Corollary 4.5

Let \(B^*\) and \(B_n\) be as in Proposition 4.4. Then \(\mathcal {F}_{2d}^{(B_n)}\) \(\Gamma \)-converge to \(\mathcal {F}_{2d}^{(B^*)}\) with respect to weak \(W^{1,2}\)-convergence.

Proof

Set

and \(\gamma ^{(1)} = (g^{(1)}\cdot e_1, 0, g^{(1)}\cdot e_2)^T\) and let \(\mathcal {L}\) denote the identity. Then

Hence the claim follows from Proposition 4.4. \(\square \)

4.3 Euler–Lagrange equation for clamped ribbons

The functional for ribbons derived in [10] is defined on \(L^2(I, \mathfrak {A})\) with \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_3, e_2\wedge e_3\}\). For isotropic materials it is, up to a constant prefactor, of the form \(\mathcal {F}(A) = \int _I Q(A)\), with \(Q(A) = f(A_{13}, A_{23})\) and \(f : \mathbb {R}^2\rightarrow [0, \infty )\) defined by

A formal derivation of the Euler–Lagrange equation for this functional can be found in [23]. Applying Proposition 3.10, we obtain the following result.

Corollary 4.6

Let \(\mathfrak {A}= {{\,\mathrm{span}\,}}\{e_1\wedge e_3, e_2\wedge e_3\}\) and let \(\mathcal {F}: L^2(I, \mathfrak {A})\rightarrow \mathbb {R}\) be defined by \(\mathcal {F}(A) = \int _I f(A_{13}, A_{23})\), with f as in (52). Let \(A\in L^2(I, \mathfrak {A})\) be a nondegenerate minimizer for \(\mathcal {F}\) under its own boundary conditions on I. Then there exist \(M\in \mathfrak {so}(3)\) and \(\lambda \in \mathbb {R}^3\) such that, with \(H_{ij} : I\rightarrow \mathbb {R}\) defined by

and setting

we have

Proof

We have \(f\in C^1(\mathbb {R}^2)\) with

and

Hence we can apply Proposition 3.10. Inserting these expressions into (46) we obtain (53) and (54) after absorbing a constant into M and \(\lambda \). \(\square \)
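
For orientation, the derivatives can be written out under the assumption (not confirmed by the text reproduced here) that (52) is the relaxed Sadowsky-type density of [10], namely

\[
f(\mu , \tau ) = \begin{cases} \dfrac{(\mu ^2 + \tau ^2)^2}{\mu ^2} & \text{if } |\mu | > |\tau |, \\ 4\tau ^2 & \text{if } |\mu | \le |\tau |. \end{cases}
\]

With \(\theta = \tau /\mu \) on the set \(\{|\mu | > |\tau |\}\), a direct computation then gives

\[
\partial _{\mu } f = 2\mu (1 + \theta ^2)(1 - \theta ^2), \qquad \partial _{\tau } f = 4\mu \theta (1 + \theta ^2) \qquad \text{on } \{|\mu | > |\tau |\},
\]

\[
\partial _{\mu } f = 0, \qquad \partial _{\tau } f = 8\tau \qquad \text{on } \{|\mu | \le |\tau |\}.
\]

The two branches agree where \(|\mu | = |\tau |\), which is consistent with \(f\in C^1(\mathbb {R}^2)\). On \(\{|\mu | > |\tau |\}\) the quotient \(\partial _{\tau } f/\partial _{\mu } f\) equals \(\frac{2\theta }{1 - \theta ^2}\).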

A regularity analysis for solutions of the Euler–Lagrange equations is not the topic of the present paper. We only note one immediate consequence of (53) and (54).

Remarks.

(i) The set \( J^+ = \{t\in I : |A_{13}(t)| > |A_{23}(t)|\} \) is open and \(A\in C^{\infty }(J^+)\). The proof is given below.

(ii) On \(J^- = \{t\in I : |A_{13}(t)| \le |A_{23}(t)|\}\) we have \(A_{23} = -\frac{H_{23}}{4}\). The right-hand side is a continuous function on I.

Proof of Remark (i)

On \(J^+\) we have \(A_{13}\ne 0\); so \(\theta = A_{23}/A_{13}\) is well-defined on this set. Observe that \(\theta ^2 = \widehat{\theta }^2\) on \(J^+\). Equation (53) implies that

because on \(J^+\) we have \(A_{13}\ne 0\) and \(|\theta | < 1\), so the left-hand side of (53) is nonzero. Since \(H_{13}\) is continuous, up to a null set \(J^+\) agrees with an open set.

The function \(\Psi : (-1, 1)\rightarrow \mathbb {R}\) given by \(\Psi (s) = \frac{2s}{1-s^2}\) is strictly increasing and smooth. Hence it has a smooth inverse \(\Psi ^{-1} : \mathbb {R}\rightarrow (-1, 1)\). Dividing (54) by (53) we conclude that

In particular, \(\theta \in C^0(J^+)\). But then (53) and (54) imply that \(A\in C^0(J^+)\). Hence \(r_A\in C^1(J^+)\). Thus \(H_{ij}\in C^1(J^+)\). Hence (56) implies that \(\theta \in C^1(J^+)\) and then (53) and (54) imply that \(A\in C^1(J^+)\). Continuing to bootstrap, we conclude that \( A\in C^{\infty }(J^+). \) \(\square \)
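
The inverse of \(\Psi \) used in this proof can be written explicitly; the following elementary computation is added for illustration. For \(y\ne 0\), solving \(y s^2 + 2s - y = 0\) for the root in \((-1, 1)\) gives

\[
\Psi ^{-1}(y) = \frac{y}{1 + \sqrt{1 + y^2}}, \qquad y\in \mathbb {R},
\]

and the formula also covers \(y = 0\). Indeed, with \(s = \Psi ^{-1}(y)\) one finds \(1 - s^2 = \frac{2}{1 + \sqrt{1 + y^2}}\) and hence \(\frac{2s}{1 - s^2} = y\). The formula shows directly that \(\Psi ^{-1}\) is smooth on \(\mathbb {R}\) and takes values in \((-1, 1)\).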

References

Allaire, G.: Shape optimization by the homogenization method. In: Applied Mathematical Sciences, vol. 146. Springer, New York (2002)

Antman, S.S.: Nonlinear problems of elasticity, 2nd edn. Volume 107 of Applied Mathematical Sciences. Springer, New York (2005)

Balestro, V., Martini, H., Teixeira, R.: On Legendre curves in normed planes. Pacific J. Math. 297(1), 1–27 (2018)

Bartels, S.: Numerical simulation of inextensible elastic ribbons (2019)

Bartels, S., Reiter, P.: Numerical solution of a bending-torsion model for elastic rods (2019)

Bishop, R.L.: There is more than one way to frame a curve. Am. Math. Monthly 82, 246–251 (1975)

Ciarlet, P.G.: Mathematical elasticity, vol. II. Volume 27 of Studies in Mathematics and its Applications. North-Holland Publishing Co., Amsterdam (1997)

do Carmo, M.P.: Differential Geometry of Curves and Surfaces. Prentice-Hall Inc., Englewood Cliffs (1976)

Domokos, G., Healey, T.J.: Multiple helical perversions of finite, intrinsically curved rods. Internat. J. Bifur. Chaos Appl. Sci. Eng. 15(3), 871–890 (2005)

Freddi, L., Hornung, P., Mora, M.G., Paroni, R.: A variational model for anisotropic and naturally twisted ribbons. SIAM J. Math. Anal. 48(6), 3883–3906 (2016)

Fukunaga, T., Takahashi, M.: Existence and uniqueness for Legendre curves. J. Geom. 104(2), 297–307 (2013)

Fukunaga, T., Takahashi, M.: Existence conditions of framed curves for smooth curves. J. Geom. 108(2), 763–774 (2017)

Giusteri, G.G., Fried, E.: Importance and effectiveness of representing the shapes of Cosserat rods and framed curves as paths in the special Euclidean algebra. J. Elast. 132(1), 43–65 (2018)

Griffiths, P.A.: Exterior differential systems and the calculus of variations. Volume 25 of Progress in Mathematics. Birkhäuser, Boston (1983)

Honda, S., Takahashi, M.: Framed curves in the Euclidean space. Adv. Geom. 16(3), 265–276 (2016)

Hornung, P.: Approximation of flat \(W^{2,2}\) isometric immersions by smooth ones. Arch. Ration. Mech. Anal. 199(3), 1015–1067 (2011)

Hornung, P., Rumpf, M., Simon, S.: Material optimization for nonlinearly elastic planar beams. ESAIM Control Optim. Calc. Var. 25, 11–19 (2019)

Ivey, T.A.: Minimal curves of constant torsion. Proc. Am. Math. Soc. 128(7), 2095–2103 (2000)

Le Tallec, P., Mani, S., Rochinha, F.A.: Finite element computation of hyperelastic rods in large displacements. RAIRO Modél. Math. Anal. Numér. 26(5), 595–625 (1992)

Maddocks, J.H.: Stability of nonlinearly elastic rods. Arch. Rational Mech. Anal. 85(4), 311–354 (1984)

Manning, R.S.: A catalogue of stable equilibria of planar extensible or inextensible elastic rods for all possible Dirichlet boundary conditions. J. Elast. 115(2), 105–130 (2014)

Neukirch, S., Henderson, M.E.: Classification of the spatial equilibria of the clamped elastica: symmetries and zoology of solutions. J. Elast. 68(1–3), 95–121 (2002). (Dedicated to Piero Villaggio on the occasion of his 70th birthday)

Paroni, R., Tomassetti, G.: Macroscopic and microscopic behavior of narrow elastic ribbons. J. Elast. 135(1–2), 409–433 (2019)

Pawelczyk, M.: Homogenization and Thin Film Asymptotics for Slender Structures in Nonlinear Elasticity. PhD Thesis, TU Dresden (2018)

Sadowsky, M.: Ein elementarer Beweis für die Existenz eines abwickelbaren Möbius’schen Bandes und Zurückführung des geometrischen Problems auf ein Variationsproblem. Sitzungsber. Preuss. Akad. Wiss. (1930)

Sadowsky, M.: Theorie der elastisch biegsamen undehnbaren Bänder mit Anwendungen auf das Möbiussche Band. Verhandl. des 3. Intern. Kongr. f. Techn. Mechanik, 2, 444–451 (1930)

Starostin, E.L., van der Heijden, G.H.M.: The shape of a Möbius strip. Nat. Mater. 6, 563–567 (2007)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Communicated by J. M. Ball.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.