Abstract

In recent years, deep learning has significantly reshaped numerous fields and applications, fundamentally altering how we tackle a variety of challenges. Areas such as natural language processing (NLP), computer vision, healthcare, network security, wide-area surveillance, and precision agriculture have leveraged the merits of the deep learning era. Particularly, deep learning has significantly improved the analysis of remote sensing images, with a continuous increase in the number of researchers and contributions to the field. The high impact of deep learning development is complemented by rapid advancements and the availability of data from a variety of sensors, including high-resolution RGB, thermal, LiDAR, and multi-/hyperspectral cameras, as well as emerging sensing platforms such as satellites and aerial vehicles that can be captured by multi-temporal, multi-sensor, and sensing devices with a wider view. This study aims to present an extensive survey that encapsulates widely used deep learning strategies for tackling image classification challenges in remote sensing. It encompasses an exploration of remote sensing imaging platforms, sensor varieties, practical applications, and prospective developments in the field.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Remote sensing (RS) images are valuable resources of data to quantify and observe intricate formations on the Earth’s surface.

Remote sensing image classification (RSIC), which task is to automatically assign a semantic label for a given remote sensing image, has been a fast-growing research topic in recent years, and it has significant contributions to monitoring and understanding key environmental processes. Thanks to a large volume of remote sensing data availability, sensor development, and ever-increasing computing powers, rapid advancement in RSIC has been witnessed by its real-world applications, such as natural hazard detection [1, 2], precision agriculture [3, 4], landscape mapping [5], urban planning [6], and climate changes [7]. The enabler of this wide range of applications of RSIC is also attributed to the ability of RS images to capture multi-scale, multi-dimensional, and multi-temporal information. Hence, one of the challenging but important tasks in RSIC is to effectively extract valuable information from the various kinds of RS data to aid further image analysis and interpretation.

Traditional approaches to exploiting features from RS images heavily rely on feature extraction and/or feature selection. The former process produces new features to describe specific spatial and spectral attributes of RS data using a transformation matrix or a set of filtering processes. For instance, knowledge-based approaches depend on spectral characteristics and supervised and unsupervised methods such as canonical analysis and principal component analysis. The latter identifies a subset of feature candidates from a feature pool via selection criteria. Although feature extraction or selection provides useful information to improve the accuracy of RSIC, most of those methods are suboptimal for comprehensively representing original data for given applications [8]. Particularly, their suitability can be even degraded when comes to big data with multi-Sensors, since RS images can vary greatly in terms of time, geo-location, atmospheric conditions, and imaging platform [9, 10]. Therefore, an effective and unified approach is needed to automatically extract pertinent features from diverse RS data.

Deep learning (DL) [11], as a subset of machine learning, has demonstrated unprecedented performance in feature representation and is capable of performing end-to-end learning in various vision tasks, including image classification [12], object detection [13], semantic segmentation [14], and natural language processing [15]. Since the astonishing accuracy was produced by a deep convolutional neural network (CNN) in the large-scale visual recognition challenge [12], CNN and its variants prevailed in many fields [16], along with tremendous successes including those most important yet unsolved issues of modern science, such as AlphaFold [17] which can accurately predict 3D models of protein structures.

Over the last few years, researchers have made an effort to utilize the most advanced techniques in AI for RSIC, from traditional methods in machine learning, all the way through deep learning techniques such as the use of CNN and its variants.

This study aims to fill the gap in the existing literature in RSIC with the following key contributions:

-

An extensive examination of cutting-edge deep learning models through a systematic review, covering a brief description of architectures and frameworks for RSIC. Our review includes concise descriptions of the architectural nuances and frameworks that have shown promise in this domain.

-

A summary of remote sensing datasets, modalities, as well as corresponding applications. This comprehensive resource will serve as a reference for researchers and practitioners navigating the rich landscape of remote sensing data.

-

Suggestions for promising research direction and insights around RSIC. These recommendations aim to catalyze innovation and drive the field forward.

This study serves as evidence of the growing impact of deep learning within the domain of remote sensing image analysis. It sheds light on how deep learning can be a powerful tool for addressing persistent challenges in RSIC and seeks to stimulate further research in this dynamic and essential field.

1.1 Review statistics

Deep learning techniques have been actively implemented for RS-related tasks (i.e., image classification) in the last lustrum. Statistical analysis was conducted using the latest Scopus data for a literature search on the most popular keywords in RS publications: “deep learning” AND “remote sensing” AND “image classification,” ranging the year from 2017 to 2023 (mid-September). The detailed research setting can be found in Table 1. The research results are analyzed from two aspects: (1) the number of conferences and journals published, as shown in Fig. 1, and (2) the distribution of publications across subject areas, as depicted in Fig. 2.

From Fig. 1, it can be clearly seen that the total number of publications (i.e., conference papers + journal articles) is consistently increased yearly in the past 5 years and a particularly significant difference is observed comparing 2021 with 2017, demonstrating fast growth of this research. While journal articles also exhibit a yearly increase, the number of conference papers published in 2021 is reduced compared to the previous year. In addition, it is worth noting that the number of published journal articles in 2021 greatly exceeded the number of conference papers, indicating remarkable development of the aforementioned topic. In Fig. 2, the subject of “Earth and planetary science” turns out to be the most widely applied area of deep learning applications in RSIC, while the computer science field is ranked second with a minor margin in terms of publication count.

The remainder of this article is organized as follows. In Sect. 2, we delve into an overview of existing surveys on deep learning-based Remote Sensing Image Classification (RSIC). Section 3 provides a comprehensive summary of Deep Learning (DL) applications in remote sensing. The various deep learning models pertinent to RSIC are reviewed briefly in Sect. 4. Detailed descriptions of remote sensing datasets and their associated applications are presented in Sect. 5. Section 6 addresses current challenges and outlines potential future research directions. Finally, Sect. 7 offers concluding remarks on this survey.

2 Related work

In the past several years, driven by DL, a great number of RSIC methods sprung up, and consequently, many related survey or review papers have been published, which are summarized in Table 2. These surveys cover various aspects of the field, including the methods used and the content they focus on.

In chronological order, starting in Yao et al. [18] conducted a survey focused on providing data sources for RS and current deep learning-based classification methods. Moving to Li et al. [19] conducted a comprehensive review and comparative analysis of deep learning approaches for RSIC, considering both pixel-wise and scene-wise strategies. The year 2019 witnessed the presentation of several related surveys. Li et al. [20] delved into the realm of hyperspectral image scene classification, revising deep learning methods and offering guidance on how to enhance classification performance. Paoletti et al. [21] offered a detailed review of deep learning algorithms, frameworks, and normalization methods tailored to hyperspectral image classification. Additionally, Song et al. [22] summarized methods based on CNNs for remote sensing scene classification and highlighted challenges and recommendations for CNN-based classification. Cheng et al. [23] discussed the challenges, methods, benchmarks, and opportunities in remote sensing image scene classification, in addition to comparing popular deep learning architectures, including CNN, GAN, and SAE. On the other hand, Alem et al. [24] conducted a comparative analysis of deep CNN models on diverse remote sensing datasets, while Vali et al. [25] reviewed deep learning in remote sensing scene classification from the perspective of multispectral and hyperspectral images. Kuras et al. [26] conducted a review of hyperspectral and LiDAR data fusion approaches for urban land-cover classification, focusing on the use of CNN and CRNN. Zang et al. [27] reviewed deep learning-based land-use mapping methods, including supervised, semi-supervised, and unsupervised learning, as well as pixel-based and object-based approaches. In [28], an overarching view of contemporary deep learning models and some hybrid methodologies for RSIC is presented.

Although the abovementioned surveys have substantially contributed to the literature by reviewing various methodologies and aspects of RSIC, there remains a compelling need for a survey that encapsulates the latest advancements and trends in this rapidly evolving field. The existing surveys, while thorough, often focus on specific subdomains or are somewhat dated given the fast pace of technological progress in deep learning applications for RSIC. This survey is necessitated by several critical factors. (1) Since the publication of the last major surveys, numerous new deep learning architectures have been developed, each accompanied by innovative applications. This necessitates an updated review that cohesively synthesizes these advancements. (2) Recent advancements in publicly available data sources, coupled with their corresponding applications in RSIC, have not been fully addressed in prior surveys. Our work seeks to fill this gap by providing a comprehensive review and categorization of these datasets. (3) Emerging Challenges and Solutions, as the application areas of RSIC expand, new challenges arise, including those related to scalability, data heterogeneity, and scarcity. Addressing these challenges requires a fresh look at the state-of-the-art, which our survey provides. In conclusion, this survey does not merely aggregate existing knowledge but critically analyzes recent innovations and trends, thereby setting a new benchmark for research in RSIC. It aims to catalyze further research and development in a field that is crucial for a wide array of applications, from environmental monitoring to disaster response. This work is intended to serve as a cornerstone for future explorations and technological advancements in remote sensing image analysis.

3 DL in Remote sensing applications

Deep learning methods have been remarkably utilized by the research community in the recent years in RSIC due to their important role in a wide range of applications, such as agriculture, urban and forestry [29,30,31,32,33,34], environment monitoring [35,36,37,38], land mapping and management [39,40,41,42,43], disaster response [44,45,46,47,48,49,50], ecology [51, 52], mining [53, 54], oceanography [55, 56], hydrology [57, 58], archaeology [59, 60], among others. By exploring the Scopus database, it is found that agriculture and forestry are the most RS applications that researchers have used deep learning methods for data analysis. This is followed by environment monitoring, land mapping and management, and disaster response as shown in Fig. 3. Based on these initial statistics, we will focus in this survey paper on the top four RS applications on the list.

3.1 Agriculture and forestry

Countries worldwide are investing billions of dollars in precision agriculture and forestry in order to increase production efficiency while reducing environmental impact.

RS satellite and aerial images are considered very useful sources of information for many agriculture and forestry applications such as:

-

Crop monitoring: Deep learning and RS technology are used widely by researchers and the agriculture industry to provide real-time monitoring of crop growth [61], plant morphology [62], and plantation monitoring [63]. The main advantage of using real-time intelligent RS technology for crop monitoring is providing an accurate understanding of the growth environment that leads to environment optimization and consequently improved production efficiency and quality [64]. It also helps in detecting variations in several parameters of the field such as biomass, nitrogen status, and yield estimation of the crop which determine the need for fertilizer or other actions.

-

Diseases detection: crop health monitoring is a crucial step in avoiding economic loss and low production quality. Traditionally, disease detection and avoiding its spread in crops is done manually which takes days or months of continuous work to inspect the entire crop. Moreover, these methods lack detection accuracy and do not provide real-time monitoring, especially in large crop areas [65]. RS technology with DL algorithms provided practitioners with real-time monitoring capabilities for large crops with high detection accuracy, especially when using UAVs. Further, they improved the ability to control the spread of diseases at critical times which led to reduced losses and improved product quality in precision agriculture. Recent research efforts have focused on improving existing methods in crop disease detection [66, 67], Pest identification and tracking [68, 69], and plant disease classification [67, 70, 71].

-

Weed control: weeds detection and removal is considered one of the most important factors in improving product quality and critical to the development of precision agriculture. Accurate mapping and localization of the weeds lead to accurate pesticide spraying of the weed location without contaminating crops, humans, and water resources. Researchers have put great efforts into using RS technology and deep learning in weed detection [72,73,74,75,76] and weed mapping [77,78,79,80].

-

Precision irrigation: One of the most important applications of modern precision farming where RS technology and DL algorithms play a crucial role in the efficient use of water at the right time, location, and quantity. Aerial and satellite data analysis using efficient DL algorithms helped in soil moisture estimation [81,82,83,84,85] and prediction [86, 87], mapping of center pivot irrigation [88,89,90,91], and estimation of soil indicators [92, 93].

-

Forest planning and management: The modern forest management utilizes RS platforms such as UAVs, airplanes, and satellites to provide crucial data at different spatial and spectral band resolutions. This data is mainly used in creating forest models for monitoring, conservation, and restoration [94]. In recent years, researchers focused on creating deep learning models to analyze data collected from RS platforms in land-cover and forest mapping [95,96,97,98,99], species classification [33, 100,101,102], and forest disaster management [103,104,105].

It is evident that RS technology and platforms along with deep learning methods have recently played an integral role in enabling precision agriculture.

3.2 Disaster response and recovery

Natural disasters such as floods, earthquakes, landslides, tsunamis, hurricanes, and wildfires have a devastating impact on the environment, cities’ infrastructure, and living beings. The modern disaster management cycle consists of the following phases: (1) prevention and mitigation, (2) preparedness, (3) response, and (4) recovery [106]. RS technology and DL methods are widely applied in disaster response and recovery. While disaster response aims to immediately reduce the impact and damages caused by the disaster through damage mapping and estimation, disaster recovery is concerned with bringing life back to normal through reconstruction monitoring and wreckage clearance. Having said that, AI algorithms are still being used in disaster detection and forecasting.

Response to sudden-onset disasters requires spatial information that should be updated in real-time due to their high dynamics. Thanks to the recent advancements in RS technologies and platforms that are capable of providing high levels of spatial and temporal resolution data. Not to mention the recent developments in DL methods that provide real-time analysis for RS data. In the last 5 years, researchers have focused on developing DL methods to analyze RS data in order to provide governments and the research community with tools that help in managing sudden-onset disasters such as floods [48, 107, 108], earthquakes [109,110,111], landslides [112,113,114], tsunamis [111, 115], hurricanes [116, 117], and wildfires [50, 118, 119].

3.3 Environment monitoring

The extraordinary level of air, land, and water pollution has led governments and researchers worldwide to take immediate action to allocate financial resources and efforts toward creating technologies that ensure ongoing and universal surveillance of the environment. Traditionally, governments use a large number of distributed fixed station that consists of advanced sensors and instruments to monitor the environment. With the advancement in the Internet of Things technology, wireless sensors network (WSN) with millions of tiny distributed sensors is widely used to monitor the environment. Recently, crowdsensing platforms, including vehicles like cars, buses, taxis, bicycles, and trains have been equipped with sensors and measurement systems that collect, process, and store data about the environment at practically zero cost. Fascista [120] stated, based on an in-depth review of the literature, that although WSNs offer an attractive solution for environmental monitoring, they suffer from several technical drawbacks including poor data quality, low communication range, reliability, and power limitation. On the other hand, crowdsensing poses some implementation challenges including incentive mechanisms, task allocation, workload balancing, data trustworthiness, and user privacy.

RS technology and platforms offer an attractive solution to these challenges by providing rich data about the environment ranging from RGB images and LiDAR to thermal and hyperspectral data. DL algorithms have also provided reliable tools for extracting information about the environment from the collected RS data. Looking at the literature, it is found that recent studies have developed DL algorithms to analyze RS data for land environment monitoring [121,122,123,124], air monitoring [35, 125,126,127], and marine and water monitoring [36, 128,129,130].

3.4 Land-cover/land-use mapping

Urban growth has historically influenced alterations in regional and global climates by impacting both biogeochemical and biophysical processes. Therefore, remote sensing is widely used for land-cover mapping, land management, and the spatial distribution of landforms to examine earth surface processes and landscape evolution. Land-use classification using remote sensing images and DL methods [131,132,133,134,135] has played a crucial role in effectively identifying diverse land uses, which in turn improved urban environment monitoring, planning, and designing. RS and DL have also been used in land-cover mapping and change detection [136,137,138,139,140] which are employed in natural resource management, urban planning, and agricultural management.

4 DL methods for RS image classification

4.1 Learning approach

4.1.1 Convolutional neural networks (CNNs)

Convolutional neural network (CNN) and its variants have been widely applied to RS applications [22]. The key component in CNN is convolutional operation which involves trainable parameters and aims to extract pertinent features that are associated with specific tasks such as object recognition, segmentation, and tracking. Figures 4 and 5 depict commonly used CNN modules in RSIC, including residual connection [141], dense blocks in the Dense Convolutional Network (DenseNet) [142], inception module [143], squeeze and excitation inception module (SE-inception) [144], dilated convolution [145], and depth-wise separable convolution [146]. Key features of these learning modules are described as follows:

-

Residual connection: The module presented by He et al. [141] uses skip or residual connections between layers to facilitate the gradient propagation and thus help to achieve deeper neural network architectures with better accuracy. It has been widely used in hyperspectral image classification [147,148,149]. This module is illustrated in Fig. 4a

-

Dense blocks: Introduced by Huang et al. [142] dense blocks enhance the feature reuse by dense connectivity. In other words, the information flow between layers is given by direct connections from an original layer to all the subsequent layers. Some RS-related works have implemented this block with promising results [150, 151]. This block is illustrated in Fig. 4b.

-

Inception module: Szegedy et al. [152] proposed this module, which allows the use of multiple filter sizes in parallel, instead of a single filter size in a series of connections. The motivation of this module is that multi-scale convolutional filters have the potential to enrich feature representation as the architectures go deeper into the number of layers. The illustration of this module is provided in Fig. 4c. RS-related works have successfully used this module [153,154,155].

-

SE-inception: The Squeeze-and-Excitation block (SE block) was introduced by Hu et al. [144] as an architectural unit that boosts the performance of a network. This architectural unit block empowers the architecture with a dynamic channel-wise feature calibration. The specifics of this architectural unit block are illustrated in Fig. 5a. The SE block has been extensively utilized for RS tasks [156,157,158].

-

Dilated convolution: Yu et al. [145] introduced this type of convolution, which expands receptive fields by introducing gaps between the values of the filter kernel, effectively “dilating” the filter. The expansion is controlled by a dilation factor (\({{\varvec{l}}}\)). Figure 5b illustrates dilated convolution with \({{\varvec{l}}} = 2\). This technique increases the receptive field without increasing computation. In RS, several studies have reported the use of dilated convolutions with promising results [159,160,161,162].

-

Depth-wise separable convolution: Introduced by Chollet [146], depth-wise separable convolution divides standard convolution operations into two steps. First, a depth-wise convolution is applied to each input channel independently. Second, a point-wise convolution is performed, i.e., a 1 × 1 convolution, mapping the outputs from the depth-wise convolution onto a new channel space. Details of this architectural module are illustrated in Fig. 5c. Experimental results show satisfactory results with the implementation of this architectural module for RS-related tasks [163,164,165].

Many existing works use one or more aforementioned convolutional network modules with various network connections or designs for RSIC. Zhong et al. [148] adopts residual connections in hyperspectral data cute, aiming to extract discriminative features from both spectral signatures and spatial contexts in hyperspectral imagery, and outperformed popular classifiers such as kernel support vector machine (SVM) [166], stacked autoencoders, and 3D CNN.

4.1.2 Generative adversarial networks

A generative adversarial network (GAN) [167] has been proposed as semi-supervised and unsupervised DL models that provide a way to learn deep representations without extensively annotated training data. Generating fake data is a key component in GAN, which basically based on the two main networks that represent the GAN. A Generator (G) network tries to generate “realistic” samples and a Discriminator (D) network distinguishes between the real and generated samples. Figure 6 shows the main concept of GAN. These semi-supervised and unsupervised DL representations have been widely applied to RS applications. Jian et al. [168] developed one class classification technique based on GAN for remote sensing image change detection aiming to train the network only with the unchanged data instead of both the changed and unchanged data. Jiang et al. [169] constructed a GAN-based edge-enhancement method for satellite imagery super-resolution reconstruction to ensure the reconstruction of sharp and clean edges with finely preserved details. Also, Ma et al. [170] introduced a GAN-based method capable of acquiring the mapping between low-resolution and high-resolution remote sensing imageries which aims to restore sharper details with fewer pseudotextures, and outperformed popular single-image super-resolution methods, including traditional and CNN-based techniques.

4.1.3 Autoencoders and stacked autoencoders

An autoencoder (AE) is a neural network that uses back-propagation to generate an output almost close to the input value in an unsupervised learning framework. As it is shown in Fig. 7a, an AE takes an input and compresses its representation into a low-dimensional latent space. This process is done by the encoder component of the AE. On the other hand, the decoder component of the AE reconstructs the input, scaling the latent space representation to the original input dimension.

Also known as deep autoencoder, stacked autoencoders (SAE) are extensions of the basic AE, consisting of several layers of encoders and decoders that are stacked on top of each other, as shown in Fig. 7b. The use of several layers, in the encoder and decoder portion of the architecture, allows the model to increasingly abstract the representation of the original input as it moves deeper into the network. This makes SAE capable of learning complex features when compared with basic AE.

AE and SAE have emerged as powerful tools for enhancing the performance of DL models for RSIC tasks. For instance, Lv et al. [171] proposed a combination of SAE with an extreme learning machine (ELM) [172]: SAE-ELM. This ensemble-based algorithm leverages the benefit of the two key components to address challenges in RSIC, including limitation and complexity of the data. The SAE-ELM creates diverse base classifiers through feature segmentation and SAE transformations and accelerates the learning process with the use of ELM. The proposed method showed evidence of improvement in classification tasks and adaptability to different types of remote sensing images. Liang et al. [173] proposed the use of stacked denoising AE for RSIC. This model was built by stacking layers of denoising AE, using the noise input to train the algorithm in an unsupervised approach layer-wise, and turning the robust expression into characteristics by supervised learning using back-propagation. The method outperformed traditional neural networks and SVM performance. On the other hand, Zhou et al. [174] suggested a condensed and discriminative stacked AR (CDSAE) for Hyperspectral image (HSI) classification. This method consisted of two stages: The first stage is a local discriminant, and the second is an effective classifier. The CDSAE aimed to produce highly discriminative and compact feature representation from low-dimensional features. Experimental results demonstrate its effectiveness when compared to traditional methods for HSI classification. Additionally, Zhang et al. [175] introduced the use of recursive autoencoders (RAE) as an unsupervised method for HSI classification. This method utilizes spatial and spectral information to learn features from the interaction of the neighborhood of the targeted pixel in an HSI. This approach outperformed methods such as SVM, SVM-CK [176], and SOMP [177]. Similarly, Zhou et al. [178] presented a semi-supervised method for HSI classification with stacked autoencoders (Semis-SAE). The SAE used pre-trained hyperspectral and spatial features, followed by a fine-tuning stage prior to a classification fusion composed of the probabilities from the SAEs with a Markov random field model. The Semis-SAE outperformed state-of-the-art ML methods, such as CNN, GANs, and SVM.

4.1.4 Recurrent neural networks

Recurrent neural networks (RNNs) are a type of artificial neural network designed to recognize patterns in sequences of data that has been widely used in language modeling, text generation, and speech recognition. In RNNs, hidden layers act as the network’s memory, which store information based on previous inputs, integrating not only the current input but also the knowledge accumulated from prior data. Figure 8 shows basic structures of FNN and RNN, where (x) is the input layer, (h) is the hidden layer/s, and (y) is the output layer. A, B, and C are the network parameters that are learned during the training of the model.

A well-known type of RNN is called long short-term memory (LSTM) [179] which was first introduced to overcome the gradient vanishing and exploding problem. Several variants of LSTM architecture have been proposed as an effective and scalable model to learn long-term dependencies [180]. LSTM has been used for the land-cover classification via multi-temporal spatial data derived from a time series of satellite images and showed competitive results compared to the state-of-the-art classifiers with the remarkable advantage of improving the prediction quality on low-represented and/or highly mixed classes [181].

The configuration of the input and output determines the design of the RNN architecture, which can be implemented in diverse ways. Among the main such architectures: (1) One-to-one which is known as the vanilla neural network and has been used for general machine learning problems, in which a single input is used to generate a single output. (2) One-to-sequence, in which a single input is employed to produce a sequence of outputs. (3) Sequence-to-sequence, which involves taking a series of inputs and producing a corresponding sequence of outputs. (4) Sequence-to-one takes sequential data to utilize it as an input to generate a single output.

RNN and its variants have been used for RSIC. Mou et al. [182] proposed a deep RNNs architecture with a new activation function to characterize the sequential property of a hyperspectral pixel vector for the classification task. Experimental results showed promise of RNNs in capturing pertinent information for hyperspectral data analysis. RNN variant such as a Patch-based recurrent neural network (PB-RNN) system has been introduced for classifying multi-temporal remote sensing data [183]. PB-RNN is considered as a sequence-to-one architecture and used multi-temporal-spectral-spatial samples to deal with pixels contaminated by clouds/shadows present in multi-temporal data series. Recently, a bidirectional long short-term memory (Bi-LSTM)-based network with an integrated spatial-spectral attention mechanism was developed for hyperspectral image (HSI) classification, enhancing classification performance by emphasizing relevant information [184]. Experiments on three popular HSI datasets demonstrated its superiority over unidirectional RNN-based methods.

4.1.5 Vision transformer-based approach

Originally implemented to solve natural language processing (NLP) tasks, transformers [185] have crossed the threshold of a single domain with high success. Transformers-based models are getting popular in the research community for different fields, including computer vision, RS, and bio-informatics [186,187,188]. Transformers utilize the self-attention mechanism to handle long-range dependencies of a given sequence, providing the model with a larger “memory” in comparison with traditional recurrent neural networks. For NLP, transformers can deal with larger sequences by the use of tokens, which provide the positional information required to preserve the context of the input. In vision transformers (ViT) [189], this methodology is translated to computer vision, in which the images are divided into patches, as an analogy of tokens and sequences, and then, each patch is linearly projected along with the corresponding embedding positional information. Self-attention is known as the key component within a transformers-based framework. This component helps to capture long-range similarities between a given sequence of tokens by updating the token with aggregated global knowledge. This attention mechanism is mathematically described as follows:

Illustration of the vision transformer (ViT) [189] architecture. On the left side, the notion behind ViT is presented, including the initial embedding layer and the transformer encoder. Meanwhile, on the right side, details about the transformer encoder with emphasis on the multi-head self-attention mechanism are provided

where the vectors Q, K, and V represent the queries, keys, and values, respectively. In this mechanism of attention, the matrices corresponding to the queries and the keys are dot multiplied, as an attention filter operation, and then, the output of this operation is normalized through a division operation with the \(\sqrt{d_k}\) (the dimension of K). The softmax operation provides a probability distribution for the weights that are being multiplied against the matrix corresponding to the values.

For multi-head self-attention, the aforementioned procedure is repeated in parallel h stands for heads, with different learned linear projections of K, Q, and V (\({\textbf {W}}^Q, {\textbf {W}}^K, {\textbf {W}}^V\)). The outputs from the attention functions are concatenated and linearly projected with (\({\textbf {W}}^O\)). In summary, the multi-head self-attention mechanism can be represented as:

where

The success of ViT-based models has increased the interest in this technology within the RS area, and several techniques have been explored in recent years for tasks involving very-high-resolution Imagery (VHR), hyperspectral imagery, and synthetic aperture radar imagery. Figure 9 illustrates the ViT architecture used with hyperspectral data.

For the remote sensing scene classification task, Deng et al. [190] presented a vision transformer-based approach in conjunction with CNN, in which two streams (one ViT and another CNN) generate concatenated features, within a joint optimized loss function framework. On the other hand, Ma et al. [191] explored the use of a transformer-based framework with a patch generation module, analyzing the effect promoted by using heterogeneous or homogeneous patches.

In the task of HSI classification, there are several efforts have been made to develop either purely transformer-based architectures or a hybrid approach that combines the merits of CNN and transformers. For instance, He et al. [192] presented HSI-BERT, a pure transformer-based architecture, with bidirectional encoders. This architecture captures the global dependencies of a target pixel, obtaining a flexible architecture that can be generalized for prediction over different regions with the pre-trained model. Another effort in pure transformers-based architecture is provided by Zhong et al. [193], proposing a spectral-spatial transformer network. Spatial attention leverages the local region feature channels with spatial kernel weights; meanwhile, spectral association leverages the integration of spatial locations for each corresponding feature map. Hybrid efforts, by combining CNN and transformers, have achieved promising outcomes for hyperspectral pixel-wise classification. For instance, the work presented by Wang et al. [194] presented a multi-scale convolutional transformer, which aims to capture spatial-spectral information effectively from a given input. Introduced by Paheding et al. [195] GAF-NAU utilizes the Gramian angular field encoder over the hyperspectral signal to produce a 2D representation for each pixel. This 2D signal is used as input in a U-Net-like framework that combines the attention mechanism with multi-level skip connections. The experimental outcomes from this proposed architecture outperform traditional approaches for pixel-wise hyperspectral classification.

Problems related to the use of SAR image interpretation have been analyzed using pure transformer-based architecture. For instance, Dong et al. [196] utilized vision transformers as a method for PolSAR (Polarimetric SAR) image classification. Each pixel is represented as a token within the architecture, and the long-range dependency is captured by the use of the self-attention mechanism. A hybrid methodology was proposed by Liu et al.[197], in which the merits of CNN and transformers were combined to capture, both local and global, feature representation, for the SAR image classification task. On the other hand, the work presented by Chen et al. [198] addressed the detection task for aircraft with SAR imagery by using transformers within a geo-spatial framework composed by image decomposition, geo-spatial contextual attention in multi-scale fashion and image re-composition. Zhang et al. [199] proposed a feature relation enhancement framework, in which a fusion pyramid structure is adopted to combine feature representation at different scale levels, in addition to the use of an attention mechanism for the improvement of the position context information.

4.2 Learning type

4.2.1 Multi-task learning

The goal of the machine learning paradigm known as “Multi-task learning” (MTL) is to learn several related tasks simultaneously [200], compared to the one that learns specific tasks separately as shown in Fig. 10a. The use of MTL is to ensure that the information in one task may be used by other tasks, enhancing the generalization performance of all the involved tasks. In this context, task refers to learning an output target from a single input source [201]. Hence, MTL employs the domain knowledge in the training signals of related tasks as an inductive bias for improving the generalization [202]. This is accomplished, as shown in Fig. 10b, by learning many tasks concurrently while utilizing a common representation; what is learned for one task can aid in learning other tasks.

Multi-task learning in deep learning is often carried out with either hard or soft parameter sharing of hidden layers [203]. The method of MTL that uses hard parameter sharing is the most used one in neural networks. It is often implemented by preserving several task-specific output layers while sharing the hidden levels across all activities. Overfitting is considerably reduced by hard parameter sharing. On the other hand, with soft parameter sharing, every task has a unique model with unique parameters. To encourage the model’s parameters to be similar, the distance between them is regularized.

The MTL architecture has been used to concurrently complete the tasks of road identification and road center-line extraction [204]. Due to its superior capacity to maintain spatial information, U-Net [205] was chosen as the MTL’s basic network. The multi-task U-Net design contains two networks, a road detection network and a center-line extraction network, which operate simultaneously during training. The hierarchical semantic features obtained from the road detection network are convoluted to create the road center-line extraction network.

4.2.2 Active learning

Active learning (AL), also referred to as query learning or optimal experimental design, is a sub-field of machine learning where the learner makes queries or selects actions that impact what data is to be added to its training set [206, 207]. It is instrumental in scenarios where the labeled data are either scarce or expensive to label the data (such as speech recognition, information extraction, and RSIC). In this model, a small training set is used to train the model initially, and then, an acquisition function decides to obtain a label for unlabeled data points from an external oracle (generally a human expert). These labeled data points are added to the training set, and the model is now trained on this updated training set. Repetitions of this process lead to an increase in the size of the training set.

Active learning for RSIC is a logical choice because it can utilize scarce labeled and abundant unlabeled data. AL has been used in HSI classification [208], in which DRDbSSAL (Discovering Representativeness and Discriminativeness by Semi-Supervised Active Learning) architecture was proposed to extract representative and discriminative information from unlabeled data. This architecture employs multiclass level uncertainty (MCLU), a state-of-the-art approach commonly used in RSIC to identify the most informative samples [209, 210]. Using semi-supervised active learning, it tries to identify representativeness and discriminativeness from unlabeled data using a labeling procedure. It is particularly efficient when there are only a few labeled samples and catches the overall trends of the unlabeled data while preserving the data distribution.

In a work by Haut et al. [211], AL was employed by a B-CNN (Bayesian-Convolutional Neural Network) that was based on the Bayesian Neural Networks (BNNs) [212, 213] for HSI classification. The BNN is a kind of artificial neural network (ANN) that may provide uncertainty estimates and a probabilistic interpretation of DL models while being resistant to overfitting. They do so by inferring distributions across the models’ weights, learning from small data sets, and avoiding the tendency of traditional ANNs to generate overconfident predictions in sparse data areas. Applying the same Bayesian methodology to CNNs can help them withstand overfitting on small data sets while improving their generalization capability.

4.2.3 Transfer learning

In the field of machine learning, transfer learning refers to the process where a model, initially trained for a specific task, is repurposed and utilized for a different but related task. It depicts a situation where knowledge acquired in one context is employed to improve optimization in a different context. TL is commonly employed when the new dataset, intended for training the pre-trained model, is smaller in size compared to the original dataset. TL can convey four distinct types of knowledge for target tasks: relational knowledge, feature representation, parameter information, and instance knowledge [214]. Deep learning frequently transfers representations by reusing models built on a source model because deep learning automatically learns and keeps the feature representation on network layers and weights. Figure 11 depicts the overall TL procedure. TL typically involves the following three stages:

-

Rich source domain data \(\textit{X}_{S}\) is used to train a deep learning model \(\textit{Y} = f_{A}\) for source domain Task A until the optimal weights converge and the cost function \(\textit{J}_{A}\) is minimized.

-

The deep learning model \(\textit{Y}' = f_{B}\) for Task B is built on top of this learned model. The new model \(\textit{Y}' = f_{B}\) reuses the first n layers from the original model (n = 3 in Fig. 11). This ensures that \(\textit{f}_{B}\) creates representations that adhere to the information discovered in the source domain.

-

Using the sparse, labeled training data \(\textit{X}_{T}\), the transferred model \(\textit{Y}' = f_{B}\) is trained to minimize \(\textit{J}_{B}\).

The result is a deep learning model for Task B’s target domain that incorporates information from the source domain.

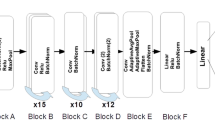

In [215], the TL was conducted at three levels: shallow, middle, and deep. In the shallow experiment, features were extracted from the initial blocks of the base models, incorporating the small classification model. The intermediate experiment removes the block from the center of the base model. On the other hand, the deep experiment retained the original base model’s blocks, excluding the last classification layers. Experimenting with two CNN models on three distinct remote sensing datasets (UC Merced, AID, and PatternNet) demonstrated that TL, especially fine-tuning, is a robust approach for classifying remote sensing images, consistently outperforming a CNN with randomly initialized weights.

4.2.4 Ensemble learning

Ensemble learning (EL) combines outputs from multiple models to achieve superior predictive performance [216]. The four primary categories of EL techniques are as follows:

-

1.

Boosting, as shown in Fig. 12a, is a technique that creates several classifiers to increase any classifier’s accuracy. A classifier chooses its training set depending on how well its last classifier performed. A sample that a prior classifier has wrongly categorized is chosen more frequently than one that has been successfully classified. As a result, boosting creates a new classifier that can successfully process the new data set.

-

2.

Bagging or bootstrap aggregating, as shown in Fig. 12b, is an ensemble learning technique in machine learning designed to improve prediction accuracy by training separate models with bootstrap samples. It typically aids in reducing variance and mitigating overfitting. In classifiers that use bagging, each classifier’s training set is generated by drawing N instances at random with replacement from the original dataset. This process, known as bootstrap sampling, creates multiple different training sets. In this case, many of the original instances might be repeated, while others might be omitted from the training set. The learning system from a sample creates a classifier, and the final classifier is created by combining all of the classifiers created from the many trials.

-

3.

Stacking, as shown in Fig. 12c, is a technique that uses a variety set of models as base learners and utilizes another model or the combiner to aggregate prediction. Here, the combiner is referred to as a meta-learner. The base models are trained first, and their predictions are aggregated as input features for the meta-learner.

-

4.

Random forest, as shown in Fig. 12d, is an ensemble learning approach that includes training a large number of decision trees and combining those decision trees’ predictions through voting. Instead of having just one decision tree, the random forest method uses sample data from the population to generate several decision trees. When merged, the many samples (bootstrap samples) produce many individual trees that make up the Random Forest.

The EL has been used for the semi-supervised classification of RS scenes [217]. The residual convolutional neural network (ResNet) [141] extracts initial image features. EL is used to exploit the information included in unlabeled data in order to generate discriminative picture representations. Initially, T prototype sets are generated periodically from all accessible data. Each set consists of prototype samples that serve as proxy classes for training supervised classifiers. Afterward, an ensemble feature extractor (EFE) is produced by combining T-learned classifiers. The final image representation is created by concatenating the classification scores from all T classifiers by feeding each image’s preliminary ResNet feature into EFE. The experimental results on the publically available AID and Sydney datasets demonstrate that the learned features and semi-supervised technique provide improved performance.

EL has been also used to categorize multiple sensor data using a decision-level fusion technique [218]. CNN-SVM ensemble systems were used to classify Light Detection and Ranging (LiDAR), HSI, and extremely high-resolution Visible (VIS) images. A random feature selection is used to construct two CNN-SVM ensemble systems, one for LiDAR and VIS data and the other for HSI data. VIS and LiDAR data are extracted for texture and height information first. Together with hyperspectral data, these extracted features are used in a Random Features Selection technique to generate various subsets of retrieved features. All feature spaces are provided as input layers to different deep CNN ensemble systems. Weighted majority voting (WMV) and behavior knowledge space (BKS) were applied to each CNN ensemble as the final classifier fusion approaches. The result indicated that the suggested technique produces more precision and outperforms several current methods.

4.2.5 Multi-instance learning

MIL general architecture [219]

The conventional data description applies to single-instance learning, in which each instance of a learning object is characterized by several feature values and, perhaps, an associated output. In contrast, a bag (learning sample or object) is linked to several instances or descriptions in multiple instance learning (MIL) [219, 220]. The objective of a MIL classification is to assign unseen bags to a particular class driven by the class labels within the training data or, more precisely, to use an estimation model constructed from the labeled training bags. An instance-based algorithm’s overall design is shown in Fig. 13. It is represented as a bag, \(Y \in \mathbb {N}^\mathbb {Y}\), holding n instances, \(y_{1},\ldots , y_{n} \in Y\).

There are four different options to choose from:

-

1.

K (Set of bag labels): K’s length is the number of classes.

-

2.

\(\Lambda\) (Set of instance labels): Bag sub-classes or instance-level concepts might correlate to instance labels.

-

3.

M (MIL assumption): It requires the construction of an explicit mapping between the set of instance labels and the set of bag labels. It is a function \(M: \mathbb {N}^ \Lambda \rightarrow \mathbb {K}\) that links the class label of instances within a bag to the class label assigned to the bag.

-

4.

A technique for locating the instance classifier \(m: \mathbb {Y} \rightarrow \Lambda\) (utilized for the classification of instances within each bag).

Since each unique instance requires a class label, single-instance learners cannot be applied directly to MIL data. (A bag classifier is required.) A MIL hypothesis is performed over instance labels to get the bag label.

For the application of MIL to RS, the input images in an RS system are broken down into multiple sub-images, each of which is handled as a different instance of that class. The learning system will then discover which sub-image is crucial for correctly predicting the image’s class. The total accuracy of the algorithm may be increased by constructing a neural network that can concentrate on the area of the picture that is more crucial to the categorization.

The MIL has been used for classifying scenes in RS [221]. Generally, one segment of a scene identifies its class, while the others are unimportant or belong to another class. The first stage of the proposed method splits the picture into five instances (the center image plus the four corners). Subsequently, a deep neural network is trained to retrieve intricate convolutional features from individual instance and ascertain the optimal weights for their fusion through weighted averaging

Multiple instance learning has been employed as the end-to-end learning system [221]. Here, two instances were used: one to characterize the spectral information of multispectral (MS) photographs and the other to capture the spatial information of panchromatic (PAN) images. The relevant spatial information of PAN and the associated spectral information of MS are extracted using deep CNN and stacked autoencoders (SAE), respectively. The last step was joining the features from the two instances together using fully connected layers. Four aerial MS and PAN images were used in classification studies, and the results showed that the classifier offers a workable and effective solution.

4.2.6 Reinforcement learning

Reinforcement learning (RL) is a machine learning paradigm where an agent learns to make decisions by taking actions in an environment to maximize some notion of cumulative reward [222]. Unlike supervised learning, where the model learns from a dataset of input-output pairs, RL focuses on learning from the consequences of actions, guided by a reward signal. This framework makes RL particularly well suited for problems where an agent interacts with an environment, making it applicable to various remote sensing tasks.

In remote sensing, RL has been utilized for tasks such as satellite task scheduling, resource management, and dynamic path planning for unmanned aerial vehicles (UAVs). The key advantage of RL in these scenarios is its ability to handle sequential decision-making problems and adapt to changing environments.

One application of RL in remote sensing is dynamic path planning for UAVs. UAVs are increasingly used for environmental monitoring, disaster response, and agricultural surveillance. RL algorithms, such as Q-learning or deep Q-networks (DQN) [223], can be employed to optimize the flight paths of UAVs to maximize coverage, minimize energy consumption, or avoid obstacles [224]. By learning from interactions with the environment, the UAV can adapt its path in real-time to changes in the environment, such as moving obstacles or areas of interest.

Another significant application is satellite task scheduling, where multiple satellites need to be coordinated to maximize the overall mission objectives, such as maximizing data collection or minimizing observation gaps. RL techniques can be used to optimize the scheduling of satellite observations, taking into account various constraints like limited satellite resources, orbital dynamics, and conflicting observation requests [225]. This can result in more efficient use of satellite resources and improved data acquisition strategies.

RL has also been applied to the problem of data fusion in remote sensing, where information from multiple sensors needs to be integrated to produce a comprehensive understanding of the observed environment. By treating the fusion process as a sequential decision-making problem, RL algorithms can learn optimal strategies for combining data from different sources to enhance the accuracy and reliability of the resulting information [226].

Despite its potential, the application of RL in remote sensing comes with challenges, such as the need for large amounts of data to train the models and the complexity of accurately modeling the environment. However, ongoing research is addressing these challenges [227], making RL a promising tool for advancing remote sensing technologies.

5 Sensor types and remote sensing datasets

A wide variety of datasets has been collected using an array of sensors. In this section, we will examine different types of sensors and describe different categories of remote sensing datasets.

5.1 Sensor types

In RS, sensors can be described as mechatronic instruments that comprise electrical, mechanical, and computing elements. Carried on board satellites, airborne vehicles, or installed (in situ) on the ground, they record electromagnetic signals as digital data to study Earth processes or atmospheric phenomena.

Satellite-mounted sensors can cover large areas of the Earth’s surface, but are limited to the satellite’s orbital path and are obstructed by clouds [228]. Example applications for satellite RS include monitoring forest fires [229], drought [230], atmospheric particulate matter concentrations [231], and sea ice thickness [232].

Airplane, helicopter, and unmanned aerial vehicle (UAV) mounted sensors have the advantage of high to very-high spatial resolution, custom flight paths, and Light Detection and Ranging (LiDAR) capabilities; however, they require flight operation efforts and have relatively small area coverage. Example applications include crop monitoring and vegetation mapping (Table 3), disaster response (Table 4), and environmental monitoring (Table 5).

Ground-based remote sensing systems (GRSS) are installed on the Earth’s surface, where several sensors are spatially distributed and accessed collectively. Example applications include: in situ real-time monitoring of algae blooms and water quality inland and in oceans [233]; landslide mapping and early warning [234]; distributed surface temperature; and wind speed profile measurement [235].

Sensors can be passive or active. An example of a passive sensor is a satellite-mounted infrared (IR) camera. It captures the thermal radiation emitted or reflected by objects on the Earth’s surface. In this case, the heat radiation originates from the Sun and reflects into the IR camera aperture. An active sensor, such as radio detection and ranging (Radar), produces radiation energy to expose the objects it is sensing by capturing the reflected electromagnetic radiation.

Sensor resolution is an important characterization of the imaging sensor modality. Three types of resolution are meaningful in RS, namely spatial, spectral, and temporal resolution [236]. The composition of these types of resolution can affect the feasibility of RS applications as shown in Fig. 14. Spatial resolution refers to the sensor’s ability to resolve small details. For example, a satellite image might have a resolution of 1 pixel per meter, whereas a UAV sensor may have twice the spatial resolution at 1 pixel per 0.5 meter, i.e., 2 pixels per meter. Spectral resolution refers to the number of discrete electromagnetic radiation bands the sensor can process, i.e., record the average power from. A high spectral resolution sensor is sensitive to narrower, and more, spectral bands. For a given spectral sensing range, a low spectral resolution sensor will have fewer, and wider, spectral bands, than a high spectral resolution sensor. For example, a color camera with red, green, and blue (RGB) channels (3 bands between 450 and 650 nm), has higher spectral resolution than a bandpass (1 band between 1150 and 1300 nm) short-wave infrared (SWIR) camera. Finally, temporal resolution in RS refers to the sensor’s ability to repeat sensing the same area. For example, a UAV-mounted sensor has a much higher temporal resolution than a satellite sensor, which requires a long time to complete the Earth orbit and return to the designated area for repeated sensing [237].

Earth’s atmosphere blocks some electromagnetic wavelengths due to the presence of Ozone, water, carbon dioxide, and other particles. This protects the surface from dangerous radiation such as X-rays and high-energy ultraviolet (UV) wavelengths. RS sensors are developed to measure the radiation that is not blocked by the atmosphere, i.e., that passes through the “atmospheric window.”

Ultraviolet (UV) sensors are sensitive in the range between 10 and 400 nm. RS applications that utilize UV sensors include Ozone layer detection, ocean color, and oil spill detection [238, 239].

Red–green–blue (RGB) sensors are essentially color cameras sensitive to the visible spectrum color bands 380 nm (shortest blue) to 850 nm (longest red). This is the range of wavelengths the human eye is sensitive to. The Landsat-8 satellite, for example, includes RGB sensors as follows: red (640–670 nm); green (530–590 nm); and blue (450–510 nm) [240]. Some examples of RGB use in RS include urban sprawl and drought mapping [241, 242].

Near infrared (NIR) sensors are sensitive to the electromagnetic band between 850 and 900 nm. In addition, short-wave infrared (SWIR) are sensitive between 900 and 2500 nm. These two bands measure reflected infrared radiation, as opposed to thermal radiation, which requires medium and long infrared red (MWIR, LWIR) sensors, to detect. These span the wavelengths between 3000 to 5000 nm, and 8000 to 12000 nm, respectively. RS applications utilizing infrared sensors include: NIR and SWIR in the estimation of soil carbon content [243]; vegetation canopy studies using near-infrared imaging [244]; and thermal imaging for urban climate and environmental studies [245].

The passive microwave electromagnetic range is between 1 and 200 GHz (1.5 and 300 mm). Like thermal sensors, passive microwave sensors collect radiation emitted by objects. Water and oxygen molecules in Earth’s atmosphere absorb some of the shorter wavelengths. RS applications include monitoring the spatial distribution of permafrost [246] and land surface temperature [247]. Beyond the passive microwave radio are the higher-frequency detection and ranging (RADAR) waves. Synthetic aperture radar (SAR) sensors are active and send microwave pulses that reflect off of objects such as the Earth’s surface back to the transmitter, usually on a satellite. An example application is the estimation of vegetation thickness for forest fire studies [248], the study of sea surface winds and waves from spaceborne SAR [249], arctic ice thickness monitoring [250].

Multispectral imagery refers to the utilization of between 3 and 10 bands in the electromagnetic spectrum. RGB, for example, can be considered multispectral imaging as it collects information from three color bands. Also, the Landsat-8 satellite can measure 11 bands from indigo to Thermal IR, in roughly 40 nm steps, i.e., bands [240]. Example applications are in: disaster response with multispectral SAR [251], and multispectral RGB and NIR [252]; land mapping using multispectral LiDAR [253]; and agriculture and forestry [254,255,256].

Hyperspectral imaging has a much higher spectral resolution than multispectral, with narrower bands between 10 and 20 nm, as well as the measurement of from hundreds to thousands of bands [257]. The Hyperion (EO-1 satellite) [258], for example, measures 220 spectral bands between 400nm (violet) and 2500nm (SWIR). Example applications utilizing hyperspectral imaging are: land mapping [253]; agriculture and forestry [255, 259]; and reservoir water quality monitoring [260].

5.2 Aerial datasets

Unmanned aerial vehicles (UAVs) are commonly used nowadays as a remote sensing platform that holds different types of imaging devices ranging from RGB, and thermal cameras to hyperspectral and miniaturized SAR devices. Despite the fact that UAVs have limited power sources and can only cover relatively limited areas compared to their satellite counterpart, UAVs offer an attractive solution when on-demand images from low altitudes are required in time-sensitive applications. Further, with their availability, low cost, easy-to-use, and high operational capability to capture images at high temporal and spatial resolutions, UAVs market has grown dramatically over the last decade and they are now used widely in different RS applications.

We used the data published in [261] to show the difference between using UAV and satellite platforms in terms of temporal, spatial, and spectral resolutions as well as swath. In [261], the researchers categorized the types of satellites into three categories as follows:

-

Global monitoring satellites (GM) such as MODIS Terra work in high orbit and provide high temporal resolution and relatively high swarth but offer a moderate spatial and spectral resolution.

-

Environmental monitoring satellites such as Landsat and Sentinel-2 provide moderate temporal, spatial, and spectral resolutions and high swath.

-

Civilian satellites such as Pleiades or Ikonos provide high spatial resolution but low temporal and spectral resolution as these satellites are at low orbit.

While different types of satellites provide different levels of resolutions, all UAV types guarantee high temporal and spatial resolution; however, they provide low swath. Nevertheless, UAVs offer an attractive solution for RS applications that require high temporal and spatial resolutions such as agriculture and disaster response. On the other hand, UAVs are not used widely in land-cover/land-use mapping due to the need for a high swath. Figure 14 illustrates the required resolution and swath in different RS applications and what resolution is offered by different RS platforms as indicated in [261].

With this big interest and growth in using UAVs as a remote sensing platform by governments and the RS research community, we present, in this section, a summary of up-to-date public UAV (aerial) datasets that were collected or synthesized over the last decade. In contrast to the very few existing review papers [262,263,264,265,266], we summarize the most popular and recent UAV datasets that cover the RS applications presented in section 4.2 (i.e., agriculture and forestry, environment monitoring, disaster response, land mapping). This summary of the available UAV datasets should greatly help the research community in its efforts to develop DL algorithms for aerial data analysis.

5.2.1 Datasets in agriculture and forestry

Developing reliable and robust DL methods for crop monitoring, disease detection, weed control, plant classification, and other precision agriculture and applications requires a high-quality, large-scale dataset. Practically, it is hard to build such datasets due to the cost and efforts that are needed for image acquisition, classification, and annotation. Therefore, datasets that are publicly available play an integral role in fostering remote sensing scientific progress and significantly reducing the cost and time needed for dataset preparation. In this subsection, we present a tabulated summary (Table 3) of recent publicly available datasets in the field of RS in agriculture and forestry.

The datasets are classified based on the application within precision agriculture. The table also provides the reader with a link to the dataset website as well as a brief description of the contents of the dataset. Our search was limited to aerial images that are acquired by UAVs, drones, airplanes, or any flying device. We also provide the sensor type in each dataset which is entirely dependent on the type of application [65], as indicated in Fig. 15. For example, multispectral images are used mainly in precision irrigation and disease detection while RGB is mainly used for weed control.

5.2.2 Aerial datasets for disaster response

Unlike agriculture and forestry, finding public datasets of aerial images for disaster response can be challenging. As shown in Fig. 16, 53\(\%\) of the data sources for damage assessment as a result of natural disasters are acquired by satellites and only 21\(\%\) are acquired by UAVs [276]. However, with the increased interest in using UAVs for disaster response and damage assessment over the last 5 years, it is expected that UAVs will gain more volume as a source of data than satellites due to their high temporal and spatial resolution. Therefore, we are presenting in this section the publicly available aerial image datasets which are categorized by the type of disaster and ordered by the date of the last update of the dataset as shown in Table 4. A brief description of the dataset (based on the publishing source) is also provided.

5.2.3 Aerial datasets for environment monitoring

Due to the advantages of UAVs mentioned earlier in this section, they have been increasingly used for environmental monitoring, especially in hard-to-reach areas. UAV remote sensing technology is capable of operating at different spatial resolutions while keeping a high temporal resolution. Furthermore, with the recent advancements in the miniaturized multispectral and LiDAR sensors, UAVs have become the best choice for distinguishing between natural and pollutant materials and building precise 2D/3D maps of the land surfaces [120]. In recent times, unmanned aerial vehicles (UAVs) have emerged as a significant transformative factor in marine monitoring. They play a crucial role in addressing biological and environmental issues, encompassing tasks such as monitoring invasive species, conducting surveys and mapping, observing marine animal activities, and monitoring marine disasters [281, 282]. Imagery data acquired by UAVs are normally analyzed using DL which requires datasets of real images collected by UAVs. In this subsection, we present the recent publicly available datasets based on our review of the literature. Table 5 provides a list of the dataset categorized based on the application as well as a description of the dataset contents.

5.2.4 Aerial datasets for land mapping

UAVs have become valuable tools for land mapping and surveying due to their ability to capture high-resolution aerial images and data efficiently. Moreover, UAVs provide rapid cover for large areas which is important for time-sensitive projects, and can access hard-to-reach or hazardous areas, making them suitable for mapping terrain, forests, cliffs, and other challenging landscapes. UAVs can also capture images from multiple angles and altitudes, enabling the creation of 3D models of the land. These models are valuable for urban planning, archaeological site preservation, and environmental assessment. Repeated UAV flights over time can be used to monitor land changes, such as urban expansion, deforestation, or erosion. For land mapping, deep learning techniques are employed in various ways to extract valuable information from aerial. Deep learning models can be used to detect changes in land cover and land use over time by comparing historical and current imagery. It can be also applied to identify and extract building footprints from high-resolution aerial imagery and extract road networks, enabling the creation of detailed road maps. This information is essential for urban planning, disaster response, and infrastructure development. Deep learning algorithms proficiently classify land parcels or areas into different land-use types, such as residential, commercial, agricultural, or industrial, and can process LiDAR data to create accurate digital elevation models, which are essential for terrain analysis, and flood modeling. To implement deep learning in land mapping, large labeled datasets are required for model training, and specialized neural network architectures. Consequently, we present a compilation of recently accessible datasets, as per our comprehensive literature review. Table 6 illustrates a categorized list of datasets, detailing their respective applications and dataset contents.

5.3 Satellites datasets

There is a large number of active remote sensing satellites and databases, which are accessible through freely available and commercial interfacing software programs. Some of the common sources of remote sensing data are the United States Geological Survey; National Oceanic and Atmospheric Administration; National Aeronautics and Space Administration Earthdata; NASA Earth Observations; European Space Agency; Japan Aerospace Exploration Agency; AirBus Defense and Space; MAXAR Company; Planet Labs; Satellite Imaging Corporation; Apollo Mapping. We present a selection of popular and recently cited datasets of overhead imagery, mostly from remote sensing Earth satellites. The available satellite data is vast. Most datasets combine satellite imagery from multiple satellites with ground-based measurements to train artificial intelligence models to create algorithms that can be used to process new data. For example, a local 2011 study of mangrove forests in the coastal region of West Africa was used to develop a general model for mangrove detection globally [297].

We present a partial list of interesting satellite-based datasets in the four most popular applications. Agriculture and forestry datasets are included in Table 7. Such datasets commonly reply to RGB color imagery, as well as infrared to detect the extent of vegetation on the surface. Often data products for analysis via machine learning are created to include urban sprawl, human development, and water levels. The results are often global maps useful for assessment and planning.

Furthermore, disaster response satellite datasets are listed in Table 8; common sensor modalities include infrared, radar, RGB, and multispectral imagery. Applications include the study of global storms, floods, and landslide patterns, as well as volcano activity, temperature extremes, wildfires, and building damage mapping.

In addition, environmental monitoring satellite datasets are listed in Table 9. Applications include monitoring wildlife habitats, ice sheet monitoring, climate predictions, atmospheric gas concentrations, freshwater reservoir assessment, ocean flux, and plastic pollution monitoring. Sensor modalities include combinations of multispectral, radar, GPS, ground data, infrared, and RGB.

Finally, satellite datasets for scene classification and object segmentation detection are listed in Table 10. Applications include semantic segmentation of overhead scene pixels into categories of land use, as well as road, car, and ship detection. Sensor modalities include RGB, infrared, radar, and multispectral imagery.

6 Discussion and future direction

6.1 Perspective from imaging and sensing

The combination of UAV and satellite imagery with deep learning algorithms in remote sensing is anticipated to persist in transforming our capacity to observe and comprehend the Earth’s surface and its dynamic processes. This advancement is poised to contribute to progress in fields such as agriculture, environmental conservation, disaster management, and urban planning, among others. Drawing from our expertise and comprehensive review of this field, we present key trends and areas of development as follows:

-

Enhancing the spatial and temporal resolution of satellite and UAV imagery is imperative. This enhancement will facilitate more frequent and detailed monitoring of landscapes, ecosystems, and urban areas.

-

Multi-sensor integration is a critical requirement within the field of remote sensing data analysis and remote sensing, where data from a variety of sensors such as optical, thermal, LiDAR, and hyperspectral sensors must be effectively combined. This integration offers the potential for a more comprehensive understanding of the environment. However, it also presents a new challenge in the implementation of novel deep learning architectures, as they need to be capable of handling the fusion of multi-modal data for enhanced analysis. Standardize data and calibration procedures across different sensors and the development of DL architectures designed for multi-sensor integration with the collaboration of the sensor manufacturers, could help to mitigate the aforementioned challenges

-

In dynamic scenarios applications, such as disaster response and precision agriculture, real-time and on-device processing of UAV imagery is an immediate need. However, this also requires efficient algorithms and hardware capable of handling large volumes of data with low latency. The development of lightweight DL models optimized for on-device processing could be needed for this scenario, in which edge computing and distributed processing systems are used to reduce latency problems.

-

There will be an increasing trend toward tailoring deep learning models with satellite/UAV platforms to specific applications, whether it is precision agriculture, forestry management, environment monitoring, or disaster management. This requires a deep understanding of the domain and the ability to adapt to changing requirements.

-

As the use of UAV/satellite and deep learning in remote sensing grows, there will be a greater focus on ethical and regulatory issues, including privacy concerns, data security, and compliance with local and international regulations. Methods such as strong data encryption and access control measures to protect sensitive data could be implemented to mitigate privacy concerns. Additionally, educate stakeholders about the importance of data privacy and security.

6.2 Perspective from learning algorithms

Supervised classification algorithms need labeled data to classify RS images correctly. However, collecting labeled samples for learning is time-consuming and costly. Accurate classification maps need sufficient and high-quality training data. One of the major limitations of these supervised approaches is the scarcity of a dataset superior in quantity and quality for training the classifier. In remote sensing image classification, data labeling can be tricky and time-consuming. Thus, semi-supervised learning (SSL) approaches have been used to train the classifier using labeled and unlabeled data to enrich the input to the supervised learning algorithm and increase classification accuracy. This is especially beneficial in remote sensing, where gathering and classifying vast training data may be time-consuming and costly. However, SSL approach poses some challenges. It requires carefully selecting the labeled and unlabeled data and an appropriate semi-supervised learning algorithm. Different SSL algorithms may perform variably depending on the dataset characteristics and classification tasks, where there is a need for algorithms that can adapt to the specific properties of remote sensing data, such as high dimensionality and spectral variability. To address this issue, some advanced data selection methods could be an option, such as developing reinforcement learning-based strategies for dynamically selecting the most informative labeled and unlabeled samples during training. In addition, future research can focus on enhancing domain adaptation techniques [330] within SSL frameworks to handle variations in data distributions across different regions and sensor types. We also suggest exploring other types of machine learning techniques like curriculum learning [331], where the model is trained on easier tasks or samples first, gradually increasing the complexity to improve learning efficiency and performance.