Abstract

Photovoltaic (PV) system performance and reliability can be improved by detecting defects in PV modules and evaluating their effects on system operation. In this paper, a novel system is proposed to detect and classify defects based on electroluminescence (EL) images. This system, called Fault Detection and Classification (FDC), is split into four modules: (1) Image Preprocessing Module (IPM), (2) Feature Extraction Module (FEM), (3) Feature Selection Module (FSM), and (4) Classification Module (CM). In the first module (i.e., IPM), the EL images are preprocessed to enhance their quality. Next, two types of features are extracted from these images and fused together in FEM. Then, in FSM, the most important and informative features are selected using a new feature selection methodology, namely, Feature Selection-based Chaotic Map (FS-CM). FS-CM consists of two stages: a filter stage that uses chi-square to make an initial selection of the most effective features, and a modified selection stage that uses an enhanced version of the Butterfly Optimization Algorithm (BOA). BOA is a popular swarm-based metaheuristic optimization algorithm that has achieved success only recently. Although BOA has many benefits, it also has drawbacks, including a small population and a high likelihood of getting stuck in a local optimum. To improve its performance, this paper proposes a chaotic-based butterfly optimization algorithm. Finally, the selected features are fed to the proposed classification model in CM, called the Hybrid Classification Model (HCM). HCM consists of two stages: a binary classification stage using Naïve Bayes (NB) and a multi-class classification stage using an enhanced multi-layer perceptron. According to the experimental results, the proposed FDC system outperforms the most recent methods.
FDC achieved 98.2% accuracy, 89.23% precision, 87.2% sensitivity, 87.9% specificity, 87.55% g-mean, and 88.20% f-measure.

1 Introduction

This section has two parts: the first covers PV panel defect detection, and the second presents the research contributions and the organization of the paper.

1.1 PV panel defect detection

Growing global prosperity and population have placed heavy strain on the world's electrical grids. According to recent reports, the world's annual electricity consumption is projected to double by 2050 [1]. Currently, fossil fuels such as coal, which contribute to climate change, remain the industry standard for generating electricity. The International Energy Agency [2] found that conventional energy production was responsible for 66% of the world's annual carbon dioxide emissions in 2014; moreover, conventional energy sources are finite. Renewable and low-carbon energy sources can therefore play a crucial role in supplying the electrical energy needed for environmentally friendly and sustainable energy production [3]. Recent research indicates that by 2050, photovoltaic and wind energy systems will account for 88% of the world's total electricity generation needs.

A photovoltaic (PV) system is made up of many components, such as cells, wires, inverters, structures, and mechanical connections. The peak kilowatt (kWp) rating represents the maximum power the system can deliver when the sun is overhead [4]. PV-based energy systems are popular among renewable energy sources because they are reliable, run quietly, can be deployed all over the world, and are easy to set up, and because the cost of PV modules is falling [5]. PV modules can also operate on their own and are easy to mount on building roofs. Consequently, the number of PV generation plants installed has risen sharply every year [6].

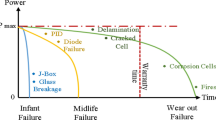

Generating electricity with PV systems is a growing area of research in both academia and industry. As PV systems become more reliable and complex, their operation has become an important automation problem [7]. Grid-connected PV systems are among the fastest-growing energy technologies, so safe operation and handling are paramount. Problems in a PV system fall into two main categories: internal and external. Internal causes include broken cables and inadequate wiring; external causes include shading from trees or clouds and hotspots caused by the ambient temperature. Because solar modules are vulnerable to surface changes, a fault detection system is necessary. Such faults and defects reduce a module's output and, in the worst cases, stop it from functioning altogether. The difficulty is that most faults cannot be detected with standard cameras [8]. Several imaging methods for PV modules are depicted in Fig. 1.

Compared with direct measurements, infrared (IR) thermal imaging is a non-destructive, non-contact method for assessing the quality of solar modules. Solar cells that have been disconnected from the module's electrical circuit, whether temporarily or permanently, are a telltale sign of damage: because they can no longer convert the sun's energy into electricity, they overheat, and an IR camera can capture an image of the emitted infrared radiation [9]. Electroluminescence (EL) imaging is another well-established non-destructive technique for analyzing PV module failures, and it can provide much clearer images [10]. Defective cells appear darker in EL images because their disconnected parts do not emit light. An EL image is obtained by applying a current to a PV module, which causes it to emit EL light at a wavelength of 1150 nm; a silicon Charge-Coupled Device (CCD) sensor can capture this emission. Because of the high spatial resolution of the image, small cracks can be detected, and unlike IR images, EL images are not blurred by lateral heat propagation. However, visual inspection of EL images by experts is time-consuming and costly [11].

Researchers have recently worked intensively on solutions to these PV system failures [12]. One modern approach to diagnosing faults is Artificial Intelligence (AI), which has proven able to model, control, predict, forecast, diagnose, and classify problems [13]. AI is used in several steps of a PV monitoring system: (1) data acquisition, (2) image preprocessing, and (3) defect classification, as shown in Fig. 2. In the data acquisition step, two main types of images are used: thermal images and electroluminescence images. The collected PV images are then processed in the image preprocessing step, which includes feature extraction and feature selection. Feature extraction compresses data by deriving salient characteristics from a larger data sample [14].

Once the features have been extracted, the most useful and efficient ones should be chosen. This step, called feature selection, chooses a subset of features that provides useful information for the classification problem [14, 15]. The goal of feature selection is to increase accuracy while discarding as little information as possible [14, 15]. Several techniques are used to select the most informative features, including the Fisher score, chi-square score, correlation-based feature selection, and the fast correlation-based filter [16,17,18]. The final step is defect classification, which detects and classifies the type of failure in the PV modules.

1.2 Research contributions and organization

The primary goal of this paper is to create a Fault Detection and Classification (FDC) system for PV systems based on EL images in order to improve their dependability and safety. FDC consists of four modules: (1) Image Preprocessing Module (IPM), (2) Feature Extraction Module (FEM), (3) Feature Selection Module (FSM), and (4) Classification Module (CM). In the first module (i.e., IPM), EL images are preprocessed to improve their quality through several operations, including cropping, resizing, and rotating. In the second module (i.e., FEM), statistical and texture features are extracted from the enhanced images and fused together. The most important and effective features are then selected in FSM to improve classification accuracy. In FSM, a novel methodology called Feature Selection-based Chaotic Map (FS-CM) is introduced to select the most useful and meaningful features from the extracted ones. FS-CM combines filter and wrapper methods in two stages: a filter stage and a modified selection stage. In the filter stage, chi-square is used to preselect the most useful features; as a filter method, chi-square is fast. In the modified selection stage, the Butterfly Optimization Algorithm (BOA), enhanced with a chaotic map, is used as a wrapper method to select the final subset of features accurately. BOA is a modern swarm intelligence (SI) algorithm modeled on the natural foraging behavior of butterflies. Like other SI algorithms, it is easy to use, derivative-free, and has relatively few tunable parameters, and it is adaptable, scalable, sound, and complete.

Finally, in the last module (i.e., CM), a hybrid model is used to detect and classify defects in PV modules based on the selected features. This model, called the Hybrid Classification Model (HCM), consists of two stages: a Binary Classification Stage (BCS) and a Multi-class Classification Stage (MCS). In the first stage, PV modules are classified as healthy or defective using Naïve Bayes (NB). NB is a relatively straightforward ML method with impressive practical applications: it is quick and simple to implement, and it requires only a small amount of training data to estimate the parameters of a classification model accurately. In the second stage, defective modules are classified into different defect types using an Enhanced Multi-Layer Perceptron (EMLP), in which Gray Wolf Optimization (GWO) fine-tunes the MLP's weights and biases so that training can efficiently search for a globally optimal solution. With tuned weights and biases, the MLP can classify PV defect classes accurately.
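The two-stage routing of HCM can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: stage 1 is a from-scratch Gaussian Naïve Bayes (the NB variant used in the paper is not stated, so the Gaussian form is an assumption), and stage 2 is a hypothetical nearest-centroid classifier standing in for the GWO-tuned EMLP, which is not reproduced here. The feature values and class names ("healthy", "crack", "finger") are made-up toy data.

```python
import math
from collections import defaultdict


class GaussianNB:
    """Minimal Gaussian Naive Bayes: one mean and variance per feature per class."""

    def fit(self, X, y, eps=1e-9):
        groups = defaultdict(list)
        for row, label in zip(X, y):
            groups[label].append(row)
        self.params = {}
        for label, rows in groups.items():
            cols = list(zip(*rows))
            means = [sum(col) / len(col) for col in cols]
            variances = [sum((v - mu) ** 2 for v in col) / len(col) + eps
                         for col, mu in zip(cols, means)]
            self.params[label] = (math.log(len(rows) / len(X)), means, variances)
        return self

    def predict(self, x):
        def log_posterior(label):
            log_prior, means, variances = self.params[label]
            return log_prior + sum(
                -0.5 * math.log(2 * math.pi * var) - (xi - mu) ** 2 / (2 * var)
                for xi, mu, var in zip(x, means, variances))
        return max(self.params, key=log_posterior)


def fit_nearest_centroid(X, y):
    """Hypothetical stand-in for the GWO-tuned EMLP: nearest class centroid."""
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append(row)
    centroids = {lab: [sum(col) / len(col) for col in zip(*rows)]
                 for lab, rows in groups.items()}

    def predict(x):
        return min(centroids, key=lambda lab: sum((a - b) ** 2
                                                  for a, b in zip(x, centroids[lab])))
    return predict


def hcm_predict(x, stage1, stage2):
    """Stage 1 (NB) screens healthy vs. defective; only defects reach stage 2."""
    if stage1.predict(x) == "healthy":
        return "healthy"
    return stage2(x)


# Toy two-feature data (illustrative only).
X = [[0.10, 0.20], [0.20, 0.10], [0.15, 0.15],   # healthy
     [0.90, 0.80], [0.80, 0.90], [0.85, 0.85],   # crack
     [0.90, 0.10], [0.80, 0.20], [0.85, 0.15]]   # finger
y = ["healthy"] * 3 + ["crack"] * 3 + ["finger"] * 3

stage1 = GaussianNB().fit(X, ["healthy" if lab == "healthy" else "defective" for lab in y])
stage2 = fit_nearest_centroid(X[3:], y[3:])  # trained on defective samples only
print(hcm_predict([0.88, 0.82], stage1, stage2))  # crack
```

Routing only defective samples to the second stage means the multi-class model never has to learn the "healthy" class, which is the design rationale the two-stage split suggests.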

The rest of the paper is organized as follows: Sect. 2 discusses the real-life applicability of FDC. Section 3 reviews previous work on PV defect detection systems. Section 4 explains the proposed system. Section 5 presents experimental evidence that the proposed method works. Conclusions and future work are given in Sect. 6.

2 FDC applicability for PV fault detection

In recent years, solar energy has surged in popularity as a reliable and efficient source of electricity and heat. PV systems play a significant role in the electricity and energy sectors, currently accounting for over 10% of the total power supply. Grid-connected PV systems are increasingly favored because of their cost-effective installation, rapid energy generation, and potential return on investment, which may include customer incentives. To ensure that PV installations are cost-effective, it is necessary to verify that they generate output energy consistently. As in other power generation systems, defects in PV systems are inevitable and difficult to address; the resulting challenges include preserving system reliability and mitigating power and energy losses. Faults in off-grid, on-grid, and hybrid power systems can be characterized as failures in the solar panels and in system components, including power converters and wiring connections.

Consequently, in this paper, we provide a real-life software program that can be installed on a desktop computer to help anyone working at the station detect PV defects. Figure 3 shows the real-life architecture of FDC, which consists of storage for the EL images, the AI system, and an alert module. First, EL images are collected and uploaded to Google Drive. Then, the AI system checks each EL image for defects. Its output passes to the alert module, which draws attention to any image containing a defect by displaying it on the program's screen. The main advantages of this program are that (1) anyone can use it without prior experience, (2) it is cheap and easy to use, and (3) it does not require maintenance.
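The storage, AI system, and alert flow of Fig. 3 can be sketched as a simple loop. The sketch assumes an in-memory dictionary in place of Google Drive and a hypothetical brightness-threshold `toy_classify` function in place of the trained FDC model; `run_pipeline` and `Alert` are illustrative names, not part of the described program.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Alert:
    """A notification shown on screen for an image classified as defective."""
    image_name: str
    label: str


def run_pipeline(image_names: Iterable[str],
                 load_image: Callable[[str], object],
                 classify: Callable[[object], str]) -> List[Alert]:
    """Send each stored EL image through the AI system and collect alerts."""
    alerts = []
    for name in image_names:
        label = classify(load_image(name))   # AI system: returns a class label
        if label != "healthy":               # alert module: only defects are reported
            alerts.append(Alert(name, label))
    return alerts


# Toy stand-ins: a dict as "storage" and a mean-brightness threshold as the
# "classifier" (defective regions appear darker in EL images).
storage = {"cell_001.png": [[0.9, 0.8]], "cell_002.png": [[0.2, 0.3]]}


def toy_classify(img):
    pixels = [p for row in img for p in row]
    return "healthy" if sum(pixels) / len(pixels) > 0.5 else "defect"


alerts = run_pipeline(sorted(storage), storage.__getitem__, toy_classify)
for alert in alerts:
    print(f"ALERT: {alert.image_name} -> {alert.label}")  # ALERT: cell_002.png -> defect
```

Passing the loader and classifier as callables keeps the loop independent of where images are stored and which model is used.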

3 Literature review

This section discusses previous work on PV defect detection systems. In [19], a Non-invasive Machine Learning-based Fault Diagnosis (NML-FD) method for PV modules based on thermal images was introduced. First, the input images are preprocessed through grayscale conversion and histogram equalization. Then, texture features are extracted using the Gray Level Co-occurrence Matrix (GLCM) and the Histogram of Oriented Gradients (HOG). After that, Principal Component Analysis (PCA) is used for dimensionality reduction. Finally, the reduced features are classified using Naïve Bayes (NB). The method achieved 94.10% accuracy at minimal computing cost.

In [20], a Deep Learning (DL) model for classifying defective PV modules was proposed. The model involves two steps: data augmentation and classification. First, data augmentation is performed using a Generative Adversarial Network (GAN) to overcome the limited number of available images. Then, a CNN is used to extract features from electroluminescence images. The DL model is compared with VGG16, ResNet50, Inception-v3, and MobileNet; its performance is assessed from several angles, and the numerical experiments demonstrate its effectiveness.

In [21], a Machine Learning Framework (MLF) was introduced to detect and classify faults in PV panels based on thermal images. MLF involves two processes. First, a new hybrid feature vector is formed using a data fusion approach; this vector combines RGB, texture, Histogram of Oriented Gradients (HOG), and Local Binary Pattern (LBP) features. Then, a Support Vector Machine (SVM) classifies the thermal images of PV panels into three categories: healthy, non-faulty hotspot, and faulty. Experimental results show that MLF achieves 96.8% training accuracy and 92% testing accuracy with lower computational complexity.

In [22], an Improved Fault Detection and Diagnosis (IFDD) method was proposed to detect PV system malfunctions. IFDD involves two main steps: feature extraction and selection, and fault classification. In the first step, PCA is used to extract and select the most important multivariate features, which are then fed to Supervised Machine Learning (SML) classifiers for fault diagnosis. The results show that the method is both practical and effective.

In [23], a Novel Intelligent Framework (NIF) was proposed for detecting PV plant defects. NIF consists of several processes: image acquisition, image segmentation, fault orientation, and defect warning. After images are taken with a handheld device, they are segmented using the fifth version of the You Only Look Once algorithm (YOLOv5). Then, a deep Residual Network (ResNet) is used for defect detection. According to the experimental results, NIF reaches 91.7% accuracy in segmentation and 95% in defect detection.

In [24], an Automatic Defect Detection (ADD) system was developed for detecting defects in photovoltaic modules. ADD has two phases: data augmentation and defect detection. In the first phase, data augmentation relies on the Wasserstein distance computation of WGAN-GP and adopts the step-by-step progressive growing idea of ProGAN to overcome data limitations. In the defect detection phase, a novel Self-Fusion Network (SeFNet) is combined with a High-Resolution Network (HRNet) to improve the classification accuracy of photovoltaic cell defects. The authors in [25] aimed to create a fault detection and identification algorithm for the DC side of a PV system. Their method takes a novel approach to PV fault detection and identification by using logistic regression with cross-validation. The technique is used in intelligent PV arrays that can report voltage and current readings from each PV cell in the array separately.

The authors in [26] developed a fault detection and diagnosis system using Genetic Algorithms (GA) and an Artificial Neural Network (ANN). First, PCA, a statistical method that transforms the original variables into latent ones, is used to extract features from the dataset. Then, GA is applied to select the most important of these features, which reduces the classifier's workload and the required computation time. Finally, the trained ANN classifies the selected features. The results validate the feasibility and effectiveness of the proposed GA-ANN for fault diagnosis with minimal computational time.

The study in [27] presents an innovative approach for identifying defects in solar cells using EL images. It focuses on the challenge of detecting cracks and labeling data in polycrystalline cells, which are more prone to surface impurities than monocrystalline cells. The study treats defect detection in PV module cells as an unsupervised domain adaptation problem and trains a cross-domain classifier using EL images. In addition, attention-based transfer learning and class-aware domain discriminators are proposed to improve feature learning for PV module cells. According to the results, the method improved defect detection performance.

To detect defects in PV panels, the authors in [28] proposed GBH-YOLOv5, which incorporates Ghost convolution with BottleneckCSP and a small-target prediction head into YOLOv5. The method aims to improve accuracy on multi-state targets: the BottleneckCSP module uses Ghost convolution to increase inference speed and reduce the number of parameters, and a prediction head for tiny targets reduces missed tiny defects. To enlarge the defects relative to the image, the original image is first cropped and compressed. The GBH-YOLOv5 model then takes the processed images as input and extracts deep features using Ghost convolution, the BottleneckCSP module, and the tiny-target prediction head. Feature Pyramid Networks (FPNs) and Path Aggregation Networks (PANs) are then used to fuse the extracted multi-scale features for classification. Experimental results confirm that the method is effective. Table 1 summarizes the advantages and disadvantages of the PV defect detection techniques reviewed above.

The research gaps in previous detection and classification systems for photovoltaic modules can be summarized as follows:

- Inadequate consideration of real-time implementation: Most studies do not fully examine whether the proposed fault detection systems can run in real time under practical conditions, which is critical for their deployment in PV monitoring.
- Inadequate emphasis on noise robustness: Several techniques perform poorly in the presence of noise or changes in ambient conditions, and little research has specifically addressed noise resilience.
- Lack of comparative analysis between different types of PV systems: Many studies focus on fault detection in specific types of PV systems and ignore comparisons across system architectures such as off-grid, on-grid, or hybrid PV systems.
- Inadequate exploration of hardware deployment: There has been little investigation into the hardware requirements or resource constraints of implementing these defect detection algorithms on the embedded systems or IoT devices used in PV monitoring.
- Limited uniformity of evaluation criteria: The lack of standardized evaluation measures across studies makes it difficult to compare the performance of different defect detection methods comprehensively.
- Minimal discussion of scalability: Studies frequently fail to address how these defect detection systems can scale efficiently to large photovoltaic installations, such as solar farms or utility-scale facilities.
- Limited examination of interdisciplinary approaches: Combining techniques from several disciplines (for example, computer vision with electrical engineering concepts) may yield more robust defect detection systems, but little research has been done in this area.

These research gaps are promising areas of focus for future studies that could advance defect detection and classification systems for solar modules.

In this paper, we propose a Fault Detection and Classification (FDC) system that addresses these research gaps by:

1. Explaining how the proposed system can be applied in real life.
2. Remaining consistent and robust in the presence of noise.
3. Supporting large-scale PV installation monitoring through an architecture whose algorithms are optimized for parallel processing and efficient resource utilization.
4. Combining computer vision techniques with domain-specific knowledge from electrical engineering, yielding a comprehensive defect detection framework that leverages the capabilities of multiple domains.
5. Using defined assessment criteria in the proposed Classification Module (CM), so that the system's performance is measured uniformly and can easily be compared with other methods.
6. Targeting real-time fault detection by improving the computational efficiency of the algorithms in each module; the Image Preprocessing Module (IPM) focuses on fast image normalization to enable real-time processing.

4 Fault Detection and Classification (FDC) system

PV energy has gained worldwide attention as a renewable resource and has expanded rapidly over the past decade. PV cells require close quality monitoring throughout the manufacturing, shipping, and use phases. Manufacturing errors, such as poor soldering or faulty wiring, threaten PV modules during assembly, and external stresses can cause cracks and other defects that diminish the modules' power output. In this paper, a Fault Detection and Classification (FDC) system for PV systems is proposed based on electroluminescence (EL) images. FDC consists of four modules, (1) Image Preprocessing Module (IPM), (2) Feature Extraction Module (FEM), (3) Feature Selection Module (FSM), and (4) Classification Module (CM), as shown in Fig. 4. The following subsections describe each module in detail.

4.1 Image Preprocessing Module (IPM)

The first step in the proposed system (i.e., FDC) is the Image Preprocessing Module (IPM). IPM is crucial because it prepares the data for the classification model. In IPM, the size and orientation of each EL image are standardized. In addition, the lens distortion introduced by the camera used to capture the EL images was corrected before the solar cells were extracted. Figure 5 shows some of the EL images used.
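A minimal sketch of the cropping, orientation, and size standardization is given below, assuming grayscale images stored as lists of rows, landscape as the target orientation, and nearest-neighbour interpolation; the paper does not specify the target size or interpolation method, so these are assumptions. Lens-distortion correction needs camera calibration parameters and is omitted; in practice a library such as OpenCV (`cv2.undistort`, `cv2.resize`) would typically replace these hand-written loops.

```python
def crop(img, top, left, height, width):
    """Cut a height x width window out of a 2D image (list of rows)."""
    return [row[left:left + width] for row in img[top:top + height]]


def rotate90_cw(img):
    """Rotate a 2D image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize: each output pixel copies the closest input pixel."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def standardize(img, out_h, out_w):
    """Rotate portrait images to landscape (assumed convention), then resize."""
    if len(img) > len(img[0]):
        img = rotate90_cw(img)
    return resize_nearest(img, out_h, out_w)


portrait = [[1, 2],
            [3, 4],
            [5, 6]]
print(standardize(portrait, 2, 3))  # [[5, 3, 1], [6, 4, 2]]
```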

4.2 Feature Extraction Module (FEM)

Feature Extraction (FE) is the process of deriving features from a grayscale image; the extracted features are crucial for machine learning-based classification [29]. There are various FE techniques, as shown in Fig. 6. In this paper, statistical and texture features are extracted from each grayscale image and fused together, as illustrated in Fig. 7. Statistical features are characteristics that can be defined and calculated using statistics [30]. First- and second-order statistical texture features were extracted from all images in this study. The three first-order statistical texture features, variance, skewness, and kurtosis, are computed from the image histogram. Techniques for extracting second-order statistical texture features include the Gray Level Co-occurrence Matrix (GLCM), the Gray Level Run Length Matrix (GLRLM), and the Gray Level Size Zone Matrix (GLSZM) [31]; in this work, GLCM is applied. In total, 25 features were extracted from each image (three first-order statistical features and 22 second-order features) [32]. Some of these features are [33]:

1. Contrast (C):

Contrast (C) measures the intensity difference between a pixel and its neighbor over the whole image and can be calculated using the following equation:

$$C=\sum_{m,n=0}^{N-1}{X}_{mn}{(n-m)}^{2}$$(1)

where \({X}_{mn}\) is the normalized GLCM value at position \((m, n)\), and \(N\) is the number of gray levels in the image.

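2. Correlation:

Correlation measures how linearly dependent the gray levels of neighboring pixel pairs are and can be determined using Eq. (2):

$${Correlation}=\sum_{n=0}^{N-1}\sum_{m=0}^{N-1}{X}_{nm}\frac{(n-{\mu }_{n})(m-{\mu }_{m})}{{\sigma }_{n}{\sigma }_{m}}$$(2)

where \({\mu }_{n}\) and \({\mu }_{m}\) are the GLCM means (weighted averages of the gray levels over all pixel pairs that contribute to the GLCM), which can be determined using the following equation:

$${\mu }_{n}=\sum_{n,m=0}^{N-1}n{X}_{nm},\qquad {\mu }_{m}=\sum_{n,m=0}^{N-1}m{X}_{nm}$$(3)

and \({\sigma }_{n}\) and \({\sigma }_{m}\) are the corresponding GLCM standard deviations, with \({\sigma }_{n}^{2}=\sum_{n,m=0}^{N-1}{(n-{\mu }_{n})}^{2}{X}_{nm}\) and \({\sigma }_{m}^{2}=\sum_{n,m=0}^{N-1}{(m-{\mu }_{m})}^{2}{X}_{nm}\).

3. Entropy: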

Entropy is a measure of randomness that quantifies the information content of the GLCM. Entropy can be calculated using Eq. (4):

$${Entropy}= -\sum_{n,m=0}^{N-1}{X}_{nm}\,\mathit{ln}({X}_{nm})$$(4)

where terms with \({X}_{nm}=0\) are taken to be zero.

4. Energy:

Energy, also known as the angular second moment or uniformity, is the sum of the squared GLCM entries and is high when the image texture is uniform. Energy can be determined using the following equation:

$${Energy}=\sum_{n,m=0}^{N-1}{({X}_{nm})}^{2}$$(5)

5. Standard deviation:

Standard deviation (Sd) quantifies the dispersion of the data around the mean, that is, how far apart the individual data points are. It is determined using the following equation [34]:

$$Sd=\sqrt{\frac{1}{N-1}\sum_{i=1}^{N}{({Z}_{i}-\overline{Z })}^{2}}$$(6)

where \(Sd\) is the standard deviation, \({Z}_{i}\) is the \({i}^{th}\) data point, and \(N\) is the number of data points (not the number of gray levels used in Eqs. (1) to (5)). Additionally, \(\overline{Z }\) is the mean, which can be calculated using the following equation:

$$\overline{Z }=\frac{1}{N}\sum_{i=1}^{N}{Z}_{i}$$(7)

6. Skewness:

Skewness quantifies the asymmetry of a distribution; a distribution is symmetric when the data look the same to the left and right of the center. Skewness can be determined using Eq. (8) [34]:

$${Skewness}=\frac{\sum_{i=1}^{N}{({Z}_{i}-\overline{Z })}^{3}/N}{{Sd}^{3}}$$(8)

7. Kurtosis:

Kurtosis quantifies how heavy the tails of a distribution are relative to a normal distribution. Datasets with high kurtosis have heavy tails, or outliers, whereas datasets with low kurtosis generally have light tails and few outliers. In the extreme case where all data points are identical, \(Sd=0\) and kurtosis is undefined. Kurtosis can be determined using the following equation [34]:

$${Kurtosis}=\frac{\sum_{i=1}^{N}{({Z}_{i}-\overline{Z })}^{4}/N}{{Sd}^{4}}$$(9)
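To make Eqs. (1) to (9) concrete, the following pure-Python sketch builds a GLCM and computes the features listed above. It assumes the image has already been quantized to integer gray levels 0 to N-1, uses a single horizontal offset of one pixel, and counts each pixel pair in both directions so that the GLCM is symmetric; the paper does not state its offsets, quantization, or symmetry choice, so all three are assumptions. Libraries such as scikit-image (`graycomatrix`, `graycoprops`) provide related, faster routines.

```python
import math


def glcm(img, levels, dx=1, dy=0):
    """Normalized, symmetric GLCM X for the pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = img[r][c], img[r2][c2]
                counts[a][b] += 1
                counts[b][a] += 1  # count both directions -> symmetric matrix
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def glcm_features(X):
    """Contrast, correlation, entropy, and energy, following Eqs. (1)-(5)."""
    N = len(X)
    cells = [(m, n) for m in range(N) for n in range(N)]
    mu_m = sum(m * X[m][n] for m, n in cells)
    mu_n = sum(n * X[m][n] for m, n in cells)
    sd_m = math.sqrt(sum((m - mu_m) ** 2 * X[m][n] for m, n in cells))
    sd_n = math.sqrt(sum((n - mu_n) ** 2 * X[m][n] for m, n in cells))
    cov = sum((m - mu_m) * (n - mu_n) * X[m][n] for m, n in cells)
    return {
        "contrast": sum(X[m][n] * (n - m) ** 2 for m, n in cells),
        # A constant image has zero spread; report correlation 1 by convention.
        "correlation": cov / (sd_m * sd_n) if sd_m > 0 and sd_n > 0 else 1.0,
        "entropy": -sum(X[m][n] * math.log(X[m][n]) for m, n in cells if X[m][n] > 0),
        "energy": sum(X[m][n] ** 2 for m, n in cells),
    }


def first_order_features(pixels):
    """Standard deviation, skewness, and kurtosis, following Eqs. (6)-(9)."""
    N = len(pixels)
    mean = sum(pixels) / N                                           # Eq. (7)
    sd = math.sqrt(sum((z - mean) ** 2 for z in pixels) / (N - 1))  # Eq. (6)
    return {
        "sd": sd,
        "skewness": sum((z - mean) ** 3 for z in pixels) / N / sd ** 3,  # Eq. (8)
        "kurtosis": sum((z - mean) ** 4 for z in pixels) / N / sd ** 4,  # Eq. (9)
    }


image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
X = glcm(image, levels=4)
features = {**glcm_features(X), **first_order_features([p for row in image for p in row])}
print(features)
```

Fusing the two dictionaries mirrors, in miniature, the fusion of first-order and GLCM features described for FEM.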

4.3 Feature Selection Module (FSM)

After features are extracted from the images, feature selection is performed in the Feature Selection Module (FSM). Feature Selection (FS) lowers the dimensionality of the model and improves its performance. In this study, we sought the most effective, accurate, and comprehensive way to identify the most useful features to help the classifier detect defects in PV systems [35]. The purpose of feature selection is to focus on the most important and useful characteristics while eliminating superfluous ones. In most cases, FS also improves overall performance and reduces data dimensionality, lowering the cost of classification and prediction by reducing the time needed to build a model. Using all of the available features, by contrast, has several disadvantages: it increases the computational burden, slows training and testing, and raises storage requirements. In addition, irrelevant features make it harder for the model to distinguish between classes, which raises its error rate.

In this article, a new feature selection methodology called Feature Selection-based Chaotic Map (FS-CM) is introduced. As shown in Fig. 8, FS-CM consists of two stages: a filter stage and a modified selection stage. The filter stage makes an initial selection of the best subset of the extracted attributes, keeping the most relevant ones according to chi-square (\({\chi }^{2}\)). In statistics, the chi-square test is used to determine whether two variables are independent. From the data for two variables, we obtain the observed count \(A\) and the expected count \(B\), and chi-square measures the difference between them as \({\chi }^{2}=\sum {(A-B)}^{2}/B\), summed over all categories; features with higher scores depend more strongly on the class label.
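The chi-square filter stage can be sketched as below. The scoring mirrors the formulation used by scikit-learn's `sklearn.feature_selection.chi2`: for each non-negative feature, the observed value \(A\) is the feature's sum within each class, and the expected value \(B\) is the feature's total sum scaled by the class frequency. The number of retained features `k` is a hypothetical parameter, since the paper does not state how many features the filter stage keeps.

```python
def chi2_scores(X, y):
    """Chi-square score of each non-negative feature against the class label."""
    n = len(X)
    classes = sorted(set(y))
    scores = []
    for f in range(len(X[0])):
        total = sum(row[f] for row in X)
        score = 0.0
        for c in classes:
            observed = sum(row[f] for row, lab in zip(X, y) if lab == c)  # A
            expected = total * sum(1 for lab in y if lab == c) / n        # B
            if expected > 0:
                score += (observed - expected) ** 2 / expected
        scores.append(score)
    return scores


def select_k_best(X, y, k):
    """Indices of the k highest-scoring features, returned in original order."""
    scores = chi2_scores(X, y)
    ranked = sorted(range(len(scores)), key=lambda f: scores[f], reverse=True)
    return sorted(ranked[:k])


X = [[1, 5, 0],
     [2, 5, 0],
     [0, 5, 3],
     [0, 5, 4]]
y = ["healthy", "healthy", "defect", "defect"]
print(chi2_scores(X, y))       # [3.0, 0.0, 7.0]; feature 1 is constant, so it scores 0
print(select_k_best(X, y, 2))  # [0, 2]
```

Because scoring needs only sums over the data, this filter is cheap, which is why it is suited to preselection before the more expensive wrapper stage.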

Then, in the modified selection stage, a new method called the Chaotic-based Butterfly Optimization Algorithm (CBOA) is used to select the best attributes, as shown in Fig. 8. In CBOA, the Butterfly Optimization Algorithm (BOA) is enhanced with a chaotic map to select the best attributes efficiently.

The Butterfly Optimization Algorithm (BOA) is a bio-inspired algorithm, a class of metaheuristics that draws inspiration from the natural world. In BOA, butterflies act as search agents, and the optimization process mimics their food-foraging behavior [35, 36]. Butterflies have sensory receptors, called chemoreceptors, distributed throughout their bodies, which allow them to smell or perceive the fragrance of food or flowers. In BOA, each butterfly is assumed to emit a fragrance with some power or intensity [35, 36], and its fitness, determined by the objective function of the problem, is related to this fragrance. A butterfly's fitness therefore changes as it moves through the search space. Because nearby butterflies can detect each other's fragrance, the population forms a collective social learning system. In the global search phase of BOA, a butterfly that senses the fragrance of the best butterfly in the search space moves directly toward it. In the local search phase, a butterfly that cannot sense the fragrance of any other butterfly moves randomly [35,36,37,38].

BOA is used in this paper because it is straightforward to use, derivative-free, requires few tuning parameters, and is adaptable, scalable, and effective in solving optimization problems. For this reason, BOA has found varied applications, including feature selection and photovoltaic models [39]. In addition, its robust and dynamic parameters allow BOA to handle a wide range of optimization problems across domains efficiently and flexibly. Consequently, several studies have advocated the use of BOA for optimization problems, especially in engineering [39, 40].

Like other swarm intelligence (SI) algorithms, BOA proceeds through three phases: an initialization phase, an iteration phase, and a final phase [41]. In the initialization phase, the parameters of the method are set and the initial population is generated at random. In the iteration phase, each search agent performs either a global or a local search, chosen according to a switch probability \(p\); \(p\) therefore controls the balance between global and local search [41]. The global and local searches of BOA are defined using Eqs. (11) and (12):

where \({B}_{i}\left(t+1\right)\) represents the position of the \({i}^{th}\) butterfly at iteration \((t+1)\), \({B}_{i}\left(t\right)\) denotes its position at the current iteration \(t\), and \(\eta\) is a random value in the range [0, 1]. Also, \({B}_{{best}}\left(t\right)\) is the position of the best butterfly at iteration \(t\), and \({B}_{j}\left(t\right)\) and \({B}_{k}\left(t\right)\) are the \({j}^{th}\) and \({k}^{th}\) butterflies of the population at iteration \(t\). \(f\) is the perceived magnitude of the fragrance, which is formulated as follows:

where \(m\) is the sensory modality, \(I\) is the stimulus intensity, and \(e\) is the power exponent. In BOA, Eqs. (11) and (12) are used according to the following strategy:
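In the standard BOA formulation, each butterfly draws a uniform random number \(r \in [0, 1]\) and applies the global move \({B}_{i}(t+1)={B}_{i}(t)+({\eta }^{2}{B}_{best}(t)-{B}_{i}(t))f\) when \(r<p\), and the local move \({B}_{i}(t+1)={B}_{i}(t)+({\eta }^{2}{B}_{j}(t)-{B}_{k}(t))f\) otherwise, with \(f=m{I}^{e}\). The sketch below implements that standard rule under the assumption that Eqs. (11) and (12) and the fragrance equation follow it. The defaults \(m=0.01\), \(e=0.1\), and \(p=0.8\) are common choices in the BOA literature, not the values of Table 2, and using the fitness as the stimulus intensity \(I\) is also an assumption. In CBOA, the uniform draw would be replaced by a tent-map value.

```python
import random


def fragrance(intensity, m=0.01, e=0.1):
    """Perceived fragrance f = m * I**e (m: sensory modality, e: power exponent)."""
    return m * intensity ** e


def boa_step(population, intensities, best, p=0.8, m=0.01, e=0.1, rng=random):
    """One iteration of the standard BOA position update.

    population  -- list of position vectors B_i(t)
    intensities -- stimulus intensity I_i of each butterfly (assumed non-negative)
    best        -- position B_best(t) of the best butterfly
    p           -- switch probability between global and local search
    """
    n = len(population)
    new_population = []
    for b_i, intensity in zip(population, intensities):
        f = fragrance(intensity, m, e)
        eta = rng.random()  # eta ~ U[0, 1]
        if rng.random() < p:
            # Global search: move toward the best butterfly.
            new = [x + (eta ** 2 * g - x) * f for x, g in zip(b_i, best)]
        else:
            # Local search: move relative to two randomly chosen butterflies j and k.
            j, k = rng.sample(range(n), 2)
            new = [x + (eta ** 2 * xj - xk) * f
                   for x, xj, xk in zip(b_i, population[j], population[k])]
        new_population.append(new)
    return new_population


rng = random.Random(42)
pop = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]
fit = [0.6, 0.9, 0.7, 0.8]           # hypothetical fitness values used as intensities
best = pop[fit.index(max(fit))]
pop = boa_step(pop, fit, best, rng=rng)
```

With zero intensity the fragrance is zero, so a butterfly does not move; larger fitness values produce larger steps.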

First, the BOA parameter values are defined. The proposed method then generates the initial population (S) using the following equation:

where \({Y}_{i}\) is the \({i}^{th}\) individual of the initial population, \(i = 1, 2, \dots , N\), and \(N\) is the population size. \({Y}_{{min}}\) and \({Y}_{{max}}\) are the lower and upper parameter values, and \(\mu\) is a random value with \(0 \le \mu \le 1\). The initial population (S) thus consists of individuals \({S}_{i}\), \(i=1, 2,\dots , N\). Swap Mutation (SM) is then applied to the initial population to generate a new population (BB): in SM, two positions of an individual are chosen at random and their values are swapped. Figure 9 shows an illustrative example of SM.
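A minimal sketch of the initialization equation, in the form implied by the definitions above (\({Y}_{i}={Y}_{min}+\mu ({Y}_{max}-{Y}_{min})\)), and of swap mutation is given below. Drawing \(\mu\) independently for every dimension, mutating every individual exactly once, and the helper names are assumptions made for illustration.

```python
import random


def init_population(n, dim, y_min, y_max, rng=random):
    """Y_i = Y_min + mu * (Y_max - Y_min), with mu ~ U[0, 1] drawn per dimension."""
    return [[y_min + rng.random() * (y_max - y_min) for _ in range(dim)]
            for _ in range(n)]


def swap_mutation(individual, rng=random):
    """Return a copy of the individual with two randomly chosen positions swapped."""
    child = list(individual)
    a, b = rng.sample(range(len(child)), 2)  # two distinct positions
    child[a], child[b] = child[b], child[a]
    return child


rng = random.Random(1)
S = init_population(n=5, dim=10, y_min=0.0, y_max=1.0, rng=rng)
BB = [swap_mutation(ind, rng) for ind in S]  # mutated population
```

Swap mutation only reorders values, so each mutated individual keeps exactly the same set of values as its parent.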

After SM is applied to the original population, a new population (BB) is generated. The fitness value of each individual is then calculated using the following equation:

where \(\eta \left({BB}_{i}\right)\) is based on the classification accuracy of the features selected by the \({i}^{th}\) solution, which is calculated using a K-Nearest Neighbor (KNN) classifier that evaluates the individuals with the Euclidean Distance (ED):

where \({x}_{i}\) and \({y}_{i}\) are corresponding attribute values of the two records being compared, and \(i = 1, 2, \dots , N\). The proposed approach aims to select the individual with the highest \({Fit}\left({BB}_{i}\right)\). After \({Fit}\left({BB}_{i}\right)\) is calculated, a random value is generated using a chaotic map, namely the tent map [42], to determine how the next position is updated. Chaotic maps play an important role in enhancing metaheuristic algorithms: they help avoid local optima, accelerate convergence, and improve overall performance [43]. As shown in Fig. 8, if the value generated by the CM is larger than \(p\), \(p \in [0, 1]\), the position at the next iteration is calculated using Eq. (18); otherwise, Eq. (19) is used:

where \(\eta\) is a random variable generated by the tent CM, \(\eta \in [0, 1]\). The positions generated for the search agents are continuous, whereas feature selection requires a binary representation, so these values cannot be used directly. In feature selection, each feature takes the value 0 or 1, indicating whether it is used to enhance the performance and accuracy of a particular classification algorithm, as shown in Fig. 10. The continuous search space is therefore converted to a binary one using the sigmoid transfer function, as follows:

where \(\sigma \left({BB}_{i}^{j}\right)\) is the S-shaped transfer value of the continuous \(j\)th attribute of the \(i\)th search agent, \(i = 1,\dots ,N\), and the binary value \({BB}_{i}^{j}\) is set to 0 or 1 by comparing it with a random number \(R \in [0, 1]\). Figure 11 illustrates the sigmoid function as an example of an S-shaped function. After FSM is performed, the selected features are contrast, energy, entropy, homogeneity, correlation, angular second moment, cluster shade, dissimilarity, skewness, and kurtosis. Algorithm 1 lists the steps of the proposed CBOA.
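The sketch below shows how one continuous search agent could be turned into a feature mask with the sigmoid transfer function and then scored with a KNN classifier that uses the Euclidean distance. It assumes \(k=1\) and uses the plain validation accuracy as the fitness; the paper's fitness equation may weight additional terms, and the function names and toy data are hypothetical.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function 1 / (1 + exp(-x))."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def binarize(position, rng=random):
    """S-shaped transfer: feature j is selected (1) if R < sigmoid(x_j), R ~ U[0, 1]."""
    return [1 if rng.random() < sigmoid(x) else 0 for x in position]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_fitness(mask, X_train, y_train, X_val, y_val):
    """1-NN validation accuracy using only the features whose mask bit is 1."""
    idx = [j for j, bit in enumerate(mask) if bit]
    if not idx:
        return 0.0  # an empty subset cannot classify anything
    train = [[row[j] for j in idx] for row in X_train]
    correct = 0
    for row, label in zip(X_val, y_val):
        x = [row[j] for j in idx]
        nearest = min(range(len(train)), key=lambda t: euclidean(x, train[t]))
        correct += y_train[nearest] == label
    return correct / len(X_val)


# Toy data: feature 0 separates the classes, feature 1 is noise.
X_train = [[0.0, 5.0], [0.1, -3.0], [1.0, 4.0], [0.9, -4.0]]
y_train = [0, 0, 1, 1]
X_val = [[0.05, 4.0], [0.95, -3.0]]
y_val = [0, 1]
mask = binarize([2.5, -2.5], random.Random(0))
print(mask, knn_fitness(mask, X_train, y_train, X_val, y_val))
```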

The main disadvantage of BOA, as of any optimization algorithm, is its susceptibility to becoming trapped in local minima. Although the chaotic map is integrated to mitigate this issue, ensuring consistently effective behavior remains an open question. Techniques such as momentum, adaptive learning rates, or regularization can be used to escape local minima and explore other regions of the parameter space. Strategic measures can also be implemented within CBOA: advanced initialization techniques, combined with strategies that maintain population diversity, help avoid confinement to local optima by encouraging broad exploration of the search space. In addition, several guidelines should be followed when choosing the chaotic map for an optimization task, covering the choice of chaotic map equation, sensitivity analysis, parameter tuning, and the influence of initial conditions.

4.4 Non-deterministic behavior of FS-CM

In this section, the non-deterministic behavior of FS-CM is discussed. The algorithm behaves non-deterministically because of its chaotic component: running it several times on the same problem may yield different outcomes, which is a potential concern for applications that require a high level of dependability. Chaotic maps are functions that describe the evolution of a system over time. In mathematics, a chaotic map is simple and deterministic, yet it can generate a sequence of numbers that appears random and unpredictable. To address non-determinism, mitigation strategies such as ensemble approaches, result aggregation techniques, or stability measures were applied to enhance reliability in real-world applications. Moreover, over the past two decades, chaotic maps have been used to build deterministic random number generators. Chaotic trajectories are therefore deterministic, but they are highly sensitive to initial conditions: identical initial conditions yield identical trajectories, whereas even a slight deviation in the initial conditions causes the trajectories to diverge.
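The determinism and sensitivity described above can be checked with a few lines of Python. The sketch uses the tent map \({x}_{k+1}=\mu \min ({x}_{k},1-{x}_{k})\) with \(\mu =1.99\); this value and the seed \({x}_{0}=0.3\) are assumptions, since the paper's tent-map parameters are not given in this section. With \(\mu =2\) exactly, floating-point iterates collapse to 0 after a few dozen steps, which is why a value slightly below 2 is used here.

```python
def tent_map(x0, n, mu=1.99):
    """Return n iterates of the tent map x_{k+1} = mu * min(x_k, 1 - x_k)."""
    seq, x = [], x0
    for _ in range(n):
        x = mu * x if x < 0.5 else mu * (1.0 - x)
        seq.append(x)
    return seq


a = tent_map(0.3, 60)
b = tent_map(0.3, 60)          # same initial condition -> identical trajectory
c = tent_map(0.3 + 1e-10, 60)  # tiny perturbation -> trajectories diverge
print(a == b)                  # True
print(max(abs(u - v) for u, v in zip(a, c)))
```

Fixing \({x}_{0}\) (and any other random seeds) therefore makes a run reproducible, while different seeds produce the varied outcomes that ensemble or aggregation strategies can average over.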

4.5 Classification Module (CM)

Finally, once the most useful, important, and efficient features have been selected, they are fed to the classification module. In this paper, a new classification model is proposed to detect and classify defects in PV systems. This model, called the Hybrid Classification Model (HCM), consists of two stages: (i) Binary Classification Stage (BCS) and (ii) Multi-class Classification Stage (MCS), as shown in Fig. 12.

4.5.1 Binary Classification Stage (BCS)

In the first stage of the proposed HCM, PV modules are classified as healthy or defective using the NB classifier. NB is a classification method, widely used in ML, that is based on Bayes' theorem: from the attribute values, it estimates how likely each sample is to belong to each category and predicts the category with the highest probability [44]. NB assumes that all attributes (features) are conditionally independent of one another given the class.

Suppose the sample set \(Y=\{{y}_{1},{y}_{2},{y}_{3},\dots {y}_{m}\}\) is described by \(n\) features, according to which each sample can be assigned to one of the classes \(x=\left\{{c}_{1}, {c}_{2},\dots , {c}_{N}\right\}\). The aim is to find the class that maximizes the posterior probability, which is given by Bayes' theorem [45, 46]:

where \(P\left(c|y\right)\) is the conditional (posterior) probability of class \(c\) given the feature vector \(y\), \(P\left(y|c\right)\) is the conditional probability of \(y\) given class \(c\), and \(P(c)\) is the prior probability of class \(c\). Because \(P\left(y\right)\) is the same for all classes, Eq. (23) can be written as [46]:

When all attributes are conditionally independent given the class, the NB classifier can be expressed as in Eq. (25):
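A minimal sketch of this decision rule, computed in log space to avoid numerical underflow, is shown below. This section does not state which likelihood model the NB classifier uses, so a per-feature Gaussian likelihood (a common choice for continuous GLCM and statistical features) and the small variance floor are assumptions. scikit-learn's `GaussianNB` provides an equivalent, production-ready implementation.

```python
import math
from collections import defaultdict


class GaussianNaiveBayes:
    """Minimal Gaussian NB: predict argmax_c [log P(c) + sum_k log P(y_k | c)]."""

    def __init__(self, var_eps=1e-9):
        self.var_eps = var_eps  # small constant that keeps every variance positive

    def fit(self, X, y):
        groups = defaultdict(list)
        for row, label in zip(X, y):
            groups[label].append(row)
        self.priors, self.means, self.vars = {}, {}, {}
        for c, rows in groups.items():
            cols = list(zip(*rows))  # per-feature values for class c
            self.priors[c] = len(rows) / len(y)
            self.means[c] = [sum(col) / len(col) for col in cols]
            self.vars[c] = [sum((v - mu) ** 2 for v in col) / len(col) + self.var_eps
                            for col, mu in zip(cols, self.means[c])]
        return self

    def _log_joint(self, x, c):
        # P(y) is the same for every class, so it is dropped from the comparison.
        total = math.log(self.priors[c])
        for v, mu, var in zip(x, self.means[c], self.vars[c]):
            total += -0.5 * math.log(2 * math.pi * var) - (v - mu) ** 2 / (2 * var)
        return total

    def predict(self, X):
        return [max(self.priors, key=lambda c: self._log_joint(x, c)) for x in X]


X = [[0.1, 1.0], [0.2, 0.9], [0.9, 0.1], [1.0, 0.2]]
y = ["healthy", "healthy", "defective", "defective"]
model = GaussianNaiveBayes().fit(X, y)
print(model.predict([[0.15, 0.95], [0.95, 0.15]]))  # ['healthy', 'defective']
```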

4.5.2 Multi-class Classification Stage (MCS)

The Multi-Layer Perceptron (MLP) is a common ML technique used to improve classification accuracy. It is a fully connected Feed-Forward Neural Network (FFNN) with an input layer, one or more hidden layers, and an output layer, each made up of a fixed number of neurons. Applying MLPs to pattern classification involves two steps: choosing an appropriate architecture and tuning the network's weights and biases. To address the second step, this paper proposes EMLP, an enhanced version of MLP that uses Gray Wolf Optimization (GWO) to optimize the weights and biases rather than generating them randomly. EMLP consists of several processes, as shown in Fig. 13. Only the features of defective PV samples are used in this stage.

The MLP architecture is defined by the number of neurons in each layer. The input layer has ten neurons, one for each selected feature, and the output layer has as many neurons as there are output classes; because multi-class classification is performed, it has three neurons. After the fitness function, which measures how accurately the MLP classifies the samples, is calculated, the GWO steps are carried out to determine the optimal solution; the weights and biases are obtained by minimizing the fitness value. The training phase ends after a set number of iterations, and the stored weights and biases are updated. In the testing phase, the best weights and biases found are used.

As shown in Fig. 14, a typical MLP has three layers: an input layer, a hidden layer, and an output layer; this form is known as the early MLP. Like the perceptron, the early MLP uses error-corrective learning: it adjusts the weights of the connections between its layers according to the deviation of the actual output from the desired output. The output \({X}_{n}\left(Y\right)\) is given by the following equation [47]:

where \({Y}_{j}\) is the input associated with the weight \({W}_{nj}\), \(f\) is the activation function, and \({a}_{m}\) is the bias. In this paper, GWO is used to find the optimal weights and biases of the MLP.
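The layer equation above, and one way to map a flat vector of weights and biases (such as a single GWO search agent) onto a network with 10 inputs and 3 outputs, can be sketched as follows. The hidden-layer size of 8, the sigmoid activation, the ordering of values inside the vector, and the use of the mean squared error over one-hot targets as the fitness are all assumptions for illustration.

```python
import math


def sigmoid(z):
    """Numerically stable logistic activation."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def n_params(n_in, n_hidden, n_out):
    """Length of the flat weight-and-bias vector for an n_in-n_hidden-n_out MLP."""
    return n_in * n_hidden + n_hidden + n_hidden * n_out + n_out


def layer(inputs, weights, biases):
    """X_n = f(sum_j W_nj * Y_j + bias_n) for every neuron n of one layer."""
    return [sigmoid(sum(w * y for w, y in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def decode(vector, n_in, n_hidden, n_out):
    """Split a flat vector (e.g. one GWO wolf) into weight matrices and bias vectors."""
    if len(vector) != n_params(n_in, n_hidden, n_out):
        raise ValueError("vector length does not match the architecture")
    it = iter(vector)

    def take(count):
        return [next(it) for _ in range(count)]

    w1 = [take(n_in) for _ in range(n_hidden)]
    b1 = take(n_hidden)
    w2 = [take(n_hidden) for _ in range(n_out)]
    b2 = take(n_out)
    return w1, b1, w2, b2


def forward(x, vector, n_in=10, n_hidden=8, n_out=3):
    w1, b1, w2, b2 = decode(vector, n_in, n_hidden, n_out)
    return layer(layer(x, w1, b1), w2, b2)


def mse_fitness(vector, X, Y, **arch):
    """Mean squared error between one-hot targets Y and network outputs (minimized)."""
    errors = [(t - o) ** 2
              for x, y in zip(X, Y)
              for t, o in zip(y, forward(x, vector, **arch))]
    return sum(errors) / len(errors)


print(n_params(10, 8, 3))  # 115 values for a hypothetical 10-8-3 network
```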

The GWO algorithm is a metaheuristic proposed by Mirjalili et al. [48] that models the hunting strategy of gray wolves. It is a population-based swarm intelligence method with a relatively simple setup, which makes it potentially scalable to large problems. GWO seeks the global optimum by positioning solutions in the n-dimensional search space around other solutions, in a manner reminiscent of predators encircling their prey. It uses a hierarchical mechanism in which each wolf represents a candidate solution: alpha (α), beta (β), and delta (δ) are the three best solutions in the population, while omega (ω) denotes the remaining candidate solutions. The omegas adjust their positions according to those of the three leaders, so the fittest solutions guide the other wolves through the decision search space. The solutions are evaluated using the fitness function, which is calculated as follows:

where \({y}_{i}\) represents the actual value and \(\widehat{{y}_{i}}\) represents the predicted value. Based on the fitness value, the three best solutions are \({W}_{\alpha }\), \({W}_{\beta }\), and \({W}_{\delta }\). These leaders are assumed to have the best knowledge of where the prey is likely to be, so they steer the other wolves toward the best state. The ith wolf then determines its distance from the three fittest solutions using Eqs. (30)–(33) and updates its position using Eq. (34):

where \(\overrightarrow{{W}_{\alpha }}\), \(\overrightarrow{{W}_{\beta }}\), \(\overrightarrow{{W}_{\delta }}\), and \(\overrightarrow{{W}_{i}}\) are the position vectors of α, β, δ, and the ith wolf at the current iteration \(t\). \(\overrightarrow{{D}_{\alpha }}\), \(\overrightarrow{{D}_{\beta }}\), and \(\overrightarrow{{D}_{\delta }}\) are the distance vectors between the ith wolf and the wolves Wα, Wβ, and Wδ, respectively. \(\overrightarrow{A}\) and \(\overrightarrow{C}\) are two coefficient vectors, and \(\overrightarrow{{r}_{1}}\) and \(\overrightarrow{{r}_{2}}\) are random vectors with components in [0, 1]. Figure 15 shows the flowchart of GWO, and Algorithm 2 lists its steps.
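The sketch below implements the standard GWO update of Mirjalili et al.: for each leader, \(\vec{D}=|\vec{C}\cdot {\vec{W}}_{leader}-{\vec{W}}_{i}|\) and \({\vec{X}}_{k}={\vec{W}}_{leader}-\vec{A}\cdot \vec{D}\), with \(\vec{A}=2a{\vec{r}}_{1}-a\), \(\vec{C}=2{\vec{r}}_{2}\), \(a\) decreasing linearly from 2 to 0, and the new position equal to the mean of the three estimates. We assume Eqs. (30)–(34) follow this standard form. The population size, iteration count, bounds, and the elitist retention of the three best solutions seen so far are illustrative choices, and a simple test function is minimized here.

```python
import random


def gwo(objective, dim, n_wolves=20, iters=100, lb=-10.0, ub=10.0, seed=0):
    """Minimize `objective` with the standard Grey Wolf Optimizer; returns (best, score)."""
    rng = random.Random(seed)
    wolves = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_wolves)]
    leaders = sorted(wolves, key=objective)[:3]  # alpha, beta, delta
    for t in range(iters):
        a = 2.0 - 2.0 * t / iters  # decreases linearly from 2 towards 0
        new_wolves = []
        for w in wolves:
            position = []
            for d in range(dim):
                estimates = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a  # A = 2a*r1 - a
                    C = 2.0 * rng.random()          # C = 2*r2
                    D = abs(C * leader[d] - w[d])   # distance to this leader
                    estimates.append(leader[d] - A * D)
                x = sum(estimates) / 3.0              # mean of X1, X2, X3
                position.append(min(max(x, lb), ub))  # keep inside the bounds
            new_wolves.append(position)
        wolves = new_wolves
        # Keep the three best solutions seen so far as alpha, beta, and delta.
        leaders = sorted(wolves + leaders, key=objective)[:3]
    return leaders[0], objective(leaders[0])


def sphere(v):
    return sum(x * x for x in v)


best, score = gwo(sphere, dim=3)
print(score)
```

To train an MLP this way, `objective` would be the network's training error computed from the decoded weights and biases, and `dim` the total number of weights and biases.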

The best solution obtained is then used as the weights and biases of the MLP. This improves MLP training, because the weights and biases improve at every iteration as the search agents improve. In summary, GWO-MLP enhances the performance of the MLP by using GWO to find its optimal weights and biases. First, the initial GWO population and its parameters are generated randomly. Next, each individual of the population is used as a set of MLP weights and biases, and the fitness function is computed; the goal is to reach the minimum fitness value. The wolves' positions are updated at every iteration, and the process is repeated until the stopping criteria are satisfied. Finally, the MLP with the lowest fitness value is evaluated on the test data.

5 Experimental results

In this section, the proposed system, called Fault Detection and Classification (FDC), is evaluated. The main goal of this paper is to create a new automatic system to detect and classify defects in PV systems. FDC consists of four modules: Image Preprocessing Module (IPM), Feature Extraction Module (FEM), Feature Selection Module (FSM), and Classification Module (CM). First, the input images are preprocessed by IPM. Then, features are extracted from the preprocessed images by FEM, which uses the Gray Level Co-occurrence Matrix (GLCM) to extract texture features and also computes statistical features; the features extracted from the EL images are then fused. Next, the most important features are selected from the fused features using the proposed Feature Selection-based Chaotic Map (FS-CM). Finally, the selected features are fed to the proposed Classification Module (CM), which uses the Hybrid Classification Model (HCM) with two stages: (1) Binary Classification Stage (BCS) using NB and (2) Multi-class Classification Stage (MCS) using Enhanced MLP (EMLP). FDC is implemented on a set of EL images [49]. The dataset is partitioned into 2099 (80%) training cases and 525 (20%) testing cases. The experiments were conducted using a GPU with 8 GB of RAM and an Intel Core i7-8565U CPU @ 1.80 GHz (4 cores). Python 3.9.12 was used to run the experiments in this paper.

5.1 Parameter optimization

This subsection describes how the parameters were tuned to ensure optimal performance and to prevent suboptimal convergence or divergence caused by poor parameter settings. In this paper, the parameters were optimized experimentally. Starting from the default values, an experiment was conducted, the results were evaluated, and the parameters were updated accordingly. This procedure was repeated until the algorithm reached its best performance. Table 2 lists the parameters used and their values.
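One possible reading of this procedure is a one-parameter-at-a-time search, sketched below. The `evaluate` callback, the parameter names, and the candidate values are hypothetical, and the values in Table 2 are not reproduced. In practice, `evaluate` should score a configuration on validation data rather than on the test set, to avoid an optimistic bias.

```python
def tune(evaluate, defaults, grid, max_rounds=5):
    """Start from the defaults, try each candidate value of each parameter, keep any
    change that improves the score, and repeat until no single change helps."""
    best = dict(defaults)
    best_score = evaluate(best)
    for _ in range(max_rounds):
        improved = False
        for name, candidates in grid.items():
            for value in candidates:
                trial = {**best, name: value}
                score = evaluate(trial)
                if score > best_score:
                    best, best_score, improved = trial, score, True
        if not improved:
            break
    return best, best_score


# Hypothetical score that peaks at p = 0.8 and population size n = 30.
config, score = tune(lambda c: -(c["p"] - 0.8) ** 2 - (c["n"] - 30) ** 2,
                     defaults={"p": 0.5, "n": 10},
                     grid={"p": [0.2, 0.5, 0.8], "n": [10, 20, 30]})
print(config)  # {'p': 0.8, 'n': 30}
```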

5.2 Dataset description

In this paper, a dataset of 44 PV modules with varying degrees of defects was used to validate the results [49, 50]. These images were used as examples while designing the new technique. The PV modules were photographed in a controlled testing environment from a variety of angles and with a range of exposure times, and post-processing techniques such as cropping, resizing, and rotation were applied to the EL images. The dataset contains 26 monocrystalline and 18 polycrystalline modules. Of the 2,624 solar cells in these 44 modules, 715 are defective, with defects that include microcracks, completely disconnected cells, and mechanically induced cracks (such as electrically insulated or conducting cracks, or cell cracks caused by soldering). A total of 106 cells have minor flaws that do not justify labeling them as defective, while 295 have various surface abnormalities that do not constitute defects. The remaining 1508 cells show no obvious surface flaws and are considered fully operational, as presented in Table 3. The solar cells captured in the images are square in shape. Figure 16 shows the number of samples of each type in the dataset.

5.3 Performance evaluation metrics

In this paper, various evaluation metrics are used to evaluate the proposed system and to compare it with other methods. The confusion matrix is used to calculate these measurements, as shown in Tables 4 and 5. The metrics are accuracy, sensitivity, specificity, precision, g-mean, and f-measure; their formulas are given in Table 6 [15].
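Assuming Table 6 uses the standard confusion-matrix definitions, the metrics can be computed as below; for the three-class stage, each class would be treated one-versus-rest. The function names are illustrative. As a consistency check, the sensitivity (87.2%), specificity (87.9%), and precision (89.23%) reported for FDC reproduce its g-mean and f-measure.

```python
import math


def g_mean(sensitivity, specificity):
    return math.sqrt(sensitivity * specificity)


def f_measure(precision, sensitivity):
    return 2 * precision * sensitivity / (precision + sensitivity)


def metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics for one (positive) class."""
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)  # recall, true-positive rate
    specificity = tn / (tn + fp)  # true-negative rate
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "g_mean": g_mean(sensitivity, specificity),
        "f_measure": f_measure(precision, sensitivity),
    }


print(round(100 * g_mean(0.872, 0.879), 2))     # 87.55
print(round(100 * f_measure(0.8923, 0.872), 2))  # 88.2
```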

5.4 Testing Feature Selection Module (FSM)

In this subsection, the proposed feature selection method is evaluated against other optimization algorithms: Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Bat Algorithm (BA), and BOA. The results are shown in Table 7.

As presented in Table 7, FS-CM outperforms the other methods in terms of accuracy, precision, sensitivity, specificity, g-mean, and f-measure, achieving 97%, 85%, 84.7%, 87.9%, 86.5%, and 87.4%, respectively. This is because FS-CM includes two improvements: first, the initial population is generated using chi-square rather than at random; second, the tent map is used to improve the performance of the standard BOA. Using chaotic sequences from the tent map instead of randomly generated variables accelerates the convergence of BOA and improves its global search ability.

5.5 Time complexity analysis

The time complexity of an algorithm is a key factor in assessing its performance. According to the analysis of BOA, its time complexity is \(O\left(N+C\right)\). The time complexity of CBOA is \(O(t(D \cdot N) + (C \cdot N))\), where \(t\) is the number of iterations, \(D\) is the dimension of the problem, \(N\) is the population size, and \(C\) is the cost of the objective function.

5.6 Testing the proposed system

In this subsection, the proposed method, FDC, is evaluated against the most recent methods: NML [19], DL [20], MLF [21], IFDD [22], NIF [23], ADD [24], and GA-ANN [26], as summarized in Table 1. The results are shown in Table 8. According to Table 8, the proposed FDC achieves the best accuracy (98.2%), followed by GA-ANN (92%), while NML performs worst (75.5%). The precision values, from worst to best, are 62.6%, 70.5%, 75%, 78%, 80.2%, 82.1%, 83.9%, and 89.23% for NML, DL, MLF, IFDD, NIF, ADD, GA-ANN, and FDC, respectively. FDC also gives the best sensitivity (87.2%), followed by GA-ANN, ADD, NIF, IFDD, MLF, DL, and NML with 82%, 81.1%, 79.5%, 75%, 72.6%, 72.1%, and 66.2%, respectively. For the g-mean, which combines sensitivity and specificity into a single metric, FDC achieves the best value of 87.55%, followed by GA-ANN (81.59%) and ADD (80.65%). In addition, FDC achieves the highest f-measure, the harmonic mean of precision and sensitivity, with a value of 88.2%. A bar chart of the evaluation metrics is shown in Fig. 17. These results show that the proposed system performs well in detecting PV defects, because FDC relies on an efficient feature selection method that retains only the most effective features and feeds them to an effective classifier. Figure 18 shows a sample of the images correctly classified by FDC.

Despite the time complexity of both the wrapper feature selection and the classification algorithms, FDC classifies faster than its competitors, because the competing methods rely on deep learning. Deep learning is widely recognized for its high computational demands, requiring substantial memory and computational resources, and it incurs a sizable time penalty. Conversely, FDC is simple, flexible, and able to handle issues arising from inaccurate data. Furthermore, it relies on an efficient hybrid feature selection methodology that chooses only the most effective features. Because feature selection is performed only once, it does not affect the classification speed of FDC during testing, and it effectively reduces the dimensionality of the feature space.

5.7 Statistical tests

Statistical validation is important when measuring model performance. Therefore, the predictive power of the proposed strategy is compared and evaluated using the Friedman test and the Wilcoxon Signed-Rank Test (WSRT) [51]. The Wilcoxon signed-rank test was run at a significance level of 5% (95% confidence), and its results are displayed in Table 9. The null hypothesis is that the performance of the two strategies being compared does not differ. The analysis was conducted using Minitab. The p value was less than 0.05 (the 5% significance level), providing strong evidence against the null hypothesis. This indicates that the proposed strategy differs statistically from the other strategies; together with the results in Table 8, it follows that the proposed system performs better than more conventional methods of PV defect detection.
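For readers without Minitab, the test statistic can be sketched in plain Python as follows. It uses the large-sample normal approximation without tie or continuity correction, so its p value can differ from exact or corrected implementations such as `scipy.stats.wilcoxon`. Which paired values were compared for Table 9 is not stated here, so the inputs below are placeholders.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Wilcoxon signed-rank test for paired samples x and y.

    Returns (W, z, p): W = min(W+, W-), z from the normal approximation, and a
    two-sided p value. Zero differences are dropped and tied |d| values receive
    average ranks; no tie or continuity correction is applied.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average 1-based rank of the tie group
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided: 2 * (1 - Phi(|z|))
    return w, z, p


# Placeholder paired scores in which the first method is always better.
w, z, p = wilcoxon_signed_rank(list(range(1, 11)), [0] * 10)
print(w, round(z, 3), p < 0.05)
```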

Furthermore, the performance of each strategy is ranked using the Friedman test, a nonparametric statistical test. At a significance level of α = 0.05, the test assesses the difference between the proposed FDC and NML, DL, MLF, IFDD, NIF, ADD, and GA-ANN. Table 10 displays the results; according to Table 10, the proposed FDC outperforms the others.
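A minimal sketch of the Friedman ranking follows. Within each block, methods are ranked with rank 1 for the highest score, the ranks are averaged per method, and \({\chi }_{F}^{2}=\frac{12n}{k(k+1)}\left[\sum_{j}{R}_{j}^{2}-\frac{k{(k+1)}^{2}}{4}\right]\) is computed for \(n\) blocks and \(k\) methods. A block could be a metric or a repeated run; the blocks used for Table 10 are not specified here, so this, like the toy table, is an assumption. `scipy.stats.friedmanchisquare` offers a tested implementation that also returns p values.

```python
def average_ranks(row):
    """Rank the scores in one row (higher is better -> rank 1); ties get average ranks."""
    order = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman(table):
    """table[b][m] is the score of method m on block b.

    Returns (mean rank of each method, Friedman chi-square statistic)."""
    n_blocks, k = len(table), len(table[0])
    rank_rows = [average_ranks(row) for row in table]
    mean_ranks = [sum(r[m] for r in rank_rows) / n_blocks for m in range(k)]
    stat = (12 * n_blocks / (k * (k + 1))
            * (sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4))
    return mean_ranks, stat


# Toy table: three blocks, three methods; method 0 always scores highest.
print(friedman([[3, 2, 1], [3, 2, 1], [3, 2, 1]]))  # ([1.0, 2.0, 3.0], 6.0)
```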

6 Conclusions and future works

In this paper, a new artificial intelligence-based system for detecting and classifying PV module defects is proposed. The system makes fault diagnosis smarter by automatically detecting defects in PV modules and correctly classifying them. The proposed system, called Fault Detection and Classification (FDC), is based on electroluminescence (EL) images and is divided into four modules: Image Preprocessing Module (IPM), Feature Extraction Module (FEM), Feature Selection Module (FSM), and Classification Module (CM). In the first module (IPM), the EL images are prepared for the subsequent modules. Next, in FEM, two types of features, textural and statistical, are extracted and fused. Then, to increase classifier performance and reduce time complexity, the most important features are selected using the proposed Feature Selection-based Chaotic Map (FS-CM). FS-CM is a novel methodology with two stages: a filter stage using chi-square and a modified selection stage using the Chaotic-based Butterfly Optimization Algorithm (CBOA), in which chaotic maps are used to enhance the performance of BOA. Finally, the selected features are fed to the proposed classification module, which performs binary and multi-class classification of PV module defects. In binary classification, PV modules are classified as normal or abnormal using NB. The abnormal modules are then classified as defective, having various surface abnormalities, or having minor defects using the Enhanced Multi-Layer Perceptron (EMLP), in which Gray Wolf Optimization (GWO) is used to find the optimal weights and biases of the MLP. According to the experimental results, the proposed FDC outperforms other recent systems in terms of accuracy, precision, sensitivity, specificity, g-mean, and f-measure.
The main advantages of the proposed AI-based system are as follows: (1) it can detect and diagnose solar panel issues quickly, allowing faster and more effective maintenance; (2) it is cost-effective, being inexpensive and easy to implement; and (3) it can provide real-time notifications.

Although CBOA has proven effective across several fields, it may not be suitable for every type of problem. For instance, it may not be the best choice for problem domains with high levels of noise or those requiring real-time optimization. Moreover, parameter optimization remains an important issue; in future work, an adaptive mechanism will be integrated into the algorithm to ensure optimal performance and prevent suboptimal convergence or divergence caused by parameter settings.

Data availability

The dataset is available at https://github.com/zae-bayern/elpv-dataset.

Abbreviations

- AI: Artificial intelligence
- BA: Bat algorithm
- BOA: Butterfly optimization algorithm
- CM: Classification module
- EL: Electroluminescence
- FEM: Feature extraction module
- FSM: Feature selection module
- GWO: Gray wolf optimization
- GLCM: Gray level co-occurrence matrix
- GA: Genetic algorithm
- HE: Histogram equalization
- IR: Infrared imaging
- IPM: Image preprocessing module
- MLP: Multi-layer perceptron
- NB: Naïve Bayes
- PV: Photovoltaic
- PSO: Particle swarm optimization

References

Gielen D, Gorini R, Wagner N et al (2019) Global energy transformation: a roadmap to 2050. International Renewable Energy Agency (IRENA)

International Energy Agency (IEA). https://www.iea.org/. Accessed 16 July 2023

Nengroo S, Ali M, Zafar A et al (2019) An optimized methodology for a hybrid photo-voltaic and energy storage system connected to a low-voltage grid. Electronics 8(2):1–12

Jaber M, Abd Hamid A, Sopian K et al (2022) Prediction model for the performance of different PV modules using artificial neural networks. Appl Sci 12:1–16. https://doi.org/10.3390/app12073349

Rana M, Uddin M, Sarkar M et al (2022) A review on hybrid photovoltaic-battery energy storage system: current status, challenges, and future directions. J Energy Storage 51:1–20. https://doi.org/10.1016/j.est.2022.104597

Crespo D, Blay C, Escrivá G et al (2023) Evaluation of the Spanish regulation on self-consumption photovoltaic installations. A case study based on a rural municipality in Spain. Renew Energy 204:788–802. https://doi.org/10.1016/j.renene.2023.01.055

Yang N, Ismail H (2022) Voting-based ensemble learning algorithm for fault detection in photovoltaic systems under different weather conditions. Mathematics 10(2):1–18. https://doi.org/10.3390/math10020285

Romero H, González M, Payo V et al (2022) Applications of artificial intelligence to photovoltaic systems: a review. Appl Sci. https://doi.org/10.3390/app121910056

Deitsch S, Christlein V, Berger S et al (2019) Automatic classification of defective photovoltaic module cells in electroluminescence images. Sol Energy 185:455–468. https://doi.org/10.1016/j.solener.2019.02.067

Et-taleby A, Chaibi Y, Allouhi A et al (2023) A combined convolutional neural network model and support vector machine technique for fault detection and classification based on electroluminescence images of photovoltaic modules. J Eng Appl Sci 27:1–17. https://doi.org/10.1186/s44147-023-00200-0

Pratt L, Mattheus J, Klein R (2023) A benchmark dataset for defect detection and classification in electroluminescence images of PV modules using semantic segmentation. Syst Soft Comput 5:1–18. https://doi.org/10.1016/j.sasc.2023.200048

Roumpakias E, Stamatelos T (2022) Health monitoring and fault detection in photovoltaic systems in Central Greece using artificial neural networks. Appl Sci 12(23):1–21

Seghiour A, Abbas H, Chouder A, Rabhi A (2023) Deep learning method based on autoencoder neural network applied to faults detection and diagnosis of photovoltaic system. Simul Model Pract Theory 123:1–31. https://doi.org/10.1016/j.simpat.2022.102704

Shaban W (2023) Early diagnosis of liver disease using improved binary butterfly optimization and machine learning algorithms. Multimed Tools Appl. https://doi.org/10.1007/s11042-023-16686-y

Adhya D, Chatterjee S, Chakraborty A et al (2022) Performance assessment of selective machine learning techniques for improved PV array fault diagnosis. Sustain Energy Grids Netw 29:1–16. https://doi.org/10.1016/j.segan.2021.100582

Urbanowicz R, Meeker M, La Cava W et al (2018) Relief-based feature selection: introduction and review. J Biomed Inform 85:189–203. https://doi.org/10.1016/j.jbi.2018.07.014

Bahassine S, Madani A, Al-Sarem M et al (2020) Feature selection using an improved Chi-square for Arabic text classification. J King Saud Univ Comput Inf Sci 32(2):225–231

Sun H, Su J, Deng Zh et al (2023) Grouping bi-chi-squared method for pulsar navigation experiment using observations of Rossi X-ray timing explorer. Chin J Aeronaut 36(1):386–395

Niazi K, Akhtar W, Khan H et al (2019) Hotspot diagnosis for solar photovoltaic modules using a Naive Bayes classifier. Energy 190:34–43. https://doi.org/10.1016/j.solener.2019.07.06

Tang W, Yang Q, Xiong K, Yan W (2020) Deep learning based automatic defect identification of photovoltaic module using electroluminescence images. Sol Energy 201:453–460. https://doi.org/10.1016/j.solener.2020.03.04

Ali M, Khan H, Masud M et al (2020) A machine learning framework to identify the hotspot in photovoltaic module using infrared thermography. Sol Energy 208:643–651. https://doi.org/10.1016/j.solener.2020.08.027

Hajji M, Harkat M, Kouadri A et al (2021) Multivariate feature extraction based supervised machine learning for fault detection and diagnosis in photovoltaic systems. Eur J Control 59:313–321. https://doi.org/10.1016/j.ejcon.2020.03.004

Hong F, Song J, Meng H et al (2022) A novel framework on intelligent detection for module defects of PV plant combining the visible and infrared images. Sol Energy 236:406–416. https://doi.org/10.1016/j.solener.2022.03.018

Zhao X, Song C, Zhang H et al (2023) HRNet-based automatic identification of photovoltaic module defects using electroluminescence images. Energy 267:1–9. https://doi.org/10.1016/j.energy.2022.126605

Voutsinas S, Karolidis D, Voyiatzis I, Samarakou M (2023) Development of a machine-learning-based method for early fault detection in photovoltaic systems. J Eng Appl Sci 70:1–17. https://doi.org/10.1186/s44147-023-00200-0

Hichri A, Hajji M, Mansouri M et al (2022) Genetic-algorithm-based neural network for fault detection and diagnosis: application to grid-connected photovoltaic systems. Sustainability 14:1–14. https://doi.org/10.3390/su141710518

Xie X, Lai G, You M et al (2023) Effective transfer learning of defect detection for photovoltaic module cells in electroluminescence images. Sol Energy 250:312–323. https://doi.org/10.1016/j.solener.2022.10.055

Li L, Wang Z, Zhang T (2023) GBH-YOLOv5: ghost convolution with BottleneckCSP and tiny target prediction head incorporating YOLOv5 for PV panel defect detection. Electronics 12:1–15. https://doi.org/10.3390/electronics12030561

Shaban W, Abdullah A, Ashraf E (2023) An in-depth review of AI-based techniques for early diagnosis of breast cancer: evaluation of CAD system design and classification methodologies. In: Proceedings of the 2023 international telecommunications conference (ITC-Egypt), Alexandria, Egypt. IEEE, pp 322–329. https://doi.org/10.1109/ITC-Egypt58155.2023.10206239

Kumar V, Maheshwari P (2022) Advanced analytics on IV curves and electroluminescence images of photovoltaic modules using machine learning algorithms. Prog Photovolt Res Appl 30:880–888. https://doi.org/10.1002/pip.3469

Vishnoi V, Kumar K, Kumar B (2022) A comprehensive study of feature extraction techniques for plant leaf disease detection. Multimed Tools Appl 81:367–419. https://doi.org/10.1007/s11042-021-11375-0

Shaban W (2023) Insight into breast cancer detection: new hybrid feature selection method. Neural Comput Appl 35:6831–6853. https://doi.org/10.1007/s00521-022-08062-y

Yahya Z, Imane S, Hicham H et al (2022) Applied imagery pattern recognition for photovoltaic modules’ inspection: a review on methods, challenges and future development. Sustain Energy Technol Assess 52:1–19. https://doi.org/10.1016/j.seta.2022.102071

Altaf M, Akram T, Khan M et al (2021) A new statistical features based approach for bearing fault diagnosis using vibration signals. Sensors 22:1–15. https://doi.org/10.3390/s22052012

Alweshah M, Al Khalaileh S, Gupta B et al (2020) The monarch butterfly optimization algorithm for solving feature selection problems. Neural Comput Appl. https://doi.org/10.1007/s00521-020-05210-0

Sadeghian Z, Akbari E, Nematzadeh H (2021) A hybrid feature selection method based on information theory and binary butterfly optimization algorithm. Eng Appl Artif Intell 97:1–13. https://doi.org/10.1016/j.engappai.2020.104079

Long W, Jiao J, Liang X et al (2021) Pinhole-imaging-based learning butterfly optimization algorithm for global optimization and feature selection. Appl Soft Comput 103:1–19. https://doi.org/10.1016/j.asoc.2021.107146

EL-Hasnony I, Elhoseny M, Tarek Z (2022) A hybrid feature selection model based on butterfly optimization algorithm: COVID-19 as a case study. Expert Syst 39:1–28. https://doi.org/10.1111/exsy.12786

Makhadmeh S, Al-Betar M, Abasi A et al (2023) Recent advances in butterfly optimization algorithm, its versions and applications. Arch Comput Methods Eng 30:1399–1420. https://doi.org/10.1007/s11831-022-09843-3

Deng L, Liu S (2023) A novel hybrid grasshopper optimization algorithm for numerical and engineering optimization problems. Neural Process Lett 55:9851–9905. https://doi.org/10.1007/s11063-023-11230

Tang J, Duan H, Lao S (2023) Swarm intelligence algorithms for multiple unmanned aerial vehicles collaboration: a comprehensive review. Artif Intell Rev 56:4295–4327. https://doi.org/10.1007/s10462-022-10281-7

Naik R, Singh U (2022) A review on applications of chaotic maps in pseudo-random number generators and encryption. Ann Data Sci. https://doi.org/10.1007/s40745-021-00364-7

Tawhid M, Ibrahim A (2022) Improved salp swarm algorithm combined with chaos. Math Comput Simul 202:113–148. https://doi.org/10.1016/j.matcom.2022.05.029

Shaban W, Rabie A, Saleh A et al (2021) Accurate detection of COVID-19 patients based on distance biased Naïve Bayes (DBNB) classification strategy. Pattern Recogn 119:1–15. https://doi.org/10.1016/j.patcog.2021.108110

Sethi J, Mittal M (2022) Efficient weighted Naive Bayes classifiers to predict air quality index. Earth Sci Inform 15:1–12. https://doi.org/10.1007/s12145-021-00755-7

Li L, Zhou Z, Bai N et al (2022) Naive Bayes classifier based on memristor nonlinear conductance. Microelectron J 129:1–9. https://doi.org/10.1016/j.mejo.2022.105574

Zhang J, Li C, Yin Y et al (2023) Applications of artificial neural networks in microorganism image analysis: a comprehensive review from conventional multilayer perceptron to popular convolutional neural network and potential visual transformer. Artif Intell Rev 56:1013–1070. https://doi.org/10.1007/s10462-022-10192-7

Pan H, Chen Sh, Xiong H (2023) A high-dimensional feature selection method based on modified gray wolf optimization. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2023.110031

elpv-dataset (GitHub repository). https://github.com/zae-bayern/elpv-dataset. Accessed 21 June 2023

Buerhop-Lutz C, Deitsch S, Maier A et al (2018) A benchmark for visual identification of defective solar cells in electroluminescence imagery. In: Proceedings of the European PV solar energy conference and exhibition (EU PVSEC), Brussels, Belgium, pp 24–28

Hajji M, Harkat M, Kouadri A et al (2021) Multivariate feature extraction based supervised machine learning for fault detection and diagnosis in photovoltaic systems. Eur J Control. https://doi.org/10.1016/j.ejcon.2020.03.004

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Ethics declarations

Conflict of interest

The author declares that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shaban, W.M. Detection and classification of photovoltaic module defects based on artificial intelligence. Neural Comput & Applic (2024). https://doi.org/10.1007/s00521-024-10000-z

DOI: https://doi.org/10.1007/s00521-024-10000-z