Abstract

The advent of Internet-of-Things (IoT)-based telemedicine systems has ushered in a new era of technology that facilitates early diagnosis and prevention for remote patients. This is particularly crucial for severe illnesses such as Alzheimer's disease, in which memory loss and cognitive dysfunction significantly impair daily life and call for immediate medical attention. The surge in data from intelligent systems, sourced from diverse locations, has increased complexity and reduced diagnostic accuracy. In response, this study proposes a distributed learning-based classification model built on a deep convolutional neural network (CNN) classifier. The model handles clinical images from disparate sources and classifies disease with high accuracy. The research introduces a system for automated Alzheimer's disease detection and healthcare delivery comprising two subsystems: one dedicated to Alzheimer's diagnosis using a CNN, and another for healthcare treatment. The system can be adapted to other diseases after training on suitable data. After 200 training epochs without noise, the model reached an accuracy of 94.91% with a loss of 0.1158 and a validation accuracy of 96.60% with a loss of 0.0922; with noise added, it reached an accuracy of 87.13% with a loss of 0.2938 and a validation accuracy of 90.69% with a loss of 0.2387. Precision, recall, and F1 scores are presented in a classification report, underscoring the system's effectiveness in categorizing Mild Demented and Non-Demented cases. While there is room for further enhancement, this study offers a promising avenue for telemedicine systems that support the early diagnosis and treatment of Alzheimer's disease and related medical conditions, thereby improving patients' quality of life.


1 Introduction

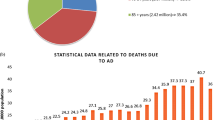

The World Health Organization (WHO) reports that 47.5 million individuals worldwide have dementia, a premature cognitive decline beyond biological aging. Most people with dementia have Alzheimer’s disease (AD). It has a cumulative effect on memory, thought, behavior, and the ability to carry out routine tasks. There is no cure or viable medical therapy for this complicated and permanent neurological condition. Nevertheless, preventative medicine may slow the development of the illness if signs of cognitive impairment are caught early [1]. The standard approach to diagnosing Alzheimer’s disease is to track the cognitive changes of a patient with mild cognitive impairment (MCI) over time. This means that physicians can only diagnose AD once the illness has progressed to the point where symptoms are noticeable. However, Alzheimer’s disease is caused by the gradual death (degeneration) of brain cells.

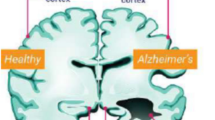

This deterioration may be seen in brain scans even before the onset of symptoms [2]. Magnetic resonance imaging (MRI) [3] and other structural imaging techniques can be used to detect brain shrinkage as well as localized injury to specific regions (such as the hippocampus). MRI has made it possible for researchers to obtain a three-dimensional (3D) image of the brain’s structure and determine the precise dimensions of the hippocampus and other important brain regions. For this reason, MRI-based diagnostics are now routinely used in clinical settings to assess dementia [4, 5]. Neuropathologists study extensive regions of the brain to find unique and highly differentiated morphologies [6]. Nevertheless, this is a challenging and subjective activity requiring a high degree of competence to assess the pictures accurately. MRI is impracticable for regular examinations because of its high cost, long processing times, and potential for expert disagreement. The use of computer-assisted diagnostic (CAD) technologies could lessen these drawbacks. Numerous data types are frequently used (e.g., genetic analysis, positron emission tomography, and magnetic resonance imaging) [7], but this adds complexity because several regularizations are required. Because of this, CAD techniques that rely solely on a single data source, like MRI, frequently have the highest success rates [8]. Current MRI-based CAD methods can use a collection of 2D slices [9] or the whole 3D brain volume [10].

Early research used standard algorithmic pipelines (manually engineered features paired with shallow classifiers) [11]. Echoing the current trend in medical imaging, deep learning (DL) is quickly becoming the most popular method for automated brain feature extraction [12,13,14]. The computational cost of applying DL to 3D brain volumes is high [10] because it depends on a large number of trainable parameters. The scarcity of 3D data and the curse of dimensionality further limit the ability to produce accurate models [15]. Additionally, pre-trained 2D models (trained on massive image datasets) are more widely available than pre-trained 3D models [16]. For these reasons, deep models based on 2D data have the potential to be more accurate in this area, but many questions remain unanswered. In the majority of cases, 2D MRI slices were chosen at random from the available 3D data of all subjects, without regard to the subject they came from, in order to test how well various methods separate the data into two groups (normal vs. dementia) at the slice level [17, 18]. Because slices from the same person may then appear in both the training and testing stages, the distribution of the test data may resemble that of the training set more closely than would be expected for data collected from entirely new individuals. This is referred to as the “data leakage” problem in machine learning [19]; it has called into question the validity of numerous past MRI-based CAD studies and raised concerns about their use in real clinical screening [20]. Given the scarcity of research, automated classification capability is still insufficient for MRI-based CAD to be effective in clinical settings [9, 21]. It is also unclear how many 2D slices should be extracted from the 3D MRI volumes.

Adding more slices per subject might provide some discriminative information but also contributes a lot of noise. The literature offers as benchmarks only examples in which an arbitrary number of slices was assigned to each subject. The most recent and powerful CNN models have transformed computer vision, but they have not yet been tested for AD diagnosis using MRI data. This is perhaps a result of the laborious and time-consuming data preparation, model validation, and model implementation processes used by the machine learning community [22].

Additionally, it has not been confirmed whether other recent machine learning advancements outside of convolutional neural networks are viable. It has been demonstrated that self-attention may be a useful method for creating picture recognition models [23]. To our knowledge, this field of study has been less investigated in CAD, but it can be utilized to highlight important parts of an image to extract high-level data. New, ground-breaking tests have been conducted using 3D brain data recently, but no work has been done on topics connected to AD [24].

By addressing the aforementioned constraints, this study attempts to improve the automated detection of dementia in MRI brain data. Current state-of-the-art classification methods cannot fully capture the information crucial for dementia identification that is hypothesized to be present in the 2D slices extracted from MRI brain data. The objective, therefore, is to use more robust methods that can more accurately model the data distribution, preserve information that may be used to identify dementia, and discard extraneous data. The established pipeline of registration, slicing, and classification was used for this purpose. This research examines three state-of-the-art CNN architectures as well as two methods based on vision transformers (ViT) [25] for mapping input images to clinical diagnoses. In particular, the ResNet [26], DenseNet [27], and EfficientNet [28] architectures were tested. Their efficacy has been demonstrated in various medical image analysis applications [29], and they are now among the top-performing methods for image classification.

In contrast, data-efficient image transformers (DeiT) [30, 31] use significantly less data and computing resources to build an effective image classification model because of their redesigned training methodology. Masked autoencoders (MAE) [32] are learners that rely exclusively on self-attention and can automatically highlight significant regions in brain images. This is valuable for potentially fatal diseases such as Alzheimer’s, the most common form of dementia (a catch-all term for memory loss and the decline of other cognitive functions severe enough to interfere with daily functioning), which require immediate medical intervention [33].

Numerous medical imaging techniques have been applied in the treatment of cancer patients to aid in early identification, monitoring, and follow-up [34]. Interpreting a large number of medical images takes doctors considerable effort and time, and manual interpretation can be biased and error-prone. By automating these processes and analyzing medical images precisely and efficiently, computer-aided diagnosis assists physicians in the early detection of cancer [35]. Many techniques for computer-aided cancer diagnosis have been developed recently [36]. Deep learning models help physicians identify cancer from medical images in its early stages. These models have been successfully applied in many domains, including cybersecurity and disease diagnosis [37].

One of the most difficult cancers to identify is brain cancer. It is an uncontrolled growth that can affect any area of the brain. Distinguishing the affected tissue from healthy brain tissue is the most difficult challenge in detecting brain cancer, and numerous investigations have addressed it. Guo et al. [55] proposed two techniques based on two-dimensional and three-dimensional CNNs. The suggested methods extract salient features from two-dimensional slices and three-dimensional brain images. The scale-invariant feature transform (SIFT) and KAZE features performed worse than the combination of these two methods.

In a similar vein, Pereira et al. [38] proposed an automatic MRI image segmentation method using a CNN. In addition to intensity normalization, the authors examined various methods for identifying brain tumors. To segment images of brain tumors, Zhao et al. [39] recommended a hybrid method that combined a fully convolutional neural network and a conditional random field. In the first phase, the authors trained the fully convolutional neural network and the conditional random field on image patches; finally, they used image slices directly to fine-tune their method. Building on a dense training technique for CNN models, Kamnitsas et al. [40] proposed processing neighboring image patches in a single pass, and they used a three-dimensional fully connected conditional random field to reduce false positives. Likewise, a CNN was employed by the researchers in [41] to identify brain cancer.

The deep learning methods for detecting brain cancer are summarized in Table 1.

In experiments on two large, publicly available datasets, subject-level classification was greatly improved compared to state-of-the-art techniques. In addition, the knowledge extraction and generalization abilities of each CNN and ViT technique were assessed using various benchmarks, with the number of slices per subject ranging from 4 to 16. For a fair comparison of classification algorithms, 3D volume registration and 2D slice extraction were carried out in the same manner as in comparable studies in the literature. The article does not address the reliability issues caused by noise introduced during MRI acquisition with different scanning instruments. Several studies [42, 43, 44] discuss the importance of entropy in brain MRI data. The rest of the paper is structured as follows: materials and methods are discussed in Sect. 2, experimental findings are presented in Sect. 3, and Sect. 4 contains the paper’s conclusion.

Convolutional neural networks (CNNs) have garnered attention for their efficacy in time-series modeling and classification tasks. LSTM-ALO (adaptive learning optimization) models, for instance, offer capabilities in capturing long-range dependencies and temporal dynamics within sequential data, making them suitable for analyzing time-series datasets characteristic of medical records. Similarly, CNN-INFO (Information Maximizing Convolutional Neural Networks) have demonstrated proficiency in extracting salient features from complex data representations, facilitating accurate classification and prediction tasks in diverse domains. While these models present compelling avenues for application in healthcare analytics, our study focuses on the integration of a deep CNN classifier within an Internet-of-Things (IoT)-based telemedicine system for Alzheimer's disease detection.

We discuss the rationale behind our decision not to adopt LSTM-ALO, CNN-INFO, and other recent deep learning models in the context of our study. While LSTM-ALO models excel in capturing temporal dependencies, they may introduce computational overheads and complexity unsuitable for real-time telemedicine applications, which demand efficient processing and decision-making. Similarly, although CNN-INFO models offer robust feature extraction capabilities, their architecture may not be optimized for handling the multidimensional image data characteristic of neuroimaging studies utilized in Alzheimer’s disease diagnosis. By elucidating the considerations and constraints specific to our research objectives, we aim to provide clarity on the methodology adopted and its alignment with the overarching goals of advancing telemedicine systems for Alzheimer’s disease detection and health-care delivery.

2 Methodology

The intelligent system consists of two subsystems: an Alzheimer’s disease (AD) diagnosis subsystem and a health-care treatment subsystem. The proposed intelligent system model is illustrated in Fig. 1.

2.1 Classification algorithm

A convolutional neural network (CNN) is employed for the classification of Alzheimer’s disease (AD) using magnetic resonance imaging (MRI). The network is trained on Mild demented and Nondemented MRI images to detect the early stages of Alzheimer’s disease. In essence, the CNN classifies the MRI images of patients as either Mild demented or Nondemented. The CNN uses the Adam optimizer with a learning rate of 0.001, and its Mild demented and Nondemented images are drawn from several public databases, including OASIS, ADNI, MIRIAD, and AIBL. The CNN architecture is composed of four convolutional layers with a kernel size of 3 × 3, each followed by a rectified linear unit (ReLU) activation function. These layers are designed to process input images sized at 150 × 150 × 3.

The algorithm encompasses two key phases: (I) a preprocessing testing phase and (II) a combined training and testing phase. Figure 2 illustrates the block diagram representing the AD classification algorithm.

- Datasets used

The OASIS, ADNI, MIRIAD, and AIBL datasets are valuable sources of medical and neuroimaging data that have played pivotal roles in various studies, particularly in the field of Alzheimer’s disease research:

1. OASIS (Open Access Series of Imaging Studies):

- OASIS is an open-access dataset that includes brain imaging data, such as MRI and PET scans.
- It features data from both healthy individuals and those with Alzheimer’s disease.
- OASIS has contributed significantly to the development of diagnostic and predictive models for Alzheimer’s disease.

2. ADNI (Alzheimer’s Disease Neuroimaging Initiative):

- ADNI is a large, longitudinal study that collects neuroimaging, genetic, and clinical data.
- It focuses on individuals with Alzheimer’s disease, mild cognitive impairment, and healthy controls.
- ADNI has been instrumental in understanding the progression of Alzheimer’s disease and in developing early diagnostic tools.

3. MIRIAD (Minimal Interval Resonance Imaging in Alzheimer’s Disease):

- MIRIAD is a dataset dedicated to the study of Alzheimer’s disease and related dementias.
- It comprises MRI data, including structural and functional imaging, from participants with Alzheimer’s disease and controls.
- MIRIAD has been used to investigate brain changes associated with the disease.

4. AIBL (Australian Imaging, Biomarkers, & Lifestyle Flagship Study of Aging):

- AIBL is a comprehensive dataset that collects neuroimaging, genetic, and lifestyle data.
- It includes participants with Alzheimer’s disease, mild cognitive impairment, and healthy aging controls.
- AIBL has been valuable in exploring the relationship between genetics, lifestyle factors, and brain health in aging and Alzheimer’s research.

2.1.1 Preprocessing testing phase

In this phase, the input image undergoes four preprocessing steps: rescaling by 1/255, shearing by 0.2, zooming by 0.2, and horizontal flipping. Finally, the preprocessed image is resized to 150 × 150 × 3 before the training and testing phase is applied.
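As a rough illustration of two of these steps, the sketch below applies the 1/255 rescaling and a horizontal flip to a tiny grayscale image stored as nested lists. The function names `rescale` and `horizontal_flip` and the pixel values are hypothetical, and the shear and zoom steps (0.2 each) are left out because they require interpolation; this is not the authors' implementation.

```python
def rescale(image, factor=1.0 / 255.0):
    """Multiply every pixel by `factor`, mapping 0..255 intensities to 0..1."""
    return [[pixel * factor for pixel in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left to right by reversing each row."""
    return [row[::-1] for row in image]


# Tiny 2 x 3 example image with 8-bit intensities (made-up values).
raw = [[0, 128, 255],
       [255, 0, 64]]

scaled = rescale(raw)                # values now lie in the range 0..1
flipped = horizontal_flip(scaled)    # columns appear in reverse order
```

Both helpers return new lists, so the original image is left untouched and the steps can be chained in any order.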

2.1.2 Training and testing phase

This phase is responsible for training and testing. It consists of preprocessing the training data, training, and the CNN. The dataset comprises 896 Mild demented and 3200 Nondemented MRI images, collected from the sources mentioned above.

-

Preprocessing training

Rescaling, shearing, zooming, and horizontal flips are applied in this unit using the same settings as in the preprocessing testing phase. The preprocessed data are then used to train the CNN.

-

Training data

In this step, we apply the supervised learning rule through the backpropagation algorithm, as depicted in Fig. 3, for training purposes.

The preprocessed image inputs constitute the initial raw data that are fed into the convolutional neural network (CNN) for training. In the feature extraction phase, the system extracts relevant features and reduces the dimensionality of inputs before they are trained in the classifier. Both the feature extraction and classifier components perform functions akin to encoders and decoders, and the details of the CNN architecture will be elaborated upon in the subsequent stage.

The classifier employed here is a fully connected neural network, and it employs the backpropagation algorithm for training and feature acquisition. To elucidate the training steps, we refer to Eqs. 1–6, which offer a clear depiction of the process. These equations outline the computation of error and the delta, denoted as δ, for the output nodes (as shown in Eqs. 1 and 2). Furthermore, Eqs. 3 and 4 detail the calculation of the backward deltas for the adjacent left nodes. Lastly, Eqs. 5 and 6 are employed to adjust the weights in accordance with the prescribed learning rule.

where \({\varphi } ^{\prime}(\cdot )\) is the derivative of the output node activation function, \({y}_{i}\) is the output, \({d}_{i}\) is the training data output, \({v}_{i}\) is the weighted sum of the corresponding node, and \({e}_{i}\) is the error.

where \(K\) is backward

where \({x}_{j}\) is the output of input node \(j\) \((j=1,2,3)\), \({e}_{i}\) is the error of output node \(i\), \({w}_{ij}\) is the weight between output node \(i\) and input node \(j\), and \(\alpha\) is the learning rate \((0<\alpha \le 1)\).
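Equations 1–6 themselves are not reproduced in this text. A standard form of the backpropagation (delta) rule that is consistent with the symbol definitions above is sketched here. The exact layout, the superscript \((K)\) for backward-propagated quantities, the index \(l\) over input nodes, and the use of \({\delta}_{i}\) rather than \({e}_{i}\) in the weight update are assumptions, not the authors' original typesetting.

```latex
\begin{align}
e_i &= d_i - y_i \tag{1}\\
\delta_i &= \varphi'(v_i)\, e_i \tag{2}\\
e_j^{(K)} &= \sum_i w_{ij}\, \delta_i \tag{3}\\
\delta_j^{(K)} &= \varphi'\!\left(v_j^{(K)}\right) e_j^{(K)} \tag{4}\\
\Delta w_{ij} &= \alpha\, \delta_i\, x_j \tag{5}\\
w_{ij} &\leftarrow w_{ij} + \Delta w_{ij} \tag{6}
\end{align}
```

Under this reading, Eqs. 3 and 4 are applied layer by layer from the output toward the input, and the update in Eqs. 5 and 6 is applied to a hidden-layer weight \({w}_{jl}\) with \({\delta}_{j}^{(K)}\) in place of \({\delta}_{i}\) and the input \({x}_{l}\) of that layer in place of \({x}_{j}\).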

-

CNN architecture

The architecture of the proposed CNN comprises two main components: the feature extractors and a classifier. In this layered sequential model for MRI image classification, each layer within the feature extraction section takes the output from the preceding layer as its input, and the resulting output is subsequently fed into the subsequent layers.

This model is structured with four convolutional layers, featuring 32, 64, 64, and 128 filters, respectively. Notably, the lower layers of the proposed network progressively incorporate more filters as they learn hidden patterns during network training and detect features within smaller image segments. As the network expands, the CNN layer architecture deepens, and the number of acquired characteristics at each layer is directly associated with the number of filters employed. The feature map size can be determined using the following equation:

where W, F, P, and S are the input size, filter size, padding, and stride, respectively.
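The equation itself is missing from this text. Assuming it is the usual output-size formula, floor((W − F + 2P) / S) + 1, the sketch below traces the spatial size of a 150 × 150 input through the network. The function name `feature_map_size` is hypothetical, and the padding and stride values are read from the pseudo-code later in this section ('same' padding for the 3 × 3 convolutions, 2 × 2 pooling with stride 2).

```python
def feature_map_size(w: int, f: int, p: int, s: int) -> int:
    """Output width/height of a convolution or pooling layer.

    w: input size, f: filter size, p: padding, s: stride.
    """
    return (w - f + 2 * p) // s + 1


# A 3x3 convolution with padding 1 and stride 1 preserves the size.
after_conv = feature_map_size(150, 3, 1, 1)

# Three 2x2 max-pooling layers with stride 2 and no padding.
sizes = [after_conv]
for _ in range(3):
    sizes.append(feature_map_size(sizes[-1], 2, 0, 2))
# sizes now holds the width after the convolution and after each pooling step
```

Since the 'same'-padded convolutions leave the size unchanged, only the pooling layers shrink the feature maps, from 150 to 75, 37, and finally 18 pixels per side.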

The default kernel size of 3 × 3 and a nonlinear rectified linear unit (ReLU) activation function are used. With ReLU, any neuron with a negative input value does not activate and outputs zero, which simplifies computation and speeds up training. Equation 8 shows the ReLU activation function:

Similarly, three max-pooling layers with a kernel window of size 2 × 2 are applied. The classifier is then a binary classification neural network, which classifies the input data as Mild demented or Nondemented. The neural network is constructed with a single output node and a sigmoid activation function. The sigmoid activation function is given in Eq. 9. Figure 3 shows the architecture of the proposed CNN.
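Equations 8 and 9 do not appear in this text. Assuming the standard definitions, ReLU(x) = max(0, x) and sigmoid(x) = 1 / (1 + e^(−x)), the two activation functions can be sketched as follows; the function names are illustrative only.

```python
import math


def relu(x: float) -> float:
    """Rectified linear unit: passes positive inputs, zeroes out negatives."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


negative_out = relu(-2.0)    # a negative input yields zero
positive_out = relu(3.5)     # a positive input passes through unchanged
midpoint = sigmoid(0.0)      # the sigmoid is 0.5 at zero
```

The sigmoid maps any real input into the interval (0, 1), which is why it suits a binary output that is read as a class probability.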

Tables 2 and 3 show CNN architecture parameters and a summary of the proposed CNN.

The pseudo-code for CNN training and evaluation will be illustrated as follows:

Pseudo-code for convolutional neural network (CNN) training and evaluation

# Import necessary libraries

# Define the paths to training and testing data

# Create data generators for training and testing

# Define input image shape (150,150,3)

# Create a convolutional neural network model

Conv2D(32, (3, 3), padding = 'same', activation = 'relu')(img_input)

Conv2D(64, (3, 3), padding = 'same', activation = 'relu')

MaxPool2D((2, 2), strides = (2, 2))

Dropout(0.25)

Conv2D(64, (3, 3), padding = 'same', activation = 'relu')

MaxPool2D((2, 2), strides = (2, 2))

Dropout(0.25)

Conv2D(128, (3, 3), padding = 'same', activation = 'relu')

MaxPool2D((2, 2), strides = (2, 2))

Dropout(0.25)

Flatten()

Dense(64)

Dropout(0.5)

Dense(2, activation = 'sigmoid')

# Create the model

# Compile the model

# Train the model with batch_size = 64 and epochs = 200

# Plot loss function for training and validation

# Plot accuracy for training and validation

# Evaluate the model and display confusion matrix

# Save the trained model
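As a sanity check on the layer list above, the following sketch counts the trainable parameters layer by layer. It assumes the usual convention of one weight per kernel element and channel pair plus one bias per filter (or per dense unit), and a flattened size of 18 × 18 × 128 after the three pooling steps; the helper names are hypothetical.

```python
def conv2d_params(kernel: int, in_channels: int, out_channels: int) -> int:
    """Weights (kernel x kernel x in x out) plus one bias per filter."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights (inputs x units) plus one bias per unit."""
    return n_inputs * n_units + n_units


# Four 3x3 convolutions: 3 -> 32 -> 64 -> 64 -> 128 channels.
conv_total = (
    conv2d_params(3, 3, 32)
    + conv2d_params(3, 32, 64)
    + conv2d_params(3, 64, 64)
    + conv2d_params(3, 64, 128)
)

# 150 -> 75 -> 37 -> 18 after three 2x2 poolings, with 128 channels.
flattened = 18 * 18 * 128

dense_total = dense_params(flattened, 64) + dense_params(64, 2)
total_params = conv_total + dense_total
```

Under these assumptions the total comes to 2,784,578, which agrees with the parameter count reported in Sect. 3. Pooling, dropout, and flatten layers contribute no parameters.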

2.2 The health-care treatment

The health-care treatment subsystem plays a pivotal role in the delivery of prescription treatments to patients through the utilization of an intelligent medication box. It stands as one of the system’s core components, serving as a vital element in furnishing patients with essential medical care. This intelligent medication box comprises three integral components: a compact medication box, an embedded control system, and a mobile application.

2.2.1 Compact medication box

The intelligent box was designed using the MakerCase, Inkscape, and SolidWorks design tools and produced with a Prusa i3 3D printer and a laser cutter, then assembled into a compact medication box. It consists of three pill cones with a capacity of 50 pills each. The box measures 250 mm in height × 150 mm in width, and the outlet cone diameter is 18 mm. This model supplies three different types of medication to illustrate the idea; more types can be supported by increasing the number of cones. Figure 4 shows the design stages of the pill box.

2.2.2 Embedded control system

The embedded control system is responsible for controlling the medication box. It is designed to manage the supply of medication and medical care to patients at the specific times set by the doctor. It consists of an Arduino Uno, a Real-Time Clock (RTC), a Bluetooth module, three servo motors, and a buzzer. Figure 5 shows the circuit of the control system. The purpose of each component is as follows.

- The Arduino Uno is the microcontroller used to control the system.

- The Real-Time Clock (RTC), a DS1307, provides the system with the current time and date (seconds, minutes, hours, months, years).

- The Bluetooth module, an HM-06, exchanges data wirelessly between the Arduino microcontroller and the mobile application.

- Three SG-90 servo motors push the medication out of the three cones to the patient at the times set by the doctor.

- The buzzer reminds patients to take their pills on time.
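The dispensing decision that these components support can be sketched as a small scheduling check. This is a Python stand-in for logic that would run as Arduino firmware; the function `due_cones`, the schedule layout, and the example times are all hypothetical, and reading the RTC, sounding the buzzer, and driving the servos are left out.

```python
from datetime import time


def due_cones(schedule, now):
    """Return the cone indices whose dose time matches `now` to the minute."""
    return sorted(
        cone
        for cone, dose_times in schedule.items()
        if any((t.hour, t.minute) == (now.hour, now.minute) for t in dose_times)
    )


# Hypothetical prescription: cone index -> daily dose times set by the doctor.
schedule = {
    0: [time(8, 0), time(20, 0)],
    1: [time(8, 0)],
    2: [time(13, 30)],
}

morning = due_cones(schedule, time(8, 0, 15))   # cones 0 and 1 are due
idle = due_cones(schedule, time(9, 0))          # nothing is due
```

Comparing only hours and minutes means a dose stays due for the whole minute, so real firmware would also need to remember which doses it has already dispensed.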

2.2.3 Mobile application

The App Inventor program is used to design the mobile application of this system. The application is divided into three parts: the first is for the patient, the second is for the doctor, and the third is for the pharmacy. Figure 6a shows the home page of the mobile application.

The patient part is divided into two parts; the first is for the patient’s relatives or someone responsible for the patient, who can enter their data after signing up for the application.

In the second part, the patient receives the medical data (types and times of medications) from the doctor and enters these data into the application, which communicates with the medication box over Bluetooth. Figure 6b shows the patient pages of the mobile application. In the doctor part, the doctor sets the times and types of medications the patient needs and sends these data to the patient. Figure 6c illustrates the doctor’s page. The last part is for the pharmacy: the patient can call or send an SMS to the nearest pharmacy once the medication runs out. Figure 6d illustrates the pharmacy’s page.

3 Results

The proposed CNN model has 2,784,578 parameters in total. After 200 epochs of training, it reached loss: 0.1158, accuracy: 0.9491, val_loss: 0.0922, and val_accuracy: 0.9660. The system’s accuracy and loss are shown in Fig. 7.

3.1 Confusion metrics

The confusion matrix is used to evaluate the system’s performance. There are four performance metrics: accuracy, precision, recall, and F1 score. Equations 10–13 show the confusion matrix parameters and evaluation metrics illustrated in Fig. 8.

where TP, TN, FP, and FN are true positive, true negative, false positive, and false negative.
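Equations 10–13 are not reproduced in this text. Assuming the standard definitions of the four metrics, they can be computed from the confusion-matrix counts as sketched below. The function name and the example counts are made up for illustration and are not results from this study.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Illustrative counts only (not taken from the paper).
metrics = classification_metrics(tp=80, tn=90, fp=10, fn=20)
```

The guards against zero denominators matter for imbalanced data, where a classifier may never predict the minority class and precision would otherwise be undefined.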

Table 3 shows a summary of classification results.

The comparison between the proposed system’s accuracy and other approaches is illustrated in Table 4 and Fig. 9.

Moreover, the health-care treatment subsystem delivers medications to patients on time with 100% accuracy.

Several factors were considered and addressed in this work:

-

Integrating Many Datasets: Using datasets acquired with different medical imaging equipment helps avoid overfitting in our model and improves its accuracy.

-

Integration of IoT in Telemedicine: While IoT-based telemedicine systems have been explored previously, our work specifically focuses on integrating IoT technologies into the detection and prevention of Alzheimer’s disease. The incorporation of IoT devices enhances the accessibility of health-care services, providing early diagnosis and prevention for distant patients.

-

Distributed Learning-Based Classification Model: We propose an innovative distributed learning-based classification model utilizing a deep CNN classifier. This model effectively manages clinical data from dispersed sources, addressing the increasing complexity of handling data from intelligent systems. The distributed learning aspect ensures scalability and adaptability, making it suitable for various diseases beyond Alzheimer’s.

-

Highly Accurate Disease Classification: Our study achieves high accuracy in Alzheimer’s disease diagnosis using the CNN classifier. This accuracy is supported by a comprehensive classification report including precision, recall, and F1 scores. The results demonstrate the effectiveness of our proposed model in categorizing Mild demented and Nondemented cases.

-

Versatility for Other Diseases: The proposed telemedicine system is designed to be adaptable for various other diseases post-training. This adaptability enhances the system’s utility in addressing a broader spectrum of medical conditions, making it a versatile and valuable tool in the health-care domain.

With all of the above considerations in place, the proposed CNN model (total params: 2,784,578) was trained both without noise and with salt-and-pepper noise at a level of 0.05 added to the training dataset. Figure 10 illustrates Mild demented and Nondemented images with and without noise. After 200 epochs, the CNN without noise reached loss: 0.1158, accuracy: 0.9491, val_loss: 0.0922, val_accuracy: 0.9660, compared with loss: 0.2938, accuracy: 0.8713, val_loss: 0.2387, val_accuracy: 0.9069 for the CNN with noise. The system’s accuracy and loss are shown in Fig. 9.
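Salt-and-pepper noise of this kind can be sketched as follows. The sketch assumes that the 0.05 level is the fraction of pixels corrupted, that corrupted pixels are set to white or black with equal probability, and that intensities are already scaled to 0..1; the function name and these choices are illustrative, not the authors' code.

```python
import random


def add_salt_and_pepper(image, amount=0.05, seed=None):
    """Return a copy of `image` with roughly `amount` of its pixels
    replaced by 1.0 (salt) or 0.0 (pepper), chosen with equal probability."""
    rng = random.Random(seed)
    noisy = [row[:] for row in image]      # copy so the input is untouched
    for row in noisy:
        for col in range(len(row)):
            if rng.random() < amount:
                row[col] = 1.0 if rng.random() < 0.5 else 0.0
    return noisy


# Uniform gray 100 x 100 test image with intensities in 0..1.
clean = [[0.5] * 100 for _ in range(100)]
noisy = add_salt_and_pepper(clean, amount=0.05, seed=0)

# Count how many pixels were actually corrupted.
changed = sum(
    1 for r in range(100) for c in range(100) if noisy[r][c] != clean[r][c]
)
```

On a 100 × 100 image, about 500 pixels are expected to change, with the exact count depending on the random seed.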

3.2 Confusion metrics

The confusion matrix is used to evaluate the system’s performance. There are four performance metrics: accuracy, precision, recall, and F1 score. Equations 10–13 show the confusion matrix parameters and evaluation metrics illustrated in Fig. 10.

where TP, TN, FP, and FN are true positive, true negative, false positive, and false negative.

Table 5 shows a summary of classification results. The comparison between the proposed system’s accuracy and other approaches is illustrated in Table 5 and Fig. 11.

Moreover, the health-care treatment subsystem delivers medications to patients on time with 100% accuracy.

4 Conclusion

This research has introduced an Internet-of-Things-based telemedicine system, which is a ground-breaking technology with the potential to revolutionize health care by offering early diagnosis and prevention capabilities to patients, particularly those afflicted by severe illnesses such as Alzheimer’s disease. Alzheimer’s disease is a major contributor to the overall burden of dementia, characterized by memory loss and cognitive impairment that significantly hinders daily life, necessitating immediate medical attention. With the proliferation of intelligent systems, the challenge of handling and ensuring diagnostic accuracy for data from diverse sources has become increasingly complex. In response to this challenge, our study has proposed an innovative distributed learning-based classification model leveraging a deep convolutional neural network (CNN) classifier. This model effectively manages clinical data from disparate sources, resulting in highly accurate disease classification.

Our study presents a novel system designed for the automatic detection and health-care provision for Alzheimer’s patients. This system employs convolutional neural networks for Alzheimer’s disease diagnosis and offers comprehensive medical care. It comprises two subsystems: the Alzheimer’s disease diagnostic system, achieving 94.91% accuracy, and the health-care treatment system, which delivers medications on time. Importantly, the proposed system can be adapted to various other diseases following training on an appropriate dataset.

The results of our study demonstrate the model’s robust performance. After 200 epochs of training, the model achieved an outstanding accuracy of 94.91%, with a loss of 0.1158, and a validation accuracy of 96.60%, with a loss of 0.0922. The precision, recall, and F1 scores were computed, and the classification report revealed the system’s effectiveness in classifying Mild demented and Nondemented cases. While there is room for further improvement, this research has paved the way for the development of telemedicine systems that can significantly impact the early diagnosis and treatment of Alzheimer’s disease and other related medical conditions. This innovative approach holds great promise in advancing the field of health care and improving the lives of patients suffering from cognitive disorders and severe illnesses.

- This study presents a novel system for the automatic detection of Alzheimer’s disease and the provision of health care to Alzheimer’s patients. The system employs convolutional neural networks for diagnosis and offers comprehensive medical care. It comprises two subsystems: the Alzheimer’s disease diagnostic subsystem, which achieves 94.91% accuracy, and the health-care treatment subsystem, which delivers excellent results. The proposed system can also be adapted to other diseases after training on an appropriate dataset.

- This work introduces a novel model with robust performance. After 200 epochs of training, the model achieved an accuracy of 94.91% with a loss of 0.1158 and a validation accuracy of 96.60% with a loss of 0.0922 when trained without noise. For the CNN with noise, it achieved an accuracy of 87.13% with a loss of 0.2938 and a validation accuracy of 90.69% with a loss of 0.2387. Precision, recall, and F1 scores were computed, and the classification report showed the system’s effectiveness in classifying Mild demented and Nondemented cases.

- Internet-of-Things-based telemedicine and the CNN form the core of this system. Although there is still room for improvement, this research paves the way for telemedicine systems that can significantly impact the early diagnosis and treatment of Alzheimer’s disease and related medical conditions. The approach holds promise for advancing health care and improving the lives of patients with cognitive disorders and severe illnesses. We therefore recommend further research that applies AI to this approach.

Data availability

The images of Mild demented and Nondemented cases were drawn from several online databases: OASIS, ADNI, MIRIAD, and AIBL.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Contributions

Dr. Mohamed Ahmed Masoud performed 40% of the work, Dr. Wael Abouelwafa Ahmed performed 30%, and Dr. Mohamed E. El-Bouridy performed 30%. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Massoud, M.A., El-Bouridy, M.E. & Ahmed, W.A. Revolutionizing Alzheimer’s detection: an advanced telemedicine system integrating Internet-of-Things and convolutional neural networks. Neural Comput & Applic 36, 16411–16426 (2024). https://doi.org/10.1007/s00521-024-09859-9

DOI: https://doi.org/10.1007/s00521-024-09859-9