Abstract

In recent years, machine learning models have been increasingly used to detect security vulnerabilities in software, due to their ability to achieve high performance and lower false positive rates compared to traditional program analysis tools. However, these models often lack the capability to provide a clear explanation for why a program has been flagged as vulnerable, leaving developers with little reasoning to work with. We present a new method which not only identifies the presence of vulnerabilities in a program, but also the specific type of error, considering the whole program rather than just individual functions. Our approach utilizes graph neural networks that employ inter-procedural value flow graphs, and instruction embedding from the LLVM Intermediate Representation, to predict a class. By mapping these classes to the Common Weakness Enumeration list, we provide a clear indication of the security issue found, saving developers valuable time which would otherwise be spent analyzing a binary vulnerable/non-vulnerable label. To evaluate our method’s effectiveness, we used two datasets: one containing memory-related errors (out-of-bounds array accesses), and the other a range of vulnerabilities from the Juliet Test Suite, including buffer and integer overflows, format strings, and invalid frees. Our model, implemented using PyTorch and the Gated Graph Sequence Neural Network from Torch-Geometric, achieved a precision of 96.35% and 91.59% on the two datasets, respectively. Compared to common static analysis tools, our method produced roughly half the number of false positives, while identifying approximately three times the number of vulnerable samples. Compared to recent machine learning systems, we achieve similar performance while offering the added benefit of differentiating between classes.
Overall, our approach represents a meaningful improvement in software vulnerability detection, providing developers with valuable insights to better secure their code.


1 Introduction

One of the most common causes of security vulnerabilities found in computer systems is software bugs, or errors. These errors cause programs to behave incorrectly, indirectly leading to data loss, system compromise and damage. These bugs are usually disclosed as security advisories, and published in databases such as the MITRE Common Vulnerabilities and Exposures (CVE) List [1], or the National Vulnerability Database (NVD) [2]. Analysis of yearly CVE disclosures has shown significant growth in the number of advisories published in the last 5 years, and no signs of reduction [3], with thousands of new vulnerabilities being discovered every month. Of greater concern is the number of zero-day attacks in the wild, with Rapid7 finding there has been a significant increase from the years 2020 to 2021 alone [4]. A zero-day attack is where an unpatched vulnerability in software is being actively exploited by malicious actors, leading to a race between both the software developers and IT professionals to patch/protect systems before significant damage can be caused.

Early detection of vulnerabilities is critical for reducing the frequency of these zero-day attacks, with bugs ideally being identified prior to software shipping. Traditionally, this has been done through a combination of static program analysis, manual auditing performed by humans, and fuzzing/genetic-based systems. Each of these approaches has both upsides and downsides: for example, static analysis usually results in high false positive rates and sub-optimal detection rates [5], and fuzzing can have extremely long time intervals between error discovery [6]. In the literature, machine learning has overtaken traditional software analysis methods for vulnerability detection, with Hanif et al. finding that 74% of publications used some form of machine learning [7], with deep learning being the most popular.

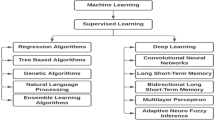

Earlier work using machine learning for bug detection dates back to 2012, where Hovsepyan et al. utilized text analysis techniques, combined with a support vector machine for detecting errors [8]. Other approaches include Long Short-Term Memory-based gadget classification of intra-procedural slices [9], as well as convolutional neural networks based on natural language processing (NLP) techniques, both at the source code level [10, 11], and assembly/instruction level [12]. However, we believe that using NLP-based methods significantly increases the difficulty of the problem. Not only does the classifier have to learn the characteristics of the errors it is trying to detect, but it also has to learn the semantics of the language it is analyzing. Furthermore, the detection system requires a large enough training set, particular to the language being analyzed, and a model trained on one language is not directly transferable to other languages.

Instead of detecting vulnerabilities in the program’s code, whether it be source or binaries, we considered an alternative approach: Can we train a machine learning model to detect vulnerabilities in a value flow graph computed from the program? Using such a graph representation of the program would make the detection model less dependent on the original language that the program is written in, and its semantics, as instead we are examining where and how data are used in computation. In addition, we can detect vulnerabilities across function and file boundaries, as value flows are computed for the entire program, compared to analyzing individual functions in isolation. Having this greater amount of information can lead to higher precision for detection; however, it is a trade-off, as such performance is dependent on the model’s ability to filter out flows irrelevant to the learning task.

Value flow graphs are a state-of-the-art representation of programs, incorporating both control and data flow, and alias-aware memory analysis. This makes them an ideal candidate for vulnerability detection applications, as combined with the aforementioned fact that value flows are computed interprocedurally, we can reason about the security of the whole program. The value flow graphs contain complex relationships, describing how data traverses the program, with larger programs containing tens of thousands of nodes and edges. These types of graphs are usually used for optimization and program analysis tasks, such as [13], and not machine learning applications. Graph neural networks are known for their ability to transform and capture attributes of both the data and relations contained within graphs [14], and paired with the value flow graphs, represent a natural fit for our task of software vulnerability detection.

Using neural networks for vulnerability detection provides many benefits, including the ability to learn new types of vulnerabilities through data, rather than having to manually identify the characteristics of the vulnerability, and write rules which systems can then apply. Compared to runtime/coverage-based analysis, such as fuzzing, where non-trivial configuration time is required, machine learning systems can analyze programs with minimal effort from the user. However, as a result, some flexibility is lost, as many of these machine learning systems are trained to simply identify the presence of vulnerabilities, rather than reporting the specific type of error. While identification, or binary classification is still valuable, it still requires an expert to identify the exact type of error and propose a solution. For this reason, we chose to build a multi-class classification system, which not only identifies vulnerabilities but reports the types of errors as well, saving human time by reducing the range of errors that must be considered. However, it is a more difficult classification problem, as the model must now identify which type of weaknesses the features of the error most closely align with. Despite the challenges, our research suggests that multi-class machine learning systems can achieve high performance, exceeding that of rule-based systems, which are also capable of identifying multiple classes/vulnerability types.

2 Our contributions

In this article, we present a new method for multi-class vulnerability detection in programs, utilizing static, inter-procedural value flow graphs and rich instruction embedding as a program representation, and a graph neural network for classification. Figure 1 shows a high-level overview of the process for determining which vulnerability a sample contains. Our method begins by pre-processing the input program (Sect. 5), which includes computing the value flow graph and transforming said graph into a form suitable for input into a neural network. In addition to the graph itself, we also compute an embedding from both the graph node attributes and the original instruction from which the graph nodes were derived (Sect. 5.3). Our machine learning model predicts a multi-label category from the sample’s graph and embeddings, suggesting which vulnerabilities the sample may contain, based on a probability for each class. We evaluated the model’s performance by:

- Generating two datasets for evaluating our model: a synthetic dataset with simple memory-related errors, using the Csmith program generation tool [15], as well as a subset of the Juliet Test Suite [16]. We chose to focus on memory errors due to the high percentage of security vulnerabilities being related to some kind of memory safety issues [17, 18], and value flow graphs providing a means of capturing the patterns that lead to these types of errors [19].
- Benchmarking our model in multi-class vulnerability detection, identifying and reasoning about the areas of weakness.
- Benchmarking our model against alternative machine learning systems and static analysis tools. As these systems only produce binary classifications, we had to also benchmark our model in binary mode to enable comparison.
- Examining the impact of the sub-features in the node embedding, determining which components are most critical for the downstream learning task, and how much value is added by providing the model with more concise detail in the input, rather than relying on learning alone.

3 Background

3.1 Inter-procedural security vulnerabilities

As mentioned in Sect. 1, errors in program code, or bugs, are one of the most common causes of security vulnerabilities in computer software. The CWE list is a "list of software and hardware weakness types" [1] which groups these security vulnerabilities into categories, based on the characteristics of the bug itself. These categories include memory errors, such as out-of-bounds reads/writes, integer overflow/underflow, uncontrolled format string, and hundreds of others [1].

Figure 2 shows an example of an inter-procedural buffer overflow error. We allocate storage for an array of 10 integers, using the malloc function provided by the C library, call func_b, which stores 10 integers to this array, and then print out the first element in the array. Since the size parameter in malloc refers to the number of bytes of storage to allocate, not the number of elements to allocate, instead of allocating 40 bytes of storage, we are only allocating 10 bytes. In this case, the programmer should multiply the number of elements by the size of each element (malloc(10 * sizeof(int))), or use an alternative routine such as calloc, which also handles the case where the multiplication results in an integer overflow. Such errors are very common vulnerabilities, with out-of-bounds writes being in the top 25 most dangerous software weaknesses [1].

With the above example, neither function on its own contains a buffer overflow error. While func_a allocates an incorrect number of bytes, no writes occur within the function itself. The overflow occurs in func_b, which assumes that the array parameter has at least 40 bytes of storage allocated. Therefore, the vulnerability exists in func_b, but only in the context of func_a calling it, making it an inter-procedural error. This makes considering each function in isolation insufficient for detecting these types of errors, as the label is dependent on the context. Following the example further, these two functions may not even be defined in the same file, or be contained within a different library which is resolved later at link time. With the large range of possibilities, we can argue that security is a property of the whole program, rather than something which we can reason about for individual functions, or even source files, highlighting the importance of using inter-procedural techniques for error detection.

3.2 Inter-procedural value flow graphs

Value flow analysis encompasses both the control and data dependencies of a program, in contrast to control or data flow graphs, which only consider one of these in isolation. We utilize SVF (Static Value-Flow Analysis Framework), a framework for scalable and precise inter-procedural static value flow analysis [19]. SVF is a state-of-the-art tool for analyzing C-like programs and computing value flows, by "leveraging recent advances in sparse analysis" [19]. The view of all value flows across a program forms the inter-procedural value flow graph, a representation of program dependencies produced by SVF analysis. Such graphs have previously been used for detecting memory leaks, uninitialized variables, security vulnerabilities, and tainted information flow [20,21,22]. The inter-procedural nature of these graphs provides further benefit in a bug detection context: as security is a property of the entire program, not just one function, considering functions in isolation is insufficient for identifying some vulnerabilities.

Figure 3 shows a portion of the value flow graph generated for three functions in a simple C program, which stores the constant integer 42 to a pointer provided as a formal parameter, and copies it to another stack variable in a second function call. This example demonstrates SVF’s ability to track program dependencies and data flow across procedures, in addition to the flow across memory, as the value is being stored to the stack and reloaded.

SVF uses Andersen’s pointer analysis [23] to build a points-to set, which is used to derive define-use chains for variables where the address is taken, as well as top-level definitions, capturing alias-aware value flows. This method is key to SVF’s ability to precisely analyze large programs in reasonable amounts of time, compared to more traditional iterative approaches [19]. The output of the analysis steps is combined with the program’s IR-level instructions to produce a value flow graph, which can be utilized for the aforementioned applications. For example, points-to analysis can be also utilized to statically identify the memory buffers that each pointer references, and with the metadata from the buffer, identify whether a given access is safe. This also extends to other types of errors, such as numerical overflow, as the usages of all variables in the program can be traced through the graph, we can reason more accurately about the domain of operations, such as additions, and ignore locations when the operation is conclusively safe.

More recently, SVF has been utilized for semantic labeling and code captioning tasks (Flow2Vec, [24]), outperforming previous state-of-the-art approaches such as Code2Vec [25]. While Flow2Vec utilized higher-order proximity embedding to transform the value flow graph to a vector representation, in contrast to our proposed method of using Graph Neural Networks, this work highlights the value in using alias-aware program analysis for these kinds of tasks when compared to simpler methods such as AST paths, utilized by Code2Vec. SVF has also been used for vulnerability detection as recently as 2022, although with a focus on binary classification, using a separate model trained for each type of weakness [21, 22].

SVF is built on the LLVM compiler framework [13], with the intermediate representation being used as the source from which the inter-procedural static value flow graph is computed. LLVM is "a collection of modular and reusable compiler and toolchain technologies" [26]. Programs are compiled to the LLVM intermediate representation (IR), a strongly typed, static single assignment (SSA) bitcode format. LLVM provides a range of tools which operate on the intermediate representation, including analyzers and optimizers [27]. Code generation backends are available for many popular architectures, including x86, ARM, MIPS, RISC-V, and others. The LLVM framework, including the intermediate representation and backends, is used for a range of languages, including C/C++, Rust, Swift, Zig, and others [26].

3.3 Graph neural networks

Graph neural networks have seen an increase in use over the last decade, as a method for performing learning and prediction type tasks on graph structured data, with a jump in popularity with the publication of The Graph Neural Network Model [28]. Types of data often represented in graph form include social media connections, citation networks, molecular sets, and encyclopedias [14]. Two of the common operations performed by these networks are node-based classification, where a label or class is predicted for every node in the graph based on its attributes and connections, and graph classification, where a label or class is predicted for the entire graph (considering all nodes and edges) [29].

The most relevant graph network model to our work is the Gated Graph Sequence Neural Network (GGSNN) [30]. The GGSNN produces a feature vector of user-specified length for each node in the graph, based on the attributes of the graph nodes, as well as the edges between them. The GGSNN has seen use for text-based reasoning applications, as well as program verification [30]. More recently, and most relevant, was its usage in the Devign vulnerability detection model [31]. Devign used the GGSNN with graphs representing programs (Abstract Syntax Trees/Control Flow Graphs/Data Flow Graphs) to learn to detect intra-procedural errors, and its success in this application was the main influence for our choice of this network design for our detection model.

4 Datasets

In this section, we describe how we generated and pre-processed the datasets used to train and evaluate the vulnerability detection model. Two datasets were generated for our experiments: one fully synthetic, focusing on out-of-bounds memory errors among complex control flow, and a second based on the well-studied Juliet Test Suite, covering a wider range of vulnerabilities.

4.1 Dataset A: fully synthetic/out-of-bounds errors

As the number of memory safety errors in security vulnerabilities is proportionately high [17, 18], our initial evaluation was performed on a synthetically generated dataset of memory errors. This allowed us to efficiently generate the large amounts of data required for training. We considered using data augmentation, but naïve approaches of mutating the input source code would not be usable in our application, as to compute the value flow graph, the input code must compile successfully.

We utilized the Csmith [15] test generation tool to generate these synthetic programs. Csmith "generates random C programs that statically and dynamically conform to the C99 standard" [32]. It is useful for "stress testing compilers, static analyzers, and other tools that process C code" [32]. A range of options are supported, including injecting branches, loops, arrays, structs, and unions into the generated code. For this dataset, we decided to begin with simple, intra-procedural memory-based errors, and Csmith’s option to inject out-of-bounds array accesses satisfied this criterion. When generating these programs, Csmith reports the number of out-of-bounds accesses injected into the program, which we used to label the samples.

50,000 vulnerable and 50,000 non-vulnerable samples were generated for this dataset. Figure 4 shows a snippet of one of these samples, with the out-of-bounds access occurring for the first 1000 iterations of the loop (l_4).

4.2 Dataset B: Juliet test suite

To evaluate the model’s performance on a wider range of bug types, we utilized the Juliet Test Suite. The Juliet Test Suite is packaged as a set of test cases, grouped by the CWE type, or vulnerability. In some cases, the example is limited only to a single source file, but in others, the example spans multiple source files/translation units. Prior experimentation has shown that considering these source files in isolation is insufficient for bug detection, and that analysis tools need to perform whole program analysis to accurately detect errors, which is a trait shared with real-world applications. Figure 5 shows an example of one of the vulnerable programs in the Juliet Test Suite, with comments removed and identifiers shortened for improving readability.

The program shown in Fig. 5 is an example of an inter-procedural, as well as cross-compilation-unit vulnerability. While the stack-based buffer overflow occurs in the second file/function (badSink()), whether any overflow occurs is context dependent, similar to the example shown in Sect. 3.1. The caller must ensure that the buffer provided is sufficient to store the SRC_STRING string, which is 11 characters in total, including the null terminator. This presents a challenge for analysis tools, as not only do they have to consider the statements and instructions within a function itself when trying to identify errors, but also all callers of that function when the parameters are passed by reference. In this case, the caller is in 68a.c and the sink is in 68b.c, which means that an analysis tool which only examines one file at a time would be unable to detect these errors, except for generating a general warning about using potentially unsafe functions such as wcscpy, instead of its bounds-checked alternative, wcsncpy.

4.2.1 Test case grouping

While the Juliet Test Suite does contain build files, it was insufficient for our purposes since it packages all test cases together in a single binary, and we need to be able to label each test case with the weakness contained within. As mentioned in Sect. 4.2, test cases can span multiple source files; therefore, we developed a pre-processor which groups the source files for each test case.

The filenames of the test cases follow a predictable pattern; therefore, it is possible to group each case’s files together with simple string manipulation. Each of the files usually has two sections, one guarded by a pre-processor check for OMITGOOD, and another guarded by OMITBAD. We use the inverse of these checks to generate the labeled samples, i.e., OMITGOOD becomes the "vulnerable" sample, and OMITBAD becomes the "non-vulnerable" sample. To remove the sections of the program not applicable to the label, the macro is defined at compile time, when the LLVM bitcode is produced (see Sect. 5.1), prior to VFG generation.

Vulnerable samples were labeled with the CWE number the test case is associated with, for example, CWE121. Non-vulnerable samples across all weakness categories are grouped together under the label GOOD, regardless of which weakness they were demonstrating.
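
The grouping and labeling steps described above can be sketched as follows. This is a minimal illustration, not the pre-processor used in the experiments: the regular expression encodes an assumed filename shape (CWE identifier, description, variant number, optional single-letter part suffix such as the "68a"/"68b" pair from Sect. 4.2), and the function names and example filenames are hypothetical.

```python
import re
from collections import defaultdict

# Assumed filename shape (not taken from the Juliet documentation):
#   <CWE id>_<description>_<variant number><optional part letter>.<ext>
CASE_PATTERN = re.compile(
    r"^(?P<case>(?P<cwe>CWE\d+)_.+_\d+)(?P<part>[a-z])?\.(?:c|cpp|h)$"
)


def group_test_cases(filenames):
    """Group source files that belong to the same test case."""
    groups = defaultdict(list)
    for name in filenames:
        match = CASE_PATTERN.match(name)
        if match is None:
            continue  # shared support files that do not follow the pattern
        groups[match.group("case")].append(name)
    return {case: sorted(files) for case, files in groups.items()}


def labeled_samples(case_name):
    """Return the two samples built from one test case.

    Defining OMITGOOD removes the good code, leaving the vulnerable sample;
    defining OMITBAD removes the bad code, leaving the non-vulnerable sample.
    """
    cwe = case_name.split("_", 1)[0]
    return [
        {"define": "OMITGOOD", "label": cwe},
        {"define": "OMITBAD", "label": "GOOD"},
    ]


# Illustrative filenames only.
files = ["CWE121_demo_68a.c", "CWE121_demo_68b.c", "CWE190_demo_01.c", "io.c"]
groups = group_test_cases(files)
```

Each entry of `groups` would then be compiled twice, once per macro, giving one vulnerable and one GOOD sample per test case.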

4.2.2 Label selection

One of the issues with the Juliet Test Suite is the large range of test case counts across weakness classes. Some classes have thousands of test cases; whereas, others only have tens or hundreds. Such small numbers are insufficient for training neural networks; therefore, we chose a subset of memory and overflow-related errors that we believed would be the best fit for value flow analysis, rather than using the full dataset. Table 1 shows the classes we selected for our evaluation, with the number of samples evenly split between vulnerable and non-vulnerable for each class.

5 Data pre-processing

This section describes our method for pre-processing programs, producing the inputs to the classification model. The same pre-processing method would be followed for utilizing the trained model to make predictions in a practical application.

5.1 Program compilation

The SVF framework we utilize to produce the inter-procedural value flow graphs operates on LLVM modules; therefore, the C programs in our datasets must be compiled to LLVM bitcode to be analyzed. Many of the samples contained data flows via pointers which span multiple translation units (source files), and thus span LLVM modules (see Sect. 4.2.1). Analyzing each of these modules independently would fail to capture these data flows. To ensure these data flows were captured, we utilized the wllvm (Whole Program LLVM, Ravitch [33]) compiler driver, which retains a copy of the IR generated by the compiler frontend for each module. After all modules have been compiled, the extract-bc utility is utilized to combine and generate a single bitcode file from one or more original translation units. For the case where multiple translation units have duplicate functions with the same name, wllvm will add a suffix to avoid conflicts. Compiler optimization was not enabled during our testing, as many of the programs contain undefined behavior which could be optimized out entirely, leading to mis-predictions. The result of the compilation step was a single bitcode file for each sample.

5.2 IVFG computation

The Whole Program Analysis (WPA) tool from the SVF framework for program analysis [19] was utilized to compute the inter-procedural value flow graph (IVFG) from the LLVM IR (bitcode) modules produced by the compiler. To compute the points-to set required for value flow analysis, Andersen’s pointer analysis [23] was utilized. This enables the resulting value flow graph to be alias-aware, and capable of identifying data flows through pointers which would otherwise not be possible by simply examining the data flow graph, as described in Sect. 3.2.

Generation of the value flow graph for each LLVM module was performed by a dataset-specific script, which annotated each graph with the associated label (vulnerability class or GOOD). Each of these graphs was written in graphviz (dot) format, suitable for loading by the node embedding process. As the value flow graph produced by the WPA tool does not always include the original instructions which led to the creation of the VFG nodes, we modified WPA to compute the instruction embedding at the time the graph is written to file, and to append this vector to the node attributes in graphviz format. This was achieved by loading the pre-computed vectors for each token (see Sect. 5.3), and generating/emitting the instruction embedding at the same time as processing the other per-node attributes.

5.3 Instruction embedding

While the value flow graph provides some information about the original instructions responsible for producing the data flow, some context is lost. For example, a LoadVFGNode represents a data load from a pointer, but the type of the data is not retained. To provide the model with a greater amount of information about the operations represented by the graph nodes, we compute an embedding based on the operation and operands of the instruction. Computing embeddings from LLVM IR instructions has been used with success in other applications, such as IR2Vec [34], although not for individual instructions in isolation. First, we split the instruction into tokens for its operation (opcode), type, and parameters (operands), as shown in Fig. 6.

Value identifiers (represented by the percentage sign and a number in the original IR) are dropped as they are meaningless outside of the function, when only examining a single instruction. The opcode is preserved based on its mnemonic (load). Types for both the parameter and instruction result are generalized; with a single token representing all integer types, another to represent floating point types, pointer types, and so on. Generalizing the types ensures the distance between resulting vectors of instructions with similar semantics is minimized.

Each of these tokens is looked up in an embedding dictionary containing 8-element vectors. A TransE [35] model was trained on triplets formed from the instruction’s tokens (opcode, resulting type, and operands), with the relation between these tokens being represented in vector space. The entire Juliet Test Suite was used as a source for generating the triplets, resulting in 48 unique tokens for the IR instructions and types contained within. We wish to retain as much information as possible in our embeddings, so that the model has a greater chance of learning the semantics of the input programs. Therefore, instead of summing the vectors for each token together after applying a weight to each, we concatenate these vectors, producing a longer vector, but with dedicated elements for each component of the instruction.

Examination of the IR produced by compiling the Juliet Test Suite dataset found that the most common number of operands per instruction in SSA form was 2, excluding the Get Element Pointer (GEP) instruction. We chose not to consider the GEP instruction because it is less useful for the instruction/node embedding, since pointers are captured in the graph itself. Therefore, we chose to only include the first two operands in the instruction embedding; any additional operands are ignored, and the corresponding sub-vectors for instructions with fewer than two operands (e.g., loads/stores) are filled with zero. The sub-vectors for operands which are present are populated only with the embedding for the type of the operand, as the SSA identifier (e.g., %2) does not provide any information about the data it represents outside of the context of the basic block, and the input relation to the instruction is already captured in the graph itself.

Using the load instruction example from Fig. 7, load i32, i32* %2, align 4, this would result in the first 24 elements of the vector being populated, and the last 8 being zeroed. An arithmetic operation, such as %7 = add nsw i32 %5, %6 would populate all 32 elements.
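
The concatenation scheme can be illustrated with a short sketch. It assumes the instruction has already been split into opcode, result type, and operand types, that the sub-vectors are ordered opcode, result type, first operand, second operand, and that the generalized type tokens are named `int`, `float`, `ptr`, and `other`; these names, the helper functions, and the placeholder dictionary are illustrative only. In the method described above, the 8-element vectors come from the trained TransE model rather than from constants.

```python
import re

EMB_DIM = 8        # width of each token vector, as stated in the text
MAX_OPERANDS = 2   # only the first two operands are embedded


def generalize_type(llvm_type):
    """Map a concrete LLVM type to a coarse token (token names are hypothetical)."""
    if llvm_type.endswith("*") or llvm_type == "ptr":
        return "ptr"
    if re.fullmatch(r"i\d+", llvm_type):
        return "int"
    if llvm_type in ("half", "float", "double"):
        return "float"
    return "other"


def embed_instruction(opcode, result_type, operand_types, table):
    """Concatenate token vectors into one 32-element instruction vector."""
    tokens = [opcode, generalize_type(result_type)]
    # Operands beyond the first two are ignored; SSA identifiers are never used.
    tokens += [generalize_type(t) for t in operand_types[:MAX_OPERANDS]]
    vector = []
    for token in tokens:
        vector.extend(table[token])
    # Zero-fill the sub-vectors of operands that are not present.
    vector.extend([0.0] * (EMB_DIM * (2 + MAX_OPERANDS) - len(vector)))
    return vector


# Placeholder dictionary standing in for the trained TransE embeddings.
TOKENS = ["load", "add", "int", "float", "ptr", "other"]
table = {tok: [float(i + 1)] * EMB_DIM for i, tok in enumerate(TOKENS)}

# load i32, i32* %2, align 4
load_vec = embed_instruction("load", "i32", ["i32*"], table)
# %7 = add nsw i32 %5, %6
add_vec = embed_instruction("add", "i32", ["i32", "i32"], table)
```

With these inputs, `load_vec` has its first 24 elements populated and its last 8 zeroed, while `add_vec` populates all 32 elements, matching the two cases described above.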

5.4 Node embedding

The gated graph layer used in our model requires a feature vector to be computed for each graph node, prior to feeding the data into the network. We include a vector representation of the graph node type, enabling the network to make decisions based on the type of data flow (for example, an addition of two variables, or a memory load). The type information captured from the LLVM IR instruction which the node was created from is also included. Combined, this produces a 40-element vector for each of the value flow graph nodes. This 40-element vector contains two sub-vectors:

- Node type sub-vector: To embed the node type information, we examine all graphs produced by the dataset, and identify the unique node types. For our benchmarks, there were 19 unique node types, such as LoadVFGNode or GepVFGNode. A Word2Vec [36] model was trained to produce a vector encoding of these node types, using the Gensim implementation. Each vector is 8 elements wide, with the hyper-parameters for the Word2Vec model set to the defaults.
- Instruction sub-vector: The LLVM IR instruction which the IVFG node was derived from forms the majority of vector elements. Both the instruction and types of its operands are utilized in the embedding process, described in Sect. 5.3. The node types which are not derived directly from IR instructions are zero-filled, but these represent only a small fraction of the graph.
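
As a small sketch of how the two sub-vectors combine, the function below builds the 40-element node feature. The function name is hypothetical, and placing the node type sub-vector before the instruction sub-vector is an assumption; the text only states the sizes of the two parts.

```python
NODE_TYPE_DIM = 8      # Word2Vec node type vector width
INSTRUCTION_DIM = 32   # instruction embedding width (Sect. 5.3)


def node_feature(node_type_vec, instruction_vec=None):
    """Build one node feature: node type sub-vector followed by instruction sub-vector."""
    if len(node_type_vec) != NODE_TYPE_DIM:
        raise ValueError("node type vector must have 8 elements")
    if instruction_vec is None:
        # Nodes not derived directly from an IR instruction are zero-filled.
        instruction_vec = [0.0] * INSTRUCTION_DIM
    if len(instruction_vec) != INSTRUCTION_DIM:
        raise ValueError("instruction vector must have 32 elements")
    return list(node_type_vec) + list(instruction_vec)


with_instruction = node_feature([0.5] * 8, [1.0] * 32)
without_instruction = node_feature([0.5] * 8)
```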

6 Model

In this section, we describe our proposed neural network model, for both single and multi-class classification of vulnerable programs. Similar network designs were used for a range of applications in [31, 37, 38]. Figure 8 shows a summary of the layers of the model, described in further detail below.

6.1 Input

The input to the model is formed by both the nodes in the sample’s inter-procedural value flow graph, and the edges between them representing transfer of data. Each node has a corresponding feature vector, computed based on the IVFG node type, as well as the IR instruction which it was originally produced from (Sect. 5.4).

The implementation of the Gated Graph Sequence layer that we utilized requires the number of nodes in the graph to be constant; therefore, we chose a fixed value based on the maximum IVFG size produced for our datasets. For our experiments, this was 1400 nodes, sufficient to fit all the graphs in the dataset, with no upper bound on the number of edges between nodes. Graphs with fewer nodes were padded with all-zero nodes to match the fixed size.
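The padding step can be sketched as follows. This is an illustration in plain Python rather than the tensor code used in the model; the function name `pad_node_features` is hypothetical, while the constants 1400 and 40 come from the text.

```python
from typing import List

MAX_NODES = 1400   # fixed graph size used in our experiments
FEATURE_DIM = 40   # node feature vector width (Sect. 5.4)


def pad_node_features(features: List[List[float]],
                      max_nodes: int = MAX_NODES,
                      feature_dim: int = FEATURE_DIM) -> List[List[float]]:
    """Append all-zero rows until the node feature matrix has max_nodes rows."""
    if len(features) > max_nodes:
        raise ValueError("graph has more nodes than the fixed input size")
    padding = [[0.0] * feature_dim for _ in range(max_nodes - len(features))]
    return [list(row) for row in features] + padding
```

Edges are left untouched, since the padded nodes have no incoming or outgoing value flows.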

6.2 Gated graph sequence layer

The Gated Graph Sequence Layer [30] is utilized to produce feature maps based on both the original vector for each graph node, as well as the edges between them. The GGS uses message passing and the Gated Recurrent Unit to reduce the variable number of graph edges to a fixed size output, which can be utilized by the downstream layers and transformations in the model.

In our experiments, we set the output size, or number of channels, to 200, therefore producing a final feature map of [1400 x 200] for each input sample. The number of layers, or rounds performed by the GGS, was set to 6, with the source-to-target messages aggregated by summation.

Considering the input node embeddings \(I_i\) (a matrix of \(EMB\_SIZE \times NUM\_NODES\), batch i), adjacency list \(A_i\), and W as the learned weight matrix, Propagate as the message passing function, and GRU as Gated Recurrent Unit [39], the Gated Graph Sequence Layer [30] is defined in Torch-Geometric [40] as:
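In the notation of the Torch-Geometric documentation for its gated graph convolution, the layer can be sketched as below. The per-node symbols \(x_v\) (the column of \(I_i\) belonging to node \(v\)), \(h_v^{(l)}\), \(m_v^{(l)}\), the neighbourhood \(\mathcal{N}(v)\) derived from \(A_i\), and the optional edge weight \(e_{u,v}\) are notation assumed for this sketch. The sum over neighbours plays the role of Propagate, and \(l\) indexes the rounds (6 in our experiments).

```latex
\begin{aligned}
h_v^{(0)}   &= x_v \,\Vert\, \mathbf{0} \\
m_v^{(l+1)} &= \sum_{u \in \mathcal{N}(v)} e_{u,v}\, W\, h_u^{(l)} \\
h_v^{(l+1)} &= \mathrm{GRU}\!\left(m_v^{(l+1)},\; h_v^{(l)}\right)
\end{aligned}
```

The first line zero-pads each 40-element input embedding up to the 200 output channels, and the hidden states after the final round form the feature map \(G_i\).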

6.3 Convolution layers

While the GGS layer does consider the spatial relation and flows between graph nodes, it still produces a fixed size feature vector for each node in the graph, leading to a very large feature map. To reduce the dimensionality of this feature map, and concentrate on nodes relevant to the classification task, as well as assist with learning relations between the feature vectors for nearby graph nodes, we utilize three one-dimensional convolutions, each followed by a Max Pooling layer. The number of channels for each of the convolutional layers was set to 600, 300, and 150, respectively, with a kernel size of 6, 1, 1, and padding of 1. The pooling layers used a kernel size of 3, 1, 1, and a stride of 1. Both Average and Max Pooling layers were evaluated, with Max Pooling achieving higher performance. After the pooling layers, the rectified linear unit (ReLU) activation function is applied, as we found it to provide the most consistent performance compared to sigmoid and hyperbolic tangent functions. After applying the three convolution and pooling layers, we are left with a [150 x 31] feature map, or 4650 elements, reduced from the [1400 x 200] output of the GGS.

Formally, we define the convolutional operation as C(x), incorporating the single-dimensional convolution, followed by max pooling and the ReLU activation function:

\[ C(x) = \mathrm{ReLU}(\mathrm{MaxPool}(\mathrm{Conv1D}(x))) \]

We then concatenate the feature map computed by the Gated Graph Sequence Layer \(G_i\) with the original node embeddings \(I_i\), producing \(L_1\), and apply the three convolutions in sequence, each with its own parameters, producing \(N_i\):

\[ L_1 = G_i \,\Vert\, I_i, \qquad N_i = C(C(C(L_1))) \]

6.4 Fully connected layers

The final component of the model is the multilayer perceptron (MLP). The feature map from the convolution layers is flattened to a single dimension, leaving 4650 elements, which is fed through two hidden layers to produce the network’s output. In our experiments, we set the dimension of the hidden layers to 1000, with the final layer size and activation depending on whether the model was being used for single or multi-class prediction. The MLP is responsible for learning the relationship between the large feature maps produced by the GGS and convolution layers, and the much smaller number of output classes that the model predicts. We utilized layer normalization and dropout layers before and after the hidden layers within the MLP, to improve the model’s generalization and reduce overfitting on the training set.

Formally, using the output of the convolutional layers \(N_i\), the Layer Normalization function LN, Dropout function Drop, the linear transformation Linear, and the prediction for the batch \(X_i\), this overall layer can be expressed as:

6.5 Model output

For binary classification, we apply the Sigmoid activation function to the output of the fully connected layer, resulting in a value between 0 and 1, representing the probability that the input program contains a vulnerability.

For multi-class classification, the last fully connected layer has a dimension of the number of classes plus one, with the additional neuron representing the probability that the input program contains no weaknesses. The softmax activation function is applied to the final output values, producing values between 0 and 1 for each class. The final prediction for the input is selected based on the class that has the highest probability.
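The two output modes can be illustrated with a small sketch in plain Python. The class names and the position of the "non-vulnerable" neuron in the example are assumptions for illustration only.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Binary mode: map the single output value to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits: Sequence[float]) -> List[float]:
    """Multi-class mode: normalize the outputs so that they sum to 1."""
    largest = max(logits)  # subtracted for numerical stability
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(logits: Sequence[float],
                  class_names: Sequence[str]) -> Tuple[str, float]:
    """Return the class with the highest probability, and that probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical example: two weakness classes plus the extra
# "non-vulnerable" neuron (its position in the list is an assumption).
names = ["non-vulnerable", "CWE121", "CWE190"]
label, confidence = predict_class([0.1, 2.0, -1.0], names)
```

In this example the second output is the largest, so the sample would be reported as CWE121.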

7 Evaluation

Experiments were conducted to answer four research questions:

- RQ1: How effective is our model at identifying samples containing any vulnerability?

- RQ2: How effective is our model at identifying the type of vulnerability contained in the sample (i.e., which weakness does it contain)?

- RQ3: How does our model compare to traditional static analysis tools at identifying vulnerabilities in the dataset?

- RQ4: How does our model compare to other state-of-the-art machine learning-based vulnerability detection systems?

7.1 Experimental setup

To evaluate the performance of our model, we implemented the design in PyTorch [41], using Torch-Geometric [40] for data loading and the Gated Graph Sequence layer. Training and inference were performed on a workstation with an AMD Ryzen Threadripper 3970X CPU, 256GB of DDR4 memory, and an RTX 3090 GPU.

Metrics were computed by training the model on the training set, and evaluating on the test set (unseen samples). Across both datasets, we considered two scenarios: Multi-Class Prediction (predicting the correct weakness/class for the sample), and Vulnerable Sample Identification (predicting whether the sample contains any vulnerability).

We treat the vulnerability detection goal as an information retrieval problem, aiming to identify the vulnerable samples, ordered by the confidence that a vulnerability is contained within. To benchmark performance, we define the following metrics, where TP is true positives, FP is false positives, and FN is false negatives:

1. Precision: The ability of the model to identify true positives, and not predict false positives (i.e., predicting not vulnerable as vulnerable): \(P = \frac{TP}{TP + FP}\)

2. Recall: The ability of the model to identify true positives, without considering false positives (i.e., what percentage of vulnerable programs were identified): \(R = \frac{TP}{TP + FN}\)

3. F1: The harmonic mean of precision and recall, giving an indication of overall model performance: \(F1 = 2\frac{P \times R}{P+R}\)
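As a worked illustration of these three definitions, the following sketch computes them from raw counts. The function name and the example counts are hypothetical; returning 0.0 when a denominator is zero is a convention chosen for this sketch.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1 from true/false positive and
    false negative counts, returning 0.0 where a ratio is undefined."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 90 true positives, 10 false positives, 30 false negatives.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
```

With these counts, precision is 0.9, recall is 0.75, and F1 is approximately 0.818.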

The dataset generation followed the pre-processing method described in Sect. 5. We performed training and evaluation separately on the two datasets introduced in Sect. 4. Each dataset was split into a training, test and validation set using 80, 10, 10% of the samples, respectively. Each subset was stratified based on the weakness classes to ensure that each class contained approximately the same fraction of the total samples across the splits.
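A stratified 80/10/10 split of this kind can be sketched in plain Python as follows. This is a minimal illustration, not the splitting code used for our experiments; the function name, the fixed seed, and the choice to assign rounding remainders to the validation set are all assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_split(labels: Sequence[Hashable],
                     train_pct: int = 80,
                     test_pct: int = 10,
                     seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Split sample indices into train/test/validation sets, class by class,
    so that each class keeps roughly the same share in every subset."""
    rng = random.Random(seed)
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, test, val = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = len(indices) * train_pct // 100
        n_test = len(indices) * test_pct // 100
        train += indices[:n_train]
        test += indices[n_train:n_train + n_test]
        val += indices[n_train + n_test:]  # remainder goes to validation
    return train, test, val
```

Splitting per class, rather than over the whole dataset at once, is what keeps small classes represented in the test and validation sets.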

The model was trained for up to 100 epochs, with early stopping being utilized to reduce overfitting on the training set. The validation set was used as the trigger for early stopping; after the validation loss increased for 10 consecutive epochs, training was halted, with the parameters before the first higher-validation-loss epoch restored. Table 2 shows the number of samples in each of the subsets.
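The early stopping procedure can be sketched as below. The callbacks `run_epoch`, `get_params`, and `set_params` are hypothetical stand-ins for the training loop and model state. As a simplifying assumption, the sketch counts consecutive epochs whose validation loss does not improve on the best value seen so far, and restores the parameters from that best epoch.

```python
import copy
from typing import Any, Callable, Tuple


def train_with_early_stopping(run_epoch: Callable[[int], float],
                              get_params: Callable[[], Any],
                              set_params: Callable[[Any], None],
                              max_epochs: int = 100,
                              patience: int = 10) -> Tuple[float, int]:
    """Train until the validation loss fails to improve for `patience`
    consecutive epochs, then restore the best parameters seen."""
    best_loss = float("inf")
    best_params = None
    bad_epochs = 0
    epochs_run = 0
    for epoch in range(max_epochs):
        val_loss = run_epoch(epoch)  # trains one epoch, returns validation loss
        epochs_run += 1
        if val_loss < best_loss:
            best_loss = val_loss
            best_params = copy.deepcopy(get_params())
            bad_epochs = 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break
    if best_params is not None:
        set_params(best_params)
    return best_loss, epochs_run
```

Keeping a deep copy of the best parameters is what allows training to continue for the full patience window without losing the checkpoint that is eventually restored.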

For training, the Adam optimizer was utilized, with the learning rate set to 1e-4, a weight decay (L2 penalty) of 1.3e-6, and a batch size of 64. For the multi-class models, the Cross-Entropy loss function was used, and for the binary classification models, we utilized Binary Cross-Entropy. Other hyper-parameters were left at defaults, with the aforementioned values and layer parameters found to be optimal for the model through binary search.

7.2 RQ1: vulnerable sample identification

Methodology For this experiment, we collapsed all the vulnerable classes into a single, “vulnerable”, label. Thus, the model can output one of two predictions, “vulnerable” or “non-vulnerable”. While the focus of this article is on multi-class prediction, for comparing our method to prior work, we also required a binary classification model. A separate model was trained and evaluated for both of the datasets, following the procedure discussed in Sect. 7.1.

Results Table 3 shows the precision, recall, and F1 score of the model on the test split of the datasets (samples unseen when training).

The model achieves very high performance on the CSmith dataset, identifying 98.24% of the vulnerable samples. The false positive rate was low: a precision of 96.35% indicates that, on average, fewer than 4 out of every 100 programs reported as vulnerable contained no errors.

Discussion Performance on the Juliet Test Suite was slightly lower, where 90.14% of the vulnerable programs were identified. These results were somewhat expected, as the samples in the CSmith dataset (A) only represent a single type of vulnerability (out of bounds memory access), in contrast to the Juliet Test Suite (B), where we selected a range of vulnerabilities, including integer overflows, and other undefined behavior, which can have significantly different value flows compared to out-of-bounds memory access. However, despite the more difficult problem, the model still achieved low false positive rates, with on average, 9 out of 100 samples without errors being falsely reported as vulnerable.

These results suggest that our model can both identify simple errors matching the out-of-bounds memory access pattern, and in addition, generalize to other types of errors/vulnerabilities, with the caveat that it must be trained with examples of these errors to recognize them.

7.3 RQ2: multi-class prediction

Methodology This experiment uses the model to predict which weakness class a particular sample falls into, i.e., which vulnerability it contains. We evaluated performance for both the single-weakness-per-model and multi-class models. Multi-class classification was only applicable to the Juliet Test Suite dataset (B), as the fully synthetic dataset does not contain any non-memory-related vulnerabilities, and therefore only spans two classes.

Results Table 4 shows the precision, recall, and F1 scores when using multiple models, one per vulnerability class, trained separately (SC). MC shows the metrics for a single model, trained to recognize all vulnerability classes. Figure 9 shows the same results in graphical form, to easily identify which classes are under-performing.

For multi-class prediction of the Juliet Test Suite Dataset, precision varied, with some classes as low as 45% (CWE191), but others having no false positives (CWE78). We believe two main factors are responsible for this level of performance: the similarities between classes (e.g., multiple integer over/underflow-related errors, or buffer-related errors), and the imbalance of samples across the classes (e.g., CWE197 only has 127 samples, with 62 of those positive, whereas CWE134 has 454, with 227 positive). This is also reflected in the recall numbers, with a non-trivial difference between classes.

Discussion Compared with the multi-class model, the single-class configuration (one model trained for each class) shows a measurable performance improvement across all classes. However, the model-per-class method is not without downsides; for it to provide the same exact-weakness functionality to the user as the multi-class model, one would have to perform a number of predictions equal to the number of classes, and then take the class with the highest probability. These results suggest that the model is capable of successfully identifying which features contribute to the sample being vulnerable versus non-vulnerable, and that increasing the number of parameters in the multi-class model may improve performance, as the single-class model effectively has more parameters per class. However, we leave this for future work.
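The extra work required by the model-per-class configuration can be sketched as follows. Each entry in `models` is a hypothetical callable returning the probability that a sample contains the given weakness. The 0.5 threshold, below which the sample is reported as non-vulnerable, is an assumption added for this sketch.

```python
from typing import Any, Callable, Dict, Tuple


def predict_with_per_class_models(models: Dict[str, Callable[[Any], float]],
                                  sample: Any,
                                  threshold: float = 0.5) -> Tuple[str, Dict[str, float]]:
    """Run one binary model per weakness class and report the class whose
    model is most confident, or "non-vulnerable" if none reaches the threshold."""
    scores = {name: model(sample) for name, model in models.items()}
    best = max(scores, key=scores.get)
    if scores[best] < threshold:
        return "non-vulnerable", scores
    return best, scores


# Hypothetical stand-ins for three trained single-class models.
models = {
    "CWE121": lambda sample: 0.20,
    "CWE190": lambda sample: 0.90,
    "CWE369": lambda sample: 0.40,
}
label, scores = predict_with_per_class_models(models, sample=None)
```

The cost of a prediction grows linearly with the number of classes, whereas the multi-class model needs a single forward pass.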

Using an 80% F1 score as the threshold for low performance, we can observe 7 classes that under-perform in the multi-class model: CWE190 (Integer Overflow), CWE191 (Integer Underflow), CWE121 (Stack-Based Buffer Overflow), CWE122 (Heap-Based Buffer Overflow), CWE124 (Buffer Underwrite), CWE126 (Buffer Overread), and CWE127 (Buffer Underread).

CWE190 and CWE191 are similar, overflow vs. underflow, with the only difference being the instruction opcode. However, subtraction can also be expressed as the addition of a negative number. LLVM IR also does not differentiate between signed and unsigned types, so the model may struggle to predict which of these two classes the sample falls within, as the higher-level semantics are lost during the compilation process. The fact that the single-model-per-class configuration (SC) achieves 97-98% performance supports this hypothesis, since it does not need to decide whether it is an underflow or overflow specifically, just one of the two.

CWE121, CWE122, CWE124, CWE126, and CWE127 are all memory buffer-related errors, with the common property of out-of-bounds access. The CWE categorization presents a challenge to classifying these samples, as the vulnerability may be a stack-based buffer overflow (CWE121), but also a buffer over-read (CWE126). This is in contrast to other machine learning applications, where classes are distinct with minimal overlap. Therefore, a model that reports any of these classes in the top predictions may arguably be correct, but due to being constrained by a single label, will not count as a true positive.

To gain insight into how much of a performance impact the multi-class model has over the binary classification model (Sect. 7.2) at identifying any vulnerability, we compared the precision, recall and F1 scores for both models, after collapsing the classes of the multi-class model to a binary result, where any of the 16 vulnerability classes was treated as “vulnerable”. Table 5 compares these results for Dataset B (Juliet Test Suite).
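The collapsing step can be sketched as below. The label strings and example predictions are hypothetical; the point is that a sample predicted as the wrong weakness class still counts as a true positive once the labels are reduced to vulnerable versus non-vulnerable.

```python
from typing import List, Sequence, Tuple


def collapse_to_binary(labels: Sequence[str],
                       negative_label: str = "non-vulnerable") -> List[bool]:
    """Treat every weakness class as "vulnerable" (True)."""
    return [label != negative_label for label in labels]


def confusion_counts(predicted: Sequence[bool],
                     actual: Sequence[bool]) -> Tuple[int, int, int]:
    """Count true positives, false positives, and false negatives."""
    tp = sum(1 for p, a in zip(predicted, actual) if p and a)
    fp = sum(1 for p, a in zip(predicted, actual) if p and not a)
    fn = sum(1 for p, a in zip(predicted, actual) if not p and a)
    return tp, fp, fn


# Hypothetical predictions: the first sample is assigned the wrong class
# (CWE121 instead of CWE122) but is still flagged as vulnerable.
predicted = ["CWE121", "non-vulnerable", "CWE190", "CWE126"]
actual = ["CWE122", "non-vulnerable", "non-vulnerable", "CWE126"]
tp, fp, fn = confusion_counts(collapse_to_binary(predicted),
                              collapse_to_binary(actual))
```

Here the collapsed result has 2 true positives, 1 false positive, and no false negatives, even though only one of the four class predictions for vulnerable samples was exact.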

Despite the lower performance in some of the vulnerability classes of the multi-class model, it still potentially provides value for users. Comparing the multi-class model, with the predictions binned as vulnerable or non-vulnerable, to the binary classification model (Table 5) shows that the two achieve similar performance, with a difference of only 1.27% in precision, 1.76% in recall, and 0.22% in F1. This indicates that while the multi-class model may predict the incorrect weakness class for a program, it is still able to identify which programs are vulnerable versus non-vulnerable with high precision (low false positive rates), on average 9 out of 10 times.

7.4 RQ3: static analysis tools comparison

Methodology To compare our work with more traditional program analysis methods for bug detection, we utilized two popular static analysis tools: cppcheck [42], version 2.3, and the Clang static analyzer, part of the LLVM compiler framework [13, 43], version 12.0.0. These static analysis tools can identify a range of potential issues in programs, including bugs which lead to security vulnerabilities.

The performance of these tools was evaluated using the same information retrieval metrics that we used to determine our model’s performance (precision, recall and F1 score). We chose to evaluate the Juliet Test Suite (Dataset B), rather than the CSmith dataset, as these tools are known for being able to detect many types of vulnerability-causing errors, not just memory/buffer-related issues. To generate the inputs for the tools, the same grouping method described in Sect. 4.2.1 was followed, generating two samples for each test case. All the source files for each of the programs were provided to the analyzer, alongside the utility file, containing additional functions and declarations (e.g., printing output to the terminal). We treated any output from the analyzer, both warnings and errors, as a vulnerable report, with no output being considered non-vulnerable.

Results Table 6 shows the precision, recall and F1 scores of our model, versus both the cppcheck and clang static analysis tools. The performance of the Devign [31] vulnerability detection system is included in these results, and discussed in Sect. 7.5.

Discussion We can observe that our graph-based model significantly outperforms the static analysis tools across all three metrics. The lower precision suggests that the static analysis tools produced a high number of false positives, which we confirmed through manual examination of the outputs; many of the samples were reporting the same warnings for both the vulnerable and non-vulnerable variants of the same program. Recall was also much lower, suggesting a high false negative rate. Between the two static analyzers, Cppcheck appears to produce fewer false positives, at the cost of missing more errors than the Clang static analyzer. However, as aforementioned, we treated any output from the tools as a "vulnerable" label; therefore, the higher recall in the Clang results can be explained by the same false error report in both the vulnerable and non-vulnerable samples in some cases.

7.5 RQ4: How does our model compare to other state-of-the-art machine learning-based vulnerability detection systems?

Methodology To discover how our model performs relative to recently published, machine learning-based vulnerability detection systems, we utilized the Juliet dataset (Sect. 4.2), comparing the prior work to our model using the three metrics introduced in Sect. 7.1: precision, recall and F1 score.

We were able to evaluate our dataset on one of the open-source implementations [44] of the Devign [31] paper. The Juliet dataset was split using the same 80%/10%/10% arrangement for training/testing/validation samples, with the training parameters set similarly to our model: 100 epochs with early stopping enabled.

In addition, we originally planned to compare the performance of our model with VulDeePecker [9] and SySeVR [45]. However, we found that with the implementation released in [46], the call graph generation step did not complete after several weeks of runtime. Several issues in the GitHub repository report similar difficulties [46]. Furthermore, other more recent publications did not have public implementations available at the time of submission. Due to these publications utilizing different datasets, we could not directly compare their performance numbers on the same dataset we used for evaluating our model.

However, as the dataset for the VulDeePecker paper has been released, and since it was based on the same Juliet Test Suite samples, we are able to compare how our model performs relative to VulDeePecker on the subset of classes that Li et al. evaluated [9]. These two evaluations are reported as CWE-119 and CWE-399; however, these classes include several CWE groups (e.g., CWE-119 includes CWE-121, CWE-122, etc.). We evaluated our model in both multi-class and single-class modes, to determine its effectiveness at differentiating between similar, but different vulnerability classes, something which is not possible in VulDeePecker.

Results

Table 6 compares the precision, recall and F1 scores of our model with those of Devign [31, 44] on the same dataset. As the Devign model only considers vulnerable versus non-vulnerable labels, we compared it to the results of our binary classification model (Sect. 7.2). Compared to Devign, our model achieves 25% greater precision, 39% greater recall, and a 31% greater F1 score on the Juliet dataset. Both machine learning-based models outperform the static analysis methods introduced in the previous section by a considerable margin.

Table 7 and Figure 10 show the precision, recall and F1 scores for our model (VFG) in single-class mode (Sect. 7.2) versus VulDeePecker (VDP) [9]. We also benchmarked the two previously introduced static analysis tools, Clang and Cppcheck, on the VulDeePecker dataset. As mentioned above, the datasets do not consist of a single CWE label from the Juliet Test Suite; a full breakdown of the included classes is shown in Table 8.

Compared to VulDeePecker [9], our model achieves similar performance, accepting a small number of additional false positives in exchange for slightly higher true positive detection. As VulDeePecker cannot predict different labels for an input, only a binary vulnerable/non-vulnerable label, we cannot compare our model’s ability to differentiate between different weakness classes. For completeness, we included the results for multi-class prediction from our model in Table 8.

A performance difference can be observed in Table 8 when compared with our earlier evaluation in Sect. 7.3. We hypothesize this is due to the fact that these are two separate datasets being trained and evaluated in isolation, rather than a single model being utilized for all classes.

Discussion

The similar performance of our model when compared with VulDeePecker [9] suggests that graph representations of programs can be just as effective for security vulnerability detection as source-based representations, including slice or gadget-based representations such as those used by VulDeePecker. However, it is also clear that the chosen graph representation is key to high performance, with the Devign implementation utilized [44], which relies exclusively on abstract syntax trees, performing significantly worse. Both machine learning vulnerability detection systems outperformed the static analysis tools by a considerable margin, with Cppcheck trailing Clang, similar to the experiment in Sect. 7.4, although the gap was smaller on the VulDeePecker dataset, particularly in CWE-399.

The authors of the Devign implementation note that not all the code representations mentioned in the original paper [31] were utilized, and that they were unable to reproduce the original results of the paper due to dataset mismatches, among other issues [44]. Therefore, we are unable to reason about how much performance could be improved by including control and data flow information as the original paper proposed. The performance discrepancy between our model and Devign suggests that utilizing only representations lacking flow information is insufficient for achieving high detection rates.

Regardless, even with the disadvantages of the open-source implementation when compared to the original paper, it still provides some indication that our model can compete with, or in some cases, outperform, recent state-of-the-art vulnerability detection systems, while introducing the ability to differentiate between different weakness classes.

8 Ablation study

To better reason about the performance of our model, we propose three further questions:

1. Feature impact: Which of the additional features that we add to the data has the greatest impact on model performance, and how does the model perform when making predictions solely on the graph itself, without attributes (node types, instruction vectors)?

2. Dataset subsampling: How much does sampling different portions of the dataset affect the performance of the model?

3. Program representations: How much of the model’s performance can be attributed to the use of graph neural networks, versus value flow and instruction embedding?

8.1 Feature impact

To determine the performance impact on the model of the different features we generate during pre-processing, another series of experiments was performed, covering the following configurations:

- Full Node Embedding (F): All features were enabled in the model, as described in Sect. 5.4.

- No Node Types (T): The node type sub-vector (as described in Sect. 5.4) is set to a fixed vector, leaving only the instruction vector variable.

- No Instruction Vector (I): The instruction embedding sub-vector (as described in Sect. 5.3) is zeroed, leaving only the node type. This effectively makes all nodes behave the same as nodes not derived from LLVM instructions.

- No Node Embedding (N): All pre-processing features were disabled, by setting the entirety of the node’s attribute vector to zero.

The same 16 weakness classes evaluated in Sect. 7.3 were examined, recording the performance for each of the configurations listed above. For all four configurations, the model was trained for 5 epochs, and early stopping was disabled, as we wanted the only variance between runs to be the feature vectors in the node attributes. Table 9 shows the precision, recall, and F1 scores of each configuration.

The extreme outlier in these results is the “No Node Embedding” (N) columns, where the model’s performance completely collapses and recall dropped 1% for some classes (e.g., CWE-126). We hypothesize that, because the embedding is all zeros, the model cannot differentiate between present nodes in the graph and the all-zero nodes which are added as padding to fit the fixed input size, and is effectively predicting randomly. Therefore, we can ignore these results in further analysis. Initially, for the “No Node Types” case, we set the node type component (the first 8 elements) of the embedding to zero; however, this produced results similar to the all-zeros case, as some node types do not contain an instruction vector. Instead, for the "No Node Types" case, we set the node type component to a fixed 8-element value, so while there is no unique information from the node type component, we do not end up with a large number of non-padding all-zero nodes.
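The four configurations can be expressed as a masking function over the 40-element node feature vector, as in the sketch below. The function name `ablate` and the fixed fill value of 1.0 for the "No Node Types" case are assumptions made for illustration; only the requirement that the fill value be fixed and non-zero comes from the text.

```python
from typing import List, Sequence

NODE_TYPE_DIM = 8   # first 8 elements: node type sub-vector
FEATURE_DIM = 40    # full node feature vector


def ablate(features: Sequence[float], mode: str,
           fixed_type_value: float = 1.0) -> List[float]:
    """Return a copy of one node's features for configuration F, T, I, or N."""
    if len(features) != FEATURE_DIM:
        raise ValueError("expected a 40-element feature vector")
    node_type = list(features[:NODE_TYPE_DIM])
    instruction = list(features[NODE_TYPE_DIM:])
    if mode == "F":        # full node embedding: unchanged
        pass
    elif mode == "T":      # no node types: fixed, non-zero placeholder
        node_type = [fixed_type_value] * NODE_TYPE_DIM
    elif mode == "I":      # no instruction vector: zeroed
        instruction = [0.0] * len(instruction)
    elif mode == "N":      # no node embedding: everything zeroed
        node_type = [0.0] * NODE_TYPE_DIM
        instruction = [0.0] * len(instruction)
    else:
        raise ValueError("mode must be one of F, T, I, N")
    return node_type + instruction
```

Using a non-zero placeholder in the T configuration keeps real nodes distinguishable from the all-zero padding nodes, which is the problem that affected the N configuration.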

The greatest decrease was observed when disabling the node type vectors ("No Node Types", "T"), relying exclusively on instruction vectors. This resulted in an average reduction of 19.5% precision, 19.3% recall, and 22.8% in F1 score. We hypothesize that this is because every node in the graph will have a corresponding node type vector, whereas as mentioned above, some of the VFG-specific nodes, such as formal in/out parameters, are not directly generated from an instruction, and thus will have an instruction vector of zero.

Disabling the instruction vector ("No Instruction Vector", "I") has a smaller impact, with a 5.6% reduction in recall, and approximately a 1.5% difference in precision/F1 score. This would suggest that while the instruction embedding does not contribute as significantly to the overall performance of the model as the node types, it still improves detection rates (recall) without a non-trivial change to false positive rates (precision), especially for some classes. For example, for CWE-369 (Divide By Zero), the F1 score decreases by 23.2% without instruction vectors. In this case, it makes sense that the instruction vector provides valuable information for the model, as the impact of a zero parameter on a division operation is much more significant than on, say, an addition or subtraction operation.

8.2 Dataset subsampling

While many of the samples in the Juliet Test Suite were very similar in how the flaw was represented in the program code, some samples contained significant differences. To examine the impact of the training/testing/validation split on the dataset, we utilized K-fold cross validation, computing precision, recall and F1 for each fold. Model performance was evaluated across 5 folds, stratified based on the weakness label to preserve the distribution of classes across the training and testing sets. The train/test split was set at 80%/20%. The hyper-parameters for the model were identical to those used for the results presented in Sect. 7.2, and early stopping with a patience value of 10 was used. Table 10 shows the results of this experiment.
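Stratified K-fold splitting can be sketched in plain Python as follows. This is an illustration under simplifying assumptions (round-robin assignment of each class's shuffled samples to folds, and a fixed seed), not the code used for the experiment; the function name is hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, Iterator, List, Sequence, Tuple


def stratified_k_fold(labels: Sequence[Hashable], k: int = 5,
                      seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of k folds, keeping the
    class distribution roughly equal across folds."""
    rng = random.Random(seed)
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    folds: List[List[int]] = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class's samples out to the folds in turn.
        for position, index in enumerate(indices):
            folds[position % k].append(index)

    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test
```

With 5 folds, each fold serves once as the 20% test portion while the remaining four folds form the 80% training portion.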

While the precision did vary by up to 2.2%, the recall was much less noisy, only varying by up to 0.9%. The F1 score reflects this, also indicating low variance. This shows that while the selection of training and testing samples does impact model performance, as we expected due to large differences in the semantics and structure of the programs, the accuracy of the model in identifying true positives was largely unaffected, with only a small percentage of additional false positives reported based on the dataset split.

8.3 Program representations

In Sect. 7.5, we evaluated our model in the binary classification task and compared the performance to the Devign [31] paper. These results show a clear improvement in both precision and recall from using the VFG and instruction embedding representation, over Devign, which uses the Abstract Syntax Tree. This highlights the benefits of using value flow graphs. However, it is unclear which contributes the most: the value flow or graph neural networks.

To gain insight into the benefit of graph neural networks, we utilized the same Juliet Test Suite dataset previously introduced in Sect. 4, and developed a bi-directional GRU (Gated Recurrent Unit) model, that processes a stream of tokens generated from the Abstract Syntax Tree of the sample. This is similar to Devign’s use of AST and enables us to isolate the graph neural network, determining its impact.

Utilizing the Clang [43] compiler, we wrote a tool that produces a stream of tokens, while walking the AST of the program. To reduce the size of the vocabulary, local variables are renamed to LVARn, where n is an increasing counter, global variables are renamed to GVARn, parameters are renamed to PARAMn, non-primitive types are renamed to TYPE, and functions outside of the standard C library are renamed FUNCTION. Figure 11 shows an example of the corresponding token stream for the sample C function.
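The renaming rules can be sketched as below. The input format, a list of `(kind, text)` pairs, is a hypothetical stand-in for the output of the AST walk, and the small set of standard library function names is illustrative only. Starting the counters at 0 and reusing the same name for repeated occurrences of a variable are also assumptions.

```python
from typing import Dict, FrozenSet, List, Sequence, Tuple

# Illustrative subset only; a real tool would use the full C library list.
LIBC_FUNCTIONS = frozenset({"printf", "malloc", "free", "memcpy", "strlen"})

PREFIXES = {"local": "LVAR", "global": "GVAR", "param": "PARAM"}


def normalize_tokens(tokens: Sequence[Tuple[str, str]],
                     libc: FrozenSet[str] = LIBC_FUNCTIONS) -> List[str]:
    """Rename identifiers to reduce the vocabulary of the token stream."""
    counters: Dict[str, int] = {prefix: 0 for prefix in PREFIXES.values()}
    renamed: Dict[Tuple[str, str], str] = {}
    output: List[str] = []
    for kind, text in tokens:
        if kind in PREFIXES:
            key = (kind, text)
            if key not in renamed:
                prefix = PREFIXES[kind]
                renamed[key] = f"{prefix}{counters[prefix]}"
                counters[prefix] += 1
            output.append(renamed[key])
        elif kind == "type":            # non-primitive type
            output.append("TYPE")
        elif kind == "function" and text not in libc:
            output.append("FUNCTION")
        else:                           # keywords, operators, libc calls, ...
            output.append(text)
    return output
```

Standard library calls such as `memcpy` are kept verbatim because their names carry information relevant to the vulnerability, while project-specific names do not generalize across samples.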

We utilized the Gated Recurrent Unit (GRU) [39] instead of LSTM layers due to its higher performance on smaller datasets [47]. The model itself can be defined as the following, with I representing the input, and O representing the output.

The convolution layer used 32 filters, with a kernel size of 64. The pooling layer also used a size of 32, and the GRU layers were set to 128 units, as was the hidden dense layer. Before being fed into the convolution layer, each of these tokens is embedded into vector space using a Word2vec [36] model. As the number of tokens is variable, and the fully connected layer expects a fixed-size input, we use the feature vector that is output from the final time step (token) as the output of the bi-directional layers, which includes information from previous tokens.

The GRU model was implemented using the Keras framework and optimized using the Adam algorithm, with all other hyper-parameters left as default. The model was trained for 20 epochs, as we found no improvement in validation loss beyond this number. Table 11 shows the result of this experiment, evaluated in the same manner described in Sect. 7.2.

We can observe that the GRU model achieves 15% greater precision over Devign, but the difference in recall is within the margin of error. The F1 score is, therefore, higher on the GRU model by 6.6%. The VFG model improves precision by 8.3% compared to the GRU model, recall by 42%, and F1 score by 25.1%. Therefore, we hypothesize that while graph neural networks can benefit security vulnerability detection applications, the chosen graph representation is key to high performance. Using the AST alone, as in Devign, still misses 34.4% of samples in our dataset, compared to the 6.7% of the VFG model. These results suggest that our choice of graph representation (value flow graphs and instruction embedding) is the main contributor to the model’s performance, as the overall accuracy of the GRU and Devign models was similar.

However, despite the greater performance in the binary classification task, does the choice of representation impact the detection performance of some vulnerability types over others? Are some vulnerability types better expressed with high-level representations, such as the AST, or low-level ones, such as VFG/LLVM IR? To investigate these questions, we also trained and evaluated the VFG model in multi-class mode, producing per-class metrics in the manner described in Sect. 7. The results of this experiment are shown in Table 12. Devign and VulDeePecker are not multi-class models; thus, we cannot compare against them on equal terms.

We can observe that while the GRU model had lower overall precision and recall in the binary classification task, some classes, such as CWE190 and CWE191 (Integer Overflow/Underflow), are more precise with the GRU model. We hypothesize that this is due to the value flow graph and instruction embedding containing insufficient information for the model to reason about whether the operation is an overflow or underflow, whereas the high-level information from the AST better represents the calculation. Aside from the integer overflow classes, the majority of classes favored the VFG model in overall accuracy (F1 score).
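The effect of confusing two closely related classes can be seen in a small one-vs-rest calculation. The sketch below is illustrative only: the function name per_class_metrics, the label "non-vulnerable", and the toy predictions are assumptions, and it is not the evaluation procedure of Sect. 7.

```python
from collections import Counter


def per_class_metrics(y_true, y_pred):
    """Return {label: (precision, recall, f1)} using one-vs-rest counts."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative
    metrics = {}
    for label in sorted(set(y_true) | set(y_pred)):
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        total = precision + recall
        f1 = 2 * precision * recall / total if total else 0.0
        metrics[label] = (precision, recall, f1)
    return metrics


# Toy data: one overflow sample is mistaken for an underflow.
y_true = ["CWE190", "CWE190", "CWE191", "non-vulnerable"]
y_pred = ["CWE190", "CWE191", "CWE191", "non-vulnerable"]
for label, scores in per_class_metrics(y_true, y_pred).items():
    print(label, scores)
```

A single overflow/underflow mix-up lowers recall for CWE190 and precision for CWE191 at the same time, even though a binary vulnerable/non-vulnerable evaluation of the same predictions would count every sample as correct.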

These results support the hypothesis that different types of vulnerabilities are better represented by high or low-level information. In multi-class classification, the GRU model detected a greater number of vulnerable samples in 4 of the 16 evaluated classes. This suggests that the representation of data is key not only to detecting the presence of vulnerabilities, but also to enabling the model to differentiate between types in a multi-class context.

9 Related work

Most vulnerability detection systems in the literature fall into three distinct categories: rule-based, similarity-based, and machine learning-based [48].

Rule-based systems attempt to detect errors in programs by iterating through a set of pre-defined specifications and testing program code against each rule. These rules are often combined with program analysis techniques to produce the necessary information, such as control and data flow graphs, as seen in the clang static analyzer [27]. The specifications that these rules follow are written by experts [48] and are thus often very generic in nature, limiting the ability of these systems to detect domain-specific errors. While rule-based systems have near-ideal detection granularity, often reporting the exact line number where the error is contained, high false positive rates [9, 48] limit their usability in practical scenarios. Examples of rule-based systems include the previously-examined clang static analyzer [27], cppcheck [42], and commercial tools such as Coverity [49].

Similarity-Based Systems were actively researched in the previous decade, although they have lost popularity to machine learning-based systems in more recent years. These types of systems usually attempted to identify bugs through the detection of "code clones" [50]: two pieces of code which perform the same computation, but may have different syntactic structure. Similarity-based systems generally have high precision, as they will only report errors in code which has been labeled as vulnerable, but because they rely on exact or near-exact matching, they also have very high false negative rates (missed detections). Examples of such systems include ReDeBug [51] and VUDDY [52].
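The trade-off between precision and false negatives can be illustrated with a deliberately naive fingerprint matcher. This sketch is not the algorithm used by ReDeBug or VUDDY; the whitespace-only normalization, the function names, and the code snippets are all assumptions chosen to keep the example small.

```python
import hashlib
import re


def fingerprint(code):
    """Hash the code after removing all whitespace (a very coarse normalization)."""
    compact = re.sub(r"\s+", "", code)
    return hashlib.sha256(compact.encode("utf-8")).hexdigest()


def is_known_clone(code, vulnerable_fingerprints):
    """Report a match only if the fingerprint equals a known vulnerable one."""
    return fingerprint(code) in vulnerable_fingerprints


known = {fingerprint("char buf[8]; strcpy(buf, src);")}

reformatted = "char buf[8];\n    strcpy(buf, src);"  # same code, new layout
renamed = "char tmp[8]; strcpy(tmp, src);"           # same bug, new variable name

print(is_known_clone(reformatted, known))
print(is_known_clone(renamed, known))
```

The reformatted copy is matched, but the renamed copy is not, even though it contains the same unchecked copy. Every reported match corresponds to a labeled sample, which keeps precision high, while any variation outside the normalization becomes a missed detection.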

Machine-Learning Systems utilize models trained on vulnerability datasets to identify vulnerabilities in programs. While they are also similarity-based systems, the training process introduces an additional level of indirection: the models make classifications using features computed from the input program, rather than attempting to match the program exactly as a code clone. Utilizing machine learning for bug detection is not a new approach, with publications dating back to 2012 combining text analysis techniques with a support vector machine to detect errors [8]. More recently, neural network-based approaches have dominated the literature; however, they are not a clear-cut solution to the problem, with more traditional machine learning approaches such as random forests still being utilized in recent research [10, 53]. Neural network approaches range from LSTM-based (Long Short-Term Memory) gadget classification of intra-procedural slices [9] to convolutional approaches based on natural language processing (NLP) techniques [12]. The inputs used by these models include source code [10, 11, 54], abstract syntax trees [55, 56], and assembly/compiled instructions [12]. In addition to learning directly from the program’s code, using metrics computed from the source has also been explored [53].

Of these systems, the most relevant to our work are VulDeePecker [9] and Devign [31]. VulDeePecker is a machine learning-based vulnerability detection system developed by Li et al. [9], published in 2018. VulDeePecker divides functions into slices, known as "code gadgets", after stripping whitespace and normalizing variable names. The gadgets are then embedded, with an LSTM (Long Short-Term Memory) layer used to produce fixed-length vectors for variable-length slices, and fed into a dense layer for classification. The main downside to VulDeePecker compared to our work is the lack of inter-procedural classification: detections are limited to within a single function only, not considering the full context and callees. The same authors went on to publish SySeVR [45], which, instead of using a simple token-based representation of code gadgets, grouped related statements together based on control and data flow. SySeVR [45] was later extended to utilize inter-procedural slices, solving the main limitation of VulDeePecker [9].

Devign, by Zhou et al. [31], uses a range of representations of the input program, including the abstract syntax tree, control flow graph, data flow graph, and an embedded form of the original source tokens. The authors proposed the Conv module as a method for computing features for nodes based on the graph representations of the program, and our network follows a similar structure. As with VulDeePecker [9], its major weakness is the intra-procedural nature of its analysis and detection, that is, the inability to detect vulnerabilities spanning function calls.

In contrast to the methods we are proposing, the majority of this prior work has focused on analysis of the program source code, which can reduce performance, as it requires the model to learn and identify the control and data flow patterns which contribute to vulnerabilities. More recent work, such as the aforementioned Devign [31], directly utilized control and data flow graphs, combined with AST (Abstract Syntax Tree) paths for bug detection, with non-trivial performance improvements. This development heavily influenced our decision to utilize value flow graphs in our method, making the detection system alias-aware, which is particularly important for memory-related errors.

However, we argue that while the AST is a more general representation of the program than the source code, the types of nodes in the tree are tied to the language being analyzed. In contrast, intermediate representations of the program typically used during compilation, such as Control and Data Flow Graphs, are not only less specific to the original language of the program, but have also been shown to improve detection performance [31]. Our work builds upon this knowledge, using a state-of-the-art, alias-aware, inter-procedural program representation. This choice was driven by the fact that many vulnerabilities discovered today are complex in nature, spanning multiple functions, and are dominated by memory errors [17, 18].

In other applications, such as semantic labeling of functions, some success has been found by utilizing the Abstract Syntax Tree from the program, in particular, the paths between nodes in the tree. Code2vec is a neural network model developed by Alon et al. [25] which represents snippets of code (functions in the paper) as "continuous distributed vectors". The goal of the model is to predict semantic labels, or method names from the features of the code. Code2vec used AST paths as an input into the network, in contrast to the prior work which had used tokens with bag of words models. An AST path represents all the intermediate nodes in a tree between two terminals. The authors argue that using the syntactic paths can "capture regularities that reflect common code patterns", "lowers the learning effort" [25], and can scale to large code bases, since the model does not have to learn the syntactic structure of the programming language. Other works have also investigated the use of AST paths for identifying errors with promising performance [55, 56].
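As a concrete illustration of an AST path, the sketch below extracts the intermediate nodes between two terminals of a hand-built tree for the statement x = y + 1. It is a simplified sketch rather than Code2vec's extractor: the (label, children) tuple encoding, the node labels, and the function names are assumptions, and terminals are assumed to have distinct labels.

```python
def find_terminal(tree, terminal, trail=()):
    """Return the nodes from the root down to the leaf labelled `terminal`, or None."""
    label, children = tree
    trail = trail + (tree,)
    if not children:
        return trail if label == terminal else None
    for child in children:
        found = find_terminal(child, terminal, trail)
        if found is not None:
            return found
    return None


def ast_path(tree, start, end):
    """Labels of the intermediate nodes between two distinct terminals."""
    a, b = find_terminal(tree, start), find_terminal(tree, end)
    if a is None or b is None:
        raise ValueError("terminal not found")
    shared = 0  # number of leading nodes common to both root-to-leaf trails
    while shared < min(len(a), len(b)) and a[shared] is b[shared]:
        shared += 1
    up = list(reversed(a[shared:-1]))  # climb from the start terminal
    top = [a[shared - 1]]              # lowest common ancestor
    down = list(b[shared:-1])          # descend towards the end terminal
    return [node[0] for node in up + top + down]


# Hand-built tree for: x = y + 1
tree = ("Assign", [
    ("Name", [("x", [])]),
    ("Add", [
        ("Name", [("y", [])]),
        ("Const", [("1", [])]),
    ]),
])

print(ast_path(tree, "x", "y"))
print(ast_path(tree, "y", "1"))
```

The two paths have different lengths (four and three intermediate nodes), and both stay within a single function body.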

Similar to Code2vec, value flow graphs have also been utilized in recent years for semantic labeling and code captioning tasks (Flow2Vec, Sui et al. [24]). While Flow2Vec utilized higher-order proximity embedding to transform the value flow graph to a vector representation, other recent work has explored generating code embeddings from value flow graphs, also for vulnerability detection, but with a focus on binary classification [21, 22], as mentioned in Sect. 3.2. As in many other applications, transformers have also seen use for vulnerability detection in late 2022 [57].

Initially, for this work, we considered using AST paths as a representation for detecting vulnerabilities. However, we ended up switching to value flow graphs for three main reasons. Firstly, as the paths are local to the function, using them would prevent us from identifying bugs spanning multiple procedure calls. Secondly, operating at the AST level instead of a compiled intermediate representation results in the semantics of the language being captured in the paths, which means we would require a greater number of samples to capture variations of bugs that may be more similar once compiled. Lastly, and most critically, Code2vec’s main limitation is in how the paths are computed: the number of intermediate nodes between terminal nodes varies between paths, which makes them unsuitable as input to the later layers in the neural network. Code2vec solved this by using the hash of the path, followed by an attention layer to select the most relevant paths [25]. Tying into the second point, this would further increase the number of samples required for training, as any small semantic change would change the resulting hash. This weakness was partially solved in Code2seq [58], which generates the path embeddings with an LSTM model; however, we did not investigate using Code2seq for bug detection. An alternative method using an RNN for working around the limitations of Code2vec was also developed in 2023 [59].
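The sensitivity of hashed paths can be shown with a short Python sketch. The hash function, the truncation to eight hexadecimal characters, and the example paths are assumptions made for illustration; they are not taken from Code2vec's implementation.

```python
import hashlib


def path_id(path):
    """Map a variable-length list of node labels to a fixed-length identifier."""
    joined = "|".join(path)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()[:8]


addition = ["Name", "Assign", "Add", "Name"]
subtraction = ["Name", "Assign", "Sub", "Name"]   # one node differs
longer = ["Name", "Index", "Assign", "Add", "Name"]

for path in (addition, subtraction, longer):
    print(path_id(path), path)
```

Every path maps to an identifier of the same length, which addresses the variable-length problem, but the identifiers for the addition and subtraction paths share no structure. A model consuming these identifiers has to see each variant during training to learn anything about it.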

10 Conclusion

We presented a new approach for identifying the weakness class into which a program falls, based on inter-procedural value flow graphs and graph neural networks. The classifier achieved high performance on average, was capable of handling errors spanning multiple translation units, and outperformed industry-leading static analysis tools. Additional experiments were conducted to reason about the model’s performance, and identify which types of vulnerabilities our approach can most accurately detect.

While our model did not detect every vulnerable program (i.e., it did not reach a recall of 100%), we argue that for the problem of vulnerability detection, at least at scale, precision is more important to the programmer than recall. High false positive rates, i.e., low precision, mean that the programmer must spend large amounts of time investigating each error report, much of which is wasted. In this way, our model could potentially prove valuable, either on its own or in combination with static analysis tools, in reducing the amount of time spent investigating inaccurate error reports.

Despite the high performance of our model on the datasets we examined, there are three limitations to our approach, which we defer to future work.

- Detection granularity. In our experiments, we predicted the weakness class that the input program falls into as a graph classification task, i.e., is the entire program vulnerable or not. This limits the practical usefulness of the approach, as developers generally need more precise information about where the bug is located. We believe there are at least two possible methods for working around this limitation. First, the value flow graph for each function (and its callees) can be extracted instead of that of the entire program, which would achieve function-level prediction. Alternatively, we can treat the problem as a node classification rather than a graph classification task, but this significantly increases labeling difficulty, which is a challenge in itself.

- Performance in real-world applications. While we have made some effort to evaluate our model’s performance on datasets with a different structure to what it was trained on, the lack of real-world benchmarks for vulnerability detection makes it difficult to predict how it would perform in the practical scenario of analyzing software being written for production use today. We hypothesize that this is an area where more traditional program analysis approaches may perform better, as the semantics and structure of the programs can vary significantly. However, we believe that as datasets for machine learning-based approaches are expanded, this may change.

- Inconsistent performance across classes. The results of the Program Representations ablation study (Sect. 8.3) suggest that different vulnerability types require different representations to achieve high detection rates and low false positive rates, and to differentiate between distinct but similar vulnerabilities. Future work should attempt to incorporate multiple representations of the program into the graph representation, in place of, or in addition to, the instruction embedding we presented in this article.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

The MITRE Corporation: CVE (2022) https://cve.mitre.org/

National Institute of Standards and Technology: NVD (2022) https://nvd.nist.gov/

K2 Cyber Security Inc (2022) Vulnerabilities up almost 10% in 2021. https://www.k2io.com/the-final-count-vulnerabilities-up-almost-10-in-2021/