Abstract

The Archimedes Optimization Algorithm (AOA) is a recent optimization algorithm inspired by Archimedes’ Principle. In this study, a Modified Archimedes Optimization Algorithm (MDAOA) is proposed. The goal of the modification is to avoid early convergence and improve the balance between exploration and exploitation. The modification is implemented by a two-phase mechanism: optimizing the candidate positions of objects using the dimension learning-based (DL) strategy, and recalculating five predetermined parameters used in the original AOA. The DL strategy, together with problem-specific parameters, improves the balance between exploration and exploitation. The performance of the proposed MDAOA algorithm is tested on 13 standard benchmark functions, 29 CEC 2017 benchmark functions, optimal placement of electric vehicle charging stations (EVCSs) on the IEEE-33 distribution system, and five real-life engineering problems. In addition, the results of the proposed algorithm are compared with modern and competitive algorithms: Honey Badger Algorithm, Sine Cosine Algorithm, Butterfly Optimization Algorithm, Particle Swarm Optimization Butterfly Optimization Algorithm, Golden Jackal Optimization, Whale Optimization Algorithm, Ant Lion Optimizer, Salp Swarm Algorithm, and Atomic Orbital Search. Experimental results suggest that MDAOA outperforms the other algorithms in the majority of cases, with consistently low standard deviation values. MDAOA returned the best results in all 13 standard benchmarks, 26 of 29 CEC 2017 benchmarks (89.66%), the optimal EVCS placement problem, and all five real-life engineering problems. The overall success rate is 45 out of 48 problems (93.75%). Results are statistically analyzed by the Friedman test, with the Wilcoxon rank-sum test as a post hoc test for pairwise comparisons.

1 Introduction

Optimization algorithms are used in many fields involving engineering problems. In recent years, efficiency and speed have become even more important for applications with high computational and time costs, such as data mining or image processing. Complex problems with higher dimensions and more variables and constraints have emerged. Different solution approaches are followed in line with the needs of specific applications. Solving such engineering problems with traditional numerical techniques is inefficient and time consuming. When the solution cannot be calculated analytically within an acceptable timeframe, metaheuristic optimization algorithms come into play. These algorithms do not guarantee the best result, but they produce near-best solutions in a reasonable amount of time.

Articles published in this field, especially in the 1990s, paved the way for many later publications and for the hundreds of optimization algorithms available today. Genetic Algorithm (GA), Particle Swarm Optimization (PSO), and Tabu Search are among the most well-known algorithms [1,2,3]. Algorithms in this area are commonly grouped according to their source of inspiration as evolutionary, physics-based, swarm-based and human-based algorithms [4]. Evolutionary algorithms are inspired by the phenomenon of evolution. The better candidate solutions are combined to form the next generation of possible solutions. Consequently, new generations tend to provide more accurate results than older ones. GA is a well-known optimization algorithm in this category; it uses genetic concepts such as chromosomes, crossover, and mutation. Physics-based algorithms are inspired by physical laws; an example is AOA [5], which simulates Archimedes’ Principle. Human-based algorithms are inspired by human behaviors. The Mother Optimization Algorithm (MOA), for example, is based on the interaction of a mother with her children [6]. Swarm-based algorithms are inspired by the biological and social behavior of various animals. These algorithms mimic the prey-searching or mating behavior of swarming animals in a computational optimization method. PSO, Salp Swarm Algorithm (SSA), and Whale Optimization Algorithm (WOA) are examples of swarm-based algorithms [4, 7].

In the last decade, optimization algorithms have improved, and new algorithms have been proposed that provide intuitive solution approaches and better solutions to problems. For instance, the Honey Badger Algorithm (HBA) is a population-based optimizer inspired by the behavior of the honey badger [8]. The Sine Cosine Algorithm (SCA) searches for the best solution using sine and cosine functions [9]. The Butterfly Optimization Algorithm (BOA), introduced in [10], mimics the mating and food-searching behavior of butterflies. In [11], a nature-inspired algorithm called Golden Jackal Optimization (GJO) is proposed. GJO is driven by the collective hunting habits of golden jackals, which consist mainly of searching for, surrounding and capturing the prey. The Whale Optimization Algorithm (WOA) is another swarm-based metaheuristic algorithm [4], inspired by the hunting method of whales. Fennec Fox Optimization (FFA) is inspired by the digging and escape behavior of the fennec fox [12]. In [13], the Mutated Leader Algorithm (MLA) is proposed, in which initial random solutions are updated by a mutated leader. Two-stage Optimization (TSO) updates population members based on the good members of the population [14]; activities such as random walks are used to provide diversity in the population. The Salp Swarm Algorithm (SSA) is a swarm-based algorithm proposed in [7], inspired by the navigation and hunting behaviors of salps. Atomic Orbital Search (AOS) is a physics-based algorithm that uses the laws of quantum-based atomic theory [15].

According to the no free lunch (NFL) theorem, no single optimization algorithm can provide the best results over all problems [16]. Therefore, in recent years, numerous new optimization algorithms have been published, and hybrid or modified algorithms have been proposed to obtain better results. There are many studies on hybrid solutions. For instance, PSO is combined with the Seeker Optimization Algorithm (SOA) to develop a new hybrid algorithm called SOAPSO [17], which is tested on various benchmark functions. In [18], a hybrid optimization algorithm is formed from WOA and Modified Differential Evolution (MDE). That study aims to reduce WOA's tendency to become trapped in local optima, increase its population diversity, and avoid its early convergence. Another hybrid algorithm is HPSOBOA, which combines PSO with BOA in order to obtain superior results [19].

In [20], the authors introduce a new approach that uses trigonometric operators to improve the exploitation phase of the original AOA method. Sine and cosine functions are used to avoid local optima. In [21, 22], Teaching-Learning-Based Optimization (TLBO) is modified to improve solutions and accelerate convergence. In the first study, the modification alters the updating mechanism of a single solution. In the second study, grouping mechanisms for the individuals of the population are introduced into the phases of the algorithm. In another study, the Harris Hawks Optimization Algorithm (HHO) is modified by introducing various update strategies [23]. In [24], the Moth Flame Optimization (MFO) algorithm is modified to avoid local optima and early convergence. The modification uses a modified dynamic opposite learning (DOL) strategy, which aims to find a better solution by determining a quasi-opposite number. A modified version of BOA is proposed in [25]. Algorithm parameters such as the switching probability, power exponent (a), stimulus intensity (I), and sensor modality (c) are modified in search of better working efficiency. The Arithmetic Optimization Algorithm is a recently proposed algorithm inspired by arithmetic operators. It is modified by incorporating an operator for opposition-based learning (OBL) and a constant parameter [26]. Similarly, in [27,28,29,30,31,32,33,34], algorithms are hybridized or improved in order to obtain better results in various fields such as optimal power flow, mobile robot path planning, and centrifugal pump optimization.

The modification process can be carried out by adjusting the coefficients of an algorithm or by changing parts of its structure. Modification focuses on obtaining better results while considering the performance of the algorithm. Although there are many studies on the improvement of optimization algorithms, further problem-oriented improvements are expected to be achievable. Studies that modify an algorithm around a specific problem have obtained relatively better results [35,36,37,38].

This study aims to increase the performance of AOA on a wide range of problems. AOA features simplicity, scalability, and few control parameters. In addition, it has been evaluated on complex test functions and achieved better results than the other algorithms compared in [5]. Furthermore, it has an efficient and robust structure with regard to the balance between exploration and exploitation. At the exploration stage, new solutions are sought in unexplored areas of the search space. At the exploitation stage, the algorithm searches the neighborhood of the solutions already found. In this way the fitness value improves and more accurate solutions are acquired. A good balance between the two stages significantly improves the success of the algorithm.

Therefore, to further increase the effectiveness of AOA, part of the algorithm was modified and its parameters were recalculated as problem-specific coefficients. The candidate positions of objects are optimized using the DL strategy given in [39, 40]. In addition, another metaheuristic algorithm, HBA, was used to calculate the coefficients. In other words, one optimization algorithm is tuned by another.

A summary of contributions of this study is:

- MDAOA, a modified version of AOA, is developed which provides better results on a wide range of problem functions.
- Modification is applied with a two-step process: optimizing the candidate positions of objects using the dimension learning-based strategy, and modifying five predetermined parameters used in the original AOA. The parameters are optimized with a different optimization algorithm, namely HBA, in order to solve a specific engineering problem.
- Early convergence is avoided and the balance between the exploration and exploitation phases of AOA is improved.
- The proposed modified algorithm, MDAOA, is tested on four groups of problem functions: standard benchmark functions, the CEC 2017 test suite, engineering problems, and optimal placement of EVCSs on the IEEE-33 distribution system. Results indicate that MDAOA can produce better results than other well-known algorithms by calculating the problem-specific parameters.

The rest of the paper is organized as follows: Sect. 2 presents AOA before the modification steps are applied, together with HBA, which is used in the modification process. Section 3 describes the modification of AOA. In Sect. 4, the simulation results are presented in detail. The conclusion based on the results is presented in Sect. 5.

2 Optimization algorithms

2.1 Archimedes optimization algorithm

AOA is an optimization algorithm inspired by Archimedes’ Principle [5]. An object immersed in a liquid is pushed up by a buoyant force. This force is equal to the weight of the displaced liquid. According to this approach, every object immersed in the liquid tries to reach the equilibrium state, in which the buoyant force and the weight of the object are equal. This condition is given in Eq. 1. Equations 1–13 are taken from reference [5].

where \(v\) is the volume, \(p\) is the density, \(b\) indicates fluid, \(o\) indicates immersed object, and \(a\) is the acceleration. The speed in the liquid is determined by the volume and weight of the objects.
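The display equation is not reproduced in this text. Reading the equilibrium condition directly from the symbol definitions above (buoyant force equal to the weight of the object, each written as density times volume times acceleration), it can be written as follows. This is a reconstruction from the stated definitions and is not copied from Eq. 1 of [5].

\[
F_{b} = W_{o} \quad \Longrightarrow \quad p_{b}\, v_{b}\, a_{b} = p_{o}\, v_{o}\, a_{o}
\]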

In AOA, the submerged objects form the population. The initial search is performed with random values, which is common practice in most optimization algorithms. In every iteration, the values of density and volume are updated until the algorithm’s ending criteria are fulfilled. The steps implemented in AOA are:

Step 1 Values of the objects are randomly assigned as in Eq. 2.

where N is the population, \(O_{i}\) is the \(i\)th object in N, \(lb_{i}\) is the lower bound and \(ub_{i}\) is the upper bound. Volume (\(vol\)) and density (\(den\)) values are randomly initialized as in Eq. 3. Acceleration (\(acc_{i}\)) is initialized as in Eq. 4 [5].

Step 2 Density and volume are updated by Eq. 5.

where \(vol_{best}\) and \(den_{best}\) are the best volume and density values.

Step 3 The transfer operator (TF) increases over the iterations while the density factor decreases. This drives the changeover from the exploration phase, in which objects collide, to the exploitation phase, in which they approach the equilibrium state. TF is calculated by Eq. 6.

where \(t\) indicates the iteration number. \(t_{\max }\) is the maximum number of iterations. Density decreasing factor (d) decreases over time using Eq. 7:

Step 4 Exploration phase: In this step, collisions occur according to the TF value. The object’s acceleration (\(acc_{i}\)) is updated by Eq. 8.

where \(den_{i}\) stands for density and \(vol_{i}\) is volume. \(acc_{i}\) indicates acceleration of object i, mr indicates values of random material.

Step 5 Exploitation phase: Depending on the TF value, no collision takes place. In this case, the object’s acceleration is updated by Eq. 9.

where \(acc_{best}\) is the best acceleration value.

Step 6 Normalize acceleration step: The acceleration is large when the object is far from the global minimum and decreases over time as the object approaches it. Therefore, the normalized acceleration \(acc_{i,norm}^{t+1}\) sets the step size for each object. For this, Eq. 10 is used.

where \(u\) and \(l\) are the upper and lower values.

Step 7 Update step. In this step, positions are updated.

If TF less than or equal to 0.5 (exploration phase) Eq. 11 is used.

where C1 is equal to 2. If TF is greater than 0.5, exploitation phase is executed. Object positions are updated using Eq. 12.

where C2 = 6 and T = C3 × TF. The value of T increases with time in the range [C3 × 0.3, 1]. \(F\) is the flag parameter that changes the direction of motion, as defined in Eq. 13:

where \(P = 2 \times rand - C_{4}\).

Step 8 Evaluation step. In this step, the fitness function is evaluated. If a better result is found, it is remembered.
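Because the display equations are not reproduced in this text, Steps 1 to 8 can be easier to follow as code. The sketch below is a minimal, unofficial reading of the steps, using the equation forms of AOA as they are commonly published for [5]. Several details are assumptions of this sketch rather than statements of the paper: the function name `aoa`, the normalization bounds `u = 0.9` and `l = 0.1`, the default values of `c3` and `c4`, the clamping of candidates to the search box, and the small constants that guard against division by zero.

```python
import math
import random


def aoa(f, lb, ub, dim, n=20, t_max=200, c1=2.0, c2=6.0, c3=2.0, c4=0.5, seed=0):
    """Minimize f over the box [lb, ub]^dim with a basic AOA loop (a sketch)."""
    rng = random.Random(seed)
    u, l = 0.9, 0.1  # assumed normalization bounds for Step 6

    def rand_vec(lo, hi):
        return [lo + rng.random() * (hi - lo) for _ in range(dim)]

    # Step 1: random positions, densities, volumes and accelerations
    x = [rand_vec(lb, ub) for _ in range(n)]
    den = [rand_vec(0.0, 1.0) for _ in range(n)]
    vol = [rand_vec(0.0, 1.0) for _ in range(n)]
    acc = [rand_vec(lb, ub) for _ in range(n)]
    fit = [f(xi) for xi in x]
    best = min(range(n), key=lambda i: fit[i])

    for t in range(1, t_max + 1):
        tf = math.exp((t - t_max) / t_max)             # Step 3: transfer operator
        d = math.exp((t_max - t) / t_max) - t / t_max  # density decreasing factor
        new_acc = []
        for i in range(n):
            # Step 2: pull density and volume toward those of the best object
            for k in range(dim):
                den[i][k] += rng.random() * (den[best][k] - den[i][k])
                vol[i][k] += rng.random() * (vol[best][k] - vol[i][k])
            # Step 4 (collision with a random material) or Step 5 (follow the best)
            src = rng.randrange(n) if tf <= 0.5 else best
            new_acc.append([
                (den[src][k] + vol[src][k] * acc[src][k])
                / (den[i][k] * vol[i][k] + 1e-12)  # guard against division by zero
                for k in range(dim)
            ])
        # Step 6: normalize accelerations over the whole population
        lo = min(min(row) for row in new_acc)
        hi = max(max(row) for row in new_acc)
        acc = [[u * (a - lo) / (hi - lo + 1e-12) + l for a in row] for row in new_acc]

        t_scaled = min(c3 * tf, 1.0)  # T = C3 x TF, capped at 1
        for i in range(n):
            cand = []
            for k in range(dim):
                if tf <= 0.5:  # Step 7, exploration: move relative to a random object
                    r = rng.randrange(n)
                    v = x[i][k] + c1 * rng.random() * acc[i][k] * d * (x[r][k] - x[i][k])
                else:          # Step 7, exploitation: move around the best object
                    flag = 1.0 if 2.0 * rng.random() - c4 <= 0.5 else -1.0
                    v = x[best][k] + flag * c2 * rng.random() * acc[i][k] * d * (
                        t_scaled * x[best][k] - x[i][k])
                cand.append(min(max(v, lb), ub))  # clamp to the search box
            # Step 8: keep the candidate only if it improves the object
            fc = f(cand)
            if fc < fit[i]:
                x[i], fit[i] = cand, fc
        best = min(range(n), key=lambda i: fit[i])
    return x[best], fit[best]
```

A call such as `aoa(lambda v: sum(c * c for c in v), -10.0, 10.0, dim=5)` returns the best position found and its fitness. The greedy acceptance in Step 8 means the best fitness never gets worse from one iteration to the next.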

2.2 Honey Badger algorithm

Five constant values in AOA are optimized using HBA. It is a well performing algorithm tested on standard benchmark functions, engineering problems, and CEC 2017 benchmark functions. Results indicate that it can be effective in solving complex problems. In addition, HBA performs well in terms of balance of exploration and exploitation phases as well as convergence speed. As a result, HBA is employed in order to find the constant parameters of AOA.

HBA was inspired by the foraging behavior of the honey badger [8]. Food source or prey is located in two ways: smelling and digging or by following a bird that guides the honey badger to a source of honey. The first phase is digging where the prey’s rough location is established through smelling. Next, an appropriate location is selected for digging. The second phase is honey mode. In this phase, the honey guide bird is tracked in order to locate the source of honey. The pseudo code of HBA applied in the study is given in Algorithm 1 [8].

The system parameters are specified, and the initial positions are randomly determined. The population of honey badgers is represented in the matrix below [8].

Fitness evaluation results are stored in the matrix using Eq. 14. Equations 14–19 are obtained from reference [8]. ri is a random number between 0 and 1 where i = 1,..,n, and n = 7.

where \(N\) is population, \(x_{i}\) is the \(ith\) honey badger position.

The fitness function is calculated for each honey badger. The best position of xprey is remembered and fitness value is assigned to fprey. Afterward, Ii, the smell intensity, is calculated using Eq. (15).

Decreasing factor (α) is updated using Eq. (16).

The positions of the xnew are updated by either Eq. 17 or Eq. 19 depending on a random number.

\(F\) is the flag which changes direction. Its value is determined by Eq. 18.

Honey phase is the second part of Step 5. Equation 19 simulates the condition where the honey guide bird is followed to find honey.

where \(x_{new}\) is the updated position of the honey badger and \(x_{prey}\) indicates the location of the food/prey.

Updated positions are evaluated and assigned to fnew. The steps are repeated until the ending criteria are met.
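The HBA steps described above can be sketched as follows. The equations are not reproduced in this text, so the sketch uses the update forms of HBA as they are commonly published for [8]; it is an illustration under stated assumptions, not the implementation used in the study. Assumed details include the function name `hba`, the defaults `beta = 6` and `c = 2`, the use of the next population member as the neighbor for the source strength, the clamping to the search box, and the `eps` guard.

```python
import math
import random


def hba(f, lb, ub, dim, n=20, t_max=200, beta=6.0, c=2.0, seed=0):
    """Minimize f over the box [lb, ub]^dim with a basic Honey Badger loop (a sketch)."""
    rng = random.Random(seed)
    eps = 1e-12  # guard against division by zero (an addition of this sketch)
    x = [[lb + rng.random() * (ub - lb) for _ in range(dim)] for _ in range(n)]
    fit = [f(xi) for xi in x]
    b = min(range(n), key=lambda i: fit[i])
    x_prey, f_prey = x[b][:], fit[b]

    for t in range(1, t_max + 1):
        alpha = c * math.exp(-t / t_max)  # decreasing factor
        for i in range(n):
            nxt = x[(i + 1) % n]  # neighbor used for the source strength
            # Smell intensity: source strength over squared distance to the prey
            s = sum((p - q) ** 2 for p, q in zip(x[i], nxt))
            dist2 = sum((p - q) ** 2 for p, q in zip(x_prey, x[i]))
            intensity = rng.random() * s / (4.0 * math.pi * dist2 + eps)
            flag = 1.0 if rng.random() <= 0.5 else -1.0  # search direction
            digging = rng.random() < 0.5  # choose digging phase or honey phase
            cand = []
            for k in range(dim):
                d_k = x_prey[k] - x[i][k]
                if digging:
                    r3, r4, r5 = rng.random(), rng.random(), rng.random()
                    shape = abs(math.cos(2 * math.pi * r4) * (1 - math.cos(2 * math.pi * r5)))
                    v = (x_prey[k] + flag * beta * intensity * x_prey[k]
                         + flag * r3 * alpha * d_k * shape)
                else:
                    v = x_prey[k] + flag * rng.random() * alpha * d_k
                cand.append(min(max(v, lb), ub))  # clamp to the search box
            fc = f(cand)
            if fc <= fit[i]:      # keep the new position if it is not worse
                x[i], fit[i] = cand, fc
            if fc <= f_prey:      # remember the best position found so far
                x_prey, f_prey = cand[:], fc
    return x_prey, f_prey
```

The function takes any objective `f` that maps a list of `dim` numbers to a scalar, so the same routine can serve as the outer tuner in Stage 2 when `f` evaluates AOA with a given parameter set.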

3 Modifying Archimedes optimization algorithm

In [5], AOA is compared with recent and state-of-the-art optimization algorithms. AOA can provide very good solutions to standard benchmark functions, real-world engineering problems, and CEC benchmark functions. However, careful analysis and testing on benchmark functions showed that its population diversity can be increased, leading to an improved balance between exploitation and exploration and a more precise set of solutions. The modification is carried out in two stages: optimizing the candidate positions of objects using the dimension learning-based strategy, and optimizing five predetermined parameters of the original AOA with a different optimization algorithm, namely HBA, in order to solve a specific engineering problem.

3.1 Stage 1: applying dimension learning-based steps

In this stage, each iteration has two strategies to update the object’s position to a better position: DL strategy and standard AOA search strategies similar to the work in [35].

In original AOA, Steps 4 through 7 given in Sect. 2 are used for balancing exploration and exploitation phases. By applying this stage to the AOA, balance of exploration and exploitation is improved.

The DL strategy uses a distinctive methodology to establish neighborhood for each object. According to this approach, neighborhood data can be conveyed among objects. DL strategy consists of four phases.

3.1.1 Initiation step

\(N\) is the population of objects. They are distributed randomly by Eq. 20.

where D is dimension. Xi(t) represents the position of ith immersed object for iteration \(t\). frnd indicates F distribution.

3.1.2 Movement/transfer step

In the dimension learning strategy, each object learns from its surrounding objects in order to produce another candidate for the new position of Xi(t).

The dimensions of an object’s new position are computed by Eq. 23. First, the radius between the current and candidate positions is computed by Eq. 21 [39, 40].

Afterward, object’s neighbors are computed by Eq. 22.

where Ni(t) is matrix containing the neighbors of Xi(t).

3.1.3 Selecting and updating step

Neighbor relocation is performed by Eq. 23.

where \(X_{n,d} \left( t \right)\) is a random neighbor \(\in N_{i} \left( t \right)\) and \(X_{r,d} \left( t \right)\) is an object selected randomly from the population.

The fitness values of the AOA candidate Xi−AOA(t + 1) and the DL candidate Xi−DL(t + 1) are compared, and the position is updated with the better of the two by Eq. 24 [40].

3.1.4 Termination step

This process is repeated for all iterations.
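The movement and selection steps above can be sketched as follows. Since Eqs. 21 to 24 are not reproduced in this text, the sketch follows the description given here and the dimension learning form used in [39, 40] as commonly published: a Euclidean radius, a neighborhood of all objects within that radius, a per-dimension update from a random neighbor and a random object, and greedy selection. The function names `dl_candidate` and `select` are illustrative, and minimization is assumed.

```python
import math
import random


def dl_candidate(pop, i, x_aoa, rng):
    """Build the DL candidate for object i, given its AOA candidate x_aoa."""
    xi = pop[i]
    radius = math.dist(xi, x_aoa)  # Eq. 21: distance between current and candidate
    # Eq. 22: neighbors are all objects within that radius of the current position.
    # xi itself always qualifies (distance 0), so the neighborhood is never empty.
    neighbors = [xj for xj in pop if math.dist(xi, xj) <= radius]
    cand = []
    for d in range(len(xi)):
        xn = rng.choice(neighbors)  # random neighbor for this dimension
        xr = rng.choice(pop)        # random object from the whole population
        cand.append(xi[d] + rng.random() * (xn[d] - xr[d]))  # Eq. 23
    return cand


def select(f, x_aoa, x_dl):
    """Eq. 24: keep whichever candidate has the lower (better) fitness."""
    return x_aoa if f(x_aoa) < f(x_dl) else x_dl
```

In an MDAOA iteration, `x_aoa` would be the position produced by Steps 4 to 7 of AOA for object `i`, and the object would move to `select(f, x_aoa, dl_candidate(pop, i, x_aoa, rng))`.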

3.2 Stage 2: updating parameters

In this stage, the C3 and C4 parameters of AOA are assigned different values for the standard benchmark functions, the CEC 2017 functions, and the engineering problems. In the AOA article, a sensitivity analysis was performed on three selected CEC 2017 test functions. Part of the cost function values obtained by changing the constant parameters are given in Table 1.

It is stated in the AOA article that different values could be tried depending on the difficulty and landscape of the problem [5]. An algorithm may perform well with its default parameters; however, fine-tuning the parameters for a specific problem can return better solutions. For example, in [35] the structure of AOA is modified for solving the optimal power flow problem on three different power systems, and the results show the effectiveness gained by the modification. Therefore, one or more parameters of an algorithm, or part of its structure, can be modified in order to increase the effectiveness of the outcome. These parameters can be optimized by another optimization algorithm. This may incur a high computational cost, because the search domain is very wide and the tuning algorithm must repeatedly try new values. Since this tuning is required only once for each type of problem, running the modified algorithm afterward does not differ in complexity or computational time. After the new parameters are found, the modified algorithm is executed with them. The pseudo code of the proposed MDAOA is given in Algorithm 2.

In addition to C3 and C4, three fixed probability values are optimized. Probability values are as follows:

- p1 is the probability compared with the Transfer Operator (TF) parameter,
- p2 is the probability compared with the flag (F) for changing the search direction,
- p3 is the probability compared with the TF value in Step 5 of AOA to select either the exploration or the exploitation phase.

These probabilities are optimized by HBA within their lower and upper boundary limits. The second and third columns in Table 2 indicate the original values of the AOA parameters.
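The outer/inner structure of Stage 2 can be illustrated with a short sketch. To keep it small, plain random search stands in for HBA as the outer tuner, which is a deliberate simplification and not the method of the study. The bounds in `BOUNDS` are hypothetical and are not the limits of Table 2. The `score` argument is an assumed callback that would run AOA with the given parameters on the target problem and return, for example, the mean best fitness over several runs.

```python
import random

# Hypothetical search bounds for the five tuned parameters (not the paper's values)
BOUNDS = {
    "c3": (1.0, 2.0),
    "c4": (0.5, 2.0),
    "p1": (0.3, 0.7),
    "p2": (0.3, 0.7),
    "p3": (0.3, 0.7),
}


def tune(score, defaults, bounds=BOUNDS, trials=30, seed=0):
    """Return the parameter set with the lowest score among defaults and random trials."""
    rng = random.Random(seed)
    best, best_score = dict(defaults), score(defaults)
    for _ in range(trials):
        # Draw one candidate parameter set uniformly inside the bounds
        cand = {k: lo + rng.random() * (hi - lo) for k, (lo, hi) in bounds.items()}
        s = score(cand)
        if s < best_score:
            best, best_score = cand, s
    return best, best_score
```

Because the default parameter set is scored first, the returned set is never worse than the defaults under the chosen `score`. The cost noted above is visible here: every trial requires a full evaluation of the inner algorithm, but the tuned values can then be reused for every later run on the same type of problem.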

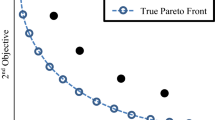

The flowchart of the proposed MDAOA is given in Fig. 1.

4 Experimental results

The effectiveness of the MDAOA algorithm is evaluated on four groups of problems: standard benchmark functions, the CEC 2017 test suite, five engineering problems, and optimal placement of EVCSs in the IEEE-33 bus distribution system.

In addition to AOA and Modified AOA, the formulated objective function is run with recent optimization algorithms with proven effectiveness using various benchmark functions (CEC, engineering and standard). Algorithms used for comparison are: Honey Badger Algorithm (HBA-2021), Sine Cosine Algorithm (SCA-2016), Butterfly Optimization Algorithm (BOA-2019), Particle Swarm Optimization Butterfly Optimization Algorithm (PSOBOA-2020), Golden Jackal Optimization (GJO-2022), Whale Optimization Algorithm (WOA-2016), Ant Lion Optimizer (ALO-2015), Salp Swarm Algorithm (SSA-2017), Atomic Orbital Search (AOS-2021) [4, 7,8,9,10,11, 15, 19, 41].

All algorithm evaluations are applied for 30 runs to provide sufficient consistency (tmax = 1000). Table 3 shows parameters for algorithms used in comparison.

The MATLAB implementations of the optimization algorithms were obtained from MATLAB File Exchange. Comparisons of the algorithms are executed using MATLAB R2019 on a Microsoft Windows 10 operating system.

4.1 Standard benchmark functions

The group of standard benchmark functions is the first of the four test function groups. Unimodal, multimodal and fixed dimensional functions are used to evaluate in multiple aspects. The function parameters of selected standard benchmark functions are shown in Table 4.

Convergence curve graphs of 13 standard benchmark functions are presented in Fig. 2 which shows fitness values and number of iterations. Results of 30 runs with maximum iteration of 1000 are presented in Table 5. Bold numbers indicate the minimum values. Results indicate that MDAOA provides best results on all of the standard benchmark functions. In addition, the average standard deviation values are consistently low and better on most functions when compared to other algorithms in the comparison group.

To draw conclusions from the solution sets in the study, two hypotheses are defined: the null hypothesis H0 and the alternative hypothesis H1. H0 states that the median errors of the compared algorithms are identical and equal to zero, while H1 states that at least one of the median errors differs from the others and from zero. The level of statistical significance (α) is a threshold used to decide whether to reject the null hypothesis. The level of significance in this study is 0.05.

In order to determine the most appropriate method for comparing the proposed algorithm statistically with the other algorithms, a normality test is performed. For this purpose, the Shapiro–Wilk (SW) test is used. Based on the results, the Friedman test is used to compare the errors that the algorithms produce in every iteration, similar to the studies in [8, 19, 24, 34]. The Friedman test is a nonparametric statistical test in which the errors of the algorithms are compared in order to check whether there is a statistically significant difference [42]. Mean ranks are presented in Table 5. Next, the Bonferroni-corrected Wilcoxon rank-sum test is employed as a post hoc test for pairwise comparisons of the algorithms, similar to the studies in [6, 12, 19, 24]. In other words, two solution sets are compared based on their median values to determine whether the difference between them is statistically significant. If the p-value is lower than the level of significance, which is set at 0.05, the difference between the two algorithms is statistically significant. P-values computed by the Bonferroni-corrected Wilcoxon rank-sum test are presented in Table 5.
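As a small illustration of the ranking step, the sketch below computes Friedman mean ranks from a table of errors and the per-comparison threshold obtained with a Bonferroni correction. It covers only the ranking and the threshold, not the Friedman test statistic or the Wilcoxon rank-sum p-values. The function names and the layout of `results` (one row per problem, one column per algorithm, lower is better) are assumptions of this sketch.

```python
def mean_ranks(results):
    """Friedman mean rank of each algorithm; tied values share the average rank.

    results[p][a] is the error of algorithm a on problem p (lower is better).
    """
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=lambda a: row[a])
        pos = 0
        while pos < n_alg:
            end = pos
            # Extend the group while the next algorithm has the same error
            while end + 1 < n_alg and row[order[end + 1]] == row[order[pos]]:
                end += 1
            avg = (pos + end) / 2.0 + 1.0  # average of the 1-based ranks pos+1 .. end+1
            for a in order[pos:end + 1]:
                totals[a] += avg
            pos = end + 1
    return [tot / len(results) for tot in totals]


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold after Bonferroni correction."""
    return alpha / n_comparisons
```

For example, `mean_ranks([[0.1, 0.2, 0.3], [0.1, 0.1, 0.5]])` gives `[1.25, 1.75, 3.0]`: the first two algorithms tie on the second problem and share rank 1.5. If one algorithm were compared pairwise with ten others at α = 0.05, `bonferroni_alpha(0.05, 10)` would give a per-comparison threshold of 0.005.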

According to Table 5, MDAOA returns the best results on all functions. MDAOA is the only algorithm to provide the minimum result on five functions, and it shares the best result on the remaining eight functions, with consistently low standard deviation values. MDAOA also has the lowest Friedman mean rank and therefore ranks first in optimizing the standard benchmark functions.

4.2 Congress on evolutionary computation 2017

The test suite of the IEEE Congress on Evolutionary Computation 2017 (CEC 2017) is a test bed that provides an environment for testing the performance of unconstrained numerical optimization algorithms. CEC 2017 includes 29 benchmark functions (f2 is excluded), among which are unimodal, multimodal, hybrid, and composition functions. These benchmark functions evaluate an optimization algorithm’s ability to avoid local minima and its exploitation and exploration performance. The search range for the functions is [− 100, 100]. The dimension is 30. A summary of the CEC 2017 test functions is presented in Table 6.

In this group, 29 CEC 2017 benchmark functions are evaluated. Convergence curve graphs indicating minimum fitness values and number of iterations are presented in Fig. 3. Results including mean, median and standard deviation of 30 runs with maximum iteration of 1000 are presented in Table 7. MDAOA returned optimum results for 26 of the total 29 benchmark functions. Consequently, MDAOA can perform well on a wide range of problems.

4.3 Optimal placement of EVCS in the distribution network

Placement of EVCSs is critical because unplanned deployment can cause adverse effects such as deterioration of the voltage profile, increased active power losses, instantaneous load peaks, and overloading of transmission lines and transformers. Thus, in this study, EVCSs are placed at the locations (specific buses) that minimize their effect on the distribution network as much as possible. The placement is based on an index that consists of power loss, voltage deviation, and the voltage stability index (VSI, the ability of a system to return to normal operating conditions after a disturbance), and the problem is solved using standard AOA and MDAOA. The results include the EVCS locations at the appropriate buses of the distribution network.

4.3.1 Objective function

The multi-objective function given in Eq. 25 is used in the EVCS placement problem.

Here, w1, w2 and w3 are weight factors and represent the coefficients of the f1, f2, and f3 functions. The objective function \(f_{1}\), calculated by Eq. 26, is used to minimize the power loss value.

The objective function f2, calculated by Eq. 27 is used for minimum voltage deviation value.

The value of VSI should be greater than 0, and the higher this value, the better the stability of the system. In order to calculate the VSI values, the formula in reference [44] is used. The objective function that calculates the VSI values is presented in Eq. 28.

The lowest VSI value among all VSI values represents the weakest link in terms of system stability. The lowest VSI value is found by Eq. 29, and w3 in Eq. 25 is divided by this value.
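The way the three terms combine can be sketched as follows. Equation 25 is not reproduced in this text, so the form below is a reading of the description above: a weighted sum of power loss and voltage deviation plus w3 divided by the lowest VSI. The function name, the argument layout, and the default weights are hypothetical, and the power-flow calculation that would produce the inputs is outside the scope of the sketch.

```python
def evcs_objective(power_loss, voltage_deviation, vsi_values, w=(1.0, 1.0, 1.0)):
    """Weighted objective to minimize: w1*f1 + w2*f2 + w3 / min(VSI)."""
    w1, w2, w3 = w  # hypothetical weights; the study's values are not given here
    weakest = min(vsi_values)  # the lowest VSI marks the weakest bus
    if weakest <= 0:
        raise ValueError("the minimum VSI must be greater than 0")
    # A higher minimum VSI lowers the third term, rewarding better stability
    return w1 * power_loss + w2 * voltage_deviation + w3 / weakest
```

For instance, `evcs_objective(2.0, 1.0, [0.5, 0.8])` evaluates to 5.0 with unit weights, since the weakest bus has a VSI of 0.5. Dividing w3 by the lowest VSI turns the maximization of stability into a term that fits a minimization objective.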

4.3.2 Constraints

The following constraints (Eq. 30) are used to secure optimal power flow including minimum voltage, power and voltage stability of the distribution network.

where N is the number of buses, \(P_{i}\) is the active power, \(Q_{i}\) is the reactive power, and \(V_{i}\) is the voltage of the \(i\)th bus.

Figure 4 shows the comparison of convergence curve graphs of 11 optimization algorithms. Numerical results are presented in Table 8. Results show that compared to other techniques, MDAOA has achieved successful results with lowest average standard deviation values. This shows that MDAOA can be used in the EVCS placement problem, which is an important and challenging issue in power system engineering.

4.4 Constrained engineering design problems

The validity and efficiency of the proposed MDAOA are evaluated on five real-life constrained engineering problems. The problems are highly complex, with multiple design variables and constraints. Nevertheless, MDAOA returned the best results on all five engineering problems. Table 9 lists the engineering design problems with their relevant parameters.

4.4.1 Tension/compression spring design

The tension/compression spring design problem is used as a benchmark function. It is an optimization problem that aims to minimize the cost of a spring with three variables: the number of active coils (N), the wire diameter (d), and the coil diameter (D). The problem has four constraints, which involve deflection, stress and surge frequency. The problem is illustrated in Fig. 5.

The problem is mathematically formulated as:

Constraints:

Range of variables

Comparative convergence curves are presented in Fig. 6. The numerical results are shown in Table 10. Results demonstrate that solutions calculated by MDAOA and GJO are superior in the comparison group.
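The formulation itself is not reproduced in this text. For orientation, the sketch below encodes the tension/compression spring problem in the form that is standard in the benchmark literature, which may differ in detail from the equations of the study. The static penalty in `penalized` is one common way to handle the constraints; the paper does not state which scheme it uses, so that function and its `weight` parameter are assumptions.

```python
def spring_cost(x):
    """Objective of the spring problem; x = (d, D, N)."""
    d, D, N = x  # wire diameter, coil diameter, number of active coils
    return (N + 2.0) * D * d ** 2


def spring_constraints(x):
    """Constraint values g_j(x); a design is feasible when every g_j <= 0."""
    d, D, N = x
    return [
        1.0 - (D ** 3 * N) / (71785.0 * d ** 4),                   # deflection
        (4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
        + 1.0 / (5108.0 * d ** 2) - 1.0,                           # shear stress
        1.0 - 140.45 * d / (D ** 2 * N),                           # surge frequency
        (d + D) / 1.5 - 1.0,                                       # outer diameter
    ]


def penalized(x, weight=1e6):
    """Static-penalty fitness: cost plus weighted squared constraint violations."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(x))
    return spring_cost(x) + weight * violation
```

The usual variable ranges for this form are 0.05 ≤ d ≤ 2, 0.25 ≤ D ≤ 1.3 and 2 ≤ N ≤ 15. A function such as `penalized` can be passed directly as the objective of a metaheuristic, so that infeasible designs are ranked below feasible ones.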

4.4.2 Pressure vessel design

The pressure vessel design problem is an optimization problem aiming to minimize the manufacturing cost of a cylindrical pressure vessel. The design and the parameters of the optimization are shown in Fig. 7. These are: shell thickness (Ts), inner radius (R), length of the cylindrical section (L) (excluding head) and head thickness (Th).

The mathematical formulation of the problem is:

Constraints:

Range of variables

Convergence curves are presented in Fig. 8. The comparative results are shown in Table 10. Results show that MDAOA outperforms other algorithms in the comparison group.

4.4.3 Welded beam design

The welded beam design problem minimizes the manufacturing cost of the welded beam shown in Fig. 9. The cost function includes four decision variables: weld thickness (h), bar thickness (b), length of the attached section of the bar (l), and the bar’s height (t). There are seven constraints, including shear stress (τ), buckling load on the bar (Pc), and bending stress (σ).

The problem is mathematically formulated as:

Constraints:

Range of variables

where

L = 14 in, δmax = 0.25 in, P = 6000 lb, E = 30 × 10^6 psi, G = 12 × 10^6 psi, τmax = 13,600 psi, σmax = 30,000 psi.

The convergence curve graph is shown in Fig. 10. Results are shown in Table 10. The solution calculated by MDAOA is better than all other algorithms in the comparison group.

4.4.4 Speed reducer problem

In this engineering design problem, the goal is to choose the parameters of a speed reducer used in a small aircraft so that its weight is minimized. The problem has seven design variables and eleven constraints. The design variables are: teeth module, face width, number of teeth on the pinion, length between bearings for the first and second shafts, and diameters of the first and second shafts. A schematic of the speed reducer design problem is presented in Fig. 11.

The problem is mathematically formulated as:

Constraints:

Range of variables
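A minimal sketch of the speed reducer problem is given below, assuming the standard form of this benchmark from the literature. The coefficients, the ranges in the comments, and the names `reducer_weight` and `reducer_constraints` are assumptions for illustration and are not confirmed by this study.

```python
import math

# Assumed standard ranges: 2.6 <= b <= 3.6, 0.7 <= m <= 0.8, 17 <= z <= 28,
# 7.3 <= l1, l2 <= 8.3, 2.9 <= d1 <= 3.9, 5.0 <= d2 <= 5.5.


def reducer_weight(b, m, z, l1, l2, d1, d2):
    """Weight of the speed reducer (assumed standard form)."""
    return (0.7854 * b * m ** 2 * (3.3333 * z ** 2 + 14.9334 * z - 43.0934)
            - 1.508 * b * (d1 ** 2 + d2 ** 2)
            + 7.4777 * (d1 ** 3 + d2 ** 3)
            + 0.7854 * (l1 * d1 ** 2 + l2 * d2 ** 2))


def reducer_constraints(b, m, z, l1, l2, d1, d2):
    """Return [g1..g11]; a design is feasible when every value is <= 0."""
    return [
        27.0 / (b * m ** 2 * z) - 1.0,
        397.5 / (b * m ** 2 * z ** 2) - 1.0,
        1.93 * l1 ** 3 / (m * z * d1 ** 4) - 1.0,
        1.93 * l2 ** 3 / (m * z * d2 ** 4) - 1.0,
        math.sqrt((745.0 * l1 / (m * z)) ** 2 + 16.9e6) / (110.0 * d1 ** 3) - 1.0,
        math.sqrt((745.0 * l2 / (m * z)) ** 2 + 157.5e6) / (85.0 * d2 ** 3) - 1.0,
        m * z / 40.0 - 1.0,
        5.0 * m / b - 1.0,
        b / (12.0 * m) - 1.0,
        (1.5 * d1 + 1.9) / l1 - 1.0,
        (1.1 * d2 + 1.9) / l2 - 1.0,
    ]
```

Evaluating `reducer_weight(3.5, 0.7, 17, 7.3, 7.71532, 3.35021, 5.28665)` gives approximately 2994.47, a value commonly reported for this benchmark. The number of teeth is treated as a continuous input here; whether it is rounded to an integer depends on the solver setup.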

The convergence curves of the algorithms are compared in Fig. 12. According to the results given in Table 10, the proposed algorithm and HBA return the best results.

4.4.5 Three bar truss design

The three-bar truss design problem, shown in Fig. 13, is a weight minimization problem. Buckling, deflection, and stress are the constraints of the system.

The mathematical formulation of the problem is:

Constraints:

Range of variables

where
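As a hedged sketch, the three-bar truss problem can be coded as below. It assumes the standard form of this benchmark, with the two cross-sectional areas as design variables in the range [0, 1] and the constants l = 100 cm, P = 2 kN/cm², and σ = 2 kN/cm². These constants and the function names are assumptions taken from the literature, not values confirmed by this study.

```python
import math

# Assumed constants: bar length (cm), load and allowable stress (kN/cm^2).
L_BAR, P, SIGMA = 100.0, 2.0, 2.0


def truss_weight(a1, a2):
    """Structure volume, used as the weight measure (assumed standard form)."""
    return (2.0 * math.sqrt(2.0) * a1 + a2) * L_BAR


def truss_constraints(a1, a2):
    """Return [g1, g2, g3]; a design is feasible when every value is <= 0."""
    r2 = math.sqrt(2.0)
    denom = r2 * a1 ** 2 + 2.0 * a1 * a2
    g1 = (r2 * a1 + a2) / denom * P - SIGMA
    g2 = a2 / denom * P - SIGMA
    g3 = 1.0 / (r2 * a2 + a1) * P - SIGMA
    return [g1, g2, g3]
```

For the frequently cited design a1 = 0.788675, a2 = 0.408248, `truss_weight` evaluates to approximately 263.896. The first constraint is nearly active at that point, so a small tolerance is advisable when testing feasibility.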

The convergence curves of the problem for MDAOA and the other algorithms are shown in Fig. 14. The results in Table 10 show that MDAOA returned the minimum result for all functions.

5 Conclusion

In this study, a novel optimization algorithm, MDAOA, is proposed as a modification of AOA. The goal of the modification is to avoid early convergence and improve the balance between exploitation and exploration. This is accomplished by a two-phase mechanism: optimizing the candidate positions of objects using the dimension learning-based (DL) strategy, and recalculating the five predetermined parameters used in the original AOA.

MDAOA uses an additional measure to select the winning object and update the existing location. The DL strategy uses a diverse approach to form a neighborhood for each individual object, within which neighborhood data can be conveyed among the other objects. The dimension learning used in the proposed work can improve the balance between exploitation and exploration by means of four phases: initiation, movement/transfer, selection/updating, and termination.

In the second phase of the modification, the five constant values of AOA are computed by another optimization algorithm, HBA. These parameters are computed once for each optimization problem. The modified algorithm, MDAOA, is then applied to a wide range of problems. The efficiency of the proposed algorithm is tested on 13 standard benchmark functions, 29 CEC 2017 benchmark functions, the optimal placement of EVCSs on the IEEE-33 distribution system, and five real-life engineering problems. Furthermore, the results of the proposed algorithm are compared with ten algorithms published in recent years. The comparison includes statistical analysis employing the Friedman test with the Wilcoxon rank-sum test as a post hoc test for pairwise comparisons. Experimental results and statistical analysis indicate that MDAOA performed well, with consistently low standard deviation values. MDAOA returned the best results in all 13 standard benchmarks, 26 of the 29 CEC 2017 benchmarks (89.65%), the optimal EVCS placement problem, and all five real-life engineering problems. The overall success rate is 45 out of 48 problems (93.75%). Although MDAOA shared the lead for 13 functions, it was the only algorithm that provided the best results for 32 functions. The algorithm presented in this study is particularly suited to engineering optimization studies, as well as to constrained, unimodal, multimodal, hybrid, and composition functions.

The proposed method improves the performance of the original AOA; however, it requires a preprocessing step in which the parameters are optimized by another algorithm. Future studies can aim to eliminate this step and develop a self-adaptive approach. In addition, more real-life optimization problems, such as the optimal EVCS placement problem solved in this study, can be identified and optimized by MDAOA.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This research is supported by the Inonu University Scientific Research Projects (BAP) Unit (No. FDK-2023-3163). The authors would like to thank Dr. Ahmet Kadir Arslan for comments and discussions which helped improve the quality of the paper.

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nurmuhammed, M., Akdağ, O. & Karadağ, T. Modified Archimedes optimization algorithm for global optimization problems: a comparative study. Neural Comput & Applic 36, 8007–8038 (2024). https://doi.org/10.1007/s00521-024-09497-1

DOI: https://doi.org/10.1007/s00521-024-09497-1