Abstract

Ground reaction force and moment (GRF&M) measurements are vital for biomechanical analysis and play an important role in the clinical domain for the early detection of abnormalities in different neurodegenerative diseases. Force platforms have become the de facto standard for measuring GRF&M signals in recent years. Although the signal quality achieved from these devices is unparalleled, they are expensive and require a laboratory setup, making them unsuitable for many clinical applications. For these reasons, predicting GRF&M from cheaper and more feasible alternatives has become a topic of interest. Several works have predicted GRF&M from kinematic data captured from the subject’s body with the help of motion capture cameras. The problem with these solutions is that they rely on markers placed on the whole body to capture the movements, which can be infeasible in many practical scenarios. This paper proposes a novel deep learning-based approach to predict 3D GRF&M from only 5 markers placed on the shoe. The proposed network, “Attention Guided MultiResUNet”, can predict the force and moment signals accurately and reliably compared to the techniques relying on full-body markers. The proposed deep learning model is tested on two publicly available datasets containing data from 66 healthy subjects to validate the approach. The framework has achieved an average correlation coefficient of 0.96 for 3D ground reaction force prediction and 0.86 for 3D ground reaction moment prediction in cross-dataset validation. The framework can provide a cheaper and more feasible alternative for predicting GRF&M in many practical applications.


1 Introduction

The measurement of Ground Reaction Force (GRF) and Ground Reaction Moment (GRM) is a topic of great interest in functional gait analysis, clinical research, and rehabilitation [1]. The GRF is the force experienced by the subject’s foot when it is in contact with the ground, while GRM is the sum of the moments produced by the individual reaction forces about the Center of Pressure (CoP). 3D GRF&M can fully characterize a subject’s movements, e.g., walking, running, or jumping, providing important measurements for biomechanical analysis [2]. In the clinical domain, GRF&M has widespread use cases involving gait disorders [3], neurodegenerative diseases [4], knee osteoarthritis [5], etc., and is widely used by physiotherapists, orthopedists, and physicians. Kinematic parameters such as joint angles can be measured both outside and inside a laboratory setup using a marker-based Optoelectronic System (OS) or Inertial Measurement Units (IMU) [6, 7]. However, the analysis of kinetic parameters such as GRF&M and joint moments is strictly limited to force platform analysis and inverse dynamics simulation, which requires a dedicated laboratory setup [8].

The de facto standard technique of measuring biomechanical parameters in walking, running, and other common activities is with the help of a multi-camera OS in conjunction with two or more ground or treadmill-mounted force platforms. Such a setup can be further enhanced by introducing electromyography (EMG) sensors, IMUs, and video recording providing multimodal data for multifactorial functional evaluation [2]. Similar setups have been applied over the years for various ranges of tasks, including gait and posture analysis [9], jumping capability analysis [10], upper and lower limb evaluation, and other physical activities [11, 12]. However, repeated trials might be necessary for some activities like walking and running to capture long-range patterns. To solve this, a treadmill-mounted force platform in conjunction with an OS can be used [13, 14].

The quality of kinetic data such as GRF&M collected from a good force platform is unparalleled. However, this technique allows collecting data for only a few footsteps (one step per force platform), which might limit its capability in analyzing fluctuating gait patterns [15, 16]. Instrumented treadmills solve this problem to some extent but come with their own set of shortcomings. Additionally, force platforms must be mounted to the ground to collect data properly and, therefore, cannot be used everywhere. So, despite their high accuracy, reliability, repeatability, and outstanding metrological functionalities, force platforms have several drawbacks, as listed below.

- The setup is inherently bulky and cumbersome and requires a dedicated space and laboratory setup to get the most out of it.
- Since they require a laboratory setup, they are unsuitable for outdoor data collection.
- Force platforms are very expensive, and the setup and maintenance that go with them massively increase the cost.
- They also require highly skilled operators.
- It has also been observed that the subject’s normal walking pattern changes when a force platform or treadmill is introduced [17].

For these reasons, various alternative approaches for predicting the GRF&M have been proposed in recent years that do not require a force platform [15, 18, 19]. Some of the attempts involve a thin layer of strain gauge transducers [20] or piezoelectric copolymer film [21] that can be placed inside the sole of a shoe. Other attempts use shoes instrumented with force sensors placed under the heel and toe areas of the foot [22, 23]. The instrumented shoe approach can accurately measure the vertical and shear GRF signals, but the process has some drawbacks. The thickness of the sensor separates the foot from the ground, which affects the walking gait, while the friction with the walking surface and the overall weight of the instrumented shoe also affect an individual’s gait [24]. Another alternative approach to estimating GRF&M is with the help of an OS. Since this approach requires minimal instrumentation, it can overcome several issues that are present in the case of instrumented approaches. Oh et al. [25] proposed an Artificial Neural Network (ANN) model to predict 3D GRF&M during the double support phase of the gait cycle, while the single support phase was predicted using inverse dynamics. The authors equipped 5 subjects with full-body markers to obtain the displacement, velocity, and acceleration of different body parts and predicted GRF&M signals with an average correlation coefficient (\(R\)) of 0.9. Fluit et al. [26] proposed an inverse dynamics-based approach to predict GRF&M from full-body motion. In their experiment, 53 reflective markers were placed on the body, and by using Newton’s laws of motion and musculoskeletal modeling, force and moment signals were predicted. Mundt et al. [27] compared the performance of a feed-forward neural network and a Long Short-Term Memory (LSTM) network for predicting the GRF signals from marker trajectories. In their experiment, the feed-forward network outperformed the LSTM network in predicting GRF by achieving an average correlation coefficient (\(r\)) of 0.96. All of the above-mentioned studies used a full-body marker system, which has several drawbacks:

- Placing reflective markers all over the body is inconvenient and might not be feasible for many practical use cases.
- To capture the body movements correctly, these studies required the subjects to wear a tight-fit outfit to which the markers were attached, which might not be suitable for many users and different cultures.
- The results achieved from these studies might not be reproducible because the GRF&M predictions had strict dependence on the marker placements. If the markers were not placed in the exact locations used when the model was trained, the model would perform very poorly.

For these reasons, in this paper, the authors have tried to minimize the number of markers required to predict the 3D GRF&M. The investigations have achieved good results by using only 5 markers placed on the foot area, which can be placed on a shoe or a sock. Since wearing a marker-equipped shoe is enough for the proposed method to work, it greatly improves the feasibility of practical use cases. Also, the subject does not need to wear a full outfit, as body markers are not used, making it more convenient. Another big advantage is that the proposed approach can be used to train for shoes of different sizes pre-equipped with markers, and later those shoes can be provided to a new user who should be able to get good predictions since the marker placements are unchanged. This opens up possibilities of a generalized model for out-of-sample users because of the control over the marker placements.

For synthesizing GRF&M signals from marker trajectories, there are mainly two approaches investigated in the literature: inverse dynamics-based approaches [26] and deep learning-based approaches [25, 27, 28]. It has been shown that ANNs can learn gait patterns, and they have been used in many gait analysis problems [29]. In several works, a fully-connected neural network has been used to predict 3D GRF&M for a complete gait cycle [30, 31] or to resolve the indeterminacy in the double support phase [25]. In some studies, LSTM networks have also been used to predict force and moment waveforms [27]. In this study, however, UNet [32] based architectures are used because of their capabilities in signal-to-signal synthesis problems. A novel neural network was developed by combining the MultiResUNet [33] and the Attention Gate [34], which outperformed vanilla UNet and MultiResUNet architectures in most experiments. Two different datasets and a cross-dataset validation were used to validate the proposed network’s capability. With the help of the proposed model, better accuracies compared to almost all of the previous works have been achieved by utilizing only 5 markers from the foot. So, the key contributions of this study can be summarized as follows:

1. A low-cost alternative for predicting 3D GRF and GRM from optical motion capture data without needing a dedicated force platform has been proposed.
2. A novel neural network architecture called Attention Guided MultiResUNet (AG-MultiResUNet) has been developed, outperforming the classical UNet and MultiResUNet architectures for such synthesis tasks.
3. Only five reflective markers placed on the subjects’ feet were used to obtain state-of-the-art results instead of the inconvenient full-body markers.
4. The effectiveness/robustness of the proposed approach has been validated by applying it to two different datasets and performing a cross-dataset validation.

The rest of the paper is organized in the following manner: Section 1 introduces the problem, similar studies trying to solve the problem, and the novelty of the proposed solution. Section 2 presents the methodology adopted in this study to develop the proposed solution, details of the dataset, preprocessing stages, and technical details of the proposed network. Section 3 presents the results from different experiments, and Sect. 4 is dedicated to a discussion of the results confirming the robustness of the proposed solution. Some limitations of the proposed solution have been addressed in Sect. 5. Finally, a conclusion is drawn in Sect. 6.

2 Methodology

The diagram of the complete framework is shown in Fig. 1. The process starts with the instrumentation phase, where the reflective markers are placed on the subject’s feet, and several cameras are placed along the walkway. After the setup, the subject walks straight through the walkway, and the cameras capture the movement of the markers. After the walking trials, the footage of the cameras is merged through motion-capture software, where the trajectories of the markers are retrieved. After that, the trajectories are provided as the input to the proposed network, which predicts the 3D GRF&M signals. Two publicly available datasets that contain both motion capture data and force platform data have been utilized to train and validate the network. The whole process is described in detail in the next sections.

2.1 Dataset description

Creating a combined kinetic and kinematic dataset of human gait is challenging. Expensive equipment, laboratory setup, sophisticated data collection technique, the necessity for expert knowledge of the domain, and the privacy of the subjects add to the complexity of the process. As a result, only a few public datasets are available that combine kinetic and kinematic data of human gait. For this work, the authors have only focused on normal walking gait and healthy subjects’ data without any gait-related disorder, further narrowing down the available options. Finally, two different available datasets are utilized for this work. The idea was to validate the proposed method on multiple datasets and check its performance on out-of-sample data. The first dataset is titled “A multimodal dataset of human gait at different walking speeds established on injury-free adult participants,” available on Nature Scientific Data [35]. The second dataset is also from Nature Scientific Data and is titled “Lower limb kinematic, kinetic, and EMG data from young, healthy humans during walking at controlled speeds” [36]. In the following sections, we will refer to the first dataset as “Dataset A” and the second as “Dataset B”. The two datasets used in this paper have been summarized in Table 1.

2.1.1 Dataset A

This dataset contains kinetic, kinematic, and EMG data from 50 healthy injury-free subjects. Among the 50 subjects, 24 were women, and 26 were men, with a mean age of 37 ± 13.6 years, a mean height of 1.74 ± 0.09 m, and a mean weight of 71 ± 12.3 kg. The data collection was approved by the local ethical committee of Rehazenter and conducted according to the Declaration of Helsinki. All of the participants provided their informed consent for the data collection. The participants were equipped with 52 cutaneous reflective markers and were asked to walk a couple of times along a straight 10-m walkway. Two force plates (OR6-5, AMTI, USA) were fixed in the center of the walkway to measure the GRF, GRM, and CoP. A 10-camera optoelectronic system (OS) was used to track the 3D trajectories of the reflective markers. The force plates were sampled at 1500 Hz and the OS at 100 Hz. Qualisys Track Manager software (QTM 2.8.1065, Sweden) was used to synchronize the force plates and OS data and store it in the C3D file format. Each subject recorded at least 3 trials. The dataset also includes 5 different walking speed trials: 0–0.4 m s−1 (C1), 0.4–0.8 m s−1 (C2), 0.8–1.2 m s−1 (C3), self-selected natural speed (C4), and self-selected fast speed (C5). In total, 1143 trials were recorded and included in the dataset during the data collection process.

2.1.2 Dataset B

This dataset contains lower limb kinetic, kinematic, and EMG data collected from 16 participants. All the subjects were healthy and had no history of injuries or musculoskeletal dysfunctions. Among the participants, 8 were male and 8 were female. The range of body mass for the subjects was 45–90 kg, the height range was 1.50–1.90 m, and their mean age was 23.8 ± 2.02 years. Written informed consent was provided by all the participants following the ethical guidelines of the University of Minho Ethics Committee (CEICVS 006/2020). 24 reflective markers were placed on the lower limbs of the subjects. They were asked to walk on a 10-m flat walkway equipped with 6 embedded force plates. Among the six force plates, four (two FP4060 and two FP6090) were from Bertec (Ohio, United States of America), and two (9281 EA–FP4060) were from Kistler (Winterthur, Switzerland). A 20 N force threshold was applied to eliminate the noise from the force platform signals. A 12-camera motion-capture system (Oqus, Qualisys–Motion-Capture System, Göteborg, Sweden) was used to capture the 3D kinematic information. The force platforms were sampled at 2000 Hz, and the motion capture system was sampled at 200 Hz. The data was processed and synchronized using the Visual3D software and was saved in the C3D file format. All participants went through 10 walking trials where they walked at 7 different walking speeds (1.0, 1.5, 2.0, 2.5, 3.0, 3.5, and 4.0 km/h). There are 1120 trials collected from both legs, including 1120 and 2240 strides for the left and right legs, respectively.

2.2 Preprocessing

Several preprocessing steps were performed before using the data as input to our deep learning model. First, a filtering step was performed to remove any unnecessary frequency components. After that, the gait data were segmented into individual gait-cycle segments. Next, the signals from the two different modalities were synchronized. Figure 2 shows the effect of each step on the “FM1” marker trajectories along with the force and moment signals. After each preprocessing step, we can see a gradual improvement in the signal quality.

Fig. 2 The preprocessing steps. (a) The raw trajectory, force, and moment signals contain noise and bad signals. The trajectory signals are shown for the “FM1” marker position only. (b) The signals after applying a low-pass filter and baseline correction. (c) The signals after synchronization and segmentation

2.2.1 Filtering

The force platform signals and motion trajectories contained high-frequency noise. So, at first, they were filtered using a Butterworth low-pass filter. For the trajectories, the filter order was set to 4, and the cut-off frequency was set to 6 Hz. For the force platform signals, the filter order was set to 2, and the cut-off frequency was set to 15 Hz. The filter orders and cut-off frequencies were selected according to the related literature in this domain [35, 36]. It was noticed that some of the vertical GRF signals had an offset associated with them. In other words, the signal’s amplitude was not zero when no force was applied. So, after filtering, the signals were also baseline corrected.
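The filtering step can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes SciPy's `butter` and `filtfilt`, zero-phase (forward and backward) filtering, the Dataset A sampling rates, and a baseline estimated from the first samples of the recording. The helper names `lowpass` and `baseline_correct` are hypothetical.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def lowpass(signal, cutoff_hz, fs_hz, order):
    """Butterworth low-pass filter, run forward and backward (zero phase).

    Note: forward-backward filtering doubles the effective filter order;
    whether the paper filtered in one or two passes is not stated.
    """
    b, a = butter(order, cutoff_hz, btype="low", fs=fs_hz)
    return filtfilt(b, a, signal)

def baseline_correct(force, n_unloaded=50):
    """Subtract the mean of the first samples, assumed to be unloaded."""
    return force - np.mean(force[:n_unloaded])

# Synthetic marker trajectory: 1 Hz motion plus 40 Hz noise, sampled at 100 Hz.
fs_markers = 100.0
t = np.arange(0.0, 2.0, 1.0 / fs_markers)
clean = np.sin(2 * np.pi * 1.0 * t)
noisy = clean + 0.2 * np.sin(2 * np.pi * 40.0 * t)
filtered = lowpass(noisy, cutoff_hz=6.0, fs_hz=fs_markers, order=4)

# Synthetic vertical force with a 5 N offset, sampled at 1500 Hz.
fs_force = 1500.0
force = 5.0 + np.zeros(3000)
force[1000:2000] += 700.0
force_filtered = baseline_correct(lowpass(force, 15.0, fs_force, 2))
```

The cut-off frequencies and orders are the ones quoted in the text; everything else in the sketch is illustrative.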

2.2.2 Segmentation

A single trial from Dataset A contained 2 gait cycles, and a single trial from Dataset B contained 4 gait cycles combining left and right feet signals. The authors experimented with the number of gait cycles in a single segment and found that one gait cycle per segment provided the best results. So, the trials from both datasets were segmented into individual gait cycles. A complete gait cycle comprises two main phases: the stance phase, when the foot is in contact with the ground, and the swing phase, when the foot has no contact with the ground. The force platform is only active when pressure is applied during the stance phase. As a result, the GRF and GRM signals are only available during the stance phase of the gait cycle. During the swing phase, the signal remains zero since there is no contact of the foot with a force platform. For this study, the authors only needed the stance phase of the gait cycle, where the signals are present. So, the swing phase was excluded from the segmented gait cycles.
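One simple way to isolate the stance phase is to threshold the vertical force. The sketch below assumes this approach and reuses the 20 N value that Dataset B applies for noise removal; the paper does not state how stance boundaries were detected, and the function name `stance_segments` is hypothetical.

```python
import numpy as np

def stance_segments(fz, threshold_n=20.0):
    """Return (start, end) index pairs where vertical force exceeds the threshold.

    The end index is exclusive, so fz[start:end] is one stance phase.
    """
    loaded = np.asarray(fz) > threshold_n
    # Pad with False so that every segment has a rising and a falling edge.
    padded = np.concatenate(([False], loaded, [False]))
    edges = np.diff(padded.astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    return list(zip(starts.tolist(), ends.tolist()))

# Synthetic trial with two foot contacts on the force platform.
fz = np.zeros(100)
fz[10:40] = 600.0
fz[60:95] = 650.0
segments = stance_segments(fz)
```

Each returned pair can then be used to slice both the force and moment signals and the corresponding marker trajectories.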

2.2.3 Sign correction and synchronization

In Dataset A, the participants walked back and forth along the pathway during their trials. Due to walking in opposite directions, the sign of the signal produced by the force platforms in the anterior–posterior and medial–lateral planes changed with the walking direction. Also, in both datasets, the sign of the GRF signal in the medial–lateral plane from the left and right foot had opposite directions. A similar pattern was also observed for GRM signals. To avoid confusing the neural network during training, it was ensured that all force and moment signals in the same plane had the same sign regardless of the foot or walking direction.

While training the network, it was necessary to feed the network with the force or moment segments along with their corresponding segment of the trajectories. This raised a challenge for Dataset A because there was no protocol set to allocate one force platform to either the left or right foot. As a result, the authors had to analyze the signal pattern to couple a particular foot’s trajectory to its corresponding force platform signal. The problem was simpler for Dataset B because all the participants were instructed to step on the first force platform using their right foot, and all other cases were discarded.

It is also noticed from Fig. 3 that during synchronization, when a particular foot is on the force platform and is producing force and moment signals, its corresponding trajectories are flat since the movement of the foot is very small while it is in contact with the ground. However, simultaneously, the other foot is in the air (swing phase) and moving along the anterior–posterior plane. As a result, the trajectories of this foot have variations with respect to time and can be correlated with the force and moment being produced by the other foot. So, to predict the right foot’s GRFs and GRMs, the left foot’s trajectories were utilized, and vice-versa.

Fig. 3 Synchronizing the force and moment signals with the marker trajectories. During the right foot stance phase, the marker trajectories from the left foot can correlate with the right foot’s force and moment signals. However, the force and moment signals from the left foot are absent during this phase because the left foot is not in contact with the ground

2.2.4 Normalization

Before moving on to the network training process, ensuring all the segments had the same length was necessary. This was accomplished by time normalizing each gait cycle. The stance phase of the gait cycles was resampled to 1024 data points for each segment. The amplitude of the signals also needed normalization for faster convergence of the neural network. A range normalization technique was utilized, dividing each segment by the difference between its maximum and minimum amplitude. After the normalization process, all the segments had an amplitude ranging between 0 and 1.
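The two normalization steps can be sketched as below. Linear interpolation for the resampling is an assumption, as is subtracting the segment minimum before dividing by the range; the latter is needed for the result to span 0 to 1 as the text describes. The function names are hypothetical.

```python
import numpy as np

def time_normalize(segment, n_points=1024):
    """Resample a 1D segment to a fixed length by linear interpolation."""
    segment = np.asarray(segment, dtype=float)
    old_axis = np.linspace(0.0, 1.0, num=len(segment))
    new_axis = np.linspace(0.0, 1.0, num=n_points)
    return np.interp(new_axis, old_axis, segment)

def range_normalize(segment):
    """Scale a segment to [0, 1]; the minimum is subtracted first (assumption)."""
    span = segment.max() - segment.min()
    if span == 0:
        return np.zeros_like(segment)
    return (segment - segment.min()) / span

# Synthetic stance-phase signal of arbitrary length and amplitude.
stance = 700.0 * np.sin(np.linspace(0.0, np.pi, 137)) - 20.0
normalized = range_normalize(time_normalize(stance))
```

Keeping the per-segment minimum and range would be necessary to map predictions back to physical units, although the text does not describe that step.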

2.3 The proposed network architecture

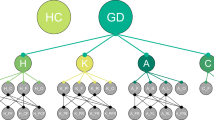

The authors have developed an Attention Guided MultiResUNet (AG-MultiResUNet) [33] (Fig. 4a) for the prediction of GRF and GRM signals from motion trajectories. The network is developed by modifying a 5-layer MultiResUNet architecture and introducing attention-guided residual connections. The model takes a 5-channel input and produces a single-channel output. The number of filters in the first layer is set to \(16\) and doubles at every subsequent layer. The network also utilizes the Deep Supervision [37] concept to monitor the losses and train the model more effectively. The main components of the model, namely the MultiRes Block, the Attention Gate, and Deep Supervision, are discussed in detail below.

2.3.1 Multi-residual block

MultiResUNet was proposed by Ibtehaz et al. [33] and was designed to improve the classical UNet [32] architecture. In the MultiResUNet network, the sequence of two convolution layers is replaced by the Multi-Residual Block or MultiRes block shown in Fig. 4b. The MultiRes block is similar to the Inception block [38]. However, instead of using bigger and computationally expensive kernels in parallel, it uses multiple consecutive smaller kernels with increasing filters and residual connections.

The MultiRes block also has a width expansion parameter \(W\) that controls the number of filters of the convolutional layers in that block. The value of \(W\) can be computed using the following equation

\[W = a \times U \tag{1}\]

where \(U\) is the number of filters in the corresponding layers of the original UNet architecture. The authors of the MultiResUNet paper set the value of the “\(a\)” parameter to \(1.67\) to keep the number of trainable parameters similar to UNet. In this paper, we have also utilized this value for “\(a\)”. For the three successive convolutional layers in a MultiRes block, we have assigned \([W/6]\), \([W/3]\), and \([W/2]\) number of filters, respectively, similar to the original paper.
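As a small worked example, the filter counts per MultiRes block can be computed as below. The sketch assumes \(W = a \times U\), as in the MultiResUNet paper, and reads the brackets \([\cdot]\) as truncation to an integer; the function name `multires_filters` is hypothetical.

```python
def multires_filters(u, a=1.67):
    """Filter counts for the three successive convolutions in a MultiRes block."""
    w = a * u
    # Integer truncation is an assumption about how [.] is meant in the text.
    return int(w / 6), int(w / 3), int(w / 2)

# Five levels starting from 16 filters and doubling, as described for the network.
for u in [16, 32, 64, 128, 256]:
    print(u, multires_filters(u))
```

For \(U = 16\) this gives \(W = 26.72\), so the three layers would receive 4, 8, and 13 filters under the truncation assumption.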

2.3.2 Attention guided residual path

The main novelty of our proposed model lies in the modified Residual path (or ResPath) used in this architecture by implementing guided attention [34] on the originally proposed ResPath [33] for MultiResUNet. ResPath was implemented to reduce the semantic gap between the encoder and decoder feature maps by replacing direct skip connection with some convolutional operations on the feature maps propagating from the encoder layers. ResPath primarily focuses on gradually reducing the intensity of semantic gaps between encoder and decoder feature maps. Therefore, the number of convolutional blocks is gradually reduced for ResPaths at deeper levels. For this study, the five-layer AG-MultiResUNet had 5, 4, 3, 2, and 1 convolutional blocks along the ResPaths. To match the size of the feature maps in various levels, the number of filters for the convolutional blocks gradually varied from 16, 32, 64, 128, to 256, respectively, in five ResPaths, from shallower to deeper levels. On the other hand, to capture contextual information from a sufficiently large section of a signal, the feature map is continuously down-sampled in standard 1D-CNN networks, also in ResPaths. However, not all signal portions contain valuable information that helps the network learn signal patterns. The attention mechanism [39] assigns weights to the feature map so that the more important sections of the signal get higher priority. The proposed model utilizes the Attention Gate (AG) block (Fig. 4c) proposed in [34] on the output from the Residual Path. The attention coefficient \(\alpha\) identifies salient signal regions and prunes feature responses to preserve only the activations relevant to the task given to the model.
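To make the gating operation concrete, the following NumPy sketch applies an additive attention gate to a 1D feature map. It is a simplified illustration of the mechanism in [34], not the trained block from this study: the 1x1 convolutions are written as matrix products, biases and normalization layers are omitted, the gating signal is assumed to already have the same length as the ResPath output, and all weights are random placeholders.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def attention_gate(x, g, w_x, w_g, psi):
    """Additive attention gate on 1D feature maps.

    x: ResPath features, shape (channels_x, length)
    g: gating features from the decoder, shape (channels_g, length)
    w_x, w_g: 1x1 convolution weights, shapes (inter, channels_x), (inter, channels_g)
    psi: 1x1 convolution weights mapping to one channel, shape (1, inter)
    """
    joint = np.maximum(w_x @ x + w_g @ g, 0.0)  # ReLU of the summed projections
    alpha = sigmoid(psi @ joint)                # attention coefficients between 0 and 1
    return x * alpha, alpha                     # alpha is broadcast over the channels

# Random placeholder inputs and weights (hypothetical sizes).
rng = np.random.default_rng(0)
x = rng.normal(size=(16, 1024))
g = rng.normal(size=(32, 1024))
w_x = rng.normal(size=(8, 16))
w_g = rng.normal(size=(8, 32))
psi = rng.normal(size=(1, 8))
gated, alpha = attention_gate(x, g, w_x, w_g, psi)
```

Positions where `alpha` is close to zero are suppressed in `gated`, which is the pruning behavior the attention coefficient is meant to provide.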

2.3.3 Deep supervision

To monitor auxiliary losses from deeper layers, Deep Supervision (\(\mathcal{L}\)) has been used in the proposed network. Deep Supervision has proven itself to be capable of improving model training performance in segmentation tasks [40]. The proposed network has employed additional loss operations on each deeply supervised encoder output. Generally, for Deep Supervision, the weights of the premature outputs from the deeper layers are set lower than the final output [41]. For example, the loss weight array for the five-layer AG-MultiResUNet proposed in this study is set as [1.0, 0.9, 0.8, 0.7, 0.6], i.e., the final output is provided with the highest weight of 1.0 and is gradually decreased afterward for deeper layers.
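The weighting scheme can be illustrated with a short sketch. Combining the per-output losses as a weighted sum is an assumption; the text gives only the weights. The loss values below are made up for illustration, and the function name is hypothetical.

```python
def deep_supervision_loss(losses, weights=(1.0, 0.9, 0.8, 0.7, 0.6)):
    """Weighted sum of the final-output loss and the auxiliary losses."""
    if len(losses) != len(weights):
        raise ValueError("one loss value is expected per supervised output")
    return sum(w * l for w, l in zip(weights, losses))

# Hypothetical per-output loss values, ordered from the final output to the deepest one.
example_losses = [0.010, 0.020, 0.030, 0.040, 0.050]
total = deep_supervision_loss(example_losses)
```

Deep learning frameworks typically expose the same idea through per-output loss weights, so the sketch mainly shows how the array in the text would be interpreted.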

2.4 Experiments

The authors investigated two datasets in this paper and performed a cross-dataset investigation. The results section can be divided into three subsections. First, the investigation on Dataset A was performed. After that, the investigation on Dataset B was performed. Finally, a cross-dataset investigation was performed. For any machine learning algorithm, checking the model’s generalizability by validating it with out-of-sample data is important. For this purpose, the cross-dataset validation experiments were done alongside the individual dataset experiments. In the cross-dataset experiment, the models were trained on the data from Dataset A and tested on Dataset B. At first, the authors tried experimenting without any fine-tuning, which did not work. This is because the marker placements in the two datasets are similar but do not match completely. Due to this variation in placement, the trajectories of these markers also vary significantly across the datasets. So, a model trained on one dataset fails on the other because the input signals differ. To solve this issue, fine-tuning was done during the cross-dataset experiment. From the experimentation, it was found that fine-tuning the model on a small portion of the second dataset was enough for the model to perform well on the latter dataset. This is similar to the transfer learning concept in machine learning: the network was trained on Dataset A, fine-tuned using \(20\%\) of the data from Dataset B, and tested on the remaining 80% of the data in Dataset B.
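The 20%/80% partition of Dataset B can be sketched as a shuffled index split. Splitting at random over individual segments is an assumption; the text does not say whether the partition was made per segment, per trial, or per subject. The function name `finetune_split` is hypothetical.

```python
import random

def finetune_split(n_segments, finetune_fraction=0.2, seed=0):
    """Shuffle segment indices and split them into fine-tuning and test sets."""
    indices = list(range(n_segments))
    random.Random(seed).shuffle(indices)
    n_finetune = int(round(finetune_fraction * n_segments))
    return indices[:n_finetune], indices[n_finetune:]

# Hypothetical number of Dataset B segments.
finetune_idx, test_idx = finetune_split(1000)
```

A subject-wise split would be the stricter choice if the goal is to estimate performance on unseen users.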

The 3D GRF and 3D GRM signals were predicted from the marker trajectories in each experiment and compared with their corresponding ground-truth signals. The three GRF components were denoted as Medial–Lateral (\({F}_{x}\)), Anterior–Posterior (\({F}_{y}\)) and Vertical (\({F}_{z}\)). The three GRM components were denoted as Sagittal (\({M}_{x}\)), Frontal (\({M}_{y}\)), and Transverse (\({M}_{z}\)). For all the experiments, the results of the proposed method were compared with the equivalent UNet and MultiResUNet architectures. The experiments are summarized in Fig. 5.

Fig. 5 Visualization of the three experiments done in this paper. First, a fivefold cross-validation was performed on Dataset A and Dataset B for all the force and moment components. After that, a cross-dataset validation was performed where the model was trained on Dataset A and then fine-tuned and tested on Dataset B

2.5 Loss functions

Two different loss functions were utilized to train the network. At first, the network was trained using the Mean Squared Error (\(MSE\)) loss defined in Eq. (2). The Adam optimizer was used to optimize this loss with a learning rate of \(0.001\).

\[MSE = \frac{1}{n}\sum_{i=1}^{n}{\left({y}_{i}-{x}_{i}\right)}^{2} \tag{2}\]

where \({y}_{i}\) are the predicted values, \({x}_{i}\) are the observed values, and \(n\) is the total number of data points.

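After the first training phase, the model was fine-tuned by training it again using a loss based on the Correlation Coefficient (\(r\)) defined in Eq. (3).

\[r = \frac{\sum_{i=1}^{n}\left({x}_{i}-\overline{x}\right)\left({y}_{i}-\overline{y}\right)}{\sqrt{\sum_{i=1}^{n}{\left({x}_{i}-\overline{x}\right)}^{2}\sum_{i=1}^{n}{\left({y}_{i}-\overline{y}\right)}^{2}}} \tag{3}\]

where \(r\) is the correlation coefficient, \({x}_{i}\) are the observed values, \(\overline{x }\) is the mean of the observed values, \({y}_{i}\) are the predicted values, and \(\overline{y }\) is the mean of the predicted values.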
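The two losses can be sketched in NumPy as follows. The MSE follows its standard definition; turning the correlation coefficient into a quantity to minimize as \(1 - r\) is an assumption, since the text does not give the exact form of the loss, and the small `eps` term is added only to avoid division by zero.

```python
import numpy as np

def mse_loss(x, y):
    """Mean squared error between observed x and predicted y."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    return np.mean((y - x) ** 2)

def correlation_loss(x, y, eps=1e-8):
    """1 - r, so that a perfect positive correlation gives zero loss (assumption)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    dx, dy = x - x.mean(), y - y.mean()
    r = np.sum(dx * dy) / (np.sqrt(np.sum(dx ** 2) * np.sum(dy ** 2)) + eps)
    return 1.0 - r

# A prediction with the right shape but the wrong scale and offset.
observed = np.sin(np.linspace(0.0, np.pi, 1024))
predicted = 0.9 * observed + 0.05
```

For this example the correlation loss is close to zero while the MSE is not, which suggests why the two losses are complementary: one constrains amplitude, the other waveform shape.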

For this second phase, the Adam optimizer was used with a learning rate of \(0.0003\). For both training phases, the maximum number of epochs was set to 300, and training continued as long as the validation loss kept decreasing, with a tolerance (patience) of \(30\) epochs.
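The stopping rule can be read as standard patience-based early stopping. The sketch below assumes that interpretation and operates on a precomputed list of validation losses rather than on an actual training loop; the function name and the example curve are hypothetical.

```python
def early_stopping_epochs(val_losses, max_epochs=300, patience=30):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch  # no improvement for `patience` epochs
    return best_epoch, min(len(val_losses), max_epochs) - 1

# Hypothetical validation curve: improves for 50 epochs, then plateaus.
curve = [1.0 / (e + 1) for e in range(50)] + [0.05] * 250
best_epoch, stop_epoch = early_stopping_epochs(curve)
```

In practice the weights from `best_epoch` would typically be restored before evaluation, although the text does not say whether this was done.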

2.6 Evaluation criterion

The goal of the work is to predict GRF and GRM signals that are as close as possible to the ground truth signals from the force platforms, and thus some metrics to measure the similarity between the predicted and ground truth signals were needed. Related works in this domain have used the correlation coefficient (\(r\)) as the preferred evaluation criterion, so it was also used here to allow comparison with the existing literature. Along with the correlation coefficient, the Mean Absolute Error (\(MAE\)) and Root Mean Squared Error (\(RMSE\)) metrics were also measured for better evaluation. Equation (3) defines the Correlation Coefficient, while Eqs. (4–5) define the other two metrics below.

\[MAE = \frac{1}{n}\sum_{i=1}^{n}\left|{y}_{i}-{x}_{i}\right| \tag{4}\]

\[RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}{\left({y}_{i}-{x}_{i}\right)}^{2}} \tag{5}\]

Here \({x}_{i}\) are the observed values, \({y}_{i}\) are the predicted values, and \(n\) is the total number of data points; \(\overline{x }\) and \(\overline{y }\) in Eq. (3) are the means of the observed and predicted values, respectively.
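A compact way to compute the three metrics for one predicted segment is sketched below, using NumPy's `corrcoef` for the correlation coefficient. The function name `evaluate` and the toy signals are illustrative only.

```python
import numpy as np

def evaluate(observed, predicted):
    """Return MAE, RMSE, and Pearson r between two 1D signals."""
    x = np.asarray(observed, dtype=float)
    y = np.asarray(predicted, dtype=float)
    mae = np.mean(np.abs(y - x))
    rmse = np.sqrt(np.mean((y - x) ** 2))
    r = np.corrcoef(x, y)[0, 1]
    return {"MAE": mae, "RMSE": rmse, "r": r}

# Toy example: the prediction has a constant offset of 0.5.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([0.5, 1.5, 2.5, 3.5])
metrics = evaluate(x, y)
```

The toy example shows why the error metrics are reported alongside \(r\): a constant offset leaves the correlation at 1 but produces a non-zero \(MAE\) and \(RMSE\).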

2.7 Validation technique

Cross-validation is necessary to estimate the effectiveness of any machine learning algorithm’s performance on an independent dataset. This paper utilized a fivefold cross-validation for evaluating performance on both datasets. For each training and testing iteration, 20% of the training data was used for validation. All the metrics provided in the result section were generated through the 5-Fold cross-validation process.
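The fold structure can be sketched with the standard library alone. The sketch assumes a random split over individual samples, with 20% of each fold's training portion held out for validation; whether the folds were formed per segment or per subject is not stated in the text, and the function name is hypothetical.

```python
import random

def five_fold_splits(n_samples, n_folds=5, val_fraction=0.2, seed=0):
    """Yield (train, val, test) index lists for each fold."""
    indices = list(range(n_samples))
    rng = random.Random(seed)
    rng.shuffle(indices)
    folds = [indices[i::n_folds] for i in range(n_folds)]
    for k in range(n_folds):
        test = folds[k]
        rest = [i for j, fold in enumerate(folds) if j != k for i in fold]
        n_val = int(round(val_fraction * len(rest)))
        yield rest[n_val:], rest[:n_val], test

# Hypothetical dataset of 100 segments.
splits = list(five_fold_splits(100))
```

With 100 samples, each fold tests on 20 samples, validates on 16, and trains on the remaining 64, and every sample appears in exactly one test set.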

3 Results

In this paper, the authors investigated two different datasets and performed a cross-dataset investigation. For all the experiments, the results of the proposed method are compared with the equivalent baseline UNet and MultiResUNet architectures. The results for Dataset A are shown in Table 2. This dataset is the more challenging of the two datasets evaluated because it has more subjects (50) and fewer trials per subject. As a result, there is a large variability in the data. Table 2 reports the \(MAE\), \(RMSE\), and \(R\) for all the experiments done using UNet, MultiResUNet, and AG-MultiResUNet. Among all the models, AG-MultiResUNet is the best overall performer for predicting force. For predicting GRMs, the performance is lower compared to GRFs, with the Sagittal component being the most challenging. This outcome is because the GRM signals vary significantly more across trials for a particular subject than the GRF signals do.

The results for Dataset B are shown in Table 3. In this dataset, 16 subjects walked at \(7\) different speeds. Visual inspection of the data showed that the signal quality of this dataset is much better than that of Dataset A, which is also reflected in the results. Table 3 reports the \(MAE\), \(RMSE\), and \(R\) for all the experiments done using UNet, MultiResUNet, and AG-MultiResUNet. For predicting force, all the models performed very well, with an average \(R > 0.98\), and their performances were very close to one another. The UNet model achieved slightly higher performance in predicting GRFs but did not perform as well in predicting GRMs. For GRMs, the performance was slightly lower than for GRFs, with the transverse component being the most challenging. The networks showed broadly similar performance, with AG-MultiResUNet performing slightly better than the others in most cases.

The results from the cross-dataset validation experiments are shown in Table 4. For predicting force, all the models performed well, with an average \(R > 0.95\). AG-MultiResUNet achieved slightly higher performance in predicting GRFs. For GRMs, the performance was slightly lower than for GRFs, with the transverse component being the most challenging.

4 Discussion

For any signal synthesis problem, a qualitative visualization of the results is often easier to interpret than the quantitative evaluation metrics alone. The predictions from the models and their corresponding ground truth are shown in Fig. 6. The blue lines represent the ground truth signal, and the orange lines represent the prediction. The shaded regions represent the Standard Deviation (SD). All the results shown are from the proposed AG-MultiResUNet model. It can be seen from Fig. 6 that the model performs well in mimicking the ground truth for all the different signals. For Dataset B, the results are near perfect. In some cases, the model performance is compromised while predicting the sagittal and transverse components of the GRM. However, the overall performance is good.

The reason behind the lower performance is the intra-subject variability of these components. In Fig. 7, ground truth signals from the two lowest-performing cases are visualized. Each panel contains signals from different trials of a single subject. Fig. 7a shows the sagittal component of the GRM for a particular subject, and considerable variability can be observed among the trials. Due to this variability in the ground truth signals, our model could not perform equally well for this component. The same can be said for the transverse GRM component for Dataset B, shown in Fig. 7b. In Dataset B, there are more trials per subject than in Dataset A, and the variability among the trials is clear from the figure.
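The intra-subject variability discussed above can also be quantified rather than only inspected visually. The sketch below is one possible way of doing so and is not part of the original analysis: the function `trial_variability`, the use of time-normalized trials of equal length, and the normalization of the mean SD by the range of the mean curve are all assumptions made for illustration.

```python
import numpy as np


def trial_variability(trials):
    """Summarise the spread across trials of one subject.

    Hypothetical helper. `trials` has shape (n_trials, n_timepoints) and is
    assumed to hold time-normalised signals of one GRF or GRM component.
    Returns the mean curve, the pointwise SD curve, and a scalar score:
    the mean SD divided by the range of the mean curve (assumed non-zero).
    """
    trials = np.asarray(trials, dtype=float)
    if trials.ndim != 2 or trials.shape[0] < 2:
        raise ValueError("need a 2-D array with at least two trials")

    mean_curve = trials.mean(axis=0)
    sd_curve = trials.std(axis=0, ddof=1)  # sample SD across trials
    signal_range = mean_curve.max() - mean_curve.min()
    score = sd_curve.mean() / signal_range
    return mean_curve, sd_curve, float(score)


# Synthetic example (not real data): the same half-sine shape with
# small versus large amplitude differences between three trials.
t = np.linspace(0.0, 1.0, 101)
base = np.sin(np.pi * t)
consistent_trials = np.array([0.98, 1.00, 1.02])[:, None] * base
variable_trials = np.array([0.50, 1.00, 1.50])[:, None] * base

_, sd_consistent, score_consistent = trial_variability(consistent_trials)
_, sd_variable, score_variable = trial_variability(variable_trials)
```

A component with a high score has ground truth curves that differ substantially between trials of the same subject, which limits how closely any single predicted curve can match all of them.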

Table 5 compares the results with the existing literature. The proposed approach outperforms almost all of the existing works for regular walking. Oh et al. [25] achieved better results for some force and moment components, but their sample size was very small (5 subjects) compared to the one used in this study (66 subjects). Mundt et al. [27] achieved slightly better results than the proposed network for some force components, but they did not predict moment signals and used many markers (28) placed on the whole body. To the best of the authors’ knowledge, the proposed approach uses the lowest number of markers to predict 3D GRF&M while producing state-of-the-art results.

5 Limitations

Although the proposed method worked very well on the two individual datasets on which it was tested, some challenges were faced while performing the cross-dataset validation. When the network was trained on one of the datasets and tested on the other, fine-tuning was required, and without it the results were poor. An investigation of the data quality of the datasets revealed that the marker trajectories did not match. Dataset B followed a slightly different protocol for placing the markers on the participant’s body [36], so the positions of the markers were not the same in the two datasets. The motion capture systems used for the two datasets were also very different. Together, these effects resulted in very different signal qualities, so a model trained on one dataset could not perform equally well on the other. As discussed in the previous section, this problem was solved by fine-tuning the model. Another way of addressing this issue is to keep the marker positions constant across trials. Since the approach relies on markers placed on the foot, uniformity can be achieved by permanently fixing the markers to the shoes used when collecting data from different users. For this to work, however, the model must first be trained on marker-equipped shoes of different sizes. Once the model generalizes across shoe sizes, the pre-equipped shoes can be provided to new users, who can then use this framework without fine-tuning because the marker positions will not change.

6 Conclusion and future works

Ground reaction forces and moments can be used clinically for the early diagnosis of many health conditions. This work proposed a low-cost alternative for predicting ground reaction force and moment from optical motion capture data. The proposed system can work with only 5 markers placed on the subject’s foot, which makes the approach much more convenient than other techniques that require full-body markers. Despite using very few markers, this approach outperforms existing works that use full-body markers. A detailed analysis of the processing required to make the marker trajectories and force platform signals suitable for a neural network model has been provided. A novel network architecture that outperformed the classical UNet architecture with a similar number of parameters has also been proposed. The performance of the proposed network has been verified using two datasets, and the system provided a very reliable prediction of GRFs and GRMs in both. The system can be useful for medical and clinical diagnosis where GRFs and GRMs are critical but setting up and maintaining a force platform is inconvenient or infeasible. In these situations, the system can be easily implemented to obtain a good estimate of GRF and GRM.

In the future, we would like to address one of the limitations of our work, which is its dependence on the marker positions. We would like to develop a generalized framework that will not rely on markers and will be able to generate motion trajectories from video data only. Several recent research works in the camera-based motion tracking and pose estimation domain could be utilized for this purpose. We would also like to investigate generative algorithms as a substitute for the UNet-based architecture used in this work. Hopefully, our contributions will pave the way for a robust camera-based GRF and GRM measurement framework.

Data availability

Data used in this study can be made available on reasonable request to the corresponding author.

References

1. Nüesch C, Overberg J-A, Schwameder H, Pagenstert G, Mündermann A (2018) Repeatability of spatiotemporal, plantar pressure and force parameters during treadmill walking and running. Gait Posture 62:117–123. https://doi.org/10.1016/j.gaitpost.2018.03.017

2. Ancillao A (2018) Stereophotogrammetry in functional evaluation: history and modern protocols. In: Ancillao A (ed) Modern functional evaluation methods for muscle strength and gait analysis. Springer, Cham, pp 1–29. https://doi.org/10.1007/978-3-319-67437-7_1

3. Schniepp R, Möhwald K, Wuehr M (2019) Clinical and automated gait analysis in patients with vestibular, cerebellar, and functional gait disorders: perspectives and limitations. J Neurol 266(1):118–122. https://doi.org/10.1007/s00415-019-09378-x

4. Zeng W, Wang C (2015) Classification of neurodegenerative diseases using gait dynamics via deterministic learning. Inf Sci 317:246–258. https://doi.org/10.1016/j.ins.2015.04.047

5. Kwon SB, Ku Y, Han H-S, Lee MC, Kim HC, Ro DH (2020) A machine learning-based diagnostic model associated with knee osteoarthritis severity. Sci Rep. https://doi.org/10.1038/s41598-020-72941-4

6. Robert-Lachaine X, Mecheri H, Larue C, Plamondon A (2017) Accuracy and repeatability of single-pose calibration of inertial measurement units for whole-body motion analysis. Gait Posture 54:80–86. https://doi.org/10.1016/j.gaitpost.2017.02.029

7. Tao W, Liu T, Zheng R, Feng H (2012) Gait analysis using wearable sensors. Sensors. https://doi.org/10.3390/s120202255

8. Shahabpoor E, Pavic A (2017) Measurement of walking ground reactions in real-life environments: a systematic review of techniques and technologies. Sensors. https://doi.org/10.3390/s17092085

9. Ancillao A, van der Krogt MM, Buizer AI, Witbreuk MM, Cappa P, Harlaar J (2017) Analysis of gait patterns pre- and post-single event multilevel surgery in children with cerebral palsy by means of offset-wise movement analysis profile and linear fit method. Hum Mov Sci 55:145–155. https://doi.org/10.1016/j.humov.2017.08.005

10. Ancillao A, Galli M, Rigoldi C, Albertini G (2014) Linear correlation between fractal dimension of surface EMG signal from Rectus Femoris and height of vertical jump. Chaos Solitons Fractals 66:120–126. https://doi.org/10.1016/j.chaos.2014.06.004

11. Charbonnier C, Chagué S, Ponzoni M, Bernardoni M, Hoffmeyer P, Christofilopoulos P (2014) Sexual activity after total hip arthroplasty: a motion capture study. J Arthroplasty 29(3):640–647. https://doi.org/10.1016/j.arth.2013.07.043

12. Ancillao A, Savastano B, Galli M, Albertini G (2017) Three dimensional motion capture applied to violin playing: a study on feasibility and characterization of the motor strategy. Comput Methods Programs Biomed 149:19–27. https://doi.org/10.1016/j.cmpb.2017.07.005

13. Owings TM, Grabiner MD (2003) Measuring step kinematic variability on an instrumented treadmill: how many steps are enough? J Biomech 36(8):1215–1218. https://doi.org/10.1016/S0021-9290(03)00108-8

14. van Gelder L, Booth ATC, van de Port I, Buizer AI, Harlaar J, van der Krogt MM (2017) Real-time feedback to improve gait in children with cerebral palsy. Gait Posture 52:76–82. https://doi.org/10.1016/j.gaitpost.2016.11.021

15. Senden R, Grimm B, Heyligers IC, Savelberg HHCM, Meijer K (2009) Acceleration-based gait test for healthy subjects: reliability and reference data. Gait Posture 30(2):192–196. https://doi.org/10.1016/j.gaitpost.2009.04.008

16. Chau T, Young S, Redekop S (2005) Managing variability in the summary and comparison of gait data. J Neuroeng Rehabil 2(1):22. https://doi.org/10.1186/1743-0003-2-22

17. van der Krogt MM, Sloot LH, Harlaar J (2014) Overground versus self-paced treadmill walking in a virtual environment in children with cerebral palsy. Gait Posture 40(4):587–593. https://doi.org/10.1016/j.gaitpost.2014.07.003

18. Xiang Y, Arora JS, Abdel-Malek K (2011) Optimization-based prediction of asymmetric human gait. J Biomech 44(4):683–693. https://doi.org/10.1016/j.jbiomech.2010.10.045

19. Ancillao A, Tedesco S, Barton J, O’Flynn B (2018) Indirect measurement of ground reaction forces and moments by means of wearable inertial sensors: a systematic review. Sensors. https://doi.org/10.3390/s18082564

20. Davis BL, Perry JE, Neth DC, Waters KC (1998) A device for simultaneous measurement of pressure and shear force distribution on the plantar surface of the foot. J Appl Biomech 14(1):93–104. https://doi.org/10.1123/jab.14.1.93

21. Razian MA, Pepper MG (2003) Design, development, and characteristics of an in-shoe triaxial pressure measurement transducer utilizing a single element of piezoelectric copolymer film. IEEE Trans Neural Syst Rehabil Eng 11(3):288–293. https://doi.org/10.1109/TNSRE.2003.818185

22. Faber GS, Kingma I, Martin Schepers H, Veltink PH, van Dieën JH (2010) Determination of joint moments with instrumented force shoes in a variety of tasks. J Biomech 43(14):2848–2854. https://doi.org/10.1016/j.jbiomech.2010.06.005

23. Liedtke C, Fokkenrood SAW, Menger JT, van der Kooij H, Veltink PH (2007) Evaluation of instrumented shoes for ambulatory assessment of ground reaction forces. Gait Posture 26(1):39–47. https://doi.org/10.1016/j.gaitpost.2006.07.017

24. Fong DT-P, Chan Y-Y, Hong Y, Yung PS-H, Fung K-Y, Chan K-M (2008) Estimating the complete ground reaction forces with pressure insoles in walking. J Biomech 41(11):2597–2601. https://doi.org/10.1016/j.jbiomech.2008.05.007

25. Oh SE, Choi A, Mun JH (2013) Prediction of ground reaction forces during gait based on kinematics and a neural network model. J Biomech 46(14):2372–2380. https://doi.org/10.1016/j.jbiomech.2013.07.036

26. Fluit R, Andersen MS, Kolk S, Verdonschot N, Koopman HFJM (2014) Prediction of ground reaction forces and moments during various activities of daily living. J Biomech 47(10):2321–2329. https://doi.org/10.1016/j.jbiomech.2014.04.030

27. Mundt M, Koeppe A, David S, Bamer F, Potthast W, Markert B (2020) Prediction of ground reaction force and joint moments based on optical motion capture data during gait. Med Eng Phys 86:29–34. https://doi.org/10.1016/j.medengphy.2020.10.001

28. Choi A, Lee J-M, Mun JH (2013) Ground reaction forces predicted by using artificial neural network during asymmetric movements. Int J Precis Eng Manuf 14(3):475–483. https://doi.org/10.1007/s12541-013-0064-4

29. Ferber R, Osis ST, Hicks JL, Delp SL (2016) Gait biomechanics in the era of data science. J Biomech 49(16):3759–3761. https://doi.org/10.1016/j.jbiomech.2016.10.033

30. Leporace G, Batista LA, Metsavaht L, Nadal J (2015) Residual analysis of ground reaction forces simulation during gait using neural networks with different configurations. In: 2015 37th annual international conference of the IEEE engineering in medicine and biology society (EMBC), pp 2812–2815. https://doi.org/10.1109/EMBC.2015.7318976

31. Eltoukhy M, Kuenze C, Andersen MS, Oh J, Signorile J (2017) Prediction of ground reaction forces for Parkinson’s disease patients using a kinect-driven musculoskeletal gait analysis model. Med Eng Phys 50:75–82. https://doi.org/10.1016/j.medengphy.2017.10.004

32. Ronneberger O, Fischer P, Brox T (2015) U-net: convolutional networks for biomedical image segmentation. arXiv, arXiv:1505.04597. https://doi.org/10.48550/arXiv.1505.04597

33. Ibtehaz N, Rahman MS (2020) MultiResUNet: rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw 121:74–87. https://doi.org/10.1016/j.neunet.2019.08.025

34. Oktay O et al (2018) Attention U-Net: learning where to look for the pancreas. arXiv, arXiv:1804.03999. https://doi.org/10.48550/arXiv.1804.03999

35. Schreiber C, Moissenet F (2019) A multimodal dataset of human gait at different walking speeds established on injury-free adult participants. Sci Data. https://doi.org/10.1038/s41597-019-0124-4

36. Moreira L, Figueiredo J, Fonseca P, Vilas-Boas JP, Santos CP (2021) Lower limb kinematic, kinetic, and EMG data from young healthy humans during walking at controlled speeds. Sci Data. https://doi.org/10.1038/s41597-021-00881-3

37. Wang L, Lee C-Y, Tu Z, Lazebnik S (2015) Training deeper convolutional networks with deep supervision. arXiv, arXiv:1505.02496. https://doi.org/10.48550/arXiv.1505.02496

38. Szegedy C et al (2014) Going deeper with convolutions. arXiv, arXiv:1409.4842. https://doi.org/10.48550/arXiv.1409.4842

39. Vaswani A et al (2017) Attention is all you need. In: Advances in neural information processing systems, vol 30. Accessed 05 June 2022. https://proceedings.neurips.cc/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html

40. Improving CT image tumor segmentation through deep supervision and attentional gates. Frontiers in Robotics and AI. https://www.frontiersin.org/articles/10.3389/frobt.2020.00106/full. Accessed 05 June 2022

41. Ibtehaz N, Rahman MS (2020) PPG2ABP: translating photoplethysmogram (PPG) signals to arterial blood pressure (ABP) waveforms using fully convolutional neural networks. https://doi.org/10.48550/arXiv.2005.01669

42. Johnson WR, Mian A, Donnelly CJ, Lloyd D, Alderson J (2018) Predicting athlete ground reaction forces and moments from motion capture. Med Biol Eng Comput 56(10):1781–1792. https://doi.org/10.1007/s11517-018-1802-7

Acknowledgements

This work was supported in part by the Qatar National Research Fund under Grant NPRP12S-0227-190164 and in part by funding from Prince Sattam Bin Abdulaziz University, Project No. (PSAU/2023/R/1444). The statements made herein are solely the responsibility of the authors. Open-access publication is supported by Qatar National Library.

Funding

Open Access funding provided by the Qatar National Library.

Author information

Contributions

MAAF: Conceptualization, Methodology, Software, Writing—Original draft preparation, Writing—Reviewing and Editing. SM: Conceptualization, Methodology, Software, Validation, Writing—Original draft preparation. MEHC: Conceptualization, Methodology, Supervision, Funding Acquisition, Writing—Original draft preparation, Writing—Reviewing and Editing. AK: Validation, Methodology, Software, Writing—Original draft preparation, Writing—Reviewing and Editing. MUA: Validation, Supervision, Writing—Original draft preparation, Writing—Reviewing and Editing. AA: Validation, Supervision, Funding Acquisition, Writing—Reviewing and Editing.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

The data used in this research were drawn from two open-access datasets, which were created following standard protocols.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Faisal, M.A.A., Mahmud, S., Chowdhury, M.E.H. et al. Robust and novel attention guided MultiResUnet model for 3D ground reaction force and moment prediction from foot kinematics. Neural Comput & Applic 36, 1105–1121 (2024). https://doi.org/10.1007/s00521-023-09081-z

DOI: https://doi.org/10.1007/s00521-023-09081-z