Abstract

This work proposes a novel variant of the reptile search algorithm (RSA) for solving optimization problems, called the reinforcement reptile search algorithm (RLRSA). The basic RSA performs exploration through high walking and belly walking in the first half of the search process, while exploitation is performed through the hunting phase in the second half. Therefore, the algorithm cannot balance exploration and exploitation, and this behavior leads to trapping in local optima. A novel learning method based on reinforcement learning and the Q-learning model is proposed to balance the exploitation and exploration phases when the solution starts deteriorating. Furthermore, random opposition-based learning (ROBL) is introduced to increase the diversity of the population and thus enhance the obtained solutions. Twenty-three typical benchmark functions, including unimodal, multimodal, and fixed-dimension multimodal functions, were employed to assess the performance of RLRSA. According to the findings, RLRSA surpasses the standard RSA in the majority of the benchmark functions evaluated, specifically in 12 out of 13 unimodal functions, 9 out of 13 multimodal functions, and 8 out of 10 fixed multimodal functions. Furthermore, RLRSA is applied to solve the pressure vessel and tension/compression spring design problems, where it found the solutions with the minimum cost. The experimental results reveal the superiority of RLRSA over RSA and other optimization methods in the literature.


1 Introduction

Optimization techniques fall into two main categories: deterministic and stochastic methods. Deterministic techniques are partitioned into linear and nonlinear techniques [1]. These conventional methods use gradient information to navigate through the search space toward the best possible solution [2, 3]. Deterministic techniques are very useful for unimodal (linear) search problems. However, they are prone to becoming trapped in local minima when applied to multimodal (nonlinear) search problems. This obstacle has been addressed by developing several techniques based on modifying or hybridizing algorithms [4].

Stochastic methods employ randomized search parameters to explore the search space and identify a near-optimal solution [5]. The most common stochastic techniques are metaheuristics, which offer easy implementation, simplicity, flexibility, and independence from the problem [6,7,8]. Recently, metaheuristic algorithms have been proposed to solve complex optimization problems [8,9,10]. A metaheuristic algorithm finds a near-optimal solution with acceptable accuracy in a reasonable time.

Metaheuristic techniques rely on two main mechanisms: diversification (exploration) and intensification (exploitation) [8]. Diversification explores the search space widely. Intensification, in contrast, concentrates the search in a narrow region around promising solutions to obtain the best solution. Metaheuristic algorithms can be grouped into four essential classes [11]: (1) swarm-based intelligence (SI) [12], (2) human-based methods (HM) [13], (3) physics-based methods (PM) [14], and (4) evolutionary-based algorithms (EA) [15].

SI mimics the social behavior of animal groups such as herds, flocks, and schools, whose main characteristic is communication between individuals. This behavior is emulated in the optimization process. Examples of this category include the particle swarm optimization (PSO) algorithm [16], whale optimization algorithm (WOA) [17], reptile search algorithm (RSA) [18], moth–flame optimization algorithm [19], Harris hawks optimization algorithm [20], salp optimization algorithm [21], bat optimization algorithm [22], and gray wolf optimization algorithm [23].

HMs mimic the behavior of human communication in communities. The most common HM algorithms are teaching learning-based optimization (TLBO) [24], brain storm optimization algorithm [25], human mental search [26], poor and rich optimization algorithm [27], simple human learning algorithm [28], and imperialist competitive algorithm (ICA) [29].

PMs derive their search strategies from physical and mathematical laws. The most common PM algorithms are simulated annealing (SA) [30], sine–cosine optimization algorithm (SCA) [31], gravitational search algorithm (GSA) [32], arithmetic optimization algorithm (AOA) [33], electromagnetic field optimization [34], ions motion optimization algorithm [35], and Henry gas solubility optimization [36]. EAs simulate natural evolution using biologically inspired operators such as mutation and crossover. The most common EA algorithms are genetic algorithm (GA) [37], differential evolution (DE) [38], and evolution strategy (ES) [39].

The aforementioned metaheuristic methods have been used to solve optimization problems in many areas, such as:

- bioinformatics [40,41,42,43,44,45,46];
- photovoltaic design [47, 48] and fuel cell design [49, 50], as well as fuel cells more generally [49, 69];
- PID controller design [51,52,53,54] and passive suspension system design [55, 56];
- combinatorial optimization problems [57] and structural design [58];
- image watermarking [59, 60] and image segmentation [61, 62];
- mining high-utility item sets [63] and privacy-preserving data mining [64, 65];
- GPU occupancy maximization [66, 67] and exploiting GPU parallelism in metaheuristic algorithms (MA) [70];
- human activity recognition [68];
- and others.

Although these algorithms have been successful on various optimization problems, the no-free-lunch theorem of optimization implies that no single algorithm can attain the optimal solution for all optimization problems [71].

The reptile search algorithm (RSA) is a recently developed population-based metaheuristic algorithm that models the hunting behavior of crocodiles [18]. Its main advantages are that it:

- achieves near-optimal solutions of reasonable quality on the tested optimization problems;
- is easy to implement; and
- has few control parameters.

These advantages have motivated many researchers to apply it to engineering problems, such as:

- optimizing an adaptive neuro-fuzzy inference system (ANFIS) to predict the swelling potential of fine-grained soils in the foundation bed [72];
- optimizing the switching angles of selective harmonic elimination (SHE) [73];
- parameter extraction of photovoltaic models [74];
- structural design optimization [75];
- routing and clustering in cognitive radio sensor networks [76]; and
- image retrieval [77].

However, RSA also has some disadvantages:

- the objective value influences the solution-updating mechanism;
- it lacks a self-learning mechanism;
- it converges slowly;
- it balances exploitation and exploration poorly; and
- it has a high chance of being trapped in local optima.

These drawbacks have motivated many researchers to enhance RSA.

In [78], RSA was enhanced using Levy flight and a crossover strategy (LICRSA) to address its poor accuracy and slow convergence on optimization problems. Levy flight was used to increase the flexibility and variety of solutions, prevent premature convergence, and enhance robustness. LICRSA was evaluated on the CEC2020 functions and 5 mechanical engineering optimization problems against other methods from the literature. It showed superior performance and strong stability on the engineering problems. Furthermore, RSA combined with Levy flight and the Nelder–Mead algorithm has been used in power system applications to estimate the parameters of a PID controller [79]. In addition, an integration of RSA and Levy flight was presented to estimate the parameters of a PID controller for a vehicle cruise control system [80].

A hybrid of RSA and the remora optimization algorithm (ROA) was presented to enhance data clustering in data mining [81]. The hybrid method mitigates the weaknesses of RSA and improves the quality of the solutions found. It was tested on 20 mathematical benchmark functions and 8 data clustering problems, where it outperformed the comparative methods in terms of solution accuracy.

A binary version of RSA based on different chaotic maps (CRSA) was implemented to solve the feature selection problem in machine learning [82]. Its objective function combines three objectives: the classification accuracy, the complexity of the produced wrapper models, and the number of selected features. Twenty UCI datasets were used as test data, and the results showed the superiority of CRSA over well-known techniques in the literature.

To tackle the feature selection problem in telecommunication applications, RSA has been combined with the ant colony optimization (ACO) algorithm [83]. Seven customer churn prediction datasets were used to evaluate the ACO–RSA feature selection (FS) technique, which outperformed the comparative algorithms. In [84], the weaknesses of conventional RSA were addressed using a mutation technique. The resulting algorithm was evaluated on the CEC2019 benchmark functions and 5 industrial engineering design problems. The snake optimizer (SO) was merged with RSA in a parallel manner to obtain the optimal feature subset for given datasets [85]. These hybridization ideas help RSA avoid trapping in local optima by improving the balance between exploration and exploitation of the search space.

A modified version of RSA was presented to enhance population diversity using an adaptive chaotic reverse learning strategy [86]. In addition, an elite alternative pooling strategy was introduced to balance exploitation and exploration. The improved algorithm was evaluated on the CEC2017 benchmark functions and the robot path planning problem. It outperformed other algorithms in terms of accuracy, convergence speed, and stability. In [87], RSA was enhanced by incorporating chaos theory to improve its exploration capability, while simulated annealing was used to avoid trapping in local optima. The technique was evaluated on feature selection for medical datasets. Although these enhanced versions of RSA achieve reasonable accuracy, there is still room to improve RSA's ability to avoid local optima and to accelerate its convergence. This paper aims to hybridize RSA with reinforcement learning and opposition-based operators to enhance its performance.

Reinforcement learning (RL), a category of machine learning, has been used to solve many optimization problems [88]. Machine learning techniques are categorized into four kinds: supervised, semi-supervised, unsupervised, and reinforcement learning. In RL, agents are trained to learn the optimal behavior by interacting with their environment. They then use the knowledge gained during training to choose subsequent actions. RL algorithms fall into two categories: model-based and model-free approaches. Model-free approaches can be further divided into policy-based and value-based techniques. Value-based techniques are well suited for coupling with metaheuristics because they are model-free and do not require an explicit policy, which provides more flexibility.

In value-based RL techniques, the agent learns through rewards and penalties from its experience of the environment and of its own actions. The reward or penalty measures how successfully the agent performs its task, and the agent bases its subsequent decisions on this outcome. Q-learning is the most common value-based RL technique. The agent receives a penalty or a reward after performing actions, which are initially random. Experience is progressively built up through the agent's subsequent actions and is stored in the Q table [89]. Each agent considers all allowable actions and, according to the Q-table values for its current state, chooses the optimal action, that is, the one that maximizes the expected reward. Hence, the agent decides whether to exploit or explore the search space.

RL algorithms have an advantage over metaheuristic algorithms: they can balance exploitation and exploration of the search space and can also determine optimal parameter settings. Metaheuristic algorithms search less dynamically than RL techniques (especially value-based ones) because they follow predetermined policies in specific situations. In value-based techniques, by contrast, the agent determines its action online according to the reward–penalty strategy, without any prespecified policy.

RL techniques have been merged with many metaheuristic techniques to enhance them. In [90], GA was improved by merging it with reinforcement-learning-based mutation (RMGA) to solve the traveling salesman problem (TSP). In RMGA, the pairing selection in the edge assembly crossover (EAX) is performed heterogeneously instead of randomly. In addition, a reinforcement mutation operator based on an adapted Q-learning technique is applied to the offspring produced by the modified EAX. RMGA was applied to small and large TSP instances and outperformed the traditional GA and EAX–GA in terms of running time and solution quality.

Teaching–learning-based optimization (TLBO) was improved by merging it with an RL technique [88]. RL–TLBO introduces two modifications:

1. the effect of the teacher is incorporated as a new learner process;
2. Q-learning controls the switching between two learning modes in the learner phase.

These enhancements aim to improve the convergence speed and solution accuracy. CEC mathematical benchmark functions were used to evaluate the algorithm, which was also applied to engineering design problems.

Particle swarm optimization (PSO) was enhanced using reinforcement learning and a pre-training concept to improve its parameter adaptation and thereby its convergence speed [91]. An adaptation of the single parameter of the gray wolf optimizer (GWO), based on reinforcement learning combined with neural networks, was presented to improve the performance of GWO [92]. The enhanced GWO was evaluated on selecting the weight values of a neural network for feature selection. It outperformed the conventional GWO and other comparative algorithms.

The artificial bee colony algorithm was modified using Q-learning to minimize the tardiness of the distributed three-stage assembly scheduling problem [93]. Q-learning dynamically selects the search operator. It uses 12 states, defined from the quality evaluation of the population, and 8 actions, determined by neighborhood and global search. A new and effective action selection scheme and reward were also presented.

To improve exploration and search efficiency, two employed bee swarms are constructed, with adaptive competition and communication between them.

Opposition-based learning (OBL) operators were used to enhance the exploration capability of the metaheuristic algorithms and increase the avoidance of trapping in local optima. For example, in [94] salp swarm algorithm (SSA) was improved through the integration of OBL to enhance the accuracy of solutions, balance the exploration and exploitation scheme, and improve the convergence process of SSA. SSA–OBL was evaluated using CEC2015 benchmark mathematical functions and was applied to solve real-world optimization problems such as spacecraft trajectory optimization problem and circular antenna array design problem.

The imbalance between exploitation and exploration in PSO was addressed by incorporating OBL, and the resulting algorithm was used to optimize feature selection [95]. Twenty-four benchmark datasets were used to evaluate PSO–OBL against other metaheuristic algorithms in the literature. It achieved higher prediction accuracy than the other techniques. The hunger games search algorithm (HGSA) was combined with OBL to tune a fractional-order PID controller [96]. The enhanced HGSA was also evaluated on the CEC2017 test functions.

An improved SCA (ISCA) applies opposition to the solutions to increase the exploration of SCA [97]. In m-SCA [98], SCA was enhanced in two ways: opposition is applied to the solutions, and a self-adaptive parameter is added to the updating equations to enhance the exploitation of promising regions of the search space.

An improved Harris hawks optimization (HHO) based on OBL was introduced to avoid trapping in local optima and accelerate the convergence of the search process [99]. The performance of HHO–OBL was evaluated on the CEC2017 benchmark functions and 5 constrained engineering problems, as well as on feature selection using 7 UCI datasets.

OBL has been introduced to enhance the arithmetic optimization algorithm (AOA) and prevent it from getting stuck in local optima [100], with the aim of improving its ability to find the global optimal solution. In addition, a spiral model was used to accelerate the convergence of AOA. The enhanced AOA was evaluated on 23 mathematical benchmark functions and 4 engineering optimization problems: the tubular column design, the cantilever beam design, the three-bar truss design, and the pressure vessel design. In [88], TLBO was combined with random OBL to improve local optima avoidance, and RL techniques were used to accelerate convergence.

Based on this literature review, RL techniques can effectively balance the exploration and exploitation of many metaheuristic algorithms and accelerate their convergence. Moreover, OBL has been embedded in many algorithms to improve local optima avoidance. These studies motivate us to embed RL and OBL in RSA to overcome its drawbacks.

The novelty of this work is as follows:

1. Reinforcement learning is introduced to find a proper balance between exploration and exploitation and thereby prevent premature convergence.

2. Random opposition-based learning is introduced to explore the search space more widely and prevent trapping in local minima.

The paper is organized as follows. Section 2 presents the preliminaries, including the standard RSA and the concept of reinforcement learning. Section 3 describes the proposed RLRSA. Section 4 presents the experimental results and discussion. Finally, Sect. 5 concludes the work.

2 Preliminaries

2.1 Reptile search algorithm (RSA)

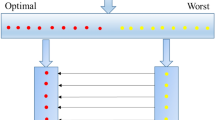

RSA is a novel optimization algorithm inspired by the hunting behavior of crocodiles, which involves encircling and hunting prey. The algorithm models two mechanisms:

- the global search phase, represented by the encircling behavior (Fig. 1);
- the local search phase, represented by the hunting behavior (Fig. 2).

These mechanisms take place in the first and second halves of the iterations, respectively. The following two subsections describe them.

2.1.1 Encircling phase

The global exploration phase of RSA employs two main operators, high walking and belly walking. These operators prepare the hunting phase and help identify areas with a high density of prey. High walking and belly walking occur in the first and second halves of the exploration phase, respectively. Accordingly, the crocodile positions are updated as given in Eq. 1

where:

- \(x_{\mathrm{best}(j)}(t)\) is the jth position of the best solution obtained so far at iteration t;
- \(\mathfrak{n}_{(i,j)}(t)\) is the hunting operator for the jth position of the ith solution, computed with Eq. 2;
- \(\mathcal{B}\) is a control parameter that governs the accuracy of the high walking phase;
- \(R_{(i,j)}(t)\) determines the search region and is estimated using Eq. 3;
- rand is a random number drawn uniformly from (0,1);
- \(x_{(r1,j)}\) is the jth position of a randomly selected solution;
- ES(t) is the evolutionary sense, a probability ratio taking values between −2 and 2, calculated according to Eq. 4.

where:

- \(p_{(i,j)}\) is the distance between the jth position of the fittest individual and that of the current individual, estimated using Eq. 5;
- \(\varepsilon\) is a small value;
- \(r_2\) is a random integer in \([1, N]\);
- \(r_3\) is a random number in \([-1, 1]\).

where:

- \(\alpha\) is another parameter that controls the exploration accuracy and is set to 0.1;
- \(M(x_i)\) is the average position of the ith solution, calculated from Eq. 6;
- \(\mathrm{UB}_j\) and \(\mathrm{LB}_j\) are the upper and lower bounds of the jth position.
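The extracted text does not reproduce Eqs. 1–6. The following Python sketch implements the encircling update as we understand it from the original RSA formulation [18]: high walking for \(t \le T/4\) and belly walking for \(T/4 < t \le T/2\). Several details are assumptions:

- the function name;
- the array layout (N individuals by D variables);
- the default ε and the clipping to the bounds;
- the default value of β (written \(\mathcal{B}\) above), which the text does not give.

α = 0.1 follows the text.

```python
import numpy as np

def encircling_update(X, best, t, T, lb, ub, alpha=0.1, beta=0.005, eps=1e-10, rng=None):
    """RSA encircling (exploration) step, sketched from Eqs. 1-6 as read in [18].

    X: (N, D) population, best: (D,) best position so far, lb/ub: (D,) bounds.
    beta=0.005 and eps=1e-10 are ASSUMED defaults; alpha=0.1 follows the text.
    """
    rng = np.random.default_rng() if rng is None else rng
    N, D = X.shape
    X_new = np.empty_like(X, dtype=float)
    es = 2.0 * rng.uniform(-1.0, 1.0) * (1.0 - 1.0 / T)  # Eq. 4: evolutionary sense in (-2, 2)
    for i in range(N):
        mean_i = X[i].mean()  # Eq. 6: average position M(x_i)
        for j in range(D):
            # Eq. 5: difference between the current and the best position
            p = alpha + (X[i, j] - mean_i) / (best[j] * (ub[j] - lb[j]) + eps)
            if t <= T / 4:  # high walking
                hunt = best[j] * p                          # Eq. 2: hunting operator
                r2 = rng.integers(N)                        # random individual (0-based)
                R = (best[j] - X[r2, j]) / (best[j] + eps)  # Eq. 3: search-region term
                X_new[i, j] = best[j] * (-hunt) * beta - R * rng.random()
            else:  # belly walking (T/4 < t <= T/2)
                r1 = rng.integers(N)
                X_new[i, j] = best[j] * X[r1, j] * es * rng.random()
    return np.clip(X_new, lb, ub)  # keep positions inside the bounds (assumption)
```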

2.1.2 Hunting phase

The exploitation phase of RSA occurs in the second half of the iterations, after the encircling phase. It consists of the hunting coordination and hunting cooperation strategies. Both strategies intensify the search around the near-optimal solution and are given in Eq. 7.

The first part of Eq. 7 represents hunting coordination, whereas the second part represents hunting cooperation. When \(t\le \frac{T}{2}\), the encircling phase (global search) takes place. Otherwise, when \(t>\frac{T}{2}\), the hunting phase (local search) is applied to find the near-optimal solution.

Algorithm 1 presents the pseudocode of RSA:

- line 1 initializes the parameters and the population;
- lines 3 to 13 search for the solution until the termination condition checked in line 3 is satisfied;
- line 9 updates the position of each individual in every iteration using either Eq. 1 or Eq. 7.

The best solution obtained thus far is output by the algorithm in line 14.
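Eq. 7 is not reproduced in the extracted text. Below is a minimal Python sketch of the hunting phase, following our reading of [18]. It applies hunting coordination for \(T/2 < t \le 3T/4\) and hunting cooperation for \(t > 3T/4\). The function name, array layout, default ε, and clipping to the bounds are assumptions.

```python
import numpy as np

def hunting_update(X, best, t, T, lb, ub, alpha=0.1, eps=1e-10, rng=None):
    """RSA hunting (exploitation) step for t > T/2, sketched from Eq. 7 as read in [18].

    X: (N, D) population, best: (D,) best position so far, lb/ub: (D,) bounds.
    eps=1e-10 is an ASSUMED default; alpha=0.1 follows the text.
    """
    rng = np.random.default_rng() if rng is None else rng
    N, D = X.shape
    X_new = np.empty_like(X, dtype=float)
    for i in range(N):
        mean_i = X[i].mean()  # average position M(x_i)
        for j in range(D):
            p = alpha + (X[i, j] - mean_i) / (best[j] * (ub[j] - lb[j]) + eps)
            if t <= 3 * T / 4:  # hunting coordination
                X_new[i, j] = best[j] * p * rng.random()
            else:  # hunting cooperation
                hunt = best[j] * p                          # hunting operator
                r2 = rng.integers(N)                        # random individual (0-based)
                R = (best[j] - X[r2, j]) / (best[j] + eps)  # search-region term
                X_new[i, j] = best[j] - hunt * eps - R * rng.random()
    return np.clip(X_new, lb, ub)  # keep positions inside the bounds (assumption)
```

In a full RSA loop (Algorithm 1), this update would be called for \(t > T/2\), and the best solution would then be re-evaluated.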

2.2 Reinforcement learning

RL has been widely used to solve problems across various domains. Its fundamental idea is that an agent takes an action that alters the state of the environment and receives a reward based on that action. RL methods fall into two categories: policy-based and value-based.

Q-learning (QL) is a value-based method. It is model-free: the agent learns how to act optimally in Markovian domains [101]. During the learning phase, the agent executes the action with the highest expected Q value. The simplest form is one-step Q-learning, in which the Q value is updated from a single state–action step. This form is used in this work. The Q table is dynamically updated according to the reward of each state–action pair, as in Eq. 8.

where:

- \(\odot\) and \(\gamma\) are the learning rate and discount factor, respectively, both in \([0,1]\);
- \(Q(s_t, a_t)\) is the Q value of taking action \(a_t\) in the current state \(s_t\);
- \(\max_a Q(s_{t+1}, a_{t+1})\) is the highest expected Q value in the Q table over the actions \(a_{t+1}\) available in state \(s_{t+1}\).

A high learning rate \(\odot\) makes the algorithm rely mainly on the newly estimated Q value. A low learning rate makes it retain the previous Q value instead. Therefore, the learning rate balances exploitation and exploration.
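Eq. 8 is not reproduced in the extracted text. For reference, here is the standard one-step Q-learning update [101], written with the symbols defined above. The symbol \(r_t\) for the reward received after taking \(a_t\) is our own assumed notation.

\[
Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \odot \left[ r_t + \gamma \max_{a} Q(s_{t+1}, a) - Q(s_t, a_t) \right]
\]

The two extremes of the learning rate illustrate the trade-off described above:

- with \(\odot = 1\), the old value is replaced entirely by the new target \(r_t + \gamma \max_a Q(s_{t+1}, a)\);
- with \(\odot = 0\), the previous Q value is kept unchanged.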

Algorithm 2 shows the pseudocode of Q-learning:

1. Initialize the Q table and the reward table with random values, then select a random initial state.
2. Choose the action for the selected state that maximizes the future reward (lines 4 and 5).
3. Update the Q table, the reward table, and the current state.
4. Repeat lines 3–9 until the termination condition is satisfied.
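Algorithm 2 can be sketched in Python as a minimal tabular version, under the following assumptions:

- the random reward table is replaced by a hypothetical environment callback `step(s, a)` that returns the reward and the next state;
- action selection is purely greedy, as described above;
- the default learning rate and discount factor are illustrative.

```python
import numpy as np

def q_update(Q, s, a, r, s_next, lr=0.5, gamma=0.9):
    """One-step Q-learning (Eq. 8): move Q[s, a] toward r + gamma * max_a' Q[s_next, a']."""
    target = r + gamma * Q[s_next].max()
    Q[s, a] += lr * (target - Q[s, a])
    return Q

def greedy_action(Q, s, rng):
    """Action with the highest Q value in state s; ties are broken at random."""
    candidates = np.flatnonzero(Q[s] == Q[s].max())
    return int(rng.choice(candidates))

def run_q_learning(step, n_states, n_actions, n_iter, lr=0.5, gamma=0.9, seed=0):
    """Algorithm 2 in sketch form. `step(s, a) -> (reward, next_state)` is a
    HYPOTHETICAL environment callback that replaces the random reward table."""
    rng = np.random.default_rng(seed)
    Q = rng.random((n_states, n_actions))  # random initial Q table
    s = int(rng.integers(n_states))        # random initial state
    for _ in range(n_iter):
        a = greedy_action(Q, s, rng)       # action with the highest expected reward
        r, s_next = step(s, a)
        q_update(Q, s, a, r, s_next, lr, gamma)
        s = s_next                         # move to the new state
    return Q
```

For example, consider a single state in which action 0 yields +1 and action 1 yields −1. The Q value of action 1 drops below that of action 0 within a few updates, after which the greedy policy settles on action 0.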

3 The development of the proposed RLRSA

3.1 Main idea of the proposed method

The basic RSA uses two main strategies to find solutions:

- exploration, in which the high walking and belly walking strategies search for new solutions;
- exploitation, in which hunting coordination and hunting cooperation refine the best solutions.

However, exploration is performed in the first half of the iterations and exploitation in the second half. As a result, RSA cannot change its search direction during the run, which makes it susceptible to being trapped in local optima. Such a static search schedule cannot guarantee reaching the global minimum. An adaptive search is a better choice for obtaining the global minimum, and reinforcement learning is introduced to accomplish this task. In addition, opposition-based learning is introduced to improve the search for potential solutions by increasing the diversity of the population.

3.2 The RLRSA structure

In RLRSA, the search space is regarded as the interactive environment, and each individual is regarded as a trained agent of RL. The Q-learning method is used to switch dynamically between exploration and exploitation. The Q value of each state–action pair is updated based on the current best fitness and the average fitness of the previous iterations. In addition, a reward table is used to reward or penalize the agent based on the current action and state. The details are given below.

3.2.1 State set and action set

The proposed RLRSA has three actions that act on the exploration rate (φ): increasing the exploration rate, decreasing it, and keeping it unchanged. The value of φ in the next iteration is updated based on the best fitness in the current iteration and the accumulated average fitness value as follows

where:

- \(\varphi^{t+1}\) is the exploration rate at the next iteration;
- \(\Delta\) is the increment;
- \(f(X_{\mathrm{best}}^{t})\) is the fitness of the best position in the current iteration.

\(M\) is the weighted average fitness of the fittest individuals found so far and can be calculated as

where \(n\) is the number of iterations executed up to the current one, and \(w_i\) is the weighting factor of the fittest individual \(X_{\mathrm{best}}^{t}\) at iteration \(t\), calculated as follows.

where \(t\) is the current iteration and \(T\) is the total number of iterations. The fittest individuals of recent iterations contribute more to the value of \(M\). The obtained fitness is compared with the weighted average fitness \(M\):

- if it is better than \(M\), the algorithm needs to narrow the search area and refine the obtained solutions;
- otherwise, it explores a wider area to find new solutions and avoid being trapped in local optima and premature convergence.

In terms of the cases of Eq. 9:

- the first case commonly occurs when the agent obtains a better fitness than the average fitness;
- the second case indicates that the fitness of the agent begins to deteriorate compared with its previous experience;
- in the remaining case, the exploration rate does not change because the agent has no incentive to change it.
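Eqs. 9–11 are not reproduced in the extracted text. The Python sketch below illustrates the described behavior under explicit assumptions; these are hypothetical choices, not the paper's exact formulas:

- the weights are \(w_i = i/T\), so later iterations count more;
- \(M\) is normalized by the sum of the weights;
- φ is clipped to [0, 1].

The three actions of this subsection are encoded as the strings "decrease", "increase", and "keep", and fitness is assumed to be minimized.

```python
import numpy as np

def weighted_best_average(best_history, T):
    """M (Eq. 10 role): weighted average of the best fitness recorded at iterations 1..n.
    HYPOTHETICAL weights w_i = i / T (recent iterations weigh more); Eq. 11 is not reproduced."""
    f = np.asarray(best_history, dtype=float)
    w = np.arange(1, f.size + 1) / T
    return float(np.dot(w, f) / w.sum())

# Eq. 9 sketch: the three actions of Section 3.2.1 applied to the exploration rate.
PHI_STEP = {"decrease": -1, "increase": +1, "keep": 0}

def next_exploration_rate(phi, action, delta, phi_min=0.0, phi_max=1.0):
    """Return phi at the next iteration.
    'decrease' narrows the search (typically once the best fitness beats M),
    'increase' widens it when the fitness deteriorates, and 'keep' leaves phi
    unchanged. Clipping to [phi_min, phi_max] is an ASSUMPTION."""
    phi = phi + PHI_STEP[action] * delta
    return float(min(max(phi, phi_min), phi_max))
```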

RLRSA has three states, \(s=\{1, -1, 0\}\), corresponding to the aforementioned actions, as given in Eq. 12

where \({s}_{t}\) is the state obtained by the agent at the iteration t.

3.2.2 Reward

The reward table in this work assigns a positive reward (+1) to the state \(s_t=1\) and a negative reward (−1) otherwise. The current state \(s_t\) equals 1 when the fitness obtained at iteration \(t\) is better than the average fitness of the previous \(t-1\) iterations. The reward is therefore given by Eq. 13.
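The state and reward rules can be sketched in Python as follows, assuming minimization. The reward follows the text: +1 only when \(s_t = 1\), −1 otherwise. Eq. 12 is not reproduced here, so two details are assumptions:

- the conditions for states −1 and 0;
- the mapping of states to Q-table rows (`STATE_INDEX`).

```python
# HYPOTHETICAL mapping from the states of Eq. 12 to Q-table rows.
STATE_INDEX = {1: 0, -1: 1, 0: 2}

def state_and_reward(f_best, M):
    """Sketch of Eqs. 12-13 for minimisation.
    State 1 if the current best fitness beats the weighted average M, -1 if it is
    worse, 0 if equal (assumed conditions). The reward is +1 in state 1 and -1
    otherwise, as stated in the text."""
    if f_best < M:
        state = 1
    elif f_best > M:
        state = -1
    else:
        state = 0
    reward = 1 if state == 1 else -1
    return state, reward
```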

3.2.3 Adaptive learning rate

In addition, RLRSA adapts the learning rate based on the accumulated performance, because the learning rate has a significant impact on reaching the optimal solution. On the one hand, when the learning rate is close to one, newly acquired information has a large impact on the future reward. On the other hand, when the learning rate is low, existing information is weighted more heavily than newly acquired information. To balance these effects, the learning rate is reduced adaptively over the iterations using the following equation.

where \(\odot_{\text{init}}\) and \(\odot_{\text{final}}\) are the initial and final values of the learning rate, respectively.
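The extracted text does not give the exact form of the decay. As a hypothetical illustration only, a linear schedule from \(\odot_{\text{init}}\) to \(\odot_{\text{final}}\) could look like the sketch below. Both the linear form and the default values 0.9 and 0.1 are assumptions.

```python
def learning_rate(t, T, lr_init=0.9, lr_final=0.1):
    """HYPOTHETICAL linear decay of the learning rate from lr_init (t = 0)
    to lr_final (t = T); the paper's exact equation is not reproduced here."""
    return lr_init - (lr_init - lr_final) * t / T
```

A linear schedule is the simplest monotone choice. Any decreasing schedule that starts near \(\odot_{\text{init}}\) and ends near \(\odot_{\text{final}}\) would express the same idea.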

3.2.4 Random opposition-based learning

Opposition-based learning (OBL), proposed in [102], is one of the most effective methods for increasing population diversity. To enhance the performance of the algorithm, OBL evaluates the opposite location of the individual being considered. Random opposition-based learning (ROBL), introduced in [103], adds randomization to OBL and is defined as follows.

where \(x_j'\) and \(x_j\) are the opposite and original solutions, and \(l_j\) and \(u_j\) are the lower and upper bounds of the jth variable. ROBL is introduced in RLRSA to help the algorithm adaptively avoid trapping in local optima and thereby improve the obtained solution.
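A minimal Python sketch of ROBL, followed by the elitist selection described in Sect. 3.3, is given below. It uses the form \(x_j' = l_j + u_j - \mathrm{rand} \times x_j\), which is how ROBL is commonly written. The equation is not reproduced in the extracted text, so this form, the per-variable random numbers, and the clipping to the bounds are assumptions. Fitness is assumed to be minimized.

```python
import numpy as np

def random_opposition(X, lb, ub, rng):
    """ROBL sketch: x'_j = l_j + u_j - rand * x_j with one uniform rand per
    variable (assumption), clipped back into [l_j, u_j]."""
    r = rng.random(X.shape)
    return np.clip(lb + ub - r * X, lb, ub)

def elitist_select(X, X_opp, fitness):
    """Keep, row by row, whichever of a solution and its opposite has lower fitness."""
    f = np.apply_along_axis(fitness, 1, X)
    f_opp = np.apply_along_axis(fitness, 1, X_opp)
    keep_opp = f_opp < f
    return np.where(keep_opp[:, None], X_opp, X), np.where(keep_opp, f_opp, f)
```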

3.3 Overview of the RLRSA

Figure 3 shows the flowchart of the proposed RLRSA, and Algorithm 3 gives more detail on how the algorithm searches for the global solution. In the initialization phase, the algorithm initializes the basic parameters, states, actions, Q table, and reward table. The search then continues as long as the stopping criterion is not met. Each iteration proceeds as follows:

1. Calculate the fitness of the individuals and find the best solution.
2. Select the best action from the Q table and update the exploration rate according to this selection using Eq. 9.
3. Based on a random number and the exploration rate, perform either exploration or exploitation to generate a new solution.
4. Calculate the opposite solution from the newly obtained solution using ROBL.
5. Use an elitism mechanism to select the fitter of the opposite and new solutions.
6. Update the Q table, the reward table, and the current state.

The learning rate is updated after each iteration, and the process is repeated until the stopping criterion is satisfied.
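The iteration described above can be outlined as the following Python skeleton. It is a sketch, not the authors' implementation. `explore` and `exploit` are hypothetical callbacks standing in for the RSA encircling and hunting updates. The following are all assumptions:

- the action encoding and the state-to-row mapping;
- the rule "explore if rand < φ";
- the step Δ, the learning rate, and the discount factor;
- the ROBL form \(l + u - \mathrm{rand}\cdot x\).

Fitness is minimized.

```python
import numpy as np

ACTION_STEPS = (-1, 0, +1)         # decrease, keep, increase phi (assumed encoding)
STATE_INDEX = {1: 0, -1: 1, 0: 2}  # Q-table row for each state (assumed ordering)

def rlrsa_iteration(X, fitness, best, state, Q, phi, M, t, T, lb, ub,
                    explore, exploit, rng, delta=0.05, lr=0.5, gamma=0.9):
    """One RLRSA iteration following the description in Sect. 3.3 (sketch only).

    explore/exploit(X, best, t, T, lb, ub, rng) -> new (N, D) positions are
    HYPOTHETICAL callbacks standing in for the RSA encircling and hunting updates.
    Returns the next population, its fitness, the new state, Q, and phi.
    """
    s = STATE_INDEX[state]
    a = int(np.argmax(Q[s]))                                       # best action from the Q table
    phi = float(np.clip(phi + ACTION_STEPS[a] * delta, 0.0, 1.0))  # update phi (role of Eq. 9)
    move = explore if rng.random() < phi else exploit              # random number vs. phi
    X_new = move(X, best, t, T, lb, ub, rng)
    X_opp = np.clip(lb + ub - rng.random(X_new.shape) * X_new, lb, ub)  # ROBL
    f_new = np.apply_along_axis(fitness, 1, X_new)
    f_opp = np.apply_along_axis(fitness, 1, X_opp)
    use_opp = f_opp < f_new                                        # elitism: keep the fitter one
    X_next = np.where(use_opp[:, None], X_opp, X_new)
    f_next = np.where(use_opp, f_opp, f_new)
    f_best = float(f_next.min())
    new_state = 1 if f_best < M else (-1 if f_best > M else 0)
    reward = 1.0 if new_state == 1 else -1.0
    s_next = STATE_INDEX[new_state]
    Q[s, a] += lr * (reward + gamma * Q[s_next].max() - Q[s, a])   # one-step Q-learning
    return X_next, f_next, new_state, Q, phi
```

After each call, the caller would append the new best fitness to the history used for \(M\), update the global best, and decay the learning rate.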

3.4 Time complexity

As shown in Fig. 3, RLRSA divides the search for the global solution into two phases. In the first phase, the algorithm initializes the population, which takes \(O(N)\) time. In the second phase, the search for the global solution is repeated for the maximum number of iterations \(T\). The computational complexity of each phase is as follows.

Phase 1: Initialization

The algorithm initializes N individuals with random values for the next stage; thus, the complexity of this phase is \(O(N)\).

Phase 2: Searching

The search process of RLRSA includes four operations: exploitation, exploration, random opposition-based learning, and elitism. Because the algorithm switches between exploration and exploitation based on the accumulated performance, it is assumed that each process is executed for all individuals. In the exploration phase, all individuals are directed toward the global solution, whereas during the exploitation phase, all individuals move toward the local solution. The total complexity of the exploration and exploitation processes is \(O(N \times T) + O(N \times T \times D)\). Therefore, the computational complexity of RLRSA can be expressed as \(O(N) + O(N \times T) + O(N \times T \times D)\), where T is the maximum number of iterations and D is the dimensionality of the solution.
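Because \(T \ge 1\) and \(D \ge 1\), the last term dominates the other two, so the overall bound can be written more compactly:

\[
O(N) + O(N \times T) + O(N \times T \times D) = O(N \times T \times D)
\]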

6 Experimental setup and discussions

In this study, twenty-three global optimization benchmark problems are used to evaluate the performance of the proposed RLRSA. In addition, RLRSA is applied to two common engineering design problems, namely the pressure vessel design and tension/compression spring design problems. To evaluate its effectiveness, RLRSA is compared against several other global optimization algorithms: ant lion optimizer (ALO) [104], covariance matrix adaptation evolution strategy (CMAES) [105], salp swarm algorithm (SSA) [106], marine predator algorithm (MPA) [107], dragonfly algorithm (DA) [108], whale optimization algorithm (WOA) [17], equilibrium optimizer (EO) [109], particle swarm optimization algorithm (PSO) [110], grasshopper optimization algorithm (GOA) [111], gray wolf optimizer (GWO) [23], sine–cosine algorithm (SCA) [31], and the standard RSA.

In the experiments, the common parameters are set as follows: the population size is 30, the number of independent runs is 25, and the number of function evaluations is 15,000. The remaining parameters of each algorithm are set as in their original implementations and are listed in Table 1. The parameter settings of RLRSA are identical to those of the standard RSA [18]. The quality of the obtained solutions is quantified by the worst, best, average, and standard deviation of the fitness values.
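The four statistics can be computed from the final fitness values of the 25 independent runs, as in the sketch below. The use of the population standard deviation (NumPy's default, `ddof=0`) is an assumption, since the text does not say which estimator was used; the function name is hypothetical.

```python
import numpy as np


def run_statistics(final_fitness):
    """Worst, best, mean and standard deviation of per-run final fitness (minimization)."""
    f = np.asarray(final_fitness, dtype=float)
    return {
        "worst": f.max(),  # largest value is the worst under minimization
        "best": f.min(),
        "mean": f.mean(),
        "std": f.std(),  # population standard deviation (ddof=0), assumed
    }
```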

Three scenarios are used to evaluate the effectiveness of RLRSA against other methods. The first scenario compares RLRSA and the other methods on unimodal and multimodal benchmark functions with 10, 50, 100, and 500 dimensions. The second scenario evaluates RLRSA on fixed-dimension multimodal benchmark functions. The third scenario shows how RLRSA can be used to solve real-world problems and compares its performance against other methods.

6.1 Description of the benchmark

The twenty-three benchmark functions used to evaluate the proposed RLRSA are classified by the number of extreme solutions. The first type, unimodal functions, has a single extreme solution in the search space; Table 2 lists the unimodal functions (F1–F7) and their definitions. The second and third categories are multimodal and fixed-dimension multimodal functions, respectively, which have more than one extreme solution in the search space. Table 3 lists the multimodal functions F8–F13 and their definitions, whereas Table 4 lists the fixed-dimension multimodal functions F14–F23 and their descriptions.
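To make the unimodal/multimodal distinction concrete, the sketch below defines two functions from the classical 23-function suite. Treating F1 as the sphere function and F9 as the Rastrigin function follows the usual numbering of this suite and is an assumption here, since Tables 2 and 3 are not reproduced. Both have a global minimum of 0 at the origin, but Rastrigin also has many local minima.

```python
import numpy as np


def sphere(x):
    """Unimodal: sum of squares; single minimum at the origin (assumed F1)."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2))


def rastrigin(x):
    """Multimodal: a cosine term adds many local minima (assumed F9)."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x) + 10.0))
```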

6.2 Results and discussion

This section presents a comprehensive comparison of the performance of the proposed reinforcement learning-based RSA (RLRSA) against the standard RSA and other methods from the literature. The objective is to identify the best solution for both unimodal and multimodal functions. In all tables, the algorithms are ranked based on average performance.

Table 5 compares the worst, average, best, and standard deviation (STD) of the fitness values obtained by the algorithms on unimodal and multimodal functions with 10 dimensions. RLRSA achieves the smallest average values, with small standard deviations, on 12 out of 13 test functions, outperforming the other optimization methods. CMA-ES comes second, achieving the best performance on 2 out of 13 test functions. These results indicate that RLRSA is stable and accurate on both unimodal and multimodal functions, and suggest that the learning behavior of the reinforcement agent and the ROBL strategy improve its exploration and exploitation capabilities.
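Counts such as "12 out of 13 test functions" can be derived from a table of average fitness values by crediting, for each function, the algorithm(s) with the smallest average. This sketch assumes minimization and a hypothetical dictionary layout; it credits every algorithm that ties for the best average, so counts across algorithms may sum to more than the number of functions.

```python
from collections import Counter


def count_wins(mean_table):
    """mean_table maps function name -> {algorithm: average fitness}."""
    wins = Counter()
    for scores in mean_table.values():
        best = min(scores.values())
        for algorithm, value in scores.items():
            if value == best:  # every tied algorithm gets credit
                wins[algorithm] += 1
    return wins
```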

Furthermore, RLRSA is evaluated at 50, 100, and 500 dimensions, as reported in Tables 6, 7, and 8, respectively. Table 6 shows that RLRSA achieves the minimum fitness values for most problems at 50 dimensions: it ranks first on 9 out of 13 functions. CMA-ES performs better on three functions (F6, F8, and F12). In addition, RSA, RICRSA, MPA, and WOA perform well on three functions.

Tables 7 and 8 report the performance of RLRSA, the basic RSA, and the other methods at 100 and 500 dimensions, respectively. In Table 7, RLRSA achieves the best average fitness on 11 out of 13 functions (about 85%), whereas RSA achieves the best average fitness on only 2 out of 13 test functions. In addition, RICRSA ranks second, with high performance on three test functions.

At the highest dimension (500), RLRSA continues to outperform the other methods, as shown in Table 8. RLRSA performs best on 10 test functions, while MPA comes second with good performance on four test functions.

Finally, Table 9 reports the performance of the algorithms on fixed-dimension multimodal functions. RLRSA surpasses the basic RSA and the other methods on 8 out of 10 benchmarks, while RSA comes second with good performance on 5 test functions. Overall, the reinforcement agent enables RLRSA to search effectively for the global solution in fewer iterations and to find the best solution on most benchmark functions.

6.3 Analysis of the convergence curve of RLRSA versus standard RSA

Figure 4 compares the convergence curves of RLRSA and other methods from the literature. The curves show a noticeable improvement in exploration and exploitation when reinforcement learning is applied. For example, on F1–F4 and F7–F11, RLRSA converges faster than the other methods and also reaches better accuracy. On F5, RLRSA outperforms the other methods during the first 22 iterations, after which RSA matches its convergence rate from iteration 23 onward. RLRSA does not perform well on F6 and F12. In summary, reinforcement learning appears to let the algorithm explore the search space efficiently from the first iteration and successfully find the best position of the agent.
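A convergence curve of this kind typically plots the best-so-far fitness against the iteration number. A minimal sketch, assuming minimization and that the input holds the best fitness found in each iteration (the function name is hypothetical):

```python
import numpy as np


def convergence_curve(fitness_per_iter):
    """Best-so-far fitness after each iteration (running minimum)."""
    return np.minimum.accumulate(np.asarray(fitness_per_iter, dtype=float))
```

Because the running minimum never increases, a curve that drops earlier indicates faster convergence, and a lower final value indicates better accuracy.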

6.4 Real-world application

This section focuses on two specific engineering design problems: pressure vessel design and tension/compression spring problems. To tackle these optimization challenges, this work applies the RLRSA method. We then compare the performance of RLRSA with several optimization algorithms found in the literature. This comparison allows us to assess the effectiveness and efficiency of RLRSA in solving these design problems.

6.4.1 Pressure vessel design problem (PVD)

To further evaluate RLRSA, it is applied to a complex engineering problem, the pressure vessel design (PVD) problem, shown in Fig. 5. The parameter settings for all methods are the same as in the previous experiments. The PVD problem has four design variables: the thickness of the shell (Ts), the thickness of the head (Th), the inner radius (R), and the length of the cylindrical section of the vessel (L). The aim of the design is to minimize the total cost of the material, forming, and welding of the pressure vessel.

Pressure vessel design problem [18]

The mathematical formulation of this problem is:

Consider \(\vec{x} = [x_{1}\; x_{2}\; x_{3}\; x_{4}] = [T_{s}\; T_{h}\; R\; L]\)

Minimize \(f(\vec{x}) = 0.6224 x_{1} x_{3} x_{4} + 1.7781 x_{2} x_{3}^{2} + 3.1661 x_{1}^{2} x_{4} + 19.84 x_{1}^{2} x_{3}\)

Subject to \(g_{1}(\vec{x}) = -x_{1} + 0.0193 x_{3} \le 0,\quad g_{2}(\vec{x}) = -x_{2} + 0.00954 x_{3} \le 0,\quad g_{3}(\vec{x}) = -\pi x_{3}^{2} x_{4} - \tfrac{4}{3}\pi x_{3}^{3} + 1296000 \le 0,\quad g_{4}(\vec{x}) = x_{4} - 240 \le 0\)

Variable ranges \(0 \le x_{1}, x_{2} \le 99,\quad 10 \le x_{3}, x_{4} \le 200\)
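The formulation can be evaluated directly in Python. This sketch uses the standard PVD formulation with x = [Ts, Th, R, L] and handles the constraints with a static quadratic penalty; the penalty method, its weight (1e6), and the function names are assumptions, since the text does not state how RLRSA handles constraints.

```python
import math


def pvd_cost(x):
    """Standard PVD cost: material, forming and welding terms."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)


def pvd_constraints(x):
    """Constraint values g1..g4; a design is feasible when all are <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,
        -th + 0.00954 * r,
        -math.pi * r ** 2 * length - (4.0 / 3.0) * math.pi * r ** 3 + 1296000.0,
        length - 240.0,
    ]


def penalized_pvd(x, penalty=1e6):
    """Cost plus a quadratic penalty on violated constraints (assumed scheme)."""
    violation = sum(max(0.0, g) ** 2 for g in pvd_constraints(x))
    return pvd_cost(x) + penalty * violation
```

For the feasible design x = [1.0, 0.5, 50.0, 100.0], the cost is 3112 + 2222.625 + 316.61 + 992 = 6643.235 and no penalty is added.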

Table 10 shows that RLRSA obtains the best solution, with the smallest cost of approximately 5888.4833, which is 146.275 lower than that of the standard RSA. The main reason for the superiority of RLRSA over RSA is likely that reinforcement learning and ROBL enhance the exploration and exploitation phases and balance them when necessary.

6.4.2 Tension/compression spring design problem

The tension/compression spring design problem, shown in Fig. 6, optimizes the wire diameter (d), the mean coil diameter (D), and the number of coils (N) so as to minimize the weight of the spring. The objective function and constraints of this problem are as follows.

Tension/compression spring design problem [18]

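Consider \(X = [x_{1}\; x_{2}\; x_{3}] = [d\; D\; N]\)

Minimize \(f(X) = (x_{3} + 2)\, x_{2} x_{1}^{2}\)

Subject to \(g_{1}(X) = 1 - \frac{x_{2}^{3} x_{3}}{71785 x_{1}^{4}} \le 0,\quad g_{2}(X) = \frac{4x_{2}^{2} - x_{1}x_{2}}{12566\,(x_{2}x_{1}^{3} - x_{1}^{4})} + \frac{1}{5108 x_{1}^{2}} - 1 \le 0,\quad g_{3}(X) = 1 - \frac{140.45 x_{1}}{x_{2}^{2} x_{3}} \le 0,\quad g_{4}(X) = \frac{x_{1} + x_{2}}{1.5} - 1 \le 0\)

Range of variables \(0.05 \le x_{1} \le 2.00,\quad 0.25 \le x_{2} \le 1.30,\quad 2.00 \le x_{3} \le 15.0\)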
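A Python sketch of this problem, with X = [d, D, N]. The four constraints below are those of the commonly used formulation of this benchmark and are assumed here; the static quadratic penalty, its weight (1e6), and the function names are likewise assumptions rather than the method used in the paper.

```python
def spring_weight(x):
    """Objective: (N + 2) * D * d^2, proportional to the spring weight."""
    d, D, N = x  # wire diameter, mean coil diameter, number of coils
    return (N + 2.0) * D * d ** 2


def spring_constraints(x):
    """Constraint values g1..g4 (assumed standard form); feasible when all are <= 0."""
    d, D, N = x
    g1 = 1.0 - (D ** 3 * N) / (71785.0 * d ** 4)
    g2 = (4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4)) + 1.0 / (5108.0 * d ** 2) - 1.0
    g3 = 1.0 - 140.45 * d / (D ** 2 * N)
    g4 = (d + D) / 1.5 - 1.0
    return [g1, g2, g3, g4]


def penalized_spring(x, penalty=1e6):
    """Weight plus a quadratic penalty on violated constraints (assumed scheme)."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(x))
    return spring_weight(x) + penalty * violation
```

For example, x = [0.05, 0.5, 10.0] gives a weight of 12 x 0.5 x 0.0025 = 0.015 but violates g2, so its penalized value is much larger.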

Table 11 shows the solutions obtained by the different optimization methods. RLRSA achieves the best solution, that is, the minimum value of the objective function (the spring weight).

7 Conclusion

This work presents an enhanced reptile search algorithm (RLRSA) that integrates a Q-learning model and random opposite-based learning (ROBL). The Q-learning model helps individuals learn from past results and switch between the encircling and hunting phases, thereby increasing the convergence speed of the algorithm. In addition, ROBL helps prevent the algorithm from becoming trapped in local optima by increasing the diversity of the population. The experimental results show competitive performance compared with the basic RSA and other state-of-the-art algorithms. Moreover, RLRSA is applied to the pressure vessel design and tension/compression spring design problems, where it also performs competitively against other methods from the literature. One drawback of RLRSA is that its advantage is significant mainly when the search space is very large; on simple functions with only one extreme solution, it does not differ significantly from the standard RSA.

Data availability

All data generated or analyzed during this study are included in this published article.

References

Horst R, Tuy H (2013) Global optimization: deterministic approaches. Springer Science & Business Media

Abualigah L (2021) Group search optimizer: a nature-inspired meta-heuristic optimization algorithm with its results, variants, and applications. Neural Comput Appl 33(7):2949–2972

Abualigah L, Diabat A (2020) A comprehensive survey of the Grasshopper optimization algorithm: results, variants, and applications. Neural Comput Appl 32(19):15533–15556

Luenberger DG, Ye Y (1984) Linear and nonlinear programming, vol 2. Springer

Gardiner CW (1985) Handbook of stochastic methods, vol 3. Springer, Berlin

Abualigah L (2020) Multi-verse optimizer algorithm: a comprehensive survey of its results, variants, and applications. Neural Comput Appl 32(16):12381–12401

Abualigah L, Diabat A, Geem ZW (2020) A comprehensive survey of the harmony search algorithm in clustering applications. Appl Sci 10(11):3827

Talbi E-G (2009) Metaheuristics: from design to implementation, vol 74. John Wiley & Sons

Soler-Dominguez A, Juan AA, Kizys R (2017) A survey on financial applications of metaheuristics. ACM Comput Surv (CSUR) 50(1):1–23

Valadi J, Siarry P (2014) Applications of metaheuristics in process engineering, vol 31. Springer

Abualigah L, Diabat A (2021) Advances in sine cosine algorithm: a comprehensive survey. Artif Intell Rev 54(4):2567–2608

Parpinelli RS, Lopes HS (2011) New inspirations in swarm intelligence: a survey. Int J Bio-Inspired Comput 3(1):1–16

Kosorukoff A (2001) Human based genetic algorithm. In: 2001 IEEE international conference on systems, man and cybernetics. e-Systems and e-Man for Cybernetics in Cyberspace (Cat. No. 01CH37236). IEEE

Biswas A et al (2013) Physics-inspired optimization algorithms: a survey. J Optim 2013

Fonseca CM, Fleming PJ (1995) An overview of evolutionary algorithms in multiobjective optimization. Evol Comput 3(1):1–16

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of ICNN'95, international conference on neural networks. IEEE

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67

Abualigah L et al (2022) Reptile search algorithm (RSA): a nature-inspired meta-heuristic optimizer. Expert Syst Appl 191:116158

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl-Based Syst 89:228–249

Heidari AA et al (2019) Harris hawks optimization: algorithm and applications. Futur Gener Comput Syst 97:849–872

Mirjalili S et al (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Yang X-S (2010) A new metaheuristic bat-inspired algorithm. Nature inspired cooperative strategies for optimization (NICSO 2010). Springer, pp 65–74

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Rao RV, Savsani VJ, Vakharia D (2011) Teaching–learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput Aided Des 43(3):303–315

Shi Y (2011) Brain storm optimization algorithm. International conference in swarm intelligence. Springer

Mousavirad SJ, Ebrahimpour-Komleh H (2017) Human mental search: a new population-based metaheuristic optimization algorithm. Appl Intell 47(3):850–887

Moosavi SHS, Bardsiri VK (2019) Poor and rich optimization algorithm: a new human-based and multi populations algorithm. Eng Appl Artif Intell 86:165–181

Wang L et al (2014) A simple human learning optimization algorithm. Computational intelligence, networked systems and their applications. Springer, pp 56–65

Atashpaz-Gargari E, Lucas C (2007) Imperialist competitive algorithm: an algorithm for optimization inspired by imperialistic competition. In: 2007 IEEE congress on evolutionary computation. IEEE

Liñán-García E, Gallegos-Araiza LM (2012) Simulated annealing with previous solutions applied to DNA sequence alignment. ISRN Artif Intell 2012

Mirjalili S (2016) SCA: a sine cosine algorithm for solving optimization problems. Knowl-Based Syst 96:120–133

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179(13):2232–2248

Abualigah L et al (2021) The arithmetic optimization algorithm. Comput Methods Appl Mech Eng 376:113609

Abedinpourshotorban H et al (2016) Electromagnetic field optimization: a physics-inspired metaheuristic optimization algorithm. Swarm Evol Comput 26:8–22

Javidy B, Hatamlou A, Mirjalili S (2015) Ions motion algorithm for solving optimization problems. Appl Soft Comput 32:72–79

Ekinci S, Hekimoğlu B, Izci D (2021) Opposition based Henry gas solubility optimization as a novel algorithm for PID control of DC motor. Eng Sci Technol Int J 24(2):331–342

Holland JH (1992) Genetic algorithms. Sci Am 267(1):66–73

Storn R, Price K (1997) Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359

Hansen N, Müller SD, Koumoutsakos P (2003) Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol Comput 11(1):1–18

Issa M, Abd Elaziz M (2020) Analyzing COVID-19 virus based on enhanced fragmented biological Local Aligner using improved Ions Motion Optimization algorithm. Appl Soft Comput 106683

Issa M, Hassanien AE (2017) Multiple sequence alignment optimization using meta-Heuristic techniques. Handbook of research on machine learning innovations and trends. IGI Global, pp 409–423

Issa M et al (2018) Pairwise global sequence alignment using Sine-Cosine optimization algorithm. International conference on advanced machine learning technologies and applications. Springer

Ali AF, Hassanien A-E (2016) A survey of Metaheuristics methods for bioinformatics applications. Applications of intelligent optimization in biology and medicine. Springer, pp 23–46

Issa M (2021) Expeditious Covid-19 similarity measure tool based on consolidated SCA algorithm with mutation and opposition operators. Appl Soft Comput 104:107197

Issa M et al (2022) A biological sub-sequences detection using integrated BA-PSO based on infection propagation mechanism: case study COVID-19. Expert Syst Appl 189:116063

Issa M, Helmi A (2021) Two layer hybrid scheme of IMO and PSO for optimization of local aligner: COVID-19 as a case study. Artif Intell COVID-19, pp 363–381

Chen H et al (2020) Parameters identification of photovoltaic cells and modules using diversification-enriched Harris hawks optimization with chaotic drifts. J Clean Prod 244:118778

Yu K et al (2018) Multiple learning backtracking search algorithm for estimating parameters of photovoltaic models. Appl Energy 226:408–422

Abd Elaziz M et al (2023) Optimal parameters extracting of fuel cell based on Gorilla Troops Optimizer. Fuel 332:126162

Almodfer R et al (2022) Improving parameter estimation of fuel cell using Honey Badger optimization algorithm. Front Energy Res 10:875332

Issa M (2022) Parameter tuning of PID controller based on arithmetic optimization algorithm in IOT systems. Integrating Meta-Heuristics and machine learning for real-world optimization problems. Springer, pp 399–417

Issa M (2022) Enhanced arithmetic optimization algorithm for parameter estimation of PID controller. Arab J Sci Eng 1–15

Issa M (2021) Performance optimization of PID controller based on parameters estimation using Meta-Heuristic techniques: a comparative study. Metaheuristics in machine learning: theory and applications. Springer, pp 691–709

Issa M et al (2019) PID controller tuning parameters using Meta-heuristics algorithms: comparative analysis. Machine learning paradigms: theory and application. Springer, pp 413–430

Mehdizadeh SA (2015) Optimization of passive tractor cabin suspension system using ES, PSO and BA. J Agric Technol 11(3):595–607

Issa M, Samn A (2022) Passive vehicle suspension system optimization using Harris Hawk Optimization algorithm. Math Comput Simul 191:328–345

Shao Y et al (2021) Multi-objective neural evolutionary algorithm for combinatorial optimization problems. IEEE Trans Neural Netw Learn Syst

Issa M, Mostafa Y (2022) Gradient-based optimizer for structural optimization problems. Integrating Meta-Heuristics and machine learning for real-world optimization problems. Springer, pp 461–480

Soliman MM, Hassanien AE (2017) 3D watermarking approach using particle swarm optimization algorithm. Handbook of research on machine learning innovations and trends. IGI Global, pp 582–613

Issa M (2018) Digital image watermarking performance improvement using bio-inspired algorithms. Advances in soft computing and machine learning in image processing. Springer, pp 683–698

Mookiah S, Parasuraman K, Kumar Chandar S (2022) Color image segmentation based on improved sine cosine optimization algorithm. Soft Comput 1–11

Giuliani D (2022) Metaheuristic algorithms applied to color image segmentation on HSV space. J Imaging 8(1):6

Lin JC-W et al (2016) Mining high-utility itemsets based on particle swarm optimization. Eng Appl Artif Intell 55:320–330

Lin JC-W et al (2016) A sanitization approach for hiding sensitive itemsets based on particle swarm optimization. Eng Appl Artif Intell 53:1–18

Lin JC-W et al (2019) Hiding sensitive itemsets with multiple objective optimization. Soft Comput 23:12779–12797

Mohamed Issa AH, Ibrahim Ziedan, Ahmed Alzohairy (2017) Maximizing occupancy of GPU for fast scanning biological database using sequence alignment. J Appl Sci Res 13(6)

Elloumi M, Issa MAS, Mokaddem A (2013) Accelerating pairwise alignment algorithms by using graphics processor units. In: Biological knowledge discovery handbook: preprocessing, mining, and postprocessing of biological data, pp 969–980

Issa ME et al (2022) Human activity recognition based on embedded sensor data fusion for the internet of healthcare things. In: Healthcare. MDPI

Almodfer R et al, Improving parameters estimation of fuel cell using Honey Badger optimization algorithm. Front Energy Res 565

Djenouri Y et al (2019) Exploiting GPU parallelism in improving bees swarm optimization for mining big transactional databases. Inf Sci 496:326–342

Wolpert DH, Macready WG (1997) No free lunch theorems for optimization. IEEE Trans Evol Comput 1(1):67–82

El Shinawi A et al (2021) Enhanced adaptive neuro-fuzzy inference system using reptile search algorithm for relating swelling potentiality using index geotechnical properties: a case study at El Sherouk City, Egypt. Mathematics 9(24):3295

Khan RA et al (2022) Reptile search algorithm (RSA)-based selective harmonic elimination technique in packed E-Cell (PEC-9) inverter. Processes 10(8):1615

Chauhan S, Vashishtha G, Kumar A (2022) Approximating parameters of photovoltaic models using an amended reptile search algorithm. J Ambient Intell Humaniz Comput 1–16

Yildiz BS et al (2022) Reptile search algorithm and kriging surrogate model for structural design optimization with natural frequency constraints. Mater Test 64(10):1504–1511

Sunitha D et al (2022) Congestion centric multi‐objective reptile search algorithm‐based clustering and routing in cognitive radio sensor network. Trans Emerg Telecommun Technol e4629

Raja D, Karthikeyan M (2022) Content based image retrieval using reptile search algorithm with deep learning for agricultural crops. In: 2022 7th international conference on communication and electronics systems (ICCES). IEEE

Huang L et al (2022) An improved reptile search algorithm based on lévy flight and interactive crossover strategy to engineering application. Mathematics 10(13):2329

Ekinci S et al (2022) Development of Lévy flight-based reptile search algorithm with local search ability for power systems engineering design problems. Neural Comput Appl 34(22):20263–20283

Ekinci S, Izci D (2022) Enhanced reptile search algorithm with Lévy flight for vehicle cruise control system design. Evol Intell 1–13

Almotairi KH, Abualigah L (2022) Hybrid reptile search algorithm and remora optimization algorithm for optimization tasks and data clustering. Symmetry 14(3):458

Abualigah L, Diabat A (2022) Chaotic binary reptile search algorithm and its feature selection applications. J Ambient Intell Humaniz Comput 1–17

Al-Shourbaji I et al (2022) Boosting ant colony optimization with reptile search algorithm for churn prediction. Mathematics 10(7):1031

Almotairi KH, Abualigah L (2022) Improved reptile search algorithm with novel mean transition mechanism for constrained industrial engineering problems. Neural Comput Appl 1–21

Al-Shourbaji I et al (2022) An efficient parallel reptile search algorithm and snake optimizer approach for feature selection. Mathematics 10(13):2351

Yuan Q et al (2022) A modified reptile search algorithm for numerical optimization problems. Comput Intell Neurosci 2022

Elgamal Z et al (2022) Improved reptile search optimization algorithm using chaotic map and simulated annealing for feature selection in medical field. IEEE Access

Wu D et al (2022) An improved teaching-learning-based optimization algorithm with reinforcement learning strategy for solving optimization problems. Comput Intell Neurosci 2022

Lingam G, Rout RR, Somayajulu DV (2019) Adaptive deep Q-learning model for detecting social bots and influential users in online social networks. Appl Intell 49(11):3947–3964

Liu F, Zeng G (2009) Study of genetic algorithm with reinforcement learning to solve the TSP. Expert Syst Appl 36(3):6995–7001

Xu Y, Pi D (2020) A reinforcement learning-based communication topology in particle swarm optimization. Neural Comput Appl 32(14):10007–10032

Emary E, Zawbaa HM, Grosan C (2017) Experienced gray wolf optimization through reinforcement learning and neural networks. IEEE Trans Neural Netw Learn Syst 29(3):681–694

Wang J, Lei D, Cai J (2022) An adaptive artificial bee colony with reinforcement learning for distributed three-stage assembly scheduling with maintenance. Appl Soft Comput 117:108371

Si T, Miranda PB, Bhattacharya D (2022) Novel enhanced Salp Swarm Algorithms using opposition-based learning schemes for global optimization problems. Expert Syst Appl 207:117961

Too J, Sadiq AS, Mirjalili SM (2022) A conditional opposition-based particle swarm optimisation for feature selection. Connect Sci 34(1):339–361

Izci D et al (2022) A novel modified opposition-based hunger games search algorithm to design fractional order proportional-integral-derivative controller for magnetic ball suspension system. Adv Control Appl Eng Ind Syst 4(1):e96

Abd Elaziz M, Oliva D, Xiong S (2017) An improved opposition-based sine cosine algorithm for global optimization. Expert Syst Appl 90:484–500

Gupta S, Deep K (2019) A hybrid self-adaptive sine cosine algorithm with opposition based learning. Expert Syst Appl 119:210–230

Hussien AG, Amin M (2022) A self-adaptive Harris Hawks optimization algorithm with opposition-based learning and chaotic local search strategy for global optimization and feature selection. Int J Mach Learn Cybern 13(2):309–336

Yang Y et al (2022) An opposition learning and spiral modelling based arithmetic optimization algorithm for global continuous optimization problems. Eng Appl Artif Intell 113:104981

Watkins CJCH, Dayan P (1992) Q-learning. Mach Learn 8(3–4):279–292

Tizhoosh HR (2005) Opposition-based learning: a new scheme for machine intelligence. In: International conference on computational intelligence for modelling, control and automation and international conference on intelligent agents, web technologies and internet commerce (CIMCA-IAWTIC'06). IEEE

Long W et al (2019) A random opposition-based learning grey wolf optimizer. IEEE Access 7:113810–113825

Mirjalili S (2015) The ant lion optimizer. Adv Eng Softw 83:80–98

Hansen N, Ostermeier A (2001) Completely derandomized self-adaptation in evolution strategies. Evol Comput 9(2):159–195

Mirjalili S et al (2017) Salp Swarm Algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Faramarzi A et al (2020) Marine predators algorithm: a nature-inspired metaheuristic. Expert Syst Appl 152:113377

Mirjalili S (2016) Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput Appl 27(4):1053–1073

Faramarzi A et al (2020) Equilibrium optimizer: a novel optimization algorithm. Knowl-Based Syst 191:105190

Eberhart R, Kennedy J (1995) A new optimizer using particle swarm theory. In: MHS'95. Proceedings of the sixth international symposium on micro machine and human science. IEEE

Saremi S, Mirjalili S, Lewis A (2017) Grasshopper optimisation algorithm: theory and application. Adv Eng Softw 105:30–47

Sandgren E (1988) Nonlinear integer and discrete programming in mechanical design. In: International design engineering technical conferences and computers and information in engineering conference. American Society of Mechanical Engineers

Mezura-Montes E, Coello CAC (2008) An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. Int J Gen Syst 37(4):443–473

He Q, Wang L (2007) An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng Appl Artif Intell 20(1):89–99

Mahdavi M, Fesanghary M, Damangir E (2007) An improved harmony search algorithm for solving optimization problems. Appl Math Comput 188(2):1567–1579

Coello CAC (2000) Use of a self-adaptive penalty approach for engineering optimization problems. Comput Ind 41(2):113–127

He Q, Wang L (2007) A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl Math Comput 186(2):1407–1422

Kaveh A, Talatahari S (2010) An improved ant colony optimization for constrained engineering design problems. Eng Comput

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ghetas, M., Issa, M. A novel reinforcement learning-based reptile search algorithm for solving optimization problems. Neural Comput & Applic 36, 533–568 (2024). https://doi.org/10.1007/s00521-023-09023-9

DOI: https://doi.org/10.1007/s00521-023-09023-9