Abstract

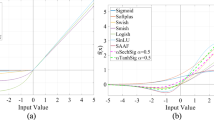

Activation functions are crucial parts of artificial neural networks. From the first perceptron created artificially up to today, many functions are proposed. Some of them are currently in common use, such as Rectified Linear Unit (ReLU) and Exponential Linear Unit (ELU) and other ReLU variants. In this article we propose a novel activation function, called ExtendeD Exponential Linear Unit (DELU). After its introduction and presenting its basic properties, by making various simulations with different datasets and architectures, we show that it may perform better than other activation functions in certain cases. While also inheriting most of the good properties of ReLU and ELU, DELU offers an increase of success in comparison with them by slowing the alignment of neurons in early stages of training process. In experiments, DELU performed better than other activation functions in general, for Fashion MNIST, CIFAR-10 and CIFAR-100 classification tasks with different sized Residual Neural Networks (ResNet). Specifically, DELU managed to reduce the error rate by sufficiently high confidence levels in CIFAR datasets in comparison with ReLU and ELU networks. In addition, DELU is compared in an image segmentation example as well. Also, compatibility of DELU is tested with different initializations, and statistical methods are employed to verify these success rates by using Z-score analysis, which may be considered as a different view of success assessment in neural networks.

Similar content being viewed by others

References

Ding B, Qian H, Zhou J (2018) Activation functions and their characteristics in deep neural networks. Chin Control Decis Conf 2018:1836–1841. https://doi.org/10.1109/CCDC.2018.8407425

Alhassan AM, Zainon WMNW (2021) Brain tumor classification in magnetic resonance image using hard swish-based RELU activation function-convolutional neural network. Neural Comput Appl 33:9075–9087. https://doi.org/10.1007/s00521-020-05671-3

Çatalbaş B (2022) Control and system identification of legged locomotion with recurrent neural networks (Doctoral Dissertation). Retrieved from http://repository.bilkent.edu.tr/handle/11693/90921

Haykin S (1999) Neural networks: a comprehensive foundation. Prentice Hall, New Jersey, NY

Dubey SR, Singh SK, Chaudhuri BB (2022) Activation functions in deep learning: a comprehensive survey and benchmark. Neurocomputing 503:92–108. https://doi.org/10.1016/j.neucom.2022.06.111

Nair V, Hinton GE (2010) Rectified linear units improve restricted boltzmann machines. In: Proceedings of the 27th international conference on machine learning (ICML-10), pp 807–814

Williams A (2017) The art of building neural networks. TheNewStack. https://thenewstack.io/art-building-neural-networks/

Zheng Q, Yang M, Yang J, Zhang Q, Zhang X (2018) Improvement of generalization ability of deep CNN via implicit regularization in two-stage training process. IEEE Access 6:15844–15869. https://doi.org/10.1109/ACCESS.2018.2810849

Li H, Zeng N, Wu P, Clawson K (2022) Cov-Net: a computer aided diagnosis method for recognizing COVID-19 from chest X-ray images via machine vision. Expert Syst with Appl 207:118029. https://doi.org/10.1016/j.eswa.2022.118029

Zhang K, Yang X, Zang J, Li Z (2021) FeLU: a fractional exponential linear unit. In: 2021 33rd Chinese Control and Decision Conference (CCDC), pp 3812–3817. https://doi.org/10.1109/CCDC52312.2021.9601925

Apicella A, Donnarumma F, Isgrò F, Prevete R (2021) A survey on modern trainable activation functions. Neural Netw 138:14–32. https://doi.org/10.1016/j.neunet.2021.01.026

Clevert D-A, Unterthiner T, Hochreiter S (2015) Fast and accurate deep learning by exponential linear units (ELUs). In: The International Conference on Learning Representations (ICLR), pp 1–14. https://doi.org/10.48550/arXiv.1511.07289

Qiumei Z, Dan T, Fenghua W (2019) Improved convolutional neural network based on fast exponentially linear unit activation function. IEEE Access 7:151359–151367. https://doi.org/10.1109/ACCESS.2019.2948112

Adem K (2021) P + FELU: flexible and trainable fast exponential linear unit for deep learning architectures. Neural Comput Appl 34:21729–21740. https://doi.org/10.1007/s00521-022-07625-3

Sakketou F, Ampazis N (2019) On the invariance of the SELU activation function on algorithm and hyperparameter selection in neural network recommenders. In: IFIP International Conference on Artificial Intelligence Applications and Innovations, Springer, Cham, pp 673–685. https://doi.org/10.1007/978-3-030-19823-7_56

Ramachandran P, Zoph B, Le QV (2017) Searching for activation functions. arXiv preprint arXiv:1710.05941v2. https://doi.org/10.48550/arXiv.1710.05941

Zhou Y, Li D, Hou D, Kung SY (2021) Shape autotuning activation function. Expert Syst with Appl 171:114534. https://doi.org/10.1016/j.eswa.2020.114534

Alkhouly AA, Mohammed A, Hefny HA (2021) Improving the performance of deep neural networks using two proposed activation functions. IEEE Access 9:82249–82271. https://doi.org/10.1109/ACCESS.2021.3085855

Li K, Fan C, Li Y, Wu Q, Ming Y (2018) Improving deep neural network with multiple parametric exponential linear units. Neurocomputing 301:11–24. https://doi.org/10.1016/j.neucom.2018.01.084

Github (2018) Code for improving deep neural network with multiple parametric exponential linear units. Github. Retrieved from https://github.com/Coldmooon/Code-for-MPELU

Lu L, Shin Y, Su Y, Karniadakis G (2020) Dying ReLU and initialization: theory and numerical examples. arXiv preprint arXiv:1903.06733v3. https://doi.org/10.48550/arXiv.1903.06733

Billingsley P (1995) Probability and measure, 3rd edn. John Wiley & Sons, New York, NY

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep networks training by reducing internal covariate shift. In: International Conference on Machine Learning, pp 448–456, PMLR. https://doi.org/10.48550/arXiv.1502.03167

Alcaide E (2018) E-swish: adjusting activations to different network depths. arXiv: 1801.07145. https://doi.org/10.48550/arXiv.1801.07145

Krizhevsky A, Hinton G (2009) Learning multiple layers of features from tiny images. Retrieved from www.cs.utoronto.ca/\(^\sim \)kriz/learning-features-2009-TR.pdf

Xiao H, Rasul K, Vollgraf R (2017) Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747. https://doi.org/10.48550/arXiv.1708.07747

Shan S, Willson E, Wang B, Li B, Zheng B, Zhao BY (2019) Gotta catch ’em all: using concealed trapdoor to detect adversarial attacks on neural networks. In: Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security, pp 1–14

Ruiz P (2018) Understanding and visualizing ResNets. Towards Data Science. Retrieved from https://towardsdatascience.com/understanding-and-visualizing-resnets-442284831be8

He K, Zhang X, Ren S, Sun J (2016) Identity mappings in deep residual networks. In: European conference on computer vision, Springer, Cham, pp 630–645. https://doi.org/10.1007/978-3-319-46493-0_38

Keras (n.d.) Trains a ResNet on the CIFAR10 dataset. Keras. Retrieved from https://keras.io/zh/examples/cifar10_resnet/

He K, Zhang X, Ren S, Sun J (2015) Delving deep into rectifiers: surpassing human-level performance on imagenet classification. In: Proceedings of the IEEE international conference on computer vision, pp 1026–1034. https://doi.org/10.1109/ICCV.2015.123

Parkhi O, Vedaldi A, Zisserman A, Jawahar CV (2012) Cats and dogs. In: IEEE Conference on Computer Vision and Pattern Recognition (2th ed.). Retrieved from www.robots.ox.ac.uk/\(^\sim \)vgg/data/pets/. https://doi.org/10.1109/CVPR.2012.6248092

Chollet F (2020) Image segmentation with a U-Net-like architecture. Keras. Retrieved from keras.io/examples/vision/oxford_pets_image_segmentation

He Y, Zhang X, Sun J (2017) Channel pruning for accelerating very deep neural networks. In: Proceedings of the IEEE international conference on computer vision. https://doi.org/10.48550/arXiv.1707.06168

Salakhutdinov R, Larochelle H (2010) Efficient learning of deep boltzmann machines. In: Proceedings of the thirteenth international conference on artificial intelligence and statistics

Papoulis A (1985) Probability, random variables, and stochastic processes, 2nd edn. McGraw-Hill, New York, NY

Epanechnikov VA (1969) Non-parametric estimation of a multivariate probability density. Theory Probab Appl 14(1):153–158

The Math Works Inc. (2021) Kernel Distribution. MathWorks. https://www.mathworks.com/help/stats/kernel-distribution.html

Poor HV (2013) An introduction to signal detection and estimation. Springer Science & Business Media, Berlin

DeVore GR (2017) Computing the Z score and centiles for cross-sectional analysis: a practical approach. J Ultrasound Med 36(3):459–473

Urolagin S, Sharma N, Datta TK (2021) A combined architecture of multivariate LSTM with Mahalanobis and Z-Score transformations for oil price forecasting. Energy 231:120963. https://doi.org/10.1016/j.energy.2021.120963

Adler K, Gaggero G, Maimaitijiang Y (2010) Distinguishability in EIT using a hypothesis-testing model. J Phys: Conf Ser 224(1):12056. https://doi.org/10.1088/1742-6596/224/1/012056

LaMorte WW (2017) Hypothesis testing: upper-, lower, and two tailed tests. Boston University School of Public Health. Retrieved from sphweb.bumc.bu.edu/otlt/mph-modules/bs/bs704_hypothesistest-means-proportions/bs704_hypothesistest-means-proportions3.html

Acknowledgements

This work was supported by the Scientific and Technological Research Council of Türkiye (TÜBİTAK). The authors appreciate the financial support of the TÜBİTAK. We gratefully acknowledge the support of NVIDIA Corporation with the donation of the P6000 Quadro GPU used for this research.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Çatalbaş, B., Morgül, Ö. Deep learning with ExtendeD Exponential Linear Unit (DELU). Neural Comput & Applic 35, 22705–22724 (2023). https://doi.org/10.1007/s00521-023-08932-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-023-08932-z