Abstract

Many classical methods have been used in automatic sleep stage classification but few methods explore deep learning. Meanwhile, most deep learning methods require extensive expertise and suffer from a mass of handcrafted steps which are time-consuming. In this paper, we propose an efficient convolutional neural network, Sle-CNN, for five-sleep-stage classification. We attach each kernel in the first layers with a trainable coefficient to enhance the learning ability and flexibility of the kernel. Then, we make full use of the genetic algorithm’s heuristic search and the advantage of no need for the gradient to search for the sleep stage classification architecture. We verify the convergence of Sle-CNN and compare the performance of traditional convolutional neural networks before and after using the trainable coefficient. Meanwhile, we compare the performance between the Sle-CNN generated through genetic algorithm and the traditional convolutional neural networks. The experiments demonstrate that the convergence of Sle-CNN is faster than the normal convolutional neural networks and the Sle-CNN generated by genetic algorithm outperforms the traditional handcrafted counterparts too. Our research suggests that deep learning has a great potential on electroencephalogram signal processing, especially with the intensification of neural architecture search. Meanwhile, neural architecture search can exert greater power in practical engineering applications. We conduct the Sle-CNN with the Python library, Pytorch, and the code and models will be publicly available.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Sleep [1, 2] plays a significant role in human health and can reflect what diseases the body has such as narcolepsy [3], bruxism [4], obstructive sleep apnea [5]. Therefore, researchers use sleep as an indicator of health [6] and confirm that the sleep stage can help people understand what’s going on in the body. In recent years, many researchers have been focusing on sleep analysis, especially automatic sleep stage classification.

Lots of automatic sleep stage classification methods have been proposed to support the sleep analysis based on the sleep standard of rechtschaffen and kales or the American academy of sleep medicine (AASM). Most of them involve the two main steps including feature extraction and classification. Traditional machine learning methods are the most common analysis ways used by researchers to implement the two steps in the past. For example, Hassan et al. [7] used just one channel of EEG signals [8, 9] which was extracted by tunable-Q wavelet transformation, then exploited bootstrap aggregating as a classifier. Diykh et al. [10] combined structural graph similarity and the k-means to identify six sleep stages. Gunnarsdottir et al. [11] developed likelihood ratio decision tree classifier and extracted features from EEG, electromyogram (EMG), and electrooculogram (EOG) signals. Although the traditional machine learning methods have been widely used, they still have some drawbacks. Most of them have a two-step approach to identifying sleep stages. The first step is feature extraction, and the second step is to train the classifier with the extracted features. The feature extraction and classification tasks are separated, which not only increases the workload of algorithm design, but also has the problem that the quality of feature extraction directly affects the performance of classifier. With the development of deep learning [12, 13], a variety of convolutional neural networks (CNNs) are proposed to realize automatic feature extraction and data classification. A model with excellent performance can be obtained by adjusting the parameters of convolutional neural network based on the existing data and back propagation algorithm [14]. In existing works, due to the sequential relationship of sleep signals, some researchers use recurrent neural networks (RNNs) to classify sleep stages. For example, Supratak et al. [15] employed convolutional neural networks to extract features that remain constant over time and bidirectional-long short-term memory to automatically learn the patterns in the sleep stage transitions from recorded EEG epochs. Michielli et al. [16] proposed a cascaded architecture of recurrent neural network based on long short-term memory (LSTM) blocks to automate scoring of sleep stages using EEG signals derived from a single-channel. Eldele et al. [17] proposed an attention-based architecture to classify sleep stages using single-channel EEG signals, which start with a feature extraction module based on multi-resolution convolutional neural networks and adaptive feature recalibination. In addition, many CNN-based methods have been used to classify one-dimensional biological signals [18], such as Phan et al. [19] proposed a joint classification-and-prediction framework based on convolutional neural networks for automatic sleep staging, and, subsequently, introduces a simple yet efficient CNN architecture to power the framework.

However, in order to find a neural network model that is more suitable for the task of classifying sleep stages, researchers not only need to have professional domain knowledge, but also need to repeatedly adjust the convolutional neural network model’s architecture and hyperparameters. For example, when designing a feedforward neural network, many hyper-parameters need to be taken into consideration, such as the number of layers, the number of neurons in each layer, batch size of data, learning rate, etc. Therefore, designing suitable neural network model by hand is a time-consuming and laborious process. In addition, the channel information of images has a certain correlation, while the sleep signals are independent of each other, which indicates that the information contained in each sleep signal does not change with the changes of other sleep signals.

In this paper, in order to reduce the difficulty of manual design of sleep classification model and improve the accuracy of the model for sleep stage classification task, we propose to use evolutionary neural architecture search (NAS) to automatically construct efficient convolutional neural networks. NAS is a powerful technique that can better combine deep learning theory and practice [20,21,22]. We rely on the search ability of genetic algorithms (GA) to realize the search of efficient network architectures suitable for sleep classification tasks from a predefined unit-based search space. In addition, in order to better obtain important features from the data, we propose to attach a trainable coefficient to the kernel of the first layer to enhance the ability and flexibility of extracting signal features.

We summarize our contributions as follows:

-

1.

We construct a genetic algorithm-based approach to automatically design convolutional neural network architecture for the task of sleep stage classification. A search space composed of basic network modules is constructed and the genetic algorithm is used to automatically search the architecture in the search space. The convolutional network architecture with excellent performance can be found without human intervention.

-

2.

We add trainable coefficients to the first layer of the searched convolutional network architecture to adaptively learn the importance of each sleep signal, so that the proportion of important features in the sleep signal is increased and the noise is reduced, so as to improve the stability and accuracy of the network architecture. The experimental results show that the network architecture with trainable coefficient has better performance than the vanilla network architecture.

The rest of this paper is organized as follows. Section 2 provides the basic background for understanding the proposed method, and Sect. 3 describes the details of the proposed method. The experiments are designed and illustrated in Sect. 4, and the experimental results are presented and analyzed in Sect. 5. Finally, Sect. 6 provides conclusions and future work.

2 Related work

The CNNs and NAS are the main technologies we use to perform the task of automatic sleep stage classification [23, 29]. In this section, we discuss the relevant work from two aspects: the deep representation mechanism of CNNs and the basic framework of neural architecture search.

2.1 Deep representation mechanism of CNNs

In the last decade, CNNs have been developed rapidly and widely used in many fields such as pattern recognition and image recognition [23] because of their powerful capability of representation. CNNs promote feature extraction from the manual design stage to the self-learning stage, so it can better fit the data [24]. The depth of CNN is an important factor. However, the growth of layers increases the number of parameters and causes the device to be overwhelmed. Hence, many researchers improve the convolution operator to reduce the number of parameters without losing performance. Ioffe et al. [25] proposed batch normalization to accelerate the training process of deep network. Ding et al. [26] proposed to fuse various operations including convolution and skip connection. This is a device-friendly method because its fusion reduces a lot of parameters caused by skip connections. Similarly, they proposed a universal building block of convolutional neural network to improve the performance without any inference-time costs [27]. Zhang et al. [28] proposed to use the divide-and-conquer strategy to compact convolution operator which forms a plug-and-play module.

Convolutional neural networks were originally proposed to solve the task of image classification, such as LeNet, AlexNet, VGG and other classical convolutional neural networks [14]. However, a major drawback of classical convolutional neural networks when dealing with classification problems with multi-stage nature (such as the "sleep" case) is that they fail to take into account the time dependence [18]. This is because in the design of traditional convolutional neural networks, all convolution layers and pooling layers are translationally invariant, and such structures cannot directly deal with the time-dependent properties of sequential signals. For sleep sorting tasks, each moment of the sleep signal contains important information about different sleep stages that are time-dependent, such as rapid eye movement sleep, which usually follows deep sleep. Therefore, if only traditional convolutional neural networks are used, these time dependencies cannot be fully taken into account, which may lead to the degradation of classification performance.

2.2 Neural architecture search

There are many hyper-parameters including connection, depth, and size of filters in CNN to fine-tune. NAS [29] automates such manual operations, reduces the reliance on the expertise of the CNN architecture, and focuses on datasets. NAS makes it more convenient to exploit CNNs in practice. Currently, the performance of the network searched by NAS on some tasks has far exceeded the manually designed network on many tasks such as semantic segmentation [30] and image classification [31]. With the development of NAS, the framework of NAS can be divided into three dimensions, including search space, search strategy, and performance estimation strategy.

Search space parameterizes the architecture of CNNs and defines what kind of architectures can be discovered in principle. The simplest search space is chain-structured neural networks which are in line with the original CNN design mode that the connections just exist between adjacent layers. Motivated by handcrafted architectures like [32, 33] proposed multi-branch networks based on cells and blocks, which incorporate skip connection that allows the architectures more complex and accurate.

Search strategy specifies how to explore search space encompassing Bayesian optimization, random search, reinforcement learning, evolutionary methods, and gradient-based methods. Evolutionary computation [34] is the earliest method applied to NAS [35]. Bergstra et al. [36] incorporated Bayesian optimization into NAS in 2013 and achieved state-of-the-art vision architectures.

The performance estimation strategy refers to the evaluation of the networks generated by the search strategy. The simplest operation is to train the network and evaluate it from scratch in a traditional way which is unfortunately computationally expensive. The lower fidelity estimate reduces time cost through training with fewer epochs on the subset of data. Learning curve extrapolation [37] extrapolates the performance of architectures after just a few training epochs. Instead of training from scratch, weight inheritance [38, 39] proposed that architectures inherit their parents’ weights.

3 Proposed methods

In this part, we elaborate on our algorithm, Sle-CNN, and how it is generated. First, we introduce the thinking and the Sle-CNN design in detail. Then we introduce the generation method of Sle-CNN. In order to allow readers to clearly understand Sle-CNN, we provide detailed picture descriptions.

3.1 Sle-CNN

The convolution filter is the core component of the CNN. It is responsible for the task of extracting features. Grad-CAM [40] indicates that the convolution filter has a certain attention mechanism, that is, extracting the features of the recognized object. The more important point is that the convolution filter also plays a role in filtering noise. We can regard the non-recognized object part as a kind of noise. Because of the spatial difference between the noise and the recognized object, the convolution filter can easily filter it out, so it is very robust to such noise. But the noise fused with the recognized object is very difficult to process. For biological signals, it is more difficult to identify. We believe that it is precisely because of the diversification of the functions of the convolution filter that the convolution filter cannot be better focused on extracting features or filtering noise. Therefore, to enable the convolution kernel to play a greater role in extracting features and filtering noise, especially in the denoising of biological signals, we bind each kernel in each filter to a trainable coefficient and this CNN is termed Sle-CNN because of its purpose of sleep stage classification. Different from images, sleep data is polymorphic and consists of multiple signals. Therefore, we believe that these trainable coefficients can further highlight the differences between different signals, thereby improving the performance of the network. The sleep data has ten modalities and Fig. 1 demonstrates how the coefficients work. This gives the convolutional filters a certain degree of flexibility of denoising and feature extraction. Meanwhile it offers autonomous selectivity to adaptively evaluate the importance of each signal, so that the features which have a greater impact on the classification result are better propagated, while the propagation of the less influential features is impaired. It is more flexible when extracting representations in first layers.

3.2 Generating Sle-CNN

In consideration of a large number of hyper-parameters such as the number of layers, the type of operation performed on each layer, and the number of output channels in each layer, the process of exploring the best hyper-parameters suitable for the particular problem requires a lot of professional skills and empirical knowledge. Besides, constant experimentation, test, and troubleshooting are needed too. To mitigate the complexity, we incorporate NAS based on the GA and its framework is shown in Algorithm 1.

First, initialize a population of N individuals, and calculate the fitness value of the individuals in the population (line 1–2). Second, in the T generations, each generation selects two parent individuals from the parent population through the binary tournament selection according to the fitness. Then perform crossover and mutation operations on the two parent individuals to generate offspring individuals and form the offspring population (line 3–9). Evaluate the fitness of offspring individuals (line 10). Finally, a new population is selected from the parent and offspring population according to fitness through environmental selection (line 11–12). When the T generations terminate, the best Sle-CNN will be decoded from the best individual that has the maximal fitness.

To introduce this process more clearly, the flowchart corresponding to the algorithm is shown in Fig. 2. In the following sections, we elucidate its mechanism from three aspects: encoding strategy, fitness evaluation, and offspring generation.

3.2.1 Encoding strategy

To improve the performance of architecture in search space and accelerate the speed of searching, we introduce the prior knowledge of two skip blocks, ResNet block (RB) [32] and DenseNet block (DB) [41]. In addition to these blocks, a pooling block is employed too, which just contains a pooling layer. According to the experience of orthodox hand-designed CNN, we use the filter of (1, 3), and the stride is set to 1. To change the length of the individual more flexibly, we design three units, ResNet unit (RU) [32], DenseNet [41] unit (DU), and Pooling unit (PU), to contain different convolutional components. RU contains ResNet [32] blocks, DU contains DenseNet [41] blocks, and PU contains one pooling block, respectively. Figure 3 shows the details of RB, DB, RU, and DU. Hence, one CNN is built based on the three units. A list is created to denote an individual (architecture), the length of which is defined randomly within a range, then multiple RUs, DUs, and PUs are generated in the list randomly. Therefore, an individual represents the list of units. Note that the number of PUs cannot exceed \(\log _2 N\), where N denotes the length of the input. For example, if the size of the input is (1,100), the number of PUs is 6 at most, otherwise, it leads to logical error. As a result, the parameters of a RU are unit tag, input channel, output channel, and the number of ResNet [32] blocks. The parameters of DU are unit tag, input channel, output channel, the number of DenseNet [41] blocks, and the growth rate k. The parameter of PU is the unit tag and the type of pooling. Figure 4 shows the details of the encoding strategy of the three types of units. The unit represents the type of unit and 0 denotes RU, 1 denotes DU, and 2 denotes PU. The in and out in RU and DU represent number of input channel and number of output channel, respectively. The num in RU represents number of RB and the num in DU represents the number of DU. The k is growth rate. The type in PU represents the type of pooling and 0 denotes max pooling and 1 denotes average pooling.

3.2.2 Fitness evaluation

The fitness of an individual indicates the accuracy on dataset. According to the encoding strategy, we first decode each individual into a CNN, then we train the CNN on training dataset. Finally, we take the accuracy of each individual on the validation dataset as its fitness value. Equation 1 formulates the fitness. \(\text {Acc}(\cdot )\) measures the performance of a CNN denoted by \(\Gamma\) on the validation dataset \(D_\text {v}\) after being trained on the training dataset \(D_\text {t}\).

3.2.3 Offspring generation

First of all, two parent architectures are selected. To produce the offspring architectures with better property than parents, it needs to be ensured that the performance of selected architectures is quite excellent, because the nature of GA is the inheritance of merit from parents, and at the same time, the trap of local optima needs to be avoided. To this end, binary tournament selection is used to achieve this goal. First, it selects two architectures in population, then the architecture with higher fitness is chosen as one parent. The other is selected in the same way.

Crossover process. a Firstly, select one crossover point in each parent architecture. Different from traditional crossover, the selection of crossover points between architectures does not affect each other. b Then, swap the second parts after the two crossover points to generate offspring architectures

Secondly, the crossover is performed on these two parent architectures. Considering the depth of CNN is variable and is one important factor influencing the quality of CNNs, the crossover of traditional GA conducted on individuals with the same length is not suitable for the CNN design. Consequently, we choose one crossover point in each parent architecture randomly as Fig. 5a shows, then the first part of \(parent_1\) connects to the second part of \(parent_2\), and the first part of \(parent_2\) connects to the second part of \(parent_1\) as Fig. 5b shows. A red or purple rectangle in Fig. 5 represents a unit that is DU, RU, or PU. Note that the first unit cannot be selected as crossover point, otherwise, empty solutions will be generated. Finally, the mutation is conducted on the two offspring architectures. Three types including ‘add’, ‘reduce’ and ‘alter’ constitute mutation. ‘add’ means adding an extra unit RU, DU, or PU. Note that the number of PUs cannot exceed the maximum. ‘reduce’ means reducing a unit. ‘alter’ means altering the attribute of a unit, such as the output channel or the number of ResNet [32] blocks or DenseNet [41] blocks.

4 Experiments

In this section, we introduce the datasets and training details. First, we show the datasets extracted from humans. Then we provide the training parameter setting in our experiment.

4.1 Datasets

The used datasets in this paper are extracted from three subjects by a laboratory in a hospital. A total of 2889 samples and the details of the number of each stage are shown in Table 1. AASM defined standard of sleep scoring: awake (W), non-rapid eye movement (NREM), and rapid eye movement (REM). NREM is further divided into three stages: N1, N2, and N3. The face and head of subjects are attached to electrodes for about eight hours to collect various physiological frequency signals. Then generate ten signals by select some electrode values to subtract others. Here we get ten signals which mean ten modalities, F4-M1, C4-M1, O2-M1, F3-M2, C3-M2, O1-M2, E1-M2, E2-M2, Chin1-Chin2, and RIP ECG. Among them, F4-M1, C4-M1, O2-M1, F3-M2, C3-M2 and O1-M2 are EEG signals, which are mainly used to monitor the electrical activity of different brain regions during sleep, so as to evaluate the sleep depth and distribution of sleep stages. E1-M2 and E2-M2 are EOG signals, which are mainly used to monitor the rapid eye movement phase during sleep, so as to evaluate the occurrence time and duration of REM sleep. Chin1-Chin2 is a EMG signal used to monitor jaw muscle activity during sleep to assess sleep quality and jaw relaxation during sleep. RIP ECG is a ECG signal, which is mainly used to monitor changes in respiration and heart rate during sleep in order to evaluate sleep quality and respiratory and heart health. Based on the ten modalities, the rule of sampling is that taking one frame as a sample which is composed of 30 s and one second includes 512 frequency points. This indicates that one sample contains ten modalities and each modality consists of 15360 frequency points. Due to the high serialization of the modalities, we convert each into a one-dimensional vector. This process not only keeps the independence of each modality but also keeps the serialization. Figure 6 illustrates the components of a sample extracted. To fully verify our method, we randomly collect 83% of the samples as the training dataset and 17% as the validation dataset. Six datasets are generated by repeating this process six times.

4.2 Training details

4.2.1 Data processing

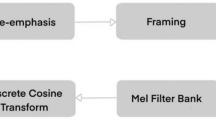

Before verifying Sle-CNN, we explore the use of data using, that is, how to use a long biological signal to achieve the best performance. Table 2 shows the details of the seven data transformations. In \(E_0\), we keep signals in their original state and then process them, which means we concatenate them into one channel and perform a convolution operation with the filter of (1, 3) on the result. In the second way \(E_1\), inspired by processing traditional images, we transform the shape of original signal (10, 15360) into (256, 200, 3). In \(E_2\), given the ten signals, we reshape to (128, 120, 10) which has ten channels like the original signals. Both \(E_1\) and \(E_2\) do not consider the independence between each signal and just regard the entire ten signals as one 2-D sample. In \(E_3\), we transform the shape of each signal into (128, 120) and concatenate them together as (128, 120, 10). Based on \(E_3\), we attach a trainable coefficient to each kernel in the input layer in \(E_4\). In every transformation above, we design each filter as (3, 3) due to their shape. In \(E_5\), we incorporate the 1-D convolution. We first convert the signals into 1-D matrices, (1, 15360, 10). Given that the data size is quite large in the first layers, especially when the signal data is just input (1, 15360, 10), then as the feature size decreases, we also reduce the size of the filters. Therefor, we design the convolution filters as (1, 30), (1, 20), (1, 10), and (1, 5). After the convolution using the filters with the size style (1, X), we rebuild the results into a 2-D style and utilize the filter with the size of (3, 1) as Fig. 7 shows, because this process can extract the features between signals. Based on \(E_5\), we attach a trainable coefficient to each kernel in the input layer in \(E_6\). Figure 8 shows the architecture used in \(E_6\). The training setting on each transformation is the same: the batch size is 64, the epoch is 80, the optimizer is Adam, and the learning rate is 0.0001. We set the batch size to 64 because this architecture is not very complicated (compared with the searched ones), and this value depends on our computing resource.

4.2.2 Searching for Sle-CNN

Different from the architecture of CNN used in \(E_6\), we add the component of RB in addition to DB to improve the networks’ ability to extract the features when using GA to search Sle-CNN. Meanwhile, considering the relatively high complexity of the architecture to be searched, we introduce the pooling operation and cancel the convolution filter (3, 1).

In the case of using the RTX 2080 Ti GPU, we set population size number and the generation number to 17 and 20 respectively. The probability of crossover and mutation are set to 1 and 0.2, respectively, because according to the mechanism of environmental selection in GA (Algorithm 1), probability of crossover 1 can expand search space, which increases the chance of generating good individuals. Mutation can also expand search space, but if the probability of mutation is too large, the parent architectures are easily destroyed, which slows down the search speed, so we set the probability of mutation to 0.2. During the fitness evaluation, we employ the Adam optimizer to train individuals and the parameters of Adam adopt default values, the learning rate is 0.0001, \(beta_1\) is 0.9, and \(beta_2\) is 0.999, because Adam is currently an optimizer with relatively good performance. The batch size is 64 because we need to leave more GPU memory for the architectures of Sle-CNNs. Finally, we set the number of epochs to 79.

4.2.3 Traditional models

We compare our method with three models of the ResNet family [32] and two classical models with attention mechanism, SENet [42] and CBAM [43]. To better extract features of signals, we just modify their filter of (3, 3) with (1, 3). The training setting of each model is that batch size is 64, the epoch is 80, the optimizer is Adam, and the learning rate is 0.0001. To exhibit the performance of our method, we first train and validate the five models with their vanilla architecture on the six datasets, then we train and validate them with the trainable coefficients.

5 Results and discussion

5.1 Results of data processing

Fig. 9 shows the accuracy on the six validation datasets when training the seven transformations (\(E_0\)–\(E_6\)) where the horizontal axis denotes the training steps and the vertical axis denotes the classification accuracy. Each curve represents the accuracy trend of one transformation on one validation dataset. On the right side of each subgraph is the average accuracy of each model after 1500 steps. All experiments converge around 1300 steps. Before convergence, \(E_0\), \(E_1\), \(E_2\), and \(E_3\) start to fit data from accuracy of 0.2, \(E_5\) starts to fit data from accuracy of 0.3, and \(E_4\) and \(E_6\) start to fit the data from an accuracy of 0.4. After convergence, \(E_1\) has the worst performance of around 0.3, and is far from others. \(E_6\) has the highest performance around 0.7. The performance of \(E_5\), \(E_4\), and \(E_0\) is very close, but experiments still show that the performance of \(E_5\) is better than that of \(E_4\) whose performance is better than \(E_0\)’s performance. Although \(E_0\) has not been improved in any way, its performance is not worse than that of \(E_1\), \(E_2\), and \(E_3\).

We can infer from Fig. 9 that \(E_1\) has the worst performance because \(E_1\) completely disrupts the internal correlation in the processing of raw data. \(E_0\) does not change each raw single signal when merges them into one channel. \(E_2\) breaks raw data too, but compared to \(E_1\), the signals are not very disturbed which results in the improvement in accuracy. Differing from the two models above, \(E_3\) first transforms each signal into two-dimensional and then concatenates them along channels, which destroys every signal (one-dimensional) in the original data, but maintains the independence of each signal, so the validation performance is improved. \(E_4\) adds the trainable coefficients on the top of \(E_3\), and filters with coefficients strengthen the feature extraction and denoising, so it is obvious that the performance is better than that of \(E_3\). Besides, we can deduce that breaking the inner correlation is not wise, and this is why \(E_1\), \(E_2\), and \(E_3\) cannot outperform \(E_0\), but the coefficients can make up for this shortcoming very well, which can be inferred from the comparison between \(E_3\) and \(E_4\). In particular, we can see from the comparison between \(E_1\), \(E_2\), and \(E_3\) that the less damage is done to original data, the better performance the CNN gets. Although \(E_5\) does not use the data processing trick, \(E_5\) completely keeps original data, so before the convergence, it can fit the data better than \(E_0\)-\(E_3\). From the comparison between \(E_5\) and \(E_0\)–\(E_3\), we can draw that keeping raw data can perfect the fitting. On basis of \(E_5\), \(E_6\) adds coefficients, and we can see that the fitting ability before convergence surpasses the previous models, except for \(E_4\) and surpasses all models after convergence. Interestingly, \(E_4\) and \(E_6\) are the only transformations that use the trainable coefficients. With the enhancement of this trick, their ability to fit in the early stage is better than other models, and their accuracy after convergence is also quite high among all models. \(E_6\)’s accuracy is the highest, and the accuracy of \(E_4\) is second only to that of \(E_5\). The main reason why the performance of \(E_6\) exceeds that of \(E_4\) is that \(E_6\) keeps the integrity of signals. Meanwhile, \(E_5\) keeps the integrity of signals but does not use the trick and \(E_4\) uses the trick but destroys the integrity of signals, but their accuracy is very close. So we argue that the trainable coefficients can make up for the performance loss caused by signal damage. To sum up, we can conclude that (1) Corrupting the original data structure is inadvisable for signal classification using CNNs. (2) the coefficients can strengthen the ability of fitting, and at the same time make up for the performance loss caused by signals’ damage.

5.2 Result of generation

After 20 generations, a total of 340 individuals (Sle-CNNs) are generated. On account of the characteristic of GA that in every generation, crossover and mutation can generate some individuals that are the same as the previous generations did, in our experiment, there are 59 repeats and in the statistical process. For clarity, they are ignored. As a result, there are 281 individuals that are exhibited below. Figure 10a shows the result of the search process, where the horizontal axis denotes the individuals, and the vertical axis denotes the fitness. As we can see, the fitness values of individuals present two completely different parts, and one is around 0.8 and the other is zero. Meanwhile, fitness values appear most intensively in the first 50 Sle-CNNs. The improvement of fitness values after the \(200\text {th}\) Sle-CNN is more obvious than before it. To elucidate the search process more clearly, we give the tendency of fitness in which individuals with a fitness of zero are abandoned as Fig. 10b illustrates. The blue line represents the tendency and value in the blue line is the mean value of the neighborhood (\(\zeta -35\), \(\zeta +35\)) where \(\zeta\) denotes the original value (the red dots). In the front and the rear, we copy the front values and the rear values to meet the length of the neighborhood. We can infer that with the increase in generation, individuals with good performance are constantly produced. The fitness values are between 0.75 and 0.90 and the main fitness is between 0.80 and 0.90. The fitness value is rising in the first 100 Sle-CNNs, the plain period appears between \(100\text {th}\) and \(150\text {th}\) Sle-CNNs, and the fitness value is rising again after \(150\text {th}\) Sle-CNN. Figure 11 shows the perfect architecture searched by NAS whose fitness (accuracy) is 89.6%. This Sle-CNN involves two RUs, three DUs, and eleven PUs.

In the initial stage, most individuals have a fitness value of zero, because, in the early stages of evolution, the architectures are too complex to be supported by computing power, which makes performance evaluation impossible. However, with the evolution of the population and the crossover and mutation between different individuals, too complex architectures are gradually eliminated, so in the subsequent evolution process, most individuals are adapted to computing power. This confirms the powerful search ability of GA. In addition to the elimination of overly complex individuals, the performance is constantly evolving. Under the mechanism of environmental selection, crossover allows good architecture components (hyper-parameters) to be passed on to the next generations. Therefore, in the early stages of evolution, the fitness values of individuals are rising. However, in the mid-term, the fitness values have a short period of saturation, and the population performance falls into the plain stage. This is because the populations enter the stage of the local optimal solution. This is mainly due to the effect of crossover because crossover just recombines components in different architectures and this recombination can find a locally optimal solution in a short time which indicates that crossover’s local search capability is very strong. However, new components can be generated through mutation, which makes new architectures different from others, thus the population can jump out of the local optimal, which indicates that the global search ability of mutation is very strong. This is also the reason why population performance rebounds again in the later stage of evolution. In this architecture generated by GA, the proportion of PUs is the largest and the PUs are used to reduce the data dimension, which, therefore, indicates that there is a lot of noise in the dataset and a denoising work is required, and this work is done by the PUs. When designing a model artificially, it is difficult to consider superimposing multiple PUs because most researchers think that continuous PUs reduce information and are detrimental to model performance. This also confirms that search process not only saves a lot of time overhead caused by artificial design, but also generates architectures that humans cannot consider because of the huge search space.

5.3 Result of comparison with traditional models

As shown in Table 3, among the vanilla CNNs, the performance of ResNet-50 [32] is almost better than ResNet-18 [32] on those datasets, but the difference between the two is not very large. The performance of ResNet-101 [32] is the worst on those datasets except Dataset 0. The performance of SENet [42] and CBAM [43] surpasses the ResNet family [32]. SENet [42] is the best among the 5 models on those datasets except Dataset 0. After using the trainable coefficients, models are almost improved. Sle-ResNet-50 [32] still almost exceeds Sle-ResNet-18 [32] and Sle-ResNet-101 [32] on datasets and Sle-ResNet-101 [32] remains the worst. SENet [42] and CBAM [43] still are superior to the ResNet family [32]. This indicates that the proposed trainable parameters can improve the performance of the vanilla architecture to some extent. It is worth noting that the performance of the Sle-CNN generated through GA is almost the best.

We can infer from Table 3 that on those datasets, the performance of ResNet-50 [32] in the ResNet [32] family is almost the best, and ResNet-101 [32] is the worst. This shows that the architecture of ResNet-18 [32] is too simple to fit the data well. ResNet-50 [32] has twice as many parameters as ResNet-18 [32], so it can extract more information and fit data better. The amount of parameters of Reset-101 is twice that of ResNet-50 [32], but the worst effect is obtained. This is because the architecture of ResNet-101 [32] is too complex, and the performance degrades during the training. This indicates that for our datasets, ResNet-50’s architecture complexity is the most suitable, and increasing the complexity on this basis may result in a decrease in performance. SENet [42] and CBAM [43] achieve better performance among the five traditional models. To some extent, they are affected by the attention mechanism and capture the meaningful information in signals. This phenomenon also shows that making the model more focused on the important part of the signal can improve the performance of the model. Clearly, as shown in Table 3, the architectures searched by GA outperform the compared traditional architectures, but the gap in their performance is not very large. Interestingly, unlike the handcrafted architecture, a total of 281 architectures were incrementally generated by the genetic algorithm. In the process of evolution, excellent parent individuals are retained by environmental selection, and various offspring individuals are generated by genetic operators. The process is similar to designing a network architecture by hand, tweaking the current optimal model in the hope of getting a better one. However, the neural architecture search method based on genetic algorithm is limited by the predefined search space and hardware resources. As a result, it is not possible to explore all network architectures in the search space, and it is not possible to search for network architectures outside the predefined search space. As shown at the bottom of Fig. 10a, the architectures searched by genetic algorithms have problems with model parameters so large that their performance needs to be verified on more powerful computing devices. In addition, the design of the search space also affects the performance of the final searched architecture. In this paper, we use the unit-based search space, which inherits the prior knowledge of human beings to some extent and reduces the scale of the search space, but also reduces the flexibility of the search space, making the network structure in the search space relatively fixed.

6 Conclusions and future work

In this paper, a neural architecture search method based on genetic algorithm is developed to automate the construction of network architecture for sleep stage classification. By adding trainable coefficients to the first layer of the searched network architecture, an efficient convolutional neural network, Sle-CNN, for sleep stage classification is obtained. A unit-based search space is constructed to retain human prior knowledge, and genetic algorithm is used to explore the excellent network architecture in the search space. The experimental results show that the performance of the network architecture with additional trainable coefficients is better than that of the vanilla one. This method compensates the performance loss caused by signal damage by trainable coefficients and improves the accuracy of traditional sleep classification model. In addition, compared with the traditional network architecture designed by hand, the network architecture searched by NAS method based on genetic algorithm can achieve higher accuracy of sleep stage classification. The proposed method can realize the automatic construction of sleep stage classification task model and improve the performance of the model depending on trainable coefficients. However, automating the construction of network architectures through neural architecture search methods based on evolutionary algorithms shifts the pressure of manual design onto computing devices. This makes the method need enough hardware resources to handle the actual signal classification task. In future work, we will design more efficient algorithms from the perspective of accelerating performance evaluation and reducing the pressure of computing equipment.

Data availability

All data generated or analyzed during this study are included in this published article.

Abbreviations

- AASM:

-

American academy of sleep medicine

- EEG:

-

Electroencephalogram

- EMG:

-

Electromyogram

- EOG:

-

Electrooculogram

- NAS:

-

Neural architecture search

- GA:

-

Genetic algorithm

- CNN:

-

Convolutional neural network

- RB:

-

ResNet block

- DB:

-

DenseNet block

- RU:

-

ResNet unit

- DU:

-

DenseNet unit

- PU:

-

Pooling unit

- W:

-

Awake

- N1:

-

Non-rapid eye movement (stage 1)

- N2:

-

Non-rapid eye movement (stage 2)

- N3:

-

Non-rapid eye movement (stage 3)

- REM:

-

Rapid eye movement

References

Phan H, Andreotti F, Cooray N, Chen OY, De Vos M (2019) SeqSleepNet: end-to-end hierarchical recurrent neural network for sequence-tosequence automatic sleep staging. IEEE Trans Neural Syst Rehabil Eng 27(3):400–410

Dong H, Supratak A, Pan W, Wu C, Matthews PM, Guo Y (2018) Mixed neural network approach for temporal sleep stage classification. IEEE Trans Neural Syst Rehabil Eng 26(2):324–333

Barateau L, Dauvilliers Y (2021) Narcolepsy, idiopathic hypersomnia, and dysautonomia. In: Autonomic nervous system and sleep: order and disorder, pp 187–198

Heyat MBB, Lai D, Akhtar F, Hayat MAB, Azad S (2020) Bruxism detection using single-channel C4-A1 on human sleep S2 stage recording. In: Intelligent data analysis: from data gathering to data comprehension, pp 347–367

Gottlieb DJ, Punjabi NM (2020) Diagnosis and management of obstructive sleep apnea: a review. JAMA 323(14):1389–1400

Garstang J, Cohen M, Mitchell EA, Sidebotham P (2021) Classification of sleep-related sudden unexpected death in infancy: a national survey. Acta Paediatr 110(3):869–874

Hassan AR, Subasi A (2017) A decision support system for automated identification of sleep stages from single-channel EEG signals. Knowl Based Syst 128:115–124

Babiloni F, Cincotti F, Lazzarini L, Millan J, Mourino J, Varsta M, Heikkonen J, Bianchi L, Marciani MG (2000) Linear classification of low-resolution EEG patterns produced by imagined hand movements. IEEE Trans Rehabil Eng 8(2):186–188

Snyder KL, Kline JE, Huang HJ, Ferris DP (2015) Independent component analysis of gait-related movement artifact recorded using EEG electrodes during treadmill walking. Front Hum Neurosci 9

Diykh M, Li Y, Wen P (2016) EEG sleep stages classification based on time domain features and structural graph similarity. IEEE Trans Neural Syst Rehabil Eng 24(11):1159–1168

Gunnarsdottir KM, Gamaldo CE, Salas RME, Ewen JB, Allen RP, Sarma SV (2018) A novel sleep stage scoring system: combining expert-based rules with a decision tree classifier. In: 2018 40th annual international conference of the IEEE engineering in medicine and biology society (EMBC), pp 3240–3243

Hossain MS, Amin SU, Alsulaiman M, Muhammad G (2019) Applying deep learning for epilepsy seizure detection and brain mapping visualization. ACM Trans Multimed Comput Commun Appl 15(1s):1–17

Ou C, Karray F (2020) Deep learning-based driving maneuver prediction system. IEEE Trans Veh Technol 69(2):1328–1340

Li Z, Liu F, Yang W, Peng S, Zhou J (2022) A survey of convolutional neural networks: analysis, applications, and prospects. IEEE Trans Neural Netw Learn Syst 33(12):6999–7019

Supratak A, Dong H, Wu C, Guo Y (2017) DeepSleepNet: a model for automatic sleep stage scoring based on raw single-channel EEG. IEEE Trans Neural Syst Rehabil Eng 25(11):1998–2008

Michielli N, Acharya UR, Molinari F (2019) Cascaded LSTM recurrent neural network for automated sleep stage classification using single-channel EEG signals. Comput Biol Med 106:71–81

Eldele E et al (2021) An attention-based deep learning approach for sleep stage classification with single-channel EEG. IEEE Trans Neural Syst Rehabil Eng 29:809–818

Parekh N, Dave B, Shah R, Srivastava K (2021) Automatic sleep stage scoring on raw single-channel EEG : a comparative analysis of CNN architectures. In: International conference on electrical, computer and communication technologies (ICECCT), pp 1–8

Phan H, Andreotti F, Cooray N, Chén OY, De Vos M (2019) Joint classification and prediction CNN framework for automatic sleep stage classification. IEEE Trans Biomed Eng 66(5):1285–1296

Gu Y-C, Wang L-J, Liu Y, Yang Y, Wu Y-H, Lu S-P, Cheng M-M (2021) DOTS: decoupling operation and topology in differentiable architecture search. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 12311–12320

Gu X, Cao Z, Jolfaei A, Xu P, Wu D, Jung T-P, Lin C-T (2021) EEG-based brain-computer interfaces (BCIs): a survey of recent studies on signal sensing technologies and computational intelligence approaches and their applications. IEEE/ACM Trans Comput Biol Bioinform 18(5):1645–1666

He H, Wu D (2020) Different set domain adaptation for brain-computer interfaces: a label alignment approach. IEEE Trans Neural Syst Rehabil Eng 28(5):1091–1108

Zhang X, Wang L, Su Y (2021) Visual place recognition: a survey from deep learning perspective. Pattern Recognit 113:107760

Lecun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc of the IEEE 86(11):2278–2324

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift. In: Proceedings of the 32nd international conference on machine learning, vol 37, pp 448–456

Ding X, Zhang X, Ma N, Han J, Ding G, Sun J (2021) RepVGG: making VGG-style ConvNets great again. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 13733–13742

Ding X, Zhang X, Han J, Ding G (2021) Diverse branch block: building a convolution as an inception-like unit. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 10886–10895

Zhang C, Xu Y, Shen Y (2021) CompConv: a compact convolution module for efficient feature learning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR) Workshops, pp 3012–3021

Wan A, Dai X, Zhang P, He Z, Tian Y, Xie S, Wu B, Yu M, Xu T, Chen K, Vajda P, Gonzalez JE (2020) FBNetV2: differentiable neural architecture search for spatial and channel dimensions. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 12965–12974

Minaee S, Boykov Y, Porikli F, Plaza A, Kehtarnavaz N, Terzopoulos D (2022) Image segmentation using deep learning: a survey. IEEE Trans Pattern Anal Mach Intell 44(7):3523–3542

Jia S, Jiang S, Lin Z, Li N, Xu M, Yu S (2021) A survey: deep learning for hyperspectral image classification with few labeled samples. Neurocomputing 448:179–204

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 771–778

Zoph B, Vasudevan V, Shlens J, Le QV (2018) Learning transferable architectures for scalable image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 8697–8710

Tan KC, Feng L, Jiang M (2021) Evolutionary transfer optimization—a new frontier in evolutionary computation research. IEEE Comput Intell Mag 16(1):22–33

Angeline PJ, Saunders GM, Pollack JB (1994) An evolutionary algorithm that constructs recurrent neural networks. IEEE Trans Neural Netw 5(1):54–65

Bergstra J, Yamins D, Cox D (2013) Making a science of model search: hyperparameter optimization in hundreds of dimensions for vision architectures. In: Proceedings of the 30th international conference on machine learning, vol 28, pp 115–123

Bordelon B, Canatar A, Pehlevan C (2020) Spectrum dependent learning curves in kernel regression and wide neural networks. In: Proceedings of the 37th international conference on machine learning, vol 119, pp 1024–1034

Lu Z, Deb K, Goodman E, Banzhaf W, Boddeti VN (2020) NSGANetV2: evolutionary multi-objective surrogate-assisted neural architecture search. In: Computer Vision — ECCV 2020, pp 35–51

Guo Z, Zhang X, Mu H, Heng W, Liu Z, Wei Y, Sun J (2020) Single path one-shot neural architecture search with uniform sampling. In: Computer Vision - ECCV 2020, pp 544–560

Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D (2017) Grad-CAM: visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE international conference on computer vision (ICCV), pp 618–626

Huang G, Liu Z, van der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 4700–4708

Hu J, Shen L, Sun G (2018) Squeeze-and-excitation networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 7132–7141

Woo S, Park J, Lee J-Y, Kweon IS (2018) CBAM: convolutional block attention module. In: Proceedings of the European conference on computer vision (ECCV), pp 3–19

Acknowledgements

This work was partially supported by the National Natural Science Foundation of China (61876089, 61403206, 61876185, 61902281), the Opening Project of Jiangsu Key Laboratory of Data Science and Smart Software (No. 2019DS302), the Natural Science Foundation of Jiangsu Province (BK20141005), the Natural Science Foundation of the Jiangsu Higher Education Institutions of China (14KJB520025), the Postgraduate Research & Practice Innovation Program of Jiangsu Province, the Science and technology program of Ministry of Housing and Urban-Rural Development (2019-K-141). (Corresponding author: Yu Xue).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

In the present work, we have not used any material from previously published. So we have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, Z., Xue, Y., Slowik, A. et al. Sle-CNN: a novel convolutional neural network for sleep stage classification. Neural Comput & Applic 35, 17201–17216 (2023). https://doi.org/10.1007/s00521-023-08598-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-023-08598-7