Abstract

The pressing need for pretraining algorithms has been diminished by numerous advances in terms of regularization, architectures, and optimizers. Despite this trend, we re-visit the classic idea of unsupervised autoencoder pretraining and propose a modified variant that relies on a full reverse pass trained in conjunction with a given training task. This yields networks that are as-invertible-as-possible and share mutual information across all constrained layers. We additionally establish links between singular value decomposition and pretraining and show how it can be leveraged for gaining insights about the learned structures. Most importantly, we demonstrate that our approach yields an improved performance for a wide variety of relevant learning and transfer tasks ranging from fully connected networks over residual neural networks to generative adversarial networks. Our results demonstrate that unsupervised pretraining has not lost its practical relevance in today’s deep learning environment.


1 Introduction

While approaches such as greedy layer-wise autoencoder pretraining [4, 18, 72, 78] paved the way for many fundamental concepts of today’s methodologies in deep learning, the pressing need for pretraining neural networks has been diminished in recent years. An inherent problem is the lack of a global view: layer-wise pretraining is limited to adjusting individual layers one at a time. Thus, bottom layers that are optimized first cannot be adjusted to correct errors in higher layers [11, 87]. In addition, numerous advancements in regularization [28, 43, 66, 76], network architectures [30, 63, 71], and improved optimization algorithms [44, 52, 62] have decreased the demand for layer-wise pretraining. Despite these advances, training deep neural networks that generalize well to a wide range of previously unseen tasks remains a fundamental challenge [20, 40, 55, 56] (Fig. 1).

Fig. 1 Our pretraining (denoted as \(\text {RR}\)) yields improvements for numerous applications: (a) For difficult shape classification tasks, it outperforms existing approaches (\(\text {Std}_{\text {TS}}\), \(\text {Ort}_{\text {TS}}\), \(\text {Pre}_{\text {TS}}\)): the \(\text {RR}_{\text {TS}}\) model classifies the airplane shape with significantly higher confidence. (b) Our approach establishes mutual information between input and output distributions. (c) For CIFAR 10 classification with a ResNet 110, \(\text {RR}_{\text {C10}}\) yields substantial practical improvements over the state-of-the-art. (d) Learned weather forecasting likewise benefits from our pretraining, with \(\text {RR}\) yielding \(13.7\%\) improvements in terms of latitude-weighted RMSE for the ERA dataset [31]. Pressure is shown for 2019-08-09, 22:00 UTC, together with the Mean Absolute Error (MAE) for the \(\text {Std}\) and \(\text {RR}\) models

In this paper, we develop an algorithm that reformulates autoencoder pretraining in a global way to arrive at a method that efficiently extracts general, dominant features from datasets. These features in turn improve performance for new tasks. Our approach is also inspired by techniques for orthogonalization [3, 38, 50, 57]. Hence, we propose a modified variant that relies on a full reverse pass trained in conjunction with a given training task. A key insight is that there is no need for “greediness,” i.e., layer-wise decompositions of the network structure, and it is additionally beneficial to take into account a specific problem domain at the time of pretraining. We establish links between singular value decomposition (SVD) and pretraining, and show how our approach yields an embedding of problem-aware dominant features in the weight matrices. An SVD can then be leveraged to conveniently gain insights about learned structures. Unlike orthogonalization techniques, we focus on embedding the dominant features of a dataset into the weights of a network. This is achieved via a reverse pass network. This reverse pass is generic, simple to construct, and directly relates to model performance, instead of, e.g., constraining the orthogonality of weights. Most importantly, we demonstrate that the proposed pretraining yields an improved performance for a variety of learning and transfer tasks, while incurring only a minor extra computational cost from the reverse pass.

The structure of our networks is influenced by invertible network architectures that have received significant attention in recent years [24, 34, 36, 85]. However, these approaches rely heavily on specific network architectures. Instead of aiming for a bijective mapping that reproduces inputs, we strive for learning a general representation by constraining the network to represent an as-reversible-as-possible process for all intermediate layer activations. Thus, even for cases where a classifier can, e.g., rely on color for inference of an object type, the model is encouraged to learn a representation that can recover the input. Hence, not only the color of the input should be retrieved, but also, e.g., its shape, so that more dominant features of the input dataset are embedded into the networks. In contrast to most structures for invertible networks, our approach does not impose architectural restrictions. We demonstrate the benefits of our pretraining for a variety of architectures, from fully connected layers to convolutional neural networks (CNNs) [46], over networks with batch normalization or dropout regularization, to generative adversarial network (GAN) architectures [25].

Below, we will first give an overview of our formulation and its connection to singular values, before evaluating our model in the context of transfer learning. For a regular, i.e., a non-transfer task, the goal usually is to train a network that gives optimal performance for one specific goal. During a regular training run, the network naturally exploits any observed correlations between input and output distribution. In the transfer setting, an inherent difficulty is that typically no knowledge about the specifics of the new data and task domains is available when training the source model. Hence, it is common practice to target broad and difficult tasks hoping that this will result in features that are applicable in new domains [14, 26, 82]. Motivated by autoencoder pretraining, we instead leverage a pretraining approach that takes into account the data distribution of the inputs and drives the network to extract dominant features from the datasets, which differs from regular training for optimal performance of one specific goal. We demonstrate that our approach boosts the model accuracy for original and new tasks for a wide range of applications, from image classification to data-driven weather forecasting.

2 Related work

Greedy layer-wise pretraining was first proposed by Bengio et al. [4], and influenced a large number of follow-up works, providing a crucial method for feature extraction and enabling stable training runs of deeper networks. A detailed evaluation was performed by Erhan et al. [18], also highlighting cases where it can be detrimental. These problems were later detailed in other works [1]. Principal component analysis (PCA) [29, 77] is a popular approach for dimensionality reduction and feature extraction, and variants of it were proposed to, e.g., handle nonlinear relationships between variables [33, 51], separate interpretable components [5], and improve robustness in the presence of outliers [80]. However, PCA is computationally intensive in both memory and run time for larger datasets. Clustering is another popular alternative [6, 22, 65, 84, 89]. As these methods rely on data similarities, they yield a high complexity when the dataset size increases [7]. Sharing similarities with our approach, Rasmus et al. [58] combined supervised and unsupervised learning objectives, but focused on denoising autoencoders and a layer-wise approach without weight sharing.

Unsupervised approaches for representation learning [23, 37, 42, 48, 81], especially contrastive learning, such as SimCLR [8], MoCo-v2 [10], ProtoNCE [49], and PaCo [13], similarly aim for learning generic features from a given dataset, but typically necessitate sophisticated training algorithms. We demonstrate the importance of leveraging state-of-the-art methods for training deep networks, i.e., without decomposing or modifying the network structure. This not only improves performance, but also very significantly simplifies the adoption of the pretraining pass in new application settings.

Extending the classic viewpoint of unsupervised autoencoder pretraining, regularization techniques have also been commonly developed to improve the properties of neural networks [45, 47]. Several prior methods employed “hard orthogonal constraints” to improve weight orthogonality via SVD at training time [35, 38, 57]. Bansal et al. [3] additionally investigated efficient formulations of the orthogonality constraints. Orthogonal convolutional neural networks (OCNN) [75] reformulate the orthogonality constraints so that they can be computed efficiently for convolutional layers. In practice, these constraints are difficult to satisfy, and correspondingly only weakly imposed. In addition, all of these methods focus on improving performance for a known, given task. This means the training process only extracts features that the network considers useful for improving the performance of the current task, not necessarily improving generalization or transfer performance [70]. While our approach shares similarities with SVD-based constraints, it can be realized with a very efficient \(L^2\)-based formulation, and takes the full input distribution into account.

Recovering all input information from hidden representations of a network is generally very difficult [15, 53, 54], due to the loss of information throughout the layer transformations. In this context, [69] proposed the information bottleneck principle, which states that for an optimal representation, information unrelated to the current task is omitted. This highlights the common specialization of conventional training approaches.

Reversed network architectures were proposed in previous work [2, 24, 36, 39], but mainly focus on how to make a network fully invertible via augmenting the network with special structures. As a consequence, the path from input to output is different from the reverse path that translates output to input. Besides, the augmented structures of these approaches can be challenging to apply to general network architectures. In contrast, our approach fully preserves an existing architecture for the backward path, and does not require any operations that were not part of the source network. As such, it can easily be applied in new settings, e.g., adversarial training [25]. While methods using reverse connections were previously proposed [67, 85], these modules primarily focus on transferring information between layers for a given task, and on autoencoder structures for domain adaptation, respectively.

3 Method

With state-of-the-art deep learning methods [27, 88], there is no need for breaking down the training process into single layers. Hence, we consider approaches that target whole networks, and employ orthogonalization regularizers as a starting point [35]. Orthogonality constraints were shown to yield improved training performance in various settings [3], and for an n-layer network, they can be formulated as:

\[ \mathcal {L}_{\text {ort}} = \sum _{m=1}^{n} \left\| M_{m}^{T} M_{m} - I \right\| _F^2, \tag{1} \]

i.e., enforcing the transpose of the weight matrix \(M_m\in \mathbb {R}^{s_{m}^{\text {out}}\times s_{m}^{\text {in}}}\) for all layers m to yield its inverse when being multiplied with the original matrix. I denotes the identity matrix with \(I=(\mathbf {e}_{m}^{1},\ldots ,\mathbf {e}_{m}^{s_{m}^{\text {in}}})\), \(\mathbf {e}_{m}^{j}\) denoting the \(j\)-th column unit vector. Theoretically, \(\mathcal {L}_{\text {ort}} = \mathbf{0}\) cannot be perfectly fulfilled because of the information imbalance between inputs and outputs in most deep learning cases [69]. We will first analyze the influence of the loss function \(\mathcal {L}_{\text {ort}}\) assuming that it can be fulfilled, before applying the analysis to our full pretraining method.
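As a minimal sketch of the orthogonality penalty described above, the following NumPy snippet sums \(\Vert M_m^T M_m - I\Vert^2\) over a list of weight matrices. The squared Frobenius norm, the function name `orthogonality_loss`, and the random test matrices are assumptions made for illustration only. The second matrix illustrates the imbalance mentioned above: a layer with fewer outputs than inputs has \(rank(M_m^T M_m) < s_m^{\text{in}}\), so the penalty is bounded away from zero.

```python
import numpy as np

def orthogonality_loss(weights):
    """Sum over layers of ||M^T M - I||_F^2 (squared Frobenius norm is an assumption)."""
    total = 0.0
    for M in weights:                      # M has shape (s_out, s_in)
        s_in = M.shape[1]
        gram = M.T @ M                     # (s_in, s_in)
        total += float(np.sum((gram - np.eye(s_in)) ** 2))
    return total

rng = np.random.default_rng(0)

# Tall matrix with orthonormal columns (from a reduced QR): penalty is numerically zero.
Q, _ = np.linalg.qr(rng.standard_normal((6, 4)))
loss_orthonormal = orthogonality_loss([Q])

# Wide matrix (s_out=3 < s_in=5): M^T M has rank <= 3, so at least two
# eigenvalues are 0 and the penalty is at least 2.
W = rng.standard_normal((3, 5))
loss_wide = orthogonality_loss([W])

print(loss_orthonormal, loss_wide)
```

The lower bound for the wide case follows because the squared Frobenius norm of \(M^T M - I\) equals the sum of \((\lambda_j - 1)^2\) over the eigenvalues \(\lambda_j\) of \(M^T M\).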

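Minimizing Eq. (1), i.e., enforcing \(M_{m}^{T} M_{m} - I=\mathbf{0}\), is mathematically equivalent to:

\[ M_{m}^{T} M_{m} \mathbf {e}_{m}^{j} - \mathbf {e}_{m}^{j} = \mathbf{0}, \quad j=1,2,\ldots ,s_{m}^{\text {in}}, \tag{2} \]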

with \(rank(M_{m}^{T}M_{m})=s_{m}^{\text {in}}\), and \(\mathbf {e}_{m}^{j}\) as eigenvectors of \(M_{m}^{T}M_{m}\) with eigenvalues of 1. This formulation highlights that Eq. (2) does not depend on the training data, and only targets the content of \(M_{m}\). Instead, we will design a constraint that jointly considers data and the trainable weights, allowing us to learn the dominant features of the training dataset directly. We naturally would like to recover all the features of the dataset with a learning task, but finite network capacity makes this infeasible in practice. Therefore, we aim for extracting the features that contribute the most in order to achieve a minimum loss value for our designed constraint. As a result, the features that appear the most, i.e., dominant features, will be extracted. In this section, we will introduce our constraint and analyze how it guides the weights to learn dominant features from the dataset. Then, we will illustrate how we insert our constraint into training with a reversed pass network.

Inspired by classical unsupervised pretraining, we reformulate the orthogonality constraint in a data-driven manner to take into account the set of inputs \(\mathcal {D}_{m}\) for the current layer (either activations from a previous layer or the training data \(\mathcal {D}_{1}\)), and instead minimize

\[ \mathcal {L}_{\text {RR}} = \sum _{m=1}^{n} \sum _{i=1}^{t} \left\| M_{m}^{T} M_{m} \mathbf {d}^{i}_{m} - \mathbf {d}^{i}_{m} \right\| _2^2, \tag{3} \]

where \(\mathbf {d}^{i}_{m} \in \mathcal {D}_{m} \subset \mathbb {R}^{s_{m}^{\text {in}}}\). Due to its reversible nature, we will denote our approach with an RR subscript in the following. In contrast to classical autoencoder pretraining, we are minimizing this loss jointly for all layers of a network, and while orthogonality only focuses on \(M_m\), our formulation allows for minimizing the loss by extracting the dominant features of the input data.

Let q denote the number of linearly independent entries in \(\mathcal {D}_{m}\), i.e., its dimension, and t the size of the training data, i.e., \(|\mathcal {D}_{m}|=t\), usually with \(q<t\). For every single datum \(\mathbf {d}^{i}_{m}, i=1,2,\ldots ,t\), Eq. (3) results in

\[ M_{m}^{T} M_{m} \mathbf {d}^{i}_{m} - \mathbf {d}^{i}_{m} = \mathbf{0}, \tag{4} \]

and hence \(\mathbf {d}^{i}_{m}\) are eigenvectors of \(M_{m}^{T}M_{m}\) with corresponding eigenvalues being 1. Thus, instead of the generic constraint \(M_{m}^{T} M_{m}=I\) that is completely agnostic to the data at hand, the proposed formulation of Eq. (4) is aware of the training data, which improves the generality of the learned representation, as we will demonstrate in detail below.
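To illustrate the difference between the data-agnostic and the data-aware constraint, the following NumPy sketch builds a weight matrix whose rows span the subspace of a synthetic dataset. The helper name `rr_layer_loss`, the sizes, and the synthetic data are hypothetical and chosen only for illustration. For this matrix, every datum is an eigenvector of \(M^T M\) with eigenvalue 1, so the data-aware residual vanishes, even though \(M^T M = I\) is far from satisfied.

```python
import numpy as np

def rr_layer_loss(M, data):
    """Sum_i ||M^T M d_i - d_i||_2^2, with the data points d_i stored as columns."""
    residual = M.T @ (M @ data) - data
    return float(np.sum(residual ** 2))

rng = np.random.default_rng(0)
s_in, q, t = 8, 3, 50                      # input size, data dimension, number of samples

# Orthonormal basis of a q-dimensional subspace of R^{s_in}.
basis, _ = np.linalg.qr(rng.standard_normal((s_in, q)))
# t samples that all lie inside that subspace (q < t, as in the text).
data = basis @ rng.standard_normal((q, t))

# Weight matrix of shape (q, s_in) whose rows span the data subspace.
M = basis.T

loss_data = rr_layer_loss(M, data)                                # data-aware residual
ort_residual = float(np.sum((M.T @ M - np.eye(s_in)) ** 2))       # data-agnostic residual

print(loss_data, ort_residual)
```

Here \(M^T M\) is the orthogonal projector onto the data subspace, so the data-agnostic residual equals \(s_{\text{in}} - q = 5\), while the data-aware residual is zero up to floating-point error.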

The result of applying layer m of a network represents the features extracted by this layer via its weight matrix \(M_m\). The singular vectors of the SVD of \(M_m\) can be regarded as input filters, and we can thus analyze the result of \(M_m\) by focusing on its singular vectors. We employ SVD to identify what features are extracted by the parameters in \(M_m\). As, by construction, \(rank(M_{m})=r\leqslant \min (s_{m}^{\text {in}},s_{m}^{\text {out}})\), the SVD of \(M_{m}\) yields:

\[ M_{m} = U_{m} \Sigma _{m} V_{m}^{T}, \tag{5} \]

with left and right singular vectors in \(U_{m}\) and \(V_{m}\), respectively, and \(\Sigma _{m}\) having square roots of the r eigenvalues of \(M_{m}^{T} M_{m}\) on its diagonal. \(\mathbf {u}_{m}^{k}\) and \(\mathbf {v}_{m}^{k} (k=1,\ldots ,r)\) are the eigenvectors of \(M_{m}M_{m}^{T}\) and \(M_{m}^{T}M_{m}\), respectively [73]. Here, especially the right singular vectors in \(V_{m}^{T}\) are important, as they determine which structures of the input are processed by the transformation \(M_{m}\). The original orthogonality constraint with Eq. (2) yields r unit vectors \(\mathbf {e}_{m}^{j}\) as the eigenvectors of \(M_{m}^{T}M_{m}\). Hence, the influence of Eq. (2) on \(V_{m}\) is completely independent of training data and learning objectives.
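The relation between the SVD of a weight matrix and the eigenvectors of \(M^T M\) can be checked numerically. The following is a small sketch using `numpy.linalg.svd` on a random matrix; the sizes and variable names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
M = rng.standard_normal((4, 6))            # s_out = 4, s_in = 6

# Reduced SVD: U is (4, 4), S holds the singular values, Vt is (4, 6).
U, S, Vt = np.linalg.svd(M, full_matrices=False)
V = Vt.T                                   # columns are the right singular vectors

# M is recovered from its factors.
recon_error = np.abs(U @ np.diag(S) @ Vt - M).max()

# Each right singular vector v^k satisfies (M^T M) v^k = S[k]^2 v^k,
# i.e., it is an eigenvector of M^T M with eigenvalue S[k]^2.
gram = M.T @ M
eig_residual = np.abs(gram @ V - V * S**2).max()

print(recon_error, eig_residual)
```

The right singular vectors in `V` live in the input space of the layer, which is why they can be inspected as input filters.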

Next, we show that \(\mathcal {L}_{\text {RR}}\) facilitates learning dominant features from a given dataset. For this, we consider an arbitrary basis for spanning the space of inputs \(\mathcal {D}_{m}\) for layer m. Let \(\mathcal {B}_{m}:\left\langle \mathbf {w}_{m}^{1},\ldots ,\mathbf {w}_{m}^{q}\right\rangle\) denote a set of q orthonormal basis vectors obtained via a Gram–Schmidt process, with \(t\!\geqslant \!q\!\geqslant \!r\), and \(D_{m}\) denoting the matrix of the vectors in \(\mathcal {B}_{m}\). As we show in more detail in the Appendix, our constraint from Eq. (4) requires eigenvectors of \(M_m^{T}M_m\) to be \(\mathbf {w}_{m}^{i}\), with \(V_{m}\) containing r orthogonal vectors \((\mathbf {v}_{m}^{1},\mathbf {v}_{m}^{2},\ldots ,\mathbf {v}_{m}^{r})\) from \(\mathcal {D}_{m}\) and \((s_{m}^{\text {in}}-r)\) vectors from the null space of \(M_{m}\).

We are especially interested in how \(M_{m}\) changes w.r.t. the input in terms of \(D_{m}\), i.e., we express \(\mathcal {L}_{\text {RR}}\) in terms of \(D_{m}\). By construction, each input \(\mathbf {d}^{i}_{m}\) can be represented as a linear combination via a vector of coefficients \(\mathbf {c}_{m}^{i}\) that multiplies \(D_{m}\) so that \(\mathbf {d}^{i}_{m}\!=\!D_{m}\mathbf {c}_{m}^{i}\). Since \(M_{m} \mathbf {d}_{m}=U_{m} \Sigma _{m} V_{m}^{T}\mathbf {d}_{m}\), the loss \(\mathcal {L}_{\text {RR}}\) of layer m can be rewritten as

\[ \mathcal {L}_{\text {RR}_{m}} = \left\| M_{m}^{T} M_{m} D_{m}\mathbf {c}_{m} - D_{m}\mathbf {c}_{m} \right\| _2^2 = \left\| V_{m} \Sigma _{m}^{T} \Sigma _{m} V_{m}^{T} D_{m}\mathbf {c}_{m} - D_{m}\mathbf {c}_{m} \right\| _2^2, \tag{6} \]

where we can assume that the coefficient vector \(\mathbf {c}_{m}\) is accumulated over the training dataset size t via \(\mathbf {c}_{m}=\sum _{i=1}^{t}\mathbf {c}_{m}^{i}\), since eventually every single datum in \(\mathcal {D}_{m}\) will contribute to \(\mathcal {L}_{\text {RR}_{m}}\). The central component of Eq. (6) is \(V_{m}^{T}D_{m}\). For a successful minimization, \(V_{m}\) needs to retain those \(\mathbf {w}_{m}^{i}\) with the largest \(\mathbf {c}_{m}\) coefficients. As \(V_{m}\) is typically severely limited in terms of its representational capabilities by the number of adjustable weights in a network, it needs to focus on the most important eigenvectors in terms of \(\mathbf {c}_{m}\) in order to establish a small distance to \(D_{m}\mathbf {c}_{m}\). Thus, features that appear most in the input data with a corresponding factor in \(\mathbf {c}_{m}\) will more strongly contribute to minimizing \(\mathcal {L}_{\text {RR}_m}\). Above, \(D_{m}\) is only used implicitly to analyze different approaches, and we do not specify any explicit requirements for \(D_{m}\). Since a fixed dataset determines the corresponding \(D_m\), different orthogonal decompositions via Gram–Schmidt lead to different orthonormal bases. However, these different orthonormal bases can be aligned via rotation, and all span the same vector space. Thus, regardless of the particular orthonormal basis that is used, our method always focuses on extracting dominant features that appear most frequently in the dataset. This means the components of \(D_m\) which contribute most to minimizing the loss will be embedded in the neural network. More in-depth discussions are provided in Appendix A.3.
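The preference for dominant features under limited capacity can be illustrated with a small numerical sketch. It makes several simplifying assumptions that go beyond the text: the retained singular values are set to 1, so that \(M^T M = V V^T\) is a rank-r projector; "dominance" of a feature is measured by the accumulated squared coefficient over the dataset; and the data are synthetic. All names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
s_in, t, r = 10, 500, 2

# Three orthonormal features w^1, w^2, w^3 spanning the data.
W, _ = np.linalg.qr(rng.standard_normal((s_in, 3)))
# Coefficients: features 1 and 2 are strong, feature 3 is weak.
coeffs = rng.standard_normal((3, t)) * np.array([[3.0], [2.0], [0.3]])
data = W @ coeffs                          # columns are the inputs d^i

def projection_loss(V, data):
    """Sum_i ||V V^T d_i - d_i||^2 for a matrix V with orthonormal columns."""
    return float(np.sum((V @ (V.T @ data) - data) ** 2))

loss_dominant = projection_loss(W[:, :2], data)   # retain the two strong features
loss_weak = projection_loss(W[:, 1:], data)       # retain features 2 and 3 instead

# Best rank-r choice: leading left singular vectors of the data matrix
# (Eckart-Young theorem).
U, _, _ = np.linalg.svd(data, full_matrices=False)
loss_best = projection_loss(U[:, :r], data)

print(loss_dominant, loss_weak, loss_best)
```

With only r = 2 directions available, retaining the strong features leaves a much smaller residual than retaining a weak one, and the optimum is attained by the leading singular directions of the data.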

Comparing our constraint from Eq. (3) with the orthogonal constraint in Eq. (1), we can see that our formulation is actually stricter. As a consequence, our method can retain the advantages of orthogonal constraints while simultaneously embedding dominant features into the weight matrices.

To summarize, \(V_{m}\) is driven toward containing r orthogonal vectors \(\mathbf{w}_{m}^{i}\) that represent the most frequent features of the input data, i.e., the dominant features. Additionally, due to the column vectors of \(V_{m}\) being mutually orthogonal, \(M_{m}\) is encouraged to extract different features from the input. For the sake of being distinct and representative of the dataset, these features have the potential to be useful for new inference tasks. The feature vectors embedded in \(M_m\) can be extracted from the network weights in practical settings, as we will demonstrate below.

3.1 Realization in neural networks

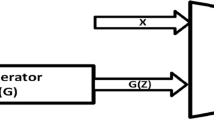

Calculating \(M_{m}^{T} M_{m} \mathbf {d}^{i}_{m}\) in Eq. (3) directly is usually very expensive due to the dimensionality of \(M_{m}\). Instead, we reuse \(M_{m} \mathbf {d}^{i}_{m}\) in the forward pass network and build an extra reverse pass network to calculate \(M_{m}^{T} M_{m} \mathbf {d}^{i}_{m}\) by reusing parameters from the forward pass network. In the following, we will explain how to constrain the intermediate results of the network to efficiently realize Eq. (3) when training.

Regular training typically starts with a chosen network structure and trains the model weights for a given task via a suitable loss function. Our approach fully retains this setup and adds a second pass that reverses the initial structure while reusing all weights and biases. For instance, for a typical fully connected layer in the forward pass with \(\mathbf {d}_{m+1} = M_{m} \mathbf {d}_{m} + \mathbf {b}_{m}\), the reverse pass operation is given by \(\mathbf {d}^{'}_{m} = M^{T}_{m} (\mathbf {d}_{m+1}-\mathbf {b}_{m})\), where \(\mathbf {d}^{'}_{m}\) denotes the reconstructed input.
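For a single fully connected layer, the weight sharing between the two passes can be sketched in a few lines of NumPy. The function names `forward_fc` and `reverse_fc` and the random sizes are illustrative assumptions; activation functions and batch normalization are omitted here.

```python
import numpy as np

def forward_fc(M, b, d):
    """Forward pass of a fully connected layer: d_{m+1} = M d_m + b."""
    return M @ d + b

def reverse_fc(M, b, d_next):
    """Reverse pass reusing the same weights and bias: d'_m = M^T (d_{m+1} - b)."""
    return M.T @ (d_next - b)

rng = np.random.default_rng(0)
M = rng.standard_normal((3, 5))            # s_out = 3, s_in = 5
b = rng.standard_normal(3)
d = rng.standard_normal(5)

d_rec = reverse_fc(M, b, forward_fc(M, b, d))

# The reconstruction equals M^T M d without ever forming the (5, 5) matrix M^T M.
same_as_gram = np.allclose(d_rec, M.T @ M @ d)
print(same_as_gram)
```

The reverse pass only needs a matrix-vector product with \(M^T\), which is the reason the reconstruction can be computed without explicitly constructing \(M^T M\).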

Fig. 2 Left: An overview of the regular forward pass (blue) and the corresponding reverse pass (yellow). The input of layer m is denoted by \(\mathbf {d}_{m}\). Right: Illustration of how parameters are reused for a convolutional layer in the reverse pass. The activation function and batch normalization of layer m are denoted by \(f_m\) and \(BN_m\), respectively. The shared kernel and bias are represented by \(M_m\) and \(\mathbf {b}_{m}\), respectively

Our goal with the reverse pass is to transpose all operations of the forward pass to obtain identical intermediate activations between the layers with matching dimensionality. We can then constrain the intermediate results of each layer of the forward pass to match the results of the backward pass, as illustrated in Fig. 2. While the construction of the reverse pass is straightforward for all standard operations, i.e., fully connected layers, convolutions, pooling, etc., slight adjustments are necessary for nonlinear activation functions (AFs) and batch normalization (BN). It is crucial for our formulation that \(\mathbf {d}_{m}\) and \(\mathbf {d}^{'}_{m}\) contain the same latent space content in terms of range and dimensionality, such that they can be compared in the loss. Hence, we use the BN parameters and the activation of layer \(m-1\) from the forward pass, i.e., \(f_{m-1}\) and \(BN_{m-1}\), for layer m in the reverse pass.

Unlike greedy layer-wise autoencoder pretraining, which trains each layer separately and only constrains \(\mathbf {d}_{1}\) and \(\mathbf {d}^{'}_{1}\), we jointly train all layers and constrain all intermediate results. Due to the symmetric structure of the two passes, we can use a simple \(L^2\) difference to drive the network toward aligning the results:

\[ \mathcal {L}_{\text {RR}} = \sum _{m=1}^{n} \lambda _{m} \left\| \mathbf {d}_{m} - \mathbf {d}^{'}_{m} \right\| _2^2. \tag{7} \]

Here, \(\mathbf {d}_{m}\) denotes the input of layer m in the forward pass and \(\mathbf {d}^{'}_{m}\) the output of layer m for the reverse pass. \(\lambda _{m}\) denotes a scaling factor for the loss of layer m, which, however, is typically constant in our tests across all layers. Note that with our notation, \(\mathbf {d}_{1}\) and \(\mathbf {d}_{1}^{'}\) refer to the input data, and the reconstructed input, respectively.

Next, we show how this setup realizes the regularization from Eq. (3). For clarity, we use a fully connected layer with bias. In a neural network with n hidden layers, the forward process for a layer m is given by \(\mathbf {d}_{m+1}=M_{m} \mathbf {d}_{m}+\mathbf {b}_{m}\), with \(\mathbf {d}_{1}\) and \(\mathbf {d}_{n+1}\) denoting input and output, respectively. Networks can be divided according to whether a full reverse pass from the output to the input can be built. Correspondingly, we distinguish two variants in our implementation: full network pretraining and localized pretraining.

3.1.1 Full network pretraining

For networks where a unique path from output to input exists, we build a reverse pass network with transposed operations starting with the final output where \(\mathbf {d}_{n+1}=\mathbf {d}^{'}_{n+1}\), and the intermediate results \(\mathbf {d}^{'}_{m+1}\):

\[ \mathbf {d}^{'}_{m} = M_{m}^{T} \left( \mathbf {d}^{'}_{m+1} - \mathbf {b}_{m} \right) , \tag{8} \]

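where the reverse pass activation \(\mathbf {d}^{'}_{m}\) depends on \(\mathbf {d}^{'}_{m+1}\). This formulation yields a full reverse pass from output to input, which we use for most training runs below. Here, we analyze the influence of Eq. (7) during training by assuming \(\mathcal {L}_{\text {RR}}=0\) during the minimization. We then obtain activated intermediate content during the reverse pass that reconstructs the values computed in the forward pass, i.e., \(\mathbf {d}^{'}_{m+1}=\mathbf {d}_{m+1}\) holds. In this case

\[ \mathbf {d}^{'}_{m} = M_{m}^{T} \left( \mathbf {d}_{m+1} - \mathbf {b}_{m} \right) = M_{m}^{T} M_{m} \mathbf {d}_{m}, \qquad \mathcal {L}_{\text {RR}} = \sum _{m=1}^{n} \lambda _{m} \left\| \mathbf {d}_{m} - M_{m}^{T} M_{m} \mathbf {d}_{m} \right\| _2^2, \tag{9} \]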

which means that Eq. (7) is consistent with Eq. (3).
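The full reverse pass and the resulting loss can be sketched for a small stack of linear layers as follows. This is a simplified illustration under stated assumptions: purely linear fully connected layers without activation functions or batch normalization, a constant scaling factor `lam`, and hypothetical function names. With orthogonal weight matrices the reverse pass reproduces all forward activations, so the loss vanishes; for random weights it does not.

```python
import numpy as np

def forward_pass(weights, biases, d1):
    """Return [d_1, ..., d_{n+1}] with d_{m+1} = M_m d_m + b_m."""
    acts = [d1]
    for M, b in zip(weights, biases):
        acts.append(M @ acts[-1] + b)
    return acts

def full_reverse_pass(weights, biases, d_out):
    """Return [d'_1, ..., d'_{n+1}] with d'_{n+1} = d_{n+1} and
    d'_m = M_m^T (d'_{m+1} - b_m), chained from output to input."""
    recon = [d_out]
    for M, b in zip(reversed(weights), reversed(biases)):
        recon.append(M.T @ (recon[-1] - b))
    return recon[::-1]

def rr_loss(acts, recon, lam=1.0):
    """Sum over m = 1..n of lam * ||d_m - d'_m||^2 (the output pair is identical)."""
    return sum(lam * float(np.sum((d - dr) ** 2))
               for d, dr in zip(acts[:-1], recon[:-1]))

def evaluate(weights, biases, d1):
    acts = forward_pass(weights, biases, d1)
    return rr_loss(acts, full_reverse_pass(weights, biases, acts[-1]))

rng = np.random.default_rng(0)
n, width = 3, 5
biases = [rng.standard_normal(width) for _ in range(n)]
d1 = rng.standard_normal(width)

orthogonal_w = [np.linalg.qr(rng.standard_normal((width, width)))[0] for _ in range(n)]
random_w = [rng.standard_normal((width, width)) for _ in range(n)]

loss_orthogonal = evaluate(orthogonal_w, biases, d1)
loss_random = evaluate(random_w, biases, d1)
print(loss_orthogonal, loss_random)
```

Because each reconstructed activation is computed from the reconstruction of the next layer, errors in later layers propagate to earlier ones, which couples the loss terms of all layers.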

3.1.2 Localized pretraining

For architectures that have a reverse path that is not unique, e.g., in the presence of additive residual connections, we cannot uniquely determine the b, c in \(a=b+c\) given only a. In such cases, we use a local formulation, and \(\mathbf {d}_{m+1}\) is used as input of the reverse path of layer m directly. In this case Eq. (8) can be written as:

\[ \mathbf {d}^{'}_{m} = M_{m}^{T} \left( \mathbf {d}_{m+1} - \mathbf {b}_{m} \right) = M_{m}^{T} M_{m} \mathbf {d}_{m}, \tag{10} \]

which effectively employs \(\mathbf {d}_{m+1}\) for jointly constraining all intermediate activations in the reverse pass. Moreover, it is consistent with Eq. (3).
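The localized variant can be sketched analogously: each layer is reversed on its own, starting from the forward activation \(\mathbf{d}_{m+1}\) instead of the reconstruction coming from the next layer. As before, this is a simplified sketch with linear fully connected layers; the function names and layer sizes are illustrative assumptions.

```python
import numpy as np

def forward_pass(weights, biases, d1):
    """Return [d_1, ..., d_{n+1}] with d_{m+1} = M_m d_m + b_m."""
    acts = [d1]
    for M, b in zip(weights, biases):
        acts.append(M @ acts[-1] + b)
    return acts

def local_reverse_pass(weights, biases, acts):
    """Return [d'_1, ..., d'_n] with d'_m = M_m^T (d_{m+1} - b_m),
    using the forward activation d_{m+1} of each layer directly."""
    return [M.T @ (acts[m + 1] - b)
            for m, (M, b) in enumerate(zip(weights, biases))]

rng = np.random.default_rng(0)
sizes = [6, 4, 3]                          # layer widths: 6 -> 4 -> 3
weights = [rng.standard_normal((o, i)) for i, o in zip(sizes[:-1], sizes[1:])]
biases = [rng.standard_normal(o) for o in sizes[1:]]

acts = forward_pass(weights, biases, rng.standard_normal(sizes[0]))
recon = local_reverse_pass(weights, biases, acts)

# Each local reconstruction equals M_m^T M_m d_m, independently of other layers.
matches = [np.allclose(r, M.T @ M @ d) for r, M, d in zip(recon, weights, acts)]
print(matches)
```

Since no reconstruction is passed between layers, this variant does not require a unique path from output to input.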

In summary, Eq. (7) will drive the network toward a state that is as-invertible-as-possible for the given input dataset. Comparing the full network pretraining and localized pretraining, the full network pretraining establishes a stronger relationship among the loss terms of different layers, and allows earlier layers to decrease the accumulated loss of later layers. Localized pretraining, on the other hand, is even valid for cases where the reverse path from output to input is not unique.

Up to now, the discussion focused on simplified neural networks with convolutional operations, which are crucial for feature extraction, but without AFs or extensions such as BN, which are applied to increase model nonlinearity. While we leave a more detailed theoretical analysis of these extensions for future work, we apply these nonlinear extensions for all of our tests in Sects. 4 and 5. Thus, our experiments demonstrate that our method works in conjunction with BN and AFs. They consistently show that the inherent properties of our pretraining remain valid: even in the nonlinear setting our approach successfully extracts dominant structures and yields improved generalization.

In the Appendix, we give details on how to ensure that the latent space content for forward and reverse pass is aligned such that differences can be minimized, and we give practical examples of full and localized pretraining architectures.

To summarize, we realize the loss formulation of Eq. (7) to minimize \(\sum _{m=1}^{n}\left\| (M_{m}^{T} M_{m} - I) \mathbf {d}_{m}\right\| _2^2\) without explicitly having to construct \(M_{m}^{T} M_{m}\). Following the notation above, we will refer to networks trained with the added reverse structure and the additional loss terms as RR variants. We consider two variants for the reverse pass: a local pretraining Eq. (10) using the datum \(\mathbf {d}_{m+1}\) of a given layer, and a full version via Eq. (8) which uses \(\mathbf {d}^{'}_{m+1}\) incoming from the next layer during the reverse pass. It is worth pointing out that our constraint is only used during the pretraining stage, and pretrained models are used as a starting point for the fine-tuning stage, where the search space for parameters is the same as in standard training, i.e., training is not constrained by our approach.

3.2 Embedding singular values

In the following, we evaluate networks trained with different methodologies. We distinguish our pretraining approach \(\text {RR}\) (in green), regular autoencoder pretraining \(\text {Pre}\) (in gray), and orthogonality constraints \(\text {Ort}\) (in blue). In addition, \(\text {Std}\) denotes a regular training run (also shown in the graphs below), i.e., models trained without autoencoder pretraining, orthogonality regularization or our proposed method. Besides, a subscript will denote the task variant the model was trained for, such as \(\text {Std}_{\text {T}}\) for task T. While we typically use all layers of a network in the constraints, a reduced variant that we compare to below only applies the constraint for the input data, i.e., \(m=1\). A network trained with this variant, denoted by \(\text {RR}_\text {A}^{1}\), is effectively trained to only reconstruct the input. It contains no constraints for the inner activations and layers of the network. For the \(\text {Ort}\) models, we use the Spectral Restricted Isometry Property algorithm [3].

We verify that the column vectors of \(V_{m}\) of models from RR training contain the dominant features of the input with the help of a classification test, employing a single fully connected layer, i.e., \(\mathbf {d}_{2}=M_{1} \mathbf {d}_{1}\), with BN and activation. To quantify the similarity between these vectors and the input, we compute the Learned Perceptual Image Patch Similarity (LPIPS) [86] between \(\mathbf {v}_{m}^{i}\) and the training data (lower values being better).

Fig. 3 Column vectors of \(V_{m}\) for different trained models \(\text {Std}\), \(\text {Ort}\), \(\text {Pre}\) and \(\text {RR}\) for peaks. Input features are clearly embedded in the weights of \(\text {RR}\), as confirmed by the LPIPS scores

We employ a training dataset constructed from two dominant classes (a peak in the top-left and bottom-right quadrant, respectively), augmented with noise in the form of random scribbles, as shown in Fig. 3. Based on the analysis above, we expect the RR training to extract the two dominant peaks during training. The LPIPS measurements confirm our SVD argumentation above, with average scores of \(0.217 \pm 0.022\) for \(\text {RR}\), \(0.319 \pm 0.114\) for \(\text {Pre}\), \(0.495 \pm 0.006\) for \(\text {Ort}\), and \(0.500 \pm 0.002\) for \(\text {Std}\), i.e., the \(\text {RR}\) model fares significantly better than the others. At the same time, the peaks are clearly visible for RR models, while the other models fail to extract structures that resemble the input. Thus, by training with the full network and the original training objective, our pretraining yields structures that are interpretable and can be inspected by humans.

The results above experimentally confirm our formulation of the RR loss and its ability to extract dominant and generalizing structures from the training data. In addition, they give the first indication that this still holds when nonlinear components such as AFs are present. Next, we will focus on quantified metrics and turn to measurements in terms of mutual information to illustrate the behavior of our pretraining for deeper networks.

4 Evaluation in terms of mutual information

Mutual information (MI) measures the dependence of two random variables, i.e., higher MI means that there is more shared information between the two variables. As our approach hinges on the introduction of the reverse pass, we will show that it succeeds in terms of establishing MI between the input and the constrained intermediates inside a network. More formally, the MI I(X; Y) of random variables X and Y measures how different the joint distribution of X and Y is w.r.t. the product of their marginal distributions, i.e., the Kullback–Leibler divergence \(I(X;Y) = D_{KL}[P_{(X,Y)}||P_X P_Y]\). [69] proposed the MI plane to analyze trained models, which shows the MI between the input X and the activations of a layer \(\mathcal {D}_{m}\), i.e., \(I(X;\mathcal {D}_{m})\), together with the MI of layer \(\mathcal {D}_{m}\) with the output Y, i.e., \(I(\mathcal {D}_{m};Y)\). These two quantities indicate how much information about the input and output distributions is retained at each layer, and we use them to show to which extent our pretraining succeeds at incorporating information about the inputs throughout training.
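For discrete random variables, the definition of MI as a Kullback–Leibler divergence can be evaluated directly from a joint probability table. The following sketch is a generic illustration of this definition and not the estimator used in the experiments; the function name `mutual_information` and the use of base-2 logarithms (bits) are assumptions.

```python
import numpy as np

def mutual_information(joint):
    """I(X;Y) = KL(P_(X,Y) || P_X P_Y) in bits, for a joint table with
    rows indexing X and columns indexing Y."""
    joint = np.asarray(joint, dtype=float)
    joint = joint / joint.sum()                    # normalize to a distribution
    px = joint.sum(axis=1, keepdims=True)          # marginal of X, shape (|X|, 1)
    py = joint.sum(axis=0, keepdims=True)          # marginal of Y, shape (1, |Y|)
    product = px @ py                              # P_X P_Y, same shape as joint
    mask = joint > 0                               # 0 * log(0) is treated as 0
    return float(np.sum(joint[mask] * np.log2(joint[mask] / product[mask])))

# Independent fair bits share no information.
mi_independent = mutual_information([[0.25, 0.25], [0.25, 0.25]])
# Two identical fair bits share exactly one bit.
mi_identical = mutual_information([[0.5, 0.0], [0.0, 0.5]])

print(mi_independent, mi_identical)
```

The two limiting cases bracket the values that appear on the axes of an MI plane for binary variables: zero for independent variables and the full entropy when one variable determines the other.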

MI planes for different models: a Visual overview of the contents. b Plane for task A. Points on each line correspond to layers of one type of model. All points of \(\text {RR}^{}_{\text {A}}\) are located in the center of the graph, while \(\text {Std}_{\text {A}}\) and \(\text {Ort}_{\text {A}}\) exhibit large \(I(\mathcal {D}_{m};Y)\), i.e., specialize on the output. \(\text {Pre}_{\text {A}}\) strongly focuses on reconstructing the input with high \(I(X; \mathcal {D}_{m})\) for early layers. c, d After fine-tuning for A/B. The last layer \(\mathcal {D}_{7}\) of \(\text {RR}^{}_{\text {AA}}\) and \(\text {RR}^{}_{\text {AB}}\) successfully builds the strongest relationship with Y, yielding the highest accuracy

The following tests employ networks with six fully connected layers and nonlinear AFs, with the objective to learn the mapping from 12 binary inputs to 2 binary output digits [64], with results accumulated over five runs. Experimental details are provided in the Appendix. We compare the versions \(\text {Std}_{\text {A}}\), \(\text {Pre}_{\text {A}}\), \(\text {Ort}_{\text {A}}\), and \(\text {RR}^{}_{\text {A}}\). We visualize model comparisons with the MI planes, the content of which is visually summarized in Fig. 4a. The horizontal and vertical axes of the MI plane denote \(I(X; \mathcal {D}_{m})\) and \(I(Y; \mathcal {D}_{m})\), respectively, which measure the amount of information shared between the \(m^{th}\) layer \(\mathcal {D}_{m}\) and X or Y after training. This depicts how much information about input and output distribution is retained at each layer, as well as how these relationships change throughout the network. For regular training, the information bottleneck principle [69] states that early layers contain more information about the input, i.e., show high values for \(I(X;\mathcal {D}_{m})\) and \(I(\mathcal {D}_{m};Y)\). As a result, these layers are frequently visible in the top-right corner of MI plane visualizations. After training, later layers typically share a large amount of information with the output, i.e., show high \(I(\mathcal {D}_{m};Y)\) values, and correlate less with the input (low \(I(X;\mathcal {D}_{m})\)). As a result, they typically appear in the top-left corner of MI plane graphs.
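To make the two coordinates of an MI plane point concrete, the following minimal sketch estimates \(I(X;\mathcal {D}_{m})\) and \(I(\mathcal {D}_{m};Y)\) from samples. It assumes a simple binning (plug-in) estimator with activations in \([-1, 1]\); the estimator behind the reported results is not restated here and may differ. The helper names `rows_to_ids`, `discrete_mi`, and `mi_plane_point`, the bin count, and the toy data are hypothetical and serve illustration only.

```python
import itertools
import numpy as np

def rows_to_ids(values, n_bins=None):
    """Map each sample (row) to an integer id; optionally bin values in [-1, 1] first."""
    values = np.asarray(values, dtype=float)
    if values.ndim == 1:
        values = values[:, None]
    if n_bins is not None:
        # Interior bin edges; np.digitize then returns a bin index per entry.
        edges = np.linspace(-1.0, 1.0, n_bins + 1)[1:-1]
        values = np.digitize(values, edges)
    _, ids = np.unique(values, axis=0, return_inverse=True)
    return ids.ravel()

def discrete_mi(a_ids, b_ids):
    """Plug-in MI estimate in bits from two aligned arrays of integer ids."""
    joint = np.zeros((a_ids.max() + 1, b_ids.max() + 1))
    np.add.at(joint, (a_ids, b_ids), 1.0)       # joint histogram of co-occurrences
    joint /= joint.sum()
    p_a = joint.sum(axis=1, keepdims=True)
    p_b = joint.sum(axis=0, keepdims=True)
    mask = joint > 0                            # 0 * log(0) is treated as 0
    return float(np.sum(joint[mask] * np.log2(joint[mask] / (p_a * p_b)[mask])))

def mi_plane_point(x, activations, y, n_bins=30):
    """Return (I(X; D_m), I(D_m; Y)) for the activations of one layer."""
    x_ids = rows_to_ids(x)
    t_ids = rows_to_ids(activations, n_bins)
    y_ids = rows_to_ids(y)
    return discrete_mi(x_ids, t_ids), discrete_mi(t_ids, y_ids)

# Toy data (assumption): all 3-bit inputs, the label is the first bit, and the
# "layer" keeps only that bit, rescaled to the range [-1, 1].
x = np.array(list(itertools.product([0, 1], repeat=3)))
y = x[:, 0]
activations = 2.0 * x[:, :1] - 1.0
mi_x, mi_y = mi_plane_point(x, activations, y)
```

In this toy setting the layer discards two of the three input bits but keeps everything needed for the label, so both estimates equal one bit. Plug-in estimates of this kind depend on the bin count and the number of samples, so they are mainly useful for comparing models under identical settings.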

The graph in Fig. 4b highlights that training with the RR loss \(\text {RR}^{}_{\text {A}}\) correlates input and output distributions across all layers: the cluster of green points in the center of the graph indicates that the activations of each layer share a balanced amount of MI with both the input and the output. \(\text {Std}_{\text {A}}\) and \(\text {Ort}_{\text {A}}\) almost exclusively focus on the output, with \(I(\mathcal {D}_{m};Y)\) being close to one; information about the input is dropped layer by layer, which leads to a low \(I(X; \mathcal {D}_{7})\) value. \(\text {Pre}_{\text {A}}\) instead only focuses on reconstructing inputs. Thus, the early layers cluster in the upper-right corner, while \(I(\mathcal {D}_{7};Y)\) of the last layer fails to align with the outputs. Once we continue to fine-tune these models without regularization, the MI naturally shifts toward the output, as illustrated in Fig. 4c. Here, \(\text {RR}^{}_{\text {AA}}\) outperforms the other models in terms of the final performance. Furthermore, we design a transfer task B with switched output digits, i.e., in task B the original two binary output digits, e.g., (1, 0), are switched to (0, 1). This change of the dataset results in a significantly different mapping between inputs and outputs compared with the original task A. Likewise, \(\text {RR}^{}_{\text {AB}}\) performs best for this transfer task, as shown in Fig. 4d. The final performance for both tasks across all runs is summarized in Table 1. The graphs demonstrate that the proposed pretraining succeeds in robustly establishing mutual information between inputs and targets across a full network while extracting reusable features. The nonlinearity of the underlying network architectures does not impede the performance of the \(\text {RR}^{}_{\text {}}\) models.
It is worth pointing out that \(\text {Std}_{\text {}}\) and \(\text {Ort}_{\text {}}\) exhibit high performance variance in transfer task B, but not in base task A, because \(\text {Std}_{\text {A}}\) and \(\text {Ort}_{\text {A}}\) were trained solely to improve task A performance. The extracted features are not guaranteed to be useful for task B in this process. As a result, performance in task B is not consistent across training runs. On the other hand, \(\text {RR}^{}_{\text {A}}\) focuses on extracting dominant features from the dataset, rather than features for a specific task, which significantly improves the stability of training across different runs for tasks A and B.

Comparing Fig. 4b and d, we can see that after pretraining via our approach, balanced MI is obtained between input as well as output and the activations of each layer, indicating that our model extracted balanced features from both the input and output. After transfer learning for task B, we can see that all layers are located at the top part of the graph with high \(I(\mathcal {D}_{m};Y)\) values, indicating that the model aims to improve the performance for a specific task.

We also compare the mutual information of three variants of our pretraining: the local variant \(\text {lRR}_\text {A}\), the full version \(\text {RR}^{}_{\text {A}}\), and a variant of the latter: \(\text {RR}_\text {A}^{1}\), i.e., a version where only the input \(\mathbf {d}_{1}\) is constrained to be reconstructed. Figure 5 shows the MI planes for these three models. Only one layer is constrained with our formulation in \(\text {RR}_\text {A}^{1}\), but we can see that the last two layers of the model are already located in the middle part of the MI plane (Fig. 5a), and the influence is in line with our full version \(\text {RR}^{}_{\text {A}}\). Despite the local nature of \(\text {lRR}_\text {A}\), it manages to establish MI for the majority of the layers, as indicated by the cluster of layers in the center of the MI plane. Only the first layer moves toward the upper-right corner, and the second layer is affected slightly. In other words, these layers exhibit a stronger relationship with the distribution of the outputs. Despite this, the overall performance when fine-tuning or for the task transfer remains largely unaffected, e.g., \(\text {lRR}_\text {AA/AB}\) still clearly outperforms \(\text {RR}^{1}_{\text {AA/AB}}\). This confirms our choice to use the full pretraining when network connectivity permits, and to employ the local version in all other cases. Accuracy comparisons among different models are displayed in Table 1. \(\text {RR}^{}_{\text {AA/AB}}\) yields the highest performance, while \(\text {lRR}_\text {AA/AB}\) performs similarly to \(\text {RR}^{}_{\text {AA/AB}}\).

In summary, from the MI tests we can conclude that training with our formulation (\(\text {RR}^{}_{\text {A}}\) and \(\text {lRR}_\text {A}\)) is useful for correlating input and output distributions across all layers. Furthermore, this correlation is strengthened when more layers are constrained with our formulation, as the comparison of \(\text {RR}^{}_{\text {A}}\) with \(\text {RR}^{1}_{\text {A}}\) shows. In addition, models pretrained with our formulation, e.g., \(\text {RR}^{}_{\text {A}}\) and \(\text {lRR}_\text {A}\), achieve the highest values of \(I(\mathcal {D}_{7};Y)\) and the highest performance for source task A and transfer task B after fine-tuning.

MI plane comparisons among \(\text {RR}_\text {A}^{1}\), local variant \(\text {lRR}_\text {A}\) and the full version \(\text {RR}^{}_{\text {A}}\). Points on each line correspond to layers of one type of model. a MI Plane for task A. All points of \(\text {RR}^{}_{\text {A}}\) and the majority of points for \(\text {lRR}_\text {A}\) (five out of seven) are located in the center of the graph, i.e., successfully connect input and output distributions. b, c After fine-tuning for A/B. The last layer \(\mathcal {D}_{7}\) of \(\text {RR}^{}_{\text {AA/AB}}\) builds the strongest relationship with Y. \(I(\mathcal {D}_{7};Y)\) of \(\text {lRR}_\text {AA/AB}\) is only slightly lower than \(\text {RR}^{}_{\text {AA/AB}}\)

MI has received attention recently as a learning objective, e.g., in the form of the InfoGAN approach [9] for learning disentangled and interpretable latent representations. While MI is typically challenging to assess and estimate [74], the results above show that our approach provides a straightforward and robust way for including it as a learning objective. In this way, we can, e.g., easily reproduce the disentangling results from [9], shown in Fig. 1c, without explicitly calculating mutual information. A generative model with our pretraining extracts intuitive latent dimensions for the different digits, line thickness, and orientation without any additional modifications to the loss function. The joint training of the full network with the proposed reverse structure, including nonlinearities and normalization, yields a natural and intuitive decomposition.

5 Experimental results

We now turn to a broad range of network structures, i.e., CNNs, autoencoders, and GANs, with a variety of datasets and tasks to show that our approach succeeds in improving inference accuracy and generality for modern-day applications and architectures. All tests use nonlinear activations and several of them include BN. Experimental details are provided in the Appendix.

5.1 CIFAR-100 classification

We first focus on orthogonalization for a CIFAR-100 classification task with a ResNet 18 network, and compare the performance of \(\text {RR}^{}_{\text {}}\) with the variants \(\text {Std}_{\text {}}\), \(\text {Ort}_{\text {}}\), in addition to an OCNN network [75] (shown in light blue). The CNN architecture has ca. 11 million trainable parameters in each case. \(\text {Pre}_{\text {}}\) is not included in this comparison due to its incompatibility with ResNet architectures. The resulting performance for the different variants (evaluated for 3 runs each) is shown in Fig. 6. For CIFAR-100, the orthogonal regularizations (\(\text {Ort}_{\text {}}\) and OCNN) result in noticeable performance gains of \(0.33\%\) and \(0.337\%\), but \(\text {RR}^{}_{\text {}}\) clearly outperforms both with an improvement of \(1.2\%\). Despite being different formulations, both \(\text {Ort}_{\text {}}\) and OCNN represent orthogonal regularizers that aim for the same goal of weight orthogonality. Hence, their performance is on par, and we will focus on the more generic \(\text {Ort}_{\text {}}\) variant for the following evaluations.
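To illustrate what such regularizers aim for, the following minimal sketch evaluates a generic soft orthogonality penalty of the kind studied in [3], i.e., the squared Frobenius distance between \(W^T W\) and the identity. This generic form is an assumption for illustration; the exact \(\text {Ort}_{\text {}}\) and OCNN formulations used in the comparison may differ, and the function name and matrix sizes are hypothetical.

```python
import numpy as np

def soft_orthogonality_penalty(weight):
    """Squared Frobenius norm of (W^T W - I); zero for orthonormal columns."""
    gram = weight.T @ weight
    return float(np.sum((gram - np.eye(gram.shape[0])) ** 2))

rng = np.random.default_rng(0)

# A matrix with orthonormal columns, obtained from a reduced QR decomposition.
q, _ = np.linalg.qr(rng.normal(size=(6, 4)))
penalty_orthonormal = soft_orthogonality_penalty(q)

# An unconstrained random matrix for comparison.
penalty_random = soft_orthogonality_penalty(rng.normal(size=(6, 4)))
```

The penalty vanishes (up to rounding) for the orthonormal matrix and is clearly positive for the random one. In training, a term of this kind would be added to the task loss with a small weight.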

5.2 Transfer learning benchmarks

We evaluate our approach with two state-of-the-art benchmarks for transfer learning (Fig. 7). The first one uses the texture-shape dataset from [21], which contains challenging images of various shapes combined with patterns and textures to be classified. The results below are given for 10 runs each. For the stylized data shown in Fig. 8a, the accuracy of \(\text {Pre}_{\text {TS}}\) is low at 20.8%. This result is in line with observations in previous work and confirms the detrimental effect of classical pretraining. \(\text {Std}_{\text {TS}}\) yields a performance of 44.2%, and \(\text {Ort}_{\text {TS}}\) improves the performance to 47.0%, while \(\text {RR}^{}_{\text {TS}}\) yields a performance of 54.7% (see Fig. 8b). Thus, in relative terms, the accuracy of \(\text {RR}^{}_{\text {TS}}\) is \(162.98\%\) higher than \(\text {Pre}_{\text {TS}}\), \(23.76\%\) higher than \(\text {Std}_{\text {TS}}\), and \(16.38\%\) higher than \(\text {Ort}_{\text {TS}}\). To assess generality, we also apply the models to new data without re-training, i.e., an edge and a filled dataset, also shown in Fig. 8a. For the edge dataset, \(\text {RR}^{}_{\text {TS}}\) outperforms \(\text {Pre}_{\text {TS}}\), \(\text {Std}_{\text {TS}}\) and \(\text {Ort}_{\text {TS}}\) by \(178.82\%\), \(50\%\) and \(16.75\%\), respectively.
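The relative gains quoted for the stylized data follow directly from the reported accuracies. The short check below recomputes them; the function and variable names are chosen for illustration only.

```python
def relative_gain(ours, baseline):
    """Relative improvement of `ours` over `baseline`, in percent."""
    return 100.0 * (ours - baseline) / baseline

# Accuracies in percent for the stylized data, as reported in the text.
accuracy = {"Pre": 20.8, "Std": 44.2, "Ort": 47.0, "RR": 54.7}

gains = {
    name: relative_gain(accuracy["RR"], value)
    for name, value in accuracy.items()
    if name != "RR"
}
for name, gain in gains.items():
    print(f"RR vs {name}: {gain:.2f}% higher")
```

These are relative improvements. The corresponding absolute differences are 33.9, 10.5, and 7.7 percentage points, respectively.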

Exemplary curves for test accuracy at training time for \(\text {Std}_{\text {TS}}\), \(\text {Ort}_{\text {TS}}\), and \(\text {RR}^{}_{\text {TS}}\) are shown in Fig. 7. \(\text {Pre}_{\text {TS}}\) is not included since its layer-wise curriculum precludes a direct comparison. The graph shows that \(\text {RR}^{}_{\text {TS}}\) converges faster than \(\text {Std}_{\text {TS}}\) and \(\text {Ort}_{\text {TS}}\) from the very beginning. It achieves the performance of \(\text {Std}_{\text {TS}}\) and \(\text {Ort}_{\text {TS}}\) with ca. \(\frac{1}{3}\) and \(\frac{1}{2}\) of the number of training epochs, respectively. Both the comparable performance with less training effort and the higher final performance support the reasoning given in Sect. 3: \(\text {RR}^{}_{\text {TS}}\) with its reverse pass is more efficient at extracting relevant features from the training data. Over the course of our tests, we observed a similar convergence behavior for a wide range of other runs.

It is worth pointing out that the additional constraints of our training approach lead to moderately increased requirements for memory and computations, e.g., 41.86% more time per epoch than regular training for the texture-shape test. As this test employs a small network with only ca. \(1.2\times 10^4\) trainable weights, the computations for our approach still make a noticeable difference in training time. However, as we show below, the difference becomes negligible for larger networks. On the other hand, it allows us to train smaller models: we can reduce the weight count by 32% for the texture-shape case while still being on par with \(\text {Ort}_{\text {TS}}\) in terms of classification performance. By comparison, regular layer-wise pretraining requires significant overhead and fundamental changes to the training process. Our pretraining fully integrates with existing training methodologies and can easily be deactivated via \(\lambda _{m}=0\). More details of runtime performance and training behavior are given in the Appendix.
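The deactivation via \(\lambda _{m}=0\) can be illustrated with a minimal sketch. It assumes that the reverse-pass term enters the total loss as a \(\lambda _{m}\)-weighted sum of squared reconstruction errors between each constrained layer input \(\mathbf {d}_{m}\) and its reconstruction from the reverse pass; the exact formulation is the one given in Sect. 3 and is not restated here. The single linear layer, the reuse of the transposed weight matrix for the reverse pass, and all names and sizes are hypothetical simplifications.

```python
import numpy as np

def total_loss(task_loss, layer_inputs, reconstructions, lambdas):
    """Task loss plus a lambda-weighted sum of squared reconstruction errors."""
    reverse_term = sum(
        lam * float(np.sum((d - d_rec) ** 2))
        for lam, d, d_rec in zip(lambdas, layer_inputs, reconstructions)
    )
    return task_loss + reverse_term

rng = np.random.default_rng(0)
weight = rng.normal(size=(3, 5))   # hypothetical single linear layer
d1 = rng.normal(size=5)            # layer input
d2 = weight @ d1                   # forward pass
d1_rec = weight.T @ d2             # reverse pass reusing the transposed weights
target = rng.normal(size=3)
task = float(np.sum((d2 - target) ** 2))

# With lambda = 0 the reverse term vanishes and regular training is recovered.
loss_regular = total_loss(task, [d1], [d1_rec], lambdas=[0.0])
loss_with_rr = total_loss(task, [d1], [d1_rec], lambdas=[1e-3])
```

Because the constraint is an additive loss term, switching it off requires no change to the network or the optimizer, which is the practical difference to a separate layer-wise pretraining stage.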

As a second test case, we use a CIFAR-based task transfer [61] that measures how well models trained on the original CIFAR 10 generalize to a new dataset (CIFAR 10.1) collected according to the same principles as the original one. Here, we use a ResNet 110 with 110 layers and 1.7 million parameters. Due to the consistently low performance of the \(\text {Pre}_{\text {}}\) models [1], we focus on \(\text {Std}_{\text {}}\), \(\text {Ort}_{\text {}}\) and \(\text {RR}^{}_{\text {}}\) for this test case. In terms of accuracy across 5 runs, \(\text {Ort}_{\text {C10}}\) outperforms \(\text {Std}_{\text {C10}}\) by 0.39%, while \(\text {RR}^{}_{\text {C10}}\) outperforms \(\text {Ort}_{\text {C10}}\) by another 0.28% in terms of absolute test accuracy (Fig. 9). This increase for RR training matches the gains reported for orthogonality in previous work [3], thus showing that our approach yields substantial practical improvements over the latter. It is especially interesting how well performance for CIFAR 10 translates into transfer performance for CIFAR 10.1. Here, \(\text {RR}^{}_{\text {C10}}\) still outperforms \(\text {Ort}_{\text {C10}}\) and \(\text {Std}_{\text {C10}}\) by 0.22% and 0.95%, respectively. Hence, the models from our pretraining very successfully translate gains in performance from the original task to the new one, indicating that the models have successfully learned a set of more general features. To summarize, both benchmark cases confirm that the proposed pretraining benefits generalization.

5.3 Smoke generation

In this section, we employ our pretraining in the context of generative models for transferring from synthetic to real-world data from the ScalarFlow dataset [17]. As super-resolution task A, we first use a fully convolutional generator network, adversarially trained with a discriminator network on the synthetic flow data. While regular pretraining is more amenable to generative tasks than orthogonal regularization, it cannot be directly combined with adversarial training. Hence, we instead pretrain a model \(\text {Pre}_{\text {}}\) for a reconstruction task at high resolution without a discriminator. Figure 10a demonstrates that our method works well in conjunction with the GAN training: As shown in the bottom row, the trained generator succeeds in recovering the input via the reverse pass without modifications. A regular model \(\text {Std}_{\text {A}}\) only yields a black image in this case. For \(\text {Pre}_{\text {A}}\), the layer-wise nature of the pretraining severely limits its capabilities to learn the correct data distribution [88], leading to low performance.

a Example output and reconstructed inputs, with the reference shown right. Only \(\text {RR}^{}_{\text {A}}\) successfully recovers the input, \(\text {Std}_{\text {A}}\) produces a black image, while \(\text {Pre}_{\text {A}}\) fares poorly. b Mean squared error \(L^2\) loss comparisons for two different generative transfer learning tasks (averaged across 5 runs each). The \(\text {RR}^{}_{\text {}}\) models show the best performance for both tasks

Latitude-weighted RMSE comparisons between \(\text {Std}_{\text {}}\) and \(\text {RR}_{\text {}}\) for ERA and CMIP datasets. Models trained with \(\text {RR}_{\text {}}\) pretraining significantly outperform state-of-the-art \(\text {Std}_{\text {}}\) for all cases. The minimum performance improvement of \(\text {RR}_{\text {}}\) is \(5.7\%\) for the case with ERA dataset and dropout regularization

a Comparisons of predictions for T2M and T850 on 9 Aug. 2019, 22:00 for the ERA dataset without dropout regularization. b Prediction comparisons of three physical quantities on 26 June 2014, 0:00 for the CMIP dataset without dropout regularization. As confirmed by the quantified results, \(\text {RR}^{}_{\text {}}\) predicts results closer to the reference

We now mirror the generator model from the previous task to evaluate an autoencoder structure that we apply to two different datasets: the synthetic smoke data used for the GAN training (task \(B_1\)), and a real-world RGB dataset of smoke clouds (task \(B_2\)). Thus, both variants represent transfer tasks, the second one being more difficult due to the changed data distribution. The resulting losses, summarized in Fig. 10b, show that RR training performs best for both autoencoder tasks: the \(L^2\) loss of \(\text {RR}_{AB_{1}}\) is \(68.88\%\) lower than \(\text {Std}_{AB_{1}}\), while it is \(13.3\%\) lower for task \(B_2\). The proposed pretraining also clearly outperforms the \(\text {Pre}_{\text {}}\) variants. Example outputs of \(\text {Pre}_{AB_{1}}\), \(\text {Std}_{AB_{1}}\) and \(\text {RR}_{AB_{1}}\) for transfer task \(B_1\) are shown in Fig. 11. It is apparent that \(\text {RR}_{AB_{1}}\) provides the best performance among these models. Figure 12 provides visual comparisons for transfer task \(B_2\), where \(\text {RR}_{AB_{2}}\) generates results that are closer to the reference. Within this series of tests, the \(\text {RR}_{\text {}}\) performance for task \(B_2\) is especially encouraging, as this task represents a synthetic to real transfer.

5.4 Weather forecasting

Pretraining is particularly attractive in situations where the amount of data for training is severely limited. Weather forecasting is such a case, as accurate, real-world data for many relevant quantities are only available for approximately 50 years. We use the ERA dataset [31] consisting of assimilated measurements, and additionally evaluate our models with simulated data from the CMIP database [19]. We replicate the architecture and training procedure of the WeatherBench benchmark [59]. Hence, we use prognostic variables at seven vertical levels, together with some surface and constant fields at the current time t as well as \(t-6h\) and \(t-12h\) as input, and target three-day forecasts of 500 hPa geopotential (Z500), 2-meter temperature (T2M), and 850 hPa temperature (T850). For training, we employ a convolutional ResNet architecture with 19 residual blocks and 6.36M trainable parameters, as well as a latitude-weighted root mean squared error (RMSE) as the loss function. For the worldwide observation dataset ERA (six-hour intervals with a 5.625\(^{\circ }\) resolution), we train the models with data from 1979 to 2015 and evaluate performance with RMSE measurements across all data points from the years 2017 and 2018. For the historical simulation dataset CMIP, years 1850 to 2005 are used as training data, while performance is measured with years 2006 to 2014.
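As an illustration of the metric, the following minimal sketch computes a latitude-weighted RMSE for fields on a regular latitude-longitude grid. It assumes the common WeatherBench-style definition, in which each grid row is weighted by the cosine of its latitude, normalized to a mean weight of one, and the RMSE is averaged over forecast times; the exact loss used for training is the one specified by the benchmark setup [59]. The function name, the grid construction, and the random test fields are hypothetical.

```python
import numpy as np

def latitude_weighted_rmse(forecast, target, latitudes_deg):
    """Latitude-weighted RMSE for arrays of shape (time, lat, lon)."""
    weights = np.cos(np.deg2rad(latitudes_deg))
    weights = weights / weights.mean()               # mean weight of one
    squared_error = (forecast - target) ** 2
    weighted = weights[None, :, None] * squared_error
    per_time = np.sqrt(weighted.mean(axis=(1, 2)))   # one RMSE per forecast time
    return float(per_time.mean())

# Grid at 5.625 degree resolution: 32 latitude rows and 64 longitude columns.
latitudes = np.arange(-90.0 + 5.625 / 2.0, 90.0, 5.625)
rng = np.random.default_rng(0)
target = rng.normal(size=(4, latitudes.size, 64))

rmse_perfect = latitude_weighted_rmse(target, target, latitudes)
rmse_offset = latitude_weighted_rmse(target + 2.0, target, latitudes)
```

A perfect forecast gives zero, and a constant offset of 2 gives an RMSE of 2, since the weights average to one. The weighting compensates for the fact that grid cells near the poles cover less area than cells near the equator.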

We show comparisons between the regular model \(\text {Std}_{\text {}}\) and \(\text {RR}_{\text {}}\) for both ERA and CMIP datasets. As Rasp et al. [59] relied on dropout regularization, we additionally train and evaluate models for both datasets with and without dropout. Following their methodology, \(L_2\) regularization is applied for all tests. As regular pretraining does not support residual connections, we omit it for the weather forecasting tests.

Performance comparisons are shown in Fig. 13. Across all cases, irrespective of whether observation data or simulation data is used, the \(\text {RR}_{\text {}}\) models clearly outperform the regular models and yield consistent improvements. This also indicates that our approach is compatible with other forms of regularization, such as dropout and \(L_2\) regularization. The \(\text {RR}_{\text {}}\) models yield performance improvements of \(6\%\) to \(8\%\) for the CMIP cases, and the ERA case with dropout. Here, the re-trained \(\text {Std}_{\text {}}\) version is on par with the data reported in [59], while our \(\text {RR}_{\text {}}\) model exhibits a performance improvement of \(6.3\%\) on average. For the ERA dataset without dropout regularization, the \(\text {RR}_{\text {}}\) model decreases the loss even more strongly by \(13.7\%\).

Visualizations of an inference result for 9 Aug. 2019 22:00 for the ERA dataset without dropout regularization are shown in Figs. 1d and 14a. Predictions of \(\text {RR}_{\text {}}\) yield lower errors, and are closer to the reference. The same conclusions can be drawn from the example at 26 June 2014 0:00 from the CMIP dataset without dropout regularization in Fig. 14b.

6 Conclusions

We have proposed a novel pretraining approach inspired by classic methods for unsupervised autoencoder pretraining and orthogonality constraints. In contrast to the classical methods, we employ a constrained reverse pass for the full nonlinear network structure and include the original learning objective. We applied an SVD of the weight matrices to visually analyze the learned structures, which indicates that the proposed method is more capable of extracting dominant features from the training dataset. We have shown for a wide range of scenarios, from mutual information, over transfer learning benchmarks to weather forecasting, that the proposed pretraining yields networks with improved performance and better generalizing capabilities. Our training approach is general, easy to integrate, and imposes no requirements regarding network structure or training methods. As a whole, our results show that unsupervised pretraining has not lost its relevance in today’s deep learning environment.

As future work, we believe it will be exciting to evaluate our approach in additional contexts, e.g., for temporal predictions [12, 32], and for training explainable and interpretable models [9, 16, 83].

Data availability

All data generated or analyzed during this study are included in this published article and its supplementary material.

References

Alberti M, Seuret M, Ingold R, et al (2017) A pitfall of unsupervised pre-training. arXiv preprint arXiv:1703.04332

Ardizzone L, Kruse J, Wirkert S, et al (2018) Analyzing inverse problems with invertible neural networks. arXiv preprint arXiv:1808.04730

Bansal N, Chen X, Wang Z (2018) Can we gain more from orthogonality regularizations in training deep cnns? In: Advances in Neural Information Processing Systems, Curran Associates Inc., pp 4266–4276

Bengio Y, Lamblin P, Popovici D, et al (2007) Greedy layer-wise training of deep networks. In: Advances in Neural Information Processing Systems, pp 153–160

Cai TT, Ma Z, Wu Y (2013) Sparse pca: optimal rates and adaptive estimation. Ann Stat 41(6):3074–3110

Caron M, Bojanowski P, Joulin A, et al (2018) Deep clustering for unsupervised learning of visual features. In: Proceedings of the European Conference on Computer Vision (ECCV), pp 132–149

Caron M, Bojanowski P, Mairal J, et al (2019) Unsupervised pre-training of image features on non-curated data. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 2959–2968

Chen T, Kornblith S, Norouzi M, et al (2020a) A simple framework for contrastive learning of visual representations. In: International Conference on Machine Learning, PMLR, pp 1597–1607

Chen X, Duan Y, Houthooft R, et al (2016) Infogan: Interpretable representation learning by information maximizing generative adversarial nets. In: Advances in Neural Information Processing Systems, pp 2172–2180

Chen X, Fan H, Girshick R, et al (2020b) Improved baselines with momentum contrastive learning. arXiv preprint arXiv:2003.04297

Chen Y, Li J, Jiang H, et al (2022) Metalr: Layer-wise learning rate based on meta-learning for adaptively fine-tuning medical pre-trained models. arXiv preprint arXiv:2206.01408

Cho K, Van Merriënboer B, Gulcehre C, et al (2014) Learning phrase representations using rnn encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078

Cui J, Zhong Z, Liu S, et al (2021) Parametric contrastive learning. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 715–724

Ding H, Zhou SK, Chellappa R (2017) Facenet2expnet: Regularizing a deep face recognition net for expression recognition. In: 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), IEEE, pp 118–126

Dinh L, Sohl-Dickstein J, Bengio S (2016) Density estimation using real nvp. arXiv preprint arXiv:1605.08803

Du M, Liu N, Hu X (2018) Techniques for interpretable machine learning. arXiv preprint arXiv:1808.00033

Eckert ML, Um K, Thuerey N (2019) Scalarflow: a large-scale volumetric data set of real-world scalar transport flows for computer animation and machine learning. ACM Trans Graph TOG 38(6):239

Erhan D, Courville A, Bengio Y, et al (2010) Why does unsupervised pre-training help deep learning? In: Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, pp 201–208

Eyring V, Bony S, Meehl GA et al (2016) Overview of the coupled model intercomparison project phase 6 (cmip6) experimental design and organization. Geosci Model Dev 9(5):1937–1958

Frankle J, Carbin M (2018) The lottery ticket hypothesis: finding sparse, trainable neural networks. arXiv preprint arXiv:1803.03635

Geirhos R, Rubisch P, Michaelis C, et al (2018) Imagenet-trained cnns are biased towards texture; increasing shape bias improves accuracy and robustness. arXiv preprint arXiv:1811.12231

Ghazal TM, Hussain MZ, Said RA, et al (2021) Performances of k-means clustering algorithm with different distance metrics. Intell Autom Soft Comput

Gidaris S, Singh P, Komodakis N (2018) Unsupervised representation learning by predicting image rotations. arXiv preprint arXiv:1803.07728

Gomez AN, Ren M, Urtasun R, et al (2017) The reversible residual network: Backpropagation without storing activations. In: Advances in Neural Information Processing Systems, pp 2214–2224

Goodfellow I, Pouget-Abadie J, Mirza M, et al (2014) Generative adversarial nets. In: Advances in Neural Information Processing Systems, pp 2672–2680

Gopalakrishnan K, Khaitan SK, Choudhary A et al (2017) Deep convolutional neural networks with transfer learning for computer vision-based data-driven pavement distress detection. Constr Build Mater 157:322–330

Hanafy YA, Mashaly M, Abd El Ghany MA (2021) An efficient hardware design for a low-latency traffic flow prediction system using an online neural network. Electronics 10(16):1875

Hanson SJ, Pratt LY (1989) Comparing biases for minimal network construction with back-propagation. In: Advances in Neural Information Processing Systems, pp 177–185

Hasan BMS, Abdulazeez AM (2021) A review of principal component analysis algorithm for dimensionality reduction. J Soft Comput Data Min 2(1):20–30

He K, Zhang X, Ren S, et al (2016) Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 770–778

Hersbach H, Bell B, Berrisford P et al (2020) The era5 global reanalysis. Q J R Meteorol Soc 146(730):1999–2049

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Hoffmann H (2007) Kernel pca for novelty detection. Pattern Recognit 40(3):863–874

Huang JJ, Dragotti PL (2022) Winnet: wavelet-inspired invertible network for image denoising. IEEE Trans Image Process

Huang L, Liu X, Lang B, et al (2018) Orthogonal weight normalization: Solution to optimization over multiple dependent stiefel manifolds in deep neural networks. In: Thirty-Second AAAI Conference on Artificial Intelligence

Jacobsen JH, Smeulders A, Oyallon E (2018) i-revnet: deep invertible networks. arXiv preprint arXiv:1802.07088

Jean N, Wang S, Samar A, et al (2019) Tile2vec: Unsupervised representation learning for spatially distributed data. In: Proceedings of the AAAI Conference on Artificial Intelligence, pp 3967–3974

Jia K, Tao D, Gao S, et al (2017) Improving training of deep neural networks via singular value bounding. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 4344–4352

Jing J, Deng X, Xu M, et al (2021) Hinet: deep image hiding by invertible network. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 4733–4742

Kawaguchi K, Kaelbling LP, Bengio Y (2017) Generalization in deep learning. arXiv preprint arXiv:1710.05468

Kazhdan M, Funkhouser T, Rusinkiewicz S (2003) Rotation invariant spherical harmonic representation of 3 d shape descriptors. In: Symposium on Geometry Processing, pp 156–164

Kim D, Choi J (2022) Unsupervised representation learning for binary networks by joint classifier learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 9747–9756

Kim T, Yun SY (2022) Revisiting orthogonality regularization: a study for convolutional neural networks in image classification. IEEE Access

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980

Krizhevsky A, Hinton G et al (2009) Learning multiple layers of features from tiny images. Tech. rep, Citeseer

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp 1097–1105

Kulkarni P, Zepeda J, Jurie F, et al (2015) Learning the structure of deep architectures using l1 regularization. In: British Machine Vision Conference, 2015

Lee HY, Huang JB, Singh M, et al (2017) Unsupervised representation learning by sorting sequences. In: Proceedings of the IEEE International Conference on Computer Vision, pp 667–676

Li J, Zhou P, Xiong C, et al (2020) Prototypical contrastive learning of unsupervised representations. arXiv preprint arXiv:2005.04966

Li M, Wang Y, Lin Z (2022) Cerdeq: Certifiable deep equilibrium model. In: Int Conf Mach Learn PMLR, pp 12,998–13,013

Linting M, Meulman JJ, Groenen PJ et al (2007) Nonlinear principal components analysis: introduction and application. Psychol Methods 12(3):336

Loshchilov I, Hutter F (2017) Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101

Madono K, Tanaka M, Onishi M et al (2021) Sia-gan: scrambling inversion attack using generative adversarial network. IEEE Access 9:129385–129393

Mahendran A, Vedaldi A (2016) Visualizing deep convolutional neural networks using natural pre-images. Int J Comput Vis 120(3):233–255

Momeny M, Neshat AA, Hussain MA et al (2021) Learning-to-augment strategy using noisy and denoised data: improving generalizability of deep cnn for the detection of covid-19 in x-ray images. Comput Biol Med 136:104704

Neyshabur B, Bhojanapalli S, McAllester D, et al (2017) Exploring generalization in deep learning. In: Advances in Neural Information Processing Systems, pp 5947–5956

Ozay M, Okatani T (2016) Optimization on submanifolds of convolution kernels in cnns. arXiv preprint arXiv:1610.07008

Rasmus A, Berglund M, Honkala M, et al (2015) Semi-supervised learning with ladder networks. In: Advances in Neural Information Processing Systems, pp 3546–3554

Rasp S, Thuerey N (2021) Data-driven medium-range weather prediction with a resnet pretrained on climate simulations: a new model for weatherbench. J Adv Model Earth Syst, p e2020MS002405

Rasp S, Dueben PD, Scher S, et al (2020) Weatherbench: a benchmark dataset for data-driven weather forecasting. arXiv preprint arXiv:2002.00469

Recht B, Roelofs R, Schmidt L, et al (2019) Do imagenet classifiers generalize to imagenet? In: International Conference on Machine Learning

Reddi SJ, Kale S, Kumar S (2019) On the convergence of adam and beyond. arXiv preprint arXiv:1904.09237

Ronneberger O, Fischer P, Brox T (2015) U-net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer, pp 234–241

Shwartz-Ziv R, Tishby N (2017) Opening the black box of deep neural networks via information. arXiv preprint arXiv:1703.00810

Sinaga KP, Yang MS (2020) Unsupervised k-means clustering algorithm. IEEE Access 8:80716–80727

Srivastava N, Hinton G, Krizhevsky A et al (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15(1):1929–1958

Teng Y, Choromanska A (2019) Invertible autoencoder for domain adaptation. Computation 7(2):20

Thuerey N, Pfaff T (2018) MantaFlow. http://mantaflow.com

Tishby N, Zaslavsky N (2015) Deep learning and the information bottleneck principle. In: 2015 IEEE Information Theory Workshop (ITW), IEEE, pp 1–5

Torrey L, Shavlik J (2010) Transfer learning. In: Handbook of research on machine learning applications and trends: algorithms, methods, and techniques. IGI Global, pp 242–264

Vaswani A, Shazeer N, Parmar N, et al (2017) Attention is all you need. In: Advances in Neural Information Processing Systems, pp 5998–6008

Vincent P, Larochelle H, Lajoie I, et al (2010) Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J Mach Learn Res 11(12)

Wall ME, Rechtsteiner A, Rocha LM (2003) Singular value decomposition and principal component analysis. In: A Practical Approach to Microarray Data Analysis. Springer, pp 91–109

Walters-Williams J, Li Y (2009) Estimation of mutual information: A survey. In: International Conference on Rough Sets and Knowledge Technology, Springer, pp 389–396

Wang J, Chen Y, Chakraborty R, et al (2020) Orthogonal convolutional neural networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 11505–11515

Weigend AS, Rumelhart DE, Huberman BA (1991) Generalization by weight-elimination with application to forecasting. In: Advances in Neural Information Processing Systems, pp 875–882

Wold S, Esbensen K, Geladi P (1987) Principal component analysis. Chemom Intell Lab Syst 2(1–3):37–52

Wu Z, Wang X, Zhou P, et al (2021) Transmission line fault location based on the stacked sparse auto-encoder deep neural network. In: 2021 IEEE 5th Conference on Energy Internet and Energy System Integration (EI2), IEEE, pp 3201–3206

Xie Y, Franz E, Chu M et al (2018) tempogan: a temporally coherent, volumetric gan for super-resolution fluid flow. ACM Trans Graph TOG 37(4):95

Xu H, Caramanis C, Sanghavi S (2010) Robust pca via outlier pursuit. arXiv preprint arXiv:1010.4237

Yu Y, Odobez JM (2020) Unsupervised representation learning for gaze estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 7314–7324

Zamir AR, Sax A, Shen W, et al (2018) Taskonomy: Disentangling task transfer learning. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 3712–3722

Zeiler MD, Fergus R (2014) Visualizing and understanding convolutional networks. In: European Conference on Computer Vision, Springer, pp 818–833

Zhan X, Xie J, Liu Z, et al (2020) Online deep clustering for unsupervised representation learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 6688–6697

Zhang L, Lu Y, Song G, et al (2018a) Rc-cnn: Reverse connected convolutional neural network for accurate player detection. In: Pacific Rim International Conference on Artificial Intelligence, Springer, pp 438–446

Zhang R, Isola P, Efros AA, et al (2018b) The unreasonable effectiveness of deep features as a perceptual metric. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 586–595

Zhou Y, Govindaraju V (2014) Learning deep autoencoders without layer-wise training. stat 1050:14

Zhou Y, Arpit D, Nwogu I, et al (2014) Is joint training better for deep auto-encoders? arXiv preprint arXiv:1405.1380

Zhuang Y, Rui Y, Huang TS, et al (1998) Adaptive key frame extraction using unsupervised clustering. In: Proceedings 1998 International Conference on Image Processing, ICIP98 (Cat. No. 98CB36269), IEEE, pp 866–870

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was funded by the ERC-2019-COG-863850 SpaTe project.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendices

Appendix A Details of the method

A.1 Pretraining and singular value decomposition

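In this section, we give a more detailed derivation of our loss formulation, extending Sect. 3 of the main paper. As explained there, our loss formulation aims for minimizing

\[\mathcal {L}_{\text {RR}_{m}}=\sum _{i=1}^{t}\left\| M_{m}^{T}M_{m}\mathbf {d}^{i}_{m}-\mathbf {d}^{i}_{m}\right\| _{2}^{2} \tag{A1}\]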

where \(M_m\in \mathbb {R}^{s_{m}^{\text {out}}\times s_{m}^{\text {in}}}\) denotes the weight matrix of layer m, and the data from the input dataset \(\mathcal {D}_{m}\) are denoted by \(\mathbf {d}^{i}_{m} \in \mathbb {R}^{s_{m}^{\text {in}}}, i=1,2,\ldots ,t\). Here, t denotes the number of samples in the input dataset. Minimizing Eq. (A1) is mathematically equivalent to

\[M_{m}^{T}M_{m}\mathbf {d}^{i}_{m}-\mathbf {d}^{i}_{m}=\mathbf {0} \tag{A2}\]

for all \(\mathbf {d}^{i}_{m}\). Hence, perfectly fulfilling Eq. (A1) would require all \(\mathbf {d}^{i}_{m}\) to be eigenvectors of \(M_{m}^{T}M_{m}\) with corresponding eigenvalues being 1. As in Sect. 3 of the main paper, we make use of an auxiliary orthonormal basis \(\mathcal {B}_{m}:\left\langle \mathbf {w}_{m}^{1},\ldots ,\mathbf {w}_{m}^{q}\right\rangle\), for which q (with \(q \le t\)) denotes the number of linearly independent entries in \(\mathcal {D}_{m}\). While \(\mathcal {B}_{m}\) never has to be explicitly constructed for our method, it can, e.g., be obtained via Gram–Schmidt. The matrix consisting of the vectors in \(\mathcal {B}_{m}\) is denoted by \(D_{m}\).
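The eigenvector condition can be checked numerically. The following is a minimal sketch, assuming a single linear layer whose weight matrix has orthonormal rows (so that all of its singular values equal 1); the sizes, the random seed, and all variable names are illustrative and not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
s_in, s_out = 6, 3  # hypothetical layer sizes

# Build M (s_out x s_in) with orthonormal rows, so all singular values are 1.
Q, _ = np.linalg.qr(rng.standard_normal((s_in, s_out)))  # Q has orthonormal columns
M = Q.T

# A datum inside the row space of M is an eigenvector of M^T M with eigenvalue 1.
d_in = M.T @ rng.standard_normal(s_out)
res_in = np.linalg.norm(M.T @ M @ d_in - d_in)

# A generic datum is only projected onto the row space, so a residual remains.
d_generic = rng.standard_normal(s_in)
res_generic = np.linalg.norm(M.T @ M @ d_generic - d_generic)

print(f"residual, datum in row space: {res_in:.2e}")
print(f"residual, generic datum:      {res_generic:.2e}")
```

Since \(M_{m}\) has fewer rows than columns here, only data lying in its row space can satisfy the condition exactly, which is the situation the remainder of the derivation addresses.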

Since the \(\mathbf {w}_{m}^{h} (h=1,2,\ldots ,q)\) can necessarily be expressed as linear combinations of \(\mathbf {d}^{i}_{m}\), Eq. (A1) similarly requires \(\mathbf {w}_{m}^{h}\) to be eigenvectors of \(M_{m}^{T}M_{m}\) with corresponding eigenvalues being 1, i.e.,

\[M_{m}^{T}M_{m}\mathbf {w}_{m}^{h}-\mathbf {w}_{m}^{h}=\mathbf {0} \tag{A3}\]

We denote the vector of coefficients that expresses \(\mathbf {d}^{i}_{m}\) via \(D_{m}\) by \(\mathbf {c}_{m}^{i}\), i.e., \(\mathbf {d}^{i}_{m}\!=\!D_{m}\mathbf {c}_{m}^{i}\). Then, Eq. (A2) can be rewritten as:

\[M_{m}^{T}M_{m}D_{m}\mathbf {c}_{m}^{i}-D_{m}\mathbf {c}_{m}^{i}=\mathbf {0} \tag{A4}\]

Via an SVD of the matrix \(M_m\) in Eq. (A4) we obtain

where the coefficient vector \(\mathbf {c}_{m}\) is accumulated over the training dataset size t via \(\mathbf {c}_{m}=\sum _{i=1}^{t}\mathbf {c}_{m}^{i}\). Here, we assume that over the course of a typical training run eventually every single datum in \(\mathcal {D}_{m}\) will contribute to \(\mathcal {L}_{\text {RR}_{m}}\). This form of the loss highlights that minimizing \(\mathcal {L}_{\text {RR}}\) requires an alignment of \(V_{m} \Sigma _{m}^{T}\Sigma _{m} V_{m}^{T}\mathbf {w}_{m}^{h}\mathbf {c}_{m_h}\) and \(\mathbf {w}_{m}^{h}\mathbf {c}_{m_h}\).
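The SVD step can be written out explicitly. The following is a sketch under the standard convention \(M_{m}=U_{m}\Sigma _{m}V_{m}^{T}\) with orthogonal \(U_{m}\) and \(V_{m}\); the symbol \(U_{m}\) and the singular values \(\sigma _{f}\) are notation assumed here for illustration.

```latex
\begin{aligned}
M_{m}^{T}M_{m}
  &= \left(U_{m}\Sigma_{m}V_{m}^{T}\right)^{T} U_{m}\Sigma_{m}V_{m}^{T}
   = V_{m}\Sigma_{m}^{T}\,U_{m}^{T}U_{m}\,\Sigma_{m}V_{m}^{T}
   = V_{m}\Sigma_{m}^{T}\Sigma_{m}V_{m}^{T}, \\
\sigma_{1}=\dots=\sigma_{r}=1
  &\;\Longrightarrow\;
  M_{m}^{T}M_{m} = \sum_{f=1}^{r} \mathbf{v}_{m}^{f}\left(\mathbf{v}_{m}^{f}\right)^{T}.
\end{aligned}
```

With all r nonzero singular values equal to 1, \(M_{m}^{T}M_{m}\) is therefore the orthogonal projector onto the span of the first r columns of \(V_{m}\): it leaves vectors in that span unchanged and maps vectors orthogonal to it to zero.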

By construction, \(\Sigma _{m}\) contains the square roots of the eigenvalues of \(M_{m}^{T} M_{m}\) as its diagonal entries. The matrix has rank \(r=\operatorname {rank}(M_{m}^{T}M_{m})\), and since all eigenvalues are required to be 1 by Eq. (A3), the multiplication with \(\Sigma _{m}\) in Eq. (A5) effectively performs a selection of r column vectors from \(V_{m}\). Hence, we can focus on the interaction between the basis vectors \(\mathbf {w}_{m}\) and the r active column vectors of \(V_{m}\):

As \(V_{m}\) is obtained via an SVD it contains r orthogonal eigenvectors of \(M_{m}^{T}M_{m}\). Eq. (A3) requires \(\mathbf {w}_{m}^{1},\ldots ,\mathbf {w}_{m}^{q}\) to be eigenvectors of \(M_{m}^{T}M_{m}\), but since typically the dimension of the input dataset is much larger than the dimension of the weight matrix, i.e., \(r \le q\), in practice only r vectors from \(\mathcal {B}_{m}\) can fulfill Eq. (A3). This means the vectors \(\mathbf {v}_{m}^{1},\ldots ,\mathbf {v}_{m}^{r}\) in \(V_{m}\) are a subset of the orthonormal basis vectors \(\mathcal {B}_{m}:\left\langle \mathbf {w}_{m}^{1},\ldots ,\mathbf {w}_{m}^{q}\right\rangle\) with \(\left\| \mathbf {w}_{m}^{h}\right\| _2^{2}=1\). Then, for any \(\mathbf {w}_{m}^{h}\) we have

Thus, if \(V_{m}\) contains \(\mathbf {w}_{m}^{h}\), we have

and we trivially fulfill the constraint

However, due to r being smaller than q in practice, \(V_{m}\) typically cannot include all vectors from \(\mathcal {B}_{m}\). Thus, if \(V_{m}\) does not contain \(\mathbf {w}_{m}^{h}\), we have \((\mathbf {v}_{m}^{f})^{T}\mathbf {w}_{m}^{h}=0\) for every vector \(\mathbf {v}_{m}^{f}\) in \(V_{m}\), which means

As a consequence, the constraint Eq. (A2) is only partially fulfilled:

As the \(\mathbf {w}_{m}^{h}\) have unit length, the factors \(\mathbf {c}_{m}\) determine the contribution of a datum to the overall loss. A feature \(\mathbf {w}_{m}^{h}\) that appears multiple times in the input data will have a correspondingly larger factor in \(\mathbf {c}_{m}\) and hence will contribute more strongly to \(\mathcal {L}_{\text {RR}}\). The \(L^2\) formulation of Eq. (A1) leads to the largest contributors being minimized most strongly, and hence the repeating features of the data, i.e., the dominant features, need to be represented in \(V_{m}\) to minimize the loss. Interestingly, this argumentation also holds when additional loss terms are present, e.g., a loss term for classification. In such a case, the factors \(\mathbf {c}_{m}\) will be skewed toward those components that fulfill the additional loss terms, i.e., they favor basis vectors \(\mathbf {w}_{m}^{h}\) that contain information about these loss terms. This, e.g., leads to clear digit structures being embedded in the weight matrices for the MNIST example below.

In summary, to minimize \(\mathcal {L}_{\text {RR}}\), \(V_{m}\) is driven toward containing r orthogonal vectors \(\mathbf{w}_{m}^{h}\) which represent the most frequent features of the input data, i.e., the dominant features. It is worth emphasizing that \(\mathcal {B}_{m}\) above is only an auxiliary basis, i.e., the derivation does not depend on any particular choice of \(\mathcal {B}_{m}\).
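The role of the dominant features can be illustrated numerically. The sketch below assumes an idealized linear layer with unit singular values, so that \(M_{m}^{T}M_{m}=VV^{T}\) for a matrix V with r orthonormal columns; the synthetic data, sizes, and names are hypothetical. It compares the reconstruction loss when V holds the r dominant directions of the data with the loss for r random orthonormal directions.

```python
import numpy as np

rng = np.random.default_rng(1)
t, s_in, r = 500, 8, 2  # hypothetical sample count, input size, and rank

# Synthetic data: two dominant directions plus weak isotropic noise.
basis, _ = np.linalg.qr(rng.standard_normal((s_in, s_in)))
scales = np.array([5.0, 3.0] + [0.1] * (s_in - 2))
data = (rng.standard_normal((t, s_in)) * scales) @ basis.T  # rows are samples d^i

def rr_loss(V, data):
    """Sum_i ||V V^T d^i - d^i||^2 for V with orthonormal columns."""
    recon = data @ V @ V.T
    return float(np.sum((recon - data) ** 2))

# Candidate 1: the r dominant right singular vectors of the data matrix.
_, _, Vt = np.linalg.svd(data, full_matrices=False)
V_dom = Vt[:r].T

# Candidate 2: r random orthonormal directions.
V_rand, _ = np.linalg.qr(rng.standard_normal((s_in, r)))

loss_dom = rr_loss(V_dom, data)
loss_rand = rr_loss(V_rand, data)
print(f"loss with dominant directions: {loss_dom:.1f}")
print(f"loss with random directions:   {loss_rand:.1f}")
```

In this linear setting the dominant directions coincide with the leading principal components of the data, which is the sense in which the reverse-pass loss relates to SVD and PCA.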

A.2 Examples of network architectures with pretraining

While the proposed pretraining is significantly easier to integrate into training pipelines than classic autoencoder pretraining, there are subtleties w.r.t. the order of the operations in the reverse pass that we clarify with examples in the following sections. To specify NN architectures, we use the following notation: C(k, l, q) and D(k, l, q) denote convolutional and deconvolutional operations, respectively, while fully connected layers are denoted with F(l), where k, l, q denote kernel size, output channels, and stride size, respectively. The bias of a CNN layer is denoted with b. I/O(z) denote input/output with dimensionality z. \(I_{r}\) denotes the input of the reverse pass network. tanh, relu, lrelu denote hyperbolic tangent, ReLU, and leaky ReLU activation functions (AFs), where the leaky ReLU typically uses a slope of 0.2 for the negative half-space. UP, MP and BN denote \(2\times\) nearest-neighbor up-sampling, max pooling with \(2\times 2\) filters and stride 2, and batch normalization, respectively.

Below we provide additional examples of how to realize the pretraining loss \(\mathcal {L}_{\text {RR}}\) in a neural network architecture. As explained in the main document, the constraint Eq. (A1) is formulated via

with \(\mathbf {d}_{m}\) and \(\lambda _{m}\) denoting the vector of activated intermediate data in layer m from the forward pass and a scaling factor, respectively. \(\mathbf {d}^{'}_{m}\) denotes the activations of layer m from the reverse pass. For instance, let \(L_m()\) denote the operations of a layer m in the forward pass, and \(L_m'()\) the corresponding operations for the reverse pass. Then, \(\mathbf {d}_{m+1}=L_m(\mathbf {d}_{m})\), and \(\mathbf {d}^{'}_{m}=L_{m}'(\mathbf {d}^{'}_{m+1})\).
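For a single layer, the connection between this forward/reverse formulation and Eq. (A1) can be sketched as follows. The example assumes a purely linear layer without bias or activation whose reverse operation reuses the transposed weight matrix, and it assumes a squared \(L^2\) difference weighted by the scaling factor; the sizes, the value of the scaling factor, and the function names `L_fwd` and `L_rev` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
M = rng.standard_normal((3, 5))  # weight matrix of one layer (s_out x s_in)
d_m = rng.standard_normal(5)     # forward input d_m of the layer
lam = 0.1                        # hypothetical scaling factor lambda_m

def L_fwd(d):
    """Forward operation L_m, kept linear for simplicity."""
    return M @ d

def L_rev(d_next):
    """Reverse operation L'_m, reusing the transposed weights (assumption)."""
    return M.T @ d_next

d_next = L_fwd(d_m)   # d_{m+1}
d_rev = L_rev(d_next)  # d'_m, reconstructed from the layer output

# The reverse-pass difference equals the M^T M d - d term of Eq. (A1).
loss_reverse = lam * np.sum((d_m - d_rev) ** 2)
loss_a1 = lam * np.sum((M.T @ M @ d_m - d_m) ** 2)
print(loss_reverse, loss_a1)
```

Under these assumptions both expressions agree, because the reverse pass computes \(M_{m}^{T}M_{m}\mathbf {d}_{m}\) when it starts from the output of the same layer.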

When Eq. (A12) is minimized, we obtain activated intermediate content during the reverse pass that reconstructs the values computed in the forward pass, i.e., \(\mathbf {d}^{'}_{m+1}=\mathbf {d}_{m+1}\) holds. Then, \(\mathbf {d}^{'}_{m}\) can be reconstructed from the incoming activations from the reverse pass, i.e., \(\mathbf {d}^{'}_{m+1}\), or from the output of layer m, i.e., \(\mathbf {d}_{m+1}\). Using \(\mathbf {d}^{'}_{m+1}\) results in a global coupling of input and output throughout all layers, i.e., the full loss variant. On the other hand, \(\mathbf {d}_{m+1}\) yields a variant that ensures local reversibility of each layer, and yields a very similar performance, as we will demonstrate below. We employ this local loss for networks without a unique, i.e., bijective, connection between two layers, which intuitively is the case when inputs cannot be reliably reconstructed from outputs.
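The difference between the full and the local variant can be sketched for a small stack of layers. The example assumes purely linear layers whose reverse operations reuse the transposed weights, and it assumes that the reverse pass starts from the forward output; layer sizes, scaling factors, and function names are hypothetical.

```python
import numpy as np

def forward(d0, Ms):
    """Forward pass: returns [d_0, d_1, ..., d_n] with d_{m+1} = M_m d_m."""
    ds = [d0]
    for M in Ms:
        ds.append(M @ ds[-1])
    return ds

def reverse(ds, Ms, local):
    """Reverse pass with transposed weights.

    local=False: d'_m = M_m^T d'_{m+1}  (full variant, chained through all layers)
    local=True:  d'_m = M_m^T d_{m+1}   (local variant, per-layer reconstruction)
    """
    rev = [None] * len(ds)
    rev[-1] = ds[-1]  # assumed reverse-pass input: the forward output
    for m in range(len(Ms) - 1, -1, -1):
        source = ds[m + 1] if local else rev[m + 1]
        rev[m] = Ms[m].T @ source
    return rev

def rr_loss(ds, rev, lams):
    """Sum_m lambda_m * ||d_m - d'_m||^2 over all layer inputs."""
    return float(sum(lam * np.sum((d - dr) ** 2)
                     for lam, d, dr in zip(lams, ds[:-1], rev[:-1])))

rng = np.random.default_rng(3)
lams = [1.0, 1.0]

# Generic, non-invertible layers: 6 -> 4 -> 3.
Ms = [0.5 * rng.standard_normal((4, 6)), 0.5 * rng.standard_normal((3, 4))]
ds = forward(rng.standard_normal(6), Ms)
full_loss = rr_loss(ds, reverse(ds, Ms, local=False), lams)
local_loss = rr_loss(ds, reverse(ds, Ms, local=True), lams)

# Perfectly invertible layers (square orthogonal weights): both variants vanish.
Qs = [np.linalg.qr(rng.standard_normal((4, 4)))[0] for _ in range(2)]
ds_q = forward(rng.standard_normal(4), Qs)
full_q = rr_loss(ds_q, reverse(ds_q, Qs, local=False), lams)
local_q = rr_loss(ds_q, reverse(ds_q, Qs, local=True), lams)

print(f"generic layers:    full={full_loss:.3f}, local={local_loss:.3f}")
print(f"orthogonal layers: full={full_q:.1e}, local={local_q:.1e}")
```

In this sketch the two variants coincide for the last layer, since both start from the forward output, and differ only in earlier layers, where the full variant propagates reconstruction errors from later layers while the local variant does not.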