Abstract

This study demonstrates how up-to-date, dynamic economic big data can be exploited to select the economic attributes that indicate logistics performance as reflected by the Logistics Performance Index (LPI). The analysis relies on machine learning (ML) regression applied to a suitable set of economic features. The goal of this research is to determine the set of economic attributes that best predicts a country's logistics performance and to compare several ML regression algorithms in order to improve prediction accuracy. For feature selection, the filter techniques of correlation and principal component analysis (PCA) and the embedded techniques of LASSO and Elastic-net regression are utilized. Then, based on the selected features, the ML regression approaches artificial neural network (ANN), multi-layer perceptron (MLP), support vector regression (SVR), random forest regression (RFR), and Ridge regression are used to train and validate the data set. The findings demonstrate that the PCA and Elastic-net feature sets come closest to adequate performance based on the error measurement criteria. Union and intersection operations on these acceptable feature sets are then used to make a more precise decision, and the union of feature sets yields the best results. The findings suggest that ML algorithms are capable of assisting in the selection of a proper set of economic factors that indicate a country's logistics performance. Furthermore, the ANN was shown to be the most effective prediction model in this investigation.

1 Introduction

The World Bank's Logistics Performance Index (LPI) is a well-known source of practical information available to policymakers for judging a country's logistics performance [1]. The LPI, which has been assessing nations' logistics performance on a biennial basis by analyzing survey data, is perhaps the most important tool to emerge from trade facilitation. It delivers a macroeconomic overview of how policymakers may favorably affect global supply chain capabilities and the performance of relevant businesses, covering the efficiency of the clearance process, trade quality, and transportation-related infrastructure [2]. It would be advantageous to have continuous, up-to-date information reflecting a country's logistical efficiency. Such information could be used to monitor changes in the underlying variables and to continuously evaluate trends or predict logistics efficiency, giving policymakers rapid access to projected logistics performance so that they can improve the country's logistics and supply chain capabilities.

Prior research has shown that variables such as institutional reforms and resource enhancements significantly accelerate logistics performance. According to [3], countries with a low level of corruption and a stable political environment are more likely to have a high level of logistics performance, and improvements in resource supply (such as infrastructure, technology, labor, and education) related to the country's competitiveness have a significant positive effect on performance. Similarly, [1] emphasizes that governance weaknesses and societal instability might reduce performance. However, the variables in these studies are static data, and some of them were themselves collected through surveys.

Since then, studies have demonstrated a significant link between a nation's logistics performance (expressed by LPI scores) and economic factors such as the country's economic development indicators [1, 4]. Examples include GDP per capita [1] and export and import volumes; LPI components have been shown to have a substantial positive influence on expanding international trade in both imports and exports [5]. Nevertheless, there remain unstudied economic factors that may be substantially connected with logistics efficiency.

This study demonstrates how up-to-date, dynamic economic big data can be exploited to select the economic attributes that indicate logistics performance as reflected by the LPI. The analysis relies on machine learning (ML) regression applied to a suitable subset of economic features. The choice of features matters because the accuracy of ML prediction outcomes depends not only on the model structure and the associated training algorithm but also on the feature space constructed from the initial feature set and the feature selection algorithm [6]. In ML applications, feature selection is often employed as part of the pre-processing phase to obtain a subset of features by removing elements with minimal predictive information [7].

There are two aims in this study: (1) to determine the ideal subset of economic features that best represents a particular anticipated variable for predicting a country's logistics performance and (2) to improve prediction accuracy by employing a set of alternative ML regression algorithms. This research looks into two major research questions: to begin, can ML algorithms assist in selecting the proper subset of economic features that reflect the country's logistics performance? Second, what is the appropriate ML regression approach for predicting logistics performance based on certain economic attributes? This paper's structure is as follows. Section 2 includes a review of the literature on feature selection and regression machine learning. Section 3 contains methodology, which includes the ML feature selection procedure, data sources and data preparation, data analysis, and parameter setup. Sections 4 and 5 provide the results and discussion, as well as the concluding remark and future work.

2 Literature review

2.1 Feature selection

The process of selecting a subset of important features, especially variables, for model building is known as feature selection. It is well recognized that a subset of relevant features may be beneficial in improving model performance. With minimum information loss, the feature selection process aims to eliminate duplicate or superfluous features and other features that are closely connected in the data. It is often used to make the model more comprehensible and to improve generality by decreasing variance [8]. The three forms of feature selection strategies are filter, wrapper, and embedded.

To rank all features, filter techniques rely on statistical properties. The challenge of feature selection is viewed as a ranking problem by the majority of filter techniques. These techniques are independent of the ML learning algorithm that will be used with the selected subset [9]. This category comprises mutual information-based, correlation-based, Chi-square test-based and principal component analysis-based techniques. Filter techniques are often used in high-dimensional datasets because of their processing efficiency [8].

Wrapper techniques select feature subsets based on how useful they are to a certain predictor or classifier. Selection is viewed as a search problem in these techniques, with various feature combinations created, evaluated, and compared to other combinations. The search is driven by heuristic optimization techniques. Simplified methods such as sequential search, or evolutionary algorithms such as particle swarm optimization (PSO) or the genetic algorithm (GA), which generate locally optimal results and are computationally viable, are used to achieve good results [6]. As a selection criterion, these approaches use the performance of the inductive algorithm. They wrap the learning algorithm with feature selection and estimate the benefit of adding or deleting a feature using cross-validation [9]. Wrapper techniques outperform filter methods because, in each cycle, a new prediction model or learning algorithm evaluates a different feature subset [10].

By embedding feature selection within model learning, embedded methods provide a trade-off between filter techniques and wrapper methods. They return both the learned model and the selected features at the same time [11], and their learning and feature selection components cannot be separated [12]. A frequent example is the use of regularization while the model is being trained [6]. Regularization models such as sparse linear discriminant analysis, the regularized support vector machine (SVM), and LASSO are the most commonly used embedded approaches [11]. As an instance of an embedded method, the LASSO technique regularizes the parameters of a linear model using an L1-norm penalty, shrinking the coefficients of less correlated features to zero [13]. Many new sparse learning approaches for multi-class classification have been suggested, including L2,1-norm regularized regression models [11].

Since there are several methods for selecting features, we used filter techniques in this work to rank the predictors under consideration. Then, using well-known ML regression methods, we evaluated several candidate subsets of selected features with a particular learning algorithm and ranked those that performed best. Furthermore, this work compares the embedded techniques of penalized linear regression, which are helpful because they allow simultaneous feature selection and prediction [14].

2.2 ML of regression

ML regression approaches are rapidly being used. The following fundamental techniques are utilized in the literature: artificial neural network (ANN), SVM, and random forest (RF) [15]. These are effective data-driven techniques. These models can also give regression results in a variety of fields, including energy, environmental, waste and pollution, medical, information technology, finance, and business and economics.

One of the most significant artificial intelligence approaches is ANN [16], and extensions of MLP-ANN models are possibly the most popular and frequently utilized in the field of machine learning prediction. These models serve as a reliable tool for making precise predictions [17]. [18] use nonlinear regression techniques to predict ozone levels based on main pollutants and meteorological variables. The findings produced by the nonlinear ANN regression were satisfactory, and the technique showed its robustness as a tool for evaluating and forecasting air quality situations. In medicine, improving simulation for medical operations requires a versatile and reliable predictor of body size, shape, and ligament thickness. Using clinical data, the ANN can predict patient conditions, and it produced more accurate results than traditional regression analysis methods [19]. MLP-ANN has also been validated for predicting the yearly generation rates of household, commercial, and building and demolition wastes. The MLP-ANN models demonstrated high prediction accuracy, making them useful for forecasting waste generation rates from various sources and potentially a cost-effective strategy for developing integrated municipal solid waste management systems [20]. In business and economics, researchers suggested that ANN regression models can cope with the challenge of predicting GDP growth. It is demonstrated that the ANN model can predict GDP growth rates significantly more accurately than a corresponding linear model [21]. Moreover, ANN models are appropriate tools for analyzing economic data such as GDP and GDP per capita since they allow for a trade-off between the capacity to predict these features and model size [21, 22]. MLP-ANN was used by [23] to predict customer quality in e-commerce social networks. In the context of word-of-mouth marketing, MLP-ANN produces a strong model for predicting which referrers will attract high-quality referrals (in terms of transaction volume). The MLP-ANN technique also performed better in estimating building costs [16].

The use of SVM for classification and regression problems has grown significantly. Support vector regression (SVR) is a subset of SVM [24]. SVR techniques are typically used for prediction, and the results are usually satisfactory. As an example, consider the prediction of a large office building's energy consumption, in which the summer hourly cooling load statistics are utilized as energy consumption data. In terms of accuracy, robustness, and generalization ability, the findings show that the suggested SVR-based technique outperforms commonly used methods [25]. [26] utilized ε-SVR and ν-SVR with linear, polynomial, radial basis function, and sigmoid kernels for predicting software enhancement effort in the information technology area. The prediction accuracies of both types of SVR were statistically better than those of statistical regressions. In banking, SVR and enhanced versions of SVR techniques are used to forecast corporate bond losses in the event of default [27]. Overall, the empirical findings indicate that SVR techniques are a promising method for banks to predict loss given default.

Random forest regression (RFR) is a widely used method for analyzing high-dimensional data. In sparse settings its advantages may be reduced by poor predictors, necessitating a pre-estimation dimension reduction (targeting) phase. Nonetheless, this approach is usually useful for prediction and frequently produces an adequate outcome. For example, [28] suggested RFR-based techniques for battery capacity estimation, with experimental findings demonstrating that the proposed technique is capable of evaluating the health status of various batteries and is promising for online battery capacity estimation. Furthermore, when RFR was compared with multiple linear regression (MLR), RFR showed a significantly better predictive potential than a typical linear regression model, and it is regarded as a particularly promising approach for large-scale modeling of groundwater nitrate contamination [29]. In economics, [30] used RFR to estimate GDP at the town scale and compared it with MLR, with the RFR model achieving considerably greater accuracy.

3 Methodology

3.1 ML feature selection process

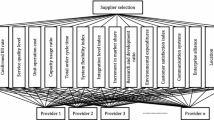

Figure 1 depicts the merging of two major approaches in the feature selection process. First, we use the filter approaches of correlation and principal component analysis (PCA) to identify candidate feature sets. Second, the embedded technique employs the penalized linear regression approaches LASSO and Elastic-net (E-net). In the case of LASSO and E-net, the selected features can be validated directly after the model has been trained. For the candidate feature sets obtained from the filter methods, the data associated with those feature sets are used to train ANN, MLP-ANN, SVR, RFR, and Ridge regression models. LASSO and E-net select subsets with high accuracy but may lack optimal prediction rates; the features selected by both regression algorithms are therefore also used to train the suggested ML regression methods. The models based on the specified sets of features are then validated using the test dataset. Finally, the models' performance is evaluated.

3.2 Data sources and data preparation

3.2.1 LPI of World Bank

The World Bank’s Logistics Performance Index (LPI) is a biennially announced indicator for judging a country's logistics performance. For this research period from 2010 to 2018 (5 periods), there are 134 countries with LPI information available (see Table 11 of the 'Appendix').

3.2.2 The economic statistics data of S&P global market intelligence

The economic features are derived from the macroeconomic data of S&P Global Market Intelligence's economic statistics. The economic and demographic statistics data provide the macroeconomic attribute, which includes 52 features divided into five categories: (1) market size and growth (total of 10 features), (2) macroeconomic stability (total of 17 features), (3) personal income and labor (total of 9 features), (4) external sector (total of 13 features), and (5) tax rates (total of 3 features).

3.2.3 Data preparation

For data preparation, the study covers 5 periods from 2009 to 2018 (two-year averages, to match the LPI data). An initial set of 26 features was selected, which are available for 100 countries when mapped to the LPI data (see Table 11 of the 'Appendix'). Table 1 displays the 26 candidate economic features. This initial selection is motivated by the trade-off between the number of instances and the missing values in the country economic data. The number of instances should be maximized, since larger datasets typically yield higher accuracy [31], while missing values should be minimized, because they might cause bias and reduce analytical efficiency [32].

For the dataset of 500 instances (5 periods of 100 countries), 70% of the data is utilized as a training set for ML methods, while the remainder is used to test or validate model performance. Furthermore, just one missing item in the dataset is replaced with a mean value based on the country's economic attribute.
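The split and the imputation described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the toy panel, the field names `country` and `gdp_c`, the random seed, and the reading of "mean value based on the country's economic attribute" as the mean of that attribute over the same country's other periods are all assumptions.

```python
import random
from statistics import mean

def impute_country_mean(rows, feature):
    """Fill missing values of `feature` with the mean of the same country's observed values."""
    observed = {}
    for r in rows:
        if r[feature] is not None:
            observed.setdefault(r["country"], []).append(r[feature])
    filled = []
    for r in rows:
        r = dict(r)  # copy so the input rows are left untouched
        if r[feature] is None:
            r[feature] = mean(observed[r["country"]])
        filled.append(r)
    return filled

def train_test_split(rows, train_fraction=0.7, seed=0):
    """Shuffle the instances and cut them into a training part and a test part."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]

# Hypothetical panel: 100 countries x 5 periods = 500 instances.
rows = [{"country": f"C{c}", "period": p, "gdp_c": float(c + p)}
        for c in range(100) for p in range(5)]
rows[7]["gdp_c"] = None                      # one missing item (country C1, period 2)
rows = impute_country_mean(rows, "gdp_c")
train, test = train_test_split(rows)
print(len(train), len(test))                 # 350 150
```

With 500 instances, a 70% split gives 350 training and 150 test instances.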

3.3 Data analysis and parameter setting

3.3.1 Feature selection

3.3.1.1 Correlation

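In statistics, the Pearson correlation coefficient is a measure of the linear correlation between two variables. It has a value between − 1 and + 1, where + 1 represents completely positive linear correlation, 0 represents no linear correlation, and − 1 represents completely negative linear correlation [33]. The Pearson correlation coefficient \(r\) is defined as follows:

\[ r = \frac{\sum_{i = 1}^{n} \left( x_{i} - \overline{x} \right)\left( y_{i} - \overline{y} \right)}{\sqrt{\sum_{i = 1}^{n} \left( x_{i} - \overline{x} \right)^{2}} \sqrt{\sum_{i = 1}^{n} \left( y_{i} - \overline{y} \right)^{2}}} \]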

where \(n\) is the sample size; \(x_{i}\) and \(y_{i}\) are the individual sample points indexed with \(i\); \(\overline{x}\) is the sample mean representing as \(\frac{1}{n}\mathop \sum \nolimits_{i = 1}^{n} x_{i}\), and analogously for \(\overline{y}\).

The correlation matrix is used to select the subset of relevant features. In this study, input attributes that are highly correlated with the output attribute are considered. [34] stated that the correlation is weak positive if the \(r\) value is 0 to 0.25, fair positive if it is 0.25 to 0.5, good if it is 0.5 to 0.75, and excellent if it is more than 0.75. The same ranking applies to negative correlations, with \(r\) in [\(-\) 1, 0]. The correlation tool of the data analysis package in Microsoft Excel is utilized to obtain the result. The Excel tool is a cost-effective alternative to costly software, and it is simple to use for basic data analysis.
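As a small illustration of the coefficient and of the strength categories quoted from [34], the following sketch computes \(r\) from two samples and labels its magnitude. The function names are hypothetical, and the handling of the exact boundary values (for example, whether 0.5 counts as "fair" or "good") is an assumption, since the source gives overlapping interval ends.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def strength(r):
    """Label |r| with the categories quoted in the text (boundary handling assumed)."""
    a = abs(r)
    if a > 0.75:
        return "excellent"
    if a >= 0.5:
        return "good"
    if a >= 0.25:
        return "fair"
    return "weak"

print(strength(pearson_r([1, 2, 3, 4], [2, 4, 6, 8])))  # excellent
print(strength(-0.6))                                   # good
```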

3.3.1.2 PCA

PCA is arguably the most common multivariate statistical technique for reducing data with multiple dimensions, and it is frequently used to reduce well-being indicators to a single index of well-being. PCA was performed in this study using RStudio (version 4.1.1) to extract the principal components of the input and output variables after the datasets were centered and scaled. Two selection procedures were applied. In the first, the factor loading criterion is set to 0.3, which means that only variables with an absolute factor loading equal to or greater than 0.3 are considered, since a loading below 0.3 is regarded as insignificant. The varimax factor rotation approach was used to minimize the number of variables with high loadings on a factor and so assure factor interpretability [35]. In the second, PCA-biplots were created for feature selection [36], with PC1 designated as dimension one and PC2 as dimension two. A biplot is a statistical graph that depicts the relationships between multiple parameters [37]; it is used here to display and validate the selection. In a PCA-based biplot, the variables are projected as vectors.
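The loading criterion can be expressed as a simple filter: a feature is retained if its absolute loading reaches 0.3 on at least one of the retained components. The sketch below assumes this "any component" reading, and the loading values are invented purely for illustration; they are not results from this study.

```python
def select_by_loading(loadings, n_components, threshold=0.3):
    """Keep features whose absolute loading reaches the threshold on any retained component."""
    return [name for name, row in loadings.items()
            if any(abs(v) >= threshold for v in row[:n_components])]

# Hypothetical loadings on PC1..PC3 (illustrative numbers only).
loadings = {
    "GDP_C": [0.41, -0.05, 0.10],
    "Exp":   [0.12, 0.35, -0.02],
    "LF":    [0.08, 0.21, 0.29],
    "TB":    [0.02, -0.10, -0.33],
}

print(select_by_loading(loadings, 2))  # ['GDP_C', 'Exp']
print(select_by_loading(loadings, 3))  # ['GDP_C', 'Exp', 'TB']
```

Retaining more components can only enlarge the selected set, which is why the feature sets built from more components in this kind of procedure are nested.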

3.3.1.3 Penalized linear regression

Penalized regression models, such as the least absolute shrinkage and selection operator (LASSO) and Elastic-net (E-net), perform feature selection and prediction simultaneously. Their high performance arises because these models avoid overfitting and reduce model complexity by penalizing the size of the coefficients [38]. [39] introduced LASSO, a well-known penalized technique for choosing individual variables, which is based on the following linear model:

\[ y_{i} = \beta_{0} + x_{i}^{\prime} \beta + \varepsilon_{i} , \quad i = 1, \ldots , N. \]

The feature selection problem is expressed as finding the elements of \(\beta\) that equal zero. Estimates are selected by

\[ \mathop {\min }\limits_{\beta_{0} , \beta } \sum_{i = 1}^{N} \left( y_{i} - \beta_{0} - x_{i}^{\prime} \beta \right)^{2} \]

subject to \(\sum \left| {\beta_{j} } \right| \le t\),

where \(y_{i}\) is the dependent variable, \(x_{i}\) is the vector of predictor variables, \(\beta_{0}\) is the intercept, \(\beta\) contains the unknown parameters of the regression equation, and \(\varepsilon_{i}\) is the error term. Furthermore, \(t > 0 \) is a tuning parameter that governs the degree of shrinkage applied to the estimates. This is equivalent to minimizing

\[ \sum_{i = 1}^{N} \left( y_{i} - \beta_{0} - x_{i}^{\prime} \beta \right)^{2} + \lambda \sum \left| {\beta_{j} } \right| . \]

This is ordinary least squares with a penalty term, weighted by \(\lambda\), on large coefficient estimates. \(\sum \left| {\beta_{j} } \right|\) is a constraint on the coefficient vector that yields a sparse solution vector \(\beta_{\lambda }\); as \(\lambda\) increases, more members of \(\beta_{\lambda }\) become zero. LASSO can select at most \(N - 1\) features, where \(N\) is the sample size [40]. This might be an issue when performing a regression with a limited number of samples but a large number of features [41].

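The Elastic-net approach attempts to overcome the constraints of the LASSO technique; it is especially effective when there are numerous correlated features [42]. This model is equivalent to minimizing

\[ \sum_{i = 1}^{N} \left( y_{i} - \beta_{0} - x_{i}^{\prime} \beta \right)^{2} + \lambda \left[ \alpha \sum \left| {\beta_{j} } \right| + \left( 1 - \alpha \right) \sum \beta_{j}^{2} \right] . \]

The value of \(\alpha\) in Elastic-net lies in the interval \(\left[ {0, 1} \right]\); if \(\alpha = 1\), the formulation reduces to the LASSO algorithm.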

In this paper, the penalized linear regression analysis was carried out using RStudio in which the glmnet package is employed. In addition, the datasets were centered and scaled, and tenfold cross-validation was performed to produce internally valid performance metrics.
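To make the shrinkage mechanism concrete, the following sketch fits an Elastic-net by cyclic coordinate descent with soft-thresholding. It is an illustration only and not the glmnet implementation used in the study: it assumes the objective scaling documented for glmnet, \(\frac{1}{2N}\sum (y_{i} - x_{i}^{\prime}\beta)^{2} + \lambda[\alpha \sum |\beta_{j}| + \frac{1 - \alpha}{2}\sum \beta_{j}^{2}]\), a fixed \(\lambda\) instead of cross-validation, and a small invented dataset in which the second feature is nearly irrelevant.

```python
def soft_threshold(z, gamma):
    """Shrink z toward zero by gamma; values within [-gamma, gamma] become exactly 0."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0

def standardize(col):
    """Center a column and scale it to unit (population) variance."""
    n = len(col)
    m = sum(col) / n
    sd = (sum((v - m) ** 2 for v in col) / n) ** 0.5
    return [(v - m) / sd for v in col]

def center(values):
    m = sum(values) / len(values)
    return [v - m for v in values]

def elastic_net(cols, y, lam, alpha, n_iter=100):
    """Cyclic coordinate descent; `cols` are standardized columns, `y` is centered."""
    n = len(y)
    beta = [0.0] * len(cols)
    resid = list(y)                       # residual for the current beta (all zeros)
    for _ in range(n_iter):
        for j, col in enumerate(cols):
            # Correlation of feature j with the residual that excludes feature j itself.
            rho = sum(c * (r + c * beta[j]) for c, r in zip(col, resid)) / n
            new = soft_threshold(rho, lam * alpha) / (1.0 + lam * (1.0 - alpha))
            if new != beta[j]:
                delta = new - beta[j]
                resid = [r - c * delta for c, r in zip(col, resid)]
                beta[j] = new
    return beta

# Invented data: y depends on x1; x2 is a nearly irrelevant indicator.
x1 = [1, 2, 3, 4, 1, 2, 3, 4]
x2 = [0, 0, 0, 0, 1, 1, 1, 1]
y = [3.1, 5.9, 9.0, 12.0, 3.0, 6.1, 9.1, 11.9]
cols = [standardize(x1), standardize(x2)]
y_c = center(y)

beta_lasso = elastic_net(cols, y_c, lam=0.1, alpha=1.0)  # L1 penalty only
beta_ridge = elastic_net(cols, y_c, lam=0.1, alpha=0.0)  # L2 penalty only
print(beta_lasso, beta_ridge)
```

In this toy case the L1 penalty sets the coefficient of the irrelevant feature exactly to zero, whereas the pure L2 penalty only shrinks it, which is the behaviour that makes LASSO and E-net usable for feature selection.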

3.3.2 Regression and validation

3.3.2.1 ANN

Three critical aspects influence ANN: the unit's input and activation functions, network architecture, and the weight of each input connection [43]. It is composed of three levels of nodes (neurons), namely the input, hidden, and output layers (Fig. 2a). The data sample is accepted by the input layer, and the target category is returned by the output layer [44]. The neuron, the fundamental unit of these networks, mimics the human counterpart, having dendrites for taking input variables and emitting an output value that may be used as input for other neurons [45]. The neural network’s layers of fundamental processing units are interconnected, with weights assigned to each connection [46], which are changed during the network’s learning process. This step improves not only the interconnections between the layers of neurons, but also the parameters of the transfer functions between one layer and another, reducing mistakes. Finally, the neural network’s final layer is in charge of combining all of the signals from the preceding layer into a single output signal—the network’s reaction to specific input data [15].

A basic ANN structure is depicted in Fig. 2b, which includes neuron connections, biases assigned to neurons, and weights assigned to connections. Two equations can be used to describe a neuron \(k\) [47]:

\[ u_{k} = \sum_{j = 1}^{n} w_{kj} x_{j} \]

and

\[ y_{k} = f\left( u_{k} + b_{k} \right) , \]

where \(x_{1}\), \(x_{2}\), …, \(x_{n}\) are the inputs, \(w_{k1}\), \(w_{k2}\), …, \(w_{kn}\) are the neuron weights, \(u_{k}\) is the result of the weighted input calculation, \(b_{k}\) is the bias term, \(f\left( \cdot \right)\) is the activation function, and \(y_{k}\) is the output. There are numerous algorithms that may be used to train a network [43].

The MATLAB 2020b Neural Network Toolbox was used in this investigation. The ANN was built using the default network and parameters, with hidden layer sizes of 1 \(\times\) 10 (one hidden layer with ten nodes). For prediction tasks, a feed-forward ANN with backpropagation learning is built by default; backpropagation is the most often used supervised algorithm [48]. TRAINLM is a network training function that uses the Levenberg–Marquardt optimization technique to update the weight and bias variables. TRAINLM is a fast algorithm, although it takes up more memory than other algorithms. LEARNGDM (gradient descent with momentum weight and bias learning function) is used for error minimization. This function computes the weight change for a specific neuron from the neuron's input and error terms, weight and bias, learning rate, and momentum term, and corresponds to gradient descent with momentum backpropagation. The tangent sigmoid function (TANSIG) is used as a transfer function, given by the following equation for the input variable \(x\) [49]:

\[ f\left( x \right) = \frac{2}{1 + e^{ - 2x} } - 1 \]

TANSIG is employed in both the hidden and output layers. It calculates a layer's output from its net input. The values returned by this activation function range from − 1 to + 1.
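The forward pass of such a network can be sketched in a few lines. The example below implements a single neuron (weighted sum plus bias, passed through the tangent sigmoid) and a network with one hidden layer whose output neuron also uses the tangent sigmoid, as described above. The weights and inputs are arbitrary illustrative numbers, not trained values, and no training (Levenberg–Marquardt or otherwise) is performed.

```python
import math

def tansig(x):
    """Tangent sigmoid transfer function; mathematically identical to tanh(x)."""
    return 2.0 / (1.0 + math.exp(-2.0 * x)) - 1.0

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus bias, passed through the activation."""
    u = sum(w * x for w, x in zip(weights, inputs))
    return tansig(u + bias)

def forward(x, hidden_layer, output_neuron):
    """One hidden layer followed by a single output neuron."""
    hidden = [neuron(x, w, b) for w, b in hidden_layer]
    w_out, b_out = output_neuron
    return neuron(hidden, w_out, b_out)

# Arbitrary illustrative parameters: 3 inputs, 2 hidden nodes, 1 output.
hidden_layer = [([0.2, -0.4, 0.1], 0.05), ([-0.3, 0.5, 0.7], -0.10)]
output_neuron = ([0.6, -0.8], 0.02)

y_hat = forward([0.5, 1.0, -1.5], hidden_layer, output_neuron)
print(y_hat)  # always strictly between -1 and 1
```

Because the output layer is also bounded to the interval from − 1 to + 1, the target variable has to be scaled into that range before training and mapped back afterwards; toolboxes typically handle this scaling automatically.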

3.3.2.2 MLP-ANN

The network architecture refers to the structure of connectivity between distinct neurons in ANN. One of the most frequent and useful ANN architectures is the multi-layer perceptron (MLP) network. Each neuron in MLP-ANN is linked to many of its neighbors, with variable weights indicating the relative importance of the individual neuron inputs to the other neurons. MLP is a type of network that belongs to the feed-forward ANN family, and its learning method is backpropagation [50].

In this study, the MLP-ANN is designed with hidden layer sizes of 10 \(\times \) 10 (ten hidden layers with ten nodes each). The MATLAB 2020b Neural Network Toolbox was used, with parameter settings similar to those of the ANN described above.

3.3.2.3 SVR

SVR is an analytical technique used to explore the connection between one or more predictor variables and a real-valued (continuous) dependent variable [51]. When addressing nonlinear problems, SVR uses a kernel function to map the nonlinear regression problem into a higher-dimensional space, allowing it to determine the best hyperplane to fit the sample points [24],

subject to \(\mathop \sum \nolimits_{i = 1}^{k} \left( {a_{i} - a_{i}^{*} } \right) = 0\), \(0 \le \left( {a_{i} - a_{i}^{*} } \right) \le \frac{C}{l}\) and \(i = 1,2, \ldots ,l\),

where \(X_{i}\) is the sample data; \(l\) is the sample size; \(C\) is the penalty coefficient; \(\varepsilon\) surpasses the penalty size of the error sample; \(K \left( {Xi, Xj} \right)\) is the kernel function to the optimal solution of \(a\).

In RStudio, SVR is set up with the caret library for classification and regression training and the e1071 library for the regression machine, in which the epsilon-regression type is applied, the radial basis kernel is used for prediction, and the cost of constraint violation is left at its default (= 1). In the case of a probabilistic regression model, the sigma parameter of the fitted model is the scale parameter of the hypothesized (zero-mean) Laplace distribution, estimated by maximum likelihood.

3.3.2.4 RFR

The RF technique is a tree-based ensemble approach that was created to overcome the limitations of the classic classification and regression tree (CART) method [52]. RFR is an ensemble learning technique that employs regression algorithms and decision trees [53], with regression trees as base learners. \(N\) bootstrapped sample sets are taken from the source dataset to train the RF [52]. Following the selection of the forest's number of trees (\(C\)), each regression tree is built on a different bootstrap sample. As split candidates, only a limited and fixed number of randomly picked \(K\) predictors are chosen. The procedure is repeated until \(C\) such trees are formed, and new data are predicted by aggregating the \(C\) trees’ predictions. An RF regression predictor is denoted as [52]:

\[ \hat{f}_{RF}^{C} \left( x \right) = \frac{1}{C}\sum_{i = 1}^{C} T_{i} \left( x \right) , \]

where \(x\) is the vectored input variable, \(C\) is the number of trees, and \(T_{i} \left( x \right)\) is a single regression tree created from a subset of input parameters and the bootstrapped samples.

In RStudio, RFR uses the caret library for classification and regression training and the randomForest library, which implements Breiman's random forest method for classification and regression. The number of trees to grow is set to ntree = 500; this should not be set too low, to guarantee that every input row is predicted at least a few times [54]. The other parameters are the regression default for mtry, the number of variables randomly selected as candidates at each split, and importance = TRUE.
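The bootstrap-and-average principle behind RFR can be illustrated with a deliberately reduced sketch. It is not the randomForest algorithm: each "tree" here is a one-split regression stump on a single feature, so the random choice of candidate predictors at each split (mtry) does not appear, and the data, tree count, and seed are invented for illustration.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """One-split regression tree on one feature: choose the threshold with the least squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:        # every threshold leaves both sides non-empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        ml, mr = mean(left), mean(right)
        sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, ml, mr)
    if best is None:                      # bootstrap sample contained a single distinct x
        m = mean(ys)
        return lambda x: m
    _, t, ml, mr = best
    return lambda x: ml if x <= t else mr

def fit_forest(xs, ys, n_trees=50, seed=0):
    """Train each stump on its own bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        trees.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return trees

def predict(trees, x):
    """Aggregate the forest by averaging the individual tree predictions."""
    return mean(tree(x) for tree in trees)

xs = list(range(10))
ys = [1.0] * 5 + [5.0] * 5                # step-shaped toy target
trees = fit_forest(xs, ys)
print(predict(trees, 0), predict(trees, 9))
```

Averaging over many bootstrap-trained trees smooths the predictions of the individual trees, which is why the number of trees should not be set too low.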

3.3.2.5 Penalized linear regression

One kind of penalized linear regression is ridge regression. This approach has the potential to reduce the magnitude of the regression coefficients, resulting in improved generalizability for predicting unseen data [53]. The ridge coefficients are calculated using the following equation:

\[ \hat{\beta }^{ridge} = \mathop {\arg \min }\limits_{\beta } \left\{ \sum_{i = 1}^{N} \left( y_{i} - \beta_{0} - x_{i}^{\prime} \beta \right)^{2} + \lambda \sum \beta_{j}^{2} \right\} \]

Ridge regression has one apparent drawback: it includes all predictors in the final model. It shrinks all of the coefficients toward zero, but not exactly to zero [55]. The LASSO and E-net regressions are newer alternatives to Ridge regression that help to address this limitation. As previously stated, this study used LASSO and E-net regression for both feature selection and prediction, whereas Ridge regression is employed only to carry out the regression task.

As with LASSO and E-net regression, the glmnet package in RStudio is employed for Ridge regression, with centered and scaled datasets as pre-processing and tenfold cross-validation.

3.3.3 Performance evaluation

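The following criteria are used to evaluate the performance of the models in the prediction validation [56,57,58,59]: the mean absolute error (MAE),

\[ MAE = \frac{1}{N}\sum_{i = 1}^{N} \left| y_{i} - \hat{y}_{i} \right| , \]

the mean absolute percentage error (MAPE),

\[ MAPE = \frac{100}{N}\sum_{i = 1}^{N} \left| \frac{y_{i} - \hat{y}_{i} }{y_{i} } \right| , \]

the root-mean-square error (RMSE),

\[ RMSE = \sqrt {\frac{1}{N}\sum_{i = 1}^{N} \left( y_{i} - \hat{y}_{i} \right)^{2} } , \]

the Nash-Sutcliffe efficiency coefficient (NSE),

\[ NSE = 1 - \frac{\sum_{i = 1}^{N} \left( y_{i} - \hat{y}_{i} \right)^{2} }{\sum_{i = 1}^{N} \left( y_{i} - \overline{y} \right)^{2} } , \]

and the determination coefficient (R2),

\[ R^{2} = \frac{\left[ \sum_{i = 1}^{N} \left( y_{i} - \overline{y} \right)\left( \hat{y}_{i} - \overline{{\hat{y}}} \right) \right]^{2} }{\sum_{i = 1}^{N} \left( y_{i} - \overline{y} \right)^{2} \sum_{i = 1}^{N} \left( \hat{y}_{i} - \overline{{\hat{y}}} \right)^{2} } , \]

where \(N\) is the number of validation data points, \(y\) is the real LPI score with average value \(\overline{y}\), and \(\hat{y}\) is the predicted LPI score with average value \(\overline{{\hat{y}}}\).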
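The five criteria can be computed together from a vector of real values and a vector of predictions. The sketch below uses the standard definitions of MAE, MAPE (in percent), RMSE, NSE, and R2 as the squared correlation between real and predicted values; the function name and the three-point example are hypothetical.

```python
from math import sqrt

def evaluate(y, y_hat):
    """Return MAE, MAPE (%), RMSE, NSE and R2 for real values y and predictions y_hat."""
    n = len(y)
    y_bar = sum(y) / n
    p_bar = sum(y_hat) / n
    err = [a - b for a, b in zip(y, y_hat)]
    sse = sum(e * e for e in err)                       # squared prediction errors
    sst = sum((a - y_bar) ** 2 for a in y)              # spread of the real values
    ssp = sum((b - p_bar) ** 2 for b in y_hat)          # spread of the predictions
    cov = sum((a - y_bar) * (b - p_bar) for a, b in zip(y, y_hat))
    return {
        "MAE": sum(abs(e) for e in err) / n,
        "MAPE": 100.0 * sum(abs(e / a) for e, a in zip(err, y)) / n,
        "RMSE": sqrt(sse / n),
        "NSE": 1.0 - sse / sst,
        "R2": cov * cov / (sst * ssp),
    }

scores = evaluate([2.0, 3.0, 4.0], [2.5, 3.0, 3.5])
print(scores)
```

In this example the predictions are perfectly correlated with the real values, so R2 equals 1, while NSE is only 0.75 because the predictions are compressed toward the mean. The two criteria therefore carry different information and are worth reporting side by side.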

3.4 Analysis time

Using the big O-notation analysis, we determine the theoretical computational time complexity of ML models. The O-notation is used to present any asymptotic computing characteristics by estimating the worst-case computational time. Table 2 displays the time complexity of the study ML models.

4 Result and discussion

4.1 Feature selection result

4.1.1 The result of correlation method

Figure 3 depicts the result of the correlation study performed using the Microsoft Excel data analysis tool. Because the task is a regression-type prediction, the correlation matrix spans both the dependent and the predictor variables. We begin by constructing a feature set of predictor variables that have a direct good or excellent correlation with the dependent variable LPI (\(r \ge\) 0.5, as shown by the red border in Fig. 3), namely set A. The predictor variables in set A are X4 (GDP_C; \(r =\) 0.75), X18 (Exp; \(r =\) 0.52), and X19 (Imp; \(r =\) 0.5), for a total of three features. Furthermore, the predictor variables that have a good or excellent correlation with a member of set A (\(\left| r \right| \ge\) 0.5, as shown by the yellow border in Fig. 3) are taken into account and added to set A to form set B. These additional predictor variables are X2 (N_GDP; \(r =\) 0.83 (with Exp) and \(r =\) 0.93 (with Imp)), X16 (LF; \(r =\) 0.62 (with Exp) and \(r =\) 0.57 (with Imp)), X21 (FER; \(r =\) 0.6 (with Exp) and \(r =\) 0.52 (with Imp)), X22 (Iwd_DI; \(r =\) 0.54 (with Exp) and \(r =\) 0.59 (with Imp)), and X23 (Owd_DI; \(r =\) -0.56 (with Exp) and \(r =\) -0.6 (with Imp)). The total number of features in set B is 3 + 5 = 8. Table 4 shows a summary of the subset of features selected using the correlation approach.
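The two-step construction of sets A and B can be reproduced from the coefficients reported in this paragraph. The sketch below uses only those reported values; comparing the absolute value of \(r\) with the 0.5 threshold is an assumption made so that the negatively correlated X23 qualifies, as it does in the text.

```python
THRESHOLD = 0.5

# Correlation with LPI, as reported above.
r_with_lpi = {"X4": 0.75, "X18": 0.52, "X19": 0.50}
set_a = {name for name, r in r_with_lpi.items() if abs(r) >= THRESHOLD}

# Correlation with the set A members Exp (X18) and Imp (X19), as reported above.
r_with_set_a = {
    "X2": (0.83, 0.93),
    "X16": (0.62, 0.57),
    "X21": (0.60, 0.52),
    "X22": (0.54, 0.59),
    "X23": (-0.56, -0.60),
}
added = {name for name, rs in r_with_set_a.items()
         if any(abs(r) >= THRESHOLD for r in rs)}

set_b = set_a | added
print(len(set_a), len(added), len(set_b))  # 3 5 8
```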

4.1.2 The result of PCA method

The PCA result is generated in RStudio, with the dependent variable and the predictor variables used as PCA model input to select features for the regression [56]. Figure 4 depicts the proportion of variance explained by each principal component (only PC1 to PC10 out of a total of 27 PCs are shown). The first two principal components (PC1 and PC2) together explain 34.9 percent of the variance, whereas PC1 through PC10 explain roughly 80 percent (81.41 percent). PC1 to PC3 explain 46.33 percent, which is more than half of the variance covered by PC1 to PC10. PC1 to PC5, that is, half of the ten PCs from PC1 to PC10, explain 62.12 percent. To construct the sets of selected features, we keep the attributes that have a high loading on a component (equal to or greater than 0.3). The attributes detected in PC1 to PC3 (46.33 percent of variance), PC1 to PC5 (62.12 percent), and PC1 to PC10 (81.41 percent) are allocated to feature sets C, D, and E, respectively. Set C consists of X1 (R_GDP_Gr), X2 (N_GDP), X4 (GDP_C), X5 (Pri_C_Gr), X11 (BB/GDP), X14 (BE/GDP), X15 (GNS_Rt), X18 (Exp), X19 (Imp), X22 (Iwd_DI), and X23 (Owd_DI), 11 features in total. Set D, with 16 features, is set C plus five features: X8 (CAB), X9 (CP_G), X12 (GDP_D), X21 (FER), and X24 (TB). Set E contains 24 of the 26 features, excluding only X16 (LF) and X17 (CAB/GDP).
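The loading rule can be illustrated with a short sketch. It assumes that the loadings have already been computed (for example, exported from the PCA run) and are stored per attribute in component order. The function name and the data layout are hypothetical. Comparing the absolute value of the loading with the 0.3 threshold is also an assumption, as is leaving the dependent variable out of the input.

```python
def select_by_loading(loadings, n_components, threshold=0.3):
    """Return the attributes with a high loading on any of the first PCs.

    loadings: dict {attribute name: [loading on PC1, loading on PC2, ...]}
    n_components: how many leading components to inspect (3, 5 or 10 here)
    threshold: minimum absolute loading for an attribute to be kept
    """
    selected = []
    for name, values in loadings.items():
        if any(abs(v) >= threshold for v in values[:n_components]):
            selected.append(name)
    return selected
```

Because inspecting more components can only add attributes, the resulting sets are nested, which matches the relation between sets C, D, and E described above.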

Furthermore, feature selection based on a PCA biplot is illustrated in Fig. 5, with the selected features represented by blue vectors. The selection is motivated by the relationship of each feature to LPI. The direction of a feature vector reflects a positive or negative correlation [65]. A feature whose vector forms a small angle with the LPI vector is strongly positively correlated with LPI, while a vector pointing in the opposite direction indicates a negative correlation. Vectors close to perpendicular to the LPI vector, on the other hand, are weakly correlated (orange vectors in Fig. 5). Based on the PCA biplot, the selected features of set F are X2 (N_GDP), X4 (GDP_C), X16 (LF), X18 (Exp), X19 (Imp), X21 (FER), X22 (Iwd_DI), X23 (Owd_DI), and X25 (MMI_Rt), nine features in total. Table 4 summarizes the subsets of features selected using the PCA method.

4.1.3 The result of penalized linear regression method

Table 3 displays the results of the LASSO and E-net penalized linear regression methods. Using RStudio, the LASSO regression model of Eq. (4) reduces the predictor parameters from 26 to 10 and reports the corresponding intercept values and parameter significance.

The 9 features selected by LASSO (set G) are X2 (N_GDP), X3 (Pop_Gr), X4 (GDP_C), X9 (CP_G), X13 (PD/GDP), X18 (Exp), X19 (Imp), X25 (MMI_Rt), and X26 (DC_Gr). For the Elastic-net of Eq. (5), we vary α over 0.1, 0.25, 0.5, 0.75, and 0.9. Table 3 displays the feature selection results from RStudio, that is, the parameters that the model does not shrink to zero. When α = 0.9, the set of selected features is similar to the LASSO result. When α is 0.25, 0.5, or 0.75, the model yields a common set of 10 selected features (set H). Finally, for α = 0.1, we found that 15 parameters were not shrunk (set I). Set H contains all attributes of set G plus X15 (GNS_Rt). Set I comprises all elements of set G plus X1 (R_GDP_Gr), X14 (BE/GDP), X17 (CAB/GDP), X20 (NDIF), and X21 (FER). Table 4 summarizes the subsets of features selected using the penalized linear regression method.
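To make the role of α concrete, the sketch below fits an Elastic-net by coordinate descent and reports which coefficients are not shrunk to zero. It is an illustration under stated assumptions and not the RStudio routine used in this study. The objective is assumed to be \(\frac{1}{2N}\lVert y - X\beta \rVert^{2} + \lambda \left[ \alpha \lVert \beta \rVert_{1} + \frac{1 - \alpha}{2}\lVert \beta \rVert^{2} \right]\), so that α = 1 corresponds to LASSO and α = 0 to Ridge. The predictors and the response are assumed to be centered, so no intercept is fitted. The penalty weight `lam` and all function names are hypothetical.

```python
def soft_threshold(z, g):
    """Shrink z towards zero by g; values within [-g, g] become exactly 0."""
    if z > g:
        return z - g
    if z < -g:
        return z + g
    return 0.0


def elastic_net(X, y, lam, alpha, n_iter=200):
    """Coordinate descent for the Elastic-net objective stated in the text.

    X: list of rows (centered, non-constant columns), y: centered response.
    Returns the coefficient list beta.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    resid = list(y)  # residual y - X @ beta, kept up to date
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            # Covariance of column j with the residual that ignores beta[j].
            rho = sum(X[i][j] * (resid[i] + X[i][j] * beta[j])
                      for i in range(n)) / n
            new = soft_threshold(rho, lam * alpha) / (col_sq[j] + lam * (1 - alpha))
            if new != beta[j]:
                delta = new - beta[j]
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                beta[j] = new
    return beta


def selected_features(beta):
    """Indices of the coefficients that were not shrunk to zero."""
    return [j for j, b in enumerate(beta) if b != 0.0]
```

For a fixed `lam`, a smaller α lowers the L1 threshold `lam * alpha`, so fewer coefficients are set exactly to zero. This agrees with the larger feature set obtained at α = 0.1.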

4.2 Regression and validation result

For each subset of selected features (set A to set I), 70% of the dataset is used to train the identified ML methods, namely ANN, MLP-ANN, SVR, RFR, and Ridge. The LASSO and E-net models, in contrast, continue training only on the feature sets that they themselves selected. The complete set of all features is also included for comparison. The test sets are then used to validate the models. The validation findings are expressed through the performance criteria MAE, MAPE, RMSE, NSE, and R2.
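A 70% training partition can be sketched as below. The text does not state how the samples were assigned to the two partitions, so the random shuffle, the fixed seed, and the function name are assumptions made only for illustration.

```python
import random


def split_indices(n_samples, train_fraction=0.7, seed=0):
    """Shuffle sample indices and split them into training and test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle (assumed)
    cut = int(round(n_samples * train_fraction))
    return indices[:cut], indices[cut:]


# With the 500 case samples of this study: 350 for training, 150 for testing.
train_idx, test_idx = split_indices(500)
```

The same index lists can be reused for every feature set so that all models are validated on identical test samples.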

Table 5 and Fig. 6 summarize the performance evaluation findings for easier comparison. SVR with feature set I achieves the best (lowest) MAE (*0.1349), and the same model with the same set has the best (lowest) MAPE (*4.6387). For RMSE, ANN with feature set I achieves the best (lowest) value (*0.1808). NSE values range between \(- \infty\) and 1, with values close to 1 indicating the best prediction performance [57]; SVR with feature set I achieves the best NSE (*0.8938). Moreover, SVR with feature set I has the highest R2 (*0.8964). An R2 above 0.8 indicates a strong correlation between actual values and model estimations [16].

When the ML models are compared by their average value across all feature sets, the best performing model is ANN, with average MAE, MAPE, RMSE, and NSE values of **0.1525, **5.1775, **0.1985, and **0.8691, respectively. Relative to ANN, MLP-ANN, SVR, and RFR show satisfactory performance on all criteria. The penalized linear regression methods (Ridge, LASSO, and E-net) perform worse than each of these admissible models. Focusing on the sets of selected features, we then determined the average performance of the admissible ML models, omitting the penalized linear regression approaches. Set H has the best performance for MAE, MAPE, and R2 (***0.1497, ***5.1452, and ***0.8803, respectively), whereas set C has the best performance for RMSE and NSE (***0.1975 and ***0.8728, respectively).

Because different criteria produce different optimal feature sets, we reprocessed the feature sets using union and intersection operations. A feature union and intersection procedure is used to reorganize the acquired feature subsets [66]. Table 6 displays the new feature sets based on sets C, H, and I. Certain feature sets are close enough to be merged during reprocessing, such as C ∪ I with C ∪ H ∪ I and C ∩ H with C ∩ H ∩ I. Others are close to a parent set and are not reprocessed, such as H ∩ I (close to H) and H ∪ I (close to I). Table 7 summarizes the performance evaluation of the ML regression models on the new feature sets together with the parent sets.

For MAE, the best performance is obtained by ANN with feature set C ∪ H (*0.1318), and the same model with the same set also performs best in MAPE (*4.4512), RMSE (*0.1723), NSE (*0.9017), and R2 (*0.9033). When the ML models are compared by their average value across all new and parent feature sets, the top-performing model is ANN, with average MAE, MAPE, RMSE, NSE, and R2 values of **0.1412, **4.7946, **0.1874, **0.8834 and **0.887, respectively, while SVR is second. As noted in [67], when the connections between parameters become noninvertible (due to a large number of predictor variables), the input and output configurations used in ANN have a major influence on the accuracy. Furthermore, ANN outperforms linear models in terms of accuracy (where the number of important predictor variables is restricted) [68].

Moreover, focusing on the sets of selected features, we determined the average performance of the four suitable ML models described above. Set C ∪ H performs best on all metrics, with MAE, MAPE, RMSE, NSE, and R2 of ***0.1463, ***5.0066, ***0.1908, ***0.8811, and ***0.888, respectively. The members of set C ∪ H, which affect the accuracy of the LPI prediction, are X1 (R_GDP_Gr), X2 (N_GDP), X3 (Pop_Gr), X4 (GDP_C), X5 (Pri_C_Gr), X9 (CP_G), X11 (BB/GDP), X13 (PD/GDP), X14 (BE/GDP), X15 (GNS_Rt), X18 (Exp), X19 (Imp), X22 (Iwd_DI), X23 (Owd_DI), X25 (MMI_Rt), and X26 (DC_Gr), 16 features in total. As previously stated, the number of instances should be maximized, since accuracy is normally high with large datasets, and the number of missing values should be minimized, since this reduces bias and improves the efficiency of the analysis; limiting the number of features may support both goals. The parent sets C and H limit the number of features to 11 and 10, respectively. These sets may be used as alternatives since they give adequate performance (closest to the best). A further explanation is that set C is produced by the PCA method, which many studies report as performing better than other algorithms, while set H is produced by the penalized linear E-net regression, a technique that selects subsets accurately but lacks optimum prediction rates.
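The union and intersection of the parent sets can be checked directly from the memberships listed in Sects. 4.1.2 and 4.1.3. The sketch below uses only the attribute indices given in the text; the variable and function names are hypothetical.

```python
# Memberships as listed in the text (attribute indices only).
set_c = {"X1", "X2", "X4", "X5", "X11", "X14", "X15", "X18", "X19", "X22", "X23"}
set_g = {"X2", "X3", "X4", "X9", "X13", "X18", "X19", "X25", "X26"}
set_h = set_g | {"X15"}                             # set G plus X15
set_i = set_g | {"X1", "X14", "X17", "X20", "X21"}  # set G plus five attributes


def by_index(features):
    """Sort attribute labels such as 'X11' by their numeric index."""
    return sorted(features, key=lambda name: int(name[1:]))


union_ch = set_c | set_h          # 16 features, the best-performing set
intersection_ch = set_c & set_h   # features shared by PCA and E-net

print(len(union_ch), by_index(union_ch))
print(by_index(intersection_ch))
# X15 is already in set C and set G is inside set I, so adding H changes nothing.
print(set_c | set_i == set_c | set_h | set_i)
```

With these memberships, C ∪ I and C ∪ H ∪ I turn out to be identical, which is consistent with treating them as a single set in Table 6.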

The four acceptable ML models do not differ from each other for the best-performing set C ∪ H and the parent sets C and H, based on the errors shown by the boxplots (Fig. 7), in which the largest error values, circled, belong to RFR. However, the extreme error levels of all models are nearly the same. Furthermore, Taylor diagrams were created to evaluate the results; they allow the accuracy of the developed models to be assessed in several respects [57, 58]. Figure 8 clearly shows that the predictions for set C ∪ H and the parent sets C and H from the four acceptable ML models are close to the observations. Notably, Fig. 8c shows that the ANN model outperforms the other models for set C ∪ H, giving the shortest distance to the observation. The statistical significance of the results was examined using the Kruskal–Wallis test, which checks whether the distributions of the predicted and observed LPI values are consistent [57, 69]. The null hypothesis H0 states that there is no statistically significant difference between the mean predicted and observed LPI values. Table 8 reveals that H0 was not rejected (P value \(\ge \) 0.05) in any of the C, H, and C ∪ H set predictions; in other words, there is no significant difference between predicted and observed averages. H0 was likewise not rejected for any of the ANN, MLP-ANN, SVR, and RFR models, which indicates that all four models produce accurate results. This suggests that the pre-processing steps of data preparation and feature selection had a beneficial influence on the ML predictions.
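For readers who wish to reproduce this kind of check, the sketch below computes the Kruskal–Wallis statistic for two samples (observed and predicted LPI values) with the usual tie correction. It is a minimal illustration and not the routine used in this study: the function name is hypothetical, and the P value relies on the chi-square approximation with one degree of freedom, which is an assumption that is reasonable only for samples that are not very small.

```python
import math


def kruskal_wallis_two_groups(a, b):
    """Return (H, p) of the Kruskal-Wallis test for two samples."""
    # Pool the values, remembering which group each one came from.
    pooled = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)
    n = len(pooled)
    rank_sum = [0.0, 0.0]
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0      # mean of the ranks i+1 .. j
        for k in range(i, j):
            rank_sum[pooled[k][1]] += avg_rank
        t = j - i                         # size of this group of ties
        tie_term += t ** 3 - t
        i = j
    h = (12.0 / (n * (n + 1))
         * (rank_sum[0] ** 2 / len(a) + rank_sum[1] ** 2 / len(b))
         - 3.0 * (n + 1))
    correction = 1.0 - tie_term / (n ** 3 - n)
    if correction > 0:
        h /= correction
    # Survival function of chi-square with 1 degree of freedom.
    p = math.erfc(math.sqrt(max(h, 0.0) / 2.0))
    return h, p
```

A P value of at least 0.05 means that the test finds no evidence of a difference between the two distributions at the 5% level.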

Table 9 shows the analysis time of the ML algorithms as reported by the analysis tools (MATLAB reports the values in seconds and RStudio in milliseconds). The average analysis time of the MLP-ANN training procedure was the longest (approximately eight seconds for all sets); all other models took less than a second.

4.3 Discussion

Finally, Table 10 discusses the findings for both families of feature selection techniques, the filter and the embedded methods, with a focus on the statistical properties and ML algorithms involved. The discussion describes the advantages and disadvantages of the models that influence the findings of this study.

Effective wrapper strategies, such as sequential search or evolutionary algorithms like Particle Swarm Optimization (PSO) and Genetic Algorithm (GA), can also deliver good results: they provide local optimum solutions and are computationally viable. However, wrappers have a significant disadvantage because of the risk of overfitting and their computational cost [72], and this inefficiency becomes more obvious as the feature space grows. The wrapper technique is therefore excluded from this analysis, although it will be relevant in future studies.

5 Concluding remark and future work

In conclusion, this study illustrates an application of machine learning regression to feature selection. We examined logistics performance using the World Bank's LPI and the economic attributes from S&P Global Market Intelligence's macroeconomic data source. The 500 case samples range from 2009 to 2018, with an initial set of 26 economic features. In the initial feature selection, the number of instances (to be maximized) was traded off against the missing values of country economic data (to be minimized). The filter methods of correlation and PCA are employed in the proposed feature selection procedure. The ML regression algorithms ANN, MLP-ANN, SVR, RFR, and Ridge are then used to train and validate the data set on the selected features. The embedded technique of penalized linear regression (LASSO and E-net) is also used to select features, followed by continued training and validation of the dataset. The proposed ML regression models are also trained and validated on the subsets of features selected by penalized linear regression. According to the model performance based on the MAE, MAPE, RMSE, NSE, and R2 criteria, the feature sets of PCA (set C) and E-net (sets H and I) come closest to adequate performance.

Then, a feature union and intersection operation is performed on the parent sets (C, H, and I). The set C ∪ H (16 features in total) performs best across all criteria. These findings address the research question of whether ML algorithms can select an appropriate set of economic features that reflect a country’s logistics performance. As to the question of which ML regression technique is best for predicting logistics performance from the selected economic attributes, the findings indicate that ANN is the most effective prediction model in this study. Furthermore, we note that sets C and H limit the number of features to 11 and 10, respectively. These sets may be used as alternatives since they give adequate performance (near the best) when it is necessary to maximize the number of instances and reduce the missing data of the dataset.

In a future study, the focus may be on more diverse feature dimensions integrated with economic attributes. Elements connected to megatrends, such as the carbon emissions rate, the cost and consumption rate of fuel and renewable energy, and the size and growth of the e-commerce market, may reflect logistics performance in the new era of the global supply chain. Future work will also extend to the wrapper technique.

References

World Bank (2018) Connecting to Compete 2018 Trade Logistics in the Global Economy The Logistics Performance Index and Its Indicators. http://hdl.handle.net/10986/29971. Accessed 31 August 2021

Gerschberger M, Manuj I, Freinberger PP (2017) Investigating supplier-induced complexity in supply chains. Int J of Phys Distrib Logist Manag 47(8):688–711

Wong WP, Tang CF (2018) The major determinants of logistic performance in a global perspective: evidence from panel data analysis. Int J of Logist Res Appl 21(4):431–443

D’Aleo V, Sergi BS (2017) Does logistics influence economic growth? The European experience. Manag Decis 55(8):1613–1628

Takele TB (2019) The relevance of coordinated regional trade logistics for the implementation of regional free trade area of Africa. JTSCM 13(1):1–11

Chandrashekar G, Sahin F (2014) A survey on feature selection methods. Comput Electr Eng 40(1):16–28

Vieira SM, Sousa JM, Runkler TA (2010) Two cooperative ant colonies for feature selection using fuzzy models. Expert Syst Appl 37(4):2714–2723

Muthukrishnan R, Rohini R (2016) LASSO: A feature selection technique in predictive modeling for machine learning. In: Proceeding of the 2016 IEEE international conference on advances in computer applications (ICACA), pp. 18–20

Khmaissia F et al (2018) Accelerating band gap prediction for solar materials using feature selection and regression techniques. Comput Mater Sci 147:304–315

Sikora R, Piramuthu S (2007) Framework for efficient feature selection in genetic algorithm based data mining. Eur J Oper Res 180(2):723–737

Lu M (2019) Embedded feature selection accounting for unknown data heterogeneity. Expert Syst Appl 119:350–361

Lal TN et al (2006) Embedded methods, in Feature extraction. Springer, pp 137–165.

Jiang S et al (2017) Modified genetic algorithm-based feature selection combined with pre-trained deep neural network for demand forecasting in outpatient department. Expert Syst Appl 82:216–230

Bolón-Canedo V, Sánchez-Maroño N, Alonso-Betanzos A (2015) Recent advances and emerging challenges of feature selection in the context of big data. Knowl Based Syst 86:33–45

Henrique BM, Sobreiro VA, Kimura H (2019) Literature review: machine learning techniques applied to financial market prediction. Expert Syst Appl 124:226–251

Bayram S et al (2016) Comparison of multilayer perceptron (MLP) and radial basis function (RBF) for construction cost estimation: the case of Turkey. J Civ Eng Manag 22(4):480–490

Zarei FA, Baghban A (2017) Phase behavior modelling of asphaltene precipitation utilizing MLP-ANN approach. Pet Sci Technol 35(20):2009–2015

Luna A et al (2014) Prediction of ozone concentration in tropospheric levels using artificial neural networks and support vector machine at Rio de Janeiro, Brazil. Atmos Environ 98:98–104

Vaughan N et al (2014) Parametric model of human body shape and ligaments for patient-specific epidural simulation. Artif Intell Med 62(2):129–140

Coskuner G et al (2021) Application of artificial intelligence neural network modeling to predict the generation of domestic, commercial and construction wastes. Waste Manag Res 39(3):499–507

Jahn M (2020) Artificial neural network regression models in a panel setting: Predicting economic growth. Econ Model 91:148–154

Tümer AE, Akkuş A (2018) Forecasting gross domestic product per capita using artificial neural networks with non-economical parameters. Phys A: Stat Mech Appl 512:468–473

Ballestar MT, Grau-Carles PP, Sainz J (2019) Predicting customer quality in e-commerce social networks: a machine learning approach. Rev Manag Sci 13(3):589–603

Quan Q et al (2020) Research on water temperature prediction based on improved support vector regression. Neural Comput Appl. https://doi.org/10.1007/s00521-020-04836-4

Zhong H et al (2019) Vector field-based support vector regression for building energy consumption prediction. Appl Energy 242:403–414

García-Floriano A et al (2018) Support vector regression for predicting software enhancement effort. Inf Softw Technol 97:99–109

Yao X, Crook J, Andreeva G (2015) Support vector regression for loss given default modelling. Eur J Oper Res 240(2):528–538

Li Y et al (2018) Random forest regression for online capacity estimation of lithium-ion batteries. Appl Energy 232:197–210

Ouedraogo I, Defourny P, Vanclooster M (2019) Application of random forest regression and comparison of its performance to multiple linear regression in modeling groundwater nitrate concentration at the African continent scale. Hydrogeol J 27(3):1081–1098

Liang H et al (2020) GDP spatialization in Ningbo City based on NPP/VIIRS night-time light and auxiliary data using random forest regression. Adv Space Res 65(1):481–493

Bouktif S et al (2018) Optimal deep learning lstm model for electric load forecasting using feature selection and genetic algorithm: Comparison with machine learning approaches. Energies 11(7):1636

Alamoodi A et al (2021) Machine learning-based imputation soft computing approach for large missing scale and non-reference data imputation. Chaos Solit Fractals 151:111236

Cai J et al (2020) Prediction and analysis of net ecosystem carbon exchange based on gradient boosting regression and random forest. Appl Energy 262:114566

Cohen J (1992) Statistical power analysis. Curr Dir Psychol Sci 1(3):98–101

Lawrence S et al (2013) Source apportionment of traffic emissions of particulate matter using tunnel measurements. Atmos Environ 77:548–557

Abimbola O-PP et al (2020) Predicting Escherichia coli loads in cascading dams with machine learning: An integration of hydrometeorology, animal density and grazing pattern. Sci Total Environ 722:137894

Zhang H, Srinivasan R (2021) A biplot-based PCA approach to study the relations between indoor and outdoor air pollutants using case study buildings. Buildings 11(5):218

Das B et al (2018) Evaluation of multiple linear, neural network and penalised regression models for prediction of rice yield based on weather parameters for west coast of India. Int J Biometeorol 62(10):1809–1822

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc Series B Stat Methodol 58(1):267–288

Efron B et al (2004) Least angle regression. Ann Stat 32(2):407–499

Zhang X et al (2014) A causal feature selection algorithm for stock prediction modeling. Neurocomputing 142:48–59

Zou H, Hastie T (2005) Regularization and variable selection via the elastic net. J R Stat Soc Series B Stat Methodol 67(2):301–320

Osisanwo F et al (2017) Supervised machine learning algorithms: classification and comparison. Int J Comput 48(3):128–138

Lima-Junior FR, Carpinetti LC-R (2019) Predicting supply chain performance based on SCOR® metrics and multilayer perceptron neural networks. Int J Prod Econ 212:19–38

Laboissiere LA, Fernandes RA, Lage GG (2015) Maximum and minimum stock price forecasting of Brazilian power distribution companies based on artificial neural networks. Appl Soft Comput 35:66–74

Lahmiri S (2014) Improving forecasting accuracy of the S&P500 intra-day price direction using both wavelet low and high frequency coefficients. Fluct Noise Lett 13(01):1450008

Fath AH, Madanifar F, Abbasi M (2020) Implementation of multilayer perceptron (MLP) and radial basis function (RBF) neural networks to predict solution gas-oil ratio of crude oil systems. Petroleum 6(1):80–91

Heiat A (2002) Comparison of artificial neural network and regression models for estimating software development effort. Inf Softw Technol 44(15):911–922

Moayedi H, Rezaei A (2019) An artificial neural network approach for under-reamed piles subjected to uplift forces in dry sand. Neural Comput Appl 31(2):327–336

Kahani M et al (2018) Development of multilayer perceptron artificial neural network (MLP-ANN) and least square support vector machine (LSSVM) models to predict Nusselt number and pressure drop of TiO2/water nanofluid flows through non-straight pathways. Numer Heat Tr A-Appl 74(4):1190–1206

Zhang F, O'Donnell LJ (2020) Support vector regression, in Machine Learning. Elsevier, pp. 123–140

Ahmad MW, Reynolds J, Rezgui Y (2018) Predictive modelling for solar thermal energy systems: A comparison of support vector regression, random forest, extra trees and regression trees. J Clean Prod 203:810–821

Yuchi W et al (2019) Evaluation of random forest regression and multiple linear regression for predicting indoor fine particulate matter concentrations in a highly polluted city. Environ Pollut 245:746–753

Nandipati SC, XinYing C, Wah KK (2020) Hepatitis C virus (HCV) prediction by machine learning techniques. Appl Model Simul 4:89–100

García-Nieto PJ, García-Gonzalo E, Paredes-Sánchez JP (2021) Prediction of the critical temperature of a superconductor by using the WOA/MARS, ridge, lasso and elastic-net machine learning techniques. Neural Comput Appl 33:17131–17145

Kong X et al (2015) Wind speed prediction using reduced support vector machines with feature selection. Neurocomputing 169:449–456

Başakın EE et al (2021) A new insight to the wind speed forecasting: robust multi-stage ensemble soft computing approach based on pre-processing uncertainty assessment. Neural Comput Appl 34:783–812

Uncuoğlu E, Latifoğlu L, Özer AT (2021) Modelling of lateral effective stress using the particle swarm optimization with machine learning models. Arab J Geosci 14:2441

Lu X et al (2018) Daily pan evaporation modeling from local and cross-station data using three tree-based machine learning models. J Hydrol 566:668–684

Ullah QZ et al (2021) A Cartesian genetic programming based parallel neuroevolutionary model for cloud server’s CPU usage prediction. Electronics 10:67

Guo Y et al (2020) A spatiotemporal thermo guidance based real-time online ride-hailing dispatch framework. IEEE Access 8:115063–115077

Mohammed MS et al (2021) PEW: prediction-based early dark cores wake-up using online ridge regression for many-core systems. IEEE Access 9:124087–124099

Yang ZY et al (2019) Multi-view based integrative analysis of gene expression data for identifying biomarkers. Sci Rep 9:13504

Koç O, Peters J (2019) Learning to serve: an experimental study for a new learning from demonstrations framework. IEEE Robot Autom Lett 4(2):1784–1791

Karaman M (2019) Evaluation of bread wheat genotypes in irrigated and rainfed conditions using biplot analysis. Appl Ecol Environ Res 17(1):1431–1450

Tsai CF, Hsiao YC (2010) Combining multiple feature selection methods for stock prediction: Union, intersection, and multi-intersection approaches. Decis Support Syst 50(1):258–269

Venkatesan D, Kannan K, Saravanan R (2009) A genetic algorithm-based artificial neural network model for the optimization of machining processes. Neural Comput Appl 18(2):135–140

Suryanarayana G et al (2018) Thermal load forecasting in district heating networks using deep learning and advanced feature selection methods. Energy 157:141–149

Citakoglu H (2021) Comparison of multiple learning artificial intelligence models for estimation of long-term monthly temperatures in Turkey. Arab J Geosci 14:2131

Guo J et al (2019) An XGBoost-based physical fitness evaluation model using advanced feature selection and Bayesian hyper-parameter optimization for wearable running monitoring. Comput Netw 151:166–180

Fauvel M, Chanussot J, Benediktsson JA (2009) Kernel principal component analysis for the classification of hyperspectral remote sensing data over urban areas. EURASIP J Adv Signal Process 1:783194

Bolón-Canedo V, Sánchez-Maroño N, Alonso-Betanzos A (2013) A review of feature selection methods on synthetic data. Knowl Inf Syst 34(3):483–519

Kwak N, Choi CH (2002) Input feature selection for classification problems. IEEE Trans Neural Netw 13(1):143–159

Syam N, Sharma A (2018) Waiting for a sales renaissance in the fourth industrial revolution: machine learning and artificial intelligence in sales research and practice. Ind Mark Manag 69:135–146

Hundi P, Shahsavari R (2020) Comparative studies among machine learning models for performance estimation and health monitoring of thermal power plants. Appl Energy 265:114775

Huang R et al (2021) Machine learning in natural and engineered water systems. Water Res 205:117666

Zhu R et al (2021) Application of machine learning techniques for predicting the consequences of construction accidents in China. Process Saf Environ Prot 145:293–302

Ahmadi-Nedushan B et al (2006) A review of statistical methods for the evaluation of aquatic habitat suitability for instream flow assessment. River Res Appl 22(5):503–523

Boucher TF et al (2015) A study of machine learning regression methods for major elemental analysis of rocks using laser-induced breakdown spectroscopy. Spectrochim Acta B 107:1–10

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jomthanachai, S., Wong, W.P. & Khaw, K.W. An application of machine learning regression to feature selection: a study of logistics performance and economic attribute. Neural Comput & Applic 34, 15781–15805 (2022). https://doi.org/10.1007/s00521-022-07266-6

DOI: https://doi.org/10.1007/s00521-022-07266-6