Abstract

Based on CT and MRI images acquired from patients with normal pressure hydrocephalus (NPH), we aim to use machine learning to establish a multimodal, high-performance automatic ventricle segmentation method that measures ventricular volume efficiently and accurately. First, we extracted the brain CT and MRI images of 143 patients with definite NPH. Second, we manually labeled the ventricular volume (VV) and intracranial volume (ICV). Then, we used machine learning to extract features and build an automatic ventricle segmentation model. Finally, we verified the reliability of the model and achieved automatic measurement of VV and ICV. In CT images, the Dice similarity coefficient (DSC), intraclass correlation coefficient (ICC), Pearson correlation, and Bland–Altman analysis (mean bias ± standard deviation) between the automatic and manual segmentation results for VV were 0.95, 0.99, 0.99, and 4.2 ± 2.6, respectively. The corresponding results for ICV were 0.96, 0.99, 0.99, and 6.0 ± 3.8. The whole process took 3.4 ± 0.3 s. In MRI images, the DSC, ICC, Pearson correlation, and Bland–Altman analysis between the automatic and manual segmentation results for VV were 0.94, 0.99, 0.99, and 2.0 ± 0.6, respectively. The corresponding results for ICV were 0.93, 0.99, 0.99, and 7.9 ± 3.8. The whole process took 1.9 ± 0.1 s. We have established a multimodal, high-performance automatic ventricle segmentation method that measures the ventricular volume of NPH patients efficiently and accurately. It can help clinicians quickly and accurately assess the ventricles of NPH patients.

1 Introduction

In 1965, Hakim and Adams [1] first proposed the concept of normal pressure hydrocephalus (NPH): the clinical symptoms are gait disorder, urinary incontinence, and dementia; the cerebrospinal fluid pressure measured during lumbar puncture is normal; and the imaging manifestation is communicating hydrocephalus [2, 3]. In most parts of the world, the number of elderly people and dementia patients is increasing [4]. Studies have shown that the prevalence of NPH is as high as 5.9% among people over 80 [5]. As a treatable cause of dementia in the elderly [2], NPH is of increasing clinical importance [6]. On the one hand, early diagnosis and surgical treatment may increase the likelihood of a good prognosis for patients with NPH [7]. On the other hand, NPH develops along a disease spectrum, and radiological signs precede clinical symptoms [8]. Morphological evaluation of CT or MRI is essential for screening and diagnosing patients with NPH. Ventricular enlargement is an imaging feature of NPH [2]. More importantly, as in NPH, enlarged ventricles are also associated with cognitive and gait disorders [9]. Therefore, it is essential to evaluate the ventricles of patients with NPH.

In the past, researchers segmented the ventricles manually to calculate their volume. However, this method requires professional knowledge [10, 11] and is very time-consuming and labor-intensive [12,13,14]. More importantly, it is prone to human error [15]. Precisely because of these shortcomings, manual calculation of ventricular volume is usually limited to small-sample clinical research and is difficult to apply in clinical practice with large samples [16, 17]. With advances in technology, artificial intelligence methods [18, 19] are increasingly applied in medical imaging [20, 21]. In recent years, some researchers have proposed using artificial intelligence to automatically extract ventricle features and calculate ventricular volume [22,23,24] through medical image analysis techniques [25, 26]. However, these automatic methods for measuring ventricular volume are single-modality: they cannot be applied to CT and MRI images at the same time, let alone calculate the ventricular volume (VV) and intracranial volume (ICV) simultaneously.

Our purpose, therefore, is to apply machine learning to CT and MRI images of NPH patients to measure their ventricular volume automatically, efficiently, and accurately. This will lay a solid foundation for large-sample studies of the ventricular volume of NPH patients and will also help clinicians quickly and accurately assess the ventricular condition of NPH patients [12, 14, 27].

2 Method

2.1 Patient and instrument information

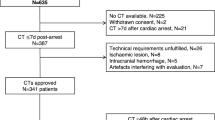

In strict accordance with the guidelines [28], we retrospectively extracted the brain CT and MRI images of 143 patients with definite NPH at Shenzhen Second People's Hospital from January 1, 2014 to December 31, 2020. Among these patients, 38 had only CT images, 46 had only MRI images, and 84 had both CT and MRI images. Therefore, we obtained brain CT images of 122 patients (38 + 84 = 122) and brain MRI images of 130 patients (46 + 84 = 130) with definite NPH. Their characteristics are listed in Table 1. In total, three MRI scanners and two CT scanners were used in this study; their specific parameters are described in Table 2. The flowchart and network structure of the whole process are shown in Fig. 1.

2.2 Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. This study was approved by the bioethics committee of The First Affiliated Hospital of Shenzhen University (approval no. KS20190114001), and all researchers signed the informed consent form.

2.3 Manual labeling of the ventricles and the intracranial volume

First, a radiologist with more than 5 years of clinical experience manually labeled the VV and ICV. Then, a neurosurgeon with more than 10 years of clinical experience adjusted the manual labels. Finally, an anatomy expert with more than 20 years of experience reviewed the manual annotations.

2.4 Computer processor information

All experiments were conducted on a machine with a 48-core Intel Xeon Platinum 8369 (Cooper Lake) processor and 192 GB of memory. The model was trained with the PyTorch framework on 4 Nvidia Titan RTX GPUs.

2.5 Image preprocessing

In the data preprocessing stage, we first normalized the images with z-score normalization, that is, by subtracting the mean and dividing by the standard deviation. To handle anomalous pixels in the scanned CT/MRI volumes, we clipped intensities to the range between the 2.5th and 97.5th percentiles. We then augmented the data with random horizontal flipping (probability 0.5) and random scaling of hue, saturation, and brightness within the range [0.8, 1.2]. Finally, we resized the images with bicubic interpolation and the masks with nearest-neighbor interpolation.
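The preprocessing steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: it works on single 2D slices, applies only the brightness part of the color jitter (hue and saturation jitter are omitted), and all function names are hypothetical. Clipping follows the order given in the text, so the percentiles are taken on the z-scored volume.

```python
import numpy as np


def normalize_and_clip(volume, low_q=2.5, high_q=97.5):
    """Z-score a CT/MRI volume, then clip values outside the given percentiles."""
    v = volume.astype(np.float64)
    std = v.std()
    v = (v - v.mean()) / (std if std > 0 else 1.0)  # guard against constant volumes
    lo, hi = np.percentile(v, [low_q, high_q])
    return np.clip(v, lo, hi)


def augment(image, mask, rng, p_flip=0.5, scale_range=(0.8, 1.2)):
    """Flip image and mask together; rescale the brightness of the image only."""
    if rng.random() < p_flip:
        image = np.flip(image, axis=1)  # axis 1 is the width, i.e. a horizontal flip
        mask = np.flip(mask, axis=1)
    factor = rng.uniform(*scale_range)  # brightness factor in [0.8, 1.2]
    return image * factor, mask


def resize_nearest(mask, out_h, out_w):
    """Nearest-neighbor resize, which never creates new (fractional) label values."""
    h, w = mask.shape[:2]
    rows = (np.arange(out_h) * h / out_h).astype(int)
    cols = (np.arange(out_w) * w / out_w).astype(int)
    return mask[rows][:, cols]
```

Bicubic resizing of the images is left to a library routine such as `scipy.ndimage.zoom` with `order=3`; nearest-neighbor interpolation is reserved for the masks because interpolating class labels would produce values that belong to no class.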

2.6 Machine learning process

We randomly selected 20% of the data as the test set and used the remaining 80% as the training set. The multimodal segmentation model is described below.
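A random 80/20 hold-out split of this kind can be sketched as follows. The text does not state whether the split was made per patient or per image; the sketch assumes a patient-level split, so that slices from one patient never appear in both sets. The function name and seed are hypothetical.

```python
import random


def split_patients(patient_ids, test_fraction=0.2, seed=0):
    """Randomly hold out a fraction of patients as the test set."""
    ids = sorted(set(patient_ids))  # deduplicate and fix the order before shuffling
    random.Random(seed).shuffle(ids)
    n_test = round(len(ids) * test_fraction)
    return ids[n_test:], ids[:n_test]  # (train, test)


# Example with 130 hypothetical MRI patient identifiers.
train_ids, test_ids = split_patients([f"P{i:03d}" for i in range(130)])
print(len(train_ids), len(test_ids))
```

Splitting by patient rather than by slice avoids leaking near-identical neighboring slices of one patient into both the training and test sets.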

In real-world scenarios, labeled thick-slice images are relatively easy to obtain because annotating them requires comparatively little manual effort, whereas annotating thin-slice images is labor-intensive. In addition, thick-slice and thin-slice images follow different distributions, which introduces a domain shift that degrades the performance of deep learning models. Moreover, because both CT and MRI images are readily available, we propose a segmentation model that leverages both modalities and automatically segments CT and MRI images regardless of slice thickness. We verified the feasibility of this model in our previous research [21, 29].

Our goal is to use labeled thick-slice images of different modalities to minimize the performance gap between thick-slice and thin-slice images in both CT and MRI. We denote the labeled thick-slice images as \({D}_{S}=\left\{\left({x}_{s},{y}_{s}\right)\mid {x}_{s}\in {\mathbb{R}}^{H\times W\times 3},\ {y}_{s}\in {\mathbb{R}}^{H\times W}\right\}\) and the unlabeled thin-slice images as \({D}_{T}=\left\{{x}_{t}\mid {x}_{t}\in {\mathbb{R}}^{H\times W\times 3}\right\}\). For feature extraction, we use a ResNet-34 pretrained on the ImageNet dataset as the encoder. For the decoder, we adopt sub-pixel convolution to upsample the feature maps and reconstruct full-resolution predictions without heavy computation, while bringing additional information into the prediction. Specifically, the sub-pixel convolution can be written as:

\[ {F}^{L}=SP\left({W}_{L}*{F}^{L-1}+{b}_{L}\right) \]

where the \(SP(\cdot)\) operator rearranges a tensor of shape \(H\times W\times C\times {r}^{2}\) into a tensor of shape \(rH\times rW\times C\); \({F}^{L}\) and \({F}^{L-1}\) are feature maps of the image; and \({W}_{L}\) and \({b}_{L}\) are the weights and bias of the convolution at layer \(L\).
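To make the \(SP(\cdot)\) rearrangement concrete, here is a NumPy sketch of a sub-pixel upsampling step: a convolution that outputs \(C\cdot {r}^{2}\) channels, followed by the rearrangement into an \(r\)-times larger map. For brevity it assumes a 1×1 convolution (a per-pixel matrix multiply) with hypothetical random weights; a real decoder would typically use a learned 3×3 convolution, for example PyTorch's `nn.Conv2d` followed by `nn.PixelShuffle`, whose channel ordering this sketch follows.

```python
import numpy as np


def sub_pixel_shuffle(x, r):
    """SP(.): rearrange an (H, W, C*r*r) array into (r*H, r*W, C)."""
    h, w, crr = x.shape
    if crr % (r * r):
        raise ValueError("channel count must be divisible by r*r")
    c = crr // (r * r)
    x = x.reshape(h, w, c, r, r)    # split channels into (C, r_row, r_col)
    x = x.transpose(0, 3, 1, 4, 2)  # -> (H, r_row, W, r_col, C)
    return x.reshape(h * r, w * r, c)


def sub_pixel_conv(feat, weight, bias, r):
    """F^L = SP(W_L * F^{L-1} + b_L) with a 1x1 convolution (per-pixel matmul)."""
    conv = np.einsum("hwk,kd->hwd", feat, weight) + bias
    return sub_pixel_shuffle(conv, r)


rng = np.random.default_rng(0)
feat = rng.normal(size=(8, 8, 16))  # F^{L-1}: an 8x8 map with 16 channels
r, c_out = 2, 3
weight = rng.normal(size=(16, c_out * r * r))
bias = np.zeros(c_out * r * r)
print(sub_pixel_conv(feat, weight, bias, r).shape)
```

Because the upsampling is a pure reshape and transpose after an ordinary convolution, all learnable work happens at the lower resolution, which is why sub-pixel convolution is cheaper than transposed convolution at the output resolution.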

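To train the model, we feed both thick-slice and thin-slice images into the network and optimize the following objective function:

\[ \mathcal{L}={L}_{S}\left({p}_{s},{y}_{s}\right)+{L}_{T}\left({p}_{t}\right) \]

where \({p}_{s}\) and \({p}_{t}\) are the model's predictions for the thick-slice and thin-slice images, respectively, and \({y}_{s}\) is the label of the thick-slice images. More concretely, \({L}_{S}\) is the cross-entropy loss, computed as:

\[ {L}_{S}=-\frac{1}{HW}\sum_{j=1}^{H}\sum_{k=1}^{W}\sum_{c}1\left[{y}_{s}^{jk}=c\right]\log {p}_{s}^{jkc} \]

where \({p}^{jkc}\) is the predicted probability of class c at pixel (j, k) and \(1\left[\cdot\right]\) is the indicator function, and \({L}_{T}\) is the entropy loss that guides the segmentation of the thin slices, obtained by:

\[ {L}_{T}=-\frac{1}{HW}\sum_{j=1}^{H}\sum_{k=1}^{W}\sum_{c}{p}_{t}^{jkc}\log {p}_{t}^{jkc} \]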

During training, we iteratively optimize the above loss functions. At test time, we feed the image slices into the trained model to obtain the predicted segmentation.
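The two loss terms can be sketched in NumPy as below: pixel-wise cross-entropy on the labeled thick slices, and the mean pixel-wise entropy of the softmax predictions on the unlabeled thin slices. Minimizing that entropy pushes thin-slice predictions toward confident class assignments. The weight `lam` between the two terms is a hypothetical parameter (1 by default), not a value reported in this work, and the arrays stand in for network outputs.

```python
import numpy as np


def softmax(logits, axis=-1):
    z = logits - logits.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_entropy_loss(p_s, y_s, eps=1e-12):
    """L_S: mean over pixels of -log p_s[j, k, y_s[j, k]]; p_s is (H, W, K), y_s is (H, W)."""
    h, w, _ = p_s.shape
    picked = p_s[np.arange(h)[:, None], np.arange(w)[None, :], y_s]
    return float(-np.log(picked + eps).mean())


def entropy_loss(p_t, eps=1e-12):
    """L_T: mean over pixels of -sum_c p_t[j, k, c] * log p_t[j, k, c]."""
    return float(-(p_t * np.log(p_t + eps)).sum(axis=-1).mean())


def total_loss(logits_s, y_s, logits_t, lam=1.0):
    """Supervised loss on thick slices plus (weighted) entropy on thin slices."""
    return cross_entropy_loss(softmax(logits_s), y_s) + lam * entropy_loss(softmax(logits_t))
```

A uniform prediction over K classes gives the maximum entropy log K, while a one-hot prediction gives zero, which is what makes the entropy term act as a confidence-promoting signal on images without labels.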

Previous medical segmentation approaches tend to train networks from scratch in an end-to-end manner. However, it has been widely shown that initializing a network with a model pretrained on a large-scale dataset such as ImageNet enables it to learn texture and shape priors effectively early in training. Therefore, we initialized the encoder with an ImageNet-pretrained ResNet to obtain powerful image representations during the first stage of training.

2.7 Calculation of VV and ICV

In this section, we describe how to automatically calculate the VV and ICV using the segmentation method described in the previous sections. We first extract the voxel spacing of the image from the scanned MRI or CT file, denoted \(r=({r}_{x}, {r}_{y}, {r}_{z})\) (mm/pixel), so that each voxel occupies \({r}_{x}{r}_{y}{r}_{z}\) mm³. Given an input MRI/CT volume, we first use the ventricle segmentation model to predict each type of ventricle, i.e., the left lateral ventricle, right lateral ventricle, third ventricle, and fourth ventricle. For the i-th slice of the volume \(\mathbf{V}\), the predicted label of the pixel at position (j, k) is \(\mathbf{V}_{jk}^{i}\). Therefore, for the ventricle of class c, we calculate its volume \(V{V}_{c}\) as follows:

\[ V{V}_{c}={r}_{x}{r}_{y}{r}_{z}\sum_{i}\sum_{j}\sum_{k}1\left[\mathbf{V}_{jk}^{i}=c\right] \]

where \(1\left[x=y\right]\) is the indicator function.

Given a scanned CT/MRI volume, we can thus use the above equation to estimate the VV of each ventricle. Similarly, we estimate the intracranial volume with a whole-brain segmentation model trained as described in the previous section. For the i-th slice of the volume \(\mathbf{V}\), let \(\mathbf{V}_{jk}^{i}\) now denote the whole-brain prediction at pixel (j, k). With the whole-brain region labeled 1, the ICV is calculated as follows:

\[ ICV={r}_{x}{r}_{y}{r}_{z}\sum_{i}\sum_{j}\sum_{k}1\left[\mathbf{V}_{jk}^{i}=1\right] \]

Then, by definition, the relative ventricular volume VV/ICV is obtained as follows, where the total VV is the sum of \(V{V}_{c}\) over the four ventricle classes:

\[ VV/ICV=\frac{\sum_{c}V{V}_{c}}{ICV} \]
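The VV, ICV, and VV/ICV formulas reduce to counting voxels and scaling by the voxel volume. A minimal NumPy sketch follows, assuming hypothetical integer label maps: for the ventricle model, 0 is background and 1 to 4 are the four ventricle classes (the class numbering is an assumption); for the whole-brain model, 1 marks the brain region. With spacing in mm, volumes come out in mm³ (divide by 1000 for mL).

```python
import numpy as np

# Hypothetical label convention for the ventricle model (0 = background).
VENTRICLE_CLASSES = {1: "right lateral", 2: "left lateral", 3: "third", 4: "fourth"}


def voxel_volume_mm3(spacing):
    """Volume of one voxel, r_x * r_y * r_z, for spacing given in mm."""
    rx, ry, rz = spacing
    return rx * ry * rz


def ventricular_volumes(ventricle_pred, spacing):
    """VV_c for every ventricle class c: voxel count of class c times voxel volume."""
    v = voxel_volume_mm3(spacing)
    return {c: float(np.count_nonzero(ventricle_pred == c)) * v for c in VENTRICLE_CLASSES}


def intracranial_volume(brain_pred, spacing):
    """ICV: voxels labeled 1 by the whole-brain model times voxel volume."""
    return float(np.count_nonzero(brain_pred == 1)) * voxel_volume_mm3(spacing)


def relative_ventricular_volume(ventricle_pred, brain_pred, spacing):
    """VV/ICV, with VV summed over the four ventricle classes."""
    vv = sum(ventricular_volumes(ventricle_pred, spacing).values())
    icv = intracranial_volume(brain_pred, spacing)
    if icv == 0:
        raise ValueError("empty whole-brain mask")
    return vv / icv
```

Since VV/ICV is a ratio of two volumes computed with the same voxel size, the spacing cancels out of it; the spacing only matters for the absolute VV and ICV values.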

2.8 Statistical analysis

Following the statistical methods used in previous studies [22, 30], we use the Dice similarity coefficient (DSC), intraclass correlation coefficient (ICC), Pearson correlation, and Bland–Altman analysis to evaluate the spatial overlap, reliability, correlation, and agreement between the results of automatic and manual ventricle segmentation.
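The four metrics can be computed as sketched below with NumPy. The text does not specify which ICC form was used; the sketch assumes ICC(2,1) (two-way random effects, absolute agreement, single measurement, after Shrout and Fleiss). The Bland–Altman bias is taken as the mean of automatic minus manual values, with 95% limits of agreement at ±1.96 SD. Function names are illustrative.

```python
import numpy as np


def dice(pred, truth):
    """DSC = 2|A and B| / (|A| + |B|) for binary masks."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    denom = pred.sum() + truth.sum()
    return 1.0 if denom == 0 else float(2.0 * np.logical_and(pred, truth).sum() / denom)


def pearson(x, y):
    return float(np.corrcoef(x, y)[0, 1])


def icc_2_1(ratings):
    """ICC(2,1) for an (n_subjects, k_raters) array."""
    y = np.asarray(ratings, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_rows = k * ((y.mean(axis=1) - grand) ** 2).sum()  # between-subject variation
    ss_cols = n * ((y.mean(axis=0) - grand) ** 2).sum()  # between-rater variation
    ss_err = ((y - grand) ** 2).sum() - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return float((ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n))


def bland_altman(auto, manual):
    """Return (mean bias, SD of differences, lower LoA, upper LoA)."""
    diff = np.asarray(auto, dtype=float) - np.asarray(manual, dtype=float)
    bias, sd = diff.mean(), diff.std(ddof=1)
    return float(bias), float(sd), float(bias - 1.96 * sd), float(bias + 1.96 * sd)
```

Here `auto` and `manual` would be per-patient VV (or ICV) values; `icc_2_1` expects them stacked as two columns, for example `np.column_stack([auto, manual])`.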

2.9 Implementation detail

For the implementation, we first trained the model on the thick-slice datasets with the SGD optimizer for 200 epochs, with an initial learning rate of 1e-3 and a linear decay schedule. We then used this pretrained model to initialize the network and trained it on both thick-slice and thin-slice images with the proposed objective function for 100 epochs, with an initial learning rate of 1e-4. The weight decay factor was 1e-5. The pretraining stage took about 5 h on the machine with 4 NVIDIA TITAN RTX GPUs, and the main training took 10 h on the same machine.
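The two-stage schedule can be summarized as a small configuration plus a learning-rate function. The sketch assumes that the linear decay runs from the initial rate to zero over each stage (the end value is not stated) and that the second stage uses the same linear schedule; the dictionary keys are hypothetical names, not the authors' configuration format.

```python
# Hypothetical summary of the two training stages described above.
STAGES = {
    "pretrain_thick": {"epochs": 200, "base_lr": 1e-3},
    "joint_thick_thin": {"epochs": 100, "base_lr": 1e-4},
}
WEIGHT_DECAY = 1e-5
OPTIMIZER = "SGD"


def linear_decay_lr(base_lr, epoch, total_epochs, final_lr=0.0):
    """Learning rate decayed linearly from base_lr (epoch 0) to final_lr (epoch total_epochs)."""
    if not 0 <= epoch <= total_epochs:
        raise ValueError("epoch out of range")
    return base_lr + (final_lr - base_lr) * (epoch / total_epochs)


for name, cfg in STAGES.items():
    lrs = [linear_decay_lr(cfg["base_lr"], e, cfg["epochs"]) for e in (0, cfg["epochs"] // 2)]
    print(name, lrs)
```

In PyTorch, the same multiplier could be passed to `torch.optim.lr_scheduler.LambdaLR` on top of `torch.optim.SGD(params, lr=base_lr, weight_decay=WEIGHT_DECAY)`; momentum is not reported in the text and is left out here.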

3 Results

3.1 Results on CT images

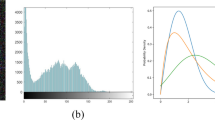

Between automatic segmentation by our model and manual segmentation, the DSC, ICC, and Pearson correlation for VV are 0.95, 0.99, and 0.99, respectively, and those for ICV are 0.96, 0.99, and 0.99, respectively (Table 3 and Fig. 2). Bland–Altman analysis shows that the mean bias ± standard deviation between manual and automatic segmentation is 4.2 ± 2.6 for VV and 6.0 ± 3.8 for ICV (Fig. 3). Our model takes 3.4 ± 0.3 s to automatically segment the VV and ICV of a patient (Table 4).

Pearson correlation analysis of manual and automatic segmentation results. For both the ventricular volume (VV) and the intracranial volume (ICV), in both CT and MRI images, the Pearson correlation between automatic and manual segmentation results is 0.99 and statistically significant (P < 0.01)

Bland–Altman analysis of manual and automatic segmentation results. In CT images, the mean bias ± standard deviation between manual and automatic segmentation is 4.2 ± 2.6 for VV and 6.0 ± 3.8 for ICV. In MRI images, it is 2.0 ± 0.6 for VV and 7.9 ± 3.8 for ICV

3.2 Results on MRI images

Between automatic and manual segmentation, the DSC, ICC, and Pearson correlation for VV are 0.94, 0.99, and 0.99, respectively, and those for ICV are 0.93, 0.99, and 0.99, respectively (Table 3 and Fig. 2). Bland–Altman analysis shows that the mean bias ± standard deviation between manual and automatic segmentation is 2.0 ± 0.6 for VV and 7.9 ± 3.8 for ICV (Fig. 3). Our model takes 1.9 ± 0.1 s to automatically segment the VV and ICV of a patient (Table 4).

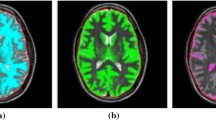

3.3 Visualization of the segmentation results

To evaluate our method qualitatively, we visualize the segmentation results and the corresponding 3D reconstructions for both MRI and CT samples in Fig. 4. In the figure, the right lateral ventricle is shown in red, the left lateral ventricle in green, the third ventricle in yellow, and the fourth ventricle in blue. The 3D segmentation results are visualized in the axial, coronal, and sagittal planes. Our method not only predicts the two lateral ventricles but also segments the third and fourth ventricles well. A comparison with other automatic ventricle segmentation methods on the validation set shows that our method outperforms the other two methods (Table 5).

Ventricle and whole-brain segmentation results for the two modalities (CT and MRI), with the corresponding 3D visualizations of the predictions. The right lateral ventricle is shown in red, the left lateral ventricle in green, the third ventricle in yellow, and the fourth ventricle in blue

4 Discussion

The results of the multimodal automatic ventricle segmentation method, established using brain images of NPH patients, show excellent spatial overlap, reliability, correlation, and agreement with those of manual segmentation. This indicates that the proposed artificial intelligence method enables efficient and accurate assessment of the ventricles of NPH patients.

Volume measurement is obtained by segmentation, the process of delineating structures in imaging studies [23]. Ventricle segmentation provides a quantitative measure of ventricular changes and thus important diagnostic information [31]. Manual segmentation takes about 30 min to obtain the VV and ICV of a single patient [17], which hinders the evaluation of ventricles in large samples [13]. For this reason, manual segmentation is often impractical in large-scale clinical practice, and more automated methods are urgently needed [32, 33]. Automated brain image segmentation has consequently become an active research topic in recent years [16]. More importantly, developing accurate, fast, and easy-to-use ventricular volume segmentation methods is of great significance for further research on, and standardized use of, ventricular volume in NPH patients [27]. Automatic ventricle segmentation can overcome the limitations of manual segmentation [10] and is efficient and fast [12, 23]. Most importantly, it can substantially shorten processing time [24], which lays a solid foundation for directly measuring ventricular volume in large-scale clinical practice. However, because CT and MRI images differ, automatic ventricle segmentation methods developed for CT images often perform poorly on MR images [34]. Moreover, existing automatic ventricle segmentation methods for NPH patients segment only the ventricular structures [24]. Compared with the ventricular volume alone, the VV/ICV reflects the size of the ventricles relative to the whole intracranial space [22] and accounts for inter-subject differences in intracranial volume caused by anatomical variation [35].

Among current semi-supervised segmentation techniques, Li et al. [36] proposed a generative method that adopts StyleGAN2 with an additional label-synthesis branch and uses partially labeled images to make predictions on out-of-domain images. At inference time, however, it requires fine-tuning the network to find the best reconstruction of each target image under their proposed objective function. By contrast, our method fine-tunes the network on labeled and unlabeled images together, preserving its generalization ability for both thick-slice and thin-slice images in both CT and MRI modalities.

This study has the following limitations. First, it is a retrospective study based on existing images and clinical information. In future work, we will collect more comprehensive clinical information and imaging data of NPH patients through prospective research and pay closer attention to follow-up and postoperative outcomes, which will help us understand NPH more comprehensively. Second, this is a single-center study, and all patients come from a single region. Previous studies have reported that ventricles differ between ethnic groups [17]. We will therefore conduct multicenter research to further understand the ventricles of NPH patients and improve the applicability and value of the automatic ventricle segmentation method. Third, we only analyzed routine brain images, whereas functional imaging is also very important for the diagnosis and treatment of NPH patients [37]. In follow-up research, we will therefore combine conventional and functional imaging to further understand the changes in brain structure and function of NPH patients. Finally, and most importantly, both medicine and artificial intelligence are constantly evolving [18, 20]. Although our approach works for multiple modalities, it fine-tunes the network on thin-slice and thick-slice images together to preserve its generalization ability on both, which requires access to the thick-slice images while training on the thin-slice images. This may be impractical when data privacy must be protected. We will continue to optimize the algorithm to meet the practical needs of clinical practice. In addition, since numerous artificial intelligence algorithms have been successfully deployed in real-world systems [19], we are also considering integrating our algorithm directly into out-of-the-box healthcare systems, so that artificial intelligence and medicine can better complement each other and progress together.

5 Conclusion

In summary, we have established a multimodal, high-performance automatic ventricle segmentation method that measures the ventricular volume of NPH patients efficiently and accurately. It processes both CT and MRI images and calculates both the ventricular volume and the relative ventricular volume (VV/ICV). The whole process takes only a few seconds per patient (3.4 s for CT and 1.9 s for MRI on average), which is much faster than manual segmentation. In addition, the slice-thickness-agnostic ventricle and whole-brain segmentation can handle images acquired on different scanners and with different slice thicknesses. This is an effective combination of medicine and artificial intelligence. It not only lays a solid foundation for subsequent large-sample analyses of NPH patients but also helps clinicians quickly and accurately assess the ventricles of patients with normal pressure hydrocephalus, supporting the diagnosis of NPH, patient follow-up, and the evaluation of surgical outcomes.

Availability of data and material

All datasets analyzed during the present study are available from the corresponding author on reasonable request.

Code availability

Open-source code will be published on GitHub upon publication of this work.

References

Adams RD, Fisher CM, Hakim S, Ojemann RG, Sweet WH (1965) Symptomatic occult hydrocephalus with normal cerebrospinal-fluid pressure: a treatable syndrome. N Engl J Med 273:117–126. https://doi.org/10.1056/NEJM196507152730301

Nakajima M, Yamada S, Miyajima M (2021) Guidelines for Management of Idiopathic Normal Pressure Hydrocephalus (Third Edition): endorsed by the Japanese Society of Normal Pressure Hydrocephalus. Neurol Med Chir (Tokyo) 61:63–97. https://doi.org/10.2176/nmc.st.2020-0292

He W, Fang X, Wang X (2020) A new index for assessing cerebral ventricular volume in idiopathic normal-pressure hydrocephalus: a comparison with Evans’ index. Neuroradiology 62:661–667. https://doi.org/10.1007/s00234-020-02361-8

Prince M, Bryce R, Albanese E, Wimo A, Ribeiro W, Ferri CP (2013) The global prevalence of dementia: a systematic review and metaanalysis. Alzheimers Dement 9:63–75. https://doi.org/10.1016/j.jalz.2012.11.007

Jaraj D, Rabiei K, Marlow T, Jensen C, Skoog I, Wikkelso C (2014) Prevalence of idiopathic normal-pressure hydrocephalus. Neurology 82:1449–1454. https://doi.org/10.1212/WNL.0000000000000342

Kazui H, Miyajima M, Mori E, Ishikawa M (2015) Lumboperitoneal shunt surgery for idiopathic normal pressure hydrocephalus (SINPHONI-2): an open-label randomised trial. Lancet Neurol 14:585–594. https://doi.org/10.1016/S1474-4422(15)00046-0

Andren K, Wikkelso C, Tisell M, Hellstrom P (2014) Natural course of idiopathic normal pressure hydrocephalus. J Neurol Neurosurg Psychiatry 85:806–810. https://doi.org/10.1136/jnnp-2013-306117

Jaraj D, Wikkelso C, Rabiei K (2017) Mortality and risk of dementia in normal-pressure hydrocephalus: a population study. Alzheimers Dement 13:850–857. https://doi.org/10.1016/j.jalz.2017.01.013

Palm WM, Saczynski JS, van der Grond J (2009) Ventricular dilation: association with gait and cognition. Ann Neurol 66:485–493. https://doi.org/10.1002/ana.21739

Kocaman H, Acer N, Köseoğlu E, Gültekin M, Dönmez H (2019) Evaluation of intracerebral ventricles volume of patients with Parkinson’s disease using the atlas-based method: A methodological study. J Chem Neuroanat 98:124–130. https://doi.org/10.1016/j.jchemneu.2019.04.005

Kempton MJ, Underwood TSA, Brunton S (2011) A comprehensive testing protocol for MRI neuroanatomical segmentation techniques: evaluation of a novel lateral ventricle segmentation method. Neuroimage 58:1051–1059. https://doi.org/10.1016/j.neuroimage.2011.06.080

Quon JL, Han M, Kim LH (2021) Artificial intelligence for automatic cerebral ventricle segmentation and volume calculation: a clinical tool for the evaluation of pediatric hydrocephalus. J Neurosurg Pediatr 27:131–138. https://doi.org/10.3171/2020.6.PEDS20251

Dubost F, Bruijne MD, Nardin M (2020) Multi-atlas image registration of clinical data with automated quality assessment using ventricle segmentation. Med Image Anal 63:101698. https://doi.org/10.1016/j.media.2020.101698

Qiu W, Yuan J, Rajchl M (2015) 3D MR ventricle segmentation in pre-term infants with post-hemorrhagic ventricle dilatation (PHVD) using multi-phase geodesic level-sets. Neuroimage 118:13–25. https://doi.org/10.1016/j.neuroimage.2015.05.099

Poh LE, Gupta V, Johnson A, Kazmierski R, Nowinski WL (2012) Automatic segmentation of ventricular cerebrospinal fluid from ischemic stroke CT images. Neuroinformatics 10:159–172. https://doi.org/10.1007/s12021-011-9135-9

Cherukuri V, Ssenyonga P, Warf BC, Kulkarni AV, Monga V, Schiff SJ (2018) Learning based segmentation of CT brain images: application to postoperative hydrocephalic scans. IEEE Trans Biomed Eng 65:1871–1884. https://doi.org/10.1109/TBME.2017.2783305

Ambarki K, Israelsson H, Wåhlin A, Birgander R, Eklund A, Malm J (2010) Brain ventricular size in healthy elderly. Neurosurgery 67:94–99. https://doi.org/10.1227/01.NEU.0000370939.30003.D1

Hassan MM, Alam MGR, Uddin MZ, Huda S, Almogren A, Fortino G (2019) Human emotion recognition using deep belief network architecture. Inform Fusion 51:10–18. https://doi.org/10.1016/j.inffus.2018.10.009

Zhang Y, Gravina R, Lu H, Villari M, Fortino G (2018) PEA: Parallel electrocardiogram-based authentication for smart healthcare systems. J Netw Comput Appl 117:10–16. https://doi.org/10.1016/j.jnca.2018.05.007

Piccialli F, Somma VD, Giampaolo F, Cuomo S, Fortino G (2021) A survey on deep learning in medicine: Why, how and when? Inform Fusion 66:113–137. https://doi.org/10.1016/j.inffus.2020.09.006

Yang G, Ye Q, Xia J (2022) Unbox the black-box for the medical explainable AI via multi-modal and multi-centre data fusion: A mini-review, two showcases and beyond. Inform Fusion 77:29–52. https://doi.org/10.1016/j.inffus.2021.07.016

Ntiri EE, Holmes MF, Forooshani PM (2021) Improved segmentation of the intracranial and ventricular volumes in populations with cerebrovascular lesions and atrophy using 3D CNNs. Neuroinformatics. https://doi.org/10.1007/s12021-021-09510-1

Huff TJ, Ludwig PE, Salazar D, Cramer JA (2019) Fully automated intracranial ventricle segmentation on CT with 2D regional convolutional neural network to estimate ventricular volume. Int J Comput Assist Radiol Surg 14:1923–1932. https://doi.org/10.1007/s11548-019-02038-5

Shao M, Han S, Carass A (2019) Brain ventricle parcellation using a deep neural network: Application to patients with ventriculomegaly. Neuroimage Clin 23:101871. https://doi.org/10.1016/j.nicl.2019.101871

Zhao S, Gao Z, Zhang H et al (2017) Robust segmentation of intima-media borders with different morphologies and dynamics during the cardiac cycle. IEEE J Biomed Health Inform 22:1571–1582. https://doi.org/10.1109/JBHI.2017.2776246

Zhao S, Wu X, Chen B, Li S (2021) Automatic vertebrae recognition from arbitrary spine MRI images by a category-Consistent self-calibration detection framework. Med Image Anal 67:101826. https://doi.org/10.1016/j.media.2020.101826

Neikter J, Agerskov S, Hellström P (2020) Ventricular volume is more strongly associated with clinical improvement than the evans index after shunting in idiopathic normal pressure hydrocephalus. Am J Neuroradiol 41:1187–1192. https://doi.org/10.3174/ajnr.A6620

Mori E, Ishikawa M, Kato T (2012) Guidelines for management of idiopathic normal pressure hydrocephalus: second edition. Neurol Med Chir (Tokyo) 52:775–809. https://doi.org/10.2176/nmc.52.775

Zhou X, Ye Q, Jiang Y (2020) Systematic and comprehensive automated ventricle segmentation on ventricle images of the elderly patients: a retrospective study. Front Aging Neurosci 12:618538. https://doi.org/10.3389/fnagi.2020.618538

Zhao SX, Xiao YH, Lv FR, Zhang ZW, Sheng B, Ma HL (2018) Lateral ventricular volume measurement by 3D MR hydrography in fetal ventriculomegaly and normal lateral ventricles. J Magn Reson Imaging 48:266–273. https://doi.org/10.1002/jmri.25927

Chen W, Smith R, Ji S, Ward KR, Najarian K (2009) Automated ventricular systems segmentation in brain CT images by combining low-level segmentation and high-level template matching. BMC Med Inform Decis Mak 9:S4. https://doi.org/10.1186/1472-6947-9-S1-S4

Chou Y, Leporé N, de Zubicaray GI (2008) Automated ventricular mapping with multi-atlas fluid image alignment reveals genetic effects in Alzheimer’s disease. Neuroimage 40:615–630. https://doi.org/10.1016/j.neuroimage.2007.11.047

Tang X, Crocetti D, Kutten K (2015) Segmentation of brain magnetic resonance images based on multi-atlas likelihood fusion: testing using data with a broad range of anatomical and photometric profiles. Front Neurosci 9:61. https://doi.org/10.3389/fnins.2015.00061

Qian X, Lin Y, Zhao Y, Yue X, Lu B, Wang J (2017) Objective ventricle segmentation in brain CT with ischemic stroke based on anatomical knowledge. Biomed Res Int 2017:1–11. https://doi.org/10.1155/2017/8690892

Tarnaris A, Toma AK, Pullen E (2011) Cognitive, biochemical, and imaging profile of patients suffering from idiopathic normal pressure hydrocephalus. Alzheimers Dement 7:501–508. https://doi.org/10.1016/j.jalz.2011.01.003

Li D, Yang J, Kreis K, Torralba A, Fidler S (2021) Semantic segmentation with generative models: Semi-supervised learning and strong out-of-domain generalization. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 8300–8311

Zhang H, He WJ, Liang LH (2021) Diffusion spectrum imaging of corticospinal tracts in idiopathic normal pressure hydrocephalus. Front Neurol 12:636518. https://doi.org/10.3389/fneur.2021.636518

Acknowledgements

The authors are very grateful to Mengyao Xu and Yibo Xu for their guidance on the experimental design of this study, to Chuming Xu and Xiaolian Li for their sincere help with data collection, and to Weiwen Zhou and Fengping Huang for their suggestions on data analysis. This study is supported in part by Project of Shenzhen International Cooperation Foundation (GJHZ20180926165402083), in part by the National Natural Science Foundation of China (grant number 82171913), in part by the funding support from the Basque Government (Eusko Jaurlaritza) through the Consolidated Research Group MATHMODE (IT1294-19), in part by the British Heart Foundation (Project Number: TG/18/5/34111, PG/16/78/32402), in part by the Hangzhou Economic and Technological Development Area Strategical Grant (Imperial Institute of Advanced Technology), in part by the European Research Council Innovative Medicines Initiative on Development of Therapeutics and Diagnostics Combatting Coronavirus Infections Award “DRAGON: rapiD and secuRe AI imaging based diaGnosis, stratification, fOllow-up, and preparedness for coronavirus paNdemics” [H2020-JTI-IMI2 101005122], in part by the AI for Health Imaging Award “CHAIMELEON: Accelerating the Lab to Market Transition of AI Tools for Cancer Management” [H2020-SC1-FA-DTS-2019-1 952172], in part by the MRC (MC/PC/21013), and in part by the UK Research and Innovation Future Leaders Fellowship [MR/V023799/1].

Author information

Contributions

XZ, QY, XY, JC, HM, JX, JS, and GY contributed to study design and writing—original draft preparation. XZ, XY, JC, HM, and JX contributed to data collection. QY, JX, JS and GY contributed to data visualization. XZ, QY, JX, JS, and GY contributed to writing—review and editing. JX, JS, and GY contributed to supervision and funding acquisition.

Ethics declarations

Conflict of interest

The authors declare no competing interests. All authors have seen the manuscript and approved its submission to the journal. We confirm that the content of the manuscript has not been published or submitted for publication elsewhere.

Ethics approval

This study was approved by the bioethics committee of The First Affiliated Hospital of Shenzhen University (approval no. KS20190114001), and all researchers signed the informed consent form.

Consent to participate

Informed consent was obtained from all individual participants included in the study.

Consent for publication

All authors have read and agreed to the published version of the manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Javier Del Ser and Guang Yang are co-last authors of this work.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, X., Ye, Q., Yang, X. et al. AI-based medical e-diagnosis for fast and automatic ventricular volume measurement in patients with normal pressure hydrocephalus. Neural Comput & Applic 35, 16011–16020 (2023). https://doi.org/10.1007/s00521-022-07048-0

DOI: https://doi.org/10.1007/s00521-022-07048-0