Abstract

Storage and retrieval of data in a computer memory play a major role in system performance. Traditionally, computer memory organization is ‘static’, i.e. it does not change based on the application-specific characteristics of memory access behaviour during system operation. Specifically, in the case of a content-operated memory (COM), the association of a data block with a search pattern (or cues) and the granularity (details) of the stored data do not evolve. Such a static nature of computer memory, we observe, not only limits the amount of data we can store in a given physical storage, but also misses the opportunity for performance improvement in various applications. In contrast, human memory is characterized by seemingly infinite plasticity in storing and retrieving data, as well as in dynamically creating/updating the associations between data and corresponding cues. In this paper, we introduce BINGO, a brain-inspired learning memory paradigm that organizes the memory as a flexible neural memory network. In BINGO, the network structure, strength of associations, and granularity of the data adjust continuously during system operation, providing unprecedented plasticity and performance benefits. We present the associated storage/retrieval/retention algorithms in BINGO, which integrate a formalized learning process. Using an operational model, we demonstrate that BINGO achieves an order of magnitude improvement in memory access times and effective storage capacity on the CIFAR-10 dataset and the wildlife surveillance dataset when compared to traditional content-operated memory.



1 Introduction

Digital memory is an integral part of a computer system and plays a major role in determining system performance. Memory access behaviour largely depends on the nature of the incoming data and the specific information-processing steps applied to the data. Emergent applications ranging from wildlife surveillance [2] to infrastructure damage monitoring [3, 4] that collect, store and analyse data often exhibit distinct memory access behaviour (e.g. storage and retrieval of specific data blocks). Even within the same application, such behaviour often changes with time. Hence, these systems, with variable and constantly evolving memory access patterns, can benefit from a memory organization that dynamically tailors itself to their requirements.

Additionally, many computing systems, specifically the emergent internet of things (IoT) edge devices, must deal with a huge influx of data of varying importance while being constrained in terms of memory storage capacity, energy and communication bandwidth [5,6,7]. Hence, for these applications, it is important for the memory framework to be efficient in terms of energy, space, and transmission bandwidth utilization. Edge devices often also deal with multi-modal data, and the memory system must be flexible enough to handle inter-modality relations.

Based on these observations, we believe an ideal data storage framework for these emergent applications should have the following properties:

- Dynamic in nature, to accommodate constantly evolving application requirements and scenarios.

- Able to exhibit virtually infinite capacity to deal with a huge influx of sensor data, a common feature of many IoT applications.

- Capable of trading off data granularity against transmission and energy efficiency.

- Able to efficiently handle multi-modal data in the context of the application-specific requirements.

Traditional memories [1] (both address-operated and content-operated) are not ideal for meeting these requirements due to the lack of flexibility in their memory organization and operations. In an address-operated memory, each address is associated with a data unit. In a content-operated memory, each search pattern (cue/tag) is associated with a single data unit. Hence, in both cases, the mapping is one-to-one and does not evolve without direct user intervention. Moreover, data in a traditional memory are stored at a fixed quality/granularity. When a memory runs out of space, it can either stop accepting new data or remove old data based on a specific data replacement policy. All these traits of a traditional memory stem from its ‘static’ nature, which makes it inefficient for many modern applications with evolving access requirements, as established earlier. For example, in a wildlife image-based surveillance system geared towards detecting wolves, any image frame with at least one wolf can be considered important. A traditional memory, lacking dynamism, statically stores all incoming image frames at the same quality and with the same level of accessibility. This leads to the storage of irrelevant data (non-wolf images) and uniform access time for both important (wolf images) and unimportant (non-wolf images) data units.

To design an ideal memory framework for these emergent applications, we draw inspiration from biological memory and try to model some of its most useful properties: (1) Virtually Infinite Capacity: the ability of the biological brain to deal with a huge influx of data of varying importance; (2) Impreciseness: the tendency of biological memory to store and retrieve imprecise but approximately correct data; (3) Plasticity: the ability of the organic brain to undergo internal change based on external stimuli; (4) Intelligence: the capability of the human brain to learn from historical memory access patterns and improve storage/access efficiency.

Performing a complex task such as efficient data storage for a target application/scenario requires the incorporation of real-time knowledge [8]. Different artificial intelligence (AI) and machine learning (ML) methods have been widely used to solve diverse problems by learning domain knowledge. Hence, we hypothesize that incorporating AI in a digital memory will allow us to model useful human brain traits in a digital memory framework.

With this vision in mind, we propose a new paradigm of content-operated memory framework, BINGO (Brain-Inspired LearNinG MemOry), which mimics the intelligence of the human brain for efficient storage and access of multi-modal data. In BINGO, the memory storage is a network of cues (search patterns) and data, which we term the Neural Memory Network (NoK). Based on the feedback generated by each memory operation, we use reinforcement learning to (1) optimize the NoK organization and (2) adjust the granularity (feature quality) of specific data units. BINGO is designed to have the same interface as any traditional COM, which allows it to replace traditional COMs in any application, as shown in Fig. 1. Applications that tolerate imprecise data storage/retrieval and deal with storing data of varying importance will benefit the most from using BINGO.

To quantitatively analyse the effectiveness of BINGO as a memory system in a computing device, we implement a BINGO memory simulator with an array of hyperparameters. We evaluate the framework using two vision datasets [9, 10] and observe that the BINGO framework utilizes orders of magnitude less space, and exhibits higher retrieval efficiency while incurring minimal impact on the application performance. In summary, we make the following contributions:

1. We present a new paradigm of learning computer memory, called BINGO, that can track data access patterns to dynamically organize itself for providing high efficiency in terms of data storage and retrieval performance.

2. We formalize the learning process of BINGO and prove interesting properties of the NoK.

3. For quantitatively analysing the capabilities of BINGO, we have designed a memory performance simulator with an array of tuneable hyperparameters.

4. We present a formal process to select and customize BINGO for a target application. To demonstrate BINGO’s merit compared to traditional content-operated memory, we provide a comprehensive performance analysis of BINGO using the CIFAR-10 dataset and a wildlife surveillance dataset [9, 10].

The rest of the paper is organized as follows: Sect. 2 discusses different state-of-the-art digital memory frameworks and provides motivations for the proposed intelligent digital memory design. Section 3 describes in detail the proposed memory framework. Section 4 quantitatively analyses the effectiveness of the BINGO framework using different datasets. Section 5 concludes the paper.

2 Background and motivation

In this section, we first discuss the major differences between our proposed memory framework (BINGO) and existing similar technologies. Next, we present the motivations that led to the development of BINGO.

2.1 Computer memory: a brief review

Computer memory is one of the key components of a computer system [11]. Digital memories are broadly divided into two categories based on how data are stored and retrieved: (1) address operated and (2) content operated [1]. In an address operated memory (for example a Random Access Memory or RAM [11, 12]), the access during read/write is done based on a memory address/location. During data retrieval/load, the memory system takes in an address as input and returns the associated data. Different variants of RAM such as SRAM (Static Random Access Memory) and DRAM (Dynamic Random Access Memory) are widely used [11]. In contrast, in a content operated memory (COM), memory access during read/write operations is performed based on a search pattern (i.e. content).

2.1.1 Content operated memory

A COM [1, 13] does not assign specific data to a specific address during the store operation. During data retrieval/load, the user provides the memory system with a search pattern/tag, and the COM searches the entire memory and returns the address at which the required data are stored. If performed sequentially, this search is extremely slow. To speed up content-based searching, parallelization is employed, which generally requires additional hardware; this extra hardware makes the COM a rather expensive solution and limits its large-scale usability. A COM can be implemented in several ways, as shown in Fig. 2, each with its own set of advantages and disadvantages. CAM (Content Addressable Memory, also known as associative memory) is the most popular variant of COM and has been used for decades in the computing domain, but the high-level architecture of a CAM has not evolved much. When a CAM becomes full, it must replace old data units with new incoming data units based on a predefined replacement policy.
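To make the cost of a purely sequential content search concrete, the following Python sketch models a COM as a list of stored tags indexed by address. It is an illustrative software model, not a hardware CAM design: the function name `com_lookup` and the exact-match rule are assumptions chosen for this example. It also counts comparisons to show that a miss touches every entry.

```python
from typing import List, Optional, Tuple


def com_lookup(tags: List[bytes], pattern: bytes) -> Tuple[Optional[int], int]:
    """Sequentially search a content-operated memory (hypothetical model).

    Returns (address, comparisons): the address of the first entry whose tag
    exactly matches `pattern` (None on a miss) and how many entries were compared.
    A hardware CAM would instead compare all entries in parallel.
    """
    comparisons = 0
    for address, tag in enumerate(tags):
        comparisons += 1
        if tag == pattern:
            return address, comparisons
    return None, comparisons


memory_tags = [b"\x0a", b"\x1f", b"\x2b", b"\x1f"]
print(com_lookup(memory_tags, b"\x1f"))  # (1, 2)
print(com_lookup(memory_tags, b"\xff"))  # (None, 4): a miss scans every entry
```

The comparison count grows linearly with the number of stored entries, which is why practical CAMs trade extra hardware for parallel matching.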

Traditional CAMs are designed to be precise [1]. No data degradation happens over time and in most cases, a perfect match is required with respect to the search pattern/tag to qualify for a successful retrieval. This feature is essential for certain applications such as destination MAC address lookup for finding the forwarding port in a network device. However, there are several applications in implantable, multimedia, Internet-of-Things (IoT) and data mining which can tolerate imprecise storage and retrieval.

BINGO and CAM are both content-operated memory frameworks. However, there are several differences between a traditional CAM and BINGO, as shown in Table 1. For both Binary Content Addressable Memory (BCAM) and Ternary Content Addressable Memory (TCAM), (1) there is no learning component, (2) data resolution remains fixed unless directly manipulated by the user, (3) associations between search pattern (tag/cue) and data remain static unless directly modified, and (4) only a one-to-one mapping exists between search patterns/cues and data units. Consequently, space and data fetch efficiency are generally low; we provide supporting results for this claim in Sect. 4.

2.1.2 Instance retrieval frameworks

Apart from standard computer memory organizations, researchers have also investigated software-level memory organizations for efficient data storage and retrieval. An instance retrieval (IR) framework is one such software wrapper on top of traditional memory systems that is used for feature-based data storage and retrieval tasks [14]. In an IR framework, visual words are identified/learned from the features of an image dataset during the training phase (code-book generation). In most cases, these visual words are cluster centroids of the feature distribution. Data insertion follows, and the data are generally organized in a tree-like data structure. The location of each data unit is determined by the (previously learned) visual words present in the input image. During the retrieval phase, a search image (or search feature set) is provided, and the tree is traversed based on the visual words in the search image to find similar stored data. If a good match exists with a stored image, that image is retrieved. These systems are primarily used for storing and retrieving images. The learning component of an IR framework is limited to the code-book generation phase, which takes place during initialization. Furthermore, once a data unit is inserted into the framework, its location and accessibility can no longer change. No associations exist between data units, and the granularity of data units does not change. In contrast, BINGO represents a low-level memory organization in which data granularity and the associations between data and search patterns evolve dynamically (see Table 1).

2.1.3 Intelligent caching and prefetching

Some prior works have focused on intelligent cache replacement policies and data prefetching [15, 16] in processor-based systems. Zang et al. propose an LSTM-based cache data block replacement approach to increase the overall hit rate [15]. Additional modules (a training trigger, a training module, and a prediction module) are incorporated into the standard setup, and the overall caching system performance is evaluated using an OpenAI-Gym-based simulation environment [17]. Because training an LSTM network can be computationally expensive, an asynchronous training approach is adopted using the training trigger module. This approach works best for a static database and does not scale well when old data are removed and new data are added, because the input length of the LSTM model and the representation of an individual input bit cannot be changed after deployment. Hashemi et al. proposed a memory data block prefetching approach using an LSTM-based model [16]. This offline learning approach was able to outperform table-based prefetching techniques. However, the efficiency of the static machine learning model is significantly reduced when the distribution of memory accesses and cache misses changes. It is also unclear whether such a bulky recurrent neural network model (such as an LSTM) can achieve acceptable performance when realized in hardware.

In comparison with [15, 16], BINGO organizes the whole memory as a graph and, based on the memory access pattern, dynamically (1) modifies the graph structure and weights to increase accessibility and (2) adjusts the detail/granularity of each data unit/neuron stored in the memory. The proposed lightweight learning process, although inspired by online reinforcement learning, does not use traditional machine learning algorithms and is designed for easy hardware implementation. Additionally, BINGO imposes no limitations on old data removal or new data storage. Hence, it deviates significantly from these intelligent memory frameworks (see Table 1).

2.1.4 Other approaches for intelligent data storage

Another software-level memory organization, proposed by Niederee et al., outlines the benefit of forgetfulness in a digital memory [18]. However, due to the lack of quantitative analysis and implementation details, it is unclear how effective this framework might be. Human brain-inspired spatio-temporal hierarchical models such as Hierarchical Temporal Memory (HTM) have been proposed for pattern recognition and time-series analysis [19]. However, HTM is not designed to be used as a data storage system that can replace a traditional CAM. Hence, it differs from BINGO in terms of both functionality and methodology. Additionally, efforts have been made towards developing AI-guided compression techniques and data retrieval algorithms [20,21,22], but to the best of our knowledge, no intelligent memory framework offers the same level of dynamism and plasticity as BINGO.

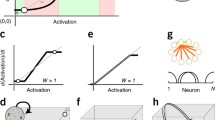

2.2 Motivation: taking inspiration from human memory

Computer and human memory are both designed to perform data storage, retention and retrieval. Although the functioning of human memory is far from completely formalized and understood, it clearly handles data in a vastly different way. Several properties of the human brain have been identified that allow it to be far superior to traditional computer memory in certain aspects. In the following subsections, we look into some of the most interesting properties of the human brain and envision their potential digital counterparts.

2.2.1 Virtually infinite capacity

The capacity of the human brain is difficult to estimate. John von Neumann, in his book “The computer and the brain” [23], estimated that the human brain has a capacity of \(10^{20}\) bits. Researchers now believe that even our working memory (short-term memory) can be increased through “plasticity” under certain circumstances. According to Lövdén et al., “... increase in working-memory capacity constitutes a manifestation of plasticity ...” [24]. Moreover, through the intelligent pruning of unnecessary information, a human brain is able to retain only the key aspects of huge chunks of data for a long period of time.

If a digital memory can be designed with this human brain feature, then the computer system, through intelligent dynamic memory re-organization (learning-guided plasticity) and via pruning of unnecessary data features (learned from statistical feedback), can attain a state of virtually infinite capacity. For example, in a wildlife image-based surveillance system that is geared towards detecting wolves, the irrelevant data (non-wolf frames) can be subject to feature-loss to save space without hampering the effectiveness of the application.

2.2.2 Imprecise/imperfect storage and access

The idea of pruning unnecessary data, as mentioned in the previous section, is possible because the human brain, contrary to traditional digital memory, operates in an imprecise domain [25]. Certain tasks may not require precise memory storage/recall, and only specific high-level features of the data may be sufficient.

Hence, supporting the imprecise memory paradigm in a digital memory is crucial for attaining virtually infinite capacity and faster data access. For example, a wildlife image-based surveillance system can operate in the imprecise domain because some degree of compression/feature-reduction of images will not completely destroy the high-level features necessary for its automatic detection tasks. This can lead to higher storage and transmission efficiency.

2.2.3 Dynamic organization

We have mentioned that plasticity can lead to increased memory capacity, but it also provides several other benefits in the human brain. According to Lindenberger et al., “Plasticity can be defined as the brain’s capacity to respond to experienced demands with structural changes that alter the behavioural repertoire.” [26]. It has also been hypothesized that the human brain post-processes and re-organizes old memories during downtime (i.e. sleeping) [27]. Hence, we believe plasticity leads to better accessibility of important and task-relevant data in the human brain, and the ease of access of particular memory units is adjusted over time as per the individual’s requirements.

If we can design a digital memory that can re-organize itself based on data access patterns and statistical feedback, then there will be great benefits in terms of reducing the overall memory access effort. For example, a wildlife image-based surveillance system designed to detect wolves will have to deal with retrieval requests mostly related to frames containing wolves. Dynamically adjusting the memory organization can enable faster access to data that are requested more frequently.

2.2.4 Learning guided memory framework

Ultimately, the human brain can boast of so many desirable qualities due to its ability to learn and adapt. It is safe to say the storage policies of the human brain also vary from person to person and time to time [25]. Depending on the need and requirement, certain data are prioritized over others. The process of organizing the memories, feature reduction, storage and retrieval procedure changes over time based on statistical feedback. This makes each human brain unique and tuned to excel at a particular task at a particular time.

Hence, the first step towards mimicking the properties of the human brain is to incorporate a learning component in the digital memory system. We envision that using this learning component, the digital memory will re-organize itself over time and alter the granularity of the data to become increasingly efficient (in terms of storage, retention and retrieval) at a particular task. For example, a wildlife image-based surveillance system will greatly benefit from a memory framework that can learn to continuously re-organize itself to enable faster access to application-relevant data and continuously control the granularity of the stored data depending on the evolving usage scenario.

3 BINGO organization and operations

To incorporate dynamism and embody the desirable qualities of a human brain in a digital memory, we have designed BINGO. It is an intelligent, self-organizing, virtually infinite content addressable memory framework capable of dynamically modulating data granularity. We propose a novel memory architecture, geared for learning, along with algorithms for implementing standard operations, such as store, retrieve and retention. A preliminary analysis of such a memory is presented in our archived paper [28].

3.1 Memory organization

The BINGO memory organization can be visualized as a network, which we refer to as the “Neural Memory Network” (NoK). As shown in Fig. 3, the NoK consists of multiple hives, each of which stores data of a specific modality (data type). For example, if an application needs to store image and audio data, the BINGO framework instantiates two separate memory hives, one for each data modality. This allows the search to be directed based on the query data type.

The fundamental units of the NoK are (1) data neurons and (2) cue neurons. A data neuron stores an actual data unit and a cue neuron stores a cue (data search pattern or tag). Each data neuron is associated with a ‘memory strength’ which governs its size and the quality of the data inside it. A cue is a vector, of variable dimension, representing a certain concept. Assume that a BINGO memory framework is configured to support n different types of cues, in terms of their dimensions. Then, the cues with the biggest dimension/size among all the cues are referred to as level-1 cues and the cues with the ith biggest dimension are referred to as level-i cues. So it follows that the cues with the smallest dimension are referred to as level-n cues. The level-n cues are used as entry points into the NoK while (i) searching for a specific data neuron and (ii) finding a suitable place to insert a new data neuron. For example, in a wildlife surveillance system, the level-n cue neurons may contain vectors corresponding to high-level concepts such as “Wolf”, “Deer”, etc. The level-1 cue neurons can contain more detailed image features of the stored data. The data neurons will be image frames containing wolves, deer, jungle background, etc.
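The organization described above can be sketched as a few Python data classes. This is a hypothetical in-memory representation for illustration only: the names `CueNeuron`, `DataNeuron`, `Hive` and `cue_level`, and the dictionary-based association store, are our assumptions rather than part of BINGO's specification. It keeps one hive per modality, assigns cue levels by decreasing cue dimension, and returns the level-n cues as entry points.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Tuple


def cue_level(dim: int, supported_dims: Sequence[int]) -> int:
    """Level-1 cues have the largest dimension; level-i cues have the i-th largest."""
    ordered = sorted(set(supported_dims), reverse=True)
    return ordered.index(dim) + 1


@dataclass
class CueNeuron:
    vector: Tuple[float, ...]  # the cue (search pattern / tag)
    level: int


@dataclass
class DataNeuron:
    payload: bytes             # the stored data unit
    strength: float            # memory strength: governs size and quality


@dataclass
class Hive:
    modality: str              # one hive per data type, e.g. "image" or "audio"
    num_levels: int            # n, the number of supported cue levels
    cues: Dict[int, CueNeuron] = field(default_factory=dict)
    data: Dict[int, DataNeuron] = field(default_factory=dict)
    weights: Dict[Tuple[int, int], float] = field(default_factory=dict)

    def associate(self, a: int, b: int, w: float) -> None:
        # Associations are treated as undirected, so both orientations are stored.
        self.weights[(a, b)] = w
        self.weights[(b, a)] = w

    def entry_points(self) -> List[int]:
        """Level-n cues (smallest dimension) are the entry points for search and insertion."""
        return [i for i, c in self.cues.items() if c.level == self.num_levels]


dims = [512, 64, 8]                                       # hypothetical cue dimensions
nok = {"image": Hive("image", num_levels=len(dims))}      # one hive per modality
hive = nok["image"]
hive.cues[0] = CueNeuron((1.0,) * 8, cue_level(8, dims))    # e.g. the concept "Wolf"
hive.cues[1] = CueNeuron((0.5,) * 64, cue_level(64, dims))  # a more detailed feature cue
hive.data[2] = DataNeuron(b"<jpeg bytes>", strength=1.0)    # an image frame
hive.associate(0, 1, 0.1)
hive.associate(1, 2, 0.1)
print(hive.entry_points())  # [0]
```

Storing associations in a dictionary keeps the sketch short; the learning rules in Sect. 3.3 are phrased over an adjacency matrix instead, which is equivalent for a fixed neuron numbering.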

The associations in the NoK (<cue neuron, cue neuron>, <cue neuron, data neuron> and <data neuron, data neuron>) change with time, based on the memory access pattern and hyperparameters. The data neuron memory strengths are also modulated during memory operations to increase storage efficiency. To introduce the effect of ageing, all association weights and data neuron strengths decay based on a user-defined periodicity. Ageing is carried out during the retention procedure.

Additionally, to facilitate multi-modal data search, connections between data neurons across memory hives are allowed. For example, when searched with the cue “deer” (the visual feature of a deer), if the system is expected to fetch both images and sound data related to the concept of “deer”, then this above-mentioned flexibility will save search effort.

3.2 BINGO parameters

We propose several parameters for BINGO that can modulate its behaviour. These parameters are of two types: (1) Learnable Parameters that change throughout the system lifetime guided by online reinforcement-learning and ageing; (2) Hyperparameters that are set during system initialization and changed infrequently.

3.2.1 Learnable parameters

We consider the following parameters as learnable parameters for BINGO:

1. Data neuron and cue neuron weighted graph: The weighted graph (NoK) directly impacts the data search efficiency (time and energy). Hence, the elements of the graph adjacency matrix are considered learnable parameters.

2. Memory strength vector: The quality and size of a data neuron depend on its memory strength. Hence, the memory strengths of all the data neurons are also considered learnable parameters. Together, they dictate the space utilization, transmission efficiency and retrieved data quality.

The number of learnable parameters can also change after each operation. These parameters constantly evolve via an online reinforcement learning process and an ageing process as described in Sects. 3.3 and 3.4, respectively.

3.2.2 Hyperparameters

We have defined a set of hyperparameters that influence the memory organization and operations of a BINGO framework. For each memory hive, we propose the following hyperparameters:

1. Memory strength modulation factor (\(\delta _1\)): Used to determine the step size for increasing data neuron memory strength inside the NoK in response to a specific memory access pattern. This hyperparameter is used during the store (Algorithm 1) and retrieve (Algorithm 5) operations.

2. Memory decay rate (\(\delta _2\)): Controls the rate at which data neuron memory strength and features are lost due to ageing. This hyperparameter is used during the retention operation (Algorithm 7).

3. Maximum memory strength (\(\delta _3\)): The maximum value the memory strength of a data neuron can attain. This hyperparameter is used during the store (Algorithm 1) and retrieve (Algorithm 5) operations.

4. Association strengthening step-size (\(\eta _1\)): Step size for increasing association weights inside the NoK in response to a specific access pattern. This hyperparameter is used during the store (Algorithm 1) and retrieve (Algorithm 5) operations.

5. Association weight decay rate (\(\eta _2\)): Step size for decreasing association weights inside the NoK due to ageing. This hyperparameter is used during the retention operation (Algorithm 7).

6. Association pull-up hastiness (\(\eta _3\)): Determines how quickly the accessibility of a given neuron is increased in response to a specific access pattern. This hyperparameter is used during the store (Algorithm 1) and retrieve (Algorithm 5) operations.

7. Cue neuron matching metric (\(\Lambda\)): Similarity thresholds for cues, given as a list with one threshold per cue level: \(\Lambda = \{ \lambda _1, \lambda _2, \ldots , \lambda _n\}\) for a system with n different cue levels. This hyperparameter is used during the store (Algorithm 1) and retrieve (Algorithm 5) operations.

8. Degree of allowed impreciseness (\(\varphi\)): Limits the amount of data features that can be lost due to memory strength decay during ageing; \(\varphi = 0\) implies that data can be completely removed if the need arises. This hyperparameter is used during the retention operation (Algorithm 7).

9. Initial association weight (\(\varepsilon _1\)): Determines the association weight of a newly formed association. This hyperparameter is used during the store (Algorithm 1) and retrieve (Algorithm 5) operations.

10. Minimum association weight (\(\varepsilon _2\)): Limits the decay of association weights beyond a certain point. Setting \(\varepsilon _2 = 0\) allows associations to be deleted. This hyperparameter is used during the retention operation (Algorithm 7).

11. Store effort limit (\(\pi _1\)): Limits the operation effort during the store operation (Algorithm 1).

12. Retrieve effort limit (\(\pi _2\)): Limits the search effort during the retrieve operation (Algorithm 5); a value of \(-1\) indicates no limit.

13. Locality crossover (\(\omega\)): A flag that enables localizing similar data neurons. This hyperparameter is used during the store (Algorithm 1) and retrieve (Algorithm 5) operations.

14. Frequency of retention procedure (\(\xi\)): The retention procedure of BINGO brings in the effect of ageing. This hyperparameter is a positive integer denoting the number of normal operations performed before the retention operation is called once. A lower value increases dynamism.

15. Compression techniques: For each memory hive, a data compression algorithm must be specified. For example, JPEG compression [29] can be used for an image hive.
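One way to bundle the per-hive hyperparameters listed above is a configuration record. The sketch below is hypothetical: the field names and every default value are placeholders chosen for illustration, not values from this paper. The checks encode only constraints stated in the text: one threshold in \(\Lambda\) per cue level, \(\varphi \le \delta_3\) (data neuron strengths lie in \([\varphi, \delta_3]\)), \(\pi_2 = -1\) meaning no limit, and \(\xi\) being a positive integer that triggers retention once every \(\xi\) normal operations.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HiveHyperparameters:
    # All default values are illustrative placeholders, not recommended settings.
    delta1: float = 0.1        # memory strength modulation factor
    delta2: float = 0.05       # memory decay rate
    delta3: float = 1.0        # maximum memory strength
    eta1: float = 0.1          # association strengthening step-size
    eta2: float = 0.01         # association weight decay rate
    eta3: int = 1              # association pull-up hastiness
    lambdas: List[float] = field(default_factory=lambda: [0.9, 0.8, 0.7])  # Lambda
    phi: float = 0.1           # degree of allowed impreciseness
    eps1: float = 0.1          # initial association weight
    eps2: float = 0.0          # minimum association weight (0 allows deletion)
    pi1: int = 100             # store effort limit
    pi2: int = -1              # retrieve effort limit (-1 means no limit)
    omega: bool = False        # locality crossover flag
    xi: int = 50               # normal operations per retention call
    compression: str = "jpeg"  # compression technique for this hive

    def validate(self, num_cue_levels: int) -> None:
        if len(self.lambdas) != num_cue_levels:
            raise ValueError("Lambda needs one threshold per cue level")
        if not 0.0 <= self.phi <= self.delta3:
            raise ValueError("phi must lie in [0, delta3]")
        if self.pi2 < -1:
            raise ValueError("pi2 must be -1 (no limit) or non-negative")
        if not isinstance(self.xi, int) or self.xi < 1:
            raise ValueError("xi must be a positive integer")

    def retention_due(self, op_count: int) -> bool:
        """True when retention should run after the op_count-th normal operation."""
        return op_count > 0 and op_count % self.xi == 0


hp = HiveHyperparameters()
hp.validate(num_cue_levels=3)
print([n for n in range(1, 151) if hp.retention_due(n)])  # [50, 100, 150]
```

Keeping one record per hive mirrors the text's statement that these hyperparameters are defined for each memory hive, so an image hive and an audio hive can be tuned independently.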

3.3 Learning process

The learnable parameters, governing the behaviour of BINGO’s NoK, are updated based on the feedback from different memory operations. The proposed learning process draws inspiration from online reinforcement learning although it has a very different goal and execution strategy [30]. The goals/objectives of the learning in BINGO are to:

1. Increase memory search speed by learning the right NoK organization based on the memory access pattern.

2. Reduce space requirements while maintaining data retrieval quality and application performance. This should be achieved by learning the granularity (details) at which each data neuron should be stored, given the data access pattern and system requirements.

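The learnable parameters \(\Theta\) have two components: (1) A, the adjacency matrix of the entire NoK graph; and (2) M, a vector in which each element is the corresponding neuron’s memory strength. For a NoK with n neurons (data neurons and cue neurons):

\[ \Theta = (A, M), \quad A \in \mathbb{R}^{n \times n}, \quad M = [s_1, s_2, \ldots , s_n] \]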

For every \(s_i \in M\), \(s_i \in [\varphi , \delta _3]\) if neuron i is a data neuron, and \(s_i = 0\) if neuron i is a cue neuron. Here \(\varphi\) and \(\delta _3\) are the hyperparameters defined earlier.
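As a minimal sketch, assuming plain Python lists for \(A\) and \(M\) and a hypothetical boolean list `is_data` marking which neurons are data neurons, the constraints on \(\Theta\) can be checked as follows. The function name `is_valid_theta` is ours, and the symmetry check is an assumption based on the update rules of Sect. 3.3.1, which always modify \(A[i][j]\) and \(A[j][i]\) together.

```python
from typing import List


def is_valid_theta(A: List[List[float]], M: List[float], is_data: List[bool],
                   phi: float, delta3: float) -> bool:
    """Check Theta = (A, M) for a NoK with n neurons (hypothetical helper)."""
    n = len(M)
    if len(is_data) != n or len(A) != n or any(len(row) != n for row in A):
        return False  # A must be n x n and M must have n entries
    # Assumed: associations are undirected, so A is symmetric.
    if any(A[i][j] != A[j][i] for i in range(n) for j in range(i + 1, n)):
        return False
    for s, data_neuron in zip(M, is_data):
        if data_neuron and not (phi <= s <= delta3):
            return False  # data neuron strength must lie in [phi, delta3]
        if not data_neuron and s != 0.0:
            return False  # cue neurons carry no memory strength
    return True


# Neurons 0 and 1 are cue neurons; neuron 2 is a data neuron.
A = [[0.0, 0.1, 0.0],
     [0.1, 0.0, 0.1],
     [0.0, 0.1, 0.0]]
M = [0.0, 0.0, 0.8]
print(is_valid_theta(A, M, [False, False, True], phi=0.1, delta3=1.0))  # True
```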

In Fig. 4, we present the flowcharts of all BINGO operations. The learning process is embedded in the store and retrieve operations. The first step of both operations is to search the NoK for a suitable cue neuron that will serve as a reference point for either (i) inserting new data or (ii) retrieving a data neuron. This search is guided by the associated/search cues C and uses our proposed limited and weighted breadth-first search algorithm (Algorithm 2), which is explained in Sect. 3.5.1. Let the outcome of this search sub-operation be a traversal order \(Y^t = \{ N_1^t, N_2^t, N_3^t, \ldots , N_f^t \}\), where each \(N_i^t \in Y^t\) is the index of a neuron in the NoK that was visited during the search. The neuron \(N_f^t\) is the neuron accessed at the end of the search (a.k.a. the reference point). Let \(Y^p = \{ N_1^p, N_2^p, N_3^p, \ldots , N_k^p \}\) be the path from \(N_1^t\) to \(N_f^t\) in \(Y^t\), where \(N_1^t = N_1^p\) and \(N_f^t = N_k^p\). Together, \(Y^t\) and \(Y^p\) constitute the feedback on the basis of which the NoK learnable parameters are adjusted. In the best case, \(length(Y^t)\) attains its minimum value of 2. Hence, based on the search outcome \(Y^t\), \(\Theta\) is modified to maximize \(P(length(Y^t) = 2 \mid C, \Theta )\), as described in Sect. 3.3.1. During a store operation, new neurons may also be added to the NoK, which adds more parameters, as explained in Sect. 3.3.2.
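The relationship between the traversal order \(Y^t\) and the path \(Y^p\) can be illustrated with a plain breadth-first search that records parent pointers. This is a deliberate simplification, not BINGO's Algorithm 2: it ignores association weights, cue-similarity thresholds and effort limits, and it assumes the index of the reference neuron (`target`) is already known. The function name `bfs_feedback` is hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple


def bfs_feedback(adj: Dict[int, List[int]], start: int,
                 target: int) -> Optional[Tuple[List[int], List[int]]]:
    """Return (Y_t, Y_p) for an unweighted, unlimited BFS, or None if target is unreachable."""
    parent: Dict[int, Optional[int]] = {start: None}
    order: List[int] = []          # Y^t: neurons in the order they were visited
    queue = deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        if node == target:         # reference point N_f^t reached
            path: List[int] = []   # Y^p: walk parent pointers back to the start
            cur: Optional[int] = node
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            return order, path[::-1]
        for nb in adj.get(node, []):
            if nb not in parent:
                parent[nb] = node
                queue.append(nb)
    return None                    # failed search: no feedback, so no learning


adj = {0: [1, 2], 1: [0, 3], 2: [0], 3: [1]}
print(bfs_feedback(adj, 0, 3))  # ([0, 1, 2, 3], [0, 1, 3])
print(bfs_feedback(adj, 0, 1))  # ([0, 1], [0, 1]): the best case, length 2
```

The first example visits neuron 2 even though it is not on the path, which is exactly the wasted effort the learning step tries to remove by making the reference neuron reachable in fewer hops.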

3.3.1 Learning from the operation feedback

The goal here is to compute the updated parameters \(\Theta ' = (A', M')\) based on \(Y^t\) and \(Y^p\). If \(length(Y^p) > 2\), \(\Delta A\) is a zero matrix of dimension (n, n) with the following exceptions: (i) \(\Delta A [N_{k-\tau }^p][N_k^p] = \varepsilon _1\) and (ii) \(\Delta A [N_k^p] [N_{k-\tau }^p] = \varepsilon _1\), where \(\tau = min(k-1,\eta _3 + 1)\). If \(length(Y^p) = 2\), \(\Delta A\) is a zero matrix with the following exceptions: (i) \(\Delta A [N_1^p][N_2^p] = \eta _1\) and (ii) \(\Delta A [N_2^p] [N_1^p] = \eta _1\). With \(\Delta A\) defined, we compute the updated adjacency matrix as \(A' = A + \Delta A\). Here \(\varepsilon _1\), \(\eta _3\), and \(\eta _1\) are hyperparameters defined earlier.
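As an illustration only, the following Python sketch builds \(\Delta A\) for a given access path \(Y^p\) and applies it. It assumes 0-based neuron indices and a dense list-of-lists adjacency matrix; the function names `feedback_delta_A` and `apply_delta` are hypothetical and this is not the authors' implementation.

```python
def feedback_delta_A(n, path, eps1, eta1, eta3):
    """Symmetric n x n update Delta A for an access path Y^p (0-based indices)."""
    delta = [[0.0] * n for _ in range(n)]
    k = len(path)
    if k > 2:
        # Pull up: link the target N_k^p to the ancestor at 1-based position k - tau.
        tau = min(k - 1, eta3 + 1)
        a, b = path[k - tau - 1], path[k - 1]
        delta[a][b] = delta[b][a] = eps1
    elif k == 2:
        # Strengthen: the target is already adjacent to the entry point.
        a, b = path
        delta[a][b] = delta[b][a] = eta1
    return delta


def apply_delta(A, delta):
    """Return A' = A + Delta A without mutating A."""
    return [[x + d for x, d in zip(row_a, row_d)] for row_a, row_d in zip(A, delta)]
```

For the five-neuron path `[0, 1, 2, 3, 4]` with \(\eta _3 = 1\), \(\tau = 2\), so the sketch links neuron 4 directly to neuron 2 and the next search path shrinks from five neurons to four.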

Next, we compute \(\Delta M\) based on \(Y^p\). Let \(D = \{ d_1, d_2, d_3, \ldots , d_l\}\) be the indices of the data neurons associated with the cue neuron \(N_k^p\). Then, \(\Delta M\) is a zero vector of dimension n with the following exceptions: \(\forall d_i \in D, \Delta M[d_i] = min(f^1(\delta _1), \delta _3 - M[d_i])\). Here \(\delta _1\) is the memory strength modulation factor (hyperparameter) and \(\delta _3\) is the maximum memory strength allowed (hyperparameter). The function \(f^1\) is application dependent; for example, \(f^1(x) = x\). With \(\Delta M\) defined, we compute \(M' = M + \Delta M\). Note that no parameter change is made in the case of a failed retrieval attempt.
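A minimal sketch of this reinforcement step, assuming a plain Python list for M and the identity \(f^1(x) = x\) as the default; the name `reinforce_strength` is hypothetical. It shows how the `min` caps every reinforced strength at \(\delta _3\).

```python
def reinforce_strength(M, data_neurons, delta1, delta3, f1=lambda x: x):
    """Return M' = M + Delta M for the data neurons attached to the accessed cue."""
    M_new = list(M)  # leave the caller's M untouched
    for d in data_neurons:
        # Increase by f1(delta1), but never beyond the maximum strength delta3.
        M_new[d] += min(f1(delta1), delta3 - M[d])
    return M_new
```

With `M = [0.0, 0.5, 0.875]`, \(\delta _1 = 0.25\) and \(\delta _3 = 1\), reinforcing neurons 1 and 2 yields `[0.0, 0.75, 1.0]`: the second neuron receives only the 0.125 that remains below the cap.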

3.3.2 Parameter expansion due to neuron addition

As a continuation of Sect. 3.3.1, in the case of a store operation, if new cue neurons and data neurons are added to the NoK, then the previously computed \(\Theta '\) is expanded to \(\Theta '' = (A'', M'')\) to accommodate the parameters of the new neurons. Let \(N' = \{ N_1', N_2', \ldots , N_o' \}\) be the indices of the new neurons added to the NoK. Then, we compute \(\Theta ''\) as follows:

1. Additional o rows and columns are added to \(A'\) to generate \(A''\). Each of the new entries (learnable parameters) in the matrix is zero, with the following exceptions: \(\forall N_i' \in N'\), if \(N_i'\) is to be associated with neurons \(AN^i = \{ AN_1^i, AN_2^i, \ldots , AN_q^i \}\), then \(\forall AN_j^i \in AN^i\), \(A''[N_i'][AN_j^i] = \varepsilon _1\) and \(A''[AN_j^i][N_i'] = \varepsilon _1\). Here \(\varepsilon _1\) is a hyperparameter defined earlier.

2. The dimension of vector \(M'\) is expanded by o to form \(M''\). For all \(i \in [n+1, n+o]\), \(M''[i] = \delta _3\) if i corresponds to a data neuron and \(M''[i] = 0\) if i corresponds to a cue neuron. Here \(\delta _3\) is a hyperparameter defined earlier.
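The two expansion steps can be sketched in Python as follows. This is a hedged illustration, not the authors' code: it assumes 0-based indices (so the new neurons occupy positions n to n+o-1 rather than \([n+1, n+o]\)), list-of-lists storage, and a hypothetical `links` dictionary mapping each new neuron to the neurons it is associated with.

```python
def expand_parameters(A, M, new_is_data, links, eps1, delta3):
    """Grow Theta' = (A', M') into Theta'' = (A'', M'') for o new neurons.

    new_is_data: one boolean per new neuron (True = data neuron).
    links: {new neuron index: [indices of neurons it is associated with]}.
    """
    n, o = len(M), len(new_is_data)
    # Step 1: pad every existing row with o zeros, then append o zero rows.
    A2 = [row + [0.0] * o for row in A] + [[0.0] * (n + o) for _ in range(o)]
    for i, neighbours in links.items():
        for j in neighbours:
            A2[i][j] = A2[j][i] = eps1  # new associations start at eps1
    # Step 2: new data neurons start at full strength delta3; cue neurons at 0.
    M2 = list(M) + [delta3 if is_data else 0.0 for is_data in new_is_data]
    return A2, M2
```

For example, adding a cue neuron (index 2) linked to neuron 0 and a data neuron (index 3) linked to that new cue neuron turns a 2 x 2 matrix into a 4 x 4 one and appends `[0.0, delta3]` to M.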

3.4 Ageing process

During the retention operation (Fig. 4), we introduce the effect of ageing by reducing the memory strength of all data neurons and decreasing the weight of all associations. As a data neuron's strength decreases, BINGO starts compressing the data gradually. This compression leads to a loss of data granularity (details), but it also frees up space for more relevant data. The result is a tug-of-war between ageing and the positive reinforcement from the store and retrieve operations. The ageing method is explained in the following subsections.

3.4.1 Parameter adjustment due to retention

Assume that, before retention, the BINGO parameters were \(\Theta = (A, M)\). First, we compute \(\Delta A\) of dimension (n, n) such that \(\Delta A[i][j] = min(\eta _2, A[i][j] -\varepsilon _2)\) for every existing association, i.e. for \(i,j \in [1,n]\) with \(A[i][j] > 0\) (all other entries of \(\Delta A\) are zero). Then, we compute \(\Delta M\) of dimension n such that \(\Delta M[i]=min(f^2(\delta _2), M[i] -\varphi )\) for every data neuron i (entries for cue neurons are zero, since their strength is fixed at 0). The function \(f^2\) can be \(f^2(x) = x\) or something more complex, depending on the application requirements. The updated parameters, \(\Theta ' = (A', M')\), are computed as follows: (i) \(A' = A - \Delta A\) and (ii) \(M' = M - \Delta M\). Consequently, an association weight never falls below \(\varepsilon _2\) and a data-neuron strength never falls below \(\varphi\). Here \(\varepsilon _2\), \(\varphi\), and \(\delta _2\) are hyperparameters defined earlier.
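A minimal Python sketch of one retention step, assuming list-based storage and \(f^2(x) = x\) by default; `age` is a hypothetical name. Two choices here are assumptions made so that the update stays consistent with the constraint \(s_i = 0\) for cue neurons and does not create associations from zero entries: only entries with \(A[i][j] > 0\) decay, and only data neurons lose strength.

```python
def age(A, M, is_data, eta2, eps2, delta2, phi, f2=lambda x: x):
    """One retention step: Theta = (A, M) -> Theta' = (A', M')."""
    n = len(M)
    A_new = [row[:] for row in A]
    for i in range(n):
        for j in range(n):
            if A[i][j] > 0:  # assumption: only existing associations decay
                # A' = A - min(eta2, A - eps2), i.e. max(A - eta2, eps2).
                A_new[i][j] = A[i][j] - min(eta2, A[i][j] - eps2)
    # Data neurons decay towards the floor phi; cue neurons keep strength 0.
    M_new = [M[i] - min(f2(delta2), M[i] - phi) if is_data[i] else M[i]
             for i in range(n)]
    return A_new, M_new
```

Repeated calls drive each weight down to \(\varepsilon _2\) and each data-neuron strength down to \(\varphi\) and then leave them there; with \(\varepsilon _2 = 0\), an association that reaches zero is no longer touched, which matches the freezing behaviour described in Sect. 3.4.2.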

3.4.2 Parameter freezing

As a continuation of Sect. 3.4.1, after a retention operation, some of the parameters in \(\Theta '\) may freeze if they reach a certain value. Specifically, if the entries \(A'[i][j]\) and \(A'[j][i]\) reach zero (which, under the update rule of Sect. 3.4.1, can only happen when \(\varepsilon _2 = 0\)), then the association is considered dead and remains frozen until it is revived by feedback from a future memory operation. If an entry \(M'[i]\), where i corresponds to a data neuron, reaches zero (possible only when \(\varphi = 0\)), then the data neuron is considered dead and the parameter \(M'[i]\) freezes.

3.5 Memory operation algorithms

We implement the store, retrieve, and retention operations based on the learning and ageing processes described in Sects. 3.3 and 3.4, using Algorithms 1, 2, 3, 4, 5, 6 and 7. This embodiment of BINGO supports up to two levels of cues; however, the BINGO framework can be adapted to support additional cue levels of varying dimensions with minor adjustments to the algorithms.

3.5.1 Store

In Algorithm 1, we highlight the high-level steps required for storing data in the BINGO NoK. MEM is the NoK where the data are to be stored, D is the data, and C is the set of cues associated with D. We provide the cues (C) as input to keep the interface similar to that of a traditional CAM; however, BINGO can easily be extended to perform in-memory feature/cue extraction. HP is the set of hyperparameters for MEM. Before the new data can be stored, we must ensure that there is enough space in MEM (lines 2 and 3). If there is a shortage of space, the framework uses the retention procedure (Algorithm 7, described in Sect. 3.5.3) to compress less frequently accessed data neurons. If the NoK is empty, then for the first insertion (lines 4 and 5), we generate the NoK graph with the seed organization shown in Fig. 5: a new cue neuron is generated for each cue in C, the cue neurons are connected according to their level, and a data neuron for D is generated and appended at the end. If the NoK is not empty, the system must search for a suitable location to insert the incoming data. The first step is to select a level-n cue neuron in the NoK graph as the starting location for the search (line 7). \(Loc\_C_n\) is the selected level-n cue neuron from which the search begins, and \(Flag\_C_{n}\) indicates whether or not the similarity between \(C \rightarrow level\_n\_cue\) and \(Loc\_C_{n}\) is more than \(\lambda _n\) (hyperparameter). Next, the NoK is searched starting from \(Loc\_C_{n}\) to find a suitable location for inserting D (line 8). Here \(HP \rightarrow \pi _1\) is the hyperparameter that limits the search effort. The suitable location is represented as a level-1 cue neuron around which the data are to be inserted. \(access\_Path\) is the list of neurons in the path from \(Loc\_C_{n}\) to the selected level-1 cue neuron (\(access\_Path[-1]\), i.e. the last element in the list \(access\_Path\)). \(Flag\_C_{1}\) indicates whether or not the similarity between \(C \rightarrow level\_1\_cue\) and the cue neuron \(access\_Path[-1]\) is more than \(\lambda _1\) (hyperparameter). Finally, the data are inserted (or merged with an existing data neuron) around \(access\_Path[-1]\).

The proposed search algorithm is described in Algorithm 2. MEM is the memory hive where the operation takes place, C is the set of cues provided by the user for the operation, HP is the set of hyperparameters for MEM, ep is the level-n cue neuron (\(n=2\)) selected as the starting/entry point of the search, and limit is the search effort limit for the operation. The search is a limited, weighted version of breadth-first search (BFS), where neuronQueue is the BFS queue, bestCandidate is the level-1 cue neuron deemed to be the best search candidate at a given point in time, and \(bestCandidate\_Sim\) is the similarity between bestCandidate and \(C \rightarrow level\_1\_cue\). The visited list keeps track of the neurons already visited, and the traversalMotion list records the order in which each neuron is visited along with its parent neuron. The search continues while neuronQueue is not empty (line 4) and the number of neurons traversed so far is less than limit. If the hyperparameter \(HP \rightarrow \omega = 1\), then paths passing through level-n cue neurons are ignored, which restricts the search to a specific locality (sub-graph) of the NoK graph (line 9). During each step of the search, a new neuron is encountered; if it is a level-1 cue neuron, it is compared with \(C \rightarrow level\_1\_cue\). If the similarity of this comparison (sim) is greater than \(HP \rightarrow \lambda _1\) (hyperparameter), a good match has been found. In this case, the path traversed to access this level-1 cue neuron (neuron) is extracted from traversalMotion (line 15) and returned from the procedure along with a flag value of 1, indicating that a good match was found (line 16). After each neuron is visited, all of its adjacent neurons are enqueued in descending order of their association weights (lines 20-25).

If no level-1 cue neuron with \(sim > HP \rightarrow \lambda _1\) is found by the end of the search, then the best candidate level-1 cue neuron encountered so far is considered. The path traversed to access this level-1 cue neuron (bestCandidate) is extracted from traversalMotion (line 26) and returned from the procedure along with a flag value of 0, indicating that a good match was not found (line 27).
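The limited, weighted BFS described above can be sketched in Python as follows. This is a hedged illustration under several assumptions that are not taken from Algorithm 2 itself: the NoK is a dictionary of weighted neighbour dictionaries, level 2 plays the role of level-n, cosine similarity stands in for the (unspecified here) cue similarity, dead-neuron bypassing is omitted, and the names `search` and `cosine` are hypothetical.

```python
import math
from collections import deque


def cosine(u, v):
    """Cosine similarity; a stand-in for whatever cue similarity BINGO uses."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def search(adj, level, cue_vec, query_l1, ep, limit, lam1, omega=1):
    """Limited, weighted BFS from the entry point ep.

    adj:     {neuron: {neighbour: association weight}}
    level:   {neuron: 1, 2 or "data"}   (2 plays the role of level-n)
    cue_vec: {level-1 cue neuron: feature vector}
    Returns (access_path, flag); flag = 1 means a cue with sim > lam1 was found.
    """
    queue = deque([ep])
    visited = {ep}
    parent = {ep: None}  # plays the role of traversalMotion
    best, best_sim = None, -math.inf
    traversed = 0

    def path_to(node):
        path = []
        while node is not None:
            path.append(node)
            node = parent[node]
        return path[::-1]

    while queue and traversed < limit:
        neuron = queue.popleft()
        traversed += 1
        if level[neuron] == 1:
            sim = cosine(cue_vec[neuron], query_l1)
            if sim > lam1:
                return path_to(neuron), 1  # good match
            if sim > best_sim:
                best, best_sim = neuron, sim
        # Enqueue unvisited neighbours, strongest association first.
        for nb, _ in sorted(adj[neuron].items(), key=lambda kv: kv[1], reverse=True):
            if nb in visited:
                continue
            if omega == 1 and level[nb] == 2:
                continue  # stay inside the current locality
            visited.add(nb)
            parent[nb] = neuron
            queue.append(nb)

    # No good match: fall back to the best candidate seen so far (if any).
    return (path_to(best) if best is not None else [ep]), 0
```

On a small graph where the matching level-1 cue sits two hops from the entry point, a generous `limit` returns that cue's path with flag 1, while a `limit` that stops the search early returns the path to the most similar cue visited so far with flag 0. Marking neurons as visited when they are enqueued (rather than when they are dequeued) is the usual BFS choice and keeps each neuron in the queue at most once.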

Looking back at Algorithm 1, in line 9, the \(LEARN\_STORE\) procedure (Algorithm 3) is invoked. Its inputs include \(access\_Path\) and \(Flag\_C_1\) from the SEARCH procedure (Algorithm 2) and \(Flag\_C_n\) from the \(find\_Entry\_Point\) procedure. Inside the \(LEARN\_STORE\) procedure (Algorithm 3), the NoK is modified based on the results of the insertion-location search. Four different scenarios may arise, depending on the values of \(Flag\_C_n\) and \(Flag\_C_1\).

1. If \(Flag\_C_n = 1\) and \(Flag\_C_1 = 1,\) then it can be inferred that the level-n neuron used as the graph search entry point has a good match with \(C \rightarrow level\_n\_cue\) and the level-1 cue neuron selected (\(access\_Path[-1]\)) at the end of the SEARCH procedure also has a good match with \(C \rightarrow level\_1\_cue\). This indicates that D, or very similar data, already exists in the NoK and is connected to \(access\_Path[-1]\). In this scenario, we simply strengthen the data neuron (based on \(HP \rightarrow \delta _1\) and \(HP \rightarrow \delta _3\)) connected to \(access\_Path[-1]\) and increase the accessibility of the level-1 cue neuron \(access\_Path[-1]\) in the BINGO NoK using the procedure \(INC\_ACCESSIBILITY\) (Algorithm 4). This is a form of data neuron merging because no new data neuron is inserted; only the strength and accessibility of an existing data neuron are enhanced.

2. If \(Flag\_C_n = 0\) and \(Flag\_C_1 = 1,\) then it can be inferred that the level-n neuron used as the graph search entry point does not have a good match with \(C \rightarrow level\_n\_cue\), but the level-1 cue neuron selected (\(access\_Path[-1]\)) at the end of the SEARCH procedure has a good match with \(C \rightarrow level\_1\_cue\). This indicates that D, or very similar data, already exists in the NoK and is connected to \(access\_Path[-1]\), but no cue neuron for \(C \rightarrow level\_n\_cue\) exists in the NoK. In this scenario, we strengthen the data neuron (based on \(HP \rightarrow \delta _1\) and \(HP \rightarrow \delta _3\)) connected to \(access\_Path[-1]\), make a new level-n cue neuron (newCN) for \(C \rightarrow level\_n\_cue\), connect newCN with \(access\_Path[-1]\), and increase the accessibility of the level-1 cue neuron \(access\_Path[-1]\) in the NoK using the procedure \(INC\_ACCESSIBILITY\) (Algorithm 4). This is also a form of data neuron merging.

3. If \(Flag\_C_n = 1\) and \(Flag\_C_1 = 0,\) then it can be inferred that the level-n neuron used as the graph search entry point has a good match with \(C \rightarrow level\_n\_cue\), but the level-1 cue neuron selected (\(access\_Path[-1]\)) at the end of the SEARCH procedure does not have a good match with \(C \rightarrow level\_1\_cue\). In this scenario, we make a new level-1 cue neuron (newCN) for \(C \rightarrow level\_1\_cue\) and a new data neuron (newDN) for D, connect newDN with newCN, connect newCN with \(access\_Path[-1]\), and increase the accessibility of the level-1 cue neuron \(access\_Path[-1]\) in the BINGO NoK using the procedure \(INC\_ACCESSIBILITY\) (Algorithm 4). All new connections are initialized with association strength \(HP \rightarrow \varepsilon _1\).

4. If \(Flag\_C_n = 0\) and \(Flag\_C_1 = 0,\) then it can be inferred that the level-n neuron used as the graph search entry point does not have a good match with \(C \rightarrow level\_n\_cue\), and the level-1 cue neuron selected (\(access\_Path[-1]\)) at the end of the SEARCH procedure also does not have a good match with \(C \rightarrow level\_1\_cue\). In this scenario, we make a new level-1 cue neuron (\(newCN\_l_1\)) for \(C \rightarrow level\_1\_cue\), a new level-n cue neuron (\(newCN\_l_n\)) for \(C \rightarrow level\_n\_cue\), and a new data neuron (newDN) for D; connect \(newCN\_l_n\) with \(newCN\_l_1\), \(newCN\_l_1\) with newDN, and \(newCN\_l_n\) with \(access\_Path[-1]\); and increase the accessibility of the level-1 cue neuron \(access\_Path[-1]\) in the BINGO NoK using the procedure \(INC\_ACCESSIBILITY\) (Algorithm 4). All new connections are initialized with association strength \(HP \rightarrow \varepsilon _1\).

The \(INC\_ACCESSIBILITY\) procedure (Algorithm 4) increases the accessibility of the neuron at the end of the access_Path (\(access\_Path[-1]\)). Based on the current location of \(access\_Path[-1]\) we select and use one of the two possible rules for increasing the accessibility of \(access\_Path[-1]\).

1. Pull up: If \(len(access\_Path) > 2\), then we connect the level-1 cue neuron \(access\_Path[-1]\) with an ancestor neuron in \(access\_Path\), chosen according to \(len(access\_Path)\) and \(HP \rightarrow \eta _3\). This allows future searches to bypass one or more neurons in \(access\_Path\) while trying to reach \(access\_Path[-1]\) from \(access\_Path[0]\).

2. Strengthen: If \(len(access\_Path) == 2\), then the level-1 cue neuron \(access\_Path[1]\) is directly connected to the level-n cue neuron \(access\_Path[0]\), which was also the starting point of the search. In this scenario, we simply increase the association weight between \(access\_Path[0]\) and \(access\_Path[1]\) (by \(HP \rightarrow \eta _1\)) to give it a higher search priority (as we are doing a weighted BFS during the SEARCH procedure defined in Algorithm 2).

3.5.2 Retrieve

In Algorithm 5, we highlight the high-level steps required for retrieving data from the BINGO NoK. MEM is the BINGO NoK from which we attempt to retrieve the data, and C is the set of search cues on the basis of which the data are to be retrieved. The cues (C) are provided as input to keep a traditional CAM-like interface; however, the feature/cue extraction could potentially be done inside the BINGO framework itself. HP is the set of hyperparameters for MEM. If the number of neurons in MEM is 0, then NULL is returned, indicating a failed retrieval. Otherwise, we (1) find an entry point in the NoK (a level-n cue neuron, \(Loc\_C_n\)) best situated as a starting point for searching for the desired data, (2) search the NoK starting from \(Loc\_C_n\) using the SEARCH procedure, and (3) based on the search results, retrieve the desired data if it exists and modify the NoK in light of this access using the function \(LEARN\_RETRIEVE\). \(Flag\_C_n\) indicates whether or not the similarity between \(C \rightarrow level\_n\_cue\) and \(Loc\_C_{n}\) is more than \(\lambda _n\) (hyperparameter). \(HP \rightarrow \pi _2\) is the hyperparameter that limits the search effort. \(Flag\_C_{1}\) indicates whether or not the similarity between \(C \rightarrow level\_1\_cue\) and the cue neuron \(access\_Path[-1]\) is more than \(\lambda _1\) (hyperparameter). If \(Flag\_C_{1}=1\), then a matching level-1 cue neuron (attached to the desired data) has been found in the NoK, and \(access\_Path\) is the list of neurons in the path from \(Loc\_C_{n}\) to this matching level-1 cue neuron (\(access\_Path[-1]\), i.e. the last element in the list \(access\_Path\)). The retrieved data D (if any) are returned at the end of the procedure (line 9).

The inputs of the \(LEARN\_RETRIEVE\) procedure (Algorithm 6) include \(access\_Path\) and \(Flag\_C_1\) from the SEARCH procedure (Algorithm 2). Inside the sub-operation \(LEARN\_RETRIEVE\), the NoK is modified based on the search results. Two different scenarios may arise, depending on the value of \(Flag\_C_1\).

1. If \(Flag\_C_1 = 1,\) then a good match between \(C \rightarrow level\_1\_cue\) and \(access\_Path[-1]\) was found at the end of the SEARCH procedure. This indicates that the desired data are associated with the level-1 cue neuron \(access\_Path[-1]\). Hence, we (1) enhance the memory strength of the desired data neuron DN (based on \(HP \rightarrow \delta _1\) and \(HP \rightarrow \delta _3\)), (2) increase the accessibility of the level-1 cue neuron \(access\_Path[-1]\), and (3) return DN.

2. If \(Flag\_C_1 = 0,\) then we return NULL because no matching level-1 cue neuron was located. This implies that the queried data do not exist in the memory or could not be located within the search effort limit (\(HP \rightarrow \pi _2\)).

Descriptions of the SEARCH and \(INC\_ACCESSIBILITY\) sub-operations are provided in Sect. 3.5.1.

3.5.3 Retention

In a traditional CAM, data retention involves maintaining the memory in a fixed state. BINGO, on the other hand, allows the NoK to modify itself to reflect the effect of ageing, as shown in Algorithm 7. The strength of all the associations in the NoK is decreased (by \(\eta _2\)) in line 5, and the memory strength of all the data neurons is weakened in line 9 (based on Eqn. 1). Eqn. 1 is an exponential decay function, but a linear function can be used instead. Here \(\delta _2\) and \(\varphi\) are hyperparameters introduced earlier. Weakening a data neuron leads to compression and loss of data features. If all the data neurons associated with a level-1 cue neuron die, then that cue neuron is also marked as dead and is bypassed during future searches. The hyperparameter \(HP \rightarrow \varepsilon _2\) sets the lower limit for the decay of an association's strength, strength(a).

3.6 Properties of the NoK and the learning process

The dynamic memory organization and continuous learning create a constantly changing, complex BINGO NoK graph, but several bounds can still be established and several observations made regarding its structure and behaviour.

3.6.1 Upper bound of the NoK depth

Assume that there are m data neurons in the NoK, \(D = \{ d_1, d_2, \ldots , d_m \}\), and let \(H = \{ h_1, h_2, \ldots , h_m \}\) be the minimum numbers of hops between each of these data neurons and any level-n cue neuron. Then, the depth of the NoK is defined as max(H). We define depth in this manner because every search within the NoK graph starts from a level-n cue neuron, and the target of every search is the level-1 cue neuron associated with a particular data neuron.
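Because depth is a maximum over shortest distances, it can be computed with a single multi-source BFS started from every level-n cue neuron at once. The Python sketch below assumes an unweighted adjacency dictionary in which level 2 plays the role of level-n; the name `nok_depth` is hypothetical.

```python
import math
from collections import deque


def nok_depth(adj, level):
    """Depth of a NoK: max over data neurons of the fewest hops to any level-n cue.

    adj:   {neuron: iterable of neighbours}
    level: {neuron: 1, 2 or "data"} (2 plays the role of level-n)
    """
    sources = [v for v, lv in level.items() if lv == 2]
    dist = {v: 0 for v in sources}  # multi-source BFS from every level-n cue
    queue = deque(sources)
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    data = [v for v, lv in level.items() if lv == "data"]
    # Unreachable data neurons get infinite depth; a NoK without data has depth 0.
    return max((dist.get(d, math.inf) for d in data), default=0)
```

For the seed organization of Fig. 5 (level-2 cue, level-1 cue, data neuron in a chain), the sketch returns 2, which is the base case used in the proof of Theorem 1.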

Theorem 1

If (i) \(\varphi >0\), (ii) \(\pi _1 \ge 2\), (iii) \(\varepsilon _2 > 0\), and (iv) the previously proposed algorithms are used for implementing the operations, then the depth of the NoK at any state is \(\le (\pi _1 + 1)\).

Proof

Assume that the premise is true. In a NoK with 0 data neurons, \(depth = 0 \le (\pi _1 + 1)\), for \(\pi _1 \ge 2\). In a NoK with 1 data neuron (\(d_1\)), it must be the first data neuron that was inserted, because no data neurons or associations are deleted according to the premise (\(\varphi >0\) and \(\varepsilon _2 > 0\) keep every strength and weight above zero). According to Algorithm 1, the first data neuron is inserted as shown in Fig. 5. Hence, for a BINGO system with two levels of cues (the assumption made by the proposed algorithms), the depth of the NoK is 2, which is \(\le (\pi _1 + 1)\) for \(\pi _1 \ge 2\).

Thus, the consequence of the theorem statement holds for the base cases where the NoK has 0 or 1 data neuron(s). Now consider a NoK with m data neurons, \(D = \{ d_1, d_2, \ldots , d_m \}\), where \(m>1\), and k level-2 cue neurons \(C^2 = \{ c_1^2, c_2^2, \ldots , c_k^2 \}\). Based on the definition of the depth of a NoK, the consequence of the theorem statement can be rewritten as \(\forall d_i \in D\), \(\exists c_j^2 \in C^2\) such that \(hops(d_i, c_j^2) \le (\pi _1 + 1)\). It is already established that for \(d_1\) (the first insertion), \(\exists c_j^2 \in C^2\) such that \(hops(d_1, c_j^2) \le (\pi _1 + 1)\). For any \(d_i \in D\) (\(i > 1\)), note that a new data neuron is created only when \(Flag\_C_1 = 0\) (when \(Flag\_C_1 = 1\), D is merged into an existing data neuron), so \(d_i\) must have been inserted in one of two ways:

1. If, during insertion, \(Flag\_C_n == 1\) and \(Flag\_C_1 == 0\) (in Algorithm 3), then a new level-1 cue neuron (\(CN_{new}\)) and the data neuron \(d_i\) would have been instantiated first. \(CN_{new}\) is then connected with the level-1 cue neuron \(access\_Path[-1]\), and \(d_i\) is connected to \(CN_{new}\). If the level-1 cue neuron \(access\_Path[-1]\) is x hops from the level-2 cue neuron \(access\_path[0]\), then \(hops(d_i, access\_path[0]) = x + 2\), that is, \(hops(d_i, access\_path[0]) - 1 = x + 1\). We know that \(x \le \pi _1 - 1\) because \(\pi _1\) is the search effort limit in Algorithm 2, so \(x + 1 \le \pi _1\). Hence, \(hops(d_i, access\_path[0]) - 1 \le \pi _1\), which implies that \(hops(d_i, access\_path[0]) \le \pi _1 + 1\). This continues to hold because no data neurons or associations are deleted according to the premise (\(\varphi >0\) and \(\varepsilon _2 > 0\)). Hence, for any \(d_i \in D\) inserted in this way, \(\exists c_j^2 \in C^2\) such that \(hops(d_i, c_j^2) \le (\pi _1 + 1)\); in this case, \(c_j^2 = access\_path[0]\).

2. If, during insertion, \(Flag\_C_n == 0\) and \(Flag\_C_1 == 0\) (in Algorithm 3), then a new level-1 cue neuron (\(CN_{new}^1\)), a new level-n (\(n = 2\)) cue neuron (\(CN_{new}^2\)), and the data neuron \(d_i\) would have been instantiated first. \(CN_{new}^2\) is connected to the level-1 cue neuron \(access\_path[-1]\), \(CN_{new}^1\) is connected to \(CN_{new}^2\), and \(d_i\) is connected to \(CN_{new}^1\). Hence, \(hops(d_i, CN_{new}^2) = 2 \le \pi _1 + 1\), as \(\pi _1 \ge 2\). This continues to hold because no data neurons or associations are deleted according to the premise (\(\varphi >0\) and \(\varepsilon _2 > 0\)). Hence, for any \(d_i \in D\) inserted in this way, \(\exists c_j^2 \in C^2\) such that \(hops(d_i, c_j^2) \le (\pi _1 + 1)\); in this case, \(c_j^2 = CN_{new}^2\).

Hence, we have proved that if the premise is true, then the depth of the NoK at any state is \(\le (\pi _1 + 1)\). \(\square\)

3.6.2 Number of mistakes to attain the ideal accessibility state

To define ‘mistakes’ for our framework, we draw inspiration from the definition of ‘mistakes’ in online learning [31]. In BINGO, the effort of searching for a specific level-1 cue neuron and the associated data neuron is proportional to the number of neurons visited during the SEARCH sub-operation (Algorithm 2). During a retrieve operation, if the search sub-operation selects a level-1 cue neuron \(c^1\) as the target cue neuron (\(access\_path[-1]\)) and \(Flag\_C_1 == 1\), then the neuron \(c^1\) is considered to have been accessed. The “Pull up and strengthen” strategy (Algorithm 4) increases the accessibility of neurons that are accessed more often. Hence, if \(c^1\) is accessed repeatedly, the effort of searching for it and its associated data neuron keeps decreasing. After a certain number of accesses, \(c^1\) becomes reachable by visiting only two neurons, starting from a given level-2 cue neuron (\(c^2\)). In this state, the search for \(c^1\) from \(c^2\) can be termed “ideal” because no further accessibility improvement can be made, and \(c^1\) is said to be in the ideal accessibility state with respect to \(c^2\). From a given NoK state, the number of subsequent accesses to \(c^1\) starting from \(c^2\) required to attain the ideal accessibility state is defined as the number of mistakes (MIST) with respect to \(c^1\) and \(c^2\).

Theorem 2

If (i) \(\varphi >0\), (ii) \(\varepsilon _2 > 0\), (iii) \(\eta _3 \ge 1\), (iv) \(\eta _1 > 0\), (v) \(\varepsilon _1 > 0\), (vi) in the current NoK state, \(access\_path\) is the list of neurons (\(length =l\)) that needs to be traversed to reach the target level-1 cue neuron \(access\_path[-1]\) starting from a level-n cue neuron \(access\_path[0]\), (vii) S is the weight of the strongest association attached to the neuron \(access\_path[0]\), (viii) the previously proposed algorithms are used for implementing the operations, (ix) no more retention and store operations are performed until \(access\_path[-1]\) reaches ideal accessibility state with respect to \(access\_path[0]\), and (x) MIST is the number of mistakes before \(access\_path[-1]\) can reach ideal accessibility state with respect to \(access\_path[0]\) then:

\(MIST = \lceil \frac{l-2}{\eta _3}\rceil\) if \(S < \varepsilon _1\)

\(MIST = \lceil \frac{l-2}{\eta _3}\rceil +\lfloor \frac{S-\varepsilon _1}{\eta _1} + 1\rfloor\) if \(S \ge \varepsilon _1\)

Proof

Assume that the premise is true. Then the current state of the NoK is of one of the following three types:

1. If \(access\_path[-1]\) is adjacent to \(access\_path[0]\) (i.e. \(l = 2\)) and \(S < \varepsilon _1\), then the first neuron visited from \(access\_path[0]\) will be \(access\_path[-1]\), based on the weighted BFS carried out in Algorithm 2. Hence, in this case, \(access\_path[-1]\) is already in the ideal accessibility state with respect to \(access\_path[0]\), that is, \(MIST = 0 = \lceil \frac{2-2}{\eta _3}\rceil = \lceil \frac{l-2}{\eta _3}\rceil\).

2. If \(access\_path[-1]\) is not adjacent to \(access\_path[0]\) and \(S < \varepsilon _1\), then once the cue neuron \(access\_path[-1]\) becomes adjacent to \(access\_path[0]\), the search will become ideal. After each access to \(access\_path[-1]\) via \(access\_path[0]\), the subsequent \(access\_path\) length reduces based on \(\eta _3\) (as described in Algorithm 4). So it follows that \(access\_path[-1]\) will be adjacent to \(access\_path[0]\) after \(\lceil \frac{l-2}{\eta _3}\rceil\) accesses. Hence, \(MIST = \lceil \frac{l-2}{\eta _3}\rceil\).

3. If \(access\_path[-1]\) is not adjacent to \(access\_path[0]\) and \(S \ge \varepsilon _1\), then the search will still not be ideal once \(access\_path[-1]\) becomes adjacent to \(access\_path[0]\). The ideal accessibility state is achieved only when the association weight between \(access\_path[-1]\) and \(access\_path[0]\) exceeds S. As before, after each access to \(access\_path[-1]\) via \(access\_path[0]\), the subsequent \(access\_path\) length decreases by \(\eta _3\), but not below 2 (as described in Algorithm 4), so \(access\_path[-1]\) becomes adjacent to \(access\_path[0]\) after \(\lceil \frac{l-2}{\eta _3}\rceil\) accesses, through a new association of weight \(\varepsilon _1\). After this, each access to \(access\_path[-1]\) from \(access\_path[0]\) increases this association weight by \(\eta _1\). Hence, after \(\lfloor \frac{S-\varepsilon _1}{\eta _1} + 1\rfloor\) accesses, the association weight \(\varepsilon _1 + \lfloor \frac{S-\varepsilon _1}{\eta _1} + 1\rfloor \eta _1\) exceeds S. So, \(MIST = \lceil \frac{l-2}{\eta _3}\rceil +\lfloor \frac{S-\varepsilon _1}{\eta _1} + 1\rfloor\) if \(S \ge \varepsilon _1\).

We have demonstrated that if the premise is true, then \(MIST = \lceil \frac{l-2}{\eta _3}\rceil\) if \(S < \varepsilon _1\) and \(MIST = \lceil \frac{l-2}{\eta _3}\rceil +\lfloor \frac{S-\varepsilon _1}{\eta _1} + 1\rfloor\) if \(S \ge \varepsilon _1\). \(\square\)
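As a sanity check of Theorem 2, the Python sketch below compares the closed-form MIST with a direct replay of the pull-up and strengthen rules. It is an illustration under the theorem's premises plus three assumptions of ours: \(l > 2\), the target is not yet linked to the entry point, and no other association weight changes during the replay. Both function names are hypothetical.

```python
import math


def mist_formula(l, eta3, S, eps1, eta1):
    """Number of mistakes predicted by Theorem 2."""
    pulls = math.ceil((l - 2) / eta3)
    if S < eps1:
        return pulls
    return pulls + math.floor((S - eps1) / eta1 + 1)


def mist_simulated(l, eta3, S, eps1, eta1):
    """Replay repeated accesses to the target under the stated assumptions."""
    if l <= 2:
        raise ValueError("this replay only covers non-adjacent targets (l > 2)")
    length, accesses = l, 0
    while length > 2:
        # Pull up: tau = min(k - 1, eta3 + 1) shortens the path by eta3 hops.
        length = max(2, length - eta3)
        accesses += 1
    weight = eps1  # the final pull-up creates a new link of weight eps1
    while weight <= S:
        # Strengthen: the target is visited first only once its link exceeds S.
        weight += eta1
        accesses += 1
    return accesses
```

For instance, with \(l = 7\), \(\eta _3 = 2\), \(S = 0.5\) and \(\varepsilon _1 = \eta _1 = 0.125\), both functions give 7: three pull-ups (path lengths 7, 5, 3, 2) followed by four strengthening steps (weights 0.25, 0.375, 0.5, 0.625).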

3.6.3 Data neuron strength depends on access frequency

In order to prove the next property of BINGO, we start with the following assumptions:

1. Assume that there are d data neurons \(D = \{ DN_1, DN_2, \ldots , DN_d \}\) and c cue neurons \(C = \{ CN_1, CN_2, \ldots , CN_c\}\) in the NoK.

2. Assume that the memory strengths of \(DN_i\) and \(DN_k\) are \(S_i\) and \(S_k\), respectively, with \(S_i = S_k\).

3. Assume that a total of n retrieval operations and m retention operations are performed next, in any order. Of the n retrieval operations, x retrieve \(DN_i\) and \((n-x)\) retrieve \(DN_k\), where \(x>(n-x)\).

4. Assume that a linear decay function is used, such that each retention operation decreases the memory strength of every data neuron by \(\delta _2\). Also, \(S_i > m\delta _2\) and \(S_k > m\delta _2\).

5. Assume that \(S_i'\) and \(S_k'\) are the memory strengths of \(DN_i\) and \(DN_k\), respectively, after all the n retrieval operations and the m retention operations are finished.

6. Each access to a data neuron increases its memory strength by \(\delta _1\) (i.e. the cap \(\delta _3\) is not reached).

Theorem 3

If the previously mentioned assumptions are true, then \(S_i' > S_k'\).

Proof

Assume that the premise is true. After x accesses and m retention operations, \(S_i' = S_i - m\delta _2 +x\delta _1\), that is, \(S_i = S_i' + m\delta _2 - x\delta _1\). After \((n-x)\) accesses and m retention operations, \(S_k' = S_k - m\delta _2 + (n-x)\delta _1\), that is, \(S_k = S_k' + m\delta _2 - n\delta _1 + x\delta _1\). Since \(S_i = S_k\) by assumption, \(S_i' + m\delta _2 - x\delta _1 = S_k' + m\delta _2 - n\delta _1 + x\delta _1\). Simplifying, we obtain \(S_i' = S_k' + (x-(n-x))\delta _1\). Because \(\delta _1 > 0\) and \(x>(n-x)\), it follows that \(S_i' > S_k'\). Hence, when the premise is true, the consequence is also true. \(\square\)
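A short Python sketch can confirm that, under assumptions 1 to 6, the order in which retrievals and retentions are interleaved does not affect the final gap \(S_i' - S_k' = (x-(n-x))\delta _1\). The shuffled operation order, the function name `final_strengths`, and the example numbers are illustrative assumptions; the cap \(\delta _3\) and the floor \(\varphi\) are deliberately not modelled.

```python
import random


def final_strengths(S0, n, x, m, delta1, delta2, seed=0):
    """Strengths of DN_i and DN_k after n retrievals and m retentions in random order.

    Both neurons start at S0; x retrievals hit DN_i and n - x hit DN_k.
    The cap delta3 and the floor phi are not modelled (assumptions 4 and 6).
    """
    ops = ["i"] * x + ["k"] * (n - x) + ["retain"] * m
    random.Random(seed).shuffle(ops)
    Si = Sk = S0
    for op in ops:
        if op == "i":
            Si += delta1
        elif op == "k":
            Sk += delta1
        else:  # linear decay applied to every data neuron
            Si -= delta2
            Sk -= delta2
    return Si, Sk
```

With \(S_0 = 4\), n = 10, x = 7, m = 3, \(\delta _1 = 0.25\) and \(\delta _2 = 0.5\), every ordering gives \(S_i' = 4.25\) and \(S_k' = 3.25\), a gap of \((7-3) \times 0.25 = 1\), as the proof predicts.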

3.6.4 Similar data neurons form localities

If the hyperparameter \(\omega = 1\), then the search sub-operation (for both store and retrieve) is not allowed to pass through any level-n cue neuron (as described in Algorithm 2). Consequently, any data neuron inserted using a specific level-n cue neuron \(c^n_i\) can also be retrieved by starting the search from \(c^n_i\) without crossing any other level-n cue neuron. We define a locality as the NoK sub-graph formed by the neurons bounded by level-n cue neurons other than \(c^n_i\). Each locality in the NoK therefore stores a specific type of data related to its central level-n cue neuron and the adjacent level-n cue neurons.

3.6.5 Resistance of BINGO to sporadic noise

With regard to the recent historical access pattern, we define noise as an uncharacteristic retrieval access to a level-1 cue neuron and its associated data neuron. For example, assume that \(C^1\) is the set of level-1 cue neurons accessed in the last N retrieve operations. If another retrieve operation then accesses a cue \(c \notin C^1\), we consider this a potential noise. Note that this may not be noise, because N may be too small to capture the full scope of the access pattern, or because there may be a legitimate change in the access behaviour of the application using the BINGO framework. However, if this is indeed a one-off noisy access, it will not have much impact on the BINGO NoK organization, because according to Theorem 2, a neuron requires \(MIST = \lceil \frac{l-2}{\eta _3}\rceil\) (if \(S < \varepsilon _1\)) or \(MIST = \lceil \frac{l-2}{\eta _3}\rceil +\lfloor \frac{S-\varepsilon _1}{\eta _1} + 1\rfloor\) (if \(S \ge \varepsilon _1\)) accesses to reach the ideal accessibility state. One noisy access may slightly increase the accessibility of c and slightly reduce the accessibility of some cue neurons in \(C^1\), but such changes will soon be overshadowed by subsequent accesses. Hence, we believe that the BINGO NoK is robust against sporadic, noisy retrieve operations.

3.7 Dynamic behaviour of BINGO

In Fig. 6a, we illustrate the dynamic nature of BINGO by showing how the NoK changes during a sequence of operations. The accessibility of different data neurons changes, and the memory strength of data neurons increases or decreases based on feedback-driven reinforcement learning and ageing. In this figure, a thicker line represents a stronger association and a larger data neuron signifies a higher memory strength. In contrast, as seen in Fig. 6b, the traditional CAM shows no sign of intelligence or dynamism to facilitate data storage/retrieval. Sect. 4.3 provides a more detailed, simulation-accurate depiction of BINGO's dynamism.

3.8 BINGO and traditional CAM simulators

To quantitatively analyse the effectiveness of BINGO in a computer system, we have implemented a BINGO simulator with the following features:

- It can simulate all memory operations and report the relative benefit over a traditional CAM in terms of operation effort.
- It is configurable through the array of hyperparameters described in Sect. 3.2.2.
- It can be mapped to any application designed to use a CAM or a similar framework.
- It implements the learning paradigm described in Algorithms 1, 2, 3, 4, 5, 6 and 7.
- It is highly scalable and can simulate a memory of arbitrarily large size.

For all the analyses in Sect. 4, we define operation effort as shown in Eqn. 2, where \(Comp\_L1\) is the number of level-1 cues compared and \(Comp\_L2\) is the number of level-2 cues compared during the operation. A weight of 4 is applied to \(Comp\_L1\) because, in our experiments, a level-1 cue (4096 dimensions) is four times larger than a level-2 cue (1024 dimensions).
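Eqn. 2 itself is not reproduced in this section. Reading the weighting described above literally, a minimal Python sketch of the BINGO operation effort is the weighted sum below; the exact form of Eqn. 2 is an assumption here, and the function names are hypothetical. The averaging helper follows the definition of operation effort given in Sect. 4.1.1.

```python
LEVEL1_CUE_DIM = 4096  # second-to-last VGG16 layer output
LEVEL2_CUE_DIM = 1024  # last VGG16 layer output before softmax


def bingo_effort(comp_l1, comp_l2, dim_l1=LEVEL1_CUE_DIM, dim_l2=LEVEL2_CUE_DIM):
    """Assumed form of Eqn. 2: level-1 comparisons weighted by the cue-size ratio."""
    weight = dim_l1 // dim_l2  # 4096 // 1024 == 4
    return weight * comp_l1 + comp_l2


def operation_effort(store_effort, retrieve_effort):
    """Average of store and retrieve effort for one access type."""
    return (store_effort + retrieve_effort) / 2


# A retrieve comparing 3 level-1 cues and 5 level-2 cues costs 4*3 + 5 = 17.
print(bingo_effort(3, 5))
```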

We have also created a traditional CAM simulator to compare against BINGO. Its behaviour is modelled on the standard CAM organization [32]. A single 4096-dimensional tag/cue is associated with each data unit. If the CAM runs out of space, a first-in-first-out (FIFO) or a least-recently-used (LRU) replacement policy is applied. During a retrieve, the query cue is compared with the cues existing in the CAM; if a matching cue is found, the associated data are returned. Ageing and dynamic memory re-organization have no effect in the traditional CAM. For the traditional CAM, we define operation effort as shown in Eqn. 3, where Comp is the number of tags/cues compared during the operation. Because the cue dimension is 4096, a weight of 4 is applied so that the comparison with BINGO is fair.
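A minimal Python sketch of such a baseline CAM is shown below. It assumes that a query matches a stored cue when their cosine similarity reaches a threshold (the value 0.9 is hypothetical), that a sequential search stops at the first match, and that storing requires no cue comparisons; the class and method names are illustrative and are not the authors' simulator. Under these assumptions, the sequential retrieve effort is 4 × Comp, while a parallel CAM compares the query with every stored cue.

```python
import math
from collections import OrderedDict


def cosine(a, b):
    """Cosine similarity between two cue vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TraditionalCAM:
    CUE_WEIGHT = 4  # a 4096-dim cue is 4x a 1024-dim BINGO level-2 cue

    def __init__(self, capacity, policy="FIFO", threshold=0.9):
        if policy not in ("FIFO", "LRU"):
            raise ValueError("policy must be 'FIFO' or 'LRU'")
        self.capacity = capacity
        self.policy = policy
        self.threshold = threshold  # hypothetical match threshold
        # Front of the OrderedDict = next eviction victim (oldest or least recently used).
        self.entries = OrderedDict()

    def store(self, key, cue, data):
        if key not in self.entries and len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict from the front
        self.entries[key] = (cue, data)
        if self.policy == "LRU":
            self.entries.move_to_end(key)

    def retrieve(self, query_cue):
        """Sequential search; returns (data or None, sequential effort)."""
        comparisons, hit = 0, None
        for key, (cue, _) in self.entries.items():
            comparisons += 1
            if cosine(query_cue, cue) >= self.threshold:
                hit = key
                break
        effort = self.CUE_WEIGHT * comparisons
        if hit is None:
            return None, effort
        if self.policy == "LRU":
            self.entries.move_to_end(hit)  # mark as most recently used
        return self.entries[hit][1], effort

    def parallel_effort(self):
        """A parallel CAM compares the query with every stored cue."""
        return self.CUE_WEIGHT * len(self.entries)


cam = TraditionalCAM(capacity=2, policy="FIFO")
cam.store("a", [1, 0], "A")
cam.store("b", [0, 1], "B")
cam.store("c", [1, 1], "C")  # evicts "a", the oldest entry
print(cam.retrieve([0, 1]))  # ('B', 4)
```

An `OrderedDict` serves both policies: FIFO simply never reorders entries, while LRU moves an entry to the back whenever it is stored or retrieved.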

3.9 Desirable application characteristics

Certain applications benefit more than others from BINGO; we formalize the properties of such applications next.

3.9.1 Imprecise store and retrieval

We recommend using the BINGO framework in the imprecise mode for storage and search efficiency. Let MEM denote the set of data neurons in the memory/NoK at a given instant. For a given data neuron \(D_i \in MEM\), suppose \(D_i\) is compressed (in a lossy manner) to \({D_i}'\) with \(size(D_i) = size({D_i}') + \kappa _1\). For the application to operate in the imprecise domain, it must hold that \(Quality ({D_i}') = Quality ({D_i}) - \kappa _2\), where size(X) is the size of data neuron X, Quality(X) is the quality of the data in data neuron X in light of the specific application, and \(\kappa _1\) and \(\kappa _2\) are small quantities. For example, in a wildlife surveillance system, an image containing a wolf that is compressed slightly will still look like an image of the same wolf.

3.9.2 Notion of object(s)-of-interest

For a specific application, let D be a new incoming data unit that must be stored in the memory, and let \(OL\) be the set of objects in D. For the application to benefit from BINGO, there must exist \(O_1, O_2 \in OL\) such that \(Imp(O_1) > Imp(O_2)\), where \(Imp(O_i)\) denotes the importance of object \(O_i\) for the specific application. For example, in a wildlife surveillance system designed to detect wolves, frames containing at least one wolf are considered more important. This importance is learned from the data access pattern during operation; if the importance shifts over time, the memory adjusts itself accordingly.

4 Effectiveness of BINGO: a quantitative and visual analysis

To model BINGO and the traditional CAM, we use the simulators described in Sect. 3.8. We first evaluate the effectiveness of BINGO using the standard CIFAR-10 dataset [9]. We then emulate a wildlife surveillance system using multiple video clips captured by a camera deployed in the wild and observe how effective BINGO is in such an application. In all experiments, the outputs of the last VGG16 [33] layer before softmax (\(dim = 1024\)) are used as level-n (\(n=2\)) cues and the outputs of the second-to-last layer (\(dim =4096\)) are used as level-1 cues. The last-layer outputs of VGG16 indicate the class of the data, while the second-to-last layer outputs contain richer features. We use the cosine similarity metric to estimate the similarity between two cues. JPEG [29] is used to compress data neurons as they lose memory strength. The choice of hyperparameters depends on the system using the BINGO framework; as with AI frameworks, different hyperparameter search techniques can be used to determine suitable BINGO hyperparameters.

4.1 Evaluations using CIFAR-10

First, we use the CIFAR-10 dataset [9] to obtain a general understanding of BINGO's capabilities. The dataset has 10 classes: {airplane, automobile, bird, cat, deer, dog, frog, horse, ship, truck}. We sample 50 images of each class from 'data_batch_1'. In each experiment, all 500 images are stored in the memory using 500 store operations, interleaved with 500 retrieve operations of randomly selected, previously stored images of a specific class that is considered 'prioritized'. One class is prioritized per experiment to simulate the notion of 'object-of-interest'. Unless otherwise mentioned, we use the following BINGO hyperparameters for our experiments: [\(\delta _1: (\delta _3 - current\_strength)\), \(\delta _2: 0.1\), \(\delta _3: 100\), \(\eta _1: 10000\), \(\eta _2: 10000\), \(\eta _3: 1\), \(\Lambda : \{ \lambda _1 = 90, \lambda _2 = 10\}\), \(\varphi : 1\), \(\varepsilon : 100\), \(\varepsilon _2: 1\), \(\pi _1: 2\), \(\pi _2: -1\), \(\omega : 0\), \(\xi : 100\)].
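The CIFAR-10 protocol above can be sketched in Python as a configuration dictionary plus an operation schedule. The dictionary keys are hypothetical spellings of the listed symbols, and \(\delta _1\) is written as a function of the current strength. The schedule makes assumptions the text does not state: the store order is shuffled with a fixed seed, and each store is followed by one retrieve of a randomly chosen, already stored prioritized image (deferred until such an image exists), so that the numbers of stores and retrieves are equal.

```python
import random

DELTA3 = 100

# Hypothetical key names for the hyperparameters listed in Sect. 4.1.
CIFAR10_HYPERPARAMS = {
    "delta1": lambda current_strength: DELTA3 - current_strength,
    "delta2": 0.1,
    "delta3": DELTA3,
    "eta1": 10000,
    "eta2": 10000,
    "eta3": 1,
    "Lambda": {"lambda1": 90, "lambda2": 10},
    "phi": 1,
    "epsilon": 100,
    "epsilon2": 1,
    "pi1": 2,
    "pi2": -1,
    "omega": 0,
    "xi": 100,
}


def interleaved_schedule(images_by_class, prioritized, seed=0):
    """Interleave one store per image with an equal number of retrieves of
    previously stored images from the prioritized class."""
    rng = random.Random(seed)
    to_store = [(cls, img) for cls, imgs in images_by_class.items() for img in imgs]
    rng.shuffle(to_store)  # assumption: store order is random
    ops, stored_prioritized, pending = [], [], 0
    for cls, img in to_store:
        ops.append(("store", img))
        if cls == prioritized:
            stored_prioritized.append(img)
        pending += 1
        # Issue owed retrieves once at least one prioritized image is stored.
        while pending and stored_prioritized:
            ops.append(("retrieve", rng.choice(stored_prioritized)))
            pending -= 1
    return ops
```

With 10 classes of 50 images each, this produces 500 store and 500 retrieve operations, and every retrieve targets an image of the prioritized class that is already in memory.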

4.1.1 Evaluation with different classes prioritized

We run a total of 30 experiments, prioritizing each CIFAR-10 class in turn for each of BINGO, CAM with FIFO and CAM with LRU. The above-mentioned hyperparameters are used, and the memory size is limited to 250,000 bytes for all three memory variants. Table 2 shows the simulation results. The detection accuracy is based on the GluonCV [34] Model Zoo ResNet-110 v1 for CIFAR-10 [35]. In a CAM, all data units are accessed in parallel during the retrieve operation; we report this effort in the row 'Avg. Par. Retrieve Effort'. However, as we report only sequential efforts for the BINGO operations, we also report the sequential efforts for CAM ('Avg. Seq. Retrieve Effort'). Operation effort is the average of store effort and retrieve effort for a specific access type (sequential or parallel).

BINGO is superior to CAM-FIFO in every aspect. On average, BINGO (i) stores \(\approx \,2\times\) more data units than CAM-FIFO, (ii) has an 11.15\(\times\) lower average operation effort than CAM-FIFO's average sequential operation effort, (iii) has an 18.8\(\times\) lower average operation effort than CAM-FIFO's average parallel operation effort, and (iv) achieves about 11% higher detection accuracy than CAM-FIFO. Compared with CAM-LRU, BINGO on average (i) stores \(\approx 2\times\) more data units, (ii) has a 7.25\(\times\) lower average operation effort than CAM-LRU's average sequential operation effort, (iii) has an 18.8\(\times\) lower average operation effort than CAM-LRU's average parallel operation effort, and (iv) has about 4% lower detection accuracy. For IoT applications operating under strict space, time and energy constraints, the benefits of BINGO can far outweigh this 4% accuracy loss.

Fig. 7 (a) Quality factor growth for BINGO and CAM under memory size constraint when deer images are prioritized for CIFAR-10; BINGO appears to perform better at different memory size limits. (b) Quality factor growth for BINGO and CAM under memory size constraint when automobile images are prioritized for CIFAR-10; BINGO appears to be superior in this scenario as well.

4.1.2 Evaluation under progressive memory constraint

To better understand the behaviour of BINGO and CAM under strict memory constraints, we perform a series of experiments with varying memory limits. To obtain a single metric for measuring the performance of a memory system, we design a metric called 'memory quality factor', shown in Eqn. 4. Here, QF stands for 'Memory Quality Factor', DA is the 'Detection Accuracy', DU is the number of 'Data Units Stored', Total_Samples is the total number of data units presented to the memory system for storage, FIFO_Seq_OE is the 'Average Sequential Operation Effort' of CAM-FIFO under the same settings (treated as a baseline), and OE is the 'Average Sequential Operation Effort' of the memory for which QF is computed. For these experiments, we use the hyperparameters listed above except for \(\varphi\), which is set to 0.

We plot the memory quality factors for BINGO, CAM-FIFO and CAM-LRU in Fig. 7. Fig. 7a shows the results when the deer class is prioritized, and Fig. 7b shows the results when the automobile class is prioritized. BINGO appears superior to both CAM-LRU and CAM-FIFO. The memory quality factor of BINGO appears to grow polynomially, while that of CAM-FIFO appears to grow linearly and that of CAM-LRU stays roughly constant. Interestingly, CAM-FIFO and CAM-LRU converge to the same quality factor as the memory limit is relaxed.

4.2 A real application case study: wildlife surveillance

Image sensors are widely deployed in the wilderness for rare-species tracking and poacher detection. The wilderness can be vast, and edge devices operating in these regions often face low storage space, limited transmission bandwidth and energy shortages, which demands efficient data storage, transmission and power management. This application is also tolerant of imprecise data storage and retrieval, because compression does not easily destroy high-level features in images. Moreover, in this application, images containing certain objects, such as a rare animal of a specific species, can be considered more important than images containing only trees or common animals. Hence, the application has the characteristics that make it suitable for BINGO and can benefit from BINGO's learning-guided modulation of data preciseness and its plasticity schemes.

To emulate a wildlife surveillance application, we construct an image dataset from wildlife camera footage containing 40 different animal sightings. Details of this dataset are available in the public dataset repository that we have released [10]. We sample the video at a rate of one frame in every 20 frames to avoid excessive repetition, obtaining 644 frames. In each experiment, all 644 images are stored in the memory using 644 store operations, interleaved with 644 retrieve operations of randomly selected, previously stored images of a wolf or a fox; here, wolf and fox images are considered 'prioritized'. Unless otherwise mentioned, we use the following BINGO hyperparameters for our experiments: [\(\delta _1: (\delta _3 - current\_strength)\), \(\delta _2: 0.1\), \(\delta _3: 100\), \(\eta _1: 100\), \(\eta _2: 10\), \(\eta _3: 1\), \(\Lambda : \{ \lambda _1 = 90, \lambda _2 = 10\}\), \(\varphi : 1\), \(\varepsilon : 100\), \(\varepsilon _2: 1\), \(\pi _1: 2\), \(\pi _2: -1\), \(\omega : 0\), \(\xi : 100\)].

We run the experiments with the memory size limited to 50,000,000 bytes for all three memory variants. Table 3 shows the simulation results. The detection accuracy is based on the ImageNet-pretrained VGG16 network [33]. In these experiments, a detection is considered correct if any of the top-25 predictions belongs to one of the following classes: {red_fox, red_wolf, timber_wolf, white_wolf, grey_fox, kit_fox, Arctic_fox}.
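The top-25 correctness rule can be expressed as a short Python helper operating on ranked class names, such as the `(class_id, class_name, score)` tuples returned by Keras's `decode_predictions(preds, top=25)` for an ImageNet VGG16 model. The helper names are hypothetical, and obtaining the predictions is left out so that the sketch runs without a model.

```python
TARGET_CLASSES = frozenset({
    "red_fox", "red_wolf", "timber_wolf", "white_wolf",
    "grey_fox", "kit_fox", "Arctic_fox",
})


def is_correct(ranked_class_names, k=25, targets=TARGET_CLASSES):
    """True if any of the top-k predicted class names is a wolf or fox class."""
    return any(name in targets for name in ranked_class_names[:k])


def detection_accuracy(all_rankings, k=25):
    """Fraction of frames whose top-k predictions contain a target class."""
    if not all_rankings:
        return 0.0
    return sum(is_correct(r, k) for r in all_rankings) / len(all_rankings)


# With Keras, the ranked names for one image could be obtained as
# [name for _, name, _ in decode_predictions(preds, top=25)[0]].
frame_a = ["tabby"] * 24 + ["kit_fox"]  # fox ranked 25th: counted
frame_b = ["tabby"] * 25 + ["red_fox"]  # fox ranked 26th: not counted
print(detection_accuracy([frame_a, frame_b]))  # 0.5
```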