Abstract

This article presents a hybrid model for predicting the temperature of molten steel in a ladle furnace (LF). Unique to the proposed hybrid prediction model is that its neural network-based empirical part is trained in an indirect way since the target outputs of this part are unavailable. A modified cuckoo search (CS) algorithm is used to optimize the parameters in the empirical part. The search of each individual in the traditional CS is normally performed independently, which may limit the algorithm’s search capability. To address this, a modified CS, information interaction-enhanced CS (IICS), is proposed in this article to enhance the interaction of search information between individuals and thereby the search capability of the algorithm. The performance of the proposed IICS algorithm is first verified by testing on two benchmark sets (including 16 classical benchmark functions and 29 CEC 2017 benchmark functions) and then used in optimizing the parameters in the empirical part of the proposed hybrid prediction model. The proposed hybrid model is applied to actual production data from a 300 t LF at Baoshan Iron & Steel Co. Ltd, one of China's most famous integrated iron and steel enterprises, and the results show that the proposed hybrid prediction model is effective with comparatively high accuracy.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Ladle furnace (LF) is a pivotal equipment utilized to fully refine and alloy during secondary metallurgy processes in iron and steel industries [1]. Close control of the temperature of molten steel in LF is vital for the improvement of product quality and productivity [2]. However, the temperature of molten steel cannot be continuously measured in the actual production, which makes it difficult to achieve accurate control. Therefore, it has considerable practical significance to develop a model to predict the temperature of molten steel in LF.

Models for predicting the temperature of molten steel in LF are traditionally developed based on thermodynamics and the energy conservation law [3, 4]. However, due to the intrinsic complicacy of LF metallurgy processes, the fundamental mechanisms of involved physicochemical phenomena are not entirely clear by far, and developing a mechanistic prediction model is very time-consuming and costly. As a result, empirical modeling approaches have been extensively used in developing the temperature prediction models of molten steel in LF. In empirical modeling, the model is developed exclusively from the production data without the need to invoke the phenomenology of the process [5,6,7,8]. Thus, the time-consuming and expensive nature associated with the development of a suitable mechanistic prediction model can be averted.

In recent years, hybrid modeling approaches have been considered as an appealing alternative for developing molten steel temperature prediction models. A hybrid prediction model commonly consists of a mechanistic thermal model for representing the known priori knowledge of the LF metallurgy process under consideration, and one or more empirical models for approximating unknown functions in the mechanistic thermal model [9,10,11]. Moreover, according to existing researches, hybrid prediction models have better properties than pure empirical prediction models [9, 10]; they typically have better prediction accuracy and generalization performance, and are easier to interpret and analyze.

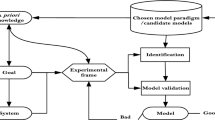

Regarding to the training of the empirical part, most of the reported hybrid modeling approaches use a direct method as schematically shown in Fig. 1a. The parameters in the empirical part are determined by minimizing the errors between outputs of the empirical part, denoted by \(\hat{\user2{\eta }} = [\hat{\eta }_{1} {\kern 1pt} ,\; \cdots {\kern 1pt} ,\;\hat{\eta }_{n} ]\), and the actual values of unknown functions, denoted by \({\varvec{\eta}} = [\eta_{1} {\kern 1pt} {,}\; \cdots {\kern 1pt} {,}\;\eta_{n} {]}\). Here, n denotes the number of unknown functions. Obviously, the precondition for using the direct method to train the empirical part is that these actual values are available. In other words, when the actual values of one or more unknown functions are unavailable just like the hybrid prediction model proposed in this article, the direct method could not be used.

To address the above issue, this article proposes an alternative method for the determination of the parameters in the empirical part using the available values of the molten steel temperature instead of the target outputs of the empirical part, as is schematically shown in Fig. 1b. This allows the empirical part being trained indirectly without having its target outputs. In Fig. 1b, x and \(\hat{x}\) denote the measured and predicted temperature values of molten steel respectively.

The determination of the parameters in the empirical part using the above indirect method is a complex optimization problem. It is difficult to calculate the derivative information required by traditional optimization algorithms. Intelligent optimization algorithms, such as the genetic algorithm (GA) [12], particle swarm optimization (PSO) [13], differential evolution (DE) [14], ant colony optimization (ACO) [15], salp swarm algorithm (SSA) [16], artificial bee colony (ABC) [17], and cuckoo search (CS) [18], do not require any derivative information and can perform global search [19,20,21], so using them for finding the parameters in the empirical part is a viable alternative. Amongst these algorithms, CS is a comparatively new one, initially introduced by Yang and Deb [22]. Due to some attractive features like good balance between the local search and global search, simplicity, and efficiency [23, 24], the CS algorithm has been successfully applied to many optimization problems in various fields with promising results [25,26,27,28,29], including the parameter optimization problems in modeling manufacturing processes such as parameter estimation of a common empirical model for the temperature of cutting tools [30], estimation of soft-sensing model parameters for fermentation processes [31], and parameter identification of a neural network model for the electron beam welding process [32]. Besides, some researches have revealed that compared with PSO, GA, and some other intelligent optimization algorithms, CS is potentially far more efficient [30, 33, 34]. However, in the search process of the basic CS (BCS), there is no interchange of search information between individuals (i.e., cuckoos). To address this issue, we propose a modified CS, information interaction-enhanced CS (IICS), by introducing an information interaction-enhanced mechanism into BCS. It is based on the common idea that the information interchange between people would be in favor of their team accomplishing an assignment with efficiency. The proposed IICS is employed to optimize the parameters in the empirical part of the proposed hybrid prediction model.

The remainder of the article is organized as follows. Section 2 elucidates the development of the hybrid temperature prediction model with the proposed indirect training method for its empirical part. Section 3 briefly discusses BCS and details the IICS algorithm. Section 4 describes using IICS for determining the parameters in the empirical part of the proposed hybrid prediction model. Section 5 analyzes the performance of IICS by testing on two sets of benchmark functions and then presents the application of the proposed hybrid prediction model on the actual production data from a 300 t LF at Baoshan Iron & Steel Co. Ltd. Finally, conclusions of this study are drawn and considerations for future works are pointed out in the last section.

2 Development of a hybrid prediction model

In this section, a mechanistic thermal model (i.e., the mechanistic part of the proposed hybrid prediction model) is first derived based on thermodynamics as well as the law of energy conservation. Next, artificial neural network-based empirical models (i.e., the empirical part of the proposed hybrid prediction model) are used to approximate the unknown functions in the mechanistic part, and the indirect training method for these empirical models is elaborated.

2.1 Development of mechanistic part

Taking the molten steel and slag as a unitized system, a mechanistic thermal model is developed in this subsection based on the energy conservation law and thermodynamics. Similar to the existing literature [2], the following three assumptions are made: (1) no local temperature gradient exists in the steel bath, i.e., the steel bath is fully mixed; (2) there is only radial heat flow in the ladle wall; and (3) only axial heat flow occurs in the ladle bottom.

In the remainder of this subsection, the primary factors affecting the temperature change of molten steel are described in detail. Then, a mechanistic thermal model with two unknown functions is presented.

2.1.1 Thermal gain from the arc

The energy required for secondary steel refinement in LF is mainly from the arc. The thermal gain of the steel bath due to the energy injection through arc can be calculated as

where Qarc is the power in W injected into the steel bath, ηarc is the efficiency coefficient of heat transfer from the arc to the steel bath, and Parc is the total power. For a given LF system, the value of ηarc mainly depends on the slag thickness Hsl and arc length Larc [10]; that is to say, ηarc is a function of Hsl and Larc, shown as

Hence, once the function farc has been obtained, the value of ηarc can be calculated using the online available Hsl and Larc. However, it is noteworthy that the concrete expression of this function is hard to derive by mechanistic approaches.

2.1.2 Thermal loss from the ladle lining

Thermal loss from the ladle lining consists of two components, the thermal loss from the ladle wall and the thermal loss from the ladle bottom. Here, the instantaneous temperature distribution models of the ladle wall and ladle bottom are first established; then the thermal loss from the ladle lining is calculated based on these two models.

2.1.2.1 Instantaneous temperature distribution model of the ladle wall

With the assumptions above, the heat transfer in the ladle wall can be considered as a one-dimensional unsteady heat conduction in cylindrical coordinates [2, 3, 35], formulated as

Equation (3) is the heat conduction differential equation of the ladle wall, where Tw, ρw, λw, and cw are the temperature in °C, density in kg/m3, heat conductivity in W/(m °C), and specific heat in J/(kg °C) of the ladle wall respectively. The boundary conditions for Eq. (3) are given in Eqs. (4) and (5), where r1 and r2 are respectively the inner and outer diameters of the ladle wall in m; Tst is the molten steel temperature in °C; αw-en is the convection heat transfer coefficient between the steel shell of the ladle wall and the environment in W/(m2 °C); and T∞ is the environment temperature in °C. Equation (6) is the initial condition. For more details, please see the existing literature [2].

2.1.2.2 Instantaneous temperature distribution model of the ladle bottom

Heat transfer in the ladle bottom can be considered as a one-dimensional unsteady heat conduction [2, 3, 35], formulated as

Equation (7) is the heat conduction differential equation of the ladle bottom, where Tb, ρb, λb, and cb are, respectively, the temperature in °C, density in kg/m3, heat conductivity in W/(m °C), and specific heat in J/(kg °C) of the ladle bottom. Equations (8) and (9) are the boundary conditions for Eq. (7), where hb is the thickness of the ladle bottom in m; and αb-en is the convection heat transfer coefficient between the steel shell of the ladle bottom and the environment in W/(m2 °C). Equation (10) is the initial condition. See the existing literature [2] for more details.

2.1.2.3 Thermal loss from the ladle lining

Thermal loss from the ladle lining is obtained by calculating the heat flow of the contact surface between the ladle lining and the molten steel as

where Qlin is the thermal loss from the ladle lining in W; h is the height of the steel bath in m; λw,1 is the heat conductivity of the ladle wall material that is in contact with the molten steel in W/(m °C); and λz,1 is the heat conductivity of the ladle bottom material that is in contact with the molten steel in W/(m °C).

2.1.3 Thermal loss from the top surface

The top surface thermal loss mostly results from the radiation loss through the bare molten steel surface and slag surface. It is difficult to do an exact calculation for this loss using traditional mechanistic models. So, here the cooling water energy change is used to indirectly calculate this part of thermal loss as

where Qsur is the top surface thermal loss in W; ηsur is the correction coefficient; ccw, Fcw, and ΔTcw are the specific heat in J/(kg °C), flow rate in kg/s, and temperature difference in °C between inlet and outlet of the cooling water. Through analysis, ηsur can be considered as a function of three online available variables, Farg (the argon flow rate in Nm3/s), Dsl-co (the distance between the steel bath and the ladle cover in m), and Tst as

Similar with farc, the concrete expression of fsur is also hard to derive by mechanistic approaches.

2.1.4 Thermal effects resulting from the additions

The additions include the slag and metal alloys. Their thermal effects are calculated as

where Qadd is the total thermal effect of additions in W; i denotes a specific addition with mass mi in kg, and ki is its temperature influence coefficient in °C/kg; τi is the time that addition i takes to reach the steel bath temperature in s; and mst and cst are the mass in kg and specific heat in J/(kg K) of molten steel respectively. The value of ki can be obtained by statistical analysis based on actual production data. Table 1 shows the temperature influence coefficients kis of various additions for the LF system considered in this study.

2.1.5 Thermal loss due to stirring-argon injection

The thermal loss due to stirring-argon injection is calculated as

where Qarg is the heat flow carried away by argon in W; and carg and Targ are the specific heat in J/(Nm3 °C) and initial temperature in °C of argon respectively.

2.1.6 The overall mechanistic thermal model

According to the energy conservation law, the following mechanistic thermal model can be obtained by combining all of the above factors

where msl and csl are the mass in kg and specific heat in J/(kg K) of the slag respectively.

2.2 Development of empirical part using indirect training method

Obviously, the above mechanistic thermal model cannot be immediately used for predicting the temperature of molten steel, as there are two unknown functions, namely farc and fsur. In this article, two single-hidden layer feed-forward neural networks (SLFNs) are utilized to respectively approximate farc and fsur (see Fig. 2), for such neural networks can approximate any nonlinear relationships arbitrarily well [36], and they have been successfully applied in modeling some LF metallurgy processes to predict the molten steel temperature [5, 6, 9, 10]. However, for practical LF metallurgy processes, only the values of the inputs to two empirical models, [Hsl, Larc] and [Farg, Dsl-co, Tst], are available, whereas no target values of their outputs ηarc and ηsur are available. Therefore, traditional neural network training methods, which usually train the empirical part by directly minimizing the errors between the outputs of the empirical model(s) and its (their) target outputs, would be difficult to apply. In this study, the two SLFN-based empirical models are trained indirectly by minimizing the errors between the molten steel temperature predicted by the hybrid model and its measured values. The basic description of this indirect training method is given below.

The two SLFN-based empirical models of farc and fsur can be formulated as

where \(\hat{f}_{{{\text{arc}}}}\) and \(\hat{f}_{{{\rm sur}}}\) denote the SLFN-based empirical models used to approximate the unknown functions farc and fsur, respectively, while θarc and θsur are the vectors of weights and bias of the corresponding SLFNs.

In essence, to train \(\hat{f}_{{{\text{arc}}}}\) and \(\hat{f}_{{{\rm sur}}}\) is to determine the optimal values of their parameters θarc and θsur. The indirect method fulfills the training task as follows. Firstly, Eqs. (17) and (18) are, respectively, substituted into Eqs. (1) and (12), so that a hybrid prediction model is obtained

Then, θarc and θsur are regarded as the vectors of parameters to be identified in the hybrid prediction model, and further they are determined by using the proposed IICS algorithm to minimize an objective function which is defined with the measurements of the molten steel temperature, as shown below.

where \(H\) is the number of heats of training data; Mh is the number of temperature samples of molten steel in the hth heat; \(x\) represents the measured value; and \(\hat{x}\) represents the hybrid model prediction. In such way, the training of \(\hat{f}_{{{\text{arc}}}}\) and \(\hat{f}_{{{\rm sur}}}\) can be appropriately fulfilled while avoiding the requirement of their target outputs.

3 Information interaction-enhanced CS

In this section, a brief overview of basic CS (BCS) is first given for self-completeness. Next, the proposed information interaction-enhanced CS (IICS) algorithm is elaborated, followed by the complexity analysis of IICS.

3.1 BCS algorithm

Cuckoo search (CS), introduced by Yang and Deb in 2009 [22], is one of the intelligent optimization algorithms. The core idea behind this algorithm is some cuckoo species’ brood parasitism. Besides, the CS algorithm also incorporates into its framework the mathematical model of the Lévy flight behavior found in some birds and fruit flies. The following are some idealized rules adopted in CS development [22]: (1) the number of available host nests is fixed, and each cuckoo each time lays one egg in a randomly selected nest; (2) the nests containing high-quality eggs will be chosen to partake in the next generation; and (3) the egg laid by a cuckoo can be identified by the host bird with a probability pa. Once it is identified, the host bird will either push the egg out or just discard the nest, and then make a new one. In addition, it is worthy to point out that a nest, an egg or a cuckoo is equivalent to a solution and only minimization problems are considered in the rest of the article without loss of generality.

Based on the rules above, Lévy flight is performed first to generate the new solution \({\varvec{z}}_{i}^{{\text{new}}}\) for cuckoo \(i\) with the following formula:

where \({\varvec{\alpha}}\) is a vector of step size scaling factors that should be related to the scales of the problem under consideration, and it can be in most cases used as [37,38,39]

where \(\alpha_{0}\) is a constant usually set as 0.01 [38, 39] and \({\varvec{z}}_{{{\rm best}}}\) represents the current best solution. In Eq. (21), Lévy(λ) is a random vector drawn from a Lévy distribution, \(\lambda\) is a Lévy flight parameter, and \(\otimes\) represents the entry-wise multiplication.

In essence, Lévy flights offer a random walk with random steps drawn from a Lévy distribution. From the perspective of implementation, there are two procedures to generate random numbers using Lévy flights [38], that is, the selection of a random direction and the generation of steps that obey the selected Lévy distribution. The generation of a direction should be drawn from a uniform distribution, whereas the generation of steps is quite tricky. There are several ways of accomplishing this, but one of the most efficient and yet straightforward ways is to utilize the Mantegna algorithm [40], in which the step size s is given by

where u and v are drawn from the following normal distributions:

where \(\Gamma\) represents the Gamma function, and \(\beta\) is a distribution parameter related with \(\lambda\) in Eq. (21) as \(\lambda = 1 + \beta\) (\(0 < \beta \le 2\), and \(\beta = 1.5\) [38] in CS).

As per the above, Eq. (21) can be rewritten as

where r is a random vector with all its elements generated from the standard normal distribution N (0, 1), and s is calculated using Eq. (23).

After comparing the fitness values between each old and new solution at the same nest and retaining the solutions with lower fitness values, the new solution \({\varvec{z}}_{i}^{{{\rm new}}}\) for cuckoo \(i\) is generated again by imitating the action of alien egg discovery, which can be formulated as:

where zj and zk are two randomly selected solutions; pa is a vector with all its elements being pa; rnd1 is a random number generated from the standard uniform distribution \(U(0,\;1)\); rnd2 is a random vector with all elements generated from \(U(0,\;1)\); and H(•) is a step function, defined as

where Hd = 1 if xd > 0, otherwise Hd = 0. More details on BCS can be found in [38].

3.2 IICS algorithm

As mentioned above, information interaction between individuals is lacking in the search process of BCS, while it is well known that the information interaction between people plays an important role for their team to accomplish an assignment with high efficiency. Accordingly, it is expected that a CS with an information interaction-enhanced mechanism can realize a better search performance than BCS. Based on this consideration, a modified CS called information interaction-enhanced CS (IICS) is proposed in this article. In IICS, cuckoo i is offered an opportunity to get some potentially useful information from a selected information provider zpi (here pi defines which cuckoo should be selected as the information provider for cuckoo \(i\)). To get the zpi for cuckoo \(i\), three candidates are chosen from z = [z1, ..., zi-1, zi+1,..., zNp] randomly, where Np denotes the population size. Among these three candidates, the one with the best (i.e., smallest) fitness value is selected as zpi, and meanwhile its index is assigned to pi.

The main differences between IICS and BCS are the updating formulas of the Lévy flight and alien egg discovery. To be specific, in IICS, the updating formulas used in BCS, namely Eqs. (26) and (27), are replaced with Eqs. (29) and (30), respectively.

where

In the above equations, I is a vector with each element being one; ca is a vector with all elements being ca, which is a coefficient for adjusting the combination of the proposed information interaction-enhanced mechanism and Lévy flights (or the alien egg discovery); rnd3, rnd4, rnd5, and rnd6 are random vectors with all their elements drawn from U (0, 1); and H(•) is the same step function as defined in Eq. (28).

Figure 3 presents the complete implementation of the proposed IICS. Firstly, various parameters are set and a group of Np solutions are randomly initialized (lines 1–2). Next, the fitness function value for each solution is calculated, and the one with the best fitness in the current population is assigned to \({\varvec{z}}_{{{\rm best}}}\)(lines 3–4). Thereafter, these solutions are sequentially evolved with the first (lines 7–9) and second (line 14) proposed search operators, which respectively combine the information interaction-enhanced mechanism with Lévy flights and the alien egg discovery. Following the generation of each new solution, the optimal selection between the old and newly-generated solutions at the same nest will be performed, and the one with the smaller fitness value is retained (lines 10–13 and 15–18). Finally, \({\varvec{z}}_{{{\rm best}}}\) is updated and it is selected as the optimal solution of the search process (lines 19–21).

3.3 Complexity analysis of IICS

To facilitate the analysis, the computation time complexity of BCS is given firstly in this section. For each cuckoo, O (D) number of operations are performed in an iteration in BCS, resulting in O (Np, D) complexity. However, generally CS runs for a number of iterations, so the overall complexity depends on the maximum iteration number (gmax). This procedure gives the overall time complexity of BCS as O (Np. D. gmax). Compared with BCS, our IICS needs to perform additional computations of O (Np. D. gmax) for the proposed information interaction-enhanced mechanism. Meanwhile, the selection of information providers consumes further computational complexity of O (Np. D. gmax). Accordingly, the computation time complexity of IICS is the same as that of BCS, i.e., O (Np. D. gmax). However, IICS significantly outperforms BCS according to the experimental results given in the following sections. These observations suggest that when compared with BCS, our IICS achieves better tradeoff between performance improvement and computation time complexity.

4 Optimizing parameters of the hybrid model using IICS

In this section, the IICS algorithm is applied to solve the parameter optimization problem of empirical models \(\hat{f}_{{{\text{arc}}}}\) and \(\hat{f}_{{{\rm sur}}}\) in the hybrid prediction model described by Eq. (19), hereafter referred as to the PO problem, and thus accomplish their training with the proposed indirect method. In the process of search, nest i is encoded as zi = [θarci, θsuri] to represent a set of candidate values of θarc and θsur, and Eq. (20) is used as the fitness function to evaluate the quality of each nest. Algorithm 2, as illustrated in Fig. 4, describes the procedures for the calculation of the fitness value Ji for nest i.

The implementation of IICS for optimizing the parameters in the empirical part with the indirect training method is given by Algorithm 3, as illustrated in Fig. 5.

5 Experiments

In this section, the IICS algorithm was validated on 16 classical benchmark functions and the 29 CEC 2017 benchmark functions. Then, the actual production data from a 300 t LF at Baoshan Iron & Steel Co. Ltd were used to build the proposed hybrid prediction model using IICS.

5.1 Performance validation of IICS on benchmark functions

5.1.1 Validation on classical benchmark functions

In this subsection, 16 classical benchmark functions [41,42,43] are employed for investigating the performance of IICS. These benchmark functions are listed in Table 2, which includes the mathematical formula, search range, and function value at the global minimum (\(F^{*}\)) of each benchmark function. These benchmark functions fall into two categories: unimodal problems and multimodal problems. Of them, f1, f2, f3, f4, and f5 are unimodal ones containing only one optimum, whereas the rest 11 benchmark functions, namely f6 to f16, are multimodal ones having many local optima, but only one global optimum. In addition, it should be pointed out that the validation is performed with 50 variables, that is, the dimension (D) of each benchmark function is 50.

In order to show the competitiveness of the proposed IICS, the proposed IICS is compared with six recently developed CS variants, i.e., CCS [43], ACS [44], ICS [45], NNCS [46], BHCS [47], and HECS [48], as well as BCS in this subsection. For fair comparison, Np and gmax (the maximum iteration number used to terminate the iteration) of the eight CS-based algorithms are set to be same, that is Np = D and gmax = 5000 as done in the studies [46, 49]. We follow the parameter settings of CCS, ACS, ICS, NNCS, BHCS, and HECS used or recommended in the studies conducted on them. For BCS and IICS, the configuration of the common parameter pa is taken from that originally utilized by Yang and Deb [22, 38]. From these studies, pa = 0.25 is a better choice for most optimization problems. The specific parameter of IICS, i.e., ca, is adjusted via experiment analysis. According to the experiments, it was found that when ca = 0.08 the search performance of IICS can be well balanced on different kinds of optimization problems. The parameter settings of the eight CS-based algorithms are summarized in Table 3.

To reduce random discrepancy, each algorithm is performed independently for 30 runs on each benchmark function, and the mean, best, worst, and standard deviation (SD) of the function error (f(xbest) − F*), where xbest represents the best solution achieved by the algorithm in a run, are calculated and recorded. The test results of the eight CS-based algorithms on the 16 classical benchmark functions are shown in Table 4. The best mean error values among the eight algorithms are highlighted in boldface. On these 16 classical benchmark functions, IICS produced the lowest mean error values for eight of these, while BHCS produces the lowest mean error values in five cases. For two of the benchmark functions, IICS and some of its competitors are seen to produce equal results. For f14, it is observed that CCS produces the better solution. Although BHCS attains the best mean results on 4 out of the 5 unimodal benchmark functions, it sacrifices performance on multimodal ones. On the contrary, IICS is not only very efficient in solving the unimodal problems but also attain very competitive performance on the multimodal ones. To statistically compare IICS with each competitor, the multiple-problem Wilcoxon rank test is conducted at a significance level of 5% based on the mean error value. In Tables 4 and 5, the statistical significance state is indicated with the symbols + , ≈, and –, denoting that the competitor performs significantly worse than, insignificantly different from, and significantly better than the IICS algorithm respectively. Moreover, the Friedman test is also applied to determine the differences between these algorithms and rank them with a significance level of 0.05. Table 4 indicates that IICS provides significantly better results than all of the other seven algorithms. The row headed “Mean Rank” provides the final ranking of different algorithms for all 16 classical benchmark functions. The results show that IICS, NNCS and BHCS are in the first, second and third orders with 1.56, 3.84, and 3.91 mean rank values respectively. In addition, the p value (7.4999e−09) is smaller than the chosen significance level (0.05). This indicates that there is at least one significant difference among the algorithms’ results.

To show the convergence process visually, the convergence curves of each algorithm in term of the mean error values on the classical benchmark functions are presented in Fig. 6. For the ease of comparison, semilogarithmic coordinate is used to plot the convergence curves of each benchmark function, except that of f14. When the convergence curves in Fig. 6 are analyzed, it can be observed that IICS performs well in 14 out of the 16 classical benchmark functions. IICS has significantly higher convergence speeds in f6, f7, f9 to f13, and f16 compared with the other seven CS-based algorithms. Therefore, IICS can be regarded efficient. However, the result patterns slightly differ in some of the 14 functions: for f14, it can be seen that the rapid convergence of IICS is at the expense of being trapped in the local minima, while the results of f1 to f3, f5, and f8 show that IICS and BHCS perform similarly in terms of convergence speed and accuracy. In addition, it can also be observed that IICS is weak in f4 and f15. For these two functions, BHCS has the highest convergence speeds and best search results.

5.1.2 Validation on CEC 2017 benchmark functions

In this subsection, benchmark functions from CEC 2017 are used as the benchmark test set. This test set consists of 29 benchmark functions: two unimodal functions, F1 and F3; seven simple multimodal functions, F4 − F10; ten hybrid functions, F11 − F20; and ten composition functions, F21 − F30. The order of these functions is the same as the original article [50]. The detailed functions are not presented here to save space. These benchmark functions are considered difficult to optimize, as all of them are shifted and rotated, and some of them are hybrid or composition functions. It should be noted that the function F2 named “Shifted and Rotated Sum of Different Power” is not used here due to unstable behavior especially for higher dimensions, as described in [50]. The detailed definitions can be found in the original article.

In addition to BCS, CCS, ACS, ICS, NNCS, BHCS, and HECS, this subsection compares IICS with four other intelligent algorithms, i.e., hybrid firefly and particle swarm optimization (HFPSO) [51], enhanced LSHADE-SPACMA (ELSHADE-SPACMA) [52], hybrid sampling evolution strategy (HSES) [53], and improved sine cosine algorithm with crossover scheme (ISCA) [54], which belong to PSO, DE, covariance matrix adaptation evolution strategy (CMA-ES), and sine cosine algorithm (SCA) communities, respectively. These four algorithms have all proved their good performance on CEC 2017 benchmark functions, and HSES and ELSHADE-SPACMA have won the first and third places in the CEC 2018 competition respectively. Parameter configurations of the new selected algorithms are the same as in the corresponding references, as listed in Table 3. Size of the population (Np) is set equal to the benchmark function dimension (D) in IICS, BCS, CCS, ACS, ICS, NNCS, BHCS, HECS, HFPSO, and ISCA, while the settings of Np in ELSHADE-SPACMA and HSES are consistent with those in the original studies. In accordance with the original article of the competition of CEC 2017 problems [50], each algorithm is repeated 51 runs with the maximum number of function evaluations set to 10,000 × D.

Table 5 lists the obtained results from all involved algorithms on CEC 2017 benchmark functions with D = 50, including the mean, best, worst and standard deviation (SD) values of the function error of every benchmark function obtained by each algorithm, as well as the findings from the multiple-problem Wilcoxon rank test and Friedman test both at a significance level of 0.05. In Table 5, the mean, best, worst and SD values for ELSHADE-SPACMA and HSES are collected from the original articles [52, 53], and values smaller than 10−8 are indicated as 0.00e+00. When the results from IICS compared with those from the other seven CS-based algorithms, it can be seen IICS produces the best mean error values for 17 of the 29 benchmark functions, while all the other seven CS-based competitors do so for just 13 benchmark functions. Examination of Symbol row in Table 5 further indicates that IICS achieves significantly better results than the other seven CS-based competitors, as well as HFPSO and ISCA. However, the ELSHADE-SPACMA and HSES algorithms are seen to return superior results to IICS. The findings from the Friedman test show that ELSHADE-SPACMA, HSES and IICS have the first, second, and third mean rank values of 1.69, 1.95, and 4.02, respectively.

5.2 Experimental verification based on actual production data

In this section, 537 heats of actual production data from a 300 t LF built in Baoshan Iron & Steel Co., Ltd., are employed to verify the ability of the proposed hybrid prediction model, as well as the performance of the IICS algorithm. Among these data, 437 heats are randomly selected for the development of the proposed prediction model, and the remainders are utilized for testing its performance.

The parameter setting for IICS to solve the PO problem is as follows: \(p_{a} = 0.25\), \(c_{a} = 0.08\), \(N_{p} = D\) (where D = [(2 + 1) × hd1 + (hd1 + 1) × 1] + [(3 + 1) × hd2 + (hd2 + 1) × 1], and hd1 and hd2 denote the hidden neuron numbers of the empirical models \(\hat{f}_{{{\text{arc}}}}\) and \(\hat{f}_{{{\rm sur}}}\) respectively), and gmax = 5000. Moreover, it should be pointed out that when IICS is employed to solve the following model parameter optimization problems, 20 independent calculation runs are conducted to reduce random discrepancy. Correspondingly, the mean predicted values are utilized for the following hidden neuron number selection, as well as the model prediction performance exhibition and comparison.

For each of the above two empirical models, the activation functions for the hidden layer and the output layer are the sigmoid function and the linear function, respectively. The optimal numbers of hidden neurons for \(\hat{f}_{{{\text{arc}}}}\) and \(\hat{f}_{{{\rm sur}}}\) are 3 and 5, respectively, determined by trial and error; that is to say, the selected topologies of these two empirical models are 2–3–1 and 3–5–1, respectively. After the topologies of \(\hat{f}_{{{\text{arc}}}}\) and \(\hat{f}_{{{\rm sur}}}\) are selected, all the 437 heats of modeling data are utilized for determining the model parameters with the methodology depicted in Sect. 4, to obtain the overall hybrid temperature prediction model of molten steel.

Then, the 100 heats of testing data are utilized to evaluate the performance of the proposed hybrid prediction model. Figure 7 shows the final molten steel temperature predicted by the developed hybrid model. Figure 7 shows that this model can predict the temperature with high accuracy. Out of these prediction results, the absolute error in 91% of the cases is lower than 5 °C (desirable value), and in 95% of the cases, it is lower than 7 °C (tolerable value), and only in 2% of the cases is absolute error higher than 10 °C. This demonstrates the effectiveness of the hybrid prediction model, with the proposed indirect training method for its empirical part.

To demonstrate the excellent prediction ability of the proposed hybrid model, this article also develops an empirical prediction model based on the above selected 437 heats of production data. To be fair to the comparison, this empirical model is also established utilizing a SLFN and its parameters (namely the network’s weights and thresholds) are determined by IICS. The input layer of this SLFN-based empirical prediction model has eight neurons. They are the initial molten steel temperature, total power consumption, ladle state, heat effect of additions, total argon consumption, weight of molten steel, refining time, and energy change of cooling water in the water-cooled cover. The hidden layer has 13 neurons (determined by trial and error), and the output layer has one neuron (the final molten steel temperature). Figure 8 shows the results predicted by the empirical model. For ease of comparison, the prediction errors (PE) of the proposed hybrid model and empirical model, as well as the differences between the prediction errors (D_PE) of these models are presented in Fig. 9a, b respectively. Herein, the differences in more details are the results obtained by subtracting the absolute prediction errors of the empirical model from that of the hybrid model. Thus, obviously the D_PE value can indicate whether the prediction performance of the hybrid model is better than (i.e., D_PE < 0), ties (i.e., D_PE = 0), or worse than (i.e., D_PE > 0) that of the empirical model on the corresponding heat. In addition, four performance evaluation indices are used for quantitative comparison. They are the root mean square error (RMSE), mean absolute error (MAE), mean relative error (MRE), and accuracy rate (AR) which is defined as

where Na is the number of heats with absolute prediction errors not higher than 5 °C, and Nt is the number of total testing heats. The calculation results with respect to these four indices for the above two models are listed in Table 6.

From Figs. 7–9, it can be observed that both the hybrid model and the empirical one could predict the molten steel temperature with certain accuracy, while the prediction values given by the former are much closer to the measured values than those given by the latter. Furthermore, as can be observed from the data in Table 6, the proposed hybrid model predicts significantly better than the empirical one. Compared with the empirical model, the RMSE, MAE, and MRE of the proposed hybrid model are respectively lower by 33.11%, 30.81%, and 30.77%; while the AR of the proposed hybrid model exceeds 90%, an 18.18% improvement over the empirical one. These demonstrate the excellent prediction performance of the proposed hybrid model in a practical application. From these observations and comparisons above, it can be concluded that the proposed hybrid model is a promising predictor for the molten steel temperature.

Moreover, to confront the search capability of IICS with some other widely used intelligent algorithms in model parameter optimization, GA [12], PSO [13], DE [14], ACO [15], and BCS are employed to solve the same PO problem with the topologies of \(\hat{f}_{{{\text{arc}}}}\) and \(\hat{f}_{{{\rm sur}}}\) being 2–3–1 and 3–5–1 respectively, and the 437 heats of modeling data. In addition, it is interesting to investigate the performance of the two winners of the CEC 2018 competition, i.e., HSES and ELSHADE-SPACMA, on this PO problem. The parameters of these four new selected algorithms are set according to the respective studies. Specifically, in GA the BLX-α Crossover is used, and the crossover probability pc, mutation probability pm, and parameter α for BLX are set to 0.85, 0.02, and 2.0 respectively. In PSO the inertia weight ω, cognitive acceleration coefficient c1, and social acceleration coefficient c2 are taken as 0.5, 2.0, and 1.0, respectively. In DE the scale factor F and crossover probability Pxover are both set to 0.5, and the mutation operator is DE/best/1. In ACO the selection parameter q0, two weighting parameters α and β, and two updating parameters Q and γ are respectively set to 0.85, 1, 4, 0.1, and 0.7. The parameters of IICS, BCS, ELSHADE-SPACMA, and HSES are in common with those used in Sect. 5.1. The size of population Np is set to be the number of model parameters to be estimated (i.e., D = 39) for IICS, GA, PSO, DE, ACO, and BCS, while the settings of Np for ELSHADE-SPACMA and HSES are consistent with the original studies. To be fair, the maximum number of fitness function evaluations in all 20 runs is equal to 10,000 × D.

Table 7 gives the results of the eight algorithms on the PO problem in 20 independent runs, and the best results are shown in boldface for clarity. The columns headed ‘Mean’, ‘Min’, ‘Max’, and ‘SD’ show the mean, minimum, maximum, and standard deviation values of the fitness function defined by Eq. (20) for each algorithm. As can be seen from Table 7, in terms of the mean fitness function value both BCS and IICS give better results than the other four widely-used model parameter optimization algorithms, revealing that CS is relatively more suitable for solving the PO problem involved in this study. It can also be seen that IICS performs better than ELSHADE-SPACMA and HSES, which indicates that IICS is an efficient algorithm for solving the PO problem. Furthermore, it can be observed that IICS produces much better optimization results than BCS. Figure 10 illustrates the convergence progress of IICS and BCS on the PO problem. It can be found from Fig. 10 that IICS has a better search accuracy and a higher convergence speed, which demonstrates again that the proposed IICS algorithm has greatly enhanced the performance of BCS.

6 Conclusions

A hybrid model for the prediction of molten steel temperature in LF is proposed. In the proposed hybrid prediction model, two SLFN-based empirical models are incorporated within the structure of a mechanistic thermal model, to represent the unknown functions in the mechanistic thermal model. The primary difference between the proposed hybrid prediction model and existing ones is that its empirical part is not trained in the traditional direct way since the target outputs of the two empirical models are unavailable in advance. In the proposed approach, the empirical part is trained indirectly with the readily available temperature measurements of molten steel but not the barely accessible target outputs of this part, which means the hybrid prediction model with its empirical part trained by the proposed indirect method has more extensive application range when compared to existing ones. Application results on the production data from a 300 t LF at Baoshan Iron & Steel Co., Ltd, show the effectiveness and superiority of the proposed hybrid prediction model.

Another main innovation of this article is the development of the information interaction-enhanced CS (IICS), which is used to optimize the parameters in the empirical part so as to complete the development of the proposed hybrid prediction model. One of the problems with BCS and many of its variants is that the information interaction among cuckoos is lacking in the search process, which would decrease their search performance considerably. In order to overcome this problem, an information interaction-enhanced mechanism is proposed and employed in IICS. The optimization results on the model parameter estimation problem and two benchmark sets (16 classical benchmark functions and 29 CEC 2017 benchmark functions) indicate that IICS has distinct advantages over its competitive algorithms (expect ELSHADE-SPACMA and HSES) on these optimization problems. When compared with the winners of the CEC 2018 competition, i.e., ELSHADE-SPACMA and HSES, the performance of IICS is found to be inferior to the two top algorithms on the CEC 2017 benchmark set, but it produces better results on the parameter optimization problem involved in this study. Despite its promising performance, the proposed IICS still has limitations. First of all, compared with BCS, one more parameter (i.e., ca) is used by the algorithm to perform the proposed information interaction-enhanced mechanism. Consequently, the parameter tuning process used to achieve a reasonably good performance of IICS can be time consuming. As for the two common parameters of IICS and BCS (i.e., α and pa), our current study sets them directly according to the recommendation of Yang and Deb [22, 38]. There may be better value combinations of the three parameters. But their tuning process will no doubt become much more time consuming, and it might also require retuning when the algorithm is applied to solve different optimization problems.

Based on the current study, several future work directions can be pursued. Firstly, a parameter self-learning strategy could be constructed for the proposed IICS algorithm so as to tune the three involved parameters (i.e., α, pa, and ca) adaptively. Secondly, there is still room for improvement in the selection strategy of information providers in IICS. The selection of information providers in this article is a kind of blindness; therefore, a more effective selection strategy is worthy to research. Finally, the proposed indirect hybrid modeling method could be also applied to other LF refining processes or other similar complex industrial processes.

References

Çamdali Ü, Tunç M (2006) Steady state heat transfer of ladle furnace during steel production process. J Iron Steel Res Int 13:18–20

Volkova O, Janke D (2003) Modelling of temperature distribution in refractory ladle lining for steelmaking. ISIJ Int 43:1185–1190

Wu YJ, Jiang ZH, Jiang MF, Gong W, Zhan DP (2002) Temperature prediction model of molten steel in LF. J Iron Steel Res 14:9–12 ((in Chinese))

Nath NK, Mandal K, Singh AK, Basu B, Bhanu C, Kumar S, Ghosh A (2006) Ladle furnace on-line reckoner for prediction and control of steel temperature and composition. Ironmak Steelmak 33:140–150

Tian HX, Mao ZZ, Wang AN (2009) A new incremental learning modeling method based on multiple models for temperature prediction of molten steel in LF. ISIJ Int 49:58–63

Tian HX, Mao ZZ (2010) An ensemble ELM based on modified AdaBoost. RT algorithm for predicting the temperature of molten steel in ladle furnace. IEEE Trans Autom Sci Eng 7:73–80

Wang XJ, Yuan P, Mao ZZ, You MS (2016) Molten steel temperature prediction model based on bootstrap feature subsets ensemble regression trees. Knowl-Based Syst 101:48–59

Wang X-J, Wang X-Y, Zhang Q, Mao Z-Z (2018) The soft sensor of the molten steel temperature using the modified maximum entropy based pruned bootstrap feature subsets ensemble method. Chem Eng Sci 189:401–412

Tian HX, Mao ZZ, Wang Y (2008) Hybrid modeling of molten steel temperature prediction in LF. ISIJ Int 48:58–62

Lü W, Mao ZZ, Yuan P (2012) Ladle furnace liquid steel temperature prediction model based on optimally pruned bagging. J Iron Steel Res Int 19:21–28

Fu GQ, Liu Q, Wang Z, Chang J, Wang B, Xie FM, Lu XC, Ju QP (2013) Grey box model for predicting the LF end-point temperature of molten steel. J Univ Sci Technol B 35:948–954 ((in Chinese))

Chisari C, Bedon C, Amadio C (2015) Dynamic and static identification of base-isolated bridges using genetic algorithms. Eng Struct 102:80–92

Rahman MA, Anwar S, Izadian A (2016) Electrochemical model parameter identification of a lithium-ion battery using particle swarm optimization method. J Power Sources 307:86–97

Quaranta G, Marano GC, Greco R, Monti G (2014) Parametric identification of seismic isolators using differential evolution and particle swarm optimization. Appl Soft Comput 22:458–464

Bououden S, Chadli M, Karimi HR (2015) An ant colony optimization-based fuzzy predictive control approach for nonlinear processes. Inform Sci 299:143–158

Kang F, Li JJ, Dai JH (2019) Prediction of long-term temperature effect in structural health monitoring of concrete dams using support vector machines with Jaya optimizer and salp swarm algorithms. Adv Eng Softw 131:60–76

Kang F, Li JJ, Ma ZY (2011) Rosenbrock artificial bee colony algorithm for accurate global optimization of numerical functions. Inform Sci 181:3508–3531

Abd Elazim SM, Ali ES (2016) Optimal power system stabilizers design via cuckoo search algorithm. Int J Electron Power 75:99–107

Schwaab M, Biscaia EC Jr, Monteiro JL, Pinto JC (2008) Nonlinear parameter estimation through particle swarm optimization. Chem Eng Sci 63:1542–1552

Lee KH, Kim KW (2015) Performance comparison of particle swarm optimization and genetic algorithm for inverse surface radiation problem. Int J Heat Mass Transf 88:330–337

Xu W, Zhang LB, Gu XS (2011) Soft sensor for ammonia concentration at the ammonia converter outlet based on an improved particle swarm optimization and BP neural network. Chem Eng Res Des 89:2102–2109

Yang XS, Deb S (2009) Cuckoo search via Lévy flights. In: 2009 World congress on nature & biologically inspired computing (NaBIC 2009). IEEE, Coimbatore, India, pp 210–214

Shehab M, Khader AT, Al-Betar MA (2017) A survey on applications and variants of the cuckoo search algorithm. Appl Soft Comput 61:1041–1059

Zhu XH, Wang N (2018) Splicing process inspired cuckoo search algorithm based ENNs for modeling FCCU reactor-regenerator system. Chem Eng J 354:1018–1031

Du XJ, Wang JL, Jegatheesan V, Shi GH (2018) Parameter estimation of activated sludge process based on an improved cuckoo search algorithm. Bioresour Technol 249:447–456

Zhao J, Wong PK, Xie ZC, Ma XB, Hua XQ (2019) Design and control of an automotive variable hydraulic damper using cuckoo search optimized pid method. Int J Autom Technol 20:51–63

Meng XJ, Chang JX, Wang XB, Wang YM (2019) Multi-objective hydropower station operation using an improved cuckoo search algorithm. Energy 168:425–439

Mareli M, Twala B (2018) An adaptive cuckoo search algorithm for optimisation. Appl Comput Inform 14:107–115

Yang B, Miao J, Fan ZC, Long J, Liu XH (2018) Modified cuckoo search algorithm for the optimal placement of actuators problem. Appl Soft Comput 67:48–60

Faris H, Sheta A (2016) A comparison between parametric and non-parametric soft computing approaches to model the temperature of a metal cutting tool. Int J Comput Integr Manuf 29:1–12

Zhu XL, Rehman KU, Wang B, Shahzad M (2020) Modern soft-sensing modeling methods for fermentation processes. Sensors 20:1771

Das D, Pal AR, Das AK, Pratihar DK, Roy GG (2020) Nature-inspired optimization algorithm-tuned feed-forward and recurrent neural networks using CFD-based phenomenological model-generated data to model the EBW process. Arab J Sci Eng 45:2779–2797

Civicioglu P, Besdok E (2013) A conceptual comparison of the cuckoo-search, particle swarm optimization, differential evolution and artificial bee colony algorithms. Artif Intell Rev 39:315–346

Bhateja AK, Bhateja A, Chaudhury S, Saxena PK (2015) Cryptanalysis of vigenere cipher using cuckoo search. Appl Soft Comput 26:315–324

Fredman TP, Saxén H (1998) Model for temperature profile estimation in the refractory of a metallurgical ladle. Metall Mater Trans B 29:651–659

Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2:359–366

Ahmed J, Salam Z (2014) A maximum power point tracking (MPPT) for PV system using Cuckoo Search with partial shading capability. Appl Energy 119:118–130

Yang XS (2014) Nature-inspired optimization algorithms. Elsevier, Oxford

Sekhar P, Mohanty S (2016) An enhanced cuckoo search algorithm based contingency constrained economic load dispatch for security enhancement. Int J Elec Power 75:303–310

Mantegna RN (1994) Fast, accurate algorithm for numerical simulation of Lévy stable stochastic processes. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics 49:4677–4683

Yao X, Liu Y, Lin GM (1999) Evolutionary programming made faster. IEEE Trans Evol Comput 3:82–102

Lim WH, Mat Isa NA (2013) Two-layer particle swarm optimization with intelligent division of labor. Eng Appl Artif Intel 26:2327–2348

Huang L, Ding S, Yu S, Wang J, Lu K (2016) Chaos-enhanced cuckoo search optimization algorithms for global optimization. Appl Math Model 40:3860–3875

Naik MK, Panda R (2016) A novel adaptive cuckoo search algorithm for intrinsic discriminant analysis based face recognition. Appl Soft Comput 38:661–675

Valian E, Tavakoli S, Mohanna S, Haghi A (2013) Improved cuckoo search for reliability optimization problems. Comput Ind Eng 64:459–468

Wang LJ, Zhong YW, Yin YL (2016) Nearest neighbour cuckoo search algorithm with probabilistic mutation. Appl Soft Comput 49:498–509

Chen X, Yu KJ (2019) Hybridizing cuckoo search algorithm with biogeography-based optimization for estimating photovoltaic model parameters. Sol Energy 180:192–206

Huang ZY, Gao ZZ, Qi L, Duan H (2019) A heterogeneous evolving cuckoo search algorithm for solving large-scale combined heat and power economic dispatch problems. IEEE Access 7:111287–111301

Noman N, Iba H (2008) Accelerating differential evolution using an adaptive local search. IEEE Trans Evol Comput 12:107–125

Awad NH, Ali MZ, Suganthan PN, Liang JJ, Qu BY (2016) Problem definitions and evaluation criteria for the CEC 2017 special session and competition on single objective real-parameter numerical optimization

Aydilek İB (2018) A hybrid firefly and particle swarm optimization algorithm for computationally expensive numerical problems. Appl Soft Comput 66:232–249

Hadi AA, Wagdy A, Jambi K (2018) Single-objective real-parameter optimization: enhanced LSHADE-SPACMA algorithm

Zhang G, Shi YH (2018) Hybrid sampling evolution strategy for solving single objective bound constrained problems. IEEE C Evol Comput 1–7

Gupta S, Deep K (2019) Improved sine cosine algorithm with crossover scheme for global optimization. Knowl Based Syst 165:374–406

Acknowledgements

This work was supported by the Fundamental Research Funds for the Central Universities (Grant Number N2025032), the Liaoning Provincial Natural Science Foundation (Grant Number 2020-MS-362), and the National Key Research and Development Program of China (Grant Number 2017YFA0700300). The first author would also like to thank China Scholarship Council.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, Q., Fu, Y. & Zhang, J. Molten steel temperature prediction using a hybrid model based on information interaction-enhanced cuckoo search. Neural Comput & Applic 33, 6487–6509 (2021). https://doi.org/10.1007/s00521-020-05413-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-020-05413-5