Abstract

In this paper, by combining the dynamic gain and the self-adaptive neural network, an output feedback fault-tolerant control method was proposed for a class of nonlinear uncertain systems with actuator faults. First, the dynamic gain was introduced and the coordinate transformation of the state variables of the system was performed to design the corresponding state observers. Then, the observer-based output feedback controller was designed through the back-stepping method. The output feedback control method based on the dynamic gain can solve the adaptive fault-tolerant control problem when there are simple nonlinear functions with uncertain parameters in the system. For the more complex uncertain nonlinear functions in the system, in this paper, a single hidden layer neural network was used for compensation and the fault-tolerant control was realized by combining the dynamic gain. Finally, the height and posture control system of the unmanned aerial vehicle with actuator faults was taken as an example to verify the effectiveness of the proposed method.

1 Introduction

Fault-tolerant control of nonlinear systems has long been a concern in the control field. Because nonlinear systems usually have complex structures and many uncertainties, faults are difficult to compensate once they occur. Many results on the fault-tolerant control of nonlinear systems have been obtained, but many problems remain to be solved.

The main problems to be solved in achieving fault-tolerant control of nonlinear systems are the nonlinear functions in the system, uncertain parameters and unknown fault signals. Dynamic feedback is a good method of compensating for the uncertainties in the system [1, 2]. When the nonlinear functions and the uncertainties in the system satisfy certain conditions, dynamic feedback can effectively perform adaptive compensation. Time-varying feedback was introduced for a class of nonlinear systems with time-varying uncertain parameters in [3]. The existence of the time-varying feedback was analyzed, and all state variables of the system were stabilized. However, the time-varying gain did not form a closed loop with the original system and was an unbounded signal. Such a gain is convenient and intuitive in theoretical analysis, but is greatly limited in practical applications.

The time-varying dynamic gain that can form a closed loop with the system has always been a hot topic in adaptive control research. Applying the dynamic gain, an output feedback stabilization method for a class of uncertain nonlinear systems with control functions was designed in [4]. The dynamic equation of the gain was related to the output of the system and the state of the observer, so it could form a closed loop with the system. The stability of the whole closed-loop system was also demonstrated. On this basis, stabilization based on the dynamic gain for systems with stronger nonlinearity and uncertainty was studied in [5, 6], which give different dynamic gain design methods and different stability proofs for the closed-loop system. The paper [7] realized the error tracking of nonlinear systems by using the dynamic gain, so that all variables of the closed-loop system were uniformly bounded and the output signal could track the reference signal with a set accuracy parameter.

When a fault occurs in the system, whether it is an effectiveness loss fault of the actuator or an unknown stuck fault, it can be regarded as an uncertainty of the system and adaptively compensated by the dynamic gain. In [8], the fault-tolerant control of actuators with dead zone in the nonlinear system was considered and the dead-zone faults were compensated by introducing the dynamic gain. The paper [9] introduced a switching mechanism into the dynamic gain fault-tolerant control method, which accelerated the fault compensation. When applying the dynamic gain to solve the nonlinear control problem, it is usually necessary to assume that the nonlinear function satisfies certain conditions, such as Lipschitz or Lipschitz-like properties. The dynamic gain cannot be used when the nonlinear function is too complex to satisfy such conditions.

Neural networks are widely used in the adaptive control of various nonlinear systems [10]. Compensation of the nonlinear functions is achieved through adaptive weights, which in turn solves the control problem [11]. In [12], comprehensive faults in nonlinear systems were considered and a fault-tolerant control method using the radial basis function (RBF) neural network was studied. The designed observer was used only to extract fault information and not for output feedback; the controller itself used state feedback.

The paper [13] compensated the nonlinear functions for a class of nonlinear interconnected systems by the RBF neural network, and a state feedback fault-tolerant controller was designed by using the back-stepping method and exploiting the interconnected characteristics of the system. Since state feedback requires all state variables of the system to be measurable, this method is difficult to apply in practice. The paper [14] used the RBF neural network to design an output feedback fault-tolerant controller for a class of nonlinear systems in which both the effectiveness loss fault of the actuator and the unknown stuck fault were considered. However, in [14], the effectiveness loss fault was not considered when designing the state observer, and the effectiveness of the proposed method was demonstrated only by simulation results, without a theoretical basis. In [15], an output feedback controller was designed for the fault-free system, and the compensation effect of the controller on faults was demonstrated in the simulation results. By contrast, the fault was considered in designing the output feedback controller in [16]. However, the theoretical stability proof required harsh assumptions, so this method is difficult to generalize. When there is a fault in the system, especially an effectiveness loss fault, output feedback based on the neural network introduces new nonlinear terms in the design process that are difficult to handle. Therefore, many studies first design the controller for the fault-free case and then demonstrate the compensation of faults only through simulation results, which lacks a theoretical basis.

Most of the neural networks used in the literature either had no hidden layer or had hidden layers whose weights were set manually rather than updated adaptively. Networks with hidden layers were rarely used in the control of nonlinear systems. A single hidden layer neural network was combined with a filter to design an output feedback stabilization method for a class of nonlinear systems in [17]. This method was difficult to generalize since it placed harsh requirements on the system. The paper [18] adopted the single hidden layer neural network to compensate the nonlinear functions in a quad-rotor UAV system and designed an output feedback trajectory tracking controller, which was useful for the tracking control of the UAV. Although the single hidden layer network was adopted in [18], the weights of the hidden layer were set to constants rather than updated adaptively. When a network with hidden layers is applied in the system and its weights are adaptively updated, the system becomes more complex, more parameters that are difficult to handle emerge, and stability is affected as well.

At the current stage, various methods have emerged for the fault-tolerant control of nonlinear systems, each with its own advantages and disadvantages. How to combine these methods to solve the fault compensation problem for systems with stronger nonlinearity and uncertainty remains an open problem.

In this paper, the dynamic gain was combined with the adaptive neural network. The simple nonlinearity, uncertainty and faults were adaptively compensated through the dynamic gain. For the more complex nonlinear functions, the dynamic single hidden layer neural network was used for approximation and the compensation was completed by combining the dynamic gain. The way of combining the dynamic gain with the neural network can make the adaptive single hidden layer network be successfully applied in the fault-tolerant control of the nonlinear system.

2 Problem formulation

Consider a class of nonlinear systems described by

where \(\xi_{i} \in R^{n}\), \(i = 1,\ldots,N\) are the system state vectors; \(u^{F} (t) \in R^{n}\) is the input vector under actuator faults; \(A_{i} (t) \in R^{n \times n}\), \(i = 1,\ldots,N - 1\) are unknown time-varying matrices; \(B(t) \in R^{n \times n}\) is a known time-varying matrix which is continuously differentiable in \(t\); \(\Delta_{i} \left( {\xi_{1} ,\ldots,\xi_{i} } \right) \in R^{n}\), \(i = 1,\ldots,N\) are uncertain nonlinear functions; \(f\left( {\xi_{1} ,\ldots,\xi_{N} } \right) \in R^{n}\) is a more complex uncertain nonlinear function, which does not necessarily satisfy the Lipschitz property and may have a complex and unknown structure; of all the state variables, only \(\xi_{1}\) is measurable.

The faults considered in this article are: \(u^{F} (t) = \rho (t)u(t) + \psi (t)\), where \(\rho (t) = {\text{diag}}\left\{ {\rho_{1} (t),\ldots,\rho_{n} (t)} \right\}\) are the effectiveness factors of the actuators which represent the effectiveness loss of actuators, such as the rotor damage of the UAV; \(\psi (t) \in R^{n}\) is the unknown stuck fault, such as the unknown intense disturbance of the UAV system. Before starting to study the fault-tolerant control method of system (1), the following assumptions are necessary.
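The fault model \(u^{F} (t) = \rho (t)u(t) + \psi (t)\) can be illustrated numerically at a single time instant. The following is a minimal sketch; the function name `faulty_input` and all numerical values are hypothetical and are not taken from the simulation in this paper.

```python
import numpy as np

def faulty_input(u, rho, psi):
    """Return u^F = diag(rho) @ u + psi for one time instant."""
    u = np.asarray(u, dtype=float)
    rho = np.asarray(rho, dtype=float)
    psi = np.asarray(psi, dtype=float)
    return np.diag(rho) @ u + psi

# Hypothetical example with four actuators: the second actuator keeps
# only 60% of its effectiveness and the fourth has a stuck offset of 0.5.
u = np.array([1.0, 1.0, 1.0, 1.0])
rho = np.array([1.0, 0.6, 1.0, 1.0])
psi = np.array([0.0, 0.0, 0.0, 0.5])
u_f = faulty_input(u, rho, psi)
```

With \(\rho = I\) and \(\psi = 0\) the function returns the commanded input unchanged, which corresponds to the fault-free case.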

Assumption 1

There exist known positive constants \(\overline{A}\), \(\underline{A}\), \(\overline{B}\) and \(\underline{B}\), such that

Assumption 2

There exists known matrix \(\hat{A}(t)\), such that

where \(\lambda_{0}\) is a known constant.

Assumption 3

There exist unknown positive constants \(\theta_{i1}\) and \(\theta_{i2}\), such that

Assumption 4

There exist unknown positive constants \(\underline{\psi }\) and \(\overline{\psi }\), such that

Assumption 5

There exists known positive constant \(\underline{\rho }\), such that

Remark 1

The system state variables \(\xi_{i} \in R^{n}\) studied in this paper are multidimensional, and the system contains more complex nonlinear functions \(f\left( {\xi_{1} ,\ldots,\xi_{N} } \right)\). This is true of the dynamic models of various rigid bodies in reality, such as the rotor unmanned aerial vehicle (UAV). The fault compensation for such systems cannot be realized by the dynamic gain alone. The assumptions in this paper concern only the Lipschitz-like nature of the simple nonlinear functions and the boundedness of the uncertain parameters and faults, so they are general.

3 Dynamic gain-based fault-tolerant control design

3.1 Observer design

First, we define \(\overline{A}_{i} (t) = A_{i} (t) \cdots A_{N - 1} (t)B(t)\rho (t)\hat{A}(t)\), \(i = 1,\ldots,N\), and introduce the transformation \(\eta_{i} = \left( {A_{i} (t) \cdots A_{N - 1} (t)B(t)\rho (t)\hat{A}(t)} \right)^{ - 1} \left( {\xi_{i} - \xi_{ir} } \right)\), \(i = 1,\ldots,N\), where \(\xi_{ir}\), \(i = 1,\ldots,N\) are the reference signals, which are known and bounded.
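The change of coordinates \(\eta_{i} = \overline{A}_{i}^{ - 1} (t)\left( {\xi_{i} - \xi_{ir} } \right)\) can be illustrated at a fixed time instant as follows. This is only a sketch for intuition: in the setting of this paper \(A_{i} (t)\) and \(\rho (t)\) are unknown, so the transformation is an analysis device and not something the controller computes. The function name `eta` and the matrices below are hypothetical.

```python
import numpy as np
from functools import reduce

def eta(i, A_list, B, rho, A_hat, xi_i, xi_ir):
    """eta_i = (A_i ... A_{N-1} B rho A_hat)^{-1} (xi_i - xi_ir), 1-based i.

    A_list holds A_1, ..., A_{N-1}; for i = N the product is B rho A_hat.
    """
    factors = list(A_list[i - 1:]) + [B, rho, A_hat]
    A_bar = reduce(np.matmul, factors)
    # Solve A_bar @ eta = xi_i - xi_ir instead of forming the inverse.
    return np.linalg.solve(A_bar, xi_i - xi_ir)

# Hypothetical data with N = 2 and n = 2.
A_list = [2.0 * np.eye(2)]
B = np.eye(2)
rho = np.diag([0.5, 1.0])
A_hat = np.eye(2)
xi = np.array([1.0, 2.0])
xi_r = np.zeros(2)

eta_1 = eta(1, A_list, B, rho, A_hat, xi, xi_r)
eta_2 = eta(2, A_list, B, rho, A_hat, xi, xi_r)
```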

System (1) can be converted into,

where

According to Assumptions 1, 2, and 3, we can obtain that \(\left\| {\Delta_{i}^{\prime} } \right\| \le \theta_{i1}^{\prime} + \theta_{i2}^{\prime} \sum\nolimits_{j = 1}^{i} {\left\| {\eta_{j} } \right\|}\), \(\underline{{\psi^{\prime} }} \le \left\| {\psi^{\prime} (t)} \right\| \le \overline{{\psi^{\prime} }}\), where \(\theta_{i1}^{\prime}\), \(\theta_{i2}^{\prime}\), \(i = 1,\ldots,N\), \(\underline{{\psi^{\prime} }}\) and \(\overline{{\psi^{\prime} }}\) are unknown constants. Then, we introduce the dynamic gain \(L(t)\) and the following transformation,

where \(b\) is a parameter to be designed.
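The scaling by the dynamic gain can be sketched numerically. The sketch below assumes that the transformation has the form \(x_{i} = \eta_{i} /L^{b + i - 1}\), which is consistent with the scaling \(\hat{x}_{1} = \hat{\eta }_{1} /L^{b}\), \(\hat{x}_{2} = \hat{\eta }_{2} /L^{b + 1}\) used for the observer states in the simulation section. The function name `scale_states` and the numbers are hypothetical.

```python
import numpy as np

def scale_states(eta_list, L, b):
    """Scale eta_1, ..., eta_N by L^b, L^(b+1), ..., L^(b+N-1).

    Assumed form of the transformation: x_i = eta_i / L^(b + i - 1).
    """
    return [np.asarray(e, dtype=float) / L ** (b + k)
            for k, e in enumerate(eta_list)]

# Hypothetical values: N = 2, L = 2, b = 1.
x = scale_states([[2.0, 4.0], [4.0, 8.0]], L=2.0, b=1.0)
```

Under this assumed form, higher-order states are divided by higher powers of \(L\), so a growing gain shrinks them more strongly in the scaled coordinates.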

By (2) and (3), it can be obtained that

Then, we design the following observer for system (2)

By the similar transformation,

observer (5) can be converted into

Defining \(e_{i} = x_{i} - \hat{x}_{i}\), \(i = 1,\ldots,N\), we obtain the following error dynamics

and (8) can be expressed as

where \(\tilde{I} = \left( {0_{n} ,\ldots,0_{n} ,I_{n} } \right)^{\rm T}\), \(e = (e_{1}^{\rm T} ,\ldots,e_{N}^{\rm T} )^{\rm T}\), \(\tilde{\Delta } = \left( {\frac{1}{{L^{b} }}\Delta_{1}^{\prime{\rm T}} , \ldots ,\frac{1}{{L^{b + N - 1} }}\Delta_{N}^{\prime{\rm T}} } \right)^{\rm T}\), and \(a = (a_{1} ,\ldots,a_{N} )^{\rm T}\); \(\otimes\) is the Kronecker product.

According to the paper [19], there is the following Lemma 1, by which the appropriate observer parameters \(a_{i}\), \(i = 1,\ldots,N\) can be selected.

Lemma 1

[19] There exist \(a_{i}\), \(i = 1,\ldots,N\), which make \(A\) a Hurwitz matrix, and there exist positive constants \(\mu\), \(\mu_{1}\), \(\mu_{2}\), and a positive-definite matrix \(P \in R^{N \times N}\) such that

where \(D = {\text{diag}}\left\{ {b, \ldots ,b + N - 1} \right\}\).
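The Hurwitz condition on the observer parameters \(a_{i}\) can be checked numerically. The sketch below assumes that \(A\) has the usual observer companion structure (first column \(- a_{i}\), ones on the superdiagonal); this structure is an assumption, since the matrix is not written out in the text here. The sketch checks only the eigenvalue condition, not the additional inequalities of Lemma 1 involving \(P\) and \(D\). The helper names are hypothetical.

```python
import numpy as np

def observer_matrix(a):
    """Assumed structure: first column -a_i, ones on the superdiagonal."""
    N = len(a)
    A = np.zeros((N, N))
    A[:, 0] = -np.asarray(a, dtype=float)
    A[:-1, 1:] += np.eye(N - 1)
    return A

def is_hurwitz(A):
    """True if every eigenvalue of A has a negative real part."""
    return bool(np.all(np.linalg.eigvals(A).real < 0))

# Hypothetical choice: place the eigenvalues at -1, -2 and -3.
# np.poly returns the monic polynomial s^3 + a_1 s^2 + a_2 s + a_3.
a = np.poly([-1.0, -2.0, -3.0])[1:]
A = observer_matrix(a)
```

Choosing the \(a_{i}\) as the coefficients of a polynomial with prescribed stable roots is one simple way to satisfy the Hurwitz requirement under the assumed structure.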

After selecting the parameters of the observer, we first construct the following Lyapunov function:

By taking the derivatives of \(U\) with respect to time, we get

In (11), the following inequality can be obtained,

Then, by

we can deduce that

So, we get

3.2 Design of output feedback fault-tolerant control

The design of output feedback fault-tolerant control is realized by back-stepping in this paper. At first, we define

By taking the derivatives of \(z_{1}\) with respect to time, we get

where \(A_{1} (t)\xi_{2} = A_{1} (t)\left( {\xi_{2} - \xi_{2r} } \right) + A_{1} (t)\xi_{2r} = A_{1} (t)\overline{A}_{2} (t)\eta_{2} + A_{1} (t)\xi_{2r}\), and \(\overline{A}_{1} = A_{1} (t) \cdots A_{N - 1} (t)B(t)\rho (t)\). So, (17) can be written as

further, (18) can be written as

Then, the following Lyapunov function can be constructed for \(z_{1}\),

Taking the derivatives of \(V_{0}\) with respect to time, we get

where \(\delta_{11}\) is a positive constant to be designed; \(\delta_{11}^{\prime} = \delta_{11}^{\prime} \left( {\delta_{11}^{{}} } \right)\) is a positive constant dependent on \(\delta_{11}\), which can be compensated by the control designed in the next steps; \(\alpha_{1}\) is the virtual control to be designed.

Next, we define \(V_{1} = U + V_{0}\). Taking the derivative of \(V_{1}\) with respect to time, we can deduce that

Then, we select \(\delta_{11} < \frac{\mu }{2}\), and design the virtual control \(\alpha_{1} = - q_{1} z_{1}\), where \(q_{1} > \frac{{2\delta_{11}^{\prime} }}{{\lambda_{0} }}\). By defining \(c_{1} = \frac{\mu }{2} - \delta_{11}\) and \(d_{11} = \frac{ 1}{ 2}q_{1} \lambda_{0} - \delta_{11}^{\prime}\), it can be deduced that

where \(d_{11}\) is a known positive constant; \(\overline{\theta }_{11}\) and \(\overline{\theta }_{12}\) are unknown positive constants.
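The parameter selection of the first step can be checked with a small calculation. The following sketch evaluates \(c_{1} = \frac{\mu }{2} - \delta_{11}\) and \(d_{11} = \frac{1}{2}q_{1} \lambda_{0} - \delta_{11}^{\prime}\) and verifies the two selection conditions \(\delta_{11} < \frac{\mu }{2}\) and \(q_{1} > \frac{{2\delta_{11}^{\prime} }}{{\lambda_{0} }}\). It assumes \(\lambda_{0} > 0\); the function name and the numerical values are hypothetical.

```python
def first_step_constants(mu, delta11, delta11_prime, lambda0, q1):
    """Return (c1, d11) after checking the selection conditions.

    Assumes lambda0 > 0 so that the bound on q1 is meaningful.
    """
    if not delta11 < mu / 2:
        raise ValueError("need delta11 < mu / 2")
    if not q1 > 2 * delta11_prime / lambda0:
        raise ValueError("need q1 > 2 * delta11_prime / lambda0")
    c1 = mu / 2 - delta11
    d11 = 0.5 * q1 * lambda0 - delta11_prime
    return c1, d11

# Hypothetical values, chosen only to satisfy both conditions.
c1, d11 = first_step_constants(mu=1.0, delta11=0.2,
                               delta11_prime=0.5, lambda0=0.5, q1=3.0)
```

Both conditions are exactly what makes \(c_{1}\) and \(d_{11}\) positive, which is why they appear as design constraints.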

Following the above steps, assume that step \(k\), \(k \ge 1\) has been completed, that is, the virtual controls \(\alpha_{j} = - q_{j} z_{j}\), \(j = 1,\ldots,k\) have been designed such that (24) holds

where \(c_{k}\), \(c_{Lk}\), \(d_{kj}\), \(d_{Lkj}\), \(j = 1,\ldots,k\) and \(\overline{\sigma }_{k - 1}\) are known positive constants; \(\overline{\sigma }_{0} = 1\); \(\overline{\theta }_{k1}\) and \(\overline{\theta }_{k2}\) are unknown positive constants.

Next, for step \(k + 1\), we define \(z_{k + 1} = \hat{x}_{k + 1} - \alpha_{k}\) and construct the Lyapunov function as \(V_{k + 1} = \sigma_{k} V_{k} + \frac{1}{2}z_{k + 1}^{\rm T} z_{k + 1}\), where \(\sigma_{k}\) is a positive constant to be designed. By taking the derivative of \(z_{k + 1}\) with respect to time, we can obtain that

so, according to (25), we get

By direct derivation, the following three inequalities are established in (26):

and

where \(\delta_{k + 1,1,j} ,\; j = 1,\ldots,k,\delta_{k + 1,2}\) are positive constants to be designed; \(\delta_{k + 1,1}^{\prime} = \delta_{k + 1,1}^{\prime} \left( {\delta_{k + 1,1,j} ,\delta_{k + 1,2} } \right)\) is a positive constant dependent on \(\delta_{k + 1,1,j} ,\delta_{k + 1,2} ,j = 1,\ldots,k\); \(\lambda_{k + 1}\) is a positive constant to be designed; \(\lambda_{k + 1,j}^{\prime} = \lambda_{k + 1,j}^{\prime} \left( {\lambda_{k + 1} } \right),j = 1,\ldots,k\) are positive constants dependent on \(\lambda_{k + 1}\); \(\delta_{k + 1,3}\) is a positive constant to be designed; \(\delta_{k + 1,3}^{\prime} = \delta_{k + 1,3}^{\prime} \left( {\delta_{k + 1,3} ,\sigma_{k} } \right)\) is a positive constant dependent on \(\delta_{k + 1,3}\) and \(\sigma_{k}\).

Therefore, according to (27), (28), and (29), the following can be obtained

Because

where \(\delta_{\theta ,k + 1,1}\) and \(\delta_{\theta ,k + 1,2}\) are unknown positive constants, we can select \(\lambda_{k + 1} < b + 1\), and \(\sigma_{k} > \hbox{max} \left\{ {\frac{{\lambda_{k + 1,j}^{\prime} }}{{d_{Lkj} }},j = 1,\ldots,k} \right\}\). Then, we select \(\delta_{k + 1,1,j} + \delta_{k + 1,3} < \sigma_{k} d_{kj}\) and \(\delta_{k + 1,2} < \sigma_{k} c_{k}\), and define \(\lambda_{k + 1} = b + 1 - d_{L,k + 1,k + 1}\), \(d_{Lk + 1j} = \sigma_{k} d_{Lkj} - \lambda_{k + 1,j}^{\prime}\), \(d_{k + 1j} = \sigma_{k} d_{kj} - \left( {\delta_{k + 1,1,j} + \delta_{k + 1,3} } \right)\), \(c_{k + 1} = \sigma_{k} c_{k} - \delta_{k + 1,2}\). Finally, the virtual control can be designed as \(\alpha_{k + 1} = - q_{k + 1} z_{k + 1}\), where \(q_{k + 1} = \delta_{k + 1,1}^{\prime} + \delta_{k + 1,3}^{\prime} + d_{k + 1,k + 1}\). After the above design, we have

where \(c_{k + 1}\), \(c_{Lk + 1}\), \(d_{k + 1j}\), \(d_{Lk + 1j}\), \(j = 1,\ldots,k + 1\) and \(\overline{\sigma }_{k} = \overline{\sigma }_{k - 1} \sigma_{k}\) are known positive constants, and \(\overline{\theta }_{k + 11}\) and \(\overline{\theta }_{k + 12}\) are unknown positive constants.

Thus, we design the real control as

such that

Then, we select the initial value of \(L(t)\) as \(L(0) = 1\), and design the following dynamic update rate:

where \(\epsilon > 0\) is a parameter to be designed which indicates the accuracy.

Remark 2

It can be seen from (34) that the update rate of dynamic gain \(L(t)\) depends on the measurable variables \(z_{1}\) and \(\hat{x}_{i}\), \(i = 1,\ldots,N\), and the dynamic gain can form a closed loop with the system. Its role is to change with the measurable state variables to compensate the uncertain parameter nonlinear function and the fault in the system adaptively. Besides, the dynamic gain also enables the output to track the reference signal with certain accuracy. Qualitatively, (34) means that \(L(t)\) will continue to adjust until variables \(z_{1}\) and \(\hat{x}_{i}\), \(i = 1,\ldots,N\) satisfy the tracking conditions. Because the dynamic gain forms a closed loop with the system, we can prove that it is uniformly bounded with all other variables.
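The qualitative behavior described in Remark 2 can be illustrated with a toy simulation. The sketch below does not reproduce the update law (34). It uses a hypothetical dead-zone law \(\dot{L} = \max \left\{ {0,\left( {\left\| {\xi_{1} - \xi_{1r} } \right\|^{2} - \epsilon^{2} /2} \right)/L^{2b} } \right\}\), driven only by the tracking error, which is chosen to respect the lower bound on \(\dot{L}\) used in the proof of Proposition 3. The error signal, step size and function names are assumptions made for illustration.

```python
import numpy as np

def gain_rate(err_norm, L, b, eps):
    """Hypothetical dead-zone update, NOT the paper's law (34)."""
    return max(0.0, (err_norm ** 2 - eps ** 2 / 2.0) / L ** (2 * b))

def simulate_gain(err_norms, dt, b=1.0, eps=0.2, L0=1.0):
    """Forward-Euler integration of the gain, starting from L(0) = L0."""
    L = L0
    history = [L]
    for e in err_norms:
        L += dt * gain_rate(e, L, b, eps)
        history.append(L)
    return np.array(history)

# Assumed tracking error that decays exponentially over 10 s.
t = np.arange(0.0, 10.0, 0.01)
err = 2.0 * np.exp(-t)
L_hist = simulate_gain(err, dt=0.01)
```

In this toy setting the gain is non-decreasing and stops adjusting once the error enters the dead zone, which mirrors the statement that \(L(t)\) keeps adjusting until the tracking conditions are satisfied.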

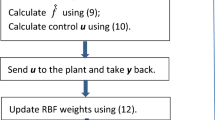

4 Compensation of nonlinear function by dynamic neural network

It can be seen from (33) that \(\frac{{2\overline{\sigma }_{N - 1} }}{{L^{b + N - 1} }}e^{\rm T} \left( {P \otimes I_{n} } \right)\tilde{I}\hat{A}^{ - 1} (t)\left( {f^{\prime} - \hat{{f^{\prime} }}} \right)\) includes the more complex nonlinear function, which the dynamic gain alone cannot handle effectively. The following method combines the dynamic gain with the dynamic neural network to compensate the nonlinear function and realize the fault-tolerant control of the system. The main idea is that, after compensation by the neural network, the residual nonlinear function has a Lipschitz property, and the dynamic gain can effectively compensate nonlinear functions with a Lipschitz property.

First, we assume that the nonlinear function \(f^{\prime} \left( {\xi_{1} ,\ldots,\xi_{N} ,\rho_{1} ,\ldots,\rho_{n} } \right)\) can be expressed as follows

where

\(W_{0}^{\rm T} \in R^{{n \times l_{h} }}\) is the expected weight of input layer of the neural network; \(W_{h}^{\rm T} \in R^{{l_{h} \times \left( {Nn + n} \right)}}\) is the expected weight of hidden layer; \(l_{h}\) is the number of hidden layer nodes; \(\varphi \left( \cdot \right)\) is the activation function; \(\omega \in R^{n}\) is the approximation error, which satisfies \(\left\| \omega \right\| \le \overline{\omega }\).

Select the compensation of \(f^{\prime}\) as

where \(\hat{\zeta } = \left( {\xi_{1}^{\rm T} ,\hat{\eta }_{2}^{\rm T} + \xi_{2r}^{\rm T} \ldots ,\hat{\eta }_{N}^{\rm T} + \xi_{Nr}^{\rm T} ,\hat{\rho }_{1} ,\ldots,\hat{\rho }_{n} } \right)^{\rm T}\); \(\hat{W}_{0}^{\rm T} \in R^{{n \times l_{h} }}\) and \(\hat{W}_{h}^{\rm T} \in R^{{l_{h} \times \left( {Nn + n} \right)}}\) are the adaptive weight of input layer and hidden layer. And we can have the following proposition.

Proposition 1

There exist constants \(C_{a}\), \(\theta_{W}\), \(\theta_{\rho }\), and \(\theta_{\omega }\), such that

where \(\tilde{W}_{0} = W_{0} - \hat{W}_{0}\); \(\tilde{W}_{h} = W_{h} - \hat{W}_{h}\); \(\tilde{\rho } = \rho - \hat{\rho }\); \(\varphi^{\prime}\) is the derivative of \(\varphi\).

Remark 3

Equation (37) in Proposition 1 indicates the upper bound of the nonlinear function after compensating by the neural network. The dynamic gain can compensate the first two terms on the right side of the inequality (37) adaptively, and the latter three items can be compensated by the dynamic update rate of the unknown parameters.
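The single hidden layer compensator \(\hat{{f^{\prime} }} = \hat{W}_{0}^{\rm T} \varphi \left( {\hat{W}_{h}^{\rm T} \hat{\zeta }} \right)\) can be sketched as a plain forward pass. The sketch uses the dimensions \(\hat{W}_{0}^{\rm T} \in R^{{n \times l_{h} }}\) and \(\hat{W}_{h}^{\rm T} \in R^{{l_{h} \times \left( {Nn + n} \right)}}\) and the sigmoid activation selected in the simulation section. The function names and the values \(N = 2\), \(n = 4\), \(l_{h} = 16\) are illustrative assumptions, and the adaptive weight update laws are not reproduced.

```python
import numpy as np

def sigmoid(v):
    """Element-wise sigmoid activation."""
    return 1.0 / (1.0 + np.exp(-v))

def nn_compensation(W0_hat, Wh_hat, zeta_hat):
    """Forward pass f_hat = W0_hat^T sigmoid(Wh_hat^T zeta_hat).

    Shapes: W0_hat (l_h, n), Wh_hat (N*n + n, l_h), zeta_hat (N*n + n,).
    """
    hidden = sigmoid(Wh_hat.T @ zeta_hat)
    return W0_hat.T @ hidden

# Illustrative sizes: N = 2, n = 4, l_h = 16.
N, n, l_h = 2, 4, 16
zeta_hat = np.ones(N * n + n)

# Zero initial weights give zero compensation.
f_zero = nn_compensation(np.zeros((l_h, n)), np.zeros((N * n + n, l_h)), zeta_hat)

# With Wh_hat = 0 every hidden node outputs sigmoid(0) = 0.5.
f_half = nn_compensation(np.ones((l_h, n)), np.zeros((N * n + n, l_h)), zeta_hat)
```

Starting both weight matrices at zero therefore leaves the dynamic gain to act alone until the weights adapt.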

By Proposition 1, we can select the following dynamic update rates for the weights of the neural network and the adaptive update rate for the effectiveness factors.

To describe the stability of the system, we construct the following Lyapunov function.

where \(\tilde{V} = \frac{1}{2}tr\left\{ {\tilde{W}_{0}^{\rm T} \varGamma_{0}^{ - 1} \tilde{W}_{0} } \right\} + \frac{1}{2}tr\left\{ {\tilde{W}_{h}^{\rm T} \varGamma_{h}^{ - 1} \tilde{W}_{h} } \right\} + \frac{1}{2}\gamma^{ - 1} \tilde{\rho }^{\rm T} \tilde{\rho }\); \(tr{\text{\{ }} \cdot {\text{\} }}\) means the trace of a matrix.
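The term \(\tilde{V}\) can be evaluated directly from its definition, which may help when monitoring the estimation errors in a simulation where the expected weights are known. The sketch below assumes that \(\varGamma_{0}\) and \(\varGamma_{h}\) are symmetric positive-definite matrices of compatible size and that \(\gamma\) is a positive scalar; the function name and the numbers are hypothetical.

```python
import numpy as np

def v_tilde(W0_err, Wh_err, rho_err, Gamma0, Gammah, gamma):
    """0.5 tr(W0~^T G0^-1 W0~) + 0.5 tr(Wh~^T Gh^-1 Wh~) + 0.5 rho~^T rho~ / gamma.

    Assumes Gamma0, Gammah are positive definite and gamma > 0.
    """
    term0 = 0.5 * np.trace(W0_err.T @ np.linalg.solve(Gamma0, W0_err))
    termh = 0.5 * np.trace(Wh_err.T @ np.linalg.solve(Gammah, Wh_err))
    termr = 0.5 * float(rho_err @ rho_err) / gamma
    return term0 + termh + termr

# Hypothetical small example.
value = v_tilde(np.ones((2, 3)), np.zeros((4, 2)), np.array([1.0, 1.0]),
                np.eye(2), np.eye(4), gamma=2.0)
```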

By taking the derivatives of \(T\) with respect to time and according to (39)–(42) and Proposition 1, we can obtain that

where \(\tilde{C}\) and \(D\) are known positive constants; \(\tilde{\theta }\) and \(\tilde{\theta }_{0}\) are unknown positive constants.

5 Stability analysis

We have the following Propositions 2 and 3 for the stability of the system. These two propositions show that the closed-loop system is globally uniformly bounded and that the output signal can track the reference signal with accuracy \(\epsilon\).

Proposition 2

For the closed-loop system consisting of (7), (8), (32), (34), (36), (38), (39), and (40), the dynamic gain \(L(t)\) and the state vectors \(\hat{x}\) and \(e\) are globally bounded on \(\left[ {0,T_{f} } \right)\).

Proposition 3

For the system with the actuator faults (1) which satisfies Assumptions 1–4, if the output feedback fault-tolerant control is given by (32), then for any given initial condition, all variables in the closed-loop system of (1), (5), (32), (34), (36), (38), (39), and (40) are bounded on \(\left[ {0, + \infty } \right)\).

Proof

By Proposition 2, we can obtain that all variables in the closed-loop system consisting of (1), (5), (33), (39), (40), and (41) are bounded. Then, we can deduce that \(\mathop {\lim }\nolimits_{t \to \infty } \dot{L} (t) = 0\). Therefore, for any initial condition, there exists a finite time \(t_{\epsilon }\) such that \(\frac{{\left\| {\xi_{1} - \xi_{1r} } \right\|^{2} }}{{L^{2b} }} - \frac{{\epsilon^{2} }}{{2L^{2b} }} \le \dot{L} (t) \le \frac{{\epsilon^{2} }}{{2L^{2b} }}\), \(\forall t > t_{\epsilon }\). Thus, we can obtain that \(\left\| {\xi_{1} - \xi_{1r} } \right\| \le \epsilon\).□
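The last step of the proof can be spelled out as follows, writing \(\epsilon\) for the accuracy parameter and using only the two-sided bound on \(\dot{L} (t)\) stated in the proof together with \(L^{2b} > 0\).

```latex
\begin{aligned}
\frac{\left\| \xi_{1} - \xi_{1r} \right\|^{2}}{L^{2b}} - \frac{\epsilon^{2}}{2L^{2b}}
  &\le \frac{\epsilon^{2}}{2L^{2b}}
  && \text{(lower and upper bounds on } \dot{L}(t) \text{ combined)} \\
\left\| \xi_{1} - \xi_{1r} \right\|^{2}
  &\le \epsilon^{2}
  && \text{(multiply by } L^{2b} > 0 \text{ and rearrange)} \\
\left\| \xi_{1} - \xi_{1r} \right\|
  &\le \epsilon ,
  \qquad \forall t > t_{\epsilon} .
\end{aligned}
```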

6 Simulation results

To verify the effectiveness of the proposed method, the following height and attitude control systems of the UAV with actuator faults are considered [18].

Height control system of the UAV:

Posture control system of the UAV:

where \(\xi_{ 1 1}\) is the height of the UAV (m); \(\xi_{ 2 1}\) is the vertical speed of the UAV (m/s); \(\xi_{ 1 2}\), \(\xi_{ 1 3}\), \(\xi_{ 1 4}\) are the posture angles of the UAV (rad); \(\xi_{ 2 2}\), \(\xi_{ 2 3}\), \(\xi_{ 2 4}\) are the angular rates (rad/s); \(m = 1.2\,{\text{kg}}\) is the mass of the UAV; \(g = 9.8\,{\text{m/s}}^{2}\) is the gravitational acceleration; \(l = 0.2\,{\text{m}}\) is the distance from the rotor to the center of gravity of the UAV; \(J_{x} = 0.3\,{\text{kg}}\,{\text{m}}^{2}\), \(J_{y} = 0.4\,{\text{kg}}\,{\text{m}}^{2}\), and \(J_{z} = 0.6\,{\text{kg}}\,{\text{m}}^{2}\) are the moments of inertia in three directions of the UAV relative to its own coordinate system; \(c = 0.79\) is the ratio of the anti-torque coefficient of the rotor motor to the lift coefficient corresponding to the motor speed; \(k_{0}\) is an unknown parameter which indicates the air resistance; \(k_{1}\) and \(k_{2}\) are unknown parameters which indicate the disturbance caused by the motor; we select \(k_{0} = 0.03\), \(k_{1} = 0.1\) and \(k_{2} = - 0.1\) in the simulation.

In the above height and attitude control systems (43) and (44), \(\tilde{u}_{i}^{F}\), \(i = 1,\ldots,4\) are

where \(u_{i}\), \(i = 1,\ldots,4\) are the lifts of the propellers driven by the rotor motors; \(\rho_{i} (t)\), \(i = 1,\ldots,4\) are the effectiveness factors of the actuators; \(\psi_{i}\), \(i = 1,\ldots,4\) are the unknown stuck faults.

Then, (43) and (44) can be expressed as

where

According to the method described above, we introduce the transformation \(\eta_{1} = \left( {B(t)\rho (t)\hat{A}(t)} \right)^{ - 1} \xi_{1}\), \(\eta_{2} = \left( {B(t)\rho (t)\hat{A}(t)} \right)^{ - 1} \xi_{2}\), and design the following observer:

Then, applying the further transformation \(\hat{x}_{1} = \frac{{\hat{\eta }_{1} }}{{L^{b} }}\), \(\hat{x}_{2} = \frac{{\hat{\eta }_{2} }}{{L^{b + 1} }}\), we get

Thus, we can design the control \(v(t) = - q_{2} \hat{A}(t)z_{2} - \frac{1}{{L^{b} }}\hat{A}(t)\hat{{f^{\prime} }}\left( {\hat{\xi }_{22} ,\hat{\xi }_{23} ,\hat{\xi }_{24} ,\hat{\rho }_{1} ,\ldots,\hat{\rho }_{4} } \right)\), where the adaptive dynamic of \(L\) is given by (34), and \(\hat{A}(t) = B^{\rm T} (t)\), \(q_{1} = 9.3\), \(q_{2} = 15.1\), \(\epsilon = 0.2\).

We select the faults as follows:

Then, we design the compensation for nonlinear functions by neural networks: \(\hat{{f^{\prime} }}\left( {\hat{\xi }_{22} ,\hat{\xi }_{23} ,\hat{\xi }_{24} ,\hat{\rho }_{1} ,\ldots,\hat{\rho }_{4} } \right) = \hat{W}_{0}^{\rm T} \varphi \left( {\hat{W}_{h}^{\rm T} \hat{\zeta }} \right)\), \(\hat{W}_{0}^{\rm T} \in R^{{4 \times l_{h} }}\), \(\hat{W}_{h}^{\rm T} \in R^{{l_{h} \times 16}}\). The number of hidden layer nodes is 16. We select the activation function as the sigmoid function. The adaptive dynamic of \(\hat{W}_{0}\), \(\hat{W}_{h}\) and \(\hat{\rho }\) is given by (38)–(40). We select \(\varOmega_{0} = \varOmega_{h} = \varOmega_{\rho } = 30\) and \(F_{0} = F_{h} = F_{\rho } = 1\). So, (45), (47) and the dynamic of \(L\), \(\hat{W}_{0}\), \(\hat{W}_{h}\), \(\hat{\rho }\) can form a closed-loop system.

In the simulation, the initial values of the variables are: \(\xi_{ 1 1} (0) = \xi_{ 1 2} (0) = \xi_{ 1 3} (0) = \xi_{ 1 4} (0) = 0\), \(\hat{x}_{1} (0) = \hat{x}_{2} (0) = 0\), \(\hat{W}_{0} (0) = \hat{W}_{h} (0) = 0\), and \(\hat{\rho }(0) = 1\). The reference signals are: \(\xi_{ 1 1r} = 3.5\,{\text{m}}\), \(\xi_{ 1 2r} = 10\deg\), \(\xi_{ 1 3r} = 15\deg\), \(\xi_{ 1 4r} = 20\deg\). The simulation results are shown in Figs. 1, 2 and 3.
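Since the posture angles are modeled in radians while the angle references are stated in degrees, a reproduction of the simulation would presumably convert the references before use. The following short sketch shows the conversion; the dictionary keys are hypothetical labels for \(\xi_{ 1 2r}\), \(\xi_{ 1 3r}\) and \(\xi_{ 1 4r}\).

```python
import math

# Angle references from the text, in degrees.
refs_deg = {"xi_12r": 10.0, "xi_13r": 15.0, "xi_14r": 20.0}

# Convert to radians to match the units of the posture-angle states.
refs_rad = {name: math.radians(value) for name, value in refs_deg.items()}
```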

As shown in Fig. 1a, b, when there is no fault in the system, the control method proposed in this paper can make the system track the reference signals effectively. Figure 1c is the variation process of dynamic gain. By the adjustment of the dynamic gain, the uncertainties in the system can be compensated adaptively. Finally, the system can track the reference signals stably and the dynamic gain is bounded.

It can be seen from Fig. 2a, b that if there is no fault-tolerant control (select \(L = 4.9\) by Fig. 1c), the system can respond well without faults (\(t < 8\,{\rm s}\)). However, when the faults occur in the system (\(t \ge 8\,{\rm s}\)), neither the height nor the posture angles of the UAV can track the reference signals properly without fault-tolerant control.

In Fig. 3, we can see that the proposed fault-tolerant control method in this paper can compensate faults effectively. It can be seen from Fig. 3a–c that when the faults occur, the dynamic gain changes from the original stable state and the faults can be compensated by the adaptive adjustment of the dynamic gain. From Fig. 3d, e, we can see that the weights of the neural network tend to converge at first (\(t < 8\,{\rm s}\)). Then, as the faults occur, the weights have large oscillation in the process of the fault compensation. After the faults have been compensated, the dynamic gain and the network weights are stable, and the height and posture angles of UAV can track the reference signals effectively.

7 Conclusions

In this paper, by combining the dynamic gain and the neural network, the output feedback fault-tolerant control problem of a class of nonlinear uncertain systems was solved. The compensation of the faults can be achieved through the adaptive adjustment of the dynamic gain. Meanwhile, the dynamic gain can also compensate for the simple nonlinear uncertain functions of the system. For the more complex nonlinear functions, the single hidden layer neural network was adopted for approximation and combined with the dynamic gain to achieve the compensation. Taking the height and posture angle control system of the quad-rotor UAV as an example, the effectiveness of the proposed method was verified. Based on the work of this paper, further questions remain to be studied. First, a fault-tolerant method is needed for the case in which the lower bound of the effectiveness factors is unknown and the full loss of actuator effectiveness is allowed. Second, the condition in Assumption 2 may be somewhat harsh, and whether it can be relaxed remains open.

References

Krishnamurthy P, Khorrami F (2016) Global output-feedback control of systems with dynamic nonlinear input uncertainties through singular perturbation-based dynamic scaling. Int J Adapt Control Signal Process 30(5):690–714

Liu YG (2014) Global output-feedback tracking for nonlinear systems with unknown polynomial-of-output growth rate. J Control Theory Appl 31:921–933

Kaliora G, Astolfi A (2004) Nonlinear control of feedforward systems with bounded signals. IEEE Trans Autom Control 49(11):1975–1990

Krishnamurthy P, Khorrami F (2008) Dual high-gain-based adaptive output-feedback control for a class of nonlinear systems. Int J Adapt Control Signal Process 22(1):23–42

Zhai J, Qian C (2012) Global control of nonlinear systems with uncertain output function using homogeneous domination approach. Int J Robust Nonlinear Control 22(14):1543–1561

BenAbdallah A, Khalifa T, Mabrouk M (2015) Adaptive practical output tracking control for a class of uncertain nonlinear systems. Int J Syst Sci 46(8):1421–1431

Jin S, Liu Y, Li F (2016) Further results on global practical tracking via adaptive output feedback for uncertain nonlinear systems. Int J Control 89(2):368–379

Ma HJ, Yang GH (2010) Adaptive output control of uncertain nonlinear systems with non-symmetric dead-zone input. Automatica 46(2):413–420

Ma HJ, Yang GH (2011) Adaptive logic-based switching fault-tolerant controller design for nonlinear uncertain systems. Int J Robust Nonlinear Control 21(4):404–428

Zouari F, Boulkroune A, Ibeas A (2017) Observer-based adaptive neural network control for a class of MIMO uncertain nonlinear time-delay non-integer-order systems with asymmetric actuator saturation. Neural Comput Appl 28(1):993–1010

Zhou S, Chen M, Ong CJ (2016) Adaptive neural network control of uncertain MIMO nonlinear systems with input saturation. Neural Comput Appl 27(5):1317–1325

Zhang X, Polycarpou MM, Parisini T (2010) Fault diagnosis of a class of nonlinear uncertain systems with Lipschitz nonlinearities using adaptive estimation. Automatica 46(2):290–299

Li XJ, Yang GH (2018) Neural-network-based adaptive decentralized fault-tolerant control for a class of interconnected nonlinear systems. IEEE Trans Neural Netw Learn Syst 29(1):144–155

Mao Z, Jiang B, Shi P (2010) Observer based fault-tolerant control for a class of nonlinear networked control systems. J Frankl Inst 347(6):940–956

Tong S, Huo B, Li Y (2014) Observer-based adaptive decentralized fuzzy fault-tolerant control of nonlinear large-scale systems with actuator failures. IEEE Trans Fuzzy Syst 22(1):1–15

Zhou Q, Shi P, Liu H (2012) Neural-network-based decentralized adaptive output-feedback control for large-scale stochastic nonlinear systems. IEEE Trans Syst Man Cybern Part B Cybern 42(6):1608–1619

Dinh HT, Kamalapurkar R, Bhasin S (2014) Dynamic neural network-based robust observers for uncertain nonlinear systems. Neural Netw 60:44–52

Dierks T, Jagannathan S (2010) Output feedback control of a quadrotor UAV using neural networks. IEEE Trans Neural Netw 21(1):50–66

Krishnamurthy P, Khorrami F, Jiang ZP (2002) Global output feedback tracking for nonlinear systems in generalized output-feedback canonical form. IEEE Trans Autom Control 47(5):814–819

Acknowledgements

This work was supported by the National Science Foundation of China (Grant No. 61273190). The authors would like to thank the editor and reviewers for the valuable comments and constructive suggestions to improve the paper.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Appendix

Proof of Proposition 1

By

and

we can obtain that

In (50), we get

For the first term on the right-hand side of inequality (50), we have

Further,

For the second term on the right-hand side of inequality (50), the following inequality holds

For the third term on the right-hand side of inequality (50), we get

In (53), it can be obtained that

Therefore, Proposition 1 is proved by substituting (51), (53), (54), (55), (56) into (50).□

Proof of Proposition 2

First, let us prove the boundedness of \(L(t)\) by contradiction. Assume that \(L(t)\) is unbounded on \([0,T_{f} )\), that is, \(\mathop {\lim }\nolimits_{{t \to T_{f} }} L(t) = \infty\). We then consider the following two cases.

1. \(T_{f} < \infty\)

By the assumption that \(L(t)\) is unbounded, there exists a finite time \(t_{1} < T_{f}\) such that \(\dot{T} \le - DT + \tilde{\theta }_{0}\) for \(t > t_{1}\), and hence \(T \le e^{{ - D(t - t_{1} )}} T(t_{1} ) + \frac{{\tilde{\theta }_{0} }}{D}\), \(\forall t > t_{1}\). Thus, \(+ \infty = L(T_{f} ) - L(0) = \mathop \int \nolimits_{0}^{{T_{f} }} \dot{L} (t){\text{d}}t \le \mathop \int \nolimits_{0}^{{T_{f} }} \left( {\sum\nolimits_{i = 1}^{N} {\hat{x}_{i}^{\rm T} \hat{x}_{i} } + z_{1}^{\rm T} z_{1} } \right){\text{d}}t < + \infty\), which is a contradiction. Therefore, \(L(t)\) is bounded when \(T_{f} < \infty\).

2. \(T_{f} = \infty\)

Since \(L(t)\) is assumed to be unbounded, there exists a finite time \(t_{ 2}\) such that \(\dot{T} \le - DT + \tilde{\theta }_{0}\) for \(t > t_{2}\), from which we deduce that \(T\) is bounded. Because \(T = V_{N} + \tilde{V}\), both \(V_{N}\) and \(\tilde{V}\) are bounded. By (33) and (37), it can be obtained that \({\dot{V}_{N} } \le - \left( {L(t)\tilde{c}_{2} - \tilde{\theta }_{N1} } \right)\left( {||e||^{2} + ||z||^{2} } \right) + \tilde{\theta }_{N2}\), \(\forall t > t_{2}\), where \(\tilde{c}_{2}\), \(\tilde{\theta }_{N1}\), \(\tilde{\theta }_{N2}\) are positive constants. Thus, there exists \(t_{3}\) such that \({\dot{V}_{N} } \le - L(t)\tilde{c}_{3} V_{N} + \tilde{\theta }_{N2}\), \(\forall t > t_{3}\), where \(\tilde{c}_{3}\) is a positive constant. Hence, for any \(\tau > 0\), there exists \(t_{\tau 1}\) such that \(L(t)\tilde{c}_{3} > \frac{{2\tilde{\theta }_{N2} }}{\tau }\) for \(t > t_{\tau 1}\). Then, we obtain \(V_{N} (t) \le e^{{ - \tilde{c}_{3} (t - t_{\tau 1} )}} V_{N} (t_{\tau 1} ) + \frac{\tau }{2}\), \(\forall t > t_{\tau 1}\). Therefore, there exists \(t_{\tau 2} > t_{\tau 1}\) such that \(V_{N} (t) < \tau\), \(\forall t > t_{\tau 2}\). Since \(\tau\) is arbitrary, \(\mathop {\lim }\nolimits_{t \to \infty } V_{N} = 0\), and by (34), we have \(\mathop {\lim }\nolimits_{t \to \infty } \dot{L} (t) = 0\).

Next, define \(\varGamma (t) = L(t)V_{N} (t)\). Taking the derivative of \(\varGamma (t)\) with respect to time, we get

Since \(\mathop {\lim }\nolimits_{t \to \infty } \dot{L} (t) = 0\) and \(\mathop {\lim }\nolimits_{t \to \infty } V_{N} (t) = 0\), we can deduce that \(\varGamma (t)\) is bounded, that is, \(\varGamma (t) < \varGamma_{M}\) for some constant \(\varGamma_{M}\). Hence,

By (58), there exists a finite time \(t_{4}\) such that \(\left( {\sum\nolimits_{i = 1}^{N} {\hat{x}_{i}^{\rm T} \hat{x}_{i} } + z_{1}^{\rm T} z_{1} } \right)L^{2b} < \epsilon^{2}\) for \(t > t_{4}\). Then, \(\dot{L} (t) = 0\), \(\forall t > t_{4}\), which contradicts the assumption that \(L(t)\) is unbounded.

According to the above analysis, \(L(t)\) is bounded.
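The exponential estimate on \(T\) used in both cases above is the standard comparison (integrating-factor) argument. As a sketch, assuming that \(D > 0\) and \(\tilde{\theta }_{0} \ge 0\) are constants and that \(T\) is differentiable for \(t > t_{1}\):

\[
\begin{aligned}
\dot{T} \le - DT + \tilde{\theta }_{0}
&\;\Longrightarrow\; \frac{{\text{d}}}{{{\text{d}}t}}\left( {e^{Dt} T(t)} \right) \le \tilde{\theta }_{0} e^{Dt} \\
&\;\Longrightarrow\; e^{Dt} T(t) - e^{{Dt_{1} }} T(t_{1} ) \le \frac{{\tilde{\theta }_{0} }}{D}\left( {e^{Dt} - e^{{Dt_{1} }} } \right) \\
&\;\Longrightarrow\; T(t) \le e^{{ - D(t - t_{1} )}} T(t_{1} ) + \frac{{\tilde{\theta }_{0} }}{D}\left( {1 - e^{{ - D(t - t_{1} )}} } \right) \le e^{{ - D(t - t_{1} )}} T(t_{1} ) + \frac{{\tilde{\theta }_{0} }}{D}.
\end{aligned}
\]

The second line follows by integrating the first over \([t_{1} ,t]\), and the last inequality simply drops the nonpositive term \(- \frac{{\tilde{\theta }_{0} }}{D}e^{{ - D(t - t_{1} )}}\).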

Next, let us prove the boundedness of \(\hat{x}\). The dynamic equation of \(\hat{x}\) can be written as

and the Lyapunov function can be constructed as \(V_{x} = \hat{x}^{\rm T} \left( {P \otimes I_{n} } \right)\hat{x}\). Taking the derivative of \(V_{x}\) with respect to time, we get

Then, we have

Further, it can be obtained that

By (62), we can deduce that \(V_{x}\) is bounded, and then \(\hat{x}\) is bounded.
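The step from a bounded \(V_{x}\) to a bounded \(\hat{x}\) rests on the quadratic form \(\hat{x}^{\rm T} \left( {P \otimes I_{n} } \right)\hat{x}\) being sandwiched between \(\lambda_{\min } (P)\left\| {\hat{x}} \right\|^{2}\) and \(\lambda_{\max } (P)\left\| {\hat{x}} \right\|^{2}\) when \(P\) is symmetric positive definite. The following Python sketch illustrates this numerically. It assumes that \(\hat{x}\) is stacked as \(N\) blocks \(\hat{x}_{i} \in {\mathbb{R}}^{n}\) and that \(P\) is \(N \times N\); the matrix `P`, the vector `blocks`, and all function names are hypothetical and are not taken from the paper.

```python
import math

def kron_with_identity(P, n):
    """Dense matrix P ⊗ I_n: block (i, j) equals P[i][j] * I_n."""
    N = len(P)
    K = [[0.0] * (N * n) for _ in range(N * n)]
    for i in range(N):
        for j in range(N):
            for k in range(n):
                K[i * n + k][j * n + k] = P[i][j]
    return K

def quad_form(M, x):
    """x^T M x for a dense matrix M and a flat vector x."""
    size = len(x)
    return sum(x[r] * M[r][c] * x[c] for r in range(size) for c in range(size))

def lyapunov_blockwise(P, blocks):
    """Same value without forming P ⊗ I_n: sum_ij P_ij * <x_i, x_j>."""
    N = len(P)
    return sum(
        P[i][j] * sum(a * b for a, b in zip(blocks[i], blocks[j]))
        for i in range(N)
        for j in range(N)
    )

def sym2_eigenvalues(P):
    """(min, max) eigenvalues of a symmetric 2x2 matrix in closed form."""
    tr = P[0][0] + P[1][1]
    det = P[0][0] * P[1][1] - P[0][1] * P[1][0]
    root = math.sqrt(tr * tr - 4.0 * det)
    return (tr - root) / 2.0, (tr + root) / 2.0

# Illustrative values only (assumed, not from the paper): N = 2, n = 3.
P = [[2.0, 0.5], [0.5, 1.0]]
blocks = [[1.0, -2.0, 0.5], [0.0, 3.0, 1.0]]
x_hat = [v for blk in blocks for v in blk]

V_dense = quad_form(kron_with_identity(P, 3), x_hat)
V_block = lyapunov_blockwise(P, blocks)
lam_min, lam_max = sym2_eigenvalues(P)
norm_sq = sum(v * v for v in x_hat)

print(V_dense, V_block)
print(lam_min * norm_sq <= V_dense <= lam_max * norm_sq)
```

Because the eigenvalues of \(P \otimes I_{n}\) are those of \(P\) repeated \(n\) times, a bound \(V_{x} \le c\) gives \(\left\| {\hat{x}} \right\|^{2} \le c/\lambda_{\min } (P)\).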

Finally, let us prove the boundedness of \(e\). Select a sufficiently large constant \(L^{*}\) and introduce the following transformation,

then we have

where \(\varLambda_{1} = {\text{diag}}\left\{ {\begin{array}{*{20}c} {1 - \frac{L}{{L^{*} }}} & \cdots & {1 - \frac{{L^{N} }}{{L^{*N} }}} \\ \end{array} } \right\}\) and \(\varLambda_{2} = {\text{diag}}\left\{ {\begin{array}{*{20}c} {\frac{{L^{b} }}{{L^{*b} }}} & \cdots & {\frac{{L^{b + N - 1} }}{{L^{*b + N - 1} }}} \\ \end{array} } \right\}\).
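To make the structure of \(\varLambda_{1}\) and \(\varLambda_{2}\) concrete, the sketch below lists their diagonal entries for scalar values of \(L\), \(L^{*}\), \(N\) and \(b\). The numerical values and the function name `scaling_diagonals` are hypothetical, and the code assumes \(0 < L \le L^{*}\) and \(b \ge 0\), in which case every entry of \(\varLambda_{1}\) lies in \([0,1)\) and every entry of \(\varLambda_{2}\) lies in \((0,1]\).

```python
def scaling_diagonals(L, L_star, N, b):
    """Diagonal entries of Lambda_1 and Lambda_2 for scalar gains L and L_star.

    Lambda_1 = diag{1 - L/L*, ..., 1 - L^N / L*^N}
    Lambda_2 = diag{L^b / L*^b, ..., L^(b+N-1) / L*^(b+N-1)}
    """
    ratio = L / L_star
    lam1 = [1.0 - ratio ** i for i in range(1, N + 1)]
    lam2 = [ratio ** (b + i) for i in range(N)]
    return lam1, lam2

# Illustrative values only (assumed, not from the paper).
lam1, lam2 = scaling_diagonals(L=2.0, L_star=8.0, N=3, b=1)
print(lam1)
print(lam2)
```

Increasing \(L^{*}\) for a fixed bounded \(L\) pushes the entries of \(\varLambda_{1}\) toward 1 and those of \(\varLambda_{2}\) toward 0.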

We construct the Lyapunov function \(V_{\varepsilon } = \varepsilon^{\rm T} \left( {P \otimes I_{n} } \right)\varepsilon\). Taking the derivative of \(V_{\varepsilon } (t)\) with respect to time, we obtain

In (65), there is

where \(\theta_{\varepsilon 1}\) and \(\theta_{\varepsilon 2}\) are positive constants that do not depend on \(L^{*}\).

Therefore, we have

where \(C_{\varepsilon 1}\), \(C_{\varepsilon 2}\), and \(\theta_{\varepsilon }\) are positive constants which are not related to \(L^{*}\).

Since \(L^{*}\) is large enough and \(\left\| {\varepsilon_{1} } \right\|^{2} \le c\left( {\dot{L} + \epsilon^{2} } \right)\), we can deduce that

where \(\tilde{C}_{\varepsilon }\), \(\tilde{C}_{\varepsilon 1}\), and \(\tilde{\theta }_{\varepsilon }\) are positive constants.

Then, it follows that

By (69), we have

Therefore, from the above analysis, we can deduce that \(V_{\varepsilon }\) is bounded, and according to (63), \(e\) is bounded.□

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Xi, X., Liu, T., Zhao, J. et al. Output feedback fault-tolerant control for a class of nonlinear systems via dynamic gain and neural network. Neural Comput & Applic 32, 5517–5530 (2020). https://doi.org/10.1007/s00521-019-04583-1
