Abstract

Inspired by the success of deploying deep learning in the fields of Computer Vision and Natural Language Processing, this learning paradigm has also found its way into the field of Music Information Retrieval. In order to benefit from deep learning in an effective, but also efficient manner, deep transfer learning has become a common approach. In this approach, it is possible to reuse the output of a pre-trained neural network as the basis for a new learning task. The underlying hypothesis is that if the initial and new learning tasks show commonalities and are applied to the same type of input data (e.g., music audio), the generated deep representation of the data is also informative for the new task. However, since most of the networks used to generate deep representations are trained using a single initial learning source, their representation is unlikely to be informative for all possible future tasks. In this paper, we present the results of our investigation into the most important factors for generating deep representations for data and learning tasks in the music domain. We conducted this investigation via an extensive empirical study that involves multiple learning sources, as well as multiple deep learning architectures with varying levels of information sharing between sources, in order to learn music representations. We then validate these representations on multiple target datasets. The results of our experiments yield several insights into how to approach the design of methods for learning widely deployable deep data representations in the music domain.

1 Introduction

In the Music Information Retrieval (MIR) field, many research problems of interest involve the automatic description of properties of musical signals, employing concepts that are understood by humans. For this, tasks are derived that can be solved by automated systems. In such cases, algorithmic processes are employed to map raw music audio information to humanly understood descriptors (e.g., genre labels or descriptive tags). To achieve this, historically, the raw audio would first be transformed into a representation based on hand-crafted features, which are engineered by humans to reflect dedicated semantic signal properties. The feature representation would then serve as input to various statistical or machine learning (ML) approaches [1].

The framing as described above can generally be applied to many applied ML problems: complex real-world problems are abstracted into a relatively simpler form, by establishing tasks that can be computationally addressed by automatic systems. In many cases, the task involves making a prediction based on a certain observation. For this, modern ML methodologies can be employed that automatically can infer the logic for the prediction directly from (a numeric representation of) the given data, by optimizing an objective function defined for the given task.

However, music is a multimodal phenomenon that can be described in many parallel ways, ranging from objective descriptors to subjective preference. As a consequence, in many cases, while music-related tasks are well understood by humans, it often is hard to pinpoint and describe where the truly ‘relevant’ information is in the music data used for the tasks, and how this properly can be translated into numeric representations that should be used for prediction. While research into such proper translations can be conducted per individual task, it is likely that informative factors in music data will be shared across tasks. As a consequence, when seeking to identify informative factors that are not explicitly restricted to a single task, multitask learning (MTL) is a promising strategy. In MTL, a single learning framework hosts multiple tasks at once, allowing for models to perform better by sharing commonalities between involved tasks [2]. MTL has been successfully used in a range of applied ML works [3,4,5,6,7,8,9,10], also including the music domain [11, 12].

Following successes in the fields of Computer Vision (CV) and Natural Language Processing (NLP), deep learning approaches have recently also gained increasing interest in the MIR field, in which case deep representations of music audio data are directly learned from the data, rather than being hand-crafted. Many works employing such approaches reported considerable performance improvements in various music analysis, indexing and classification tasks [13,14,15,16,17,18,19,20].

In many deep learning applications, rather than training a complete network from scratch, pre-trained networks are commonly used to generate deep representations, which can be either directly adopted or further adapted for the current task at hand. In CV and NLP, (parts of) certain pre-trained networks [21,22,23,24] have now been adopted and adapted in a very large number of works. These ‘standard’ deep representations have typically been obtained by training a network for a single learning task, such as visual object recognition, employing large amounts of training data. The hypothesis on why these representations are effective in a broader spectrum of tasks than they originally were trained for, is that deep transfer learning (DTL) is happening: information initially picked up by the network is beneficial also for new learning tasks performed on the same type of raw input data. Clearly, the validity of this hypothesis is linked to the extent to which the new task can rely on similar data characteristics as the task on which the pre-trained network was originally trained.

Although a number of works have deployed DTL for various learning tasks in the music domain [25,26,27,28], to our knowledge, transfer learning and the use of pre-trained networks are not as standard in the MIR domain as in the CV domain. Again, this may be due to the broad and partially subjective range and nature of possible music descriptions. Following the considerations above, it may then be useful to combine deep transfer learning with multitask learning.

Indeed, in order to increase robustness to a larger scope of new learning tasks and datasets, the concept of MTL also has been applied in training deep networks for representation learning, both in the music domain [11, 12] and in general [3, p. 2]. As the model learns several tasks and datasets in parallel, it may pick up commonalities among them. As a consequence, the expectation is that a network learned with MTL will yield robust performance across different tasks, by transferring shared knowledge [2, 3]. A simple illustration of the conceptual difference between traditional DTL and deep transfer learning based on MTL (further referred to as multitask based deep transfer learning (MTDTL)) is shown in Fig. 1.

Fig. 1 Simplified illustration of the conceptual difference between traditional deep transfer learning (DTL) based on a single learning task (above) and multitask based deep transfer learning (MTDTL) (below). The same color used for a learning task and a target task indicates that the tasks have commonalities, which implies that the learned representation is likely to be informative for the target task. At the same time, this representation may not be that informative for another future task, leading to a low transfer learning performance. The hypothesis behind MTDTL is that relying on more learning tasks increases robustness of the learned representation and its usability for a broader set of target tasks (color figure online)

The mission of this paper is to investigate which conditions in the setup of MTDTL are important for yielding effective deep music representations. Here, we understand an ‘effective’ representation to be a representation that is suitable for a wide range of new tasks and datasets. Ultimately, we aim to provide a methodological framework to systematically obtain and evaluate such transferable representations. We pursue this mission by exploring the effectiveness of MTDTL and traditional DTL, as well as concatenations of multiple deep representations, obtained by networks that were independently trained on separate single learning tasks. We evaluate these representations for multiple choices of learning tasks and on multiple target datasets.

Our work will address the following research questions:

RQ1: Given a set of learning sources that can be used to train a network, what is the influence of the number and type of the sources on the effectiveness of the learned deep representation?

RQ2: How do various degrees of information sharing in the deep architecture affect the effectiveness of a learned deep representation?

By answering RQ1, we arrive at an understanding of important factors regarding the composition of a set of learning tasks and datasets (which in the remainder of this work will be denoted as learning sources) to achieve an effective deep music representation, specifically on the number and nature of learning sources. The answer to RQ2 provides insight into how to choose the optimal multitask network architecture in the MTDTL context. For example, in MTL, multiple sources are considered under a joint learning scheme that partially shares inferences obtained from different learning sources in the learning pipeline. In MTL applications using deep neural networks, this means that certain layers will be shared between all sources, while at other stages, the architecture will ‘branch’ out into source-specific layers [2, 5,6,7,8, 12, 29]. However, an investigation is still needed on where in the layered architecture branching should ideally happen, if a branching strategy turns out to be beneficial in the first place.

To reach the aforementioned answers, it is necessary to conduct a systematic assessment to examine relevant factors. For RQ1, we investigate different numbers and combinations of learning sources. For RQ2, we study different architectural strategies. However, we wish to ultimately investigate the effectiveness of the representation with respect to new, target learning tasks and datasets (which in the remainder of this paper will be denoted by target datasets). While this may cause a combinatorial explosion with respect to possible experimental configurations, we will make strategic choices in the design and evaluation procedure of the various representation learning strategies.

The scientific contribution of this work can be summarized as follows:

We provide insight into the effectiveness of various deep representation learning strategies under the multitask learning context.

We offer in-depth insight into ways to evaluate desired properties of a deep representation learning procedure.

We propose and release several pre-trained music representation networks, based on different learning strategies for multiple semantic learning sources.

The rest of this work is presented as follows: a formalization of this problem, as well as the global outline of how learning will be performed based on different learning tasks from different sources, will be presented in Sect. 2. Detailed specifications of the deep architectures we considered for the learning procedure will be discussed in Sect. 3. Our strategy to evaluate the effectiveness of different representation network variants by employing various target datasets will be the focus of Sect. 4. Experimental results will be discussed in Sect. 5, after which general conclusions will be presented in Sect. 6.

2 Framework for deep representation learning

In this section, we formally define the deep representation learning problem. As Fig. 2 illustrates, any domain-specific MTDTL problem can be abstracted into a formal task, which is instantiated by a specific dataset with specific observations and labels. Multiple tasks and datasets are involved to emphasize different aspects of the input data, such that the learned representation is more adaptable to different future tasks. The learning part of this scheme can be understood as the MTL phase, which is introduced in Sect. 2.1. Subsequently in Sect. 2.2, we discuss learning sources involved in this work, which consist of various tasks and datasets to allow investigating their effects on the transfer learning. Further, we introduce the label preprocessing procedure that is applied in this work in Sect. 2.3, ensuring that the learning sources are more regularized, such that their comparative analysis is clearer.

Fig. 2 Schematic overview of what this work investigates. The upper scheme illustrates a general problem solving framework in which multitask transfer learning is employed. The tasks \(t \in \{t_0, t_1, \ldots , t_M\}\) are derived from a certain problem domain, which is instantiated by datasets, that often are represented as sample pairs of observations and corresponding labels \((X_{t}, y_{t})\). Sometimes, the original dataset is processed further into simpler representation forms \((X_{t}, z_{t})\), to filter out undesirable information and noise. Once a model or system \(f_{t}(X_{t})\) has learned the necessary mappings within the learning sources, this knowledge can be transferred to another set of target datasets, leveraging commonalities already obtained by the pre-training. Below the general framework, we show a concrete example, in which the broad MIR problem domain is abstracted into various sub-problems with corresponding tasks and datasets

2.1 Problem definition

A machine learning problem, focused on solving a specific task t, can be formulated as a minimization problem, in which a model function \(f_t\) must be learned that minimizes a loss function \({\mathcal {L}}\) for given dataset \({\mathcal {D}}_{t} = \{\,(x^{(i)}_{t}, y^{(i)}_{t}) \mid i \in \{1, \ldots , I\} \,\}\), comparing the model’s predictions given by the input \(x_t\) and actual task-specific learning labels \(y_t\). This can be formulated using the following expression:

$${\hat{\theta }} = {{\,\mathrm{arg\,min}\,}}\; {\mathbb {E}}_{x_t, y_t \sim {\mathcal {D}}_{t}} {\mathcal {L}}(y_{t}, f_{t}(x_{t};\theta )) \tag{1}$$

where \(x_{t}\in {\mathbb {R}}^d\) is, traditionally, a hand-crafted d-dimensional feature vector and \(\theta\) is a set of model parameters of f.

When deep learning is employed, the model function f denotes a learnable network. Typically, the network model f is learned in an end-to-end fashion, from raw data at the input to the learning label. In the speech and music field, however, using true end-to-end learning is still not a common practice. Instead, raw data is typically transformed first, before serving as network input. More specifically, in the music domain, common input to function f would be \(X\in {\mathbb {R}}^{c\times {n}\times {b}}\), replacing the originally hand-crafted feature vector \(x\in {\mathbb {R}}^d\) from (1) by a time-frequency representation of the observed music data, usually obtained through the short-time Fourier transform (STFT), with potential additional filter bank applications (e.g., mel-filter bank). The dimensions c, n, b indicate channels of the audio signal, time steps, and frequency bins, respectively.

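If such a network still is trained for a specific single machine learning task t, we can now reformulate (1) as follows:

$${\hat{\theta }} = {{\,\mathrm{arg\,min}\,}}\; {\mathbb {E}}_{X_t, y_t \sim {\mathcal {D}}_{t}} {\mathcal {L}}(y_{t}, f_{t}(X_{t};\theta )) \tag{2}$$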

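In MTL, in the process of learning the network model f, different tasks will need to be solved in parallel. In the case of deep neural networks, this is usually realized by having a network in which lower layers are shared for all tasks, but upper layers are task-specific. Given m different tasks t, each having the learning label \(y_{t}\), we can formulate the learning objective of the neural network in MTL scenario as follows:

$${\hat{\theta }}^{*}, {\hat{\theta }}^{s} = {{\,\mathrm{arg\,min}\,}}\; {\mathbb {E}}_{t \in {\mathcal {T}}}\, {\mathbb {E}}_{X_t, y_t \sim {\mathcal {D}}_{t}} {\mathcal {L}}(y_{t}, f_{t}(X_{t};\theta ^{t},\theta ^{s})) \tag{3}$$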

Here, \({\mathcal {T}}=\{t_{1},t_{2},\ldots ,t_{m}\}\) is a given set of tasks to be learned and \(\theta ^{*}=\{\theta ^{1},\theta ^{2},\ldots ,\theta ^{m}\}\) indicates a set of model parameters \(\theta ^{t}\) with respect to each task. Since the deep architecture initially shares lower layers and branches out to task-specific upper layers, the parameters of shared layers and task-specific layers are referred to separately as \(\theta ^{s}\) and \(\theta ^{t}\), respectively. Updates for all parameters can be achieved through standard back-propagation. Further specifics on network architectures and training configurations will be given in Sect. 3.

Given the formalizations above, the first step in our framework is to select a suitable set \({\mathcal {T}}\) of learning tasks. These tasks can be seen as multiple concurrent descriptions or transformations of the same input fragment of musical audio: each will reflect certain semantic aspects of the music. However, unlike the approach in a typical MTL scheme, solving multiple specific learning tasks is actually not our main goal; instead, we wish to learn an effective representation that captures as many semantically important factors in the low-level music representation as possible. Thus, rather than using learning labels \(y_{t}\), our representation learning process will employ reduced learning labels \(z_{t}\), which capture a reduced set of semantic factors from \(y_{t}\). We then can reformulate (3) as follows:

$${\hat{\theta }}^{*}, {\hat{\theta }}^{s} = {{\,\mathrm{arg\,min}\,}}\; {\mathbb {E}}_{t \in {\mathcal {T}}}\, {\mathbb {E}}_{X_t, z_t \sim {\mathcal {D}}_{t}} {\mathcal {L}}(z_{t}, f_{t}(X_{t};\theta ^{t},\theta ^{s})) \tag{4}$$

where \(z_t\in {\mathbb {R}}^{k}\) is a k-dimensional vector that represents a reduced learning label for a specific task t. Each \(z_t\) will be obtained through task-specific factor extraction methods, as described in Sect. 2.3.
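The multitask objective with shared and task-specific parameters can be illustrated with a small NumPy sketch. This is not the network used in this work: the single shared dense layer standing in for \(\theta ^{s}\), the one-layer heads standing in for \(\theta ^{t}\), the flattened toy input, the task names and the cross-entropy loss are all assumptions chosen to keep the example short.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)

def cross_entropy(z, p, eps=1e-12):
    """Batch-averaged cross-entropy between target distributions z and predictions p."""
    return float(-np.mean(np.sum(z * np.log(p + eps), axis=-1)))

def mtl_objective(X, z_by_task, theta_s, theta_by_task, loss=cross_entropy):
    """Average the per-task losses of a shared trunk with task-specific heads."""
    h = np.maximum(X @ theta_s, 0.0)  # shared layer (theta^s) with ReLU
    per_task = {
        t: loss(z, softmax(h @ theta_by_task[t]))  # task-specific head (theta^t)
        for t, z in z_by_task.items()
    }
    return float(np.mean(list(per_task.values()))), per_task

# Toy data: 8 samples, 16 input features, k = 50 reduced label factors per task.
rng = np.random.default_rng(0)
n, d_in, d_hidden, k = 8, 16, 12, 50
tasks = ["tag", "bpm", "year"]  # hypothetical task names
X = rng.normal(size=(n, d_in))
z_by_task = {t: rng.dirichlet(np.ones(k), size=n) for t in tasks}
theta_s = rng.normal(scale=0.1, size=(d_in, d_hidden))
theta_by_task = {t: rng.normal(scale=0.1, size=(d_hidden, k)) for t in tasks}

total, per_task = mtl_objective(X, z_by_task, theta_s, theta_by_task)
```

Because every head reads the same hidden activation `h`, gradients from all tasks flow into `theta_s`, which is how shared layers can pick up commonalities between tasks.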

2.2 Learning sources

In the MTDTL context, a training dataset can be seen as the ‘source’ from which the representation is learned, which is then transferred to a future ‘target’ dataset. Learning sources of different natures can be imagined, which can be globally categorized as Algorithm or Annotation. In the Algorithm category, traditional feature extraction or representation transformation algorithms are employed to automatically extract semantically interesting aspects from input data. The Annotation category includes different types of label annotations of the input data by humans.

The dataset used as a resource for our learning experiments is the Million Song Dataset (MSD) [30]. In its original form, it contains metadata and precomputed features for a million songs, with several associated data resources, e.g., considering Last.fm social tags and listening profiles from the Echo Nest. While the MSD does not distribute audio due to copyright reasons, through the API of the 7digital service, 30-s audio previews can be obtained for the songs in the dataset. These 30-s previews will form the source for our raw audio input.

Using the MSD data, we consider several subcategories of learning sources within the Algorithm and Annotation categories; below, we give an overview of these, and specify what information we considered exactly for the learning labels in our work.

2.2.1 Algorithm

Self. The music track is the learning source itself; in other words, intrinsic information in the input music track should be captured through a learning procedure, without employing further data. Various unsupervised or auto-regressive learning strategies can be employed under this category, with variants of autoencoders, including the Stacked Autoencoder [31, 32], Restricted Boltzmann Machines (RBM) [33], Deep Belief Networks (DBN) [34] and Generative Adversarial Networks (GAN) [35]. As another example within this category, variants of the Siamese networks for similarity learning can be considered [36,37,38].

In our case, we will employ the Siamese architecture to learn a metric that measures whether two input music clips belong to the same track or two different tracks. This can be formulated as follows:

$${\hat{\theta }}^{self}, {\hat{\theta }}^{s} = {{\,\mathrm{arg\,min}\,}}\; {\mathbb {E}}_{X_l, X_r \sim {\mathcal {D}}_{self}} {\mathcal {L}}(y_{self}, f_{self}(X_{l},X_{r};\theta ^{self},\theta ^{s})) \tag{5}$$

$$y_{self}= {\left\{ \begin{array}{ll} 1, &{} {\text {if }}\,X_{l}\,{\text{and}}\,X_{r}\,{\text{are sampled from the same track}} \\ 0, &{} {\text {otherwise}} \end{array}\right. } \tag{6}$$

where \(X_{l}\) and \(X_{r}\) are a pair of randomly sampled short music snippets (taken from the 30-s MSD audio previews) and \(f_{self}\) is a network for learning a metric between given input representations in terms of the criteria imposed by \(y_{self}\). It is composed of one or more fully connected layers and one output layer with softmax activation. A global outline illustration of our chosen architecture is given in Fig. 3. Further specifications of the representation network and sampling strategies will be given in Sect. 3.

Feature. Many algorithms exist already for extracting features out of musical audio, or for transforming musical audio representations. By running such algorithms on musical audio, learning labels are automatically computed, without the need for soliciting human annotations. Algorithmically computed outcomes will likely not be perfect and include noise or errors. At the same time, we consider them as a relatively efficient way to extract semantically relevant and more structured information out of a raw input signal.

In our case, under this category, we use beats per minute (BPM) information, released as part of the MSD’s precomputed features. The BPM values were computed by an estimation algorithm, as part of the Echo Nest API.
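For the Self source described above, training pairs and their labels \(y_{self}\) can be generated on the fly. The following is a minimal sketch under assumptions: the function name `sample_self_batch`, the representation of a track as a `(frames, bins)` array and the toy sizes are hypothetical, and the sampler simply balances the two membership categories within each batch.

```python
import numpy as np

def sample_self_batch(tracks, batch_size, crop_len, rng):
    """Return (X_l, X_r, y_self) with half same-track and half different-track pairs."""
    assert batch_size % 2 == 0 and len(tracks) >= 2

    def crop(track):
        # Random snippet of crop_len consecutive frames from one track.
        start = rng.integers(0, track.shape[0] - crop_len + 1)
        return track[start:start + crop_len]

    X_l, X_r, y = [], [], []
    for i in range(batch_size):
        same = i < batch_size // 2
        a = int(rng.integers(len(tracks)))
        # For a negative pair, shift the index by 1..len-1 so that b != a.
        b = a if same else (a + int(rng.integers(1, len(tracks)))) % len(tracks)
        X_l.append(crop(tracks[a]))
        X_r.append(crop(tracks[b]))
        y.append(1 if same else 0)
    return np.stack(X_l), np.stack(X_r), np.array(y)

# Toy "tracks": 5 random spectrogram-like arrays of 300 frames x 128 bins.
rng = np.random.default_rng(0)
tracks = [rng.normal(size=(300, 128)) for _ in range(5)]
X_l, X_r, y_self = sample_self_batch(tracks, batch_size=8, crop_len=40, rng=rng)
```

Two crops of the same track may overlap in this sketch; whether overlap should be prevented is a design choice that the text does not specify.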

2.2.2 Annotation

Metadata. Typically, metadata will come ‘for free’ with music audio, specifying side information, such as a release year, the song title, the name of the artist, the corresponding album name, and the corresponding album cover image. Considering that this information describes categorization facets of the musical audio, metadata can be a useful information source to learn a music representation. In our experiments, we use release year information, which is readily provided as metadata with each song in the MSD.

Crowd. Through interaction with music streaming or scrobbling services, large numbers of users, also designated as the crowd, have left explicit or implicit information regarding their perspectives on musical content. For example, they may have created social tags, ratings, or social media mentions of songs. With many services offering API access to these types of descriptors, crowd data offers scalable, spontaneous and diverse (albeit noisy) human perspectives on music signals.

In our experiments, we use social tags from Last.fm and user listening profiles from the Echo Nest.

Professional. As mentioned in [1], annotation of music tracks is a complicated and time-consuming process: annotation criteria frequently are subjective, and considerable domain knowledge and annotation experience may be required before accurate and consistent annotations can be made. Professional experts in categorization have this experience, and thus are capable of indicating clean and systematic information about musical content. It is not trivial to get such professional annotations at scale; however, these types of annotations may be available in existing professional libraries.

In our case, we use professional annotations from the Centrale Discotheek Rotterdam (CDR), the largest music library in The Netherlands, holding all music ever released in the country in physical and digital form in its collection. The CDR collection can be digitally accessed through the online Muziekweb platform. For each musical album in the CDR collection, genre annotations were made by a professional annotator, according to a fixed vocabulary of 367 hierarchical music genres.

As another professional-level ‘description,’ we adopted lyrics information for each track, which is provided in Bag-of-Words format with the MSD. To down-weight trivial terms such as stop-words, we applied TF-IDF [39].

Combination. Finally, learning labels can be derived from combinations of the above categories. In our experiment, we used a combination of artist information and social tags, by making a bag of tags at the artist level as a learning label.

Not all songs in the MSD actually include learning labels from all the sources mentioned above. Clearly, it is another advantage of using MTL that one can use such unbalanced datasets in a single learning procedure, to maximize the coverage of the dataset. However, on the other hand, if one uses an unbalanced number of samples across different learning sources, it is not trivial to compare the effect of individual learning sources. We, therefore, choose to work with a subset of the dataset, in which equal numbers of samples across learning sources can be used. As a consequence, we managed to collect 46,490 clips of tracks with corresponding learning source labels. A 41,841/4,649 split was made for training and validation for all sources from both MSD and CDR. Since we mainly focus on transfer learning, we used the validation set mostly for monitoring the training, to keep the network from overfitting.

2.3 Latent factor preprocessing

Most learning sources are noisy. For instance, social tags include tags for personal playlist management, long sentences, or simply typos, which do not actually show relevant nuances in describing the music signal. The algorithmically extracted BPM information also is imperfect, and likely contains octave errors, in which BPM is under- or overestimated by a factor of 2. To deal with this noise, several previous works using the MSD [16, 26] applied a frequency-based filtering strategy along with top-down domain knowledge. However, this shrinks the available sample size. As an alternative way to handle noisiness, several other previous works [11, 17, 27, 40,41,42] apply latent factor extraction using various low-rank approximation models to preprocess the label information. We also choose to do this in our experiments.

A full overview of chosen learning sources, their category, origin dataset, dimensionality, and preprocessing strategies is shown in Table 1. In most cases, we apply probabilistic latent semantic analysis (pLSA), which extracts latent factors as a multinomial distribution of latent topics [43]. Table 2 illustrates several examples of strong social tags within extracted latent topics.

For learning labels that are scalar, non-binary values (BPM and release year), we applied a Gaussian mixture model (GMM) to transform each value into a categorical distribution over Gaussian components. As the Self category basically is a binary membership test, no factor extraction was needed for it.
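The GMM transformation can be read as computing, for each scalar value, the posterior probability of every mixture component. The sketch below assumes the mixture parameters are already known; the four hand-set BPM components are purely illustrative stand-ins for components fitted on the actual data, and the function name is hypothetical.

```python
import numpy as np

def gmm_posteriors(values, weights, means, variances):
    """Map scalars to categorical distributions over 1-D Gaussian components."""
    v = np.asarray(values, dtype=float)[:, None]  # shape (n, 1)
    log_pdf = -0.5 * (np.log(2.0 * np.pi * variances) + (v - means) ** 2 / variances)
    log_joint = np.log(weights) + log_pdf  # shape (n, k)
    log_joint -= log_joint.max(axis=1, keepdims=True)  # stabilize before exp
    post = np.exp(log_joint)
    return post / post.sum(axis=1, keepdims=True)

# Hypothetical, hand-set mixture over BPM values (not fitted on real data).
means = np.array([60.0, 90.0, 120.0, 180.0])
variances = np.array([100.0, 100.0, 100.0, 100.0])
weights = np.full(4, 0.25)

z_bpm = gmm_posteriors([120.0, 75.0], weights, means, variances)
```

A value of 120 BPM is assigned almost entirely to the component centered at 120, while 75 BPM, lying midway between two components, splits its mass equally between them. This soft assignment is what allows an imperfect estimate to spread over neighboring components instead of committing to one hard class.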

After preprocessing, learning source labels \(y_t\) are now expressed in the form of probabilistic distributions \(z_t\). Then, the learning of a deep representation can take place by minimizing the Kullback–Leibler (KL) divergence between model inferences \(f_t(X)\) and label factor distributions \(z_t\).
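As a sketch of this loss, the snippet below computes the KL divergence from a label factor distribution to a softmax output. The direction \(\mathrm{KL}(z_t \,\Vert\, f_t(X))\) is an assumption, since the text does not state it, and the toy logits and targets are made up. The snippet also checks the identity \(\mathrm{KL}(z \,\Vert\, p) = H(z, p) - H(z)\), which shows that, for fixed targets, minimizing this KL divergence is equivalent to minimizing the cross-entropy.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)

def kl_divergence(z, p, eps=1e-12):
    """Per-sample KL(z || p) for distributions along the last axis."""
    return np.sum(z * (np.log(z + eps) - np.log(p + eps)), axis=-1)

def cross_entropy(z, p, eps=1e-12):
    """Per-sample cross-entropy H(z, p)."""
    return -np.sum(z * np.log(p + eps), axis=-1)

def entropy(z, eps=1e-12):
    """Per-sample entropy H(z)."""
    return -np.sum(z * np.log(z + eps), axis=-1)

rng = np.random.default_rng(0)
z = rng.dirichlet(np.ones(50), size=4)     # toy label factor distributions, k = 50
p = softmax(rng.normal(size=(4, 50)))      # toy model outputs after softmax

kl = kl_divergence(z, p)
gap = cross_entropy(z, p) - entropy(z)     # equals kl up to floating-point error
```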

Along with the noise reduction, another benefit from such preprocessing is the regularization of the scale of the objective function between different tasks involved in the learning, when the resulting factors have the same size. This regularity between the objective functions is particularly helpful for comparing different tasks and datasets. For this purpose, we used a fixed single value \(k=50\) for the number of factors (pLSA) and the number of Gaussians (GMM). In the remainder of this paper, the datasets and tasks processed in the above manner will be denoted by learning sources for coherent presentation and usage of the terminology.

3 Representation network architectures

In this section, we present the detailed specification of the deep representation neural network architecture we used in this work. We will discuss the base architecture of the network and further discuss the shared architecture with respect to different fusion strategies that one can take in the MTDTL context. Also, we introduce details on the preprocessing of the input data fed into the networks.

3.1 Base architecture

As the deep base architecture for feature representation learning, we choose a convolutional neural network (CNN) architecture inspired by [21], as described in Fig. 4 and Table 3.

CNN is one of the most popular architectures in many music-related machine learning tasks [16, 17, 20, 25, 44,45,46,47,48,49,50,51,52,53,54,55]. Many of these works adopt an architecture having cascading blocks of 2-dimensional filters and max-pooling, derived from well-known works in image recognition [21, 56]. Although variants of CNN using 1-dimensional filters also were suggested by [12, 57,58,59] to learn features directly from a raw audio signal in an end-to-end manner, not many works managed to use them on music classification tasks successfully [60].

The main difference between the base architecture and [21] is the use of global average pooling (GAP) and Batch Normalization (BN) layers. BN is applied after every convolution layer and the fc-feature layer to accelerate training and reduce internal covariate shift [61]. GAP is adopted as the last pooling layer of the cascading convolution blocks; it is known to effectively summarize the spatial dimensions both in the image [22] and music domain [20]. GAP also keeps the number of parameters in the fc-feature layer small.
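The effect of GAP on the size of the following fully connected layer can be made concrete with a short sketch. The tensor layout `(batch, channels, time, frequency)` and all sizes below are hypothetical and are not taken from the architecture tables of this work.

```python
import numpy as np

def global_average_pool(feature_maps):
    """Average each channel over its spatial axes: (B, C, T, F) -> (B, C)."""
    return feature_maps.mean(axis=(2, 3))

# Hypothetical output of the last convolution block.
batch, channels, time_steps, freq_bins = 4, 256, 7, 4
feature_maps = np.ones((batch, channels, time_steps, freq_bins))
pooled = global_average_pool(feature_maps)

# Weight count of a following dense layer with d units (biases ignored).
d = 256
weights_after_flatten = channels * time_steps * freq_bins * d
weights_after_gap = channels * d
```

With these toy sizes, flattening would require 28 times as many weights as GAP, one factor for each spatial position that GAP averages away.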

We applied the rectified linear unit (ReLU) [62] to all convolution layers and the fc-feature layer. For the fc-output layer, softmax activation is used. For each convolution layer, we applied zero-padding such that the input and the output have the same spatial shape. As for the regularization, we choose to apply dropout [63] on the fc-feature layer. We added L2 regularization across all the parameters with the same weight \(\lambda =10^{-6}\).

3.1.1 Audio preprocessing

We aim to learn a music representation from as-raw-as-possible input data to fully leverage the capability of the neural network. For this purpose, we use the dB-scale mel-scale magnitude spectrum of an input audio fragment, extracted by applying 128-band mel-filter banks on the short-time Fourier transform (STFT). Mel-spectrograms have generally been a popular input representation choice for CNNs applied in music-related tasks [16, 17, 20, 26, 41, 64]; besides, it also was reported recently that their frequency-domain summarization, based on psycho-acoustics, is efficient and not easily learnable through data-driven approaches [65, 66]. We choose a 1024-sample window size and 256-sample hop size, translating to about 46 ms and 11.6 ms, respectively, for a sampling rate of 22 kHz. We also applied standardization to each frequency band of the mel spectrum, making use of the mean and variance of all individual mel spectra in the training set.
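The window and hop durations, and the per-band standardization, can be checked with a few lines of NumPy. The exact sampling rate of 22,050 Hz is an assumption (the text states 22 kHz), the helper names are hypothetical, and random arrays stand in for real dB-scale mel-spectrograms of shape `(frames, 128)`.

```python
import numpy as np

SAMPLE_RATE = 22050  # assumed exact value behind the stated "22 kHz"
WINDOW, HOP, N_MELS = 1024, 256, 128

window_ms = 1000.0 * WINDOW / SAMPLE_RATE  # about 46.4 ms
hop_ms = 1000.0 * HOP / SAMPLE_RATE        # about 11.6 ms

def fit_band_stats(train_mels):
    """Mean and standard deviation per mel band over all training frames."""
    frames = np.concatenate(train_mels, axis=0)  # (total_frames, N_MELS)
    return frames.mean(axis=0), frames.std(axis=0)

def standardize(mel, mean, std, eps=1e-8):
    """Apply training-set statistics to one (frames, N_MELS) mel-spectrogram."""
    return (mel - mean) / (std + eps)

# Toy stand-ins for dB-scale mel-spectrograms of three training clips.
rng = np.random.default_rng(0)
train_mels = [rng.normal(loc=-40.0, scale=12.0, size=(200, N_MELS)) for _ in range(3)]
band_mean, band_std = fit_band_stats(train_mels)
normalized = standardize(np.concatenate(train_mels, axis=0), band_mean, band_std)
```

The statistics are fitted on the training set only and then reused unchanged for validation and target data, so that no information from held-out data enters the preprocessing.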

3.1.2 Sampling

During the learning process, in each iteration, a random batch of songs is selected. Audio corresponding to these songs originally is 30 s in length; for computational efficiency, we randomly crop 2.5 s out of each song each time. Keeping the stereo channels of the audio, the size of a single input tensor \(X^*\) used in the experiments is \(2\times 216\times 128\), where the first dimension indicates the number of channels, and the following dimensions indicate time steps and mel-bins, respectively. Besides computational efficiency, a number of previous works in the MIR field reported that using a small chunk of the input not only inflates the dataset but also shows good performance on high-level tasks such as music auto-tagging [20, 57, 60]. For the self case, we generate batches with equal numbers of songs for both membership categories in \(y_{self}\).
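The 216 time steps follow from the crop length and the hop size. The sketch below assumes a sampling rate of 22,050 Hz and centered STFT framing, which yields one frame more than the number of full hops; both are assumptions, as are the helper names and the random array standing in for a stereo mel-spectrogram.

```python
import numpy as np

SAMPLE_RATE, HOP, N_MELS = 22050, 256, 128  # sampling rate is an assumed exact value

def n_frames(seconds, sample_rate=SAMPLE_RATE, hop=HOP):
    """Number of STFT frames for a clip, assuming centered framing."""
    return 1 + int(seconds * sample_rate) // hop

def random_crop(mel, crop_frames, rng):
    """Crop consecutive frames from a (channels, frames, mel_bins) array."""
    start = rng.integers(0, mel.shape[1] - crop_frames + 1)
    return mel[:, start:start + crop_frames, :]

rng = np.random.default_rng(0)
crop_frames = n_frames(2.5)                                   # 216
preview = rng.normal(size=(2, n_frames(30.0), N_MELS))        # toy 30-s stereo preview
X_star = random_crop(preview, crop_frames, rng)
```

Drawing a new crop position each time a song is selected means that the network rarely sees exactly the same excerpt twice, which is the dataset-inflating effect mentioned above.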

Fig. 4 Default CNN architecture for supervised single-source representation learning. Details of the representation network are presented at the left of the global architecture diagram. The numbers inside the parentheses indicate either the number of filters or the number of units with respect to the type of layer

3.2 Multi-source architectures with various degrees of shared information

When learning a music representation based on various available learning sources, different strategies can be taken regarding the choice of architecture. We will investigate the following setups:

As a base case, a Single-Source Representation (SS-R) can be learned for a single source only. As mentioned earlier, this would be the typical strategy leading to pre-trained networks that would later be used in transfer learning. In our case, our base architecture from Sect. 3.1 and Fig. 4 will be used, for which the layers in the representation network also are illustrated in Fig. 5a. Out of the fc-feature layer, a d-dimensional representation is obtained.

If multiple perspectives on the same content, as reflected by the multiple learning labels, should also be reflected in the learned representation, one can learn SS-R representations for each learning source and simply concatenate them afterward. With d dimensions per source and m sources, this leads to a \(d \times m\)-dimensional Multiple Single-Source Concatenated Representation (MSS-CR). In this case, independent networks are trained for each of the sources, and no shared knowledge will be transferred between sources. A layer setup of the corresponding representation network is illustrated in Fig. 5b.

When applying MTL strategies, the deep architecture should involve shared knowledge layers, before branching out to various individual learning sources, whose learned representations will be concatenated in the final \(d \times m\)-dimensional representation. We call these Multi-Source Concatenated Representations (MS-CR). As the branching point can be chosen at different stages, we will investigate the effect of various prototypical branching point choices: at the second convolution layer (MS-CR@2, Fig. 5c), the fourth convolution layer (MS-CR@4, Fig. 5d), and the sixth convolution layer (MS-CR@6, Fig. 5e). The later the branching point occurs, the more shared knowledge the network will employ.

In the most extreme case, branching would only occur at the very last fully connected layer, and a Multi-Source Shared Representation (MS-SR) (or, more specifically, MS-SR@FC) is learned, as illustrated in Fig. 5f. As the representation is obtained from the fc-feature layer, no concatenation takes place here, and a d-dimensional representation is obtained.
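The dimensionalities that follow from these setups can be summarized in a small helper. This is an illustrative sketch only: the function name is hypothetical, and the default \(d=256\) is taken from the FC(256) fc-feature layer mentioned in the architecture illustrations.

```python
CONCATENATED = ("MSS-CR", "MS-CR@2", "MS-CR@4", "MS-CR@6")


def representation_dim(strategy, n_sources, d=256):
    """Size of the representation taken from the fc-feature layer(s).

    SS-R and MS-SR@FC yield d dimensions; the concatenated strategies
    yield d dimensions per learning source.
    """
    if strategy == "SS-R":
        if n_sources != 1:
            raise ValueError("SS-R uses exactly one learning source")
        return d
    if strategy == "MS-SR@FC":
        return d
    if strategy in CONCATENATED:
        return d * n_sources
    raise ValueError(f"unknown strategy: {strategy}")
```

With all 8 learning sources, a concatenated strategy would thus produce a 2048-dimensional representation, whereas MS-SR@FC stays at 256 dimensions.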

A summary of these different representation learning architectures is given in Table 4. Beyond the strategies we choose, further approaches can be thought of to connect representations learned for different learning sources in neural network architectures. For example, for different tasks, representations can be extracted from different intermediate hidden layers, benefiting from the hierarchical feature encoding capability of the deep network [26]. However, considering that learned representations are usually taken from a specific fixed layer of the shared architecture, we focus on the strategies as we outlined above.

The various model architectures considered in the current work. Beyond single-source architectures, multi-source architectures with various degrees of shared information are studied. For simplification, multi-source cases are illustrated here for two sources. The fc-feature layer from which representations will be extracted is the FC(256) layer in the illustrations (see Table 3)

3.3 MTL training procedure

Similar to [4, 11], we choose to train the MTL models with a stochastic update scheme as described in Algorithm 1. At every iteration, a learning source is selected randomly. After the learning source is chosen, a batch of observation-label pairs \((X, z_{t})\) is drawn. For the audio previews belonging to the songs within this batch, an input representation \(X^*\) is cropped randomly from its super-sample X. The updates of the parameters \(\varTheta\) are conducted through back-propagation using the Adam algorithm [67]. For each neural network we train, we set \(L=lm\), where l is the number of iterations needed to visit all the training samples with fixed batch size \(b=128\), and m is the number of learning sources used in the training. Across the training, we used a fixed learning rate \(\epsilon =0.00025\). After a fixed number of epochs N is reached, we stop the training.
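The stochastic update scheme described above can be sketched as follows. This is not a reproduction of Algorithm 1; it only illustrates the control flow under stated assumptions. The `step` callback is hypothetical and stands for everything done for the chosen source (drawing a batch \((X, z_{t})\), cropping \(X^*\), and applying one Adam update), and l is assumed to be the number of batches rounded up.

```python
import math
import random


def train_mtl(source_names, n_train, n_epochs, step, batch_size=128, rng=random):
    """Run a stochastic multi-source training loop.

    At every iteration one learning source is chosen uniformly at random
    and `step(source)` performs a single parameter update for it.
    Returns L, the number of iterations per epoch.
    """
    l = math.ceil(n_train / batch_size)  # iterations to visit all samples once
    m = len(source_names)                # number of learning sources
    iterations_per_epoch = l * m         # L = l * m
    for _ in range(n_epochs):
        for _ in range(iterations_per_epoch):
            source = rng.choice(source_names)
            step(source)
    return iterations_per_epoch
```

Because sources are sampled at random, each source receives roughly, but not exactly, the same number of updates per epoch.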

3.4 Implementation details

We used PyTorch [68] to implement the CNN models and parallel data serving. For the evaluation of models and cross-validation, we made extensive use of functionality in Scikit-Learn [69]. Furthermore, Librosa [70] was used to process audio files and extract raw features, including mel-spectrograms. The training was conducted with 8 Graphics Processing Unit (GPU) computation nodes, composed of 2 NVIDIA GRID K2 GPUs and 6 NVIDIA GTX 1080Ti GPUs.

Overall system framework. The first row of the figure illustrates the learning scheme, where the representation learning is happening by minimizing the KL divergence between the network inference \(f_t(X)\) and the preprocessed learning label \(z_t\). The preprocessing is conducted by the blue blocks which transform the original noisy labels \(y_t\) to \(z_t\), reducing noise and summarizing the high-dimensional label space into a smaller latent space. The second row describes the entire evaluation scenario. The representation is first extracted from the representation network, which is transferred from the upper row. The sequence of representation vectors is aggregated as the concatenation of their means and standard deviations. The purple block indicates a machine learning model employed to evaluate the representation’s effectiveness (color figure online)

4 Evaluation

So far, we discussed the details regarding the learning phase of this work, which corresponds to the upper row of Fig. 6. This included various choices of sources for the representation learning, and various choices of architecture and fusion strategies. In this section, we present the evaluation methodology we followed, as illustrated in the second row of Fig. 6. First, we will discuss the chosen target tasks and datasets in Sect. 4.1, followed in Sect. 4.2 by the baselines against which our representations will be compared. Section 4.3 explains our experimental design, and finally, we discuss the implementation of our evaluation experiments in Sect. 4.4.

4.1 Target datasets

In order to gain insight into the effectiveness of learned representations with respect to multiple potential future tasks, we consider a range of target datasets. In this work, our target datasets are chosen to reflect various semantic properties of music, purposefully chosen semantic biases, or popularity in the MIR literature. Furthermore, the representation network should not be configured or learned to explicitly solve the chosen target datasets.

While for the learning sources, we could provide categorizations on where and how the learning labels were derived, and also consider algorithmic outcomes as labels, the existing popular research datasets mostly fall in the Professional or Crowd categories. In our work, we choose 7 evaluation datasets commonly used in MIR research, which reflect three conventional types of MIR tasks, namely classification, regression, and recommendation:

Classification. Different types of classification tasks exist in MIR. In our experiments, we consider several datasets used for genre classification and instrument classification.

For genre classification, we chose the GTZAN [72] and FMA [71] datasets as main exemplars. Even though GTZAN is known for its caveats [79], we deliberately used it, because its popularity facilitates comparison with previous and future work. We note though that there may be some overlap between the tracks of GTZAN and the subset of the MSD we use in our experiments; the extent of this overlap is unknown, due to the lack of a confirmed and exhaustive track listing of the GTZAN dataset. We use the fault-filtered data split suggested in [73] for training and evaluation. The split originally includes a training, validation and evaluation split; in our case, we also included the validation split as training data.

Among the various packages provided by the FMA, we chose the top-genre classification task of FMA-Medium [71]. This is a classification dataset with an unbalanced genre distribution. We used the data split provided by the dataset for our experiment, where the training and validation sets are combined for training.

Considering another type of genre classification, we selected the Extended Ballroom dataset [74, 75]. Because the classes in this dataset are highly separable with regard to their BPM [80], we specifically included this ‘purposefully biased’ dataset as an example of how a learned representation may effectively capture temporal dynamics properties present in a target dataset, as long as learning sources also reflected these properties. Since no pre-defined split is provided or suggested by other literature, we used stratified random sampling based on the genre label.

The last dataset we considered for classification is the training set of the IRMAS dataset [76], which consists of short music clips annotated with the predominant instruments present in the clip. Compared to the genre classification task, instrument classification is generally considered less subjective, requiring features to separate timbral characteristics of the music signal as opposed to high-level semantics like the genre. We split the dataset such that observations from the same music track do not end up in both the training and test sets.

As a performance metric for all these classification tasks, we used classification accuracy.

Regression. As exemplars of regression tasks, we evaluate our proposed deep representations on the dataset used in the MediaEval Music Emotion prediction task [77]. It contains frame-level and song-level labels of a two-dimensional representation of emotion, with valence and arousal as dimensions [81]. Valence is related to the positivity or negativity of the emotion, and arousal is related to its intensity [77]. The song-level annotation of the V-A coordinates was used as the learning label. In similar fashion to the approach taken in [26], we trained separate models for the two emotional dimensions. As for the dataset split, we used the split provided by the dataset, which is a random split stratified by genre distribution.

As an evaluation metric, we measured the coefficient of determination \(R^{2}\) of each model.
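For reference, the coefficient of determination compares the squared error of a model against that of always predicting the mean of the targets. The following is a minimal sketch of the standard definition; the function name is illustrative and this is not the evaluation code used in the experiments.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot
```

A perfect prediction gives 1, predicting the mean gives 0, and a model worse than the mean gives a negative value.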

Recommendation. Finally, we employed the ‘Last.fm - 1K users’ dataset [78] to evaluate our representations in the context of a content-aware music recommendation task (which will be denoted as Lastfm in the remainder of the paper). This dataset contains 19 million records of listening events across 961,416 unique tracks collected from 992 unique users. In our experiments, we mimicked a cold-start recommendation problem, in which items not seen before should be recommended to the right users. For efficiency, we filtered out users who listened to fewer than 5 tracks and tracks known to fewer than 5 users.

As for the audio content of each track, we obtained the mapping between the MusicBrainz Identifier (MBID) and the Spotify identifier (SpotifyID) using the MusicBrainz API (Footnote 3). After cross-matching, we collected 30 s previews of all tracks using the Spotify API (Footnote 4). We found that there is a substantial amount of missing mapping information between the SpotifyID and MBID in the MusicBrainz database, where only approximately 30% of mappings are available. Also, because of the substantial amount of inactive users and unpopular tracks in the dataset, we ultimately acquired a dataset of 985 unique users and 27,093 unique tracks with audio content.

Similar to [28], we considered the outer matrix performance for un-introduced songs; in other words, the model’s recommendation accuracy on the items newly introduced to the system [28]. This was done by holding out certain tracks when learning user models and then predicting user preference scores based on all tracks, including those that were held out, resulting in a ranked track list per user. As an evaluation metric, we consider Normalized Discounted Cumulative Gain (nDCG@500), only treating held-out tracks that were indeed liked by a user as relevant items. Further details on how hold-out tracks were chosen are given in Sect. 4.4.
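As an illustration of the metric, the sketch below computes nDCG@k for one user with binary relevance and a base-2 logarithmic discount. This is one common definition and is assumed here; the exact variant used in the experiments is not specified in this section, and the function names are hypothetical.

```python
import math


def dcg_at_k(ranked_items, relevant, k):
    """Discounted cumulative gain over the top-k items (binary relevance)."""
    return sum(
        1.0 / math.log2(rank + 2)  # rank 0 gets discount log2(2) = 1
        for rank, item in enumerate(ranked_items[:k])
        if item in relevant
    )


def ndcg_at_k(ranked_items, relevant, k):
    """DCG normalized by the DCG of an ideal ranking of the relevant items."""
    ideal_hits = min(len(relevant), k)
    if ideal_hits == 0:
        return 0.0
    ideal = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg_at_k(ranked_items, relevant, k) / ideal
```

Here `ranked_items` would be the ranked track list of a user and `relevant` the set of held-out tracks that the user indeed liked; per-user scores would then be averaged.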

A summary of all evaluation datasets, their origins, and properties, can be found in Table 5.

4.2 Baselines

We examined three baselines to compare with our proposed representations:

Mel-Frequency Cepstral Coefficient (MFCC). These are some of the most popular audio representations in MIR research. In this work, we extract and aggregate MFCCs following the strategy in [26]. In particular, we extracted 20 coefficients and also used their first- and second-order derivatives. After obtaining the sequence of MFCCs and their derivatives, we performed aggregation by taking the average and standard deviation over the time dimension, resulting in a 120-dimensional vector representation.

Random Network Feature (Rand). We extracted the representation at the fc-feature layer without any representation network training. With random initialization, this representation, therefore, gives a random baseline for a given CNN architecture. We refer to this baseline as Rand.

Latent Representation from Music Auto-Tagger (Choi). The work in [26] focused on a music auto-tagging task and can be considered as yielding a state-of-the-art deep music representation for MIR. While the model’s focus on learning a representation for music auto-tagging can be considered as our SS-R case, there are a number of issues that complicate direct comparisons between this work and ours. First, the network in [26] is trained with about 4 times more data samples than in our experiments. Second, it employed a much smaller network than our architecture. Further, intermediate representations were extracted, which is out of the scope of our work, as we only consider representations at the fc-feature layer. Nevertheless, despite these caveats, the work still is very much in line with ours, making it a clear candidate for comparison. Throughout the evaluation, we could not fully reproduce the performance reported in the original paper [26]. When reporting our results, we, therefore, will report the performance we obtained with the published model, referring to this as Choi.

4.3 Experimental design

In order to investigate our research questions, we carried out an experiment to study the effect of the number and type of learning sources on the effectiveness of deep representations, as well as the effect of the various architectural learning strategies described in Sect. 3.2. For the experimental design, we consider the following factors:

Representation strategy, with 6 levels: SS-R, MS-SR@FC, MS-CR@6, MS-CR@4, MS-CR@2, and MSS-CR.

Eight 2-level factors indicating the presence or absence of each of the 8 learning sources: self, year, bpm, taste, tag, lyrics, cdr_tag, and artist.

Number of learning sources present in the learning process (1 to 8). Note that this is actually calculated as the sum of the eight factors above.

Target dataset, with 7 levels: Ballroom, FMA, GTZAN, IRMAS, Lastfm, Arousal, and Valence.

Given a learned representation, fitting dataset-specific models is much more efficient than learning the representation, so we decided to evaluate each representation on all 7 target datasets. The experimental design is thus restricted to combinations of representation and learning sources, and for each such combination we will produce 7 observations. However, given the constraint that SS-R relies on a single learning source, the fact that there is only one possible combination for n = 8 sources, and the high unbalance in the number of sources (Footnote 5), we proceeded in three phases:

1. We first trained the SS-R representations for each of the 8 sources, repeating each 6 times. This resulted in 48 experimental runs.

2. We then proceeded to train all five multi-source strategies with all sources, that is, \(n=8\). We repeated this 5 times, leading to 25 additional experimental runs.

3. Finally, we ran all five multi-source strategies with \(n=2,\dots ,7\). With 5 representation strategies and every combination of 2 to 7 of the 8 sources (246 combinations), the full design matrix would contain a total of 1230 possible runs. Such an experiment was unfortunately infeasible to run exhaustively given available resources, so we decided to follow a fractional design. However, rather than using a pre-specified optimal design with a fixed amount of runs [83], we decided to run sequentially for as long as time would permit us, generating at each step a new experimental run on demand in a way that would maximize desired properties of the design up to that point, such as balance and orthogonality (Footnote 6).

We did this with the greedy Algorithm 2. From the set of still remaining runs \({\mathcal {A}}\), a subset \({\mathcal {O}}\) is selected such that the expected unbalance in the augmented design \({\mathcal {B}}\cup \{o\}\) is minimal. In this case, the unbalance of a design is defined as the maximum unbalance found between the levels of any factor, except for those already exhausted (Footnote 7). From \({\mathcal {O}}\), a second subset \({\mathcal {P}}\) is selected such that the expected aliasing in the augmented design is minimal, here defined as the maximum absolute aliasing between main effects (Footnote 8). Finally, a run p is selected at random from \({\mathcal {P}}\), the corresponding representation is learned, and the algorithm iterates again after updating \({\mathcal {A}}\) and \({\mathcal {B}}\).
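The two-stage greedy selection can be sketched as below. This is an illustration of the selection logic only, not a reproduction of Algorithm 2: the `unbalance` and `aliasing` callables are hypothetical placeholders that score a candidate design, and their precise definitions are not given in this section.

```python
import random


def select_next_run(remaining, design, unbalance, aliasing, rng=random):
    """Pick the next experimental run with a two-stage greedy rule.

    `remaining` is the list of runs not yet executed (set A), `design` is
    the list of runs executed so far (set B). Runs must be hashable.
    """
    # Stage 1: keep the runs whose addition minimizes unbalance (set O).
    unbalance_scores = {run: unbalance(design + [run]) for run in remaining}
    best_unbalance = min(unbalance_scores.values())
    candidates = [r for r in remaining if unbalance_scores[r] == best_unbalance]

    # Stage 2: among those, keep the runs minimizing aliasing (set P).
    aliasing_scores = {run: aliasing(design + [run]) for run in candidates}
    best_aliasing = min(aliasing_scores.values())
    finalists = [r for r in candidates if aliasing_scores[r] == best_aliasing]

    # Ties are broken at random.
    return rng.choice(finalists)
```

After each selection, the chosen run would be removed from `remaining` and appended to `design` before the next call.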

Following this on-demand methodology, we completed another 352 of the 1230 possible experimental runs.

After going through the three phases above, the final experiment contained \(48+25+352=425\) experimental runs, each producing a different deep music representation. We further evaluated each representation on all 7 target datasets, leading to a grand total of \(425\times 7=2975\) data points. Figure 7 plots the alias matrix of the final experimental design, showing that the aliasing among main factors is indeed minimal. The final experimental design matrix can be downloaded along with the rest of the supplemental material.
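The run counts reported in this section can be checked with a few lines of arithmetic. The sketch below only restates numbers given in the text; the variable names are illustrative.

```python
from math import comb

N_SOURCES = 8
N_MULTI_SOURCE_STRATEGIES = 5
N_TARGET_DATASETS = 7

# Number of ways to choose between 2 and 7 of the 8 learning sources.
source_subsets = sum(comb(N_SOURCES, n) for n in range(2, 8))

# Size of the full design for the third phase.
phase3_possible = N_MULTI_SOURCE_STRATEGIES * source_subsets

phase1_runs = 8 * 6   # SS-R: 8 sources, 6 repetitions each
phase2_runs = 5 * 5   # 5 multi-source strategies with n = 8, 5 repetitions
phase3_runs = 352     # runs completed with the on-demand design

total_runs = phase1_runs + phase2_runs + phase3_runs
data_points = total_runs * N_TARGET_DATASETS
```

Evaluating these expressions gives 246 source subsets, 1230 possible third-phase runs, 425 runs in total, and 2975 data points.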

Each considered representation network was trained using the CNN representation network model from Sect. 3, based on the specific combination of learning sources and deep architecture as indicated by the experimental run. In order to reduce variance, we fixed the number of training epochs to \(N = 200\) across all runs and applied the same base architecture, except for the branching point. This entire training procedure took approximately 5 weeks with the computational hardware resources described in Sect. 3.4.

4.4 Implementation details

In order to assess how our learned deep music representations perform on the various target datasets, transfer learning will now be applied, to consider our representations in the context of these new target datasets.

As a consequence, new machine learning pipelines are set up, focused on each of the target datasets. In all cases, we applied the pre-defined split where feasible. Otherwise, we randomly split the dataset into an 80% training set and 20% test set. For every dataset, we repeated the training and evaluation 5 times, using different train/test splits. In most of our evaluation cases, validation will take place on the test set; in case of the recommendation problem, the test set represents a set of tracks to be held out from each user during model training, and re-inserted for validation. In all cases, we will extract representations from evaluation dataset audio as detailed in Sect. 4.4.1, and then learn relatively simple models based on them, as detailed in Sect. 4.4.2. Employing the metrics as mentioned in the previous section, we will then take average performance scores over the 5 different train/test splits for final performance reporting.

4.4.1 Feature extraction and preprocessing

Taking raw audio from the evaluation datasets as input, we take non-overlapping slices out of this audio with a fixed length of 2.5 s. Based on this, we apply the same preprocessing transformations as discussed in Sect. 3.1.1. Then, we extract a deep representation from this preprocessed audio, employing the architecture as specified by the given experimental run. As in the case of Sect. 3.2, representations are extracted from the fc-feature layer of each trained CNN model. Depending on the choice of architecture, the final representation may consist of concatenations of representations obtained by separate representation networks.

Input audio may originally be (much) longer than 2.5 s; therefore, we aggregate information in feature vectors over multiple time slices by taking their mean and standard deviation values. As a result, we get one representation with averages per learned feature dimension and another with standard deviations per feature dimension. These will be concatenated, as illustrated in Fig. 6.
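The aggregation over time slices can be sketched as follows. The example assumes the population standard deviation (dividing by the number of slices); the text does not state which estimator was used, and the function name is hypothetical.

```python
from statistics import mean, pstdev


def aggregate_slices(slice_features):
    """Summarize per-slice feature vectors into one track-level vector.

    `slice_features` holds one feature vector per 2.5 s slice. The result
    is the per-dimension means followed by the per-dimension standard
    deviations, so a d-dimensional input yields 2d values.
    """
    per_dimension = list(zip(*slice_features))
    means = [mean(values) for values in per_dimension]
    stds = [pstdev(values) for values in per_dimension]
    return means + stds
```

For a 256-dimensional representation, the aggregated track-level vector would therefore have 512 entries.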

4.4.2 Target dataset-specific models

As our goal is not to over-optimize dataset-specific performance, but rather perform a comparative analysis between different representations (resulting from different learning strategies), we keep the model simple and use fixed hyper-parameter values for each model across the entire experiment.

To evaluate the trained representations, we used different models according to the target dataset. For classification and regression tasks, we used the multilayer perceptron (MLP) model [84]. More specifically, the MLP model has two hidden layers, each with 256 units. As for the nonlinearity, we choose ReLU [62] for all nodes, and the model is trained with the Adam optimization technique [67] for 200 iterations. In the evaluation, we used Scikit-Learn's implementation for ease of distributed computing on multiple CPU computation nodes.

For the recommendation task, we choose a similar model as suggested in [28, 85], in which the learning objective function \({\mathcal {L}}\) is defined as

where \(P\in {\mathbb {R}}^{u\times {i}}\) is a binary matrix indicating whether there is interaction between users u and items i, \(U\in {\mathbb {R}}^{u\times {r}}\) and \(V\in {\mathbb {R}}^{i\times {r}}\) are r-dimensional user factors and item factors for the low-rank approximation of P. P is derived from the original interaction matrix \(R\in {\mathbb {R}}^{u\times {i}}\), which contains the number of interactions from users u to items i, as follows:

\(W\in {\mathbb {R}}^{d\times {r}}\) is a free parameter for the projection from d-dimensional feature space to the factor space. \(X\in {\mathbb {R}}^{i\times {d}}\) is the feature matrix where each row corresponds to a track. Finally, \(||\cdot ||_{C}\) is the Frobenius norm weighted by the confidence matrix \(C\in {\mathbb {R}}^{u\times {i}}\), which controls the credibility of the model on the given interaction data, given as follows:

where \(\alpha\) controls credibility. As for hyper-parameters, we set \(\alpha =0.1\), \(\lambda ^{V}=0.00001\), \(\lambda ^{U}=0.00001\), and \(\lambda ^{W}=0.1\), respectively. For the number of factors we choose \(r=50\) to focus only on the relative impact of the representation over the different conditions. We implemented an update rule with the alternating least squares (ALS) algorithm similar to [28], and updated parameters during 15 iterations.
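For orientation, one form of the objective that is consistent with the symbols defined above is written out below. It should be read as an assumption based on common practice for content-aware weighted matrix factorization, not as a reproduction of the original equations: the squared norms, the factors of one half, the thresholding of R at zero, and the linear form of C are all conventional choices assumed here.

\[
{\mathcal {L}} = \Vert P - UV^{\top }\Vert _{C}^{2} + \frac{\lambda ^{V}}{2}\Vert V - XW\Vert ^{2} + \frac{\lambda ^{U}}{2}\Vert U\Vert ^{2} + \frac{\lambda ^{W}}{2}\Vert W\Vert ^{2}
\]

\[
P_{u,i} = {\left\{ \begin{array}{ll} 1 &{} \text {if } R_{u,i} > 0 \\ 0 &{} \text {otherwise} \end{array}\right. } \qquad C_{u,i} = 1 + \alpha R_{u,i}
\]

Under this reading, the term \(\Vert V - XW\Vert ^{2}\) ties the item factors to the audio representation, which is what allows scoring tracks that have no interaction data.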

5 Results and discussion

In this section, we present results and discussion related to the proposed deep music representations. In Sect. 5.1, we will first compare the performance across the SS-Rs, to show how different individual learning sources work for each target dataset. Then, we will present general experimental results related to the performance of the multi-source representations. In Sect. 5.2, we discuss the effect of the number of learning sources exploited in the representation learning, in terms of their general performance, reliability, and model compactness. In Sect. 5.3, we discuss the effectiveness of different representations in MIR. Finally, we present some initial evidence for multifaceted semantic explainability of the proposed MTDTL in Sect. 5.5 (Footnote 9).

5.1 Single-source and multi-source representation

Figure 8 presents the performance of SS-R representations on each of the 7 target datasets. We can see that all sources tend to outperform the Rand baseline on all datasets, except for a handful of cases involving sources self and bpm. Looking at the top performing sources, we find that tag, cdr_tag, and artist perform better than or on par with the most sophisticated baseline, Choi, except for the IRMAS dataset. The other sources are found somewhere between these two baselines, except for datasets Lastfm and Arousal, where they perform better than Choi as well. Finally, the MFCC is generally outperformed in all cases, with the notable exception of the IRMAS dataset, where only Choi performs better.

Zooming in to dataset-specific observed trends, the bpm learning source shows a highly skewed performance across target datasets: it clearly outperforms all other learning sources in the Ballroom dataset, but it achieves the worst or second-worst performance in the other datasets. This is in line with the finding in [80] that the Ballroom dataset is well-separable based on BPM information alone. Indeed, representations trained on the bpm learning source seem to contain a latent representation close to the BPM of an input music signal. In contrast, we can see that the bpm representation achieves the worst results in the Arousal dataset, where both temporal dynamics and BPM are considered as important factors determining the intensity of emotion.

On the IRMAS dataset, we see that all the SS-Rs perform worse than the MFCC and Choi baselines. Given that they both take into account low-level features, either by design or by exploiting low-level layers of the neural network, this suggests that predominant instrument sounds are harder to distinguish based solely on semantic features, which is the case of the representations studied here.

Also, we find that there is small variability for each SS-R run within the training setup we applied. Specifically, in 50% of cases, we have within-SS-R variability less than 15% of the within-dataset variability. 90% of the cases are within 30% of the within-dataset variability.

We now consider how the various representations based on multiple learning sources perform, in comparison to those based on single learning sources. The boxplots in Fig. 9 show the distributions of performance scores for each architectural strategy and per target dataset. For comparison, the gray boxes summarize the distributions depicted in Fig. 8, based on the SS-R strategy. In general, we can see that the SS-R representations obtain the lowest scores, followed by MS-SR@FC, except for the IRMAS dataset. Given that these representations have the same dimensionality, these results suggest that adding a single-source-specific layer on top of a heavily shared model may help to improve the adaptability of the neural network models, especially when there is no prior knowledge regarding the well-matching learning sources for the target datasets. The MS-CR and MSS-CR representations obtain the best results in general, which is somewhat expected because of their larger dimensionality.

5.2 Effect of number of learning sources and fusion strategy

While the plots in Fig. 9 suggest that MSS-CR and MS-CR are the best strategies, the high observed variability makes this statement still rather unclear. In order to gain a better insight into the effects of the dataset, architecture strategies, and number and type of learning sources, we further analyzed the results using a hierarchical or multi-level linear model on all observed scores [86]. The advantage of such a model is essentially that it accounts for the structure in our experiment, where observations nested within datasets are not independent.

From Fig. 9, we can anticipate a very large dataset effect because of the inherently different levels of difficulty, as well as a high level of heteroskedasticity. We, therefore, analyzed standardized performance scores rather than raw scores. In particular, the i-th performance score \(y_i\) is standardized with the within-dataset mean and standard deviation scores, that is, \(y^*_i=(y_i - {\bar{y}}_{d[i]})/s_{d[i]}\), where d[i] denotes the dataset of the i-th observation. This way, the dataset effect is effectively 0 and the variance is homogeneous. In addition, this will allow us to compare the relative differences across strategies and number of sources using the same scale in all datasets.
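The within-dataset standardization can be illustrated with a short sketch. It assumes the sample standard deviation (dividing by the number of observations minus one); the text does not specify the estimator, and the function name is hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def standardize_within_dataset(scores, datasets):
    """Return y*_i = (y_i - mean_d[i]) / s_d[i] for every observation.

    `scores[i]` is the raw performance score of observation i and
    `datasets[i]` is the name of the target dataset it was measured on.
    """
    groups = defaultdict(list)
    for score, dataset in zip(scores, datasets):
        groups[dataset].append(score)
    stats = {d: (mean(values), stdev(values)) for d, values in groups.items()}
    return [
        (score - stats[dataset][0]) / stats[dataset][1]
        for score, dataset in zip(scores, datasets)
    ]
```

After this transformation, scores measured on different scales, such as accuracy and \(R^{2}\), become directly comparable across datasets.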

We also transformed the variable n that refers to the number of sources to \(n^*\), which is set to \(n^*=0\) for SS-Rs and to \(n^*=n-2\) for the other strategies. This way, the intercepts of the linear model will represent the average performance of each representation strategy in its simplest case, that is, SS-R (\(n=1\)) or non-SS-R with \(n=2\). We fitted a first analysis model as follows:

where \(\beta _{0r[i]d[i]}\) is the intercept of the corresponding representation strategy within the corresponding dataset. Each of these coefficients is defined as the sum of a global fixed effect \(\beta _{0r}\) of the representation, and a random effect \(u_{0rd}\) which allows for random within-dataset variation (Footnote 10). This way, we separate the effects of interest (i.e., each \(\beta _{0r}\)) from the dataset-specific variations (i.e., each \(u_{0rd}\)). The effect of the number of sources is similarly defined as the sum of a fixed representation-specific coefficient \(\beta _{1r}\) and a random dataset-specific coefficient \(u_{1rd}\). Because the slope depends on the representation, we are thus implicitly modeling the interaction between strategy and number of sources, which can be appreciated in Fig. 10, especially with MS-SR@FC.
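Written out from this description, the first model would take a form such as the following. The residual symbol \(e_i\) and the slope notation \(\beta _{1r[i]d[i]}\) are assumptions introduced here for illustration; the remaining symbols are those defined in the text.

\[
y^*_i = \beta _{0r[i]d[i]} + \beta _{1r[i]d[i]}\, n^*_i + e_i, \qquad \beta _{0rd} = \beta _{0r} + u_{0rd}, \qquad \beta _{1rd} = \beta _{1r} + u_{1rd}
\]

In this form, each representation strategy r has its own intercept and its own slope with respect to \(n^*\), and both are allowed to vary randomly across datasets d.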

Figure 11 shows the estimated effects and bootstrap 95% confidence intervals. The left plot confirms the observations in Fig. 9. In particular, it confirms that SS-R performs significantly worse than MS-SR@FC, which in turn performs significantly worse than the others. When carrying out pairwise comparisons, MSS-CR outperforms all other strategies except MS-CR@2 (\(p=0.32\)), which outperforms all others except MS-CR@6 (\(p=0.09\)). The right plot confirms the qualitative observation from Fig. 10 by showing a significantly positive effect of the number of sources except for MS-SR@FC, where it is not statistically different from 0. The intervals suggest a very similar effect in the best representations, with average increments of about 0.16 per additional source (recall that scores are standardized).

To gain better insight into differences across representation strategies, we used a second hierarchical model where the representation strategy was modeled as an ordinal variable \(r^*\) instead of the nominal variable r used in the first model. In particular, \(r^*\) represents the size of the network, so we coded SS-R as 0, MS-SR@FC as 0.2, MS-CR@6 as 0.4, MS-CR@4 as 0.6, MS-CR@2 as 0.8, and MSS-CR as 1 (see Fig. 5). In detail, this second model is as follows:

In contrast to the first model, there is no representation-specific fixed intercept but an overall intercept \(\beta _0\). The effect of the network size is similarly modeled as the sum of an overall fixed slope \(\beta _{10}\) and a random dataset-specific effect \(u_{1d}\). Likewise, this model includes the main effect of the number of sources (fixed effect \(\beta _{20}\)), as well as its interaction with the network size (fixed effect \(\beta _{30}\)). Figure 12 shows the fitted coefficients, confirming the statistically significant positive effect of the size of the networks and, to a smaller degree but still significant, of the number of sources. The interaction term is not statistically significant, probably because of the unclear benefit of the number of sources in MS-SR@FC.
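A form of the second model consistent with this description is sketched below. It shows the random effect only for the network-size slope, because that is the only one named explicitly in the text; whether the intercept and the last two terms also carry dataset-specific random effects is not stated here, so their omission is an assumption, as is the residual symbol \(e_i\).

\[
y^*_i = \beta _0 + \beta _{1d[i]}\, r^*_i + \beta _{20}\, n^*_i + \beta _{30}\, r^*_i n^*_i + e_i, \qquad \beta _{1d} = \beta _{10} + u_{1d}
\]

Because \(r^*\) is coded on a scale from 0 to 1, the slope \(\beta _{10}\) can be read as the expected difference in standardized score between SS-R and MSS-CR when \(n^*=0\).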

Overall, these analyses confirm that all multi-source strategies outperform the single-source representations, with a direct relation to the number of parameters in the network. In addition, there is a clearly positive effect of the number of sources, with a minor interaction between both factors.

Figure 10 also suggests that the variability of performance scores decreases with the number of learning sources used. This implies that if more learning sources are available, one can expect less variability across instantiations of the network. Most importantly, the variability obtained for a single learning source (\(n=1\)) is always larger than the variability with 2 or more sources. The Ballroom dataset shows much smaller variability when bpm is included in the combination. For this specific dataset, this indicates that once bpm is used to learn the representation, the expected performance is stable and does not vary much, even if we keep including more sources. Section 5.3 provides more insight in this regard.

5.3 Single source versus multi-source

(Standardized) performance by number of learning sources. Solid points mark representations including the source performing best with SS-R in the dataset; empty points mark representations without it. Solid and dashed lines represent the corresponding linear fits; dashed areas represent 95% confidence intervals (color figure online)

The evidence so far tells us that, on average, learning from multiple sources leads to better performance than learning from a single source. However, it could be possible that the SS-R representation with the best learning source for the given target dataset still performs better than a multi-source alternative. In fact, in Fig. 10 there are many cases where the best SS-R representation (black circles at \(n=1\)) already performs quite well compared to the more sophisticated alternatives. Figure 13 presents similar scatter plots, but now explicitly differentiating between representations using the single best source (filled circles, solid lines) and not using it (empty circles, dashed lines). The results suggest that even if the strongest learning source for the specific dataset is not used, the others largely compensate for it in the multi-source representations, catching up and even surpassing the best SS-R representations. The exception to this rule is again bpm in the Ballroom dataset, where it definitely makes a difference. As the plots show, the variability for low numbers of learning sources is larger when not using the strongest source, but as more sources are added, this variability decreases.

To further investigate this issue, for each target dataset, we also computed the variance component due to each of the learning sources, excluding SS-R representations [87]. A large variance due to one of the sources means that, on average and for that specific dataset, there is a large difference in performance between having that source or not. Table 6 shows all variance components, highlighting the largest per dataset. Apart from bpm in the Ballroom dataset, there is no clear evidence that one single source is especially good in all datasets, which suggests that in general there is not a single source that one would use by default. Notably though, the sources artist, tag and self tend to have large variance components.

In addition, we observe that the sources with the largest variance are not necessarily the sources that obtain the best results by themselves in an SS-R representation (see Fig. 8). We examined this relationship further by calculating the correlation between variance components and (standardized) performance of the corresponding SS-Rs. The Pearson correlation is 0.38, meaning that there is a mild association. Figure 14 further shows this with a scatterplot, with a clear distinction between poorly-performing sources (year, taste and lyrics at the bottom) and well-performing sources (tag, cdr_tag, and artist at the right).
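As a small illustration of the correlation analysis described above, the following sketch computes a Pearson correlation between per-source variance components and standardized SS-R performance. The function name `pearson` and all numbers are hypothetical placeholders chosen for illustration; they are not values from Table 6 or from the study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if norm_x == 0 or norm_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (norm_x * norm_y)


# Hypothetical placeholder values, NOT results from the study:
# one entry per (learning source, target dataset) pair.
variance_components = [0.10, 0.40, 0.05, 0.30]
ssr_performance = [-0.5, 0.8, -1.0, 0.2]

r = pearson(variance_components, ssr_performance)
print(f"Pearson r = {r:.2f}")
```

A value near 0 would indicate no linear association, while values near 1 would mean that the sources that matter most in multi-source representations are also the ones that perform best on their own.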

This result implies that even if some SS-R is particularly strong for a given dataset, when considering more complex fusion architectures, the presence of that one source is not necessarily required because the other sources make up for its absence. This is especially important in practical terms, because different tasks generally have different best sources, and practitioners rarely have sufficient domain knowledge to select them up front. Also, and unlike the Ballroom dataset, many real-world problems are not easily solved with a single feature. Therefore, choosing a more general representation based on multiple sources is a much simpler way to proceed, which still yields comparable or better results.

In other words, if “a single deep representation to rule them all” is pre-trained, it is advisable to base this representation on multiple learning sources. At the same time, MSS-CR representations also generally show strong performance (albeit at the cost of high dimensionality), and they come ‘for free’ as soon as SS-R networks are trained. Alternatively, we could therefore imagine an ecosystem in which the community pre-trains and releases many SS-R networks for different individual sources in a distributed way, and practitioners then collect these into MSS-CR representations, without the need for retraining.
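The idea of collecting independently trained SS-R outputs into an MSS-CR representation can be sketched as a plain concatenation. This is a minimal illustration rather than the released implementation: the function `build_mss_cr`, the dictionary layout and the 2-dimensional toy vectors are assumptions made for this example.

```python
from typing import Dict, List, Sequence


def build_mss_cr(ssr_outputs: Dict[str, Sequence[float]],
                 sources: List[str]) -> List[float]:
    """Concatenate per-source SS-R vectors in the given source order."""
    representation: List[float] = []
    for name in sources:
        if name not in ssr_outputs:
            raise KeyError(f"no SS-R representation available for {name!r}")
        representation.extend(ssr_outputs[name])
    return representation


# Hypothetical SS-R outputs for one track (toy 2-dimensional vectors;
# real representations would each have dimensionality d).
ssr_outputs = {
    "tag": [0.1, 0.9],
    "artist": [0.3, 0.2],
    "bpm": [0.7, 0.4],
}

rep = build_mss_cr(ssr_outputs, ["tag", "bpm"])
print(rep)  # [0.1, 0.9, 0.7, 0.4]
```

Because no network is retrained, adding or dropping a source only changes which vectors are concatenated, at the cost of a representation whose size grows with the number of sources.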

5.4 Compactness

Under an MTDTL setup with branching (the MS-CR architectures), as more learning sources are used, not only will the representation grow larger, but so will the deep network needed to learn it: see Fig. 15 for an overview of the number of model parameters required by the different architectures. When using all the learning sources, MS-CR@6, which for a considerable part consists of a shared network architecture and branches out relatively late, requires a network around 6.3 times larger than the one needed for SS-R. In contrast, MS-SR@FC, which is the most heavily shared MTDTL case, uses a network that is only 1.2 times larger than the network needed for SS-R.

Also, while the dimensionality of the representations resulting from the MSS-CR and various MS-CR architectures depends linearly on the chosen number of learning sources m (see Table 4), MS-SR@FC has a fixed dimensionality of d independent of m. Even so, for MS-SR@FC we do notice increasing performance as more learning sources are used, except on the IRMAS dataset. This implies that under MTDTL setups, the network does learn as much as possible from the multiple sources, even with fixed network capacity.

5.5 Multiple explanatory factors

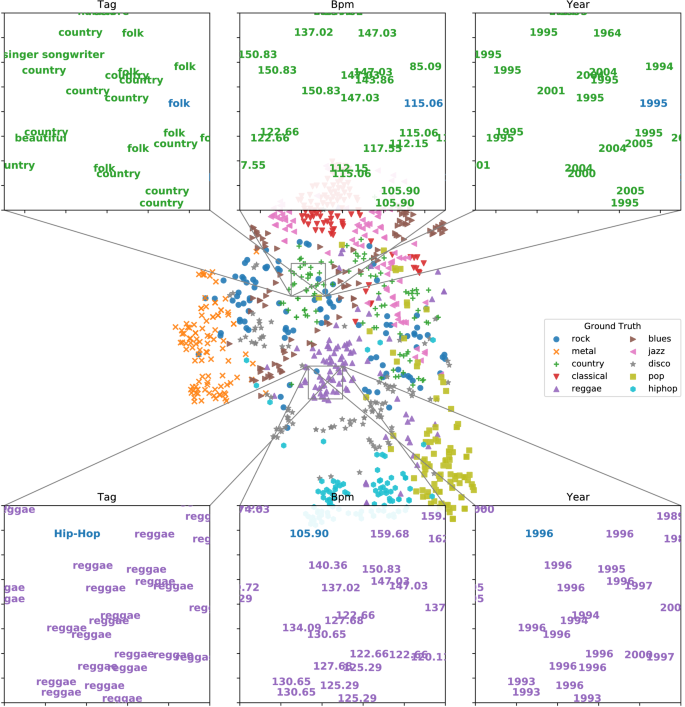

Potential semantic explainability of MTDTL music representations. Here, we provide a visualization using t-SNE [88], plotting 2-dimensional coordinates of each sample from the GTZAN dataset, as resulting from an MS-CR representation trained on 5 sources. In the zoomed-in panes, we overlay the strongest topic model terms in \(z_t\), for various types of learning sources. The specific model used in the visualization is the 232nd model from the experimental design we introduce in Sect. 4.3, which performs better than 95% of the other models on the GTZAN target dataset

By training representation models on multiple learning sources in the way we did, our hope is that the representation will reflect latent semantic facets that will ultimately allow for semantic explainability. In Fig. 16, we show a visualization that suggests this indeed may be possible. More specifically, we consider one of our MS-CR models trained on 5 learning sources. For each learning source-specific block of the representation, using the learning source-specific fc-out layers, we can predict a factor distribution \(z_t\) for each of the learning sources. Then, from the predicted \(z_t\), one can either map this back onto the original learning labels \(y_t\), or simply consider the strongest predicted topics (which we visualized in Fig. 16), to relate the representation to human-understandable facets or descriptions (see the final entry under Notes).
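The step from a source-specific block of the representation to its strongest topics can be sketched as below. This is a toy illustration under stated assumptions: the fc-out layer is assumed to be a linear layer followed by a softmax, and the function names, weights and inputs are hypothetical rather than taken from the trained models.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_factors(block: Sequence[float],
                    weights: Sequence[Sequence[float]],
                    bias: Sequence[float]) -> List[float]:
    """Assumed fc-out: one weight row per topic, followed by softmax."""
    logits = [sum(w * x for w, x in zip(row, block)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


def strongest_topics(z_t: Sequence[float], k: int = 2) -> List[int]:
    """Indices of the k topics with the highest predicted weight."""
    return sorted(range(len(z_t)), key=lambda i: z_t[i], reverse=True)[:k]


# Hypothetical toy block (2 dimensions) and fc-out parameters (3 topics).
block = [1.0, 0.0]
weights = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bias = [0.0, 0.0, 0.0]

z_t = predict_factors(block, weights, bias)
top = strongest_topics(z_t, k=2)
print(top)  # [0, 2]
```

The returned topic indices would then be looked up in the topic model of the corresponding learning source to obtain the terms overlaid on the visualization.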

6 Conclusion

In this paper, we have investigated the effect of different strategies to learn music representations with deep networks, considering multiple learning sources and different network architectures with varying degrees of shared information. Our main research questions were how the number and combination of learning sources (RQ1) and different configurations of the shared architecture (RQ2) affect the effectiveness of the learned deep music representation. To answer them, we conducted an experiment training 425 neural network models with different combinations of learning sources and architectures.

After an extensive empirical analysis, we can summarize our findings as follows:

RQ1 The number of learning sources positively affects the effectiveness of a learned deep music representation, although representations based on a single learning source will already be effective in specialized cases (e.g., bpm and the Ballroom dataset).

RQ2 In terms of architecture, the amount of shared information has a negative effect on performance: larger models with less shared information (e.g., MS-CR@2, MSS-CR) tend to outperform models where sharing is higher (e.g., MS-CR@6, MS-SR@FC), all of which outperform the base model (SS-R).

Our findings give various pointers to useful future work. First of all, ‘generality’ is difficult to define in the music domain, maybe more so than in CV or NLP, in which lower-level information atoms may be less multifaceted in nature (e.g., lower-level representations of visual objects naturally extend to many vision tasks, while an equivalent in music is harder to pinpoint). In case of clear task-specific data skews, practitioners should be pragmatic about this.

Also, we only investigated one special case of transfer learning, and our findings might not generalize well to settings in which the pre-trained network is further fine-tuned on the target dataset. Since fine-tuning involves various choices, which would bring a substantial amount of variability, we decided to leave these aspects for future work. We believe open-sourcing the models we trained throughout this work will be helpful for such follow-up work. Another limitation of the current work is the selective set of label types in the learning sources. For instance, a number of MIR-related tasks use time-variant labels, such as automatic music transcription, segmentation, beat tracking and chord estimation. We believe that such tasks should be investigated as well in the future to build a more complete overview of the MTDTL problem.

Finally, in our current work, we still largely considered MTDTL as a ‘black box’ operation, trying to learn how MTDTL can be effective. However, the original reason for starting this work was not only to yield an effective general-purpose representation, but one that also would be semantically interpretable according to different semantic facets. We showed some early evidence that our representation networks may be capable of picking up such facets; however, considerable future work will be needed on more in-depth techniques for analyzing what the deep representations actually learned.

Notes

For instance, from the 255 possible combinations of up to 8 sources, there are 70 combinations of \(n=4\) sources, but 28 with \(n=2\), or only 8 for \(n=7\). Simple random sampling from the 255 possible combinations would lead to a very unbalanced design, that is, a highly non-uniform distribution of observation counts across the levels of the factor (n in this case). A balanced design is desired to prevent aliasing and maximize statistical power. See section 15.2 in [82] for details on unbalanced designs.
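The counts in this note follow directly from binomial coefficients and can be checked with a few lines of Python. The variable names are chosen for illustration only.

```python
from math import comb

N_SOURCES = 8

# Number of distinct source combinations for each combination size n.
counts = {n: comb(N_SOURCES, n) for n in range(1, N_SOURCES + 1)}
total = sum(counts.values())

print(counts[2], counts[4], counts[7], total)  # 28 70 8 255
```

The strongly peaked distribution of `counts` around \(n=4\) is what makes simple random sampling over the 255 combinations unbalanced with respect to n.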

An experimental design is orthogonal if the effects of any factor balance out across the effects of the other factors. In a non-orthogonal design, effects may be aliased, meaning that the estimate of one effect is partially biased with the effect of another, the extent of which ranges from 0 (no aliasing) to 1 (full aliasing). Aliasing is sometimes referred to as confounding. See sections 8.5 and 9.5 in [82] for details on aliasing.

For instance, let a design have 20 runs for SS-R, 16 for MS-SR@FC, and 18 for all other representations. The unbalance in the representation factor is thus \(20-16=4\). The total unbalance of the design is defined as the maximum unbalance found across all factors.
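The definition in this note can be written down in a short sketch. The helper names and the second factor (`n_sources`, with made-up run counts) are hypothetical and only serve to show how the maximum across factors is taken; the run counts for the representation factor are those of the example above.

```python
from typing import Dict


def factor_unbalance(run_counts: Dict[str, int]) -> int:
    """Difference between the largest and smallest run count of a factor."""
    return max(run_counts.values()) - min(run_counts.values())


def design_unbalance(design: Dict[str, Dict[str, int]]) -> int:
    """Total unbalance: the maximum unbalance found across all factors."""
    return max(factor_unbalance(counts) for counts in design.values())


design = {
    # Run counts from the example in the note.
    "representation": {
        "SS-R": 20, "MS-SR@FC": 16, "MS-CR@6": 18,
        "MS-CR@4": 18, "MS-CR@2": 18, "MSS-CR": 18,
    },
    # Hypothetical second factor, for illustration only.
    "n_sources": {"1": 14, "2": 13, "3": 14},
}

print(factor_unbalance(design["representation"]))  # 4
print(design_unbalance(design))  # 4
```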

See section 2.3.7 in [83] for details on how to compute an alias matrix.

For reproducibility, we release all relevant materials including code, models and extracted features at https://github.com/eldrin/MTLMusicRepresentation-PyTorch.

We note that hierarchical models do not fit each of the individual \(u_{0rd}\) coefficients (a total of 42 in this model), but the amount of variability they produce, that is, \(\sigma ^2_{0r}\) (6 in total).

Note that as soon as a pre-trained representation network model is adapted to a new dataset through transfer learning, the fc-out layer can no longer be used to obtain such explanations from the learning sources used in the representation learning, since the layers will then be fine-tuned to another dataset. However, we hypothesize that the semantic explainability may still be preserved if fine-tuning is conducted jointly with the original learning sources used during pre-training, in the multi-objective strategy.

References

Casey MA, Veltkamp RC, Goto M, Leman M, Rhodes C, Slaney M (2008) Content-based music information retrieval: current directions and future challenges. Proc IEEE 96(4):668–696. https://doi.org/10.1109/JPROC.2008.916370

Caruana R (1997) Multitask learning. Mach Learn 28(1):41–75. https://doi.org/10.1023/A:1007379606734. ISSN: 1573-0565

Bengio Y, Courville AC, Vincent P (2013) Representation learning: a review and new perspectives. IEEE Trans Pattern Anal Mach Intell 35(8):1798–1828. https://doi.org/10.1109/TPAMI.2013.50. ISSN: 0162-8828

Liu W, Mei T, Zhang Y, Che C, Luo J (2015) Multi-task deep visual-semantic embedding for video thumbnail selection. In: IEEE conference on computer vision and pattern recognition CVPR, Boston, MA, USA, pp 3707–3715. https://doi.org/10.1109/CVPR.2015.7298994

Bingel J, Søgaard A (2017) Identifying beneficial task relations for multi-task learning in deep neural networks. In: Proceedings of the 15th conference of the European chapter of the association for computational linguistics, vol 2. Association for Computational Linguistics, Valencia, Spain, pp 164–169

Li S, Liu Z-Q, Chan AB (2015) Heterogeneous multi-task learning for human pose estimation with deep convolutional neural network. Int J Comput Vis 113(1):19–36. https://doi.org/10.1007/s11263-014-0767-8. ISSN: 1573-1405