Abstract

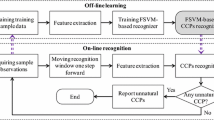

Various types of abnormal control chart patterns can be linked to certain assignable causes in industrial processes. Hence, control chart pattern recognition methods are crucial for identifying process malfunctions and sources of variation. Recently, hybrid soft computing methods have been implemented to achieve high recognition accuracy. These hybrid methods are complicated because they require optimization algorithms. This paper investigates the design of an efficient hybrid recognition method for the eight widely investigated types of X-bar control chart patterns. The proposed method has two main parts: feature selection and extraction, and recognizer design. In the feature selection and extraction part, eight statistical features are proposed as an effective representation of the patterns. In the recognizer design part, an adaptive neuro-fuzzy inference system (ANFIS) combined with fuzzy c-means (FCM) clustering is proposed. The results indicate that the proposed hybrid method (FCM-ANFIS) uses a smaller set of features and a compact recognizer design without the need for an optimization algorithm. Furthermore, it achieves a recognition accuracy of 99.82%, which is comparable to published results in the literature.
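The abstract names fuzzy c-means (FCM) as the clustering stage of the recognizer but does not describe it. The following is a minimal Python sketch of standard FCM (Bezdek et al. 1984, in the reference list) applied to feature vectors; it is not the authors' implementation. A common way to pair FCM with ANFIS is to let each cluster centre seed one fuzzy rule of the initial ANFIS structure, but the abstract does not say whether the paper does exactly this. The function name, the fuzzifier m = 2, the tolerance and the random initialisation are assumptions.

```python
import math
import random


def fuzzy_c_means(points, c, m=2.0, max_iter=100, tol=1e-6, seed=0):
    """Standard fuzzy c-means clustering (Bezdek et al.), hypothetical helper.

    points: list of equal-length feature vectors (lists of floats).
    Returns (centers, u) where u[k][j] is the membership of point k in
    cluster j; every row of u sums to 1.
    """
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])
    # Random initial memberships, each row normalised to sum to 1.
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        total = sum(row)
        u.append([v / total for v in row])

    centers = []
    for _ in range(max_iter):
        # Centre update: weighted mean of the points with weights u^m.
        centers = []
        for j in range(c):
            w = [u[k][j] ** m for k in range(n)]
            w_sum = sum(w)
            centers.append([sum(w[k] * points[k][d] for k in range(n)) / w_sum
                            for d in range(dim)])

        # Membership update: u_kj = 1 / sum_l (d_kj / d_kl)^(2 / (m - 1)).
        exp = 2.0 / (m - 1.0)
        max_change = 0.0
        for k in range(n):
            dist = [math.dist(points[k], centers[j]) for j in range(c)]
            if min(dist) == 0.0:
                # Point sits exactly on a centre: share membership among such centres.
                hits = [1.0 if d == 0.0 else 0.0 for d in dist]
                new_row = [h / sum(hits) for h in hits]
            else:
                new_row = [1.0 / sum((dist[j] / dist[l]) ** exp for l in range(c))
                           for j in range(c)]
            max_change = max(max_change,
                             max(abs(a - b) for a, b in zip(new_row, u[k])))
            u[k] = new_row
        # Stop once memberships no longer change appreciably.
        if max_change < tol:
            break
    return centers, u
```

Each row of `u` is a fuzzy assignment rather than a hard label; taking the largest membership per row gives a crisp cluster if one is needed.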


References

Montgomery DC (2009) Statistical quality control: a modern introduction. Wiley, New York

Western Electric Company (1958) Statistical quality control handbook. The Company

Nelson LS (1984) The Shewhart control chart–tests for special causes. J Qual Technol 16(4):237–239

Lucy-Bouler TL (1993) Problems in control chart pattern recognition systems. Int J Qual Reliab Manag 10(8):5–13

Guh RS (2005) A hybrid learning-based model for on-line detection and analysis of control chart patterns. Comput Ind Eng 49(1):35–62

Uğuz H (2012) Adaptive neuro-fuzzy inference system for diagnosis of the heart valve diseases using wavelet transform with entropy. Neural Comput Appl 21(7):1617–1628

Demircan S, Kahramanli H (2016) Application of fuzzy C-means clustering algorithm to spectral features for emotion classification from speech. Neural Comput Appl. https://doi.org/10.1007/s00521-016-2712-y

Lavanya B, Hannah Inbarani H (2017) A novel hybrid approach based on principal component analysis and tolerance rough similarity for face identification. Neural Comput Appl. https://doi.org/10.1007/s00521-017-2994-8

Kazemi MS, Kazemi K, Yaghoobi MA, Bazargan H (2016) A hybrid method for estimating the process change point using support vector machine and fuzzy statistical clustering. Appl Soft Comput 40:507–516

Haykin SS (2001) Neural networks: a comprehensive foundation. Tsinghua University Press, Beijing

Vapnik V (2013) The nature of statistical learning theory. Springer, Berlin

Ebrahimzadeh A, Ranaee V (2011) High efficient method for control chart patterns recognition. Acta technica ČSAV 56(1):89–101

Xanthopoulos P, Razzaghi T (2014) A weighted support vector machine method for control chart pattern recognition. Comput Ind Eng 70:134–149

Viattchenin DA, Tati R, Damaratski A (2013) Designing Gaussian membership functions for fuzzy classifier generated by heuristic possibilistic clustering. J Inf Organ Sci 37(2):127–139

Zarandi MF, Alaeddini A, Turksen IB (2008) A hybrid fuzzy adaptive sampling–run rules for Shewhart control charts. Inf Sci 178(4):1152–1170

Khajehzadeh A, Asady M (2015) Recognition of control chart patterns using adaptive neuro-fuzzy inference system and efficient features. Int J Sci Eng Res 6(9):771–779

Khormali A, Addeh J (2016) A novel approach for recognition of control chart patterns: Type-2 fuzzy clustering optimized support vector machine. ISA Trans 63:256–264

Das P, Banerjee I (2011) An hybrid detection system of control chart patterns using cascaded SVM and neural network–based detector. Neural Comput Appl 20(2):287–296

Pham DT, Wani MA (1997) Feature-based control chart pattern recognition. Int J Prod Res 35(7):1875–1890

Gauri SK, Chakraborty S (2009) Recognition of control chart patterns using improved selection of features. Comput Ind Eng 56(4):1577–1588

Hassan A, Baksh MSN, Shaharoun AM, Jamaluddin H (2011) Feature selection for SPC chart pattern recognition using fractional factorial experimental design. In: Pham DT, Eldukhri EE, Soroka AJ (eds) Intelligent production machines and systems: 2nd I*PROMS virtual international conference, Elsevier, pp 442–447

Hassan A, Baksh MSN, Shaharoun AM, Jamaluddin H (2003) Improved SPC chart pattern recognition using statistical features. Int J Prod Res 41(7):1587–1603

Al-Assaf Y (2004) Recognition of control chart patterns using multi-resolution wavelets analysis and neural networks. Comput Ind Eng 47(1):17–29

Cheng CS, Huang KK, Chen PW (2015) Recognition of control chart patterns using a neural network-based pattern recognizer with features extracted from correlation analysis. Pattern Anal Appl 18(1):75–86

Masood I, Hassan A (2010) Issues in development of artificial neural network-based control chart pattern recognition schemes. Eur J Sci Res 39(3):336–355

Hachicha W, Ghorbel A (2012) A survey of control-chart pattern-recognition literature (1991–2010) based on a new conceptual classification scheme. Comput Ind Eng 63(1):204–222

Swift JA (1987) Development of a knowledge-based expert system for control-chart pattern recognition and analysis. Oklahoma State Univ, Stillwater

De la Torre Gutierrez H, Pham DT (2016) Estimation and generation of training patterns for control chart pattern recognition. Comput Ind Eng 95:72–82

Alcock (1999) http://archive.ics.uci.edu/ml/databases/syntheticcontrol/syntheticcontrol.data.html

Bennasar M, Hicks Y, Setchi R (2015) Feature selection using joint mutual information maximisation. Expert Syst Appl 42(22):8520–8532

Haghtalab S, Xanthopoulos P, Madani K (2015) A robust unsupervised consensus control chart pattern recognition framework. Expert Syst Appl 42(19):6767–6776

Bezdek JC, Ehrlich R, Full W (1984) FCM: the fuzzy c-means clustering algorithm. Comput Geosci 10(2–3):191–203

Deer PJ, Eklund P (2003) A study of parameter values for a Mahalanobis distance fuzzy classifier. Fuzzy Sets Syst 137(2):191–213

Sumathi S, Surekha P (2010) Computational intelligence paradigms: theory and applications using MATLAB, vol 1. CRC Press, Boca Raton

Jang JS (1993) ANFIS: adaptive-network-based fuzzy inference system. IEEE Trans Syst Man Cybern 23(3):665–685

Gacek A, Pedrycz W (eds) (2011) ECG signal processing, classification and interpretation: a comprehensive framework of computational intelligence. Springer, Berlin

Marques de Sá JP (2007) Applied statistics using SPSS, STATISTICA, MATLAB and R. Springer, Berlin, pp 205–211

Carbonneau R, Laframboise K, Vahidov R (2008) Application of machine learning techniques for supply chain demand forecasting. Eur J Oper Res 184(3):1140–1154

Stukowski A (2009) Visualization and analysis of atomistic simulation data with OVITO—the Open Visualization Tool. Modell Simul Mater Sci Eng 18(1):015012

Mylonopoulos NA, Doukidis GI, Giaglis GM (1995) Assessing the expected benefits of electronic data interchange through simulation modelling techniques. In: The proceedings of the 3rd European conference on information systems, Athens, Greece, pp 931–943

Abdulmalek FA, Rajgopal J (2007) Analyzing the benefits of lean manufacturing and value stream mapping via simulation: a process sector case study. Int J Prod Econ 107(1):223–236

Assaleh K, Al-Assaf Y (2005) Features extraction and analysis for classifying causable patterns in control charts. Comput Ind Eng 49(1):168–181


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest. Also, this research work has not been submitted for publication, nor has it been published in part or in whole elsewhere. We verify that all authors listed on the title page have contributed significantly to the work, have read the document, confirm the validity and legitimacy of the data and its explanation, and agree to its submission to the journal Neural Computing and Applications.

Appendices

Appendix 1

The mathematical expressions for the eight statistical features are summarized here.

1. Mean: The mean is the average value of all points in the window. Here \(X_{i}\) is the ith element of the input vector of a fully developed pattern and n is the window size. The mean is given by

$${\text{Mean}} = \frac{{\mathop \sum \nolimits_{i = 1}^{n} X_{i} }}{n}$$(5)

2. Standard deviation: The standard deviation is given by

$${\text{Standard}}\;{\text{Deviation}} = \sqrt {\frac{{\mathop \sum \nolimits_{i = 1}^{n} \left( {X_{i} - \mu } \right)^{2} }}{n - 1}}$$(6)

where \(X_{i}\) is the individual measurement, µ is the mean, and n is the total number of sample points (the window size).

3. Skewness: Skewness measures the asymmetry of the distribution; it may be zero, positive, or negative. For the data points \(X_{1}\) to \(X_{n}\) it is estimated by

$$\gamma = \frac{{n\mathop \sum \nolimits_{i = 1}^{n} \left( {X_{i} - \mu } \right)^{3} }}{{\left[ {\left( {n - 1} \right)\left( {n - 2} \right)s^{3} } \right]}}$$(7)

where \(X_{i}\) is the individual measurement, µ is the mean, s is the sample standard deviation, and n is the total number of points (the window size) [37].

4. Mean square value: The mean square value is the average of the squared observations:

$${\text{MSV}} = \frac{1}{n}\mathop \sum \limits_{i = 1}^{n} X_{i}^{2}$$(8)

where \(X_{i}\) is the individual value and n is the total number of points (the window size).

5. CUSUM: CUSUM is the cumulative sum chart statistic. The last value of the CUSUM statistic in the window is taken as a feature in this study. The upper and lower CUSUM statistics are given by

$$C_{i}^{ + } = \hbox{max} \left[ {0,x_{i} - \left( {\mu_{0} + K} \right) + C_{i - 1}^{ + } } \right]$$(9a)

$$C_{i}^{ - } = \hbox{max} \left[ {0,\left( {\mu_{0} - K} \right) - x_{i} + C_{i - 1}^{ - } } \right]$$(9b)

where \(\mu_{0}\) is the target process mean, K is the reference (allowance) value, and the starting values \(C_{0}^{ + }\) and \(C_{0}^{ - }\) are taken as zero.

6. Autocorrelation: Autocorrelation measures how strongly the signal depends on its own earlier values. For a window of points \(x_{0}, x_{1}, \ldots, x_{N}\), the average autocorrelation at lag m is

$$A_{xx} \left[ m \right] = \frac{1}{N + 1 - m}\left[ {x_{0} x_{m} + x_{1} x_{1 + m} + \cdots + x_{N - m} x_{N} } \right] = \frac{1}{N + 1 - m}\mathop \sum \limits_{i = 0}^{N - m} x_{i} x_{i + m}$$(10)

7. Kurtosis: Kurtosis measures the peakedness of the distribution. It is given by

$$k = \frac{{E\left[ {\left( {X - \mu } \right)^{4} } \right]}}{{\sigma^{4} }} - 3$$(11)

Subtracting 3 makes k = 0 for a normal distribution.

8. Slope: A first-order line fitted to the data points is given by

$$Y = mX + C$$(12)

where C is the y-intercept and m is the slope of the line. With \(X_{i}\) the sample (time) index and \(Y_{i}\) the observation at that index, the least-squares slope is

$$m = \frac{{\mathop \sum \nolimits_{i = 1}^{n} (X_{i} - \bar{X})\left( {Y_{i} - \bar{Y}} \right)}}{{\mathop \sum \nolimits_{i = 1}^{n} \left( {X_{i} - \bar{X}} \right)^{2} }}$$(13)

The slope m is used as a feature in this study.
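The eight feature definitions above can be computed for one window with a short Python sketch. It is an illustration under stated assumptions, not the authors' code: the function name and defaults are hypothetical; the CUSUM target mu0 = 0 and reference value k = 0.5 assume standardized data (K = 0.5σ is a common textbook choice); both final CUSUM values are returned because the text does not say whether the upper or lower statistic (or a combination) is used; the autocorrelation lag defaults to 1, which the text does not specify; the mean square value is taken as the plain average of the squared observations; and the slope regresses the observations on the sample index t = 1, ..., n.

```python
import math
import statistics


def extract_features(x, mu0=0.0, k=0.5, lag=1):
    """Eight statistical features for one window x (needs len >= 3, non-constant)."""
    n = len(x)
    mean = sum(x) / n                                    # Eq. (5)
    sd = statistics.stdev(x)                             # Eq. (6): n - 1 divisor
    # Eq. (7): adjusted sample skewness.
    skew = n * sum((v - mean) ** 3 for v in x) / ((n - 1) * (n - 2) * sd ** 3)
    msv = sum(v * v for v in x) / n                      # Eq. (8): average of squares

    # Eqs. (9a)/(9b): tabular CUSUM started at zero; keep the final values.
    c_plus = c_minus = 0.0
    for v in x:
        c_plus = max(0.0, v - (mu0 + k) + c_plus)
        c_minus = max(0.0, (mu0 - k) - v + c_minus)

    # Eq. (10): points indexed x_0..x_N (N = n - 1), average lagged product.
    big_n = n - 1
    acf = sum(x[i] * x[i + lag] for i in range(big_n - lag + 1)) / (big_n + 1 - lag)

    # Eq. (11): excess kurtosis from population (1/n) moments.
    m2 = sum((v - mean) ** 2 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    kurt = m4 / m2 ** 2 - 3.0

    # Eq. (13): least-squares slope of the observations against t = 1..n.
    t_bar = (n + 1) / 2
    sxy = sum((t - t_bar) * (v - mean) for t, v in enumerate(x, start=1))
    sxx = sum((t - t_bar) ** 2 for t in range(1, n + 1))
    slope = sxy / sxx

    return {
        "mean": mean, "std": sd, "skewness": skew, "msv": msv,
        "cusum_upper": c_plus, "cusum_lower": c_minus,
        "autocorrelation": acf, "kurtosis": kurt, "slope": slope,
    }
```

For example, the window [1, 2, 3, 4, 5] gives a mean of 3, zero skewness, a mean square value of 11 and a slope of 1, as expected for a clean upward ramp.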

Appendix 2

1. Normal pattern: The normal pattern represents a stable process. It is given by

$$y_{t } = \mu + {\rm N}_{t}$$(14)

where \({\rm N}_{t}\) is the randomly generated noise at time t and µ is the process mean.

2. Trend up/down: The upward and downward trend patterns are given by

$$y_{t } = \mu + {\rm N}_{t} \pm \gamma_{1} t$$(15)

where \({\rm N}_{t}\) is the randomly generated noise and \(\gamma_{1}\) is the slope of the trend. The \(+\) and \(-\) signs give the upward and downward trend patterns, respectively.

3. Shift up/down: The upward and downward shift patterns are given by

$$y_{t } = \mu + {\rm N}_{t} \pm \gamma_{2}$$(16)

where \({\rm N}_{t}\) is the randomly generated noise and \(\gamma_{2}\) is the shift magnitude. The \(+\) and \(-\) signs give the upward and downward shift patterns, respectively.

4. Cyclic pattern: The cyclic pattern is given by

$$y_{t } = \mu + {\rm N}_{t} \pm \gamma_{3} \sin \left( {\frac{2\pi t}{{\gamma_{4} }}} \right)$$(17)

where \({\rm N}_{t}\) is the randomly generated noise, \(\gamma_{3}\) is the amplitude, and \(\gamma_{4}\) is the period of the cycle (in samples).

5. Systematic pattern: The systematic departure pattern is given by

$$y_{t } = \mu + {\rm N}_{t} \pm \gamma_{5} \left( { - 1} \right)^{t}$$(18)

where \({\rm N}_{t}\) is the randomly generated noise and \(\gamma_{5}\) is the magnitude of the systematic departure.

6. Stratification pattern: The stratification pattern is given by

$$y_{t } = \mu + \gamma_{6} {\rm N}_{t}$$(19)

where \({\rm N}_{t}\) is the randomly generated noise and \(\gamma_{6}\) is the stratification parameter, which scales the noise.
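The pattern equations above can be turned into a small synthetic-data generator. This Python sketch rests on several assumptions that are not stated in the appendix: \({\rm N}_{t}\) is drawn independently from a normal distribution with mean 0 and standard deviation sigma; the parameter values in the hypothetical `DEFAULT_GAMMA` table and the window length n = 60 are placeholders, not the paper's settings; the shift is applied over the whole window exactly as Eq. (16) is written (many studies instead start it at a chosen shift point); and the cyclic and systematic patterns use the \(+\) sign.

```python
import math
import random

# Hypothetical default pattern parameters (placeholders, not from the paper).
DEFAULT_GAMMA = {
    "slope": 0.1,        # gamma_1: trend slope per sample
    "shift": 2.0,        # gamma_2: shift magnitude
    "amplitude": 2.0,    # gamma_3: cycle amplitude
    "period": 8.0,       # gamma_4: cycle period in samples
    "systematic": 2.0,   # gamma_5: systematic departure magnitude
    "strat": 0.2,        # gamma_6: stratification (noise scaling) factor
}


def generate_pattern(kind, n=60, mu=0.0, sigma=1.0, seed=None, **gamma):
    """Generate one window of length n following Eqs. (14)-(19)."""
    g = {**DEFAULT_GAMMA, **gamma}
    rng = random.Random(seed)
    y = []
    for t in range(1, n + 1):
        noise = rng.gauss(0.0, sigma)  # N_t, assumed normal with mean 0
        if kind == "normal":
            value = mu + noise
        elif kind in ("trend_up", "trend_down"):
            sign = 1 if kind == "trend_up" else -1
            value = mu + noise + sign * g["slope"] * t
        elif kind in ("shift_up", "shift_down"):
            sign = 1 if kind == "shift_up" else -1
            value = mu + noise + sign * g["shift"]
        elif kind == "cyclic":
            value = mu + noise + g["amplitude"] * math.sin(2 * math.pi * t / g["period"])
        elif kind == "systematic":
            value = mu + noise + g["systematic"] * (-1) ** t
        elif kind == "stratification":
            value = mu + g["strat"] * noise
        else:
            raise ValueError(f"unknown pattern kind: {kind}")
        y.append(value)
    return y
```

Passing a fixed `seed` makes a window reproducible, and keyword overrides such as `generate_pattern("cyclic", period=12.0)` change a single \(\gamma\) parameter without editing the defaults.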

Appendix 3

1. Accuracy: The overall accuracy is given by

$${\text{Accuracy}} = \frac{{{\text{TP}} + {\text{TN}}}}{N} \times 100\%$$(20)

2. Sensitivity: Sensitivity is the proportion of actual positives that are correctly identified:

$${\text{Sensitivity}} = \frac{\text{TP}}{{{\text{TP}} + {\text{FN}}}} \times 100\%$$(21)

3. Specificity: Specificity is the proportion of actual negatives that are correctly identified:

$${\text{Specificity}} = \frac{\text{TN}}{{{\text{TN}} + {\text{FP}}}} \times 100\%$$(22)

where TP, TN, FN, and FP denote true positives, true negatives, false negatives, and false positives, respectively, and N is the total number of patterns. Sensitivity indicates how well the method performs on the positive class, and specificity indicates how well it performs on the negative class.

4. Root-mean-square error (RMSE): The RMSE compares the desired (target) output y with the actual output \(\hat{y}\) of the FIS. With N the number of control chart patterns used for training, the training RMSE is

$${\text{RMSE}} = \sqrt {\frac{{\mathop \sum \nolimits_{i = 1}^{N} \left( {y_{i} - \hat{y}_{i} } \right)^{2} }}{N}}$$(23)
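The appendix does not say how TP, TN, FP and FN are counted for the eight-class problem. The Python sketch below assumes a one-vs-rest convention (one pattern class is treated as positive, all others as negative); the function names are hypothetical. RMSE is computed with squared residuals, as its name implies.

```python
import math


def one_vs_rest_metrics(y_true, y_pred, positive):
    """Accuracy, sensitivity and specificity (in %) for one class vs the rest."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == positive:
            if predicted == positive:
                tp += 1
            else:
                fn += 1
        elif predicted == positive:
            fp += 1
        else:
            tn += 1
    n = tp + tn + fp + fn
    nan = float("nan")  # returned when a ratio has an empty denominator
    return {
        "accuracy": 100.0 * (tp + tn) / n,                            # Eq. (20)
        "sensitivity": 100.0 * tp / (tp + fn) if tp + fn else nan,    # Eq. (21)
        "specificity": 100.0 * tn / (tn + fp) if tn + fp else nan,    # Eq. (22)
    }


def rmse(targets, outputs):
    """Root-mean-square error between desired and actual FIS outputs."""
    if len(targets) != len(outputs) or not targets:
        raise ValueError("targets and outputs must be non-empty and equal length")
    return math.sqrt(sum((y - y_hat) ** 2 for y, y_hat in zip(targets, outputs)) / len(targets))
```

Repeating `one_vs_rest_metrics` for each of the eight classes gives per-class figures; how the paper aggregates them into a single number is not stated in this appendix.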


About this article

Cite this article

Zaman, M., Hassan, A. Improved statistical features-based control chart patterns recognition using ANFIS with fuzzy clustering. Neural Comput & Applic 31, 5935–5949 (2019). https://doi.org/10.1007/s00521-018-3388-2
