Abstract

Local binary pattern (LBP) algorithm and its variants have been used extensively to analyse the local textural features of digital images with great success. Numerous extensions of LBP descriptors have been suggested, focusing on improving their robustness to noise and changes in image conditions. In our research, inspired by the concepts of LBP feature descriptors and a random sampling subspace, we propose an ensemble learning framework, using a variant of LBP constructed from Pascal’s coefficients of n-order and referred to as a multiscale local binary pattern. To address the inherent overfitting problem of linear discriminant analysis, PCA was applied to the training samples. Random sampling was used to generate multiple feature subsets. In addition, in this work, we propose a new feature extraction technique that combines the pyramid histogram of oriented gradients and LBP, where the features are concatenated for use in the classification. Its performance in recognition was evaluated using the Hong Kong Polytechnic University database. Extensive experiments unmistakably show the superiority of the proposed approach compared to state-of-the-art techniques.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Palmprint is a relatively newly discovered physiological biometric trait that has recently arisen as an active area of study. The rich features of the palmprint are the key to its recognition power. Patterns elicited from palms have excellent discriminatory power as they have more features on the surface than fingerprints while being stable. However, it should be noted that observations of palmprint features are often affected by various issues, i.e. variations in lighting, orientation and noisy sensors, which make the task of identification more complex. Variance in illuminations in particular can seriously affect the ability of systems to recognize individuals. The majority of palm recognition methods can be regarded as sufficiently robust to deal with all variations in image conditions. However, researchers today continue to attempt to solve this problem and develop systems that can be used accurately to identify a person.

In the literature, a number of techniques are reported for use in palmprint recognition, classified into several different categories: structure based, statistic based, subspace based and code based. Structure-based algorithms mainly concern information on the direction and location of the main lines and folds in the palmprint, such as principal lines, wrinkles, delta points and minutiae. Structure-based algorithms are the traditional approaches to extract the features of palmprints and provide effective representation and matching. For feature extraction, there are many proposed approaches which use a variety of line detection operators. Funada et al. [1] presented an algorithm that extracts high probability local palmprint features, such as ridges, by eliminating the creases. Zhang and Shu [2] attempted to determine datum points from the main lines using a directional projection technique. These datum points are found to be rotation and translation invariant due to the steadiness of the main lines. Sobel and morphological operations were used in [3] to extract line features from palmprints. For the representation of the features, this method primarily uses straight line segments or feature points instead of ridges. Housdorrf and Euclidean distances are widely used for the matching process.

In the case of statistic-based palmprint identification, the works that have been published include local or global statistical approaches. Systems based on the local features of the palmprint include discrete cosine transform, Fourier transform, wavelet transform and Gabor transform. These tools have been studied and used to transform images before feature extraction task in order to extract more distinctive features. In [4], the authors transformed a palmprint image into the wavelet domain and computed the average and variance of each patch to create a normalized palmprint vector. The standard deviation of the small block is used as a feature. In [5], the mean and standard deviation of the small patch are employed as a feature after transformation with a Gabor filter. In [6], a histogram of a local binary palm image was used as a palmprint feature. Global statistical approaches compute the global features of the palmprint, such as moments, centres of density and gravity , directly on the palmprint image. Correlation coefficients, first-order norms and the Euclidean distance are often used for the purpose of matching. A palmprint recognition system is proposed in [7] using Hu invariant moments as patterns on an Otsu binarized palmprint.

Other efforts in this domain have also explored a variety of subspace-based algorithms to derive a compact feature subspace for palmprint data. The main subspace approaches reported in the literature employ a palmprint as a high-dimensional matrix and mapping it to a lower-dimensional matrix. Then, the generated images can be represented and matched in this low-dimensional space. Subspace-based approaches include linear and nonlinear space algorithms. Lu et al. [8] introduced PCA effectively in palmprint recognition. Notwithstanding the significant achievements of PCA, some challenges remain requiring more investigation. In [9], two-dimensional PCA (2DPCA) was successfully introduced for palmprint recognition. This method relies on a two-dimensional palm image matrix rather than one-dimensional vector, and a palm covariance matrix is generated directly employing the original palm matrices. In [10], Niyogi suggested locality preserving projection (LPP). The aim of LPP is to solve a generalized eigenvalue problem. It seems to be more stable to noise than PCA and LDA [10]. Researchers have also proposed a number of approaches based on coding to extract features for palmprint recognition. These include Fourier transform, the Gaussian derivative filter, wavelet transform and Gabor wavelet transform. Among these methods, Kong and Zhang [11] suggested a fusion code algorithm to encode the Gabor filter phase using six directions. Moreover, based on ordinal code, Song et al. [12] proposed a phase coding scheme using 2D orthogonal Gabor filters. These are employed for various directions to extract texture features, and a phase coding algorithm is applied to describe the palm image. Another approach, discussed in [13], introduced a robust line orientation code (RLOC) for palmprint recognition as an improved version of the competitive code. In the proposed approach, the LBP technique is customized based on conventional thresholding using Pascal’s coefficients of order n [14]. The proposed variant called Pascal coefficient LBP (PCLBP) descriptor is inspired by the SLBP descriptor [15, 16] . This allows us to detect only the robust patterns from the palmprint images. This approach has many advantages, such as the simplification of implementation and high-speed computation. The main idea is to use a varied number of intervals to generate a distribution of binary codes for every pixel position thus creating more robust descriptors to cope with the changing image distortions. In the proposed variant, the main difference from LBP is that the threshold value is tuned using Pascal’s coefficients of order n with an alternating sign. Furthermore, this variant is also extended to MLBP in this paper, referred to as the Pascal coefficient MLBP (PCMLBP) descriptor, for which the PCMLBP features of the different scales are first extracted and their histograms subsequently concatenated into a long feature. Furthermore, to achieve higher recognition rates, we propose a novel feature method to form a new set of features based on the combination of the pyramid histogram orientation gradient (PHOG) descriptor with the PCLBP descriptor, so that the histogram bins have a more powerful discriminatory capability. Nevertheless, having a large number of features can become a curse in terms of classification. To solve this problem, PCA is used to reduce the size of the dimension of the vector of palm features. In addition, we construct a multiple LDA classifier from many individual clarifiers. A powerful decision rule is used for the purposes of combination and is known as ensemble learning. LDA can be achieved by maximizing the ratio of the determinant of the within-class variance and the determinant of the between-class variance. The assessment of the performance of our proposed approaches was conducted using the multispectral palmprint database available from the Hong Kong Polytechnic University (PolyU), using LDA classification. In addition, a comparative analysis of our proposed algorithms was performed against a number of state-of-the-art counterparts, including the techniques described in [17,18,19,20]. The main body of this paper is composed of six main sections. Sections 2 and 3 describe the different steps constituting our proposed multispectral palmprint recognition approach, whereas the experimental results related to the proposed methods are reported in Sect. 4. Section 5 discusses the computational complexity of the proposed methods. The paper ends with a conclusion and proposals for future work in Sect. 6.

2 Multispectral palmprint recognition: proposed approach

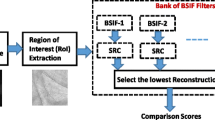

Palmprint recognition can be effectively performed by using the best spectrum wavelength range (blue, green, red and NIR). A typical scheme for such a system comprises of the following steps: palmprint image acquisition, feature extraction, dimensionality reduction and classification. Figure 1 shows the main steps of our proposed method. The palmprint and textures are extracted using PCMLBP and PCLBP–PHOG descriptors. The classification of this new set of features is undertaken using PCA and random subspace LDA. The correct identification is achieved when the test palmprint label matches the palmprint label of the same subject in the training samples. The process may result in misclassification when the test palmprint is classified using a palmprint label of a different subject from the training sample. LDA-based classification results are combined at the fusion stage to obtain higher recognition rates. In this work, an ensemble framework is proposed based on the PHOG and the customized LBP descriptor for the classification of palmprint images. To enhance the accuracy of existing LBP descriptors, Pascal coefficients’ approach is used for the purpose of customization.

2.1 PCLBP-based feature extraction

In the conventional LBP, only eight neighbours of the pixel are considered. Ojala et al. [21] were among the first to proposed the use of LBP in recognition, demonstrated the superior discriminative of this descriptor for texture classification. Later, this was extended with different modified versions, used extensively to analyse the local textural features of digital images with great success. In this approach, we suggest adopting a different pixel threshold. PCLBP descriptor proved to be tolerant of illumination changes and computational simplicity. In addition, it does not require many parameters to be set. First, the value of the pixel in use \(g_{c}\) is used as a threshold for each of its neighbouring \(g_{p}\; \{0,1, \ldots ,G-1\},\) taking the result as a binary number. Using Pascal’s coefficients, a local binary pattern (PCLBP) is created for each pixel location. By changing the expression \((g_{p}-g_{c})\) in \({\hbox {LBP}}_{G,r}(w,z)=\sum \nolimits _{p=0}^{G-1} S ( g_{p} - g_{c} )^{2^p}\) to \((g_{p}-g_{c}-l)\).

The PCLBP is presented in Eq. 1 for a location (w, z) and a value l. Here, l takes the different coefficients of the Pascal values with an alternating sign, as shown in Eq. 3; for each change in l, a new binary number is formed and included in the histogram.

where G is a set of sample points regularly spaced on a circle of radius r while S is described as follows:

In our case, l is the coefficient in a Pascal triangle of order n, which represents a threshold value; l is defined as follows:

where

If n is set equal to 3, for example, the Pascal coefficients (l) from Eq. 3 will be \(\left( {(-1)^0}\left( {\begin{array}{c}3\\ 0\end{array}}\right) =1, {(-1)^1}\left( {\begin{array}{c}3\\ 1\end{array}}\right) = -3, {(-1)^2}\left( {\begin{array}{c}3\\ 2\end{array}}\right) =3 \;{\hbox {and}}\; {(-1)^3}\left( {\begin{array}{c}3\\ 3\end{array}}\right) =1\right)\), which correspond to the fourth row of the Pascal triangle as shown in Fig. 2. Therefore, for each pixel location, l will generate four binary codes contributing to the histogram. Thus, similarly to other LBP techniques, the final histogram is divided by \(n+1\), resulting in a histogram sum equal to the number of pixel positions previously considered.

2.2 PCMLBP-based feature extraction

Our proposed PCMLBP method proves to be an efficient and effective descriptor for palmprint recognition. In real scenarios in which only one scale is used, there is a significant constraint attributed to the dimension of the support area, i.e. the \(3\times 3\) neighbourhood which cannot cope with significant structures in capturing the dominant features of some textures. Formally, the use of the multiscale local binary pattern (MLBP) results in considerable success in capturing structures at different levels [22]. In our proposed method, the multiscale version of the PCLBP has been used to improve the classification results and hence the prediction of the model. In a multiscale version, the principle is based on changing the radius of the PCLBP and then concatenating all the resulting features. However, there is an issue in terms of the high dimension of the combined feature vector resulting from the multi-resolution decomposition; this issue can be overcome by means of effective feature selection to reduce the unnecessary or redundant information. Changes in the dimension of radii depend on the distance of the neighbouring pixel from the centre of the window used, making it possible to generate a multiscale representation by concatenating PCLBP histograms that determine the size of the radius [21] [e.g. \(2{^8}\) yields 1280 bins for 5 scales (\(R=1,3,5,7,9\))]. The information contained in the histogram is related to how the multiscale features are distributed over the whole palmprint. The multiscale approach has been found to be more precise than the single-scale description of PCLBP. The resulting PCMLBP histograms for each scale of image size \(M\times N\) are computed as follows:

where n is the maximum bin value of the PCLBP and R is the maximum radius used to the multiscale.

The PCLBP histograms calculated at various radii provide local information on the observation vector. The resultant multiscale palmprint histogram is as follows:

2.3 PHOG-based feature extraction

A PHOG descriptor represents the spatial structure of a local imagery shape in a flexible manner. First, the PHOG extracts the edge contour of given stimuli known as the canny edge operator. The palmprint image is then split into spatial grids through an iterative technique which will double the number of splits in every dimension; for example, level k would have 2k cells in each dimension. Then, the histograms of oriented gradients (HOGs) are computed using the \(3\times 3\) Sobel mask is followed by the weighted contribution of each edge in accordance with its related magnitude. Every single cell’s histogram is given a particular quantity described as M bins. The bins relate to a number of edge directions in an angular range. The final PHOG descriptor is then obtained where the HOGs are determined one after the other at the same level. Therefore, the PHOG descriptor of an image is obtained from the HOGs computed at various pyramid levels. The PHOG descriptor for the related region is a vector of the dimension \(d=M\sum \nolimits _{k=0}^K 4^k\). We used pyramid \(K=3\) with a bin size \(M=8\) and a range of orientation [0,360]. This results in a descriptor of the dimension 680 [23].

2.4 Principal components analysis (PCA)

PCA, also called Karhunen–Love transformation, is a linear transformation that obtains the variance of the input data. PCA is a powerful unsupervised method for transforming a number of possibly correlated attributes into a number of uncorrelated attributes named principal components. This technique computes the eigenvectors of the covariance matrix and approximates the original data set by a linear combination of the leading eigenvectors. The idea of using the PCA approach is to reduce the size of data set without much loss of features, where the eigenvectors help to find the optimal feature subspace in the lower dimensionality needed for the recognition of a test image. Suppose the training data set of the palm is \(Z_{1},Z_{2},\ldots ,Z_{N}\), where N is the number of palmprints in the training data set. The palmprint images are mean centred at the training set by subtracting the matrix Z from the mean palmprint image, defined as follows [24]:

The data matrix Z is multiplied by transpose \(Z^{\mathrm{T}}\) to obtain the covariance matrix \({\varOmega }\) [25]:

The covariance matrix \({\varOmega }\) has eigenvectors and corresponding nonzero eigenvalues. The eigenvectors are sorted in ascending order of the eigenvalues. To reduce the dimensionality, it is necessary to select the eigenvectors with the largest eigenvalues to be components of the eigenvectors which represent the variance space of the training palmprint set.

When testing the proposed method, six spectrum-related training images for each subject were used to construct the training vector in Protocol I, whereas three training images were used in Protocol II. Thus, the training feature vector dimension is \(D\times M\), where D denotes the PCMLBP and PCLBP–PHOG feature dimensions and M is the total number of subjects for each spectrum. The covariance matrix is derived from randomly selected features from the training data, and finally, eigenvectors and eigenvalues from this covariance matrix are obtained. Sufficient dimensions in the training data were retained to account for 99% of the total energy. Using the new palm eigenvector matrix, the training and testing samples were transformed onto the new subspace. The mathematical representation of this transformation matrix can be expressed as follows:

- Step 1::

-

Centralize all training palmprint images by subtracting the mean palmprint image as given in Eq. 8.

- Step 2::

-

Compute the covariance matrix as given in Eq. 9.

- Step 3::

-

Compute the eigenvectors of the covariance matrix.

- Step 4::

-

Sort the eigenvectors by decreasing eigenvalues.

- Step 5::

-

Choose k eigenvectors with the largest eigenvalues.

- Step 6::

-

Transform the samples onto the new subspace.

2.5 LDA-based classification

LDA is a generative probabilistic method and is one of the most popular approaches used for biometric recognition. The basic concept of LDA is to separate classes by finding a suitable border between them, and the classification is then executed in the transformed space depending on some metric such as Euclidean distance. The classification procedure can be divided into two steps: (1) computing the posterior (confidence) values for each class and (2) determining the index of the class to which the test sample belongs in relation to the class with the maximum scores by solving \({\arg \max }_l g_{l}\) [26, 27]. Thus,

where \({\varSigma }_l\) is the class covariance matrix of the class l, \(\mu _l\) is the mean vector of the class l and \(\pi _l\) is the prior probability of the class l. These are estimated by:

where \(n_l\) is the number of images in class l and n is the total number of objects in the training set. The construction of the classifier can be summarized as follows:

- Step 1::

-

Calculate the mean vector \(\mu _l\) and prior probability of class \(\pi _l\) as given in Eqs. 11 and 13.

- Step 2::

-

Compute the pooled covariance matrix \({\varSigma }_l\), as given in Eq. 12, which must be positive definite.

- Step 3::

-

Estimate the linear discriminantFootnote 1 as given in Eq. 10.

- Step 4::

-

Choose the maximum of \({\arg \max }_l g_{l}\).

3 Feature sampling

The previous section has demonstrated that LDA outperforms common subspace methods. However, there is still plenty of room to improve its performance further. One likely avenue for improvement has been proposed which enhances performance by employing numerous LDAs trained on various parts of the data which are then combined in an ensemble [28].

An ensemble is a method used to merge a number of weak classifiers to build up a strong classifier, as shown in Fig. 3. Such multiple classifier ensembles are variously called mixtures of experts or combinations of multiple classifiers. The idea is to use a set of weak classifiers and assemble them to build an ensemble classifier with a powerful decision rule. It should be noted that weak classifiers do not always make the same error. The overall error of the combined classifiers together provides an outcome that improves considerably on what any individual classifier could achieve on its own. One main condition for the success of combining different weak classifiers is that the outcomes of single classifiers for the same inputs must vary. The diverse individual classifiers are obtained by employing various training datasets, various feature datasets, different types of single classification methods and a fusion rule. A multiple LDA classifier is constructed in this study by combining many individual weak classifiers, aiming to build a more robust LDA classifier that includes the overall palm feature space without loss of discriminant information [28].

3.1 Random sampling-based LDA for palmprint recognition

The random sampling method (RSM) is a common ensemble building method used to improve the precision of weak classifiers. The classifiers are constructed using the concept of random sampling of the palmprint feature to develop an ensemble from the individual classifiers trained using different feature subsets. This section proposes the application of the random sampling procedure to build many weak LDA classifiers. Different palmprint feature subsets are first randomly selected. Then, an LDA classifier is built on each of those palm subsets, and a fusion rule is used at the end for prediction with the palm testing set. The proposed random sampling LDA classification methodology includes the following steps:

- Step 1::

-

Generate random training data set.

- Step 2::

-

Use PCA on the palm training sets. Then remove all the eigenvectors with null eigenvalues.

- Step 3::

-

The M LDA classifiers are the constructs from the low-dimensional PCA subspace.

- Step 4::

-

In the palm recognition step, the outcomes of the M LDA classifiers are fused using a fusion rule to generate with a final decision.

Figure 4 illustrates the main steps of the FKP recognition process.

3.2 Fusion process

In ensemble learning, strong classifiers are built by combining different weak classifiers to overcome the overall error for the results of classification. Different feature subsets, data sets and individual classifiers are used to obtain the set of weak classifiers. Fusion rules are used to combine the individual classifiers. The proposed ensemble framework based on various training samples is presented in Fig. 2.

In our proposed approach, it possible to undertake the fusion at two levels: the feature level and the score level.

-

1.

Feature-level fusion The fusion technique is preferred in the area of biometrics because it provides sufficient informational content. In our approach, a palmprint image sample has two different types of feature, PCLBP and PHOG. PCLBP and PHOG features are concatenated for the purpose of classification.

-

2.

Score-level fusion The objective of using fusion is to improve performance by constructing an ensemble of different LDA classifiers, which are trained on the different feature subsets. In our proposed method, several classifiers are combined by applying the sum score rule for reducing overall error. The overall accuracy of palmprint recognition appears to be improved by using this ensemble approach.

4 Experiments and results

Extensive experiments were conducted to validate and evaluate the effectiveness of the proposed methods, using the multispectral palmprint database. The multispectral palmprint database was obtained from the Hong Kong Polytechnic University (PolyU) [29].

4.1 Palm database

The palmprint images were captured from 195 male and 55 female volunteers (a total of 250) who were aged between 20 and 60 years. The images in the database were captured with infrared and visible light, i.e. red, green, blue and NIR illuminations, in two individual sessions. Furthermore, regarding each session, the researcher captured six images for each type of illumination—red, green, blue and NIR spectra. Overall, for each illumination type, the database contains 6000 (\(500\times 12\)) images obtained from 500 different palms. Therefore, in total the database contains \(6000\times 4=24{,}000\) palmprint images. The average interval between the consecutive sessions was approximately 9 days. Figure 5 presents sample palmprint images of different spectra (red, green, blue and NIR).

4.2 Protocol I

In our experiments, we used an evaluation protocol similar to nearest feature space DWT [17], (NFS)[19], CFKNNC [19] and TPTSR [20]. In this protocol, the palmprint images gathered in the first session were selected as the training set and all of the samples from the second session were employed as the testing set (six training sets and six test sets). Therefore, for each spectrum (blue, green, green and NIR) there were 3000 (\(500\times 6\)) training samples and 3000 (\(500\times 6\)) test samples. For palm features, a PCMLBP descriptor was generated with different radii for five scales (\(R=1,3,5,7,9\)) and eight neighbours. The final dimension of the PCMLBP is 1280 (\(5\times 256\)) for five scales. Furthermore, in terms of PCLBP–PHOG, the PCLBP descriptor was generated with a radius of six and eight neighbours. For the PHOG descriptor, we used \(L=3\) pyramids, a bin size of \(N=8\) and an orientation range of [0,360]. This results in a descriptor with a dimension of 680. It should be noted that the experimentation process was repeated ten times to obtain the different training datasets. The results of the experiments presented in Table 1 show the recognition rates obtained for 250 random subsets of the feature dataset.

Recognition rates for the six training samples and six testing samples from the PolyU multispectral palmprint database. The horizontal axis represents the number of features (50, 100, 150, 200, 250, 300 and 350), and the vertical axis indicates the recognition rates of the PCLBP–PHOG descriptor for the four spectra (blue, green, red and NIR)

In the case of the blue spectrum, the PCLBP–PHOG approach yields the highest rate of 99.4%, while the PCMLBP has the second highest rate of 98.90%. Moreover, it can be seen that in the case of the green spectrum, the PCLBP–PHOG method achieves the best result with a rate of 99.07%. Furthermore, comparing the ensemble approach to other state-of-the-art methods, it results in increased performance of 2.7, 0.3, 5.57 and 1.05% over NFS, CFKNNC, DWT and the TPTSR, respectively. The PCMLBP method offers the best result of 97.27%, higher than for NFS and the DWT, but 1.5 and 0.75% lower than CFKNNC and the TPTSR, respectively. In the case of the red spectrum, the PCLBP–PHOG yields better results than the NFS, CFKNNC, DWT and TPTSR. Furthermore, the results indicate that PCLBP–PHOG achieves the best result, with a performance accuracy of 99.6% followed by the PCMLBP with a rate of 98.74%. The table also confirms that the accuracy of performance of the PCLBP–PHOG is greater than that of other existing methods, i.e. the NFS, DWT and TPTSR. In addition, the experiments reveal that the PCMLBP technique achieves an accuracy of 97.54%, outperforming the DWT and CFKNNC methods, respectively, although it is 0.63 and 0.8% lower than the NFS and TPTSR methods, respectively, for the NIR spectrum.

Furthermore, using an ensemble of several LDA classifiers has an impact on the recognition rates, exhibiting an improvement in recognition accuracy compared to the individual LDA classifier recognition rates. The results of the different features selected (50, 100, 150, 200, 250, 300 and 350) for the training sets in the PCLBP–PHOG approach are shown in Fig. 6. The X-axis indicates the number of features in the training set, whil e the Y-axis shows the accuracy of the test images. The multiclassifier recognition rate of the blue spectrum offers the highest performance, above all other spectra, when using the PCMLBP features. However, red was the best spectrum when the fusion PCLBP–PHOG was applied. Figures 7 and 8 illustrate the receiver operating characteristic (ROC) curves, plotting the false acceptance rate (FAR) versus the genuine acceptance rate (GAR) for the different multispectral palmprint data sets. These curves represent the red, green, blue and NIR spectra. It is apparent from these figures that the recognition rate of the ensemble classifier in the proposed PCLBP–PHOG method is better for the red spectrum than the other spectral bands (blue, green and NIR).

4.3 Protocol II

To validate the performance of our methodology, we used the same palmprint database to compare the proposed PCMLBP and PCLBP–PHOG approaches to two state-of-the-art methods, specifically the RBF [18] and the NFS [19]. For validation purposes, we followed the standard evaluation protocol as given in [18, 19], using the first three images under the blue, red, green and NIR spectra for the training set and six palms from the second session for the testing set. Moreover, all parameters of the proposed methods were initialized as described in Protocol I. A detailed comparison of the results using the LDA ensemble trained on a subset of 250 random features is summarized in Table 2. This table clearly demonstrates the advantage of the robustness of the PCLBP–PHOG approach in terms of the histogram features, in addition to its effectiveness over other reported methods. The PCLBP–PHOG offers attractive recognition performance rates of 99.17, 98.33, 99.34 and 98.77% for the blue, green, red and NIR spectra, respectively. Moreover, the results displayed in the table indicate that the PCMLBP approach outperforms the NFS. With regard to the red spectrum, the PCMLBP method achieves an accuracy of 97.67%, which is higher than the NFS but 0.53% lower in comparison with the RBF. The recognition rate of the PCMLBP in relation to the blue spectrum is 97.99% and thus outperforms the RBF method. However, the PCMLBP method still achieves lower results than the RBF method for the other spectra (green, red and NIR). The ROC curves for the PCMLBP method are shown in Fig. 9. The effectiveness of the proposed PCLBP–PHOG method is presented in Figure 10, which reflects the ROC curves showing the best recognition rates for all spectra.

LDA ROC curves for the PCLBP and PHOG descriptors under the red spectrum using six palmprint images were gathered in the first session for the training sample and six palmprint images were gathered in the second session for the testing sample. Comparison of the use of single classifiers versus an ensemble approach

4.4 Discussion

The proposed approach successfully captures discriminative information using the multi-LDA classifier. The method combines ten LDA classifiers based on random sampling using the sum score rule. This leads to a significant increase in the recognition rates compared to other existing methods. The results are plotted in Fig. 11, which shows that the ensemble of several LDA classifiers has an impact on the recognition rates. It has also been demonstrated that the accuracy of recognition is above the individual LDA classifier recognition rates.

In the two experiments, we noticed that the results of our proposed method for Protocol II are slightly lower in comparison with the outcomes obtained in Protocol I. This is because three training and six testing samples are used for the experimentation in Protocol II, which does not provide results as good as those for the six training and six testing samples in Protocol I. Moreover, as different training and testing samples are used in our two experiments, we provide a comparison reliant on the number of testing and training sets applied in state-of-the-art techniques. Our PCLBP–PHOG approach is found to outperform existing methods in all respects. The ROCs in Figs. 7, 8, 9 and 10 plot the false acceptance rate versus the genuine acceptance rate for the different multispectral palmprint data sets, represented by the red, green, blue and NIR spectral. As shown in these figures, it is possible to achieve greater accuracy using six training and six testing samples compared to three training and six testing samples. Moreover, there are several results in Tables 1 and 2 that show the PCLBP–PHOG method performs well and can obtain a higher recognition rate than PCMLBP and other state-of-the-art methods. It is also observed from the tables and graphs that red spectrum palmprints perform better in comparison with other spectral palmprints. The red spectrum captures the palm line and analysis the vein structures; this helps in the comparison and classification of palms with similar palm lines [29, 30].

5 Computational complexity

The first method used different radii of the PCLBP operator. It is noticeable that there are too many patterns in the multiscale PCLBP, with high computational complexity. The second method is a combination of PHOG and PCLBP, which leads to the dimensionality problem and further increases computational complexity. Thus, the idea was to reduce the dimension size, which has a large number of palmprint features for use in the classification. The dimension of the PCLBP–PHOG feature vector can be reduced by using feature selection or extraction methods. PCA was applied to a randomly selected feature subset in order to reduce both the dimensionality of the palmprint feature vector and the computational complexity involved. The performance of the proposed PCLBP–PHOG method was compared to other methods. The experimental results obtained showed that it gives outstanding results when compared to other existing methods.

6 Conclusion

A new multispectral palmprint recognition methodology has been proposed based on the combination of PCLBP and PHOG descriptors. The study used random sampling to construct many individual LDA clarifiers and applied PCA for to reduce the dimensions on random length feature vectors before using an LDA classifier for final classification. In this work, we use the fusion rules to fuse a number of LDA classifiers and thus demonstrate the concept of the framework proposed. The experimentation is performed according to two different protocols, confirming that the proposed methodology achieves higher recognition rates. Furthermore, it can be observed that in the PCMLBP approach, both with three and six training samples, there is better performance for the blue spectral band than other spectral bands. Moreover, the PCLBP–PHOG approach achieves a higher recognition rate and outperforms the PCMLBP and other state-of-the-art techniques, with the red spectral band performing better compared to other palmprint spectra. The proposed method, using either protocol, has many advantages, such as being simple to implement. Currently, we are extending this work to finger knuckle print data for validation purposes and to verify the effectiveness of the proposed approach.

Notes

Matlab offers to find discriminant functions with the command classify.

References

Funada J, Ohta N, Mizoguchi M, Temma T, Nakanishi K, Murai A, Sugiuchi T, Wakabayashi T, Yamada Y (1998) Feature extraction method for palmprint considering elimination of creases. In: Fourteenth international conference on pattern recognition, 1998. Proceedings, vol 2. IEEE, pp 1849–1854

Zhang D, Shu W (1999) Two novel characteristics in palmprint verification: datum point invariance and line feature matching. Pattern Recognit 32(4):691–702

Han CC, Cheng HL, Lin CL, Fan KC (2003) Personal authentication using palm-print features. Pattern Recognit 36(2):371–381

Lu G, Wang K, Zhang D (2002) Wavelet-based feature extraction for palm-print identification. In: Second international conference on image and graphics. International Society for Optics and Photonics, pp 780–784

Kumar A, Shen HC (2004) Palmprint identification using PalmCodes. In: Third international conference on image and graphics (ICIG’04). IEEE, pp 258–261

Wang X, Gong H, Zhang H, Li B, Zhuang Z (2006) Palmprint identification using boosting local binary pattern. In: 18th international conference on pattern recognition, 2006, ICPR 2006, vol 3. IEEE, pp 503–506

Noh JS, Rhee KH (2005) Palmprint identification algorithm using Hu invariant moments and Otsu binarization. In: Fourth annual ACIS international conference on computer and information science. IEEE, pp 94–99

Lu G, Zhang D, Wang K (2003) Palmprint recognition using eigenpalms features. Pattern Recognit Lett 24(9):1463–1467

Sang H, Yuan W, Zhang Z (2009) Research of palm-print recognition based on 2DPCA. In: Advances in neural networks—ISNN 2009. Springer, pp 831–838 (2009)

Niyogi X (2004) Locality preserving projections. In: Neural information processing systems, vol 16. MIT, p 153

Kong AWK, Zhang D (2004) Competitive coding scheme for palmprint verification. In: Proceedings of the 17th international conference on pattern recognition, 2004, ICPR 2004, vol 1. IEEE, pp 520–523

Song Z, Yan X, Liu YP (2010) Palmprint verification based on orthogonal code. In: Third international conference on information and computing (ICIC), vol 3. IEEE, pp 221–224

Jia W, Huang DS, Zhang D (2008) Palmprint verification based on robust line orientation code. Pattern Recognit 41(5):1504–1513

Hoggatt V Jr, Bicknell M (1973) Generalized Fibonacci polynomials. Fibonacci Q 11(5):457–465

Kylberg G, Sintorn IM (2013) Evaluation of noise robustness for local binary pattern descriptors in texture classification. EURASIP J Image Video Process 2013(1):1–20

El-Tarhouni W, Shaikh MK, Boubchir L, Bouridane A (2014) Multi-scale shift local binary pattern based-descriptor for finger-knuckle-print recognition. In: IEEE 26th international conference on microelectronics (ICM). IEEE, pp 184–187

Xu X, Guo Z, Song C, Li Y (2012) Multispectral palmprint recognition using a quaternion matrix. Sensors 12(4):4633–4647

Zhang S, Gu X (2013) Palmprint recognition based on the representation in the feature space. Optik 124(22):5434–5439

Xu Y, Zhu Q, Fan Z, Qiu M, Chen Y, Liu H (2013) Coarse to fine K nearest neighbor classifier. Pattern Recognit Lett 34(9):980–986

Li J, Cao J, Lu K (2013) Improve the two-phase test samples representation method for palmprint recognition. Optik 124(24):6651–6656

Ojala T, Pietikainen M, Maenpaa T (2002) Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Pattern Anal Mach Intell 24(7):971–987

Chan C, Kittler J, Messer K (2007) Advances in biometrics. Lect Notes Comput Sci 4642:809–818

Khan RA, Meyer A, Konik H, Bouakaz S (2012) Human vision inspired framework for facial expressions recognition. In: 2012 19th IEEE international conference on image processing (ICIP). IEEE, pp 2593–2596

Behera M, Govindan V (2014) Palm print authentication using PCA technique. Int J Comput Sci Inf Technol 5:3638–3640

Minaee S, Wang Y (2016) Palmprint recognition using deep scattering convolutional network. arXiv preprint arXiv:1603.09027

Fukunaga K (2013) Introduction to statistical pattern recognition. Academic Press, London

Barnouti NH, Al-Dabbagh SSM, Matti WE, Naser MAS (2016) Face detection and recognition using Viola–Jones with PCA–LDA and square Euclidean distance. Int J Adv Comput Sci Appl 7(5):371–377

Wang X, Tang X (2006) Random sampling for subspace face recognition. Int J Comput Vis 70(1):91–104

Zhang D, Guo Z, Lu G, Zuo W (2010) An online system of multispectral palmprint verification. IEEE Trans Instrum Meas 59(2):480–490

Cui J (2013) Bidirectional representation for face recognition across pose. Neural Comput Appl 23(5):1437–1442

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

El-Tarhouni, W., Boubchir, L., Elbendak, M. et al. Multispectral palmprint recognition using Pascal coefficients-based LBP and PHOG descriptors with random sampling. Neural Comput & Applic 31, 593–603 (2019). https://doi.org/10.1007/s00521-017-3092-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-017-3092-7