Abstract

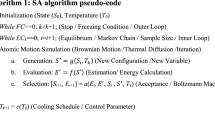

A new hybrid gradient simulated annealing algorithm is introduced. The algorithm is designed to find the global minimizer of a smooth nonlinear function of many variables. It uses the gradient method together with a line search to ensure convergence from a remote starting point, and it is hybridized with a simulated annealing algorithm to ensure convergence to the global minimizer. The performance of the algorithm is demonstrated through extensive numerical experiments on well-known test problems, and it is compared with that of other meta-heuristic methods. The results validate the effectiveness of the approach and show that the suggested algorithm is promising and merits implementation in practice.
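The abstract describes the hybrid only at a high level. The following is a minimal sketch of the general idea, not the authors' algorithm. It assumes a plain steepest-descent local search with Armijo backtracking, uniform random perturbations of the current point, the Metropolis acceptance rule, and a geometric cooling schedule. All function names and parameter values (`t0`, `cooling`, `step`, and so on) are hypothetical illustrations.

```python
import math
import random


def armijo_step(f, x, fx, g, alpha0=1.0, beta=0.5, c=1e-4, max_halvings=50):
    """Backtrack along -g until the Armijo sufficient-decrease condition holds."""
    gg = sum(gi * gi for gi in g)
    alpha = alpha0
    for _ in range(max_halvings):
        trial = [xi - alpha * gi for xi, gi in zip(x, g)]
        ft = f(trial)
        if ft <= fx - c * alpha * gg:
            return trial, ft
        alpha *= beta
    return x, fx  # no acceptable step found; caller treats this as a stall


def gradient_descent(f, grad, x, tol=1e-8, max_iter=500):
    """Local phase: steepest descent with an Armijo line search."""
    fx = f(x)
    for _ in range(max_iter):
        g = grad(x)
        if math.sqrt(sum(gi * gi for gi in g)) < tol:
            break
        x_new, f_new = armijo_step(f, x, fx, g)
        if f_new >= fx:  # no further decrease possible
            break
        x, fx = x_new, f_new
    return x, fx


def hybrid_gradient_sa(f, grad, x0, t0=10.0, cooling=0.9, n_temps=50,
                       moves_per_temp=20, step=1.0, seed=0):
    """Global phase (hypothetical): perturb, descend locally, then apply Metropolis."""
    rng = random.Random(seed)
    x, fx = gradient_descent(f, grad, list(x0))
    best_x, best_f = x, fx
    t = t0
    for _ in range(n_temps):
        for _ in range(moves_per_temp):
            cand = [xi + rng.uniform(-step, step) for xi in x]
            cand, fc = gradient_descent(f, grad, cand)
            # Metropolis rule: always accept downhill, accept uphill with prob exp(-df/t).
            if fc <= fx or rng.random() < math.exp(-(fc - fx) / t):
                x, fx = cand, fc
                if fx < best_f:
                    best_x, best_f = x, fx
        t *= cooling  # geometric cooling schedule
    return best_x, best_f
```

For example, on the tilted double well f(x) = (x^2 - 1)^2 + 0.3x, plain gradient descent started at 0.9 stops at the local minimizer near 0.96. The annealing phase lets the search escape to the basin of the global minimizer near -1.04. Running every candidate through the local search means each Metropolis comparison is made between local minima rather than raw random points.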

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: List of graphs and shapes

This appendix presents graphs and shapes for some of the second type of test problems, which are listed in Column 6 of Table 4. Figures 3, 4, 5, 6 and 7 show the shapes of some of these functions in their two-dimensional form. Figures 8, 9, 10, 11, 12 and 13 plot the value of the objective function \(f(x)\) against the number of function evaluations, and the gradient norm \(\|\nabla f(x^{*})\|_{2}\) against the number of function evaluations, for the "GL" and "GLMSA" algorithms.
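Plots of this kind require recording the objective value and the gradient norm together with a running count of function evaluations. The sketch below shows one way to collect such a history. It assumes the optimizer calls the objective through a wrapper. The class `CountingObjective` and its fields are hypothetical and do not describe how the figures in this article were produced.

```python
import math


class CountingObjective:
    """Hypothetical wrapper that counts evaluations and records f and ||grad f||_2."""

    def __init__(self, f, grad):
        self.f = f
        self.grad = grad
        self.n_evals = 0
        self.f_history = []  # (evaluation count, f(x)) pairs, one per call
        self.best = math.inf  # best objective value seen so far

    def __call__(self, x):
        self.n_evals += 1
        value = self.f(x)
        self.best = min(self.best, value)
        self.f_history.append((self.n_evals, value))
        return value

    def grad_norm(self, x):
        """Return (current evaluation count, Euclidean norm of the gradient at x)."""
        g = self.grad(x)
        return self.n_evals, math.sqrt(sum(gi * gi for gi in g))
```

Gradient evaluations are deliberately not added to `n_evals` here, so the horizontal axis counts only objective evaluations. Whether gradient calls should also be counted is a design choice that depends on how the evaluation budget is defined.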

About this article

Cite this article

EL-Alem, M., Aboutahoun, A. & Mahdi, S. Hybrid gradient simulated annealing algorithm for finding the global optimal of a nonlinear unconstrained optimization problem. Soft Comput 25, 2325–2350 (2021). https://doi.org/10.1007/s00500-020-05303-x

DOI: https://doi.org/10.1007/s00500-020-05303-x