Abstract

Fuzzy variable weights comprehensive evaluation is investigated in the paper. Its necessity and principle are discussed. The complexity and weak effect of direct variable weights methods, which are the traditional ways, are illustrated. So the necessity of indirect variable weights methods is shown, which only need to determine a parameter p. To determine it, variable weights degrees are divided into ten grades based on the change quantity of evaluation value. Besides, factors affecting p are investigated. Through data analyses and computer simulations, some reference values of p for different variable weights degrees are given. Moreover, two fitting functions for p are also given. These studies derive a simple method to get a satisfactory variable weights evaluation value. Three examples are given to demonstrate indirect variable weights methods. Lastly, some relevant discussions are given.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the reality, we often need evaluate some things for decision makings. When the object is complicated, we often evaluate its some related and important factors or aspects firstly, called single-factor evaluation, and then synthesize the obtained values to get a total evaluation of the thing. This is comprehensive evaluation. When the evaluation has fuzzy characteristic, fuzzy comprehensive evaluation becomes a suitable evaluation method (Peng and Sun 2007; Hu 2014). Fuzzy comprehensive evaluation has been used in engineering and techniques extensively (Engin et al. 2004; Khan and Sadiq 2005; Jia et al. 2011; Li et al. 2015).

As the existence of difference of importance among these factors, the weight indicating the importance of a factor is involved. However, in many real problems, those weak items (factors whose single-factor evaluation values are smaller, and strong items indicate factors whose values are greater) often play an important role in evaluations. So, we need emphasize them, i.e., give them great weights. But before evaluations, which factors are weaker is unknown, and different objects have different weak items. Thus, taking the way that each factor is given a fixed weight often leads to a poor total evaluation, even if each single-factor evaluation is reasonable. As there really are differences in importance among these factors generally, a practical method is giving the weight of each factor in general conditions or in the condition that all evaluation values are the same (assume that the weight is constant as long as all values are equal) firstly. Such a weight is called basic weight or constant weight (Li et al. 2004; Huang et al. 2005; Peng and Sun 2007; Hu 2014). Basic weight has a general meaning for a class of objects. It can be obtained by methods of AHP (Haq and Kannan 2006; Wang et al. 2012), etc. Although basic weight has a general meaning, poor total evaluations are still obtained by the above reasons. Thus, when to evaluate an object, we should adjust the weight properly or reasonably by all evaluation values, which is called variable weight, then use the changed weights and chosen evaluation function to get a better evaluation value of the thing. This is fuzzy variable weights comprehensive evaluation.

The concept of variable weights was presented by Wang (1985) in the 1980s. Yao and Li (2000), Li et al. (2004), Li and Li (2012), and some other scholars further studied it. Now it has been used in many areas (Huang et al. 2005; Wu et al. 2016, 2017). In fact, variable weights have been noticed by many scholars, and may be given different names such as variable weight, dynamic viewpoint, dynamic comprehensive evaluation (Mei 1996; Peng et al. 1996; Feng et al. 2010). The famous “entropy weight comprehensive evaluation” also has the ingredient of variable weights (Jia et al. 2011). It shows the importance of variable weights from another angle. Variable weights evaluation requires computing the changed weights firstly, then uses the weights to compute evaluation value. But the methods presented by this idea are too complicated in computations (Yao and Li 2000; Li et al. 2004; Peng and Sun 2007; Jia et al. 2011; Wu et al. 2016). They also have not reflected the influence of emphasizing degree (see Sect. 3). Thus, the effect of the variable weights usually is unsatisfactory. Chai (2009) presented a new variable weight method to compute fuzzy variable weights evaluation values. But the discussions are too elementary. This paper will continue the work and perform the following works: perfect the principle of variable weights, point out the faults of direct variable weights methods and the necessity of doing it indirectly, which mainly lies on determining a parameter p, find out factors influencing p greatly and then give some reference values of p for different variable weights degrees, and two fitting functions to determine p. These studies give a simple method to obtain a satisfactory variable weights evaluation value. Some relevant discussions are given lastly.

2 Concepts and some previous results

First, we discuss the simplest fuzzy comprehensive evaluation model. Let U = {u1, u2,…, un}(n ≥ 2) be the evaluation factors set, V = [0,1] the comment set, xi the single-factor evaluation value of ui and ωi the weight, i = 1,2,…,n. Then, X = (x1, x2,…, xn) ∈Vn is the single-factor evaluation vector (simply called evaluation vector) and ω = (ω1, ω2,…, ωn)∈Vn the weights vector (suppose ωi > 0 for any i). If ω satisfies ∑ n i=1 ωi = 1(now there are ωi < 1 for any i), it is called normalization. There are a lot of fuzzy comprehensive evaluation functions (Li et al. 2000; Peng and Sun 2007; Hu 2014). The three involved in the paper are

where ω is normalized, p > 0 is parameter.

Denote xs = min1≤i≤n{xi}, xg = max1≤i≤n{xi}, by the axioms of evaluation function (Li et al. 2000; Peng and Sun 2007), there is

Theorem 2.1

(Li et al. 2000; Hu 2014). Let U = {u1, u2,…, un} be the evaluation factors set, X = (x1, x2,…, xn) the evaluation vector, then for any fuzzy comprehensive evaluation function f (x1, x2,…, xn), there is xs ≤ f (x1, x2,…, xn) ≤ xg.

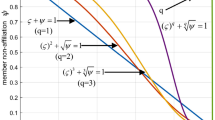

For (2), denote f2(x1, x2,…, xn; p) simply by f2(p). Let \( f_{2} (0) = \prod\nolimits_{i = 1}^{n} {x_{i}^{{\omega_{i} }} } \), then f2(p) increases monotonously and continuously from \( \prod\nolimits_{i = 1}^{n} {x_{i}^{{\omega_{i} }} } \) to xg on [0,∞) (Chai 2009). For (3), denote f3(x1, x2,…, xn; p) simply by f3(p). Let \( f_{3} (0) = 1 - \prod\nolimits_{i = 1}^{n} {(1 - x_{i} )^{{\omega_{i} }} } \), then f3(p) decreases monotonously and continuously from \( 1 - \prod\nolimits_{i = 1}^{n} {(1 - x_{i} )^{{\omega_{i} }} } \) to xs on [0,∞) (Chai 2009). Besides, f2(1) = f3(1) = f1(x1, x2,…, xn). As the union of ranges of f2(p) and f3(p) is [xs, xg], so from Theorem 2.1, there is

Theorem 2.2

Let U = {u1, u2,…, un} be the evaluation factors set, X = (x1, x2,…, xn) the evaluation vector, ω = (ω1, ω2,…, ωn) the weights vector, then for any value obtained by a fuzzy comprehensive evaluation function f(x1, x2,…, xn), there exists a p such that f(x1, x2,…, xn) = f2(p) or f(x1, x2,…, xn) = f3(p).

Proof

By Theorem 2.1, there is xs ≤ f (x1, x2,…, xn) ≤ xg. By it and the monotonicity of f2(p) and f3(p), there are (Chai 2009) xg ≥\( 1 - \prod\nolimits_{i = 1}^{n} {(1 - x_{i} )^{{\omega_{i} }} } \)≥ f3(1) = f2(1) ≥\( \prod\nolimits_{i = 1}^{n} {x_{i}^{{\omega_{i} }} } \) ≥ xs. As the ranges of f2(p) and f3(p) are [\( \prod\nolimits_{i = 1}^{n} {x_{i}^{{\omega_{i} }} } \), xg] and [xs,\( 1 - \prod\nolimits_{i = 1}^{n} {(1 - x_{i} )^{{\omega_{i} }} } \)] respectively, so the union of them is [xs, xg]. Considering the continuity of f2(p) and f3(p), the conclusion holds.

Theorem 2.2 shows that (2) and (3) are two inclusive fuzzy comprehensive evaluation functions [in fact, for each p > 0, (2) is an evaluation function, and the same for (3)]. Hence, the study for any evaluation function can be replaced with the study of (2) and (3) in some degree.

Besides, although there are a lot of evaluation functions, but for an evaluation vector, an evaluation value usually can be obtained by different functions with different weights. So, when the weight is variable, thinking of that one function is better than another has little meaning. In comprehensive evaluations, formula (1) is often used, especially in variable weights evaluations (Li et al. 2004; Peng and Sun 2007; Hu 2014; Wang et al. 2012). The reasons are that its computation is simple, it uses all weights and evaluation values, and its range is [xs, xg]. This paper also uses it as evaluation function.

3 Principle of fuzzy variable weights comprehensive evaluation

There are three cases in variable weights (Li et al. 2004): emphasizing weak items (weak items play an important role in evaluations), emphasizing strong items (strong items play an important role in evaluations), and the mixture (emphasizing some weak and some strong items). Here, we do not consider the last case. As the two former cases are similar, so we mainly study the first one. In the following, the discussed variable weights are only for it, except Sects. 7, 8 and 9.

In variable weights, the weight is influenced by single-factor evaluation values, so Li et al. (2004), Peng and Sun (2007), and Hu (2014) gave the following variable weights formulae

However, these formulae have defects. Basic weights have some influences in variable weights obviously. They are the initial weights of variable weights. But they are not reflected in (4). Besides, the importance of weak items in evaluations for different things is different. Some are less important; some are very important. For the latter, we should emphasize weak items greatly; thus, the change extent of weights in variable weights is great. For the former, the extent should be little. We use variable weights degree to denote the degree of emphasizing weak items or the change degree of weights, but it is not reflected in (4). By these reasons, the effect of variable weights by (4) will be unsatisfactory.

Denote basic weights vector by \( \omega_{b} = (\omega_{{b_{1} }} ,\omega_{{b_{2} }} , \ldots ,\omega_{{b_{n} }} ) \), variable weights degree by d, then (4) should be rewritten as:

Let \( \omega_{i} = \omega_{{b_{i} }} + \Delta \omega_{i} \), where ωi is the left side of (5), then \( \Delta \omega_{i} = \omega_{i} - \omega_{{b_{i} }} \) is just the change quantity of the ith weight to its basic weight. So, the fuzzy variable weights comprehensive evaluation formula should be

or

For (6) and (7), a changed weights vector still is weights vector. So, they should satisfy ∑ n i=1 Δωi = 0 and \( 0 < \omega_{{b_{i} }} + \Delta \omega_{i} < 1 \) for any i.

4 Necessity of indirect variable weights

Expressions (6) and (7) depict the principle of variable weights comprehensive evaluation well. Variable weights evaluation is that some changes of the weights occur to their basic weights. As the sum of all weights is one, so the increase in weights of some factors will result in the decrease in weights of some other factors. For the variable weights of emphasizing weak items, it is the increase in weights of weak items and the decrease of strong items. This results in the decline of evaluation value, but it cannot be less than xs by Theorem 2.1. As f3(p) decreases monotonously and continuously from the evaluation value under basic weights (without variable weights) to xs on [1,∞), there is

Theorem 3.1

(Chai 2009). LetU = {u1, u2,…, un} be the evaluation factors set,X = (x1, x2,…, xn) the evaluation vector,ωb the basic weights vector, then for any fuzzy variable weights comprehensive evaluation valuef(x1, x2,…, xn) of emphasizing weak items, there exists a unique value ofp on [1,∞) such thatf(x1, x2,…, xn) = f3(p), namely

Expression (8) shows there are two ways to achieve the goal of variable weights evaluation. One is direct variable weights by the left side of (8), i.e., compute the changed weights firstly using (5), then compute variable weights evaluation value using (6) or (1) with the changed weights. The other is indirect variable weights by the right side of (8) or (3) with basic weights, i.e., determine the value of p firstly, then compute evaluation value. When using direct variable weights, n new weights need to determine, in which each is a function of all xi, \( \omega_{{b_{i} }} \), and d. As there are mutually effects among them, the effects are nonlinear, so it is difficult to build reasonable variable weights formulae suitable for any case. Formulae under some assumptions may be given, such as those formulae in some above-mentioned references which do not consider basic weights and variable weights degree. But they are still too complicated in computations. When using indirect variable weights, we only need to determine a value of p. After it, the evaluation value is easy to obtain. Thus, we need to investigate indirect variable weights methods.

In indirect variable weights, we should determine p. To achieve this goal, we should study factors affecting it and how they affect it. As variable weights are unnecessary when all xi are equal, so we suppose that not all xi are equal in the following.

5 Factors affecting p

When considering variable weights evaluation of emphasizing weak items, f3(p) should be used. To determine p, we should measure d, the variable weights degree, firstly. Obviously, the stronger the intensity of emphasizing weak items, the greater the change of the weights, thus the greater the decline of the evaluation value. Hence, we can use the change quantity of evaluation value to measure d. When X and ωb are given, f3(1) represents the evaluation value under basic weights or the value without variable weights. When weights change, the evaluation value declines, but at most to xs, so the greatest extent of decline of evaluation value is f3(1) − xs. For convenience, we divide variable weights degrees into ten grades: extremely small, very small, small, relatively small, medium inclining small, medium inclining great, relatively great, great, very great, and extremely great. We introduce a function to measure the d of variable weights of a thing

where f3(p) indicates the variable weights evaluation value of the thing, and stipulate generally that variable weights degrees belong to the preceding ten grades when d belong to (0, 0.1], (0.1, 0.2], (0.2, 0.3], (0.3, 0.4], (0.4, 0.5], (0.5, 0.6], (0.6, 0.7], (0.7, 0.8], (0.8, 0.9], (0.9, 1], respectively.

From (5)–(8) evaluation vector, basic weights, and d all influence p. Evaluation vector is characterized by its mean, variance, and dimension. Basic weights vector is characterized by its variance, dimension, and the relationship between it and the evaluation vector. So, we study the influence of these factors to p.

Notice 1

For a class, the d should be the d(p) determined by (9) in general conditions or the average of d(p) of all objects in the class. Thus, for an object, its d(p) will differ from the d, but the difference should be little because it belongs to the class. Hence, if the d of a class in unknown, we can use the d(p) of a thing in the class instead of it.

5.1 Influence of the mean of evaluation vector

Consider two evaluation vectors X1 = (0.85, 0.75, 0.9) and X2 = (0.45, 0.35, 0.5), whose basic weights vectors both are ωb = (0.4, 0.24, 0.36). Obviously, each number of X2 can be obtained from the corresponding number of X1 by subtracting 0.4, so they have the same variance, but differ in mean. For these two vectors, the corresponding p values computed by (9), (3) for some special d values are shown in Table 1.

Table 1 shows the mean of evaluation vector has a significant effect on p, and the effect is nonlinear. To get a same d, p should be less if the mean of the vector is greater. This conclusion can be obtained from (3): when the mean is little, each xi will be little, so 1 − xi will be great. Hence, to let (1 − xi)p have a same change, p should be greater.

Table 1 also shows that d has a significant effect on p, and the effect is nonlinear.

5.2 Influence of the variance of evaluation vector

Consider two evaluation vectors X3 = (0.3, 0.5, 0.9) and X4 = (0.5, 0.55, 0.65), whose basic weights vectors both are ωb = (0.45, 0.3, 0.25). The mean of the two vectors is the same, but they differ in variance. Their variances are 0.093 and 0.0058, respectively. For these two vectors, the corresponding p values computed by (9), (3) for those special d values are shown in Table 2.

Table 2 shows the variance of evaluation vector has a significant effect on p, and the effect is nonlinear. To get a same value of d, p should be less if the variance is greater.

5.3 Influence of basic weights

Consider evaluation vector X = (0.3, 0.5, 0.9) and three basic weights vectors ωb1 = (0.1, 0.35, 0.55), ωb2 = (0.55, 0.35, 0.1), and ωb3 = (0.36, 0.34, 0.3). The first two have the same variance, but for the first, the weight of the weak item is less and the weight of the strong item is greater. And it is opposite for the second. The last two differ only in variance. For these vectors, the corresponding p values computed by (9), (3) for those special d values are shown in Table 3.

Table 3 shows that the variance of basic weights vector and the relationship between it and evaluation vector have some effects on p, but the effect is not significant. To get a same d, p should be less if the weights of the weak items are great, and the same if the variance of the basic weights vector is less.

5.4 Influence of the number of factors

Consider the influence of the number of evaluation factors, i.e., n, to p. For two evaluation vectors with different n, we cannot use a same basic weights vector. Besides, let two vectors’ mean and variance be equal, we cannot determine one from another. For convenience, we take stochastic simulations: for some numbers of n, we simulate 5000 times each and take the mean of p. In the simulations, each single-factor evaluation value is given randomly and independently, following uniform distribution on [0, 1]. Weights are given by the same way and then normalized. The results of the simulations are given in Table 4.

Notice 2

Table 4 shows for those d and n, the values of p are less than 100. However, in simulations, the case that p is greater than 100 does occur (it occurs frequently when n is 2). Sometimes p is very greater. Of course, it seldom occurs. For the convenience of handling, p is considered as 200 when it is greater than 200.

Table 4 indicates that n has some effects on p, but the effect is not significant. Besides, the influence is decreasing with the increasing of n. When d = 0.1, p decreases with the increasing of n; when d is from 0.3 to 0.9, p decreases first, then increases.

In simulations, we found that the values of p are more unstable when n is less. In reality, evaluations with n = 2 are little, and the same for evaluations whose n are greater, say, greater than 40, so we do not consider them. Now, the averages of p in Table 4 for n= 3, 4, 5, 7, 10, 15, 25, 40 and d being from 0.1 to 0.9 are 1.71, 2.53, 3.57, 5.03, 6.98, 9.970 15.04, 25.11, 55.51, respectively. So, the following suggestions are given: p should take values in (1, 1.71], (1.71, 2.53], (2.53, 3.57], (3.57, 5.03], (5.03, 6.98], (6.98,9.97], (9.97, 15.04], (15.04, 25.11], (25.11, 55.51], (55.51,∞) when variable weights degree belongs to extremely small, very small, small, little small, medium trending small, medium trending great, little great, great, very great, and extremely great, respectively. If we need only one p value, it is suggested for above variable weights degrees except the last one that p takes the midpoint of the interval. For the last case, it is suggested that p takes 116 by simulations using d = 0.95.

6 Variable weights fitting function

We found that variable weights degree, the mean, variance, and dimension of evaluation vector and basic weights vector all influence p. Thus, although the suggested values of p are given above for different variable weights degrees, for a given evaluation vector and basic weights vector, because of the influence of them, the suggested value of p may differ greatly with the reasonable value (compare the average values of p from Table 4 with the computed p values for the evaluation and basic weights vectors in Tables 1, 2). Hence, it is necessary to improve above method to give more reasonable values of p for the given evaluation vector and basic weights.

We learned that although basic weights and n have some influences on p, but the influence is not significant, so we do not consider them in improving the above method. Now we try to give a better fitting function of the mean and variance of evaluation vector and d so as to determine more reasonable values of p.

After a great deal of data analyses, testing for different combinational forms of functions, and regression analyses using evaluation vectors and basic weights vectors with different dimensions generated randomly and different values of d (including d = 0), a preferable fitting function is given as follows (whose decisive coefficient is more than 0.87).

where mx and vx stand for the mean and variance of evaluation vector, respectively.

We know that xi in X takes values on [0, 1]. If it follows uniform distribution, its mean and variance are 0.5 and 1/12, respectively. Thus, the values of p computed for those special d values by mx = 0.5 and vx = 1/12 and (10) with the suggested values of p above are given in Table 5.

Table 5 shows that for those special d values, the computed p values by (10) are quite close to the suggested p values. So the fitting function is well.

Furthermore, use (10) to compute the values of p using the data in Tables 1 and 2. The results are shown in Table 6.

Table 6 shows that for the evaluation vectors in Tables 1 and 2, the values of p computed by (10) have really considered the influence of the mean and variance of the vectors. From Table 6, one sees that although in some cases the p values obtained by (10) are not desirable, most of them are acceptable. The p values obtained by (10) have some improvements compared with the suggested p values. Considering that the vectors in Tables 1 and 2 are constructed specially for the influence of mean and variance of evaluation vector, having some particularities, (10) is acceptable.

7 Variable weights of emphasizing strong items

In the reality, when variable weights are needed, cases of emphasizing weak items are common seen. But the opposite cases also exist (Li et al. 2004; Huang et al. 2005), so we need discuss them. Emphasizing strong items means that they play an important role in evaluations, so their weights should be increased to their basic weights. Meanwhile, the weights of weak items should be decreased. Thus, the whole evaluation value increases. In such a case, one may think of using 1 minus each xi to convert the problem of variable weights of emphasizing strong items into a problem of emphasizing weak items. As the complexity of variable weights, doing as this may generate some unexpected problems. So, we deal with it directly.

As f2(p) with basic weights increases continuously and monotonously from the evaluation value under basic weights to xg when p increases on [1,∞), we use f2(p) as the variable weights evaluation function. In this situation, we just need to determine the value of p. We also divide variable weights degrees into ten grades: extremely small, very small, small, little small, medium trending small, medium trending great, little great, great, very great, and extremely great, and introduce a function

(d denotes variable weights degree, so the same symbol is used for convenience), and stipulate that variable weights degrees belong to the ten grades generally when d (p) is in (0, 0.1], (0.1, 0.2], (0.2, 0.3], (0.3, 0.4], (0.4, 0.5], (0.5, 0.6], (0.6, 0.7], (0.7, 0.8], (0.8, 0.9], and (0.9, 1], respectively. Through analyses and computations as above, we found that for the variable weights of emphasizing strong items, p is also influenced by d, the mean, variance and dimension of evaluation vector and basic weights vector, and the relationship between them. But the first three are great, whereas the others are not. Using computer simulations, we found that the suggested values of p for variable weights of emphasizing strong items for those special d are very close to that for emphasizing weak items. Considering the randomness of simulations, we give the same suggestions: p should take value in (1, 1.71], (1.71, 2.53], (2.53, 3.57], (3.57, 5.03], (5.03, 6.98], (6.98,9.97], (9.97, 15.04], (15.04, 25.11], (25.11, 55.51], (55.51,∞) when variable weights degree belongs to extremely small, very small, small, little small, medium trending small, medium trending great, little great, great, very great, and extremely great, respectively. If we only consider one value of p, it is suggested that for those above variable weights degrees except the last one, p takes the midpoint value of the interval. For the last case, p takes 116.

We also give the following fitting function for p through data analyses and simulations

where mx and vx are the same as above.

8 Examples

Following we use some examples to show the necessity of variable weights and how to use indirect variable weights method.

Example 1

Consider a variable weights evaluation reorganized by an exercise in Peng and Sun (2007). For an object, the factors set is U = {u1, u2, u3, u4, u5}, its evaluation vector and basic weights vector are X = (0.9, 0.8, 0.6, 0.4, 0.2) and ωb = (0.1,0.15,0.2,0.25,0.3), respectively, so the evaluation value under basic weights is f3(1) = 0.49. Besides, a reasonable changed weights vector ω = (0.043, 0.133, 0.194, 0.259, 0.371) is given. By them, this is a variable weights evaluation of emphasizing weak items. The weights of weak items u4 and u5 are increased. Meanwhile, the weights of strong items u1, u2, u3 are decreased. Thus, in variable weights evaluation, we should use (3). By (1), X and ω the variable weights evaluation value is 0.4393. This value can be obtained by (3) with basic weights when p = 1.8758, i.e., f3(p) = 0.4393.

Now consider indirect variable weights, and d(p) = 0.1748 is derived by f3(p), f3(1), xs = 0.2, and (9). As we have not the value of d, only having this example, we have to see d(p) as the variable weights degree, d. By X, d, and (10) there is p = 2.3921. By it and (3) with basic weights, the evaluation value is 0.4166, closing to 0.4393. Besides, by d(p), the variable weights degree belongs to very small; thus, we take the reference value of p, 2.120, the midpoint of (1.71, 2.53]. By it and (3) with basic weights, we obtain evaluation value 0.4280, also closing to 0.4393.

Example 2

Consider a variable weights evaluation from Huang et al. (2005). This is a two-level evaluation problem. Consider U1 = {u11, u12,…, u17}. By Table 3, in the paper, there are X1 = (0.3571, 0.2143, 0.2786, 0.5857, 0.2786, 0.4714, 0.2000), ωb = (0.140, 0.144, 0.119, 0.144, 0.155, 0.147, 0.151), and ω = (0.1459, 0.1162, 0.1095, 0.1921, 0.1462, 0.1760, 0.1177). By X1 the strong items are u11, u14, u16, others are weak items. By ωb, ω and X1, this is a variable weights evaluation of emphasizing strong items. Thus, in variable weights evaluation, we should use (2). The evaluation value without variable weights is f2(1) = 0.3410 with ωb. The variable weights evaluation value is 0.3673 by (1), X1 and ω. This value can be obtained by (2) with basic weights when p = 2.0573, i.e., f2(p) = 0.3673. Now consider indirect variable weights, by (11) and f2(p), f2(1), xg there is d(p) = 0.1072. We also must see it as the d. By X1, d, and (12) there is p = 2.0901. By it and (2) with basic weights, the variable weights evaluation value is 0.3681, closing to 0.3673.

Consider another Ui, say U4. By X4, d, and (12), there is p = 2.2579. By it and (2), the variable weights evaluation value is 0.3913, closing to 0.3883, the evaluation value by the changed weights and (1). When consider other Ui, the results are also satisfactory. But computing variable weights evaluation values by this way is simpler than the method in Huang et al.(2005).

The d of examples in most references are not great, as Examples 1, 2 have shown. But in realities there are cases in which d should be very great. A famous case is “one-vote veto system,” often meaning that the poorest item plays a decisive role in variable weights evaluations (obviously it is emphasizing weak items). But this system is crude as it only considers the evaluation value of the weakest item. If we use variable weights evaluation and take a great value d enough, we can not only consider the weakest item mainly, but also consider other items suitably, thus obtain a more reasonable evaluation value for decision makings. Following we construct an example to show it.

Example 3

Consider the health of one’s body. For simplicity, we evaluate one’s health from the following aspects U = {u1(heart), u2(liver), u3(stomach), u4(lung), u5(kidney), u6(other organs)}. For an organ, the worse the function, the less the evaluation value. Suppose the basic weights vector is ωb = (0.2, 0.1, 0.15, 0.15, 0.1, 0.3), which indicates the importance of those organs in general conditions. For any one, his health is bad when one of his organs is bad even if his other organs are good. This shows that bad organs play a crucial role in the evaluation of his body, so we should give great weights for the poor organs. As different people with poor body have different poor organs, we cannot give a reasonable weights vector suitable for any one’s body evaluation. Hence, we should use variable weights evaluation of emphasizing weak items. Suppose in the evaluation for one from above aspects, an evaluation vector X = (0.9,0.85,0.9,0.95,0.1,0.8) is obtained. If the d is 0.750, use (10), X and it p = 5.687 is derived, and the evaluation value 0.40 is derived by (3), showing his body is poor, whereas 0.793 is obtained when variable weights are not considered. Obviously, the former evaluation value is more reasonable because it not only considers all evaluation values, but also considers the evaluation value of u5, the poorest organ, mainly. If 0.40 is unsatisfactory, it might show the d is unreasonable. Adjusting it properly, then we can use it and this paper’s method to do variable weights evaluations.

9 Conclusions

This paper studies fuzzy variable weights comprehensive evaluations further. The necessities of variable weights and indirect variable weights are illustrated. The defect in the traditional variable weights formula is revised. To let indirect variable weights work well, factors affecting p are discussed. Some reference values of p for different variable weights degrees and two fitting functions for p are also given. Three examples show that the results are satisfactory. In variable weights evaluations, our aim usually is to get a reasonable evaluation value rather than reasonable changed weights, so indirect variable weights can meet this requirement. Besides, although the variable weights evaluation discussed in the paper is simple, the principle of complex variable weights evaluations such as multi-level fuzzy variable weights evaluations is the same with it (Peng and Sun 2007; Li et al. 2015). So it has a general meaning.

For a real comprehensive evaluation problem, how to solve it reasonably? First, we should judge whether it needs variable weights. If the weight is not affected by the single-factor evaluation values, variable weights are unnecessary; otherwise, we need them.

When using indirect variable weights evaluation, we need to determine d. Although it is not easy to get the best value of d, it is not difficult to get a suitable value. For the objects of a class, many experts or experienced people can give such a value. If we do not know the value of d of a class but have a reasonable variable weights example for a problem with the same class, we can use this example to compute d(p), and see it as the d. If there are several such examples, average the obtained values, and then use the mean.

References

Chai ZL (2009) A novel variable weight method on fuzzy synthetic evaluation. In: 2009 International conference on artificial intelligence and computational intelligence, Shanghai, pp 471–476

Engin GO, Demir I, Hiz H (2004) Assessment of urban air quality in Istanbul using fuzzy synthetic evaluation. Atmos Environ 38(23):3809–3815

Feng F, Xu SG, Liu JW et al (2010) Comprehensive benefit of flood resources utilization through dynamic successive fuzzy evaluation model: a case study. Sci China 53(2):529–538

Haq AN, Kannan G (2006) Fuzzy analytical hierarchy process for evaluating and selecting a vendor in a supply chain model. Int J Adv Manuf Technol 29:826–835

Hu BQ (2014) Basis of fuzzy theory. Wuhan University Press, Wuhan (in Chinese)

Huang YS, Qi JX, Zhou JH (2005) Method of risk discernment in technological innovation based on path graph and variable weight fuzzy synthetic evaluation. FSKD 3613:635–644

Jia ZY, Wang CM, Huang ZW et al (2011) Evaluation research of regional power grid companies’ operation capacity based on entropy weight fuzzy comprehensive model. Procedia Eng 15:4626–4630

Khan FI, Sadiq R (2005) Risk-based prioritization of air pollution monitoring using fuzzy synthetic evaluation technique. Environ Monit Assess 105:261–283

Li DQ, Li HX (2012) The properties and construction of state variable weight vectors. J Beijing Normal University (Natural Science) 38(4):455–461

Li HX, Yen VC, Lee ES (2000) Factor space theory in fuzzy information processing-composition of states of factors and multifactorial decision making. Comput Math Appl 39:245–265

Li HX, Li LX, Wang JY et al (2004) Fuzzy decision making based on variable weights. Math Comput Model 39:163–179

Li WJ, Liang W, Zhang LB et al (2015) Performance assessment system of health, safety and environment based on experts’ weights and fuzzy comprehensive evaluation. J Loss Prev Process Ind 35:95–103

Mei SZ (1996) Fuzzy control and the determining of the variable weight. Syst Eng Theory Practice 5:78–82,9 (in Chinese)

Peng ZZ, Sun YY (2007) Fuzzy mathematics and its applications. Wuhan University Press, Wuhan (in Chinese)

Peng BZ, Dou YJ, Zhang Y (1996) Attempt to evaluate the environmental comprehensive quality with a dynamic viewpoint. China Environ Sci 16(1):16–19 (in Chinese)

Wang PZ (1985) Fuzzy sets and the falling shadow of random sets. Beijing Normal University Press, Beijing (in Chinese)

Wang Y, Yang WF, Li M et al (2012) Risk assessment of floor water inrush in coal mines based on secondary fuzzy comprehensive evaluation. Int J Rock Mech Min Sci 52(6):50–55

Wu Q, Li B, Chen YL (2016) Vulnerability assessment of groundwater inrush from underlying aquifers based on variable weight model and its application. Water Resour Manage 30(10):3331–3345

Wu Q, Zhao DK, Wang Y et al (2017) Method for assessing coal-floor water-inrush risk based on the variable-weight model and unascertained measure theory. Hydrogeol J 10:1–15

Yao BX, Li HX (2000) Axiomatic system of local variable weight. Syst Eng Theory Practice 20:106–109 (in Chinese)

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The author declares that he has no conflicts of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Chai, Z. An indirect variable weights method to compute fuzzy comprehensive evaluation values. Soft Comput 23, 12511–12519 (2019). https://doi.org/10.1007/s00500-019-03797-8

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-019-03797-8