Abstract

In this survey paper, we review recent advances in compressed sensing applied to systems and control. Compressed sensing has been actively researched in the fields of signal processing and machine learning. More recently, the method has been applied to systems and control problems, such as sparse feedback gain design, reduced-order control, and maximum hands-off control. This paper introduces these important applications of compressed sensing to systems and control. MATLAB programs for the numerical examples shown in this survey paper are available as supplementary materials.


1 Preface

It is our great pleasure to be able to contribute this article on the occasion of Eduardo Sontag’s 70th birthday.

Eduardo and the second author (hereafter "I" and "me") both studied for the Ph.D. under the guidance of the late Professor Rudolf E. Kalman. We belonged to the Center for Mathematical System Theory that Professor Kalman established at the University of Florida. I became a member of the Center in the fall of 1974, and when I came in, Eduardo was already there; he was two years ahead of me.

As students, we both belonged to the Mathematics Department to satisfy the course requirements for the Ph.D. As he was two years ahead, his main task at that time was to finish his Ph.D. thesis.

His mathematical capability was simply enormous: sharp, precise, and knowledgeable, especially in algebra. He had already written several papers on systems over rings when I first met him, and I was very impressed.

In the Center, we shared the habit of working late at night. We would very often come to the office around 8 or 9 pm and stay there until 2 or 3 am.

During this time, we talked about and discussed many things: mathematics, control theory, politics, and so on. I do not remember many of the details, but I can still recall two episodes.

At one point, our (and also the Center's) main concern was how to grasp the notion of uniqueness of canonical realizations. At that time, we were both working on realization theory: he on algebraic systems, and I on infinite-dimensional systems.

At least from a philosophical point of view, this is a very crucial issue, critical for modeling. If we have two essentially different models based on the same data, how can we safely draw a sound conclusion from them? Such nonuniqueness could shake the ground for the validity of a modeling process. Uniqueness of canonical realizations saves us from such a nuisance.

The trouble is that, beyond the category of finite-dimensional linear systems, naive notions of canonical realizations do not work. One needs to be more careful in arriving at a desired uniqueness result.

Eduardo and I had some discussions, and we agreed that the notion of canonical realizations should be taken in a categorical sense. Further, observability is the key to this: if one works in an algebraic category, observability should be understood in an algebraic sense; if one works in a topological context, observability should be topological.

These observations later led to further developments for me, and they flourished into more concrete and useful realizations. I can still recall the vivid image of the night we had this discussion together. We were young and enthusiastic, and had plenty of time for discussion. Those were golden days. I feel very fortunate to have him as a friend and an esteemed colleague.

I would like to conclude this preface with the second episode, which concerns his skill in using chopsticks.

At one point (I do not remember when), I gave him a pair of chopsticks. I should remind the reader that Japanese cuisine and chopsticks were not very popular in the USA in the 1970s. (For example, there were no sushi shops in Gainesville.) Japanese food was slowly gaining popularity, and so were chopsticks. Eduardo was curious and also interested in becoming skillful with them. I probably taught him some basics of using chopsticks, and he would occasionally practice when he wanted to relax from research and study. He soon (perhaps half a year later) became very good at using them, and he should be rightly proud of it. Again, using chopsticks was not common then. I am also proud of having given him his first motivation for becoming skillful with them.

Almost 50 years have passed since then. We chose different research directions, so we have written only one joint paper [1] together. However, I cherish our wonderful relationship and am grateful for his long-term friendship. Little did we dream then that both of us would still remain in academia and keep the same friendship as in the old days in Florida.

I would also like to mention one more thing. The first author, Masaaki Nagahara, was a star student of mine and is now my esteemed colleague. Hence, in light of our relationship, he can be regarded as your (Eduardo's) academic nephew. In this way, our academic lineage continues, and it all dates back to our good old days.

So, Eduardo, enjoy this volume, which is a wonderful tribute to your great achievements, and I wish you many happy returns of the day, year and the occasion. My heartiest congratulations!

2 Introduction

In 1997, Eduardo Sontag and his colleagues studied sparse approximation [2], in which a function in a function space is approximated by a small number of basis functions. This idea has since grown into a very rich research area called compressed sensing, which has been the focus of many recent studies in signal processing, machine learning, and statistics [3,4,5,6,7]. The core idea of compressed sensing, which is very similar to that of [2], is to exploit sparsity in signal analysis. A sparse signal (e.g., a sparse vector or a sparse matrix) is a signal that contains very few nonzero elements. To measure the sparsity of a signal, we use the \(\ell ^0\) norm, the number of nonzero elements in the signal; if the \(\ell ^0\) norm is much smaller than the size (or the length) of the signal, then the signal is said to be sparse. Sparse signals are all around us. For example, audio signals are often sparse in the frequency domain, since their frequency components are concentrated in a limited band. In particular, the human voice can be assumed to lie in the frequency range of 300–3400 Hz, called the telephone bandwidth, so that voice can be coded at the sampling rate of 8000 Hz [8], which exceeds twice the upper band edge (6800 Hz). Also, a pulse signal is sparse in the time domain, since it is active (i.e., nonzero) only for a short duration. This property has been applied, for example, to reflection seismic surveys in geophysics [9,10,11].

More recently, compressed sensing has been applied to the design of control systems, where sparsity is used to reduce the number of parameters that determine the control. One motivation for sparse control design is networked control, where observation data and control signals are exchanged between the controlled plant and the controller through a rate-limited wireless network. In networked control, compressed sensing plays an important role in realizing resource-aware control, which aims at reducing the communication and computational burden. For this purpose, sparse optimization that minimizes the \(\ell ^0\) norm of a control packet sent through a rate-limited network has been proposed in [12,13,14,15,16,17]. Resource-aware control is also achieved by minimum actuator placement [18,19,20,21,22,23], which minimizes the number of actuators, or control inputs, needed to achieve a given control objective, such as controllability.

In networked control, it is also preferable for implementation to represent a controller compactly. For this purpose, the design of sparse feedback gains has been proposed in [24,25,26,27,28,29]. In general, there is a tradeoff between the closed-loop performance and the sparsity of the gain; see the review paper [30] for detailed discussions. In addition, reduced-order control [31] is another good strategy for obtaining a compact controller, and it is related to compressed sensing of matrices [32,33,34,35,36].

The compressed sensing approach has also been proposed in the context of optimal control. In particular, the papers [37, 38] introduced a new type of optimal control called maximum hands-off control, which minimizes the \(L^0\) norm of a continuous-time control signal under control constraints. Namely, maximum hands-off control is the sparsest control: among all controls that achieve the control objective under the constraints, it is active (i.e., nonzero) for the shortest total time. A hands-off control is exactly zero over long time intervals. Such control is actually used in practical control systems, where it is sometimes called gliding or coasting. An example of hands-off control is the stop-start system [39, 40] in automobiles, where the engine is automatically shut down when the automobile is stationary. Also, in a hybrid vehicle, the internal combustion engine is stopped when the vehicle is at a stop or at low speed, while the electric motor drives the vehicle instead [41,42,43]. In these systems, fuel consumption and CO or CO\(_2\) emissions can be effectively reduced. Railway vehicles [44, 45] and free-flying robots [46] also take advantage of hands-off control. Because of these properties, hands-off control is also called green control [47].

Recent studies have also explored mathematical properties of maximum hands-off control. Maximum hands-off control is mathematically described as an \(L^0\) optimal control problem, which is very hard to solve. Borrowing the idea of \(\ell ^1\) relaxation in compressed sensing, [38] proposed solving an \(L^1\) optimal control problem in place of the \(L^0\) optimal control problem. The \(L^1\) optimal control, also known as minimum fuel control, was extensively studied in the 1960s (see, e.g., [48]). Because it is a convex problem, it is much easier to solve. In [38], the equivalence between \(L^0\) and \(L^1\) optimal controls is established under some mild assumptions. Fundamental properties of maximum hands-off control, such as the value function [49] and necessary conditions [50], have been investigated. Efficient numerical algorithms for maximum hands-off control have been proposed in recent papers [51,52,53]. Finally, practical applications of maximum hands-off control have been reported for an electrically tunable lens [54], spacecraft maneuvering [55], and thermally activated building systems [56].

This survey paper provides an overview of recent advances in compressed sensing approaches to systems and control. The paper is organized as follows: Sect. 3 introduces the design of sparse feedback gains. Sect. 4 discusses reduced-order control as an application of compressed sensing. Sect. 5 introduces maximum hands-off control and presents its mathematical properties. Finally, Sect. 6 provides concluding remarks.

2.1 Notation

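Let x be a vector. The \(\ell ^p\) norm \(\Vert x\Vert _p\) with \(p\in (0,\infty )\) (a quasi-norm when \(p<1\)) is defined by

\[ \Vert x\Vert _p \triangleq \Bigl (\sum _{i} |x_i|^p\Bigr )^{1/p}, \tag{1} \]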

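where \(x_i\) is the ith element of x. Also, the \(\ell ^0\) norm \(\Vert x\Vert _0\) (which, despite its name, is not a norm) is defined by

\[ \Vert x\Vert _0 \triangleq \#\bigl (\textrm{supp}(x)\bigr ), \tag{2} \]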

where \(\textrm{supp}(x)\) is the support set of x, that is,

\[ \textrm{supp}(x) \triangleq \{i : x_i\ne 0\}, \tag{3} \]

and \(\#\bigl (\textrm{supp}(x)\bigr )\) is the number of elements in the finite set \(\textrm{supp}(x)\).
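The vector definitions above can be illustrated in a few lines of Python. This is only a sketch: vectors are plain Python lists, and the function names `support`, `l0_norm`, and `lp_norm` are our own.

```python
def support(x):
    """Return supp(x): the set of indices i with x[i] != 0."""
    return {i for i, xi in enumerate(x) if xi != 0}


def l0_norm(x):
    """Return ||x||_0, the number of nonzero elements of x."""
    return len(support(x))


def lp_norm(x, p):
    """Return ||x||_p = (sum_i |x_i|^p)^(1/p) for p in (0, inf)."""
    if p <= 0:
        raise ValueError("p must be positive")
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)


x = [0.0, 3.0, 0.0, -4.0]
print(support(x), l0_norm(x), lp_norm(x, 1), lp_norm(x, 2))  # prints {1, 3} 2 7.0 5.0
```

The vector x has only two nonzero entries out of four, so it is sparse in the sense used throughout this paper.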

Let A be a matrix. The transpose of A is denoted by \(A^\top \), the trace by \(\textrm{tr}(A)\), and the rank by \(\textrm{rank}(A)\). The ith largest singular value of A is denoted by \(\sigma _i(A)\), and the maximum singular value by \(\sigma _{\max }(A)\). For matrix A, we denote by \(\Vert A\Vert \) the Frobenius norm:

\[ \Vert A\Vert \triangleq \sqrt{\textrm{tr}(A^\top A)}, \tag{4} \]

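and by \(\Vert A\Vert _*\) the nuclear norm:

\[ \Vert A\Vert _* \triangleq \textrm{tr}\bigl (\sqrt{A^\top A}\bigr ) = \sum _{i} \sigma _i(A), \tag{5} \]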

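where \(\sqrt{A^\top A}\) is the positive semidefinite matrix that satisfies \(\bigl (\sqrt{A^\top A}\bigr )^2=A^\top A\). Also, the \(\ell ^0\) and \(\ell ^1\) norms of matrix A are, respectively, defined by

\[ \Vert A\Vert _0 \triangleq \#\{(i,j) : a_{ij}\ne 0\}, \qquad \Vert A\Vert _1 \triangleq \sum _{i,j} |a_{ij}|, \tag{6} \]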

where \(a_{ij}\) is the (i, j)th element of A. By \({\mathcal {S}}_n\), we denote the set of \(n\times n\) real symmetric matrices. For \(A\in {\mathcal {S}}_n\), matrix inequalities \(A\succ 0\), \(A\succeq 0\), \(A\prec 0\), and \(A\preceq 0\), respectively, mean A is positive definite, positive semidefinite, negative definite, and negative semidefinite. For \(A\in {\mathbb {R}}^{n\times m}\) with \(r=\textrm{rank}(A)<n\), \(A^\perp \) is a matrix that satisfies

\[ A^\perp \in {\mathbb {R}}^{(n-r)\times n}, \qquad A^\perp A = 0, \qquad A^\perp (A^\perp )^\top \succ 0. \tag{7} \]

We note that \(A^\perp \) is not uniquely determined for a given A. In fact, if \(A^\perp \) satisfies (7), then for any nonsingular \(T\in {\mathbb {R}}^{(n-r)\times (n-r)}\), \(TA^\perp \) also satisfies (7). The results using \(A^\perp \) shown in this paper are valid for any matrix satisfying (7). For a closed subset \(\Omega \) of a normed space \({\mathcal {S}}\) with norm \(\Vert \cdot \Vert \), the projection of \(X\in {\mathcal {S}}\) onto \(\Omega \) is denoted by \(\Pi _{\Omega }(X)\), that is,

\[ \Pi _{\Omega }(X) \in \mathop {\mathrm {arg\,min}}_{Y\in \Omega } \Vert X-Y\Vert , \tag{8} \]

where, if the minimizer is not unique, \(\Pi _{\Omega }(X)\) denotes any one of the minimizers.

3 Sparse feedback gain design

In this section, we introduce a compressed sensing approach to the design of sparse feedback gains. As mentioned in the previous section, sparse feedback gains are preferable in networked control systems for resource-aware control.

3.1 Problem formulation

Let us consider the following linear time-invariant system:

\[ \dot{x}(t) = Ax(t) + Bu(t), \quad t\ge 0, \tag{9} \]

where \(x(t)\in {\mathbb {R}}^n\), \(u(t)\in {\mathbb {R}}^m\), \(A\in {\mathbb {R}}^{n\times n}\), and \(B\in {\mathbb {R}}^{n\times m}\). We assume (A, B) is stabilizable (or asymptotically controllable [57]). Then, there exists a state feedback gain \(K\in {\mathbb {R}}^{m\times n}\) such that the control

\[ u(t) = Kx(t) \tag{10} \]

asymptotically stabilizes system (9). In other words, the matrix \(A+BK\) is Hurwitz [57, Proposition 5.5.6], which is equivalent to the existence of \(Q \succ 0\) such that the following matrix inequality holds [58, Corollary 3.5.1]:

\[ (A+BK)^\top Q + Q(A+BK) \prec 0. \tag{11} \]

In this inequality, K and Q are both unknown, so the inequality is bilinear in (K, Q) rather than linear. To make it linear, we introduce new variables \(P\triangleq Q^{-1}\) and \(Y\triangleq KP\). Then, from inequality (11), we obtain the following inequalities:

\[ P\succ 0, \qquad AP + PA^\top + BY + Y^\top B^\top \prec 0. \tag{12} \]

These are called linear matrix inequalities (LMIs), which play an important role in linear control systems design [58].
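For completeness, here is a short derivation of (12) from (11). It is our own sketch of the standard congruence argument, with (11) written as the Lyapunov inequality \((A+BK)^\top Q+Q(A+BK)\prec 0\). Since \(P=Q^{-1}\) is symmetric and nonsingular, multiplying (11) by P from both sides preserves negative definiteness. Using \(PQ=QP=I\), \(Y=KP\), and \(Y^\top =PK^\top \), we obtain

```latex
\begin{aligned}
P\bigl[(A+BK)^\top Q + Q(A+BK)\bigr]P
  &= PA^\top + PK^\top B^\top + AP + BKP \\
  &= AP + PA^\top + BY + Y^\top B^\top \prec 0 .
\end{aligned}
```

Conversely, for any (P, Y) satisfying (12), the gain \(K=YP^{-1}\) and \(Q=P^{-1}\) satisfy (11). Hence no stabilizing gain is lost by the change of variables.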

Now, the problem of sparse feedback gain design is formulated as follows:

Problem 1

(Sparse feedback gain) Find Y that has the minimum \(\ell ^0\) norm among matrices satisfying the LMIs in (12).

Suppose \(Y\in {\mathbb {R}}^{m\times n}\) is sparse, that is, \(\Vert Y\Vert _0\ll mn\), where \(\Vert Y\Vert _0\) is the \(\ell ^0\) norm of Y defined in (6). Since \(K=YP^{-1}\), choosing the output as \(y=P^{-1}x\) gives \(u=Kx=YP^{-1}x=Yy\). The controller can therefore be implemented as the sparse output feedback gain \(u=Yy\).

3.2 Solution by sparse optimization

Let us consider how to obtain such a sparse solution. First, we slightly modify the LMIs in (12) as follows:

\[ P\succeq \epsilon I, \qquad AP + PA^\top + BY + Y^\top B^\top \preceq -\epsilon I, \tag{13} \]

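with a small number \(\epsilon >0\). Then the set

\[ \Lambda \triangleq \bigl \{Y\in {\mathbb {R}}^{m\times n} : \text{(13) holds for some } P\in {\mathcal {S}}_n\bigr \} \tag{14} \]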

becomes a closed subset of \({\mathbb {R}}^{m\times n}\). We note that if \(Y\in \Lambda \), then this Y satisfies (12).

Now, Problem 1 is described as the optimization problem

\[ \min _{Y\in \Lambda } \Vert Y\Vert _0. \tag{15} \]

This is a combinatorial optimization problem and, as in compressed sensing, it is hard to solve directly. Therefore, we approximate the \(\ell ^0\) norm of the matrix Y by the \(\ell ^1\) norm \(\Vert Y\Vert _1\), the sum of the absolute values of the elements of Y as defined in (6). In fact, the \(\ell ^1\) norm is the convex envelope [32, 59] (on the set of matrices whose elements are at most one in magnitude), or the convex relaxation [7, Sect. 3.2], of the \(\ell ^0\) norm. By using the \(\ell ^1\) norm, the \(\ell ^0\) optimization in (15) is relaxed to the following convex optimization problem:

\[ \min _{Y\in \Lambda } \Vert Y\Vert _1. \tag{16} \]

The \(\ell ^1\) norm heuristic for sparse feedback gains was proposed in [25, 26].

To numerically solve the convex optimization problem (16), one can adopt the Douglas–Rachford splitting algorithm [60, 61]:

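In this algorithm, \(S_{\gamma }\) is the soft-thresholding function defined by

\[ [S_{\gamma }(V)]_{ij} \triangleq \textrm{sign}\bigl ([V]_{ij}\bigr )\max \bigl \{|[V]_{ij}|-\gamma ,\,0\bigr \}, \tag{18} \]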

where \([S_{\gamma }(V)]_{ij}\) is the (i, j)th entry of \(S_{\gamma }(V)\in {{\mathbb {R}}}^{m\times n}\), and \(\Pi _{\Lambda }\) is the projection onto the set \(\Lambda \). The projection \(\Pi _\Lambda \) onto the closed and convex subset \(\Lambda \) can be obtained by solving another LMI optimization [62, Section 2.1] (see also [29]):

Lemma 1

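For matrix \(Y\in {\mathbb {R}}^{m\times n}\), the projection \(\Pi _\Lambda (Y)\) is the minimizing Z of the following optimization problem:

\[ \min _{Z\in {\mathbb {R}}^{m\times n},\,P\in {\mathcal {S}}_n} \Vert Z-Y\Vert \quad \text{subject to}\quad P\succeq \epsilon I,\ \ AP+PA^\top +BZ+Z^\top B^\top \preceq -\epsilon I. \tag{19} \]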
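The following Python sketch makes the structure of a Douglas–Rachford iteration concrete. It applies the standard Douglas–Rachford splitting for minimizing \(f+g\), where \(f\) is the \(\ell ^1\) norm (whose proximal map is the soft-thresholding \(S_\gamma \) in (18)) and \(g\) is the indicator function of a constraint set (whose proximal map is the projection onto that set). The sketch relies on strong simplifying assumptions:

- Matrices are flattened to vectors.
- Computing \(\Pi _\Lambda \) through (19) needs an SDP solver, so the LMI set \(\Lambda \) is replaced by a hypothetical hyperplane \(\{y : a^\top y = b\}\) whose projection has a closed form.
- The update order and step-size convention of (17) may differ from this sketch.

The function names are our own.

```python
import math


def soft_threshold(v, gamma):
    """Entrywise soft thresholding: sign(v_i) * max(|v_i| - gamma, 0)."""
    return [math.copysign(max(abs(vi) - gamma, 0.0), vi) for vi in v]


def project_hyperplane(v, a, b):
    """Euclidean projection of v onto the (hypothetical) set {y : a . y = b}."""
    aa = sum(ai * ai for ai in a)
    r = (sum(ai * vi for ai, vi in zip(a, v)) - b) / aa
    return [vi - r * ai for vi, ai in zip(v, a)]


def douglas_rachford_l1(a, b, gamma=1.0, iters=2000):
    """Minimize ||y||_1 subject to a . y = b by Douglas-Rachford splitting."""
    z = [0.0] * len(a)
    for _ in range(iters):
        y = soft_threshold(z, gamma)                        # prox of gamma * ||.||_1
        reflected = [2.0 * yi - zi for yi, zi in zip(y, z)]
        w = project_hyperplane(reflected, a, b)             # prox of the indicator
        z = [zi + wi - yi for zi, wi, yi in zip(z, w, y)]   # update the auxiliary variable
    return soft_threshold(z, gamma)


print(douglas_rachford_l1([1.0, 2.0, 3.0], 3.0))  # close to [0.0, 0.0, 1.0]
```

For \(a=(1,2,3)\) and \(b=3\), the \(\ell ^1\) minimizer on the hyperplane is the 1-sparse point (0, 0, 1), and the iterate approaches it. This mirrors how the relaxation (16) tends to favor sparse solutions.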

Other formulations of sparse feedback gain design have also been proposed. First, the iterative greedy LMI [29] is an alternating projection method to find an s-sparse matrix Y that satisfies \(\Vert Y\Vert _0\le s\) in the LMI subset \(\Lambda \) for given \(s\in {\mathbb {N}}\). The projection of matrix Y onto the subset of s-sparse matrices is given by

\[ \mathop {\mathrm {arg\,min}}_{Z\in {\mathbb {R}}^{m\times n}:\ \Vert Z\Vert _0\le s} \Vert Y-Z\Vert . \tag{20} \]

This projection is known to be given by the s-sparse operator \({\mathcal {H}}_s(Y)\), which sets all but the s largest (in magnitude) elements of Y to 0 [61, 63]. We note that the optimization in (20) may in general have multiple solutions (e.g., when several elements of Y have the same magnitude), so the projection is not uniquely determined. In this case, we choose one of the solutions at random. Applying the projection \(\Pi _\Lambda \) computed by (19) and the s-sparse operator \({\mathcal {H}}_s\) alternately as

with an initial guess Y[0], we can find an s-sparse matrix in \(\Lambda \).
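Below is a minimal Python sketch of this iteration, under the same simplifying assumption as the Douglas–Rachford sketch: \(\Lambda \) is replaced by a hypothetical hyperplane so that its projection has a closed form, and matrices are flattened to vectors. The function `hard_threshold` implements \({\mathcal {H}}_s\) and breaks ties at random, as described above. The order of the two projections inside the loop is our choice and may differ from (21). All function names are hypothetical.

```python
import random


def hard_threshold(y, s, rng):
    """H_s: keep the s largest-magnitude entries of y and set the rest to 0.

    Ties in magnitude are broken at random, since the projection is then not unique.
    """
    idx = list(range(len(y)))
    rng.shuffle(idx)                                   # random order among equal magnitudes
    idx.sort(key=lambda i: abs(y[i]), reverse=True)    # stable sort keeps that random order
    keep = set(idx[:s])
    return [yi if i in keep else 0.0 for i, yi in enumerate(y)]


def project_hyperplane(v, a, b):
    """Euclidean projection of v onto the (hypothetical) set {y : a . y = b}."""
    aa = sum(ai * ai for ai in a)
    r = (sum(ai * vi for ai, vi in zip(a, v)) - b) / aa
    return [vi - r * ai for vi, ai in zip(v, a)]


def alternating_projection(a, b, s, iters=200, seed=0):
    """Alternate the projection onto {a . y = b} and H_s, starting from y = 0."""
    rng = random.Random(seed)
    y = [0.0] * len(a)
    for _ in range(iters):
        y = hard_threshold(project_hyperplane(y, a, b), s, rng)
    return y


print(alternating_projection([1.0, 2.0, 3.0], 3.0, s=1))  # close to [0.0, 0.0, 1.0]
```

Every iterate is s-sparse by construction. In this toy case, the iterates approach (0, 0, 1), a 1-sparse point of the hyperplane. Because the set of s-sparse matrices is non-convex, alternating projection is in general a heuristic and need not converge to a point of \(\Lambda \).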

Next, the paper [26] proposed designing a row-sparse feedback gain, which can reduce the number of control channels in a multiple-input system. A row-sparse gain is obtained by minimizing the row norm:

instead of the \(\ell ^1\) norm in (16). Finally, [25] has proposed sparse feedback gain design for \(H^2\) control by using the \(\ell ^1\) norm heuristic approach with LMIs.

3.3 Numerical example

Here, we design a sparse feedback gain for the linear plant (9) with

This model, named AC2, is taken from the benchmark problem set in COMPL\(_e\)ib library [64].

First, we solve the \(\ell ^1\) norm optimization in (16). We take \(\epsilon = 10^{-6}\) for (14). Using YALMIP [65] and SeDuMi [66] on MATLAB, we obtain the following solution:

This matrix is sparse, and hence yields a sparse feedback gain.

Next, we solve the \(\ell ^0\) optimization (15) by alternating projection [29] given in (21) with sparsity \(s=1\). The solution is obtained as

We confirm that \(\Vert Y_{\textrm{alt}}\Vert _0 = 1\), so it is sparser than \(Y_{\ell ^1}\), although the alternating projection method needs more iterations than the \(\ell ^1\) optimization. The reader can reproduce these computations with the MATLAB program available at [67].

4 Reduced-order control design

The problem of reduced-order control is to find a low-order controller, that is, a controller whose order is much lower than that of the controlled plant. This problem is known to be NP-hard [68] because of the rank condition introduced below. We can therefore adopt the idea of compressed sensing to solve it approximately.

4.1 Problem formulation

Let us consider the following linear time-invariant system:

\[ \dot{x}(t) = Ax(t) + Bu(t), \qquad y(t) = Cx(t), \tag{26} \]

where \(x(t)\in {\mathbb {R}}^n\), \(u(t)\in {\mathbb {R}}^m\), \(y(t)\in {\mathbb {R}}^p\), \(A\in {\mathbb {R}}^{n\times n}\), \(B\in {\mathbb {R}}^{n\times m}\), and \(C\in {\mathbb {R}}^{p\times n}\).

For this system, we consider an output-feedback controller \(u=Ky\) of order \(n_c\), which is strictly less than n. Such a controller is called a reduced-order controller. Then, the reduced-order controller design is described as the following feasibility problem [69]:

Problem 2

(Reduced-order controller) Find symmetric matrices \(X, Y\in {\mathcal {S}}_n\) such that the rank condition

and the LMIs

hold for some \(\epsilon >0\).

4.2 Solution by alternating projection

To solve this problem, we introduce an algorithm that uses nuclear norm minimization [35, 59] to approximately solve the rank-constrained LMIs in Problem 2. The idea is to approximate the matrix rank by the nuclear norm defined in (5), the sum of the singular values of the matrix. The nuclear norm is known to be the convex envelope of the rank on the set of matrices whose maximum singular value is at most one [32, 59].

Using the nuclear norm, Problem 2 is reduced to the following problem:

where F(X, Y) is defined such that the inequality \(F(X,Y)\preceq 0\) is equivalent to the LMIs in (28). This is a convex optimization problem and can be solved efficiently by standard semidefinite programming solvers.

Another approach to Problem 2 is alternating projection. Let us consider the following two subsets of \({\mathcal {S}}_n^2\):

where \(r=n+n_c\). The alternating projection method alternately applies the two projections \(\Pi _{\Omega _r}\) and \(\Pi _{\Lambda }\) onto \(\Omega _r\) and \(\Lambda \), respectively. That is, we iteratively compute

with an initial guess \((X[0],Y[0])\in {\mathcal {S}}_n^2\). The projection \(\Pi _{\Omega _r}(X,Y)\) for given \((X,Y) \in {\mathcal {S}}_n^2\) can be computed by the alternating direction method of multipliers (ADMM); see Sect. 4.3 for details. The projection \(\Pi _{\Lambda }\) can be computed by an LMI optimization analogous to Lemma 1.

It is reported in [70] that the alternating projection method can solve some reduced-order control problems that the nuclear norm heuristic cannot (see Sect. 4.4). We also note that many control problems, such as the \(H^2\) and \(H^\infty \) control problems, can be described as LMIs with a rank condition, and they can therefore also be tackled by the method introduced in this section.

4.3 ADMM algorithm for projection \(\Pi _{\Omega _r}\)

Here, we show the ADMM algorithm for the projection \(\Pi _{\Omega _r}\) defined in (30). For given \((X,Y)\in {\mathcal {S}}_n^2\), the projection \(\Pi _{\Omega _r}(X,Y)\) can be written by definition as

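We note that the subset \(\Omega _r\) is closed but non-convex, and hence there may exist multiple minimizers for the right-hand side of (33). To solve the minimization problem in (33), we consider the indicator function \({\mathcal {I}}_r\) defined by

\[ {\mathcal {I}}_r(Z) \triangleq \begin{cases} 0, & \textrm{rank}(Z)\le r,\\ +\infty , & \text{otherwise}. \end{cases} \tag{34} \]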

Then, the projection \(\Pi _{{\mathcal {C}}_r}(Z)\) onto the set of matrices of rank at most r,

\[ {\mathcal {C}}_r \triangleq \{Z : \textrm{rank}(Z)\le r\}, \tag{35} \]

is easily computed via the singular value decomposition \(Z=U\Sigma V^\top \). Let \(\Sigma _r\) be the matrix obtained from \(\Sigma \) by setting all but the r largest diagonal entries (singular values) to 0. Then, \(\Pi _{{\mathcal {C}}_r}(Z)\) is given by

\[ \Pi _{{\mathcal {C}}_r}(Z) = U\Sigma _r V^\top . \tag{36} \]
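The truncation described above can be sketched with the Python standard library alone if we additionally assume that Z is symmetric. In that case, the singular values of Z are the absolute values of its eigenvalues, and \(U\Sigma _r V^\top \) equals the sum of \(\lambda _k v_k v_k^\top \) over the r eigenpairs with the largest \(|\lambda _k|\). The sketch uses a cyclic Jacobi eigenvalue routine as our substitute for a library SVD; the names `jacobi_eig` and `rank_projection` are hypothetical. It illustrates only the projection \(\Pi _{{\mathcal {C}}_r}\), not the ADMM iteration that follows.

```python
import math


def jacobi_eig(S, tol=1e-12, max_sweeps=100):
    """Eigendecomposition of a symmetric matrix S (list of lists) by cyclic Jacobi rotations.

    Returns (eigenvalues, V), where column k of V is the eigenvector for eigenvalue k.
    """
    n = len(S)
    A = [list(map(float, row)) for row in S]
    V = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol ** 2:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p][q] == 0.0:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q])
                t = (1.0 if theta >= 0 else -1.0) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # columns p, q: A <- A J and V <- V J
                    akp, akq = A[k][p], A[k][q]
                    A[k][p], A[k][q] = c * akp - s * akq, s * akp + c * akq
                    vkp, vkq = V[k][p], V[k][q]
                    V[k][p], V[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
                for k in range(n):  # rows p, q: A <- J^T A
                    apk, aqk = A[p][k], A[q][k]
                    A[p][k], A[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [A[i][i] for i in range(n)], V


def rank_projection(Z, r):
    """Best Frobenius-norm approximation of symmetric Z with rank at most r.

    Keeps the r eigenpairs with the largest |eigenvalue| (= largest singular values).
    """
    lam, V = jacobi_eig(Z)
    n = len(Z)
    keep = sorted(range(n), key=lambda k: abs(lam[k]), reverse=True)[:r]
    return [[sum(lam[k] * V[i][k] * V[j][k] for k in keep) for j in range(n)]
            for i in range(n)]


print(rank_projection([[2.0, 1.0], [1.0, 2.0]], 1))  # approximately [[1.5, 1.5], [1.5, 1.5]]
```

The eigenvalues of the example matrix are 3 and 1, so the rank-1 projection keeps only the eigenpair for 3. For a non-symmetric Z, a genuine SVD routine would be needed instead.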

Then, the minimization problem in (33) is equivalently described as

This optimization problem can be efficiently solved by the ADMM algorithm [71]. The iterative algorithm is given by

where \(\rho >0\) is the step size, and \(M_{11}[k],M_{22}[k]\in {\mathbb {R}}^{n\times n}\) are defined as

A detailed explanation of this algorithm is found in [70].

4.4 Numerical example

We first consider the linear plant (26) with A and B given in (23) and

This is model AC2 from the COMPL\(_e\)ib library [64]. For this system, we compute a static controller with \(n_c=0\) that stabilizes the plant. We use YALMIP [65] and SeDuMi [66] on MATLAB to solve the nuclear norm minimization (29). The obtained static controller is

with which the poles of the closed-loop system (i.e., the eigenvalues of \(A+BKC\)) are

Since all of these poles lie in the open left half-plane, this K stabilizes the plant. We note that the alternating projection (32), with zero matrices as the initial guess, also gives almost the same K as (44).

Next, let us consider another linear time-invariant plant with

This model is NN12 from the COMPL\(_e\)ib library [64].

For this plant, the nuclear norm minimization fails to find a stabilizing static controller. In contrast, the alternating projection (32) successfully yields the following static controller:

With this static controller, the closed-loop poles become

and hence K stabilizes the plant. The MATLAB program to run the numerical examples above is available at [67].

5 Maximum hands-off control

In this section, we introduce a compressed sensing approach to continuous-time optimal control. For this, we first define the \(L^0\) norm of a Lebesgue measurable function \(u: [0,T]\rightarrow {\mathbb {R}}\) with fixed \(T>0\):

\[ \Vert u\Vert _{L^0} \triangleq \mu _L\bigl (\textrm{supp}(u)\bigr ), \tag{48} \]

where \(\mu _L\) is the Lebesgue measure, and \(\textrm{supp}(u)\) is the support set of function u, that is,

\[ \textrm{supp}(u) \triangleq \{t\in [0,T] : u(t)\ne 0\}. \tag{49} \]

We note that the definition of \(L^0\) norm in (48) is consistent with that of \(\ell ^0\) norm defined in (2). The \(L^0\) norm can be understood as the time length over which the signal takes nonzero values. Then, if a continuous-time signal \(\{u(t): t\in [0,T]\}\) has the \(L^0\) norm \(\Vert u\Vert _{L^0}\) much smaller than the horizon length T, then u is said to be sparse.

Such sparse control is important in practical applications in which energy consumption must be taken into account. When the control is sparse, the actuator can be stopped while the control value is zero, which can dramatically reduce energy consumption. To maximally enhance energy conservation by sparse control, we adopt the maximum hands-off control described below.

5.1 Maximum hands-off control problem

Let us consider the following single-input linear time-invariant system:

\[ \dot{x}(t) = Ax(t) + bu(t), \quad t\in [0,T], \tag{50} \]

where \(x(t)\in {\mathbb {R}}^n\) is the state, \(u(t)\in {\mathbb {R}}\) is the control, and \(A\in {\mathbb {R}}^{n\times n}\) and \(b\in {\mathbb {R}}^{n\times 1}\) are state-space matrices. For this linear system, we consider the problem of maximum hands-off control described as follows.

Problem 3

(Maximum hands-off control) Fix terminal time \(T>0\). Find a control \(\{u(t): t\in [0,T]\}\) that minimizes \(\Vert u\Vert _{L^0}\) subject to the control magnitude constraint\(^{1}\)

\[ \Vert u\Vert _{L^\infty } \triangleq \mathop {\mathrm {ess\,sup}}_{t\in [0,T]} |u(t)| \le 1 \tag{51} \]

and steers the state x(t) in (50) from \(x(0)=\xi \) to \(x(T) = 0\).

5.2 Existence

First, we consider the existence of maximum hands-off control. For this, we define the T-controllable set [72]:

Definition 1

(T-controllable set) Let \(T>0\). The set of initial states of (50) that can be transferred to the origin by some control \(\{u(t): t\in [0,T], \Vert u\Vert _{L^\infty }\le 1\}\) is called the T-controllable set. We denote the T-controllable set by \({\mathcal {R}}(T)\).

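The T-controllable set \({\mathcal {R}}(T)\) is represented as

\[ {\mathcal {R}}(T) = \Bigl \{\int _0^T e^{-As}bu(s)\,ds : \Vert u\Vert _{L^\infty }\le 1\Bigr \}. \tag{52} \]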

From the definition of \({\mathcal {R}}(T)\), if \(\xi \in {\mathcal {R}}(T)\), then there exists a control \(\{u(t): t\in [0,T], \Vert u\Vert _{L^\infty }\le 1\}\) that transfers x(t) from \(\xi \) to the origin in time T. We call this control a feasible control, and denote by \({\mathcal {U}}(T,\xi )\) the set of all feasible controls. The feasible set is also represented as

\[ {\mathcal {U}}(T,\xi ) = \Bigl \{u : \Vert u\Vert _{L^\infty }\le 1,\ \int _0^T e^{-As}bu(s)\,ds = -\xi \Bigr \}. \tag{53} \]

It is easily shown that \(\xi \in {\mathcal {R}}(T)\) if and only if there exists \(u\in {\mathcal {U}}(T,\xi )\). In other words, if \(\xi \not \in {\mathcal {R}}(T)\), then there is no feasible control, and \({\mathcal {U}}(T,\xi )\) is empty. In this case, if we take sufficiently large \({\tilde{T}}>T\), then \({\mathcal {U}}({\tilde{T}},\xi )\) may be non-empty. We therefore consider the minimum time among all T such that \({\mathcal {U}}(T,\xi )\) is non-empty, which is defined by

\[ T^*(\xi ) \triangleq \inf \bigl \{T\ge 0 : {\mathcal {U}}(T,\xi )\ne \emptyset \bigr \}. \tag{54} \]

For the minimum time, the following theorem holds [48, Section 6-8][73, Section III.19]:

Theorem 1

Suppose that \(T^*(\xi )<\infty \). Then, there exists a minimum-time control \(u^*\in {\mathcal {U}}(T^*(\xi ),\xi )\). Moreover, for any \(T>T^*(\xi )\), the set \({\mathcal {U}}(T,\xi )\) is non-empty.

From this theorem, we can show the existence of maximum hands-off control [74]:

Theorem 2

Suppose that the initial state \(\xi \) satisfies \(T^*(\xi )<\infty \), and the terminal time T is strictly greater than \(T^*(\xi )\). Then, there exists at least one maximum hands-off control (i.e., an optimal solution of Problem 3).
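As a simple illustration of Definition 1, (54), and Theorem 2, consider the following example of our own (not taken from the cited works): the hypothetical scalar integrator \(\dot{x}(t)=u(t)\), that is, \(n=1\), \(A=0\), \(b=1\). Here (52) gives \({\mathcal {R}}(T)=[-T,T]\), and hence \(T^*(\xi )=|\xi |\). Moreover, every feasible u satisfies

```latex
|\xi| = \Bigl|\int_0^T u(t)\,dt\Bigr|
  \le \int_{\mathrm{supp}(u)} |u(t)|\,dt
  \le \mu_L\bigl(\mathrm{supp}(u)\bigr) = \Vert u\Vert_{L^0} .
```

For \(\xi \ne 0\) and \(T>|\xi |\), consider the control with \(u(t)=-\textrm{sign}(\xi )\) on \([0,|\xi |]\) and \(u(t)=0\) otherwise. This control is feasible and attains the lower bound \(\Vert u\Vert _{L^0}=|\xi |\), so it is a maximum hands-off control. Any other placement of a support of length \(|\xi |\) works as well, so the maximum hands-off control need not be unique. Note that this control takes only the values \(\pm 1\) and 0.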

5.3 Equivalence theorem

The maximum hands-off control problem (Problem 3) is hard to solve. Hence, we borrow the idea of the \(\ell ^1\)-norm heuristic from compressed sensing. Namely, we minimize the \(L^1\) norm of u:

\[ \Vert u\Vert _{L^1} \triangleq \int _0^T |u(t)|\,dt. \tag{55} \]

Minimizing the \(L^1\) norm instead of the \(L^0\) norm in Problem 3 is known as the minimum fuel control [48]:

Problem 4

(Minimum fuel control) Fix terminal time \(T>0\). Find a control \(\{u(t): t\in [0,T]\}\) that minimizes \(\Vert u\Vert _{L^1}\) in (55) such that it satisfies the control magnitude constraint (51), and steers the state x(t) in (50) from \(x(0)=\xi \) to \(x(T) = 0\).

The minimum fuel control is a classical and well-studied control problem. This problem can be easily solved by, e.g., time discretization [74], by which the problem is reduced to a standard convex optimization problem with the \(\ell ^1\) norm.
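One common way to carry out this reduction is to restrict u to be piecewise constant on a uniform grid, \(u(t)=u_k\) for \(t\in [kh,(k+1)h)\) with \(h=T/N\). This is a sketch under our own assumptions; the scheme in [74] may differ in its details. Integrating (50) exactly over each interval and stacking \(\mathbf{u}=(u_0,\dots ,u_{N-1})^\top \) gives

```latex
x(T) = e^{AT}\xi + \sum_{k=0}^{N-1} e^{A(N-k-1)h}\, b_d\, u_k = e^{AT}\xi + \Phi\mathbf{u},
\qquad b_d \triangleq \int_0^h e^{As} b\,ds,
\qquad \Phi \triangleq \bigl[\, e^{A(N-1)h} b_d,\ \dots,\ e^{Ah} b_d,\ b_d \,\bigr] \in \mathbb{R}^{n\times N}.
```

Since \(\Vert u\Vert _{L^1}=h\Vert \mathbf{u}\Vert _1\), Problem 4 becomes the finite-dimensional convex problem of minimizing \(\Vert \mathbf{u}\Vert _1\) subject to \(\Phi \mathbf{u}=-e^{AT}\xi \) and \(\Vert \mathbf{u}\Vert _\infty \le 1\). After the usual splitting of \(\mathbf{u}\) into positive and negative parts, this is a linear program.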

A natural question is when these two optimal control problems are equivalent. An equivalence theorem has been obtained in [38, 74]:

Theorem 3

Suppose that T and \(\xi \) are chosen such that \(T^*(\xi )<\infty \) and \(T>T^*(\xi )\). Suppose also that there exists an \(L^1\) optimal control \(u^*_1(t)\) (i.e., a solution of Problem 4) that takes \(\pm 1\) or 0 for almost all \(t\in [0,T]\). Then, \(u^*_1(t)\) is also \(L^0\) optimal (i.e., a solution of Problem 3).
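The key step behind Theorem 3 can be seen in one line; this is our sketch, and [38, 74] give the rigorous statements. Under the constraint (51), \(|u(t)|\le 1\) for almost all t, so every feasible u satisfies

```latex
\Vert u\Vert_{L^1} = \int_{\mathrm{supp}(u)} |u(t)|\,dt \;\le\; \mu_L\bigl(\mathrm{supp}(u)\bigr) = \Vert u\Vert_{L^0},
```

with equality if and only if \(|u(t)|=1\) for almost all \(t\in \textrm{supp}(u)\). Hence, if \(u_1^*\) is \(L^1\) optimal and takes only the values \(\pm 1\) and 0, then for any \(u\in {\mathcal {U}}(T,\xi )\) we have \(\Vert u_1^*\Vert _{L^0}=\Vert u_1^*\Vert _{L^1}\le \Vert u\Vert _{L^1}\le \Vert u\Vert _{L^0}\). This chain is exactly the \(L^0\) optimality of \(u_1^*\).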

A control that takes \(\pm 1\) or 0 for almost all t is called a bang-off-bang control. Theorem 3 suggests first solving Problem 4 and then inspecting the solution: if it is bang-off-bang, then it is also a maximum hands-off control. The following theorem gives a sufficient condition for the \(L^1\) optimal control to be bang-off-bang:
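In a time-discretized computation, this check can be carried out on the computed samples. The Python sketch below makes two assumptions of our own: the control is piecewise constant, with samples `u[k]` on intervals of length `h`, and a numerical tolerance `tol` decides whether a sample equals \(-1\), 0, or \(+1\). The function names are hypothetical.

```python
def is_bang_off_bang(u, tol=1e-6):
    """True if every sample lies within tol of -1, 0, or +1."""
    return all(min(abs(uk - 1.0), abs(uk), abs(uk + 1.0)) <= tol for uk in u)


def l0_norm_pc(u, h, tol=1e-6):
    """L^0 norm of a piecewise-constant control: h times the number of samples with |u_k| > tol."""
    return h * sum(1 for uk in u if abs(uk) > tol)


def l1_norm_pc(u, h):
    """L^1 norm of a piecewise-constant control: h * sum_k |u_k|."""
    return h * sum(abs(uk) for uk in u)


h = 0.1
u_bob = [1.0, 1.0, 0.0, 0.0, -1.0]   # bang-off-bang samples
u_soft = [0.5, 0.5, 0.0, 0.0, 0.0]   # not bang-off-bang
for samples in (u_bob, u_soft):
    print(is_bang_off_bang(samples), l1_norm_pc(samples, h), l0_norm_pc(samples, h))
```

For the bang-off-bang samples, the \(L^1\) and \(L^0\) values coincide (0.3 each, up to rounding). For the other samples, the \(L^1\) value (0.1) is strictly smaller than the \(L^0\) value (0.2). Both results are in line with \(\Vert u\Vert _{L^1}\le \Vert u\Vert _{L^0}\) whenever \(|u|\le 1\).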

Theorem 4

Suppose that T and \(\xi \) are chosen such that \(T^*(\xi )<\infty \) and \(T>T^*(\xi )\). Suppose also that A is non-singular and (A, b) is controllable. Then, the \(L^1\) optimal control is bang-off-bang, and hence, it is also \(L^0\) optimal.

The conditions of Theorem 4 (non-singularity of A and controllability of (A, b)) can be easily checked before solving Problem 4.
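Here is a minimal Python sketch of this check. It uses exact rational arithmetic from the standard `fractions` module, so the rank tests are not affected by rounding; for floating-point data, one would instead use a rank tolerance. The sketch verifies only the two hypotheses on (A, b); the conditions on T and \(\xi \) must be checked separately. The helper names are our own.

```python
from fractions import Fraction


def rank(M):
    """Rank of a matrix (list of lists) by exact Gaussian elimination over the rationals."""
    R = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(R), len(R[0])
    rk = 0
    for c in range(cols):
        piv = next((i for i in range(rk, rows) if R[i][c] != 0), None)
        if piv is None:
            continue
        R[rk], R[piv] = R[piv], R[rk]
        for i in range(rows):
            if i != rk and R[i][c] != 0:
                f = R[i][c] / R[rk][c]
                R[i] = [x - f * y for x, y in zip(R[i], R[rk])]
        rk += 1
        if rk == rows:
            break
    return rk


def matvec(A, v):
    """Matrix-vector product A v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def theorem4_applies(A, b):
    """True if A is non-singular and (A, b) is controllable (the hypotheses of Theorem 4 on A, b)."""
    n = len(A)
    cols, v = [], list(b)
    for _ in range(n):              # columns b, Ab, ..., A^{n-1} b
        cols.append(v)
        v = matvec(A, v)
    ctrb = [[cols[j][i] for j in range(n)] for i in range(n)]
    return rank(A) == n and rank(ctrb) == n


print(theorem4_applies([[0, 1], [-1, 0]], [0, 1]))  # prints True
print(theorem4_applies([[0, 1], [0, 0]], [0, 1]))   # prints False (A is singular)
```

The second example is controllable, but its A is singular, so Theorem 4 does not apply. In that case, as in the numerical example below, one solves Problem 4 and then checks whether the solution is bang-off-bang.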

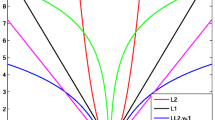

State variables \(x_i(t)\), \(i=1,2,3,4\) by the \(L^1\) optimal control in Fig. 1

5.4 Numerical example

Let us consider the linear time-invariant system (50) with

We take \(\xi = [1,1,1,1]^\top \) and \(T=10\). For this system, we compute the maximum hands-off control. Since A is singular, we cannot use Theorem 4. Therefore, we first solve Problem 4, and check whether the solution is bang-off-bang. By using time discretization and the alternating direction method of multipliers (ADMM) [61, Chapter 9], we obtain the \(L^1\) optimal control shown in Fig. 1.

We can see that the \(L^1\) optimal control takes only \(\pm \,1\) and 0, and hence, it is bang-off-bang. From Theorem 3, this is also maximum hands-off control, a solution of Problem 3. By this control, the state \(x(t)=[x_1(t),x_2(t),x_3(t),x_4(t)]^\top \) is transferred to the origin, as shown in Fig. 2.

6 Conclusion

In this survey paper, we have introduced compressed sensing approaches to three control problems, namely sparse feedback gain design, reduced-order control, and maximum hands-off control. Beyond these problems, compressed sensing has also been applied to other important control problems such as minimum actuator placement [18,19,20], discrete-valued control [75, 76], and system identification [77, 78]. We hope that readers will develop further compressed sensing approaches to systems and control.

7 Supplementary information

The MATLAB programs for the numerical examples in this paper are available at https://github.com/nagahara-masaaki/MCSS.

Notes

1 The essential supremum in (51) is defined with the Lebesgue measure \(\mu _L\) on \({\mathbb {R}}\).

References

Sontag E, Yamamoto Y (1989) On the existence of approximately coprime factorizations for retarded systems. Syst Control Lett 13(1):53–58

Donahue MJ, Gurvits L, Darken C, Sontag E (1997) Rates of convex approximation in non-Hilbert spaces. Constr Approx 13:187–220

Elad M (2010) Sparse and Redundant Representations. Springer, New York

Eldar YC, Kutyniok G (2012) Compressed sensing: theory and applications. Cambridge University Press, Cambridge

Rish I, Grabarnik G (2014) Sparse modeling: theory, algorithms, and applications. CRC Press, Boca Raton

Temlyakov V (2015) Sparse Approximation with Bases. Birkhäuser, Basel

Vidyasagar M (2019) An introduction to compressed sensing. SIAM, Philadelphia

Cox RV, De Campos Neto SF, Lamblin C, Sherif MH (2009) ITU-T coders for wideband, superwideband, and fullband speech communication. IEEE Commun Mag 47(10):106–109

Claerbout JF, Muir F (1973) Robust modeling with erratic data. Geophysics 38(5):826–844

Santosa F, Symes WW (1986) Linear inversion of band-limited reflection seismograms. SIAM J Sci Stat Comput 7(4):1307–1330

Taylor HL, Banks SC, McCoy JF (1979) Deconvolution with the \(\ell _1\) norm. Geophysics 44(1):39–52

Nagahara M, Quevedo DE (2011) Sparse representations for packetized predictive networked control. In: IFAC 18th World Congress, pp. 84–89

Gallieri M, Maciejowski JM (2012) \(\ell _{\rm asso}\) MPC: Smart regulation of over-actuated systems. In: Proc. Amer. Contr. Conf., pp. 1217–1222

Nagahara M, Matsuda T, Hayashi K (2012) Compressive sampling for remote control systems. IEICE Trans Fundamentals E95-A(4):713–722

Pakazad SK, Ohlsson H, Ljung L (2013) Sparse control using sum-of-norms regularized model predictive control. In: 52nd IEEE Conference on Decision and Control, pp. 5758–5763

Nagahara M, Quevedo DE, Østergaard J (2014) Sparse packetized predictive control for networked control over erasure channels. IEEE Trans Autom Control 59(7):1899–1905

Nagahara M, Østergaard J, Quevedo DE (2016) Discrete-time hands-off control by sparse optimization. EURASIP J Adv Signal Process 2016(1):1–8

Olshevsky A (2014) Minimal controllability problems. IEEE Trans Control Netw Syst 1(3):249–258

Pasqualetti F, Zampieri S, Bullo F (2014) Controllability metrics, limitations and algorithms for complex networks. IEEE Trans Control Netw Syst 1(1):40–52

Tzoumas V, Rahimian MA, Pappas GJ, Jadbabaie A (2016) Minimal actuator placement with bounds on control effort. IEEE Trans Control Netw Syst 3(1):67–78

Pequito S, Kar S, Aguiar AP (2016) A framework for structural input/output and control configuration selection of large-scale systems. IEEE Trans Autom Control 61(2):303–318

Ikeda T, Kashima K (2018) Sparsity-constrained controllability maximization with application to time-varying control node selection. IEEE Control Syst Lett 2:321–326

Jadbabaie A, Olshevsky A, Pappas GJ, Tzoumas V (2019) Minimal reachability is hard to approximate. IEEE Trans Autom Control 64(2):783–789

Lin F, Fardad M, Jovanović MR (2011) Augmented Lagrangian approach to design of structured optimal state feedback gains. IEEE Trans Autom Control 56(12):2923–2929

Lin F, Fardad M, Jovanović MR (2013) Design of optimal sparse feedback gains via the alternating direction method of multipliers. IEEE Trans Autom Control 58(9):2426–2431

Polyak B, Khlebnikov M, Shcherbakov P (2013) An LMI approach to structured sparse feedback design in linear control systems. In: 2013 European Control Conference (ECC), pp. 833–838

Münz U, Pfister M, Wolfrum P (2014) Sensor and actuator placement for linear systems based on \(H_{2}\) and \(H_{\infty }\) optimization. IEEE Trans Autom Control 59(11):2984–2989

Dhingra NK, Jovanović MR, Luo Z (2014) An ADMM algorithm for optimal sensor and actuator selection. In: 53rd IEEE Conference on Decision and Control, pp. 4039–4044

Nagahara M, Ogura M, Yamamoto Y (2022) Iterative greedy LMI for sparse control. IEEE Control Syst Lett 6:986–991

Jovanović MR, Dhingra NK (2016) Controller architectures: tradeoffs between performance and structure. Eur J Control 30:76–91. 15th European Control Conference, ECC16

Grigoriadis KM, Skelton RE (1996) Low-order control design for LMI problems using alternating projection methods. Automatica 32(8):1117–1125

Fazel M, Hindi H, Boyd S (2004) Rank minimization and applications in system theory. In: Proceedings of the 2004 American Control Conference, vol. 4, pp. 3273–3278

Amirifar R, Sadati N (2006) Low-order \(H_\infty \) controller design for an active suspension system via LMIs. IEEE Trans Ind Electron 53(2)

Recht B, Xu W, Hassibi B (2008) Necessary and sufficient conditions for success of the nuclear norm heuristic for rank minimization. In: 2008 47th IEEE Conference on Decision and Control, pp. 3065–3070

Recht B, Fazel M, Parrilo PA (2010) Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev 52(3):451–501

Doelman R, Verhaegen M (2017) Sequential convex relaxation for robust static output feedback structured control. In: IFAC-PapersOnLine, pp. 15518–15523

Nagahara M, Quevedo DE, Nešić D (2013) Maximum hands-off control and \(L^1\) optimality. In: 52nd IEEE Conference on Decision and Control (CDC), pp. 3825–3830

Nagahara M, Quevedo DE, Nešić D (2016) Maximum hands-off control: a paradigm of control effort minimization. IEEE Trans Autom Control 61(3):735–747

Dunham B (1974) Automatic on/off switching gives 10-percent gas saving. Pop Sci 205(4):170

Kirchhoff R, Thele M, Finkbohner M, Rigley P, Settgast W (2010) Start-stop system distributed in-car intelligence. ATZextra worldwide 15(11):52–55

Chan C (2007) The state of the art of electric, hybrid, and fuel cell vehicles. Proc IEEE 95(4):704–718

Shakouri P, Ordys A, Darnell P, Kavanagh P (2013) Fuel efficiency by coasting in the vehicle. Int J Vehicular Technol 2013:14

Nalbach M, Korner A, Kahnt S (2015) Active engine-off coasting using 48V: Economic reduction of CO\(_2\) emissions. In: 17th International Congress ELIV, pp. 41–51

Khmelnitsky E (2000) On an optimal control problem of train operation. IEEE Trans Autom Control 45(7):1257–1266

Chang C, Sim S (1997) Optimising train movements through coast control using genetic algorithms. IEE Proceed-Electric Power Appl 144(1):65–73

Vossen G, Maurer H (2006) On \({L}^1\)-minimization in optimal control and applications to robotics. Optimal Control Appl Methods 27(6):301–321

Nagahara M, Quevedo DE, Nešić D (2014) Hands-off control as green control. In: SICE Control Division Multi Symposium 2014. arXiv:1407.2377

Athans M, Falb PL (2007) Optimal Control. Dover Publications, New York. An unabridged republication of the work published by McGraw-Hill in 1966

Ikeda T, Nagahara M (2016) Value function in maximum hands-off control for linear systems. Automatica 64:190–195

Chatterjee D, Nagahara M, Quevedo DE, Rao KSM (2016) Characterization of maximum hands-off control. Syst Control Lett 94:31–36

Polyak B, Tremba A (2020) Sparse solutions of optimal control via Newton method for under-determined systems. J Glob Optim 76:613–623

Hamada K, Maruta I, Fujimoto K, Hamamoto K (2021) Locally deforming continuation method based on a shooting method for a class of optimal control problems. SICE J Control, Measurement, and Syst Integrat 14(2):80–89

Kumar Y, Sukumar S, Chatterjee D, Nagahara M (2022) Sparse optimal control problems with intermediate constraints: Necessary conditions. Optimal Control Appl Methods 43(2):369–385

Iwai D, Izawa H, Kashima K, Ueda T, Sato K (2019) Speeded-up focus control of electrically tunable lens by sparse optimization. Sci Rep 9:12365

Leomanni M, Bianchini G, Garulli A, Giannitrapani A, Quartullo R (2019) Sum-of-norms model predictive control for spacecraft maneuvering. IEEE Control Syst Lett 3(3):649–654

Shiraishi Y, Nagahara M, Saelens D (2021) Optimal control of TABS by sparse MPC. Building Simulation 2021 Conference

Sontag ED (1998) Mathematical Control Theory, 2nd edn. Springer, New York

Skelton RE, Iwasaki T, Grigoriadis K (1998) A Unified Algebraic Approach to Linear Control Design. Taylor & Francis, London

Fazel M (2002) Matrix rank minimization with applications. PhD thesis, Stanford University

Combettes PL, Pesquet J-C (2011) Proximal splitting methods in signal processing. In: Fixed-Point Algorithms for Inverse Problems in Science and Engineering, pp. 185–212. Springer, New York, NY

Nagahara M (2020) Sparsity Methods for Systems and Control. Now Publishers, Boston-Delft. https://doi.org/10.1561/9781680837254

Boyd S, Ghaoui LE, Feron E, Balakrishnan V (1994) Linear Matrix Inequalities in System and Control Theory. SIAM, Philadelphia

Blumensath T, Davies ME (2008) Iterative thresholding for sparse approximations. J Fourier Anal Appl 14(5):629–654

Leibfritz F (2005) COMPL\(_e\)ib: constraint matrix optimization problem library. Tech. report. http://www.complib.de/

Löfberg J (2004) YALMIP: a toolbox for modeling and optimization in MATLAB. In: 2004 IEEE International Conference on Robotics and Automation, pp. 284–289

Sturm JF (1999) Using SeDuMi 1.02, a Matlab toolbox for optimization over symmetric cones. Optimiz Methods Softw 11(1–4):625–653

Nagahara M MATLAB programs. https://github.com/nagahara-masaaki/MCSS

Toker O, Özbay H (1995) On the NP-hardness of solving bilinear matrix inequalities and simultaneous stabilization with static output feedback. In: Proceedings of 1995 American Control Conference (ACC’95), vol. 4, pp. 2525–2526

Ghaoui LE, Gahinet P (1993) Rank minimization under LMI constraints: A framework for output feedback problems. In: Proc. European Control Conference 1993, vol. 3

Nagahara M, Iwai Y, Sebe N (2022) Projection onto the set of rank-constrained structured matrices for reduced-order controller design. Algorithms 15(9)

Boyd S, Parikh N, Chu E, Peleato B, Eckstein J (2011) Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends in Mach Learn 3(1):1–122

Schättler H, Ledzewicz U (2012) Geometric Optimal Control. Springer, New York

Pontryagin LS (1987) Mathematical theory of optimal processes, vol 4. CRC Press, Boca Raton

Nagahara M (2021) Sparse control for continuous-time systems. Int J Robust Nonlinear Control 1–17

Ikeda T, Nagahara M, Ono S (2017) Discrete-valued control of linear time-invariant systems by sum-of-absolute-values optimization. IEEE Trans Autom Control 62(6):2750–2763

Ikeda T, Nagahara M (2018) Discrete-valued model predictive control using sum-of-absolute-values optimization. Asian J Control 20(1):196–206

Xu W, Bai E-W, Cho M (2014) System identification in the presence of outliers and random noises: a compressed sensing approach. Automatica 50(11):2905–2911

Bako L On sparsity-inducing methods in system identification and state estimation. Int J Robust Nonlinear Control (to appear)

Acknowledgements

This work was supported in part by JSPS KAKENHI Grant Numbers 23H01436, 22H00512, 22H01653, and 22KK0155.

Ethics declarations

Conflicts of interest

The authors of this manuscript declare that they have no conflicts of interest to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nagahara, M., Yamamoto, Y. A survey on compressed sensing approach to systems and control. Math. Control Signals Syst. 36, 1–20 (2024). https://doi.org/10.1007/s00498-023-00366-1

DOI: https://doi.org/10.1007/s00498-023-00366-1