Abstract

The exponential input-to-state stability (ISS) property is considered for systems of controlled nonlinear ordinary differential equations. A characterisation of this property is provided, including in terms of a so-called exponential ISS Lyapunov function and a natural concept of linear state/input-to-state \(L^2\)-gain. Further, the feedback connection of two exponentially ISS systems is shown to be exponentially ISS provided a suitable small-gain condition is satisfied.

1 Introduction

We consider the exponential input-to-state stability (ISS) property for the system of controlled nonlinear ordinary differential equations

$$\begin{aligned} \dot{x} = f(x,d), \end{aligned}$$(1.1)

where, as usual, x is the state variable and d is an external input.

ISS is a stability concept for controlled (or forced) systems of differential equations, and dates back to the work of Sontag [25] in 1989. Sontag and others pioneered the concept in the 1990s, with key works including [11, 26, 27], and there is a vast literature on the subject. As a brief illustration, ISS has been considered in the context of discrete-time systems [12] and, writing in 2022, there is much current interest in the infinite-dimensional setting; see [22] and the recent monograph [14]. For more background on ISS we refer the reader to the survey papers [3] and [29]. It is no overstatement to write that ISS has over the past 30 years profoundly and substantially (re)shaped how questions of nonlinear stability within the mathematical systems and control discipline are posed and answered. Illustrations of this claim include, for example, the wide-ranging applications of ISS from observer design [1], the analysis of dynamic neural networks [24], through to ecological modelling [23].

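The input-to-state stability paradigm encompasses asymptotic and input–output approaches to stability, the latter initiated by Sandberg and Zames in the 1960s, see [4]. The ISS property for (1.1) is a natural boundedness property of the state, in terms of both the initial conditions and the inputs. It generalises the familiar estimate

$$\begin{aligned} \Vert x(t+\tau ) \Vert \le L \big ( e^{-\lambda t} \Vert x(\tau ) \Vert + \Vert d \Vert _{L^\infty (\tau , t+\tau )} \big ) \quad \forall \, t, \tau \ge 0, \end{aligned}$$(1.2)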

for positive \(L, \lambda \) (independent of x and d), which is valid for the special case of stable linear control systems, meaning f in (1.1) is given by \(f(x,d):= Ax + Bd\) with asymptotically stable matrix A, to the situation of general nonlinear controlled differential equations of the form (1.1).
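To see where an estimate of this type comes from in the simplest case, the following sketch treats a scalar linear system. The constants \(a\) and \(b\) are illustrative assumptions, not taken from the text, and the estimate is written in the form suggested by the surrounding discussion: exponential decay of the initial state plus a term linear in the \(L^\infty \)-norm of the input.

```latex
% Illustrative scalar case: \dot{x} = -a x + b d with a > 0 (a and b are assumed constants).
% Variation of constants, followed by a crude bound on the convolution term:
\begin{align*}
x(t+\tau) &= e^{-a t} x(\tau) + \int_\tau^{t+\tau} e^{-a(t+\tau-s)}\, b\, d(s)\, \mathrm{d}s, \\
|x(t+\tau)| &\le e^{-a t} |x(\tau)| + |b| \, \|d\|_{L^\infty(\tau,t+\tau)} \int_\tau^{t+\tau} e^{-a(t+\tau-s)}\, \mathrm{d}s \\
&= e^{-a t} |x(\tau)| + \frac{|b|}{a}\bigl(1 - e^{-a t}\bigr) \|d\|_{L^\infty(\tau,t+\tau)} \\
&\le L \bigl( e^{-\lambda t} |x(\tau)| + \|d\|_{L^\infty(\tau,t+\tau)} \bigr),
\qquad L := \max\{1, |b|/a\}, \quad \lambda := a.
\end{align*}
```

In this example the decay rate \(\lambda \) is the stability margin of the system and the constant \(L\) also bounds the steady-state gain \(|b|/a\).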

In the context of systems of controlled nonlinear differential equations (1.1), the property (1.2) is called exponential ISS. It seems, at least to the best of the authors’ knowledge, that there is a dearth of systematic study of this property in the literature. On the one hand, this may be because exponential ISS is intimately related to stable linear control systems, which the ISS paradigm successfully moves beyond. Further, simple scalar examples illustrate that many nonlinear control systems may be ISS without being exponentially ISS. However, there are interesting classes of nonlinear control systems which enjoy the exponential ISS property.
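A standard scalar illustration of a system which is ISS without being exponentially ISS is \(\dot{x} = -x^3 + d\). This example is our choice and is not taken from the text; that it is ISS is a well-known textbook fact. The sketch below checks that, with zero input, no exponential decay estimate of the form \(|x(t)| \le L e^{-\lambda t} |x(0)|\) can hold uniformly in the initial state.

```latex
% Assumed example: \dot{x} = -x^3 + d. With d = 0 and x(0) = \xi, separation of variables gives
\begin{align*}
x(t) = \frac{\xi}{\sqrt{1 + 2 \xi^2 t}}, \qquad t \ge 0 .
\end{align*}
% If |x(t)| \le L e^{-\lambda t} |\xi| held for all \xi and all t \ge 0, then for \xi \neq 0
\begin{align*}
\frac{1}{\sqrt{1 + 2 \xi^2 t}} \le L e^{-\lambda t}
\quad \Longrightarrow \quad
1 \le L e^{-\lambda t} \quad \text{(letting } \xi \to 0 \text{ for fixed } t\text{)},
\end{align*}
% which fails for every t > \ln(L)/\lambda. Hence no pair (L, \lambda) of positive constants works.
```

The obstruction is local: solutions starting near zero decay only polynomially, which is compatible with ISS but not with an exponential bound.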

On the other hand, the exponential ISS estimate (1.2) is closely related to the so-called input-to-state exponential stability (ISES) property from [8], where the final term on the right-hand side of (1.2) is replaced by a “gain” of the form \(\alpha (\Vert d \Vert _{L^\infty (\tau , t +\tau )})\), for some function \(\alpha \) with certain qualitative properties. A key result of the 1999 paper [8] is that “asymptotic stability is the same as exponential stability, and ISS is the same as ISES, up to a (in general nonlinear) change of coordinates in the state space”. At face value, one could argue that these results render the exponential ISS property uninteresting. However, and as acknowledged in [8], the change of coordinates in [8] is not constructive, and need not respect any physical interpretation of the states of the original model, a key consideration in engineering and applied sciences. Furthermore, it has been argued in [6] that global exponential stability (of uncontrolled differential equations) is a more natural and important concept than global asymptotic stability as the former has certain useful robustness properties, whilst the latter does not. Consequently, there are strong reasons for studying the exponential ISS property.

Our first main result is Theorem 3.4 which provides a characterisation of the exponential ISS property in terms of the existence of a so-called exponential ISS Lyapunov function. We also demonstrate that exponential ISS is equivalent to a certain linear state/input-to-state \(L^2\)-gain property, in the spirit of [15]. The main difficulty in establishing Theorem 3.4 is proving the necessity of an exponential ISS Lyapunov function. For this purpose, we leverage a known characterisation of global exponential stability of (autonomous) nonlinear differential equations, including a converse Lyapunov result, presented as Theorem 2.2. The second main result of the paper relates to the behaviour of exponential ISS under (output) feedback connections. Theorem 4.3 shows that a natural small-gain condition is sufficient for the feedback connection of two exponentially ISS systems to be exponentially ISS.

The paper is organised as follows. Section 2 gathers requisite preliminary material. Sections 3 and 4 comprise the heart of the paper, and contain a characterisation of the exponential ISS property and small-gain feedback connections with exponential ISS (and related output) properties, respectively. As an application of the material in Sect. 4, we study the exponential ISS properties of a Lur’e system in Sect. 5. The statement and proof of a technical lemma used in Sect. 3 can be found in the Appendix.

2 Preliminaries

The mathematical notation we use is standard. The state and input variables x and d in (1.1) take their values in \(\mathbb R^n\) and \(\mathbb R^q\), respectively. Throughout we shall assume that d appearing in (1.1) is locally essentially bounded, that is, \(d \in L^\infty _\textrm{loc}(\mathbb R_+, \mathbb R^q)\). More generally, for an interval \(J \subseteq \mathbb R\), non-empty subspace \(E \subseteq \mathbb R^n\) and \(1 \le p \le \infty \), the symbol \(L^p(J,E)\) denotes the usual Lebesgue space of (equivalence classes of) functions \(J \rightarrow E\), with the usual \(L^p\) norm. If J is not compact, then \(L^p_\textrm{loc}(J,E)\) denotes the usual local version of \(L^p(J,E)\).

We formulate the following assumptions on the function \(f: \mathbb R^n \times \mathbb R^q \rightarrow \mathbb R^n\) in (1.1):

- (H1) f is locally Lipschitz (jointly in both variables);

- (H2) \(f(0,0) = 0\).

From here on, for the sake of brevity, when we write that a function f of two variables is (locally) Lipschitz, we mean jointly in both variables. For \(d \in L^\infty _\textrm{loc}(\mathbb R_+, \mathbb R^q)\) and \(\sigma >0\), we call (d, x), where \(x: [0, \sigma ) \rightarrow \mathbb R^n\) is locally absolutely continuous, a pre-trajectory of (1.1) defined on \([0, \sigma )\) if

$$\begin{aligned} x(t) = x(0) + \int _0^t f(x(s), d(s)) \, \textrm{d}s \quad \forall \, t \in [0, \sigma ). \end{aligned}$$(2.1)

A pre-trajectory (d, x) defined on \([0, \sigma )\) is said to be maximally defined if there does not exist another pre-trajectory \((d,{\tilde{x}})\) with \({\tilde{x}}: [0, \tau ) \rightarrow \mathbb R^n\) such that \(\tau >\sigma \) and \(x|_{[0, \sigma )} = \tilde{x}|_{[0, \sigma )}\). We let \(\tilde{\mathcal {T}}\) denote the set of all maximally defined pre-trajectories.

The hypothesis (H1) guarantees that the integral appearing on the right-hand side of (2.1) is well-defined and finite for all \((d,x) \in \tilde{\mathcal {T}}\). The hypothesis (H1) further ensures (from, for example [28, Theorem 54]) that, for every \(d \in L^\infty _\textrm{loc}(\mathbb R_+, \mathbb R^q)\) and \(\xi \in \mathbb R^n\), there exists a unique maximally defined pre-trajectory of (1.1) with \(x(0) = \xi \). A pre-trajectory defined on \([0, \infty )\) is called a trajectory, and we denote the set of all trajectories of (1.1) by \(\mathcal {T}\). Hypothesis (H2) ensures that the zero trajectory \((0,0) \in \mathcal {T}\) is always a trajectory of (1.1). Obviously, every trajectory is a maximally defined pre-trajectory, and thus, \(\mathcal {T}\subseteq \tilde{\mathcal {T}}\). If \(\tilde{\mathcal {T}} =\mathcal {T}\), then we say that (1.1) is forward-complete.

We note that the above terminology applies in the situation wherein f is independent of the input variable, that is, \(f(x,d) = f(x)\). Indeed, let \(g: \mathbb R^n \rightarrow \mathbb R^n\) be locally Lipschitz with \(g(0) = 0\). Recall that the zero trajectory of the differential equation

$$\begin{aligned} \dot{x} = g(x), \end{aligned}$$(2.2)

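is called globally exponentially stable (GES) if \(\tilde{\mathcal {T}} = \mathcal {T}\) and there exist \(k,r >0\) such that every trajectory x of (2.2) satisfies

$$\begin{aligned} \Vert x(t+\tau ) \Vert \le k e^{-r t} \Vert x(\tau ) \Vert \quad \forall \, t, \tau \ge 0. \end{aligned}$$(2.3)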

In this case, we simply write that (2.2) is GES. (We note that since (2.2) is autonomous, the estimate (2.3) is satisfied if the inequality holds for \(\tau = 0\).) The next result is a characterisation of the GES property, and includes a converse Lyapunov theorem. It is a key ingredient in proving the desired characterisation of exponential ISS, Theorem 3.4. Before stating the result, we recall the definition of a GES Lyapunov function for (2.2).

Definition 2.1

A continuously differentiable function \(V: \mathbb R^n \rightarrow \mathbb R_+\) is called a GES Lyapunov function (for (2.2)) if there exist positive constants \(a_1\), \(a_2\) and \(a_3\) such that

\(\diamond \)

Theorem 2.2

Consider (2.2) and assume that g is globally Lipschitz with \(g(0) = 0\). Then \(\tilde{\mathcal {T}} = \mathcal {T}\) and the following statements are equivalent.

- (1) (2.2) is GES.

- (2) There exist a GES Lyapunov function for (2.2), V, and a positive constant \(a_4\) such that

$$\begin{aligned} \Vert (\nabla V)(z)\Vert \le a_4 \Vert z \Vert \quad \forall \, z \in \mathbb R^n. \end{aligned}$$(2.5)

- (3) There exist \(p \in (0, \infty )\) and \(\alpha _p >0\) such that every trajectory x of (2.2) satisfies

$$\begin{aligned} \int _\tau ^{t+\tau } \Vert x(s) \Vert ^p \, \textrm{d}s \le \alpha _p \Vert x(\tau ) \Vert ^p \quad \forall \, t, \tau \ge 0. \end{aligned}$$(2.6)

- (4) For every \(p \in (0, \infty )\), there exists \(\alpha _p >0\) such that every trajectory x of (2.2) satisfies (2.6).

Theorem 2.2 combines known results from the literature. Indeed, the equivalence of statements (1) and (2) is contained in [17, Theorem 11.1, p.60], as well as [2, Theorem 1, Remark 6] and [16, Theorems 4.10, 4.14].

The integral characterisation of GES, namely the equivalence of statements (1) and (3), follows from [30, Theorem 2] (which considers more general differential inclusions). The equivalence of statements (1) and (3) in the specific case that \(p = 2\) essentially appears in [20, Proposition 1]. It is clear that statement (1) implies statement (4), which in turn implies statement (3). Statements (1) and (3)/(4) (with \(p \ge 1\)) are equivalent for strongly continuous operator semigroups associated with linear evolution equations on Banach spaces, where this result is known as the Datko–Pazy Theorem; see, for example [5, Theorem 1.8 p. 300].
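As a quick sanity check of the integral estimate (2.6), the following sketch computes the integral explicitly for a scalar linear equation. The equation \(\dot{x} = -r x\) with \(r > 0\) is an assumed example, chosen only because its trajectories are available in closed form.

```latex
% Assumed example: \dot{x} = -r x with r > 0, so that x(s) = e^{-r(s-\tau)} x(\tau) for s \ge \tau.
\begin{align*}
\int_\tau^{t+\tau} |x(s)|^p \, \mathrm{d}s
= |x(\tau)|^p \int_0^{t} e^{-p r \theta} \, \mathrm{d}\theta
= \frac{1 - e^{-p r t}}{p r}\, |x(\tau)|^p
\le \frac{1}{p r}\, |x(\tau)|^p \qquad \forall\, t, \tau \ge 0,
\end{align*}
% so (2.6) holds with \alpha_p = 1/(p r) for every p \in (0, \infty).
```

Here the constant \(\alpha _p\) degrades as the decay rate \(r\) tends to zero, which matches the intuition that (2.6) quantifies how quickly trajectories decay.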

By way of further remarks, the assumption that g is globally Lipschitz is imposed in Theorem 2.2 to ensure that the gradient of V is linearly bounded, that is, so that (2.5) holds. If the assumption that g is globally Lipschitz is replaced by g is locally Lipschitz and satisfies the linear bound condition

(which together are weaker than g being globally Lipschitz), then the equivalence of statement (1) and the existence of a GES Lyapunov function for (2.2) appears in [10, Theorem 3.11, p.167].

3 The exponential ISS property

The following definition underpins the present section.

Definition 3.1

The zero trajectory of (1.1) (or just (1.1)) is said to be exponentially input-to-state stable (ISS) if \(\tilde{\mathcal {T}} = \mathcal {T}\), and there exist positive constants \(L, \lambda \) such that every trajectory (d, x) of (1.1) satisfies (1.2). \(~\diamond \)

Associated with the concept of exponential ISS is that of a so-called exponential ISS Lyapunov function for (1.1), namely, a continuously differentiable function \(V: \mathbb R^n \rightarrow \mathbb R_+\) and \(a_i >0\), \(i \in \{1,2,3,4\}\), such that

and

The next lemma is routinely established by adjusting arguments from, for example, [19, Theorem 5.41]. The proof is thus omitted.

Lemma 3.2

Assume that f satisfies (H1) and (H2). If (1.1) admits an exponential ISS Lyapunov function, then (1.1) is exponentially ISS.

The following definition is inspired by [15, Definition 5].

Definition 3.3

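The zero trajectory of (1.1) (or just (1.1)) is said to have linear state/input-to-state (SIS) \(L^2\)-gain if \(\tilde{\mathcal {T}} = \mathcal {T}\) and there exist \(\alpha _1, \alpha _2 >0\) such that every trajectory (d, x) of (1.1) satisfies

$$\begin{aligned} \int _\tau ^{t+\tau } \Vert x(s) \Vert ^2 \, \textrm{d}s \le \alpha _1 \Vert x(\tau ) \Vert ^2 + \alpha _2 \int _\tau ^{t+\tau } \Vert d(s) \Vert ^2 \, \textrm{d}s \quad \forall \, t, \tau \ge 0. \end{aligned}$$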

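As we shall see, one characterisation of the exponential ISS property is in terms of the concept of weak robust exponential stability, which we discuss next. It will be convenient to collect a certain subset of pre-trajectories of (1.1), namely

$$\begin{aligned} \tilde{\mathcal {T}}_D := \big \{ (d,x) \in \tilde{\mathcal {T}} \,: \, d \in \mathcal {M}_D \big \}, \end{aligned}$$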

where \(D \subseteq \mathbb R^q\) is non-empty and compact, and \(\mathcal {M}_D\) denotes the set of measurable \(d: \mathbb R_+ \rightarrow D\). Clearly, \(\mathcal {M}_D \subseteq L^\infty (\mathbb R_+, \mathbb R^q)\). The set of all trajectories in \(\tilde{\mathcal {T}}_D\) is denoted by \(\mathcal {T}_D\). Given such D, consider the time-varying differential equation (1.1) where \(d \in \mathcal {M}_D\). We call (1.1) uniformly globally exponentially stable (UGES) with respect to D if \(\tilde{\mathcal {T}}_D = \mathcal {T}_D\) and there exist \(k,r >0\) such that, for all \(d \in \mathcal {M}_D\), every trajectory (d, x) of (1.1) satisfies (2.3). Note that this definition requires that \(f(0,d) = 0\) for all \(d \in D\) and so is a different stability notion for (1.1) to that of exponential ISS.

Now consider the following time-varying differential equation

$$\begin{aligned} \dot{x} = f(x, \phi (x) d), \end{aligned}$$(3.2)

where \(\phi : \mathbb R^n \rightarrow \mathbb R_+\) is locally Lipschitz and \(d \in \mathcal {M}_\mathbb D\), with \(\mathbb D\subseteq \mathbb R^q\) denoting the closed unit ball centred at zero. We let \(\tilde{\mathcal {T}}_\mathbb D(\phi )\) and \(\mathcal {T}_\mathbb D(\phi )\) denote the sets of pre-trajectories and trajectories associated with (3.2), respectively, where \(d \in \mathcal {M}_\mathbb D\). It is clear that if \((d,x) \in \mathcal {T}_\mathbb D(\phi )\), then \((\phi (x)d,x) \in \mathcal {T}\). We recall from [26, p. 356] that (1.1) is called weakly robustly stable if there exists a positive definite, radially unbounded, infinitely differentiable \(\phi \) such that (3.2) is uniformly globally asymptotically stable (UGAS) in the sense of [26]. We say that (1.1) is weakly robustly exponentially stable if there exists \(\phi \) of the form \(\phi (z) = a \Vert z \Vert \), for some \(a>0\) and all \(z \in \mathbb R^n\), such that (3.2) is UGES with respect to \(D = \mathbb D\).
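The following sketch illustrates weak robust exponential stability on a scalar linear example. It assumes that (3.2) is the equation \(\dot{x} = f(x, \phi (x) d)\), as suggested by the remark that \((\phi (x)d,x)\) is a trajectory of (1.1); the particular \(f\) and the constant \(a\) are hypothetical choices made for illustration.

```latex
% Assumed example: f(x,d) = -x + d (scalar), \phi(z) = a|z| with 0 < a < 1, and |d(t)| \le 1.
% The perturbed equation is \dot{x} = -x + a |x| d, and along its solutions (almost everywhere)
\begin{align*}
\frac{\mathrm{d}}{\mathrm{d}t}\, x^2 = 2 x \dot{x} = -2 x^2 + 2 a\, x |x|\, d \le -2 (1 - a)\, x^2 ,
\end{align*}
% since |x |x| d| \le x^2. Integrating this differential inequality gives
\begin{align*}
|x(t+\tau)| \le e^{-(1-a) t}\, |x(\tau)| \qquad \forall\, t, \tau \ge 0,
\end{align*}
% uniformly over all measurable d with values in [-1, 1]; that is, k = 1 and r = 1 - a.
```

The state-dependent scaling \(\phi (x) = a|x|\) makes the disturbance vanish at the origin, which is why uniform exponential decay is possible despite the persistent input.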

As is noted in [27], for general locally Lipschitz \(\phi \), the differential equation (3.2) need not be forward-complete, even if (1.1) is. The regularity assumed on f and \(\phi \) in the main results of this section in fact ensures that \(\tilde{\mathcal {T}}_\mathbb D(\phi ) = \mathcal {T}_\mathbb D(\phi )\), as shall be shown in Lemma 3.6.

The following theorem is a characterisation of the exponential ISS property, and is the main result of this section.

Theorem 3.4

Consider the controlled differential equation (1.1) where f satisfies (H1), (H2) and

- (H3) \(z \mapsto f(z,0)\) is globally Lipschitz;

- (H4) \(w \mapsto f(z,w)\) is globally Lipschitz, uniformly with respect to \(z \in \mathbb R^n\).

Define \(g: \mathbb R^n \rightarrow \mathbb R^n\) by \(g(z): = f(z,0)\) for all \(z \in \mathbb R^n\). The following statements are equivalent.

- (1) (1.1) is exponentially ISS.

- (2) (1.1) admits an exponential ISS Lyapunov function.

- (3) (1.1) is weakly robustly exponentially stable.

- (4) (1.1) has linear SIS \(L^2\)-gain.

- (5) (2.2) is globally exponentially stable.

A sufficient condition for (H3) and (H4) is that the function f determining (1.1) is globally Lipschitz. The converse is false, however, as even in the scalar case \(n = q = 1\) a function of the form

where \(\phi : \mathbb R\rightarrow \mathbb R\) is bounded and differentiable, but with unbounded derivative, satisfies (H3) and (H4) but is not globally Lipschitz.
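As a hedged illustration, one hypothetical function of this kind (our choice, and not necessarily the form intended above) is \(f(z,w) = -z + \sin (z^2)\, w\). The sketch below checks (H3) and (H4) directly and shows that joint global Lipschitz continuity fails.

```latex
% Hypothetical instance with n = q = 1: f(z,w) := -z + \phi(z) w, where \phi(z) := \sin(z^2).
% \phi is bounded and differentiable, with \phi'(z) = 2 z \cos(z^2) unbounded.
\begin{align*}
\text{(H3):}\quad & |f(z_1,0) - f(z_2,0)| = |z_1 - z_2|, \\
\text{(H4):}\quad & |f(z,w_1) - f(z,w_2)| = |\sin(z^2)|\, |w_1 - w_2| \le |w_1 - w_2| \quad \text{for all } z, \\
\text{not globally Lipschitz:}\quad & \frac{\partial f}{\partial z}(z_k, 1) = -1 + 2 z_k \cos(z_k^2) = -1 + 2\sqrt{2 \pi k} \to \infty,
\qquad z_k := \sqrt{2 \pi k}.
\end{align*}
```

Since a continuously differentiable function with an unbounded partial derivative cannot be globally Lipschitz, this \(f\) satisfies (H1) to (H4) without being globally Lipschitz.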

Under the assumption that f is globally Lipschitz, it is known that GES of the uncontrolled differential equation (1.1) is sufficient for ISS of (1.1), see [16, Lemma 4.6, p. 176]. There the author does not explicitly conclude that the exponential ISS property holds, but it is a consequence of their argument. The upshot is that, for “Lipschitz systems”, exponential ISS of (1.1) is equivalent to GES of the uncontrolled differential equation (1.1)—a feature known to be true of stable linear control systems, but not true of nonlinear control systems in general.

By way of related results, [15, Theorem 2] states that if (1.1) has linear \(L^2\)-gain, meaning there exist \(\alpha _1 \in \mathcal {K}\) and \(\alpha _2 >0\) such that for all trajectories (d, x) of (1.1), it follows that

then (1.1) is ISS. Conversely, [15, Theorem 2] also gives that if (1.1) is ISS, then there exists a diffeomorphic change of coordinates such that the transformed version of (1.1) has linear \(L^2\)-gain. ISS is equivalent to the weak robust stability property by [26, Theorem 1], and it is this latter property which plays a crucial role in [26] in establishing that the existence of an ISS Lyapunov function is necessary for the ISS property. Here we see that equivalence holds for the exponential versions of these properties.

The paper [27] contains a number of further characterisations of the ISS property, roughly in terms of a range of stability- and attractivity-type assumptions. Theorem 3.4 shows that the situation considered here is much simpler.

As is the case with characterisations of stability properties involving Lyapunov functions, the main technical difficulty in establishing Theorem 3.4 is establishing the necessity of an exponential ISS Lyapunov function. The “heavy lifting” in the proof given is performed by the characterisation of global exponential stability, Theorem 2.2, and the following result which essentially states that exponential ISS Lyapunov functions for (1.1) are precisely GES Lyapunov functions for the corresponding uncontrolled differential equation. This brings us to the key reasons we impose global Lipschitz assumptions in (H3) and (H4). The first is to invoke Theorem 2.2 to ensure the existence of V satisfying (2.4), with linearly bounded gradient (2.5) as well, and the second is to facilitate the proof of Lemma 3.5.

Lemma 3.5

Consider (1.1), assume that f satisfies (H1)–(H4), and define \(g(z): = f(z,0)\) for all \(z \in \mathbb R^n\). An exponential ISS Lyapunov function for (1.1) is a GES Lyapunov function for (2.2). Conversely, a GES Lyapunov function for (2.2) satisfying (2.5) is an exponential ISS Lyapunov function for (1.1).

The remainder of this section is dedicated to proving Theorem 3.4. We shall prove a cycle of equivalences, and record the more involved steps as preliminary lemmas. We begin with a proof of Lemma 3.5, the essence of which is present in the proof of [16, Lemma 4.6, p. 176]. However, in [16] the connection between the Lyapunov functions is not made explicit and it is assumed that f is globally Lipschitz.

Proof of Lemma 3.5

The first claim is immediate by taking zero input in (3.1). Note that the linearly bounded gradient condition (2.5) is not required for a GES Lyapunov function. Conversely, let a continuously differentiable function \(V: \mathbb R^n \rightarrow \mathbb R_+\) and positive constants \(a_1\), \(a_2\), \(a_3\) and \(a_4\) be such that (2.4) and (2.5) hold for \(g(z): = f(z,0)\), which is globally Lipschitz by hypothesis (H3). In the light of (2.4a) it is clear that the function V satisfies (3.1a).

To establish (3.1b), we simply estimate that

where we have used hypothesis (H4), namely that \(w \mapsto f(z,w)\) is globally Lipschitz, uniformly with respect to \(z \in \mathbb R^n\), with \(L > 0\) a Lipschitz constant. A routine quadratic inequality applied to the final inequality above, combined with the lower bound in (2.4a), yields the inequality (3.1b). \(\square \)
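The quadratic inequality step can be sketched as follows. The bound on the cross term uses only (2.5) and (H4); the exact constants in the resulting inequality depend on how the decay term is written in the source, so the final choice of \(\varepsilon \) is left open here rather than guessed.

```latex
% Cross term: by the Cauchy-Schwarz inequality, (2.5), and (H4) with Lipschitz constant L,
\begin{align*}
\langle (\nabla V)(z), f(z,w) - f(z,0) \rangle
\le \|(\nabla V)(z)\| \, \|f(z,w) - f(z,0)\|
\le a_4 L \, \|z\| \, \|w\| .
\end{align*}
% Young's inequality, ab \le \tfrac{\varepsilon}{2} a^2 + \tfrac{1}{2\varepsilon} b^2 for a, b \ge 0 and \varepsilon > 0,
% applied with a = \|z\| and b = a_4 L \|w\|, then gives
\begin{align*}
a_4 L \, \|z\| \, \|w\| \le \frac{\varepsilon}{2} \|z\|^2 + \frac{(a_4 L)^2}{2 \varepsilon} \|w\|^2 ,
\end{align*}
% and \varepsilon > 0 is chosen small enough for the \|z\|^2 term to be absorbed by the decay term.
```

The price of a smaller \(\varepsilon \) is a larger coefficient on \(\Vert w \Vert ^2\), which is the usual trade-off between decay rate and input gain in Lyapunov-based ISS estimates.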

Lemma 3.6

Consider (3.2) and assume that f satisfies (H1)–(H4), and that \(\phi \) is globally Lipschitz and zero at zero. Then \(\tilde{\mathcal {T}}_\mathbb D(\phi ) = \mathcal {T}_\mathbb D(\phi )\).

Proof

Let \(L_1\), \(L_2\) and \(L_3\) be Lipschitz constants for \(z \mapsto f(z,0)\), \(w \mapsto f(z,w)\) and \(\phi \), respectively. The claim follows from [19, Proposition 4.12] once we note that

\(\square \)

Lemma 3.7

Assume that f satisfies (H1)–(H4). If (1.1) is exponentially ISS, then (1.1) is weakly robustly exponentially stable.

The proof of Lemma 3.7 uses a technical result, Lemma A.1, which is stated and proven in the Appendix.

Proof of Lemma 3.7

Assume that (1.1) is exponentially ISS, and let \(L, \lambda >0\) be such that the exponential ISS estimate (1.2) holds. Define \(\phi : \mathbb R^n \rightarrow \mathbb R_+\) by \(\phi (z):= \frac{1}{2L}\Vert z\Vert \) for all \(z \in \mathbb R^n\). We claim that (3.2) with this choice of \(\phi \) and \(D:= \mathbb D\) is UGES. An application of Lemma 3.6 yields that \(\tilde{\mathcal {T}}_\mathbb D(\phi ) = \mathcal {T}_\mathbb D(\phi )\). Let \((d,x_\phi ) \in \mathcal {T}_\mathbb D(\phi )\). By construction, we have

Since \((d\phi (x_\phi ), x_\phi ) \in \mathcal {T}\), invoking (1.2) and (3.4), we estimate that

from which we infer that

Now fix \(\tau \ge 0\) and \(\kappa >0\) sufficiently large so that \(\rho := Le^{-\kappa \lambda } +1/2 <1\). We use (1.2) and (3.4) again to estimate that

Setting

and maximising both sides of (3.6) over \(s \ge 0\) gives

where \(\mathbb Z_+\) is the set of nonnegative integers. An application of Lemma A.1 with \(n=m=1\), \(S = \rho \in (0,1)\) and \(v =0\) gives \(\lambda _0 >0\), \(\Gamma _1 >1\) such that

where the final inequality follows from (3.5) and the definition of \(\zeta \). The above estimate shows that (3.2) is UGES with respect to \(D = \mathbb D\) and \(\phi \) of the form \(\phi (z) = a \Vert z \Vert \) for positive a. Therefore, we conclude that (1.1) is weakly robustly exponentially stable. \(\square \)

Lemma 3.8

Assume that f satisfies (H1)–(H4). If (1.1) admits an exponential ISS Lyapunov function, then (1.1) has linear SIS \(L^2\)-gain.

Proof

By Lemma 3.2, it follows that \(\tilde{\mathcal {T}} = \mathcal {T}\). Let \((d,x) \in \mathcal {T}\). The inequality (3.1b) yields that

Using the variation of parameters formula, and a suitably modified version of [19, Lemma 5.43], the inequality

holds. Invoking the bounds in (3.1a) for V and integrating the above from \(s=0\) to \(s=t \ge 0\), for fixed \(\tau \ge 0\), we obtain

Routine calculations interchanging the order of integration show that

The conjunction of (3.7) and (3.8) gives that

where \(\alpha _1:= a_2/(a_1 a_3)\) and \(\alpha _2:= a_4 /(a_1 a_3)\). We conclude that (1.1) has linear SIS \(L^2\)-gain. \(\square \)
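The interchange of the order of integration used in this proof can be sketched in a generic form. The symbols \(c\) and \(u\) below are placeholders introduced for illustration; in the proof one may think of \(c = a_3\) and \(u(\theta ) = \Vert d(\theta + \tau ) \Vert ^2\), which is an assumption about the form of the omitted displays.

```latex
% For measurable u \ge 0 and a constant c > 0, Tonelli's theorem gives
\begin{align*}
\int_0^t \int_0^s e^{-c (s - \theta)} u(\theta) \, \mathrm{d}\theta \, \mathrm{d}s
&= \int_0^t u(\theta) \int_\theta^t e^{-c (s - \theta)} \, \mathrm{d}s \, \mathrm{d}\theta \\
&= \int_0^t u(\theta) \, \frac{1 - e^{-c (t - \theta)}}{c} \, \mathrm{d}\theta
\le \frac{1}{c} \int_0^t u(\theta) \, \mathrm{d}\theta .
\end{align*}
```

This explains the factor \(1/a_3\) appearing in both \(\alpha _1\) and \(\alpha _2\): each arises from integrating an exponential with rate \(a_3\).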

Proof of Theorem 3.4

Recall the statements of the result:

- (1) (1.1) is exponentially ISS.

- (2) (1.1) admits an exponential ISS Lyapunov function.

- (3) (1.1) is weakly robustly exponentially stable.

- (4) (1.1) has linear SIS \(L^2\)-gain.

- (5) (2.2) is globally exponentially stable.

The proof is a conjunction of the earlier lemmas and the following steps.

Step 1. That statement (3) is sufficient for statement (5) follows immediately by taking \(d = 0\) in (3.2).

Step 2. If statement (5) holds, then Theorem 2.2 (which is applicable because g is globally Lipschitz by (H3)) yields a GES Lyapunov function for (2.2) which satisfies (2.5). Invoking Lemma 3.5 now yields an exponential ISS Lyapunov function for (1.1), that is, statement (2) holds.

Step 3. That statement (4) is sufficient for statement (5) again follows by taking \(d =0\), only now invoking statement (3) of Theorem 2.2 with \(p=2\).

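Summarising the above lemmas and steps, we have proven the first cycle (by Lemma 3.7, Step 1, Step 2 and Lemma 3.2, respectively):

$$\begin{aligned} (1) \; \Rightarrow \; (3) \; \Rightarrow \; (5) \; \Rightarrow \; (2) \; \Rightarrow \; (1). \end{aligned}$$

We have also proven (by Lemma 3.8 and Step 3, respectively)

$$\begin{aligned} (2) \; \Rightarrow \; (4) \; \Rightarrow \; (5), \end{aligned}$$

which completes the proof. \(\square \)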

We comment that there is a characterisation of the UGES property for (1.1) which parallels Theorem 2.2 and is essentially an exponential version of the main result of [18], and also has some overlap with [21, Theorem 2]. The UGES characterisation may be used instead of Theorem 2.2 to prove Theorem 3.4. This approach is reminiscent of that taken in the early literature (notably [26]) on characterisations of the ISS property. In particular, to exploit a characterisation of UGES as the basis for the construction of an exponential ISS Lyapunov function, the weak robust exponential stability property in statement (3) of Theorem 3.4 plays a key role.

We conclude this section with a characterisation of the so-called local exponential ISS property. Recall that the (uncontrolled) differential equation (2.2) is called locally exponentially stable if there exist positive constants \(\delta , k, r\) such that

and every trajectory x of (2.2) with \(\Vert x(0) \Vert < \delta \) satisfies (2.3).

The natural generalisation of local exponential stability, and associated Lyapunov functions, to the setting of controlled differential equations is formulated below. For \(\delta >0\), we let \(B(\delta ) \subseteq \mathbb R^n\) denote the open ball of radius \(\delta \) centred at zero.

Definition 3.9

(1) The zero trajectory (0, 0) of (1.1) (or just (1.1)) is said to be locally exponentially input-to-state stable if there exist positive constants \(\delta , L, \lambda \) such that

and every trajectory (d, x) of (1.1) with \(\Vert x(0) \Vert + \Vert d \Vert _{L^\infty } < \delta \) satisfies (1.2).

(2) Given \(\delta >0\), a continuously differentiable function \(V: B(\delta ) \rightarrow \mathbb R_+\) is called a local exponential ISS Lyapunov function (for (1.1)) if there exist constants \(a_i >0\), \(i \in \{1,2,3,4\}\) such that the inequalities in (3.1) hold for all \(z \in \mathbb R^n\) and \(w \in \mathbb R^q\) such that \(\Vert z \Vert + \Vert w \Vert < \delta \). \(\diamond \)

Our main result on the local exponential ISS property is Proposition 3.11, and is a local analogue of Theorem 3.4. Indeed, when the right-hand side of (1.1) is continuously differentiable on a neighbourhood of zero, then the local exponential ISS property is equivalent to the existence of a local exponential ISS Lyapunov function and, moreover, to local exponential stability of the corresponding uncontrolled differential equation. In other words, the known equivalence from [27, Lemma 1.1] of local ISS and asymptotic stability of the zero trajectory of the corresponding uncontrolled differential equation is also true in the exponential setting. Our approach follows that of the section so far, only leveraging the characterisation [2, Theorem 1] of local exponential stability, rather than invoking Theorem 2.2.

The following lemma is a local version of Lemma 3.2 and is routine to prove.

Lemma 3.10

Assume that f satisfies (H1) and (H2). If (1.1) admits a local exponential ISS Lyapunov function, then (1.1) is locally exponentially ISS.

Proposition 3.11

Consider the controlled differential equation (1.1) and assume that f satisfies (H1), (H2) and

- (H5) f is continuously differentiable on a neighbourhood of zero.

Define \(g: \mathbb R^n \rightarrow \mathbb R^n\) by \(g(z): = f(z,0)\) for all \(z \in \mathbb R^n\). The following statements are equivalent.

- (1) (1.1) is locally exponentially ISS.

- (2) (1.1) admits a local exponential ISS Lyapunov function.

- (3) (2.2) is locally exponentially stable.

Proof of Proposition 3.11

An application of Lemma 3.10 yields that statement (2) is sufficient for statement (1) which, in turn, is sufficient for statement (3) by simply taking \(d = 0\). Now assume that statement (3) holds. Hypothesis (H5) ensures that the assumptions of [2, Theorem 1] are satisfied, and an application of this result guarantees the existence of \(\delta >0\), continuously differentiable function \(V: B(\delta ) \rightarrow \mathbb R\) and positive constants \(a_i\), \(i \in \{1,2,3,4\}\), such that (2.4) holds for all \(z \in \mathbb R^n\) with \(\Vert z \Vert < \delta \).

Invoking hypothesis (H1), there exists a positive constant \(L_{\delta }\) such that

In the light of the above bound, the estimates (3.3) remain valid with L replaced by \(L_{\delta }\) and now for all \((z, w) \in \mathbb R^n \times \mathbb R^q\) with \(\Vert z \Vert + \Vert w\Vert < \delta \). Consequently, we conclude that V is a local exponential ISS Lyapunov function for (1.1). \(\square \)
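A simple scalar example, chosen here for illustration and not taken from the text, shows a situation where Proposition 3.11 applies while the global result does not. The sketch verifies statement (3) by hand, taking the local decay estimate to be of the form \(|x(t+\tau )| \le k e^{-rt} |x(\tau )|\) for small initial states.

```latex
% Assumed example: f(x,d) = -x + x^2 + d (scalar), so g(z) = f(z,0) = -z + z^2 is continuously differentiable.
% For |x| \le 1/2 we have 1 - x \ge 1/2, hence along solutions of \dot{x} = g(x)
\begin{align*}
\frac{\mathrm{d}}{\mathrm{d}t}\, x^2 = 2 x g(x) = -2 x^2 (1 - x) \le - x^2 ,
\end{align*}
% so the interval (-1/2, 1/2) is forward invariant and
\begin{align*}
|x(t+\tau)| \le e^{-t/2} |x(\tau)| \qquad \forall\, t, \tau \ge 0, \quad |x(0)| < 1/2 .
\end{align*}
% The decay is only local: z = 1 is a second equilibrium of g, so (2.2) is not GES.
```

Since this \(f\) satisfies (H1), (H2) and (H5), the equivalence of statements (1) and (3) indicates that the controlled equation is locally exponentially ISS, although it cannot be exponentially ISS.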

4 Small-gain conditions for exponential ISS of feedback systems

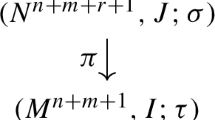

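Here we consider the output-feedback connection of two nonlinear systems of controlled and observed differential equations of the form

$$\begin{aligned} \dot{x}&= f(x,u,d), \end{aligned}$$(4.1a)

$$\begin{aligned} y&= h(x,u,d), \end{aligned}$$(4.1b)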

where, as usual, x is the state variable, u is a feedback variable, d is an external input, and y is the measured output. These are assumed to take values in \(\mathbb R^{n}\), \(\mathbb R^{m}\), \(\mathbb R^{q}\) and \(\mathbb R^{p}\), respectively. For typographical reasons, we write column vectors inline as pairs—\((x_1,x_2)\) and so on. We extend the hypotheses (H1) and (H2) from Sect. 2 to f in (4.1a) so that, in particular, \(f(0,0,0) =0\). We shall assume that h satisfies

- (F1) h is locally Lipschitz with \(h(0,0,0) =0\).

For \((u,d) \in L^\infty _\textrm{loc}(\mathbb R_+, \mathbb R^{m} \times \mathbb R^{q})\), we define pre-trajectories of (4.1a) as in Sect. 2 with d replaced by (u, d), and denote the set of maximally defined pre-trajectories by \(\tilde{\mathcal {T}}\). Further, for \(0 < \sigma \le \infty \), we call (u, d, x, y) where \(y: [0, \sigma ) \rightarrow \mathbb R^p\), a pre-trajectory of (4.1) defined on \([0, \sigma )\) if (u, d, x) is a pre-trajectory of (4.1a) on \([0, \sigma )\) and (4.1b) holds. We denote the set of maximally defined pre-trajectories of (4.1) by \(\tilde{\mathcal {O}}\). Pre-trajectories of (4.1a) and (4.1) which are defined on \([0, \infty )\) are called trajectories, the sets of which are denoted by \(\mathcal {T}\) and \(\mathcal {O}\), respectively. Observe that \(\tilde{\mathcal {O}} = \mathcal {O}\) whenever \(\tilde{\mathcal {T}} = \mathcal {T}\).

The key stability concepts in the current section are presented in the following definition.

Definition 4.1

(1) The zero trajectory of (4.1) (or just (4.1)) is called exponentially input-to-output stable (IOS) if \(\tilde{\mathcal {O}} = \mathcal {O}\) and there exist positive constants K, M, N such that every trajectory \((u,d,x,y) \in \mathcal {O}\) satisfies, \(\forall \, t, \tau \ge 0\)

We call the constant M in (4.2) the input–output gain.

(2) We say that the zero trajectory of (4.1) (or just (4.1)) has linear state/input-to-output (SIO) \(L^2\)-gain if \(\tilde{\mathcal {O}} = \mathcal {O}\) and there exist positive constants \(\alpha , \beta , \gamma \) such that every trajectory \((u,d,x,y) \in \mathcal {O}\) satisfies

We call the constant \(\beta \) in (4.3) the \(L^2\)-input–output gain. If \(y=x\), then (4.1a) is said to have linear SIS \(L^2\)-gain. \(\diamond \)

Consider now two systems of the form (4.1)

where the state-, input-, external input- and output-spaces have dimensions \(n_i, m_i,q_i\) and \(p_i\), respectively, for \(i \in \{1,2\}\). Assuming that \(m_1 = p_2\) and \(m_2 = p_1\), the standard feedback connection

in (4.4) leads to the feedback control system

We refer to the individual versions of (4.1) in (4.6) as subsystems.

Given \((d_1, d_2) \in L^\infty _\textrm{loc}(\mathbb R_+, \mathbb R^{q_1} \times \mathbb R^{q_2})\), if there exist \(0 <\sigma \le \infty \), locally absolutely continuous functions \(x_i:[0, \sigma ) \rightarrow \mathbb R^{n_i}\), and locally essentially bounded functions \(y_i:[0, \sigma ) \rightarrow \mathbb R^{p_i}\) for \(i \in \{1,2\}\), such that (4.6) holds almost everywhere on \([0, \sigma )\), then we call \((d_1,d_2, x_1, x_2, y_1,y_2)\) a pre-trajectory of (4.6) on \([0,\sigma )\). The set of all maximally defined pre-trajectories is denoted by \(\tilde{\mathcal {F}}\). As usual, a pre-trajectory of (4.6) defined on \([0,\infty )\) is called a trajectory of (4.6), the set of which is denoted \(\mathcal {F}\). Given a (pre-)trajectory of (4.6), it is clear that \((y_{3-i}, d_i, x_i, y_i)\) is a (pre-)trajectory of (4.1) for \(i \in \{1,2\}\).

The feedback connection (4.6) is called well-posed if, for all \((d_1, d_2) \in L^\infty _\textrm{loc}(\mathbb R_+, \mathbb R^{q_1} \times \mathbb R^{q_2})\) and all \((x_0^1,x_0^2) \in \mathbb R^{n_1} \times \mathbb R^{n_2}\), there exists a unique maximally defined pre-trajectory of (4.6) with \(x_i(0) = x_0^i\) for \(i \in \{1,2\}\). Some additional assumptions are required to ensure well-posedness, and we comment that exhaustively detailing sufficient conditions for this property is not the primary focus here. A bespoke approach will usually be required in specific contexts. Here, the following well-posedness result is taken from [13, Example 1.5.1, p.44].

Lemma 4.2

Given the feedback system (4.6), assume that both subsystems satisfy (H1), (H2) and (F1). If the hypothesis

(F2) for all \((z_1,z_2,w_1,w_2) \in \mathbb R^{n_1}\times \mathbb R^{n_2}\times \mathbb R^{q_1}\times \mathbb R^{q_2}\) there exist unique solutions \(v_i = g_i(z_1,z_2,w_1,w_2)\) to the pair of algebraic equations \(v_1 = h_1(z_1,v_2,w_1)\) and \(v_2 = h_2(z_2,v_1,w_2)\), and the functions \(g_i\) are locally Lipschitz;

holds, then the feedback system (4.6) is well-posed.

A special case wherein hypothesis (F2) is satisfied is when either \(h_1\) or \(h_2\) is independent of its second variable. For example, if \(h_1\) does not depend on \(u_1\), then the equations in (F2) are solved by \(g_1=h_1(z_1,w_1)\) and \(g_2= h_2(z_2,h_1(z_1,w_1),w_2)\).
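As a minimal sketch of this special case, the following Python snippet resolves the algebraic loop in (F2) by direct substitution. The scalar output maps `h1` and `h2` are hypothetical examples chosen purely for illustration and are not taken from the paper.

```python
import math


def h1(z1, w1):
    # Hypothetical output map of subsystem 1; it ignores its input u_1.
    return math.tanh(z1) + 0.5 * w1


def h2(z2, v1, w2):
    # Hypothetical output map of subsystem 2; it depends on its input v1 = y_1.
    return 2.0 * z2 + math.sin(v1) - w2


def g(z1, z2, w1, w2):
    """Solve v1 = h1(z1, w1), v2 = h2(z2, v1, w2) by substitution."""
    v1 = h1(z1, w1)        # g_1 = h_1(z_1, w_1)
    v2 = h2(z2, v1, w2)    # g_2 = h_2(z_2, h_1(z_1, w_1), w_2)
    return v1, v2


v1, v2 = g(0.3, -1.0, 0.2, 0.1)
```

Because `h1` never sees `v2`, no fixed-point iteration is needed, and `g` inherits local Lipschitz continuity from `h1` and `h2` as a composition.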

Proof of Lemma 4.2

The hypotheses imposed, including that \(g_i\) are locally Lipschitz from (F2), ensure that the system of controlled nonlinear differential equations

has locally Lipschitz right-hand side. Therefore, given \((d_1, d_2) \in L^\infty _\textrm{loc}(\mathbb R_+, \mathbb R^{q_1} \times \mathbb R^{q_2})\) and \((x_0^1,x_0^2) \in \mathbb R^{n_1} \times \mathbb R^{n_2}\), let \(((d_1,d_2),(x_1,x_2))\) denote the unique, maximally defined pre-trajectory of (4.7) satisfying \(x_i(0) = x_0^i\) for \(i \in \{1,2\}\), the existence of which follows from arguments standard in ODE theory. Suppose that the pre-trajectory is defined on \([0,\sigma )\). For \(i \in \{1,2\}\), define \(y_i: [0, \sigma )\rightarrow \mathbb R^{p_i}\) by

In the light of the algebraic condition in (F2), it is clear that \((d_1,d_2, x_1, x_2, y_1,y_2)\) is the unique, maximally defined pre-trajectory of (4.6) with the given initial conditions, establishing well-posedness. \(\square \)

The following theorem is the main result of this section.

Theorem 4.3

Consider the feedback connection (4.6). Assume that both subsystems satisfy (H1), (H2), and (F1), and are exponentially ISS and exponentially IOS with input–output gains \(M_i\) for \(i \in \{1,2\}\). Assume further that (F2) holds. If \(M_1 M_2 <1\), then \(\tilde{\mathcal {F}} = \mathcal {F}\) and the feedback connection is exponentially IOS from external input \((d_1, d_2)\) to output \((y_1, y_2)\) and exponentially ISS from \((d_1, d_2)\) to state \((x_1, x_2)\).

Note that exponential ISS of the subsystems (4.1a) means that, for \(i \in \{1,2\}\), the forward-complete property \(\tilde{\mathcal {T}}_i = \mathcal {T}_i\) holds and that there exist positive constants \(L_i, \lambda _i\) such that every trajectory \((u_i,d_i,x_i) \in \mathcal {T}_i\) satisfies

Similarly, exponential IOS of the subsystems (4.1) means that, for \(i \in \{1,2\}\), \(\tilde{\mathcal {O}}_i = \mathcal {O}_i\) and there exist positive constants \(K_i, M_i, N_i\) such that every trajectory \((u_i,d_i,x_i,y_i) \in \mathcal {O}_i\) satisfies for all \(t, \tau \ge 0\),

Proof of Theorem 4.3

Let \(L_i, K_i, M_i, N_i, \gamma _i, \lambda _i >0\) be as in the estimates (4.8) and (4.9) for subsystem \(i \in \{1,2\}\). Fix \(\kappa >0\) sufficiently large so that \(\rho (S)<1\), where

which is evidently possible by continuity, as the spectral radius of the above matrix in the limit as \(\kappa \rightarrow \infty \) is equal to \(\sqrt{M_1 M_2} \in (0,1)\).
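The limiting value \(\sqrt{M_1 M_2}\) can be illustrated on a simplified stand-in. The sketch below does not use the matrix S from (4.10). Instead it assumes a hypothetical \(2 \times 2\) nonnegative matrix with off-diagonal entries \(M_1\), \(M_2\) and a diagonal perturbation `eps`, which plays the role of entries that vanish as \(\kappa \rightarrow \infty \). The gain values are likewise assumptions.

```python
import cmath


def spectral_radius_2x2(a, b, c, d):
    """Spectral radius of [[a, b], [c, d]] via the characteristic polynomial."""
    trace = a + d
    det = a * d - b * c
    disc = cmath.sqrt(trace * trace - 4.0 * det)
    return max(abs((trace + disc) / 2.0), abs((trace - disc) / 2.0))


M1, M2 = 0.5, 0.6  # hypothetical gains with M1 * M2 < 1

# eps models (hypothetically) the entries that vanish as kappa grows.
radii = [spectral_radius_2x2(eps, M1, M2, eps) for eps in (0.4, 0.1, 0.01, 0.0)]
limit = spectral_radius_2x2(0.0, M1, M2, 0.0)
```

For this stand-in the radius equals `eps + sqrt(M1 * M2)`, so it decreases to \(\sqrt{M_1 M_2} < 1\) as `eps` tends to zero, which mirrors the continuity argument used to choose \(\kappa \).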

Assume first that \(d_1\) and \(d_2\) are essentially bounded. Let \((d_1,d_2, x_1, x_2, y_1,y_2) \in \mathcal {F}\) denote a trajectory of (4.6). (We shall show that \(\tilde{\mathcal {F}} = \mathcal {F}\) later.) We use (4.5) as a definition of \(u_i\) and, for notational convenience, we write \(y_i(t):= y_i(t; x_i(0), u_i, d_i)\) and \(v_i(\tau ):= \Vert d_i \Vert _{L^\infty (\tau , \infty )}\) for \(i \in \{1,2\}\) and \(\tau \ge 0\). We set

We first derive an \(L^\infty \) estimate for y. Maximising (4.9) over \(t \in [0,T]\) for \(T \ge 0\) yields

Inserting the estimate (4.11) for \(\Vert y_2\Vert _{L^\infty (\tau ,\infty )}\) into that for \(\Vert y_1\Vert _{L^\infty (\tau ,\infty )}\) gives

and so, as \(M_1 M_2 <1\), we have

Interchanging the roles of \(i=1\) and \(i=2\), we obtain the corresponding estimate for \(\Vert y_2 \Vert _{L^\infty (\tau ,\tau + T)}\). Letting \(T \rightarrow \infty \), we conclude that there exist \(K_3, M_3 >0\) such that

The exponential ISS property (4.1a) gives

Combining (4.12) and (4.13), we see that there exist positive constants \(K_4\), \(N_4\) such that

Now fix \(\tau \ge 0\). For \(k \in \mathbb Z_+\), we use (4.9) to estimate that

and so, maximising both sides over \(s \ge 0\) gives, for \(\, i \in \{1,2\}\),

where

The exponential ISS estimates (1.2) give that, for all \(i \in \{1,2\}\), all \(k \in \mathbb Z_+\) and all \(s \ge 0\)

Maximising over \(s \ge 0\) gives, for \(i \in \{1,2\}\),

Writing the combination of (4.15) and (4.16) in linear system form yields, for all \(k \in \mathbb Z_+\),

Setting

the above inequalities read

where S is as in (4.10). Since \(\rho (S)<1\), an application of Lemma A.1 yields the existence of \(\Gamma _1, \theta >0\) such that

In the light of (4.14), the definitions of p and v, and (4.17), we conclude that

for some constant \(\Gamma \). By causality, it is clear that the term \( \Vert d \Vert _{L^\infty (\tau , \infty )}\) in (4.18) may be replaced by \( \Vert d \Vert _{L^\infty (\tau , t+\tau )}\). Finally, in the light of the proof of Lemma 4.2, to show that \(\tilde{\mathcal {F}} = \mathcal {F}\) it suffices to show that, given a pre-trajectory of (4.7) with state component \(x: [0,\sigma ) \rightarrow \mathbb R^{n_1} \times \mathbb R^{n_2}\), then x is bounded on \([0, \sigma )\). This follows from the arguments at the start of the present proof, up to (4.13), with \(\tau = 0\) and \(T = \sigma \). This completes the proof. \(\square \)

We reiterate that assumption (F2) has been imposed to ensure well-posedness of the feedback system (4.6) (and, in conjunction with the other hypotheses of Theorem 4.3, to guarantee the forward-complete property \(\tilde{\mathcal {F}} = \mathcal {F}\)). Theorem 4.3 remains true if hypothesis (F2) is replaced by another hypothesis which ensures well-posedness and \(\tilde{\mathcal {F}} = \mathcal {F}\). We further comment that the above proof as given extends to the case that \(f_i\) and \(h_i\) are explicitly time-varying.

The following corollary of Theorem 4.3 states that the cascade connection of two exponentially IOS/ISS systems is exponentially IOS/ISS. The cascade connection is depicted in Fig. 1 and comprises two systems of the form (4.1) with the single additional equality \(u_2 = y_1\).

Corollary 4.4

Consider the cascade connection of two systems of the form (4.1) via \(u_2 = y_1\). Assume that both subsystems satisfy (H1), (H2) and (F1). If both subsystems are exponentially IOS and exponentially ISS, then the cascade connection is exponentially IOS from external input \((u_1, d_1, d_2)\) to output \((y_1, y_2)\) and exponentially ISS from \((u_1, d_1, d_2)\) to state \((x_1, x_2)\).

Proof

We shall apply Theorem 4.3 by writing the cascade connection as a feedback connection. To this end, define \({\tilde{d}}_1:=(d_1,u_1)\) and introduce the “phantom” input variable \({\tilde{u}}_1\) by setting

The feedback connection given by the equations \(u_2=y_1\) and \({\tilde{u}}_1=y_2\) leads to a feedback system of the form (4.6) with \(f_1\), \(u_1\) and \(d_1\) replaced by \({\tilde{f}}_1\), \({\tilde{u}}_1\) and \({\tilde{d}}_1\), respectively. Observe that the corresponding input–output gain \({\tilde{M}}_1\) in the first subsystem is equal to zero. Hence, the small-gain condition \({\tilde{M}}_1 M_2 = 0 <1\) is satisfied. Moreover, \(h_1 = h_1(x_1, {\tilde{d}}_1)\) is independent of \({\tilde{u}}_1\) and, therefore, hypothesis (F2) is satisfied. An application of Theorem 4.3 completes the proof. \(\square \)

Our next result provides a small-gain condition under which the feedback connection (4.6) inherits the linear SIO/SIS \(L^2\)-gain property from its subsystems.

Proposition 4.5

Consider the feedback connection (4.6). Assume that both subsystems satisfy (H1), (H2), and (F1), and that both subsystems have linear SIS and SIO \(L^2\)-gains, with \(L^2\)-input–output gains \(\beta _i\) for \(i \in \{1,2\}\). Assume further that (F2) holds and that \(\tilde{\mathcal {F}} = \mathcal {F}\). If \(\beta _1 \beta _2 <1\), then the feedback system (4.6) has linear SIS and SIO \(L^2\)-gains.

If \(f_i\) satisfies hypotheses (H3) and (H4) for \(i \in \{1,2\}\), then, by Theorem 3.4, the subsystems having linear SIS \(L^2\)-gains is equivalent to exponential ISS of the subsystems. Additionally, if the functions \(g_i\) in hypothesis (F2) satisfy (H3) and (H4), then, again by Theorem 3.4, the feedback system (4.6) having linear SIS \(L^2\)-gain is equivalent to the feedback system (4.6) being exponentially ISS.

The proof of Proposition 4.5 is elementary, and so only an outline is provided. Note that the subsystems (4.1a) having linear SIO \(L^2\)-gains means that \(\tilde{\mathcal {O}}_i = \mathcal {O}_i\) and there exist positive constants \(\alpha _i, \beta _i, \gamma _i\) such that every trajectory \((u_i,d_i,x_i,y_i) \in \mathcal {O}_i\) satisfies

The feedback connection considered means that the term \(\Vert y_i \Vert _{L^2(\tau , t+\tau )}\) appears on both sides of (4.19), and the small-gain assumption \(\beta _1 \beta _2<1\) readily affords by routine algebraic manipulation the desired upper bound for \(\Vert y_i \Vert _{L^2(\tau , t+\tau )}\).
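The routine algebraic manipulation can be sketched as follows. Write `a` and `b` for the truncated norms of \(y_1\) and \(y_2\) on \([\tau , t+\tau ]\) (finite, since the outputs are locally essentially bounded), and suppose the feedback connection turns the subsystem estimates into `a <= c1 + beta1 * b` and `b <= c2 + beta2 * a`. Here `c1` and `c2` are hypothetical names that lump together the state and external-input terms; the numbers in the example are illustrative assumptions only.

```python
def small_gain_bounds(c1, c2, beta1, beta2):
    """Bounds on a, b implied by a <= c1 + beta1*b and b <= c2 + beta2*a."""
    if min(c1, c2, beta1, beta2) < 0:
        raise ValueError("all arguments must be nonnegative")
    loop_gain = beta1 * beta2
    if loop_gain >= 1.0:
        raise ValueError("small-gain condition beta1 * beta2 < 1 is violated")
    # Substitute one inequality into the other and solve for a (resp. b).
    a_bound = (c1 + beta1 * c2) / (1.0 - loop_gain)
    b_bound = (c2 + beta2 * c1) / (1.0 - loop_gain)
    return a_bound, b_bound


# beta2 > 1 is allowed as long as the product of the gains is below one.
a_bound, b_bound = small_gain_bounds(1.0, 2.0, 0.5, 1.5)
```

The bounds are attained when both inequalities hold with equality, so they cannot be improved using these two inequalities alone.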

Theorem 4.3 is inspired by [11, Theorem 2.1] which, broadly, provides a small-gain condition under which the output-feedback connection of two IOS systems is IOS. A key ingredient in the proof of that result is [11, Lemma A.1]. Interestingly, this result cannot be strengthened in general to produce an exponentially decaying estimate, as the next result shows. Therefore, it seems that Theorem 4.3 cannot be established as a special case of [11, Theorem 2.1].

Lemma 4.6

For all positive constants \(K, \gamma , s >0\) and \(L, \mu \in (0,1)\), the continuous, nonnegative function \(z: \mathbb R_+ \rightarrow \mathbb R_+\) given by

for sufficiently small \(P >0\) and \(\alpha \in \mathbb N\) such that \(\mu ^\alpha <L\) satisfies

but does not decay exponentially as \(t\rightarrow \infty \).

The proof of Lemma 4.6 is clear, and so we do not include it.

5 An example

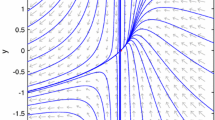

As an example, we consider the application of Theorem 3.4 and Proposition 4.5 to a Lur’e system—namely a nonlinear control system of the form

where \((A,B,C,B_\textrm{e}, D_\textrm{e}) \in \mathbb R^{n \times n} \times \mathbb R^{n \times m} \times \mathbb R^{p \times n} \times \mathbb R^{n \times q} \times \mathbb R^{p \times q}\). The function \(\psi : \mathbb R^p \rightarrow \mathbb R^m\) is assumed to be locally Lipschitz, and \(v \in L^\infty _\textrm{loc}(\mathbb R_+, \mathbb R^q)\) is an external input. For simplicity we assume that A is Hurwitz (meaning all eigenvalues have negative real parts), and let \({\textbf{G}}(s):= C(sI-A)^{-1}B\), so that \({\textbf{G}}\) is the transfer function associated with the linear control system described by A, B and C.

To fit (5.1) into the framework of Sect. 4, we view (5.1) as the feedback connection (4.6) where

with \(x:= x_1\) and \(d_1 = d_2 = v\). The state space associated with the second subsystem in (5.3) is the 0-dimensional trivial space \(\{0\}\). Thus, \(f_2\) maps \(\{0\} \times \mathbb R^p \times \mathbb R^q \rightarrow \{0\}\). In particular, the associated state variable is (always) equal to zero. Since the first subsystem (5.2) is linear, the variation of parameters formula gives that

and routine estimates of the above now give for all \(t,\tau \ge 0\),

for some constants \(\alpha _1, \gamma _1 \ge 0\). Assuming that there exists \(\beta _2 >0\) such that

we evidently have that

Therefore, in the light of (5.5) and (5.6), whenever the small-gain condition \(\beta _2 \Vert {\textbf{G}}\Vert _{H^\infty }<1\) holds, it follows from Proposition 4.5 that the Lur’e system (5.1) has linear SIS/SIO \(L^2\)-gain. If \(\psi \) is additionally globally Lipschitz (with arbitrary Lipschitz constant), then Theorem 3.4 guarantees that the Lur’e system (5.1) is exponentially ISS from external signal v to state x.

Furthermore, defining \(G: \mathbb R_+ \rightarrow \mathbb R^{p\times m}\) by \(G(t): = Ce^{At}B\) and \(G_\textrm{e}: \mathbb R_+ \rightarrow \mathbb R^{p\times q}\) by \(G_\textrm{e}(t): = Ce^{At}B_\textrm{e}\), it follows that (5.4) with \(\tau = 0\) may be expressed as

where \(*\) denotes convolution. (For simplicity we take \(\tau = 0\); the general case is treated by the usual shift argument.) Taking norms in the above and invoking Hölder’s inequality gives that

for some positive scalars \(K_1\) and \(\gamma _1\). If the small-gain condition \(\Vert G \Vert _{L^1(\mathbb R_+)} \beta _2 <1\) is satisfied, then we conclude from Theorem 4.3 that the Lur’e system (5.1) is exponentially ISS from external signal v to state x, without requiring that \(\psi \) is globally Lipschitz. Note that \({\textbf{G}}= \mathcal {L}(G)\)—the Laplace transform of G, and since \(\Vert {\textbf{G}}\Vert _{H^\infty } \le \Vert G \Vert _{L^1(\mathbb R_+)}\), this latter small-gain condition is more conservative than \(\beta _2 \Vert {\textbf{G}}\Vert _{H^\infty }<1\). However, the equality \(\Vert {\textbf{G}}\Vert _{H^\infty } = \Vert G\Vert _{L^1(\mathbb R_+)}\) is possible, for example, in the so-called single-input single-output (\(m = p = 1\)) setting where A is Metzler (that is, all off-diagonal entries of A are nonnegative) and \(\pm B\) and \(\pm C\) are componentwise nonnegative vectors. Indeed, in this case we have that

(See [9, Example 3.7] for other classes of Lur’e systems where the above equality holds.)
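The equality of the two norms in the Metzler single-input single-output setting can be checked numerically. The sketch below uses a hypothetical \(2 \times 2\) example (the matrices `A`, `B`, `C` are assumptions and are not the steam boiler data), approximates \(\Vert G \Vert _{L^1(\mathbb R_+)}\) by integrating \(\dot{x} = Ax\), \(x(0) = B\) with a fourth-order Runge–Kutta scheme, and estimates \(\Vert {\textbf{G}}\Vert _{H^\infty }\) on a finite frequency grid.

```python
def mat_vec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def impulse_l1_norm(A, B, C, horizon=30.0, dt=1e-3):
    """Approximate the L^1 norm of G(t) = C exp(At) B (RK4 + trapezoid rule)."""
    x = list(B)
    prev = abs(sum(c * xi for c, xi in zip(C, x)))
    total = 0.0
    for _ in range(int(round(horizon / dt))):
        k1 = mat_vec(A, x)
        k2 = mat_vec(A, [xi + 0.5 * dt * k for xi, k in zip(x, k1)])
        k3 = mat_vec(A, [xi + 0.5 * dt * k for xi, k in zip(x, k2)])
        k4 = mat_vec(A, [xi + dt * k for xi, k in zip(x, k3)])
        x = [xi + dt * (p + 2 * q + 2 * r + s) / 6.0
             for xi, p, q, r, s in zip(x, k1, k2, k3, k4)]
        cur = abs(sum(c * xi for c, xi in zip(C, x)))
        total += 0.5 * dt * (prev + cur)
        prev = cur
    return total


def freq_response_2x2(A, B, C, omega):
    """G(i*omega) = C (i*omega*I - A)^{-1} B using the explicit 2x2 inverse."""
    s = 1j * omega
    m11, m12 = s - A[0][0], -A[0][1]
    m21, m22 = -A[1][0], s - A[1][1]
    det = m11 * m22 - m12 * m21
    y1 = (m22 * B[0] - m12 * B[1]) / det
    y2 = (-m21 * B[0] + m11 * B[1]) / det
    return C[0] * y1 + C[1] * y2


# Hypothetical data: A is Metzler and Hurwitz, B and C are nonnegative.
A = [[-2.0, 1.0], [1.0, -3.0]]
B = [1.0, 0.0]
C = [0.0, 1.0]

l1_norm = impulse_l1_norm(A, B, C)
dc_gain = abs(freq_response_2x2(A, B, C, 0.0))
hinf_estimate = max(abs(freq_response_2x2(A, B, C, 0.01 * k)) for k in range(10001))
```

For this example \({\textbf{G}}(s) = 1/(s^2 + 5s + 5)\), so all three quantities should agree with \(|{\textbf{G}}(0)| = 0.2\) up to discretisation error. The frequency grid gives only a lower estimate of the \(H^\infty \)-norm in general.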

As an illustrative example, consider the state equations for a steam boiler model described in [7, Example 3]. The model is of the form (5.1) with

and does not contain the external signal v. The focus of [7, Example 3] is on asymptotic stability of the Lur’e system (5.1) with the linear data as in (5.8). The nonlinear term \(\psi \) in (5.1) is assumed in [7] to be continuously differentiable, satisfy \(\psi (0) =0\),

Since A is Metzler and evidently Hurwitz, and B, \(-C\) are componentwise nonnegative, we have from (5.7) that

Therefore, the above analysis shows that, for any \(B_\textrm{e}\) and \(D_\textrm{e}\), and any locally Lipschitz \(\psi \) which satisfies

with \(\beta _2 < 1.9355 = 1/ |{\textbf{G}}(0)|\), the resulting Lur’e system is exponentially ISS. Although the condition (5.10) on \(\psi \) is global, and so not directly comparable with (5.9), it is significantly weaker than the global version of (5.9) and does not require that \(\psi \) is continuously differentiable.

Notes

A similar definition is used in [27, p. 1291] where the function \(\phi \) is assumed only to be locally Lipschitz.

References

Alessandri A (2004) Observer design for nonlinear systems by using input-to-state stability. In: Proceedings of the 43rd IEEE conference on decision control (CDC) vol 4, pp 3892–3897

Corless M, Glielmo L (1998) New converse Lyapunov theorems and related results on exponential stability. Math Control Signals Syst 11:79–100

Dashkovskiy SN, Efimov DV, Sontag ED (2011) Input to state stability and allied system properties. Autom Remote Control 72:1579–1614

Desoer CA, Vidyasagar M (1975) Feedback systems: input-output properties. Academic Press, New York

Engel K-J, Nagel R (2000) One-parameter semigroups for linear evolution equations. Springer, New York

Glas MD (1987) Exponential stability revisited. Int J Control 46:1505–1510

Grujić LT (1981) On absolute stability and the Aizerman conjecture. Automatica 17:335–349

Grüne L, Sontag ED, Wirth FR (1999) Asymptotic stability equals exponential stability, and ISS equals finite energy gain-if you twist your eyes. Syst Control Lett 38:127–134

Guiver C, Logemann H (2020) A circle criterion for strong integral input-to-state stability. Automatica 111:108641

Haddad WM, Chellaboina V (2008) Nonlinear dynamical systems and control. Princeton University Press, Princeton

Jiang Z-P, Teel AR, Praly L (1994) Small-gain theorem for ISS systems and applications. Math Control Signals Syst 7:95–120

Jiang Z-P, Wang Y (2001) Input-to-state stability for discrete-time nonlinear systems. Automatica 37:857–869

Karafyllis I, Jiang Z-P (2011) Stability and stabilization of nonlinear systems. Springer, London

Karafyllis I, Krstic M (2019) Input-to-state stability for PDEs. Springer, Cham

Kellett CM, Dower PM (2015) Input-to-state stability, integral input-to-state stability, and \({{\cal{L} }}_{2}\)-gain properties: qualitative equivalences and interconnected systems. IEEE Trans Autom Control 61:3–17

Khalil H (2002) Nonlinear systems, 3rd edn. Prentice Hall, New Jersey

Krasovskiĭ NN (1963) Stability of motion. Stanford University Press, Stanford

Lin Y, Sontag ED, Wang Y (1996) A smooth converse Lyapunov theorem for robust stability. SIAM J Control Optim 34:124–160

Logemann H, Ryan EP (2014) Ordinary differential equations. Springer Undergraduate Mathematics Series. Springer, London

Megretski A, Rantzer A (1997) System analysis via integral quadratic constraints. IEEE Trans Autom Control 42:819–830

Meilakhs AM (1979) On design of stable control systems subjected to parametric disturbances. Autom Remote Control 39:1409–1418

Mironchenko A, Prieur C (2020) Input-to-state stability of infinite-dimensional systems: recent results and open questions. SIAM Rev 62:529–614

Müller M, Sierra CA (2017) Application of input to state stability to reservoir models. Theor Ecol 10:451–475

Sanchez EN, Perez JP (1999) Input-to-state stability (ISS) analysis for dynamic neural networks. IEEE Trans Circuits Syst I Fundam Theory Appl 46:1395–1398

Sontag ED (1989) Smooth stabilization implies coprime factorization. IEEE Trans Autom Control 34:435–443

Sontag ED, Wang Y (1995) On characterizations of the input-to-state stability property. Syst Control Lett 24:351–359

Sontag ED, Wang Y (1997) New characterizations of input-to-state stability. IEEE Trans Autom Control 41:1283–1294

Sontag ED (1998) Mathematical control theory: deterministic finite dimensional systems. Springer, New York

Sontag ED (2006) Input to state stability: basic concepts and results. In: Nistri P, Stefani G (eds) Nonlinear and optimal control theory. Springer, Berlin, pp 163–220

Teel A, Panteley E, Loría A (2002) Integral characterizations of uniform asymptotic and exponential stability with applications. Math Control Signals Syst 15:177–201

Acknowledgements

We are grateful to three anonymous reviewers, and Prof. Matthew Turner, whose constructive and insightful comments have helped to improve the work. Chris Guiver’s contribution to this work has been partially supported by a Personal Research Fellowship from the Royal Society of Edinburgh (RSE). Chris Guiver expresses gratitude to the RSE for their support.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

A Appendix

We state and prove a technical lemma used in the paper. In words, the following lemma extracts a continuous-time exponential ISS estimate from a discrete-time one.

Lemma A.1

Fix \(n, m \in \mathbb N\), \(\tau \ge 0\) and let \(\kappa > 0\) be given. Given bounded functions \(z_i: \mathbb R_+ \rightarrow \mathbb R^n\) for \(i \in \{1,2,\dots , m\}\), define \(p: \mathbb Z_+ \rightarrow \mathbb R^m_+\) by

If there exist a nonnegative matrix \(S \in \mathbb R^{m \times m}\) with \(\rho (S) <1\) and \(v \in \mathbb R^m_+\) such that

(componentwise inequality), then there exist \(\Gamma , \theta >0\) such that

Proof

An induction argument gives that p satisfies the inequality

Therefore, as \(\rho (S) < 1\), there exist \(\gamma \in (0,1)\) and \(\Gamma _0 \ge 1\) such that

Then, choosing \(\theta >0\) and \(\Gamma > \Gamma _0\) such that

it follows that

For all \(t \ge 0\), we have \(t = k \kappa + s\) for some \(k \in \mathbb Z_+\) and \(s \in [0,\kappa )\), and so

as required. \(\square \)
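The mechanism behind the lemma can be checked numerically. The sketch below assumes that the discrete-time hypothesis has the form \(p(k+1) \le S p(k) + v\) and iterates the worst case, in which equality holds. The matrix `S`, the vector `v` and the initial value are hypothetical.

```python
def step(S, p, v):
    """One worst-case step p -> S p + v (equality in the componentwise bound)."""
    return [sum(s * pj for s, pj in zip(row, p)) + vi for row, vi in zip(S, v)]


S = [[0.0, 0.5], [0.6, 0.0]]   # nonnegative, spectral radius sqrt(0.3) < 1
v = [1.0, 1.0]
p = [10.0, 10.0]

# Fixed point (I - S)^{-1} v, solved by hand for this 2x2 example.
p_inf = [1.5 / 0.7, 1.6 / 0.7]

errors = []
for k in range(60):
    errors.append(max(abs(a - b) for a, b in zip(p, p_inf)))
    p = step(S, p, v)
```

Here \(S^2 = 0.3 I\), so the distance to the fixed point shrinks by the factor 0.3 every two steps. This is the geometric decay in k that the proof converts into an exponential estimate in t.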

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guiver, C., Logemann, H. The exponential input-to-state stability property: characterisations and feedback connections. Math. Control Signals Syst. 35, 375–398 (2023). https://doi.org/10.1007/s00498-023-00344-7

Keywords

- Differential equation

- Exponential input-to-state stability

- Feedback connection

- Global exponential stability

- Robust stability

- Small-gain condition