Abstract

Slope failures possess destructive power that can cause significant damage to both life and infrastructure. Monitoring slopes prone to instabilities is therefore critical in mitigating the risk posed by their failure. The purpose of slope monitoring is to detect precursory signs of stability issues, such as changes in the rate at which a slope is deforming. This information can then be used to predict the timing or probability of an imminent failure in order to provide an early warning. Most approaches to predicting slope failures, such as the inverse velocity method, focus on predicting the timing of a potential failure. However, such approaches are deterministic and require some subjective analysis of displacement monitoring data to generate reliable timing predictions. In this study, a more objective, probabilistic-learning algorithm is proposed to detect and characterise the risk of a slope failure, based on spectral analysis of serially correlated displacement time-series data. The algorithm is applied to satellite-based interferometric synthetic aperture radar (InSAR) displacement time-series data to retrospectively analyse the risk of the 2019 Brumadinho tailings dam collapse in Brazil. Two potential risk milestones are identified: signs of a definitive but emergent risk (27 February 2018-26 August 2018) and of an imminent risk of collapse of the tailings dam (27 June 2018-24 December 2018) are detected by the algorithm as the empirical points of inflection and maximum on a risk trajectory, respectively. Importantly, this precursory indication of risk of failure is detected at least five months prior to the dam collapse on 25 January 2019. The results of this study demonstrate that the combination of spectral methods and second order statistical properties of InSAR displacement time-series data can reveal signs of a transition into an unstable deformation regime, and that this algorithm can provide sufficient early warning to help mitigate catastrophic slope failures.


1 Introduction

Slope failures in the form of landslides or the collapse of engineered structures (e.g., tailings dams) pose a considerable risk to both life and infrastructure. Mitigating the risk they pose through providing an early warning relies critically upon monitoring slopes for precursory signs of instability (Intrieri et al. 2013). Conventionally, this has involved using survey monuments, inclinometers, piezometers, extensometers and ground-based radar to monitor how a rock mass is deforming, with changes in the displacement and velocity providing the most reliable indication of the stability of a slope (Carlà et al. 2017). Accordingly, several empirical approaches have been developed to predict the timing of a slope failure based on displacement monitoring data (Intrieri and Gigli 2016).

The majority of failure timing prediction approaches utilise the concept of accelerating (tertiary) creep theory (Saito 1969; Fukuzono 1985), under which the behaviour of a material in the terminal stages of failure under constant stress and temperature conditions is governed by the empirical power-law (Voight 1988, 1989):

\[\frac{dv}{dt} = A v^{\alpha } \qquad (1)\]

where v is the creep velocity, t is the time, and \(\alpha \) and A are constants. It has been observed that for a wide range of slope failures \(\alpha \approx 2\) (Voight 1989). Consequently, for \(\alpha \) = 2, Eq. 1 has the solution:

\[\frac{1}{v} = A (t_f - t) \qquad (2)\]

where \(t_f\) is the time of failure. Equation 2 implies that approaching the time of failure, the inverse of the velocity scales as a linear function of time. Consequently, the intercept point on the time axis of an inverse velocity (1/v(t)) vs. time (t) plot corresponds to the time of failure (\(t_f\)). This approach, often referred to as the inverse velocity method, is commonly applied to surface displacement monitoring data to predict the time of slope failures, by using linear regression to extrapolate the inverse velocity trend to the point of intersection on the time axis (Carlà et al. 2018).
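The regression step of the inverse velocity method can be sketched as follows. This is a minimal illustration on made-up velocities that follow Eq. 2 exactly; the function name `inverse_velocity_failure_time` and all numerical values are assumptions, and no filtering or Onset Of Acceleration selection is attempted.

```python
import numpy as np

def inverse_velocity_failure_time(t, v):
    """Estimate t_f as the time-axis intercept of a straight line fitted to 1/v."""
    t = np.asarray(t, dtype=float)
    inv_v = 1.0 / np.asarray(v, dtype=float)
    slope, intercept = np.polyfit(t, inv_v, 1)  # inv_v ~ slope * t + intercept
    return -intercept / slope                   # time at which the fitted 1/v reaches zero

# Synthetic (made-up) velocities obeying 1/v = A (t_f - t)
A, t_f_true = 0.5, 100.0
t = np.arange(60.0, 96.0, 4.0)
v = 1.0 / (A * (t_f_true - t))
t_f_est = inverse_velocity_failure_time(t, v)
```

With noisy field data the fitted intercept depends strongly on which samples are included in the regression, which is the source of subjectivity discussed in the following paragraph.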

Although predicting the timing of slope failures can be crucial in mitigating the risk they pose, there are several factors that affect the ability to do so effectively. Firstly, the conventional ground-based monitoring techniques often provide measurements that are low density, have limited coverage and have a low or irregular temporal sampling frequency, which can make it difficult to detect the precursory tertiary creep (Carlà et al. 2019). Secondly, empirical prediction approaches like the inverse velocity method require expert user-intervention in order to derive reliable estimates of the slope failure timing. This includes filtering the velocity time-series measurements to remove instrumental and random "noise" to help determine the onset of tertiary creep (i.e., Onset Of Acceleration) and increase the fit of the linear regression line on the inverse velocity-time plot (Dick et al. 2015; Carlà et al. 2017). However, in the absence of a general rule regarding filtering, the selection of the optimal method is subjective and typically determined through trial-and-error (Rose and Hungr 2007). Furthermore, the inverse velocity trend often only approaches linearity in the final few weeks prior to a slope failure (Rose and Hungr 2007), therefore only enabling reliable short-term predictions that leave little time for risk mitigation measures to be implemented.

The use of satellite Interferometric Synthetic Aperture Radar (InSAR) has been increasingly recognised as an effective solution in overcoming the issue of limited surface displacement measurement coverage. The InSAR method is based upon the concept of interferometry, with precise changes in the surface elevation being derived from the interference of electromagnetic waves from two Synthetic Aperture Radar (SAR) acquisitions (Rosen et al. 2000). Accordingly, satellite InSAR has been used to measure ground motion for a wide range of applications, including volcanology (Massonnet and Feigl 1995; Pinel et al. 2014), landslides (Fruneau et al. 1996; Colesanti and Wasowski 2006; Song et al. 2022), seismology (Massonnet et al. 1993; Peltzer and Rosen 1995; Cheloni et al. 2024) and various other types of surface deformation monitoring (Gee et al. 2019; Raspini et al. 2022). More recently, satellite InSAR has also been applied to slope failure prediction in relation to landslides, tailings dams and open-pit mines (Intrieri et al. 2018; Carlà et al. 2019). A particularly pertinent example concerns the 25 January 2019 catastrophic collapse of the upstream-constructed tailings Dam I at the Córrego do Feijão iron ore mine complex in Brumadinho, Brazil. The dam, storing 11.7 million \(m^{3}\) of tailings, collapsed suddenly, releasing a flow of material that resulted in the death of approximately 270 people, without any apparent risk indicators from the array of conventional methods (e.g., inclinometers, piezometers, ground-based radar) being used to monitor its stability (Robertson et al. 2019). However, several studies have retrospectively analysed the stability of the dam preceding the collapse using satellite InSAR (Du et al. 2020), with some of these deriving inverse velocity-based failure timing predictions from the precursory deformation (Gama et al. 2020; Grebby et al. 2021). While successful predictions close to the actual time of failure were possible using the inverse velocity method, there remains some degree of uncertainty over the reliability of these predictions, due largely to the fitting of the linear regression line, and over the amount of prior warning of a potential failure that can be provided.

There have been attempts to generalise the inverse velocity method in Eq. 1 by extending the temporal dynamics of the governing mechanisms of failure to consider locally (spatially) varying dynamics. Recent examples include studies by Tordesillas et al. (2018), Niu and Zhou (2021) and references therein. Furthermore, there is a growing body of literature exploring the potential to offer probabilistic predictions of slope failure by modifying the inverse velocity method in various ways, such as through the application of linear regression (Zhang et al. 2020), stochastic differential equations (Bevilacqua et al. 2019), quantile regression (Das and Tordesillas 2019; Wang et al. 2020), and mixture distributions (Zhou et al. 2020). However, with the exception of Bevilacqua et al. (2019), none of these studies explicitly consider the temporal serial correlation of the displacement or velocity fields. Additionally, almost all of these studies assume a parametric statistical distribution on the observed response (i.e., displacement or velocity). Nevertheless, rapid developments in technology for monitoring ground displacements, such as ground-based (Casagli et al. 2010) and satellite-based InSAR (Wasowski and Bovenga 2014), present the opportunity to develop much faster and more sensitive precursory warnings using algorithms that are based on streaming data and require less stringent statistical assumptions about the displacement mechanism. Das and Tordesillas (2019) proposed a data-driven, non-parametric multivariate statistical methodology for near real-time monitoring of slope failures. However, they acknowledged that the serial correlation inherent in such deformation signals was not modelled. Nonetheless, their framework led to a much earlier prediction of the failure than that offered by the inverse velocity method.

Accordingly, the aim of this study is to further develop the framework of Das and Tordesillas (2019) to propose a new generalised algorithm that can accommodate temporal correlation whilst retaining its non-parametric nature. One key advantage of this statistical approach is that regular sampling may often reveal a change in the slope stability regime much earlier than that observed using the mechanistic failure law. The failure dynamics obtained by the algorithm are driven entirely by properties of the data and by a fundamental statistical property underpinning the conventional theory of time-series analysis, called second order stationarity. Consequently, the statistical nature of this approach also makes it more objective in comparison to the inverse velocity method, because the subjectivity associated with manually identifying the Onset of Acceleration and optimally filtering the time-series data is circumvented.

Heuristically, a second order stationary time-series has time-invariant statistical properties of second order. It is therefore postulated that the displacement time-series of a slope, sampled during a stable epoch, must be second order stationary. However, gradual deviations from second order stationarity should occur as the slope transitions into an unstable epoch. Here, it is this principle that is utilised in order to develop the new algorithm, which can be broadly divided into two phases:

1. Phase 1: Detecting a change in regime – this involves first characterising (estimating) the second order statistical features of an area during a stable regime based on regularly sampled InSAR displacement time-series data. This is achieved through estimating statistical features of multiple time-series in terms of their dynamic periodograms. The stable regime (stationarity) is then defined as the entire history of the observations up until the detection of statistical variation in periodogram features; this point marks the time of regime change.

2. Phase 2: Risk classification – this involves continuously tracking the deviation of the time-series from second order stationarity after the time of regime change detected in Phase 1. Using a combination of supervised and unsupervised learning algorithms, this deviation is described as a concave probabilistic function of time. Risk of failure warnings are then generated based on estimates of inflection points on the trajectory of this function.

In this study, the proposed algorithm is applied to satellite InSAR time-series data to retrospectively analyse the risk of the Dam I tailings dam collapse. Section 2 describes the study area and the InSAR time-series data used in the analysis. The proposed algorithm is then presented in Section 3, before the results and discussion for its application are provided in Section 4. Finally, the key conclusions, limitations and future research associated with this study are outlined in Section 5.

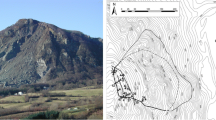

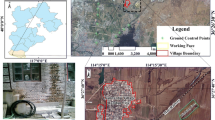

Fig. 1 The Brumadinho (Brazil) study area. a) Satellite image of Dam I pre- and post-collapse, b) Relative LOS displacement maps for tracks 155 and 53 prior to the collapse, with locations 1-6 indicated (after Grebby et al. 2021)

2 Study area and data

Dam I (Fig. 1a), at the Córrego do Feijão iron ore mine complex in Brumadinho, Minas Gerais State (southeastern Brazil), was an upstream tailings dam (active during 1976–2015) that underwent a sudden and complete failure across its full 720 m width and 86 m height at 12:28pm on 25 January 2019 as a result of a flow (static) liquefaction mechanism (de Lima et al. 2020). This was initially attributed to a combination of internal creep and a loss of suction induced by a period of heavy rainfall from about October 2018 to the time of the failure (Robertson et al. 2019), although recent modelling has suggested that creep alone would have been sufficient to initiate the failure of the dam (Zhu et al. 2024). The catastrophic failure of Dam I caused a debris flow and mudflow of approximately 11 million \(m^{3}\) of mining waste to travel rapidly downstream, destroying properties, infrastructure and agricultural land and entering the Paraopeba River (Gama et al. 2020). Within days of the collapse, the mud covered an area of \(3.13 \times 10^{6}\ m^{2}\) (Rotta et al. 2020), ultimately claiming approximately 270 lives and affecting the whole region’s ecosystem (Porsani et al. 2019).

The data used to analyse the precursory deformation and risk in this study comprise Sentinel-1 InSAR displacement time-series data for two overlapping descending orbit tracks (tracks 53 and 155), for the 17 months preceding the dam collapse. The relative line-of-sight displacement (RLOSD) time-series data covering the extent of Dam I at 20-m spatial resolution and a 12-day sampling interval were generated using the Intermittent Small Baseline Subset (ISBAS) differential InSAR technique (Sowter et al. 2013) and made publicly available by Grebby et al. (2021). Representative displacement time-series for areas exhibiting distinctive deformation within each of the main structural elements of the dam (i.e., dam wall, tailings beach) were obtained by extracting the time-series of a subset of contiguous pixels at each locality (Grebby et al. 2021). Figure 1b) shows the locations of the six areas (labelled locations 1-6) identified as exhibiting distinctive deformation over the dam. The subsets of pixels were delineated manually based on the uniformity of the respective deformation patterns observed at each location, and vary in size according to the area over which the patterns extend. Accordingly, the number of contiguous pixels for which the time-series were extracted at locations 1 through to 6 is 30, 44, 46, 25, 38 and 37, respectively. Both the multiple individual displacement time-series and the average time-series at each of the six locations were used to construct the machine-based algorithm for characterising and precursory monitoring of the tailings dam failure.

3 Methods

The method can be summarised in the following three stages: (i) Monitoring of the RLOSD to identify the time of regime change; (ii) Estimating state-of-the-system at the time of regime change; (iii) Sequential risk estimation and hazard classification. All the above steps build on monitoring the second order statistical properties of a time-series signal. These steps and the related concepts are described in the following subsections.

3.1 Statistical preliminaries

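Conventional statistical modelling of a time-series, \(\{Y_t\}\), often involves modelling its second order statistical properties: its trend, a slowly varying mean effect (\(\mu _t\)), its autocovariance (\({\text {Cov}}(Y_t, Y_{t+u})\)), and its variance (\(\sigma ^{2}_t\)), which are defined as:

\[\mu _t = \textsf {E}(Y_t), \qquad {\text {Cov}}(Y_t, Y_{t+u}) = \textsf {E}\left[ (Y_t - \mu _t)(Y_{t+u} - \mu _{t+u})\right] , \qquad \sigma ^{2}_t = \textsf {var}(Y_t) = {\text {Cov}}(Y_t, Y_t) \qquad (3)\]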

Key to such modelling is the notion of second order stationarity.

3.1.1 Second order stationarity

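Let \(\{Y_t\}\) be a time-series that is discretely sampled at n regular time intervals \(\{ t= 1,2, \ldots ,n\}\), such that its population mean, variance (\(\textsf {var}\ (.)\)) and auto-covariance (\({\text {Cov}}(.)\)) are defined as in Eq. 3. Then, \(Y_t\) is second order stationary if:

\[\textsf {E}(Y_t) = \mu , \qquad \textsf {var}(Y_t) = \sigma ^{2}, \qquad {\text {Cov}}(Y_t, Y_{t+u}) = c(u) \quad \text {for all } t \qquad (4)\]

that is, if the mean and variance are constant over time and the auto-covariance depends only on the lag u. Second order stationarity is a characteristic property that can be observed in the Fourier domain.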

3.1.2 Spectral density and periodogram

If \(Y_t\) is a second-order stationary time-series with the auto-covariance function, c(u), satisfying \(\sum _{u}|u|c(|u|)<\infty \), then \(Y_t\) has a continuous spectrum \(f(\omega )\) that is defined as:
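In one common convention (an assumption here, since normalising constants and the frequency range differ between texts), the spectrum is the Fourier transform of the auto-covariance function:

```latex
f(\omega) = \frac{1}{2\pi} \sum_{u=-\infty}^{\infty} c(u)\, e^{-i u \omega},
\qquad \omega \in (-\pi, \pi].
```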

The spectrum \(f(\omega )\) is a unique signature of a second order stationary time-series marked by invariance over time. It partitions the total variance (power) of the time-series among sinusoidal event frequencies. Schuster (1906) proposed the periodogram to estimate the spectrum of a second order stationary time-series. The discrete Fourier transform (DFT) and periodogram of observed time-series data \(y_t, t=1,2,\ldots ,n\) are defined, respectively, by \(d(\omega _{k})\) and \(I(\omega _{k})\) as:
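In one common convention (an assumption here, since scaling constants differ between texts), \(d(\omega _{k}) = n^{-1/2}\sum _{t=1}^{n} y_t e^{-i\omega _{k} t}\) and \(I(\omega _{k}) = |d(\omega _{k})|^{2}\), with \(\omega _{k} = 2\pi k/n\). A minimal Python sketch under that convention follows; the function name `periodogram`, the mean removal and the test signal are illustrative choices.

```python
import numpy as np

def periodogram(y):
    """Return Fourier frequencies w_k = 2*pi*k/n and ordinates I(w_k), k = 1..floor(n/2)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    # np.fft.fft indexes time from 0 rather than 1; this only shifts the phase,
    # so the squared modulus is unaffected.
    d = np.fft.fft(y - y.mean()) / np.sqrt(n)   # DFT scaled by n^(-1/2) (assumed convention)
    k = np.arange(1, n // 2 + 1)
    return 2.0 * np.pi * k / n, np.abs(d[k]) ** 2

# Made-up series: a pure cosine at the k = 2 Fourier frequency, n = 16
n = 16
t = np.arange(n)
freqs, I = periodogram(np.cos(2.0 * np.pi * 2 * t / n))
```

For a series of length 16 this returns 8 ordinates, and for the cosine above essentially all of the power sits in the second one.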

3.2 Non-stationarity: detecting geological regime change

The algorithm assesses instability based on non-stationary stochastic (statistical) transition in the time series. It is well-known that trend, seasonality or other cyclical signals - that are interpreted as deterministic, as opposed to stochastic - can show (unwanted) non-stationarity. Hence, the algorithm begins by filtering them out at the start of the process, using methods such as regression, to ensure that they do not influence the detection of a regime change.

A non-stationary time-series is characterized by a spectrum that varies over time. The empirical methods for monitoring non-stationarity are described below.

3.2.1 Moving window mean and variance

Exploratory investigations into deviations from second order stationarity of a time-series \(Y_t\) can be performed by estimating local sample means and variances of the time-series within subsets of time windows. If the length of the time-series n is partitioned into L overlapping time windows \(w_{t_{l}}, l =1,2,\ldots ,L\) of equal length, \(n_L\), the local sample mean and variance of \(Y_t\) in window \(w_{t_{l}}\) are defined as:
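These are the ordinary sample mean and sample variance computed within each window. A minimal sketch is given below; the function name `moving_window_stats`, the `step` argument and the \(n_L - 1\) divisor (`ddof=1`) are assumptions, and the input series is made up.

```python
import numpy as np

def moving_window_stats(y, n_L, step=1):
    """Local sample mean and variance in overlapping windows of length n_L."""
    y = np.asarray(y, dtype=float)
    # Each row is one window; consecutive windows are shifted by `step` samples.
    windows = np.lib.stride_tricks.sliding_window_view(y, n_L)[::step]
    return windows.mean(axis=1), windows.var(axis=1, ddof=1)

y = np.arange(20.0)                       # made-up linear trend, 20 samples
means, variances = moving_window_stats(y, n_L=16)
```

For this purely linear series the local means drift from window to window while the local variances stay constant, which illustrates why a deterministic trend is removed before stationarity is assessed.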

3.2.2 Dynamic periodogram: evolving features of a time-series

\(I_{s}(\omega _{k},l)\) is defined as the local periodogram (see Eq. 6) of the time-series \(Y_t\) partitioned in each of the \(w_{t_{l}}, l: l=1,2...,L\) overlapping time windows of length \(n_L\), for all sampling locations, \(\{s: s=1,2,...,v\}\), across the Fourier frequencies, \(\omega _{k} = 2\pi k/n_{L}, k = 1,2,...,n_{L}\). Accordingly, \(\textbf{I}_{s}\) denotes the complete periodogram matrix at any arbitrary location, s, defined as:

where, for simplicity, the local periodogram \(I_{s}(\omega _{k},w_{t_{l}})\) is denoted by \(I_{s}(\omega _{k},l)\). Reordering the periodogram matrix in Eq. 8 for the periodograms at time window \(w_{t_{l}}\) across all sampling locations \(\{s: s=1,2,...,v\}\):

For second order stationary time-series, \(\textbf{I}_{w_{t_{l}}}\) defines the signature of the multiple time-series, \(\textbf{Y}_{t} = \{Y_{s_{1}}(t),Y_{s_{2}}(t),\ldots ,Y_{s_{v}}(t)\}^{t}\), in time window \(w_{t_{l}}\). If \(\textbf{Y}_{t}\) is composed entirely of second order stationary time-series, \(\textbf{I}_{l_{1}}\) would be time invariant. However, statistically evolving time-series would lead to a time varying periodogram matrix, \(\textbf{I}_{l_{1}}\).
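The construction of the local periodograms for several locations can be sketched as follows. The array layout (windows, then locations, then frequencies), the function names, the \(n_L^{-1/2}\) scaling and the random input are all assumptions made for illustration; only the \(n_L/2\) distinct nonzero frequencies are kept.

```python
import numpy as np

def local_periodograms(y, n_L):
    """Rows: windows l = 1..L (shifted by one sample); columns: frequencies k = 1..n_L//2."""
    y = np.asarray(y, dtype=float)
    w = np.lib.stride_tricks.sliding_window_view(y, n_L)
    w = w - w.mean(axis=1, keepdims=True)          # mean-adjust each window
    d = np.fft.fft(w, axis=1) / np.sqrt(n_L)
    return np.abs(d[:, 1:n_L // 2 + 1]) ** 2

def periodograms_by_window(Y, n_L):
    """Y has shape (v, n), one row per location. Returns an array of shape (L, v, n_L//2)."""
    per_location = np.stack([local_periodograms(y, n_L) for y in Y])  # (v, L, K)
    return per_location.transpose(1, 0, 2)         # reorder so that windows come first

rng = np.random.default_rng(0)
Y = rng.normal(size=(6, 40))                       # made-up data: 6 locations, 40 samples
I_w = periodograms_by_window(Y, n_L=16)
```

Here `I_w[l]` plays the role of the per-window matrix across locations, and `I_w[:, s]` that of the per-location matrix across windows.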

3.2.3 Dynamic feature space: PCA periodograms

Principal components analysis (PCA) was introduced by Karl Pearson and has since become a standard unsupervised statistical learning method (Hastie et al. 2009). A common use of PCA is for reducing the dimensionality of correlated primary features in a multivariable dataset. If a dataset consists of n (potentially correlated) features, the application of PCA leads to a smaller number \((m < n)\) of uncorrelated secondary features \(\{Z_1,Z_2,\ldots ,Z_m\}\) that comprise linear combinations of the primary (n) features.

It is well known that periodogram ordinates \(I(\omega _k)\) of second order stationary time-series are asymptotically uncorrelated at Fourier frequencies, \(\omega _k = 2 \pi k/n, k =0,1,2,...,n-1\) (Shumway et al. 2000). However, a non-stationary time-series has correlated periodograms. Here, PCA is used for two purposes:

- to reduce the dimension of periodogram vectors (i.e., spectral features in each time window, \(w_l\)) retaining most of the variation in the signal;

- to obtain uncorrelated secondary spectral features (see columns of Eq. 9, \(\textbf{I}_{w_{t_{l}}}\)) for multiple RLOSD time-series.

Mathematically, this is achieved by solving the following optimization problem using a linear combination of periodogram columns of \(\textbf{I}_{w_{t_{l}}}\):

Subsequently, the obtained uncorrelated secondary features are used to characterize the evolution of the state-of-the-system, using multivariate statistical learning methods, cluster analysis and classification.
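The PCA step can be sketched with a singular value decomposition of the centred feature matrix. This is a generic PCA illustration, not necessarily the exact optimisation used in the study; the function name `pca_scores`, the 90% variance cut-off and the random feature matrix are assumptions.

```python
import numpy as np

def pca_scores(X, var_to_keep=0.9):
    """Project rows of X onto the leading principal components.

    X has one row per time-series and one column per periodogram ordinate.
    Returns the uncorrelated scores Z and the fraction of variance each explains.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                                  # centre each feature
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    # Smallest number of components whose cumulative share reaches var_to_keep
    m = int(np.searchsorted(np.cumsum(explained), var_to_keep) + 1)
    m = min(m, s.size)
    return Xc @ Vt[:m].T, explained[:m]

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 8))                                 # made-up: 30 series, 8 ordinates
Z, explained = pca_scores(X)
```

The columns of `Z` are mutually uncorrelated by construction, which is the property needed for the secondary spectral features.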

3.2.4 Characterizing the system at regime change

The first stage in the stability monitoring process is to determine a time window within which the statistical features of the ground displacement signal undergo a structural change from a period of second order stationarity – known as the time of regime change (\(w_{t_{0}}\)). A variety of statistical methods can be used to detect the transition of the system from a second order stationary to a non-stationary regime (e.g., frequentist, Bayesian). However, owing to the relatively short time-series in this retrospective analysis, an alternative approach is adopted here. Defining:

as the local variance of a time-series in window, \(w_{t_{l}}\). Under second order stationarity, \(Var(w_{t_{l}})\) remains approximately the same in all windows. However, a substantial departure indicates a change in the statistical regime, which can be identified by plotting \(Var(w_{t_{l}})\) – for the average RLOSD time-series for each of the six locations on the dam – against time windows, \(w_{t_{l}}, l = 1,2,\ldots ,L\).

Inflection points on the \(Var(w_{t_{l}})\) plot are chosen as potential candidates for the time of regime change. From a pool of candidate inflection points, the most likely candidate for the time window of regime change (\(w_{t_{0}}\)) is determined using the principle of maximum inter-cluster variance (Das and Tordesillas 2019). To achieve this, the local periodogram in the window of regime change, \(\textbf{I}_{w_{t_{0}}}\), for all locations is estimated, as shown in Eq. 9, before using PCA to obtain a matrix of secondary spectral features, \(\textbf{Z}_{w_{t_{0}}}\), for each location \(s=1,2,\ldots ,v\). The feature matrix \(\textbf{Z}_{w_{t_{0}}}\) encapsulates the signature (i.e., slope stability) of the state-of-the-system (i.e., the dam) at \(w_{t_{0}}\), and is derived from the average RLOSD time-series of all pixels at each location. This feature matrix is then used to partition the (six) sampling locations of the dam into a finite number (k) of clusters, \(\mathbb {C} = \{C_1,C_2,\ldots ,C_k\}\). \(\mathbb {C}\) is assumed to be the baseline stable state-of-the-system immediately preceding a dynamic transition into an unstable deformation regime. In the final stage, the evolution of the system is measured using a statistic that sequentially compares all subsequent spectral features \(\textbf{Z}_{w_{t_{0}}}\) against the baseline, \(\mathbb {C}\).
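One way to quantify inter-cluster variation for a candidate partition is the fraction of the total sum of squares that lies between clusters. The sketch below assumes this centroid-based measure, which may differ from the measure actually used with the medoid clustering in the study; the function name `between_cluster_fraction`, the feature values and the labels are made up.

```python
import numpy as np

def between_cluster_fraction(Z, labels):
    """Fraction of total variation in Z explained by the cluster partition."""
    Z = np.asarray(Z, dtype=float)
    labels = np.asarray(labels)
    total = np.sum((Z - Z.mean(axis=0)) ** 2)
    within = sum(
        np.sum((Z[labels == c] - Z[labels == c].mean(axis=0)) ** 2)
        for c in np.unique(labels)
    )
    return 1.0 - within / total

# Made-up secondary features for four series forming two well-separated groups
Z = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]])
frac = between_cluster_fraction(Z, [0, 0, 1, 1])
```

Evaluating this fraction for each candidate window and each number of clusters gives the kind of comparison used to select \(w_{t_{0}}\).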

3.3 Classification and risk of failure

In this final phase we derive risk thresholds based on a sequential classification methodology against the baseline feature matrix \(\mathbb {C}\).

3.3.1 Classification

The following statistical law underpinning classification error was a key observation by Das and Tordesillas (2019). Consider a classification problem where at any given point in time, t, it is desired to classify a group of observations \(\{1,2,\ldots ,n\}\) based on features \(\{I_1, I_2,\ldots ,I_n\}\) into a finite number of classes, \(\mathbb {C} = \{C_1, C_2,\ldots ,C_k\}.\) Then,

which denotes the probability of classification of a randomly selected observation \(j =1,2,\ldots ,n\) into class \(C_{l}\) at time t. For simplicity, the superscript, t, is ignored henceforth.

3.3.2 Risk trajectory

The classifier allocates the \(j^{th}\) observation to class \(C_z; z \in \{1,2,\ldots ,k\}\) if the class posterior probability \(p_j\), given features \(I_j\), satisfies:

Defining \(M(p_j) = p_jq_j, j =1,2,\ldots ,n\) to be the theoretical classification variation and \(M(\hat{p}_j)\) as the corresponding maximum likelihood estimator, then Das and Tordesillas (2019) showed that expectation and variance of \(\hat{p}_j\) share a parabolic relationship:

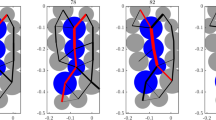

as shown in Fig. 2. The algorithm can then estimate two sequential slope instability risk warning thresholds – emergent risk (\(t_R\)) and imminent risk (\(t_I\)) – based on the progression of this trajectory (Fig. 2):

The first milestone, \(t_R\), is the time of an emergent instability risk, which corresponds to the theoretical maximum of the trajectory at \(p_jq_j=0.125\) (i.e., the point of inflection), indicating a time of monotonic progression towards failure. This is followed by \(t_I\), the time of imminent failure. The risk warning \(t_I\) is estimated as the time window in which the classification variance comes close to its theoretical maximum, that is, \(p_jq_j=0.25\) (i.e., the maximum point of the trajectory). Intuitively, \(t_R\) corresponds to the time point of highest spatial (pixel) volatility of mis-classification (misalignment with equilibrium classification) for the estimated features, whereas \(t_I\) corresponds to the time block of highest spatial average of mis-classification for the estimated features. On this basis, retrospective empirical estimates of \(t_R\) and \(t_I\) for the Brumadinho tailings dam collapse are generated.
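One way to see where the values 0.125 and 0.25 can arise is a first-order (delta method) approximation, assuming that \(\hat{p}_j\) behaves like a sample proportion from n independent trials and that \(q_j = 1 - p_j\). Under these assumptions, which are not taken from the source and may differ from the derivation of Das and Tordesillas (2019), \(\textsf {var}(M(\hat{p})) \approx (1-2p)^{2}pq/n = M(p)(1 - 4M(p))/n\). This is a parabola in \(M(p)\) that peaks at \(M(p) = 0.125\) and falls back to zero at the largest possible value, \(M(p) = 0.25\). A numerical check:

```python
import numpy as np

p = np.linspace(0.0, 0.5, 5001)        # class posterior probability
M = p * (1.0 - p)                      # classification variation M(p) = p q
V = M * (1.0 - 4.0 * M)                # n * var(M(p_hat)) under the delta-method assumption

M_at_peak_variance = M[np.argmax(V)]   # value of pq where its variability is largest
M_max = M.max()                        # largest attainable value of pq
```

On this reading, \(t_R\) marks the window in which the variability of pq is highest and \(t_I\) the window in which pq itself approaches its ceiling.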

Note that estimates of population parameters (e.g. p) based on n samples \(p_1, p_2,\ldots , p_n\) are formally denoted by \(\hat{p}\). Accordingly, functions of parameters such as M(p) are formally denoted by \(M(\hat{p})\). However, for brevity we drop hat notation from the estimates in the remainder of the article.

3.4 Algorithm implementation

Algorithm 1 summarises the methodology for generating warnings of an emergent risk of failure (\(t_R\)) and an imminent risk of failure (\(t_I\)). A primary difference of this new algorithm compared with Das and Tordesillas (2019) is in the features used in monitoring. While Das and Tordesillas (2019) focused on first order properties of time-series, here the framework is extended to include serially correlated and non-stationary time-series. The algorithm is implemented as a two-phase process using the statistical software R (R Core Team 2021). For visualization, the package ggplot2 (Wickham 2016) is used.

4 Results and discussion

4.1 Estimating the time of regime change

The first phase of the algorithm is the estimation of the time of regime change, \(w_{t_0}\), as described in Section 3.2.4. Figure 3a) and b) show the trend and moving window variance of the time-series of the mean RLOSD observed at each of the six locations, respectively. As previously outlined, the mean RLOSD was computed as the spatial mean of all the individual time-series extracted for the subsets of contiguous pixels at the six locations (see Fig. 1), as defined by Grebby et al. (2021). The time-series for locations 1, 2 and 3 cover the time period (sampling window) from 19 August 2017 until 17 January 2019 (extracted from track 155), while those for locations 4, 5 and 6 cover 24 August 2017 until 22 January 2019 (extracted from track 53). With the exception of location 1, all locations exhibit a monotonically decreasing (downward and/or westward) displacement trend (Fig. 3a). Furthermore, for locations 2–6, it is also apparent that both the mean displacement and the moving window variances vary over time, which indicates non-stationarity in second order statistical properties.

A key time-varying second order property is that of sample variance. An examination of Fig. 3b) shows that the Brumadinho tailings dam underwent a deformation phase transition. Prior to 2 July 2018, the trajectory of spatial variance decreased in magnitude at each location, while after this date, increases in variance can be seen. The trend-adjusted moving window variances shown in Fig. 3c) retain this property. This pattern is consistent across all six locations, albeit in varying magnitude.

As discussed in Section 3, the temporal invariance of the spectral density function (Eq. 5) is the unique signature of a second order stationary time-series, whereas the periodogram (Eq. 6) is the sample estimator of the spectrum. Thus, a common approach to assessing non-stationarity in a time-series is to estimate local periodograms in moving overlapping time windows and to then analyse the temporal variation of the local periodograms. Periodograms of the mean adjusted RLOSD in local windows of several different lengths \((n_L)\) were estimated. Each local window \(w_{t_{l}}, l = 1,2,\ldots ,L\) is obtained from the previous one by adding one time unit (12 days) and removing the first time point. The resulting local periodograms for each location for a local window length of 16 and overlaps of 15 time units are shown in Fig. 4, where the x-axis on the plots represents the Fourier frequencies, \(\omega _{k}\). For a time-series of length 16, it is only possible to obtain distinct estimates of spectra at 8 Fourier frequencies due to aliasing (Nason et al. 2017). Figure 4 shows clear temporal variation in the local periodogram curves for each location. The evolving nature of the time-series is evident in that the periodogram ordinates appear more similar in some time windows – in terms of both intercept and slope – than in other windows. It is the 8 periodogram ordinates that are used as features in the sequential slope stability monitoring algorithm.

The next key step in Phase I of the algorithm is to then utilise the periodograms to identify a time (window) of regime change – i.e., a phase transition of the system from a stationary to non-stationary paradigm. To do this, Das and Tordesillas (2019) suggested implementing the non-stationarity metric on second order time domain properties proposed by Das and Nason (2016) to assess deviation from stationarity. Here, however, a simpler approach that is applicable to spectral methods was adopted. It is well-known that the spectrum is an orthogonal partition of the variance of a time-series over Fourier frequencies (Shumway et al. 2000). Equivalently, the moving window spectra partition the local variance – within a particular time window – among the Fourier frequencies. When a time-series is second order stationary, the spectrum is its unique signature and does not vary over time. This is observed as invariance in periodogram plots, over local time windows. In other words, the local variance – obtained as the sum of local periodograms at Fourier frequencies – must be uniform across all time windows. However, non-stationary time-series would have time varying (or evolving) local variance as shown in Fig. 5a).
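The link between the local variance and the sum of periodogram ordinates follows from Parseval's identity for the DFT. The sketch below checks it numerically on a made-up window, assuming a periodogram scaled as \(|\sum _t y_t e^{-i\omega _k t}|^{2}/n\) and the variance with divisor n; other scalings change the identity only by a constant factor.

```python
import numpy as np

rng = np.random.default_rng(1)
y = rng.normal(size=16)                       # made-up window of length n_L = 16
n = y.size
D = np.fft.fft(y - y.mean())                  # DFT of the mean-adjusted window
I = np.abs(D) ** 2 / n                        # periodogram ordinates at k = 0..n-1
variance_from_spectrum = I[1:].sum() / n      # sum over the nonzero Fourier frequencies
variance_direct = y.var()                     # sample variance with divisor n (ddof=0)
```

The two quantities agree to floating-point precision. Because the ordinates at k and n - k are equal for real data, the same information is carried by the \(n_L/2\) distinct frequencies.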

For all locations (1-6), the spatial median local variance across all contiguous pixels over moving time blocks was estimated to identify the points of inflection on the local variance trajectory (Fig. 5b). Each point of inflection is considered to be a hypothetical point of regime change, \(w_{t_{0}}\).

Accordingly, as shown in Fig. 5b), three prospective time windows of regime change were identified, corresponding to windows 7 (2017-10-30 : 2018-04-28), 9 (2017-11-23 : 2018-05-22), and 16 (2018-02-15 : 2018-08-14). At each prospective \(w_{t_{0}}\), medoid clustering (Hu et al. 2021) of all pixels into a finite number of clusters, with periodograms as features, was performed, as described in Section 3.2.4. The time window that led to the highest proportion of explained inter-cluster variation (with a threshold of \(\approx 80\%\)) for the fewest number of cluster partitions was chosen as \(w_{t_{0}}\) (Das and Tordesillas 2019). The corresponding variations for the different numbers of cluster partitions are given in Table 1. With inter-cluster variation of 81% for 4 cluster partitions, \(w_{t_{0}} = 16\) (2018-02-15 : 2018-08-14) was selected as the optimal time of regime change.
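The selection rule can be illustrated with a small sketch. Several details here are assumptions rather than the method of Section 3.2.4: a simple alternating k-medoids with Euclidean distance is used in place of whichever medoid algorithm the authors applied, "explained inter-cluster variation" is taken to mean one minus the ratio of within-cluster to total sum of squares, and all function names are hypothetical.

```python
import numpy as np

def k_medoids(X, k, n_iter=100, seed=0):
    """Alternating k-medoids on the rows of X (Euclidean distance)."""
    rng = np.random.default_rng(seed)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    medoids = rng.choice(len(X), size=k, replace=False)
    for _ in range(n_iter):
        labels = D[:, medoids].argmin(axis=1)   # assign to nearest medoid
        new = medoids.copy()
        for j in range(k):
            members = np.where(labels == j)[0]
            if len(members):
                # New medoid: member with the smallest total distance to the rest.
                cost = D[np.ix_(members, members)].sum(axis=0)
                new[j] = members[cost.argmin()]
        if np.array_equal(new, medoids):
            break
        medoids = new
    labels = D[:, medoids].argmin(axis=1)
    return labels, medoids

def explained_variation(X, labels):
    """Assumed definition: 1 - within-cluster SS / total SS."""
    total = ((X - X.mean(axis=0)) ** 2).sum()
    within = sum(((X[labels == j] - X[labels == j].mean(axis=0)) ** 2).sum()
                 for j in np.unique(labels))
    return 1.0 - within / total

def fewest_clusters(X, ks=range(2, 7), threshold=0.80):
    """Smallest k whose explained variation reaches the threshold."""
    for k in ks:
        labels, _ = k_medoids(X, k)
        ev = explained_variation(X, labels)
        if ev >= threshold:
            return k, ev
    return None, None
```

Here `X` would hold one row per pixel, with the local periodogram ordinates at a prospective \(w_{t_{0}}\) as columns. Running `fewest_clusters` for each candidate window and comparing the results mirrors the comparison summarised in Table 1.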

4.2 Risk of failure warnings

Using local periodograms for a window length of 16 as features, all time-series were subsequently classified into the class labels of \(w_{t_{0}}\) at all subsequent points of time \(w_{t_{0+1}}, w_{t_{0 +2}}, \ldots \). Mis-classification (pq) is treated as an indication of the evolution of the state of the system. At each time window, the spatial median of the mis-classification and the corresponding interquartile range (IQR) for pq were calculated. The time-series of Median(pq) and IQR(pq) for \(w_{t_{0}}=16\) (2018-02-15 : 2018-08-14) are shown in Fig. 6. Based on the relationship between pq and its variability, the retrospective warnings for the time windows of emergent (\(t_R\)) and imminent (\(t_I\)) risk of failure for the Brumadinho tailings dam were 27 February 2018–26 August 2018 and 27 June 2018–24 December 2018, respectively. Consequently, the algorithm identifies a risk of imminent failure of the dam at least a month prior to the actual event. This is comparable with the 40 days of advance warning based on the inverse velocity method by Grebby et al. (2021). If the dam had been monitored using this approach in near real-time, this would have provided adequate warning for detailed site investigations or risk mitigation measures to have been implemented. Moreover, the warning of an emergent risk is even earlier, at least five months prior to the collapse, coinciding with an initial phase of accelerating deformation highlighted by Gama et al. (2020). This is considerably earlier than the earliest reliable indication of an emerging failure, 51 days prior, based on the inverse velocity method (Grebby et al. 2021). Although, with hindsight, this deformation could correspond to a period of secondary creep rather than tertiary creep, at the time such a warning would still have been useful in initiating crucial follow-up assessments of the stability of the dam.
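The lead times quoted above can be checked with simple date arithmetic. The sketch below assumes that a warning can only be issued once the last acquisition in a window is available, so the lead time is measured from the end date of each window to the collapse on 25 January 2019; the function name is hypothetical.

```python
from datetime import date

COLLAPSE = date(2019, 1, 25)  # date of the dam collapse, as stated in the text

def lead_time_days(window_end, event=COLLAPSE):
    """Days between the end of a warning window and the event."""
    return (event - window_end).days

emergent = lead_time_days(date(2018, 8, 26))    # end of the t_R window
imminent = lead_time_days(date(2018, 12, 24))   # end of the t_I window
print(emergent, imminent)  # 152 32
```

On this reading, the emergent warning precedes the collapse by 152 days (about five months) and the imminent warning by 32 days (about one month), consistent with the "at least" statements above.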

4.3 Effect of time windows on risk of failure warnings

4.3.1 Local periodograms

The discussion thus far has focused on the results associated with the estimation of local periodograms over time windows of length 16. In order to assess the effect of the length of the moving window on the regime change and risk estimation, several other window lengths were used in the computations. These included periodogram estimates for the same time-series over moving windows of length 24 and 32, with varying overlap lengths.

Comparing Figs. 7 and 8 with Fig. 4, it can be observed that all three window estimates show temporal variation (and clustering) of local periodogram curves. The primary differences are two-fold:

- Longer windows allow for a broader spectrum of variation. That is, when choosing local periodograms for time-series of length 32 it is possible to estimate (low frequency) periodic components at twice the length of that allowed by time window of length 16 (1 time unit = 12 days).

- Accordingly, the local window (numbers) of clustering vary.
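The first of these differences follows directly from the Fourier frequencies available in a window. As a rough illustration (the function name is hypothetical, and an even window length is assumed), the number of distinct frequencies and the longest and shortest resolvable periods for a 12-day sampling interval are:

```python
SAMPLING_DAYS = 12  # Sentinel-1 revisit interval used in this study

def window_resolution(n, dt_days=SAMPLING_DAYS):
    """Distinct Fourier frequencies and resolvable periods for even n."""
    n_freqs = n // 2               # frequencies k/n for k = 1, ..., n/2
    longest_period = n * dt_days   # lowest non-zero frequency, 1/n cycles per sample
    shortest_period = 2 * dt_days  # Nyquist frequency, 1/2 cycle per sample
    return n_freqs, longest_period, shortest_period

for n in (16, 24, 32):
    print(n, window_resolution(n))
```

For windows of length 16, 24 and 32 this gives longest resolvable periods of 192, 288 and 384 days, respectively, so doubling the window length from 16 to 32 doubles the longest period that can be estimated.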

Overall, the analysis reveals that, for all time window lengths, the maximum variance was estimated to coincide with the Fourier frequency that corresponds to the period of July–August 2018. This confirms that the window length over which the local periodograms are estimated has a negligible effect on the prospective time windows of regime change and, hence, on the subsequent timing of the risk of failure warnings. However, this may not always be true, and so a more objective window selection approach would be beneficial in generalising the algorithm for application to a broader range of cases.

The key to objectively optimising window selection is a methodology that can estimate periodograms at all frequencies that have significant variance components for a given slope failure process. In other words, such a method must allow estimation of the power inherent in the RLOSD time-series at the smallest frequency (or longest time period) typical of that type of slope failure, conditional on the sampling frequency. Examples of window width (or window kernel) estimation, equivalent to bandwidth selection, can be found within the statistical sciences and signal processing, including density estimation (Green and Silverman 1993; Bowman 1984), trend estimation (Fried 2004), and periodogram estimation (Ombao et al. 2001). As demonstrated by many of these examples, a rigorous and automatic approach can be developed using the resampling methodology known as cross-validation. One potential solution, based on mechanistic a priori knowledge of the prevalent Fourier spectra of the RLOSD time-series for a given type of slope failure, would be to use a cross-validation-based decision criterion to select the optimal window length by minimizing a loss function that ensures that the spectrum at the lowest relevant frequency can be estimated.

4.3.2 Time of regime change windows

As previously shown in Fig. 5b), three inflection points were identified and then subjected to cluster analysis (Table 1) to estimate the optimal time window of regime change (\(\hat{w}_{t_{0}}\)). As a result, \({w}_{t_{0}} = 16\) (2018-02-15 : 2018-08-14) was selected. However, to assess the effect of the time window of regime change on the risk of failure warnings, estimates of the risk thresholds, \(t_R\) and \(t_I\), were computed for the two alternative prospective time windows of \(w_{t_{0}} = 7\) (2017-10-30 : 2018-04-28) and 9 (2017-11-23 : 2018-05-22). Figures 9 and 10 show the time-series of the median and interquartile range (IQR) for the risk statistic (pq) and risk thresholds \(t_R\) and \(t_I\) for \(w_{t_{0}} =7\) and \(w_{t_{0}} =9\), respectively. As for \(w_{t_{0}} = 16\), it can be seen that both alternative candidates for the time of regime change also predict an imminent risk of failure (\(t_I\)) well in advance of the actual collapse of the dam. For \(w_{t_{0}} =7\), an imminent risk of failure is detected in the time window 11 March 2018–7 September 2018, while for \(w_{t_{0}} = 9\) this risk is detected during the period 16 April 2018–13 October 2018. These risk warnings precede that for the optimal window for the time of regime change (\({w}_{t_{0}} = 16\)) by 2–3 months and the actual collapse by several months. However, as also observed by Das and Tordesillas (2019), these earlier time windows of regime change (\(w_{t_{0}} = 7\) and \(w_{t_{0}} = 9\)) produce maximum values for the risk statistic (pq) that are less than that for both \(w_{t_{0}} = 16\) and the theoretical maximum of 0.25. Consequently, the risk warnings generated for the earlier alternative time windows are less accurate than those for the optimal time of regime change window of 16.
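The theoretical maximum of 0.25 can be recovered with a one-line calculation, assuming (as the stated maximum suggests, although the definition is not repeated in this section) that the risk statistic is the product \(pq = p(1-p)\), where \(p\) is the probability of mis-classification:

\[
\frac{d}{dp}\,p(1-p) = 1 - 2p = 0 \;\Rightarrow \; p = \tfrac{1}{2}, \qquad \max _{0\le p\le 1} p(1-p) = \tfrac{1}{2}\cdot \tfrac{1}{2} = 0.25.
\]

Under this assumption, the statistic peaks when a time-series is equally likely to be classified correctly or incorrectly, and a candidate \(w_{t_{0}}\) whose risk trajectory peaks below 0.25 never reaches this state of maximum uncertainty.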

Although in this case the cluster analysis was able to identify the optimal time of regime change window from three prospective candidates, a more robust approach could be more effective in addressing the issue of identifying a unique solution. The problem of detecting a time of regime change is similar in essence to that of detecting a structural change point in a time-series. With regard to change point detection, there are numerous potential approaches from both the frequentist and Bayesian perspectives that require future investigation (Killick et al. 2012; Cho and Fryzlewicz 2012, 2015; Ghassempour et al. 2014). Alternatively, detecting \(\hat{w}_{t_{0}}\) can also be considered analogous to detecting anomalies in streaming data, as in Hill et al. (2009) and Dereszynski and Dietterich (2011).

5 Conclusions

Conventional approaches to predicting slope failures are based on the inverse velocity method. However, predictions made using this method can be unreliable for risk management owing to its highly subjective nature and the fact that the deterministic relationships of this exponential law often materialise close to the occurrence of a failure, therefore offering limited prior warning of a potential catastrophe. In this study, an alternative risk identification approach is proposed, which incorporates theories from spectral analysis of time-series to extend a data-driven sequential monitoring algorithm developed by Das and Tordesillas (2019). This statistical learning algorithm is more objective and can be applied to any displacement (or velocity) time-series sampled at regular time intervals, while accounting for serial correlation.

The efficacy of this new algorithm has been demonstrated through application to satellite InSAR displacement monitoring data associated with the 2019 collapse of the Dam I tailings dam at Brumadinho. The algorithm analyses second order properties to show that the tailings dam transitioned into an unstable epoch around July 2018, several months before the actual collapse. This was evident in the moving window variance plots and further corroborated using the periodogram-based local variance plots, the latter of which account for serial correlation within each displacement time-series. Whereas most slope stability monitoring analyses only consider the trend or first order moments of the deformation signal, the results of this study show that investigating second order statistical properties is critical.

The optimum time of regime change window, \(w_{t_{0}}\) = 16 (2018-02-15 : 2018-08-14), was identified based on cluster analysis of dynamic spectral features and then used to retrospectively estimate risk of failure warnings for Dam I. The algorithm detected an emerging risk of failure during the period 27 February 2018–26 August 2018 and an imminent (maximum) risk of collapse of the tailings dam during 27 June 2018–24 December 2018. The latter warning comes at least several weeks prior to the actual dam failure on 25 January 2019, while the first sign of an emerging risk is evident at least five months prior to the failure. The amount of advance warning for the risk of imminent failure provided by the algorithm is comparable to that of previous analysis based on the inverse velocity method, although considerably longer in terms of reliably detecting signs of an emerging failure. This is ultimately due to the statistical nature of the algorithm making it more objective and robust than empirical approaches, which also means that it can be readily applied to other slope failures. Although the risk warnings generated here are based on retrospective analysis, application of the algorithm to near real-time monitoring data could have provided sufficient early warning to enable detailed site investigations or more urgent risk mitigation measures to have been implemented in order to avert a humanitarian and environmental catastrophe. Overall, the results of this study further attest to the role that satellite InSAR can have in integrated dam monitoring and early warning systems.

This study has demonstrated the efficacy of the algorithm on deformation time-series acquired at 12-day intervals by the Sentinel-1 satellite mission. Nevertheless, it is readily adaptable to any regularly sampled deformation time-series, as demonstrated in Das and Tordesillas (2019) with ground-based radar data acquired at a significantly higher frequency of 6-minute intervals. However, whilst a higher sampling frequency would likely allow faster detection of the time of regime change, it could also introduce more false-positive risk warnings. Although this can be addressed by determining the optimum frequency at which to sample the specific process being studied (Nason et al. 2017), the algorithm itself could be made more robust and extensible to analysing the risk associated with any potential slope failure scenario. This could primarily be achieved through the implementation of more objective or automated approaches for selecting the optimal time window length and time window of regime change. Additionally, the algorithm may be further augmented by considering both spatial and temporal auto-correlation in order to develop a spatio-temporal risk statistic. This would not only provide a temporal warning of a risk of failure, but also highlight the specific location of the instability.

Data Availability

Please contact authors for availability of data, if the manuscript is accepted for publication.

References

Bevilacqua A et al (2019) Probabilistic enhancement of the failure forecast method using a stochastic differential equation and application to volcanic eruption forecasts. Front Earth Sci 7. https://doi.org/10.3389/feart.2019.00135

Bowman AW (1984) An alternative method of cross-validation for the smoothing of density estimates. Biometrika 71(2):353–360

Carlà T et al (2017) Guidelines on the use of inverse velocity method as a tool for setting alarm thresholds and forecasting landslides and structure collapses. Landslides 14(2):517–534

Carlà T et al (2019) Perspectives on the prediction of catastrophic slope failures from satellite insar. Sci Rep 9(1):1–9

Carlà T, Farina P, Intrieri E, Botsialas K, Casagli N (2017) On the monitoring and early-warning of brittle slope failures in hard rock masses: examples from an open-pit mine. Eng Geol 228:71–81

Carlà T, Farina P, Intrieri E, Ketizmen H, Casagli N (2018) Integration of ground-based radar and satellite insar data for the analysis of an unexpected slope failure in an open-pit mine. Eng Geol 235:39–52

Casagli N, Catani F, Del Ventisette C, Luzi G (2010) Monitoring, prediction, and early warning using ground-based radar interferometry. Landslides 7(3):291–301

Cheloni D, Famiglietti NA, Tolomei C, Caputo R, Vicari A (2024) The 8 september 2023, \(m_w\) 6.8, Morocco earthquake: a deep transpressive faulting along the active high atlas mountain belt. Geophys Res Lett 51(2):e2023GL106992

Cho H, Fryzlewicz P (2012) Multiscale and multilevel technique for consistent segmentation of nonstationary time series. Stat Sin 207–229

Cho H, Fryzlewicz P (2015) Multiple-change-point detection for high dimensional time series via sparsified binary segmentation. J R Stat Soc: Ser B: Stat Methodol 475–507

Colesanti C, Wasowski J (2006) Investigating landslides with space-borne synthetic aperture radar (sar) interferometry. Eng Geol 88(3–4):173–199

Das S, Nason GP (2016) Measuring the degree of non-stationarity of a time series. Stat 5(1):295–305

Das S, Tordesillas A (2019) Near real-time characterization of spatio-temporal precursory evolution of a rockslide from radar data: integrating statistical and machine learning with dynamics of granular failure. Remote Sens 11(23). https://doi.org/10.3390/rs11232777

de Lima RE, de Lima Picanço J, da Silva AF, Acordes FA (2020) An anthropogenic flow type gravitational mass movement: the Córrego do Feijão tailings dam disaster, Brumadinho, Brazil. Landslides 17:2895–2906

Dereszynski EW, Dietterich TG (2011) Spatiotemporal models for data-anomaly detection in dynamic environmental monitoring campaigns. ACM Trans Sens Netw (TOSN) 8(1):1–36

Dick GJ, Eberhardt E, Cabrejo-Liévano AG, Stead D, Rose ND (2015) Development of an early-warning time-of-failure analysis methodology for open-pit mine slopes utilizing ground-based slope stability radar monitoring data. Can Geotech J 52(4):515–529

Du Z et al (2020) Risk assessment for tailings dams in brumadinho of brazil using insar time series approach. Sci Total Environ 717:137125

Fried R (2004) Robust filtering of time series with trends. J Nonparametric Stat 16(3–4):313–328

Fruneau B, Achache J, Delacourt C (1996) Observation and modelling of the saint-etienne-de-tinée landslide using sar interferometry. Tectonophysics 265(3–4):181–190

Fukuzono T (1985) A method to predict the time of slope failure caused by rainfall using the inverse number of velocity of surface displacement. Landslides 22(2):8–13

Gama FF, Mura JC, Paradella WR, de Oliveira CG (2020) Deformations prior to the brumadinho dam collapse revealed by sentinel-1 insar data using sbas and psi techniques. Remote Sens 12(21):3664

Gee D et al (2019) National geohazards mapping in Europe: Interferometric analysis of the Netherlands. Eng Geol 256:1–22

Ghassempour S, Girosi F, Maeder A (2014) Clustering multivariate time series using hidden markov models. Int J Environ Res Public Health 11(3):2741–2763

Grebby S et al (2021) Advanced analysis of satellite data reveals ground deformation precursors to the brumadinho tailings dam collapse. Commun Earth Environ 2(1):1–9

Green PJ, Silverman BW (1993) Nonparametric regression and generalized linear models: a roughness penalty approach (Crc Press)

Hastie T, Tibshirani R, Friedman JH (2009) The elements of statistical learning: data mining, inference, and prediction, vol 2. Springer

Hill DJ, Minsker BS, Amir E (2009) Real-time bayesian anomaly detection in streaming environmental data. Water Resour Res 45(4)

Hu J et al (2021) A novel landslide susceptibility mapping portrayed by oa-hd and k-medoids clustering algorithms. Bull Eng Geol Environ 80(2):765–779

Intrieri E et al (2018) The maoxian landslide as seen from space: detecting precursors of failure with sentinel-1 data. Landslides 15(1):123–133

Intrieri E, Gigli G (2016) Landslide forecasting and factors influencing predictability. Nat Hazards Earth Syst Sci 16(12):2501–2510

Intrieri E, Gigli G, Casagli N, Nadim F (2013) Brief communication "Landslide early warning system: toolbox and general concepts". Nat Hazards Earth Syst Sci 13(1):85–90

Killick R, Fearnhead P, Eckley IA (2012) Optimal detection of changepoints with a linear computational cost. J Am Stat Assoc 107(500):1590–1598

Massonnet D et al (1993) The displacement field of the landers earthquake mapped by radar interferometry. Nature 364(6433):138–142

Massonnet D, Feigl KL (1995) Discrimination of geophysical phenomena in satellite radar interferograms. Geophys Res Lett 22(12):1537–1540

Nason GP, Powell B, Elliott D, Smith PA (2017) Should we sample a time series more frequently?: decision support via multirate spectrum estimation. J R Stat Soc: Ser A (Stat Soc) 180(2):353–407

Niu Y, Zhou X-P (2021) Forecast of time-of-instability in rocks under complex stress conditions using spatial precursory ae response rate. Int J Rock Mech Min Sci 147:104908

Ombao HC, Raz JA, Strawderman RL, Von Sachs R (2001) A simple generalised crossvalidation method of span selection for periodogram smoothing. Biometrika 88(4):1186–1192

Peltzer G, Rosen P (1995) Surface displacement of the 17 May 1993 Eureka Valley, California, earthquake observed by sar interferometry. Science 268(5215):1333–1336

Pinel V, Poland MP, Hooper A (2014) Volcanology: lessons learned from synthetic aperture radar imagery. J Volcanol Geotherm Res 289:81–113

Porsani JL, Jesus FANd, Stangari MC (2019) Gpr survey on an iron mining area after the collapse of the tailings dam i at the córrego do feijão mine in Brumadinho-mg, Brazil. Remote Sens 11(7):860

R Core Team (2021) R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Raspini F et al (2022) Review of satellite radar interferometry for subsidence analysis. Earth-Sci Rev 235:104239

Robertson P, de Melo L, Williams D, Wilson G (2019) Report of the expert panel on the technical causes of the failure of feijão dam i. Commissioned by Vale

Rose ND, Hungr O (2007) Forecasting potential rock slope failure in open pit mines using the inverse-velocity method. Int J Rock Mech Min Sci 44(2):308–320

Rosen PA et al (2000) Synthetic aperture radar interferometry. Proc IEEE 88(3):333–382

Rotta LHS et al (2020) The 2019 brumadinho tailings dam collapse: possible cause and impacts of the worst human and environmental disaster in brazil. Int J Appl Earth Obs Geoinf 90:102119

Saito T (1969) Geomagnetic pulsations. Space Sci Rev 10(3):319–412

Schuster A (1906) II. On the periodicities of sunspots. Philos Trans R Soc Lond. Ser A, Contain Paper Math Phys Character 206(402–412):69–100

Shumway RH, Stoffer DS (2000) Time series analysis and its applications, vol 3. Springer

Song C et al (2022) Triggering and recovery of earthquake accelerated landslides in central italy revealed by satellite radar observations. Nat Commun 13:7278

Sowter A, Bateson L, Strange P, Ambrose K, Syafiudin MF (2013) Dinsar estimation of land motion using intermittent coherence with application to the south derbyshire and leicestershire coalfields. Remote Sens Lett 4(10):979–987

Tordesillas A, Zhou Z, Batterham R (2018) A data-driven complex systems approach to early prediction of landslides. Mech Res Commun 92:137–141

Voight B (1988) A method for prediction of volcanic eruptions. Nature 332(6160):125–130

Voight B (1989) A relation to describe rate-dependent material failure. Science 243(4888):200–203

Wang H, Qian G, Tordesillas A (2020) Modeling big spatio-temporal geo-hazards data for forecasting by error-correction cointegration and dimension-reduction. Spat Stat 36. https://doi.org/10.1016/j.spasta.2020.100432

Wasowski J, Bovenga F (2014) Investigating landslides and unstable slopes with satellite multi temporal interferometry: current issues and future perspectives. Eng Geol 174:103–138

Wickham H (2016) ggplot2: elegant graphics for data analysis. Springer-Verlag New York. https://ggplot2.tidyverse.org

Zhang J, Wang Z, Zhang G, Xue Y (2020) Probabilistic prediction of slope failure time. Eng Geol 271:105586

Zhou S, Bondell H, Tordesillas A, Rubinstein BI, Bailey J (2020) Early identification of an impending rockslide location via a spatially-aided gaussian mixture model. Ann Appl Stat 14(2):977–992

Zhu F, Zhang W, Puzrin AM (2024) The slip surface mechanism of delayed failure of the Brumadinho tailings dam in 2019. Commun Earth Environ 5:33

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Author information

Contributions

S.D.: Conceptualization, Formal Analysis, Methodology, Software, Supervision, Visualization, Writing - Original Draft. S.G.: Data curation, Supervision, Writing - Original Draft, Reviewing and Editing. A.P.: Conceptualization, Formal Analysis, Methodology, Software, Supervision, Visualization, Writing - Original Draft.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Das, S., Priyadarshana, A. & Grebby, S. Monitoring the risk of a tailings dam collapse through spectral analysis of satellite InSAR time-series data. Stoch Environ Res Risk Assess 38, 2911–2926 (2024). https://doi.org/10.1007/s00477-024-02713-3

DOI: https://doi.org/10.1007/s00477-024-02713-3