Abstract

Fatal shock, competing risks and stress models are used in reliability and survival analysis to describe a range of applications. In this context, this paper introduces a new class of bivariate distributions of the Marshall–Olkin type. The proposed distribution is termed the Bivariate Extended Chen (BEC) distribution, and its marginals follow the Extended Chen distribution. Some properties of this distribution are derived and explained. The BEC distribution parameters are estimated using the maximum likelihood method. Finally, two applications to real data sets highlight the significance and adaptability of the BEC distribution. In both applications the BEC distribution offers a better fit than the competing bivariate distributions considered, illustrating its flexibility and applicability in modelling.

1 Introduction

In survival and reliability analysis there are situations in which two lifetimes are observed for the same patient or device. Such observations are known as bivariate survival data. Many studies apply this setting to a wide range of important problems in engineering, sports and medicine. In such cases, it is important to have a variety of bivariate distributions available to model the data. The Marshall–Olkin (MO) type is one of the most widely used for modelling the failure of paired data, since it allows the lifetime of the first component to be smaller than, greater than, or equal to that of the second component. Various bivariate distributions have been obtained based on the Marshall–Olkin type. The bivariate exponential distribution of Marshall–Olkin type was introduced by Marshall and Olkin (1967). For a detailed survey of bivariate models using the Marshall–Olkin method, see Kotz et al. (2000). Recently, many articles have been devoted to bivariate distributions of Marshall–Olkin type. Bai et al. (2019) proposed a bivariate Weibull distribution. Bakouch et al. (2019) suggested a bivariate Kumaraswamy-Exponential distribution. Shoaee and Khorram (2020) introduced a bivariate Pareto distribution. Ortega (2010) provided some examples of bivariate distributions in survival analysis and reliability that illustrate the application of bivariate ageing models, including the Marshall–Olkin shock model.

The Extended Chen (EC) distribution was considered by Bhatti et al. (2021). The hazard rate function of the EC distribution can take various shapes (increasing, decreasing, bathtub, modified bathtub, decreasing–increasing and increasing–decreasing–increasing). Thus, the EC distribution is a flexible candidate for modelling survival, life testing and reliability data. Therefore, the purpose of this paper is to present a Bivariate Extended Chen (BEC) distribution whose marginals are EC distributions, based on the idea of Marshall and Olkin (1967). The major aim of the BEC distribution is to provide a flexible model that accommodates the various shapes of the hazard function for bivariate data and can be applied to bivariate data sets arising in various practical situations.

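If X is a random variable following the Extended Chen distribution, then the probability density function (pdf), cumulative distribution function (cdf), survival function and hazard rate function are, respectively,

$$f_{EC}\left( {x;\alpha ,\beta ,\lambda } \right) = \alpha \beta \lambda \,x^{\beta - 1} e^{{x^{\beta } }} \left[ {1 + \lambda \left( {e^{{x^{\beta } }} - 1} \right)} \right]^{ - \alpha - 1} , \tag{1}$$

$$F_{EC}\left( {x;\alpha ,\beta ,\lambda } \right) = 1 - \left[ {1 + \lambda \left( {e^{{x^{\beta } }} - 1} \right)} \right]^{ - \alpha } , \tag{2}$$

$$S_{EC}\left( {x;\alpha ,\beta ,\lambda } \right) = \left[ {1 + \lambda \left( {e^{{x^{\beta } }} - 1} \right)} \right]^{ - \alpha } , \tag{3}$$

$$h_{EC}\left( {x;\alpha ,\beta ,\lambda } \right) = \frac{{\alpha \beta \lambda \,x^{\beta - 1} e^{{x^{\beta } }} }}{{1 + \lambda \left( {e^{{x^{\beta } }} - 1} \right)}}, \tag{4}$$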

where \(x > 0\), the shape parameters \(\alpha , \beta > 0\) and the scale parameter \(\lambda > 0\).
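The four EC functions can be sketched in a few lines of Python. This is a minimal illustration that assumes the EC survival function \(\left[1+\lambda\left(e^{x^{\beta}}-1\right)\right]^{-\alpha}\) used later in the proof of Proposition 1. The function names (`ec_G`, `ec_pdf`, `ec_quantile`, and so on) are hypothetical and not part of the paper, and the quantile function is an addition obtained by inverting the cdf.

```python
import math

def ec_G(x, beta, lam):
    """Hypothetical helper: G(x) = 1 + lam * (exp(x**beta) - 1)."""
    return 1.0 + lam * math.expm1(x ** beta)

def ec_sf(x, alpha, beta, lam):
    """Survival function S(x) = G(x)**(-alpha)."""
    return ec_G(x, beta, lam) ** (-alpha)

def ec_cdf(x, alpha, beta, lam):
    """Cumulative distribution function F(x) = 1 - S(x)."""
    return 1.0 - ec_sf(x, alpha, beta, lam)

def ec_pdf(x, alpha, beta, lam):
    """Density f(x) = alpha * G'(x) * G(x)**(-alpha - 1)."""
    g_prime = beta * lam * x ** (beta - 1.0) * math.exp(x ** beta)
    return alpha * g_prime * ec_G(x, beta, lam) ** (-alpha - 1.0)

def ec_hazard(x, alpha, beta, lam):
    """Hazard rate h(x) = f(x) / S(x)."""
    return ec_pdf(x, alpha, beta, lam) / ec_sf(x, alpha, beta, lam)

def ec_quantile(p, alpha, beta, lam):
    """Inverse cdf for 0 <= p < 1 (an addition, not given in the paper)."""
    t = (1.0 - p) ** (-1.0 / alpha)          # value of G at the quantile
    return math.log1p((t - 1.0) / lam) ** (1.0 / beta)
```

For example, `ec_cdf(ec_quantile(0.3, 2.0, 1.5, 0.7), 2.0, 1.5, 0.7)` recovers 0.3 up to rounding, which is a quick way to check that the cdf and its inverse agree.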

The proposed Bivariate Extended Chen (BEC) distribution is constructed from three independent EC random variables using a minimization process. The Marshall–Olkin BEC model can handle the following models.

1.1 Competing risks model

Suppose a system has two components, labelled 1 and 2, and \({X}_{i}\) denotes the survival time of component i, where \(i=1,2\). The system can be affected by three independent sources of failure. Component 1 can fail only because of source 1 and component 2 can fail only because of source 2, while source 3 causes both components to fail at the same time. If \({U}_{1}, {U}_{2}\) and \({U}_{3}\) are the lifetimes associated with the three sources and follow the EC distribution, then \(({X}_{1}, {X}_{2})\) has the BEC distribution.

1.2 Shock model

Consider three independent sources of shocks, labelled 1, 2 and 3. These shocks affect a system that consists of two components, component 1 and component 2. Suppose shocks 1 and 2 reach the system and completely destroy components 1 and 2, respectively, while shock 3 reaches the system and completely destroys both components. Let \({U}_{i}\) denote the inter-arrival time of shock \(i\), which follows the EC distribution. If \({X}_{1}\) and \({X}_{2}\) are the survival times of the components, then \(({X}_{1}, {X}_{2})\) follows the BEC distribution.

1.3 Stress model

Assume a system has two components and each component undergoes an individual independent stress, say \({U}_{1}\) and \({U}_{2}\). There is a total stress \({U}_{3}\) which is transmitted to both components equally, regardless of their separate stresses. Therefore, \({X}_{1}=\max({U}_{1},{U}_{3})\) and \({X}_{2}=\max({U}_{2},{U}_{3})\) are the observed stresses at the two components.

1.4 Maintenance model

Consider a system with two components in which each component is maintained independently and there is also a total maintenance. Assume that the lifetime of component \(i\) is increased by an amount \({U}_{i}\), \(i=1,2\), due to component maintenance, and that the lifetime of each component is increased by an amount \({U}_{3}\) because of the total maintenance. Thus, \({X}_{1}=\max({U}_{1},{U}_{3})\) and \({X}_{2}=\max({U}_{2},{U}_{3})\) are the increased lifetimes of the two components.

The paper is structured as follows: Sect. 2 contains the formulation of the new bivariate model as well as the derivation of the joint probability density function, joint cumulative distribution function, joint survival function, and the conditional probability density function of the BEC distribution. Section 3 discusses reliability measures including the bivariate hazard rate function and stress-strength reliability. The unknown parameters are estimated by the maximum likelihood method in Sect. 4. In Sect. 5, two applications of the proposed distribution to real data sets are presented along with comparisons to existing bivariate distributions. Section 6 concludes the paper.

2 Bivariate extended Chen distribution

The formulation of the Bivariate Extended Chen (BEC) distribution is introduced in this Section. The joint survival function of the new model is derived, the joint cumulative distribution function and the conditional probability density functions are obtained.

2.1 The joint survival function

Assume \({U}_{i}\sim EC\left({\alpha }_{i}, \beta ,\lambda \right)\), where \(i=1,2,3\) and \({U}_{1}, {U}_{2}\) and \({U}_{3}\) are independent random variables. Define the random lifetime of component 1 as \({X}_{1}=\min ({U}_{1},{U}_{3})\) and the random lifetime of component 2 as \({X}_{2}=\min ({U}_{2},{U}_{3})\). The vector \(({X}_{1}, {X}_{2})\) has the BEC distribution with parameters \(({\alpha }_{1}, {\alpha }_{2}, {\alpha }_{3}, \beta , \lambda )\). The following theorem gives the joint survival function of the random variables \({X}_{1}\) and \({X}_{2}\).

Theorem 1.

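If \(({X}_{1}, {X}_{2})\sim BEC({\alpha }_{1}, {\alpha }_{2}, {\alpha }_{3}, \beta , \lambda )\), then the joint survival function of \({X}_{1}\) and \({X}_{2}\) is expressed as

$$S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = \left[ {1 + \lambda \left( {e^{{x_{1}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{1} }} \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{2} }} \left[ {1 + \lambda \left( {e^{{z^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{3} }} , \tag{5}$$

where \(z = \max \left( {x_{1} , x_{2} } \right)\).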

Proof

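By definition, the joint survival function of \(X_{1}\) and \(X_{2}\) is

$$S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = P\left( {X_{1} > x_{1} , X_{2} > x_{2} } \right) = P\left( {\min \left( {U_{1} ,U_{3} } \right) > x_{1} , \min \left( {U_{2} ,U_{3} } \right) > x_{2} } \right).$$

Thus,

$$S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = P\left( {U_{1} > x_{1} , U_{2} > x_{2} , U_{3} > z} \right),$$

where \(z = \max \left( {x_{1} , x_{2} } \right)\).

As the random variables \(U_{1}, U_{2}\) and \(U_{3}\) are independent, we may directly derive

$$S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = S_{EC} \left( {x_{1} ;\alpha_{1} ,\beta ,\lambda } \right)S_{EC} \left( {x_{2} ;\alpha_{2} ,\beta ,\lambda } \right)S_{EC} \left( {z;\alpha_{3} ,\beta ,\lambda } \right).$$

If \(x_{2} > x_{1}\), then \(z = \max \left( {x_{1} , x_{2} } \right) = x_{2}\) and we get

$$S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = \left[ {1 + \lambda \left( {e^{{x_{1}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{1} }} \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{2} + \alpha_{3} } \right)}} .$$

Similarly, if \(x_{1} > x_{2}\), then \(z = \max \left( {x_{1} , x_{2} } \right) = x_{1}\). Thus,

$$S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = \left[ {1 + \lambda \left( {e^{{x_{1}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{1} + \alpha_{3} } \right)}} \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{2} }} .$$

Finally, in the case \(x_{1} = x_{2} = x\), we have \(z = \max \left( {x_{1} , x_{2} } \right) = x\). Thus,

$$S_{{X_{1} , X_{2} }} \left( {x, x} \right) = \left[ {1 + \lambda \left( {e^{{x^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{1} + \alpha_{2} + \alpha_{3} } \right)}} .$$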
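The construction \(X_{1}=\min(U_{1},U_{3})\), \(X_{2}=\min(U_{2},U_{3})\) can be checked by simulation. The sketch below is illustrative only: it assumes the EC survival function \(\left[1+\lambda\left(e^{x^{\beta}}-1\right)\right]^{-\alpha}\), draws each \(U_{i}\) by inverting that survival function, and compares the product form of the joint survival function with an empirical estimate. All function names and the parameter values are hypothetical.

```python
import math
import random

def ec_sf(x, alpha, beta, lam):
    """EC survival function [1 + lam * (exp(x**beta) - 1)]**(-alpha)."""
    return (1.0 + lam * math.expm1(x ** beta)) ** (-alpha)

def ec_sample(rng, alpha, beta, lam):
    """Draw one EC variate by solving S(x) = u for u uniform on (0, 1]."""
    u = 1.0 - rng.random()
    t = u ** (-1.0 / alpha)
    return math.log1p((t - 1.0) / lam) ** (1.0 / beta)

def bec_sample(rng, a1, a2, a3, beta, lam):
    """One pair (X1, X2) = (min(U1, U3), min(U2, U3))."""
    u1 = ec_sample(rng, a1, beta, lam)
    u2 = ec_sample(rng, a2, beta, lam)
    u3 = ec_sample(rng, a3, beta, lam)
    return min(u1, u3), min(u2, u3)

def bec_joint_sf(x1, x2, a1, a2, a3, beta, lam):
    """Product form of the joint survival function with z = max(x1, x2)."""
    z = max(x1, x2)
    return (ec_sf(x1, a1, beta, lam) * ec_sf(x2, a2, beta, lam)
            * ec_sf(z, a3, beta, lam))

def empirical_joint_sf(pairs, x1, x2):
    """Fraction of simulated pairs with X1 > x1 and X2 > x2."""
    return sum(1 for p, q in pairs if p > x1 and q > x2) / len(pairs)

if __name__ == "__main__":
    rng = random.Random(2023)
    params = (0.8, 1.2, 0.5, 1.5, 0.7)   # hypothetical a1, a2, a3, beta, lam
    pairs = [bec_sample(rng, *params) for _ in range(20000)]
    print(bec_joint_sf(0.5, 0.8, *params), empirical_joint_sf(pairs, 0.5, 0.8))
```

Because both components share the same \(U_{3}\), a positive fraction of simulated pairs has \(X_{1}=X_{2}\) exactly, which is the singular part of the distribution discussed in Sect. 2.3.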

Proposition 1

If \(\left( {X_{1} , X_{2} } \right) \sim BEC\left( {\alpha_{1} , \alpha_{2} , \alpha_{3} , \beta , \lambda } \right)\), then:

(i) The marginal distributions of \(X_{1}\) and \(X_{2}\) are \(EC\left( {\alpha_{1} + \alpha_{3} , \beta , \lambda } \right)\) and \(EC\left( { \alpha_{2} + \alpha_{3} , \beta , \lambda } \right)\), respectively.

(ii) \(\min (X_{1} ,X_{2} ) \sim EC\left( {\alpha_{1} + \alpha_{2} + \alpha_{3} , \beta , \lambda } \right)\).

Proof

(i) If \(x_{2} > x_{1}\), then \(z = \max \left( {x_{1} , x_{2} } \right) = x_{2}\). Thus, from Eq. (5) we get

$$\begin{aligned} & \mathop {\lim }\limits_{{x_{1} \to 0}} S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{2} }} \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{3} }} \\ & \quad \quad = \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{2} + \alpha_{3} } \right)}} = S_{EC} \left( {x_{2} ;\alpha_{2} + \alpha_{3} , \beta ,\lambda } \right) \\ \end{aligned}$$

Analogously, if \(x_{1} > x_{2}\), then \(z = \max \left( {x_{1} , x_{2} } \right) = x_{1}\). Thus,

$$\mathop {\lim }\limits_{{x_{2} \to 0}} S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = \left[ {1 + \lambda \left( {e^{{x_{1}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{1} + \alpha_{3} } \right)}} = S_{EC} \left( {x_{1} ;\alpha_{1} + \alpha_{3} , \beta ,\lambda } \right)$$

(ii) Since \(U_{1}, U_{2}\) and \(U_{3}\) are independent, we have

$$\begin{aligned} & P(min(X_{1} ,X_{2} ) > x) = P(X_{1} > x,X_{2} > x) = P(U_{1} > x,U_{2} > x,U_{3} > x) \\ & \quad \quad = P\left( {U_{1} > x} \right){ }P\left( {U_{2} > x} \right){ }P(U_{3} > x) \\ & \quad \quad = \left[ {1 + \lambda \left( {e^{{x^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{1} + \alpha_{2} + \alpha_{3} } \right)}} { } \\ \end{aligned}$$

Thus, result (ii) holds.
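Both parts of Proposition 1 can be verified numerically from the product form of the joint survival function. The following sketch is an illustration under the same assumed EC survival function. The function `proposition_1_gaps` and the grid values are hypothetical, and the check only confirms algebraic consistency rather than replacing the proof.

```python
import math

def ec_sf(x, alpha, beta, lam):
    """EC survival function [1 + lam * (exp(x**beta) - 1)]**(-alpha)."""
    return (1.0 + lam * math.expm1(x ** beta)) ** (-alpha)

def bec_joint_sf(x1, x2, a1, a2, a3, beta, lam):
    """Joint survival function of (X1, X2) with z = max(x1, x2)."""
    z = max(x1, x2)
    return (ec_sf(x1, a1, beta, lam) * ec_sf(x2, a2, beta, lam)
            * ec_sf(z, a3, beta, lam))

def proposition_1_gaps(a1, a2, a3, beta, lam, grid):
    """Largest absolute gaps between the joint form and the claimed EC laws."""
    gap_x1 = max(abs(bec_joint_sf(x, 0.0, a1, a2, a3, beta, lam)
                     - ec_sf(x, a1 + a3, beta, lam)) for x in grid)
    gap_x2 = max(abs(bec_joint_sf(0.0, x, a1, a2, a3, beta, lam)
                     - ec_sf(x, a2 + a3, beta, lam)) for x in grid)
    gap_min = max(abs(bec_joint_sf(x, x, a1, a2, a3, beta, lam)
                      - ec_sf(x, a1 + a2 + a3, beta, lam)) for x in grid)
    return gap_x1, gap_x2, gap_min

if __name__ == "__main__":
    grid = [0.1 * k for k in range(1, 21)]
    print(proposition_1_gaps(0.8, 1.2, 0.5, 1.5, 0.7, grid))
```

Setting one argument to zero plays the role of the limit in part (i), and evaluating on the diagonal gives the survival function of \(\min(X_{1},X_{2})\) in part (ii).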

2.2 The joint cumulative distribution function

The following Theorem provides the joint cdf of the new Bivariate Extended Chen distribution.

Theorem 2

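If \(\left({X}_{1}, {X}_{2}\right) \sim BEC\left({\alpha }_{1}, {\alpha }_{2}, {\alpha }_{3},\beta ,\lambda \right),\) then the joint cumulative distribution function of \({X}_{1}\) and \({X}_{2}\) has the following form

$$\begin{aligned} F_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) & = 1 - \left[ {1 + \lambda \left( {e^{{x_{1}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{1} + \alpha_{3} } \right)}} - \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{2} + \alpha_{3} } \right)}} \\ & \quad + \left[ {1 + \lambda \left( {e^{{x_{1}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{1} }} \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{2} }} \left[ {1 + \lambda \left( {e^{{z^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{3} }} , \\ \end{aligned} \tag{6}$$

where \(z = \max \left( {x_{1} , x_{2} } \right)\).

Proof

For any bivariate distribution, the joint cdf and the joint survival function are related by

$$F_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = 1 - S_{{X_{1} }} \left( {x_{1} } \right) - S_{{X_{2} }} \left( {x_{2} } \right) + S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right).$$

In the case \(x_{2} > x_{1}\), from Theorem 1 and Proposition 1 we have

$$\begin{aligned} F_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) & = 1 - \left[ {1 + \lambda \left( {e^{{x_{1}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{1} + \alpha_{3} } \right)}} - \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{2} + \alpha_{3} } \right)}} \\ & \quad + \left[ {1 + \lambda \left( {e^{{x_{1}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{1} }} \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{2} + \alpha_{3} } \right)}} . \\ \end{aligned}$$

The case \(x_{1} > x_{2}\) follows analogously, and the case \(x_{1} = x_{2} = x\) is immediate.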
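The step from the survival function to the cdf uses the general inclusion-exclusion identity \(F(x_{1},x_{2}) = 1 - S_{X_{1}}(x_{1}) - S_{X_{2}}(x_{2}) + S(x_{1},x_{2})\). A minimal sketch, assuming the marginal laws of Proposition 1 and the product form of the joint survival function, is given here. The function names are hypothetical.

```python
import math

def ec_sf(x, alpha, beta, lam):
    """EC survival function [1 + lam * (exp(x**beta) - 1)]**(-alpha)."""
    return (1.0 + lam * math.expm1(x ** beta)) ** (-alpha)

def bec_joint_sf(x1, x2, a1, a2, a3, beta, lam):
    """Joint survival function with z = max(x1, x2)."""
    z = max(x1, x2)
    return (ec_sf(x1, a1, beta, lam) * ec_sf(x2, a2, beta, lam)
            * ec_sf(z, a3, beta, lam))

def bec_joint_cdf(x1, x2, a1, a2, a3, beta, lam):
    """Joint cdf by inclusion-exclusion on the survival functions."""
    s1 = ec_sf(x1, a1 + a3, beta, lam)   # marginal survival of X1
    s2 = ec_sf(x2, a2 + a3, beta, lam)   # marginal survival of X2
    return 1.0 - s1 - s2 + bec_joint_sf(x1, x2, a1, a2, a3, beta, lam)

if __name__ == "__main__":
    params = (0.8, 1.2, 0.5, 1.5, 0.7)   # hypothetical a1, a2, a3, beta, lam
    print(bec_joint_cdf(0.5, 0.8, *params))
```

Two sanity checks follow directly from the formula: the cdf vanishes when either argument is zero, and letting one argument grow large returns the marginal cdf of the other component.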

2.3 The joint probability density function

The joint pdf of the new Bivariate Extended Chen distribution is given by the following theorem.

Theorem 3

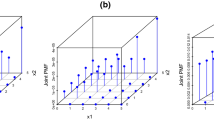

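If \(\left( {X_{1} , X_{2} } \right) \sim BEC\left( {\alpha_{1} , \alpha_{2} , \alpha_{3} , \beta , \lambda } \right)\), then the joint probability density function of \(X_{1}\) and \(X_{2}\) is expressed as

$$f_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = \begin{cases} f_{1} \left( {x_{1} ,x_{2} } \right), & x_{1} < x_{2} \\ f_{2} \left( {x_{1} ,x_{2} } \right), & x_{1} > x_{2} \\ f_{3} \left( x \right), & x_{1} = x_{2} = x \end{cases} \tag{7}$$

where

$$\begin{aligned} f_{1} \left( {x_{1} ,x_{2} } \right) & = f_{EC} \left( {x_{1} ;\alpha_{1} ,\beta ,\lambda } \right)f_{EC} \left( {x_{2} ;\alpha_{2} + \alpha_{3} ,\beta ,\lambda } \right), \\ f_{2} \left( {x_{1} ,x_{2} } \right) & = f_{EC} \left( {x_{1} ;\alpha_{1} + \alpha_{3} ,\beta ,\lambda } \right)f_{EC} \left( {x_{2} ;\alpha_{2} ,\beta ,\lambda } \right), \\ f_{3} \left( x \right) & = \frac{{\alpha_{3} }}{{\alpha_{1} + \alpha_{2} + \alpha_{3} }}f_{EC} \left( {x;\alpha_{1} + \alpha_{2} + \alpha_{3} ,\beta ,\lambda } \right). \\ \end{aligned}$$

Proof

Taking the mixed second derivative \(\frac{{\partial^{2} F_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right)}}{{\partial x_{1} \partial x_{2} }}\) gives \(f_{1} \left( {x_{1} ,x_{2} } \right)\) and \(f_{2} \left( {x_{1} ,x_{2} } \right)\) for \(x_{2} > x_{1}\) and \(x_{1} > x_{2}\), respectively. Then, \(f_{3} \left( x \right)\) is obtained from the fact that

$$\int_{0}^{\infty } \int_{0}^{{x_{2} }} f_{1} \left( {x_{1} ,x_{2} } \right)dx_{1} dx_{2} + \int_{0}^{\infty } \int_{0}^{{x_{1} }} f_{2} \left( {x_{1} ,x_{2} } \right)dx_{2} dx_{1} + \int_{0}^{\infty } f_{3} \left( x \right)dx = 1. \tag{8}$$

Let \(I_{1} = \int_{0}^{\infty } \int_{0}^{{x_{2} }} f_{1} \left( {x_{1} ,x_{2} } \right)dx_{1} dx_{2}\) and \(I_{2} = \int_{0}^{\infty } \int_{0}^{{x_{1} }} f_{2} \left( {x_{1} ,x_{2} } \right)dx_{2} dx_{1}\). Then,

$$I_{1} = \int_{0}^{\infty } f_{EC} \left( {x_{2} ;\alpha_{2} + \alpha_{3} ,\beta ,\lambda } \right)\left\{ {1 - \left[ {1 + \lambda \left( {e^{{x_{2}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{1} }} } \right\}dx_{2} = 1 - \frac{{\alpha_{2} + \alpha_{3} }}{{\alpha_{1} + \alpha_{2} + \alpha_{3} }} = \frac{{\alpha_{1} }}{{\alpha_{1} + \alpha_{2} + \alpha_{3} }}. \tag{9}$$

Similarly,

$$I_{2} = \frac{{\alpha_{2} }}{{\alpha_{1} + \alpha_{2} + \alpha_{3} }}. \tag{10}$$

Equations (9) and (10) are substituted into Eq. (8) to produce

$$\int_{0}^{\infty } f_{3} \left( x \right)dx = 1 - \frac{{\alpha_{1} + \alpha_{2} }}{{\alpha_{1} + \alpha_{2} + \alpha_{3} }}.$$

As a result, we obtain \(\int_{0}^{\infty } f_{3} \left( x \right)dx = \frac{{\alpha_{3} }}{{\alpha_{1} + \alpha_{2} + \alpha_{3} }}\).

Therefore, \(f_{3} \left( x \right) = \frac{{\alpha_{3} }}{{\alpha_{1} + \alpha_{2} + \alpha_{3} }}f_{EC} \left( {x;\alpha_{1} + \alpha_{2} + \alpha_{3} ,\beta ,\lambda } \right)\), which completes the proof.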
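The probability masses of the three regions can be checked by numerical integration. The sketch below is a rough illustration under two assumptions that should be read as such: the absolutely continuous parts factor as products of EC densities (which is what differentiating the product-form survival function gives), and the inner integrals are evaluated in closed form so that only one-dimensional midpoint sums remain. The names `midpoint` and `region_masses`, the truncation point and the parameter values are hypothetical.

```python
import math

def ec_G(x, beta, lam):
    """G(x) = 1 + lam * (exp(x**beta) - 1)."""
    return 1.0 + lam * math.expm1(x ** beta)

def ec_pdf(x, alpha, beta, lam):
    """EC density alpha * G'(x) * G(x)**(-alpha - 1)."""
    g_prime = beta * lam * x ** (beta - 1.0) * math.exp(x ** beta)
    return alpha * g_prime * ec_G(x, beta, lam) ** (-alpha - 1.0)

def ec_cdf(x, alpha, beta, lam):
    """EC cdf 1 - G(x)**(-alpha)."""
    return 1.0 - ec_G(x, beta, lam) ** (-alpha)

def midpoint(fn, lo, hi, n=20000):
    """Composite midpoint rule on [lo, hi] with n cells."""
    h = (hi - lo) / n
    return h * sum(fn(lo + (k + 0.5) * h) for k in range(n))

def region_masses(a1, a2, a3, beta, lam, upper=10.0):
    """Approximate P(X1 < X2), P(X1 > X2) and P(X1 = X2)."""
    total = a1 + a2 + a3
    # Inner integral of f1 over 0 < x1 < x2 equals F_EC(x2; a1) * f_EC(x2; a2 + a3).
    i1 = midpoint(lambda x: ec_pdf(x, a2 + a3, beta, lam) * ec_cdf(x, a1, beta, lam),
                  0.0, upper)
    # Inner integral of f2 over 0 < x2 < x1 equals F_EC(x1; a2) * f_EC(x1; a1 + a3).
    i2 = midpoint(lambda x: ec_pdf(x, a1 + a3, beta, lam) * ec_cdf(x, a2, beta, lam),
                  0.0, upper)
    # Singular part concentrated on the diagonal x1 = x2.
    i3 = midpoint(lambda x: a3 / total * ec_pdf(x, total, beta, lam), 0.0, upper)
    return i1, i2, i3

if __name__ == "__main__":
    print(region_masses(0.8, 1.2, 0.5, 1.2, 0.7))
```

With these hypothetical parameters the three values should be close to \(\alpha_{1}/(\alpha_{1}+\alpha_{2}+\alpha_{3})\), \(\alpha_{2}/(\alpha_{1}+\alpha_{2}+\alpha_{3})\) and \(\alpha_{3}/(\alpha_{1}+\alpha_{2}+\alpha_{3})\), and they should sum to approximately one.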

2.4 Conditional probability density functions

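If \(\left( {X_{1} , X_{2} } \right) \sim BEC\left( {\alpha_{1} , \alpha_{2} , \alpha_{3} , \beta , \lambda } \right)\), then by Proposition 1 the marginal pdfs of \(X_{1}\) and \(X_{2}\) are expressed by

$$f_{{X_{i} }} \left( {x_{i} } \right) = \left( {\alpha_{i} + \alpha_{3} } \right)\beta \lambda \,x_{i}^{\beta - 1} e^{{x_{i}^{\beta } }} \left[ {1 + \lambda \left( {e^{{x_{i}^{\beta } }} - 1} \right)} \right]^{{ - \left( {\alpha_{i} + \alpha_{3} } \right) - 1}} ,\quad i = 1,2. \tag{11}$$

In Theorem 4, the conditional probability density function for the BEC distribution is derived.

Theorem 4

If \(\left( {X_{1} , X_{2} } \right) \sim BEC\left( {\alpha_{1} , \alpha_{2} , \alpha_{3} , \beta , \lambda } \right)\), the conditional probability density functions of \(X_{i}\), given \(X_{j} = x_{j}\), \(\left( {i ,j = 1 , 2} \right)\), \(\left( {i \ne j } \right)\), can be stated as follows:

$$f_{{X_{i} |X_{j} }} \left( {x_{i} |x_{j} } \right) = \begin{cases} f_{{X_{i} |X_{j} }}^{\left( 1 \right)} \left( {x_{i} |x_{j} } \right), & x_{i} < x_{j} \\ f_{{X_{i} |X_{j} }}^{\left( 2 \right)} \left( {x_{i} |x_{j} } \right), & x_{i} > x_{j} \\ f_{{X_{i} |X_{j} }}^{\left( 3 \right)} \left( {x_{i} |x_{j} } \right), & x_{i} = x_{j} \end{cases}$$

where

$$\begin{aligned} f_{{X_{i} |X_{j} }}^{\left( 1 \right)} \left( {x_{i} |x_{j} } \right) & = f_{EC} \left( {x_{i} ;\alpha_{i} ,\beta ,\lambda } \right), \\ f_{{X_{i} |X_{j} }}^{\left( 2 \right)} \left( {x_{i} |x_{j} } \right) & = \frac{{\alpha_{j} }}{{\alpha_{j} + \alpha_{3} }}\left[ {1 + \lambda \left( {e^{{x_{j}^{\beta } }} - 1} \right)} \right]^{{\alpha_{3} }} f_{EC} \left( {x_{i} ;\alpha_{i} + \alpha_{3} ,\beta ,\lambda } \right), \\ f_{{X_{i} |X_{j} }}^{\left( 3 \right)} \left( {x_{i} |x_{j} } \right) & = \frac{{\alpha_{3} }}{{\alpha_{j} + \alpha_{3} }}\left[ {1 + \lambda \left( {e^{{x_{j}^{\beta } }} - 1} \right)} \right]^{{ - \alpha_{i} }} . \\ \end{aligned}$$

Proof

The result follows by substituting the joint pdf in Eq. (7) and the marginal probability density function of \(X_{j}\), \(\left( {j = 1 , 2 } \right)\), presented in Eq. (11) into the following expression:

$$f_{{X_{i} |X_{j} }} \left( {x_{i} |x_{j} } \right) = \frac{{f_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right)}}{{f_{{X_{j} }} \left( {x_{j} } \right)}}.$$

The proof of Theorem 4 is complete.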
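Because the BEC distribution has a singular part on the diagonal, the conditional law of \(X_{1}\) given \(X_{2}=x_{2}\) splits into three pieces: values below \(x_{2}\), values above \(x_{2}\), and an atom at \(x_{1}=x_{2}\). The sketch below is a hedged illustration derived from the Marshall–Olkin construction under the assumed EC survival function, and it is not taken from the theorem statement. It computes the conditional probability of each piece in closed form, and the function name is hypothetical.

```python
import math

def ec_G(x, beta, lam):
    """G(x) = 1 + lam * (exp(x**beta) - 1)."""
    return 1.0 + lam * math.expm1(x ** beta)

def conditional_masses_x1_given_x2(x2, a1, a2, a3, beta, lam):
    """Return P(X1 < x2 | X2 = x2), P(X1 > x2 | X2 = x2), P(X1 = x2 | X2 = x2)."""
    g = ec_G(x2, beta, lam)
    below = 1.0 - g ** (-a1)                 # integral of f1 / f_X2 over x1 < x2
    above = a2 / (a2 + a3) * g ** (-a1)      # integral of f2 / f_X2 over x1 > x2
    tie = a3 / (a2 + a3) * g ** (-a1)        # f3(x2) / f_X2(x2), the atom
    return below, above, tie

if __name__ == "__main__":
    print(conditional_masses_x1_given_x2(0.8, 0.8, 1.2, 0.5, 1.5, 0.7))
```

The three probabilities add up to one for every \(x_{2}\), which is a convenient check that the continuous and singular parts of the conditional distribution have been combined consistently.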

3 Reliability measures

Reliability theory is concerned with the application of probability theory to the modelling of failures and the prediction of success probability. This section highlights some of the reliability measures.

3.1 Stress-strength reliability measure

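The stress-strength reliability measure describes the life of a component that has a random strength \({X}_{2}\) and is subject to a random stress \({X}_{1}\). The component functions as long as the stress \({X}_{1}\) is less than the strength \({X}_{2}\) and fails once the stress exceeds the strength. The stress-strength reliability measure is defined as

$$R = P\left( {X_{1} < X_{2} } \right).$$

If \(({X}_{1},{X}_{2})\) has a BEC distribution, then the stress-strength reliability measure R is calculated as follows,

$$R = \int_{0}^{\infty } \int_{0}^{{x_{2} }} f_{1} \left( {x_{1} ,x_{2} } \right)dx_{1} dx_{2} = \frac{{\alpha_{1} }}{{\alpha_{1} + \alpha_{2} + \alpha_{3} }}.$$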
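Under the Marshall–Olkin construction, \(X_{1} < X_{2}\) happens exactly when \(U_{1}\) is the smallest of the three latent variables, which suggests \(R = \alpha_{1}/(\alpha_{1}+\alpha_{2}+\alpha_{3})\). The Monte Carlo sketch below compares that closed form with a simulated estimate. It assumes the EC survival function \(\left[1+\lambda\left(e^{x^{\beta}}-1\right)\right]^{-\alpha}\), and the function names and parameter values are hypothetical.

```python
import math
import random

def ec_sample(rng, alpha, beta, lam):
    """Draw one EC variate by inverting the survival function."""
    u = 1.0 - rng.random()                   # uniform on (0, 1]
    t = u ** (-1.0 / alpha)
    return math.log1p((t - 1.0) / lam) ** (1.0 / beta)

def stress_strength_exact(a1, a2, a3):
    """Closed form R = P(X1 < X2) implied by the construction."""
    return a1 / (a1 + a2 + a3)

def stress_strength_mc(rng, n, a1, a2, a3, beta, lam):
    """Monte Carlo estimate of P(X1 < X2) from n simulated pairs."""
    hits = 0
    for _ in range(n):
        u1 = ec_sample(rng, a1, beta, lam)
        u2 = ec_sample(rng, a2, beta, lam)
        u3 = ec_sample(rng, a3, beta, lam)
        if min(u1, u3) < min(u2, u3):
            hits += 1
    return hits / n

if __name__ == "__main__":
    rng = random.Random(7)
    print(stress_strength_exact(0.8, 1.2, 0.5),
          stress_strength_mc(rng, 20000, 0.8, 1.2, 0.5, 1.5, 0.7))
```

Note that the closed form does not involve \(\beta\) or \(\lambda\), so changing those two arguments in the simulation should leave the estimate essentially unchanged.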

3.2 Hazard rate function

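In the literature, the bivariate failure rate function has been defined in several ways. One of these definitions was given by Basu (1971) as

$$h\left( {x_{1} ,x_{2} } \right) = \frac{{f_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right)}}{{S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right)}}.$$

If \(({X}_{1},{X}_{2})\) has a BEC distribution, then the joint hazard rate function of \({X}_{1}\) and \({X}_{2}\) has the following form

$$h\left( {x_{1} ,x_{2} } \right) = \begin{cases} h_{1} \left( {x_{1} ,x_{2} } \right), & x_{1} < x_{2} \\ h_{2} \left( {x_{1} ,x_{2} } \right), & x_{1} > x_{2} \\ h_{3} \left( x \right), & x_{1} = x_{2} = x \end{cases}$$

where,

$$\begin{aligned} h_{1} \left( {x_{1} ,x_{2} } \right) & = h_{EC} \left( {x_{1} ;\alpha_{1} ,\beta ,\lambda } \right)h_{EC} \left( {x_{2} ;\alpha_{2} + \alpha_{3} ,\beta ,\lambda } \right), \\ h_{2} \left( {x_{1} ,x_{2} } \right) & = h_{EC} \left( {x_{1} ;\alpha_{1} + \alpha_{3} ,\beta ,\lambda } \right)h_{EC} \left( {x_{2} ;\alpha_{2} ,\beta ,\lambda } \right), \\ h_{3} \left( x \right) & = \frac{{\alpha_{3} }}{{\alpha_{1} + \alpha_{2} + \alpha_{3} }}h_{EC} \left( {x;\alpha_{1} + \alpha_{2} + \alpha_{3} ,\beta ,\lambda } \right), \\ \end{aligned}$$

and \(h_{EC} \left( {x;\alpha ,\beta ,\lambda } \right) = \alpha \beta \lambda \,x^{\beta - 1} e^{{x^{\beta } }} /\left[ {1 + \lambda \left( {e^{{x^{\beta } }} - 1} \right)} \right]\) is the hazard rate function of the EC distribution.

The bivariate hazard rate function was defined by Johnson and Kotz (1975) and Marshall (1975) as the hazard gradient in vector form as follows,

$$h\left( {x_{1} ,x_{2} } \right) = \left( { - \frac{\partial }{{\partial x_{1} }}\ln S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right),\; - \frac{\partial }{{\partial x_{2} }}\ln S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right)} \right).$$

As a result of some calculations, the hazard gradient for the BEC distribution is given by

$$- \frac{\partial }{{\partial x_{1} }}\ln S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = \begin{cases} h_{EC} \left( {x_{1} ;\alpha_{1} ,\beta ,\lambda } \right), & x_{1} < x_{2} \\ h_{EC} \left( {x_{1} ;\alpha_{1} + \alpha_{3} ,\beta ,\lambda } \right), & x_{1} > x_{2} \end{cases}$$

and

$$- \frac{\partial }{{\partial x_{2} }}\ln S_{{X_{1} , X_{2} }} \left( {x_{1} , x_{2} } \right) = \begin{cases} h_{EC} \left( {x_{2} ;\alpha_{2} + \alpha_{3} ,\beta ,\lambda } \right), & x_{1} < x_{2} \\ h_{EC} \left( {x_{2} ;\alpha_{2} ,\beta ,\lambda } \right), & x_{1} > x_{2} \end{cases}$$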
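The hazard gradient \(\left(-\partial \ln S/\partial x_{1},\, -\partial \ln S/\partial x_{2}\right)\) can be cross-checked against finite differences of the joint survival function. The sketch below is an illustration under the assumed product-form survival function. The closed form used off the diagonal follows from differentiating that product, and the function names, step size and the choice to raise an error on the diagonal are assumptions of this example.

```python
import math

def ec_G(x, beta, lam):
    """G(x) = 1 + lam * (exp(x**beta) - 1)."""
    return 1.0 + lam * math.expm1(x ** beta)

def ec_hazard(x, alpha, beta, lam):
    """EC hazard alpha * G'(x) / G(x)."""
    g_prime = beta * lam * x ** (beta - 1.0) * math.exp(x ** beta)
    return alpha * g_prime / ec_G(x, beta, lam)

def neg_log_joint_sf(x1, x2, a1, a2, a3, beta, lam):
    """-ln S(x1, x2) for the product-form joint survival function."""
    z = max(x1, x2)
    return (a1 * math.log(ec_G(x1, beta, lam)) + a2 * math.log(ec_G(x2, beta, lam))
            + a3 * math.log(ec_G(z, beta, lam)))

def hazard_gradient(x1, x2, a1, a2, a3, beta, lam):
    """Closed-form hazard gradient away from the diagonal x1 == x2."""
    if x1 < x2:
        return ec_hazard(x1, a1, beta, lam), ec_hazard(x2, a2 + a3, beta, lam)
    if x1 > x2:
        return ec_hazard(x1, a1 + a3, beta, lam), ec_hazard(x2, a2, beta, lam)
    raise ValueError("one-sided derivatives differ on the diagonal x1 == x2")

def numeric_gradient(x1, x2, a1, a2, a3, beta, lam, h=1e-6):
    """Central finite differences of -ln S in each coordinate."""
    p = (a1, a2, a3, beta, lam)
    d1 = (neg_log_joint_sf(x1 + h, x2, *p) - neg_log_joint_sf(x1 - h, x2, *p)) / (2 * h)
    d2 = (neg_log_joint_sf(x1, x2 + h, *p) - neg_log_joint_sf(x1, x2 - h, *p)) / (2 * h)
    return d1, d2

if __name__ == "__main__":
    params = (0.8, 1.2, 0.5, 1.5, 0.7)   # hypothetical a1, a2, a3, beta, lam
    print(hazard_gradient(0.5, 0.8, *params), numeric_gradient(0.5, 0.8, *params))
```

The common shock \(U_{3}\) contributes its hazard only to the coordinate that currently holds the maximum, which is why the parameter \(\alpha_{3}\) moves between the two components when the order of \(x_{1}\) and \(x_{2}\) changes.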

4 Maximum likelihood estimation

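The maximum likelihood method is used in this Section to construct the estimators for the five parameters of the BEC distribution. Consider a sample \(\left( {x_{11} ,x_{21} } \right), \ldots ,\left( {x_{1n} ,x_{2n} } \right)\) of size n from the BEC distribution with parameters \({\alpha }_{1}, {\alpha }_{2}, {\alpha }_{3}, \beta , \lambda\) and let

$$I_{1} = \left\{ {i:x_{1i} < x_{2i} } \right\},\quad I_{2} = \left\{ {i:x_{1i} > x_{2i} } \right\},\quad I_{3} = \left\{ {i:x_{1i} = x_{2i} = x_{i} } \right\},$$

\({n}_{1}=\left|{I}_{1}\right|, {n}_{2}=\left|{I}_{2}\right|, {n}_{3}=\left|{I}_{3}\right|\) and \(n= {n}_{1}+{n}_{2}+{n}_{3}\).

The likelihood function for the parameter vector \(\theta =({\alpha }_{1}, {\alpha }_{2}, {\alpha }_{3}, \beta , \lambda )\) is obtained as

$$L\left( \theta \right) = \prod\limits_{{i \in I_{1} }} f_{1} \left( {x_{1i} ,x_{2i} } \right)\prod\limits_{{i \in I_{2} }} f_{2} \left( {x_{1i} ,x_{2i} } \right)\prod\limits_{{i \in I_{3} }} f_{3} \left( {x_{i} } \right), \tag{16}$$

where \(f_{1}\), \(f_{2}\) and \(f_{3}\) are the components of the joint pdf in Eq. (7).

The logarithm of the likelihood function in Eq. (16) is as follows,

$$\ell \left( \theta \right) = \sum\limits_{{i \in I_{1} }} \ln f_{1} \left( {x_{1i} ,x_{2i} } \right) + \sum\limits_{{i \in I_{2} }} \ln f_{2} \left( {x_{1i} ,x_{2i} } \right) + \sum\limits_{{i \in I_{3} }} \ln f_{3} \left( {x_{i} } \right). \tag{17}$$

The likelihood equations are obtained by taking the first partial derivatives of Eq. (17) with respect to \({\alpha }_{1}, {\alpha }_{2}, {\alpha }_{3}, \beta , \lambda\) and setting them equal to zero.

Because the resulting system of five nonlinear equations has no closed-form solution, a numerical method is required to obtain the MLEs.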
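A numerical optimizer only needs the log-likelihood as a function of the five parameters. The sketch below shows one way this function could be coded, assuming the three-part joint density implied by the Marshall–Olkin construction (observations with \(x_{1}<x_{2}\), with \(x_{1}>x_{2}\), and ties). The function names and the toy data are hypothetical and are not the data sets analysed in Sect. 5.

```python
import math

def ec_log_pdf(x, alpha, beta, lam):
    """Log of the EC density alpha*beta*lam*x**(beta-1)*exp(x**beta)*G**(-alpha-1)."""
    log_g = math.log(1.0 + lam * math.expm1(x ** beta))
    return (math.log(alpha * beta * lam) + (beta - 1.0) * math.log(x)
            + x ** beta - (alpha + 1.0) * log_g)

def bec_log_likelihood(data, a1, a2, a3, beta, lam):
    """Sum of log-densities over the three groups of observations."""
    total = a1 + a2 + a3
    ll = 0.0
    for x1, x2 in data:
        if x1 < x2:      # group I1
            ll += ec_log_pdf(x1, a1, beta, lam) + ec_log_pdf(x2, a2 + a3, beta, lam)
        elif x1 > x2:    # group I2
            ll += ec_log_pdf(x1, a1 + a3, beta, lam) + ec_log_pdf(x2, a2, beta, lam)
        else:            # group I3, ties on the diagonal
            ll += math.log(a3 / total) + ec_log_pdf(x1, total, beta, lam)
    return ll

if __name__ == "__main__":
    toy = [(0.3, 0.9), (1.1, 0.4), (0.6, 0.6)]   # made-up pairs for illustration
    print(bec_log_likelihood(toy, 0.8, 1.2, 0.5, 1.5, 0.7))
```

In practice the negative of this function could be passed to a general-purpose optimizer (for instance `scipy.optimize.minimize`, if SciPy is available) with all five parameters constrained to be positive. That step is left out here to keep the example free of third-party dependencies.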

5 Application

Using two actual data sets, this section illustrates the new BEC model's behavior and compares the new BEC model's ability and efficiency to other existing models.

5.1 Diabetic retinopathy data

Diabetic retinopathy is one of the main causes of blindness and visual loss in diabetes patients. The National Eye Institute presented a data set for diabetic patients who had blindness, see Huster et al. (1989). A study was performed to assess the effect of laser treatment in reducing blindness. Each patient had one eye randomly chosen for laser photocoagulation, and the time to blindness was recorded in months for both eyes. The primary objective of that study was to determine whether laser treatment has any effect on delaying blindness. To investigate the efficacy of the new model, a subset of 38 DRS patients from the 197 high-risk patients is used here. The data are presented in Table 1. Let X1 and X2 be defined as follows:

X1: represents the time to blindness in the untreated or control eye (in months).

X2: represents the time to blindness in the treated eye (in months).

Before analyzing data with the BEC distribution, the Extended Chen distribution is fitted to \({X}_{1}\), \({X}_{2}\) and \(\mathit{min}\left({X}_{1},{X}_{2}\right)\). For computational stability with the fitting of the distribution, we divided all the data points by 10. This has no direct effect on the computational process.

Table 2 displays the maximum likelihood estimates (MLEs) of the parameters, the associated Kolmogorov–Smirnov (K–S) distances and the p-values for the Diabetic Retinopathy data. According to the p-values, the Extended Chen distribution can be used to fit the marginals as well as the minimum.
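The K–S distance is the largest gap between the empirical cdf of a sample and the fitted cdf. A minimal sketch of that computation is given here. It assumes the EC cdf \(1-\left[1+\lambda\left(e^{x^{\beta}}-1\right)\right]^{-\alpha}\), the function names are hypothetical, and the sample and parameter values in the usage lines are made up rather than taken from the tables.

```python
import math

def ec_cdf(x, alpha, beta, lam):
    """EC cdf 1 - [1 + lam * (exp(x**beta) - 1)]**(-alpha)."""
    return 1.0 - (1.0 + lam * math.expm1(x ** beta)) ** (-alpha)

def ks_distance(sample, cdf):
    """One-sample Kolmogorov-Smirnov distance sup |F_n(x) - F(x)|."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        fx = cdf(x)
        # Compare F with the empirical cdf just after and just before x.
        d = max(d, i / n - fx, fx - (i - 1) / n)
    return d

if __name__ == "__main__":
    sample = [0.21, 0.48, 0.55, 0.93, 1.30]      # made-up values
    print(ks_distance(sample, lambda x: ec_cdf(x, 1.3, 1.5, 0.7)))
```

The p-value additionally requires the null distribution of the statistic, which is not computed in this sketch. Statistical packages such as `scipy.stats.kstest` provide it.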

Next, the goodness of fit of the BEC distribution is compared with that of two bivariate distributions that have attracted considerable interest in the literature: the bivariate Pareto distribution introduced by Shoaee and Khorram (2020) and the bivariate Generalized Linear Failure Rate (GLFR) distribution introduced by Sarhan et al. (2011).

We compare the fit of the new BEC model with that of the bivariate Pareto and bivariate GLFR models using the Akaike Information Criterion (AIC), Bayesian Information Criterion (BIC), Consistent AIC (CAIC), and Hannan-Quinn Information Criterion (HQIC). Table 3 provides a summary of the findings.
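All four criteria are simple functions of the maximized log-likelihood, the number of parameters k and the sample size n, with smaller values indicating a better fit. The sketch below uses the standard definitions of AIC, BIC and HQIC. For CAIC it assumes the small-sample corrected form \(\mathrm{AIC} + 2k(k+1)/(n-k-1)\), which is one common convention and may differ from the one used for the tables. The log-likelihood value in the usage lines is hypothetical.

```python
import math

def information_criteria(log_lik, k, n):
    """Return AIC, BIC, CAIC and HQIC for a fitted model.

    log_lik: maximized log-likelihood, k: number of parameters, n: sample size.
    """
    aic = 2.0 * k - 2.0 * log_lik
    bic = k * math.log(n) - 2.0 * log_lik
    # Assumption: CAIC taken as the small-sample corrected AIC.
    caic = aic + 2.0 * k * (k + 1.0) / (n - k - 1.0)
    hqic = 2.0 * k * math.log(math.log(n)) - 2.0 * log_lik
    return {"AIC": aic, "BIC": bic, "CAIC": caic, "HQIC": hqic}

if __name__ == "__main__":
    # Hypothetical log-likelihood for a five-parameter model fitted to 38 pairs.
    print(information_criteria(-100.0, 5, 38))
```

When comparing models fitted to the same data, the same convention for each criterion has to be used for every model, since only differences between models are meaningful.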

5.2 American football league data

The data in Table 4 were drawn from games played over three weekends in 1986 and were gathered from the National Football League. The data are presented in Csorgo and Welsh (1989). Let X1 and X2 be the following variables:

X1: the game time to the first points scored by kicking the ball between goal posts.

X2: the game time to the first points scored by moving the ball into the end zone.

These times are useful for spectators who are just getting into the game or who are curious about how long they will have to wait to see a touchdown. The data are shown in Table 4 (scoring times in minutes and seconds). Before analyzing data with the BEC distribution, the Extended Chen distribution is fitted to \({X}_{1}\), \({X}_{2}\) and \(\mathit{min}\left({X}_{1},{X}_{2}\right)\). For computational stability with the fitting of the distribution, we divided all the data points by 10.

The MLEs, the associated K–S distances and the p-values for the American Football League data are shown in Table 5. According to the p-values, the Extended Chen distribution can be used to fit the marginals as well as the minimum.

Table 6 summarises the MLEs and the AIC, BIC, CAIC and HQIC values, which are used to compare the fit of the proposed BEC model with that of the other bivariate models. According to the results, the BEC model offers a better fit to the two data sets because it has the smallest values of the information criteria. Therefore, for these data the new Bivariate Extended Chen (BEC) distribution based on the Marshall–Olkin method is more suitable than the other models considered.

6 Conclusions

In this paper we presented and studied a new class of bivariate distributions, namely the Bivariate Extended Chen (BEC) distribution. Several properties of this distribution have been derived and discussed, such as the joint pdf and cdf, marginal distributions, conditional distributions, the hazard rate function, and stress-strength reliability. The unknown parameters are estimated using the maximum likelihood method. In two practical applications, the BEC distribution was compared with the bivariate Pareto and bivariate GLFR distributions. The comparison indicates that the BEC distribution provides a good and adaptable model. According to the goodness of fit criteria, the BEC distribution offers a better fit than the competing bivariate models for both data sets, demonstrating its flexibility and applicability in modelling and supporting its use in practice.

Data availability

The data that support the findings of this study are available within the article.

References

Bai X, Shi Y, Liu B, Fu Q (2019) Statistical inference of Marshall Olkin bivariate Weibull distribution with three shocks based on progressive interval censored data. Commun Stat Simul Comput 48:637–654

Bakouch AS, Moala FA, Saboor A, Samad H (2019) A bivariate Kumaraswamy-exponential distribution with application. Math Slovaca 69(5):1185–1212

Basu AP (1971) Bivariate failure rate. J Am Stat Assoc 66:103–104

Bhatti FA, Hamedani GG, Najibi SM, Ahmad M (2021) On the extended chen distribution: development, properties, characterizations and applications. Ann Data Sci 8(1):159–180

Csorgo S, Welsh AH (1989) Testing for exponential and Marshall–Olkin distribution. J Stat Plan Inference 23:287–300

Huster WJ, Brookmeyer R, Self SG (1989) Modelling paired survival data with covariates. Biometrics 45:145–156

Johnson NL, Kotz S (1975) A vector valued multivariate hazard rate. J Multivar Anal 5:53–66

Kotz S, Balakrishnan N, Johnson NL (2000) Continuous multivariate distributions. John Wiley and Sons, New York

Marshall AW (1975) Some comments on hazard gradient. Stoch Process Appl 3:293–300

Marshall AW, Olkin I (1967) A multivariate exponential distribution. J Am Stat Assoc 62:30–44

Ortega EM (2010) Modeling bivariate lifetimes based on expected present values of residual lives. Stoch Env Res Risk Assess 24:675–684. https://doi.org/10.1007/s00477-009-0354-7

Sarhan AM, Hamilton BS, Kundu D (2011) The bivariate generalized linear failure rate distribution and its multivariate extension. Comput Stat Data Anal 55:644–654

Shoaee S, Khorram E (2020) A generalization of Marshall–Olkin bivariate Pareto model and its applications in shock and competing risk models. AUT J Math Comput 1(1):69–87

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Contributions

All authors have equally made contributions. The authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kilany, N.M., El-Qareb, F.G. Modelling bivariate failure time data via bivariate extended Chen distribution. Stoch Environ Res Risk Assess 37, 3517–3525 (2023). https://doi.org/10.1007/s00477-023-02461-w
