Abstract

For more than three decades, the part of the geoscientific community studying landslides through data-driven models has focused on estimating where landslides may occur across a given landscape. This concept is widely known as landslide susceptibility. And, it has seen a vast improvement from old bivariate statistical techniques to modern deep learning routines. Despite all these advancements, no spatially-explicit data-driven model is currently capable of also predicting how large landslides may be once they trigger in a specific study area. In this work, we exploit a model architecture that has already found a number of applications in landslide susceptibility. Specifically, we opt for the use of Neural Networks. But, instead of focusing exclusively on where landslides may occur, we extend this paradigm to also spatially predict classes of landslide sizes. As a result, we keep the traditional binary classification paradigm but we make use of it to complement the susceptibility estimates with a crucial information for landslide hazard assessment. We will refer to this model as Hierarchical Neural Network (HNN) throughout the manuscript. To test this analytical protocol, we use the Nepalese area where the Gorkha earthquake induced tens of thousands of landslides on the 25th of April 2015. The results we obtain are quite promising. The component of our HNN that estimates the susceptibility outperforms a binomial Generalized Linear Model (GLM) baseline we used as benchmark. We did this for a GLM represents the most common classifier in the landslide literature. Most importantly, our HNN also suitably performed across the entire procedure. As a result, the landslide-area-class prediction returned not just a single susceptibility map, as per tradition. But, it also produced several informative maps on the expected landslide size classes. Our vision is for administrations to consult these suite of model outputs and maps to better assess the risk to local communities and infrastructure. And, to promote the diffusion of our HNN, we are sharing the data and codes in a githubsec repository in the hope that we would stimulate others to replicate similar analyses.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

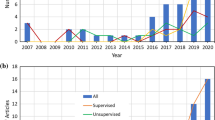

Landslides are capable of inflicting large-scale human casualties and economic losses (Petley 2012), and as a result they are widely researched. Continuing geoscientific advances have established routines to estimate where landslides may occur. This concept is referred to as susceptibility (Reichenbach et al. 2018). The standard definition of susceptibility corresponds to a relative likelihood of landslide occurrence within a specific mapping unit, under the control of a set of predisposing factors (Fell et al. 2008; Tanyaş et al. 2021). For over three decades, this definition has been addressed by implementing different practices or techniques that have largely evolved following recent technological advances. During the early 1970’ies and until the 1980’ies, drawing susceptible slopes on a map was the result of a combination of field surveys and manual geomorphological mapping (Verstappen 1983). As GIS made its appearance, bivariate statistical models followed closely and they became commonplace (Naranjo et al. 1994) until they were superseded by their multivariate counterpart (Süzen and Doyuran 2004). The latter, in the form of binomial Generalized Linear Models (GLM), has then become the standard and is still the most widely used technique in the landslide susceptibility literature (Budimir et al. 2015; Reichenbach et al. 2018). However, the hypothesis that landslide occurrences are linearly related to a number of predisposing factors is a strong assumption that may not be supported by evidence in reality. Because of this, in recent years, a number of models capable of estimating nonlinear relations have been proposed as a further improvement to the more simplistic GLM framework. Some of these have simply translated the GLM context to a more flexible Generalized Additive Model (GAM) structure (Goetz et al. 2011; Lombardo and Tanyas 2020; Steger et al. 2021; Titti et al. 2021). Others have taken the route of machine learning. Among these, support vector machines (e.g., Marjanović et al. 2011), decision trees (e.g., Lombardo et al. 2015), maxent (Di Napoli et al. 2020) and neural networks (e.g., Lee et al. 2004; James et al. 2021; Anderson-Bell et al. 2021; Schillaci et al. 2021) have gained the spotlight within the geoscientific community.

However, irrespective of the algorithmic architecture, all these models have kept answering the same scientific question over the decades. They have sought to estimate where landslides may occur, being blind though to how large landslides may become, once they trigger in a given location. Conversely, this problem is typically addressed via physically-based approaches (Li et al. 2012; Bout et al. 2018; Van den Bout et al. 2021), although their applications over large regions is commonly hindered by geotechnical data availability. On the contrary, data-driven approaches can by-pass this issue by using proxies instead of geotechnical parameters. And yet, only a single contribution currently exists where landslide sizes (or their aggregated measure per slope units to be precise) are statistically estimated over territories whose scale is not suited for physically-based modeling. This work, authored by Lombardo et al. (2021), makes use of a GAM framework, under the assumption that landslide sizes (measured as planimetric areas) behave over space according to a log-Gaussian probability distribution. However, this work missed to address a fundamental problem. In fact, the use of a Gaussian likelihood implies that the bulk of the distribution is well estimated but the tails are inevitably misrepresented. As a result, large landslides pertaining to the right tail of the size distribution are underestimated, hence underestimating the hazard to which local communities and infrastructures would be exposed to.

In this work, we take a different route, while still trying to combine some essential elements in the research progress described above. Firstly, we opted for Neural Network as our reference model structure. Such a framework is particularly appealing thanks to the widespread support and use in Python (Van Rossum and Drake Jr 1995). Its libraries make codes easily shareable and the resulting analyses easily reproducible. Secondly, we modeled both the traditional landslide susceptibility together with the landslide sizes. However, differently from Lombardo et al. (2021), here we introduce the structure of a multi-label classifier rather than regressing the continuous distribution of landslide planimetric areas. Specifically, we run a Hierarchical Neural Network, where the susceptibility element is linked to the landslide susceptibility, and a multi-label element returns the probability of a specific landslide size class. This is one of the major strength of a Neural Network, as it allows to implement hierarchical models with relative ease, which would otherwise require complex joint probability models in statistics (Pimont et al. 2021).

We do so by testing our modeling protocol over the co-seismic landslides triggered by the Gorkha earthquake (25th April 2015). The landslide inventory genereted by Roback et al. (2018) was accessed at the repository built by Schmitt et al. (2017); Tanyaş et al. (2017). And, the mapping unit we chose corresponds to Slope Units (Carrara 1988).

The manuscript is structured as follows: Sect. 2 provides a description of the area where landslides occurred in response to the Gorkha earthquake, this being followed by a description of the mapping unit and predictors we chose (Sect. 3). In Sect. 6 we explain the Neural Network structure and how we made use in this work. Sections 7 and 8 present and comment the results, respectively. And ultimately, Sect. 9 summarizes the novelty of the work we present and anticipates future extensions.

We stress here that to promote similar applications we have shared data and codes in a github repository accessible at link here.

2 Study area and landslide inventory

On April 25th 2015, the Gorkha earthquake 7.8 \(\hbox {M}_W\) (Elliott et al. 2016) struck the area in the proximity of Kathmandu, Nepal (see Fig. 1a, b). The resulting losses amounted to approximately 9000 victims which, together with the infrastructural damage, made this event the worst natural disaster in Nepal since 1934 (Kargel et al. 2016). The earthquake affected-region covered an area of about 500 by 200 kilometers. And, a large portion of this area, also suffered from widespread landslides (see Fig. 1c), with 24,915 landslides—of which, 24,903 of them were triggered by the 25th of April mainshock—and a total of 86.6 \(\hbox {km}^2\) of planimetric landslide area (mean = 0.003 \(\hbox {km}^2\); standard deviation = 1.74 \(\hbox {km}^2\); maximum = 1.72 \(\hbox {km}^2\)). Figure 1c shows both the spatial distribution of the mapped landslides and the boundary of the surveyed area by Roback et al. (2017) to generate the coseismic inventory. Their inventory is currently considered one of the most accurate and complete (Tanyaş and Lombardo 2020) among the available global coseismic inventories (Schmitt et al. 2017; Tanyaş et al. 2017).

Panel a show the large scale geographic context; panel b zooms in to highlight the ground motion induced by the Gorkha earthquake; panel c depicts the spatial distribution of the coseismic landslides, this being shown in terms of spatial densities; panel d zooms further in to provide an overview of the slope unit partition we generated and its consistency with the underlying aspect distribution

3 Mapping unit

We partition the study area into Slope Units (SUs). These correspond to a refinement of the SUs already generated and presented in Tanyaş et al. (2019)—and further details on their computation will be provided below. We remind here that a slope unit corresponds to the space bounded between ridges and streamlines, of catchments definable across different scales. Therefore, they efficiently depict the morphodynamics of a landslide and they also provide a spatial partition for which a single unit is independent from the others in terms of initial failure mechanism (Alvioli et al. 2020; Amato et al. 2019). We generated the SUs by using r.slopeunits, a software developed and made available by Alvioli et al. (2016). The software optimizes the SU generation by maximizing within-unit homogeneity and outside-unit heterogeneity of the slope exposition (Alvioli et al. 2018), in a deterministic framework where the output is mainly controlled by four parameters (Alvioli et al. 2016; Castro Camilo et al. 2017):

-

1.

Circular variance (cv), the parameter which regulates how strictly r.slopeunits should define homogeneity. The possible values are bound between 0 and 1, one allows for small variance of the aspect wheres the other extreme allows for large aspect variance.

-

2.

Flow accumulation threshold (FAtresh), this parameter initializes the search and it typically represents the starting size of the spatial partition, from which r.slopeunits further dissects the landscape into smaller units.

-

3.

Minimum SU area (areamin), a parameter representing the lower bound r.slopeunits tries to optimize for the SU delineation.

-

4.

Cleaning SU area (cleansize), a parameter representing the size below which r.slopeunits optimizes a merging routine where small units are fused into larger adjacent ones.

Here we start from the r.slopeunits parameterization used in Tanyaş et al. (2019). But, as their work was based on a global scale, the SU they delineated were too coarse for a site specific assessment. Therefore, we slightly modified the required parameterization and set the cv to 0.4, FAthres to 500,000 \(\hbox {m}^2\), areamin to 80,000 \(\hbox {m}^2\), and cleansize to 50,000 \(\hbox {m}^2\). Figure 1d shows the details of the SUs we generated, superimposed to the aspect for clarity. Overall, their resulting characteristics feature an average SU area of 0.22 \(\hbox {km}^2\) and a standard deviation of 0.21 \(\hbox {km}^2\); the equivalent size of these two statistical moments being already a clear indication of how rough the landscape is at the transition between lesser and greater Himalayas (Burbank 2005).

4 Predictors

The predictors we use are reported in Table 1. There we report ten predictors, nine of which (all but \(\hbox {SU}_A\)) have been calculated at a much finer spatial resolution compared to the SUs. But, as our model is expressed at the SU scale, we summarized at this level the distribution of each predictors, via their respective mean and standard deviation values. As a result, the model we build features 18 predictors rather than nine, with the addition of the \(\hbox {SU}_A\).

Overall, each of the predictor variables are normally distributed, so standardization ( mean of 0 and a standard deviation of 1; Ali et al. 2014) took place to regulate the scales of each variable. We also tried a min-max normalization (see Al Shalabi et al. 2006), but the standardization produced better performance (the result of these preliminary tests are not reported here), resulting in the choice to standardize the predictors instead of normalizing them.

5 Preprocessing

There does not currently exist any standard for classifying landslide size in terms of planimetric area. One of the most important reason one might consider dividing the landslides into classes based on area is to then be able to investigate how certain predisposing factors affect each class, as this may shed light upon how small to large landslides are catalyzed. In order to develop a method to optimally divide the landslide area (aggregated as the sum of all landslide planimetric areas per SUs) into classes, we used the Fischer-Jenks algorithm to derive the class boundaries (see, Jenks 1967). The Fischer-Jenks algorithm determines the optimal break points by choosing those that minimize each category’s variance, though it requires information on how many breaks are to be used. To determine the optimal number of breaks, various break counts were trialed in ascending order, starting with two, on each iteration measuring the Goodness of Variance Fit (GVF). GVF is a value between 0 and 1 and indicates how well the categories produced by the Fischer-Jenks algorithm reflect the “natural breaks” in the data (Khamis et al. 2018). This iterative process continues until the number of breaks associated with a GVF value beyond a certain threshold is reached, in this case, the value chosen was 0.85. Class 0 landslides are SUs with an area of 0, indicating that a landslide did not occur.The remaining non-zero values were broken into 5 classes using the Fischer-Jenks method described above, resulting in the six classes described in Table 2.

However, two observations arose at this stage: firstly, the data is heavily imbalanced. This is not an uncommon issue when dealing with classification problems, and there are several ways of remedying this issue. In this case, we have opted to apply oversampling, which uses existing datapoints to interpolate artificial values, assigned to artificial records that closely resemble existing ones. The process of generating artificial data points occurs until each class has the same number of records, albeit with a proportion of them being synthetic (Chawla et al. 2002).

The second issue, however, is that for oversampling to take place, a minimum number of existing datapoints are required in each category to allow for interpolation. As is seen in Table 2, class 5 only has one record—an insufficient number to apply oversampling to. We have then overcome this issue by combining class 5 with class 4. We have also merged Class 2 into class 1 to reduce the number of tunable parameters by 33% overall, thus decreasing training time substantially and increasing performance as a result (more information available in Sect. 6.2). This produced the final classes detailed in Table 3.

The next step was to create landslide presence/absence binary values. It was done by making a copy of the landslide class data and setting all non-class 0 values to 1, and all class 0 values to 0 (as numerical data). The final preprocessing done on the dependent variables was to encode the landslide class data so it could be used in a multi-class classifier model.

The result of these operations can be seen in Fig. 2 where the panel a shows the traditional presence/absence landslide data, whereas panel b provides further insight into the classification scheme we adopted to run landslide-size-oriented prediction models.

5.1 Exploratory data analysis

Figure 2a shows that the spatial distribution of landslide occurrence does not appear to be uniform across the study area. Conversely, the overwhelming majority of SUs with landslides occurred in the Northern-Central sector. Small clusters of landslides also appear on the Southern border, although they are generally fewer in number, and even fewer are present in the Eastern and Western peripheries. Table 4 shows that the pattern described above results in a significant global spatial autocorrelation. This implies that there are spatial processes that influence the SUs landslide susceptibility, and this observation can be used to inform the interpretation of results, as good results should also reflect this spatial autocorrelation, likely induced by local amplification of the ground motion.

Figure 2b shows the spatial distribution of landslides classes, using the categories defined in Sect. 5. Figure 2b further elucidates that the Northern sector features more slope units with landslide occurrences. And that as expected, the number of SU associated to Class 1 is predominant compared to Class 2, and that Class 2 is again more numerous than Class 3. This is a natural consequence of the landslide size distribution and it follows the the Frequency Area Distribution summarized in (Fan et al. 2019) for the Gorkha case.

6 Hierarchical deep neural network for landslide class prediction

The HNN implemented in this study is designed to predict the likelihood of landslide occurrence and size in spatial units, across the study area.

Hence, for each SU (see Sect. 3) and by using the variables introduced in Sect. 4 the model predicts the likelihood of landslide occurrence, and if a landslide is likely to occur, it also estimates what landslide-size-class is expected.

We have chosen to build a two output hierarchical neural network to guide the model’s training (inductively bias the model) to focus on the binary occurrence of landslides and subsequently the size of the landslide. As a result, the model becomes more accurate. Another merit of having both outputs is that it enables a more straightforward comparison to existing approaches. The output predicting the landslide susceptibility can be used to compare our HNN to other models that perform the same task. This is the best way of comparing the model to existing approaches because there is no widely used or accepted model that predicts size and therefore it is not possible to compare the landslide-size-class prediction with an equivalent baseline.

6.1 Model architecture

We stress here that the model we implemented is a single one. But for simplicity, we will separately refer for the rest of the manuscript to the two outputs, as binary and class. The former represents the traditional notion of landslide susceptibility or the likelihood of landslide occurrence. The latter refers instead to the estimation of the likelihood of a landslide belonging to a specific size class, reported in Table 3.

The proposed neural network architecture has a sequence of layers starting with: an input layer. This is followed by a Multi-Layer Perceptron (MLP). The aim of this MLP is to encode the input and provide the first classification, the binary one. This first MLP is then followed by another MLP, which takes as input the binary prediction and the encoded input trough the first MLP. This second MLP outputs the class prediction. For clarity, we have graphically translated the architecture descried above in Fig. 3.

The loss functions for the binary and class outputs are the binary cross entropy and the categorical cross entropy respectively (details in Appendix A). The overall loss of the model is calculated as the linear combination of both losses. The parameter of the linear combination weights the importance of one classification vs the other. This loss equation is formally defined as:

where \(\alpha \in [0,1]\) is an hyper-parameter.

Further information on the model architecture and activation functions are also provided in Appendix A.

6.2 Performance metrics

Throughout the landslide literature, the most common form of evaluation metric for landslide susceptibility is the Area Under the Receiver Operating Characteristic (ROC) curve, or AUC (Reichenbach et al. 2018). For consistency, the AUC will be used as the evaluation metric to compare our binary model with respect to a binomial GLM baseline. This will also be valid for the evaluation of the class output, as we will compute a ROC curve for each class. Moreover, there are also additional formats of the AUC that average the performance across classes and allow for a single value to reflect the performance of the model on all classes. In this study, we will use both the one-vs-one weighted average and one-vs-rest weighted and unweighted average AUC to evaluate the performance of the class output. The one-vs-one approach generates a ROC curve for each pair of classes, where one class is considered as the positive example and the other as the negative example. Then, it computes their AUC and average them weighting each value based on the total number of examples belonging to the class pair.

The one-vs-rest approach generates a ROC curve for each class, where one class is considered as the positive example and the rest of the classes as the negative examples. Then, it computes their AUC and average them. In the weighted version, the weights are computed based on the total number of examples belonging to the selected class. The weighted version of these metrics is important because it is less affected by the class imbalance present in the dataset.

These type of metrics are slight modification to the common AUC metric already used in the geomorphological literature. We opted to use them to provide a more complete model performance description and further details on this suite of performance metrics is reported in the Appendix A.

6.3 Influence of the binary and the class components

The performance assessment we opted for also includes a loss weight ratio test. The aim of loss weight ratio testing is to be able to better understand the impact that the presence of the first output has on the second, and vice versa, as well as to find the optimal combination of losses in a Neural Network.

By prescribing loss weights in a multi-output model (adjusting \(\alpha\)), it is possible to specify the extent to which each output’s loss contributes to the overall loss of the model. During the process of loss weight ratio testing, 11 combinations of weights at equally spaced integer intervals are tested, with \(\alpha\) going from 0 to 1 at steps of 0.1. More information on this test is provided in Appendix A.

6.4 Ablation analysis

To understand the importance of each feature or set of features, we perform an ablation analysis. This essentially consists in removing predictors from the dataset and retrain the model. The difference observed when using and not using a given predictor can be used as a measure of importance or explanatory power. In our dataset, we have 19 predictors. Eighteen of these can be thought as nine distinguishable signals that we convey to the model in a dual form, by using their mean and standard deviation values per SU. We recall here that the area of SU does not have a dual representation (refer to Table 1). It can therefore also be said that there are 10 independent categories of predictors, each one reflecting distinct attributes of the slope units. When conducting the ablation study, rather than removing a single component of the dual signal, only to leave its counterpart in the dataset, we opted to remove both. The results will be shown in Sects. 7.1.1 and 7.2.1 , for the binary and class models, respectively.

7 Results

7.1 Binary benchmark

The first stage of our modeling protocol features a benchmark step where the binary component of our HNN is compared to a traditional binomial GLM (Atkinson and Massari 1998; Lombardo and Mai 2018), for the latter represent the most common modeling routine used to assess landslide susceptibility (Budimir et al. 2015; Reichenbach et al. 2018).

Figure 4a shows the tests we have run to select the best binary model. The abscissa reports the number of epochs we tested our model for, up to 500 iterations. Examining the loss measured across epochs, the minimum corresponds to the 27th epoch, which we selected as our best model. Figure 4b shows the difference in performance between the best model explained before and the baseline. Specifically, our binary NN (AUC = 0.88) better performs compared to the binomial GLM (0.82) one, albeit both fall in the excellent performance class proposed by (Hosmer and Lemeshow 2000). This slight difference is noticeable in Fig. 5 where in the central portion of the study area, the co-seismic susceptibility is shown to have been estimated slightly higher in the baseline. However, the baseline also produces high susceptibility in the peripheries (e.g., north-western and south-eastern sectors) where no landslides occurred, this being the reason for the difference in performance among the two models.

7.1.1 Binary ablation tests

Figure 6 provides an overview of the predictor importance for the binary model. This operation essentially corresponds to a Jacknife test (see, Lombardo et al. 2016; Shrestha and Kang 2019), where one variable is removed at a time, measuring the drop in performance as the model loses supporting information. Therefore, important predictors induce a large performance drop. This is clearly the case for the PGA, whose removal implies a median decrease in performance of approximately 0.07 AUC, leading the model from outstanding to excellent performance. This is not surprising because the PGA represents the role of ground shaking, which is the most important variable controlling the spatial distribution of earthquake-induced landslides (e.g., Lombardo et al. 2019; Tanyaş et al. 2019). As for the remaining predictors, they do not significantly differ one from the others for they induce performance drops similar in magnitude.

Ablation test run for the binary model. The AUC reported to the y-axis is measured each time a predictor variable is removed from the initial set. We stress here that the removal of a predictor implies taking away both the corresponding mean and standard deviation values per slope unit. The green line corresponds to the best binary model shown in Figure 4a, obtained at the 27th epoch

7.2 Class HNN component

Our HNN concatenates the binary model reported above to the four landslide-size-classes. To assess whether this component performs well, similarly to the previous figure, we monitored the HNN model as it converged to its final form. We stress here that our HNN sequentially links the output of the binary case to the model component that probabilistically distinguishes the four landslide size classes. This is shown in Fig. 7a, where the loss estimated for class model converged to the best solution at the 26th epoch. In panel 7b, this performance is further described in a separate form, one ROC curve for each of the classes under consideration. There, the effect of the sample size can be seen across the landslide-size-classes. Class 0 and class 1 returned smooth ROC curves as they are constructed by using large data samples. This becomes rougher already for Class 2, due to the reduced amount of landslide with an average dimension and it is further exacerbated for the ROC curve of Class 3, where the frequency of landslide with extreme sizes is significantly less.

Irrespective of the specific Class, our HNN suitably recognizes the four landslide size groups, with outstanding performances according to Hosmer and Lemeshow (2000).

The resulting susceptibility estimates are translated in map form in Fig. 8. There, it is possible to appreciate the different information our model provides. The probability Class 0 map (not to have a landslide) is essentially the inverse of the probability Class 1 (to have a small landslide). This is expected because small landslides constitute the vast majority of the landslide size distribution. As a result, the two maps appear to depict inverse spatial patterns. As for the probability Class 2 and Class 3 maps, they provide the complementary information we sought when we devised this experiment. In fact, specific slope units are highlighted with probability of landslide size occurrence, and the number drastically decreases from one class to the other, as also empirically expected.

7.2.1 Class ablation tests

In analogy to the analyses run for the binary component of our HNN model, we featured a series of ablation tests also for the class model. These are shown in Fig. 9, where the effect of the predictors’ removal is measured for the four landslide size classes separately. Similarly to what happened in the case of the binary ablation tests, for the Classes 0 and 1, taking away the PGA (both its mean and standard deviation per SU) induced the largest drop in performance among all the predictors. Interestingly, as the landslide size class increased, the drop induced by removing the PGA drastically reduced. For instance, in the case of Class 2 removing the PGA still induces the largest drop in performance among the predictors, but the drop is almost in line with removing any of the others. As for the Class 3, here PGA is not the most relevant predictor anymore. In fact, none of the predictors’ removal cause a significant drop in the modelling performance. In other words, in Class 3, none of the predictors dominate the contribution of others. We believe this behavior to be linked to the fact, that extremely large landslides may not be only linked to the ground motion but they may rather initiate because of very localized landscape characteristics. For instance, structural features have long been recognized as factors that control the occurrence of large landslides (e.g., Chigira and Yagi 2006; Peart 1991; Tanyas et al. 2021). This is due to the fact that weakness planes could make hillslopes kinematically susceptible even under the conditions of strong material properties and/or low ground shaking (Goodman et al. 1989). This makes the identification of weakness planes quite important, although it mostly missing in regional scale landslide susceptibility assessment (Ling and Chigira 2020) including this study as such surveys are practically not possible to carry out for large areas. This means that in the case of Class 3, none of the variables may replace the role of that missing component individually. However, the high modelling performance also shows that the compound effect of all variables acts as a proxy to identify those mapping units associated with the largest landslides.

In fact, a closer look at the predictor’s removal effect shows that, for instance, VRM is as one of the variables responsible for the largest drop in perfomance. We recall here that the VRM expresses how rough the terrain is, this being linked in several studies to the rock mass strength (e.g., Tanyaş et al. 2019). In other words, when the roughness is high, the landscape is likely made of rock masses with higher strength and whenever the roughness is low, this may imply soft materials that drape over the landscape, accommodating the deformation of induced by local tectonic regimes in the form of hills rather than steep scarps. This type of considerations can be behind the role of VRM with respect to extremely large landslides as their failure initiation maybe linked to weakness planes such as fractures or fissures dissecting rocky outcrops. Conversely, a landscape characterized by low VRW values mostly correspond of gentle slopes, where the tectonic response likely gives rise to ductile rather than fragile deformations.

Ablation test run for each landslide size class estimated via the class model. For each class, the AUC reported to the y-axis is measured each time a predictor variable is removed from the initial set. We stress here that the removal of a predictor implies taking away both the corresponding mean and standard deviation values per slope unit. The horizontal lines correspond to the best binary model shown in Fig. 7a, obtained at the 26th epoch

7.3 Loss ratio consideration

To complete the description of the HNN we propose, we opted to share the results of additional tests we have run to assess how binary and class component relate to each other in terms of performances.

Unsurprisingly, as shown in Fig. 10a, the AUC values produced when the outputs are turned off are substantially below those when it is turned on. In other words, we limited the influence provided by one model (binary) onto the other (class) while checking the effect in terms of performance drop, and viceversa. For instance, in the case of the binary output, its AUC value was initially less than 0.5, meaning that it was worse than a random selection. The remainder of results are more similar and found in the 0.86 to 0.88 range. But, as we zoom in towards the upper bound of the AUC distribution, Fig. 10b unveils an interesting behavior. The maximum performance of the binary output occurred when the weightings were 10 and 0 for binary and class outputs respectively. This means that the presence of the class output only hindered the performance of the binary output. More interestingly, this was not the case for the class output. The second-worst performance of the class output was when the binary output was turned off, better only than when the class output itself was turned off. In fact, as the class output’s weight decreases on subsequent iterations, its performance generally improves, reaching a maximum on the antepenultimate iteration of the eleven trials, when the weights are 8 and 2 for the binary and class outputs respectively.

This demonstrates that the presence of the binary output can be leveraged to improve the performance of the class output. The implications of this finding are that the model should be tuned differently depending on the desired output. When making predictions using the binary output alone, the class output should be turned off, whereas when making predictions with the class output, the setting should be considered much more carefully.

8 Discussion

The model we propose is the first of its kind in the literature for it combines the prediction of which slope are susceptible to fail in response to a ground motion disturbance, together with the prediction of which size-class the landslides are expected to fall into, per slope unit. When we originally thought of implementing this model, we envisioned the landslide size component to be modeled closely to what has been done in Gallen et al. (2017); Lombardo et al. (2021). Gallen and co-authors proposed the first model able to spatially predict landslide sizes via Newmark sliding block analysis, a physically-based model. Lombardo and co-authors proposed something analogous but framed in the context of statistical modeling. In this work we took inspiration from the second example mentioned above, where the landslide size was modeled by using the continuous representation of the planimetric areas transformed into the logarithmic scale. Here, we initially envisioned a Hierarchical Neural Network capable of jointly modeling landslide occurrences and sizes, further improving on previous research by featuring a hierarchical model and also by keeping the planimetric area in its original squared meter scale. With this idea in mind we have run a number of tests but likely due to the heavy tailed distribution of the landslide sizes, our HNN struggled to reproduce the whole distribution. The binary component was never an issue, as also demonstrated by the number of successful ANN applications in the context of landslide modeling (Conforti et al. 2014; Amato et al. 2021a, b). As for the landslide size component, due to the long tailed shape of the landslide planimetric distribution, we opted for a solution with a dual advantage. We used a common solution to this type of problems, by slicing the distribution into four bins (Goel et al. 2010) and adopting a multi-label classifier to take on the task of probabilistically recognizing each bin. In addition to the fact that this type of solution is particularly common, we also exploited the added value brought by the Neural Network architecture itself. In fact, this type of hierarchical models are well studied within the artificial intelligence community, allowing us to bring years of already built-in experience to solve a problem that was still not addressed within the geomorphological community.

This structure produced outstanding results across the two model components, (binary and class). This is shown in Figs. 4 and 7 . And, such observation opens up the question whether this type of models should represent the next direction to be taken when modeling natural hazards. In fact, especially within the geomorphological community, the vast majority of applications are confined within the scope of predicting only landslide occurrences (i.e., susceptibility assessments). Much more rarely, the community extends its reach to assessing the hazard associated with a potential landslide occurrence. This happens because the definition of landslide hazard features a temporal and size components which are not easily modeled. The temporal element is rarely accounted for because until the appearance of semi-automated mapping routines, monitoring a given area and manually mapping multi-temporal inventories has been a very difficult and expensive task (see explanation in Guzzetti et al. 2012). As for the dimension of the landslides, for decades the community has been focused on estimating the landslide event magnitude (Malamud et al. 2004; Tanyaş et al. 2018), a single measure valid for a large population of landslides. Only recently, Lombardo et al. (2021) have proposed a model that spatially estimates how prone slopes may be to release landslides of specific sizes. The combination of the landslide susceptibility and size elements proposed in this work belong to a currently uncharted territory, and their use is not featured in any international guidelines. However, we believe that the type of information compressed in Fig. 8 can be really important to support decision makers and generally for territorial management practices. Knowing or at least expecting which slope is more likely to release an extremely large landslide is an information that at least could save money when investing on slope stabilization. And at its best, it could help minimize the risk to human lives.

It is fair to point out that this type of models are at their infancy stage within the natural hazard community. Therefore, their potential success relies on further testing, before even considering making them part of any well established procedure. For this reason, we are sharing the data as well as the codes we wrote during this experiment. In such a way, we intend to promote repeatability and reproducibility of analogous experiments. For instance, we have currently developed this tool in the framework of co-seismic landslides. But, it is still unclear whether the same HNN is applicable in case of event-based rainfall induced landslides. Or, further tests need to be run on multi-temporal inventories.

9 Concluding remarks

We present the first hierarchical data-driven model able to simultaneously estimate where landslides may occur and to which landslide size class they may belong. We believe this model to be particularly suited for landslide hazard assessment. We recognize that the proposed model does not yet yield a full landslide hazard assessment as it is blind to the expected temporal frequency of such landslides. It should also be recognized, however, that the proposed model provides significantly more information than existing landslide susceptibility models. What makes this model particularly appealing is that its architecture hierarchically links the binary and the class elements. In other words, all the results reported in this manuscript are produced via a single data driven model.

In addition to future tests of the very same model—which we hope to stimulate by sharing data and codes with the readers in a github repository, we expect to venture into additional variations of the present scheme. For instance, the simplest iterations we envision simply reproduce similar tests on rainfall induced landslides or on geomorphological inventories that cover a large time span rather than being linked to a single event. And, we are already planning to extend the complexity even further. In fact, withing the very same hierarchical structure, we could also add the type of landslides in between the binary and the class components. This could provide a complete description of the landslide process, which would need to be “just” extended in time to fully solve any requirement reported in the standard landslide hazard definition.

10 Software and data availability

In the hope of promoting similar applications, we have shared data and codes in a github repository accessible at: click here.

The original landslide inventory can also be downloaded from the global repository of earthquake-induced landslides, accessible at: click here.

References

Agarap AF (2018) Deep learning using rectified linear units (relu). arXiv preprint arXiv:1803.08375

Al Shalabi L, Shaaban Z, Kasasbeh B (2006) Data mining: a preprocessing engine. J Comput Sci 2(9):735–739

Ali PJM, Faraj RH, Koya E, Ali PJM, Faraj RH (2014) Data normalization and standardization: a technical report. Mach Learn Tech Rep 1(1):1–6

Alvioli M, Guzzetti F, Marchesini I (2020) Parameter-free delineation of slope units and terrain subdivision of Italy. Geomorphology, p 107124

Alvioli M, Marchesini I, Guzzetti F (2018) Nation-wide, general-purpose delineation of geomorphological slope units in Italy. Technical report, PeerJ Preprints

Alvioli M, Marchesini I, Reichenbach P, Rossi M, Ardizzone F, Fiorucci F, Guzzetti F (2016) Automatic delineation of geomorphological slope units with r.slopeunits v1.0 and their optimization for landslide susceptibility modeling. Geosci Model Dev 9(11):3975–3991

Amato G, Palombi L, Raimondi V (2021) Data-driven classification of landslide types at a national scale by using Artificial Neural Networks. Int J Appl Earth Obs Geoinf 104:102549

Amato G, Eisank C, Castro-Camilo D, Lombardo L (2019) Accounting for covariate distributions in slope-unit-based landslide susceptibility models. a case study in the alpine environment. Eng Geol 260, In print

Amato G, Fiorucci M, Martino S, Lombardo L, Palombi L (2021a) Earthquake-triggered landslide susceptibility in Italy by means of Artificial Neural Network

Anderson-Bell J, Schillaci C, Lipani A (2021) Predicting non-residential building fire risk using geospatial information and convolutional neural networks. Remote Sens Appl: Soc Environ 21:100470

Atkinson PM, Massari R (1998) Generalised linear modelling of susceptibility to landsliding in the central Apennines, Italy. Comput Geosci 24(4):373–385

Banerjee K, Gupta RR, Vyas K, Mishra B et al (2020) Exploring alternatives to softmax function. arXiv preprint arXiv:2011.11538

Bout B, Lombardo L, van Westen C, Jetten V (2018) Integration of two-phase solid fluid equations in a catchment model for flashfloods, debris flows and shallow slope failures. Environ Model Softw 105:1–16

Budimir M, Atkinson P, Lewis H (2015) A systematic review of landslide probability mapping using logistic regression. Landslides 12(3):419–436

Burbank DW (2005) Cracking the Himalaya. Nature 434(7036):963–964

Carrara A (1988) Drainage and divide networks derived from high-fidelity digital terrain models. In: Quantitative analysis of mineral and energy resources. Springer, pp 581–597

Castro Camilo D, Lombardo L, Mai P, Dou J, Huser R (2017) Handling high predictor dimensionality in slope-unit-based landslide susceptibility models through LASSO-penalized Generalized Linear Model. Environ Model Softw 97:145–156

Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP (2002) SMOTE: synthetic minority over-sampling technique. J Artif Intell Res 16:321–357

Chigira M, Yagi H (2006) Geological and geomorphological characteristics of landslides triggered by the 2004 mid Niigta prefecture earthquake in Japan. Eng Geol 82(4):202–221

Clevert D-A, Unterthiner T, Hochreiter S (2015) Fast and accurate deep network learning by exponential linear units (elus). arXiv preprint arXiv:1511.07289

Conforti M, Pascale S, Robustelli G, Sdao F (2014) Evaluation of prediction capability of the artificial neural networks for mapping landslide susceptibility in the Turbolo River catchment (northern Calabria, Italy). CATENA 113:236–250

Di Napoli M, Carotenuto F, Cevasco A, Confuorto P, Di Martire D, Firpo M, Pepe G, Raso E, Calcaterra D (2020) Machine learning ensemble modelling as a tool to improve landslide susceptibility mapping reliability. Landslides 17(8):1897–1914

Elliott J, Jolivet R, González PJ, Avouac J-P, Hollingsworth J, Searle M, Stevens V (2016) Himalayan megathrust geometry and relation to topography revealed by the Gorkha earthquake. Nat Geosci 9(2):174–180

Ercanoglu M, Gokceoglu C (2004) Use of fuzzy relations to produce landslide susceptibility map of a landslide prone area (West Black Sea Region, Turkey). Eng Geol 75(3–4):229–250

Fan X, Scaringi G, Korup O, West AJ, van Westen CJ, Tanyas H, Hovius N, Hales TC, Jibson RW, Allstadt KE et al (2019) Earthquake-induced chains of geologic hazards: patterns, mechanisms, and impacts. Rev Geophys

Fell R, Corominas J, Bonnard C, Cascini L, Leroi E, Savage WZ et al (2008) Guidelines for landslide susceptibility, hazard and risk zoning for land-use planning. Eng Geol 102(3–4):99–111

Gallen SF, Clark MK, Godt JW, Roback K, Niemi NA (2017) Application and evaluation of a rapid response earthquake-triggered landslide model to the 25 April 2015 Mw 7.8 Gorkha earthquake, Nepal. Tectonophysics 714:173–187

Goel S, Broder A, Gabrilovich E, Pang B (2010) Anatomy of the long tail: ordinary people with extraordinary tastes. In: Proceedings of the third ACM international conference on Web search and data mining, pp 201–210

Goetz JN, Guthrie RH, Brenning A (2011) Integrating physical and empirical landslide susceptibility models using generalized additive models. Geomorphology 129(3–4):376–386

Goodman RE et al (1989) Introduction to Rock Mechanics, vol 2. Wiley, New York

Guzzetti F, Mondini AC, Cardinali M, Fiorucci F, Santangelo M, Chang K-T (2012) Landslide inventory maps: new tools for an old problem. Earth Sci Rev 112(1–2):42–66

Heerdegen RG, Beran MA (1982) Quantifying source areas through land surface curvature and shape. J Hydrol 57(3–4):359–373

Hosmer DW, Lemeshow S (2000) Applied logistic regression, 2nd edn. Wiley, New York

James T, Schillaci C, Lipani A (2021) Convolutional neural networks for water segmentation using sentinel-2 red, green, blue (rgb) composites and derived spectral indices. Int J Remote Sens 42(14):5338–5365

Jasiewicz J, Stepinski TF (2013) Geomorphons: a pattern recognition approach to classification and mapping of landforms. Geomorphology 182:147–156

Jenks GF (1967) The data model concept in statistical mapping. Int Yearbook Cartography 7:186–190

Kargel JS, Leonard GJ, Shugar DH, Haritashya UK, Bevington A, Fielding E, Fujita K, Geertsema M, Miles E, Steiner J et al (2016) Geomorphic and geologic controls of geohazards induced by Nepal’s 2015 Gorkha earthquake. Science 351(6269)

Khamis N, Sin TC, Hock G C (2018) Segmentation of residential customer load profile in peninsular Malaysia using Jenks natural breaks. In: 2018 IEEE 7th international conference on power and energy (PECon), pp 128–131

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980

Kumar Y, Dubey AK, Arora RR, Rocha A (2020) Multiclass classification of nutrients deficiency of apple using deep neural network. Neural Comput Appl, pp 1–12

Lee S, Ryu J-H, Won J-S, Park H-J (2004) Determination and application of the weights for landslide susceptibility mapping using an artificial neural network. Eng Geol 71(3–4):289–302

Li WC, Li HJ, Dai F, Lee LM (2012) Discrete element modeling of a rainfall-induced flowslide. Eng Geol 149:22–34

Ling S, Chigira M (2020) Characteristics and triggers of earthquake-induced landslides of pyroclastic fall deposits: an example from Hachinohe during the 1968 M7. 9 Tokachi-Oki earthquake, Japan. Eng Geol 264:105301

Lombardo L, Mai PM (2018) Presenting logistic regression-based landslide susceptibility results. Eng Geol 244:14–24

Lombardo L, Tanyas H (2020) Chrono-validation of near-real-time landslide susceptibility models via plug-in statistical simulations. Eng Geol 278:105818

Lombardo L, Cama M, Conoscenti C, Märker M, Rotigliano E (2015) Binary logistic regression versus stochastic gradient boosted decision trees in assessing landslide susceptibility for multiple-occurring landslide events: application to the 2009 storm event in Messina (Sicily, southern Italy). Nat Hazards 79(3):1621–1648

Lombardo L, Fubelli G, Amato G, Bonasera M (2016) Presence-only approach to assess landslide triggering-thickness susceptibility: a test for the Mili catchment (north-eastern Sicily, Italy). Nat Hazards 84(1):565–588

Lombardo L, Saia S, Schillaci C, Mai PM, Huser R (2018) Modeling soil organic carbon with Quantile Regression: dissecting predictors’ effects on carbon stocks. Geoderma 318:148–159

Lombardo L, Bakka H, Tanyas H, van Westen C, Mai PM, Huser R (2019) Geostatistical modeling to capture seismic-shaking patterns from earthquake-induced landslides. J Geophys Res Earth Surf 124(7):1958–1980

Lombardo L, Tanyas H, Huser R, Guzzetti F, Castro-Camilo D (2021) Landslide size matters: a new data-driven, spatial prototype. Eng Geol 293:106288

Lombardo L, Tanyas H, Nicu IC (2020) Spatial modeling of multi-hazard threat to cultural heritage sites. Eng Geol, p 105776

Lu L, Shin Y, Su Y, Karniadakis GE (2019) Dying relu and initialization: theory and numerical examples. arXiv preprint arXiv:1903.06733

Lydia A, Francis S (2019) Adagrad-an optimizer for stochastic gradient descent. Int J Inf Comput Sci 6(5)

Maas AL, Hannun AY, Ng AY et al (2013) Rectifier nonlinearities improve neural network acoustic models. InI Proceedings of ICML, 30:3

Malamud BD, Turcotte DL, Guzzetti F, Reichenbach P (2004) Landslides, earthquakes, and erosion. Earth Planet Sci Lett 229(1–2):45–59

Marjanović M, Kovačević M, Bajat B, Voženílek V (2011) Landslide susceptibility assessment using SVM machine learning algorithm. Eng Geol 123(3):225–234

Naranjo JL, Van Westen C, Soeters R (1994) Evaluating the use of training areas in bivariate statistical landslide hazard analysis-a case study in Colombia. ITC J 3:292–300

Ngah S, Bakar RA, Embong A, Razali S (2016) Two-steps implementation of sigmoid function for artificial neural network in field programmable gate array. ARPN J Eng Appl Sci 11(7):4882–4888

Park S, Kwak N (2016) Analysis on the dropout effect in convolutional neural networks. In: Asian conference on computer vision, pp 189–204

Peart M (1991) The kaiapit landslide: events and mechanisms. Q J Eng Geol Hydrogeol 24(4):399–411

Petley D (2012) Global patterns of loss of life from landslides. Geology 40(10):927–930

Pimont F, Fargeon H, Opitz T, Ruffault J, Barbero R, Martin-StPaul N, Rigolot E, Rivière M, Dupuy J-L (2021) Prediction of regional wildfire activity in the probabilistic Bayesian framework of Firelihood. Ecol Appl 31(5):e02316

Raychaudhuri S (2008) Introduction to monte carlo simulation. In: 2008 Winter simulation conference, pp 91–100

Reichenbach P, Rossi M, Malamud BD, Mihir M, Guzzetti F (2018) A review of statistically-based landslide susceptibility models. Earth Sci Rev 180:60–91

Roback K, Clark MK, West AJ, Zekkos D, Li G, Gallen SF, Chamlagain D, Godt JW (2018) The size, distribution, and mobility of landslides caused by the 2015 Mw7.8 Gorkha earthquake, Nepal. Geomorphology 301:121–138

Roback K, Clark M, West A, Zekkos D, Li G, Gallen S, Godt J (2017) Map data of landslides triggered by the 25 April 2015 Mw 7.8 Gorkha, Nepal earthquake. US Geological Survey data release

Sappington JM, Longshore KM, Thompson DB (2007) Quantifying landscape ruggedness for animal habitat analysis: a case study using bighorn sheep in the Mojave Desert. J Wildl Manag 71(5):1419–1426

Schillaci C, Perego A, Valkama E, Märker M, Saia S, Veronesi F, Lipani A, Lombardo L, Tadiello T, Gamper HA, Tedone L, Moss C, Pareja-Serrano E, Amato G, Kühl K, Dǎmǎtîrcǎ C, Cogato A, Mzid N, Eeswaran R, Rabelo M, Sperandio G, Bosino A, Bufalini M, Tunçay T, Ding J, Fiorentini M, Tiscornia G, Conradt S, Botta M, Acutis M (2021) New pedotransfer approaches to predict soil bulk density using wosis soil data and environmental covariates in mediterranean agro-ecosystems. Sci Total Environ 780:146609

Schmitt RG, Tanyas H, Jessee MAN, Zhu J, Biegel KM, Allstadt KE, Jibson RW, Thompson EM, van Westen CJ, Sato HP, Wald DJ, Godt JW, Gorum T, Xu C, Rathje EM, Knudsen KL (2017) An open repository of earthquake-triggered ground-failure inventories. U.S, Geological Survey Data Series, p 1064

Sharma S, Sharma S (2017) Activation functions in neural networks. Towards Data Sci 6(12):310–316

Shrestha S, Kang T-S (2019) Assessment of seismically-induced landslide susceptibility after the 2015 Gorkha earthquake, Nepal. Bull Eng Geol Environ 78(3):1829–1842

Srivastava N (2013) Improving neural networks with dropout. Univ Toronto 182(566):7

Steger S, Mair V, Kofler C, Pittore M, Zebisch M, Schneiderbauer S (2021) Correlation does not imply geomorphic causation in data-driven landslide susceptibility modelling: benefits of exploring landslide data collection effects. Sci Total Environ 776:145935

Süzen ML, Doyuran V (2004) A comparison of the GIS based landslide susceptibility assessment methods: multivariate versus bivariate. Environ Geol 45(5):665–679

Tanyaş H, van Westen C, Allstadt K, Nowicki AJM, Görüm T, Jibson R, Godt J, Sato H, Schmitt R, Marc O, Hovius N (2017) Presentation and analysis of a worldwide database of earthquake-induced landslide inventories. J Geophys Res Earth Surf 122(10):1991–2015

Tanyaş H, Allstadt KE, van Westen CJ (2018) An updated method for estimating landslide-event magnitude. Earth Surf Proc Land 43(9):1836–1847

Tanyaş H, Rossi M, Alvioli M, van Westen CJ, Marchesini I (2019) A global slope unit-based method for the near real-time prediction of earthquake-induced landslides. Geomorphology 327:126–146

Tanyaş H, Kirschbaum D, Lombardo L (2021) Capturing the footprints of ground motion in the spatial distribution of rainfall-induced landslides. Bull Eng Geol Environ 80(6):4323–4345

Tanyas H, Hill K, Mahoney L, Fadel I, Lombardo L (2021) The world’s second-largest, recorded landslide event: lessons learnt from the landslides triggered during and after the 2018 Mw 7.5 Papua New Guinea earthquake

Tanyaş H, Lombardo L (2020) Completeness index for earthquake-induced landslide inventories. Eng Geol 264:105331

Titti G, van Westen C, Borgatti L, Pasuto A, Lombardo L (2021) When enough is really enough? On the minimum number of landslides to build reliable susceptibility models. Geosciences 11(11):469

Van den Bout B, Lombardo L, Chiyang M, van Westen C, Jetten V (2021) Physically-based catchment-scale prediction of slope failure volume and geometry. Eng Geol 284:105942

Van Rossum G, Drake Jr FL (1995) Python reference manual. Centrum voor Wiskunde en Informatica Amsterdam

Varshney M, Singh P (2021) Optimizing nonlinear activation function for convolutional neural networks. Signal, Image and Video Processing, pp 1–8

Verros SA, Wald DJ, Worden CB, Hearne M, Ganesh M (2017) Computing spatial correlation of ground motion intensities for Shakemap. Comput Geosci 99:145–154

Verstappen HT (1983) Applied geomorphology: geomorphological surveys for environmental development. Number 551.4 VER

Wichrowska O, Maheswaranathan N, Hoffman MW, Colmenarejo SG, Denil M, Freitas N, Sohl-Dickstein J (2017) Learned optimizers that scale and generalize. In: International conference on machine learning, pp 3751–3760

Worden C, Wald D (2016) ShakeMap manual online: technical manual, user’s guide, and software guide. US Geol, Surv

Yang L, Meng X, Zhang X (2011) SRTM DEM and its application advances. Int J Remote Sens 32(14):3875–3896

Zevenbergen LW, Thorne CR (1987) Quantitative analysis of land surface topography. Earth Surf Proc Land 12(1):47–56

Acknowledgements

We would like to thank the five anonymous reviewers for their insightful comments. This article was partially supported by King Abdullah University of Science and Technology (KAUST) in Thuwal, Saudi Arabia, Grant URF/1/4338-01-01.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Further details on the proposed model

Appendix A: Further details on the proposed model

1.1 A.1 Activation functions

The binary output has a sigmoid activation function, which produces values in between 0 and 1. The sigmoid was chosen because it is a common output activation when making binary classifications (Ngah et al. 2016). The class output uses a SoftMax (Sharma and Sharma 2017) activation function with four outputs, one for each possible classification of landslide size. SoftMax was also chosen based on its common usage for multiclass classification models (Banerjee et al. 2020; Kumar et al. 2020).

In the first batch of hidden layers, a Rectified Linear Unit (ReLU, Agarap 2018) is used as the activation function for all layers, as is common in many contemporary neural networks (Varshney and Singh 2021). In the second batch of hidden layers, Leaky ReLUs (Clevert et al. 2015) are used to combat the somewhat common dying ReLU (Lu et al. 2019) problem that was encountered when initially attempting to build the network with standard ReLUs. The dying ReLU problem occurs when a standard ReLU function learns a large negative bias term for its weights, and given the shape of the function, this can result in the function exclusively outputting zero. Using a Leaky Relu addresses this issue by allowing the function to generate a small negative output when the function returns a negative value (Maas et al. 2013). Using leaky ReLUs in the second batch of hidden layers allowed the network to successfully overcome the dying ReLU problem.

1.2 A.2 Regularization

On each neuron in the hidden and output layers, kernel regularization reduces the values of the weights learned to prevent overfitting to training data. The type of regularization used is L2. L2 regularization applies a penalty to the weights learned of each neuron and is proportional to the initial weights learned. This means that while the weights will decrease in magnitude and be “closer to zero”, they will never reach zero (provided that the initial weight is not zero) and this will prevent overfitting while maintaining the general distribution of the weights learned. The range of values tested for finding the optimal L2 value in hyperparameter tuning can be found in Table 5.

1.3 A.3 Dropout layers

Dropout approaches are technically considered a type of activity regularization but are discussed here separately because they operate in a way distinctly different from the L2 regularization. The key idea behind dropouts is to randomly remove neurons (and their connections) from a network during training. This is designed to prevent the neurons from coadapting excessively. Excessive co-adaption can lead to overtraining, and studies have shown that adding dropout layers to various kinds of neural network markedly reduces overfitting and increases performance (Park and Kwak 2016). The neurons dropped in any given layer are chosen randomly, but the proportion of neurons that are dropped is defined by a predetermined dropout rate. In this study, the dropout rate is tested at values from 0.2 to 0.8 in increasing increments of 0.2. These values were chosen based on a study by Srivastava (2013), that found optimal dropout rates typically are found between these values.

This additional form of activity regularization is included because Srivastava (2013) find that coupling dropout rates with other forms of regularization can further improve the performance of neural networks. In their study, they coupled dropout regularization with L2, and max-norm activity regularization, and find that in both instances, the coupled techniques outperformed any individual technique used on its own.

1.4 A.4 Loss functions

Binary cross entropy was used on the binary output, and categorical cross entropy on the class output. Binary cross entropy, also known as log-loss is defined by the formula below:

where \({\hat{y}}_i\) is the predicted output value and \(y_i\) is the observed value of the ith model output. This loss function penalizes the result based on the distance of the prediction from the observed value. This means that high loss values indicate larger distances of predictions from the observed values. Categorical cross entropy performs a very similar role but is formatted to deal with multiple classes (in this case four). The notation is the same as for binary cross entropy.

In this case, \({\hat{y}}_i\) is the probability an event will occur and the sum of all \({\hat{y}}_i\) values is equal to 1 (one of the events will occur). When used in this paper, the class 0 landslide is treated as an event even though it technically represents the absence of an event to ensure that the sum of all \({\hat{y}}_i\) probabilities is equal to one even if no landslide is likely. Finally, the total loss of the model is the sum of both output’s loss:

1.5 A.5 Loss weight ratio testing

The aim of loss weight ratio testing is to be able to better understand the impact that the presence of the first output has on the second, and vice versa, as well as to find the optimal combination for producing results. During this stage, the loss equation 4 is updated to the equation below, where \(\omega _1\) and \(\omega _2\) are the weights for each of the individual loss functions.

By prescribing loss weights in a multi-output model, it is possible to specify the extent to which each output’s loss contributes to the overall loss of the model. The objective is to examine how changes in these weights impact the learning process and results. As administered in this study, 11 combinations of weights are tested, with each combination of weights summing to 10, as shown in Fig. 10.

1.6 A.6 Optimizer

Recently introduced by Kingma and Ba (2014), the Adam optimizer was chosen based on its superior performance compared to other optimizers such as AdaGrad (Lydia and Francis 2019) or RMSProp (Wichrowska et al. 2017). Adam is algorithm for gradient-descent optimization based around “adaptive estimates of lower order moments”. This means that as the algorithm iterates, the parameters change. In the case of gradient descent, this means the learning rate starts at a large rate and decreases as the process continues to ensure convergence occurs at the correct minimum. It is computationally efficient and well suited to problems that use large amounts of data and parameters (Kingma and Ba 2014). The authors also suggest a learning rate of 0.001, although in this paper several learning rates are tested, visible in table 4. The keras default setting for the Adam optimizer (’adam’) is also trialed.

1.7 A.7 Hyperparameter tuning

The range of tunable hyperparameters and the values tested is visible in Table 5:

Due to the size of the search space (there are over 100,000 combinations) and the number of records (120,000+) an exhaustive grid search would have been very computationally demanding. Therefore, a Montecarlo hyperparameter tuning approach was used instead.

Montecarlo tuning is a process that relies on repeated random sampling to determine appropriate hyperparameters (Raychaudhuri 2008). In this case, the process consisted of generating a random combination of hyperparameters 1000 times, with non-duplicate combinations being added to a cache. The training data was then split into test and validation training sets at an 80:20 ratio and run for 300 epochs. An early stopping mechanism was implemented whereby if the validation loss did not decrease for 15 epochs, then the training process halted. Each set of parameters was tested 5 times and the average minimum validation loss was added to the cache with its corresponding parameter combination. The parameter combination with the lowest average validation loss value was then selected as the final model.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aguilera, Q., Lombardo, L., Tanyas, H. et al. On the prediction of landslide occurrences and sizes via Hierarchical Neural Networks. Stoch Environ Res Risk Assess 36, 2031–2048 (2022). https://doi.org/10.1007/s00477-022-02215-0

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00477-022-02215-0