Abstract

This paper introduces a metamodelling technique that employs gradient-enhanced Gaussian process regression (GPR) to emulate diverse internal energy densities as functions of the deformation gradient tensor \(\varvec{F}\) and the electric displacement field \(\varvec{D}_0\). Principal invariants are used as inputs of the surrogate internal energy density, so that physical constraints such as material frame indifference and material symmetry are enforced a priori. The technique accurately interpolates the energy and its derivatives, namely the first Piola-Kirchhoff stress tensor and the material electric field. It also guarantees stress-free and electric field-free conditions at the origin, which are difficult to enforce with regression-based methods such as neural networks. The paper further shows that using invariants of the dual potential of the internal energy density, i.e., the free energy density depending on the material electric field \(\varvec{E}_0\), is inappropriate. The saddle point nature of the latter, in contrast with the convexity of the internal energy density, creates challenges for GPR or Gradient Enhanced GPR models based on invariants of \(\varvec{F}\) and \(\varvec{E}_0\) (free energy-based GPR) compared with those based on \(\varvec{F}\) and \(\varvec{D}_0\) (internal energy-based GPR). Numerical examples within a 3D Finite Element framework assess the accuracy of the surrogate models in challenging scenarios by comparing displacement and stress fields with those of the ground-truth analytical models. The cases considered include extreme twisting and electrically induced wrinkles, demonstrating the practical applicability and robustness of the proposed approach.

1 Introduction

Electroactive Polymers (EAPs) have emerged as a class of smart materials capable of undergoing large changes in shape in response to electrical stimuli [9, 32, 53,54,55]. Among these, dielectric elastomers are particularly noteworthy for their remarkable actuation capabilities, owing to their light weight, fast response, flexibility and low stiffness. Notably, these materials can undergo very large electrically induced strains, with area expansions of up to 1962% reported by the Suo Lab at Harvard [37]. Their potential applications span bio-inspired robotics [8, 11, 29, 36, 47], humanoid robotics and advanced prosthetics [9, 40, 68], as well as tissue regeneration [48].

Nonlinear continuum mechanics has reached an advanced stage of development for EAPs, encompassing variational formulations, finite element implementations and the principles of constitutive modeling [6, 7, 16, 17, 67]. Regarding the latter, the reversible constitutive response of dielectric elastomers is encoded in the free energy density, which depends on the deformation gradient tensor \(\varvec{F}\) and the material electric field \(\varvec{E}_0\). This potential has a saddle point nature. Its dual is the internal energy density, which depends on the deformation gradient tensor and the electric displacement field \(\varvec{D}_0\). Building upon this foundation, the authors of [22, 51, 52] extended the concept of polyconvexity, originally from hyperelasticity [2, 12,13,14, 64], to this coupled electromechanical setting. This definition of polyconvexity was key to establishing, for the first time, the existence of minimizers in this context [65], and it is a sufficient condition for the electromechanical extension of the rank-one convexity criterion.

Despite their considerable potential, EAPs require large electric fields to induce significant deformation, which makes them prone to electromechanical instabilities or electrical breakdown [4, 57, 70]. To reduce the required operating voltage, some researchers propose composite materials as the basis for EAPs. These composites often combine a soft, low-permittivity elastomer matrix with stiffer, higher-permittivity inclusions distributed randomly as fibers or particles. Experimental investigations have shown a substantial enhancement of the coupled electromechanical performance of such electroactive composites, thereby lowering the voltage required for actuation. A noteworthy development in recent years is that of rank-one laminated composite dielectric elastomers [15, 43, 44].

The way in which the macroscopic constitutive response of a composite material is determined depends on the type of microstructure considered. For laminated composites, the homogenization problem at the microstructure level is governed by a system of nonlinear equations. These equations implicitly define the microstructural parameters in terms of the macroscopic deformation gradient tensor and electric displacement field [15, 21, 58]. For more intricate microstructures, such as randomly distributed inclusions embedded in an elastomeric matrix, computationally intensive homogenization techniques are required. These methods have an important limitation. Since EAPs behave nonlinearly, their macroscopic response depends nonlinearly on the macroscopic deformation and electric (or magnetic) field. Consequently, a boundary value problem with suitable boundary conditions must be solved at the micro level for every combination of macroscopic deformation and electric/magnetic field [63].

To mitigate the high computational cost of methods such as computational homogenization, Machine Learning algorithms have recently emerged in nonlinear continuum mechanics. These methods allow constitutive models to be generated from data gathered in experimental tests or in-silico (computational) simulations. This paper explores a specific Machine Learning technique, namely Gaussian Process Regression (GPR), and demonstrates its ability to approximate analytical constitutive models in electromechanics, particularly for a category of composites known as rank-one laminates. Applying rank-n homogenization principles, the homogenized (effective) response of rank-one laminates can be derived almost analytically, without computational homogenization. Using in-silico data generated with this model, the objective is to create surrogate models capable of replicating its behaviour. This initial focus on simpler scenarios is intended to demonstrate the feasibility and accuracy of the GPR technique. It sets the stage for addressing more complex composites in future work, where the surrogate would eliminate the need for computational homogenization and enable a computationally efficient evaluation of their effective behaviour [63].

Artificial Neural Networks (ANNs) have been employed to learn or discover constitutive models from data generated either in silico or in physical laboratories, as demonstrated in several studies [10, 20, 31, 38, 39]. The work by Klein [31] is a pioneering application of ANNs to the discovery of constitutive laws in nonlinear electromechanics. Gaussian Process Regression (GPR) has also gained traction. For instance, Aggarwal et al. [27] used it to develop data-based constitutive models for soft tissue at moderate strains. A distinctive feature of GPR compared with ANNs is its inherently probabilistic nature. GPR allows prior knowledge to be specified, yields a distribution over candidate predictive functions, and provides prediction uncertainties directly [5, 41, 56]. Moreover, GPR (or Kriging) offers control over the degree of interpolation between known points through the specification of noise in the correlation function [20].

Kriging [33, 46, 62] predicts the values of a Gaussian random field from observations at a finite set of points. It has found applications in geostatistics, approximation of numerical codes, global optimization and machine learning [28, 46, 56, 61]. It defines a joint Gaussian distribution over observations and predictions, in which a spatially correlated covariance weights the importance of each observation. Conditioning this joint distribution on the observed data yields a predictive distribution characterized by a mean and a covariance, from which predictions can be sampled [49]. This type of emulator has become popular because it captures nonlinear functions and provides statistical output [28, 45]. This output enables confidence intervals and adaptive refinement strategies for the metamodel.

This paper proposes a gradient-enhanced Gaussian process regression metamodelling technique for emulating the internal energy densities that characterize the behaviour of soft/flexible EAPs. The method enforces physical constraints a priori by using principal invariants as inputs. Gradient-enhanced Kriging accurately interpolates the energy and its derivatives, i.e., the first Piola-Kirchhoff stress tensor and the material electric field. Incorporating derivatives reduces the number of sampling points needed for a given accuracy. In contrast to neural networks, Kriging is interpolatory and therefore reproduces the stress tensor exactly at the sample points, which ensures compliance with the stress-free condition at the origin.

The paper is structured as follows. Sect. 2 introduces the essential elements of nonlinear continuum electromechanics, with emphasis on constitutive modeling. Sect. 3 provides a comprehensive and self-contained overview of Gaussian Process Regression (GPR), or Kriging. In Sect. 4, Kriging-based surrogate models are calibrated using synthetic data derived from well-established ground-truth internal energy densities. Finally, Sect. 5 illustrates the application of these surrogate models within a 3D Finite Element computational framework. Their accuracy is assessed in diverse and demanding scenarios by comparing displacement and stress fields with those of the corresponding ground-truth analytical models.

Notation Throughout this paper, \(\varvec{A}:\varvec{B}=A_{IJ}B_{IJ}\), \(\forall \varvec{A},\varvec{B}\in {\mathbb {R}}^{3\times 3}\), and the use of repeated indices implies summation. The tensor product is denoted by \(\otimes \) and the second order identity tensor by \(\varvec{I}\). The tensor cross product between two arbitrary second order tensors \(\varvec{A}\) and \(\varvec{B}\) is defined as \((\varvec{A}\times \varvec{B})_{iI}={\mathcal {E}}_{ijk}{\mathcal {E}}_{IJK}A_{jJ}B_{kK}\), where \(\varvec{{\mathcal {E}}}\) represents the third-order alternating tensor. The full and special orthogonal groups in \({\mathbb {R}}^3\) are represented as \(\text {O}(3)=\{\varvec{A}\in {\mathbb {R}}^{3\times 3}\,\vert \,\varvec{A}^T\varvec{A}=\varvec{I}\}\) and \(\text {SO}(3)=\{\varvec{A}\in {\mathbb {R}}^{3\times 3}\,\vert \,\varvec{A}^T\varvec{A}=\varvec{I},\,\text {det}\varvec{A}=1\}\), respectively. The set of invertible second order tensors with positive determinant is denoted by \(\text {GL}^+(3)=\{\varvec{A}\in {\mathbb {R}}^{3\times 3}\,\vert \,\text {det}{\varvec{A}}>0\}\).
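The tensor cross product is useful because it yields the cofactor of \(\varvec{F}\) compactly as \(\varvec{H}=\tfrac{1}{2}\varvec{F}\times \varvec{F}\), and the determinant as \(J=\tfrac{1}{3}\varvec{H}:\varvec{F}\). The following is a minimal NumPy sketch for illustration only; it is not code from this work. It implements the index definition above with `numpy.einsum` and checks both identities against \(\det (\varvec{F})\varvec{F}^{-T}\). The names `LEVI_CIVITA` and `tensor_cross` are hypothetical.

```python
import numpy as np

# Third-order alternating (Levi-Civita) tensor E_ijk
LEVI_CIVITA = np.zeros((3, 3, 3))
for i, j, k in [(0, 1, 2), (1, 2, 0), (2, 0, 1)]:
    LEVI_CIVITA[i, j, k] = 1.0   # even permutations
    LEVI_CIVITA[i, k, j] = -1.0  # odd permutations


def tensor_cross(A, B):
    """Tensor cross product (A x B)_iI = E_ijk E_IJK A_jJ B_kK of two 3x3 arrays."""
    return np.einsum("ijk,IJK,jJ,kK->iI", LEVI_CIVITA, LEVI_CIVITA, A, B)


# Usage: cofactor H = (1/2) F x F and determinant J = (1/3) H : F
F = np.array([[1.1, 0.2, 0.0],
              [0.0, 0.9, 0.1],
              [0.05, 0.0, 1.2]])
H = 0.5 * tensor_cross(F, F)
J = np.sum(H * F) / 3.0
print(np.allclose(H, np.linalg.det(F) * np.linalg.inv(F).T))  # True
print(np.isclose(J, np.linalg.det(F)))                        # True
```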

2 Finite strain electromechanics

2.1 Differential governing equations in finite strain electromechanics

Let \({\mathcal {B}}_0\) denote a subset of the three-dimensional Euclidean space \({\mathbb {R}}^3\) representing the initial, undeformed configuration of an Electroactive Polymer (EAP). We assume the existence of an injective mapping \(\varvec{\phi }\) that maps each point \(\varvec{X}\) of the material configuration \({\mathcal {B}}_0\) to a point \(\varvec{x}\) of the deformed, spatial configuration \({\mathcal {B}}\subset {\mathbb {R}}^3\), i.e., \(\varvec{x}=\varvec{\phi }(\varvec{X})\) (see Fig. 1). Associated with \(\varvec{\phi }\), the deformation gradient tensor \(\varvec{F}\in \text {GL}^+(3)\) is defined as \(\varvec{F} = \partial _{\varvec{X}}\varvec{\phi }\).

The behavior of the EAP represented by \({\mathcal {B}}_0\) is governed by the ensuing coupled boundary value problem:

where the equations on the left correspond to the purely mechanical problem and those on the right to electrostatics. In (1), \(\text {DIV}(\bullet )\) denotes the divergence with respect to the material coordinates \(\varvec{X}\in {\mathcal {B}}_0\), and \(\varvec{f}_0\) represents the force per unit undeformed volume of \({\mathcal {B}}_0\). Dirichlet boundary conditions for the field \(\varvec{\phi }\) are imposed on \(\partial _{\varvec{\phi }}{\mathcal {B}}_0\). The vector \(\varvec{t}_0\) represents a force per unit undeformed area applied on \(\partial _{\varvec{t}}{\mathcal {B}}_0\), with \(\varvec{N}\) the outward unit normal at \(\varvec{X}\in \partial _{\varvec{t}}{\mathcal {B}}_0\). On the right hand side of (1), \(\rho _0\) represents an electric charge per unit undeformed volume of \({\mathcal {B}}_0\). Dirichlet boundary conditions for the field \(\varphi \) are prescribed on \(\partial _{{\varphi }}{\mathcal {B}}_0\). The scalar \(\omega _0\) represents an electric charge per unit undeformed area of \(\partial _{{\omega }}{\mathcal {B}}_0\), with \(\varvec{N}\) the outward unit normal at \(\varvec{X}\in \partial _{{\omega }}{\mathcal {B}}_0\). For both physical problems, the boundaries where Dirichlet and Neumann boundary conditions are prescribed satisfy \(\partial _{\varvec{\phi }}{\mathcal {B}}_0\cup \partial _{\varvec{t}}{\mathcal {B}}_0=\partial _{{\varphi }}{\mathcal {B}}_0\cup \partial _{{\omega }}{\mathcal {B}}_0=\partial {\mathcal {B}}_0\) and \(\partial _{\varvec{\phi }}{\mathcal {B}}_0\cap \partial _{\varvec{t}}{\mathcal {B}}_0=\partial _{{\varphi }}{\mathcal {B}}_0\cap \partial _{{\omega }}{\mathcal {B}}_0=\emptyset \).

Finally, \(\varvec{P}\) and \(\varvec{D}_0\) denote the first Piola-Kirchhoff stress tensor and the material electric displacement field, respectively. They are related to the deformation gradient tensor \(\varvec{F}\) and the material electric field \(\varvec{E}_0\) through an appropriate constitutive law, described in Sect. 2.2.

2.2 The internal energy density in electromechanics

The constitutive model of the undeformed solid \({\mathcal {B}}_0\) is encapsulated in the internal energy density per unit undeformed volume, denoted as \(e(\varvec{F},\varvec{D}_0)\).

Differentiating the internal energy density with respect to \(\varvec{F}\) and \(\varvec{D}_0\) yields the first Piola-Kirchhoff stress tensor \(\varvec{P}\) and the material electric field \(\varvec{E}_0\) appearing in Eq. (1), namely \(\varvec{P}=\partial _{\varvec{F}}e(\varvec{F},\varvec{D}_0)\) and \(\varvec{E}_0=\partial _{\varvec{D}_0}e(\varvec{F},\varvec{D}_0)\).

The internal energy density \(e(\varvec{F},\varvec{D}_0)\) must satisfy the principle of objectivity, also known as material frame indifference. That is, it must be invariant under rotations \(\varvec{Q}\in \text {SO}(3)\) applied to the spatial configuration: \(e(\varvec{Q}\varvec{F},\varvec{D}_0)=e(\varvec{F},\varvec{D}_0)\) for all \(\varvec{Q}\in \text {SO}(3)\).

Moreover, the internal energy density must comply with the material symmetry group \({\mathcal {G}}\subseteq \text {O}(3)\), which determines the isotropic or anisotropic character of the material: \(e(\varvec{F}\varvec{Q}^T,\varvec{Q}\varvec{D}_0)=e(\varvec{F},\varvec{D}_0)\) for all \(\varvec{Q}\in {\mathcal {G}}\).

Furthermore, the internal energy density \(e(\varvec{F},\varvec{D}_0)\), the first Piola-Kirchhoff stress tensor \(\varvec{P}\) and the material electric field \(\varvec{E}_0\) must all vanish in the reference state, i.e., \(e(\varvec{I},\varvec{0})=0\), \(\varvec{P}(\varvec{I},\varvec{0})=\varvec{0}\) and \(\varvec{E}_0(\varvec{I},\varvec{0})=\varvec{0}\).
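The sketch below makes the derivative definitions and the reference-state conditions concrete. It uses a hypothetical internal energy that is not one of the ground-truth models of this paper: a compressible neo-Hookean term plus an ideal-dielectric term \((\varvec{F}\varvec{D}_0)\cdot (\varvec{F}\varvec{D}_0)/(2\varepsilon J)\). The parameters `MU`, `LAM` and `EPS` are made up. The code evaluates \(\varvec{P}=\partial _{\varvec{F}}e\) and \(\varvec{E}_0=\partial _{\varvec{D}_0}e\) in closed form and checks them against central differences. It also confirms that \(e\), \(\varvec{P}\) and \(\varvec{E}_0\) vanish at \(\varvec{F}=\varvec{I}\), \(\varvec{D}_0=\varvec{0}\).

```python
import numpy as np

# Hypothetical material parameters (not taken from the paper)
MU, LAM, EPS = 1.0, 10.0, 1.0


def energy(F, D0):
    """Illustrative e(F, D0): compressible neo-Hookean + ideal dielectric, with d = F D0."""
    J = np.linalg.det(F)
    d = F @ D0
    return (0.5 * MU * (np.sum(F * F) - 3.0) - MU * np.log(J)
            + 0.5 * LAM * (J - 1.0) ** 2 + d @ d / (2.0 * EPS * J))


def derivatives_exact(F, D0):
    """Closed-form P = de/dF and E0 = de/dD0 of the energy above."""
    J = np.linalg.det(F)
    FinvT = np.linalg.inv(F).T
    d = F @ D0
    P = (MU * (F - FinvT) + LAM * (J - 1.0) * J * FinvT
         + np.outer(d, D0) / (EPS * J) - (d @ d) / (2.0 * EPS * J) * FinvT)
    E0 = F.T @ d / (EPS * J)
    return P, E0


def derivatives_fd(F, D0, h=1e-6):
    """Central-difference approximations of P and E0."""
    P = np.zeros((3, 3))
    for i in range(3):
        for j in range(3):
            dF = np.zeros((3, 3))
            dF[i, j] = h
            P[i, j] = (energy(F + dF, D0) - energy(F - dF, D0)) / (2.0 * h)
    E0 = np.zeros(3)
    for k in range(3):
        dD = np.zeros(3)
        dD[k] = h
        E0[k] = (energy(F, D0 + dD) - energy(F, D0 - dD)) / (2.0 * h)
    return P, E0


# Reference state: e, P and E0 must all vanish
I3, zero = np.eye(3), np.zeros(3)
P_ref, E_ref = derivatives_exact(I3, zero)
print(energy(I3, zero), np.abs(P_ref).max(), np.abs(E_ref).max())

# Away from the origin, the closed form agrees with finite differences
F = np.array([[1.1, 0.05, 0.0], [0.0, 0.95, 0.02], [0.01, 0.0, 1.05]])
D0 = np.array([0.1, -0.2, 0.3])
P_fd, E_fd = derivatives_fd(F, D0)
P_ex, E_ex = derivatives_exact(F, D0)
print(np.allclose(P_fd, P_ex, atol=1e-6), np.allclose(E_fd, E_ex, atol=1e-6))
```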

The conditions in Eqs. (5), (6), and (7) are essential physical requirements. In addition, the internal energy density must satisfy suitable mathematical requirements, which are conventionally rooted in the concept of convexity. One of the simplest is convexity of \(e(\varvec{F},\varvec{D}_0)\), that is

which requires positive semi-definiteness of the Hessian operator \([{\mathbb {H}}_e]\), defined as

However, convexity of \(e(\varvec{F},\varvec{D}_0)\) beyond the vicinity of the origin (\(\varvec{F} \approx \varvec{I}\), \(\varvec{D}_0\approx \varvec{0}\)) is not physically suitable, as it precludes realistic material behaviours such as buckling [2]. An alternative mathematical constraint is the quasiconvexity of \(e(\varvec{F},\varvec{D}_0)\) [3]. Unfortunately, quasiconvexity is a nonlocal condition that is difficult, and in general infeasible, to verify. A requirement implied by quasiconvexity is the generalized rank-one convexity of \(e(\varvec{F},\varvec{D}_0)\). A generalized rank-one convex energy density satisfies

Remark 1

Notice that the vector \(\varvec{V}_{\perp }\) in (10) is orthogonal to \(\varvec{V}\). This choice stems from the analysis of the hyperbolicity of the system of PDEs in (1) in the dynamic context. In this case, it is customary to express the fields \(\varvec{\phi }\) and \(\varvec{D}_0\) as perturbations of the equilibrium states \(\varvec{\phi }^{\text {eq}}\) and \(\varvec{D}_0^{\text {eq}}\), respectively, by adding travelling wave functions as

where \(\varvec{V}\) represents the direction of propagation of the travelling wave and c the associated speed of propagation of the perturbation, with amplitudes \(\varvec{u}\) and \(\varvec{V}_{\perp }\). Substituting the ansatz for \(\varvec{D}_0\) into Gauss's law in Eq. (1) reveals that

and therefore, \(\varvec{V}_{\perp }\) must be orthogonal to \(\varvec{V}\).

Condition (10) is known as the Legendre-Hadamard condition, or ellipticity of \(e(\varvec{F},\varvec{D}_0)\). It is associated with the propagation of travelling plane waves in the material, defined by a direction \(\varvec{V}\) and a speed c [50]. Importantly, real wave speeds for the governing equations in (1) are guaranteed when the electromechanical acoustic tensor \(\varvec{Q}_{ac}\) is positive definite, with

with

A local condition that is sufficient for the rank-one condition in (10) is the polyconvexity of e. The internal energy density is polyconvex [2, 22] if there exists a convex and lower semicontinuous function \({\mathbb {W}}:\text {GL}^+(3)\times \text {GL}^+(3)\times {\mathbb {R}}^+\times {\mathbb {R}}^3\times {\mathbb {R}}^3 \rightarrow {\mathbb {R}}\cup \{+\infty \}\) (generally non-unique) such that

where \(\varvec{H}\) and J denote the cofactor and the determinant of \(\varvec{F}\), respectively, and \(\varvec{d}\) is a vector in the spatial configuration. The three are defined as

Polyconvexity of the internal energy density entails the satisfaction of the following inequality

2.3 Invariant-based electromechanics

A simple way to satisfy the principle of objectivity (material frame indifference) and the material symmetry requirement is to make the internal energy density \(e(\varvec{F},\varvec{D}_0)\) depend on invariants of the right Cauchy-Green deformation tensor \(\varvec{C}=\varvec{F}^T\varvec{F}\) and \(\varvec{D}_0\). Let \(\textbf{I}=\{I_1,I_2,\dots ,I_n\}\) represent the n objective invariants required to characterise a given material symmetry group \({\mathcal {G}}\). Then, the internal energy density \(e(\varvec{F},\varvec{D}_0)\) can be expressed equivalently as

Applying the chain rule to Eq. (4) yields the first Piola-Kirchhoff stress tensor \(\varvec{P}\) and the material electric field \(\varvec{E}_0\) in terms of the derivatives of \(U(\textbf{I})\) as

2.3.1 Isotropic electromechanics

For the case of isotropy, the invariants required to characterise this material symmetry group, together with their first derivatives with respect to \(\varvec{F}\) and \(\varvec{D}_0\), are given below. These derivatives feature in the definitions of \(\varvec{P}\) and \(\varvec{E}_0\) in (19).

Inserting the expressions in (20) into (19) yields the following expression for the first Piola-Kirchhoff stress tensor \(\varvec{P}\) and for \(\varvec{E}_0\)
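The invariant-based representation builds objectivity and isotropy into the energy by construction. The sketch below assumes one common choice of isotropic invariants: \(I_1=\text {tr}\varvec{C}\), \(I_2=\text {tr}(\text {Cof}\varvec{C})\), \(I_3=\det \varvec{C}\), \(I_4=\varvec{D}_0\cdot \varvec{D}_0\) and \(I_5=\varvec{D}_0\cdot \varvec{C}\varvec{D}_0\). The set in (20) may differ, for instance by using J instead of \(\det \varvec{C}\). For a random rotation, the code checks numerically that these invariants are unchanged under \(\varvec{F}\rightarrow \varvec{Q}\varvec{F}\) (objectivity). It also checks invariance under \(\varvec{F}\rightarrow \varvec{F}\varvec{Q}^T\), \(\varvec{D}_0\rightarrow \varvec{Q}\varvec{D}_0\) (isotropy). The function names are hypothetical.

```python
import numpy as np


def isotropic_invariants(F, D0):
    """Assumed isotropic invariant set: tr C, tr Cof C, det C, D0.D0, D0.C D0."""
    C = F.T @ F
    I1 = np.trace(C)
    I2 = 0.5 * (I1 ** 2 - np.trace(C @ C))  # equals tr(Cof C) for a 3x3 tensor
    return np.array([I1, I2, np.linalg.det(C), D0 @ D0, D0 @ C @ D0])


def random_rotation(rng):
    """Random Q in SO(3) obtained from a QR factorisation."""
    Q, R = np.linalg.qr(rng.standard_normal((3, 3)))
    Q = Q @ np.diag(np.sign(np.diag(R)))  # fix column signs
    if np.linalg.det(Q) < 0.0:
        Q[:, 0] *= -1.0                   # enforce det Q = +1
    return Q


rng = np.random.default_rng(1)
F = np.eye(3) + 0.2 * rng.standard_normal((3, 3))
D0 = rng.standard_normal(3)
Q = random_rotation(rng)
ref = isotropic_invariants(F, D0)
print(np.allclose(isotropic_invariants(Q @ F, D0), ref))        # objectivity: F -> QF
print(np.allclose(isotropic_invariants(F @ Q.T, Q @ D0), ref))  # isotropy: F -> FQ^T, D0 -> QD0
```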

2.3.2 Transversely isotropic electromechanics

In transverse isotropy, a preferred direction \(\varvec{N}\), perpendicular to the material's plane of isotropy, imparts anisotropic characteristics. We focus on the material symmetry group \({\mathcal {D}}_{\infty h}\) [25], whose structural tensor is \(\varvec{N}\otimes \varvec{N}\). This group is distinct from \({\mathcal {C}}_{\infty }\), another transversely isotropic symmetry group, which is characterized by the structural vector \(\varvec{N}\) and admits piezoelectricity. In addition to the invariants \(\{I_1,I_2,I_3,I_4,I_5\}\) in (20), the \({\mathcal {D}}_{\infty h}\) group requires three additional invariants, detailed below

In this case, the first Piola-Kirchhoff stress tensor \(\varvec{P}\) and the material electric field \(\varvec{E}_0\) adopt the following expressions

2.4 Application to rank-one laminates

Section 2.3 presented phenomenological internal energy densities expressed in terms of principal invariants. For composite materials, computing the effective energy density requires homogenization. This section focuses on rank-one laminates, composed of two constituents arranged in layers perpendicular to \(\varvec{N}\). Rank-n homogenization theory [15] relates the macroscopic fields \(\bar{\varvec{F}}\), \(\bar{\varvec{D}}_0\) to the microscopic fields \(\varvec{F}^a\), \(\varvec{F}^b\), \(\varvec{D}_0^a\), \(\varvec{D}_0^b\) as

where the superscripts a and b distinguish the constituents, and \(c^a\) and \(c^b\) denote their respective volume fractions, with \(c^b=1-c^a\). A possible definition of \(\varvec{F}^a\), \(\varvec{F}^b\), \(\varvec{D}_0^a\) and \(\varvec{D}_0^b\) compatible with (24) is

where \(\varvec{\alpha }\in {\mathbb {R}}^3\) and \(\varvec{\beta }\in {\mathbb {R}}^2\) are the mechanical and electric amplitude vectors, respectively, to be determined. Furthermore, \(\varvec{{\mathcal {T}}_N}=\varvec{T}_1\otimes \varvec{E}_1+\varvec{T}_2\otimes \varvec{E}_2\), where \(\varvec{T}_1\) and \(\varvec{T}_2\) are any two linearly independent vectors perpendicular to \(\varvec{N}\), and \(\varvec{E}_1=\begin{bmatrix} 1&0 \end{bmatrix}^T\) and \(\varvec{E}_2=\begin{bmatrix} 0&1 \end{bmatrix}^T\).

Remark 2

Notice that in Eq. (25), although it may seem counterintuitive a priori, defining \(\varvec{F}^a\) in terms of \(c^b\) and vice versa (and likewise for \(\varvec{D}_0^a\) and \(\varvec{D}_0^b\)) is necessary to comply with Eq. (24). The first of the two equations in (24) entails

which is clearly satisfied, and the second

which is also satisfied. The same derivations and conclusions are obtained when considering \(\varvec{D}_0^a\) and \(\varvec{D}_0^b\) as in (25).
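The compatibility argument of Remark 2 can be checked numerically. The sketch below assumes that (25) takes the standard rank-one form \(\varvec{F}^a=\bar{\varvec{F}}+c^b\varvec{\alpha }\otimes \varvec{N}\), \(\varvec{F}^b=\bar{\varvec{F}}-c^a\varvec{\alpha }\otimes \varvec{N}\), \(\varvec{D}_0^a=\bar{\varvec{D}}_0+c^b\varvec{{\mathcal {T}}_N}\varvec{\beta }\), \(\varvec{D}_0^b=\bar{\varvec{D}}_0-c^a\varvec{{\mathcal {T}}_N}\varvec{\beta }\), which is consistent with the remark's description. It verifies the volume averages together with the interface conditions one expects from (24): a rank-one jump in \(\varvec{F}\) and a continuous normal component of \(\varvec{D}_0\). Here \(\varvec{T}_1\) and \(\varvec{T}_2\) are built as an orthonormal pair, which is one admissible choice. The function name `laminate_phase_fields` is hypothetical.

```python
import numpy as np


def laminate_phase_fields(F_bar, D_bar, alpha, beta, N, c_a):
    """Phase fields of a rank-one laminate, assuming (25) has the standard form
    F^a = F_bar + c^b alpha (x) N,  F^b = F_bar - c^a alpha (x) N,
    D^a = D_bar + c^b T_N beta,     D^b = D_bar - c^a T_N beta."""
    N = N / np.linalg.norm(N)
    c_b = 1.0 - c_a
    helper = np.array([1.0, 0.0, 0.0]) if abs(N[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    T1 = np.cross(N, helper)
    T1 /= np.linalg.norm(T1)
    T2 = np.cross(N, T1)                 # unit vector orthogonal to N and T1
    T_N = np.column_stack([T1, T2])      # T1 (x) E1 + T2 (x) E2 as a 3x2 array
    F_a = F_bar + c_b * np.outer(alpha, N)
    F_b = F_bar - c_a * np.outer(alpha, N)
    D_a = D_bar + c_b * T_N @ beta
    D_b = D_bar - c_a * T_N @ beta
    return F_a, F_b, D_a, D_b, T_N


rng = np.random.default_rng(2)
N = np.array([1.0, 2.0, 2.0]) / 3.0     # unit laminate normal
F_bar = np.eye(3) + 0.1 * rng.standard_normal((3, 3))
D_bar = rng.standard_normal(3)
alpha, beta, c_a = rng.standard_normal(3), rng.standard_normal(2), 0.3
F_a, F_b, D_a, D_b, T_N = laminate_phase_fields(F_bar, D_bar, alpha, beta, N, c_a)

print(np.allclose(c_a * F_a + (1 - c_a) * F_b, F_bar))  # volume average of F
print(np.allclose(c_a * D_a + (1 - c_a) * D_b, D_bar))  # volume average of D0
print(np.allclose((F_a - F_b) @ T_N, 0.0))              # F^a - F^b = alpha (x) N is rank one
print(np.isclose((D_a - D_b) @ N, 0.0))                 # normal component of D0 is continuous
```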

The amplitudes \(\varvec{\alpha }\) and \(\varvec{\beta }\) can be determined by postulating the effective energy \(e(\bar{\varvec{F}},\bar{\varvec{D}}_0)\) as

The stationary conditions of \(\hat{e}\) with respect to \(\varvec{\alpha }\) and \(\varvec{\beta }\) yield

which are two nonlinear vector equations from which \(\{\varvec{\alpha },\varvec{\beta }\}\) can be obtained. Once \(\{\varvec{\alpha },\varvec{\beta }\}\) have been computed, the effective first Piola-Kirchhoff stress tensor and electric field are obtained as
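The procedure of this subsection can be sketched end to end. The following choices are ours, not the paper's:

- \(\varvec{N}\) is aligned with \(X_3\).
- Both phases use the hypothetical neo-Hookean plus ideal-dielectric energy, with made-up parameters in `PHASES`.
- Instead of solving the stationarity equations directly, the effective energy \(\hat{e}=c^a e^a+c^b e^b\) is minimised with SciPy's BFGS.

Minimisation is reasonable here because these phase energies are polyconvex, so \(\hat{e}\) is convex along every admissible line in \((\varvec{\alpha },\varvec{\beta })\). The gradient of \(\hat{e}\) is exactly the stationarity residual. It consists of the scaled traction jump \(c^ac^b(\varvec{P}^a-\varvec{P}^b)\varvec{N}\) and the scaled tangential electric field jump \(c^ac^b\varvec{{\mathcal {T}}_N}^T(\varvec{E}_0^a-\varvec{E}_0^b)\). At the solution, the effective fields are taken as the volume averages of the phase fields. At a stationary point, these averages coincide with the derivatives of the effective energy with respect to \(\bar{\varvec{F}}\) and \(\bar{\varvec{D}}_0\).

```python
import numpy as np
from scipy.optimize import minimize

N = np.array([0.0, 0.0, 1.0])                            # laminate normal (assumed)
T_N = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])    # T1 (x) E1 + T2 (x) E2
PHASES = {"a": (1.0, 10.0, 1.0), "b": (5.0, 50.0, 4.0)}  # hypothetical (mu, lam, eps)


def phase_energy(F, D0, mu, lam, eps):
    """Hypothetical phase energy (neo-Hookean + ideal dielectric) with its P and E0."""
    J = np.linalg.det(F)
    FinvT = np.linalg.inv(F).T
    d = F @ D0
    e = (0.5 * mu * (np.sum(F * F) - 3.0) - mu * np.log(J)
         + 0.5 * lam * (J - 1.0) ** 2 + d @ d / (2.0 * eps * J))
    P = (mu * (F - FinvT) + lam * (J - 1.0) * J * FinvT
         + np.outer(d, D0) / (eps * J) - (d @ d) / (2.0 * eps * J) * FinvT)
    return e, P, F.T @ d / (eps * J)


def phase_responses(x, F_bar, D_bar, c_a):
    """Energies, stresses and fields of both phases for x = (alpha, beta)."""
    alpha, beta, c_b = x[:3], x[3:], 1.0 - c_a
    ra = phase_energy(F_bar + c_b * np.outer(alpha, N), D_bar + c_b * T_N @ beta, *PHASES["a"])
    rb = phase_energy(F_bar - c_a * np.outer(alpha, N), D_bar - c_a * T_N @ beta, *PHASES["b"])
    return ra, rb


def e_hat(x, F_bar, D_bar, c_a):
    """Effective energy c^a e^a + c^b e^b and its gradient with respect to (alpha, beta)."""
    (ea, Pa, Ea), (eb, Pb, Eb) = phase_responses(x, F_bar, D_bar, c_a)
    c_b = 1.0 - c_a
    grad = np.concatenate([c_a * c_b * (Pa - Pb) @ N,       # scaled traction jump
                           c_a * c_b * T_N.T @ (Ea - Eb)])  # scaled tangential field jump
    return c_a * ea + c_b * eb, grad


def effective_response(F_bar, D_bar, c_a):
    """Find the stationary point by minimisation, then volume-average P and E0."""
    res = minimize(e_hat, np.zeros(5), args=(F_bar, D_bar, c_a), jac=True, method="BFGS")
    (_, Pa, Ea), (_, Pb, Eb) = phase_responses(res.x, F_bar, D_bar, c_a)
    return res, c_a * Pa + (1 - c_a) * Pb, c_a * Ea + (1 - c_a) * Eb


F_bar = np.array([[1.05, 0.02, 0.03], [0.0, 0.98, 0.01], [0.0, 0.0, 1.0]])
D_bar = np.array([0.1, 0.0, 0.3])
res, P_bar, E_bar = effective_response(F_bar, D_bar, c_a=0.5)
print(res.x)
print(P_bar)
print(E_bar)
```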

3 Gaussian process predictors

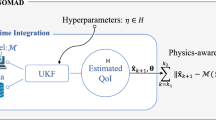

In the realm of computer experiments, metamodelling or surrogate modelling consists of replacing a resource-intensive model or simulator \({U}=\mathscr {M}(\textbf{I})\) with a computationally efficient emulator \(\hat{\mathscr {M}}(\textbf{I})\). Both the simulator and the emulator share the same input space \({\mathcal {D}}_I \subseteq {\mathbb {R}}^n\) and output space \({\mathcal {D}}_U \subseteq {\mathbb {R}}\). In our context, \(\mathscr {M}\) represents the response of an actual internal energy density U, which depends on the principal invariants \(\textbf{I}\) (see Sect. 2.3). Hence, the generic input \(\varvec{x}\) and output y commonly used in the Kriging literature are replaced here by \(\textbf{I}\) and U, respectively. As the internal energy U is a scalar, the developments presented in this paper pertain exclusively to scalar outputs. Our approach employs Kriging models [30, 60], also known as Gaussian Process (GP) modelling. The key components of this method are summarised in Sects. 3.1–3.3.

3.1 Gaussian process based prediction

GP modelling assumes that the output \({U}=\mathscr {M}(\textbf{I})\) is characterised by

where \(\varvec{g}(\textbf{I})\cdot \varvec{\beta } \) is the prior mean of the Gaussian Process (GP), i.e., a linear regression model over a given functional basis \(\{g_i,\ i=1,\ldots ,p\} \in {\mathcal {L}}_2({\mathcal {D}}_I, {\mathbb {R}})\). The second term, \({Z}(\textbf{I})\), is a GP with zero mean, constant variance \(\sigma ^2_{U}\), and a stationary autocovariance function, defined as follows

where \({\mathcal {R}}\) is a symmetric positive definite autocorrelation function and \(\varvec{\theta }\) is the vector of hyperparameters. In this work, \({\mathcal {R}}\) is the Gaussian kernel, defined as

The construction of a Kriging model consists of the two-stage framework described in Sects. 3.2 and 3.3.
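The sketch below assumes the common anisotropic form of the Gaussian kernel, \({\mathcal {R}}(\textbf{I},\textbf{I}';\varvec{\theta })=\exp (-\sum _k\theta _k(I_k-I'_k)^2)\), with one hyperparameter per invariant. Note that (33) may parameterise \(\varvec{\theta }\) differently, for example as length scales. The code builds a correlation matrix for a few made-up invariant vectors. It checks that the matrix is symmetric, has unit diagonal and is positive definite, which are the properties required of \({\mathcal {R}}\) above. The name `gaussian_corr` is hypothetical.

```python
import numpy as np


def gaussian_corr(X1, X2, theta):
    """Anisotropic Gaussian correlation R_ij = exp(-sum_k theta_k (x1_ik - x2_jk)^2).

    X1: (m1, n) and X2: (m2, n) arrays of invariant vectors; theta: (n,) positive weights.
    """
    diff = X1[:, None, :] - X2[None, :, :]
    return np.exp(-np.einsum("ijk,k->ij", diff ** 2, theta))


# Usage: correlation matrix of five made-up training points in a 2D invariant space
X = np.array([[3.0, 3.0], [3.2, 3.1], [3.5, 3.6], [4.0, 4.2], [3.1, 3.8]])
theta = np.array([2.0, 1.0])
R = gaussian_corr(X, X, theta)
L = np.linalg.cholesky(R)  # succeeds because R is symmetric positive definite
print(np.allclose(R, R.T), np.allclose(np.diag(R), 1.0))
```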

3.2 The conditional distribution of the prediction

The Bayesian prediction methodology assumes that observations gathered in the vector

The observed values, together with any unobserved value \(U(\textbf{I})\), are regarded as a realization of a random vector following a parametric joint distribution. This section derives a stochastic prediction for the unobserved quantity by exploiting this statistical dependence. Since \(Z(\textbf{I})\) in Eq. (31) is Gaussian and the regression model is linear, the observation vector \(\varvec{U}\) is also Gaussian, characterized by

where \(\varvec{G}\) and \(\varvec{R}\) are the regression and correlation matrices, defined as

and

Likewise, the random vector formed by the observations \(\varvec{U}\) and any unobserved value \(U(\textbf{I})\) follows a joint Gaussian distribution, given by

where \( {\varvec{ g}}(\textbf{I})\) is the vector of regressors evaluated at \(\textbf{I}\) and \(\varvec{ r}(\textbf{I})\) is the vector of cross-correlations between the observations and prediction given by

Assuming that the autocovariance function given by Eq. (32) is known, the conditional distribution of the prediction \(\hat{U}(\textbf{I})=U(\textbf{I})|\varvec{U}\) is given by the Best Linear Unbiased Predictor (BLUP) theorem [62]. According to the BLUP, the unobserved quantity \(U(\textbf{I})=\mathscr {M}(\textbf{I})\) in the prior model of Eq. (31) follows a Gaussian distribution, represented by the Gaussian random variable \(\hat{U}\) with mean

and variance

where

is the generalised least-squares estimate of the underlying regression problem, and

Readers unfamiliar with these derivations are referred to [18], which provides a thorough account of the underlying mathematical principles and methods.
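The BLUP mean, the variance and the generalised least-squares estimate above fit in a few lines of NumPy/SciPy. This is a sketch under the following assumptions:

- The Gaussian kernel has the form \(\exp (-\sum _k\theta _k(I_k-I'_k)^2)\).
- The regression basis is a hypothetical linear one, \(\varvec{g}(\textbf{I})=[1, I_1,\dots ,I_n]\).
- The variance estimate takes the MLE form of Sect. 3.3.
- A tiny diagonal "nugget" is added to \(\varvec{R}\) for numerical stability, so the predictor interpolates the data only up to round-off-level error.

The solves use a Cholesky factorisation (`scipy.linalg.cho_factor` and `cho_solve`) rather than explicit inverses. A 1D toy function stands in for an energy \(U(\textbf{I})\). The helper names are hypothetical.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve


def gaussian_corr(X1, X2, theta):
    diff = X1[:, None, :] - X2[None, :, :]
    return np.exp(-np.einsum("ijk,k->ij", diff ** 2, theta))


def basis(X):
    """Hypothetical linear regression basis g(I) = [1, I_1, ..., I_n]."""
    return np.hstack([np.ones((X.shape[0], 1)), X])


def fit_kriging(X, U, theta, nugget=1e-10):
    """Generalised least-squares beta and the quantities needed by the BLUP."""
    cR = cho_factor(gaussian_corr(X, X, theta) + nugget * np.eye(len(X)))
    G = basis(X)
    GtRiG = G.T @ cho_solve(cR, G)
    beta = np.linalg.solve(GtRiG, G.T @ cho_solve(cR, U))
    gamma = cho_solve(cR, U - G @ beta)        # R^{-1} (U - G beta)
    sigma2 = (U - G @ beta) @ gamma / len(U)   # MLE-form variance estimate
    return dict(X=X, theta=theta, cR=cR, G=G, GtRiG=GtRiG, beta=beta, gamma=gamma, sigma2=sigma2)


def predict_kriging(m, Xnew):
    """BLUP mean g.beta + r.R^{-1}(U - G beta) and its variance."""
    r = gaussian_corr(Xnew, m["X"], m["theta"])  # (n_new, n_obs) cross-correlations
    g = basis(Xnew)
    mean = g @ m["beta"] + r @ m["gamma"]
    Rir = cho_solve(m["cR"], r.T)                # R^{-1} r, one column per new point
    u = m["G"].T @ Rir - g.T
    var = m["sigma2"] * (1.0 - np.sum(r.T * Rir, axis=0)
                         + np.sum(u * np.linalg.solve(m["GtRiG"], u), axis=0))
    return mean, np.maximum(var, 0.0)


# Usage on a 1D toy energy U(I) sampled at 8 points
X = np.linspace(0.0, 1.0, 8)[:, None]
U = np.sin(3.0 * X[:, 0]) + X[:, 0] ** 2
model = fit_kriging(X, U, theta=np.array([30.0]))
mean_obs, var_obs = predict_kriging(model, X)  # reproduces the data
mean_new, var_new = predict_kriging(model, np.array([[0.55]]))
print(mean_new, var_new)
```

At the training points, the predicted mean reproduces the data and the variance is essentially zero. This is the interpolating property mentioned in the Introduction.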

3.3 Joint maximum likelihood estimation of the GP parameters

The previous sections assumed a known autocovariance function. However, the correlation function \({\mathcal {R}}(\textbf{I},\textbf{I}^{'}, {\varvec{\theta }})\) and the variance \(\sigma _U^2\) are typically not known in advance. In this study, the type of correlation function is fixed a priori (the Gaussian kernel in (33) [18]). The hyperparameters \(\varvec{\theta }\) and the variance \(\sigma _U^2\) are then determined from the observation dataset by maximum likelihood estimation (MLE). The outcome of this process is the empirical best linear unbiased predictor (EBLUP) [62]. The estimation of the GP parameters involves solving the following minimization problem

where \(\mathscr {L}(\varvec{U}|\varvec{\beta },\sigma _U^2,\varvec{\theta })\) is the negative log-likelihood of the observations \(\varvec{U}\) with respect to their multivariate normal distribution, given by

The MLEs of \(\varvec{\beta }\) and \(\sigma _{U}^2\) follow from the first-order optimality conditions of \(\mathscr {L}(\varvec{U}| \varvec{\beta },\sigma _U^2,\varvec{\theta })\), namely

from which the following optimal values can be obtained

Substituting \(\varvec{\beta }^{*}(\varvec{\theta }) \) and \({\sigma _U^2}^*(\varvec{\theta }) \) into the log-likelihood function (44) allows it to be rewritten as

where the reduced likelihood function has been introduced as

This entails that the minimisation problem in Eq. (43) is equivalent to

Unfortunately, no analytical solution is available for the optimal hyperparameters \(\varvec{\theta }\in {\mathbb {R}}^n\), so a numerical minimization approach is employed. In this work, we use the box-min algorithm [69].
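The reduced likelihood can be evaluated with the same Cholesky factor used for prediction, since \(\log \det \varvec{R}=2\sum _i\log L_{ii}\). Up to additive constants, the sketch below minimises \(\tfrac{n}{2}\log {\sigma _U^2}^*(\varvec{\theta })+\tfrac{1}{2}\log \det \varvec{R}(\varvec{\theta })\), which is equivalent to minimising \({\sigma _U^2}^*|\varvec{R}|^{1/n}\), over \(\log _{10}\varvec{\theta }\) inside a box. Note that it substitutes SciPy's bounded L-BFGS-B optimiser for the box-min algorithm [69] used in this work. It also assumes a constant regression basis (ordinary Kriging) and a small nugget. All of these are illustrative choices, and `concentrated_nll` is a hypothetical name.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve
from scipy.optimize import minimize


def gaussian_corr(X1, X2, theta):
    diff = X1[:, None, :] - X2[None, :, :]
    return np.exp(-np.einsum("ijk,k->ij", diff ** 2, theta))


def concentrated_nll(log10_theta, X, U, nugget=1e-10):
    """Negative log-likelihood with beta*(theta), sigma2*(theta) substituted (constants dropped)."""
    n = len(U)
    R = gaussian_corr(X, X, 10.0 ** log10_theta) + nugget * np.eye(n)
    cR = cho_factor(R)
    G = np.ones((n, 1))                                # constant regression basis
    beta = np.linalg.solve(G.T @ cho_solve(cR, G), G.T @ cho_solve(cR, U))
    resid = U - G @ beta
    sigma2 = resid @ cho_solve(cR, resid) / n
    log_det_R = 2.0 * np.sum(np.log(np.diag(cR[0])))  # log|R| from the Cholesky factor
    return 0.5 * n * np.log(sigma2) + 0.5 * log_det_R


X = np.linspace(0.0, 1.0, 6)[:, None]
U = np.sin(3.0 * X[:, 0]) + X[:, 0] ** 2
x0 = np.array([1.0])                                   # initial guess: theta = 10
res = minimize(concentrated_nll, x0, args=(X, U), method="L-BFGS-B", bounds=[(0.0, 3.0)])
theta_opt = 10.0 ** res.x
print(theta_opt, res.fun)
```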

3.4 Gradient-enhanced Gaussian-process based prediction

In addition to function observations, the derivatives of the output with respect to the input variables can be exploited to enhance the accuracy of the predictor. This gives rise to what is termed Gradient Enhanced Kriging in the literature [23, 35], in contrast to the conventional Kriging detailed in Sects. 3.1–3.3. To construct a gradient-enhanced predictor, the observation vector is extended to include the derivatives of the internal energy density U with respect to its input variables \(\textbf{I}\), resulting in:

where

To interpolate both the variable and its gradient at any unobserved location, the correlation matrix \(\varvec{R}\) must be extended to include the correlations between the variable and its gradient, as follows

where \(\varvec{R}_{\varvec{UU}} \) is the correlation matrix presented in (36) for the non-gradient case. \(\varvec{R}_{\varvec{UU'}} \) includes the partial derivatives of \({\mathcal {R}}\) according to

given

The submatrix \(\varvec{R}_{\varvec{U^{\prime }U^{\prime }}} \) contains the second derivatives

where

Similarly, the vector of cross-correlations between the observations and the prediction is extended as follows

With these extensions, the revised quantities can be inserted into the expressions of Sects. 3.2 and 3.3. The mean prediction becomes

and the variance

with

and

Finally, the optimal hyperparameters are obtained by minimizing the negative log-likelihood function built with the extended quantities.
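Gradient-enhanced Kriging only changes the correlation structure: the extended matrix contains the kernel, its first derivatives and its mixed second derivatives. Assume the Gaussian kernel \(\psi =\exp (-\sum _k\theta _k d_k^2)\) with \(\varvec{d}=\textbf{I}-\textbf{I}'\). Then \(\partial \psi /\partial I'_l=2\theta _l d_l\psi \) and \(\partial ^2\psi /\partial I_k\partial I'_l=(2\theta _k\delta _{kl}-4\theta _k\theta _l d_k d_l)\psi \). The sketch below is an ordinary (constant-mean) gradient-enhanced Kriging under these assumptions. The derivative rows that enter the extended cross-correlation vector also give the gradient of the mean prediction, which is how predicted derivatives of U with respect to \(\textbf{I}\) are obtained here. The 2D test function is a made-up stand-in for \(U(\textbf{I})\), the hyperparameters are fixed rather than optimised, and all names are hypothetical.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve


def gek_corr(X1, X2, theta):
    """Gaussian-kernel correlations between values and gradients at X1 (m1, n) and X2 (m2, n).

    Rows/columns are ordered as [values; gradient of point 0; gradient of point 1; ...].
    """
    m1, n = X1.shape
    m2 = X2.shape[0]
    d = X1[:, None, :] - X2[None, :, :]                    # d = x - x', shape (m1, m2, n)
    psi = np.exp(-np.einsum("ijk,k->ij", d ** 2, theta))
    c_u_du = 2.0 * theta * d * psi[..., None]              # dpsi/dx'_l
    td = theta * d
    c_du_du = (2.0 * np.diag(theta)
               - 4.0 * td[..., :, None] * td[..., None, :]) * psi[..., None, None]
    K = np.empty((m1 * (1 + n), m2 * (1 + n)))
    K[:m1, :m2] = psi
    K[:m1, m2:] = c_u_du.reshape(m1, m2 * n)
    K[m1:, :m2] = (-c_u_du).transpose(0, 2, 1).reshape(m1 * n, m2)  # dpsi/dx_k
    K[m1:, m2:] = c_du_du.transpose(0, 2, 1, 3).reshape(m1 * n, m2 * n)
    return K


def gek_fit(X, U, dU, theta, nugget=1e-12):
    """Ordinary (constant-mean) gradient-enhanced Kriging fit."""
    m, n = X.shape
    Y = np.concatenate([U, dU.ravel()])                    # extended observation vector
    cK = cho_factor(gek_corr(X, X, theta) + nugget * np.eye(m * (1 + n)))
    Fv = np.concatenate([np.ones(m), np.zeros(m * n)])     # constant mean has zero gradient
    KiF = cho_solve(cK, Fv)
    beta = (KiF @ Y) / (KiF @ Fv)                          # generalised least squares
    return dict(X=X, theta=theta, beta=beta, w=cho_solve(cK, Y - beta * Fv))


def gek_predict(model, Xnew):
    """Predicted values and gradients (derivative rows of the cross-correlations)."""
    m_new, n = Xnew.shape
    pred = gek_corr(Xnew, model["X"], model["theta"]) @ model["w"]
    return model["beta"] + pred[:m_new], pred[m_new:].reshape(m_new, n)


def f(X):
    return np.sin(2.0 * X[:, 0]) + X[:, 1] ** 2 + X[:, 0] * X[:, 1]


def grad_f(X):
    return np.column_stack([2.0 * np.cos(2.0 * X[:, 0]) + X[:, 1], 2.0 * X[:, 1] + X[:, 0]])


g = np.linspace(0.0, 1.0, 3)
X = np.array([[a, b] for a in g for b in g])               # 3x3 grid of "invariants"
model = gek_fit(X, f(X), grad_f(X), theta=np.array([2.0, 2.0]))
vals, grads = gek_predict(model, X)
x_test = np.array([[0.3, 0.7]])
print(gek_predict(model, x_test)[0], f(x_test))
```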

Remark 3

The framework presented in Sects. 2 and 3 has been constructed around the internal energy density \(e(\varvec{F},\varvec{D}_0)\). Its dual, the free energy density \(\varPsi (\varvec{F},\varvec{E}_0)\), can also be defined. This duality is established through the following Legendre transformation (Fig. 2)

The free energy density \(\varPsi (\varvec{F},\varvec{E}_0)\) satisfies different convexity properties from its dual counterpart \(e(\varvec{F},\varvec{D}_0)\). In particular, in the vicinity of \(\varvec{F}\approx \varvec{I}\) and \(\varvec{E}_0\approx \varvec{0}\), it is a saddle point function, convex with respect to \(\varvec{F}\) and concave with respect to \(\varvec{E}_0\). This mixed convexity/concavity in the mechanical and electrical variables can complicate Kriging or Gradient Enhanced Kriging interpolation models based on invariants of \(\varvec{F}\) and \(\varvec{E}_0\) (i.e., free energy-based Kriging). Models formulated in terms of \(\varvec{F}\) and \(\varvec{D}_0\) (i.e., internal energy-based Kriging) do not face this difficulty. Indeed, our observations show that the internal energy-based approach yields markedly superior results compared with the free energy-based approach.
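The saddle structure is already visible in the electrical part of a simple model. Assume the hypothetical ideal-dielectric internal energy \(e=(\varvec{F}\varvec{D}_0)\cdot (\varvec{F}\varvec{D}_0)/(2\varepsilon J)\), which is convex in \(\varvec{D}_0\). Write the transform as \(\varPsi (\varvec{F},\varvec{E}_0)=\min _{\varvec{D}_0}[e(\varvec{F},\varvec{D}_0)-\varvec{E}_0\cdot \varvec{D}_0]\), one common sign convention assumed here. The sketch below evaluates \(\varPsi \) numerically and compares it with the closed form \(-\tfrac{\varepsilon J}{2}\varvec{E}_0\cdot \varvec{C}^{-1}\varvec{E}_0\). It also shows that the Hessian in \(\varvec{D}_0\) is positive definite while the Hessian in \(\varvec{E}_0\) is negative definite. Adding a convex mechanical term to \(\varPsi \) then produces the saddle shape in \((\varvec{F},\varvec{E}_0)\). The permittivity `EPS` and the function names are made up.

```python
import numpy as np
from scipy.optimize import minimize

EPS = 2.0  # hypothetical permittivity


def e_elec(F, D0):
    """Ideal-dielectric internal energy (F D0).(F D0) / (2 eps J): convex in D0."""
    d = F @ D0
    return d @ d / (2.0 * EPS * np.linalg.det(F))


def psi_numeric(F, E0):
    """Legendre transform Psi(F, E0) = min over D0 of [e(F, D0) - E0 . D0]."""
    return minimize(lambda D0: e_elec(F, D0) - E0 @ D0, np.zeros(3), method="BFGS").fun


def psi_closed_form(F, E0):
    """Closed form of the same transform: -(eps J / 2) E0 . C^{-1} E0 (concave in E0)."""
    C = F.T @ F
    return -0.5 * EPS * np.linalg.det(F) * E0 @ np.linalg.solve(C, E0)


F = np.array([[1.2, 0.1, 0.0], [0.0, 0.9, 0.05], [0.0, 0.0, 1.1]])
E0 = np.array([0.3, -0.1, 0.2])
J, C = np.linalg.det(F), F.T @ F
print(psi_numeric(F, E0), psi_closed_form(F, E0))
print(np.linalg.eigvalsh(C / (EPS * J)))                # Hessian of e w.r.t. D0: all positive
print(np.linalg.eigvalsh(-EPS * J * np.linalg.inv(C)))  # Hessian of Psi w.r.t. E0: all negative
```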

Remark 4

A possible criticism of the Kriging and Gradient Enhanced Kriging techniques discussed in Sects. 3.1 to 3.4 is that they require the strain energy value (U) at each observation or training point. This limits their suitability for data obtained from physical experiments, where energy is difficult to measure, as opposed to the in-silico or numerical data on which this paper focuses. Gradient Enhanced Kriging is, however, flexible enough to accommodate the case in which the energy is observed at a single point only (\(\varvec{F}=\varvec{I}\)), where U is typically zero. The derivative information at this point can then be combined seamlessly with the derivatives available at the remaining points. This tailored approach is elaborated in Appendix C.

3.5 Derivatives of strain energy density for Gradient Enhanced Kriging

As detailed in Sect. 3.4, the gradient-enhanced Kriging method incorporates both the internal energy density U and its derivatives with respect to the invariants \(\textbf{I}=\{I_1,I_2,\dots ,I_n\}\). For material symmetry groups involving isotropy or transverse isotropy, combined with a principal invariant approach (see Sect. 2.3), the derivatives of U with respect to \(\textbf{I}\) are therefore required. While these derivatives are straightforward to obtain for analytical energies such as those of a Mooney–Rivlin model, they may not be readily available for intricate internal energy densities arising from complex homogenization techniques in composites (e.g., the rank-one laminates of Sect. 2.4). In such scenarios, they can be computed from the first Piola-Kirchhoff stress tensor \(\varvec{P}\) and the electric field \(\varvec{E}_0\) using elementary linear algebra. To this end, let us revisit the invariant-based expressions for \(\varvec{P}\) and \(\varvec{E}_0\) in (19), conveniently restated below

where

Let us now introduce the following notation

where \(\hat{\varvec{P}}\in {\mathbb {R}}^9\) and \(\hat{\varvec{U}}_i\in {\mathbb {R}}^9\) denote the vectorised forms of \(\varvec{P}\) and \(\varvec{U}_i\), respectively. Hence, \(\varvec{{\mathcal {A}}}\) can be conveniently written in terms of the \(\varvec{{\mathcal {W}}}_i\) as

In (67), the \(\varvec{{\mathcal {W}}}_i\) can be understood as the linearly independent vectors of a basis, whilst the \(\Big (\partial _{I_i} U\Big )\) are the coordinates of \(\varvec{{\mathcal {A}}}\) along the vectors \(\varvec{{\mathcal {W}}}_i\) (\(i=\{1,\dots ,n\}\)). As is standard in linear algebra, given \(\varvec{{\mathcal {A}}}\), the coordinates \(\Big (\partial _{I_i} U\Big )\) can be obtained by projecting \(\varvec{{\mathcal {A}}}\) onto the n basis vectors, which yields the following linear system of equations

Remark 5

Examining the algebraic system (68) reveals the conditions under which a solution for \(\{\partial _{I_1}U,\dots ,\partial _{I_n}U\}\), the components of \(\varvec{{\mathcal {A}}}\) with respect to the basis \(\{\varvec{{\mathcal {W}}}_1,\dots ,\varvec{{\mathcal {W}}}_n\}\), can be obtained. The solvability of (68), i.e., the linear independence of \(\{\varvec{{\mathcal {W}}}_1,\dots ,\varvec{{\mathcal {W}}}_n\}\), requires the determinant of the system to be non-zero. Ill-conditioning of (68) can stem from several factors: coincident principal stretches (in both isotropic and transversely isotropic models), alignment of the principal deformation directions with the preferred direction of transverse isotropy, or alignment of \(\varvec{D}_0\) with one of the principal directions of \(\varvec{F}\). To remedy this numerical issue, we propose a perturbation approach that slightly separates coincident principal stretches and introduces a small misalignment between the coinciding principal direction and the preferred direction. This ensures the solvability of (68).
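A minimal sketch of the projection (68) and of the perturbation remedy follows. For illustration it is restricted to the mechanical contribution and to a hypothetical set of three isotropic invariants, I₁=tr C, I₂=tr C², I₃=det F. These are not the invariants of Sect. 2.3. Their gradients are 2F, 4FC and J F⁻ᵀ. The coordinates ∂_{I_i}U are recovered from P by solving the Gram system G d = b, with G_ij = W_i·W_j and b_i = W_i·P. For equal principal stretches the three gradients are parallel and G is singular. Scaling the principal stretches by (1, 1+δ, 1−δ) restores solvability. The function names and the value δ=0.02 are assumptions. A larger δ improves conditioning but moves further from the original state.

```python
import numpy as np


def invariant_gradients(F):
    """Rows: vectorised W_i = dI_i/dF for I1 = tr C, I2 = tr C^2, I3 = det F (C = F^T F)."""
    C = F.T @ F
    J = np.linalg.det(F)
    return np.array([(2.0 * F).ravel(),
                     (4.0 * F @ C).ravel(),
                     (J * np.linalg.inv(F).T).ravel()])


def invariant_coordinates(P, F):
    """Project P onto the W_i: solve G d = b with G_ij = W_i . W_j, b_i = W_i . P."""
    W = invariant_gradients(F)
    return np.linalg.solve(W @ W.T, W @ P.ravel())


def gram_condition(F):
    """Condition number of the Gram matrix of the invariant gradients."""
    W = invariant_gradients(F)
    return np.linalg.cond(W @ W.T)


def perturb_stretches(F, delta=0.02):
    """Scale the principal stretches by (1, 1 + delta, 1 - delta), keeping the
    principal directions given by the SVD F = V1 diag(s) V2^T."""
    V1, s, V2t = np.linalg.svd(F)
    return V1 @ np.diag(s * np.array([1.0, 1.0 + delta, 1.0 - delta])) @ V2t


d_true = np.array([0.5, 0.1, -0.3])              # prescribed dU/dI_i
F = np.array([[1.2, 0.1, 0.0], [0.05, 0.9, 0.2], [0.0, -0.1, 1.1]])
P = (d_true @ invariant_gradients(F)).reshape(3, 3)
print(invariant_coordinates(P, F))               # recovers d_true

F_equal = 1.1 * np.eye(3)                        # coincident principal stretches
print(gram_condition(F_equal), gram_condition(perturb_stretches(F_equal)))
```

Forming the Gram matrix squares the condition number of the basis. Separating the stretches by only a few percent therefore still leaves a fairly ill-conditioned, but solvable, system.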

3.5.1 Noise regularisation

When the training set is large, and particularly when derivative information is incorporated into the training strategy (e.g., in gradient-enhanced Kriging), the correlation matrix \(\varvec{R}\) defined in Eq. (52) can become ill-conditioned. To mitigate this issue, it is customary to regularize \(\varvec{R}\) by adding a diagonal matrix as follows [19, 49, 66]

Although this paper primarily highlights the interpolation properties of the technique, we consistently employ sufficiently small values of \(\varepsilon _1\) and \(\varepsilon _2\) to mitigate potential ill-conditioning. As elucidated in Remark 3, Kriging and its gradient-enhanced counterpart interpolate the data when \(\varvec{R}\) is not regularized, i.e., for \(\varepsilon _1=\varepsilon _2=0\). When \(\varepsilon _1\ne 0\) and \(\varepsilon _2\ne 0\), however, Kriging turns from an interpolation technique into a regression technique, increasingly so as both parameters take larger values. This makes it possible to filter noisy data.
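The effect of the diagonal regularization can be sketched with plain one-dimensional ordinary Kriging. This is a deliberate simplification of the gradient-enhanced setting, using a single nugget ε in place of {ε₁, ε₂}. The Gaussian correlation, θ, the test function and the noise level are hypothetical choices. Adding εI lowers the condition number of R. With ε>0 the predictor no longer passes exactly through the noisy training data; it acts as a regression rather than an interpolation.

```python
import numpy as np


def correlation(xa, xb, theta):
    """Gaussian correlation matrix r(a, b) = exp(-theta (a - b)^2)."""
    return np.exp(-theta * (xa[:, None] - xb[None, :]) ** 2)


def fit_ok(x, y, theta, eps):
    """Ordinary Kriging with the regularized correlation matrix R + eps I."""
    R = correlation(x, x, theta) + eps * np.eye(x.size)
    ones = np.ones_like(y)
    beta = (ones @ np.linalg.solve(R, y)) / (ones @ np.linalg.solve(R, ones))
    return x, theta, beta, np.linalg.solve(R, y - beta)


def predict_ok(model, xq):
    """Mean prediction; the cross-correlations r(xq) carry no nugget."""
    x, theta, beta, weights = model
    return beta + correlation(np.atleast_1d(xq), x, theta) @ weights


rng = np.random.default_rng(0)
x = np.linspace(0.0, 3.0, 12)
y_noisy = np.sin(x) + rng.normal(0.0, 0.1, x.size)      # hypothetical noisy data

R = correlation(x, x, 5.0)
print(np.linalg.cond(R), np.linalg.cond(R + 1e-2 * np.eye(x.size)))

interp = fit_ok(x, y_noisy, theta=5.0, eps=0.0)
regr = fit_ok(x, y_noisy, theta=5.0, eps=0.1)
print(np.max(np.abs(predict_ok(interp, x) - y_noisy)))  # round-off level: interpolation
print(np.max(np.abs(predict_ok(regr, x) - y_noisy)))    # clearly non-zero: regression
```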

To illustrate this, we explore the performance of Gradient Enhanced Kriging in a regression context, in particular when the correlation matrices are severely ill-conditioned owing to noise-contaminated data. To this end, we employ two training samples:

- Unperturbed sample: a training sample free of noise in the output variables, namely the energy values \(e(\varvec{F},\varvec{D}_0)=U(I_1,I_2,I_3,I_5)\) and the derivatives \(\{\partial _{I_1}U,\partial _{I_2}U,\partial _{I_3}U,\partial _{I_5}U\}\). The ground-truth constitutive model from which these data have been generated in-silico is the Mooney–Rivlin/ideal dielectric model described in Appendix A.

- Noisy sample: this training sample has been obtained by perturbing the unperturbed sample according to:

$$\widetilde{U}=U+{\mathcal {N}}(0,\sigma _U);\qquad \widetilde{\partial _{I_i}U}=\partial _{I_i}U+{\mathcal {N}}(0,\sigma _{\partial _{I_i}U}),\quad i=\{1,2,3,5\}$$ (70)

with

$$\sigma _U=0.2\cdot \text {mean}(U);\qquad \sigma _{\partial _{I_i}U}=0.2\cdot \text {mean}(\partial _{I_i}U),\quad i=\{1,2,3,5\}$$ (71)
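The perturbation in (70) and (71) amounts to adding zero-mean Gaussian noise whose standard deviation is 20% of the mean of each output quantity. A minimal NumPy sketch follows. The array layout (one column per derivative ∂_{I_i}U, i={1,2,3,5}), the function name and the fixed seed are assumptions. The absolute value of the mean is taken so that σ stays non-negative if a derivative has a negative mean.

```python
import numpy as np


def perturb_sample(U, dU, rel=0.2, seed=0):
    """Noisy copy of a training sample following (70)-(71).

    U  : (m,) energy values.
    dU : (m, k) derivatives, one column per invariant (here i = 1, 2, 3, 5).
    sigma = rel * |mean| per quantity; the absolute value is an assumption that
    keeps sigma non-negative when a derivative has a negative mean.
    """
    rng = np.random.default_rng(seed)
    sigma_U = rel * abs(np.mean(U))
    sigma_dU = rel * np.abs(np.mean(dU, axis=0))              # shape (k,)
    U_noisy = U + rng.normal(0.0, sigma_U, size=U.shape)
    dU_noisy = dU + rng.normal(0.0, 1.0, size=dU.shape) * sigma_dU
    return U_noisy, dU_noisy


U = np.array([0.0, 0.4, 1.3, 2.1])                    # hypothetical sample
dU = np.array([[0.5, 0.1, -0.2, 0.3]] * 4)
U_noisy, dU_noisy = perturb_sample(U, dU)
print(U_noisy, dU_noisy, sep="\n")
```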

Fig. 3 illustrates the performance of the interpolation- and regression-based approaches for both datasets. For the unperturbed sample (see Fig. 3a and b), Kriging perfectly reproduces the training data points (represented by circles). For the noisy sample, in contrast, Kriging strives to replicate the perturbed and irregular data as closely as possible. Discrepancies are observed at certain points: the noisy data render the correlation matrix ill-conditioned, and the interpolation property is consequently lost. Indeed, the condition number of the matrix \(\varvec{R}\) increases substantially for the noisy sample, as illustrated in Fig. 3c. This is consistent with expectations and raises concerns about the predictive accuracy of Kriging between training points, where undesired oscillations may arise.

Alternatively, we have explored a regression-based methodology, as detailed in [19, 49], in which the regularization parameters \(\{\varepsilon _1,\varepsilon _2\}\) are treated as additional hyperparameters. Consequently, both sets of hyperparameters, namely \(\{\theta _1,\theta _2,\theta _3\}\) and \(\{\varepsilon _1,\varepsilon _2\}\), are optimized by minimizing the reduced likelihood function \(\psi (\widetilde{\varvec{\theta }})\)

where the augmented set of hyperparameters is defined as \(\widetilde{\varvec{\theta }}=\{\theta _1,\theta _2,\theta _3,\varepsilon _1,\varepsilon _2\}\). Applying this approach to the noisy sample only yields the outcomes depicted in Fig. 3. The optimal values of \(\{\varepsilon _1,\varepsilon _2\}\) strike a balance between the interpolation and regression properties of the Kriging response. As expected, the response no longer matches the noisy data exactly, which avoids the undesirable oscillations caused by the data perturbations (see Fig. 3f).
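A minimal sketch of treating the nugget as an extra hyperparameter follows. It uses one-dimensional ordinary Kriging with a single θ and a single ε, instead of {θ₁,θ₂,θ₃,ε₁,ε₂}. It also assumes the standard reduced likelihood ψ = σ̂²·det(R)^{1/m}, which is minimized. A plain grid search stands in for the optimizer actually used. The data, grids and names are hypothetical. On clean data the search is expected to favour the smallest nugget. On noisy data it is expected to select a larger one, i.e., to move towards regression.

```python
import numpy as np


def reduced_likelihood(x, y, theta, eps):
    """psi = sigma2 * det(R)^(1/m) for ordinary Kriging with R = corr + eps I."""
    m = x.size
    R = np.exp(-theta * (x[:, None] - x[None, :]) ** 2) + eps * np.eye(m)
    L = np.linalg.cholesky(R)                                 # R = L L^T

    def solve(b):
        return np.linalg.solve(L.T, np.linalg.solve(L, b))

    ones = np.ones(m)
    beta = (ones @ solve(y)) / (ones @ solve(ones))
    res = y - beta
    sigma2 = (res @ solve(res)) / m
    log_det = 2.0 * np.sum(np.log(np.diag(L)))
    return sigma2 * np.exp(log_det / m)


def fit_hyperparameters(x, y, thetas, epss):
    """Minimize psi over a grid of (theta, eps) pairs."""
    candidates = [(reduced_likelihood(x, y, t, e), t, e) for t in thetas for e in epss]
    _, theta_opt, eps_opt = min(candidates)
    return theta_opt, eps_opt


rng = np.random.default_rng(1)
x = np.linspace(0.0, 3.0, 20)
y_clean = np.sin(x)
y_noisy = y_clean + rng.normal(0.0, 0.1, x.size)      # hypothetical noisy data
thetas, epss = np.logspace(-1, 2, 13), np.logspace(-8, 0, 9)
print(fit_hyperparameters(x, y_clean, thetas, epss))
print(fit_hyperparameters(x, y_noisy, thetas, epss))
```

Working with the Cholesky factor gives both the solves and log det(R) without forming R⁻¹. The smallest nugget in the grid keeps R numerically positive definite.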

4 Calibration of Kriging and Gradient Enhanced Kriging predictors

4.1 Design of Experiments

In this section, we present the procedure used to generate synthetic data from a diverse set of ground truth constitutive models, whose internal energy densities and material parameters are given in Appendix A. To acquire the dataset, we follow the procedure outlined in [34], extended to the coupled electromechanical setting. The deformation gradient tensor \(\varvec{F}\) is parameterized via a chosen set of deviatoric directions, amplitudes, and Jacobians (J, i.e., the determinant of \(\varvec{F}\)). The generation of sample points for the deviatoric directions, amplitudes and Jacobians is described in Algorithm 1. Similarly, the electric displacement \(\varvec{D}_0\) is parametrised in terms of unit directions and amplitudes. The deviatoric directions for \(\varvec{F}\), denoted as \(\varvec{V_F}\), are formulated using a spherical parametrization in \({\mathbb {R}}^5\), with the angular measures \((\phi _1, \phi _2, \phi _3, \phi _4, \phi _5)\in [0,2\pi ]\times [0,\pi ]\times [0,\pi ]\times [0,\pi ]\times [0,\pi ]\). The directions used in the parametrisation of \(\varvec{D}_0\), denoted as \(\varvec{V}_{\varvec{D}_0}\), are created using a spherical parametrization in \({\mathbb {R}}^3\) with angular measures \((\theta ,\psi )\in [0,2\pi ]\times [0,\pi ]\), namely

Once the sample is generated following Algorithm 1, the reconstruction of the deformation gradient tensor \(\varvec{F}\) and of \(\varvec{D}_0\) becomes possible at each of the sampling points. This reconstruction process is demonstrated in Algorithm 2, where \({\varvec{\Psi }}\) represents the basis for symmetric and traceless tensors (refer to Appendix B for details on \(\varvec{\Psi }\)).
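As an illustration of Algorithms 1 and 2, the sketch below draws angles, builds unit directions with the standard hyperspherical parametrisation (k angles for a unit vector in ℝ^{k+1}), and reconstructs F and D₀. Several ingredients are assumptions rather than the exact choices of this work:

- the orthonormal basis PSI of symmetric traceless tensors (Appendix B may use a different one);
- the form F = J^{1/3} exp(α Σᵢ vᵢ Ψᵢ), which gives a symmetric F with det F = J and still spans every right Cauchy–Green tensor;
- the uniform sampling of angles and the parameter ranges, which do not give uniformly distributed directions and may differ from Algorithm 1.

All names are hypothetical.

```python
import numpy as np

E3 = np.eye(3)
s2, s6 = np.sqrt(2.0), np.sqrt(6.0)
# Hypothetical orthonormal basis (Frobenius inner product) of symmetric traceless tensors.
PSI = np.array([
    (np.outer(E3[0], E3[0]) - np.outer(E3[1], E3[1])) / s2,
    (np.outer(E3[0], E3[0]) + np.outer(E3[1], E3[1]) - 2.0 * np.outer(E3[2], E3[2])) / s6,
    (np.outer(E3[0], E3[1]) + np.outer(E3[1], E3[0])) / s2,
    (np.outer(E3[0], E3[2]) + np.outer(E3[2], E3[0])) / s2,
    (np.outer(E3[1], E3[2]) + np.outer(E3[2], E3[1])) / s2,
])


def hypersphere_direction(angles):
    """Unit vector in R^(k+1) from k angles: cos a_0, sin a_0 cos a_1, ..., prod sin a_i."""
    v = np.ones(len(angles) + 1)
    for i, a in enumerate(angles):
        v[i] *= np.cos(a)
        v[i + 1:] *= np.sin(a)
    return v


def reconstruct_F(v_F, amplitude, J):
    """F = J^(1/3) exp(amplitude * sum_i v_i PSI_i): symmetric with det F = J."""
    S = amplitude * np.tensordot(v_F, PSI, axes=1)     # symmetric and traceless
    lam, Q = np.linalg.eigh(S)
    return J ** (1.0 / 3.0) * (Q * np.exp(lam)) @ Q.T  # exp(S) via eigendecomposition


def sample_point(rng, amp_F=(0.0, 0.5), J_range=(0.8, 1.2), amp_D=(0.0, 1.0)):
    """One hypothetical sample (F, D0); the parameter ranges are illustrative only."""
    ang_F = np.concatenate([rng.uniform(0.0, np.pi, 3), rng.uniform(0.0, 2 * np.pi, 1)])
    ang_D = np.array([rng.uniform(0.0, np.pi), rng.uniform(0.0, 2 * np.pi)])
    F = reconstruct_F(hypersphere_direction(ang_F), rng.uniform(*amp_F), rng.uniform(*J_range))
    D0 = rng.uniform(*amp_D) * hypersphere_direction(ang_D)
    return F, D0


F, D0 = sample_point(np.random.default_rng(0))
print(np.linalg.det(F), np.linalg.norm(D0))
```

Because S is traceless, det exp(S) = exp(tr S) = 1. The Jacobian is therefore controlled entirely by the factor J^{1/3}, which decouples volumetric and deviatoric sampling.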

4.2 Calibration and Validation

The synthetic data generated as described in Sect. 4.1 are used to calibrate the Kriging and Gradient Enhanced Kriging surrogates, following the principles of Sect. 3. To assess the accuracy of the surrogates at non-observation points, additional evaluation points are generated following the same procedure as in Sect. 4.1. These points are used to test model performance but are not part of the calibration. A large validation set of 10,000 data points is used. Being much denser than the calibration sets, it allows a reliable assessment of the accuracy, reliability and generalizability of surrogates calibrated with comparatively few points.

The calibration and validation process has been carried out for a diverse range of constitutive models, namely: (a) Mooney–Rivlin/ideal dielectric model (MR/ID); (b) Arruda-Boyce/ideal dielectric (AB/ID) [1]; (c) Gent/ideal dielectric (Gent/ID); (d) Quadratic Mooney–Rivlin/ideal dielectric (QMR/ID); (e) Yeoh/ideal dielectric (Yeoh/ID); (f) Rank-one laminate composite (ROL). Specific expressions for the strain energy densities and the material parameters are given in Appendix A. For each model, two training datasets are generated, containing \(N=\{45, 100\}\) training points, respectively. Kriging and Gradient Enhanced Kriging models are calibrated for all six ground truth models with each training set. The results, namely the coefficients of determination \(R^2(\varvec{P})\) and \(R^2(\varvec{E}_0)\) for the first Piola-Kirchhoff stress tensor \(\varvec{P}\) and the material electric field \(\varvec{E}_0\), and the values of \(\hat{E}_{\varvec{P}}\) and \(\hat{E}_{\varvec{E}_0}\) defined below

are presented in Table 1 (for \(N=45\) training points) and Table 2 (for \(N=100\) training points). In Eq. (74), \(||\varvec{A}||\) denotes the Frobenius norm of \(\varvec{A}\), n is the number of experiments, and \({\varvec{P}^{An}}^i\) and \({\varvec{P}^{Kr}}^i\) represent the analytical and Kriging-predicted first Piola-Kirchhoff stress tensors, respectively. Similarly, \({\varvec{E}_0^{An}}^i\) and \({\varvec{E}_0^{Kr}}^i\) represent the analytical and Kriging-predicted material electric field, respectively.
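For reference, here is a sketch of how such error measures can be evaluated over a validation set. The coefficient of determination below pools all tensor components. The relative error averages ‖A^An − A^Kr‖/‖A^An‖ over the samples. Both are assumed forms written for illustration; they are not a transcription of Eq. (74), and the stand-in data are random. Samples with vanishing ‖A^An‖ (e.g., at F=I, D₀=0) would have to be excluded from the relative error.

```python
import numpy as np


def r2_score(A_an, A_kr):
    """Coefficient of determination 1 - SS_res / SS_tot, pooled over all components.
    A_an, A_kr: analytical and predicted values, shape (n, 3, 3) for P or (n, 3) for E0."""
    ss_res = np.sum((A_an - A_kr) ** 2)
    ss_tot = np.sum((A_an - A_an.mean(axis=0)) ** 2)
    return 1.0 - ss_res / ss_tot


def mean_relative_error(A_an, A_kr):
    """Mean over samples of ||A_an^i - A_kr^i|| / ||A_an^i|| (Frobenius/Euclidean norms)."""
    axes = tuple(range(1, A_an.ndim))
    num = np.sqrt(np.sum((A_an - A_kr) ** 2, axis=axes))
    den = np.sqrt(np.sum(A_an**2, axis=axes))
    return np.mean(num / den)


rng = np.random.default_rng(0)
P_an = rng.normal(size=(10_000, 3, 3))                    # stand-in validation data
P_kr = P_an + 0.01 * rng.normal(size=P_an.shape)          # stand-in predictions
print(r2_score(P_an, P_kr), mean_relative_error(P_an, P_kr))
```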

The results in Tables 1 and 2 offer insight into the performance of the Kriging and Gradient Enhanced Kriging techniques. In both tables, the values of \(R^2(\varvec{P})\) and \(R^2(\varvec{E}_0)\) are very high, approaching unity, which indicates a high level of accuracy in predicting both the first Piola–Kirchhoff stress tensor and the material electric field. This holds for all models except the rank-one laminate composite material, for which ordinary Kriging performs extremely poorly. Furthermore, in terms of the alternative metrics \(\{\hat{E}_{\varvec{P}}, \hat{E}_{\varvec{E}_0}\}\), Gradient Enhanced Kriging is significantly more accurate, consistently yielding values approximately an order of magnitude smaller than those of its Kriging counterpart.

To complement these results, Fig. 4 depicts the evolution of the \(\hat{E}_{\varvec{P}}\) metric for both conventional and Gradient Enhanced Kriging. It covers two of the constitutive models considered in Tables 1 and 2, namely the Mooney–Rivlin/ideal dielectric model and the rank-one laminate composite model. As the number of training points increases, the Gradient Enhanced technique steadily reduces this metric, whereas ordinary Kriging fails to decrease \(\hat{E}_{\varvec{P}}\) as the number of infill points increases.

These observations highlight the distinct advantage of the Gradient Enhanced technique, which delivers precise predictions of the first Piola-Kirchhoff stress tensor \(\varvec{P}\) and of the material electric field \(\varvec{E}_0\) even with a considerably small number of training points. This makes Gradient Enhanced Kriging an efficient and accurate alternative to conventional Kriging.

Remark 6

Appendix A contains the material parameters used in each of the constitutive models (MR/ID, AB/ID, Gent/ID, QMR/ID, Yeoh/ID, ROL) considered for the calibration of their respective Kriging and Gradient Kriging predictors. Notice that these values do not correspond to those of typical dielectric elastomers such as VHB 4910 by 3M. It is important to emphasize that our Kriging and Gradient Kriging predictors can accommodate any realistic values of the material constants, including those typical of the popular VHB 4910. Such materials exhibit a large disparity between the values of the mechanical and electrical constants. For instance, the shear modulus \(\mu \) and electric permittivity \(\varepsilon \) of VHB 4910 [26] take values of approximately \(\mu \approx 10^3-10^5\) (Pa) and \(\varepsilon \approx 10^{-11}-10^{-12}\) (F/m).

The enormous gap between the material constants of the two physics (mechanics and electrostatics) can ultimately hamper the accurate calibration of the Kriging predictor, by rendering the correlation matrix \(\varvec{R}\) (36) ill-conditioned, or of any other machine learning technique. To remedy this, we can avoid calibrating directly with the data generated by the model with such material constants, namely the first Piola-Kirchhoff stress tensor \(\varvec{P}\), the material electric field \(\varvec{E}_0\) and the material electric displacement \(\varvec{D}_0\). Instead, the calibration can be performed with their dimensionless counterparts \(\widetilde{\varvec{P}}\), \(\widetilde{\varvec{E}}_0\) and \(\widetilde{\varvec{D}}_0\), respectively (notice that the deformation gradient tensor \(\varvec{F}\) is already dimensionless), defined as

Remark 7

Regarding the rank-one laminate material, we demonstrated in previous work [42] that whenever each of the phases a, b of the rank-one laminate complies with the polyconvexity condition (15), and therefore with the ellipticity condition (10), the solvability of \(\varvec{\alpha }\) and \(\varvec{\beta }\) in (29) is always guaranteed. Hence, no difficulty arises at the microscopic level. However, the homogenised response of phases that are individually elliptic does not necessarily inherit this desirable property and can indeed exhibit loss of ellipticity. We have not excluded such situations from the calibration of the Kriging and Gradient Kriging predictors; in fact, some of the generated data points violate the ellipticity condition at the macroscopic level. The predictors nevertheless handle these points.

5 Numerical three-dimensional examples

The analysis in Sect. 4 strongly supports the superiority of gradient enhanced Kriging over its energy-only counterpart, which lacks first derivatives. Motivated by these results, the primary objective of this section is to integrate gradient enhanced Kriging models into an in-house Finite Element computational framework. Their accuracy and efficacy are then assessed through detailed comparison with the Finite Element solutions obtained with their respective ground truth models. This evaluation includes challenging scenarios such as complex bending and wrinkling, thus providing a robust appraisal of the metamodels' performance in demanding contexts.

5.1 Electrically induced bending example: isotropic ground truth model

The first Finite Element example considers the cantilever beam configuration illustrated in Fig. 5, which also summarises its geometry and boundary conditions. Tri-quadratic Q2 Finite Elements are employed to interpolate the displacement field.

In this example, the ground truth internal energy density is the Mooney–Rivlin/ideal dielectric model of Eq. (76), with the material parameters specified in Table 3. Upon application of a voltage difference \(\Delta V\sqrt{\varepsilon /\mu _1}=0.5\) (see Table 3 for the value of \(\mu _1\)), the resulting deformation is depicted in Fig. 6a and b for the ground truth Mooney–Rivlin model and its gradient enhanced Kriging counterpart, respectively. The two deformed configurations are in close agreement. The same agreement is observed in the contour plots of \(\sigma _{13}\), where \(\varvec{\sigma }\) denotes the Cauchy stress tensor, i.e., \(\varvec{\sigma }=J^{-1}\varvec{P}\varvec{F}^T\). These results confirm the accuracy and robustness of the gradient enhanced Kriging model in capturing the behaviour of the underlying ground truth model.
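The Cauchy stress used in the contour plots follows from the first Piola-Kirchhoff stress as σ=J⁻¹PFᵀ. The small sketch below uses a compressible neo-Hookean law P=μ(F−F⁻ᵀ)+λ ln J F⁻ᵀ as a hypothetical stand-in for the Mooney–Rivlin/ideal dielectric model of Eq. (76). It checks that the resulting σ is symmetric and extracts the normalised component σ₁₃/μ. Index 13 maps to [0, 2] in zero-based NumPy indexing. The deformation gradient and the constants are illustrative only.

```python
import numpy as np


def cauchy_from_pk1(P, F):
    """Cauchy stress sigma = J^{-1} P F^T with J = det F."""
    return P @ F.T / np.linalg.det(F)


def pk1_neo_hookean(F, mu, lam):
    """Stand-in compressible neo-Hookean model: P = mu (F - F^{-T}) + lam ln(J) F^{-T}."""
    F_inv_T = np.linalg.inv(F).T
    return mu * (F - F_inv_T) + lam * np.log(np.linalg.det(F)) * F_inv_T


mu, lam = 1.0, 10.0                                # hypothetical constants
F = np.array([[1.1, 0.2, 0.05], [0.0, 0.95, 0.1], [0.02, 0.0, 1.05]])
sigma = cauchy_from_pk1(pk1_neo_hookean(F, mu, lam), F)
print(sigma[0, 2] / mu)                            # normalised sigma_13
```

For this stand-in law the push-forward can be verified by hand: σ = J⁻¹[μ(FFᵀ−I) + λ ln J I], which is symmetric as required by balance of angular momentum.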

Electrically induced actuation: a Mooney–Rivlin ground truth model; b isotropic Gradient Enhanced Kriging counterpart. Contour plot distribution of \(\sigma _{13}/\mu _1\) (see Table 3 for the value of \(\mu _1\))

5.2 Complex electrically induced bending example: rank one laminate ground truth model

The second example considers the same cantilever beam as in the preceding section, subjected to a more complex set of boundary conditions for the electric potential \(\varphi \), shown in Fig. 7. As before, tri-quadratic Q2 Finite Elements are employed to interpolate the displacement field.

Complex electrically induced actuation. Geometry and electrical boundary conditions. Displacements fixed at \(X_1=0\). \(\{a,b,c\}=\{120,10,1\}\) (mm). Electrodes are highlighted in green (two regions on the lowest surface across the thickness and one region on the top surface) and in red (one region on the mid surface across the thickness)

Whereas the previous section employed an isotropic ground truth model, here we consider a more complex rank-one laminate composite ground truth model. Its homogenized internal energy is given in Eq. (81), and the associated material parameters are listed in Table 9. Upon application of a voltage difference \(\Delta V\sqrt{\varepsilon ^a/\mu _1^a}=2.5\) across the electrodes (see Table 9 for the values of \(\mu _1^a\) and \(\varepsilon ^a\)), electrically induced bending is explored for both the ground truth and Gradient Enhanced Kriging models. The results, presented in Fig. 8, show close agreement between the two models, both in the electrically induced deformations and in the stress distributions.

Complex electrically induced actuation. Contour plot distribution of \(\sigma _{13}/\mu _1^a\) for various values of \(\Delta V\sqrt{\varepsilon ^a/\mu _1^a}\) (see Table 9 for the values of \(\mu _1^a\) and \(\varepsilon ^a\)): a Rank-one laminate composite ground truth model; b Transversely isotropic Gradient Enhanced Kriging counterpart

For a more detailed evaluation of the electrically induced deformation predicted by both models (the rank-one laminate ground truth model and its Gradient Enhanced Kriging counterpart), Fig. 9 provides an additional comparison. It underscores the close agreement between the deformations predicted by the two models.

5.3 Electrically induced wrinkles: rank one laminate ground truth model

The last example considers the geometry and boundary conditions shown in Fig. 10, a configuration previously analysed by the authors in [43, 44]. The square plate is clamped along its edges. The electric potential is grounded at the maximum value of the coordinate \(X_3\), whilst a surface charge \(\omega _0=220/\sqrt{\mu ^a_1\varepsilon ^a}\) (Q\(\cdot \text {mm}^{-2} \)) (see Table 9 for the values of \(\mu _1^a\) and \(\varepsilon ^a\)) is applied at the minimum value of \(X_3\). The Finite Element discretisation uses Q2 (tri-quadratic) hexahedral Finite Elements, with \(80\times 80\times 2\) elements in the \(X_1\), \(X_2\) and \(X_3\) directions.

Electrically induced wrinkles. Geometry and boundary conditions. Square plate with side 0.06 (m) and thickness 1 (mm). Maximum applied surface charge \(\omega _0=20/\sqrt{\mu _1^a\varepsilon ^a}\) (Q\(\cdot \text {mm}^{-2} \)) (see Table 9 for the values of \(\mu _1^a\) and \(\varepsilon ^a\)). Volumetric force of value \(9.8\times 10^{-2}\) (N/\(\hbox {m}^3\)) acting along the \(X_3\) axis (in the positive direction)

The primary objective of this example is to assess the accuracy of the Gradient Enhanced Kriging model in scenarios involving electrically induced wrinkles. Specifically, the rank-one laminate model defined by Eq. (81) is taken as the ground truth model.

The corresponding material parameters are listed in Table 9. Fig. 11 portrays the progression of the electrically induced wrinkles, as predicted by the Gradient Enhanced Kriging model, for increasing values of the applied surface charge \(\omega _0\). In addition, this figure represents the evolution of the displacement along the Z (or \(X_3\)) direction of points A and B (see Fig. 11) predicted by both the ground truth and Gradient Enhanced Kriging (Emulator) models, showing a clear similarity between the paths of both models.

Electrically induced wrinkles. Wrinkling patterns for various values of the surface charge \(\Lambda \omega _0\), where \(\Lambda \) is the load factor. Top row: results predicted by the transversely isotropic Gradient Enhanced Kriging model, calibrated against the rank-one laminate composite ground truth model. The graphics represent the evolution of the displacement along the Z (or \(X_3\)) direction of points A and B predicted by both the ground truth and Gradient Enhanced Kriging (Emulator) models

Fig. 12 compares side by side the wrinkles predicted by the rank-one laminate composite ground truth model and by its Gradient Enhanced Kriging counterpart. The electrically induced wrinkling patterns agree remarkably well, as do the stress distributions shown in the contour plots of both models.

Electrically induced wrinkles. Contour plot distribution of \(\sigma _{13}/\mu _1^a\) (see Table 9 for the value of \(\mu _1^a\)) for \(\Lambda =0.1\). a Rank-one laminate composite ground truth model; b transversely isotropic Gradient Enhanced Kriging model

6 Concluding Remarks

This manuscript introduced a metamodelling technique that leverages gradient-enhanced Gaussian Process Regression, or Kriging, to emulate a diverse range of internal energy densities. The methodology incorporates principal invariants as input variables for the surrogate internal energy density, thereby enforcing crucial physical constraints such as material frame indifference and material symmetry. This enables precise interpolation not only of the energy values but also of their derivatives, including the first Piola–Kirchhoff stress tensor and the material electric field. Furthermore, the approach ensures stress- and electric field-free conditions at the origin, which are difficult to enforce with regression-based methodologies such as neural networks.

The results have also shown that using invariants of the dual potential of the internal energy density, namely the free energy density, is inadequate. The saddle point nature of the latter, in contrast to the convexity of the internal energy density, complicates GPR or Gradient Enhanced GPR models that rely on invariants of \(\varvec{F}\) and \(\varvec{E}_0\) (free energy-based GPR), as opposed to models formulated in terms of \(\varvec{F}\) and \(\varvec{D}_0\) (internal energy-based GPR).

Numerical examples within a 3D Finite Element framework have been included to rigorously assess the accuracy of the surrogate models in challenging scenarios. The comparison of displacement and stress fields with those of the ground-truth analytical models, in situations involving extreme bending and electrically induced wrinkles, showcases the utility and accuracy of the proposed approach.

Notes

We could continue using the notation \(\varvec{F}\) and \(\varvec{D}_0\) for the macroscopic deformation gradient tensor and electric displacement, respectively. However, we prefer to adhere to the traditional convention in homogenisation theory [4, 24, 59], where macroscopic fields are generally referred to as average fields, for which the bar symbol on top of these fields can be used, hence the notation \(\bar{\varvec{F}}\), \(\bar{\varvec{D}}_0\).

References

Arruda EM, Boyce MC (1993) A three-dimensional constitutive model for the large stretch behavior of rubber elastic materials. J Mech Phys Solids 41:389–412

Ball JM (1976) Convexity conditions and existence theorems in nonlinear elasticity. Arch Ration Mech Anal 63(4):337–403

Ball JM (2002) Some open problems in elasticity. In: Geometry, mechanics, and dynamics. Springer, Berlin, pp 3–59

Bertoldi K, Gei M (2011) Instabilities in multilayered soft dielectrics. J Mech Phys Solids 59(1):18–42

Bishop CM (2006) Pattern recognition and machine learning. Springer, New York

Bustamante R (2009) Transversely isotropic non-linear electro-active elastomers. Acta Mech 206(3–4):237–259

Bustamante R, Dorfmann A, Ogden RW (2009) On electric body forces and Maxwell stresses in nonlinearly electroelastic solids. Int J Eng Sci 47(11–12):1131–1141

Cao J, Qin L, Liu J, Ren Q, Foo CC, Wang H, Lee HP, Zhu J (2018) Untethered soft robot capable of stable locomotion using soft electrostatic actuators. Extreme Mech Lett 21:9–16

Carpi F, De Rossi D (2007) Bioinspired actuation of the eyeballs of an android robotic face: concept and preliminary investigations. Bioinspiration Biomimetics 2:50–63

Chen P, Guilleminot J (2022) Polyconvex neural networks for hyperelastic constitutive models: A rectification approach. Mech Res Commun 125:103993

Chen Y, Zhao H, Mao J, Chirarattananon P, Helbling EF, Hyun NSP, Clarke David R, Wood RJ (2019) Controlled flight of a microrobot powered by soft artificial muscles. Nature 575:324–329

Ciarlet P (2010) Existence theorems in intrinsic nonlinear elasticity. J Math Pures Appl 94:229–243

Ciarlet PG (1988) Mathematical elasticity. Volume 1: three dimensional elasticity

Dacorogna B (2008) Direct methods in the calculus of variations. Springer

deBotton G, Tevet-Deree L, Socolsky EA (2007) Electroactive heterogeneous polymers: analysis and applications to laminated composites. Mech Adv Mater Struct 14:13–22

Dorfmann A, Ogden RW (2005) Nonlinear electroelasticity. Acta Mech 174(3–4):167–183

Dorfmann A, Ogden RW (2006) Nonlinear electroelastic deformations. J Elast 82(2):99–127

Dubourg V (2011) Adaptive surrogate models for reliability analysis and reliability-based design optimization. PhD thesis, Université Blaise Pascal - Clermont II

Forrester A, Sobester A, Keane A (2008) Engineering design via surrogate modelling: a practical guide. Wiley, Chichester

Fuhg JN, Marino M, Bouklas N (2022) Local approximate Gaussian process regression for data-driven constitutive models: development and comparison with neural networks. Comput Methods Appl Mech Eng 388:114217

Gei M, Springhetti R, Bortot E (2013) Performance of soft dielectric laminated composites. Smart Mater Struct 22:1–8

Gil AJ, Ortigosa R (2016) A new framework for large strain electromechanics based on convex multi-variable strain energies: variational formulation and material characterisation. Comput Methods Appl Mech Eng 302:293–328

Han ZH, Görtz S, Zimmermann R (2013) Improving variable-fidelity surrogate modeling via gradient-enhanced kriging and a generalized hybrid bridge function. Aerosp Sci Technol 25:177–289

Henann DL, Chester SA, Bertoldi K (2013) Modeling of dielectric elastomers: design of actuators and energy harvesting devices. J Mech Phys Solids 61(10):2047–2066

Horak M, Gil AJ, Ortigosa R, Kruzík M (2023) A polyconvex transversely-isotropic invariant-based formulation for electro-mechanics: stability, minimisers and computational implementation. Comput Methods Appl Mech Eng 403:115695

Hossain M, Vu DK, Steinmann P (2015) A comprehensive characterization of the electro-mechanically coupled properties of VHB 4910 polymer. Arch Appl Mech 85

Aggarwal A, Jensen BS, Pant S, Lee CH (2023) Strain energy density as a Gaussian process and its utilization in stochastic finite element analysis: application to planar soft tissues. Comput Methods Appl Mech Eng 404:115812

Jones DR (2001) A taxonomy of global optimization methods based on response surfaces. J Global Optim 21:345–383

Jordi C, Michel S, Fink E (2010) Fish-like propulsion of an airship with planar membrane dielectric elastomer actuators. Bioinspiration Biomimetics 5:1–9

Kleijnen JPC (2009) Kriging metamodeling in simulation: a review. Eur J Oper Res 192:707–716

Klein DK, Ortigosa R, Martínez-Frutos J, Weeger O (2022) Finite electro-elasticity with physics-augmented neural networks. Comput Methods Appl Mech Eng 400:1–33

Kofod G, Sommer-Larsen P, Kornbluh R, Pelrine R (2003) Actuation response of polyacrylate dielectric elastomers. J Intell Mater Syst Struct 14(12):787–793

Krige DG (1951) A statistical approach to some basic mine valuation problems on the Witwatersrand. J South Afr Inst Min Metall 52(6):119–139

Kunc O, Fritzen F (2019) Finite strain homogenization using a reduced basis and efficient sampling. Math Comput Appl 24:1–28

Laurent L, Le Riche R, Soulier B, Boucard P-A (2019) An overview of gradient-enhanced metamodels with applications. Arch Comput Methods Eng 26:61–106

Li G, Chen X, Zhou F, Liang Y, Xiao Y, Cao X, Zhang Z, Zhang M, Baosheng W, Yin S, Yi X, Fan H, Chen Z, Song W, Yang W, Pan B, Hou J, Zou W, He S, Yang X, Mao G, Jia Z, Zhou H, Li T, Shaoxing Q, Zhongbin X, Huang Z, Luo Y, Xie T, Jason G, Zhu S, Yang W (2021) Self-powered soft robot in the Mariana Trench. Nature 591:66–71

Li T, Keplinger C, Baumgartner R, Bauer S, Yang W, Suo Z (2013) Giant voltage-induced deformation in dielectric elastomers near the verge of snap-through instability. J Mech Phys Solids 61(2):611–628

Linka K, Hillgärtner M, Abdolazizi KP, Aydin RC, Itskov M, Cyron CJ (2021) Constitutive artificial neural networks: a fast and general approach to predictive data-driven constitutive modeling by deep learning. J Comput Phys 429:1–17

Linka K, Hillgärtner M, Abdolazizi KP, Aydin RC, Itskov M, Cyron CJ (2021) Constitutive artificial neural networks: a fast and general approach to predictive data-driven constitutive modeling by deep learning. J Comput Phys 429:110010

Lu T, Shi Z, Shi Q, Wang TJ (2016) Bioinspired bicipital muscle with fibre-constrained dielectric elastomer actuator. Extreme Mech Lett 6:75–81

Marden JI (2015) Multivariate statistics: old school. CreateSpace Independent Publishing

Marín F, Martínez-Frutos J, Ortigosa R, Gil AJ (2021) A convex multi-variable based computational framework for multilayered electro-active polymers. Comput Methods Appl Mech Eng 374:113567

Marín F, Martínez-Frutos J, Ortigosa R, Gil AJ (2021) A convex multi-variable based computational framework for multilayered electro-active polymers. Comput Methods Appl Mech Eng 374:1–42

Marín F, Ortigosa R, Martínez-Frutos J, Gil AJ (2022) Viscoelastic up-scaling rank-one effects in in-silico modelling of electro-active polymers. Comput Methods Appl Mech Eng 389:1–44

Martínez-Frutos J, Herrero D (2016) Kriging-based infill sampling criterion for constraint handling in multi-objective optimization. J Global Optim 64:97–115

Matheron G (1962) Traité de géostatistique appliquée. Editions Technip

Nguyen CT, Phung H, Nguyen TD, Jung H, Choi HR (2017) Multiple-degrees-of-freedom dielectric elastomer actuators for soft printable hexapod robot. Sens Actuators, A 267:505–516

Ning C, Zhou Z, Tan G, Zhu Y, Mao C (2018) Electroactive polymers for tissue regeneration: developments and perspectives. Prog Polym Sci 81:144–162

Ollar J, Mortished C, Jones R, Sienz J, Toropov V (2017) Gradient based hyper-parameter optimisation for well conditioned kriging metamodels. Struct Multidiscip Optim 55:2029–2044

Ortigosa R, Gil AJ (2016) A new framework for large strain electromechanics based on convex multi-variable strain energies: conservation laws, hyperbolicity and extension to electro-magneto-mechanics. Comput Methods Appl Mech Eng 309:202–242

Ortigosa R, Gil AJ (2016) A new framework for large strain electromechanics based on convex multi-variable strain energies: Conservation laws, hyperbolicity and extension to electro-magneto-mechanics. Comput Methods Appl Mech Eng 309:202–242

Ortigosa R, Gil AJ (2016) A new framework for large strain electromechanics based on convex multi-variable strain energies: Finite element discretisation and computational implementation. Comput Methods Appl Mech Eng 302:329–360

Pelrine R, Kornbluh R, Joseph J (1998) Electrostriction of polymer dielectrics with compliant electrodes as a means of actuation. Sens Actuators, A 64(1):77–85

Pelrine R, Kornbluh R, Pei Q, Joseph J (2000) High-speed electrically actuated elastomers with strain greater than 100 %. Science 287(5454):836–839

Pelrine R, Kornbluh R, Pei Q, Stanford S, Oh S, Eckerle J, Full RJ, Rosenthal MA, Meijer K (2002) Dielectric elastomer artificial muscle actuators: toward biomimetic motion. Smart Structures and Materials 2002: Electroactive Polymer Actuators and Devices (EAPAD). volume 4695. International Society for Optics and Photonics, SPIE, pp 126–137

Rasmussen C, Williams C (2006) Gaussian processes for machine learning. Adaptive computation and machine learning. MIT Press, Cambridge, MA

Rudykh S, Bhattacharya K, deBotton G (2012) Snap-through actuation of thick-wall electroactive balloons. Int J Non-Linear Mech 47:206–209

Rudykh S, Bhattacharya K, deBotton G (2014) Multiscale instabilities in soft heterogeneous dielectric elastomers. Proc R Soc A 470:20130618

Rudykh S, deBotton G (2011) Stability of anisotropic electroactive polymers with application to layered media. ZAMP 62:1131–1142

Sacks J, Welch WJ, Mitchell TJ, Wynn HP (1989) Design and analysis of computer experiments. Stat Sci 4(4):409–423

Sacks J, Welch WJ, Mitchell TJ, Wynn HP (1989) Design and analysis of computer experiments. Stat Sci 4(4):409–423

Santner T, Williams B, Notz W (2003) The design and analysis of computer experiments. Springer series in Statistics. Springer

Schröder J, Keip MA (2012) Two-scale homogenization of electromechanically coupled boundary value problems. Comput Mech 50:229–244

Schröder J, Neff P, Balzani D (2005) A variational approach for materially stable anisotropic hyperelasticity. Int J Solids Struct 42:4352–4371

Šilhavý M (2018) A variational approach to nonlinear electro-magneto-elasticity: convexity conditions and existence theorems. Math Mech Solids 23(6):907–928

Tikhonov AN, Arsenin VY (1977) Solutions of ill-posed problems. Winston, Washington

Vu DK, Steinmann P, Possart G (2007) Numerical modelling of non-linear electroelasticity. Int J Numer Meth Eng 70(6):685–704

Wang Y, Zhu J (2016) Artificial muscles for jaw movements. Extreme Mech Lett 6:88–95

Xiaojian W, Yuan Y et al (2020) Boxmin: a fast and accurate derivative-free algorithm for black-box optimization. IEEE Trans Cybernet 50(2):503–515

Zhang J, Zhao F, Yang JZ, Yijun Z, Xiaoming C, Bo L, Nan Z, Gang N, Wei R, Zuoguang Y (2020) Improving actuation strain and breakdown strength of dielectric elastomers using core-shell structured CNT-Al2O3. Compos Sci Technol 200:108393

Acknowledgements

R. Ortigosa and J. Martínez-Frutos acknowledge the support of grant PID2022-141957OA-C22 funded by MCIN/AEI/10.13039/501100011033 and by “ERDF A way of making Europe”. The authors also acknowledge the support provided by the Autonomous Community of the Region of Murcia, Spain, through the programme for the development of scientific and technical research by competitive groups (21996/PI/22), included in the Regional Program for the Promotion of Scientific and Technical Research of Fundación Séneca - Agencia de Ciencia y Tecnología de la Región de Murcia. The fourth author also acknowledges the financial support received through the European Training Network Protechtion (Project ID: 764636) and from the UK Defence Science and Technology Laboratory.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Appendices

Constitutive Models

1.1 Mooney–Rivlin/ideal dielectric elastomer model

The internal energy density for this model is:

and the material parameters used are
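The closed-form expression for this model was lost in extraction and is not reconstructed here. The sketch below is only a hedged illustration of how an invariant-based internal energy can be evaluated and checked for the stress-free and electric-field-free conditions at the origin that the abstract emphasises. It assumes one commonly used Mooney–Rivlin/ideal dielectric form, \(e = \frac{\mu_1}{2}(I_1-3) + \frac{\mu_2}{2}(I_2-3) - (\mu_1+2\mu_2)\ln J + \frac{\lambda}{2}(J-1)^2 + \frac{I_5}{2\varepsilon J}\), with \(I_1=\varvec{F}:\varvec{F}\), \(I_2=\varvec{H}:\varvec{H}\) (\(\varvec{H}\) the cofactor of \(\varvec{F}\)), \(J=\det\varvec{F}\) and \(I_5=|\varvec{F}\varvec{D}_0|^2\). This form, the function names and the parameter values (\(\mu_1=1\), \(\mu_2=0.5\), \(\lambda=10\), \(\varepsilon=1\)) are assumptions, not the model or the values used by the authors. Derivatives are approximated by central finite differences.

```python
import numpy as np


def mr_ideal_dielectric(F, D0, mu1=1.0, mu2=0.5, lam=10.0, eps=1.0):
    """ASSUMED Mooney-Rivlin/ideal dielectric internal energy e(F, D0); not the paper's exact model."""
    J = np.linalg.det(F)
    H = J * np.linalg.inv(F).T            # cofactor H = J F^{-T}
    I1 = np.sum(F * F)                    # F : F
    I2 = np.sum(H * H)                    # H : H
    FD0 = F @ D0                          # F D0 (= J times the spatial electric displacement)
    I5 = FD0 @ FD0                        # |F D0|^2
    return (0.5 * mu1 * (I1 - 3.0) + 0.5 * mu2 * (I2 - 3.0)
            - (mu1 + 2.0 * mu2) * np.log(J)
            + 0.5 * lam * (J - 1.0) ** 2
            + I5 / (2.0 * eps * J))


def first_piola_fd(F, D0, h=1e-6):
    """Central-difference approximation of the first Piola-Kirchhoff stress P = de/dF."""
    P = np.zeros((3, 3))
    for i in range(3):
        for j in range(3):
            dF = np.zeros((3, 3))
            dF[i, j] = h
            P[i, j] = (mr_ideal_dielectric(F + dF, D0)
                       - mr_ideal_dielectric(F - dF, D0)) / (2.0 * h)
    return P


def material_electric_field_fd(F, D0, h=1e-6):
    """Central-difference approximation of the material electric field E0 = de/dD0."""
    E0 = np.zeros(3)
    for k in range(3):
        dD = np.zeros(3)
        dD[k] = h
        E0[k] = (mr_ideal_dielectric(F, D0 + dD)
                 - mr_ideal_dielectric(F, D0 - dD)) / (2.0 * h)
    return E0
```

At \(\varvec{F}=\varvec{I}\), \(\varvec{D}_0=\varvec{0}\) the energy and both finite-difference derivatives vanish up to round-off. This happens because the coefficient \(\mu_1+2\mu_2\) of the logarithmic term cancels the stress \(\mu_1\varvec{I}+2\mu_2\varvec{I}\) contributed by the first two terms.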

1.2 Quadratic Mooney–Rivlin/ideal dielectric elastomer model

The internal energy density for this model is:

and the material parameters used are (Table 4)

1.3 Gent/ideal dielectric elastomer model

The internal energy density for this model is:

and the specific values for the material parameters used are (Table 5)

1.4 Yeoh/ideal dielectric elastomer model

The internal energy density for this model is:

and the material parameters used are (Table 6):

1.5 Arruda-Boyce/ideal dielectric elastomer model

The internal energy density for this model is:

where

The material parameters used in the model are (Table 7):

1.6 Transversely Isotropic/ideal dielectric elastomer model

The internal energy density for this model is:

The material parameters used for this model are (Table 8):

1.7 Rank-One Laminate

We consider Mooney–Rivlin and ideal dielectric internal energy densities for the individual phases a and b within this composite (refer to Sect. 2.4), namely

The effective energy density of the laminate, \(\varPsi \left( \bar{\varvec{F}},\bar{\varvec{D}}_0\right) \), is then given by

with

where \(\{I_1^a,I_2^a,I_3^a,I_5^a\}\) and \(\{I_1^b,I_2^b,I_3^b,I_5^b\}\) denote the invariants of the phase fields \(\{\varvec{F}^a,\varvec{D}_0^a\}\) and \(\{\varvec{F}^b,\varvec{D}_0^b\}\), respectively. These phase fields are related to the macroscopic deformation gradient tensor \(\bar{\varvec{F}}\) and electric displacement field \(\bar{\varvec{D}}_0\) through Eq. (25). The material parameters used for this composite are listed in Table 9:

where the mechanical and electrical contrasts \(f_m\) and \(f_e\) are defined as

and with the lamination vector \(\varvec{N}\) parametrised in spherical coordinates through the angles \(\alpha \) and \(\beta \) according to
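Eq. (25) and the explicit parametrisation of \(\varvec{N}\) are not reproduced in this appendix, so the sketch below assumes common conventions and may differ from the paper. It takes \(\alpha\) as the polar angle measured from the \(X_3\) axis and \(\beta\) as the azimuth. It also uses the usual rank-one laminate parametrisation: \(\varvec{F}^a=\bar{\varvec{F}}+(1-c^a)\,\varvec{\gamma}\otimes\varvec{N}\), \(\varvec{F}^b=\bar{\varvec{F}}-c^a\,\varvec{\gamma}\otimes\varvec{N}\), \(\varvec{D}_0^a=\bar{\varvec{D}}_0+(1-c^a)\,\varvec{\eta}\) and \(\varvec{D}_0^b=\bar{\varvec{D}}_0-c^a\,\varvec{\eta}\), with \(\varvec{\eta}\cdot\varvec{N}=0\) and \(c^a\) the volume fraction of phase \(a\). The amplitude vectors \(\varvec{\gamma}\) and \(\varvec{\eta}\) are hypothetical symbols (`jump_F` and `jump_D` in the code). They are taken here as inputs, not as solutions of the laminate's stationarity conditions.

```python
import numpy as np


def lamination_normal(alpha, beta):
    """Unit lamination vector N. ASSUMED convention: alpha = polar angle from X3, beta = azimuth."""
    return np.array([np.sin(alpha) * np.cos(beta),
                     np.sin(alpha) * np.sin(beta),
                     np.cos(alpha)])


def laminate_phase_fields(F_bar, D0_bar, N, c_a, jump_F, jump_D):
    """Phase fields of a rank-one laminate under an ASSUMED standard parametrisation."""
    # Project the D0 jump onto the interface plane so that D0 . N is continuous.
    jump_D_t = jump_D - (jump_D @ N) * N
    F_a = F_bar + (1.0 - c_a) * np.outer(jump_F, N)
    F_b = F_bar - c_a * np.outer(jump_F, N)
    D0_a = D0_bar + (1.0 - c_a) * jump_D_t
    D0_b = D0_bar - c_a * jump_D_t
    return F_a, F_b, D0_a, D0_b
```

With these definitions the volume averages of the phase fields recover \(\bar{\varvec{F}}\) and \(\bar{\varvec{D}}_0\), the jump \(\varvec{F}^a-\varvec{F}^b=\varvec{\gamma}\otimes\varvec{N}\) is rank-one, and the normal component of \(\varvec{D}_0\) is continuous across the interface.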

Basis for Symmetric Traceless Second Order Tensors
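The basis given in this appendix did not survive extraction. For orientation only, the sketch below builds one standard orthonormal basis (with respect to the inner product \(\varvec{A}:\varvec{B}\)) of the five-dimensional space of symmetric traceless second order tensors in 3D. It is not necessarily the basis used by the authors, and the function names are hypothetical.

```python
import numpy as np


def symmetric_traceless_basis():
    """One orthonormal basis (w.r.t. A : B) of symmetric traceless 3x3 tensors."""
    e = np.eye(3)

    def sym(i, j):
        # Normalised symmetric off-diagonal element (e_i x e_j + e_j x e_i) / sqrt(2).
        return (np.outer(e[i], e[j]) + np.outer(e[j], e[i])) / np.sqrt(2.0)

    E1 = (np.outer(e[0], e[0]) - np.outer(e[1], e[1])) / np.sqrt(2.0)
    E2 = (np.outer(e[0], e[0]) + np.outer(e[1], e[1])
          - 2.0 * np.outer(e[2], e[2])) / np.sqrt(6.0)
    return [E1, E2, sym(0, 1), sym(0, 2), sym(1, 2)]


def components(A, basis):
    """Coordinates a_i = A : E_i of a symmetric traceless tensor A in an orthonormal basis."""
    return np.array([np.sum(A * E) for E in basis])
```

Because the basis is orthonormal, any symmetric traceless \(\varvec{A}\) is recovered as \(\sum_i (\varvec{A}:\varvec{E}_i)\,\varvec{E}_i\).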

Gradient-enhanced Gaussian-process based prediction using a single observation point in the strain energy

The gradient-enhanced Kriging approach described in Sect. 3.4 can be particularised to the case in which only one observation of the energy is available, while n observations of the derivatives of the energy with respect to the invariants are retained. In this scenario, the vector of observations \(\varvec{U}\) is redefined as

where

The correlation matrix \(\varvec{R}\) is similar to that in (52), with the same block structure, namely

with \(\varvec{R}_{\varvec{UU}}={\mathcal {R}}(\textbf{I}^1,\textbf{I}^1,\varvec{\theta })\). \(\varvec{R}_{\varvec{UU'}} \) includes the partial derivatives of \({\mathcal {R}}\) according to

given

The submatrix \(\varvec{R}_{\varvec{U^{\prime }U^{\prime }}} \) is identical to that in (55)–(56). Similarly, the vector of cross-correlations between the observations and the prediction is extended as follows

With these adaptations, the redefined quantities can be substituted into the expressions detailed in Sects. 3.2 and 3.3. The mean prediction then reads

and the variance

with

and
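The construction in this appendix can be sketched as follows. This is a minimal illustration, not the authors' implementation. The sketch assumes ordinary kriging (a constant trend \(\beta\) acting only on the energy observation) and a Gaussian correlation \({\mathcal {R}}(\textbf{x},\textbf{y},\varvec{\theta })=\exp (-\sum _k\theta _k(x_k-y_k)^2)\). It also assumes fixed hyperparameters \(\varvec{\theta }\) rather than optimised ones, plus a small nugget for numerical stability; the class and function names are hypothetical. The first entry of the observation vector is the single energy value, followed by the n gradient observations. The correlation matrix therefore has the blocks \(\varvec{R}_{\varvec{UU}}=1\), \(\varvec{R}_{\varvec{UU'}}\) (first derivatives of \({\mathcal {R}}\)) and \(\varvec{R}_{\varvec{U^{\prime }U^{\prime }}}\) (mixed second derivatives).

```python
import numpy as np


def corr(x, y, theta):
    """ASSUMED Gaussian correlation R(x, y) = exp(-sum_k theta_k (x_k - y_k)^2)."""
    return np.exp(-np.sum(theta * (x - y) ** 2))


def corr_dy(x, y, theta):
    """dR(x, y)/dy_k for all k, i.e. cov(f(x), df(y)/dy_k)."""
    return 2.0 * theta * (x - y) * corr(x, y, theta)


def corr_dxdy(x, y, theta):
    """d^2R(x, y)/(dx_j dy_k) as a d x d block, i.e. cov(df(x)/dx_j, df(y)/dy_k)."""
    w = theta * (x - y)
    return (2.0 * np.diag(theta) - 4.0 * np.outer(w, w)) * corr(x, y, theta)


class SingleEnergyGEK:
    """Ordinary gradient-enhanced kriging: one energy value plus n gradient observations."""

    def __init__(self, x_e, y_e, X_g, G, theta, nugget=1e-10):
        self.x_e = np.asarray(x_e, dtype=float)
        self.X_g = np.asarray(X_g, dtype=float)          # (n, d) gradient locations
        self.theta = np.asarray(theta, dtype=float)
        n, d = self.X_g.shape
        m = 1 + n * d
        R = np.zeros((m, m))
        R[0, 0] = 1.0                                    # R_UU
        for i in range(n):
            si = slice(1 + i * d, 1 + (i + 1) * d)
            R[0, si] = corr_dy(self.x_e, self.X_g[i], self.theta)      # R_UU'
            R[si, 0] = R[0, si]
            for l in range(n):
                sl = slice(1 + l * d, 1 + (l + 1) * d)
                R[si, sl] = corr_dxdy(self.X_g[i], self.X_g[l], self.theta)  # R_U'U'
        self.R = R + nugget * np.eye(m)
        U = np.concatenate([[float(y_e)], np.asarray(G, dtype=float).ravel()])
        self.f = np.zeros(m)
        self.f[0] = 1.0                                  # constant trend acts on the energy only
        Rinv_f = np.linalg.solve(self.R, self.f)
        self.fRf = Rinv_f @ self.f
        self.beta = (Rinv_f @ U) / self.fRf              # generalised least-squares trend
        resid = U - self.f * self.beta
        self.alpha = np.linalg.solve(self.R, resid)
        self.sigma2 = resid @ self.alpha / m             # process variance estimate

    def _r(self, x):
        """Cross-correlations between the energy at x and all observations."""
        r = [corr(x, self.x_e, self.theta)]
        for xi in self.X_g:
            r.extend(corr_dy(x, xi, self.theta))
        return np.array(r)

    def predict(self, x):
        """Mean prediction of the energy."""
        return self.beta + self._r(np.asarray(x, dtype=float)) @ self.alpha

    def predict_grad(self, x):
        """Gradient of the mean prediction (derivative of r(x) with respect to x)."""
        x = np.asarray(x, dtype=float)
        col0 = -2.0 * self.theta * (x - self.x_e) * corr(x, self.x_e, self.theta)
        blocks = [corr_dxdy(x, xi, self.theta) for xi in self.X_g]
        return np.hstack([col0[:, None]] + blocks) @ self.alpha

    def variance(self, x):
        """Ordinary-kriging mean squared error of the energy prediction."""
        r = self._r(np.asarray(x, dtype=float))
        Rinv_r = np.linalg.solve(self.R, r)
        return self.sigma2 * (1.0 - r @ Rinv_r + (1.0 - self.f @ Rinv_r) ** 2 / self.fRf)
```

As a toy check (not data from the paper), fit the energy \(x_1^2+2x_2^2\) from its value at the origin and its gradients at the corners of the unit square. Then `predict` and `predict_grad` reproduce the observed energy and gradients up to the nugget, and `variance` is close to zero at the energy observation point. Interpolation holds because, at an observation point, the cross-correlation vector coincides with the corresponding row of \(\varvec{R}\).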

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pérez-Escolar, A., Martínez-Frutos, J., Ortigosa, R. et al. Learning nonlinear constitutive models in finite strain electromechanics with Gaussian process predictors. Comput Mech (2024). https://doi.org/10.1007/s00466-024-02446-8

DOI: https://doi.org/10.1007/s00466-024-02446-8