Abstract

This paper presents a novel plasticity-based formulation for three-dimensional (3D) topology optimization of continuum structures. The proposed formulation combines mixed rigid-plastic analysis with density-based topology optimization, resulting in a volume minimization approach. Unlike conventional stress-constrained topology optimization methods, which rely on linear elastic structural analysis, the developed formulation targets the load-bearing capacity of the designed structures based on plastic limit theory, leading to more cost-effective designs. To improve computational efficiency, the smoothed finite element technique is employed, enabling the use of linear tetrahedral elements, which facilitate 3D mesh refinement. Moreover, the final formulation can be solved efficiently using the primal–dual interior point method, eliminating the need for a separate nonlinear finite element structural analysis. Numerical examples demonstrate the effectiveness of the proposed approach in offering enhanced design possibilities for continuum structures.



1 Introduction

Topology optimization is an advanced structural design method that aims to achieve the optimal structural configuration by distributing materials in a rational manner to meet specified load conditions, properties, and constraints. Compared to size and shape optimization, topology optimization is not dependent on the initial configuration and offers a broader design space. Due to its advantages, topology optimization has become a widely used technique in engineering structural design.

Several topology optimization approaches have been developed over the past few decades, including but not limited to the density approach [1,2,3], the level set approach [4,5,6], the evolutionary structural optimization approach [7,8,9], the phase field method [10,11,12], and the moving morphable components approach [13,14,15]. For a comprehensive review of these approaches, interested readers are referred to [16]. Among these approaches, the density-based topology optimization method is particularly popular. In this approach, the discrete 0–1 optimization problem is relaxed into a continuous optimization problem. Initially, material layouts were determined using the homogenisation method, which is mathematically complex and challenging to implement [17]. Subsequently, an alternative method called Solid Isotropic Material with Penalization (SIMP) was proposed. SIMP applies a power-law penalty to the elemental elastic modulus [18]. Due to its simplicity and high computational stability, SIMP quickly became the most widely adopted topology optimization method.

Traditional density-based approaches primarily focus on compliance design, which minimizes the elastic strain energy of a structure under given external loads and a volume constraint, assuming linear elastic material behavior. While these approaches are efficient, they often lead to high stress concentrations in the designed layout, necessitating troublesome subsequent design modifications [19], especially when the stresses exceed the material strength. To address this issue, researchers have dedicated their efforts to stress-constrained topology optimization [20]. Stress-constrained topology optimization can be formulated in two ways: either as a conventional stiffness-based topology optimization with additional stress constraints [19, 21] or as a volume minimization problem subject to equilibrium equations and stress constraints [22, 23]. It is important to note that conventional stress-constrained topology optimization employs linear elastic structural analysis despite the inclusion of stress constraints. As a result, only the elastic behavior of materials is considered, leading to more conservative designs.

Recently, Kammoun et al. [24] introduced a density-based topology optimization formulation that incorporates limit plasticity. Building upon the lower bound finite element limit analysis, this method ensures the optimization process maintains a statically admissible stress field. As such, it can determine the ultimate plastic limit of a structure during topology optimization. Subsequently, an alternative plasticity-based formulation was presented in [25], enabling both upper and lower bound finite element limit analysis through the use of different elements. However, the formulation in [25] only yields a grey design. To address this limitation, an extension of the work in [25] was proposed in [26], allowing for black-and-white designs. Notably, this extension demonstrates that the method does not require a stress-relaxation technique. Another formulation for topology optimization incorporating plastic limit analysis was put forth in [27]. Unlike the approaches in [24,25,26], the formulation in [27] seeks to maximize the load-bearing capacity of the structure, while considering material strength properties and a material volume constraint.

Despite the progress made in developing topology optimization methods based on plastic limit analysis, most contributions have been limited to two-dimensional (2D) cases [24,25,26,27]. Building upon the work presented in [26], this paper introduces a novel plasticity-based method specifically tailored for three-dimensional (3D) topology optimization. The proposed method integrates a mixed rigid-plastic analysis formulation into the density-based topology optimization approach to account for plasticity effects. By applying an exponential penalty to the objective function, a black-and-white solution is achieved. To enhance computational efficiency in 3D modelling, linear tetrahedral elements, which are well suited to mesh refinement, are employed. The volumetric locking associated with these constant stress elements is addressed by the smoothed finite element technique. In 3D formulations with the von Mises yield criterion, an additional constraint, not required in plane-stress cases, must be included to prevent spherical (hydrostatic) stress states in void regions. The developed plasticity-based method exhibits fast convergence and high computational efficiency for 3D topology optimization. To illustrate its features and advantages, a series of numerical examples is presented, and the results are compared with those from the traditional stress-constrained method.

2 Mixed smoothed finite element limit analysis

2.1 Mixed limit analysis

Limit analysis determines the maximum load that a mechanical system can withstand. There are three categories of limit analysis: the upper bound (kinematical) formulation, the lower bound (static) formulation, and the mixed formulation. The kinematical formulation considers the displacement as the only variable and provides an upper limit for the maximum load. On the other hand, stress is the sole variable in the static formulation, resulting in a lower limit for the maximum load. The mixed formulation makes use of both displacement and stress as variables. According to [28, 29], a mixed limit analysis formulation can be expressed as a min–max optimization problem

where

\(\boldsymbol{\sigma} = \left(\sigma_{xx}, \sigma_{yy}, \sigma_{zz}, \sigma_{yz}, \sigma_{zx}, \sigma_{xy}\right)^{T}\) is the Cauchy stress;

\({\mathbf{u}}\) is the displacement;

\({\mathbf{b}}\) is the body force;

\(\bar{\mathbf{t}}\) is the prescribed traction;

\(f\left( {\varvec{\sigma}} \right)\) is the yield function;

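\(\alpha\) is the collapse load factor, so that \(\alpha \bar{\mathbf{t}}\) is the ultimate load the structure can sustain; and \(\nabla\) is the differential operator matrix, which, for the stress ordering above, takes the form \(\nabla = \begin{pmatrix} \partial/\partial x & 0 & 0 & 0 & \partial/\partial z & \partial/\partial y \\ 0 & \partial/\partial y & 0 & \partial/\partial z & 0 & \partial/\partial x \\ 0 & 0 & \partial/\partial z & \partial/\partial y & \partial/\partial x & 0 \end{pmatrix}\).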

As illustrated in (1), both the stress and displacement fields are considered as independent variables in the mixed limit analysis. Remarkably, the mixed formulation is versatile since it can also provide a rigorous upper bound solution, as demonstrated in [28, 30, 31].

2.2 Nodal integration based mixed FELA formulation

In this section, we derive the formulation for 3D smoothed Finite Element Limit Analysis (FELA) based on the limit analysis problem (1). This formulation allows the use of linear tetrahedral elements without encountering volumetric locking issues. The computational domain is discretized using four-node tetrahedral elements whereas the integration is carried out over cells rather than finite elements. In the following, we will provide a comprehensive explanation of domain discretization, cell construction, and numerical interpolation and integration, which together yield the final formulation for 3D mixed smoothed FELA.

2.2.1 Domain discretization and cell construction

For clarity, we first consider a 2D case to explain the process of domain discretization and cell construction. The extension of this approach to 3D is straightforward.

Figure 1a shows a 2D domain discretized using three-node triangles. To construct the cells, each triangle is divided into three quadrilaterals of equal area, each equal to one-third of the triangle's area, by connecting the centroid of the triangle to its three mid-edge points, as depicted in Fig. 1b. The cell associated with each node then consists of the quadrilaterals attached to that node, one from each triangle adjacent to it. Figure 1b highlights two representative cells, namely those associated with the \(k{\text{th}}\) and \(l{\text{th}}\) nodes.
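The equal-area property of this subdivision can be checked numerically. The Python sketch below is only an illustration (the function names are hypothetical, not taken from the authors' code): it splits a triangle into three quadrilaterals by joining the centroid to the mid-edge points and measures each piece with the shoelace formula.

```python
import numpy as np

def polygon_area(pts):
    """Shoelace formula for a simple polygon given as an (n, 2) array of ordered vertices."""
    x, y = pts[:, 0], pts[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

def split_triangle(tri):
    """Split a triangle (3, 2) into three quadrilaterals, one attached to each vertex,
    by joining the centroid to the three mid-edge points."""
    c = tri.mean(axis=0)
    quads = []
    for k in range(3):
        m_next = 0.5 * (tri[k] + tri[(k + 1) % 3])   # midpoint of edge k -> k+1
        m_prev = 0.5 * (tri[k] + tri[(k - 1) % 3])   # midpoint of edge k -> k-1
        quads.append(np.array([tri[k], m_next, c, m_prev]))
    return quads
```

For the triangle with vertices (0, 0), (2, 0) and (0.5, 1.5), whose area is 1.5, each of the three quadrilaterals has area 0.5. Each quadrilateral is the union of two triangles of one-sixth area formed by a vertex, a mid-edge point and the centroid, which is why the split is exact for any triangle.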

Cell construction in 3D follows a similar procedure. The domain is first discretized using four-node tetrahedrons. Each tetrahedron is then divided into four hexahedrons, as illustrated in Fig. 2a, by connecting the centroid of each surface triangle (green nodes) to the three mid-edge points of that triangle (yellow nodes) and to the centroid of the tetrahedron (red node). The four resulting hexahedrons have equal volume, each equal to one-fourth of the volume of the tetrahedron. The cell associated with each node consists of all hexahedrons that share that node as a vertex. As an example, Fig. 2b shows the cells constructed for a cube.
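Because each hexahedral sub-volume holds one quarter of its tetrahedron, the nodal cell volumes needed for nodal integration can be assembled directly from the tetrahedra without building the hexahedrons explicitly. A minimal NumPy sketch, assuming a connectivity array of node indices (the names are illustrative):

```python
import numpy as np

def tet_volumes(coords, tets):
    """Volumes of linear tetrahedra; coords is (NN, 3), tets is (NE, 4) node indices."""
    p = coords[tets]                          # (NE, 4, 3) vertex coordinates
    d = p[:, 1:, :] - p[:, :1, :]             # edge vectors from vertex 0, (NE, 3, 3)
    return np.abs(np.linalg.det(d)) / 6.0

def nodal_cell_volumes(coords, tets):
    """Volume of the smoothing cell of every node: each tetrahedron gives one quarter
    of its volume (one sub-hexahedron) to each of its four nodes."""
    vol = tet_volumes(coords, tets)
    cell = np.zeros(len(coords))
    np.add.at(cell, tets.ravel(), np.repeat(vol / 4.0, 4))   # unbuffered scatter-add
    return cell
```

For a unit cube split into six tetrahedra along its main diagonal, the cell volumes sum to 1, the two diagonal corner nodes shared by all six tetrahedra each receive 0.25, and the remaining six corners each receive 1/12.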

2.2.2 Numerical interpolation and integration

The optimization problem (1) involves two master fields, namely the displacement and the stress. We start by approximating the displacement field, \(\mathbf{u}\), using the four-node tetrahedron shown in Fig. 3. The approximation is given by:

In the above equation, the symbol \(\hat{(\cdot)}\) denotes a variable at the mesh nodes, and \(\mathbf{N}\) is the shape function matrix defined as:

with

where \(\xi\), \(\eta\), and \(\zeta\) are the natural coordinates as depicted in Fig. 3.
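For reference, a minimal sketch of the linear shape functions, assuming the common numbering in which node 1 sits at the origin of the natural coordinates and nodes 2–4 lie at ξ = 1, η = 1 and ζ = 1 (the numbering in Fig. 3 may differ). Applying the four scalar functions to a (4, 3) array of nodal displacements is equivalent to multiplying by the 3 × 12 matrix N.

```python
import numpy as np

def shape_functions(xi, eta, zeta):
    """Linear tetrahedron shape functions N1..N4 (assumed node numbering:
    node 1 at the natural origin, nodes 2-4 at xi = 1, eta = 1, zeta = 1)."""
    return np.array([1.0 - xi - eta - zeta, xi, eta, zeta])

def interpolate(u_hat, xi, eta, zeta):
    """u = N u_hat, with u_hat a (4, 3) array of nodal displacements (ux, uy, uz)."""
    return shape_functions(xi, eta, zeta) @ u_hat
```

The functions sum to one everywhere (partition of unity), reproduce the nodal values at the vertices, and return the average of the four nodal displacements at the centroid (0.25, 0.25, 0.25).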

The strain, \(\nabla^{T}\mathbf{u}\), in (1) is constant within each tetrahedron and is given by:

in which \(\mathbf{B}\) is the strain–displacement matrix, expressed as

In Eq. (7), the coefficients are partial derivatives of the shape functions with respect to the physical Cartesian coordinates. Specifically, they are calculated by

where \(x\), \(y\), and \(z\) are the physical Cartesian coordinates. The derivatives of the shape functions with respect to the physical coordinates can be determined using the chain rule

with Jacobian matrix being

Substituting Eqs. (3) and (6) into min–max problem (1), we have

The minimization part of (11) can be resolved analytically, leading to the following maximization problem:

Applying nodal integration over the cells to the maximization problem (12) results in

in which the notation \(\left(\cdot\right)^{i}\) represents the value of \(\left(\cdot\right)\) at the \(i{\text{th}}\) node, unless otherwise specified, and \(NN\) is the total number of nodes, which equals the total number of cells (\(NC\)), so that the yield criterion is enforced at every node. In (13), \(\hat{\boldsymbol{\sigma}}\) denotes the vector of stress components at all mesh nodes. Notably, the stress at each node can be regarded as a weighted average of the stresses in the tetrahedrons adjacent to that node, that is, the tetrahedrons that contribute to the cell associated with that node.
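The element-level operations in Eqs. (7)–(10), namely the chain rule through the Jacobian and the assembly of the constant matrix B, can be sketched as follows. This is an illustrative NumPy implementation, not the authors' code; it assumes the natural-node numbering used above, nodal DOFs ordered (u_x, u_y, u_z), and strains ordered like σ with engineering shear strains, i.e. (ε_xx, ε_yy, ε_zz, γ_yz, γ_zx, γ_xy).

```python
import numpy as np

# dN_i / d(xi, eta, zeta) for the linear tetrahedron (rows: nodes 1-4); constant in the element.
DN_NAT = np.array([[-1.0, -1.0, -1.0],
                   [ 1.0,  0.0,  0.0],
                   [ 0.0,  1.0,  0.0],
                   [ 0.0,  0.0,  1.0]])

def tet_B(xyz):
    """Return (B, volume) for a linear tetrahedron with nodal coordinates xyz of shape (4, 3).
    B is 6 x 12 and maps (u1x, u1y, u1z, ..., u4z) to (exx, eyy, ezz, gyz, gzx, gxy)."""
    J = DN_NAT.T @ xyz                              # Jacobian: J[a, b] = d x_b / d xi_a
    dN = np.linalg.solve(J, DN_NAT.T).T             # chain rule: rows are (dNi/dx, dNi/dy, dNi/dz)
    B = np.zeros((6, 12))
    for i, (bx, by, bz) in enumerate(dN):
        c = 3 * i
        B[0, c] = bx                                # exx = du/dx
        B[1, c + 1] = by                            # eyy = dv/dy
        B[2, c + 2] = bz                            # ezz = dw/dz
        B[3, c + 1], B[3, c + 2] = bz, by           # gyz = dv/dz + dw/dy
        B[4, c], B[4, c + 2] = bz, bx               # gzx = du/dz + dw/dx
        B[5, c], B[5, c + 1] = by, bx               # gxy = du/dy + dv/dx
    return B, abs(np.linalg.det(J)) / 6.0
```

A quick consistency check is a patch test: for any linear displacement field u = Gx imposed at the nodes, B reproduces the exact constant strain, and a rigid translation gives zero strain because the shape-function derivatives sum to zero.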

The global matrix \(\bar{\mathbf{B}}^{\text{T}}\) is calculated based on nodal integration as

in which \(\sum\left(\cdot\right)\) represents the standard finite element assembly operator; \(V^{i}\) is the volume of the \(i{\text{th}}\) cell; \(V^{j}\) is the volume of the \(j{\text{th}}\) tetrahedron; \(ne\) is the total number of tetrahedral elements adjacent to the \(i{\text{th}}\) node; and \(\mathbf{B}_{ij}\) is the strain–displacement operator at the \(i{\text{th}}\) node evaluated from the \(j{\text{th}}\) adjacent tetrahedron. Since each tetrahedron contributes one-fourth of its volume to the cell of each of its nodes, \(\frac{1}{V^{i}}\sum_{j=1}^{ne}\left(\frac{1}{4}\mathbf{B}_{ij}^{\text{T}} V^{j}\right)\) represents the volume-weighted average of \(\mathbf{B}^{\text{T}}\) at the \(i{\text{th}}\) node.
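The volume-weighted nodal average can be sketched as a small assembly routine. This is an illustration under stated assumptions, not the authors' implementation: element matrices are supplied as an (NE, 6, 12) array with local DOFs (u_x, u_y, u_z) per node, every node belongs to at least one tetrahedron, and a dense result is built, which is only sensible for small meshes.

```python
import numpy as np

def smoothed_nodal_B(B_el, vol_el, tets, n_nodes):
    """Nodal-integration B-bar: for node i,
        Bbar_i = (1 / V_i) * sum_j (1/4) * V_j * B_j,
    summed over the tetrahedra j adjacent to node i, with cell volume V_i = sum_j V_j / 4.
    Returns a dense (n_nodes, 6, 3 * n_nodes) array acting on the global DOF vector."""
    Bbar = np.zeros((n_nodes, 6, 3 * n_nodes))
    V_cell = np.zeros(n_nodes)
    for B, V, conn in zip(B_el, vol_el, tets):
        dofs = (3 * conn[:, None] + np.arange(3)).ravel()   # 12 global DOFs of this element
        for i in conn:
            Bbar[i][:, dofs] += 0.25 * V * B               # quarter-volume contribution to cell i
            V_cell[i] += 0.25 * V
    return Bbar / V_cell[:, None, None]
```

For a node belonging to a single tetrahedron, the smoothed operator reduces to that element's B; for a node shared by tetrahedra of volumes 1 and 3, the result is (B_1 + 3 B_2)/4 acting on the respective element DOFs.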

Similarly, \(\bar{\mathbf{F}}_{\text{ext}}\) is computed by nodal integration

in which \(\Gamma^{i}\) is the nodal contour area, and \(\beta_{\Gamma}^{i}\) is a factor that equals one if the node belongs to a Neumann boundary and zero otherwise.

Hence, the formulation for mixed smoothed FELA can be summarized as:

Although the above maximization problem does not guarantee a strict upper or lower bound, its solution is often much closer to the exact bearing capacity of a structure [32].

3 Topology optimization formulation

3.1 Black-and-white topology optimization in limit analysis

Based on reference [25], density-based topology optimization in limit analysis can be formulated as a volume minimization problem by introducing a new design variable, ‘density’ \(\rho \in \left[ {0,1} \right]\), in (16). The modified optimization problem, considering the density variable, can be expressed as:

where \(\hat{\boldsymbol{\rho}} = \left[\rho_{1}, \rho_{2}, \cdots, \rho_{NN}\right]^{\text{T}}\) is the vector of densities at all nodes, and \(\mathbf{L} = \left[V_{1}, V_{2}, \cdots, V_{NC}\right]^{\text{T}}\) is the vector of the volumes of all cells. \(NN\) refers to the total number of nodes, which is equal to the total number of cells \(NC\). In this study, the von Mises yield criterion is employed to enable consistent comparisons between the developed method and the traditional stress-constrained topology optimization approach (i.e., PolyStress [33]), because stress constraints in traditional stress-constrained topology optimization are usually expressed in terms of the von Mises equivalent stress [34]. However, the developed framework is flexible and can accommodate other plasticity models; for instance, the Mohr–Coulomb and Rankine models can be incorporated following [27, 35]. The yield criterion for the ith node/cell (i.e., \(f^{i}\) in (16)) is defined as:

where \(J_{2} = \frac{1}{6}\left( {\sigma_{x} - \sigma_{y} } \right)^{2} + \frac{1}{6}\left( {\sigma_{y} - \sigma_{z} } \right)^{2} + \frac{1}{6}\left( {\sigma_{z} - \sigma_{x} } \right)^{2} + \sigma_{xy}^{2} + \sigma_{yz}^{2} + \sigma_{zx}^{2}\) is the second invariant of the deviatoric stress, \(f_{y}\) is the yield stress, and \(\rho_{i}\) denotes the density at the ith node.

Remark 1

The modified criterion (18) implies that when \(\rho_{i} = 0\), the shear stresses vanish (i.e., \(\sigma_{xy} = \sigma_{yz} = \sigma_{zx} = 0\)), but a spherical stress state (i.e., \(\sigma_{x} = \sigma_{y} = \sigma_{z}\)) is still allowed, because such a state has zero deviatoric stress. To address this issue, an additional inequality condition \(f_{sp}^{i} = \left|\sigma_{x} + \sigma_{y} + \sigma_{z}\right| - k f_{y}\rho_{i} \le 0\) is enforced at each node, as suggested in [24], where \(k\) is a constant with a sufficiently large value. For \(\rho_{i} = 1\), this condition is effectively inactive and leaves the admissible stress states unaffected, whereas for \(\rho_{i} = 0\) it forces the mean stress to vanish, so that, together with (18), all stress components are zero.
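The loophole and its fix can be seen on a single nodal stress. The sketch below assumes, for illustration only, that (18) takes the density-scaled form √(3J₂) ≤ ρᵢ f_y (the exact scaling of (18) is not reproduced here); J₂ follows the expression given above, and k = 10³ matches the value used in Sect. 4.

```python
import numpy as np

def j2(s):
    """Second deviatoric invariant for s = (sx, sy, sz, syz, szx, sxy)."""
    sx, sy, sz, syz, szx, sxy = s
    return ((sx - sy) ** 2 + (sy - sz) ** 2 + (sz - sx) ** 2) / 6.0 + syz**2 + szx**2 + sxy**2

def admissible(s, rho, fy, k=1e3, with_spherical=True):
    """Check a nodal stress against an ASSUMED density-scaled von Mises criterion
    sqrt(3 J2) <= rho * fy and, optionally, the extra condition of Remark 1,
    |sx + sy + sz| <= k * fy * rho."""
    ok = np.sqrt(3.0 * j2(s)) <= rho * fy
    if with_spherical:
        ok = ok and abs(s[0] + s[1] + s[2]) <= k * fy * rho
    return bool(ok)
```

A hydrostatic stress at a void node (ρᵢ = 0) has J₂ = 0 and therefore passes the density-scaled von Mises check alone; it is rejected only once the spherical condition is added. At a solid node (ρᵢ = 1), the spherical condition with k = 10³ only becomes active for mean stresses of the order of hundreds of times f_y, so ordinary stress states are unaffected.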

Remark 2

Formulation (17) tends to produce grayscale solutions. To obtain a black-and-white layout, penalties should be applied to the objective function. Following [26, 36], the vector \(\mathbf{L}\) is replaced with \(\tilde{\mathbf{L}} = \left[c_{1}V_{1}, c_{2}V_{2}, \cdots, c_{NC}V_{NC}\right]^{\text{T}}\), where the penalty factor for the ith node is determined by:

The above exponential penalty function is based on \(\rho_{i}^{*}\), the density at the ith node obtained in the previous iteration, and a constant, \(p\). The performance of this exponential penalty function has been investigated in [26, 36].

Thus, the black-and-white topology optimization formulation in the context of mixed smoothed FELA can be summarized as:

The above optimization problem can be reformulated as a standard second-order cone programming (SOCP) problem, as described in [26, 37]. It can then be resolved straightforwardly using the advanced primal–dual interior point method available in MOSEK [38].
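Why (20) is a second-order cone program can be seen at the level of one node. Assuming, as in the previous sketch, that (18) has the form √(3J₂) ≤ ρᵢ f_y, the von Mises condition can be written as ‖Aσ‖₂ ≤ ρᵢ f_y for a fixed matrix A, i.e. the pair (ρᵢ f_y, Aσ) must lie in a quadratic cone whose "height" is linear in the design variable. The spherical condition of Remark 1 is a pair of linear inequalities, and the objective and the equilibrium equations are linear. The sketch below only builds A; it does not call MOSEK.

```python
import numpy as np

def vm_cone_matrix():
    """6 x 6 matrix A with ||A s||_2 = sqrt(3 J2) for s = (sx, sy, sz, syz, szx, sxy)."""
    r6 = np.sqrt(6.0)
    A = np.array([[ 1.0, -1.0,  0.0, 0.0, 0.0, 0.0],
                  [ 0.0,  1.0, -1.0, 0.0, 0.0, 0.0],
                  [-1.0,  0.0,  1.0, 0.0, 0.0, 0.0],
                  [ 0.0,  0.0,  0.0,  r6, 0.0, 0.0],
                  [ 0.0,  0.0,  0.0, 0.0,  r6, 0.0],
                  [ 0.0,  0.0,  0.0, 0.0, 0.0,  r6]])
    return A / np.sqrt(2.0)   # (1/2) * sum of squared normal-stress differences + 3 * sum of shear^2

def cone_residual(s, rho, fy):
    """rho * fy - ||A s||: non-negative exactly when (rho * fy, A s) lies in the quadratic cone."""
    return rho * fy - np.linalg.norm(vm_cone_matrix() @ s)
```

In a conic modelling tool, each node then contributes one 7-dimensional quadratic cone with (ρᵢ f_y, Aσⁱ) as its members, which is the structure that primal–dual interior point solvers handle natively.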

3.2 Density filtering and mesh refinement

Checkerboard patterns are a common problem in density-based topology optimization. To address this issue, the proposed method employs density filtering, as outlined in [39]. The filtered density at the ith node is calculated using the following equation:

where \(v_{j}\) is the volume of the jth cell; \(\rho_{j}\) is the density at the jth node; and \(N_{n}\) denotes the total number of nodes located within the filtering region of the ith node. In 3D, the filtering region is a sphere of radius \(R\). The weighting function \(w\left(x_{j}\right)\) is a Gaussian (bell-shaped) distribution function, as suggested in [39]:

in which \(x_{i}\) represents the coordinates of the ith node whose density is filtered, and \(x_{j}\) represents the coordinates of the jth node within the filtering region. It has been shown in [26] that this weighting function performs well for plasticity-based topology optimization in 2D, so it is adopted here for 3D. The parameter \(\sigma_{d}\) in Eq. (22) is set to \(R/2\), where \(R\) is 1.5 times the mesh size, as suggested in [26].
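A dense NumPy sketch of the filter, assuming the normalized weighted-average form ρ̃ᵢ = Σⱼ wⱼ vⱼ ρⱼ / Σⱼ wⱼ vⱼ with wⱼ = exp(−‖xⱼ − xᵢ‖² / (2σ_d²)), R = 1.5 h and σ_d = R/2 as stated above (the exact normalization of Eqs. (21)–(22) is an assumption here):

```python
import numpy as np

def gaussian_density_filter(x, rho, v, h):
    """Filter nodal densities with a Gaussian weight restricted to a sphere of radius R = 1.5 h.
    x: (NN, 3) nodal coordinates, rho: (NN,) densities, v: (NN,) cell volumes, h: mesh size.
    Assumed form: rho_f[i] = sum_j w_ij v_j rho_j / sum_j w_ij v_j."""
    R = 1.5 * h
    sigma_d = R / 2.0
    d2 = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)   # squared distances, dense (NN, NN)
    w = np.exp(-d2 / (2.0 * sigma_d**2)) * (d2 <= R**2)        # Gaussian weight inside the sphere only
    wv = w * v[None, :]
    return (wv @ rho) / wv.sum(axis=1)                          # node i always weights itself (w_ii = 1)
```

The all-pairs distance matrix is only practical for small meshes; for the mesh sizes in Sect. 4, a neighbour search such as a k-d tree would be used to find the nodes inside each filtering sphere. A uniform field is left unchanged, while an isolated solid node is smeared onto its immediate neighbours.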

3.3 Algorithm steps

To summarize, the basic steps of the topology optimization procedure are as follows:

(i) Assume an initial density \(\rho = 1\) for all nodes in the domain;
(ii) Calculate the exponential penalty factor \(c_{i}\) for each node using (19);
(iii) Solve the optimization problem (20) using MOSEK to obtain the density field;
(iv) Perform density filtering for each node using (21) and use the filtered value as the density at each node;
(v) Check the convergence criterion: stop if the change in the objective function, \(Obj = \tilde{\mathbf{L}}^{\text{T}}\hat{\boldsymbol{\rho}}\), between two iterations satisfies \(\left|\frac{Obj_{n+1} - Obj_{n}}{Obj_{n+1}}\right| \le \text{tolerance value}\); otherwise, go back to step (ii) and repeat the process.
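The steps above can be outlined as a short driver loop. This is a schematic, not the authors' MATLAB implementation: `penalty`, `solve` and `density_filter` are hypothetical callables standing in for Eq. (19), the MOSEK solution of (20) and the filter (21). The text does not state whether the objective is evaluated with the raw or the filtered densities; the sketch uses the filtered ones.

```python
import numpy as np

def relative_change(obj_new, obj_old):
    """|Obj_{n+1} - Obj_n| / |Obj_{n+1}|, the convergence measure of step (v)."""
    return abs(obj_new - obj_old) / abs(obj_new)

def optimize(n_nodes, penalty, solve, density_filter, L, tol=5e-3, max_iter=50):
    """Outer loop of steps (i)-(v); tol = 0.5% matches the bridge example of Sect. 4.3.
    penalty(rho) -> c, solve(c) -> rho and density_filter(rho) -> rho are hypothetical hooks."""
    rho = np.ones(n_nodes)                  # (i) initial density of one at every node
    obj_old = None
    for it in range(1, max_iter + 1):
        c = penalty(rho)                    # (ii) penalty factors from the previous densities
        rho = solve(c)                      # (iii) SOCP solve for the nodal density field
        rho = density_filter(rho)           # (iv) filtered values replace the raw densities
        obj = float((c * L) @ rho)          # Obj = L_tilde^T rho_hat, with L_tilde_i = c_i V_i
        if obj_old is not None and relative_change(obj, obj_old) <= tol:   # (v)
            return rho, it
        obj_old = obj
    return rho, max_iter
```

Passing the solver and filter as functions keeps the loop independent of how (20) is assembled, which also makes it easy to swap in a warm-started solver without touching the iteration logic.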

It is acknowledged that 3D topology optimization often requires significant computational resources. Therefore, enhancing the computational efficiency of the proposed method is of paramount importance. While developing a parallelized computing scheme for resolving SOCP problems is a common approach, we would also like to emphasize the potential of employing warm start strategies to improve computational efficiency. This is particularly relevant in our solution scheme, which involves an iterative procedure where the SOCP problem is repeatedly solved in step (iii) to obtain a converged density field. Research has shown that warm start strategies can substantially enhance the efficiency of the interior point method when solving SOCP problems. For more detailed information on this topic, interested readers are encouraged to refer to [40, 41].

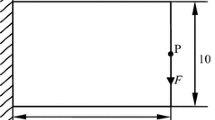

Note that conventional stress-constrained topology optimization performs elastic finite element analysis with stress constraints. All materials are therefore assumed to behave elastically, and the structure is considered to fail as soon as any local yielding occurs. The maximum load that the structure can sustain under this assumption is denoted as \({P}_{e}\) in Fig. 4. In contrast, the plasticity-based topology optimization method developed in this study treats the material as rigid-plastic. By incorporating plastic limit theory into the optimization problem (20), the external force represents the load-bearing capacity of the layout, denoted as \({P}_{Y}\) in Fig. 4, as in previous plasticity-based topology optimization studies [24, 26, 27]. Consequently, the developed method generally provides a more material-efficient design than the conventional stress-constrained method, as demonstrated through the numerical examples in the following section.

4 Numerical examples

In this section, several examples are presented to demonstrate the correctness and robustness of the proposed method. All simulations were conducted on a DELL PC with a 2.20 GHz CPU and 32.0 GB of memory, running Microsoft Windows Server (Version 10.0). The final SOCP problem was solved using MOSEK [38], an advanced optimization tool designed for solving large-scale optimization problems. The method was implemented in MATLAB (R2021b).

4.1 A short plate

The first example involves a thin short plate subjected to a shear load, as depicted in Fig. 5. This classical problem has been widely used to verify various topology optimization methods [42,43,44]. In this study, the plate has dimensions of 1 m × 1 m × 0.01 m. The left surface of the plate is clamped, and a shear force of \(F=90 \mathrm{kN}\) is applied at the lower right corner. The material has a yield stress of \({f}_{y}=220 \mathrm{MPa}\). The mesh size is set to 0.01 m, resulting in a single layer of elements through the thickness and a total of 60,000 elements. Throughout all simulations, the penalization factor is set to \(\mathrm{p}=5\), which is considered sufficiently large to achieve a black-and-white layout, as suggested in [26]. The constant \(k\) for the spherical stress constraint is set to \(1\times {10}^{3}\), unless otherwise specified.

Note that the plastic model adopted in this study is the rigid-perfectly plastic model with the von Mises yield criterion, which implies incompressible plastic flow. Traditional constant stress elements, such as the three-node triangular element in 2D and the four-node tetrahedral element in 3D, perform poorly for such problems due to volumetric locking. This issue has been extensively discussed in the literature [37, 45, 46], and its adverse impact on topology optimization has also been explored in [42]. To overcome volumetric locking, the mixed smoothed finite element formulation developed in [37] is employed here to construct the 3D limit analysis based topology optimization. The effectiveness of this formulation in tackling incompressibility has been demonstrated in [37] and is not repeated in this study. In the following, the results obtained from the developed 3D plasticity-based method are compared with those from the traditional stress-constrained topology optimization method (i.e., PolyStress) [33].

The final layouts obtained from both simulations are shown in Fig. 6a and b. Although they appear similar, the amount of material required differs. Figure 6c illustrates the convergence history of the two methods in terms of the volume ratio. The developed method converges to a volume ratio of 20%, which is lower than that obtained from PolyStress (28.2%). This discrepancy arises from the adoption of plasticity theory in the developed formulation: our approach allows material yielding as long as the global structure remains stable, which means that it targets the load-bearing capacity of the overall structure. In contrast, the traditional stress-constrained method solves the elastic governing equations of the structure and prohibits yielding at all material points, implying that the structure designed using PolyStress behaves elastically under the applied load. This point can be further verified by examining the distribution of von Mises stress across the layouts from the two methods. Figure 7a shows that a significant portion of the layout obtained from the developed method exhibits a von Mises stress equal to the yield stress (i.e., 220 MPa), indicating that the structure has reached its maximum sustainable state. In contrast, fewer parts of the layout obtained from PolyStress reach the yield stress. Additionally, the proposed method converges faster in terms of volume ratio than the conventional stress-constrained method, as shown in Fig. 6c; a converged solution is obtained within 5 iterations.

Fig. 6 Simulation results: (a) layout from this study; (b) layout from PolyStress [33]; and (c) convergence history in terms of volume ratio

4.2 A clamped beam

As the second example, we consider a double-clamped beam as shown in Fig. 8. The length and height of the designed domain are 20 m and 5 m, respectively, and the thickness of the beam varies from t = 0.01 to 10 m. The yield stress of the material is set to 300 kPa. A uniform pressure of \(\mathrm{p}=15.3\) kPa is applied to the top central zone with a length of 2.5 m. Due to symmetry, only half of the domain is considered in the modelling process.

4.2.1 Thin beam & mesh refinement

We begin with a thin beam with a thickness of \(t=0.01\) m. The mesh size used in the x–y plane is 0.15 m, and only one element layer is assigned in the thickness direction (i.e., the z axis), resulting in 20,604 nodes and 60,000 elements. Figure 9 illustrates the evolution of the optimal design using both PolyStress and the plasticity-based method. Both approaches yield a grey design at the initial iteration. A satisfactory black-and-white layout is achieved within 8 iterations using the developed method (Fig. 9). PolyStress also produces a black-and-white layout similar to that from our approach; nevertheless, its layout still varies slightly after 8 iterations. This fluctuation is evident in the curve of volume ratio against iteration shown in Fig. 10. In contrast, the volume ratio from the developed method changes little beyond 6 iterations. The volume ratio from our method is 33% (Fig. 10), which is lower than the 45% obtained from the traditional stress-constrained approach. Notably, the curves of volume ratio against iteration from this study and from the 2D plasticity-based method using quadratic elements [26] are in good agreement, which further validates the proposed method.

To further compare the results from the developed method and the conventional stress-constrained topology optimization method, the distribution of von Mises (VM) stress on the layouts from the two methods is shown in Fig. 11, and the percentage distribution is shown in Fig. 12. These results are obtained using uniform meshes of the same size (0.15 m) for both simulations. As expected, most areas of the layout from the developed plasticity-based method exhibit a VM stress equal to the yield stress, whereas many parts of the layout from PolyStress have a VM stress below the yield stress. The quantitative comparison in Fig. 12 indicates that the percentages of VM stress in the ranges of 0–30 kPa and 270–300 kPa from the developed method are larger than those from PolyStress. The very low percentage of VM stress in the range of 30–270 kPa also demonstrates the efficiency of the adopted exponential penalty function in Eq. (19).

Notably, 3D simulations are generally computationally demanding. To reduce the computational burden, a simple and efficient mesh refinement scheme is employed. The basic idea is to check the density of each node and the lengths of all edges associated with that node. If both the density and the edge length exceed given threshold values, an additional node is inserted at the midpoint of that edge to refine the mesh.
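The marking rule can be sketched in a few lines. This reflects one reading of the rule (an edge is bisected when either end node is denser than the density threshold and the edge is longer than the edge threshold); the function names are hypothetical, the defaults are the thresholds of the thin-beam example, and the subsequent re-tetrahedralization with the new nodes is not shown.

```python
import numpy as np

def edges_to_split(coords, tets, rho, rho_th=0.9, len_th=0.15):
    """Unique edges (i, j), i < j, to bisect: an end node has density > rho_th
    and the edge is longer than len_th."""
    local = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]           # the six edges of a tetrahedron
    edges = np.sort(np.concatenate([tets[:, [a, b]] for a, b in local]), axis=1)
    edges = np.unique(edges, axis=0)                                   # each shared edge once
    length = np.linalg.norm(coords[edges[:, 0]] - coords[edges[:, 1]], axis=1)
    dense = (rho[edges[:, 0]] > rho_th) | (rho[edges[:, 1]] > rho_th)
    return edges[dense & (length > len_th)]

def midpoints(coords, edges):
    """Coordinates of the new nodes inserted at the middle of the marked edges."""
    return 0.5 * (coords[edges[:, 0]] + coords[edges[:, 1]])
```

Because the edge list is deduplicated before marking, an edge shared by many tetrahedra receives only one new node, which keeps the refined mesh conforming once it is re-tetrahedralized.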

To illustrate the efficiency of this approach, we re-analyze this example with mesh refinement. The domain is initially discretized with a larger mesh size of \({h}_{e}=0.2\) m, resulting in 4,556 nodes and 13,068 elements. Mesh refinement is carried out using a density threshold of 0.9 and an edge threshold of 0.15 m, which is identical to the mesh size used in the simulation without mesh refinement. Figure 13 shows the evolution of the layout in the two simulations. A satisfactory black-and-white structure is obtained within 5 iterations in the simulation with mesh refinement, whereas more than 7 iterations are required without mesh refinement. The convergence history in terms of volume ratio for the two cases is illustrated in Fig. 14. The volume ratio varies little beyond 7 iterations in the simulation with mesh refinement, whereas a slight fluctuation, with a magnitude of less than 2%, remains in the case without mesh refinement.

The computational cost for the two cases is presented in Table 1. The simulation with mesh refinement requires only half of the elements and nodes of the simulation without mesh refinement to achieve a similar solution. The time required for 9 iterations with mesh refinement is 150.34 s, approximately 36% of that required without mesh refinement. Moreover, 9 iterations are not actually needed in the simulation with mesh refinement, since a converged solution is obtained at 7 iterations (Fig. 14); its computational cost is therefore even lower than 150.34 s.

4.2.2 A thick beam

Using mesh refinement, we further investigate a beam with a significant thickness of \(t=5\) m. The material parameters and the imposed pressure are the same as those in Sect. 4.2.1. The domain is initially discretized using a total of 41,106 nodes and 225,000 elements.

Figure 15 illustrates the evolution of the layout at different iteration steps. A converged black-and-white layout is obtained at the 6th iteration step, which is consistent with the curve of volume ratio against iteration shown in Fig. 16. The volume ratio in this case is 32.5%, slightly lower than that for the thin beam case (33.1%). This is because the topology can also be optimized in the thickness direction, resulting in the optimal spatial structure depicted in Fig. 17a. Figure 17b displays the normalized VM stress, which is less than 1 over most of the surface of the designed structure, because the surface lies within the thin blurred (intermediate-density) transition zone. This is consistent with the distribution of the normalized VM stress on the three slices at \(z=\) 2.5 m, 4.3 m, and 5.0 m (Fig. 17c–e): under the given pressure, most inner parts of the structure have a normalized \({\sigma }_{VM}\) equal to 1, while a thin layer with lower stress exists on the surface of the optimal structure. Notably, the layouts on the three slices differ from each other, indicating that the optimized structure varies along the thickness direction. The numbers of nodes and elements at the 6th iteration are 211,546 and 1,291,368, respectively, and the computational cost for iterations 1 to 6 is 10.9 h.

It is worth noting that extracting a manufacturable structure from a 3D density field obtained by topology optimization is nontrivial. We therefore examined in more detail how the extracted structure depends on the density range. The topologies extracted for four density ranges, namely \(\rho >0.1\), \(\rho >0.3\), \(\rho >0.5\), and \(\rho >0.7\), are illustrated in Fig. 18. The four layouts are nearly identical, which is consistent with the density distribution shown in Fig. 19: most of the design domain has densities close to either 0 or 1. Consequently, all layouts in this study are extracted with \(\rho >0.5\) unless otherwise specified.
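The threshold study can be illustrated with a short sketch: elements whose density exceeds a chosen threshold are kept, and the volume fraction of the extracted layout is compared across thresholds. The function name `extract_layout` and the density values below are hypothetical; the point is that for a nearly black-and-white field the extracted volume barely changes with the threshold.

```python
import numpy as np


def extract_layout(density, volumes, threshold=0.5):
    """Keep elements whose density exceeds `threshold`.

    Returns a boolean mask of retained elements and the volume
    fraction of the extracted (solid) layout.
    """
    rho = np.asarray(density, dtype=float)
    vol = np.asarray(volumes, dtype=float)
    mask = rho > threshold
    return mask, vol[mask].sum() / vol.sum()


# Hypothetical, nearly black-and-white density field (made-up values).
rho = np.array([0.0, 0.02, 0.98, 1.0, 1.0, 0.01, 0.6, 0.99])
vol = np.ones_like(rho)  # equal element volumes for simplicity
for t in (0.1, 0.3, 0.5, 0.7):
    _, frac = extract_layout(rho, vol, t)
    print(f"rho > {t}: volume fraction = {frac:.3f}")
```

Only the single intermediate-density element (0.6) changes status between thresholds, so the extracted layout is insensitive to the threshold when few intermediate densities remain.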

4.3 Bridge design

The final example in this study is the design of a bridge. Figure 20 depicts the design domain, with dimensions of \(18\,\mathrm{m}\times 6\,\mathrm{m}\times 4\,\mathrm{m}\). The bridge is clamped at the two bottom supports, indicated by the shaded zones, each measuring \(2\,\mathrm{m}\times 4\,\mathrm{m}\). A uniformly distributed traffic load F = 875 kPa is applied to the blue deck plate. The plate has a thickness of 0.1 m and is positioned 4.5 m from the top and 1.4 m from the bottom of the design domain. The yield stress of the material is 10 MPa, and the density of the blue plate is fixed at 1 throughout the simulation. The design domain is initially discretized with a mesh size of \({h}_{e}=0.2\) m, resulting in 59,241 nodes and 324,000 elements. Mesh refinement is carried out using a density threshold of 0.9 and an edge threshold of 0.08 m. The convergence tolerance for this case is set to 0.5%.
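The initial node and element counts quoted above are consistent with a structured discretization. The check below assumes that the box is first divided into cubes of edge \(h_e\) and that each cube is split into six tetrahedra; this subdivision scheme and the function name are our assumptions, not stated in this section, but together they reproduce the reported 59,241 nodes and 324,000 elements.

```python
def box_tet_mesh_counts(lx, ly, lz, h, tets_per_hex=6):
    """Node and element counts for a box meshed with a uniform grid of
    cubes of edge h, each cube split into `tets_per_hex` tetrahedra."""
    # round() guards against floating-point error in L / h (e.g. 18 / 0.2).
    nx, ny, nz = (round(length / h) for length in (lx, ly, lz))
    nodes = (nx + 1) * (ny + 1) * (nz + 1)
    elements = tets_per_hex * nx * ny * nz
    return nodes, elements


# Bridge domain 18 m x 6 m x 4 m with h_e = 0.2 m:
# 90 x 30 x 20 cubes -> 91 * 31 * 21 nodes and 6 * 54,000 tetrahedra.
print(box_tet_mesh_counts(18.0, 6.0, 4.0, 0.2))
```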

Table 2 presents the simulation history in terms of the volume ratio and error. A converged result is achieved after 5 iterations: the error, defined as \(\left|\frac{\mathrm{Obj}_{n+1}-\mathrm{Obj}_{n}}{\mathrm{Obj}_{n+1}}\right|\), is 0.24% at the 5th iteration, below the tolerance of 0.5%. The converged layout has a volume ratio of 12.3%.
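The stopping rule can be expressed in a few lines. In this sketch, `history` holds the objective values of successive iterations (for example, the volume ratios listed in Table 2), and iteration stops once the relative change falls below the 0.5% tolerance used in this example. The function names and the sample history are hypothetical.

```python
def relative_change(obj_prev, obj_next):
    """Error measure |(Obj_{n+1} - Obj_n) / Obj_{n+1}|."""
    return abs((obj_next - obj_prev) / obj_next)


def has_converged(history, tol=0.005):
    """True once the last two objective values differ by less than tol."""
    if len(history) < 2:
        return False
    return relative_change(history[-2], history[-1]) < tol


# Hypothetical volume-ratio history (not the values in Table 2).
history = [0.40, 0.20, 0.15, 0.130, 0.1295]
for n in range(1, len(history) + 1):
    print(n, has_converged(history[:n]))
```

Normalizing by \(\mathrm{Obj}_{n+1}\) makes the tolerance scale-free, so the same 0.5% criterion applies regardless of the absolute volume of the design.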

Figure 21 shows the design history of the bridge. As the iterations proceed, the layout is progressively refined into a trail bridge structure. The optimized structure features two perforated walls in the upper part of the domain, with the holes separated from each other by several cable-like members. The lower part of the bridge consists of two hollow piers connected to the clamped support zones. The computational cost for this design is 6.55 h, and the converged solution has a total of 154,615 nodes and 909,331 elements.

Additionally, we investigate the effect of the deck height on the bridge design. The deck is placed at heights of 1 m, 2 m, 3 m, 4 m, 5 m, and 6 m above the bottom of the design domain. Figure 22 shows that the volume ratio decreases as the deck height increases. For lower deck heights, the optimal design comprises piers together with an arch-like structure above the deck to support the traffic load, as illustrated in Fig. 23. For higher deck heights, by contrast, the structure features piers with a more complex topology and has no structure above the deck.

5 Conclusions

This paper presents a method for 3D black-and-white topology optimization of continuum structures considering plasticity. The method combines density-based topology optimization with mixed limit analysis, yielding a sequence of continuous convex topology optimization problems. These problems are solved efficiently by the primal–dual interior point method available in modern optimization engines. To improve computational efficiency, mesh refinement is performed with linear tetrahedral elements, and the volumetric locking commonly associated with these elements is effectively addressed by the smoothed finite element technique.

Unlike conventional stress-constrained topology optimization approaches, the proposed plasticity-based method eliminates the need for a separate finite element structural analysis procedure. It yields more cost-effective designs by allowing material to yield as long as the global structure remains stable, whereas traditional stress-constrained methods restrict structures to purely elastic behavior. The correctness and robustness of the 3D plasticity-based approach are demonstrated through a series of classical numerical examples, and qualitative and quantitative comparisons with traditional stress-constrained approaches validate the effectiveness of the proposed method.

Data availability statement

Data on the optimization algorithm are available from the corresponding author upon reasonable request. All details necessary to reproduce the results are given in the paper.

References

Bendsøe MP (1989) Optimal shape design as a material distribution problem. Struct Optim 1(4):193–202

Zhou M, Rozvany G (1991) The COC algorithm, Part II: Topological, geometrical and generalized shape optimization. Comput Methods Appl Mech Eng 89(1–3):309–336

Sigmund O, Clausen PM (2007) Topology optimization using a mixed formulation: an alternative way to solve pressure load problems. Comput Methods Appl Mech Eng 196(13–16):1874–1889

Allaire G, Jouve F, Toader A-M (2004) Structural optimization using sensitivity analysis and a level-set method. J Comput Phys 194(1):363–393

Wang MY, Wang X, Guo D (2003) A level set method for structural topology optimization. Comput Methods Appl Mech Eng 192(1–2):227–246

Amstutz S, Andrä H (2006) A new algorithm for topology optimization using a level-set method. J Comput Phys 216(2):573–588

Xie YM, Steven GP (1993) A simple evolutionary procedure for structural optimization. Comput Struct 49(5):885–896

Huang X, Xie Y (2007) Convergent and mesh-independent solutions for the bi-directional evolutionary structural optimization method. Finite Elem Anal Des 43(14):1039–1049

Tanskanen P (2002) The evolutionary structural optimization method: theoretical aspects. Comput Methods Appl Mech Eng 191(47–48):5485–5498

Takezawa A, Nishiwaki S, Kitamura M (2010) Shape and topology optimization based on the phase field method and sensitivity analysis. J Comput Phys 229(7):2697–2718

Dedè L, Borden MJ, Hughes TJ (2012) Isogeometric analysis for topology optimization with a phase field model. Arch Comput Methods Eng 19(3):427–465

Auricchio F et al (2020) A phase-field-based graded-material topology optimization with stress constraint. Math Models Methods Appl Sci 30(08):1461–1483

Zhang W et al (2016) A new topology optimization approach based on Moving Morphable Components (MMC) and the ersatz material model. Struct Multidiscip Optim 53(6):1243–1260

Guo X, Zhang W, Zhong W (2014) Doing topology optimization explicitly and geometrically—a new moving morphable components based framework. J Appl Mech 81(8)

Zhang W et al (2017) A new three-dimensional topology optimization method based on moving morphable components (MMCs). Comput Mech 59(4):647–665

Sigmund O, Maute K (2013) Topology optimization approaches. Struct Multidiscip Optim 48(6):1031–1055

Bendsøe MP, Kikuchi N (1988) Generating optimal topologies in structural design using a homogenization method. Comput Methods Appl Mech Eng 71(2):197–224

Bendsøe MP, Sigmund O (1999) Material interpolation schemes in topology optimization. Arch Appl Mech 69(9):635–654

Holmberg E, Torstenfelt B, Klarbring A (2013) Stress constrained topology optimization. Struct Multidiscip Optim 48(1):33–47

Duysinx P, Bendsøe MP (1998) Topology optimization of continuum structures with local stress constraints. Int J Numer Meth Eng 43(8):1453–1478

Werme M (2008) Using the sequential linear integer programming method as a post-processor for stress-constrained topology optimization problems. Int J Numer Meth Eng 76(10):1544–1567

Pereira JT, Fancello EA, Barcellos C (2004) Topology optimization of continuum structures with material failure constraints. Struct Multidiscip Optim 26(1):50–66

Bruggi M (2008) On an alternative approach to stress constraints relaxation in topology optimization. Struct Multidiscip Optim 36(2):125–141

Kammoun Z, Smaoui H (2015) A direct method formulation for topology plastic design of continua. In: Fuschi P, Pisano AA, Weichert D (eds) Direct methods for limit and shakedown analysis of structures: advanced computational algorithms and material modelling. Springer International Publishing, Cham, pp 47–63

Herfelt MA, Poulsen PN, Hoang LC (2019) Strength-based topology optimisation of plastic isotropic von Mises materials. Struct Multidiscip Optim 59(3):893–906

Zhang X, Li X, Zhang Y (2022) A framework for plasticity-based topology optimization of continuum structure. Int J Numer Meth Eng (in press). https://doi.org/10.1002/nme.7172

Mourad L et al (2021) Topology optimization of load-bearing capacity. Struct Multidiscip Optim 64(3):1367–1383

Krabbenhøft K, Lyamin AV, Sloan SW (2007) Formulation and solution of some plasticity problems as conic programs. Int J Solids Struct 44(5):1533–1549

Zhang X et al (2019) A unified Lagrangian formulation for solid and fluid dynamics and its possibility for modelling submarine landslides and their consequences. Comput Methods Appl Mech Eng 343:314–338

Pastor F, Loute E (2010) Limit analysis decomposition and finite element mixed method. J Comput Appl Math 234(7):2213–2221

Pastor F et al (2009) Mixed method and convex optimization for limit analysis of homogeneous Gurson materials: a kinematical approach. Eur J Mech A Solids 28(1):25–35

Nguyen HC (2023) A mixed formulation of limit analysis for seismic slope stability. Géotech Lett 13(1):54–64

Giraldo-Londoño O, Paulino GH (2021) PolyStress: a Matlab implementation for local stress-constrained topology optimization using the augmented Lagrangian method. Struct Multidiscip Optim 63(4):2065–2097

Bendsøe MP, Sigmund O (2004) Topology optimization: theory, methods, and applications. Springer Science & Business Media

Kammoun Z, Fourati M, Smaoui H (2019) Direct limit analysis based topology optimization of foundations. Soils Found 59(4):1063–1072

Smaoui H, Kammoun Z (2022) Convergence of the direct limit analysis design method for discrete topology optimization. Optim Eng 23(1):1–24

Meng J et al (2020) A smoothed finite element method using second-order cone programming. Comput Geotech 123:103547

MOSEK ApS (2019) MOSEK optimization toolbox for MATLAB. User's Guide and Reference Manual, Version 4

Sigmund O (2007) Morphology-based black and white filters for topology optimization. Struct Multidiscip Optim 33(4):401–424

Yonekura K, Kanno Y (2012) Second-order cone programming with warm start for elastoplastic analysis with von Mises yield criterion. Optim Eng 13:181–218

Hayashi S, Okuno T, Ito Y (2016) Simplex-type algorithm for second-order cone programmes via semi-infinite programming reformulation. Optim Methods Softw 31(6):1272–1297

Li E et al (2016) Smoothed finite element method for topology optimization involving incompressible materials. Eng Optim 48(12):2064–2089

Wallin M, Ristinmaa M (2014) Boundary effects in a phase-field approach to topology optimization. Comput Methods Appl Mech Eng 278:145–159

Carrasco M, Ivorra B, Ramos AM (2015) Stochastic topology design optimization for continuous elastic materials. Comput Methods Appl Mech Eng 289:131–154

Liu G, Dai K, Nguyen TT (2007) A smoothed finite element method for mechanics problems. Comput Mech 39(6):859–877

Nguyen H, Vo-Minh T (2022) Calculation of seismic bearing capacity of shallow strip foundations using the cell-based smoothed finite element method. Acta Geotech, pp 1–24

Acknowledgements

We gratefully acknowledge the financial support provided by the New Investigator Award grant from the UK Engineering and Physical Sciences Research Council (EP/V012169/1), the Royal Society International Exchanges grant (IEC/NSFC/191261), and the joint University of Liverpool/China Scholarship Council award (CSC number: 202108420035).


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, X., Zhang, X. & Zhang, Y. Three-dimensional plasticity-based topology optimization with smoothed finite element analysis. Comput Mech 73, 533–548 (2024). https://doi.org/10.1007/s00466-023-02378-9

DOI: https://doi.org/10.1007/s00466-023-02378-9