Abstract

Numerical simulation based on FEM/IGA methods is the standard approach for the approximated solution of applied physical problems. In this context, the differentiation of the numerical counterpart of mechanical fields is required. Moreover, the differentiated function can have a complicated shape, depend on many variables and change within the process. Many state-of-the-art numerical differentiation methods are not suitable for this kind of applications and the common way is to exploit analytical differentiation. Thus, an on-the-fly differentiation method is desirable particularly when the process is complicated and when new mechanical models are under development. In this paper, a new method is proposed for a precise computation of the gradient and Hessian. This method has been applied to nonlinear analysis of Kirchhoff–Love shells, which can be considered as an appropriate test bench to prove the reliability in relevant physical context. Numerical experiments show the advantages of the proposed techniques with respect to standard approaches.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Finite Element Analysis is the standard approach for solving a large number of problems based on partial differential equations. It is typical for applied mathematics and physics due to its simplicity in software implementation on modern computers, which become nowadays faster and faster. Recently Isogeometric analysis (IGA) (see, e.g., [9, 42]) has been proposed as an alternative discretization tool allowing one, in connection with CAD, to exactly describe the geometry. IGA is based on interpolation spline functions (like, e.g., B-splines or NURBS), which make it possible to fulfil the continuity requirement easily, particularly suitable for problems which require haigh order derivatives.

Isogemetric analysis has attracted the attention of many researchers in the context of different applications based on various mechanical models (see, e.g., [27, 28, 30, 33, 36, 37]). Moreover, interesting industrial applications have been presented recently for the optimization of post-buckling behaviour of aeronautical specimens made of advanced composite materials in [19, 34]. In the following we refer to the application of these numerical techniques to structural mechanical problems. Typically, for the numerical solution of mechanical problems, it is required to evaluate the variation of the potential energy which leads to variations of strain measure addressed in the paper. The derivation of the stress with respect to strain is usually required for the linearization in terms of the Newton–Raphson method. Depending on the chosen mechanical model for the analysis, this derivation procedure may be complex and thus cumbersome to compute and implement, sometimes due to their analytical formulae the derivation of the stress with respect to strain.

In particular, the Kirchhoff–Love model is potentially able to furnish the most efficient analysis of shell structures, due to the reduced number of degrees of freedom (DOF) paying for this advantage a more complicated strain measure, compared to solid-shell models (see, e.g., [28]), and a consequent effort for the analytical derivation and the computation of the discrete quantities, even though, in this respect, more complex applications in multi-physics analysis can be found. The aim of this work is to propose a numerical differentiation tool that is less error prone, for the construction of the numerical problem. Moreover, engineering models of appreciable complexity are typically constructed using multiple surface patches. An efficient proposal for the patch-coupling has been presented in [29] that makes efficient the application to large scale problems in which extra non trivial terms are introduced and make the analytical derivation process much more difficult. For these reasons, the Kirchhoff–Love model is a good candidate to show the effectiveness of the derivation procedure proposed in the present paper.

As it has been already mentioned earlier, the problem of the efficient computation of the first- and second-order derivatives with respect to the strain measure arises. There are different ways to calculate the derivatives with a high accuracy: Automatic differentiation (see, e.g., [4, 18, 55]), symbolic differentiation (see, e.g., MATLAB symbolic toolbox https://mathworks.com/products/symbolic.html or open source SymPy library for Python https://www.sympy.org/); differentiation using frameworks for working with numerical infinitesimals (see, e.g., [47, 52]); differentiation using complex or dual numbers (see, e.g., [26, 54, 56]), etc. Moreover, there has been proposed a multi-language and multi-environment software tool (called AceGen, see [23, 24]) for the automatic code generation using Wolfram Mathematica system as the core. In particular, this tool allows to generate the formulae for the required derivatives by combining Mathematica’s symbolic and algebraic procedures with automatic differentiation and code generation, simultaneous optimization of expressions and theorem-proving via stochastic evaluation of expressions. For these reasons, AceGen can also be successfully used in FEM based analysis.

However, some properties of the function to be differentiated create several difficulties and limitations for these methods. In particular, since the strain measure can be multidimensional with, eventually, high number of variables, then it is required to calculate partial and mixed second-order derivatives for each evaluation point. Second, the objective function depends on additional data and parameters (e.g., current displacements and tensions), which are changed or obtained during the simulation. This leads to the fact that different evaluations of the gradient and Hessian are executed on different functions, which makes it difficult to apply differentiation techniques requiring a pre-processing of the software (e.g., algorithmic (or automatic) differentiation, which requires the construction of the code for the derivatives, or symbolic differentiation, which constructs the formulae for the respective derivatives). For this issue, only differentiation techniques, which do not require an explicit generation of the code or formulae of the derivatives, like the ones using numerical infinitesimals or complex step methods, can be applied for this purpose. Moreover, AceGen described above has also several limitations in this context. First of all, even if it is multi-language and multi-environment, it requires the Mathematica system, which is not currently available for free, while the approach proposed in the present paper is domain-independent, general-purpose and can be easily implemented in any language and environment using available frameworks for working numerically with infinite and infinitesimal numbers. Second, in order to apply this tool, the finite element formulation must be developed using the same programming environment and language (however, not necessarily Mathematica) by developing all the manipulations required by the chosen model starting from the expressions of shape functions, that are usually simple polynomial formulae in case of standard FEMs. It is a bit different in case of NURBS based analysis, for which the shape functions and their derivatives are evaluated at a point by using procedures [32], while their analytical expression is, often, not explicitly given. However, it should be noted that AceGen can also be used in IGA analysis (see, e.g., the AceFEM package http://symech.fgg.uni-lj.si/ and https://www.wolfram.com/products/applications/acefem/) representing so an alternative available software solution in this context.

There exist several differentiation techniques using numerical infinitesimals in the literature. In [47], there has been proposed the method for differentiation of a univariate function. However, it has been constructed for univariate derivatives only and has not been implemented for the gradient or Hessian. Multidimensional differentiation techniques have been also proposed in [20, 21], but they are applicable only for Lie derivatives embedded in initial value problems. In [52], there has been proposed the method for higher-order mixed differentiation. However, the method from [52] requires a numerical solution to ill-conditioned linear systems (or inversion of the respective matrix), the dimension of which grows significantly, if the objective function has many variables and dimensions. In particular, it requires to solve the \(l\times l\) ill-conditioned linear system for finding the Hessian, where \(l = \frac{m(m+1)}{2}\) and m is the number of variables, leading so to both higher computational costs (e.g., inverting and multiplying matrices or solving linear systems of a high dimension) and lower accuracy (since the respective linear system can become ill-conditioned). To overcome the issues described above, a new method for computation of both the gradient and Hessian is proposed in this paper. This method is suitable for the frameworks for working with infinite and infinitesimal numbers. It is shown that the proposed method is simple, efficient and easily parallelizable, allowing one to calculate the required derivatives on-the-fly with a high precision and not involving ill-conditioned linear systems even if the objective function is changing within an iterative process. Numerical simulation results show the advantages of the proposed method with its direct competitors.

Proposed differentiation method is based on the efficient computation of the derivatives for a univariate function. In this paper, the frameworks for working numerically with infinite and infinitesimal numbers are considered for this purpose, since they have been already successfully used for this aim. There exist different approaches for working with infinite and infinitesimal quantities: see, e.g., [48] for the Infinity Computer, [52] for the Levi-Civita fields, [44] for the Non-Standard Analysis, [5] for labeled sets and numerosities, etc. In this paper, the Infinity Computer (see, e.g., [46, 48] for its complete description and the patents [45] for technical implementation details) is used for this purpose, since it has already been successfully used in different applications, where a high precision computation of the derivatives is involved (see, e.g., [13, 20, 21, 47, 51]). Moreover, it has been also successfully used in many other different applied fields, e.g., in optimization [7, 8, 11, 12], in ordinary differential equations [2, 50], in handling ill-conditioning and high precision computations [1, 17, 49], numerical simulation and modeling [13, 14], etc. However, it should be noted that the method proposed and described in this paper does not depend explicitly on the selected framework and can be implemented in other similar frameworks listed above.

In order to show the effectiveness of the proposed method, in this paper the nonlinear analysis of Kirchhoff–Love shells is performed by means of step-by-step approach using the so-called full model as referred in [31] where also a simplified third order strain measure providing the same results as the exact one has been presented together with a very accurate patch-wise integration rule adapted for this model.

The rest of the paper is organized as follows. Section 2 briefly describes the Kirchhoff–Love shell model. Section 3 presents the differentiation techniques and briefly describes the chosen framework for working with infinite and infinitesimal numbers. Sect. 4 presents the results of the numerical comparison of the proposed methods with their direct competitors on several benchmark test problems. Section 5 presents the numerical experiments and obtained results. Section 6 concludes the paper. Finally, analytical strain variations are presented in Appendix in order to show the complex structure of the objective function and its derivatives, substantiating so the necessity of an efficient differentiation method.

1 Kirchhoff–Love shells in large deformation

Kirchhoff–Love theory assumes that transverse shear strains are negligible, for this reason the model well adapts thin shells widely used in industrial applications. In this case, the shell kinematics is described in terms of displacement variables only and second order derivatives appear in the governing variational equations of the Kirchhoff–Love theory. This implies the necessity of \(C^1\)-continuous approximation functions, which has always been a major obstacle for the development of efficient finite element formulations for thin shells, which have been overcame by using Spline based interpolations [3, 22].

1.1 Kirchhoff–Love shell kinematics

The model described in this section has been presented in [22], to which the reader is invited to refer for a deeper discussion. Herein, we use a Total Lagrangian formulation to identify material points on the middle surface of the current configuration in terms of their position vector \(\mathbf{X }(\xi , \eta )\) in the reference configuration and the displacement field \(\mathbf{u }(\xi , \eta )\), as follows

where \(\varvec{\xi }=[\xi , \eta ]\) denotes convective curvilinear shell coordinates with \((\xi ,\eta )\) representing in-plane coordinates. The middle surface covariant basis vectors in the undeformed and actual configuration are by definition, respectively

where the symbol \((),_i\) is used to denote the partial derivation with respect to the i-th component of \(\varvec{\xi }\), while the unit normal ones are

This is sufficient to impose the Kirchhoff–Love (KL) shell constraint: the director remains straight and normal to the mid-surface during deformation and the Green–Lagrange strain tensor reduces to

where \(\bar{E}_{ij}\) are the covariant strain components. Assuming the strain to vary linearly through the thickness, it is splitted into a membrane (constant) a bending (linear) part respectively. The covariant strain coefficients are

with \(\zeta \in [-t/2, t/2]\) and t being the thickness of the shell. The curvature tensor coefficients are defined as in [22]

Following the isoparametric concept, geometry and displacement fields are approximated, over the element, as follows

where \(\mathbf{X }_e\) and \(\mathbf{d }_e\) collect the element control points and the element control displacements, respectively. The matrix \(\mathbf{N }_u(\xi , \eta )\) collects bivariate NURBS functions [9]. Adopting Voigt’s notation, the covariant strain components in (5) are collected in the vector \(\bar{\mathbf{E }} = [\bar{E}_{\xi \xi }, \bar{E}_{\eta \eta }, 2\bar{E}_{\xi \eta }]^T\), that, exploiting (6), becomes

with \(\bar{\mathbf{e }} = [\bar{e}_{\xi \xi }, \bar{e}_{\eta \eta }, 2\bar{e}_{\xi \eta }]^T\) and \(\bar{\varvec{\chi }} = [\bar{\chi }_{\xi \xi }, \bar{\chi }_{\eta \eta }, 2\bar{\chi }_{\xi \eta }]^T\). The generalized covariant strain vector \(\bar{\varvec{\varepsilon }} \equiv [\bar{\mathbf{e }}, \bar{\varvec{\chi }}]^T\). by exploiting the isogeometric approximation, \(\bar{\varvec{\varepsilon }}\) becomes

where \(\bar{\mathbf{D }}\) depends on the displacement DOFs. The map between parametric and physical domain [31] allows to obtain their Cartesian expression as

The mapping between the parametric and the physical domains and the homogenized constitutive matrix \(\mathbf{C }_\epsilon \) necessary to construct coherently the mechanical model are given in [31] and \(\varvec{\sigma }=\mathbf{C }_\epsilon \varvec{\varepsilon }.\)

1.2 Nonlinear analysis framework

The equilibrium of slender elastic structures subject to conservative loads f amplified by a proportionality factor \(\lambda \) is expressed by the virtual work equation

where \(u \in \mathcal {U}\) is the field of configuration variables, \(\varPhi (u)\) denotes the strain energy, \(\mathcal {T}\) is the tangent space of \(\mathcal {U}\) at u and \(()'\) is used to express the Fréchet derivative with respect to u. \(\mathcal {U}\) is a linear manifold so that its tangent space \(\mathcal {T}\) is independent of u. The discrete counterpart of (10) is

where \({\mathbf{r }}:{\mathbb {R}}^{N+1}\rightarrow {\mathbb {R}}^N\) is a nonlinear vectorial function of the vector \({\mathbf{z }} \equiv \{\mathbf{d },\lambda \} \in {\mathbb {R}}^{N+1}\), collecting the configuration \({\mathbf{d }} \in {\mathbb {R}}^{N}\) and the load multiplier \(\lambda \in {\mathbb {R}}\), \(\mathbf{s }[\mathbf{d }]\) is the internal force vector, and \(\varvec{f}\) the reference load vector. (11) represents a system of N equations and \(N+1\) unknowns and its solutions define the equilibrium paths as curves in \({\mathbb {R}}^{N+1}\). The Riks’ approach [43] can be used to trace these curves step-by-step from a known initial configuration \(\mathbf{d }_0\) corresponding to \(\lambda = 0\). At each step some Newton iterations are needed to solve (11). To this end, we also define the tangent stiffness matrix as

where \(\delta u\) and \({\tilde{u}}\) are generic variations of the configuration field u and \(\delta \mathbf{d }\) and \({\tilde{\mathbf{d }}}\) the corresponding discrete vectors.

In displacement-based formulations, the strain energy can be expressed as a sum of element contributions \(\varPhi (u) \equiv \sum _e \varPhi _e(u)\)

where \(\varOmega _e\) is the element domain. The first variation of the generalized strains in (9) can be written as

and, then, the first variation of the strain energy is

where \(\mathbf{s }_e(\mathbf{d }_e)\) is the element internal force vector. The second variation of the strain measure is

and its k-th component can be evaluated, introducing the symmetric matrix \(\varvec{\varPsi }_k(\mathbf{d }_e)\), as

Letting \(\varvec{\sigma }(\mathbf{d }_e)= \mathbf{C }_\epsilon \varvec{\varepsilon }(\mathbf{d }_e)\), the following expression holds

with

The second variation of the strain energy is

with the element tangent stiffness matrix defined as

The discrete operators involved in the evaluation of the internal force vector and stiffness matrix are defined by means of the strain derivatives. In order to avoid locking, the patch-wise reduced integration proposed in [31] is used to evaluate the integrals in (15) and (19). The proposed derivation technique is used to evaluate the derivatives \(\delta \varvec{\varepsilon }\) and \(\delta {\tilde{\varvec{\varepsilon }}}\) of the discrete strain measure \(\varvec{\varepsilon }\) with respect to the input vector \(\mathbf{d }\). As it is shown in Appendix, the formulae for the analytical evaluation of \(\delta \varvec{\varepsilon }\) and \(\delta {\tilde{\varvec{\varepsilon }}}\) using (14) and (16) can be complicated as well as their explicit generation. Moreover, these functions can depend not only on the input \(\mathbf{d }\) but on the additional parameters and structures changing during the execution. For these reasons, an efficient tool for computation of these derivatives is required.

The isogeometric shell model described in this section with the patch-wise reduced integration rule proposed in [31] is accurate and efficiently solved exploiting the so called called Mixed Integration Point strategy [16, 35], proposed in order to overcome the inefficiency of standard displacement-based finite element problems and then extended and tested in displacement-based isogeometric formulations [28, 38, 39].

2 Differentiation techniques

2.1 General considerations

The following Proposition reformulates the multidimensional differentiation problem of computation of the gradient and Hessian to the computation of the respective univariate derivatives.

Proposition 1

Let \(f({\mathbf {x}})\) be a scalar-valued function, at least twice continuously differentiable at \({\mathbf {x}}^0 = (x_{1}^0,x_{2}^0,\ldots ,x_{m}^0)\). Let also \(y_i(t)\) and \(y_{ij}(t),~i,j = 1,\ldots ,m,\) be univariate functions defined as follows:

where \({\mathbf {e}}^l\) is the l-th column of the m-dimensional identity matrix, \(t,~t\in {\mathbb {R}},\) is an auxiliary univariate variable. Then, the following equations are valid:

where \(\nabla _i f({\mathbf {x}}^0)\), \(H_{ii}({\mathbf {x}}^0)\), and \(H_{ij}({\mathbf {x}}^0)\) are the i-th component of the gradient \(\nabla f({\mathbf {x}}^0)\), i-th diagonal entry and (i, j)-th non-diagonal entry of the Hessian matrix \(\mathbf{H }({\mathbf {x}}^0)\).

Proof

In order to prove this Proposition, let us consider the following Taylor expansion of \(f(\mathbf{x })\) around \(\mathbf{x }^0\):

On the one hand, substituting \(\mathbf{x }= \mathbf{x }^0 + t\mathbf{e }^i\) in (25), one can obtain that

being the Taylor expansion of \(y_i(t)\) around \(t = 0\). It should be noted that \(\mathbf{x }= \mathbf{x }^0 + t\mathbf{e }^i\) differs from \(\mathbf{x }^0\) at only one i-th component \(x_i^0\), while all the remaining components coincide. Thus, the first- and second-order derivatives \(f,_i(\mathbf{x }^0)\) and \(f,_{ii}(\mathbf{x }^0)\) are equal to \(y_i'(0)\) and \(y_i''(0)\), respectively, i.e., Eqs. (22)–(23) hold.

On the other hand, substituting \(\mathbf{x }= \mathbf{x }^0 + t(\mathbf{e }^i+\mathbf{e }^j)\) in (25), one can also obtain that

being the Taylor expansion of \(y_{ij}(t)\) around \(t = 0\). Thus, the second-order derivative \(y_{ij}''(0)\) is equal to \(f,_{ii}(\mathbf{x }^0) + 2f,_{ij}(\mathbf{x }^0) + f,_{jj}(\mathbf{x }^0)\), from where it is easy to see that (24) is also satisfied. \(\square \) \(\square \)

One can see from (22), (23), and (24) that both the gradient and Hessian can be successfully calculated using differentiation techniques for a univariate function. It is shown in the following sections how to apply these techniques for the computation of the gradient and Hessian on the Infinity Computer—a framework for working numerically with infinite and infinitesimal numbers, which can be used for computation of the respective univariate derivatives.

2.2 Differentiation on the infinity computer

The Infinity Computer is based on using of the positional numeral system with the infinite radix  (called grossone, see, e.g., [45, 48] for details). A number X in this framework can be represented as follows:

(called grossone, see, e.g., [45, 48] for details). A number X in this framework can be represented as follows:

where \(c_i,~i=0,\ldots ,N,\) are finite (positive or negative) floating-point numbers, while \(p_i,~i=0,\ldots ,N,\) can be finite, infinite, and infinitesimal of the form (28) and ordered in a decreasing order, \(p_0>p_1> \cdots > p_N\). In this paper, a software simulator with only finite powers \(p_i\) is used, since they are sufficient for the differentiation purposes, thus, it is supposed that \(p_i,~i=0,\ldots ,N,\) are also finite floating-point numbers. The number N is the maximum number of powers used in computations and represents the precision: results of arithmetic operations are truncated after N powers of  or, in other words, after N items in (28).

or, in other words, after N items in (28).

In the Infinity Computing framework, the number  possesses all properties of a natural number, including

possesses all properties of a natural number, including  . Thus, the item

. Thus, the item  where \(p_k = 0,\) represents the finite part of (28) (if there is no such an item in (28), then the finite part is equal to zero). The number X having at least one finite positive power \(p_i\) in (28) is called infinite, while the number having only negative finite powers is called infinitesimal. Finally, the number

where \(p_k = 0,\) represents the finite part of (28) (if there is no such an item in (28), then the finite part is equal to zero). The number X having at least one finite positive power \(p_i\) in (28) is called infinite, while the number having only negative finite powers is called infinitesimal. Finally, the number  , i.e., having only the power of

, i.e., having only the power of  equal to zero, is called purely finite. A more detailed description of the Infinity Computer including its technical implementation information can be found, e.g., in [14, 21, 45].

equal to zero, is called purely finite. A more detailed description of the Infinity Computer including its technical implementation information can be found, e.g., in [14, 21, 45].

In different papers, there have been already proposed several methods for computation of the first- and second-order derivatives of a purely finite univariate function y(t) (i.e., a function not depending explicitly on  and having only purely finite values at any purely finite t) at the purely finite point z using numerical infinitesimals, for example, the Taylor-based method on the Infinity Computer proposed in [47] and extended in [13] for Simulink. This method is based on the Taylor expansion of y(t):

and having only purely finite values at any purely finite t) at the purely finite point z using numerical infinitesimals, for example, the Taylor-based method on the Infinity Computer proposed in [47] and extended in [13] for Simulink. This method is based on the Taylor expansion of y(t):

As it was shown in [47], in order to find the derivatives of y(t) at the point z numerically, the value \(y(z + h)\) is calculated, where h is infinitesimal (the value  was used in [47], but this method is valid for other infinitesimals as well):

was used in [47], but this method is valid for other infinitesimals as well):

where \(c_k,~k=0,1,2,\ldots ,\) are purely finite numbers. It should be noted that the result in (30) is written down in powers of h instead of the powers of  as in (28) just for simplicity: all computations are performed in the form (28), while the coefficients of h in (32) can be easily calculated from the coefficients of

as in (28) just for simplicity: all computations are performed in the form (28), while the coefficients of h in (32) can be easily calculated from the coefficients of  in (28). Moreover, if, e.g.,

in (28). Moreover, if, e.g.,  is chosen, then the coefficients of \(h^i,~i=0,1,\ldots ,\) in (32) are equal to the coefficients of

is chosen, then the coefficients of \(h^i,~i=0,1,\ldots ,\) in (32) are equal to the coefficients of  in (28).

in (28).

As it has been shown in [47], the finite part \(c_0\) in (30) corresponds to the purely finite value y(z). Moreover, the coefficients \(c_k,~k=1,2,\ldots ,\) give us the k-th derivatives of y(t) at z up to machine precision:

It should be noted that, since the Infinity Computer uses numerical and not symbolic infinitesimals, all computations are performed using floating-point arithmetic. For this reason, the error in the computation of the respective coefficients \(c_k\) is equivalent to the machine precision.

The algorithms proposed in the following are described in terms of the Infinity Computer framework. However, it is easy to see that these methods can also be used with any other similar framework, e.g., using Levi-Civita field ([52]) or hyper-dual numbers ([54]). However, one can easily see that the Infinity Computer with finite powers of  (which is used in the present paper) can be simply considered as a particular implementation of the Levi-Civita field substituting the Levi-Civita’s generic infinitesimal \(\varepsilon \) by a concrete

(which is used in the present paper) can be simply considered as a particular implementation of the Levi-Civita field substituting the Levi-Civita’s generic infinitesimal \(\varepsilon \) by a concrete  -based infinitesimal, e.g.,

-based infinitesimal, e.g.,  (but a detailed comparison of these two methodologies is out of scope for the present paper).

(but a detailed comparison of these two methodologies is out of scope for the present paper).

On the other hand, the concepts of the hyper-dual numbers allow to work only with finite and infinitesimal numbers without possibility of working with infinite numbers in the same framework. However, it has been shown in several papers that the possibility of working with infinite numbers can be very important from the practical point of view: e.g., in penalty functions and optimization (see, e.g., [10]), handling ill-conditioning ([17, 49]), truncated characteristic polynomial for quadratic matrices ([41]), etc. For these reasons, a general-purpose framework like the Infinity Computer or Levi-Civita field is more appropriate for a complex analysis of real-life processes instead of hyper-dual numbers. Moreover, the hyper-dual numbers use different number of “axis” w.r.t. the proposed approach. The algorithms for the computation of the scalar-valued second-order derivatives on the Infinity Computer (31) use only 3 axis being the powers 0, 1, and 2 of h in (30). The hyper-dual numbers, instead, use different infinitesimals \(\varepsilon _1\) and \(\varepsilon _2\), so each computation of the scalar-valued second-order derivatives requires 4 axis: the finite (real) part of the result, the “imaginary” parts (the powers 1 of \(\varepsilon _1\) and \(\varepsilon _2\), respectively, being the first order derivatives) and the term \(\varepsilon _1\varepsilon _2\) representing the second-order derivative. So the evaluations used in the proposed approach are expected to be lighter than those required by the hyper-dual numbers. However, their fair comparison requires an efficient software implementation of the Infinity Computer, which is absent at this moment and its realization requires vast scientific and software development contributions (out of scope of the current paper).

2.3 Computation of the gradient

Let us modify the method from [13, 47] for computation of the gradient \(\nabla f(\mathbf{x }^0)\) using (22). First, the function \(y_i(t) = f(\mathbf{x }^0+t\mathbf{e }^i)\) can be defined according to (20), where \(\mathbf{e }^i\) is the i-th column of the m-dimensional identity matrix,. Then, its first-order derivative can be easily calculated at \(t=0\) using (30) as follows. The function f is evaluated at the point \(\mathbf{x }^0 + h\mathbf{e }^i\), where h is a numerical infinitesimal of the form (28):

where \(a_k,~k=0,1,\ldots ,\) are purely finite numbers.

As it has been shown previously, the finite part \(a_0\) in (32) corresponds to the purely finite value \(f(\mathbf{x }^0)\), while the coefficient \(a_1\) corresponds to the first-order derivative:

where the error in computation of \(f,_i(\mathbf{x }^0)\) is an infinitesimal of order O(h), so the finite part of \((f(\mathbf{x }^0 + h\mathbf{e }^i) - f(\mathbf{x }^0))h^{-1}\) gives us the required first partial derivative up to machine precision. However, since for a purely finite \(\mathbf{x }^0\), the value \(f(\mathbf{x }^0)\) is also purely finite, so \(f(\mathbf{x }^0)h^{-1}\) is infinitesimal (or equal to zero if \(f(\mathbf{x }^0) = 0\)). Thus, the finite part of \((f(\mathbf{x }^0 + h\mathbf{e }^i) - f(\mathbf{x }^0)) h^{-1}\) is simply equal to the finite part of \(f(\mathbf{x }^0 + h\mathbf{e }^i) h^{-1}\), which gives us exactly the required first-order partial derivative (being also the i-th component \(\nabla _i f(\mathbf{x }^0)\) of the gradient \(\nabla f(\mathbf{x }^0)\)):

where FinitePart[x] is the finite part of the number x, i.e., the coefficient of  in (28). In other words, the i-th partial derivative \(f,_i(\mathbf{x }^0)\) (being the i-th component of \(\nabla f(\mathbf{x }^0)\)) is the coefficient of the infinitesimal h in \(f(\mathbf{x }^0 + h\mathbf{e }^i)\) given in the form (28): if, e.g.,

in (28). In other words, the i-th partial derivative \(f,_i(\mathbf{x }^0)\) (being the i-th component of \(\nabla f(\mathbf{x }^0)\)) is the coefficient of the infinitesimal h in \(f(\mathbf{x }^0 + h\mathbf{e }^i)\) given in the form (28): if, e.g.,  then the i-th component of the gradient can be calculated extracting the coefficient of

then the i-th component of the gradient can be calculated extracting the coefficient of  from

from  . Formally, the algorithm for the computation of the gradient is presented below:

. Formally, the algorithm for the computation of the gradient is presented below:

One can see that the Algorithm 1 is simple and easily parallelizable along the main “for” loop in case of large dimensions m. An example of the application of the Algorithm 1 is presented below.

Example 1

Let us calculate the gradient of the function \(f(\mathbf{x }) = \frac{x_1^2 + x_2^2}{x_1+x_2-2x_1x_2}\) at \(\mathbf{x }^0 = (1,2)\). First, let us fix the infinitesimal h, e.g., equal to the simplest infinitesimal of the form (28):  . Thus, \(\nabla f(\mathbf{x }^0) = (f,_1(\mathbf{x }^0), f,_2(\mathbf{x }^0))\).

. Thus, \(\nabla f(\mathbf{x }^0) = (f,_1(\mathbf{x }^0), f,_2(\mathbf{x }^0))\).

Let us first calculate \(f(\mathbf{x }^0 + h\mathbf{e }^1)\):

Let us now extract the coefficient of  from (35): \(f,_1(\mathbf{x }^0) = 13.\)

from (35): \(f,_1(\mathbf{x }^0) = 13.\)

Second, let us calculate \(f(\mathbf{x }^0 + h\mathbf{e }^2)\):

Let us now extract the coefficient of  from (36): \(f,_2(\mathbf{x }^0) = 1.\)

from (36): \(f,_2(\mathbf{x }^0) = 1.\)

Finally, \(\nabla f(\mathbf{x }^0) = (f,_1(\mathbf{x }^0), f,_2(\mathbf{x }^0)) = (13,1)\).

In Sect. 5, the proposed algorithm is applied for the computation of the first variation \(\delta \varvec{\varepsilon }\) of the strain measure from (14).

2.4 Computation of the Hessian

Let us first calculate the diagonal entries \(H_{ii}(\mathbf{x }^0),~i=1,\ldots ,m,\) of the Hessian \(\mathbf{H }(\mathbf{x }^0)\) from the second-order derivatives of the function \(y_{i}(t) = f(\mathbf{x }^0 + t\mathbf{e }^i)\) at \(t = 0\) according to (23). Again, the value \(f(\mathbf{x }^0 + h\mathbf{e }^i)\) is calculated according to (32). Then, the second-order partial derivative \(f,_{ii}(\mathbf{x }^0)\) (being the i-th diagonal entry \(H_{ii}(\mathbf{x }^0)\) of the Hessian matrix \(\mathbf{H }(\mathbf{x }^0)\)) can be calculated extracting the coefficient \(a_2\) of \(h^2\):

where \(\mathbf{e }^i\) is the i-th column of the m-dimensional identity matrix, h is a numerical infinitesimal of the form (28), FinitePart[x] is the finite part of the number x, i.e., the coefficient of \(h^0\) or  in (28). In other words, the i-th second-order partial derivative \(f,_{ii}(\mathbf{x }^0)\) (being the i-th diagonal entry of \(\mathbf{H }(\mathbf{x }^0)\)) is the coefficient (multiplied by 2) of the infinitesimal \(h^2\) of the value \(f(\mathbf{x }^0 + h\mathbf{e }^i)\) given in the form (28): if, e.g.,

in (28). In other words, the i-th second-order partial derivative \(f,_{ii}(\mathbf{x }^0)\) (being the i-th diagonal entry of \(\mathbf{H }(\mathbf{x }^0)\)) is the coefficient (multiplied by 2) of the infinitesimal \(h^2\) of the value \(f(\mathbf{x }^0 + h\mathbf{e }^i)\) given in the form (28): if, e.g.,  then the i-th diagonal entry of the Hessian can be calculated extracting the coefficient of

then the i-th diagonal entry of the Hessian can be calculated extracting the coefficient of  from

from  . An example of the computation of the derivatives in this way is presented below.

. An example of the computation of the derivatives in this way is presented below.

Example 2

Let us calculate the second-order derivatives \(f,_{11}(\mathbf{x }^0)\) and \(f,_{22}(\mathbf{x }^0)\) of the function \(f(\mathbf{x }) = \frac{x_1^2 + x_2^2}{x_1+x_2-2x_1x_2}\) at \(\mathbf{x }^0 = (1,2)\). Let us fix the infinitesimal h, e.g., equal to the simplest infinitesimal of the form (28):  .

.

Let us first calculate \(f(\mathbf{x }^0 + h\mathbf{e }^1)\):

Let us now extract the coefficient of  from (38):

from (38):

Second, let us calculate \(f(\mathbf{x }^0 + h\mathbf{e }^2)\):

Let us now extract the coefficient of  from (39):

from (39):

Let us now calculate the mixed second-order derivative \(f,_{ij}(\mathbf{x }^0),~i\ne j,\) using the respective univariate derivatives of the function \(y_{ij}(t) = f(\mathbf{x }^0 + t\mathbf{e }^i + t\mathbf{e }^j)\) at \(t = 0\) from (24). First, the value \(f(\mathbf{x }^0 + h\mathbf{e }^i + h\mathbf{e }^j)\) is calculated:

where \(b_k,~k=0,1,\ldots ,\) are purely finite numbers. It should be noted that the coefficients \(b_k\) in (40) can be different from the coefficients \(a_k\) in (32), except \(a_0 = b_0 = f(\mathbf{x }^0).\)

Second, the coefficient \(b_2\) is extracted from (40) and the second-order mixed derivative \(f,_{ij}(\mathbf{x }^0)\) (being the (i, j)-th entry \(H_{ij}(\mathbf{x }^0)\) of the Hessian matrix \(\mathbf{H }(\mathbf{x }^0)\)) is calculated as follows:

Formally, the full algorithm for computation of the Hessian matrix is presented below.

One can see that the Algorithm 2 is also easy to implement and parallelizable in different ways (e.g., along the i-based outer loop or along the j-based inner loop). Moreover, all partial derivatives \(f,_{jj}(\mathbf{x }^0),~j=1,2,\ldots ,i,\) used in the inner j-loop by (41) are known, since the inner j-loop calculates the i-th column of \(\mathbf{H }(\mathbf{x }^0)\) only up to the i-th row, i.e., using only known derivatives \(f,_{jj}(\mathbf{x }^0)\). An example of the application of the algorithm is presented below.

Example 3

Let us again consider the function \(f(\mathbf{x }) = \frac{x_1^2 + x_2^2}{x_1+x_2-2x_1x_2}\) and \(\mathbf{x }^0 = (1,2)\). Let us calculate the Hessian \(\mathbf{H }(\mathbf{x }^0)\) using

The diagonal entries \(H_{ii}(\mathbf{x }^0),~i=1,2\) have been already calculated in the previous example: \(H_{11}(\mathbf{x }^0) = -80,\) \(H_{22}(\mathbf{x }^0) = -4.\)

In order to calculate the mixed derivative \(H_{12}(\mathbf{x }^0) = f,_{12}(\mathbf{x }^0)\), let us first calculate the value  :

:

Let us now extract the coefficient of  from (42) and apply (41):

from (42) and apply (41):

Thus, \(\mathbf{H }(\mathbf{x }^0) = \begin{pmatrix} -80 &{}\quad -6\\ -6 &{}\quad -4 \end{pmatrix}.\)

Remark 1

It should be noted that the Algorithms 1 and 2 require the computation of \(f(\mathbf{x }^0+h\mathbf{e }^i)\) with a different accuracy with respect to the powers of h: if only the gradient is required, then \(f(\mathbf{x }^0+h\mathbf{e }^i)\) can be calculated using only the powers of h up to 1 truncating all the powers greater than 1. If the Hessian is required, then \(f(\mathbf{x }^0+h\mathbf{e }^i)\) (as well as \(f(\mathbf{x }^0+h\mathbf{e }^i + h\mathbf{e }^j)\)) should be calculated using the powers of h up to 2 truncating all the powers greater than 2.

Remark 2

One can also note that the gradient and the diagonal entries of the Hessian require different coefficients from the same value \(f(\mathbf{x }^0+h\mathbf{e }^i)\) in (32). For this reason, the gradient and the Hessian can be also calculated simultaneously within the same loop and using the same value \(f(\mathbf{x }^0+h\mathbf{e }^i)\) for both the i-th entry of the gradient and the i-th diagonal entry of the Hessian, if it is required to calculate them both. In this case, \(f(\mathbf{x }^0+h\mathbf{e }^i)\) should be calculated using the powers of h up to 2 and no additional computation of f is required to complete the gradient.

Remark 3

Since \(H_{ij}(\mathbf{x }^0)\) is calculated in (41) using subtractions, then the obtained values are calculated with a lower than the machine precision, if \(f(\mathbf{x })\) with its derivatives is ill-conditioned. For example, if the values \(H_{ii}(\mathbf{x }^0)\) are too small, while \(H_{ij}(\mathbf{x }^0)\) are large (or vice versa), then, due to ill-conditioning and limitations of the floating-point arithmetic, the results can loose significant digits. However, since the presented method does not use small finite stepsizes h (unlike standard finite difference schemes), then the obtained accuracy is still acceptable.

In Sect. 5, the proposed algorithm is applied for the computation of the second variation \(\delta {\tilde{\varvec{\varepsilon }}}\) of the strain measure from (16).

3 Numerical results on benchmark problems

In this section, the proposed methodology for computation of the gradient and the Hessian is compared with several state-of-the-art methods on several widely used m-dimensional benchmark test problems from [40]: Shubert function (function \(f_1(\mathbf{x }),~m=2\)), Shekel function (function \(f_2(\mathbf{x }),~m=4\)) and Griewank functions (functions \(f_3(\mathbf{x })\)–\(f_9(\mathbf{x })\) with the dimensions \(m=2\), 4, 8, 16, 32, 64 and 128) over the interval \([1,10]^m\) (the interval has been chosen in order to guarantee the twice continuous differentiability of the functions). The parameters of the functions and their implementation have been taken from the Virtual Library of Simulation Experiments http://www.sfu.ca/~ssurjano/:

The following methods have been applied for differentiation of the functions given above:

-

Adi—Automatic differentiation tool called “Adigator” from [55];

-

TIC—The proposed method using the Infinity Computer for dealing with infinitesimals;

-

Mat—The method based on the solution of a system of linear equations from [52] (the Infinity Computer was used for computation of the univariate derivatives up to machine precision);

-

IS—Imaginary step method from [25].

As the automatic differentiation technique Adi is already exploited in the numerical experiments, the use of the software AceGen, discussed in the introduction, is not compared as it is based on automatic differentiation too and, thus, it will not improve the quality of results. Moreover, it requires the Wolfram Mathematica software adding so additional costs and difficulties for the authors of the present paper.

Numerical experiments have been carried out in MATLAB R2016b. For each test problem, symbolic derivatives have been calculated as reference values for the comparison of the algorithms. The infinitesimal perturbation for TIC has been set equal to the simplest infinitesimal  . The stepsize parameter h of the IS method has been set equal to \(10^{-20}\) for the gradients according to [25], since this choice allows to calculate the gradient up to machine precision due to absence of cancellations. However, the computation of the Hessian using IS requires a more specific setting of h, since it involves subtractions and numerical cancellation for small h. For this reason, a parametric study of the impact of different values of the characteristic parameter h (from \(10^{-1}\) to \(10^{-5}\)) of the IS algorithm for computation of the Hessian is also carried out.

. The stepsize parameter h of the IS method has been set equal to \(10^{-20}\) for the gradients according to [25], since this choice allows to calculate the gradient up to machine precision due to absence of cancellations. However, the computation of the Hessian using IS requires a more specific setting of h, since it involves subtractions and numerical cancellation for small h. For this reason, a parametric study of the impact of different values of the characteristic parameter h (from \(10^{-1}\) to \(10^{-5}\)) of the IS algorithm for computation of the Hessian is also carried out.

For each test function \(f_k(\mathbf{x }),~k=1,\ldots ,9,\) 50 trial points \(\mathbf{x }^i,~i=1,\ldots ,50,\) have been generated randomly in the hyper-interval \([1,10]^m\). The respective gradients \(\nabla ^{met} f_k(\mathbf{x }^i)\) and Hessians \(\mathbf{H }^{met}(\mathbf{x }^i)\) have been calculated at \(\mathbf{x }^i\) by the algorithm met, \(met \in \{Adi,TIC,Mat,IS\}\). The relative errors with respect to the symbolic derivatives \(\nabla f_k(\mathbf{x }^i)\) and \(\mathbf{H }(\mathbf{x }^i)\) have been calculated componentwise as follows:

The average relative errors have been calculated:

The obtained results are presented in Tables 1 and 2 for the gradients and Hessians, respectively.

One can see from Tables 1 and 2 that the proposed TIC algorithm allows to obtain the same accuracy as the automatic differentiation technique Adi: the obtained average relative error on the first test problem coincides for these two algorithms (being higher than the Machine precision due to the presence of small values in the derivatives), while the errors on the remaining functions are smaller than the machine precision for both the algorithms (the difference between \(10^{-36}\) and \(10^{-21}\) on the last function for the Hessian is non significant, since it is much smaller than the machine precision).

On the other hand, the algorithm Mat from [52] is able to obtain a high accuracy only on the problems with a relatively small dimension (functions 1–5 with the dimensions not higher than 8). It can be also seen that the accuracy of the method Mat decreases with the dimension of the function to be differentiated, while the accuracy of the proposed algorithm TIC does not depend on the dimensionality of the problem. Thus, the proposed algorithm TIC can be considered as an improvement of the “Mat” method showing so the desirable accuracy without concerns about the dimensionality of the problem.

Finally, the IS algorithm shows the same accuracy as Adi and TIC for the gradient, but lower accuracy for the Hessian. One can see that the accuracy of the IS method does not depend on the dimensionality of the problem as well as the TIC and Adi algorithms, but it involves numerical cancellation issues related to the finite stepsize h.

For the above mentioned reasons, the algorithms Adi and TIC produce equivalent accuracies. However, they are different from the computational point of view. The Adi algorithm overrides the arithmetic operations in the software code of the function \(f(\mathbf{x })\) in order to construct the code for the respective derivatives. It is well-known that the generation of the code by Adi reqiures additional execution time, which increases with the complexity of the function to be differentiated (see, e.g., [21]). Consecutive evaluations of the derivatives depend on the complexity of the function \(f(\mathbf{x })\) and its code. For complicated real-life examples where the function to be differentiated can change during the simulation, application of an automatic differentiation algorithm can require re-generation of the code for the derivatives every time when the function is changed. For this reason, its application requires not only temporal resources for the execution, but the resources for generation and storing of the respective derivatives.

In its turn, TIC algorithm does not involve generation of the code, but it uses the overrided operations similarly to the Adi algorithm. However, it does not require any additional space resources. For this reason, it does not re-generate the derivatives if the function changes. The latter property allows one to calculate the derivatives on-the-fly with the highest accuracy. A detailed comparison between TIC and Adi is not presented here, since it requires a fair implementation of both the methods. The Adi algorithm has been implemented in MATLAB for an efficient work with the matrices and vectors allowing vectorized operations, while TIC requires an efficient implementation of the framework for working with infinite and infinitesimal numbers. However, at this moment, only a preliminary non-optimized version of the Infinity Computer is available. This software simulator does not allow to execute operations efficiently (e.g., using vectorized MATLAB operations) and is aimed to execution of the experiments oriented to the study of the accuracy. For this reason, the execution times of the algorithms are not provided here. To give an idea of the performance of the used framework of the Infinity Computer, the papers [21, 41, 50] can be studied where the computational performance of the Infinity Computer is analyzed and compared with its competitors. Moreover, since the Infinity Computer with finite powers of  is equivalent to Levi-Civita field from the implementational and computational points of view, then the paper [15] can be also consulted for possible execution times and performance.

is equivalent to Levi-Civita field from the implementational and computational points of view, then the paper [15] can be also consulted for possible execution times and performance.

4 Numerical results on computational mechanics

In this section, the efficiency of the proposed methodology for computation of the gradient and the Hessian of the nonlinear equations governing the static analysis of structures in large deformation problems is studied on several physical applications. Isogeometric Kirchhoff–Love shell model with \(C^2\) NURBS interpolation and patch-wise integration schemes from [31] is employed as a physical context of application. In this model, the accuracy of the simulation depends on the computation of the gradient involved in the evaluation of the structural response expressed by (10). The accuracy of the computation of the Hessian affects the convergence speed of the Newton algorithm. Small deviations with respect to the exact Hessian still enable the convergence but with a lower rate.

The proposed TIC methods are compared with the IS algorithm in terms of both the accuracy and the efficiency of the simulation. It should be noted that the Adi method is not involved in this section, since it produces results with the accuracy similar to TIC, while the Mat algorithm has been proven to be “unstable” on the dimensions higher than 5. Instead, the IS method has been chosen for the comparison, since it uses only one additional framework being the complex numbers, which is well-implemented in different programming languages and environments (e.g., MATLAB or Python). Meanwhile, the accuracy of the IS algorithm for the gradient is high enough, while it can also be acceptable using “good” values of h for the Hessian. For these reasons, the IS algorithm can also be applicable for solving difficult real-life problems, so it is important to study the limits of its successful usage. Simulations using exact analytical formulae for the derivatives (AF) are also added as a reference solution for the comparison.

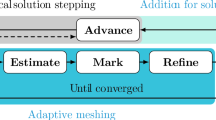

For each test problem, the parameter h of the IS method has been set equal to \(10^{-20}\) for the gradients according to [25], since this choice allows to calculate the gradient up to machine precision due to absence of cancellations. However, the computation of the Hessian using IS requires a more specific setting of h, since it involves subtractions and numerical cancellation for small h (see Table 2). For this reason, a parametric study of the impact of different values of the characteristic parameter h of the IS algorithm for computation of the Hessian is also carried out. In this context, two numerical tolerances, \(10^{-4}\) and \(10^{-8}\), respectively, in solving the nonlinear equations are considered. The solution method is implemented in a predictor–corrector scheme based on a linear extrapolation of the previous solution step. Its length is modulated on the basis of the number of iterations required to reach the convergence. Then, if the step is correctly solved, the step-length grows along the less nonlinear part of the equilibrium path and decreases where the path is strongly nonlinear. When the discrete operators are affected by errors slowness in the nonlinear process can be observed.

Euler beam: equilibrium path for L/h \(=\) 10 and \(tol=1e{-}4\) (left) and \(tol=1e{-}8\)(right). A part of the graph is zoomed in to improve the readability of the figures. The results coincide for AF (red points and dashed line) and TIC (red squares and red solid line) in both the cases; IS generates the same iterations only using \(h=1e{-}4\) (black triangles and lines) for \(tol=1e{-}4\) (left figure), while it generates different iterations w.r.t. AF using other values of h: IS with \(h=1e{-}2\) (blue triangles and lines) and \(1e{-}3\) (green triangles and lines) and \(1e{-}4\) with \(tol=1e{-}8\) don’t coincide with the analytical values obtained by AF; using \(h=1e{-}6\) (brown triangles and lines) it generates so many and too dense iterations even for \(tol=1e{-}4\), so it hasn’t been launched for \(tol=1e{-}8\). (Color figure online)

4.1 Cantilever beam under compression

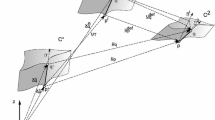

The first example is the simple Euler Beam test from [6, 31]. The simple cantilever beam depicted in Fig. 1 is analyzed for different values of the slenderness parameters \(k=L/t,\) where L and t are the length and the thickness of the beam, respectively. A very small shear imperfection load (\(\epsilon =0.01\)) is added to avoid the jump of the bifurcation as shown in [31].

Euler beam: equilibrium path for L/h \(=\) 100 and \(tol=1e{-}4\) (left) and \(tol=1e{-}8\)(right). A part of the graph is zoomed in to improve the readability of the figures. The results coincide for AF (red points and dashed line) and TIC (red squares and red solid line) in both the cases; IS generates the same iterations only using \(h=1e{-}3\) and \(h=1e{-}4\) (green and black triangles and lines, respectively), while it generates different iterations w.r.t. AF using other \(h=1e{-}2\) (blue triangles and lines) for \(tol=1e{-}8\) and significantly more iterations using \(h=1e{-}6\) (brown triangles and lines) even for \(tol=1e{-}4\), so it hasn’t been launched for \(tol=1e{-}8\). (Color figure online)

Euler beam: equilibrium path for L/h=1000 and \(tol=1e{-}4\) (left) and \(tol=1e{-}8\)(right). A part of the graph is zoomed in to improve the readability of the figures. The results coincide for AF (red points and dashed line) and TIC (red squares and red solid line) in both the cases; IS generates the same iterations only using \(h=1e{-}3\) and \(h=1e{-}4\) (green and black triangles and lines, respectively), while it generates different iterations w.r.t. AF using other \(h=1e{-}2\) (blue triangles and lines) for \(tol=1e{-}8\) and significantly more iterations using \(h=1e{-}6\) (brown triangles and lines) even for \(tol=1e{-}4\), so it hasn’t been launched for \(tol=1e{-}8\). (Color figure online)

The equilibrium path for different slenderness ratios, structural models, and values of h of the IS method, are reported in Figs. 2, 3 and 4 for the \(C^2\) NURBS. The load factor is normalized with respect to the analytical buckling load \(\lambda _b\). The obtained iteration numbers for each model are presented in Table 3.

As it can be seen from Figs. 2, 3 and 4 and Table 3, the simulation using both AF and TIC gives always the lowest number of iterations keeping so the highest convergence speed of the Newton algorithm. However, since the IS calculates the Hessian using the finite stepsize h, its value influences significantly the performance of the IS algorithm in computation of the Hessian. In particular, one can see that the gradient is always calculated correctly, since the shape of the path obtained by IS in Figs. 2, 3 and 4 coincides with the shape obtained by TIC and AF. However, one can also see that different values of h lead to different accuracies in Hessian for IS. In particular, for small values of h (e.g., \(h \le 10^{-6}\)), the simulation generates much more iterations, than the higher precision methods like TIC or AF, due to large errors in computation of the Hessian. In the case of \(h = 10^{-6}\), the IS method is not usable and it is possible to observe that the behavior of the algorithm is optimal only at the beginning of the equilibrium path where the nonlinear aspects are not relevant yet. For this issue, the results obtained by IS with \(h = 10^{-6}\) are presented in Figs. 2, 3 and 4 only for the case of tol\(=10^{-4}\) where it is also evident that for \(L/t=10\) the process is not able to track the whole equilibrium path.

It can be also noted that the IS method with bigger values of h allows to obtain the same solution as AF or TIC for large values of \(k = \frac{L}{t}\), while it generates more iterations for \(k = 10\) also in this case. One can see that the results obtained by TIC coincide with the AF, since the proposed methods allow to calculate both the gradient and Hessian with a higher precision.

4.2 Hinged cylindrical shallow roof

The benchmark depicted in Fig. 5 is analyzed in the multi-layered cases [0/90/0] and [90/0/90] with the material properties reported in Tab. 4 and thickness \(t=12.7\) (see [31] for details). The pointwise force is applied at the apex of the shell (point \(\mathbf{A }\)). This test is particularly popular due to the snapping behaviour and because of that at some intermediate state, the tangential global stiffness matrices become singular with a subsequent unstable character. For this reason this test is a good candidate to check the accuracy in the evaluation of the second order derivatives.

In Figs. 6 and 7, the equilibrium paths are shown in terms of the load parameter \(\lambda \) and \(w_A\) for both the considered stacking sequences. The total number of iterations is also presented for each model in Table 5.

Also in this case, the results obtained by TIC coincide with the AF. However, one can also see that the IS gives acceptable results in this case without significant loss in efficiency, but still for small values of the parameter h the method is neither accurate nor efficient. One can also see from Fig. 7(right) that the results obtained by IS with \(h=10^{-4}\) can differ from AF and TIC starting from some moment due to numerical errors in computation of the Hessian. Moreover, IS with \(h=10^{-6}\) generated too many iterations, so the analysis has not been completed due to the difficulties of the numerical solution process. Also in this case, the results of IS with \(h=10^{-6}\) are presented in Figs. 6 and 7 only for the case of tol\(=10^{-4}\) for the same reasons as previously stated.

4.3 Clamped semi-cylinder

Composite shallow roof: equilibrium path for [0/90/0] \(tol=1e{-}4\) (left) and \(tol=1e{-}8\) (right). A part of the graph is zoomed in to improve the readability of the figures. The results coincide for AF (red points and dashed line) and TIC (red squares and red solid line) in both the cases; IS generates the same iterations only using the values of h up to \(1e{-}4\), while it generates significantly more iterations using \(h=1e{-}6\) (brown triangles and lines) even for \(tol=1e{-}4\), so it hasn’t been launched for \(tol=1e{-}8\). (Color figure online)

Composite shallow roof: equilibrium path for [90/0/90] and \(tol=1e{-}4\) (left) and \(tol=1e{-}8\) (right). A part of the graph is zoomed in to improve the readability of the figures. The results coincide for AF (red points and dashed line) and TIC (red squares and red solid line) in both the cases; IS generates the same iterations only using the values of h up to \(1e{-}3\), while it generates different iterations using \(h=1e{-}4\) for \(tol=1e{-}8\) and significantly more iterations using \(h=1e{-}6\) (brown triangles and lines) even for \(tol=1e{-}4\), so it hasn’t been launched for \(tol=1e{-}8\). (Color figure online)

Clamped semi-cylinder: equilibrium path for \(tol=1e{-}4\) and \(tol=1e{-}8\). A part of the graph is zoomed in to improve the readability of the figures. The results coincide for AF (red points and dashed line) and TIC (red squares and red solid line) in both the cases; IS generates the same iterations only using the values of h up to \(1e{-}3\), while it generates different iterations using \(h=1e{-}4\) (black triangles and lines) and significantly more iterations using \(h=1e{-}6\) (brown triangles and lines) even for \(tol=1e{-}4\), so it hasn’t been launched for \(tol=1e{-}8\). (Color figure online)

In Fig. 8, the geometry and the boundary conditions of another classical benchmark test from [53] are reported. The structure is a semi-cylinder loaded by a concentrated force at the middle of one of the curved edges (point A), while the other one is clamped. The vertical displacement of the straight edges is constrained. Due to its symmetry, only a half of the structure is analyzed. A composite multi-layered material is considered. The local reference system, used for defining the material proprieties, has the direction 1 aligned with the y axis of the global system and the direction 3 is the normal to the surface from inside out. The stacking sequence of the laminated material is [0/90/0], measured with respect to the direction 1 of the local reference system and the material properties are \(E_1 = 2068.50\), \(E_2 = E_3 = 517.125\), \(\nu _{12} = \nu _{23} =\nu _{13} = 0.3\) and \(G_{12} = G_{23} =G_{13} = 759.58\). This test is of interest in the shell context of analysis because the deformation is characterized by the development of wrinkles and a loss of accuracy could determine inaccuracy in the description of its nonlinear behaviour.

Also in this case the numerical experiments show that the results obtained by AF and TIC coincide, while the IS gives acceptable results only for large values of h. One can also see that inaccuracy in the evaluation of the second derivatives arises already for \(h=10^{-4}\). Moreover, one can see from Tables 3 and 6 that the best value of h for IS depends on the problem and does not guarantee to generate the same solutions as TIC or AF do. For example, the IS using \(h=10^{-4}\) has generated less iterations (being so more efficient) than using other values of h for the Euler Beam problem, while it performed significantly worse for the present example generating more iterations than AF or TIC. Again for smaller values of h (e.g. \(h=10^{-6}\)) the IS method is not applicable and with respect to the previous tests it seems particularly pathological. The solution process has been interrupted due to the high number of iterations nevertheless the equilibrium path have been tracked only at early part as it is shown in Fig. 9(left).

The equilibrium path in terms of the load parameter \(\lambda \) and \(w_A\) being the latter the displacement along z of the point \(\mathbf{A }\) within the analysis is shown in Fig. 9.

5 Conclusion

A new numerical method for computation of the first and second order derivatives has been proposed within the frameworks for working numerically with infinite and infinitesimal numbers. The proposed method is simple and efficient allowing one to calculate the required derivatives with a high precision in both sequential and parallel ways. Moreover, it doesn’t require solution to ill-conditioned linear systems and doesn’t involve any small finite stepsize h avoiding the issues related to numerical cancellation, being one of the main limitations for the numerical differentiation using finite differences. The proposed method allows to calculate the derivatives on-the-fly without neither pre-processing of the code nor analytical formulae for the functions. For these reasons, it becomes an effective numerical tool for real scale simulations.

It should be noted that the proposed approach is not limited by the Infinity Computer only and can be successfully used with alternative approaches for dealing with infinite and infinitesimal numbers (e.g., Levi-Civita field or hyper-dual numbers), so the computational effort of the proposed algorithms directly depends on the software implementation of the respective framework.

In particular, the application of this method in numerical analysis of structural behavior as black-box tool is useful due to its high accuracy and less error prone character that allow to avoid the analytical burden during the development of new mechanical models. Its use in other contexts of applied mathematics and physics is also desirable especially in coupled problems when the derivation process has to be applied to more complicated functions. Direct competitors of the proposed approach, being precise differentiation techniques like Automatic Differentiation or methods based on hyper-dual numbers, require a pre-processing of the code every time the system changes adding so high computational costs in the case when the system changes frequently during the simulation (automatic differentiation) or require more “axis” to perform similar executions (hyper-dual numbers) making the executions heavier. Other approaches similar to Imaginary Step are still possible but they require an accurate choice of the characteristic parameter h, which is problem dependent, and cannot guarantee a precise solution being so inefficient for practical use.

References

Amodio P, Brugnano L, Iavernaro F, Mazzia F (2020) On the use of the infinity computer architecture to set up a dynamic precision floating-point arithmetic. Soft Comput 24(23):17589–17600

Amodio P, Iavernaro F, Mazzia F, Mukhametzhanov MS, Sergeyev Y (2017) A generalized Taylor method of order three for the solution of initial value problems in standard and infinity floating-point arithmetic. Math Comput Simul 141:24–39

Aristodemo M (1985) A high-continuity finite element model for two-dimensional elastic problems. Comput Struct 21(5):987–993. https://doi.org/10.1016/0045-7949(85)90211-1

Baydin AG, Pearlmutter BA, Radul AA, Siskind M (2018) Automatic differentiation in machine learning: a survey. J Mach Learn Res 18:1–43

Benci V, Di Nasso M (2003) Numerosities of labeled sets: a new way of counting. Adv Math 173:50–67

Caseiro J, Valente R, Reali A, Kiendl J, Auricchio F, Alves de Sousa R (2015) Assumed natural strain NURBS-based solid-shell element for the analysis of large deformation elasto-plastic thin-shell structures. Comput Methods Appl Mech Eng 284:861–880. https://doi.org/10.1016/j.cma.2014.10.037

Cococcioni M, Cudazzo A, Pappalardo M, Sergeyev Y (2020) Solving the lexicographic multi-objective mixed-integer linear programming problem using branch-and-bound and grossone methodology. Commun Nonlinear Sci Numer Simul. https://doi.org/10.1016/j.cnsns.2020.105177

Cococcioni M, Pappalardo M, Sergeyev Y (2018) Lexicographic multiobjective linear programming using grossone methodology: theory and algorithm. Appl Math Comput 318:298–311

Cottrell JA, Hughes TJR, Bazilevs Y (2009) Isogeometric analysis: toward integration of CAD and FEA . 978-0-470-74873-2

De Cosmis S, De Leone R (2012) The use of grossone in mathematical programming and operations research. Appl Math Comput 218(16):8029–8038

De Leone R (2018) Nonlinear programming and grossone: quadratic programming and the role of constraint qualifications. Appl Math Comput 318:290–297

De Leone R, Fasano G, Sergeyev Y (2018) Planar methods and grossone for the conjugate gradient breakdown in nonlinear programming. Comput Optim Appl 71(1):73–93

Falcone A, Garro A, Mukhametzhanov MS, Sergeyev Y (2020) A Simulink-based software solution using the infinity computer methodology for higher order differentiation. Appl Math Comput. https://doi.org/10.1016/j.amc.2020.125606

Falcone A, Garro A, Mukhametzhanov MS, Sergeyev Y (2020) Representation of grossone-based arithmetic in simulink for scientific computing. Soft Comput 24(23):17525–17539. https://doi.org/10.1007/s00500-020-05221-y

Flynn D, Shamseddine K (2020) On computational applications of the Levi-Civita field. J Comput Appl Math 382:113041. https://doi.org/10.1016/j.cam.2020.113041

Garcea G, Trunfio G, Casciaro R (1998) Mixed formulation and locking in path-following nonlinear analysis. Comput Methods Appl Mech Eng 165(1–4):247–272

Gaudioso M, Giallombardo G, Mukhametzhanov MS (2018) Numerical infinitesimals in a variable metric method for convex nonsmooth optimization. Appl Math Comput 318:312–320

Griewank A (1989) On automatic differentiation. In: Iri M, Tanabe K (eds) Mathematical programming: recent developments and applications. Kluwer Academic Publisher, pp 83–108

Herrema AJ, Johnson EL, Proserpio D, Wu MCH, Kiendl J, Hsu MC (2018) Penalty coupling of non-matching isogeometric Kirchhoff–Love shell patches with application to composite wind turbine blades. Comput Methods Appl Mech Eng 346:810–840

Iavernaro F, Mazzia F, Mukhametzhanov MS, Sergeyev Y (2020) Conjugate-symplecticity properties of Euler–Maclaurin methods and their implementation on the infinity computer. Appl Numer Math 155:58–72

Iavernaro F, Mazzia F, Mukhametzhanov MS, Sergeyev Y (2021) Computation of higher order Lie derivatives on the infinity computer. J Comput Appl Math 383:113135

Kiendl J, Bletzinger KU, Linhard J, Wüchner R (2009) Isogeometric shell analysis with Kirchhoff–Love elements. Comput Methods Appl Mech Eng 198(49):3902–3914. https://doi.org/10.1016/j.cma.2009.08.013

Korelc J (2002) Multi-language and multi-environment generation of nonlinear finite element codes. Eng Comput 18(4):312–327

Korelc J, Wriggers P (2016) Automation of Finite Element Methods, Springer, Cham. https://doi.org/10.1007/978-3-319-39005-5

Lai KL, Crassidis J (2008) Extensions of the first and second complex-step derivative approximations. J Comput Appl Math 219(1):276–293

Lantoine G, Russell R, Dargent T (2012) Using multicomplex variables for automatic computation of high-order derivatives. ACM Trans Math Softw 38(3):1–21

Leonetti L, Garcea G, Magisano D, Liguori F, Formica G, Lacarbonara W (2020) Optimal design of CNT-nanocomposite nonlinear shells. Nanomaterials. https://doi.org/10.3390/nano10122484

Leonetti L, Liguori F, Magisano D, Garcea G (2018) An efficient isogeometric solid-shell formulation for geometrically nonlinear analysis of elastic shells. Comput Methods Appl Mech Eng 331:159–183. https://doi.org/10.1016/j.cma.2017.11.025

Leonetti L, Liguori FS, Magisano D, Kiendl J, Reali A, Garcea G (2020) A robust penalty coupling of non-matching isogeometric Kirchhoff–Love shell patches in large deformations. Comput Methods Appl Mech Eng 371:113289. https://doi.org/10.1016/j.cma.2020.113289

Leonetti L, Magisano D, Liguori F, Garcea G (2018) An isogeometric formulation of the Koiter’s theory for buckling and initial post-buckling analysis of composite shells. Comput Methods Appl Mech Eng 337:387–410. https://doi.org/10.1016/j.cma.2018.03.037

Leonetti L, Magisano D, Madeo A, Garcea G, Kiendl J, Reali A (2019) A simplified Kirchhoff–Love large deformation model for elastic shells and its effective isogeometric formulation. Comput Methods Appl Mech Eng 354:369–396. https://doi.org/10.1016/j.cma.2019.05.025

Les Piegl WT (1997). The NURBS book. https://doi.org/10.1007/978-3-642-59223-2

Liguori FS, Zucco G, Madeo A, Garcea G, Leonetti L, Weaver PM (2021) An isogeometric framework for the optimal design of variable stiffness shells undergoing large deformations. Int J Solids Struct 210–211:18–34. https://doi.org/10.1016/j.ijsolstr.2020.11.003

Liguori FS, Zucco G, Madeo A, Magisano D, Leonetti L, Garcea G, Weaver PM (2019) Postbuckling optimisation of a variable angle tow composite wingbox using a multi-modal Koiter approach. Thin Walled Struct 138:183–198. https://doi.org/10.1016/j.tws.2019.01.035

Magisano D, Leonetti L, Garcea G (2017) Advantages of the mixed format in geometrically nonlinear analysis of beams and shells using solid finite elements. Int J Numer Methods Eng 109(9):1237–1262. https://doi.org/10.1002/nme.5322

Magisano D, Leonetti L, Garcea G (2021) Isogeometric analysis of 3d beams for arbitrarily large rotations: locking-free and path-independent solution without displacement DOFs inside the patch. Comput Methods Appl Mech Eng 373:113437. https://doi.org/10.1016/j.cma.2020.113437

Magisano D, Liguori F, Madeo A, Leonetti L, Garcea G (2021) Material design for optimal postbuckling behaviour of composite shells. Materials. https://doi.org/10.3390/ma14071665

Maurin F, Greco F, Dedoncker S, Desmet W (2018) Isogeometric analysis for nonlinear planar Kirchhoff rods: weighted residual formulation and collocation of the strong form. Comput Methods Appl Mech Eng 340:1023–1043. https://doi.org/10.1016/j.cma.2018.05.025

Maurin F, Greco F, Desmet W (2018) Isogeometric analysis for nonlinear planar pantographic lattice: discrete and continuum models. Contin Mech Thermodyn. https://doi.org/10.1007/s00161-018-0641-y

Momin J, Yang XS (2013) A literature survey of benchmark functions for global optimization problems. Int J Math Model Numer Optim 4(2):150–194

Mukhametzhanov MS, Sergeyev Y (2020) The infinity computer vs. symbolic computations: first steps in comparison. In: AIP conference proceedings, vol 2293, p 420045

Nguyen VP, Anitescu C, Bordas SPA, Rabczuk T (2015) Isogeometric analysis: an overview and computer implementation aspects. Math Comput Simul 117:89–116. https://doi.org/10.1016/j.matcom.2015.05.008

Riks E (1979) An incremental approach to the solution of snapping and buckling problems. Int J Solids Struct 15(7):529–551. https://doi.org/10.1016/0020-7683(79)90081-7

Robinson A (1996) Non-standard analysis. Princeton University Press, Princeton

Sergeyev Y (2010) Computer system for storing infinite, infinitesimal, and finite quantities and executing arithmetical operations with them. USA patent 7,860,914 (2010), EU patent 1728149 (2009), RF patent 2395111

Sergeyev Y (2003) Arithmetic of infinity. Edizioni Orizzonti Meridionali (2003, 2nd edn. 2013)

Sergeyev Y (2011) Higher order numerical differentiation on the infinity computer. Optim Lett 5(4):575–585

Sergeyev Y (2017) Numerical infinities and infinitesimals: methodology, applications, and repercussions on two Hilbert problems. EMS Surv Math Sci 4:219–320

Sergeyev Y, Kvasov D, Mukhametzhanov MS (2018) On strong homogeneity of a class of global optimization algorithms working with infinite and infinitesimal scales. Commun Nonlinear Sci Numer Simul 59:319–330

Sergeyev Y, Mukhametzhanov MS, Mazzia F, Iavernaro F, Amodio P (2016) Numerical methods for solving initial value problems on the infinity computer. Int J Unconv Comput 12(1):3–23

Sergeyev Y, Nasso M, Mukhametzhanov MS, Kvasov D (2021) Novel local tuning techniques for speeding up one-dimensional algorithms in expensive global optimization using Lipschitz derivatives. J Comput Appl Math 383:113134

Shamseddine K, Berz M (1996) Exception handling in derivative computation with non-archimedean calculus. In: Berz M, Bischof C, Corliss G, Griewank A Computational differentiation: techniques. applications, and tools. SIAM, Philadelphia, pp 37–51

Sze K, Liu X, Lo S (2004) Popular benchmark problems for geometric nonlinear analysis of shells. Finite Elem Anal Des 40(11):1551–1569. https://doi.org/10.1016/j.finel.2003.11.001

Tanaka M, Sasagawa T, Omote R, Fujikawa M, Balzani D, Schröder J (2015) A highly accurate 1st- and 2nd-order differentiation scheme for hyperelastic material models based on hyper-dual numbers. Comput Methods Appl Mech Eng 283:22–45. https://doi.org/10.1016/j.cma.2014.08.020

Weinstein M, Rao A (2017) Algorithm 984: Adigator, a toolbox for the algorithmic differentiation of mathematical functions in MATLAB using source transformation via operator overloading. ACM Trans Math Softw 44(2):1–25

Yu W, Blair M (2013) DNAD, a simple tool for automatic differentiation of Fortran codes using dual numbers. Comput Phys Commun 184(5):1446–1452

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Analytical strain variations of the full model

Appendix: Analytical strain variations of the full model

In this section, it is shown the shape of the first and second strain variations. Even though the mechanical model is yet quiet simple it is evident that the derivation process and the optimal shape for their use in a computational framework is not trivial. Since the strain variation of the membrane part is quadratic function of the displacements, only the strain variation of curvature is investigated in this paper. The curvature

is expressed in the following convenient format, by using the spin operator \(\mathbf{W }\)

By defining the following quantities

the first variation of the curvature (being the gradient of the respective function) is

The second variation \(\delta \dot{\chi }_{ij}\) (being the Hessian of the respective function), involved in the construction of the iteration matrix of the Newton procedure can be calculated as follows:

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Leonetti, L., Mukhametzhanov, M.S. Novel first and second order numerical differentiation techniques and their application to nonlinear analysis of Kirchhoff–Love shells. Comput Mech 70, 29–47 (2022). https://doi.org/10.1007/s00466-022-02154-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00466-022-02154-1